Abstract

Individual differences in dopamine (DA) signaling, including low striatal D2/3 receptors, may increase vulnerability to substance abuse, although whether this phenotype confers susceptibility to nonchemical addictions is unclear. The degree to which people use “irrational” cognitive heuristics when choosing under uncertainty can determine whether they find gambling addictive. Given that dopaminergic projections to the striatum signal reward expectancy and modulate decision-making, individual differences in DA signaling could influence the extent of such biases. To test this hypothesis, we used a novel task to model biased, risk-averse decision-making in rats. Animals chose between a “safe” lever, which guaranteed delivery of the wager, or an “uncertain” lever, which delivered either double the wager or nothing with 50:50 odds. The bet size varied from one to three sugar pellets. Although the amount at stake did not alter the options' utility, a subgroup of “wager-sensitive” rats increased their preference for the safe lever as the bet size increased, akin to risk aversion. In contrast, wager-insensitive rats slightly preferred the uncertain option consistently. Amphetamine increased choice of the uncertain option in wager-sensitive, but not in wager-insensitive rats, whereas a D2/3 receptor antagonist decreased uncertain lever choice in wager-insensitive rats alone. Micro-PET and autoradiography using [11C]raclopride confirmed a strong correlation between high wager sensitivity and low striatal D2/3 receptor density. These data suggest that the propensity for biased decision-making under uncertainty is influenced by striatal D2/3 receptor expression, and provide novel support for the hypothesis that susceptibility to chemical and behavioral addictions may share a common neurobiological basis.

Introduction

Most decisions involve elements of risk or uncertainty. Using Bayesian rationality, any option's expected value can be computed as the product of an outcome's value and its probability of occurring. Although we understand such principles, our decisions are instead primarily influenced by cognitive biases (Kahneman and Tversky, 1979). For example, we are excessively intolerant of uncertainty as the wager increases, a bias that can engender problematic risk-seeking to avoid guaranteed losses (Trepel et al., 2005). Such strategies are suboptimal when compared with mathematical norms. Furthermore, irrational decision-making under risk has been linked to the manifestation and severity of problem gambling (PG) (Ladouceur and Walker, 1996; Miller and Currie, 2008; Emond and Marmurek, 2010) and correcting such irrational cognitions is a key target of cognitive therapy for PG (Sylvain et al., 1997; Ladouceur et al., 2001). Understanding the biological basis of these decision-making biases could therefore provide valuable insight into gambling and its addictive nature.

Given that the dopamine (DA) system plays a critical role in drug addiction, it is perhaps unsurprising that differences in DA activity have been hypothesized to contribute to PG. The psychostimulant amphetamine, which potentiates the actions of DA, can increase the drive to gamble in problem gamblers but not in healthy controls (Zack and Poulos, 2004), indicating that problem gamblers may be hypersensitive to increases in DA release. DA may also be fundamentally important in representing risk at a neuronal level due to its role in signaling reward prediction errors within the striatum (Schultz et al., 1997; Cardinal et al., 2002; O'Doherty et al., 2004; Day et al., 2007). Given that dopaminergic drugs have been shown to modulate decision-making between probabilistic outcomes in rats and humans (Pessiglione et al., 2006; St. Onge and Floresco, 2009), DA signaling, particularly within the striatum, could theoretically contribute to biases in decision-making under risk.

Experiments using animal models of human cognitive functioning can provide vital insight into the mechanisms underlying complex brain functions. Although a multitude of cognitive distortions has been identified in gamblers, it is relatively unclear which, if any, contribute meaningfully toward the formation or maintenance of an “addicted” state. However, many of these biases, such as the illusion of control or preferences when picking numbers in a lottery, are inherently difficult to model in nonhuman subjects. It could be argued that, when considering biased or subjective preference, the critical comparison occurs when subjects choose between certain and uncertain options that yield equal payoffs on average. Although rational decision-makers should be indifferent under such circumstances, most human subjects are initially risk-averse and favor the guaranteed reward, particularly as the wager increases (Kahneman, 2003), although individuals vary as to the extent and resilience of this bias (Weber et al., 2004; Brown and Braver, 2007, 2008; Gianotti et al., 2009). Using a novel decision-making task, we therefore aimed to determine whether rats are likewise more prone to risk aversion when the reward at stake increases, and whether the size of such a bias is mediated by individual differences in DA signaling.

Materials and Methods

Subjects.

Subjects were 32 male Long–Evans rats (Charles River Laboratories) weighing between 275 and 300 g at the start of testing. Animals were maintained at 85% of their ad libitum feeding weight and food restricted to 14 g of rat chow daily, in addition to the sugar pellets earned during behavioral testing. Water was available ad libitum. Animals were pair-housed and kept in a temperature-controlled and climate-controlled colony room (21°C) on a reverse 12 h light/dark schedule (lights off, 8 A.M.). All testing and housing conditions were in accordance with the Canadian Council for Animal Care and all experiments were approved by the Animal Care Committee of the University of British Columbia.

Behavioral apparatus.

Testing took place in eight standard five-hole operant chambers, each enclosed within a ventilated sound-attenuating cabinet (Med Associates). An array of five evenly spaced nosepoke apertures, or response holes, was located 2 cm above a bar floor along one wall of the chamber. A recessed stimulus light was located in the back of each aperture and nosepoke responses could be detected by a horizontal infrared beam passing across the front of each response hole. A food tray, also equipped with an infrared beam and a tray light, was located on the opposite wall. Sucrose pellets (45 mg, Bio-Serv) were delivered to the food tray via an external pellet dispenser. Retractable levers were located on either side of the food tray. Chambers could be illuminated via a houselight and were controlled by software, written in MED-PC by C.A.W., running on an IBM-compatible computer.

Behavioral training.

Animals were initially habituated to the testing chambers with 2 daily 30 min sessions. During these sessions, the response apertures and the food tray were baited with sugar pellets. In subsequent sessions, animals were trained to respond in the apertures when the light inside was illuminated, similar to the procedure used for training rats to perform the five-choice serial reaction time task as has been described in detail previously (Winstanley et al., 2007, 2010). In essence, rats were trained to nosepoke into an illuminated aperture within 10 s to gain food reward. Sessions lasted 30 min, or 100 trials, and the spatial position of the stimulus light varied pseudorandomly between trials. Once rats were capable of responding in the correct aperture with an accuracy of 80% or higher, and omitted <20% of trials, animals were then trained to respond on the retractable levers for reward under a fixed ratio 1 schedule. Only one lever was presented per 30 min session. Once the animal had made >50 lever presses during a 30 min session, the other lever was presented in the subsequent session. The order in which the levers were presented (left/right) was counterbalanced across subjects.

Betting task.

A task schematic is provided in Figure 1. Before task commencement, levers were permanently designated as “safe” or “uncertain,” and these designations were counterbalanced across subjects. Animals initially performed 10 sessions of a forced-choice version of the task in which only one lever extended per trial. All trials were initiated via a nosepoke response into the illuminated food tray. Following such a response, the tray light was extinguished and one, two, or three lights within the five-hole array were illuminated in holes 2, 3, or 4. The number of lights presented equaled the bet size on each trial. Rats were required to make a nosepoke response at each illuminated aperture to turn off the light inside it. Once all the stimulus lights had been turned off in this manner, the levers were inserted into the chamber. A response on the “safe” lever led to the guaranteed delivery of the number of pellets wagered, whereas a response on the “uncertain” lever yielded a 50% chance of double the available safe reward or nothing. The expected utility of both options was therefore equal, and there was no net advantage in choosing one over the other. On rewarded trials, the designated number of pellets was dispensed into the food tray. Regardless of whether reward was delivered, the tray light was illuminated after a response had been made on one of the two levers, and a response at the food tray initiated the next trial. Failure to respond on either lever within 10 s led to the trial being scored as a choice omission. Similarly, failure to respond at all illuminated apertures within 10 s resulted in the trial being scored as a hole omission. Both errors of omission were immediately punished by a 5 s time-out period. During such time-outs, the house light illuminated the chamber and no reward could be earned or trials initiated. Following the time-out period, the tray light was illuminated, indicating the animal could commence another trial.
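The equivalence of the two levers can be checked with a short calculation. The sketch below is illustrative only; the function name `lever_payoffs` is hypothetical and not part of the task software. It computes the expected number of pellets for each lever at each bet size, together with the variance of the uncertain outcome, which grows with the wager even though the expected payoff does not.

```python
from fractions import Fraction


def lever_payoffs(bet_size):
    """Expected pellets (and variance) for each lever at a given bet size.

    Assumes the contingencies described in the text: the safe lever always
    pays the wager; the uncertain lever pays double the wager or nothing
    with 50:50 odds.
    """
    p_win = Fraction(1, 2)
    safe_mean = Fraction(bet_size)
    uncertain_mean = p_win * (2 * bet_size) + (1 - p_win) * 0
    # Variance of a two-outcome gamble around its mean.
    uncertain_var = (p_win * (2 * bet_size - uncertain_mean) ** 2
                     + (1 - p_win) * (0 - uncertain_mean) ** 2)
    return {"safe_mean": safe_mean,
            "uncertain_mean": uncertain_mean,
            "uncertain_var": uncertain_var}


for bet in (1, 2, 3):
    print(bet, lever_payoffs(bet))
```

For every bet size the two means coincide, so any systematic shift in preference across bet sizes cannot be explained by a difference in average payoff.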

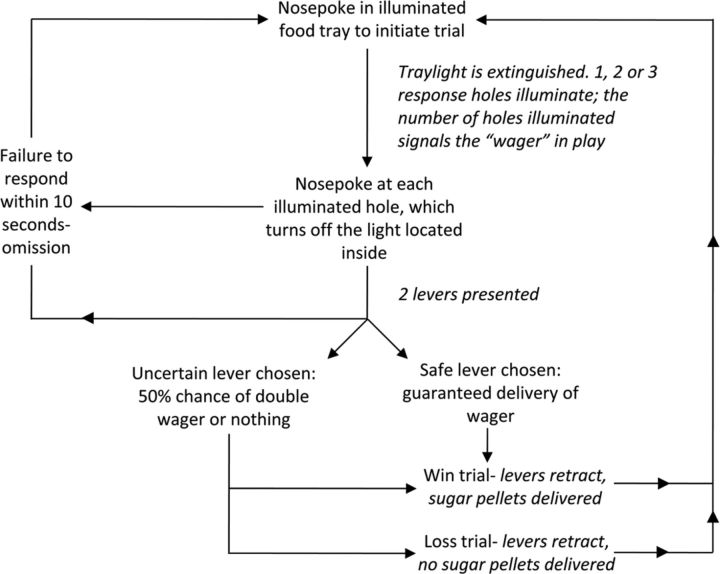

Figure 1.

Schematic diagram showing the trial structure for the betting task. The rat initiated each trial by making a nosepoke response at the illuminated food tray. The tray light was then extinguished, and 1–3 response holes were illuminated, signaling the size of the bet or wager (1–3 sugar pellets). A nosepoke response at an illuminated aperture turned off the light inside it. Once all the aperture lights had been extinguished in this manner, two levers were presented to the rat. Selection of the uncertain lever resulted in a 50:50 chance of receiving either double the wager or nothing, whereas selection of the safe lever always led to delivery of the wager. The trial was scored as a choice omission if the rat failed to choose one of the levers within 10 s. Likewise, if the rat failed to respond at each illuminated response hole within 10 s, the trial was scored as a hole omission.

Each session consisted of 12 blocks of 10 trials each. The bet size remained constant within each block but varied between blocks in a pseudorandom fashion that ensured four blocks of each bet size within a session, and no more than two consecutive blocks of the same bet size. The first four trials of each block were forced choice, such that only the safe (2 trials) or uncertain (2 trials) lever was presented in random order to ensure the animal sampled from both options throughout the session and was familiar with the current contingency in play. Sessions lasted until all 120 trials had been completed, up to a maximum of 30 min.
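One way to generate a session schedule that satisfies these constraints is sketched below. This is not the MED-PC program used in the study; the rejection-sampling approach and the names `block_order` and `session_plan` are assumptions made for illustration.

```python
import random


def block_order(rng, sizes=(1, 2, 3), n_per_size=4, max_run=2):
    """Shuffle the blocks until no bet size occurs in more than max_run
    consecutive blocks (simple rejection sampling; an assumed method)."""
    blocks = [s for s in sizes for _ in range(n_per_size)]
    while True:
        rng.shuffle(blocks)
        windows = (blocks[i:i + max_run + 1]
                   for i in range(len(blocks) - max_run))
        if all(len(set(w)) > 1 for w in windows):
            return list(blocks)


def session_plan(seed=0, trials_per_block=10):
    """Return 12 blocks; each starts with 4 forced-choice trials
    (2 safe, 2 uncertain, random order) followed by free-choice trials."""
    rng = random.Random(seed)
    plan = []
    for bet in block_order(rng):
        forced = ["safe", "safe", "uncertain", "uncertain"]
        rng.shuffle(forced)
        free = ["free"] * (trials_per_block - len(forced))
        plan.append({"bet": bet, "trials": forced + free})
    return plan


plan = session_plan(seed=1)
print([block["bet"] for block in plan])
```

Each generated session contains 120 trials, of which 48 are forced choice and 72 are free choice.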

Animals received five daily sessions per week and were tested until a statistically stable pattern of responding was observed across all variables analyzed over five sessions (total sessions to behavioral stability, 46–54). All animals were able to complete the 120 trials within the 30 min time limit.

Pharmacological challenges.

Once a stable behavioral baseline had been established, the effects of the following compounds were investigated: the psychostimulant d-amphetamine (0, 0.3, 1.0 mg/kg), the DA D2/3 receptor antagonist eticlopride (0, 0.01, 0.03, 0.06 mg/kg), and the DA D1 receptor antagonist R(+)-7-chloro-8-hydroxy-3-methyl-1-phenyl-2,3,4,5-tetrahydro-1H-3-benzazepine hydrochloride (SCH 23390; 0, 0.001, 0.003, 0.01 mg/kg). Drugs were administered according to a series of digram-balanced Latin-square designs (for doses A–D: ABCD, BDAC, CADB, DCBA) (Cardinal and Aitken, 2006). Drug injections were given on a 3 d cycle starting with a baseline session. On the following day, drug or saline would be administered 10 min before testing. On the third day, animals remained in their home cages. Animals were tested drug free for at least 1 week between each series of injections to allow a stable behavioral baseline to be re-established, and to ensure that exposure to any of the compounds had not had lasting effects on behavior.
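A digram-balanced Latin square has two properties: every dose appears once in each row and once in each ordinal position, and every ordered pair of consecutive doses occurs exactly once across the design. The sketch below checks both properties for any candidate set of sequences. For illustration it is applied to a square built with the standard Williams construction for an even number of treatments, which is one of several valid squares; the function names are hypothetical.

```python
def is_digram_balanced(rows):
    """True if rows form a Latin square in which each ordered pair of
    adjacent treatments occurs exactly once."""
    n = len(rows)
    symbols = set(rows[0])
    if len(symbols) != n:
        return False
    # Row property: each row is a permutation of the same symbols.
    if any(len(r) != n or set(r) != symbols for r in rows):
        return False
    # Column property: each symbol appears once per ordinal position.
    if any(len({r[j] for r in rows}) != n for j in range(n)):
        return False
    digrams = [r[j] + r[j + 1] for r in rows for j in range(n - 1)]
    return len(set(digrams)) == len(digrams) == n * (n - 1)


def williams_square(symbols):
    """Williams design for an even number of treatments."""
    n = len(symbols)
    first, lo, hi = [0], 1, n - 1
    while len(first) < n:
        first.append(lo)
        lo += 1
        if len(first) < n:
            first.append(hi)
            hi -= 1
    return ["".join(symbols[(x + k) % n] for x in first) for k in range(n)]


square = williams_square("ABCD")
print(square, is_digram_balanced(square))
# A plain cyclic square is Latin but repeats digrams, so it fails the check.
print(is_digram_balanced(["ABCD", "BCDA", "CDAB", "DABC"]))
```

Balancing the digrams in this way means that each dose is preceded by every other dose equally often, which limits first-order carry-over effects between injections.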

All drug doses were calculated as the salt and dissolved in 0.9% sterile saline. All drugs were prepared fresh daily and administered via the intraperitoneal route. d-Amphetamine sulfate was purchased under a Health Canada exemption from Sigma-Aldrich. SCH 23390 and eticlopride hydrochloride were purchased from Tocris Bioscience.

PET analysis.

In a subgroup of rats (n = 9), D2/3 receptor availability and density were assessed via PET and autoradiography. All rats were tested for a week following the last drug challenge to ensure that drug administration had not led to any lasting changes in behavior. Animals then remained in their home cages and were fed 20 g of food per day until it was possible to perform the PET scan (46–212 d after the last behavioral test session).

PET studies were performed on a Siemens micro-PET Focus F120 scanner (Laforest, 2007), which has a resolution of ∼1.8 mm3. Rats were maintained under 2.5% isoflurane anesthesia throughout the scanning procedure. Six minutes of transmission data were collected with a 57Co source to allow the calculation of attenuation and scatter corrections. Following intravenous injection of [11C]raclopride (1.02 ± 0.02 μCi/g; specific activity, >4000 Ci/mmol), 1 h of emission data were collected. Data were histogrammed into 6 × 30 s, 2 × 60 s, 5 × 300 s, 2 × 450 s, and 2 × 480 s frames and reconstructed using Fourier rebinning and filtered backprojection. Corrections for normalization, scatter, and attenuation were applied during reconstruction.

Rectangular regions of interest (ROIs) were placed bilaterally on the dorsal striatum across three image slices (2.6 × 3.5 × 2.4 mm) and on the cerebellum across three image slices (6.9 × 2.6 × 2.4 mm). Average time–activity curves for the left striatum, right striatum, and cerebellum were generated from these ROIs. Using the graphical Logan method (Logan, 1996), the binding potential (BPND) was calculated for the left and right striatum using the cerebellum as a reference region.
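The general logic of a reference-region Logan analysis can be sketched as follows. This is a simplified illustration, not the analysis pipeline used here: it omits the reference-region efflux term of the full model, uses synthetic time–activity curves, and the linearization start time `t_star` is an arbitrary assumption. The slope of the late, linear portion of the plot estimates the distribution volume ratio (DVR), and BPND = DVR − 1.

```python
import math


def cumulative_trapezoid(t, y):
    """Running integral of y over t by the trapezoidal rule."""
    out = [0.0]
    for i in range(1, len(t)):
        out.append(out[-1] + 0.5 * (y[i] + y[i - 1]) * (t[i] - t[i - 1]))
    return out


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxx = sum((a - mx) ** 2 for a in x)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return sxy / sxx


def logan_reference_bp(t, c_target, c_ref, t_star):
    """Simplified reference Logan plot: regress int(C_target)/C_target on
    int(C_ref)/C_target for t >= t_star; returns BP_ND = slope - 1."""
    int_target = cumulative_trapezoid(t, c_target)
    int_ref = cumulative_trapezoid(t, c_ref)
    xs, ys = [], []
    for ti, ct, it, ir in zip(t, c_target, int_target, int_ref):
        if ti >= t_star and ct > 0:
            xs.append(ir / ct)
            ys.append(it / ct)
    return ols_slope(xs, ys) - 1.0


# Synthetic curves (hypothetical): the target tracks the reference at a
# fixed ratio of 2.5, so the recovered BP_ND should be close to 1.5.
t = [float(m) for m in range(0, 61)]             # minutes
c_ref = [ti * math.exp(-ti / 20.0) for ti in t]  # arbitrary units
c_target = [2.5 * c for c in c_ref]
print(round(logan_reference_bp(t, c_target, c_ref, t_star=20.0), 3))
```

With real data the two curves do not maintain a fixed ratio, and the choice of `t_star` and the handling of the efflux term both affect the estimate.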

Autoradiography.

One to two hours after the PET scan had been completed, each animal was killed via decapitation and the brain removed, frozen in isopentane, and stored at −80°C. Brains were sliced into 16 μm coronal sections along the same axis as the collected PET data and mounted on glass slides. Sections from the dorsal striatum (anterior, medial, and posterior) were taken. To measure D2/3 receptor availability, slides were incubated in 3 nM [11C]raclopride to determine total binding, or a mixture of 3 nM [11C]raclopride and 10 μM (+)-butaclamol to determine nonspecific binding. Standard curves were prepared by serially diluting a known amount of [11C]raclopride and pipetting a drop from each dilution onto a small piece of paper (Strome, 2005). Following incubation, the slides and standard curves were dried and apposed to radiosensitive phosphor screens for 2 h. These screens were then read out using a Cyclone phosphor imaging system. The resulting optical densities were converted to pmol/ml via the standard curves. Rectangular ROIs with the same in-plane (or in-slice) area as those used in the PET analysis were placed on the striatum in each of the imaged slices. ROIs were also placed in the nucleus accumbens (area of bilateral ellipses, 2.5 mm2), medial prefrontal cortex (area of bilateral ellipses, 2.0 mm2), and ventrolateral orbitofrontal cortex (area of rectangle, 3.4 mm2). A single binding measurement for each region was reported by averaging the measured optical density across all relevant tissue slices in a given ROI and converting to pmol/ml using the standard curve data. A similar method was used to measure D1 receptor availability with [3H]SCH 23390 (PerkinElmer), with the exception that commercially available [3H] microscales (GE Healthcare) were used to convert the measured optical densities.

Behavioral data analysis.

All behavioral statistical analyses were conducted using SPSS (version 16, IBM). The percentage choice rather than the absolute number of choices of the uncertain lever was analyzed to prevent any drug-induced changes in the number of trials completed from confounding our analyses. The percentage of trials on which the uncertain lever was chosen for each bet size was therefore calculated according to the following formula: [(number of times uncertain lever chosen)/(total number of trials)] × 100. These data were arcsine transformed before analysis to minimize any artificial ceiling effects (i.e., 100%). Other measurements analyzed were the following: the number of hole omissions, the number of choice omissions, the latency to choose a lever, the latency to collect reward at the food tray, and the number of trials completed per session. Stable baseline behavior across five sessions was determined via repeated-measures ANOVA for all variables measured, with session (5 levels: sessions 1–5) and bet size (3 levels: 1–3 sugar pellets) as within-subjects factors. A third within-subjects factor, lever choice (2 levels: safe, uncertain) was also included for all variables except percentage choice of the uncertain lever. The number of trials completed was also analyzed via repeated-measures ANOVA for all variables measured, with session (5 levels: sessions 1–5) as a within-subjects factor. The order in which blocks of different bet size were presented was controlled for within the task design and was therefore not included as an additional factor; if the block sequence was influencing behavior, then it would be impossible to see consistent effects of bet size across sessions, nor to reach statistically stable behavior as the data would vary day to day.
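The percentage-choice formula and the subsequent transformation can be illustrated as follows. The text does not state which form of the arcsine transform was applied; the sketch assumes the common variant arcsin(√p), with p expressed as a proportion, and the function names are hypothetical.

```python
import math


def percent_uncertain(n_uncertain, n_trials):
    """Percentage of trials on which the uncertain lever was chosen."""
    if n_trials <= 0:
        raise ValueError("n_trials must be positive")
    return 100.0 * n_uncertain / n_trials


def arcsine_transform(percent):
    """Arcsine square-root transform of a percentage (assumed variant).

    Returns a value in radians between 0 and pi/2.
    """
    return math.asin(math.sqrt(percent / 100.0))


# Hypothetical counts for one rat at one bet size.
pct = percent_uncertain(n_uncertain=12, n_trials=24)
print(pct, arcsine_transform(pct))
```

The transform stretches values near 0% and 100% relative to values near 50%, which is why it is often applied to percentage data that approach a ceiling.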

It became clear during analysis of these baseline data that individual animals differed dramatically in their wager sensitivity. Rats were therefore divided into two groups based on a linear regression analysis. A singular measure of wager sensitivity was obtained for each rat as follows. The choice of the uncertain option at each bet size was averaged across the five previous baseline sessions and plotted in Microsoft Excel to generate an equation of the form y = mx + c, in which the factor m indicates the gradient of the line (i.e., the degree to which choice of the risky option changed as a function of increasing bet size). This distinction was used as a between-subjects factor (group, 2 levels) and henceforth incorporated into all ANOVAs. Data from pharmacological challenges were also analyzed using repeated-measures ANOVAs with drug dose [4 levels: vehicle plus 3 doses of compound (with the exception of amphetamine, which had 3 levels: vehicle plus 2 doses of compound)] and bet size as within-subjects factors, and group as a between-subjects factor. In all analyses, any significant (p < 0.05) main effects were followed up post hoc using one-way ANOVA or Student's t tests. When data values are given in the text, the mean ± SEM are provided.
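The gradient m can equally be obtained from an ordinary least-squares fit outside a spreadsheet. The sketch below assumes that the input is the mean percentage choice of the uncertain lever at each bet size, averaged across baseline sessions; the example values are hypothetical and the function name is illustrative.

```python
def wager_sensitivity(pct_by_bet):
    """Least-squares fit of y = m*x + c, where x is bet size and y is the
    percentage choice of the uncertain lever. Returns (m, c)."""
    xs = sorted(pct_by_bet)
    ys = [pct_by_bet[x] for x in xs]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    m = sxy / sxx
    c = mean_y - m * mean_x
    return m, c


# Hypothetical rat whose uncertain choice falls by 15 points per pellet.
m, c = wager_sensitivity({1: 60.0, 2: 45.0, 3: 30.0})
print(m, c)  # -15.0 75.0
```

A strongly negative m indicates a shift toward the safe lever as the wager increases, whereas a value near zero indicates that choice was largely unaffected by bet size.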

D2/3 striatal binding and wager sensitivity.

It was not possible to perform PET scans on all the rats. Therefore nine were selected pseudorandomly, the behavior of which was representative of the variation inherent in the group as a whole. ANOVAs were conducted to ensure that, statistically, the behavior of this subgroup did not significantly differ from that of the remainder of the cohort across the course of the experiment. Choice data from five baseline sessions, occurring both before and following all pharmacological challenges, were analyzed by ANOVA as described above with session and bet size as within-subjects factors, plus an additional between-subjects variable: PET group (2 levels, scanned and not scanned). The degree to which the level of wager sensitivity exhibited during these baseline sessions predicted the availability of D2/3 receptors in the dorsal striatum, as measured through BPND, was then determined. The relationship between wager sensitivity and the density of D1 or D2/3 receptors in the dorsal striatum, as measured by autoradiography, was also analyzed. The degree to which the PET and autoradiography data correlated was also determined.

Results

Baseline performance of the betting task

Lever choice

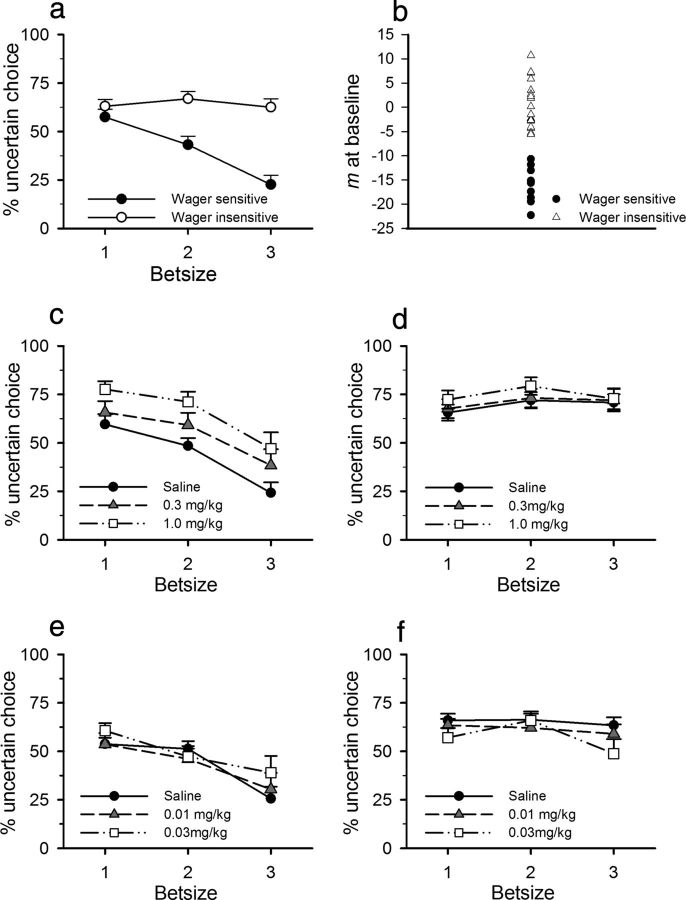

Objectively there was no optimum strategy to the betting task: exclusive choice of either option did not yield any greater or lesser reward. However, animals showed significant preferences for one option over the other, and these preferences were modulated by the bet size in play (Fig. 2a; bet size: F(2,60) = 32.498, p < 0.0001). Furthermore, there were marked individual differences in the expression of such preferences, and these differences were exemplified by the degree to which animals showed a linear shift away from the uncertain lever as the bet size increased. Hence, animals were separated into two groups on the basis of their wager sensitivity (m in the equation y = mx + c; mean, −5.06 ± 10.50; Fig. 2b). Animals that chose the safe lever more as the wager increased, demonstrated by an m value of ≥1 SD below a theoretical 0, were classified as wager sensitive (n = 10), whereas all other animals were classified as wager insensitive (n = 22) (bet size–group: F(2,60) = 37.783, p < 0.0001; wager-insensitive bet size: F(2,42) = 3.309, p = 0.06; wager-sensitive bet size: F(2,18) = 57.596, p < 0.0001; bet size 1 vs 2: F(1,9) = 13.298, p = 0.005; 2 vs 3: F(1,9) = 114.551, p < 0.0001). Although there was a trend for bet size to influence choice in the wager-insensitive group, this was predominantly mediated by a slight increase in choice of the uncertain option at bet size 2. This was unlikely to represent a meaningful pattern of responding and certainly did not compare with the linear decrease in uncertain choice demonstrated by wager-sensitive animals. Perhaps unsurprisingly, the degree of wager sensitivity correlated strongly with the total choice of the uncertain lever (r2 = 0.522, p = 0.002). However, the classification of rats as wager sensitive versus wager insensitive captured more than just general preference for one lever or the other, in that choice behavior of the two groups was indistinguishable at the smallest bet size but pulled apart as wager-sensitive rats decreased their preference for the uncertain option as the bet size increased (bet size 1 group: F(1,27) = 1.759, not significant; bet size 2 group: F(1,27) = 10.681, p = 0.003; bet size 3 group: F(1,27) = 23.406, p < 0.0001).
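The grouping rule can be expressed compactly. The sketch below assumes that "≥1 SD below a theoretical 0" means a gradient m at or below minus one standard deviation of the cohort's m values, and that the sample standard deviation is used; both are interpretations rather than details given in the text. The slope values are hypothetical.

```python
import statistics


def classify_by_wager_sensitivity(slopes):
    """Label each rat as wager sensitive if its gradient m lies at least
    1 SD below zero, and as wager insensitive otherwise."""
    sd = statistics.stdev(slopes)  # sample SD (an assumption)
    threshold = -sd
    return ["wager sensitive" if m <= threshold else "wager insensitive"
            for m in slopes]


# Hypothetical gradients for six rats.
slopes = [-20.0, -2.0, 1.0, 3.0, -15.0, 0.0]
for m, label in zip(slopes, classify_by_wager_sensitivity(slopes)):
    print(m, label)
```

Anchoring the cutoff to zero rather than to the cohort mean means that the classification reflects departure from indifference to bet size, not departure from the average rat.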

Figure 2.

Rats exhibit individual differences in preference for the uncertain reward, and this determines the response to amphetamine and eticlopride. a, Wager-sensitive rats shifted their preference as the bet size increased, whereas the choice pattern of wager-insensitive rats did not change. b, The degree of wager sensitivity shown by each rat, as indicated by the gradient (m) of the line obtained by plotting choice of the uncertain lever against bet size. c, d, Amphetamine increased choice of the uncertain option in wager-sensitive (c) but not wager-insensitive rats (d). e, f, In contrast, eticlopride had no effect in wager-sensitive rats (e), but decreased uncertain choice in wager-insensitive animals (f). Data shown are mean ± SEM.

Other behavioral measurements

Both wager-sensitive and wager-insensitive animals were quicker to select the uncertain over the safe lever, regardless of bet size (choice: F(1,28) = 11.238, p = 0.002; group: F(1,28) = 0.863, not significant; mean choice latency ± SEM, safe: 1.58 ± 0.03; mean choice latency ± SEM, uncertain: 1.40 ± 0.03). Statistically, all animals were also quicker to collect the reward as the bet size increased (bet size: F(2,56) = 16.445, p < 0.0001; bet size–group: F(2,56) = 0.015, not significant; bet size 1 vs 2: F(1,28) = 12.493, p < 0.001; bet size 2 vs 3: F(1,28) = 16.521, p < 0.0001), although the magnitude of such changes was minimal (bet size 1: 0.42 ± 0.006 s; bet size 2: 0.4 ± 0.005 s; bet size 3: 0.39 ± 0.005 s). All animals completed a similar number of trials per session (wager sensitive, 120.0 ± 0.0; wager insensitive, 119.55 ± 0.26; group: F(1,30) = 0.770, not significant). There were so few omissions made per session that meaningful analysis was compromised due to the large number of cells containing null values (all rats: hole omissions, 0.006 ± 0.0004; choice omissions, 0.44 ± 0.06).

Effects of amphetamine administration on performance of the betting task

Lever choice.

Amphetamine significantly increased choice of the uncertain option in the wager-sensitive group, yet did not affect choice behavior in the wager-insensitive animals (Fig. 2c,d; group: F(1,30) = 6.560, p = 0.016; dose: F(2,60) = 5.056, p = 0.009; wager sensitive to dose: F(2,18) = 6.483, p = 0.008; wager insensitive to dose: F(2,42) = 0.806, not significant; wager sensitive: saline vs 0.3 mg/kg amphetamine: F(1,9) = 3.647, p = 0.088; saline vs 1.0 mg/kg amphetamine: F(1,9) = 13.307, p = 0.005).

Other behavioral measures.

Amphetamine increased choice latencies, although this effect was only evident at the highest dose (dose: F(2,56) = 13.363, p < 0.0001; saline vs 0.3 mg/kg amphetamine: F(1,28) = 0.019, not significant; saline vs 1.0 mg/kg amphetamine: F(1,28) = 13.719). Both sets of animals displayed the same pattern of responding seen at baseline whereby they were quicker to respond on the uncertain lever (choice: F(1,28) = 8.024, p = 0.008; safe, 1.93 ± 0.19; uncertain, 1.66 ± 0.04). The latency to collect reward was not affected by amphetamine administration (dose: F(2,42) = 1.106, not significant; dose–choice–group: F(2,42) = 0.623, not significant), but the highest dose increased the number of choice omissions made (wager sensitive, 0.40 ± 0.18; wager insensitive, 0.53 ± 0.16; dose: F(2,60) = 5.264, p = 0.029; saline vs 1.0 mg/kg amphetamine: F(1,30) = 5.263, p = 0.029). Hole omissions were not affected following amphetamine administration (dose: F(2,60) = 2.344, p = 0.105; group: F(1,30) = 0.623, not significant). Although the higher dose of amphetamine appeared to decrease the number of trials completed, this effect fell short of significance when compared with saline administration (1.0 mg/kg amphetamine: wager sensitive, 118.9 ± 1.1; wager insensitive, 112.5 ± 4.59; dose: F(2,60) = 2.616, not significant; saline vs 1.0 mg/kg amphetamine: F(1,30) = 2.066, not significant).

Effects of eticlopride administration on performance of the betting task

At the highest dose, eticlopride reduced the total number of trials completed to <50%. Hence, this dose was not included in the final analysis.

Lever choice.

Eticlopride reduced choice of the uncertain lever in wager-insensitive rats, yet did not alter choice behavior in wager-sensitive animals (Fig. 2e,f; dose–group: F(2,60) = 2.729, p = 0.073; dose–group–bet size: F(4,120) = 2.821, p = 0.028; wager sensitive to dose: F(2,18) = 0.405, not significant; wager insensitive to dose: F(2,42) = 5.250, p < 0.009; saline vs 0.01 mg/kg eticlopride: F(1,21) = 4.477, p = 0.046; saline vs 0.03 mg/kg eticlopride: F(1,21) = 8.601, p < 0.008).

Other behavioral measures.

Eticlopride caused a general increase in lever choice latency, regardless of group (dose: F(1,29) = 13.794, p = 0.001; group: F(1,29) = 0.32, not significant). However, this effect was only significant at the higher dose included in the analysis, and animals retained the tendency to choose the uncertain lever more quickly (saline vs 0.01 mg/kg eticlopride: F(1,29) = 0.008, not significant; saline vs 0.03 mg/kg eticlopride: F(1,29) = 5.23, p = 0.03; choice: F(1,29) = 13.794, p = 0.001). Contrary to the increases in lever choice latency, eticlopride did not affect the time taken to collect reward (dose: F(2,34) = 0.267, not significant; dose–choice–group: F(2,34) = 0.99, not significant). Although the drug did not affect the number of choice omissions made (dose: F(2,58) = 1.626, not significant; dose–group: F(2,58) = 0.132, not significant), the higher dose of eticlopride included in the analysis significantly increased the number of hole omissions in both groups, although these numbers remained low (wager sensitive, 3.57 ± 0.67; wager insensitive, 2.82 ± 0.45; dose: F(2,58) = 29.143, p < 0.0001; saline vs 0.03 mg/kg eticlopride: F(1,29) = 37.679, p < 0.0001). The higher dose also decreased the number of trials completed in both groups (wager sensitive, 101.18 ± 9.11; wager insensitive, 78.4 ± 2.99; dose: F(2,60) = 24.854, p < 0.0001; saline vs 0.03 mg/kg eticlopride: F(1,30) = 31.663, p < 0.0001).

Effects of SCH 23390 administration on performance of the betting task

Lever choice.

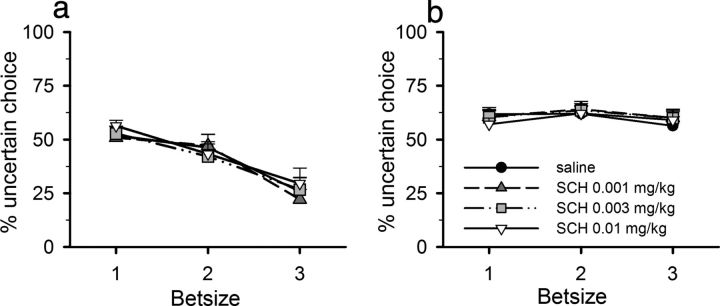

SCH 23390 did not affect lever choice behavior in either group (Fig. 3; dose–group: F(3,90) = 0.507, not significant).

Figure 3.

Lack of effect of the D1 receptor antagonist SCH 23390 on choice behavior. SCH 23390 did not alter preference for the uncertain lever at any bet size in either wager-sensitive (a) or wager-insensitive (b) rats. Data shown are mean ± SEM.

Other behavioral measures.

In both groups, the highest dose of SCH 23390 significantly increased the time taken to choose either lever (wager sensitive, 1.48 ± 0.04; wager insensitive, 1.53 ± 0.03; dose: F(3,90) = 4.791, p = 0.004; dose–choice–group: F(3,90) = 1.925, not significant; saline vs 0.01 mg/kg SCH 23390: F(1,30) = 13.066, p = 0.001). SCH 23390 did not affect the latency to collect reward (dose: F(3,90) = 0.216, not significant; dose–choice–group: F(3,90) = 0.406, not significant). The highest dose administered significantly increased hole omissions (wager sensitive, 2.30 ± 0.50; wager insensitive, 1.65 ± 0.28; dose: F(3,90) = 32.869, p < 0.0001; saline vs 0.01 mg/kg SCH 23390: F(1,30) = 38.63, p < 0.0001) and decreased the number of trials completed (wager sensitive, 83.7 ± 14.88; wager insensitive, 100.91 ± 5.28; dose: F(3,90) = 25.709, p < 0.0001; saline vs 0.01 mg/kg SCH 23390: F(1,30) = 25.247, p < 0.0001).

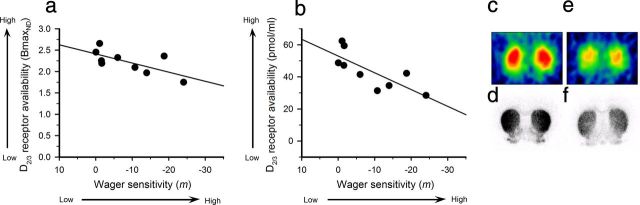

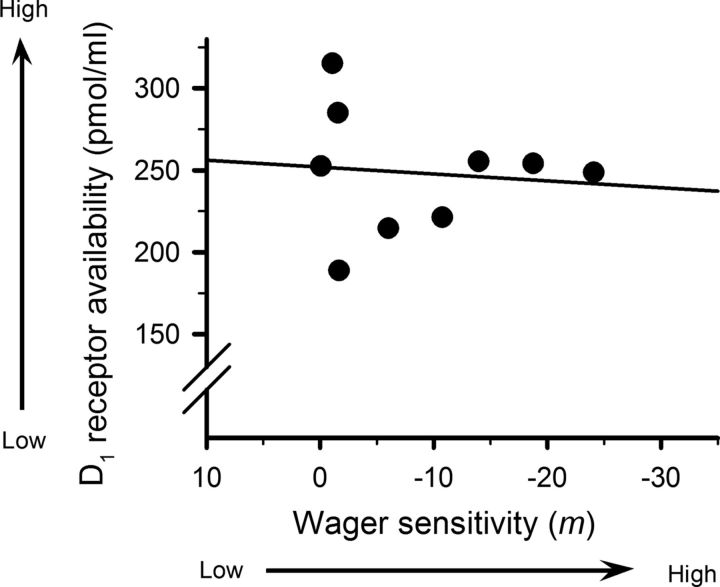

Correlations between wager sensitivity and striatal D2/3 or D1 receptor density

Nine animals were selected randomly for PET scanning. The selected group showed no difference in lever choice behavior from the rest of the group at baseline (bet size–PET group: F(2,20) = 1.336, not significant). Higher wager sensitivity correlated with lower levels of D2/3 receptor availability in the dorsal striatum (Fig. 4a; r2 = 0.483, p = 0.04). Autoradiography analysis confirmed that this reduction was caused by a selective decrease in the density of dorsal striatal D2/3 receptors (Fig. 4b; r2 = 0.601, p = 0.01), rather than enhanced DA release. Both the PET and autoradiography binding measurements were likewise strongly correlated with each other (r2 = 0.60, p = 0.02). In contrast, no significant correlations were observed between wager sensitivity and D2/3 receptor density in the nucleus accumbens, ventrolateral orbitofrontal cortex, or medial prefrontal cortex (r2 = 0.17, not significant; r2 = 0.12, not significant; r2 = 0.12, not significant, data not shown). Similarly, there was no significant correlation between wager sensitivity and D1 receptor binding in the striatum (Fig. 5; r2 = 0.03, not significant).

Figure 4.

Wager sensitivity correlates with striatal D2/3 receptor density. a, b, Striatal D2/3 receptor availability, measured by (a) PET as a tissue input BPND and (b) autoradiography using [11C]raclopride, predicts wager sensitivity as estimated by coefficient m (high negative values indicate high wager sensitivity). c, d, D2/3 receptor availability in a wager-insensitive animal as measured by PET and autoradiography respectively. e, f, The same data from a wager-sensitive rat. Data are shown on the same scale. The binding measurements obtained from PET and autoradiography analyses were strongly correlated (r² = 0.60, p = 0.02).

Figure 5.

Null relationship between the degree of wager sensitivity and [3H]SCH 23390 binding to D1 receptors in the striatum. Wager sensitivity could not be predicted from striatal D1 receptor binding.

Discussion

Here, animals chose between a “safe” lever, which guaranteed delivery of the wager, and an “uncertain” lever, which delivered double the bet size or nothing with 50:50 odds. Some rats appeared largely insensitive to bet size, maintaining a moderate preference for the uncertain option. However, others drastically shifted their preference toward guaranteed rewards as the wager increased, despite extensive training. Such a choice pattern can be considered irrational in that switching from the uncertain option did not confer any benefit.
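The claim that switching confers no benefit follows directly from the task contingencies, and a short calculation makes it explicit. The sketch below is an illustration written for this purpose, not code used in the study; the function name `outcome_stats` is hypothetical. It computes the expected number of pellets and the outcome variance for each lever at each bet size, using only the contingencies described above (safe: the wager with certainty; uncertain: double the wager or nothing with probability 0.5 each).

```python
def outcome_stats(bet_size, lever, p_win=0.5):
    """Return (expected pellets, variance) for a single choice.

    bet_size: number of pellets at stake (1, 2, or 3 in the task).
    lever:    "safe" or "uncertain".
    p_win:    probability that the uncertain lever pays out (0.5 in the task).
    """
    if lever == "safe":
        outcomes = [(1.0, bet_size)]
    elif lever == "uncertain":
        outcomes = [(p_win, 2 * bet_size), (1.0 - p_win, 0)]
    else:
        raise ValueError(f"unknown lever: {lever!r}")
    mean = sum(p * x for p, x in outcomes)
    variance = sum(p * (x - mean) ** 2 for p, x in outcomes)
    return mean, variance


for bet in (1, 2, 3):
    safe_mean, safe_var = outcome_stats(bet, "safe")
    unc_mean, unc_var = outcome_stats(bet, "uncertain")
    print(
        f"bet={bet}: safe mean={safe_mean}, var={safe_var}; "
        f"uncertain mean={unc_mean}, var={unc_var}"
    )
```

The expected payoff of the two levers is identical at every bet size, whereas the variance of the uncertain lever grows with the square of the wager. A shift toward the safe lever at larger bets therefore tracks outcome variability rather than any difference in average reward.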

Amphetamine increased choice of the uncertain option in these wager-sensitive rats, whereas the D2/3 antagonist eticlopride had the opposite effect in wager-insensitive animals. Such group differences in the response to dopaminergic drugs suggest that individual variation in DA signaling, particularly through D2/3 receptors, may influence the degree of wager sensitivity. PET and autoradiography analyses confirmed that higher wager sensitivity correlated with lower dorsal striatal D2/3 receptor availability and density, a pattern thought to confer vulnerability to stimulant abuse. Hence, irrational choice under uncertainty, a putative risk factor for PG, was associated with a biomarker similar to that linked to chemical dependency, perhaps indicating that susceptibility to chemical and behavioral addictions is underpinned by a common biological phenotype. It is possible that behavioral training, or the pharmacological challenges, altered D2/3 receptor levels. However, as all animals were exposed to the same drugs and testing protocol, these factors are unlikely to account for the relationship between wager sensitivity and receptor expression.

The results may seem counterintuitive; one might expect a preference for uncertainty, rather than greater wager sensitivity, to be associated with addiction vulnerability (Lane and Cherek, 2001). Indeed, preferential choice of high-risk options in tests like the Iowa Gambling Task has been observed in substance abusers, pathological gamblers, and those at risk for addiction (Bechara et al., 2001; Goudriaan et al., 2005; Garon et al., 2006). However, in such paradigms, the “tempting” uncertain options are ultimately disadvantageous and result in less reward. Furthermore, subjects are unaware of the reinforcement contingencies at the outset, whereas the degree of uncertainty was expected in our well-trained rats (for discussion, see Yu and Dayan, 2005; Platt and Huettel, 2008). Exclusive choice of either option in the rodent task also led to identical net reward over time. Hence, we could examine biases in the response to uncertainty without confounds caused by variation in learning rates, or how subjects evaluated expected differences in net gain, both of which can influence the neural circuitry involved and the degree of risk aversion observed (Yu and Dayan, 2005; Schönberg et al., 2007; Platt and Huettel, 2008). Our data therefore match clinical observations that the degree of biased decision-making under uncertainty, rather than simple preference for high risk, differentiates problem gamblers from the general population (Coventry and Brown, 1993; Michalczuk et al., 2011).

Indeed, some of our findings suggest that wager sensitivity and the preference for uncertainty are dissociable. Amphetamine increased choice of the uncertain lever across all bet sizes in wager-sensitive rats without affecting the degree of wager sensitivity. As the bet sizes were presented in random order, it is difficult to argue that this resulted from amphetamine's ability to increase perseveration (Robbins, 1976). Given that amphetamine did not alter the consistent preference for the uncertain lever in wager-insensitive rats, it is doubtful that amphetamine increased switching tendencies (Evenden and Robbins, 1985; Weiner, 1990). Baseline choice of the uncertain lever was also at 70% in this group, leaving plenty of opportunity for drugs to increase preference (St. Onge and Floresco, 2009) and making a ceiling effect unlikely. The most parsimonious conclusion is that amphetamine increased wager-sensitive rats' preference for uncertain options, replicating previous findings using a probability discounting task (St. Onge and Floresco, 2009). These data also suggest that wager-insensitive rats were less susceptible to amphetamine's effects, potentially mirroring the finding that amphetamine primes the desire to gamble in gamblers but not in healthy controls (Zack and Poulos, 2004).

It may be adaptive for animals to preferentially explore options that have less predictable outcomes to develop a better model of the environment (Pearce and Hall, 1980; Hogarth et al., 2008). When there is no cost to choosing probabilistic options, many healthy animals and humans prefer uncertain outcomes (Adriani and Laviola, 2006; Hayden et al., 2008; Hayden and Platt, 2009). Social psychology experiments suggest that, contrary to our expectations, experiencing some degree of uncertainty engenders greater feelings of happiness, again indicating we may be predisposed to favor uncertain outcomes in the absence of negative consequences (Wilson et al., 2005).

The question remains as to what governs the shift in choice seen in wager-sensitive rats. Assuming behavioral choices signify cognitive processes, numerous experiments demonstrate that rats can integrate reward delivery over time (Balleine and Dickinson, 1998). It therefore seems unlikely that wager-sensitive rats were unaware of the reinforcement contingencies in play. Impaired temporal integration should also result in constant preference for the safe option, rather than a shift toward guaranteed rewards as bet size increases. As wager-sensitive and wager-insensitive rats completed comparable numbers of trials, and exhibited similar latencies to choose a lever and collect reward, it is hard to infer that wager-sensitive animals were less able or motivated to perform the task. Likewise, these latency data do not suggest that the two groups differed in their subjective appraisal of relative reward value, although it would be useful in future to demonstrate this explicitly given the assumption that animals evaluate increases in reward value linearly.

Data suggest that, as need increases, animals switch from preferring certain/reliable options associated with constant incremental gain to uncertain/probabilistic options that lead to intermittent but larger rewards (Bateson and Kacelnik, 1995; Caraco, 1981; Schuck-Paim et al., 2004). Such shifts can be explained: the bigger reward might guarantee survival when gradual accumulation of smaller rewards would not satisfy the need in time. However, such factors cannot explain the shift toward the uncertain option as bet size decreased here. Although satiety levels probably fluctuated throughout each session, the order in which animals experienced the different bet sizes was randomized within and between daily tests. Delaying reward delivery can also decrease preference for uncertain options, as can increasing the intertrial interval (Bateson and Kacelnik, 1997; Hayden and Platt, 2007). However, reward was always delivered immediately following selection of either lever in the current experiment. Nevertheless, the intermittent reward delivery on the uncertain lever inevitably led to longer gaps between rewards. It is therefore possible that the choice pattern exhibited by wager-sensitive animals reflected a myopia for immediate rewards. Wager sensitivity could therefore contribute to poor decision-making under risk due to a short-sighted focus on immediate gains over future returns. Indeed, pathological gamblers discount delayed rewards more steeply, which correlates with the degree of cognitive distortions observed (Dixon et al., 2003; Michalczuk et al., 2011).

Wager sensitivity is somewhat reminiscent of the risk-averse arm of the framing effect, in which subjects are less likely to gamble for a bigger reward if a smaller but guaranteed gain is available (Kahneman, 2003). Risk aversion increases with the size of the wager, even though the net gain remains constant. In seeking to explain this apparent irrationality, dual-process theorists posit two decision-making modes: judgments made in the deliberative mode are effortful and require thoughtful analyses, whereas choices made in the affective mode are effortless, intuitive, and often influenced by heuristics (Osman, 2004; Evans, 2008; Strough et al., 2011). Wager-sensitive and wager-insensitive animals could therefore be approaching the task in the affective and deliberative modes respectively. However, affective choices should be quicker to enact, yet decision-making speed was equivalent across the two groups and across bet size, suggesting that animals also did not find the decision more effortful as the wager increased. The fact that rats exhibit biased decision-making under uncertainty may, nevertheless, imply a limited role for complex reasoning. Furthermore, making decisions using the framing heuristic is associated with decreased frontocortical activation and increased recruitment of the amygdala, suggesting that this irrational behavior is primarily driven by subcortical, emotional processing (De Martino et al., 2006). Decreased striatal D2/3 receptor expression is linked to hypofunction within the orbitofrontal cortex of substance abusers (Volkow and Fowler, 2000), though whether such reduced cortical activity likewise contributes to wager sensitivity remains to be determined.

Neuroimaging data indicate that decreasing DA-modulated striatal activity, via a D2 receptor antagonist, alters the representation of reward prediction errors in this region, leading to impaired value-based decision-making (Pessiglione et al., 2006). Decreased striatal activation has also been observed in problem gamblers during risk-based decision-making (Reuter et al., 2005). It is therefore conceivable that individual differences in striatal D2/3 receptor expression would mediate the behavioral effects of eticlopride and influence the degree of wager sensitivity, as observed here. Rats that make significantly more premature, or impulsive, responses in an attentional task also express fewer striatal D2/3 receptors, and develop a pattern of drug-taking that resembles addiction (Dalley et al., 2007). Although disparate cognitive processes control motor impulsivity and decision-making biases, both phenomena have been linked to addictive disorders (Verdejo-García et al., 2008; Clark, 2010), and the current data suggest that the underlying neurobiology may overlap at least at the level of the striatum. Further work is needed to explore the interdependence of these behaviors, and whether similar brain mechanisms confer vulnerability to chemical and behavioral addictions. Such information could prove invaluable when considering whether treatments will be effective in multiple addiction disorders.

Footnotes

This work was supported by an operating grant awarded to C.A.W. from the Canadian Institutes of Health Research (CIHR), and a Natural Sciences and Engineering Research Council of Canada grant awarded to V.S. C.A.W. and V.S. also receive salary support through the Michael Smith Foundation for Health Research and C.A.W. through the CIHR New Investigator Award program.

C.A.W. has previously consulted for Theravance, a biopharmaceutical company, on an unrelated matter. The other authors declare no competing financial interests.

References

- Adriani W, Laviola G. Delay aversion but preference for large and rare rewards in two choice tasks: implications for the measurement of self-control parameters. BMC Neurosci. 2006;7:52. doi: 10.1186/1471-2202-7-52.

- Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- Bateson M, Kacelnik A. Preferences for fixed and variable food sources: variability in amount and delay. J Exp Anal Behav. 1995;63:313–329. doi: 10.1901/jeab.1995.63-313.

- Bateson M, Kacelnik A. Starlings' preferences for predictable and unpredictable delays to food. Anim Behav. 1997;53:1129–1142. doi: 10.1006/anbe.1996.0388.

- Bechara A, Dolan S, Denburg N, Hindes A, Anderson SW, Nathan PE. Decision-making deficits, linked to a dysfunctional ventromedial prefrontal cortex, revealed in alcohol and stimulant abusers. Neuropsychologia. 2001;39:376–389. doi: 10.1016/s0028-3932(00)00136-6.

- Brown JW, Braver TS. Risk prediction and aversion by anterior cingulate cortex. Cogn Affect Behav Neurosci. 2007;7:266–277. doi: 10.3758/cabn.7.4.266.

- Brown JW, Braver TS. A computational model of risk, conflict, and individual difference effects in the anterior cingulate cortex. Brain Res. 2008;1202:99–108. doi: 10.1016/j.brainres.2007.06.080.

- Caraco T. Energy budgets, risk and foraging preferences in dark-eyed juncos (Junco hyemalis). Behav Ecol Sociobiol. 1981;8:213–217.

- Cardinal RN, Parkinson JA, Hall J, Everitt BJ. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci Biobehav Rev. 2002;26:321–352. doi: 10.1016/s0149-7634(02)00007-6.

- Cardinal RN, Aitken M. ANOVA for the behavioural sciences researcher. London: Lawrence Erlbaum Associates; 2006.

- Clark L. Decision-making during gambling: an integration of cognitive and psychobiological approaches. Philos Trans R Soc Lond B Biol Sci. 2010;365:319–330. doi: 10.1098/rstb.2009.0147.

- Coventry KR, Brown RI. Sensation seeking, gambling and gambling addictions. Addiction. 1993;88:541–554. doi: 10.1111/j.1360-0443.1993.tb02061.x.

- Dalley JW, Fryer TD, Brichard L, Robinson ES, Theobald DE, Lääne K, Peña Y, Murphy ER, Shah Y, Probst K, Abakumova I, Aigbirhio FI, Richards HK, Hong Y, Baron JC, Everitt BJ, Robbins TW. Nucleus accumbens D2/3 receptors predict trait impulsivity and cocaine reinforcement. Science. 2007;315:1267–1270. doi: 10.1126/science.1137073.

- Day JJ, Roitman MF, Wightman RM, Carelli RM. Associative learning mediates dynamic shifts in dopamine signaling in the nucleus accumbens. Nat Neurosci. 2007;10:1020–1028. doi: 10.1038/nn1923.

- De Martino B, Kumaran D, Seymour B, Dolan RJ. Frames, biases, and rational decision-making in the human brain. Science. 2006;313:684–687. doi: 10.1126/science.1128356.

- Dixon MR, Marley J, Jacobs EA. Delay discounting by pathological gamblers. J Appl Behav Anal. 2003;36:449–458. doi: 10.1901/jaba.2003.36-449.

- Emond MS, Marmurek HH. Gambling related cognitions mediate the association between thinking style and problem gambling severity. J Gambl Stud. 2010;26:257–267. doi: 10.1007/s10899-009-9164-6.

- Evans JS. Dual-processing accounts of reasoning, judgment, and social cognition. Annu Rev Psychol. 2008;59:255–278. doi: 10.1146/annurev.psych.59.103006.093629.

- Evenden JL, Robbins TW. The effects of d-amphetamine, chlordiazepoxide and alpha-flupenthixol on food-reinforced tracking of a visual stimulus by rats. Psychopharmacology (Berl) 1985;85:361–366. doi: 10.1007/BF00428202.

- Garon N, Moore C, Waschbusch DA. Decision making in children with ADHD only, ADHD-anxious/depressed, and control children using a child version of the Iowa Gambling Task. J Atten Disord. 2006;9:607–619. doi: 10.1177/1087054705284501.

- Gianotti LR, Knoch D, Faber PL, Lehmann D, Pascual-Marqui RD, Diezi C, Schoch C, Eisenegger C, Fehr E. Tonic activity level in the right prefrontal cortex predicts individuals' risk taking. Psychol Sci. 2009;20:33–38. doi: 10.1111/j.1467-9280.2008.02260.x.

- Goudriaan AE, Oosterlaan J, de Beurs E, van den Brink W. Decision making in pathological gambling: a comparison between pathological gamblers, alcohol dependents, persons with Tourette syndrome, and normal controls. Brain Res Cogn Brain Res. 2005;23:137–151. doi: 10.1016/j.cogbrainres.2005.01.017.

- Hayden BY, Platt ML. Temporal discounting predicts risk sensitivity in rhesus macaques. Curr Biol. 2007;17:49–53. doi: 10.1016/j.cub.2006.10.055.

- Hayden BY, Platt ML. Gambling for Gatorade: risk-sensitive decision making for fluid rewards in humans. Anim Cogn. 2009;12:201–207. doi: 10.1007/s10071-008-0186-8.

- Hayden BY, Heilbronner SR, Nair AC, Platt ML. Cognitive influences on risk-seeking by rhesus macaques. Judgm Decis Mak. 2008;3:389–395.

- Hogarth L, Dickinson A, Austin A, Brown C, Duka T. Attention and expectation in human predictive learning: the role of uncertainty. Q J Exp Psychol (Hove) 2008;61:1658–1668. doi: 10.1080/17470210701643439.

- Kahneman D. A perspective on judgment and choice: mapping bounded rationality. Am Psychol. 2003;58:697–720. doi: 10.1037/0003-066X.58.9.697.

- Kahneman D, Tversky A. Prospect theory: an analysis of decision under risk. Econometrica. 1979;47:263–292.

- Ladouceur R, Sylvain C, Boutin C, Lachance S, Doucet C, Leblond J, Jacques C. Cognitive treatment of pathological gambling. J Nerv Ment Dis. 2001;189:774–780. doi: 10.1097/00005053-200111000-00007.

- Ladouceur R, Walker M. A cognitive perspective on gambling. In: Salkovskis PM, editor. Trends in cognitive therapy. Oxford: Wiley; 1996. pp. 89–120.

- Laforest R, Longford D, Siegel S, Newport DF, Yap J. Performance evaluation of the microPET-Focus-F120. Trans Nucl Sci. 2007;54:42–49.

- Lane SD, Cherek DR. Risk taking by adolescents with maladaptive behavior histories. Exp Clin Psychopharmacol. 2001;9:74–82. doi: 10.1037/1064-1297.9.1.74.

- Logan J, Fowler JS, Volkow ND, Wang GJ, Ding YS, Alexoff DL. Distribution volume ratios without blood sampling from graphical analysis of PET data. J Cereb Blood Flow Metab. 1996;16:834–840. doi: 10.1097/00004647-199609000-00008.

- Michalczuk R, Bowden-Jones H, Verdejo-Garcia A, Clark L. Impulsivity and cognitive distortions in pathological gamblers attending the UK National Problem Gambling Clinic: a preliminary report. Psychol Med. 2011:1–11. doi: 10.1017/S003329171100095X.

- Miller NV, Currie SR. A Canadian population level analysis of the roles of irrational gambling cognitions and risky gambling practices as correlates of gambling intensity and pathological gambling. J Gambl Stud. 2008;24:257–274. doi: 10.1007/s10899-008-9089-5.

- O'Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable role of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- Osman M. An evaluation of dual-process theories of reasoning. Psychon Bull Rev. 2004;11:988–1010. doi: 10.3758/bf03196730.

- Pearce JM, Hall G. A model for Pavlovian learning: variations in the effectiveness of conditioned but not unconditioned stimuli. Psychol Rev. 1980;87:532–552.

- Pessiglione M, Seymour B, Flandin G, Dolan RJ, Frith CD. Dopamine-dependent prediction errors underpin reward-seeking behaviour in humans. Nature. 2006;442:1042–1045. doi: 10.1038/nature05051.

- Platt ML, Huettel SA. Risky business: the neuroeconomics of decision making under uncertainty. Nat Neurosci. 2008;11:398–403. doi: 10.1038/nn2062.

- Reuter J, Raedler T, Rose M, Hand I, Gläscher J, Büchel C. Pathological gambling is linked to reduced activation of the mesolimbic reward system. Nat Neurosci. 2005;8:147–148. doi: 10.1038/nn1378.

- Robbins TW. Relationship between reward-enhancing and stereotypical effects of psychomotor stimulant drugs. Nature. 1976;264:57–59. doi: 10.1038/264057a0.

- Schönberg T, Daw ND, Joel D, O'Doherty JP. Reinforcement learning signals in the human striatum distinguish learners from nonlearners during reward-based decision making. J Neurosci. 2007;27:12860–12867. doi: 10.1523/JNEUROSCI.2496-07.2007.

- Schuck-Paim C, Pompilio L, Kacelnik A. State-dependent decisions cause apparent violations of rationality in animal choice. PLoS Biol. 2004;2:e402. doi: 10.1371/journal.pbio.0020402.

- Schultz W, Dayan P, Montague PR. A neural substrate of prediction and reward. Science. 1997;275:1593–1599. doi: 10.1126/science.275.5306.1593.

- St Onge JR, Floresco SB. Dopaminergic regulation of risk-based decision-making. Neuropsychopharmacology. 2009;34:681–697. doi: 10.1038/npp.2008.121.

- Strome EM, Jivan S, Doudet DJ. Quantitative in vitro phosphor imaging using [3H] and [18F] radioligands: effects of chronic desipramine treatment on serotonin 5HT2 receptors. J Neurosci Methods. 2005;141:143–154. doi: 10.1016/j.jneumeth.2004.06.008.

- Strough J, Karns TE, Schlosnagle L. Decision-making heuristics and biases across the life span. Ann N Y Acad Sci. 2011;1235:57–74. doi: 10.1111/j.1749-6632.2011.06208.x.

- Sylvain C, Ladouceur R, Boisvert JM. Cognitive and behavioral treatment of pathological gambling: a controlled study. J Consult Clin Psychol. 1997;65:727–732. doi: 10.1037//0022-006x.65.5.727.

- Trepel C, Fox CR, Poldrack RA. Prospect theory on the brain? Toward a cognitive neuroscience of decision under risk. Brain Res Cogn Brain Res. 2005;23:34–50. doi: 10.1016/j.cogbrainres.2005.01.016.

- Verdejo-García A, Lawrence AJ, Clark L. Impulsivity as a vulnerability marker for substance-use disorders: review of findings from high-risk research, problem gamblers and genetic association studies. Neurosci Biobehav Rev. 2008;32:777–810. doi: 10.1016/j.neubiorev.2007.11.003.

- Volkow ND, Fowler JS. Addiction, a disease of compulsion and drive: involvement of the orbitofrontal cortex. Cereb Cortex. 2000;10:318–325. doi: 10.1093/cercor/10.3.318.

- Weber EU, Shafir S, Blais AR. Predicting risk sensitivity in humans and lower animals: risk as variance or coefficient of variation. Psychol Rev. 2004;111:430–445. doi: 10.1037/0033-295X.111.2.430.

- Weiner I. Neural substrates of latent inhibition: the switching model. Psychol Bull. 1990;108:442–461. doi: 10.1037/0033-2909.108.3.442.

- Wilson TD, Centerbar DB, Kermer DA, Gilbert DT. The pleasures of uncertainty: prolonging positive moods in ways people do not anticipate. J Pers Soc Psychol. 2005;88:5–21. doi: 10.1037/0022-3514.88.1.5.

- Winstanley CA, LaPlant Q, Theobald DE, Green TA, Bachtell RK, Perrotti LI, DiLeone RJ, Russo SJ, Garth WJ, Self DW, Nestler EJ. DeltaFosB induction in orbitofrontal cortex mediates tolerance to cocaine-induced cognitive dysfunction. J Neurosci. 2007;27:10497–10507. doi: 10.1523/JNEUROSCI.2566-07.2007.

- Winstanley CA, Zeeb FD, Bedard A, Fu K, Lai B, Steele C, Wong AC. Dopaminergic modulation of the orbitofrontal cortex affects attention, motivation and impulsive responding in rats performing the five-choice serial reaction time task. Behav Brain Res. 2010;210:263–272. doi: 10.1016/j.bbr.2010.02.044.

- Yu AJ, Dayan P. Uncertainty, neuromodulation, and attention. Neuron. 2005;46:681–692. doi: 10.1016/j.neuron.2005.04.026.

- Zack M, Poulos CX. Amphetamine primes motivation to gamble and gambling-related semantic networks in problem gamblers. Neuropsychopharmacology. 2004;29:195–207. doi: 10.1038/sj.npp.1300333.