Abstract

Fundamental to the experience of music, beat and meter perception refers to the perception of periodicities while listening to music occurring within the frequency range of musical tempo. Here, we explored the spontaneous building of beat and meter hypothesized to emerge from the selective entrainment of neuronal populations at beat and meter frequencies. The electroencephalogram (EEG) was recorded while human participants listened to rhythms consisting of short sounds alternating with silences to induce a spontaneous perception of beat and meter. We found that the rhythmic stimuli elicited multiple steady state-evoked potentials (SS-EPs) observed in the EEG spectrum at frequencies corresponding to the rhythmic pattern envelope. Most importantly, the amplitude of the SS-EPs obtained at beat and meter frequencies was selectively enhanced even though the acoustic energy was not necessarily predominant at these frequencies. Furthermore, accelerating the tempo of the rhythmic stimuli so as to move away from the range of frequencies at which beats are usually perceived impaired the selective enhancement of SS-EPs at these frequencies. The observation that beat- and meter-related SS-EPs are selectively enhanced at frequencies compatible with beat and meter perception indicates that these responses do not merely reflect the physical structure of the sound envelope but, instead, reflect the spontaneous emergence of an internal representation of beat, possibly through a mechanism of selective neuronal entrainment within a resonance frequency range. Taken together, these results suggest that musical rhythms constitute a unique context to gain insight into general mechanisms of entrainment, from the neuronal level to the individual level.

Introduction

Feeling the beat is fundamental to the experience of music (London, 2004). It refers to the spontaneous ability to perceive periodicities (as expressed for instance through periodic head nodding or foot tapping) in musical stimuli that are not strictly periodic in reality (London, 2004; Phillips-Silver et al., 2010). This phenomenon is well illustrated by syncopated rhythms, that is, rhythmic patterns in which the perceived beat does not systematically coincide with an actual sound (Fitch and Rosenfeld, 2007; Velasco and Large, 2011). Moreover, beats are usually perceived within meters (e.g., a waltz, which is a three-beat meter) corresponding to (sub)harmonics, i.e., integer ratios, of beat frequency. These multiple periodic levels are nested hierarchically. Among these, the beat may be considered as the most prominent periodicity (London, 2004). Finally, perception and movement on beat and meter have been shown to occur within a specific range of tempo corresponding to frequencies around 2 Hz (van Noorden and Moelants, 1999; London, 2004; Repp, 2005, 2006) and assimilated to a resonance frequency range within which internal representations of beat and meter would be optimally induced by external inputs (van Noorden and Moelants, 1999; Large, 2008).

How the brain spontaneously builds internal beat representations from music remains unknown. Recently, we showed that listening to simple periodic sounds elicits periodic neuronal activities that are frequency-tuned to the periodicity of the sound envelope. This neuronal entrainment was captured in the human electroencephalogram (EEG) as a beat-related, steady state-evoked potential (SS-EP) appearing at the exact frequency of the beat (Nozaradan et al., 2011).

Here, using this novel approach, we explored the EEG activity induced by listening to complex rhythmic patterns that can be assimilated to syncopated rhythms and are commonly found in Western music. The patterns consisted of sequences of events, that is, sounds alternating with silences (Fig. 1), designed so as to induce a spontaneous perception of a beat and meter based on the preferential grouping of four events (Essens and Povel, 1985). This was confirmed by a task performed at the end of the EEG recording in which participants were asked to tap along with the beat spontaneously perceived in each pattern. Therefore, building on prior assumptions (Essens and Povel, 1985), which were confirmed by the tapping task, the multiple frequencies constituting the envelope spectrum of the five sound patterns were categorized as either (1) related to the beat and metric levels (integer ratio subdivisions and groupings of the beat period) or (2) unrelated to beat and meter frequencies.

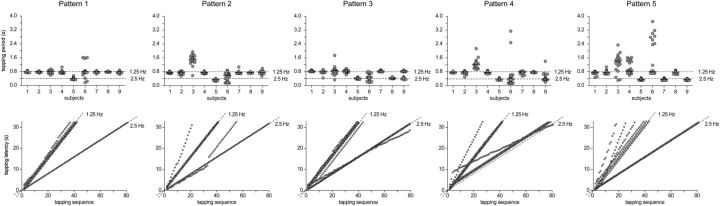

Figure 1.

In the last trial of each block, participants were asked to perform a rhythmic hand-tapping movement synchronized to the perceived beat. Top row, Tapping periods produced by each participant while listening to each of the five rhythmic patterns. Each dot corresponds to an individual tapping period. The median tapping period is represented by the horizontal black line. Bottom row, Tapping latencies along the entire trial for each participant and each sound pattern. Note that most participants tapped at a frequency corresponding to a grouping by four events (1.25 Hz) except in pattern 5, where tapping was much less consistent.

We expected that these patterns would elicit multiple SS-EPs at frequencies corresponding to the frequencies of the sound pattern envelope in the EEG spectrum. Most importantly, we aimed to capture the spontaneous building of internal beat and meter representations hypothesized to emerge from a nonlinear transformation of the acoustic inputs. Specifically, we examined whether the SS-EPs elicited at frequencies corresponding to the expected perception of beat and meter were selectively enhanced, as such an observation would constitute evidence for a selective neuronal entrainment underlying beat and meter neuronal representations.

Materials and Methods

Participants

Nine healthy volunteers (four females, all right-handed, mean age 29 ± 4 years) took part in the study after providing written informed consent. They all had musical experience in Western music, either in performance (three participants with 15–25 years of practice) or as amateur listeners or dancers. None had prior experience with the tapping task used in the present study. They had no history of hearing, neurological, or psychiatric disorder, and were not taking any drug at the time of the experiment. The study was approved by the local Ethics Committee.

Experiment 1: SS-EPs elicited by five different rhythmic patterns

Auditory stimuli.

The stimulus consisted of five distinct rhythmic patterns lasting either 2.4 s (patterns 1, 3, and 4) or 3.2 s (patterns 2 and 5) and looped continuously during 33 s. The structure of the patterns was based on the alternation of events, i.e., sounds and silence intervals of 200 ms duration. The sounds consisted of 990 Hz pure tones lasting 200 ms (10 ms rise and fall time). The patterns, inspired by the work of Essens and Povel (1985), were designed to induce the perception of a beat based on the preferential grouping of four events (i.e., a period of 0.8 s, corresponding to a 1.25 Hz beat) and at related metric levels. The related metric levels were constituted (1) by the subdivision of the beat periods by 2 (2.5 Hz) and 4 (5 Hz, thus corresponding to the unitary event period at 0.2 s) and (2) by the integer ratio grouping of beat period by 2 (0.625 Hz) and 4 (0.312 Hz) in patterns 2 and 5 (because these patterns contained 16 events, thus allowing groupings by 2 × 4 and 4 × 4 events, respectively) and by 3 (0.416 Hz) in patterns 1, 3, and 4 (because these patterns contained 12 events, thus allowing groupings by 3 × 4 events) (Fig. 2).
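The beat and meter frequencies listed above follow directly from the 200 ms event duration: a grouping of n events has a period of n × 0.2 s. The following Python sketch reproduces that arithmetic; the function and variable names are illustrative and do not come from the study.

```python
# Event duration in seconds (200 ms, as stated in the text).
EVENT_S = 0.2

def grouping_frequency(n_events, event_s=EVENT_S):
    """Frequency (Hz) of a periodicity spanning n_events unitary events."""
    return 1.0 / (n_events * event_s)

# Groupings named in the text: 1 (unitary event), 2, 4 (beat), 8, 12, and 16 events.
metric_levels = {n: grouping_frequency(n) for n in (1, 2, 4, 8, 12, 16)}

for n, f in metric_levels.items():
    print(f"{n:2d} events -> {f:.4f} Hz")
```

The 12-event and 16-event groupings evaluate to about 0.4167 Hz and 0.3125 Hz, which the text reports as 0.416 Hz and 0.312 Hz.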

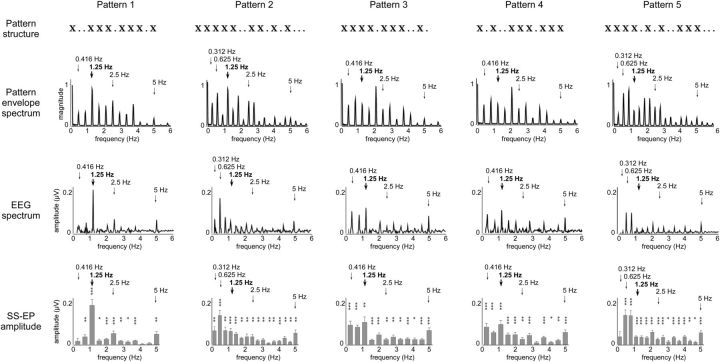

Figure 2.

Top row, Structure of the five rhythmic patterns that consisted of a sequence of 200 ms pure tones (represented by a cross) and 200 ms silences (represented by a dot). Patterns 1, 3, and 4 contained a succession of 12 events, whereas patterns 2 and 5 contained a succession of 16 events. Second row, Frequency spectrum of the pattern sound envelope. The thick vertical arrow marks the expected beat frequency. The thin vertical arrows mark the related meter frequencies. Note that in patterns 3 and 5 the acoustic energy did not predominate at the frequency of the expected beat frequency. Third row, Frequency spectrum of the EEG recorded while listening to each of the five sound patterns (noise-subtracted amplitude averaged across all scalp channels in microvolts). Fourth row, Mean magnitude (±SEM) of the SS-EPs elicited by each of the five sound patterns (noise-subtracted amplitude averaged across all scalp channels in microvolts). SS-EP amplitudes significantly different from zero are marked by *p < 0.05, **p < 0.01, or ***p < 0.001.

The auditory stimuli were created in Audacity 1.2.6 (http://audacity.sourceforge.net/) and presented binaurally through earphones (Beyerdynamic DT 990 PRO) at a comfortable hearing level using the Psychtoolbox extensions (Brainard, 1997) running under Matlab 6.5 (MathWorks).

Experimental conditions.

The five rhythmic patterns were presented in separate blocks. In each block, the 33 s auditory pattern was repeated 11 times. The onset of each pattern was self-paced and preceded by a 3 s foreperiod. The order of the blocks was counterbalanced across participants.

During the first 10 trials of each block, participants were asked to listen carefully to the stimulus to detect the occurrence of a very short acceleration (duration of two successive events reduced by 10 ms, i.e., 190 ms) or deceleration (duration of two successive events increased by 10 ms, i.e., 210 ms) of tempo inserted at a random position in two of the trials interspersed within the block. The participants were instructed to report the detection of the change in tempo at the end of each trial. This task ensured that participants focused their attention on the temporal aspects of the presented sound. The two trials containing a short tempo change were excluded from further analyses.

During the 11th trial of each block, participants were asked to perform a tapping task to assess their perception of a periodic beat in each of the five patterns. They were instructed to tap to the regular periodic strong beat of the patterns, much as they would do in a concert when spontaneously entrained to clap their hands on the beat of music. Moreover, participants were asked to start tapping as soon as they heard the first beat of the trial and to maintain their movement accurately paced on the beat that they spontaneously perceived from the patterns. The tapping was performed using their right hand, with small up and down movements of the hand starting from the wrist joint, maintaining the forearm and elbow fixed on an armrest cushion. When performing the tapping movement, the fingers of the tapping hand came transiently in contact with the armrest cushion. All participants naturally synchronized their movement such that the occurrence of this contact coincided with the occurrence of the beat. The experimenter remained in the recording room at all times to monitor compliance with these instructions.

Hand movement recordings.

Movements of the hand were measured using a three-axis accelerometer attached to the hand dorsum (MMA7341L, Pololu Robotics and Electronics). The signals generated by the accelerometer were digitized using three additional bipolar channels of the EEG system. Only the vertical axis of the accelerometer signal was analyzed, as it sampled the greatest part of the accelerations related to the tapping movement.

EEG recording.

Participants were comfortably seated in a chair with the head resting on a support. They were instructed to relax, avoid any unnecessary head or body movement, and keep their eyes fixated on a point displayed on a computer screen in front of them. The electroencephalogram was recorded using 64 Ag-AgCl electrodes placed on the scalp according to the International 10/10 system (Waveguard64 cap, Cephalon). Vertical and horizontal eye movements were monitored using four additional electrodes placed on the outer canthus of each eye and on the inferior and superior areas of the left orbit. Electrode impedances were kept below 10 kΩ. The signals were recorded using an average reference, amplified, low-pass filtered at 500 Hz, and digitized using a sampling rate of 1000 Hz (64 channel high-speed amplifier, Advanced Neuro Technology).

Hand movement analysis.

The accelerometer signals recorded when participants performed the hand-tapping movements in the last trial of each block were analyzed by extracting, for each rhythmic pattern, the latencies at which the fingers hit the armrest cushion corresponding to the time points of maximum deceleration (Fig. 1). Tapping period estimates were then obtained by subtracting, from each tapping latency, the latency of the preceding tapping (Fig. 1).
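The period estimate described above is a first difference of successive tap latencies. A minimal sketch, assuming the latencies have already been extracted from the accelerometer signal (the example values are hypothetical, not data from the study):

```python
from statistics import median

def tapping_periods(latencies_s):
    """Inter-tap intervals: each tap latency minus the latency of the preceding tap."""
    return [later - earlier for earlier, later in zip(latencies_s, latencies_s[1:])]

# Hypothetical tap latencies (in seconds) for one trial.
example_latencies = [0.81, 1.60, 2.41, 3.20, 4.01]

periods = tapping_periods(example_latencies)
median_period = median(periods)           # summary used for the horizontal line in Figure 1
median_frequency_hz = 1.0 / median_period
```

With these example latencies the median period is 0.8 s, i.e., tapping at 1.25 Hz, the frequency of a grouping by four events.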

Sound pattern analysis.

To determine the frequencies at which steady state-evoked potentials were expected to be elicited in the recorded EEG signals, the temporal envelope of the 33 s sound patterns was extracted using a Hilbert function that yielded a time-varying estimate of the instantaneous amplitude of the sound envelope, as implemented in the MIRToolbox (Lartillot and Toiviainen, 2007). The obtained waveforms were then transformed into the frequency domain using a discrete Fourier transform (Frigo and Johnson, 1998), yielding a frequency spectrum of envelope magnitude (Bach and Meigen, 1999). The frequencies of interest were determined as the set of frequencies ≤ 5 Hz, i.e., the frequency corresponding to the 200 ms period of the unitary event of the patterns. As shown in Figure 2, the envelopes of patterns 1, 3, and 4 consisted of 12 distinct frequencies ranging from 0.416 Hz to 5 Hz with an interval of 0.416 Hz, whereas the envelopes of patterns 2 and 5 consisted of 16 distinct frequencies ranging from 0.312 Hz to 5 Hz with an interval of 0.312 Hz.
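The envelope analysis can be illustrated with a short NumPy sketch. It is not the MIRToolbox implementation: the envelope is taken as the magnitude of an FFT-based analytic signal, the 4000 Hz audio sampling rate is an assumption, the 12-event pattern is hypothetical (the actual patterns are shown only in Figure 2), and the sound is looped for 24 s rather than 33 s so that the spectrum contains a whole number of pattern repetitions.

```python
import numpy as np

FS = 4000          # audio sampling rate in Hz (assumption; must exceed 2 x 990 Hz)
EVENT_S = 0.2      # event duration (from the text)
TONE_HZ = 990.0    # pure-tone frequency (from the text)
RAMP_S = 0.010     # rise and fall time (from the text)

def analytic_envelope(x):
    """Magnitude of the analytic signal, obtained with an FFT-based Hilbert transform."""
    n = x.size
    weights = np.zeros(n)
    weights[0] = 1.0
    if n % 2 == 0:
        weights[n // 2] = 1.0
        weights[1:n // 2] = 2.0
    else:
        weights[1:(n + 1) // 2] = 2.0
    return np.abs(np.fft.ifft(np.fft.fft(x) * weights))

def render_pattern(events, n_loops):
    """Gate a continuous pure tone with a looped on/off event sequence."""
    n_event = int(round(EVENT_S * FS))
    n_ramp = int(round(RAMP_S * FS))
    gate_on = np.ones(n_event)
    ramp = np.linspace(0.0, 1.0, n_ramp, endpoint=False)
    gate_on[:n_ramp] = ramp            # linear rise
    gate_on[-n_ramp:] = ramp[::-1]     # linear fall
    gate = np.concatenate([gate_on if e else np.zeros(n_event) for e in events])
    gate = np.tile(gate, n_loops)
    t = np.arange(gate.size) / FS
    return gate * np.sin(2 * np.pi * TONE_HZ * t)

# Hypothetical 12-event pattern (1 = tone, 0 = silence); not one of the study's patterns.
events = [1, 1, 1, 0, 1, 1, 1, 0, 1, 1, 0, 0]
sound = render_pattern(events, n_loops=10)   # 24 s here; the study looped for 33 s

envelope = analytic_envelope(sound)
magnitude = np.abs(np.fft.rfft(envelope)) / envelope.size
freqs = np.fft.rfftfreq(envelope.size, d=1.0 / FS)

# Frequencies of interest: harmonics of the pattern repetition rate, up to 5 Hz.
pattern_s = len(events) * EVENT_S
harmonics = np.arange(1, len(events) + 1) / pattern_s
peak_bins = np.rint(harmonics * envelope.size / FS).astype(int)
peak_magnitudes = magnitude[peak_bins]
```

For a 12-event pattern this yields 12 harmonics spaced by 1/2.4 Hz (about 0.4167 Hz), the last of which falls at 5 Hz, matching the description of patterns 1, 3, and 4.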

Within each pattern, z-score values were then computed across the magnitude obtained at each of these frequencies in the spectra of the patterns envelope as follows: z = (x − μ)/σ, where μ and σ corresponded to the mean and standard deviation of the magnitudes obtained across the different peaks. This procedure allowed assessing the magnitude of each frequency relative to the others and, thereby, determining which frequencies stood out relative to the entire set of frequencies.
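A minimal sketch of this standardization in Python. The text does not state whether the sample or the population standard deviation was used; the sample estimator is assumed here, and the example magnitudes are hypothetical.

```python
from statistics import mean, stdev

def z_scores(magnitudes):
    """Standardize peak magnitudes across the set of frequencies: z = (x - mu) / sigma."""
    mu = mean(magnitudes)
    sigma = stdev(magnitudes)  # sample SD (assumption; the estimator is not specified)
    return [(x - mu) / sigma for x in magnitudes]

# Hypothetical envelope magnitudes at five frequencies.
example_magnitudes = [1.0, 2.0, 3.0, 4.0, 5.0]
z = z_scores(example_magnitudes)
```

A positive z score marks a frequency that stands out above the mean of the set, and a negative z score marks one that falls below it.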

EEG analysis in the frequency domain.

The continuous EEG recordings were filtered using a 0.1-Hz high-pass Butterworth zero-phase filter to remove very slow drifts in the recorded signals. Epochs lasting 32 s were obtained by segmenting the recordings from +1 to +33 s relative to the onset of the auditory stimulus. The EEG recorded during the first second of each trial was removed: (1) to discard the transient auditory evoked potentials related to the onset of the stimulus (Saupe et al., 2009; Nozaradan et al., 2011, 2012); (2) because SS-EPs require several cycles of stimulation to be steadily entrained (Regan, 1989); and (3) because several repetitions of the beat are required to elicit a steady perception of beat (Repp, 2005). These EEG processing steps were carried out using Analyzer 1.05 (Brain Products).

Artifacts produced by eye blinks or eye movements were removed from the EEG signal using a validated method based on an independent component analysis (Jung et al., 2000) using the runica algorithm (Bell and Sejnowski, 1995; Makeig, 2002). For each subject and condition, EEG epochs were averaged across trials. The time-domain averaging procedure was used to enhance the signal-to-noise ratio of EEG activities time locked to the patterns. The obtained average waveforms were then transformed into the frequency domain using a discrete Fourier transform (Frigo and Johnson, 1998), yielding a frequency spectrum of signal amplitude (μV) ranging from 0 to 500 Hz with a frequency resolution of 0.031 Hz (Bach and Meigen, 1999). This procedure allowed assessing the neuronal entrainment to beat and meter, i.e., the appearance of frequency components in the EEG elicited by the frequency components of the sound patterns and induced beat percept (Pikovsky et al., 2001). Importantly, the deliberate choice of computing Fourier transforms of long-lasting epochs was justified in the present experiment by the fact that: (1) beat and meter perception is assumed to be stationary enough along the trials, as suggested by the results of the tapping task; and (2) it improves the resolution of the obtained EEG frequency spectrum. Indeed, this allows concentrating the magnitude of each SS-EP into a very narrow band, which is necessary to enhance its signal-to-noise ratio as well as to disentangle nearby SS-EP frequencies in the EEG spectrum (Regan, 1989). These EEG processing steps were carried out using Letswave4 (Mouraux and Iannetti, 2008), Matlab (The MathWorks), and EEGLAB (http://sccn.ucsd.edu).
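The epoching, time-domain averaging, and Fourier steps can be sketched for a single channel as follows. This is an illustration with simulated data, not the Letswave or EEGLAB pipeline; the single-sided amplitude scaling is an assumption, and filtering and ICA are omitted.

```python
import numpy as np

FS = 1000                          # EEG sampling rate in Hz (from the text)
EPOCH = slice(1 * FS, 33 * FS)     # +1 s to +33 s relative to stimulus onset

def average_spectrum(trials):
    """trials: array (n_trials, n_samples) for one channel, aligned to stimulus onset."""
    epochs = trials[:, EPOCH]
    average = epochs.mean(axis=0)                        # time-domain average across trials
    n = average.size
    # Single-sided amplitude (assumed scaling; it also doubles the DC and Nyquist bins).
    amplitude = 2.0 * np.abs(np.fft.rfft(average)) / n
    freqs = np.fft.rfftfreq(n, d=1.0 / FS)
    return freqs, amplitude

# Simulated data: 8 trials containing a 1 uV sinusoid at 1.25 Hz buried in noise.
rng = np.random.default_rng(0)
t = np.arange(34 * FS) / FS
trials = np.sin(2 * np.pi * 1.25 * t) + rng.normal(0.0, 5.0, size=(8, t.size))

freqs, amplitude = average_spectrum(trials)
```

A 32 s epoch sampled at 1000 Hz gives a spectrum from 0 to 500 Hz in steps of 1/32 Hz (0.03125 Hz), consistent with the resolution reported above, and a 1.25 Hz component falls exactly on bin 40.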

Within the obtained frequency spectra, signal amplitude may be expected to correspond to the sum of (1) stimulus-induced SS-EPs and (2) unrelated residual background noise due, for example, to spontaneous EEG activity, muscle activity, or eye movements. Therefore, to obtain valid estimates of the SS-EPs, the contribution of this noise was removed by subtracting, at each bin of the frequency spectra, the average amplitude measured at neighboring frequency bins (two frequency bins ranging from −0.15 to −0.09 Hz and from +0.09 to +0.15 Hz relative to each frequency bin). The validity of this subtraction procedure relies on the assumption that, in the absence of an SS-EP, the signal amplitude at a given frequency bin should be similar to the signal amplitude of the mean of the surrounding frequency bins (Mouraux et al., 2011; Nozaradan et al., 2011, 2012). This subtraction procedure is important: (1) to assess the scalp topographies of the elicited SS-EPs, as the magnitude of the background noise is not equally distributed across scalp channels; and (2) to compare the amplitude of SS-EPs elicited at distinct frequencies, as the magnitude of the background noise is not equally distributed across the frequency spectrum.
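A sketch of the noise subtraction, assuming a frequency resolution of 1/32 Hz. Under that assumption, the neighbors lying between 0.09 and 0.15 Hz from a given bin are those three and four bins away (0.094 and 0.125 Hz) on each side; these offsets are our reading of the text, not a documented parameter.

```python
import numpy as np

def subtract_neighbor_noise(amplitude, offsets=(3, 4)):
    """Subtract from each bin the mean amplitude of its neighbors at +/- the given offsets.

    Assumes the spectrum is much longer than the largest offset, so that every bin
    has at least one valid neighbor.
    """
    amplitude = np.asarray(amplitude, dtype=float)
    n = amplitude.size
    noise_sum = np.zeros(n)
    noise_count = np.zeros(n)
    bins = np.arange(n)
    for k in offsets:
        for neighbor in (bins - k, bins + k):
            valid = (neighbor >= 0) & (neighbor < n)   # ignore neighbors outside the spectrum
            noise_sum[valid] += amplitude[neighbor[valid]]
            noise_count[valid] += 1
    return amplitude - noise_sum / noise_count

# Hypothetical spectrum: flat background of 1 with a single peak of 3 at bin 40.
spectrum = np.ones(100)
spectrum[40] = 3.0
corrected = subtract_neighbor_noise(spectrum)
```

In this example the peak is reduced to 2 and the flat background to 0, while bins three or four positions away from the peak become slightly negative because the peak enters their noise estimate.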

The magnitude of the SS-EPs was then estimated by taking the maximum noise-subtracted amplitude measured in a range of three frequency bins centered over the expected SS-EP frequency based on the spectrum of the sound envelope. This range of frequencies allowed accounting for possible spectral leakage due to the fact that the discrete Fourier transform did not estimate signal amplitude at the exact frequency of any of the expected SS-EPs (Nozaradan et al., 2011, 2012).
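The three-bin maximum can be sketched as below. Leakage arises because an expected frequency such as 1/2.4 Hz (about 0.4167 Hz) lies between bins of a spectrum sampled every 1/32 Hz. The function name and the example values are hypothetical.

```python
import numpy as np

def ssep_magnitude(freqs, corrected_amplitude, target_hz):
    """Maximum noise-subtracted amplitude in the three bins centered on the target frequency."""
    center = int(np.argmin(np.abs(freqs - target_hz)))
    lo = max(center - 1, 0)
    hi = min(center + 2, corrected_amplitude.size)
    return float(corrected_amplitude[lo:hi].max())

freqs = np.arange(161) / 32.0               # 0 to 5 Hz in steps of 1/32 Hz
corrected = np.zeros(freqs.size)
corrected[13], corrected[14] = 0.5, 0.9     # hypothetical leakage around 1/2.4 Hz
value = ssep_magnitude(freqs, corrected, target_hz=1 / 2.4)
```

Here the nearest bin to the target is bin 13 (0.40625 Hz), so the window spans bins 12 to 14 and the estimate is taken from bin 14.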

To exclude any electrode selection bias, SS-EP magnitudes were averaged across all scalp electrodes for each rhythmic pattern and participant (Fig. 2). A one-sample t test was then used to determine whether the average SS-EP amplitudes were significantly different from zero (Fig. 2). Indeed, in the absence of an SS-EP, the average of the noise-subtracted signal amplitude may be expected to tend toward zero. Finally, for each frequency, topographical maps were computed by spherical interpolation (Fig. 3).
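The one-sample t statistic used here can be computed with the Python standard library alone. The amplitudes below are hypothetical values for nine participants, not data from the study.

```python
from math import sqrt
from statistics import mean, stdev

def one_sample_t(values, popmean=0.0):
    """Return the t statistic and degrees of freedom for a one-sample t test."""
    n = len(values)
    standard_error = stdev(values) / sqrt(n)   # stdev uses the n - 1 denominator
    return (mean(values) - popmean) / standard_error, n - 1

# Hypothetical noise-subtracted SS-EP amplitudes (uV), one value per participant.
amplitudes = [0.2, 0.4, 0.1, 0.3, 0.5, 0.3, 0.2, 0.4, 0.3]
t_value, df = one_sample_t(amplitudes)
```

The p value follows from Student's t distribution with `df` degrees of freedom; `scipy.stats.ttest_1samp` returns both the statistic and the p value if SciPy is available.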

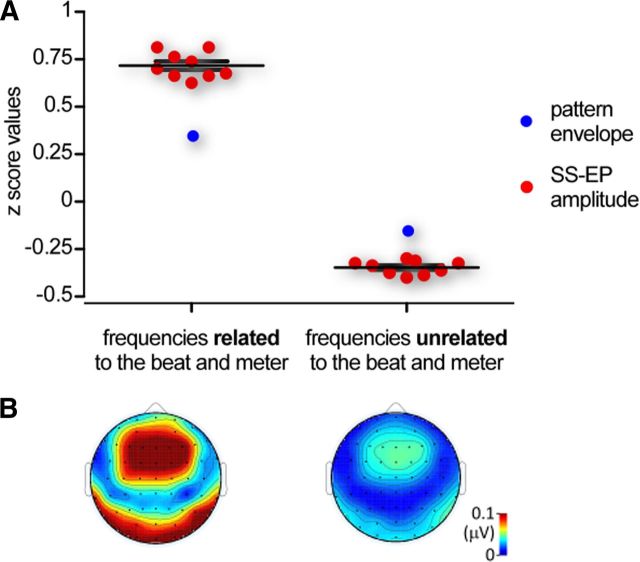

Figure 3.

A, The red dots represent the z-score values of the magnitude of the SS-EPs elicited at frequencies related to the beat and meter (left) and frequencies unrelated to the beat and meter (right) averaged across all five sound patterns in each participant. Note that, as compared to the average z score of the sound pattern envelope at corresponding frequencies (blue dots), the magnitude of the beat- and meter-related SS-EPs was markedly enhanced, whereas the magnitude of SS-EPs unrelated to the beat and meter was markedly dampened. B, Average topographical map of the SS-EPs elicited at frequencies related to the beat and meter (left) and at frequencies unrelated to the beat and meter (right), averaged across all patterns and participants (noise-subtracted amplitude in microvolts).

As in the sound pattern analysis, the amplitudes of the SS-EPs obtained at the expected frequencies were expressed as z scores, using the mean and standard deviation of the magnitudes obtained across the different peaks to assess how each of the different SS-EPs stood out relative to the entire set of SS-EPs (Figs. 3 and 4).

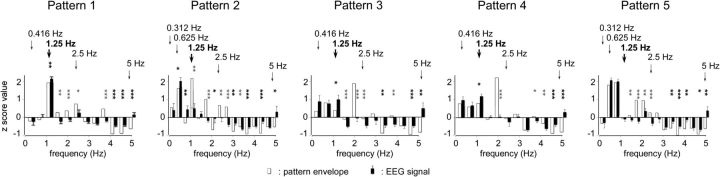

Figure 4.

The black bars represent the group level mean z scores of the magnitude of the SS-EPs elicited between 0 and 5 Hz within each sound pattern (error bars, SEM). The white bars represent the z scores of the magnitude of the sound envelope at corresponding frequencies. Significant differences between SS-EP and sound envelope amplitude are represented with a black (SS-EP > sound envelope) or gray (SS-EP < sound envelope) asterisk (one-sample t test; *p < 0.05, **p < 0.01, ***p < 0.001). The expected beat and meter frequencies are highlighted by vertical arrows (beat frequency shown in bold).

To assess specifically whether SS-EPs elicited at frequencies related to beat and meter perception (0.416, 1.25, 2.5, and 5 Hz in patterns 1, 3 and 4; 0.312, 0.625, 1.25, 2.5, and 5 Hz in patterns 2 and 5) were selectively enhanced, the average of the z-score values representing SS-EP amplitude at beat- and meter-related frequencies was compared to the average of the z-score values representing these same frequencies in the sound pattern envelope using a one-sample t test (Fig. 3). A similar procedure was used to compare the magnitude of SS-EPs and the magnitude of the sound envelope at frequencies unrelated to the beat and meter. Significance level was set at p < 0.05.

Finally, to compare the magnitude of each of the different SS-EPs obtained in each of the five sound patterns relative to the magnitude of the sound envelope, a one-sample t test was used to compare the standardized SS-EP amplitudes to the standardized sound envelope magnitudes at each frequency constituting the envelope spectrum of the rhythmic patterns (Fig. 4).

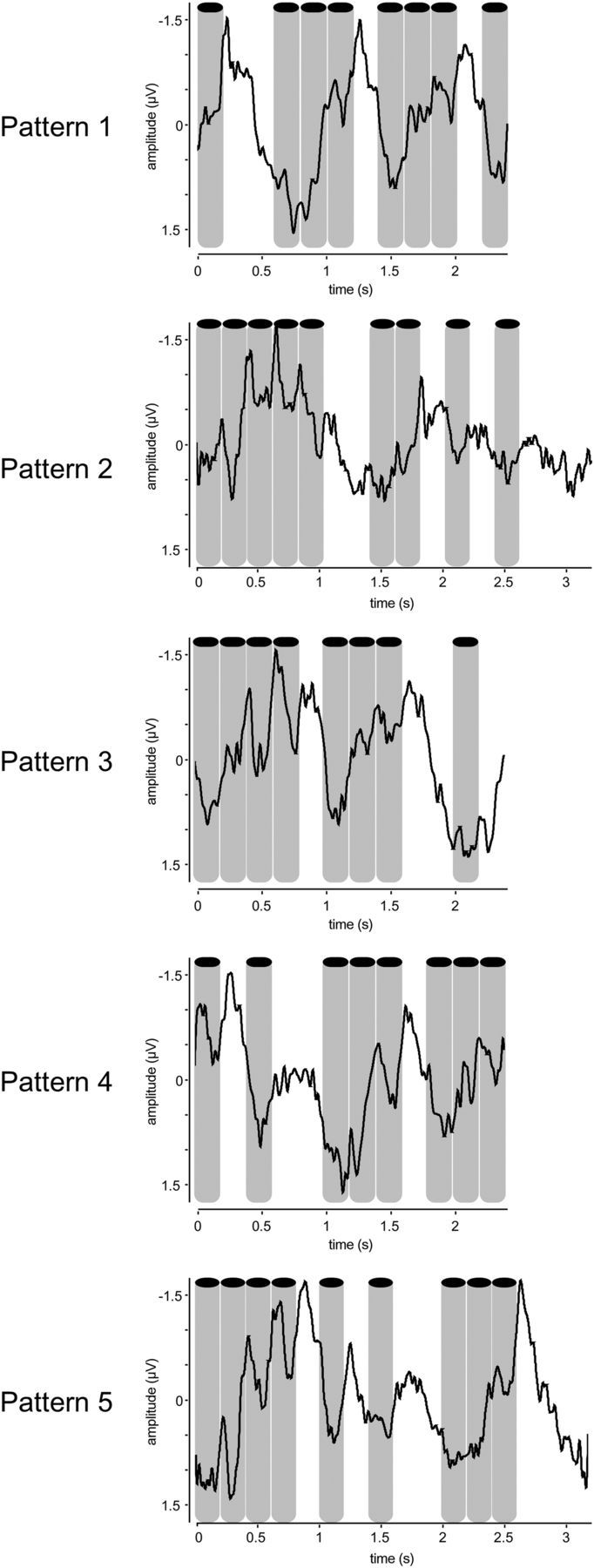

EEG analysis in the time domain.

The time course of the EEG signals recorded during presentation of the sound patterns of Experiment 1 was examined to give a better sense of the stimulus–response relationship. These signals were obtained after bandpass filtering the signals between 0.1 and 30 Hz, segmenting the EEG epochs according to the length of each pattern (2.4 s length in patterns 1, 3, and 4; 3.2 s length in patterns 2 and 5), and averaging these epochs (Fig. 5).

Figure 5.

Time course of the EEG responses recorded in Experiment 1 (group-level average of the signal recorded at electrode FCz, segmented according to the length of each pattern: 2.4 s in patterns 1, 3, and 4; 3.2 s in patterns 2 and 5). The obtained signals were examined from electrode FCz, as this electrode displayed the maximum amplitude independently of the elicited signals frequencies in the EEG spectra. Some periodicity in the average signals can be observed, mostly in pattern 1, in which the 1.25 Hz frequency was predominant in the EEG spectrum. In the other patterns these periodicities are more difficult to assess in the time domain, as several frequencies were present concurrently. The sounds are represented by the gray bars.

Experiment 2: SS-EPs elicited by pattern 1 presented at upper musical tempi

All participants took part in a second experiment performed on a different day. In this experiment, participants listened to pattern 1 of the first experiment presented at either two or four times the original tempo. The two accelerated sound patterns were presented in separate blocks. The order of the blocks was counterbalanced across participants. The faster tempi were obtained by reducing the duration of the pattern events from 200 to 100 ms (tempo × 2) and 50 ms (tempo × 4). The task was similar to the task performed in Experiment 1. The sound pattern, EEG, and movement signals were analyzed using the same procedures (Figs. 6–8). Pattern 1 was chosen for this second experiment because it appeared to elicit the most consistent beat percept, as compared to the other patterns (Fig. 1). Importantly, increasing the tempo did not substantially distort the envelope spectra of pattern 1 (Fig. 7). Indeed, it kept relatively intact the balance of frequencies relative to each other in the envelope spectrum. For example, in both the original and the accelerated versions of pattern 1, the most salient frequency remained the third frequency present in the envelope spectrum. These accelerated patterns could thus be considered as suitable to examine the effect of tempo on the magnitude of the elicited SS-EPs.

Figure 6.

Experiment 2. Top row, Tapping periods produced by each participant while listening to pattern 1 presented at the original tempo, at tempo × 2, and at tempo × 4. Bottom row, Tapping latencies along the entire trial for each participant and tempo. Experiment 3. Top row, Tapping periods produced by each participant while listening to pattern 2 presented at the original tempo, at tempo × 2, and at tempo × 3. Bottom row, Tapping latencies along the entire trial for each participant and tempo.

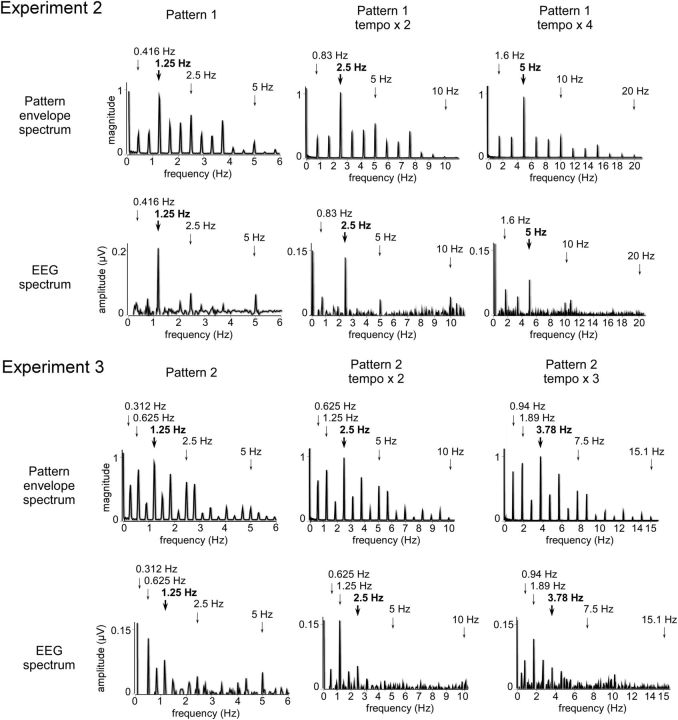

Figure 7.

Top rows, Envelope spectrum of pattern 1 at the original tempo, tempo × 2, and tempo × 4 (Experiment 2) and of pattern 2 at the original tempo, tempo × 2, and tempo × 3 (Experiment 3). The expected beat and meter frequencies are highlighted by the arrows (the grouping by four events is shown in bold). Bottom rows, Spectrum of the corresponding EEG (noise-subtracted amplitude averaged across all scalp channels in microvolts).

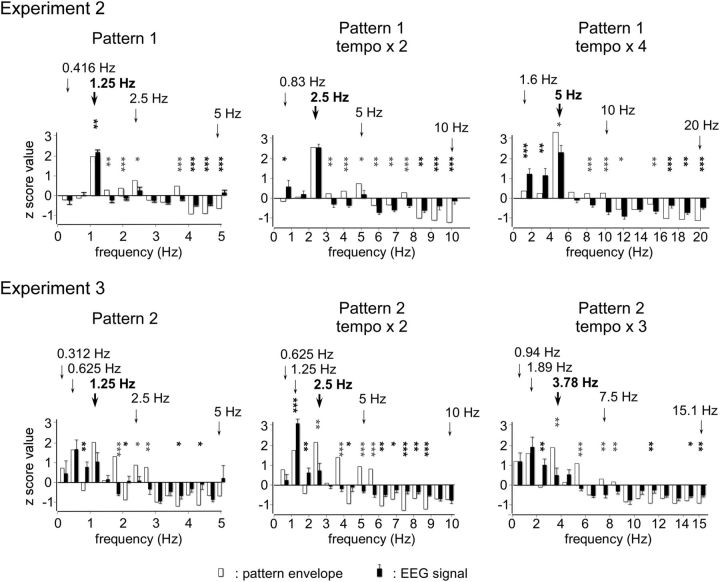

Figure 8.

The red bars represent the group-level mean z scores of the magnitude of the SS-EPs elicited by pattern 1 at original tempo, tempo × 2, and tempo × 4 (Experiment 2) and by pattern 2 at original tempo, tempo × 2, and tempo × 3 (Experiment 3). The black bars represent the z scores of the magnitude of the sound envelope at corresponding frequencies. Significant differences between SS-EP and sound envelope amplitude are represented with a black (SS-EP > sound envelope) or gray (SS-EP < sound envelope) asterisk (one-sample t test; *p < 0.05, **p < 0.01, ***p < 0.001). The expected beat and meter frequencies are highlighted by the arrows (frequencies corresponding to groupings with four events are shown in bold).

Experiment 3: SS-EPs elicited by pattern 2 presented at upper musical tempi

Six participants (three of whom took part in Experiments 1 and 2) took part in a third experiment performed on a different day. In this experiment, participants listened to pattern 2 of the first experiment presented at the original tempo, and at two and three times the original tempo. The faster tempi were obtained by reducing the duration of the pattern events from 200 to 100 ms (tempo × 2) and 66 ms (tempo × 3). The task was similar to the task performed in Experiment 1. The three tempi were presented in separate blocks. The order of the blocks was counterbalanced across participants. The sound pattern, EEG, and movement signals were analyzed using the same procedures as described in Experiment 1 (Figs. 6–8). As in Experiment 2, the spectrum of the sound envelope of the accelerated patterns was not substantially distorted compared to that of the original pattern (Fig. 7). Pattern 2 was chosen here because in the first experiment an apparent discrepancy was observed between the frequency of the SS-EP selectively enhanced in the EEG spectrum (0.625 Hz, corresponding to the subharmonic of beat frequency and thus to a grouping by eight events) and the frequency of the tapping on this pattern (1.25 Hz, corresponding to a grouping by four events). Therefore, Experiment 3 aimed at clarifying the correspondence between the frequency selectively enhanced in the EEG spectrum and the frequency selected in the tapping task by using different tempi.
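Shortening the event duration scales every grouping frequency by the same factor. The sketch below tabulates the grouping frequencies for the event durations used in Experiments 2 and 3; the names are illustrative.

```python
def grouping_frequency(n_events, event_ms):
    """Frequency (Hz) of a grouping of n_events events lasting event_ms milliseconds each."""
    return 1000.0 / (n_events * event_ms)

# Event durations from the text: 200 ms (original), 100 ms (x2), 66 ms (x3), 50 ms (x4).
tempi_ms = {"original": 200, "x2": 100, "x3": 66, "x4": 50}

table = {
    name: {n: grouping_frequency(n, ms) for n in (4, 8, 12, 16)}
    for name, ms in tempi_ms.items()
}

for name, row in table.items():
    print(name, {n: round(f, 3) for n, f in row.items()})
```

For example, a grouping by four events moves from 1.25 Hz at the original tempo to 2.5 Hz at tempo × 2 and 5 Hz at tempo × 4, whereas a grouping by 12 events at tempo × 4 falls near 1.67 Hz, back within the range around 2 Hz described in the Introduction.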

Results

Experiment 1

Hand-tapping movement

As shown in Figure 1, the participants tapped periodically at a frequency corresponding, in most cases, to a grouping by four events (1.25 Hz) and, in some cases, at related metric levels (corresponding to a grouping by two or eight events, thus at 2.5 or 0.625 Hz, respectively). Importantly, in patterns 3–5, the acoustic energy was not predominant at the frequency of the perceived beat selected on average for the tapping (Fig. 2) but at distinct, non-meter-related frequencies, thus confirming that the frequency at which a beat is perceived does not necessarily correspond to the frequency showing maximum acoustic energy in the physical structure of the pattern envelope.

As compared to the tapping performed on the other sound patterns, the distribution of tapping periods in pattern 5 showed a much greater variability, both within and across participants, indicating that this sound pattern did not elicit a stable and unequivocal beat percept (Fig. 1).

Detection task

During the recording, participants performed the detection task with a median score of 8.5/10 (interquartile range, 8–10) and no apparent difference in difficulty reported between the patterns.

Steady state-evoked potentials

For each of the five sound patterns, most of the frequencies constituting their envelope elicited clear SS-EPs in the EEG frequency spectrum (Fig. 2). SS-EP amplitudes were significantly different from zero at most of the frequencies corresponding to the perceived beat and related metric levels (Fig. 2). The scalp topography of the elicited SS-EPs was generally maximal over frontocentral regions and symmetrically distributed over the two hemispheres (Fig. 3). Moreover, as illustrated in Figure 3, the scalp topography of the SS-EPs elicited at beat- and meter-related frequencies did not substantially differ from the SS-EPs elicited at unrelated frequencies, thus suggesting that they originate from similar neuronal populations or that the EEG did not allow disentangling the different spatial location of the underlying neural activity in our experiment.

At the frequencies expected to relate to beat and meter, the standardized estimates of the SS-EP amplitudes, averaged across the five sound patterns, were significantly enhanced as compared to the standardized estimates of the sound envelope at these frequencies (t = 15.85, df = 8, p < 0.0001; Fig. 3). Conversely, at frequencies unrelated to beat and meter, the standardized SS-EP amplitudes were significantly reduced relative to the sound envelope (t = 16.62, df = 8, p < 0.0001; Fig. 3).

When examining the sound patterns separately, the SS-EP elicited at the expected beat frequency (1.25 Hz) was significantly enhanced in patterns 1 (t = 3.15, p = 0.01, df = 8), 3 (t = 2.55, p = 0.03, df = 8), and 4 (t = 3.31, p = 0.01, df = 8), but not in patterns 2 (reduced; t = 4.04, p = 0.003, df = 8) and 5 (t = 0.46, p = 0.65, df = 8) (Fig. 4). In pattern 2, the SS-EP corresponding to the beat frequency assessed in the hand-tapping condition was not significantly enhanced. However, the SS-EP corresponding to its subharmonic (grouping by eight events instead of four events) was enhanced (t = 2.48, p = 0.03, df = 8; Fig. 4). In pattern 5, except at the frequency of the unitary event (5 Hz), none of the SS-EPs elicited at beat- and meter-related frequencies appeared to be enhanced as compared to the sound envelope (Fig. 4). One possible explanation for the enhancement of the EEG signal observed at 5 Hz could be that although none of the frequencies within this sound pattern was able to induce a stable perception of beat, the frequency corresponding to the rate at which the individual sounds were presented constituted a relatively salient feature in the pattern. The fact that this frequency did not elicit a perception of beat could be related to the fact that it lay outside the ecological range for beat and meter perception (Repp, 2006).

Time domain analysis of the EEG signals

As displayed in Figure 5, some periodicity in the average signals could be observed in the time domain, mostly in pattern 1, in which the 1.25 Hz frequency was highly enhanced compared to other frequencies in the EEG spectrum. In the other patterns, these periodicities were more difficult to assess in the time domain, as several frequencies were present concurrently. The lack of clear time-locked evoked responses is most probably due to: (1) the fact that the sounds used to elicit the beat percepts were not as transient as those used in previous studies; and (2) the fact that in addition to the beat frequency, various metric levels were also enhanced, and these various frequencies are mixed when observed in the time domain.

Experiment 2

Hand-tapping movement

As shown in Figure 6, the participants changed their tapping frequency across the different tempi of presentation of pattern 1. Specifically, they tapped periodically at a frequency corresponding, in most cases, to the grouping by four events (i.e., at 2.5 Hz) in tempo × 2, and to the grouping by 12 events (i.e., at 1.6 Hz) in tempo × 4 (Fig. 6). Moreover, the distribution of tapping periods showed a greater variability across participants when accelerating the tempo, thus confirming previous observations that a stable and unequivocal percept of beat is preferentially elicited by a pattern presented at tempi lying within a specific frequency range (Repp, 2005, 2006) (Fig. 6).
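The frequencies quoted for each grouping follow from simple arithmetic: the unitary event rate at the original tempo (5 Hz) is multiplied by the tempo factor and divided by the number of events per group. A minimal Python sketch of that calculation is given below. The function name `grouping_frequency` and its parameters are illustrative and not part of the original analysis, and values rounded in the text may differ slightly from the exact results.

```python
BASE_EVENT_RATE_HZ = 5.0  # unitary event rate at the original tempo, as stated in the text


def grouping_frequency(tempo_factor, events_per_group, base_rate_hz=BASE_EVENT_RATE_HZ):
    """Frequency (Hz) of a periodic grouping of `events_per_group` events."""
    if events_per_group <= 0:
        raise ValueError("events_per_group must be positive")
    return base_rate_hz * tempo_factor / events_per_group


if __name__ == "__main__":
    # (tempo factor, events per group) pairs discussed in Experiments 1 to 3
    for tempo, group in [(1, 4), (2, 4), (4, 4), (4, 12), (3, 8), (3, 16)]:
        freq = grouping_frequency(tempo, group)
        print(f"tempo x {tempo}, grouping by {group}: {freq:.3f} Hz")
```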

Detection task

During the recording, participants performed the detection task with a median score of 3.5/4 (interquartile range, 3–4), with no apparent difference in difficulty reported between the different tempi.

Steady state-evoked potentials

Most of the frequencies constituting the envelope of the sound pattern elicited SS-EPs at corresponding frequencies (Fig. 7). Figure 8 shows the means and standard errors of the means of the z-score values obtained for the EEG signals across participants, as well as the z-score values obtained for the sound envelope of each tempo of presentation. Importantly, in contrast to the original tempo, the standardized amplitude of the SS-EP elicited at a frequency corresponding to the grouping by four events (at 2.5 Hz) was not significantly greater than the standardized magnitude measured at that frequency in the sound envelope in tempo × 2 (t = 0.01, p = 0.98, df = 8). Moreover, it was significantly smaller than the sound envelope in tempo × 4 (t = 2.86, p = 0.02, df = 8) (Fig. 8). In contrast, the beat subharmonic (corresponding to a grouping by 12 events; 1.6 Hz) was significantly enhanced in tempo × 4 (t = 4.03, p = 0.003, df = 8; Fig. 8).
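The role of the z-score standardization in these comparisons can be sketched in Python. The sketch assumes that z-scores are computed across the set of frequencies of interest within each spectrum, so that EEG amplitudes and sound-envelope magnitudes, which have different units, become comparable. The use of the sample standard deviation is an arbitrary choice made here, and all values are hypothetical rather than data from the study.

```python
import statistics


def zscore(values):
    """Standardize `values` to zero mean and unit (sample) standard deviation."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    if sd == 0:
        raise ValueError("values are constant; z-scores are undefined")
    return [(v - mean) / sd for v in values]


# Hypothetical magnitudes at four frequencies of interest (not data from the study).
freqs_hz = [0.625, 1.25, 2.5, 5.0]
eeg_uv = [0.10, 0.30, 0.15, 0.25]   # EEG amplitudes, microvolts
envelope_au = [2.0, 3.0, 8.0, 7.0]  # sound-envelope magnitudes, arbitrary units

eeg_z = zscore(eeg_uv)
envelope_z = zscore(envelope_au)
for f, e, s in zip(freqs_hz, eeg_z, envelope_z):
    print(f"{f:>5} Hz  EEG z = {e:+.2f}  envelope z = {s:+.2f}  difference = {e - s:+.2f}")
```

Because z-scores are unchanged by rescaling the input, the comparison depends only on the relative prominence of each frequency within its own spectrum, not on absolute units.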

Experiment 3

Hand-tapping movement

As shown in Figure 6, the participants changed their tapping frequency across the different tempi of presentation of pattern 2. Specifically, they tapped periodically at a frequency corresponding, in most cases, to the grouping by four events (i.e., at 1.25 Hz) at the original tempo. At tempo × 2, half of the participants still tapped on a grouping by four events (at 2.5 Hz), and the other half tapped on a grouping by eight events (at 1.25 Hz) (Fig. 6). At tempo × 3, some participants tapped to a grouping by eight events (1.875 Hz), whereas others tapped to a grouping by sixteen events (0.94 Hz).

Detection task

During the recording, participants performed the detection task with a median score of 3.5/4 (interquartile range, 3–4). There was no apparent difference in difficulty between the different tempi.

Steady state-evoked potentials

Most of the frequencies constituting the envelope of the sound pattern elicited SS-EPs at corresponding frequencies (Fig. 7). As in Experiment 1, presentation of the sound pattern at the original tempo elicited SS-EPs that were predominant at the frequency corresponding to the subharmonic of the beat (i.e., a grouping by eight events) (Figs. 7 and 8). Figure 8 shows the means and SEMs of the z-score values obtained for the EEG signals across participants, as well as the z-score values obtained for the sound envelope for each tempo of presentation. The z-score value of the SS-EP corresponding to the grouping by eight events (i.e., at 0.625, 1.25, and 1.875 Hz in the three tempi, respectively) was the highest on average. When compared to the sound envelope, this z-score value was significantly enhanced at tempo × 2 (t = 7.45, p = 0.0007, df = 5), but not at the original tempo (t = 0.11, p = 0.91, df = 5) or at tempo × 3 (t = 0.67, p = 0.52, df = 5) (Fig. 8).

Discussion

Participants listened to rhythmic sound patterns expected to induce a spontaneous perception of beat and meter at 1.25 Hz and its (sub)harmonics (Essens and Povel, 1985). The multiple periodic features of these complex sound patterns elicited corresponding periodic signals in the EEG, identified in the frequency domain as steady state-evoked potentials or SS-EPs (Regan, 1989). This result is in line with previous evidence of envelope locking in neurons of the auditory cortex, that is, the ability of these neurons to synchronize their activity to the temporal envelope of acoustic streams (for review, see Eggermont, 2001; Bendor and Wang, 2007).

Importantly, when comparing the frequency spectrum of the EEG to the frequency spectrum of the sound envelope, we found that the magnitude of the SS-EPs elicited at beat- and meter-related frequencies was significantly increased as compared to the magnitude of the SS-EPs elicited at frequencies unrelated to the beat and meter percept (Fig. 2). The fact that the magnitude of the elicited SS-EPs did not faithfully reflect the magnitude of the acoustic energy at corresponding frequencies (Fig. 3) suggests a mechanism of selective enhancement of neural activities related to beat and meter perception, possibly resulting from a process of dynamic attending (Jones and Boltz, 1989).

It is worth noting that this selective enhancement of beat- and meter-related SS-EPs was observed even in sound patterns in which the acoustic energy was not predominant at beat frequency (e.g., patterns 3 and 4; Fig. 4). Conversely, frequencies showing predominant acoustic energy in the sound envelope but unrelated to the beat and meter were markedly reduced in the EEG. Taken together, this suggests that beat and meter perception could involve spontaneous neural mechanisms of selection of beat-relevant frequencies in processing rhythmic patterns, and that these mechanisms can be captured with SS-EPs (Velasco and Large, 2011; Zion Golumbic et al., 2012).

This interpretation may also account for previous observations that event-related potentials elicited at different time points relative to the beat or meter cycle exhibit differences in amplitude when observed in the time domain (Iversen et al., 2009; Fujioka et al., 2010; Schaefer et al., 2011). Indeed, the observed SS-EP enhancement at beat-related frequencies could be interpreted as resulting from a stronger neural response to sounds or silences occurring “on beat.” Whether SS-EPs result from a resonance within neurons responding to the stimulus or whether they are explained by the superimposition of transient event-related potentials remains a matter of debate (Galambos et al., 1981; Draganova et al., 2002). As compared to analyses in the time domain, characterizing beat- and meter-related EEG responses using SS-EPs offers several advantages. First, it allows the assessment of selective enhancement not merely at one frequency, corresponding to the beat, but also at other frequencies corresponding to distinct metric levels associated with beat perception (London, 2004; Large, 2008). Second, because the sounds eliciting a beat percept are often not cleanly separated from one another by long-lasting periods of silence, response overlap can make it difficult to assess the enhancement of beat- and meter-related activities in the time domain (Fig. 5).

Interestingly, when examining SS-EPs elicited by each pattern separately, we found that pattern 5 did not elicit enhanced SS-EPs at the frequency of the expected beat (Figs. 3 and 4). Importantly, moving to the beat in this pattern showed variability both within and across participants (Fig. 1), suggesting that this pattern failed to elicit a stable and unequivocal beat percept. This was possibly due to the intrinsic complexity of its rhythmic structure (Thul and Toussaint, 2008) or, perhaps, to cultural or individual long-term exposure bias, yielding a blurred attraction for the frequency expected to correspond to the beat in this specific rhythmic pattern. Hence, the lack of beat-related SS-EP enhancement for this pattern actually corroborates the view that nonlinear transformation of the sound by neural populations is involved in building beat and meter representations while listening to rhythms.

Most importantly, this interpretation is strengthened by the fact that this selective enhancement phenomenon appeared to be sensitive to the tempo at which the sound patterns were presented. This was tested in Experiment 2, in which the tempo was accelerated by factors of 2 and 4, thus reaching the upper limit for beat perception (grouping by four events at 2.5 Hz in tempo × 2 and 5 Hz in tempo × 4). Indeed, beat and meter perception is known to emerge within a specific tempo range corresponding to frequencies around 2 Hz (van Noorden and Moelants, 1999; Repp, 2005, 2006). This is explained, at least in part, by the temporal limits for dynamic attending to discrete events along time (Jones and Boltz, 1989) and for perceptual grouping in the auditory system (van Noorden, 1975; Bregman, 1990). This tempo sensitivity for beat perception was confirmed in Experiment 2. Indeed, the tapping task showed that at twice the original tempo, participants still tapped, on average, to the grouping by four events (2.5 Hz). However, at four times the original tempo, participants no longer tapped to the grouping by four events (5 Hz) and, instead, tapped to a grouping by 12 events (1.6 Hz) (Fig. 6). Most importantly, the magnitude of the SS-EP appearing at the frequency corresponding to the grouping by four events was dampened at the fastest tempo. In contrast, the magnitude of the SS-EPs appearing at subharmonics lying within the frequency range for beat perception at this accelerated tempo was enhanced (Figs. 7 and 8). Hence, the shift in beat percept appeared to parallel the shift in the selective enhancement of SS-EPs, supporting the view that the enhancement of beat-related SS-EPs underlies the emergence of beat perception (Figs. 7 and 8). Moreover, as varying the frequency of stimulation can act as a probe of a resonance bandpass (Hutcheon and Yarom, 2000), these results suggest that beat- and meter-related SS-EPs could, at least in part, result from a resonance phenomenon (Large and Kolen, 1994; Large, 2008). Hence, they may corroborate the hypothesis according to which beat perception in music emerges from the entrainment of neuronal populations resonating at beat frequency (van Noorden and Moelants, 1999; Large, 2008).
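The idea of probing a resonance bandpass by varying the stimulation frequency can be illustrated with a toy model. The Python sketch below uses the steady-state gain of a generic second-order band-pass resonator. It is not the neural oscillator model of Large and Kolen (1994), nor a model fitted to the present data. The resonance frequency of 2 Hz is taken from the tempo range mentioned in the text, whereas the damping ratio of 0.3 and the function name `bandpass_gain` are arbitrary assumptions made for illustration.

```python
import math


def bandpass_gain(freq_hz, resonance_hz=2.0, damping_ratio=0.3):
    """Steady-state gain of a second-order band-pass resonator (equal to 1 at resonance)."""
    r = freq_hz / resonance_hz                # stimulation frequency relative to resonance
    damping_term = 2.0 * damping_ratio * r
    return damping_term / math.hypot(1.0 - r * r, damping_term)


if __name__ == "__main__":
    # Frequencies of the groupings discussed in the text
    for f in (0.625, 1.25, 2.0, 2.5, 5.0):
        print(f"{f:>5} Hz: gain = {bandpass_gain(f):.2f}")
```

Under these assumptions, an input at 2.5 Hz is passed with far less attenuation than an input at 5 Hz, which is the qualitative pattern that a resonance account would predict when the grouping by four events is moved from tempo × 2 to tempo × 4.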

Finally, the relationship between beat perception as assessed through overt movement of a body segment and selective SS-EP enhancement observed in the absence of overt movement was explored further in pattern 2. Although participants consistently moved to a grouping by four events in the hand-tapping task for this pattern (1.25 Hz; Fig. 1), the SS-EPs recorded in the absence of hand tapping showed a relative reduction at that frequency in the EEG spectrum. Instead, a significant enhancement was observed for the SS-EP appearing at the frequency corresponding to a grouping by eight events. A possible explanation for this discrepancy is that the frequency of the beat perceived when listening without moving differed from the frequency of the beat perceived when performing the hand-tapping task. Indeed, the hand-tapping frequency could be biased by several constraints, such as biomechanical constraints. This interpretation is in line with evidence showing that humans are spontaneously entrained to move on musical rhythms using specific body segments depending on the tempo (e.g., slow metric levels preferentially entrain axial body segments, as in head bouncing, whereas fast metric levels tend to entrain more distal body parts, as in foot tapping) (van Noorden and Moelants, 1999; MacDougall and Moore, 2005; Toiviainen et al., 2010). To explore this further, we examined in Experiment 3 the effect of accelerating the tempo of pattern 2. The tapping showed that at two times the original tempo, half of the participants still tapped to the grouping by four events (as at the original tempo; now 2.5 Hz), but the other half tapped to the grouping by eight events (1.25 Hz) (Fig. 6). At three times the original tempo, tapping was similarly split between the grouping by eight events (1.875 Hz) and the grouping by sixteen events (0.94 Hz) (Fig. 6). In contrast, when listening without moving, a tendency toward selective enhancement at the frequency corresponding to the same grouping by eight events was observed across the tempi in the EEG (Figs. 7 and 8). Hence, it could be that performing the hand-tapping task engaged specific constraints leading to differences between the frequency of the beat perceived in the hand-tapping condition and the frequency of the beat perceived in the passive condition.

Taken together, the observation that SS-EPs are selectively enhanced when elicited at frequencies compatible with beat and meter perception indicates that these responses do not merely reflect the physical structure of the sound envelope but instead reflect the spontaneous emergence of an internal representation of beat, possibly through a mechanism of selective neuronal entrainment within a resonance frequency range.

Footnotes

S.N. is supported by the Fund for Scientific Research of the French-Speaking Community of Belgium (F.R.S.-FNRS). A.M. has received support from the French-Speaking Community of Belgium (F.R.S.-FNRS) FRSM 3.4558.12 Convention Grant. I.P. is supported by the Natural Sciences and Engineering Research Council of Canada, the Canadian Institutes of Health Research, and a Canada Research Chair.

References

- Bach M, Meigen T. Do's and don'ts in Fourier analysis of steady-state potentials. Doc Ophthalmol. 1999;99:69–82. doi: 10.1023/a:1002648202420.

- Bell AJ, Sejnowski TJ. An information-maximization approach to blind separation and blind deconvolution. Neural Comput. 1995;7:1129–1159. doi: 10.1162/neco.1995.7.6.1129.

- Bendor D, Wang X. Differential neural coding of acoustic flutter within primate auditory cortex. Nat Neurosci. 2007;10:763–771. doi: 10.1038/nn1888.

- Brainard DH. The psychophysics toolbox. Spat Vis. 1997;10:433–436.

- Bregman AS. Auditory scene analysis. Cambridge, MA: MIT; 1990.

- Draganova R, Ross B, Borgmann C, Pantev C. Auditory cortical response patterns to multiple rhythms of AM sound. Ear Hear. 2002;23:254–265. doi: 10.1097/00003446-200206000-00009.

- Eggermont JJ. Between sound and perception: reviewing the search for a neural code. Hear Res. 2001;157:1–42. doi: 10.1016/s0378-5955(01)00259-3.

- Essens PJ, Povel DJ. Metrical and nonmetrical representations of temporal patterns. Percept Psychophys. 1985;37:1–7. doi: 10.3758/bf03207132.

- Fitch WT, Rosenfeld AJ. Perception and production of syncopated rhythms. Music Percept. 2007;25:43–58.

- Frigo M, Johnson SG. FFTW: an adaptive software architecture for the FFT. Proceedings of the 1998 IEEE International Conference on Acoustics, Speech, and Signal Processing; Seattle: IEEE; 1998. pp. 1381–1384.

- Fujioka T, Zendel BR, Ross B. Endogenous neuromagnetic activity for mental hierarchy of timing. J Neurosci. 2010;30:3458–3466. doi: 10.1523/JNEUROSCI.3086-09.2010.

- Galambos R, Makeig S, Talmachoff PJ. A 40-Hz auditory potential recorded from the human scalp. Proc Natl Acad Sci U S A. 1981;78:2643–2647. doi: 10.1073/pnas.78.4.2643.

- Hutcheon B, Yarom Y. Resonance, oscillation and the intrinsic frequency preferences of neurons. Trends Neurosci. 2000;23:216–222. doi: 10.1016/s0166-2236(00)01547-2.

- Iversen JR, Repp BH, Patel AD. Top-down control of rhythm perception modulates early auditory responses. Ann NY Acad Sci. 2009;1169:58–73. doi: 10.1111/j.1749-6632.2009.04579.x.

- Jones MR, Boltz M. Dynamic attending and responses to time. Psychol Rev. 1989;96:459–491. doi: 10.1037/0033-295x.96.3.459.

- Jung TP, Makeig S, Westerfield M, Townsend J, Courchesne E, Sejnowski TJ. Removal of eye activity artifacts from visual event-related potentials in normal and clinical subjects. Clin Neurophysiol. 2000;111:1745–1758. doi: 10.1016/s1388-2457(00)00386-2.

- Large EW. Resonating to musical rhythm: theory and experiment. In: Grondin S, editor. The psychology of time. Bingley, West Yorkshire, UK: Emerald; 2008. pp. 189–232.

- Large EW, Kolen JF. Resonance and the perception of musical meter. Connect Sci. 1994;6:177–208.

- Lartillot O, Toiviainen P. A Matlab toolbox for musical feature extraction from audio. Paper presented at the 10th International Conference on Digital Audio Effects (DAFx-07); September; Bordeaux, France. 2007.

- London J. Hearing in time: psychological aspects of musical meter. New York: Oxford UP; 2004.

- MacDougall HG, Moore ST. Marching to the beat of the same drummer: the spontaneous tempo of human locomotion. J Appl Physiol. 2005;99:1164–1173. doi: 10.1152/japplphysiol.00138.2005.

- Makeig S. Response: event-related brain dynamics – unifying brain electrophysiology. Trends Neurosci. 2002;25:390. doi: 10.1016/s0166-2236(02)02198-7.

- Mouraux A, Iannetti GD. Across-trial averaging of event-related EEG responses and beyond. Magn Reson Imaging. 2008;26:1041–1054. doi: 10.1016/j.mri.2008.01.011.

- Mouraux A, Iannetti GD, Colon E, Nozaradan S, Legrain V, Plaghki L. Nociceptive steady-state evoked potentials elicited by rapid periodic thermal stimulation of cutaneous nociceptors. J Neurosci. 2011;31:6079–6087. doi: 10.1523/JNEUROSCI.3977-10.2011.

- Nozaradan S, Peretz I, Missal M, Mouraux A. Tagging the neuronal entrainment to beat and meter. J Neurosci. 2011;31:10234–10240. doi: 10.1523/JNEUROSCI.0411-11.2011.

- Nozaradan S, Peretz I, Mouraux A. Steady-state evoked potentials as an index of multisensory temporal binding. Neuroimage. 2012;60:21–28. doi: 10.1016/j.neuroimage.2011.11.065.

- Phillips-Silver J, Aktipis CA, Bryant GA. The ecology of entrainment: foundations of coordinated rhythmic movement. Music Percept. 2010;28:3–14. doi: 10.1525/mp.2010.28.1.3.

- Pikovsky A, Rosenblum M, Kurths J. Synchronization: a universal concept in non-linear sciences. Cambridge, UK: Cambridge UP; 2001.

- Regan D. Human brain electrophysiology: evoked potentials and evoked magnetic fields in science and medicine. New York: Elsevier; 1989.

- Repp BH. Sensorimotor synchronization: a review of the tapping literature. Psychon Bull Rev. 2005;12:969–992. doi: 10.3758/bf03206433.

- Repp BH. Rate limits of sensorimotor synchronization. Adv Cogn Psychol. 2006;2:163–181.

- Saupe K, Schröger E, Andersen SK, Müller MM. Neural mechanisms of intermodal sustained selective attention with concurrently presented auditory and visual stimuli. Front Hum Neurosci. 2009;3:58. doi: 10.3389/neuro.09.058.2009.

- Schaefer RS, Vlek RJ, Desain P. Decomposing rhythm processing: electroencephalography of perceived and self-imposed rhythmic patterns. Psychol Res. 2011;75:95–106. doi: 10.1007/s00426-010-0293-4.

- Thul E, Toussaint G. Rhythm complexity measures: a comparison of mathematical models of human perception and performance. Paper presented at the 9th International Conference on Music Information Retrieval; September; Philadelphia. 2008.

- Toiviainen P, Luck G, Thompson M. Embodied meter: hierarchical eigenmodes in music-induced movement. Music Percept. 2010;28:59–70.

- van Noorden L. Temporal coherence in the perception of tone sequences. PhD thesis, Eindhoven University of Technology; 1975.

- van Noorden L, Moelants D. Resonance in the perception of musical pulse. J New Music Res. 1999;28:43–66.

- Velasco MJ, Large EW. Pulse detection in syncopated rhythms using neural oscillators. Paper presented at the 12th Annual Conference of the International Society for Music Information Retrieval; October; Miami. 2011.

- Zion Golumbic EM, Poeppel D, Schroeder CE. Temporal context in speech processing and attentional stream selection: a behavioral and neural perspective. Brain Lang. 2012;122:151–161. doi: 10.1016/j.bandl.2011.12.010.