Abstract

Direction of gaze (eye angle + head angle) has been shown to be important for representing space for action, implying a crucial role of vision for spatial updating. However, blind people have no access to vision yet are able to perform goal-directed actions successfully. Here, we investigated the role of visual experience for localizing and updating targets as a function of intervening gaze shifts in humans. People who differed in visual experience (late blind, congenitally blind, or sighted) were briefly presented with a proprioceptive reach target while facing it. Before they reached to the target's remembered location, they turned their head toward an eccentric direction that also induced corresponding eye movements in sighted and late blind individuals. We found that reaching errors varied systematically as a function of shift in gaze direction only in participants with early visual experience (sighted and late blind). In the late blind, this effect was solely present in people with moveable eyes but not in people with at least one glass eye. Our results suggest that the effect of gaze shifts on spatial updating develops on the basis of visual experience early in life and remains even after loss of vision as long as feedback from the eyes and head is available.

Introduction

Spatial coding for action depends heavily on where we are looking and what we see. Results from behavioral and physiological studies suggest that spatial memory for reaching is at least initially coded and updated relative to gaze (where we are looking) both for visual (Henriques et al., 1998; Batista et al., 1999; Medendorp et al., 2003) and nonvisual (Pouget et al., 2002; Fiehler et al., 2010; Jones and Henriques, 2010) targets, before being transformed into a more stable motor-appropriate representation (for review, see Andersen and Buneo, 2002; Crawford et al., 2011). Likewise, vision (what we see) influences spatial coding for action. Altered visual feedback of the environment using prisms (Harris, 1963; Held and Freedman, 1963; Jakobson and Goodale, 1989; van Beers et al., 2002; Zwiers et al., 2003; Simani et al., 2007) or of the hand using virtual reality (Ghahramani et al., 1996; Vetter et al., 1999; Wang and Sainburg, 2005) systematically changes reaching movements toward visual and nonvisual targets. Even perceptual localization of visual (Redding and Wallace, 1992; Ooi et al., 2001; Hatada et al., 2006) and nonvisual (Lackner, 1973; Cressman and Henriques, 2009, 2010) stimuli is affected by distorted vision. Given the important role of gaze direction and vision for representing space, how does the absence of vision affect spatial coding for action?

There is converging evidence that lack of vision from birth shapes the architecture of the CNS, and thus cortical functions, in a permanent manner (Lewis and Maurer, 2005; Pascual-Leone et al., 2005; Levin et al., 2010; Merabet and Pascual-Leone, 2010). Studies in congenitally and late blind people have demonstrated that visual experience early in life is crucial to develop an externally anchored reference frame. Late blind persons, like sighted individuals, primarily code targets in an external reference frame, while congenitally blind individuals mainly rely on an internal body-centered reference frame (Gaunet and Rossetti, 2006; Pasqualotto and Newell, 2007; Röder et al., 2007; Sukemiya et al., 2008). However, spatial coding strategies in the congenitally blind also depend on early nonvisual experience of space (Fiehler et al., 2009). Humans who were visually deprived during a critical period in early life show permanent deficits in cross-modal interactions (Putzar et al., 2007), spatial acuity, and complex form recognition (Maurer and Lewis, 2001; Fine et al., 2003), while similarly deprived nonhuman primates show imperfect reach and grasp movement (Held and Bauer, 1974). Yet, we do not know how the loss of vision affects spatial coding and updating of targets for goal-directed movements.

Here, we test the impact of visual experience on people's ability to localize targets and update their locations following movements of the head, i.e., shifts in gaze direction. Three groups of people with different visual experience (sighted, late blind, and congenitally blind) faced in the direction of a briefly presented proprioceptive reach target (left hand), before changing gaze direction and reaching to the remembered target location. In this way, we investigated how reaching endpoints vary as a function of gaze and visual experience.

Materials and Methods

Subjects.

We investigated three groups of participants (for details, see Table 1) consisting of 12 congenitally blind (CB; 30.2 ± 5.92 years old; onset of blindness at birth), 12 late blind (LB; 30.7 ± 7.78 years old; onset of blindness at age >3 years), and 12 sighted controls (SC; 27.9 ± 6.17 years old; normal or corrected-to-normal vision). All three groups were matched by gender (6 females, 6 males), age (maximal deviation, 8 years; mean, 2.89 years), and level of education (high school degree). Furthermore, all of them were right-handed according to the German translation of the Edinburgh Handedness Inventory (EHI) (Oldfield, 1971) (EHI score: CB, 70 ± 22; LB, 75 ± 19; SC, 91 ± 10). Participants were either paid for their participation or received course credits. The experiment was performed in accordance with the ethical standards laid down by the German Psychological Society and in the Declaration of Helsinki (2008).

Table 1.

Description of the congenitally blind, late blind, and sighted participants

| ID | Sex | Congenitally blind: age (years) | Congenitally blind: onset | Congenitally blind: cause of blindness | Late blind: age (years) | Late blind: onset age (years) | Late blind: cause of blindness | Late blind: glass eye(s) | Late blind: glass eyes since age of (years) | Sighted: age (years) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | F | 28 | Birth | Retinal detachment and cataract | 26 | 21 | Retinitis pigmentosa | R | 24 | 26 |
| 2 | F | 32 | Birth | Retinopathy of prematurity | 32 | 20 | Morbus Behçet | | | 29 |
| 3 | M | 35 | Birth | Inherited retinal dysplasia | 40 | 14 | Retinal detachment and glaucoma | L | 31 | 37 |
| 4 | M | 23 | Birth | Retinitis pigmentosa | 23 | 13 | Retinitis pigmentosa | | | 20 |
| 5 | F | 42 | Birth | Birth injury | 48 | 28 | Macular degeneration | | | 40 |
| 6 | M | 30 | Birth | Leber's congenital amaurosis | 35 | 17 | Glaucoma | L | 27 | 28 |
| 7 | F | 18 | Birth | Retinitis pigmentosa | 23 | 15 | Cataract | L | 11 | 20 |
| 8 | M | 26 | Birth | Retinitis pigmentosa | 24 | 18 | Macular degeneration | | | 23 |
| 9 | F | 31 | Birth | Retinopathy of prematurity | 26 | 6 | Haematoma excision at optic nerve | | | 27 |
| 10 | M | 34 | Birth | Recessively inherited disease | 36 | 24 | Retinitis pigmentosa and macular degeneration | | | 33 |
| 11 | F | 31 | Birth | Optic nerve atrophy | 29 | 4 | Retinoblastoma | R, L | 5 | 27 |
| 12 | M | 29 | Birth | DDT during pregnancy | 26 | 7 | Inherited disease | | | 25 |

The side of glass eye(s) in the late blind is indicated with R for right and with L for left.

Equipment.

Participants sat in front of a movement apparatus mounted on a table. Two servomotors controlled by LabVIEW (http://www.ni.com/labview/) steered a handle of the apparatus in the x-y plane with an acceleration of 0.4 m/s² and a maximum velocity of 0.2 m/s. Thus, we were able to bring the participants' left hand to a proprioceptive target site through a straight movement of the device. We registered reach endpoints to proprioceptive targets with a touch screen panel (Keytec) of 430 × 330 × 3 mm (Fig. 1, light gray rectangle) mounted above the movement apparatus to prevent any tactile feedback of the target hand. Head position was fixed by a moveable bite bar equipped with a mechanical stopper, which allowed participants to perform controlled head movements to a predefined position. We recorded head movements with an ultrasound-based tracking system (Zebris CMS20; Zebris Medical) using two ultrasound markers mounted on a special holder along the interhemispheric midline (Fig. 1). Data were sampled at 100 Hz and analyzed offline. Horizontal eye movements were measured by means of a horizontal electrooculogram (HEOG) with a sampling rate of 500 Hz (cf., Fiehler et al., 2010). Silver/silver chloride electrodes were placed next to the canthi of the left and right eyes (bipolar recording) and at the left mastoid (ground). Impedances were kept <5 kΩ. The locations of all stimuli were defined as angular deviations (degrees) from the midline, centered at the rotational axis of the head. We presented task-specific information by auditory signals through three loudspeakers placed 125 cm from the participant.

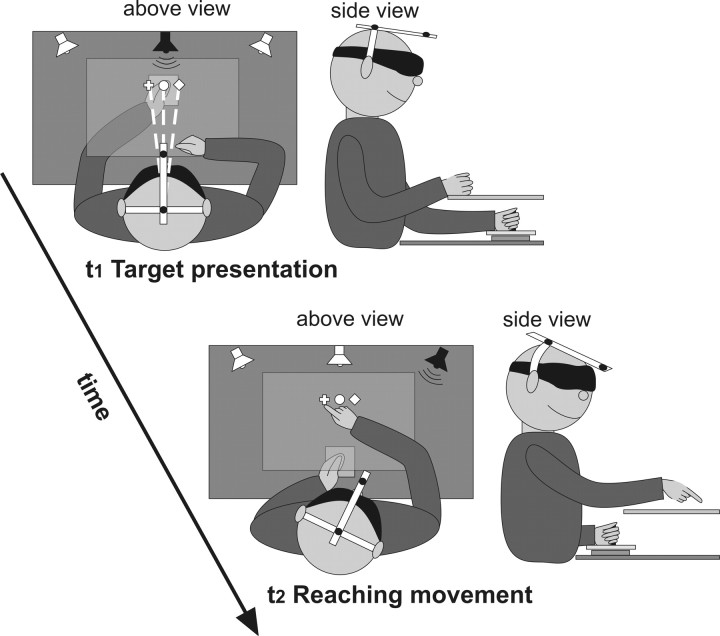

Figure 1.

Setup and procedure for the main experiment (left, above view; right, side view). t1, Proprioceptive target (left thumb) was presented at one of three possible locations (cross, 0°; circle, 5° left; diamond, 5° right) for 1 s, while the head was directed toward the target (dashed lines). t2, After the left-target hand was moved back to the starting point, the speaker indicated the direction that subjects had to move their head, by 10° or 15° leftwards or rightwards. With the head in this direction, participants used their outstretched right index finger to reach to the remembered proprioceptive target.

Procedure.

The participants' left thumb served as the proprioceptive reach target. Participants grasped the handle of the apparatus with a power grip of the left hand with the thumb on the top. The left hand was passively moved by the apparatus within 1500 ms along a straight horizontal path of 10 cm (Fig. 1, white dashed lines) from a starting point located 25 cm in front of the chest at the body midline to one of three target positions. The target positions lay straight at 0°, 5° left, or 5° right (Fig. 1, white circle, white cross, or white diamond, respectively) relative to the body midline. During the movement, a speaker placed in target direction (Fig. 1, upper left, black speaker) produced a continuous low-pitched 440 Hz tone. A high-pitched 1 kHz tone (500 ms) prompted participants to shift the head in the direction of the tone and then to reach to the target with the outstretched right index finger as accurately as possible. Before the experiment, participants underwent several training trials to ensure correct task execution.

Conditions and tasks.

In the main experiment (Fig. 1), blindfolded participants performed controlled head movements that inevitably induced corresponding eye movements (gaze shifts). First, the apparatus guided the hand to the proprioceptive target position (0°, 5° left, or 5° right relative to the body midline, with the origin at the head central axis; Fig. 1, upper left, white dashed lines) while participants directed their gaze toward the target with the help of a low-pitched tone and tactile guidance for the tip of the nose. The left hand (proprioceptive target) stopped for 1 s at the target site and then was moved back to the starting point (Fig. 1, bottom). Before the reaching movement, gaze was varied across four different heading (facing) directions that lay 10° and 15° leftwards and rightwards (Fig. 1, bottom). A speaker on the left or right side (at 10° or 15°) presented the high-pitched tone (Fig. 1, bottom, black speaker) that instructed participants to change gaze in the direction of the tone, i.e., they turned the head until it reached the predefined head position marked by a mechanical stopper. While keeping their head in this direction, participants reached to the remembered proprioceptive target (Fig. 1, bottom). Thus, gaze was shifted after target presentation and before reaching, which forced participants to update the target location. Change in gaze direction was varied blockwise, i.e., participants turned their head either 10° leftwards or rightwards in one block and 15° leftwards or rightwards in another block. In total, participants performed 15 reaching movements to each of the three targets with four different heading directions (10° and 15° to the left and to the right), resulting in 12 combinations.
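The blocked design described above can be enumerated in a few lines. The following is only a sketch: the sign convention (negative values for leftward angles), the function name `build_blocks`, and the unshuffled trial order are illustrative assumptions, since the text does not specify how trials were ordered within a block.

```python
from itertools import product

# Assumed convention: negative angles lie to the left of the body midline.
TARGETS_DEG = (-5, 0, 5)
HEAD_DIRECTIONS_DEG = (-15, -10, 10, 15)
REPETITIONS = 15  # reaches per target and head direction, as stated in the text


def build_blocks():
    """Return {amplitude: [(target_deg, head_deg), ...]} for the two blocks.

    One block holds the 10 deg head turns (left and right), the other the
    15 deg head turns. Trial order within a block is left unshuffled here.
    """
    blocks = {}
    for amplitude in (10, 15):
        directions = [h for h in HEAD_DIRECTIONS_DEG if abs(h) == amplitude]
        blocks[amplitude] = [
            (target, head)
            for target, head in product(TARGETS_DEG, directions)
            for _ in range(REPETITIONS)
        ]
    return blocks


blocks = build_blocks()
# Number of distinct target/head-direction pairings across both blocks.
n_combinations = len({trial for trials in blocks.values() for trial in trials})
# Total number of reaches if every repetition of every pairing is completed.
n_trials = sum(len(trials) for trials in blocks.values())
```

Under these assumptions, the 12 combinations with 15 repetitions each would amount to 180 reaches per participant, 90 in each block.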

In addition, we ran three proprioceptive-reaching control conditions for all three groups and one eye movement control condition for the sighted group. In the proprioceptive-reaching control conditions, the presentation of the proprioceptive target was the same as in the main experiment. The target either remained in that outward location (Fig. 1, top) for the online and baseline reaching control condition, or, as in the main experiment, was returned to the start position before reaching for the remembered reaching control condition (Fig. 1, bottom). The main difference in these three control tasks was that the head was not deviated but remained facing the target site during reaching (for the online and remembered reaching control conditions), or the head direction was not restricted to any direction (baseline reaching control condition). We used the baseline control condition to measure any individual biases in reaching and then to remove them from all other conditions. Here, participants reached to each of three target sites five times. The purpose of the online and remembered proprioceptive controls was to determine how precisely participants can reach to proprioceptive targets when gaze/head is directed toward the target (for results, see Fig. 3A). In each of these two conditions, participants reached to the three targets 15 times. The timing and signals were otherwise identical to those in the main experiment as described above.

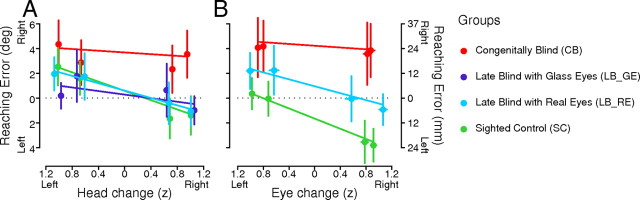

Figure 4.

A, B, Horizontal reaching errors as a function of standardized change in head direction (A) and eye position (B), averaged across targets and plotted for the congenitally blind, the sighted controls, and the late blind, separated into those with glass eyes and with real movable eyes. Colors, scales, and error bars as in Figure 3.

The fourth control condition was an eye movement control condition performed only on sighted participants, in order to compare the blindfolded eye movements that accompanied the head shift in the main experiment with those when the eyes were uncovered and free to move to the visual target while the head remained stationary (see Fig. 2B). In this condition, both eyes and head were initially directed toward one of three visual targets (LED lit for 1.5 s) located at 0°, 5° left, or 5° right, but then only the eyes moved to a visible saccade target (LED lit for 3 s) presented at 10° or 15° to the left or right of the body midline (analogous to the head directions of the main experiment). The presentation of the saccade target was accompanied by a high-pitched tone from the same direction. Participants had to saccade 15 times from each of the initial visual targets (0°, 5° left, 5° right) to each of the four saccadic targets (15° left, 10° left, 10° right, 15° right).

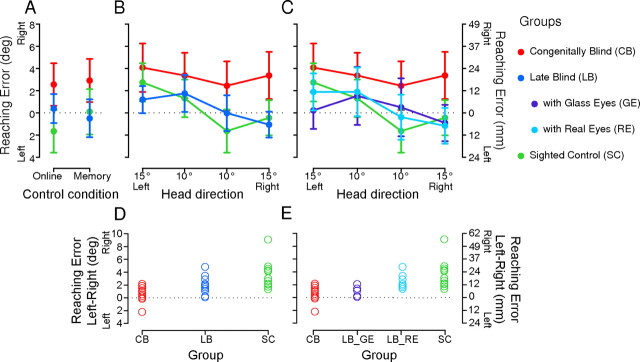

Figure 3.

Horizontal reaching errors represented in degrees on the left y-axis and approximate millimeters on the right y-axis. A–C, Errors are averaged across the three targets and across participants in each group. A, Proprioceptive-reaching control conditions: online and remembered reaching conditions in the congenitally blind (red), late blind (blue), and sighted control (green) groups. B, C, Main experiment: Errors plotted as a function of the four different changes in head direction for congenitally blind (red), late blind (blue), and sighted control (green) groups (B), and with the late blind divided into subgroups with glass eyes (violet) and with real movable eyes (cyan) (C). Error bars are SEM. Target location is represented by the dotted horizontal line at zero degrees on the y-axis. D, E, Individual differences of reaching errors to the left (mean of 10° and 15° left) minus reaching errors to the right (mean of 10° and 15° right) for congenitally blind (red), late blind (blue), and sighted control (green) groups (D), and with the late blind divided into subgroups with glass eyes (violet) and with real movable eyes (cyan) (E).

Participants performed the baseline condition first, followed by the main experiment and the proprioceptive control conditions (online and remembered reaching task) in separate blocks, whose order was randomized across participants. Additionally, sighted participants underwent the eye movement control condition at the end of the experiment. The whole experiment lasted ∼180 min and was split into two sessions.

Data processing.

In the main experiment, participants turned their head (10° or 15° leftwards or rightwards) before they initiated the reaching movement. To verify whether participants started the reach movement after the head had reached the required heading direction, we recorded head movements with an ultrasound-based tracking system. Positional changes of the ultrasound markers were analyzed offline in the x and y plane using R 2.11.0 and SPSS 17.0. The continuous head position signals were segmented in time windows of interest ranging from 100 ms before to 2000 ms after the high-pitched tone, comprising the time of head direction change together with the subsequent reach movement. Finally, the segments were baseline corrected by setting the head direction to zero at the time when the high-pitched tone occurred, i.e., when the head movement started. On the basis of the head movement data, we excluded all trials from further analyses where participants did not move their head in the time window of interest or moved their head in the wrong direction (in total, 9.97% of all trials).
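The segmentation, baseline correction, and trial exclusion described above might look roughly like the sketch below. It assumes a head trace stored as a list of angles in degrees sampled at 100 Hz. The helper names and the `min_fraction` threshold are hypothetical, because the text does not state a numeric criterion for "did not move their head".

```python
def segment_and_baseline(samples, tone_index, rate_hz=100, pre_ms=100, post_ms=2000):
    """Cut the window from 100 ms before to 2000 ms after the tone and
    set the head direction to zero at tone onset."""
    pre = pre_ms * rate_hz // 1000
    post = post_ms * rate_hz // 1000
    start, stop = tone_index - pre, tone_index + post
    if start < 0 or stop > len(samples):
        raise ValueError("time window exceeds the recording")
    reference = samples[tone_index]  # head direction when the tone occurs
    return [value - reference for value in samples[start:stop]]


def moved_in_required_direction(segment, required_deg, min_fraction=0.5):
    """Hypothetical inclusion check: the largest excursion must have the
    same sign as the required turn and reach a minimum share of it."""
    peak = max(segment, key=abs)
    return peak * required_deg > 0 and abs(peak) >= min_fraction * abs(required_deg)


# Synthetic trace: head rests at 2 deg, then turns 10 deg rightwards after the tone.
trace = [2.0] * 50 + [2.0 + 0.1 * i for i in range(100)] + [12.0] * 150
segment = segment_and_baseline(trace, tone_index=50)
keep_trial = moved_in_required_direction(segment, required_deg=10)
```

A trial whose segment fails this check would be dropped before the reaching errors are analyzed.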

Eye movements were recorded by the HEOG and analyzed offline with VisionAnalyzer Software (http://www.brainproducts.com). First, HEOG signals were corrected for DC drifts and low-pass filtered with a cutoff frequency of 5 Hz. Second, the time windows of interest were set from 100 ms before to 2000 ms after the high-pitched tone that induced the gaze direction change. Third, we excluded those trials with muscle artifacts (5.57% of all trials) from all analyses of eye movements. Artifact-rejected eye movement segments were then baseline corrected using the first 100 ms of each HEOG segment and down-sampled to 200 Hz. For further analysis of eye movement data, we did not include the two CBs who showed nystagmus-like eye movements and the two CBs and five LBs who had at least one glass eye.

Since eye movements were recorded in microvolts, eye and head amplitudes are difficult to compare. Yet, to roughly gauge how much the eyes moved with the head, we ran the eye movement control condition in the sighted participants and compared these visually directed eye movements with the eye movements under blindfolded conditions. We did this by computing an individual gain factor (G) for each sighted participant consisting of the eye movement distance in the eye movement control condition, i.e., the angular difference between the visual target (vT) at 0° or 5° left or right and the saccade target position (sT) at 10° or 15° left or right, divided by the HEOG signal:

$$G = \frac{sT - vT}{\mathrm{HEOG}}$$

The mean value of this gain factor represents the mean change in eye position in degrees corresponding to 1 μV. We transformed the individual eye movement signal measured in microvolts in the main experiment and the eye movement control condition by multiplying the HEOG signal with the individual gain factor per participant, resulting in corresponding values in degrees (Fig. 2B). In this way, we were able to approximate the size of the eye movements in degrees in the main experiment for the sighted participants (Fig. 2B, solid line) and use this rough approximation to scale the HEOG amplitude across all groups (Fig. 2A). This allowed us to compare the extent to which the movement of the eyes contributes to the final gaze shift across the three groups.
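As a rough illustration of the gain-factor calibration described above, the sketch below computes a per-participant gain from calibration saccades and applies it to HEOG amplitudes. Two points are assumptions rather than details from the text: the gain is averaged over per-trial ratios, and HEOG amplitudes are signed so that rightward movements are positive. The function names are illustrative.

```python
from statistics import mean


def gain_factor(calibration_trials):
    """Mean gain in degrees per microvolt for one participant.

    calibration_trials: iterable of (vT_deg, sT_deg, heog_microvolts), where
    vT is the initial visual target, sT the saccade target, and the HEOG
    value is the signed amplitude recorded for that saccade.
    """
    ratios = [
        (s_t - v_t) / heog
        for v_t, s_t, heog in calibration_trials
        if heog != 0  # skip trials without a measurable deflection
    ]
    return mean(ratios)


def heog_to_degrees(heog_microvolts, gain):
    """Scale HEOG amplitudes (microvolts) into approximate degrees."""
    return [gain * value for value in heog_microvolts]


# Made-up calibration data: 10 deg saccades produce 160 microvolt deflections.
calibration = [(0, 10, 160.0), (5, 15, 160.0), (-5, -15, -160.0)]
gain = gain_factor(calibration)
eye_position_deg = heog_to_degrees([80.0, -240.0], gain)
```

Because the gain can only be estimated in sighted participants, applying it to the blind groups yields a scaling of the HEOG amplitude rather than a calibrated eye position, as the text notes.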

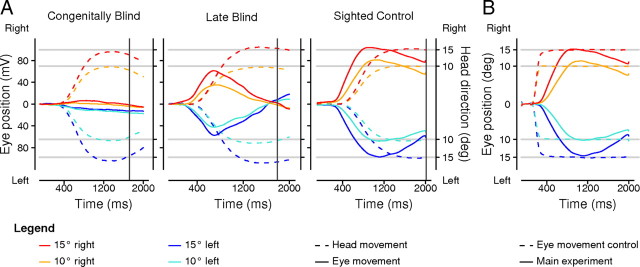

Figure 2.

A, Head traces (dashed lines) for all participants (n = 12) of the three groups and eye traces (full lines) for the congenitally blind group (left, n = 8), the late blind group with real eyes (middle, n = 7), and the sighted control group (right, n = 12) during the main experiment. Eye movements shown in head-centered coordinates are depicted in microvolts (y-axis) and head movements are represented in degrees (x-axis). Time 0 ms marks the start of the auditory signal prompting the head turn. Participants were instructed to initiate the reaching movement after their head reached the predefined head position marked by a mechanical stopper (∼1200 ms after the auditory signal). The vertical dark gray lines indicate the average time of touch for each group (CB, 1724 ms; LB, 1776 ms; SC, 2002 ms), which differed statistically neither between head displacements nor between groups (p > 0.1). B, Eye movements of the sighted control group (n = 12) transformed into degrees, shown for the main experiment (full lines) and the eye movement control condition (dashed lines). A, B, Head and/or eye traces are shown for the segmented time window of 100 ms before and up to 2000 ms after the high-pitched tone that induced the change in gaze direction or a saccade. Movement amplitudes were averaged across all trials of the four different head directions or saccade target positions (blue, 15° left; cyan, 10° left; orange, 10° right; red, 15° right). Reference lines are shown for all four head directions (light gray lines).

Statistical analyses.

Average touch times in milliseconds measured from the start of the high-pitched tone that induced the change in gaze direction were calculated for each group. To test whether touch times differed for the four different head directions within each subject group (CBs, LBs, SCs), we performed a repeated-measures ANOVA with the within-subject factor head direction (15° left, 10° left, 10° right, 15° right). In addition, we tested for differences in touch times between groups by calculating a repeated-measures ANOVA with the within-subject factor head direction (15° left, 10° left, 10° right, 15° right) and the between-subject factor group (CBs, LBs, SCs).

The reaching endpoints recorded by the touch screen panel were subtracted from the respective target location (derived from the reaching endpoints during the baseline reaching condition) to calculate horizontal reaching errors. These were converted into degrees relative to the body midline. One degree was ∼6 mm. Since we varied the horizontal direction of the head and target in degrees (relative to the body midline), we also report only the horizontal reaching errors in degrees, which were analyzed using R 2.11.0 and SPSS 17.0.
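The error computation can be sketched as follows. The sign convention is an assumption chosen so that a positive error means a reach to the right of the target (touch screen x increasing rightwards); the subtraction order in the original analysis may differ. The constant of 6 mm per degree is the approximate value given in the text, and the function names are illustrative.

```python
from statistics import mean

MM_PER_DEGREE = 6.0  # approximate conversion stated in the text


def baseline_target_mm(baseline_endpoints_mm):
    """Estimate a target's location from the baseline reaches to it."""
    return mean(baseline_endpoints_mm)


def horizontal_error_deg(endpoint_mm, target_mm):
    """Horizontal reaching error in degrees (assumed: positive = rightward)."""
    return (endpoint_mm - target_mm) / MM_PER_DEGREE


# Five made-up baseline reaches to one target (x positions in mm).
target_mm = baseline_target_mm([101.0, 99.0, 100.0, 102.0, 98.0])
error_right = horizontal_error_deg(112.0, target_mm)
error_left = horizontal_error_deg(88.0, target_mm)
```

Referencing each endpoint to the baseline-derived target location is what removes a participant's individual reaching bias from the other conditions.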

The main experiment contained three variables of interest: visual experience that varied across the three subject groups (CBs, LBs, SCs), target position (0°, 5° left, 5° right), and head direction (15° left, 10° left, 10° right, 15° right). To determine how reach errors varied as a function of these variables, we used a regression analysis with a nested design, i.e., a multilevel analysis (MLA) with target location and head direction as nested variables. In addition to handling the hierarchical structure, the MLA can properly deal with the empty cells and the small number of cases in the present dataset. We performed a fixed-effects MLA with target position and head direction as repeated variables at the first level and participants with their different visual experience as the subject variable at the second level. We defined fixed effects for all variables and interactions.

A change in head direction was accompanied by an eye movement in the same direction in LB and SC participants. In the next step, we examined the extent to which reaching errors varied with the obtained head and eye movements (in addition to visual experience). Therefore, we defined the magnitude of change in head direction and eye position by the maximal amplitude of movement within each trial. Since both movements were highly correlated, we conducted two separate MLAs including either measured change in head direction or measured change in eye position as covariate (z-standardized). Both head direction and eye position were treated as repeated variables at the first level and participants differing in visual experience were treated as a subject variable at the second level.
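A minimal sketch of how the covariates described above could be prepared: the signed peak excursion of each trial is extracted and then z-standardized. Whether standardization was done across all trials or within participants is not stated, so the sketch simply standardizes whatever list it is given. It uses the sample standard deviation, which is also an assumption.

```python
from statistics import mean, stdev


def peak_amplitude(trace):
    """Signed value of the largest excursion within one baseline-corrected trial."""
    return max(trace, key=abs)


def z_standardize(values):
    """Center values on their mean and scale by the sample standard deviation."""
    center, spread = mean(values), stdev(values)
    return [(value - center) / spread for value in values]


# Made-up head traces in degrees, one list per trial (negative = leftward).
trials = [[0.0, -4.0, -9.5], [0.0, 5.0, 10.5], [0.0, -14.0, -15.2], [0.0, 8.0, 14.8]]
peaks = [peak_amplitude(trace) for trace in trials]
head_covariate = z_standardize(peaks)
```

The same two steps would apply to the eye position traces, with each covariate entering its own model because the two signals are highly correlated.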

To investigate the impact of head and eye movements on reach errors, we exploited the fact that our LB group included both late blind individuals with two real movable eyes (LB_RE) and late blind individuals with one or two glass eyes (LB_GE). While both LB_RE and LB_GE had visual experience before they lost vision, only LB_RE showed systematic head-and-eye coupling, whereas LB_GE with two glass eyes lacked eye movements and LB_GE with one glass eye showed low-amplitude eye movements that were more variable than in LB_RE and elicited no systematic pattern with respect to the head turn. This enabled us to examine the influence of head direction and eye position on reach errors by comparing LB_RE and LB_GE. Including the CBs and SCs, we had four subject groups that differed not only in their visual experience but also in their proprioceptive and oculomotor feedback from the eyes. With these four groups, we again conducted the MLA with target position and head direction as repeated variables at the first level and participants divided into four groups as subject variables at the second level. Likewise, we again used the MLA for four groups that additionally contained the measured change in head direction as covariate. In this way, we obtained indirect evidence about the role of eye position changes in addition to visual experience for updating proprioceptive targets for reaching.

Furthermore, we were interested in how accurately CBs, LBs, and SCs can localize proprioceptive target positions in the dark when their gaze/head is directed toward the target, both when the proprioceptive target is at the target site (online) and when it is removed before reaching (remembered) in these two proprioceptive-reaching control conditions. One-sample t tests were conducted to test whether the horizontal reaching errors significantly deviated from zero. We performed three t tests (one per target) for all groups and proprioceptive control tasks (online and remembered) and corrected the α value accordingly by Bonferroni correction.
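The Bonferroni step can be illustrated with adjusted p values, which is equivalent to dividing α by the number of tests. The family size of three (one test per target) is an assumption based on the sentence above, and the p values in the example are invented for illustration only.

```python
def bonferroni(p_values, alpha=0.05):
    """Return Bonferroni-adjusted p values and the resulting decisions.

    Each p value is multiplied by the number of tests in the family and
    capped at 1.0; a test counts as significant if its adjusted p < alpha.
    """
    n_tests = len(p_values)
    adjusted = [min(1.0, p * n_tests) for p in p_values]
    significant = [p < alpha for p in adjusted]
    return adjusted, significant


# Invented p values for the three targets in one group and one control task.
adjusted, significant = bonferroni([0.04, 0.20, 0.50])
```

In this invented example, the first test would pass an uncorrected threshold of 0.05 but not the corrected one.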

Results

Head and eye movements

Figure 2A illustrates the head and eye movements for the four changes in head direction performed in the main experiment (15° left, 10° left, 10° right, 15° right) averaged across trials and participants per group (CB, LB, SC). All participants followed the instructions and turned their head in the correct direction and with the required amplitude.

While CB participants (who completely lacked visual experience) produced no systematic movements of the eyes along with the head, the LBs and SCs did move their eyes in the same direction and ∼300 ms before they initiated the head movement. Thus, at the time of maximal head displacement (corresponding to the time where participants were asked to initiate the reach-to-touch movement; cf., Fig. 2A, ∼1200 ms after the auditory signal), eyes were eccentric in the LBs and SCs but not in the CBs. Shifts in eye position were larger for SC than LB; however, gaze-dependent reaching errors tend to saturate beyond 10° gaze relative to target (Bock, 1986; Henriques et al., 1998). The sighted individuals continued to maintain their eyes eccentrically with respect to the head; this likely reflects the natural contribution of the eyes and head seen for volitional large gaze shifts (Goossens and Van Opstal, 1997; Phillips et al., 1999; Populin and Rajala, 2011). Moreover, it is known for peripheral gaze shifts that the eyes of the sighted deviate further than the head (Henriques and Crawford, 2002; Henriques et al., 2003). However, if no fixation stimulus is present and head movements are constrained, the deviations of the eyes relative to the head seem to be even larger and no vestibulo-ocular reflex seems to arise. In contrast, LBs did not maintain their eyes eccentric along with the head; instead, the eyes appeared to mechanically drift back to the center of the orbit. At the time of touch (Fig. 2A, vertical gray lines), on average, 1840 ms after the auditory signal (which was consistent across groups and across head directions; F stats, p > 0.1), the head had reached and maintained its final position, while the eyes appeared to revert back to the center of the orbit (aligned with the head) for LBs, but only partly so for the SCs.
In summary, we find that head turns evoked accompanying eye movements in sighted people and in the late blind (although somewhat diminished in the latter), but not in congenitally blind individuals.

Reaching to targets in gaze direction

When gaze was directed toward the target, participants reached very accurately to both the online and remembered proprioceptive targets regardless of their visual experience (Fig. 3A). The reach errors were not significantly different from zero in proprioceptive-reaching control in all three groups (Bonferroni corrected p > 0.1).

Reaching to remembered targets after change in gaze direction (target updating)

To determine the effect of gaze shifts and visual experience on reaching errors, we plotted the horizontal reach errors as a function of head direction for the three groups (Fig. 3B) and for the four groups with the LB divided into those with real eyes and those with one or two glass eyes (Fig. 3C). The MLA results indicate that reaching errors are affected by the interaction of head direction and group (F(2,36.08) = 17.33, p < 0.001). Hence, reaching errors can be well explained by the change in head direction in SCs (slope = −0.10, t(36.13) = −5.64, p < 0.01) and in LBs (slope = −0.08, t(35.96) = −4.28, p < 0.01), but not in CBs (slope = −0.01, t(35.96) = −0.69, p = 0.49). In addition to a change in head direction, SCs and LBs who produced these systematic reaching errors also showed a corresponding change in eye position, in contrast to the CBs (Fig. 2A). Thus, reaching errors of participants with visual experience (SCs and LBs) systematically varied with gaze, i.e., they misreached to the right of the target if gaze was directed to the left and vice versa. In contrast, participants who never had any visual experience (CBs) produced reaching errors that did not vary with changes in gaze direction, but were merely shifted to the right of the target (intercept = 5.08, t(35.7) = 4.27, p < 0.01). This general rightward bias was observed in all following analyses.

To explore the significance of eye position on reach errors, we considered the two late blind subgroups, LB_RE (change in head direction accompanied by a systematic change in eye position) and LB_GE (change in head direction accompanied by no eye movements in subjects with two glass eyes and by small and unsystematic changes in eye movements in subjects with one glass eye). We found that the interaction of head direction and group influenced reaching (F(3,36.02) = 13.50, p < 0.001). Thus, as shown in Figure 3C, reach errors varied significantly and somewhat linearly with head direction in SCs (slope = −0.10, t(36.17) = −5.66, p < 0.01) and LB_REs (slope = −0.10, t(35.9) = −4.90, p < 0.01), but head shifts did not significantly influence reaching errors in the CBs and LB_GEs. Comparable to the results in the SCs, the LB_REs misreached the targets in the direction opposite to the shift in head and corresponding eye (gaze). This means that only participants with visual experience and real movable eyes (i.e., SCs and LB_REs) demonstrated gaze-dependent reaching errors.

We further calculated individual reaching errors as the difference of the average reaching errors for leftward head direction minus the average reaching errors for rightward head direction. If participants show the typical gaze-dependent error pattern as observed in previous studies (Henriques et al., 1998), positive difference errors are expected. SCs and LBs revealed a positive difference error pattern that significantly differed from zero (SCs: t(11) = 5.95, p < 0.001; note, we also found a significant effect when we exclude the participant with the highest positive difference error: t(10) = 8.45, p < 0.001; LBs: t(11) = 5.04, p < 0.001) in contrast to CBs where difference errors varied around zero (t(11) = 2.15, p > 0.05; Fig. 3D). For the two late blind groups, we found that difference errors for LB_REs were more positive than zero (t(11) = 5.73, p < 0.001) but not for LB_GEs (t(11) = 2.60, p > 0.05; Fig. 3E).
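The difference-error measure and its test against zero can be sketched as follows. The data are invented, leftward head directions are coded as negative angles by assumption, and the function names are illustrative. The sketch returns only the t statistic and its degrees of freedom, leaving the p value to a statistics package.

```python
from math import sqrt
from statistics import mean, stdev


def difference_error(errors_by_head_deg):
    """Mean error for leftward head turns minus mean error for rightward turns.

    errors_by_head_deg maps head direction in degrees (negative = left, an
    assumed convention) to one participant's mean horizontal reaching error.
    """
    left = mean([errors_by_head_deg[-15], errors_by_head_deg[-10]])
    right = mean([errors_by_head_deg[10], errors_by_head_deg[15]])
    return left - right


def one_sample_t(values, mu=0.0):
    """Return (t statistic, degrees of freedom) for a one-sample t test."""
    n = len(values)
    standard_error = stdev(values) / sqrt(n)
    return (mean(values) - mu) / standard_error, n - 1


# One invented participant who misreaches rightwards after leftward head turns.
participant = {-15: 3.0, -10: 2.0, 10: 0.5, 15: -0.5}
single_difference = difference_error(participant)

# Invented difference errors for a small group of five participants.
t_value, dof = one_sample_t([2.5, 1.5, 2.0, 3.0, 1.0])
```

A positive difference error corresponds to the gaze-dependent pattern, that is, reaching errors directed opposite to the gaze shift.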

Next, we tested how well measured movements of the head and eye can explain reach errors as a function of visual experience. Since head and eye movements were highly correlated (Fig. 2A), we separately analyzed horizontal reaching errors as a function of change in head direction (Fig. 4A) or as a function of change in eye position (Fig. 4B) using MLAs. For measured change in head direction, we found that reaching errors varied as a function of head direction and group (F(2,36.39) = 12.01, p < 0.001), i.e., that head direction influenced reaching in SCs (slope = −1.53, t(36.35) = −4.90, p < 0.01) and in LBs (slope = −0.84, t(36.54) = −2.71, p < 0.05), but not in CBs (slope = −0.34, t(36.53) = −1.52, p = 0.14). However, the effect found in LBs was mainly driven by LB_REs, since the analysis with four groups (Fig. 4A) also resulted in an interaction of head direction and group (F(3,36.34) = 9.92, p < 0.001) and revealed a significant effect of measured head direction on reaching error only for SCs (slope = −1.52, t(36.32) = −5.13, p < 0.01) and LB_REs (slope = −1.14, t(36.55) = −3.36, p < 0.01), but not for LB_GEs (slope = −0.37, t(36.24) = −0.98, p = 0.34) or CBs (slope = −0.35, t(36.49) = −1.62, p = 0.11). Hence, only participants with past visual experience and two real moveable eyes (SCs and LB_REs) systematically misestimated proprioceptive targets opposite to the direction of their head movement.
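To make the meaning of these slopes concrete, the following sketch fits an ordinary least-squares line to reaching error as a function of head direction. This is deliberately simpler than the mixed linear analyses reported here (no random effects, no group interaction). The sign convention (positive = rightward), the head directions, and the error values are all assumptions chosen for illustration.

```python
def ols_slope(x, y):
    """Least-squares slope of y regressed on x (single predictor)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    return sxy / sxx


# Hypothetical head directions in degrees (negative = left, positive = right).
head_direction = [-10.0, 0.0, 10.0]

# Toy errors: one pattern shifts opposite to the head turn, one does not change.
errors_gaze_dependent = [1.0, 0.0, -1.0]
errors_gaze_independent = [0.5, 0.5, 0.5]

slope_gaze_dependent = ols_slope(head_direction, errors_gaze_dependent)
slope_flat = ols_slope(head_direction, errors_gaze_independent)
print(slope_gaze_dependent, slope_flat)
```

Under this convention a negative slope corresponds to misreaching opposite to the head turn, whereas a constant bias that is unaffected by head direction produces a slope of zero.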

To determine the relationship between reaching errors and measured changes in eye position, we could only include those participants with reliable HEOG signals (N: CB = 8, LB_RE = 7, SC = 12). The analysis showed that reaching errors varied as a function of eye position and group (F(2,23.85) = 7.31, p < 0.001), meaning that the measured change in eye position significantly influenced reaching error only in SCs (slope = −1.70, t(23.31) = −3.82, p < 0.01) and in LB_REs (slope = −1.04, t(24.8) = −2.34, p < 0.05). Thus, only participants with systematic eye signals and former visual experience (SCs and LB_REs) misreached targets in the direction opposite to the eye movements that accompanied their shifts in head direction.

Furthermore, we explored whether reaching errors in late blind and congenitally blind individuals depended on the years of blindness or on the age at mobility training, as we had found in a previous study that assessed the spatial discrimination ability of sighted and congenitally blind individuals (Fiehler et al., 2009). However, we did not find any interaction or effect of those variables in the present data (years of blindness: F(1,24) = 1.55, p = 0.23; age at mobility training: F(1,24) = 0.67, p = 0.42).

Discussion

The aim of this study was to examine whether gaze-dependent updating of targets for action depends on visual experience early in life and, if so, whether gaze-dependent coding still remains after the loss of vision. We examined spatial updating of proprioceptive reach targets in congenitally blind, late blind, and sighted people by having them move their head after being presented with a proprioceptive target (left thumb) but before reaching toward this remembered target. A change in head direction was accompanied by a corresponding change in eye position (gaze shift) due to inherent head-and-eye coupling, but only within subjects who had visual experience (sighted and late blind). Participants systematically misreached toward the target depending on shifted gaze direction. However, in the late blind, this effect was mainly driven by the subgroup of people with two real moveable eyes. Participants who never acquired any visual experience in life (congenitally blind) showed a general rightward bias regardless of gaze direction. Thus, only participants with visual experience early in life and two moveable eyes (sighted and late blind with real eyes) demonstrated reach errors that varied as a function of gaze, i.e., change of head direction and eye position.

Our results may imply that the brain updates remembered proprioceptive targets with respect to gaze (eye angle + head angle), similar to remembered visual targets following movements of the eyes and/or movements of the head (Henriques and Crawford, 2002; Henriques et al., 2003). This would be consistent with previous studies that reported gaze-dependent spatial updating of proprioceptive reach targets following saccadic eye shifts to visual fixation points (Pouget et al., 2002; Fiehler et al., 2010; Jones and Henriques, 2010). Here, we showed that sighted people also produced gaze-dependent reaches to proprioceptive targets following movements of the head and accompanying eyes, even when they were not visually directed. More importantly, we are the first to investigate how blind people (with early or no visual experience) compensate for movements of the head that occur between proprioceptive target presentation and reaching to the remembered site.

Role of developmental vision

Gaze-dependent reaching errors were only present in those individuals who have or had visual experience, i.e., sighted and late blind but not congenitally blind people. This suggests that the process or format by which targets are coded in spatial memory is not innate but rather develops in interaction with visual input early in life and remains even after loss of vision. Our results are consistent with studies in the auditory domain that found that dichotic sound stimuli are misperceived in the direction opposite to head direction in sighted but not in congenitally blind individuals (Lewald, 2002; Schicke et al., 2002). However, those results and ours could also be explained by a misestimation of head rotation, rather than a representation of space anchored to gaze. Yet, the latter explanation, that targets are coded and updated in gaze- or eye-centered coordinates, would be consistent with the results of other studies investigating the reference frames used in coding and updating remembered visual and proprioceptive targets following intervening eye movements (Henriques et al., 1998; Pouget et al., 2002; Fiehler et al., 2010; Jones and Henriques, 2010). Even if it were the case that reaching errors to remembered targets were due to a misestimate of a subsequent head turn, the absence of gaze-dependent errors in congenitally blind people suggests that using such estimates for localizing proprioceptive targets may require early visual experience. However, this same lack of gaze-dependent errors may be better explained by congenitally blind individuals primarily using a stable internal or anatomical reference frame fixed to the body, whereas sighted and late blind individuals preferably code and update space with respect to external coordinates, i.e., they take into account relative changes between target and gaze (Gaunet and Rossetti, 2006; Pasqualotto and Newell, 2007; Röder et al., 2007; Sukemiya et al., 2008).

It is known that external coding of space develops on the basis of visual input during critical periods early in life. A recent study by Pagel et al. (2009) reported that sighted children remap tactile input into an external reference frame after the age of 5 years. Remapping of stimuli of different sensory modalities also requires optimal multisensory integration, which probably does not develop before the age of 8 years (Ernst, 2008). Such functional changes are grounded in the maturation of different brain areas. The posterior parietal cortex (PPC), which plays a central role in gaze-centered spatial updating (Duhamel et al., 1992; Batista et al., 1999; Nakamura and Colby, 2002; Medendorp et al., 2003; Merriam et al., 2003), slowly increases in gray matter volume and reaches the maximum at ∼10–12 years of age (Giedd et al., 1999), i.e., higher-order association cortices like the PPC mature even during early adulthood after maturity of lower-order sensory cortices (Gogtay et al., 2004; for review, see Lenroot and Giedd, 2006). Moreover, corpus callosum areas substantially increase from ages 4 to 18 years (Giedd et al., 1996), which facilitates interhemispheric transfer and thus might enhance spatial remapping (cf., Berman et al., 2005). As a consequence, a lack of vision during that specific time may influence the development of the corpus callosum, since congenitally blind compared with sighted and late blind people show a reduced volume of a callosal region that primarily connects visual–spatial areas in the PPC (Leporé et al., 2010). Such changes in the structural and functional organization of the brain during childhood might trigger the switch from internal to external spatial coding strategies. 
When vision is lost after brain areas involved in spatial remapping have matured, as likely was the case for most, if not all, of the late blind individuals in this study, spatial coding strategies similar to the sighted are implemented and, more importantly, may remain dominant. This is consistent with our results: the gaze-dependent reaching errors for the late blind with two real eyes were similar to the sighted, both when subjects who developed blindness before the age of 8 years (n = 3) were included in the analysis and when they were not. However, when vision is completely lacking during development, brain areas seem to mature differently and reorganize as a consequence of visual loss. Such structural brain changes in congenitally blind individuals probably induce functional changes in how spatial memory is coded.

Contribution of the eyes to spatial updating

To assess the contribution of the eyes to spatial updating, we divided late blind individuals into those with one or two glass eyes and those with two real eyes. We found that reaching errors varied as a function of measured head direction and eye position only in individuals with early visual experience and real movable eyes (sighted and late blind with two real eyes). This suggests that spatial updating of proprioceptive targets with respect to gaze requires visual input during development, as well as the ability to sense or control the eyes. This ability would naturally be absent in late blind people with two glass eyes, and is probably lacking in congenitally blind people due to atrophy of optomotor muscles (Leigh and Zee, 1980; Kömpf and Piper, 1987). One can speculate that late blind people with one glass and one real eye suffer from a combined effect, i.e., absent sensory signals from the missing eye and muscle atrophy of the real eye due to the loss of coherent signals from both eyes. This may be reflected by the variable, low-amplitude electroocular signals that we recorded from the LB_GE. In contrast, late blind individuals with two real eyes produce eye movements comparable to the sighted individuals (Leigh and Zee, 1980; Kömpf and Piper, 1987) and therefore probably have access to the extraretinal signals necessary for updating reach targets with respect to gaze direction (Klier and Angelaki, 2008). Thus, it may be that early visual experience provides the basis for normal oculomotor sensation and control, which in turn allows for spatial updating of targets relative to gaze.

Given that, in sighted individuals, reaching errors tend to vary systematically both when the eyes alone and when the eyes and head together are shifted following target presentation, but not (or less so) when only the head turns while the eyes remain directed toward the target site (Henriques and Crawford, 2002; Henriques et al., 2003), the movement of the eyes away from the target appears to be critical. This is consistent with the interpretation that reaching errors systematically vary as a function of the angular distance between the target site and gaze direction. Thus, although our goal was to explore how target locations are updated depending on subsequent gaze shifts, gaze could only reliably be varied by having participants move the head (given the poor control of the eyes in the CB and the lack of eyes in some LB). Yet, it is significant that only when the eyes contributed to the gaze shift did we see a pattern of errors consistent with previous results on updating of remembered visual and proprioceptive targets in the sighted. Since we observed gaze-dependent reaching errors to proprioceptive targets when the direction of head rotation was restricted to a predefined path, gaze apparently does not have to be aimed toward a fixation target for the gaze shift to be used to update reach targets.
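The geometric idea behind this interpretation can be sketched in a few lines of Python. The sketch assumes a single horizontal dimension, angles in degrees with positive values to the right, and gaze defined as eye-in-head angle plus head angle, as in the definition of gaze used in this paper. The function names and example angles are hypothetical, and no model of error magnitude is implied.

```python
def gaze_direction(eye_in_head_deg, head_deg):
    """Gaze angle in space: eye-in-head angle plus head angle."""
    return eye_in_head_deg + head_deg


def target_relative_to_gaze(target_deg, eye_in_head_deg, head_deg):
    """Signed angular distance of the target from the current gaze direction."""
    return target_deg - gaze_direction(eye_in_head_deg, head_deg)


# Target straight ahead; head turns 10 deg right and the eyes follow by 5 deg.
eyes_and_head = target_relative_to_gaze(0.0, eye_in_head_deg=5.0, head_deg=10.0)

# Same head turn, but the eyes counter-rotate and stay on the target site.
head_only = target_relative_to_gaze(0.0, eye_in_head_deg=-10.0, head_deg=10.0)

print(eyes_and_head, head_only)
```

In the first case the target ends up 15 degrees to the left of gaze, so a gaze-dependent account predicts a systematic error; in the second case the target stays aligned with gaze despite the head turn, so no gaze-dependent error is predicted.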

To conclude, our results suggest that gaze-dependent spatial updating develops on the basis of early visual experience. Such spatial coding strategies, once established, seem to be used even after later loss of vision as long as signals from both eyes and head (gaze) are available.

Footnotes

This work was supported by Grant Fi 1567 from the German Research Foundation (DFG) to F.R. and K.F., the research unit DFG/FOR 560 ‘Perception and Action’, and the TransCoop-Program from the Alexander von Humboldt Foundation assigned to D.Y.P.H. and K.F. D.Y.P.H. is an Alfred P. Sloan fellow. We thank Oguz Balandi and Christian Friedel for help in programming the experiment, Jeanine Schwarz and Charlotte Markert for support in acquiring data, and Mario Gollwitzer and Oliver Christ for statistical support.

References

- Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci. 2002;25:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- Batista AP, Buneo CA, Snyder LH, Andersen RA. Reach plans in eye-centered coordinates. Science. 1999;285:257–260. doi: 10.1126/science.285.5425.257.

- Berman RA, Heiser LM, Saunders RC, Colby CL. Dynamic circuitry for updating spatial representations. I. Behavioral evidence for interhemispheric transfer in the split-brain macaque. J Neurophysiol. 2005;94:3228–3248. doi: 10.1152/jn.00028.2005.

- Bock O. Contribution of retinal versus extraretinal signals towards visual localization in goal-directed movements. Exp Brain Res. 1986;64:476–482. doi: 10.1007/BF00340484.

- Crawford JD, Henriques DY, Medendorp WP. Three-dimensional transformations for goal-directed action. Annu Rev Neurosci. 2011;34:309–331. doi: 10.1146/annurev-neuro-061010-113749.

- Cressman EK, Henriques DY. Sensory recalibration of hand position following visuomotor adaptation. J Neurophysiol. 2009;102:3505–3518. doi: 10.1152/jn.00514.2009.

- Cressman EK, Henriques DY. Reach adaptation and proprioceptive recalibration following exposure to misaligned sensory input. J Neurophysiol. 2010;103:1888–1895. doi: 10.1152/jn.01002.2009.

- Duhamel JR, Colby CL, Goldberg ME. The updating of the representation of visual space in parietal cortex by intended eye movements. Science. 1992;255:90–92. doi: 10.1126/science.1553535.

- Ernst MO. Multisensory integration: a late bloomer. Curr Biol. 2008;18:R519–R521. doi: 10.1016/j.cub.2008.05.002.

- Fiehler K, Reuschel J, Rösler F. Early non-visual experience influences proprioceptive-spatial discrimination acuity in adulthood. Neuropsychologia. 2009;47:897–906. doi: 10.1016/j.neuropsychologia.2008.12.023.

- Fiehler K, Rösler F, Henriques DY. Interaction between gaze and visual and proprioceptive position judgements. Exp Brain Res. 2010;203:485–498. doi: 10.1007/s00221-010-2251-1.

- Fine I, Wade AR, Brewer AA, May MG, Goodman DF, Boynton GM, Wandell BA, MacLeod DI. Long-term deprivation affects visual perception and cortex. Nat Neurosci. 2003;6:915–916. doi: 10.1038/nn1102.

- Gaunet F, Rossetti Y. Effects of visual deprivation on space representation: immediate and delayed pointing toward memorised proprioceptive targets. Perception. 2006;35:107–124. doi: 10.1068/p5333.

- Ghahramani Z, Wolpert DM, Jordan MI. Generalization to local remappings of the visuomotor coordinate transformation. J Neurosci. 1996;16:7085–7096. doi: 10.1523/JNEUROSCI.16-21-07085.1996.

- Giedd JN, Vaituzis AC, Hamburger SD, Lange N, Rajapakse JC, Kaysen D, Vauss YC, Rapoport JL. Quantitative MRI of the temporal lobe, amygdala, and hippocampus in normal human development: ages 4–18 years. J Comp Neurol. 1996;366:223–230. doi: 10.1002/(SICI)1096-9861(19960304)366:2<223::AID-CNE3>3.0.CO;2-7.

- Giedd JN, Blumenthal J, Jeffries NO, Castellanos FX, Liu H, Zijdenbos A, Paus T, Evans AC, Rapoport JL. Brain development during childhood and adolescence: a longitudinal MRI study. Nat Neurosci. 1999;2:861–863. doi: 10.1038/13158.

- Gogtay N, Giedd JN, Lusk L, Hayashi KM, Greenstein D, Vaituzis AC, Nugent TF 3rd, Herman DH, Clasen LS, Toga AW, Rapoport JL, Thompson PM. Dynamic mapping of human cortical development during childhood through early adulthood. Proc Natl Acad Sci U S A. 2004;101:8174–8179. doi: 10.1073/pnas.0402680101.

- Goossens HH, Van Opstal AJ. Human eye-head coordination in two dimensions under different sensorimotor conditions. Exp Brain Res. 1997;114:542–560. doi: 10.1007/pl00005663.

- Harris CS. Adaptation to displaced vision: visual, motor, or proprioceptive change? Science. 1963;140:812–813. doi: 10.1126/science.140.3568.812.

- Hatada Y, Rossetti Y, Miall RC. Long-lasting aftereffect of a single prism adaptation: shifts in vision and proprioception are independent. Exp Brain Res. 2006;173:415–424. doi: 10.1007/s00221-006-0381-2.

- Held R, Bauer JA Jr. Development of sensorially-guided reaching in infant monkeys. Brain Res. 1974;71:265–271. doi: 10.1016/0006-8993(74)90970-6.

- Held R, Freedman SJ. Plasticity in human sensorimotor control. Science. 1963;142:455–462. doi: 10.1126/science.142.3591.455.

- Henriques DY, Crawford JD. Role of eye, head, and shoulder geometry in the planning of accurate arm movements. J Neurophysiol. 2002;87:1677–1685. doi: 10.1152/jn.00509.2001.

- Henriques DY, Klier EM, Smith MA, Lowy D, Crawford JD. Gaze-centered remapping of remembered visual space in an open-loop pointing task. J Neurosci. 1998;18:1583–1594. doi: 10.1523/JNEUROSCI.18-04-01583.1998.

- Henriques DY, Medendorp WP, Gielen CC, Crawford JD. Geometric computations underlying eye-hand coordination: orientations of the two eyes and the head. Exp Brain Res. 2003;152:70–78. doi: 10.1007/s00221-003-1523-4.

- Jakobson LS, Goodale MA. Trajectories of reaches to prismatically-displaced targets: evidence for “automatic” visuomotor recalibration. Exp Brain Res. 1989;78:575–587. doi: 10.1007/BF00230245.

- Jones SA, Henriques DY. Memory for proprioceptive and multisensory targets is partially coded relative to gaze. Neuropsychologia. 2010;48:3782–3792. doi: 10.1016/j.neuropsychologia.2010.10.001.

- Klier EM, Angelaki DE. Spatial updating and the maintenance of visual constancy. Neuroscience. 2008;156:801–818. doi: 10.1016/j.neuroscience.2008.07.079.

- Kömpf D, Piper HF. Eye movements and vestibulo-ocular reflex in the blind. J Neurol. 1987;234:337–341. doi: 10.1007/BF00314291.

- Lackner JR. Visual rearrangement affects auditory localization. Neuropsychologia. 1973;11:29–32. doi: 10.1016/0028-3932(73)90061-4.

- Leigh RJ, Zee DS. Eye movements of the blind. Invest Ophthalmol Vis Sci. 1980;19:328–331.

- Lenroot RK, Giedd JN. Brain development in children and adolescents: insights from anatomical magnetic resonance imaging. Neurosci Biobehav Rev. 2006;30:718–729. doi: 10.1016/j.neubiorev.2006.06.001.

- Leporé N, Voss P, Lepore F, Chou YY, Fortin M, Gougoux F, Lee AD, Brun C, Lassonde M, Madsen SK, Toga AW, Thompson PM. Brain structure changes visualized in early- and late-onset blind subjects. Neuroimage. 2010;49:134–140. doi: 10.1016/j.neuroimage.2009.07.048.

- Levin N, Dumoulin SO, Winawer J, Dougherty RF, Wandell BA. Cortical maps and white matter tracts following long period of visual deprivation and retinal image restoration. Neuron. 2010;65:21–31. doi: 10.1016/j.neuron.2009.12.006.

- Lewald J. Vertical sound localization in blind humans. Neuropsychologia. 2002;40:1868–1872. doi: 10.1016/s0028-3932(02)00071-4.

- Lewis TL, Maurer D. Multiple sensitive periods in human visual development: evidence from visually deprived children. Dev Psychobiol. 2005;46:163–183. doi: 10.1002/dev.20055.

- Maurer D, Lewis TL. Visual acuity: the role of visual input in inducing postnatal change. Clin Neurosci Res. 2001;1:239–247.

- Medendorp WP, Goltz HC, Vilis T, Crawford JD. Gaze-centered updating of visual space in human parietal cortex. J Neurosci. 2003;23:6209–6214. doi: 10.1523/JNEUROSCI.23-15-06209.2003.

- Merabet LB, Pascual-Leone A. Neural reorganization following sensory loss: the opportunity of change. Nat Rev Neurosci. 2010;11:44–52. doi: 10.1038/nrn2758.

- Merriam EP, Genovese CR, Colby CL. Spatial updating in human parietal cortex. Neuron. 2003;39:361–373. doi: 10.1016/s0896-6273(03)00393-3.

- Nakamura K, Colby CL. Updating of the visual representation in monkey striate and extrastriate cortex during saccades. Proc Natl Acad Sci U S A. 2002;99:4026–4031. doi: 10.1073/pnas.052379899.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Ooi TL, Wu B, He ZJ. Distance determined by the angular declination below the horizon. Nature. 2001;414:197–200. doi: 10.1038/35102562.

- Pagel B, Heed T, Röder B. Change of reference frame for tactile localization during child development. Dev Sci. 2009;12:929–937. doi: 10.1111/j.1467-7687.2009.00845.x.

- Pascual-Leone A, Amedi A, Fregni F, Merabet LB. The plastic human brain cortex. Annu Rev Neurosci. 2005;28:377–401. doi: 10.1146/annurev.neuro.27.070203.144216.

- Pasqualotto A, Newell FN. The role of visual experience on the representation and updating of novel haptic scenes. Brain Cogn. 2007;65:184–194. doi: 10.1016/j.bandc.2007.07.009.

- Phillips JO, Ling L, Fuchs AF. Action of the brain stem saccade generator during horizontal gaze shifts. I. Discharge patterns of omnidirectional pause neurons. J Neurophysiol. 1999;81:1284–1295. doi: 10.1152/jn.1999.81.3.1284.

- Populin LC, Rajala AZ. Target modality determines eye-head coordination in non-human primates: implications for gaze control. J Neurophysiol. 2011;106:2000–2011. doi: 10.1152/jn.00331.2011.

- Pouget A, Ducom JC, Torri J, Bavelier D. Multisensory spatial representations in eye-centered coordinates for reaching. Cognition. 2002;83:B1–B11. doi: 10.1016/s0010-0277(01)00163-9.

- Putzar L, Goerendt I, Lange K, Rösler F, Röder B. Early visual deprivation impairs multisensory interactions in humans. Nat Neurosci. 2007;10:1243–1245. doi: 10.1038/nn1978.

- Redding GM, Wallace B. Effects of pointing rate and availability of visual feedback on visual and proprioceptive components of prism adaptation. J Mot Behav. 1992;24:226–237. doi: 10.1080/00222895.1992.9941618.

- Röder B, Kusmierek A, Spence C, Schicke T. Developmental vision determines the reference frame for the multisensory control of action. Proc Natl Acad Sci U S A. 2007;104:4753–4758. doi: 10.1073/pnas.0607158104.

- Schicke T, Demuth L, Röder B. Influence of visual information on the auditory median plane of the head. Neuroreport. 2002;13:1627–1629. doi: 10.1097/00001756-200209160-00011.

- Simani MC, McGuire LM, Sabes PN. Visual-shift adaptation is composed of separable sensory and task-dependent effects. J Neurophysiol. 2007;98:2827–2841. doi: 10.1152/jn.00290.2007.

- Sukemiya H, Nakamizo S, Ono H. Location of the auditory egocentre in the blind and normally sighted. Perception. 2008;37:1587–1595. doi: 10.1068/p5949.

- van Beers RJ, Baraduc P, Wolpert DM. Role of uncertainty in sensorimotor control. Philos Trans R Soc Lond B Biol Sci. 2002;357:1137–1145. doi: 10.1098/rstb.2002.1101.

- Vetter P, Goodbody SJ, Wolpert DM. Evidence for an eye-centered spherical representation of the visuomotor map. J Neurophysiol. 1999;81:935–939. doi: 10.1152/jn.1999.81.2.935.

- Wang J, Sainburg RL. Adaptation to visuomotor rotations remaps movement vectors, not final positions. J Neurosci. 2005;25:4024–4030. doi: 10.1523/JNEUROSCI.5000-04.2005.

- Zwiers MP, Van Opstal AJ, Paige GD. Plasticity in human sound localization induced by compressed spatial vision. Nat Neurosci. 2003;6:175–181. doi: 10.1038/nn999.