Abstract

It is known that olfaction and vision can work in tandem to represent object identities. What remains unclear is the stage of the sensory processing hierarchy at which the two types of inputs converge. Here we study this issue through a well-established visual phenomenon termed binocular rivalry. We show that smelling an odor through one nostril significantly enhances the dominance time of the congruent visual image when it is presented in the contralateral visual field, relative to the ipsilateral visual field. Moreover, such lateralization-based enhancement extends to category-selective regions: when images of words and of a human body are engaged in rivalry in the central visual field, smelling natural human body odor through the right nostril increases the dominance time of the body image compared with smelling it through the left nostril. Semantic congruency alone failed to produce this effect in a similar setting. These results, which take advantage of the anatomical and functional lateralizations in the olfactory and visual systems, highlight the functional dissociation of the two nostrils and provide strong evidence for an object-based early convergence of olfactory and visual inputs in sensory representations.

Introduction

Both olfaction and vision serve the function of object identification. Visual cues are known to facilitate the detection of congruent odorants, and such enhancement has been proposed to be mediated by mnemonic processes based on semantic associations (Gottfried and Dolan, 2003). Likewise, olfaction modulates visual object perception, even in the absence of conscious visual awareness (Zhou et al., 2010). Yet it remains unclear at which stages of the sensory processing hierarchy the two types of inputs converge, despite recent advances in our understanding of multisensory regions and multisensory integration (Beauchamp, 2005; Macaluso and Driver, 2005; Stein and Stanford, 2008). At first glance, olfaction and vision are anatomically distant: primary olfactory areas are situated in the inferior frontal and anterior temporal regions, whereas primary visual areas lie in the occipital lobe. Primary olfactory projections are largely ipsilateral. They run from the olfactory epithelium in one nostril to the olfactory bulb and then to the anterior olfactory nucleus, olfactory tubercle, piriform cortex, amygdala, and entorhinal cortex on the same side, with only limited projections to the contralateral side by way of the anterior commissure (Powell et al., 1965; Price, 1973). By contrast, primary visual projections are mainly contralateral: inputs from the left or right visual field are transferred to the striate and extrastriate cortices on the opposite side (DeYoe et al., 1996). Further downstream are category-selective regions, including the left-lateralized visual word form area (McCandliss et al., 2003) and the right-lateralized extrastriate body area and fusiform body area (Downing et al., 2001; Schwarzlose et al., 2005; Willems et al., 2010), which respond selectively to words and human bodies, respectively.
Taking advantage of such anatomical and functional lateralizations in the olfactory and visual systems, we carry out three experiments to probe where the two senses converge. We do so using a well-established visual phenomenon termed binocular rivalry, the perceptual alternation that occurs when distinctly different images are presented separately to the two eyes (Blake and Logothetis, 2002). This paradigm has proven sensitive to the interplay between vision and other senses (van Ee et al., 2009; Lunghi et al., 2010; Zhou et al., 2010).

Materials and Methods

Participants

A total of 82 healthy right-handers with normal or corrected-to-normal vision participated in the study; 24 (10 males, mean age ± SEM = 21.8 ± 0.35 years) took part in Experiment 1, 30 (11 males, 22.1 ± 0.86 years) in Experiment 2, and 28 (10 males, 24.2 ± 0.40 years) in Experiment 3. At the time of testing, all subjects reported having a normal sense of smell and no respiratory allergy or upper respiratory infection. They gave informed consent to participate and were naive to the purposes of the experiments.

Materials and procedure

Visual stimuli.

All visual stimuli were displayed on a 19-inch flat-screen monitor and presented dichoptically to the two eyes, where they engaged in rivalry. For each subject, we adjusted which eye viewed which image so as to produce a more balanced rivalry between the competing images in the absence of olfactory cues. In Experiment 1, two colored images, one of a rose and one of a banana, were displayed side by side and fused with a mirror stereoscope mounted on a chinrest (1.7 × 2.2°, centered 1.3° horizontally from fixation in either the left or the right visual field; Fig. 1A), such that the rose image was presented to the left or right visual field of one eye while the banana image was presented to the same visual field of the other eye. To facilitate stable convergence of the two eyes' images, each image was enclosed by an identical square frame (10.7 × 10.7°) centered on the fixation cross. In Experiment 2, a composite image of words in green and a human body in red (2.7 × 3.2°) was shown at the center of the monitor and viewed through red-green anaglyph glasses, so that the words were presented to the central visual field of one eye and the human body to that of the other eye (Fig. 2A). We used red-green anaglyph glasses instead of a mirror stereoscope because, with adjustments of the image colors, they produced a more balanced rivalry between the relatively low-contrast human body image and the relatively high-contrast words image without making the images look unnatural to the observers. Experiment 3 adopted the same visual stimulation setup as Experiment 1, except that the rose image was replaced with an image in which the word “rose” was repeated four times (1.7 × 2.2°), and the two competing images were presented to the central visual field of each eye (Fig. 3A).

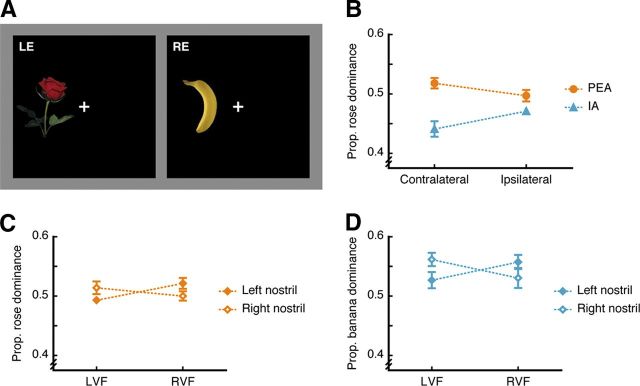

Figure 1.

Nostril- and visual field-specific olfactory modulation of visual perception in binocular rivalry. A, Visual stimuli used in Experiment 1 viewed through a mirror stereoscope and dichoptically presented to the left eye (LE) and the right eye (RE), with fused images of rose and banana in either the left visual field (LVF) or the right visual field (RVF). B–D, The dominance proportion of an image depended on both the input odorant and the nostril receiving that odorant (B), such that, relative to the ipsilateral nostril, smelling PEA (C) or IA (D) through the nostril contralateral to the rivalry site increased the dominance of the rose image (C) or the banana image (D), respectively, on top of an overall enhancement of the congruent image's dominance over the incongruent one (B). Error bars represent SEM, adjusted for individual differences. Error bars shorter than the diameter of the markers are not displayed.

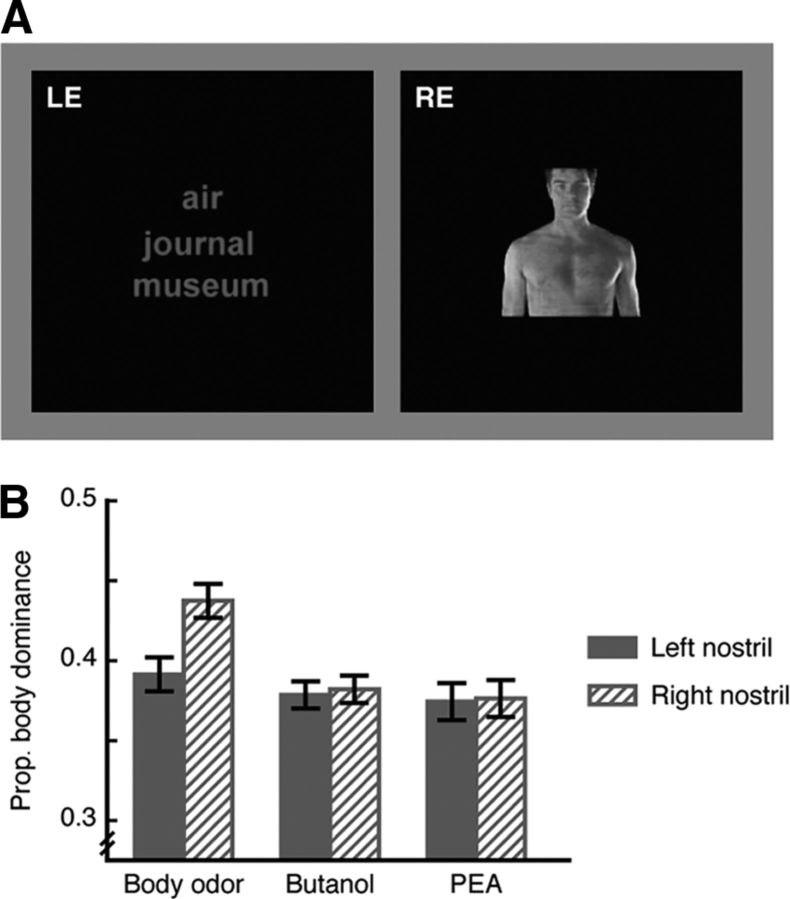

Figure 2.

Nostril-specific olfactory modulation of category-selective visual processing. A, Visual stimuli used in Experiment 2 viewed through red/green anaglyph glasses and dichoptically presented to the two eyes, with fused images of words and human body in the central visual field. B, Compared with butanol and PEA, smelling human body odor increased the dominance proportion of the body image, and this increase was more pronounced when the odor was sampled through the right nostril than through the left nostril. Error bars represent SEM, adjusted for individual differences.

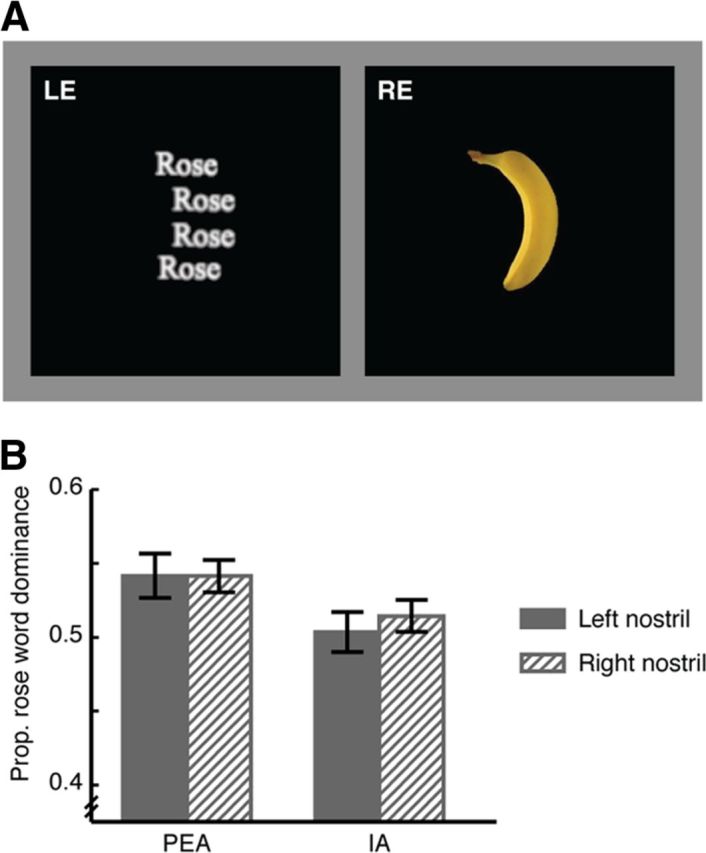

Figure 3.

Nostril-specific olfactory modulation of visual processing depends on sensory rather than semantic congruency. A, Visual stimuli used in Experiment 3 viewed through a mirror stereoscope and dichoptically presented to the left eye (LE) and the right eye (RE), with fused images of the rose word and the banana in the central visual field. B, Compared with IA, smelling PEA increased the dominance of the rose word, with no difference between the two nostrils. Error bars represent SEM, adjusted for individual differences.

Olfactory stimuli.

The olfactory stimuli in Experiments 1 and 3 consisted of phenyl ethyl alcohol (PEA; a rose-like smell, 0.5% v/v in propylene glycol) and isoamyl acetate (IA; a banana-like smell, 0.02% v/v in propylene glycol). In addition, purified water was used to achieve unilateral smell presentation. These stimuli were presented in identical 20 ml polypropylene jars, each containing 10 ml of clear liquid and fitted with a Teflon nosepiece. The olfactory stimuli in Experiment 2 consisted of PEA (1% v/v in propylene glycol), n-butanol (a marker-pen-like smell, 0.5% v/v in propylene glycol), and natural human body odor (pooled sweat collected from three male donors aged 20, 23, and 24 years, who each kept a 4 × 4 inch nylon/polyester-blended pad under each armpit for 2 h while performing non-strenuous daily activities). Purified water was again used to achieve unilateral smell presentation. These stimuli were presented on nylon/polyester-blended pads (4 × 4 inch) in identical 40 ml polypropylene jars, each fitted with a Teflon nosepiece. To form a single “pooled sweat pad,” the ∼⅓ of the layers that had been closest to the skin during sweat collection was taken from one pad of each donor, and these layers were combined. For PEA, butanol, and water, 1 ml of each was applied to a separate pad, which was placed in its own jar. Detailed procedures for sweat collection and storage have been described previously (Zhou and Chen, 2009b).

In each experiment, the subjects held two jars (one containing a smell and the other containing purified water) in their left hand and positioned the nosepieces in the two nostrils as instructed by the experimenter. They were told to inhale continuously through the nosepieces and exhale through their mouth. This is a standard method for achieving unilateral olfactory stimulation (Wysocki et al., 2003). All olfactory stimuli were suprathreshold for all subjects.

Procedure.

The subjects in Experiments 1 and 3 first sampled the olfactory stimuli with both nostrils, one stimulus at a time in a randomized order. After sampling each stimulus, they rated its intensity and pleasantness, as well as its similarity to the smells of rose and banana, on a 100-unit visual analog scale. There was a break of at least 1 min between samplings. After the olfactory assessment, the experimenter adjusted the mirror stereoscope for each subject to ensure binocular fusion. The subjects then completed a practice session to become comfortable viewing the images through the mirror stereoscope and maintaining fixation on the central fixation point while inhaling continuously through their nose and exhaling through their mouth. They were instructed to press one of two buttons with their right hand when they saw predominantly “rose” (the rose image in Experiment 1 and the rose word in Experiment 3), and to press the other button when the percept switched to predominantly “banana.” The button presses marked the time points of perceptual switches. Each subject in Experiment 1 completed the main binocular rivalry task eight times, each time with a different combination of olfactory stimulus (PEA or IA), nostril side (the olfactory stimulus in the left or the right nostril), and rivalry visual field (binocular rivalry in the left or the right visual field). Subjects in Experiment 3 viewed the competing images in the central visual field and completed the main binocular rivalry task four times, each time with a different combination of olfactory stimulus (PEA or IA) and nostril side (the olfactory stimulus in the left or the right nostril). Each run lasted 60 s, with a 3 min break between runs. The order of conditions was randomized and balanced across subjects. At the end of each run, the subjects reported which nostril they thought had received a smell.
No feedback was provided during the experiment.
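The Experiment 1 design crosses three two-level factors, which yields the eight runs each subject completed. The Python sketch below enumerates them. The text says only that condition order was "randomized and balanced across the subjects"; the scheme used here (one shuffled sequence, cyclically rotated by subject index, a Latin-square-style rotation), the `run_order` helper, and its `seed` parameter are illustrative assumptions, not the authors' documented procedure.

```python
import random
from itertools import product

ODORS = ("PEA", "IA")
NOSTRILS = ("left", "right")        # nostril receiving the odorant (water in the other)
VISUAL_FIELDS = ("left", "right")   # visual field in which rivalry takes place

# All 2 x 2 x 2 = 8 conditions, one 60 s run each.
CONDITIONS = list(product(ODORS, NOSTRILS, VISUAL_FIELDS))


def run_order(subject_index: int, seed: int = 0) -> list:
    """Hypothetical counterbalancing (assumed, not from the paper).

    Shuffle the 8 conditions once with a fixed seed, then rotate the sequence
    by the subject index, so that within every block of 8 consecutive subjects
    each condition appears exactly once at each serial position.
    """
    base = CONDITIONS[:]
    random.Random(seed).shuffle(base)
    k = subject_index % len(base)
    return base[k:] + base[:k]
```

Under this assumed scheme, the 24 subjects of Experiment 1 would complete exactly three full rotation cycles.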

Experiment 2 followed procedures similar to those of Experiments 1 and 3, except that red-green anaglyph glasses were used. The subjects rated the intensity and pleasantness of each olfactory stimulus and verbally described what each smelled like before performing the binocular rivalry task, in which they pressed one of two buttons when they saw predominantly “words” and the other button when the percept switched to predominantly “human body.” The two competing images were centrally fixated. There were six 60 s runs in total, each with a different combination of olfactory stimulus (PEA, butanol, or natural human body odor) and nostril side (the olfactory stimulus in the left or the right nostril). The order of runs was randomized and balanced across subjects, with a 3 min break between runs.

Data analyses

For each condition, we first calculated the mean duration (d) for which each image predominated over the other, that is, the average interval between pressing one button to mark the onset of one predominant rivalry image and pressing the other button to mark the onset of the other. These durations were then converted into the proportion (prop) that one image predominated over the other, which served as our dependent measure. For example, in Experiments 1 and 3, the proportion that the rose image (Experiment 1) or the rose word (Experiment 3) predominated over the banana image was calculated as $\mathrm{prop}_{\mathrm{rose}} = d_{\mathrm{rose}} / (d_{\mathrm{rose}} + d_{\mathrm{banana}})$. Correspondingly, $\mathrm{prop}_{\mathrm{banana}} = 1 - \mathrm{prop}_{\mathrm{rose}}$. In Experiment 2, we used the proportion that the body image predominated over the words as the dependent measure.
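A minimal Python sketch of the dominance-proportion computation, assuming each run's button presses are logged as chronologically ordered `(time_s, label)` pairs (a hypothetical format; the text does not describe the log). Repeated presses of the same button are collapsed, each completed epoch is credited to the image reported at its onset, and the final epoch, which is cut off by the end of the run and has no closing press, is dropped; this last choice is our assumption.

```python
from statistics import mean


def dominance_proportion(presses, target="rose", other="banana"):
    """Return prop_target = d_target / (d_target + d_other).

    presses: list of (time_s, label) tuples in chronological order, where
    label names the image reported as predominant at that press (assumed format).
    """
    # Keep only presses that mark a genuine switch (drop repeats of the same button).
    switches = []
    for t, label in presses:
        if not switches or switches[-1][1] != label:
            switches.append((t, label))

    # Each completed epoch runs from one switch to the next and is credited to
    # the image reported at its onset; the last, truncated epoch is excluded.
    durations = {target: [], other: []}
    for (t0, label), (t1, _) in zip(switches, switches[1:]):
        durations[label].append(t1 - t0)

    d_target = mean(durations[target])  # mean dominance duration of the target image
    d_other = mean(durations[other])
    return d_target / (d_target + d_other)
```

For presses at 1, 4, 6, 9, and 12 s alternating rose, banana, rose, banana, rose, the mean rose epoch is 3 s and the mean banana epoch is 2.5 s, so the function returns 3 / 5.5; swapping `target` and `other` returns the complement, matching prop_banana = 1 − prop_rose.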

The data were analyzed with repeated-measures ANOVA. In Experiment 1, the within-subjects factors were olfactory stimulus (PEA vs IA), nostril side (left vs right nostril), and visual field (left vs right visual field). In Experiment 2, the within-subjects factors were olfactory stimulus (PEA vs butanol vs natural body odor) and nostril side (left vs right nostril), and the between-subjects factor was sweat identification (describing the sweat sample as human-related vs as a non-biological object). In Experiment 3, the within-subjects factors were olfactory stimulus (PEA vs IA) and nostril side (left vs right nostril). In Experiments 2 and 3, paired-samples t tests were further performed for each olfactory stimulus to compare the dominance proportion of a rivalry image when the odor was smelled through the left versus the right nostril.
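The paired comparisons in Experiments 2 and 3 reduce to a t statistic on per-subject left-versus-right differences. A stdlib-only sketch follows; `paired_t` is a hypothetical helper, and for p-values one could instead use SciPy's `scipy.stats.ttest_rel`. For a within-subjects factor with only two levels, the repeated-measures F(1, n−1) equals the square of this t, so such 1-df effects can be reported either way.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(left, right):
    """Paired-samples t statistic and degrees of freedom.

    left, right: per-subject dominance proportions with the odor in the left
    vs. the right nostril, in the same subject order.
    """
    if len(left) != len(right) or len(left) < 2:
        raise ValueError("need two equal-length samples with n >= 2")
    diffs = [r - l for l, r in zip(left, right)]  # right-minus-left difference per subject
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / sqrt(n))    # mean difference over its standard error
    return t, n - 1
```

A positive t indicates a larger dominance proportion with the odor in the right nostril.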

Results

Nostril- and visual field-specific olfactory modulation of visual perception in binocular rivalry

In Experiment 1, images of a rose and a banana were engaged in binocular rivalry in either the left or the right visual field (Fig. 1A) while the subjects were exposed continuously to PEA or IA in one nostril and to purified water in the other (see Materials and procedure for details). Compared with IA, PEA was rated as much more like the smell of rose (p < 0.001) and much less like the smell of banana (p < 0.001), but as equally intense (p = 0.38) and pleasant (p = 0.20). Overall, the rose image was dominant in view for longer when the subjects smelled PEA than when they smelled IA (F(1,23) = 10.77, p = 0.003; Fig. 1B), and vice versa, replicating an earlier finding (Zhou et al., 2010). Critically, this effect depended on whether the nostril that received PEA or IA was contralateral or ipsilateral to the visual field in which rivalry took place (F(1,23) = 10.57, p = 0.004; Fig. 1B). Smelling PEA through the contralateral relative to the ipsilateral nostril significantly increased the proportion that the rose image was dominant (F(1,23) = 4.49, p = 0.045; Fig. 1C), and smelling IA through the contralateral relative to the ipsilateral nostril significantly increased the proportion that the banana image was dominant (F(1,23) = 4.63, p = 0.042; Fig. 1D). The effect was observed even though the subjects were unaware of which nostril received an odorant (mean accuracy = 0.48 and 0.44 for PEA and IA, respectively, vs chance = 0.50). Primary olfactory regions receive inputs mainly from the ipsilateral nostril (Powell et al., 1965; Price, 1973), and early visual areas receive inputs mainly from the contralateral visual field (DeYoe et al., 1996); hence the nostril contralateral to the rivalry site feeds the same hemisphere that processes the rivaling images. These results therefore show a clear within-hemisphere advantage (Hellige, 1993) in the integration of olfactory and visual information, one that arises relatively early in the sensory processing hierarchy.
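The within-hemisphere reasoning above can be made explicit with a small lookup. In this sketch (all helper names hypothetical), a nostril maps to the hemisphere on its own side and a visual field to the opposite hemisphere, following the ipsilateral olfactory and contralateral visual projections described in the Introduction; a "contralateral" nostril is then exactly the case in which both inputs reach the same hemisphere. `contra_minus_ipsi` computes one possible per-subject contrast score, assuming dominance proportions keyed by `(nostril, visual_field)`; it is not necessarily how the authors coded the interaction.

```python
def hemisphere_of_nostril(nostril: str) -> str:
    # Primary olfactory projections are largely ipsilateral: left nostril -> left hemisphere.
    if nostril not in ("left", "right"):
        raise ValueError(f"unknown nostril: {nostril!r}")
    return nostril


def hemisphere_of_visual_field(field: str) -> str:
    # Early visual projections are mainly contralateral: left visual field -> right hemisphere.
    return {"left": "right", "right": "left"}[field]


def nostril_relation(nostril: str, field: str) -> str:
    """Classify the odorized nostril relative to the rivalry visual field.

    'contralateral' (nostril on the side opposite the visual field) is exactly
    the case in which olfactory and visual inputs reach the same hemisphere.
    """
    same = hemisphere_of_nostril(nostril) == hemisphere_of_visual_field(field)
    return "contralateral" if same else "ipsilateral"


def contra_minus_ipsi(props: dict) -> float:
    """Mean congruent-image dominance proportion with the contralateral nostril
    minus that with the ipsilateral nostril, for one subject and one odor
    (hypothetical layout: keys are (nostril, visual_field) tuples)."""
    groups = {"contralateral": [], "ipsilateral": []}
    for (nostril, field), p in props.items():
        groups[nostril_relation(nostril, field)].append(p)
    contra, ipsi = groups["contralateral"], groups["ipsilateral"]
    return sum(contra) / len(contra) - sum(ipsi) / len(ipsi)
```

For example, `nostril_relation("right", "left")` returns `"contralateral"`, because the right nostril and the left visual field both project mainly to the right hemisphere.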

Nostril-specific olfactory modulation of category-selective visual processing

We went on to examine whether this nostril-specific effect persists in downstream category-selective areas. Experiment 2 introduced three smells (PEA, n-butanol, and natural human body odor, each presented unilaterally as in Experiment 1) during binocular rivalry between images of words and a human body in the fovea (Fig. 2A; see Materials and procedure for details). The three odorants were matched in intensity (p = 0.18). Butanol and body odor were rated as equally unpleasant (p = 0.17), and both were significantly less pleasant than PEA (both p < 0.001). Throughout the experiment, the subjects did not know which nostril received an odor (mean accuracy = 0.52, 0.58, and 0.48 for body odor, PEA, and butanol, respectively, vs chance = 0.50). Smelling natural human body odor generally increased the proportion that the body image was dominant in view (F(2,56) = 3.80, p = 0.028), regardless of whether the subjects verbally identified the odorant as human-related (F(1,28) = 0.37, p = 0.55). Moreover, smelling it through the right nostril led to a greater increase than smelling it through the left nostril (t(29) = 2.16, p = 0.039), an effect not found with PEA (p = 0.93) or butanol (p = 0.84) (Fig. 2B). These results again reflect a within-hemisphere advantage in the integration of the two senses, this time further along the visual processing hierarchy: although the words and the human body were engaged in binocular rivalry in the central visual field, they are processed in the visual word form area (McCandliss et al., 2003) and in body-selective regions (Downing et al., 2001; Schwarzlose et al., 2005; Willems et al., 2010), which are lateralized to the left and the right hemisphere, respectively.
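The nostril-identification accuracies above are compared with chance (0.50) without a stated test. One simple check, sketched here as an assumption rather than the authors' analysis, is an exact two-sided binomial test on the number of correct reports. It treats every report as an independent trial, which ignores the clustering of several runs within each subject, so it is only a rough screen. `binom_two_sided_p` is a hypothetical helper; SciPy's `scipy.stats.binomtest` provides an equivalent exact test.

```python
from math import comb


def binom_two_sided_p(k: int, n: int) -> float:
    """Exact two-sided binomial p-value for k correct reports out of n,
    against a chance rate of 0.5."""
    if not 0 <= k <= n:
        raise ValueError("require 0 <= k <= n")
    total = 2 ** n
    lower = sum(comb(n, i) for i in range(0, k + 1)) / total  # P(X <= k)
    upper = sum(comb(n, i) for i in range(k, n + 1)) / total  # P(X >= k)
    # The null distribution is symmetric at p = 0.5, so doubling the smaller
    # tail (capped at 1) gives the exact two-sided p-value.
    return min(1.0, 2 * min(lower, upper))
```

With hypothetical counts, `binom_two_sided_p(5, 10)` returns 1.0 (exactly chance), whereas `binom_two_sided_p(0, 10)` returns 2/1024, about 0.002.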

Nostril-specific olfactory modulation of visual processing depends on sensory rather than semantic congruency

Experiment 2 does not address whether category-selective processing of word forms in the left hemisphere also benefits from a semantically congruent odor in the left as opposed to the right nostril. This was tested in Experiment 3, whose design was similar to that of Experiment 2. The same olfactory stimuli as in Experiment 1 were used, but the rose image was replaced with an image in which the word “rose” was repeated four times (to emulate the contour of the banana image and facilitate binocular rivalry). As in Experiment 2, the two images (a banana and the word “rose”) were engaged in binocular rivalry in the central visual field (Fig. 3A) while the subjects smelled PEA or IA in either the left or the right nostril (see Materials and procedure for details). PEA was again perceived as more like the smell of rose (p < 0.001) and less like the smell of banana (p < 0.001) than IA, but as equally intense (p = 0.25) and pleasant (p = 0.10). As in Experiments 1 and 2, the subjects did not know to which nostril an odor was being presented (mean accuracy = 0.48 and 0.46 for PEA and IA, respectively, vs chance = 0.50). Here we observed a main effect of smell: the word “rose” was dominant in view for longer when the subjects smelled PEA than when they smelled IA, and vice versa (F(1,27) = 4.92, p = 0.035; because the two dominance proportions sum to one, the same statistic holds for either image), yet there was no nostril difference for either smell (t(27) = 0.008 and −0.55, p = 0.99 and 0.59 for PEA and IA, respectively; Fig. 3B). Because visual processing of the centrally presented banana image is not lateralized, smelling IA through either nostril was expected to produce comparable effects in boosting the dominance of the banana image.
However, smelling PEA through the left nostril did not preferentially enhance the dominance of the word “rose” relative to the right nostril, even though the neural representations of both the visual word form and the semantic meaning of “rose” are left-lateralized (Frost et al., 1999; McCandliss et al., 2003). We therefore conclude that the nostril-specific olfactory modulation of visual processing observed in Experiments 1 and 2 relies not only on the anatomical and functional lateralizations in the two systems but also on sensory, rather than semantic, congruency between olfactory and visual inputs.

Discussion

The human brain is wired to coordinate the senses efficiently and to integrate their inputs. For olfaction and vision, which both capture the identities of objects, it is commonly held that the two interact in a top-down manner at the semantic level, with olfaction frequently succumbing to visual modulation (Morrot et al., 2001; Gottfried and Dolan, 2003). Although the current study by no means negates this account, it sheds new light on the basic neural substrates underlying olfactory-visual integration by taking advantage of the anatomical and functional lateralizations in the two systems. We observe a nostril-specific olfactory modulation of binocular rivalry, for processes in both early visual cortices and category-selective visual regions, that is based on sensory rather than semantic congruency. Such nostril-specific modulation cannot be due to top-down attentional or cognitive control, because the subjects were unaware of which nostril received an odorant. It is also highly unlikely that they knew about the lateralizations in both the visual and the olfactory systems. Our results thus indicate that olfactory-visual integration occurs at the stage of sensory representations, early in the information processing hierarchy. There, information from the two sources is automatically assembled in an object-based manner (Experiments 1 and 2), independent of object identification or semantic processing at the conscious level (Experiments 2 and 3) (Zhou et al., 2010). Our findings thus provide strong human behavioral evidence for multisensory integration in relatively early sensory cortices.

Moreover, although a large body of literature exists on visual hemifield and retinotopic mapping, research on the functional relevance of the ipsilateral primary olfactory projections has been very limited (Porter et al., 2007; Zhou and Chen, 2009a). By highlighting the functional dissociation of the two nostrils, our findings help narrow this gap.

Recent animal studies have revealed direct connections among primary auditory, visual, and somatosensory cortices (Falchier et al., 2002; Fu et al., 2003; Wallace et al., 2004; Iurilli et al., 2012). It has also been proposed that associative neuronal plasticity prevails in early sensory cortices, possibly involving a Hebbian mechanism for the enhancement of synaptic efficacy (Albright, 2012). Although the anatomical connectivity between olfactory and visual regions remains poorly understood, convergent projections from the retina and from the olfactory bulbs have been observed in the olfactory tubercle and piriform cortex in a range of mammalian species, including primates (Pickard and Silverman, 1981; Mick et al., 1993; Cooper et al., 1994). In humans, individual differences in the nasal cycle and in binocular rivalry alternation rate are correlated, pointing to an endogenous shared mechanism regulating both the olfactory and the visual systems (Pettigrew and Carter, 2005). Furthermore, a recent study showed that repetitive transcranial magnetic stimulation of V1 enhances odor quality discrimination (Jadauji et al., 2012). The exact signaling pathways mediating the observed early convergence of olfactory and visual information await future study.

Footnotes

This work was supported by the National Basic Research Program of China (2011CB711000), the National Key Technology R&D Program of China (2012BAI36B00), the National Natural Science Foundation of China (31070906), the Knowledge Innovation Program of the Chinese Academy of Sciences (KSCX2-EW-BR-4 and KSCX2-YW-R-250), and the Key Laboratory of Mental Health, Institute of Psychology, Chinese Academy of Sciences. We thank Andrea Elliot, Yeonhee Kim, and Stefan Nazar for assistance with data collection, and Sheng He for helpful comments.

References

- Albright TD. On the perception of probable things: neural substrates of associative memory, imagery, and perception. Neuron. 2012;74:227–245. doi: 10.1016/j.neuron.2012.04.001.

- Beauchamp MS. See me, hear me, touch me: multisensory integration in lateral occipital-temporal cortex. Curr Opin Neurobiol. 2005;15:145–153. doi: 10.1016/j.conb.2005.03.011.

- Blake R, Logothetis NK. Visual competition. Nat Rev Neurosci. 2002;3:13–21. doi: 10.1038/nrn701.

- Cooper HM, Parvopassu F, Herbin M, Magnin M. Neuroanatomical pathways linking vision and olfaction in mammals. Psychoneuroendocrinology. 1994;19:623–639. doi: 10.1016/0306-4530(94)90046-9.

- DeYoe EA, Carman GJ, Bandettini P, Glickman S, Wieser J, Cox R, Miller D, Neitz J. Mapping striate and extrastriate visual areas in human cerebral cortex. Proc Natl Acad Sci U S A. 1996;93:2382–2386. doi: 10.1073/pnas.93.6.2382.

- Downing PE, Jiang Y, Shuman M, Kanwisher N. A cortical area selective for visual processing of the human body. Science. 2001;293:2470–2473. doi: 10.1126/science.1063414.

- Falchier A, Clavagnier S, Barone P, Kennedy H. Anatomical evidence of multimodal integration in primate striate cortex. J Neurosci. 2002;22:5749–5759. doi: 10.1523/JNEUROSCI.22-13-05749.2002.

- Frost JA, Binder JR, Springer JA, Hammeke TA, Bellgowan PS, Rao SM, Cox RW. Language processing is strongly left lateralized in both sexes: evidence from functional MRI. Brain. 1999;122:199–208. doi: 10.1093/brain/122.2.199.

- Fu KM, Johnston TA, Shah AS, Arnold L, Smiley J, Hackett TA, Garraghty PE, Schroeder CE. Auditory cortical neurons respond to somatosensory stimulation. J Neurosci. 2003;23:7510–7515. doi: 10.1523/JNEUROSCI.23-20-07510.2003.

- Gottfried JA, Dolan RJ. The nose smells what the eye sees: crossmodal visual facilitation of human olfactory perception. Neuron. 2003;39:375–386. doi: 10.1016/s0896-6273(03)00392-1.

- Hellige JB. Unity of thought and action: varieties of interaction between the left and right cerebral hemispheres. Curr Dir Psychol Sci. 1993;2:21–25.

- Iurilli G, Ghezzi D, Olcese U, Lassi G, Nazzaro C, Tonini R, Tucci V, Benfenati F, Medini P. Sound-driven synaptic inhibition in primary visual cortex. Neuron. 2012;73:814–828. doi: 10.1016/j.neuron.2011.12.026.

- Jadauji JB, Djordjevic J, Lundström JN, Pack CC. Modulation of olfactory perception by visual cortex stimulation. J Neurosci. 2012;32:3095–3100. doi: 10.1523/JNEUROSCI.6022-11.2012.

- Lunghi C, Binda P, Morrone MC. Touch disambiguates rivalrous perception at early stages of visual analysis. Curr Biol. 2010;20:R143–R144. doi: 10.1016/j.cub.2009.12.015.

- Macaluso E, Driver J. Multisensory spatial interactions: a window onto functional integration in the human brain. Trends Neurosci. 2005;28:264–271. doi: 10.1016/j.tins.2005.03.008.

- McCandliss BD, Cohen L, Dehaene S. The visual word form area: expertise for reading in the fusiform gyrus. Trends Cogn Sci. 2003;7:293–299. doi: 10.1016/s1364-6613(03)00134-7.

- Mick G, Cooper H, Magnin M. Retinal projection to the olfactory tubercle and basal telencephalon in primates. J Comp Neurol. 1993;327:205–219. doi: 10.1002/cne.903270204.

- Morrot G, Brochet F, Dubourdieu D. The color of odors. Brain Lang. 2001;79:309–320. doi: 10.1006/brln.2001.2493.

- Pettigrew JD, Carter OL. Perceptual rivalry as an ultradian oscillation. In: Alais D, Blake R, editors. Recent advances in binocular rivalry. Boston: MIT; 2005. pp. 283–300.

- Pickard GE, Silverman AJ. Direct retinal projections to the hypothalamus, piriform cortex, and accessory optic nuclei in the golden hamster as demonstrated by a sensitive anterograde horseradish peroxidase technique. J Comp Neurol. 1981;196:155–172. doi: 10.1002/cne.901960111.

- Porter J, Craven B, Khan RM, Chang SJ, Kang I, Judkewicz B, Volpe J, Settles G, Sobel N. Mechanisms of scent-tracking in humans. Nat Neurosci. 2007;10:27–29. doi: 10.1038/nn1819.

- Powell TP, Cowan WM, Raisman G. The central olfactory connexions. J Anat. 1965;99:791–813.

- Price JL. An autoradiographic study of complementary laminar patterns of termination of afferent fibers to the olfactory cortex. J Comp Neurol. 1973;150:87–108. doi: 10.1002/cne.901500105.

- Schwarzlose RF, Baker CI, Kanwisher N. Separate face and body selectivity on the fusiform gyrus. J Neurosci. 2005;25:11055–11059. doi: 10.1523/JNEUROSCI.2621-05.2005.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci. 2008;9:255–266. doi: 10.1038/nrn2331.

- van Ee R, van Boxtel JJ, Parker AL, Alais D. Multisensory congruency as a mechanism for attentional control over perceptual selection. J Neurosci. 2009;29:11641–11649. doi: 10.1523/JNEUROSCI.0873-09.2009.

- Wallace MT, Ramachandran R, Stein BE. A revised view of sensory cortical parcellation. Proc Natl Acad Sci U S A. 2004;101:2167–2172. doi: 10.1073/pnas.0305697101.

- Willems RM, Peelen MV, Hagoort P. Cerebral lateralization of face-selective and body-selective visual areas depends on handedness. Cereb Cortex. 2010;20:1719–1725. doi: 10.1093/cercor/bhp234.

- Wysocki CJ, Cowart BJ, Radil T. Nasal trigeminal chemosensitivity across the adult life span. Percept Psychophys. 2003;65:115–122. doi: 10.3758/bf03194788.

- Zhou W, Chen D. Binaral rivalry between the nostrils and in the cortex. Curr Biol. 2009a;19:1561–1565. doi: 10.1016/j.cub.2009.07.052.

- Zhou W, Chen D. Fear-related chemosignals modulate recognition of fear in ambiguous facial expressions. Psychol Sci. 2009b;20:177–183. doi: 10.1111/j.1467-9280.2009.02263.x.

- Zhou W, Jiang Y, He S, Chen D. Olfaction modulates visual perception in binocular rivalry. Curr Biol. 2010;20:1356–1358. doi: 10.1016/j.cub.2010.05.059.