Abstract

Area V6A encodes hand configurations for grasping objects (Fattori et al., 2010). The aim of the present study was to investigate whether V6A cells also encode three-dimensional objects, and to examine the relationship between object encoding and grip encoding. Single neurons were recorded in V6A of two monkeys trained to perform two tasks. In the first task, the monkeys were required to passively view an object without performing any action on it. In the second task, the monkeys viewed an object at the beginning of each trial and then had to grasp that object in darkness. Five different objects were used. Both tasks revealed that object presentation activates ∼60% of V6A neurons, with about half of them displaying object selectivity. In the Reach-to-Grasp task, the majority of V6A cells discharged during both object presentation and grip execution, displaying selectivity for the object, for the grip, or in some cases for both. Although neurons encoding grips were twice as frequent as neurons encoding objects, object selectivity in single cells was as strong as grip selectivity, indicating that V6A cells were able to discriminate both the different objects and the different grips required to grasp them.

Hierarchical cluster analysis revealed that clustering of the object-selective responses depended on the task requirements (view only or view to grasp) and followed a visual or a visuomotor rule, respectively. Object encoding in V6A reflects representations for action, useful for motor control in reach-to-grasp.

Introduction

While looking at an object we intend to grasp, several aspects of visual information are processed to transform object attributes into commands appropriate for the effectors. It is now well established that the dorsal visual stream processes vision for action (Goodale and Milner, 1992; Milner and Goodale, 1995), and the dorsomedial pathway has been recently shown to be involved in the encoding of all phases of prehension (Galletti et al., 2001, 2003; Gamberini et al., 2009; Passarelli et al., 2011). Indeed, one of the areas of the dorsomedial pathway, the medial posterior-parietal area V6A, hosts neurons encoding the direction of arm reaching movements (Fattori et al., 2001, 2005), neurons modulated by the orientation of the hand in reach-to-grasp movements (Fattori et al., 2009), and neurons encoding the type of grip required to grasp objects of different shapes (Fattori et al., 2010).

Area V6A receives visual information from area V6 (Galletti et al., 2001, 2003; Gamberini et al., 2009; Passarelli et al., 2011), a retinotopically organized extrastriate area of the medial parieto-occipital cortex (Galletti et al., 1999b), and from other visual areas of the posterior parietal cortex (Gamberini et al., 2009; Passarelli et al., 2011). It has been reported that area V6A contains visual neurons that are very sensitive to the orientation of visual stimuli (Galletti et al., 1996, 1999a; Gamberini et al., 2011), thus providing critical information for object grasping (Fattori et al., 2009).

The visual sensitivity of other parietal areas of the dorsal visual stream involved in grasping, such as area AIP and area 5, has been tested with real, three-dimensional (3D) objects (Murata et al., 2000; Gardner et al., 2002; Chen et al., 2009; Srivastava et al., 2009). In contrast, although area V6A has repeatedly been suggested over the last 15 years to be involved in the control of prehension, its neurons have never been tested with real 3D objects.

The aim of the present study was to explore (1) whether V6A neurons describe characteristics of 3D graspable objects and, if so, what code they use for this description, (2) whether the same V6A neuron encodes both the object and the grip type used to grasp it, and (3) whether there is a relationship between object and grip encoding. For this purpose, single-neuron activity was recorded from area V6A of two monkeys trained to perform two tasks involving objects of different shapes. In the first task, the monkey viewed an object without performing any action on it. In the second task, the monkey saw an object that it subsequently had to reach for and grasp in darkness within the same trial.

We found that a large number of V6A cells were responsive to real, graspable objects, many of them displaying selectivity that ranged from strict (for a single object) to broad (for a set of objects). Among the cells tested in the Reach-to-Grasp task, we found neurons selective for both object(s) and grip type(s), and neurons selective for only object(s) or only grip type(s). Clustering of the object-selective responses depended on the task requirements (view only or view-to-grasp) and followed a visual or a visuomotor rule, respectively. We propose that the object description accomplished by V6A neurons serves to orchestrate and monitor prehensile actions.

Materials and Methods

Experimental procedure

Experiments were performed in accordance with national laws on care and use of laboratory animals, in accordance with the European Communities Council Directive of September 22, 2010 (2010/63/EU). During training and recording sessions, particular attention was paid to any behavioral and clinical sign of pain or distress. Experiments were approved by the Bioethical Committee of the University of Bologna.

Two male Macaca fascicularis monkeys, weighing 2.5–4.0 kg, were used. A head-restraint system and a recording chamber were surgically implanted under aseptic conditions and general anesthesia (sodium thiopental, 8 mg/kg/h, i.v.) following the procedures reported in Galletti et al. (1995). Adequate measures were taken to minimize pain or discomfort. The surgery was followed by a full program of postoperative analgesia (ketorolac tromethamine, 1 mg/kg, i.m., immediately after surgery, and 1.6 mg/kg, i.m., on the following days) and antibiotic care [Ritardomicina (benzathine benzylpenicillin plus dihydrostreptomycin plus streptomycin) 1–1.5 ml/10 kg every 5–6 d].

Single cell activity was recorded extracellularly from the posterior parietal area V6A (Galletti et al., 1999a). We performed single microelectrode penetrations using homemade glass-coated metal microelectrodes with a tip impedance of 0.8–2 MΩ at 1 kHz, and multiple electrode penetrations using a five-channel multielectrode recording minimatrix (Thomas Recording). The electrode signals were amplified (at a gain of 10,000) and filtered (bandpass between 0.5 and 5 kHz). Action potentials in each channel were isolated with a dual time-amplitude window discriminator (DDIS-1, Bak Electronics) or with a waveform discriminator (Multi Spike Detector, Alpha Omega Engineering). Spikes were sampled at 100 kHz and eye position was simultaneously recorded at 500 Hz. Location of area V6A was identified on functional grounds during recordings (Galletti et al., 1999a), and later confirmed following the cytoarchitectonic criteria of Luppino et al. (2005).

Histological reconstruction of electrode penetrations

At the end of the electrophysiological recordings, a series of electrolytic lesions (10 μA cathodic pulses for 10 s) was made at the limits of the recorded region. Then each animal was anesthetized with ketamine hydrochloride (15 mg/kg, i.m.) followed by an intravenous lethal injection of sodium thiopental. The animals were perfused through the left cardiac ventricle with the following solutions: 0.9% sodium chloride, 3.5–4% paraformaldehyde in 0.1 M phosphate buffer, pH 7.4, and 5% glycerol in the same buffer. The brains were then removed from the skull, photographed, and placed in 10% buffered glycerol for 3 d and in 20% glycerol for 4 d. Finally, they were cut on a freezing microtome at 60 μm in the parasagittal plane. In all cases, one section of every five was stained with the Nissl method (thionin, 0.1% in 0.1 M acetate buffer, pH 3.7) for the cytoarchitectonic analysis. Procedures to reconstruct microelectrode penetrations and to assign neurons recorded in the anterior bank of the parieto-occipital sulcus to area V6A were as previously described by our group (Galletti et al., 1996, 1999a, 1999b; Gamberini et al., 2011). Briefly, electrode tracks and the approximate location of each recording site were reconstructed on histological sections of the brain on the basis of the lesions and several other cues, such as the coordinates of penetrations within the recording chamber, the cortical areas traversed before reaching the region of interest, and the depths of the transition points between gray and white matter. All neurons in the present work were assigned to area V6Ad or V6Av on the basis of their location in one of the two cytoarchitectonic sectors of V6A, following the criteria defined by Luppino et al. (2005). This process is presented in more detail in a recent work by our group (Gamberini et al., 2011).

Behavioral tasks

The monkey sat in a primate chair (Crist Instrument) with the head fixed, in front of a personal computer (PC)-controlled carousel containing five different objects. The objects were presented to the animal one at a time, in a random order. The object selected for each trial was set up by a PC-controlled rotation of the carousel during the intertrial period. Only the selected object was visible in each trial; the view of the other objects was occluded. The objects were always presented in the same spatial position (22.5 cm away from the animal, in the midsagittal plane).

All tasks began when the monkey pressed a “home” button (Home button push) near its chest, outside its field of view, in complete darkness (Fig. 1A,B). The animal was allowed to use the arm contralateral to the recording side. It was required to keep the button pressed for 1 s, during which it was free to look around while remaining in darkness (epoch FREE, see Data analysis). After this interval, an LED mounted above the object was switched on (Fixation LED green) and the monkey had to fixate on it. Breaking fixation and/or releasing the button prematurely interrupted the trial.

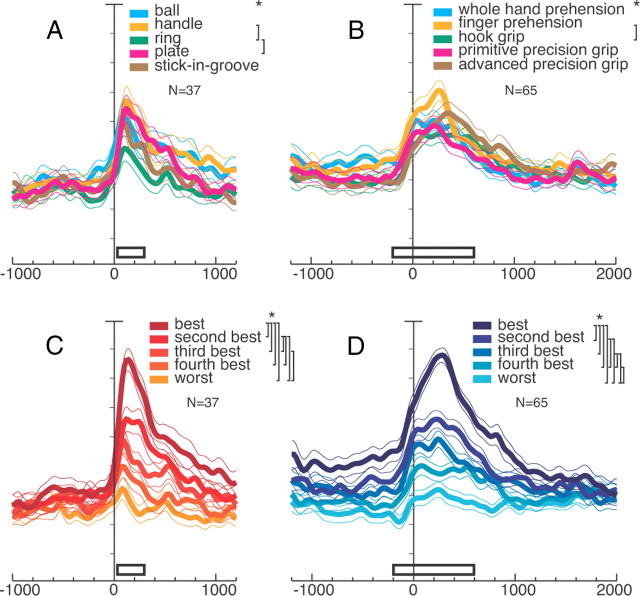

Figure 1.

Behavioral tasks used to assess object selectivity (A, B), object and motor selectivity (C), and objects and grips tested (D). A, Time course of the Object viewing task. The sequence of status of the home button, status of the LIGHT illuminating the object (LIGHT) and color of the fixation point (Fixation LED) are shown. Below the scheme, typical examples of eye traces during a single trial and time epochs are shown. Dashed lines indicate task and behavioral markers: trial start (Home Button push), fixation target appearance (Fixation LED green), eye traces entering the fixation window, object illumination onset (LIGHT on), go signal for home button release (fixation LED red), home button release (Home Button off) coincident with object illumination off (LIGHT off), and fixation target switching off (fixation LED off), end of data acquisition. B, Scheme of the experimental set-up showing an object presentation (ring) in the monkey peripersonal space, while the animal keeps its hand on the home button (black rectangle). C, Time course of the Reach-to-Grasp task. In addition to the status of home button, fixation LED, and LIGHT, as in A, also the status of the target object is shown (Target object, pull and off). Markers are, from left to right: trial start (Home Button push), fixation target appearance (Fixation LED green), eye traces entering the fixation window, object illumination on and off (LIGHT on and LIGHT off, respectively), go signal for reach-to-grasp execution (fixation LED red), start and end of the reach-to-grasp movement (Home Button off, and Target object pull, respectively), go signal for return movement (fixation LED switching off), start of return movement to the home button (Target object off). All other details, as in A. 
D, Drawing of the 5 objects tested and of the grip types used by the monkey: from left to right, ball (grasped with the whole hand), handle (grasped with fingers only), ring (grasped with the index finger only), plate (grasped with a primitive precision grip with fingers/thumb opposition), stick-in-groove (grasped with an advanced precision grip with precise index-finger/thumb opposition). The object changed from trial to trial, randomly chosen by the computer.

The brightness of the fixation LED (masked by a 1.5 mm aperture) was lowered so that it was barely visible during the task. An experimenter standing by the monkey could see neither the object nor the monkey's hand moving toward the object, even after an adaptation period.

Animals underwent two tasks: Object viewing task and Reach-to-Grasp task.

Object viewing task.

The time sequence of the Object viewing task is shown in Figure 1A. After the button press, during LED fixation, two lights at the sides of the selected object were switched on, illuminating it (LIGHT on). The monkey was required to keep fixation without releasing the home button. After 1 s, a color change of the fixation LED (from green to red; Fixation LED red) instructed the monkey to release the home button (Home Button release). Then the lights illuminating the object were turned off (LIGHT off) and the monkey could break fixation and receive its reward. Reach and grasp actions were not required in this task; in any case, they were prevented by a door at the front of the chair that blocked hand access to the object.

The different objects were presented in random order in a block of 50 correct trials, 10 for each of the five objects tested. The task was used to assess the effect of object vision while preventing any possible contamination by a forthcoming reach-to-grasp action.

Randomly interleaved among the presentation of different objects there was a condition in which no objects were presented and the illumination remained switched off (dark) during the entire trial. In other words, during these trials the animal did not see any object. It had to fixate on the fixation LED until its color changed (after 0.5–1.5 s), then release the home button and receive its reward (Fixation in darkness). Apart from the fixation point, there were no other visual stimuli. This task was run to assess a possible effect of fixation per se on neural discharges.

Reach-to-Grasp task.

In the Reach-to-Grasp task, the animal was required to perform reach-to-grasp movements in darkness following a brief presentation of the object to be grasped. The time sequence of the Reach-to-Grasp task is shown in Figure 1C. During LED fixation, after a delay period of 0.5–1 s, the object was illuminated (LIGHT on) for 0.5 s. The lights were then switched off (LIGHT off) for the rest of the trial. After a second delay period (0.5–2 s), during which the monkey was required to keep fixation on the LED without releasing the home button, the LED color changed (Fixation LED red). This was the go-signal for the monkey to release the button (Home Button release), reach toward the object, grasp and pull it (Target object pull), and hold it until the LED was switched off (0.8–1.2 s). The LED switch-off (Fixation LED off) cued the monkey to release the object (Target object off) and to press the home button again (Home Button push). Button pressing ended the trial, delivered the reward, and started another trial, in which another randomly chosen object was presented.

This task was run interleaved with the Object viewing task for each recorded cell. Monkeys were trained for 5–6 months to reach a performance level >95%.

It is worthwhile to note that in this task the monkey could see the object only briefly at the beginning of the trial, when no arm movement was allowed, and that the subsequent reach-to-grasp action was executed in complete darkness. In other words, during the reach-to-grasp movement the monkey adapted the grip to the object shape on the basis of visual information it had received at the beginning of the trial, well before the go-signal for arm movement.

A subset of the neurons in this study has also been used in a recent article reporting on neural discharges during grasp execution (Fattori et al., 2010).

All behavioral tasks were controlled by custom-made software implemented in LabView Realtime environment (Kutz et al., 2005). Eye position was monitored through an infrared oculometer system (Dr. Bouis Eyetracker). Trials in which the monkey's behavior was incorrect were discarded.

Monkeys performed the Reach-to-Grasp task with the arm contralateral to the recording side, while hand movements were continuously monitored with miniature video cameras sensitive to infrared illumination. Movement parameters during reach-to-grasp execution were estimated from video images at 25 frames/s (Gardner et al., 2007a, 2007b; Chen et al., 2009). The video images allowed us to identify three stages of the grasping action. Approach: this stage began at the video frame preceding the onset of hand movement toward the object and ended when the fingers first contacted the target object. Within the approach stage, we defined reaching onset as the video frame in which the hand started to move toward the object, and hand preshaping as the period starting from the initial increase in the distance between index finger and thumb (grip aperture), passing through the maximum grip aperture, and ending at contact with the object (constant grip aperture). Contact: the video frame in which the hand assumed the static grasping posture on the object. Grasp: the period of full enclosure of the object by the static hand before object pulling.

Tested objects

The objects were chosen to evoke different grips. The objects and the grip types used to grasp them (Fig. 1D) are as follows: “ball” (diameter, 30 mm), grasped with whole-hand prehension, with all the fingers wrapped around the object and the palm in contact with it; “handle” (thickness, 2 mm; width, 34 mm; depth, 13 mm; gap dimensions, 2×28×11 mm), grasped with finger prehension, with all fingers except the thumb inserted into the gap; “ring” (external diameter, 17 mm; internal diameter, 12 mm), grasped with the hook grip, with the index finger inserted into the hole of the object; “plate” (thickness, 4 mm; width, 30 mm; length, 14 mm), grasped with the primitive precision grip, using the thumb and the distal phalanges of the other fingers; “stick-in-groove” (cylinder with base diameter of 10 mm and length of 11 mm, in a slot 12 mm wide, 15 mm deep, and 30 mm long), grasped with the advanced precision grip, with the pulpar surface of the last phalanx of the index finger opposed to the pulpar surface of the last phalanx of the thumb.

Data analysis

The neural activity was analyzed by quantifying the discharge in each trial in different time epochs: FREE, from button press to fixation LED switch-on. It is a control period in which neither fixation nor reach and grasp movements are performed, and neither object nor fixation LEDs are visible; VIS, response to object presentation, from 40 ms after object illumination to 300 ms after it. This epoch starts at 40 ms because visual responses in V6A have a delay of this order (Kutz et al., 2003), and ends at 300 ms to include the transient, more brisk part of the visual responses (Galletti et al., 1979). It was calculated for both the Object viewing task and the Reach-to-Grasp task; DELAY (in Reach-to-Grasp task only), from 300 ms after the end of object illumination to the go signal (color change of fixation LED). This epoch starts 300 ms after switching off the object light to exclude possible responses elicited by light turn-off; R-to-G (in Reach-to-Grasp task only), reach-to-grasp movement time, from 200 ms before the movement onset (Home Button release) up to the movement end (object pulling); FIX (only in the Fixation in darkness), from fixation onset until go-signal.
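The epoch definitions above reduce to time windows computed from each trial's event markers. The sketch below is an illustration under stated assumptions: event times are in ms, `TrialEvents`, `epoch_windows`, and `firing_rate` are hypothetical names (not the authors' software), and the FIX epoch of the Fixation in darkness condition, which needs the time of fixation onset, is left out.

```python
from dataclasses import dataclass


@dataclass
class TrialEvents:
    """Hypothetical per-trial event times in ms (names are illustrative)."""
    home_push: float      # Home Button push (trial start)
    fix_led_on: float     # fixation LED switched on
    light_on: float       # object illumination onset (LIGHT on)
    light_off: float      # object illumination offset (LIGHT off)
    go_signal: float      # fixation LED turns red
    home_release: float   # Home Button release (movement onset)
    object_pull: float    # Target object pull (movement end)


def epoch_windows(ev: TrialEvents) -> dict[str, tuple[float, float]]:
    """(start, end) of each epoch in ms, following the definitions in the text."""
    return {
        "FREE": (ev.home_push, ev.fix_led_on),
        "VIS": (ev.light_on + 40, ev.light_on + 300),
        "DELAY": (ev.light_off + 300, ev.go_signal),        # Reach-to-Grasp only
        "R-to-G": (ev.home_release - 200, ev.object_pull),  # Reach-to-Grasp only
    }


def firing_rate(spike_times: list[float], window: tuple[float, float]) -> float:
    """Mean rate (spikes/s) of one trial within the half-open window [start, end)."""
    start, end = window
    n_spikes = sum(1 for t in spike_times if start <= t < end)
    return n_spikes * 1000.0 / (end - start)
```

In the Object viewing task only FREE and VIS apply; the DELAY and R-to-G windows would be computed only for Reach-to-Grasp trials.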

Statistical analyses were performed using the STATISTICA software (StatSoft). We analyzed only those units tested with at least five objects in at least seven trials for each object. These conservative criteria are dictated by the intrinsically high variability of biological responses, as explained in detail in Kutz et al. (2003).

A preliminary analysis was performed on the Fixation in darkness condition to exclude from further analyses all neurons showing fixation-related discharges: all neurons whose activity in epoch FIX differed significantly from that in epoch FREE (t test, p < 0.05) were discarded.

All neurons having a discharge rate lower than 5 spikes/s in the epochs of interest have been discarded from the population.

A neuron was considered task related when it displayed a statistically significant difference (Student's t test, p < 0.05) in activity between either epoch VIS and epoch FREE (Object viewing task and Reach-to-Grasp task) or epoch R-to-G and epoch FREE (Reach-to-Grasp task only). A one-way ANOVA followed by a Newman–Keuls procedure (p < 0.05) was performed on epoch VIS (Object viewing task and Reach-to-Grasp task) to assess neural selectivity for objects, and on epoch R-to-G (Reach-to-Grasp task only) to assess neural selectivity for grip types.
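The object and grip selectivity tests rest on a one-way ANOVA over per-trial firing rates, with one group per object (or grip). A minimal sketch of the F statistic and its degrees of freedom follows; the function name is hypothetical, the p-value would come from the F distribution (for example `scipy.stats.f.sf(F, df_between, df_within)`), and the Newman–Keuls post hoc comparisons are not shown. With five objects, 45 trials give F(4,40) and 50 trials give F(4,45), the degrees of freedom reported for the example cells in Results.

```python
def one_way_anova_f(groups):
    """F statistic and degrees of freedom for a one-way ANOVA.

    groups: one list of per-trial firing rates per object (or grip).
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    means = [sum(g) / len(g) for g in groups]
    grand_mean = sum(sum(g) for g in groups) / n_total
    # variability of the group means around the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # variability of single trials around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```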

The latency of neural responses was calculated for each object in each task-related cell. The cell's response latency was defined as the mean of the latencies measured for different objects. To find the onset of the response, we measured the activity in a 20 ms window sliding in 2 ms steps, starting from the beginning of object illumination. This activity was compared with the firing rate observed in the epoch FREE (Student's t test; p < 0.05). Latency was determined as the time of the first of 10 consecutive 2 ms bins in which the activity was significantly higher than that in FREE. The above procedure was similar to the one used for another study we recently performed in V6A (Breveglieri et al., 2012).
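The sliding-window latency procedure can be sketched as below. Assumptions to flag: spike times are in ms and aligned on object illumination onset (t = 0); significance is decided by comparing a pooled-variance Student's t statistic with a caller-supplied critical value (the default 2.101 is the two-tailed p < 0.05 value for 18 degrees of freedom, i.e., 10 trials per group); and each 20 ms window is time-stamped by its most recent 2 ms bin, a convention the text does not specify. Function names are hypothetical.

```python
import math
import statistics


def student_t(a, b):
    """Pooled-variance (Student's) two-sample t statistic for mean(a) - mean(b)."""
    na, nb = len(a), len(b)
    ma, mb = statistics.mean(a), statistics.mean(b)
    pooled_var = ((na - 1) * statistics.variance(a)
                  + (nb - 1) * statistics.variance(b)) / (na + nb - 2)
    se = math.sqrt(pooled_var * (1 / na + 1 / nb))
    if se == 0.0:
        return math.inf if ma > mb else (-math.inf if ma < mb else 0.0)
    return (ma - mb) / se


def response_latency(trials, free_rates, t_crit=2.101, width=20.0, step=2.0,
                     n_consecutive=10, max_time=1000.0):
    """Response latency (ms) for one object, or None if no sustained response.

    trials: per-trial spike times (ms) aligned on object illumination (t = 0).
    free_rates: per-trial firing rates (spikes/s) in epoch FREE.
    """
    run = 0
    start = 0.0
    while start + width <= max_time:
        end = start + width
        rates = [sum(start <= s < end for s in tr) * 1000.0 / width for tr in trials]
        if student_t(rates, free_rates) > t_crit:  # significantly higher than FREE
            run += 1
            if run == n_consecutive:
                first_start = start - (n_consecutive - 1) * step
                # label the first significant window by its most recent 2 ms bin
                return first_start + width - step
        else:
            run = 0
        start += step
    return None
```

The cell's latency would then be the mean of the values returned for the different objects.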

Population response was calculated as an averaged spike density function (SDF). An SDF was calculated (Gaussian kernel, half-width 40 ms) for each neuron included in the analysis and averaged across all the trials for each tested object. The peak discharge of the neuron found over all five objects during the relevant epochs was used to normalize all the SDFs for that neuron. The normalized SDFs were then averaged to obtain population responses (Marzocchi et al., 2008). To statistically compare the different SDFs, we performed a permutation test using the sum of squared errors as the test statistic. For each pair of responses, the two sets of curves were randomly permuted and the resulting sum of squared errors was compared with the actual one over 10,000 repetitions. Responses to object presentation were compared in the interval from 40 ms to 300 ms after object illumination (epoch VIS). Motor responses were compared in the interval from 200 ms before movement onset to 600 ms after it (epoch R-to-G; this duration was set considering that the mean movement time was 593 ± 258 ms). The emergence of object selectivity and of grip selectivity was calculated as the time of divergence between the population SDFs for the best and worst object (half-Gaussian kernel, width 5 ms).
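The population SDF pipeline and the permutation test can be sketched as follows. Assumptions: the 40 ms "half-width" is treated as the Gaussian standard deviation, the SDFs passed to `normalize_neuron` are already restricted to the relevant epochs, and the permutation test pools the two sets of normalized curves and reshuffles their labels (an unpaired scheme; the text does not say whether neuron pairing was preserved). All names are hypothetical.

```python
import math
import random


def sdf(trials, times, sigma=40.0):
    """Trial-averaged spike density (spikes/s) at each time in `times` (ms)."""
    # Gaussian kernel normalized to unit area; x1000 converts spikes/ms to spikes/s
    norm = 1000.0 / (sigma * math.sqrt(2 * math.pi))
    out = []
    for t in times:
        total = sum(math.exp(-0.5 * ((t - s) / sigma) ** 2) for tr in trials for s in tr)
        out.append(norm * total / len(trials))
    return out


def normalize_neuron(curves_by_object):
    """Divide all SDFs of one neuron by its peak over every object."""
    peak = max(max(c) for c in curves_by_object.values())
    return {obj: [v / peak for v in c] for obj, c in curves_by_object.items()}


def population_mean(curves):
    """Point-by-point average of equally long curves."""
    return [sum(vals) / len(curves) for vals in zip(*curves)]


def sse(a, b):
    """Sum of squared errors between two curves (the test statistic)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def permutation_test(curves_a, curves_b, n_perm=10_000, seed=0):
    """p-value for the difference between two sets of normalized curves."""
    rng = random.Random(seed)
    observed = sse(population_mean(curves_a), population_mean(curves_b))
    pooled = list(curves_a) + list(curves_b)
    n_a = len(curves_a)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)  # random relabeling of the pooled curves
        shuffled = sse(population_mean(pooled[:n_a]), population_mean(pooled[n_a:]))
        if shuffled >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)
```

A p-value below 0.05 from `permutation_test` would mark the two population responses as different.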

To quantify the preference of recorded neurons for the different objects and grips, we computed a preference index (PI) based on the magnitude of the neuronal response to each of the 5 objects/grips. According to Moody and Zipser (1998) it was computed as follows:

$$\mathrm{PI} = \frac{n - \sum_{i=1}^{n} r_i / r_{\mathrm{pref}}}{n - 1}$$

where n is the number of objects/grips, r_i the activity for object/grip i, and r_pref the activity for the preferred object/grip in the relevant epoch (VIS for objects, or R-to-G for grips). The PI can range between 0 and 1: a value of 0 indicates the same magnitude of response for all five objects, whereas a value of 1 indicates a response for only one object.

The percentage discharge difference between the best and second-best objects was computed as 100 × (r_best − r_second-best)/r_best. Similarly, the best–worst difference was estimated as 100 × (r_best − r_worst)/r_best.
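The preference index and the two percentage differences are simple functions of the mean response per object (or grip) in the relevant epoch. The sketch assumes non-negative mean rates with a positive maximum, which holds here because inhibited and low-rate cells were excluded; the function names are hypothetical.

```python
def preference_index(rates):
    """PI = (n - sum(r_i / r_pref)) / (n - 1), after Moody and Zipser (1998)."""
    n = len(rates)
    r_pref = max(rates)  # response to the preferred object/grip
    return (n - sum(r / r_pref for r in rates)) / (n - 1)


def percent_differences(rates):
    """Best vs second-best and best vs worst differences, in % of the best response."""
    ordered = sorted(rates, reverse=True)
    best, second, worst = ordered[0], ordered[1], ordered[-1]
    return (best - second) * 100 / best, (best - worst) * 100 / best
```

For mean rates of 20, 10, 10, 0, and 0 spikes/s, the PI is (5 − 2)/4 = 0.75, and the best–second-best and best–worst differences are 50% and 100%.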

Comparisons of the distributions of the same index between the different epochs of interest have been performed using the Kolmogorov–Smirnov test (p < 0.05). All the analyses were performed using custom scripts in Matlab (Mathworks).
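The Kolmogorov–Smirnov comparison uses the largest vertical distance between the two empirical cumulative distributions of an index. The sketch below computes only that statistic, D; the p-value could be obtained from a library routine such as `scipy.stats.ks_2samp`. The function name is hypothetical.

```python
from bisect import bisect_right


def ks_statistic(x, y):
    """Two-sample Kolmogorov-Smirnov D: largest gap between the empirical CDFs."""
    xs, ys = sorted(x), sorted(y)
    d = 0.0
    for v in sorted(set(xs) | set(ys)):  # the ECDFs only change at observed values
        fx = bisect_right(xs, v) / len(xs)
        fy = bisect_right(ys, v) / len(ys)
        d = max(d, abs(fx - fy))
    return d
```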

To compare the object selectivity of the same cell in the two task epochs considered in the Reach-to-Grasp task (VIS and R-to-G), we compared the PI of the same cell in the two different epochs by estimating confidence intervals on the preference indices using a bootstrap test. Synthetic response profiles were created by drawing N firing rates (with replacement) from N repetitions of experimentally determined firing rates. The PI was recomputed using N firing rates. Ten thousand iterations were executed, and confidence intervals were estimated as the range that delimited 95% of the computed indices (Batista et al., 2007).
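The bootstrap on the preference index can be sketched as follows, under assumptions: each object/grip contributes its N per-trial rates, each resample draws N rates with replacement for every condition before recomputing the PI, the 95% interval is taken from the 2.5th and 97.5th percentiles, and two epochs are judged different when their intervals do not overlap (the text does not state the decision rule). Names are hypothetical.

```python
import random


def preference_index(rates):
    """Moody & Zipser (1998) PI from mean rates; 0 if no condition has a positive rate."""
    r_pref = max(rates)
    if r_pref <= 0:
        return 0.0
    n = len(rates)
    return (n - sum(r / r_pref for r in rates)) / (n - 1)


def bootstrap_pi_ci(trials_by_condition, n_boot=10_000, alpha=0.05, seed=0):
    """Percentile bootstrap CI of the PI; one list of per-trial rates per object/grip."""
    rng = random.Random(seed)
    pis = []
    for _ in range(n_boot):
        means = []
        for trials in trials_by_condition:
            sample = rng.choices(trials, k=len(trials))  # N draws with replacement
            means.append(sum(sample) / len(sample))
        pis.append(preference_index(means))
    pis.sort()
    lower = pis[int(alpha / 2 * n_boot)]
    upper = pis[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


def ci_overlap(ci_a, ci_b):
    """True if two (lower, upper) intervals overlap."""
    return ci_a[0] <= ci_b[1] and ci_b[0] <= ci_a[1]
```

An epoch comparison would call `bootstrap_pi_ci` once with VIS rates and once with R-to-G rates for the same cell and then check `ci_overlap` on the two intervals.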

To assess how the neurons encode the similarity among the five objects/grips, we performed hierarchical cluster analysis (HCA) and multidimensional scaling (MDS) using the SPSS software. For these analyses, the average firing rate for each object/grip was taken into account (see Fig. 8).

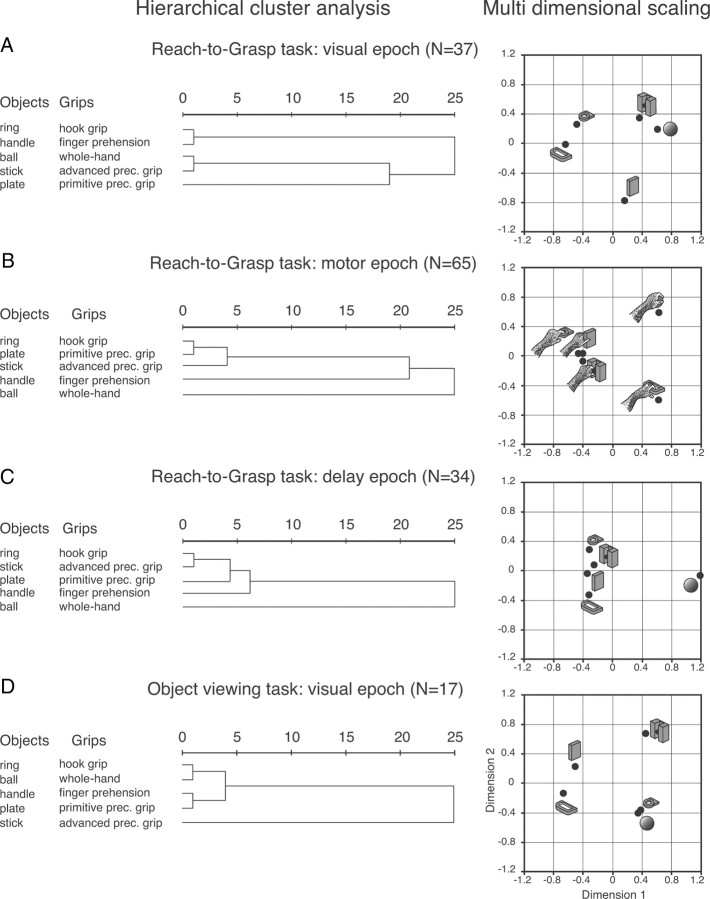

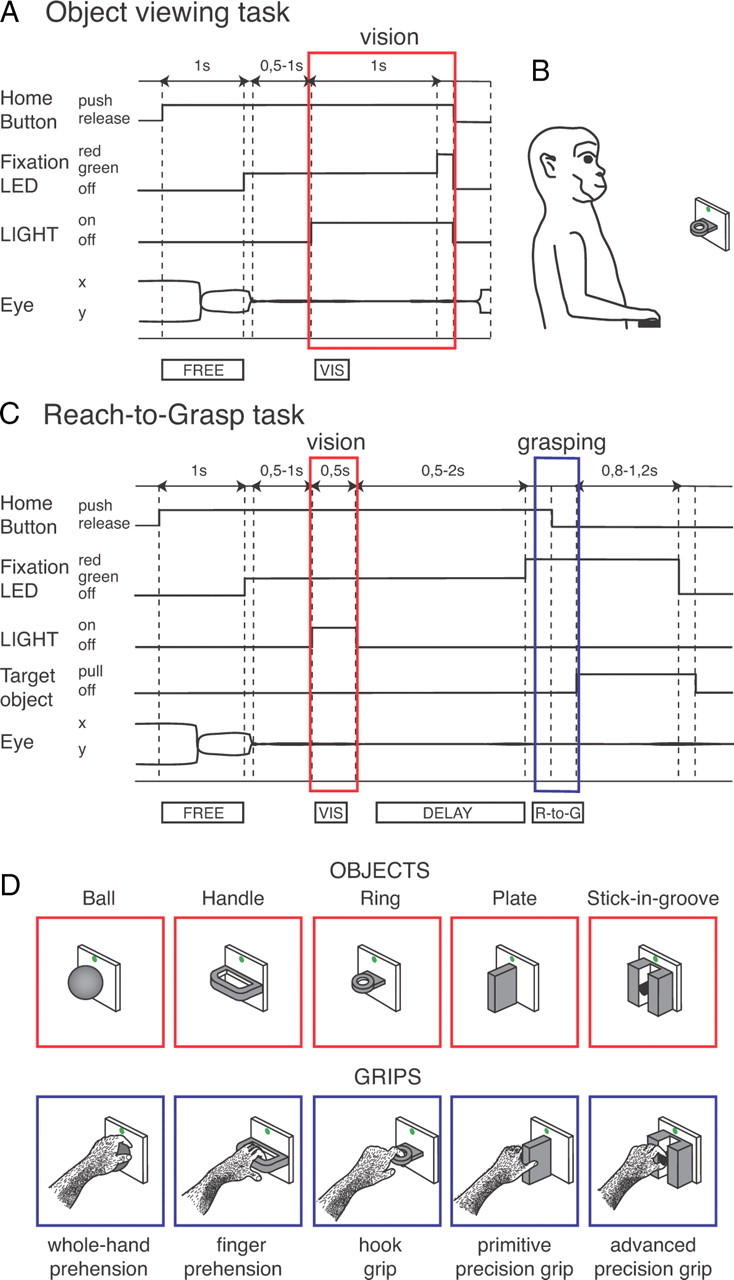

Figure 8.

Dendrograms and two-dimensional maps illustrating the results of the HCA and MDS, respectively. Horizontal axis in the dendrogram indicates the distance coefficients at each step of the hierarchical clustering solution (see Materials and Methods, Data analysis). Actual distances have been rescaled to the 0–25 range. A–C, Reach-to-Grasp task. D, Object viewing task. Visuomotor encoding of the objects in object presentation (A) changes to a motor encoding in the reach-to-grasp execution (B). This change of encoding starts in the delay (C). In the Object viewing task (D), the encoding is purely visual.

At the first step of the HCA, each object/grip represents its own cluster, and the similarities between objects/grips are defined by a measure of cluster distance. In the present study we used the squared Euclidean distance, which places progressively greater weight on objects/grips that are further apart. On the basis of the calculated distance, the two closest clusters are merged to form a new cluster replacing the two old ones. The distances between this new cluster and the remaining ones are determined by a linkage rule. In the present study, the complete linkage rule was used: the dissimilarity between cluster A and cluster B is the maximum of all possible distances between the cases in cluster A and the cases in cluster B. Dendrograms show the steps of the hierarchical clustering solution, that is, which clusters are combined and the value of the distance coefficient at each step. Connected vertical lines designate joined cases. The dendrogram rescales the actual distances to numbers between 0 and 25, preserving the ratio of the distances between steps.
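Complete-linkage clustering on squared Euclidean distances can be written in a few lines. In this sketch each object/grip is a vector of mean firing rates across neurons, the toy numbers in the usage note are invented for illustration (they are not data from this study), and the 0–25 rescaling simply multiplies each merge height by 25/(largest height), which preserves ratios as described above; the exact rescaling used by SPSS may differ. Names are hypothetical.

```python
from itertools import combinations


def sq_euclidean(u, v):
    """Squared Euclidean distance between two population vectors."""
    return sum((a - b) ** 2 for a, b in zip(u, v))


def complete_linkage(profiles):
    """Agglomerative clustering; profiles maps label -> mean rate of each neuron.

    Returns the merges in order as (cluster_a, cluster_b, distance).
    """
    labels = list(profiles)
    d = {}
    for a, b in combinations(labels, 2):
        d[a, b] = d[b, a] = sq_euclidean(profiles[a], profiles[b])

    def dist(x, y):
        # complete linkage: the farthest pair of members defines the distance
        return max(d[a, b] for a in x for b in y)

    clusters = [(lab,) for lab in labels]
    merges = []
    while len(clusters) > 1:
        x, y = min(combinations(clusters, 2), key=lambda pair: dist(*pair))
        merges.append((x, y, dist(x, y)))
        clusters = [c for c in clusters if c not in (x, y)] + [x + y]
    return merges


def rescale(merges, top=25.0):
    """Rescale merge heights to 0..top, preserving their ratios."""
    d_max = max(h for _, _, h in merges)
    return [(x, y, h * top / d_max) for x, y, h in merges]
```

With toy profiles ball = (10, 0), handle = (11, 0), ring = (0, 10), plate = (0, 12), and stick = (5, 5), ball and handle merge first (distance 1), then ring and plate (4), then stick joins ball/handle (61), and the last merge occurs at 265.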

In an attempt to detect meaningful underlying dimensions that would help explain the clustering of the investigated objects/grips, we performed MDS using the squared Euclidean distances (which had also been used for the HCA) to obtain two-dimensional maps of the location of the objects/grips. The procedure minimized the squared deviations between the original object proximities and their squared Euclidean distances in the two-dimensional space. The low normalized raw stress (<0.001), which measures the misfit of the data, together with the amount of explained variance and Tucker's coefficient of congruence (>0.999), which measure the goodness of fit, indicated that the two-dimensional solution was appropriate (i.e., the distances in the solution approximate the original distances well).
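For the MDS step, a minimal metric MDS sketch using the SMACOF (Guttman transform) iteration with unit weights is shown below, together with normalized raw stress, Σ(δ − d)²/Σδ². This is a stand-in for the SPSS procedure, not a reproduction of it: it fits plain Euclidean configuration distances d to a supplied dissimilarity matrix δ (which could hold the squared Euclidean distances used for the HCA), and the starting configuration is supplied by the caller. Names are hypothetical.

```python
import math


def stress(delta, x):
    """Normalized raw stress: sum (delta_ij - d_ij)^2 / sum delta_ij^2 over i < j."""
    num = den = 0.0
    for i in range(len(x)):
        for j in range(i + 1, len(x)):
            d_ij = math.dist(x[i], x[j])
            num += (delta[i][j] - d_ij) ** 2
            den += delta[i][j] ** 2
    return num / den


def smacof(delta, x0, n_iter=300):
    """Metric MDS by repeated Guttman transforms (unit weights)."""
    n = len(delta)
    x = [list(p) for p in x0]
    dim = len(x[0])
    for _ in range(n_iter):
        b = [[0.0] * n for _ in range(n)]
        for i in range(n):
            for j in range(n):
                if i != j:
                    d_ij = math.dist(x[i], x[j])
                    b[i][j] = -delta[i][j] / d_ij if d_ij > 0 else 0.0
            b[i][i] = -sum(b[i][j] for j in range(n) if j != i)
        # Guttman transform: X <- (1/n) B(X) X
        x = [[sum(b[i][k] * x[k][c] for k in range(n)) / n for c in range(dim)]
             for i in range(n)]
    return x
```

Because each Guttman transform cannot increase stress, the stress of the configuration returned by `smacof` is never larger than that of the starting configuration.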

Results

To study the responses of V6A neurons to the presentation of 3D graspable objects, we used two tasks. In the Object viewing task, the object was presented to the animal while it maintained fixation. In the Reach-to-Grasp task, the object was briefly presented to the animal at the beginning of the trial and then, after a delay, the animal was required to grasp the object in darkness. The two tasks were tested in blocks of 50 trials each. The order of the two tasks was reversed from cell to cell, and the order of object presentation was randomized trial by trial within each block. The results obtained in the Object viewing task will be described first.

Object viewing task

Object sensitivity in V6A was assessed in the Object viewing task as detailed in Materials and Methods, Behavioral tasks (see the time sequence of events in Fig. 1A). This task required the monkey to maintain fixation on an LED while a graspable object, located just below the LED, was illuminated. After 1 s, the fixation LED changed color, the monkey released the home button, and the object illumination was switched off. On each trial, a different object was presented to the monkey, randomly chosen among the five mounted on a carousel in front of it. The upper part of Figure 1D shows the five objects tested.

A total of 178 neurons underwent this task; 24 of them displayed neural inhibition during object presentation (t test between FREE and VIS, p < 0.05). As area V6A has been shown to host attention-related inhibitions of cell activity (Galletti et al., 2010), the inhibition observed during object presentation could be due to attentional modulation. In any case, because inhibitory responses are difficult to quantify, the 24 inhibited cells were excluded from further analyses, leaving a neural population of 154 cells.

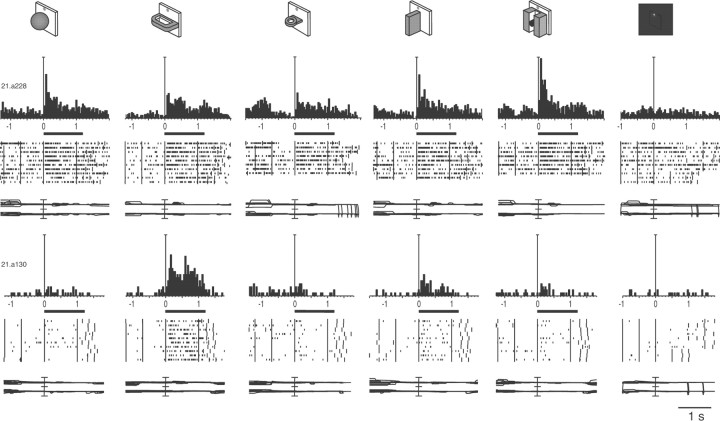

Approximately 60% of these neurons (91/154) responded during object presentation in the Object viewing task, as assessed by comparing activity between epochs VIS and FREE (Student's t test, p < 0.05), and were unresponsive to LED fixation, as assessed by comparing activity between epochs FIX and FREE during fixation in darkness (Student's t test, p > 0.05). Thirty-nine (39/154, 25%) of the neurons discharging during object presentation displayed significantly different responses to the objects tested (one-way ANOVA followed by Newman–Keuls, p < 0.05) and were defined as object-selective cells. Figure 2 shows two examples of object-selective neurons. Neither neuron responded to LED fixation in darkness (Fig. 2, far right). The selectivity of the cell illustrated in the upper row was rather broad: the neuron displayed the maximum response to the presentation of the stick-in-groove, weaker responses to the ball and the plate, and a significantly lower discharge for the handle and the ring (F(4,40) = 2.8250, p < 0.05). The neuron presented in the lower row of Figure 2 was, on the contrary, highly selective: its discharge was maximal during the presentation of the handle and very weak during the presentation of all the remaining objects (F(4,45) = 20.9946, p ≪ 0.0001).

Figure 2.

Object selectivity in the Object viewing task. Top, objects tested (first five responses) and condition of LED fixation in darkness (rightmost condition). Bottom, two example V6A neurons selective for objects. Activity is shown as peristimulus time histograms (PSTHs) and raster displays of impulse activity. Below each display is a record of horizontal (upper trace) and vertical components (lower trace) of eye movements. Neural activity and eye traces are aligned (long vertical line) on the onset of the object illumination. Long vertical ticks in raster displays are behavioral markers, as indicated in Figure 1A. Both cells are selective for objects, the first preferring the vision of the stick-in-groove, the second of the handle. The tuning is sharper for the second, as it was not activated by 3 of the 5 objects. Black bars below PSTH indicate time of object illumination. Vertical scale bars on histograms: top, 160 spikes/s; bottom, 50 spikes/s. Eye traces: 60°/division.

For most neurons, the response to object presentation was not confined to a transient activation at the onset of object illumination but lasted for the entire illumination period (see the long-lasting responses to the stick-in-groove and to the handle of the neurons illustrated in the top and bottom rows of Fig. 2, respectively).

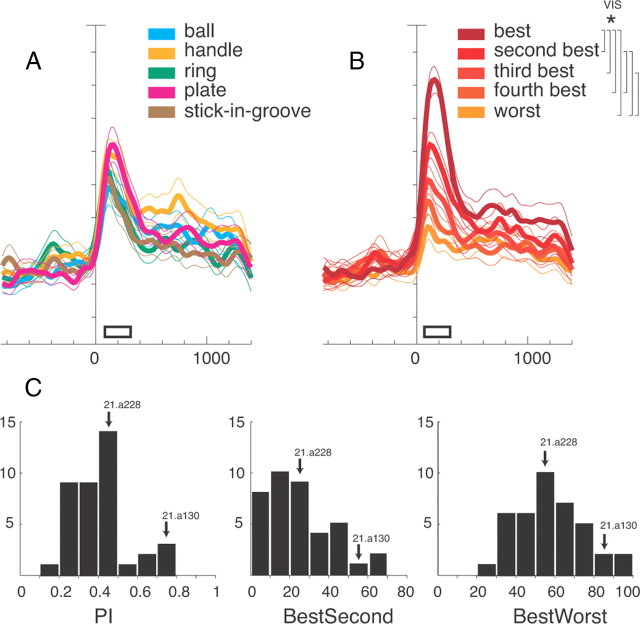

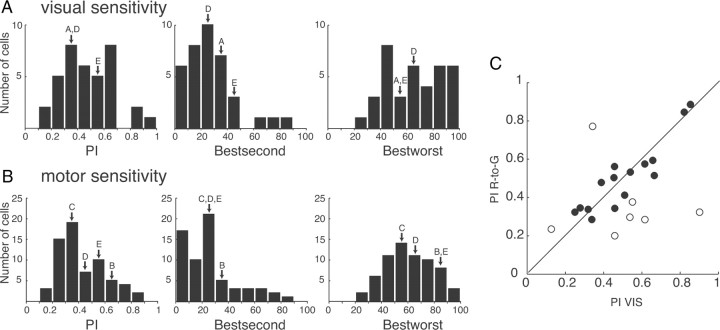

To examine the representation of objects in the entire cell population, we calculated the average normalized SDFs of the cell population for each of the five objects presented (Fig. 3A). The prominent feature of the population as a whole is that the temporal profile and intensity of discharge are similar for the five objects (permutation test, p > 0.05). To examine the capacity of the object-selective cell population to discriminate between the different objects, we plotted the population SDFs for each of the five objects tested, ranking neural activity according to each cell's preference (Fig. 3B). Figure 3B shows that the best response to object presentation (whatever the object), the second best response, the third, and so on, down to the fifth (worst) response, are uniformly scaled from the maximum discharge rate to the resting level (significant differences are reported in the top right corner of Fig. 3). The five curves peak ∼200 ms after object illumination. The activity then decreases and, from ∼400 ms after response onset, a tonic discharge proportional to the intensity of the transient response continues for the remainder of object presentation. To determine when neural selectivity for objects emerged, we calculated the time of divergence between the population responses to the “preferred” object (Fig. 3B, darkest curve) and to the “worst” object (Fig. 3B, lightest curve). The neural population discriminated between these two extreme objects 60 ms after the onset of object illumination (p < 0.0001).
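The preference ranking behind Figure 3B can be illustrated with a short sketch. The details are our assumptions, not the study's procedure: each cell's SDF is a list of per-bin firing rates for each object, preference is ranked by mean activity within a hypothetical VIS window given as bin indices, and normalization divides by the peak of that cell's strongest SDF (the actual normalization is described in Materials and Methods).

```python
def rank_and_normalize(cell_sdfs, vis_bins):
    """Reorder one cell's SDFs from best to worst object and scale to its peak.

    cell_sdfs: dict mapping object name -> firing rate per time bin (spikes/s).
    vis_bins: (start, stop) bin indices of the window used to rank preferences.
    """
    start, stop = vis_bins
    ranked = sorted(cell_sdfs.values(),
                    key=lambda sdf: sum(sdf[start:stop]) / (stop - start),
                    reverse=True)
    peak = max(max(sdf) for sdf in ranked) or 1.0  # guard against a silent cell
    return [[rate / peak for rate in sdf] for sdf in ranked]


def population_ranked_average(cells, vis_bins):
    """Average rank-ordered, normalized SDFs across cells: row 0 = best, last = worst."""
    per_cell = [rank_and_normalize(cell, vis_bins) for cell in cells]
    n_ranks, n_bins = len(per_cell[0]), len(per_cell[0][0])
    return [[sum(cell[r][b] for cell in per_cell) / len(per_cell) for b in range(n_bins)]
            for r in range(n_ranks)]
```

The design choice matters for interpretation: because each cell contributes its own preferred object to the "best" curve, whatever that object is, the ranked averages separate even when the object-sorted averages (Fig. 3A) overlap.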

Figure 3.

Selectivity to objects in the Object viewing task: population data. A, B, Activity of object-selective V6A neurons (N = 39) expressed as averaged normalized SDFs (thick lines) with variability bands (SEM; light lines), sorted by object (A) or ranked in descending order of the intensity of the response elicited in the VIS epoch (B; from darker red to lighter red). Each cell of the population has been taken into account five times, once for each object. Neuronal activities are aligned with the onset of object illumination. Scale on abscissa: 200 ms/division; vertical scale: 10% of normalized activity/division. White bars, duration of the epoch used for statistical comparisons (VIS). Vertical brackets, significant comparisons in the permutation test (p < 0.05). Significant differences: in A, none; in B, best with all, second with fourth and worst, third with worst. The many significant differences indicate that V6A object-selective neurons are able to discriminate the different object types. C, Visual selectivity in V6A (N = 39). Distribution of the preference index (left), percentage discharge difference between best and second best objects (center), and percentage discharge difference between best and worst objects (right). Ordinate, number of cells. The arrows indicate the two examples shown in Figure 2.

To quantify the selectivity of V6A neurons to object presentation, we calculated a PI (see Materials and Methods, Data analysis) as well as the percentage differences between (1) the best and the second best and (2) the best and the worst responses to object presentation (Fig. 3C). For these calculations, the entire neuronal firing rate was used. The distributions of the PI and of the percentage differences were unimodal. PI ranged from 0.12 to 0.79 (average 0.42 ± 0.16), with the majority of neurons at intermediate values (0 = equal response to all objects; 1 = response to one object only). The cells presented in the upper and lower rows of Figure 2 are examples of broadly tuned (21.a228) and sharply tuned (21.a130) cells, respectively. The object-selective neurons showed average discharge differences of 29.95 ± 16.83% between the best and the second best object (Fig. 3C, center) and of 57.12 ± 16.79% between the best and the worst object (Fig. 3C, right), with some neurons approaching 100%.
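The exact PI formula is given in Materials and Methods and is not reproduced in this section. As an assumption, the sketch below uses a common preference index, PI = (n − Σ r_i / r_max) / (n − 1), which matches the endpoints stated above (0 for equal responses to all objects, 1 for a response to one object only). The percentage differences are computed relative to the best response, which is also our assumption.

```python
def preference_index(responses):
    """Assumed PI = (n - sum(r_i / r_max)) / (n - 1): 0 = equal, 1 = one object only.

    responses: mean firing rate for each object (one value per object).
    """
    n = len(responses)
    r_max = max(responses)
    if n < 2 or r_max <= 0:
        raise ValueError("need at least two conditions and a positive maximum")
    return (n - sum(r / r_max for r in responses)) / (n - 1)


def percent_differences(responses):
    """Percentage drop from the best response to the second best and to the worst."""
    ordered = sorted(responses, reverse=True)
    best, second, worst = ordered[0], ordered[1], ordered[-1]
    return 100 * (best - second) / best, 100 * (best - worst) / best
```

With made-up rates of 40, 30, 20, 10, and 8 spikes/s, `percent_differences` gives 25% (best vs second best) and 80% (best vs worst).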

Reach-to-Grasp task

Given that area V6A belongs to the dorsal visual stream (Galletti et al., 2003) and is rich in reaching and grasping neurons (Fattori et al., 2005, 2010), it is reasonable to hypothesize that part of the object-selective neurons could also be modulated by reaching and grasping movements directed toward these objects. To test this hypothesis, we used the Reach-to-Grasp task in which the monkey had to reach for and grasp in darkness an object that was briefly presented at the beginning of each trial.

With this task, we aimed to explore whether single V6A neurons encode both the features of an object and the type of grip used to grasp it, and to establish the relationship between object and grip encoding when object presentation and grasping occur in the same trial.

We recorded the activity of 222 V6A neurons from the same two monkeys used for the Object viewing task. As shown in Figure 1C, the Reach-to-Grasp task required the monkey to fixate on an LED while an object located just below the LED was briefly illuminated. The task then continued in complete darkness, apart from the fixation point, which was barely visible and did not illuminate the object (see Materials and Methods, Behavioral tasks). After a variable delay, the monkey performed a reach-to-grasp movement that transported the hand from a position near the body to the object, and then grasped and held it. On each trial, one of the five objects mounted on the carousel, chosen at random, was presented to the monkey, each object requiring a different grip type (Fig. 1D, bottom). The grips ranged from the most rudimentary (whole-hand prehension) to the most skilled (advanced precision grip).

According to our video-based analysis of grip evolution during the grasping movement, the mean distance between the index finger and the thumb (grip aperture) started to increase soon after the movement toward the target began (at ∼25% of the total approach phase duration), similar to what has been reported for human subjects (Haggard and Wing, 1998; Winges et al., 2004). Grip aperture continued to increase until the hand was a few centimeters from the object and then decreased until contact. Maximum grip aperture occurred at ∼75% of the approach phase duration. A similar pattern has previously been observed in other kinematic studies in monkeys and humans (Jeannerod, 1981; Wing et al., 1986; Marteniuk et al., 1987; Roy et al., 2002). Together, these observations demonstrate that our monkeys did not preshape the hand at the end of the approach phase, but started hand preshaping while the approach component of the prehension action was still evolving.
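The kinematic landmarks above are expressed as fractions of the approach phase. A minimal sketch, assuming grip aperture is available as a uniformly sampled list of index–thumb distances running from movement onset (first sample) to object contact (last sample). The onset criterion here (aperture exceeding its starting value by 10% of the start-to-peak range) is a hypothetical choice, not the criterion used in the study.

```python
def aperture_landmarks(aperture, onset_fraction_of_range=0.1):
    """Return (onset, peak) times of grip aperture as fractions of the approach phase.

    aperture: index-thumb distances sampled uniformly from movement onset
    (first sample) to object contact (last sample).
    Onset (hypothetical criterion): first sample whose aperture exceeds the
    initial value by more than `onset_fraction_of_range` of the initial-to-peak range.
    """
    last = len(aperture) - 1
    peak_idx = max(range(len(aperture)), key=lambda i: aperture[i])
    threshold = aperture[0] + onset_fraction_of_range * (aperture[peak_idx] - aperture[0])
    onset_idx = next((i for i in range(peak_idx + 1) if aperture[i] > threshold), peak_idx)
    return onset_idx / last, peak_idx / last
```

Applied to a synthetic trace that stays flat for the first quarter of the approach, opens to a peak at three quarters, and then closes until contact, the function returns (0.25, 0.75), the same proportions described in the text.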

Of the 222 recorded neurons, 32 were inhibited during object presentation relative to FREE (t test, p < 0.05). As in the Object viewing task, these cells were excluded from further analyses.

In the remaining 190 neurons, we assessed the visual sensitivity by comparing the activity during VIS and FREE epochs (t test, p < 0.05). We found that 114 of 190 cells (60%) were sensitive to the object presentation (visual neurons), an incidence similar to that found in the Object viewing task.

We analyzed the latency of the visual responses in both tasks. The mean visual response latency was 80.72 ± 38.45 ms in the Object viewing task and 80.01 ± 41.82 ms in the Reach-to-Grasp task, with similar distributions of values in the two tasks (Kolmogorov–Smirnov test, n.s.).
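The latency comparison relies on the two-sample Kolmogorov–Smirnov test, whose statistic D is the largest vertical gap between the two empirical cumulative distribution functions. The dependency-free sketch below computes D only; the p-value is omitted and would normally come from a statistics library (e.g., `scipy.stats.ks_2samp`). Any latency values passed to it here are illustrative, not the recorded data.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov D = max |ECDF_a(x) - ECDF_b(x)|."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    for x in a + b:  # the supremum is attained at one of the observed values
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d
```

D is 0 for identical samples and 1 for non-overlapping ones, so two similar latency distributions, like those reported above, yield a small D.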

Visual, motor, and visuomotor neurons in V6A

We defined as task-related those cells that, in the Reach-to-Grasp task, displayed a significant modulation in epoch VIS and/or epoch reach-to-grasp compared with epoch FREE (t test, p < 0.05). Task-related cells represented the vast majority of the recorded neurons (152/190; 80%). Eighty of 152 neurons were selective for objects and/or grips as assessed by one-way ANOVA followed by Newman–Keuls post hoc test (p < 0.05), as reported in Table 1.

Table 1.

Classification and selectivity of task-related neurons

| Responsivity to task | Task-related neurons (n) | % | Selectivity | Selective (n) | Selective (%) | Nonselective (n) | Nonselective (%) |
|---|---|---|---|---|---|---|---|
| Visual | 26 | 17 | Object | 7 | 9 | 19 | 26 |
| Motor | 38 | 25 | Grip | 18 | 22 | 20 | 28 |
| Visuomotor | 88 | 58 | | 55 | 69 | 33 | 46 |
| | | | Object | 8 | 10 | | |
| | | | Grip | 25 | 31 | | |
| | | | Object and grip | 22 | 28 | | |
| Total | 152 | | | 80 | | 72 | |

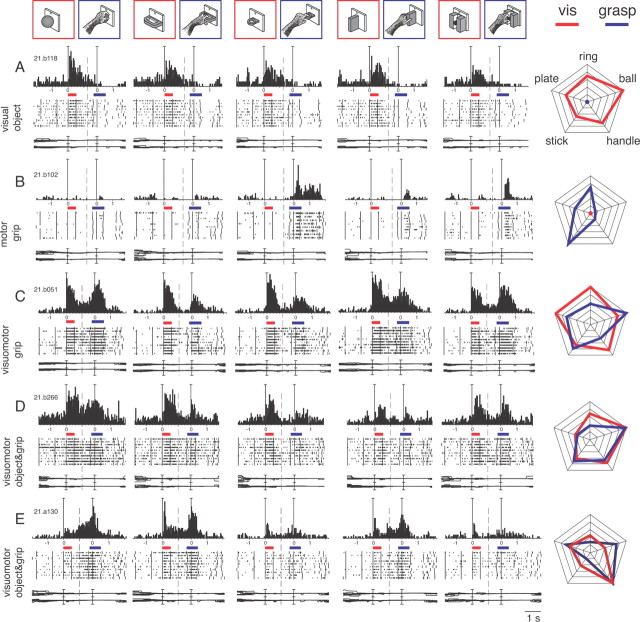

Based on the neuronal activity in epochs VIS and R-to-G, the task-related cells were subdivided into three classes: visual (n = 26), motor (n = 38), and visuomotor (n = 88). Of these, 72 neurons (19 visual, 20 motor, and 33 visuomotor) did not display any selectivity (Table 1). Note that this classification differs from the one used in previous studies of area AIP (e.g., Murata et al., 2000).
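The classification rule can be written as a small function. A minimal sketch, assuming each cell is summarized by four hypothetical boolean flags: significant modulation in VIS and in R-to-G versus FREE (t test), and significant object or grip selectivity (ANOVA). As in Table 1, object selectivity is only assigned to cells with a visual response and grip selectivity only to cells with a motor response.

```python
def classify_cell(vis_mod, rtg_mod, object_sel, grip_sel):
    """Return (class, selectivity) for a task-related cell, or None if not task-related.

    vis_mod / rtg_mod: significant VIS or R-to-G modulation versus FREE (hypothetical flags).
    object_sel / grip_sel: significant ANOVA across objects (VIS) or grips (R-to-G).
    """
    if vis_mod and rtg_mod:
        cls = "visuomotor"
    elif vis_mod:
        cls = "visual"
    elif rtg_mod:
        cls = "motor"
    else:
        return None  # not task-related
    # Object selectivity requires a visual response; grip selectivity a motor one.
    obj = object_sel and vis_mod
    grip = grip_sel and rtg_mod
    if obj and grip:
        sel = "object and grip"
    elif obj:
        sel = "object"
    elif grip:
        sel = "grip"
    else:
        sel = "nonselective"
    return cls, sel
```

For instance, a cell modulated in both epochs but selective only during grasping is returned as `("visuomotor", "grip")`, the subcategory called "visuomotor grip" cells in the following paragraphs.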

Typical examples of neurons belonging to the different classes are shown in Figure 4. Visual neurons (Fig. 4A) responded to object presentation and were unmodulated during grasping execution. They represented a minority of the task-related cells (17%) and only a fourth of them (7/26) were selective for objects (Table 1). The cell shown in Figure 4A had weak, although significant, visual selectivity. It preferred the ball and showed the weakest discharge to the presentation of the stick-in-groove (Fig. 4A, right, radar plot; F(4,45) = 7.4127, p < 0.01), but it discharged to every object presented. During the execution of reach-to-grasp action, this neuron was unresponsive regardless of the type of grip used. Other visual cells preferred different objects but, similarly to the cell in Figure 4A, were not activated by the execution of reach-to-grasp action.

Figure 4.

Typologies of selective neurons found in V6A with the Reach-to-Grasp task. Top, objects and types of grips. A, Example of a visual neuron encoding objects. The two colored bars indicate the time of object illumination (red) and reach-to-grasp execution (blue). Activity has been aligned twice, on object illumination and on movement onset. The cell displays visual selectivity for objects but no motor response for any grip. All conventions are as in Figures 1 and 2. Vertical scale on histogram: 43 spikes/s; time scale: 1 tick = 1 s. Eye traces: 60°/division. B, Motor neuron encoding grips, showing no visual response to any of the five objects and strong tuning of motor responses. Vertical scale on histogram: 80 spikes/s. C, Visuomotor neuron encoding grips. The strong visual response is not selective for objects, but the motor response is modulated according to the grip performed. Vertical scale on histogram: 70 spikes/s. D, E, Visuomotor neurons encoding objects and grips. Both cells discharge during object vision and R-to-G execution; their visual responses are tuned to objects and their motor discharges to grips, with different preferences in the two cells. Vertical scale on histogram: 80 spikes/s (D), 58 spikes/s (E). Right column, radar plots of the responses of the cells shown in A–E. For radar plots C–E, activity has been normalized for each epoch separately. The correspondence between radar-plot axes and objects or grips is indicated on the top plot. Visual activity, red lines; motor activity, blue lines.

Motor neurons (Fig. 4B) discharged during the execution of the reach-to-grasp action in darkness and were not modulated by object presentation. This class represented 25% of task-related neurons, and almost half of them (18/38) were selective for grips (Table 1). The cell in Figure 4B is a highly specific motor neuron that discharged intensely during execution of the hook grip and weakly or not at all during execution of the other grip types (Fig. 4B, right, radar plot; F(4,43) = 15.3535, p ≪ 0.0001). The cell showed no sign of activation during the presentation of any object. It is worth noting that, across all classes, grip-selective neurons (n = 65) were approximately twice as numerous as object-selective ones (n = 37; Table 1).

Visuomotor neurons constituted the largest class of task-related neurons (58%; Table 1). They discharged during both object presentation and action execution in the dark. Visuomotor neurons were selective for both object and grip (22/88), for object only (8/88), or for grip only (25/88). Three examples of visuomotor cells are shown in Figure 4C–E. The cell in Figure 4C discharged vigorously during object presentation, but the responses were similar across objects, so the neuron was classified as nonselective for objects (F(4,45) = 2.1580, p = 0.09). In contrast, its motor-related responses depended specifically on grip type (F(4,45) = 6.9269, p < 0.0001). In particular, the firing rate of this neuron was maximal when the animal performed the whole-hand prehension (grasping the ball), weaker during the advanced precision grip, and weaker still during the other grips (Fig. 4C, right, radar plot). Visuomotor neurons that exhibited nonselective responses to object presentation and selective motor discharges were called “visuomotor grip” cells. This was the most abundant subcategory of selective neurons in our population (25/80, 31%; Table 1). Visuomotor neurons with the reverse profile (i.e., selectivity for object presentation and lack of selectivity for grip execution) were called “visuomotor object” cells and represented only 10% of the selective cell population (8/80; Table 1).

Visuomotor cells that selectively encoded both the objects presented to the animal and the grips used to grasp them were called “visuomotor object and grip” cells (Table 1). This subcategory represents 28% of the selective population (22/80). Figure 4D,E shows two examples of these cells. In the neuron presented in Figure 4D, both the presentation of the ball and the execution of the whole-hand prehension evoked maximal activation (VIS: F(4,45) = 4.3089, p < 0.01; R-to-G: F(4,45) = 12.1146, p ≪ 0.0001). In contrast, the presentation of the plate and its grasping evoked the weakest responses. The radar plot in the right part of Figure 4D shows that the object and grip selectivity of this neuron are highly congruent.

The neuron presented in Figure 4E is another highly selective visuomotor object and grip cell: the object that evoked the strongest activity during its presentation (the handle; F(4,45) = 7.3332, p < 0.001) also evoked the strongest activity during its grasping (F(4,45) = 40.4686, p ≪ 0.0001). We found this type of congruence in 16 of the 22 visuomotor object and grip cells. Notably, this neuron displayed the same object selectivity when object presentation was not followed by a grasping action (Object viewing task; Fig. 2, bottom).

To examine the representation of objects and grips in the whole V6A population, we computed the average normalized SDFs for each of the objects and grips tested. For this purpose, we used the responses to object presentation of all neurons selective for objects (visual, visuomotor object, and visuomotor object and grip) and the motor responses of all neurons selective for grips (motor, visuomotor grip, and visuomotor object and grip). The average normalized SDFs for each of the five objects and grips are illustrated in Figure 5A,B, respectively. Figure 5A shows that the temporal profile and intensity of the discharges are similar for the five objects, and Figure 5B shows the same for the five grips. In other words, V6A shows no clear bias for a specific object or a specific grip. Individual neurons do show clear object and/or grip preferences (Fig. 4), but these individual preferences evidently offset one another at the population level, so that all tested objects and grips are approximately equally represented.
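The sizes of the two pooled populations used for Figure 5 follow directly from Table 1. The arithmetic is shown below for transparency, with counts copied from Table 1; it also makes explicit the approximately twofold excess of grip-selective over object-selective cells.

```python
# Selective cell counts per (class, selectivity) subcategory, copied from Table 1.
table1_selective = {
    ("visual", "object"): 7,
    ("motor", "grip"): 18,
    ("visuomotor", "object"): 8,
    ("visuomotor", "grip"): 25,
    ("visuomotor", "object and grip"): 22,
}

# A cell enters the object pool if its selectivity includes objects,
# and the grip pool if its selectivity includes grips ("object and grip" cells enter both).
object_pool = sum(n for (_, sel), n in table1_selective.items() if "object" in sel)
grip_pool = sum(n for (_, sel), n in table1_selective.items() if "grip" in sel)

print(object_pool, grip_pool, round(grip_pool / object_pool, 2))  # 37 65 1.76
```

The 22 visuomotor object and grip cells are counted in both pools, which is why 37 + 65 exceeds the 80 selective cells.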

Figure 5.

Object and grip responses in the V6A population. A, C, All neurons showing object selectivity (N = 37): visual neurons encoding objects, visuomotor object, and visuomotor object and grip neurons. A, Each color is the activity of all neurons for a given object; C, response of each neuron to each object, in descending order of the intensity of the response elicited in the VIS epoch. All conventions are as in Figure 3A. Activity aligned at object illumination onset. Scale on abscissa: 200 ms/division; vertical scale: 10% of normalized activity/division. A, Permutation tests all n.s. except handle versus ring and ring versus plate. C, Permutation tests all p < 0.05 except third best object versus fourth, and fourth versus worst, as indicated by the square brackets. B, D, All neurons with motor selectivity (N = 65): all motor grip, visuomotor grip, and visuomotor object and grip neurons. B, Each color is the activity of all neurons for a given grip. D, Response of each neuron to each grip, in descending order of the intensity of the response elicited in the reach-to-grasp epoch. B, Permutation tests all n.s. except finger prehension versus hook grip. D, Permutation tests all p < 0.05 except second best grip versus third best. White bars indicate the periods in which the permutation test was performed: VIS and R-to-G. A and B show that the population has no clear preference for any object or grip; C and D show that the V6A population discriminates objects and grips.

A different result is obtained when neural activity is ranked according to the strength of the neuronal response, independently of the object (Fig. 5C) or the grip (Fig. 5D) evoking that response. As Figure 5C shows, the best, second best, third, and so on, down to the fifth (worst) response to object presentation are uniformly scaled from the maximum discharge rate to the resting level. Almost all of the five curves differ significantly from one another (Fig. 5C, top right, significant differences). Object selectivity is rather strong, with the best discharge more than double the worst. As in the Object viewing task, this selectivity started to rise 60 ms after the onset of object illumination (p < 0.0001). Thus, the cell population discriminates among the objects presented before the grasping action is executed.

Figure 5D shows a clear distinction among the activations occurring during execution of the different reach-to-grasp actions. Notably, the curves are already separated before movement onset: divergence of the best from the worst population discharge occurred 620 ms before movement onset (p < 0.0001), during the delay period, when different motor plans were likely being prepared according to the type of object presented to the monkey. The video monitoring of hand movements, which showed an early onset of grip aperture during object approach, suggests that this tuning of delay-related activity reflects motor plans that adapt the upcoming hand movement to the intrinsic features of the object.
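This section does not detail how the divergence time was computed (see Materials and Methods). As a hypothetical illustration only, the sketch below tests, bin by bin, whether the best-minus-worst difference across cells differs from zero using a paired sign-flip permutation test, and reports the first bin that starts a run of consecutive significant bins. The input layout, run length, alpha, and number of permutations are all assumptions.

```python
import random


def sign_flip_p(diffs, rng, n_perm=1000):
    """Two-sided paired permutation p-value for mean(diffs) != 0 (random sign flips)."""
    observed = abs(sum(diffs) / len(diffs))
    hits = 0
    for _ in range(n_perm):
        flipped = sum(d if rng.random() < 0.5 else -d for d in diffs) / len(diffs)
        if abs(flipped) >= observed:
            hits += 1
    return (hits + 1) / (n_perm + 1)


def divergence_bin(best, worst, alpha=0.05, run=3, seed=0):
    """First bin that starts `run` consecutive significant bins, or None.

    best, worst: one list per cell of normalized activity per time bin for that
    cell's preferred and least-preferred condition (hypothetical input layout).
    """
    rng = random.Random(seed)
    n_bins = len(best[0])
    significant = []
    for b in range(n_bins):
        diffs = [cell_best[b] - cell_worst[b] for cell_best, cell_worst in zip(best, worst)]
        significant.append(sign_flip_p(diffs, rng) < alpha)
    for b in range(n_bins - run + 1):
        if all(significant[b:b + run]):
            return b
    return None
```

Multiplying the returned bin index by the bin width and adding the start of the analysis window relative to the alignment event would convert it into a latency, positive after object illumination or negative before movement onset.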

As shown in Figure 5D, the motor-related discharge starts ∼100 ms before movement onset, and maximal curve separation (encoding) is achieved during movement execution (almost all permutation tests are significant in the reach-to-grasp epoch; for a similar result, see also Fattori et al., 2010). Together, the SDFs in Figure 5 indicate that V6A cells are able to discriminate both the different objects and the different grips required to grasp those objects.

As in the Object viewing task, the selectivity of V6A neurons to object presentation in the Reach-to-Grasp task was quantified by calculating a PI as well as the normalized discharge differences between the best and the second best object and between the best and the worst object (see Materials and Methods, Data analysis). The PIs of V6A neurons recorded during object presentation in the Reach-to-Grasp task ranged from 0.13 to 0.90 (mean ± SD, 0.47 ± 0.19), and their distribution (Fig. 6A) is very similar to that obtained in the Object viewing task (Fig. 3C; Kolmogorov–Smirnov test, n.s.). The majority of neurons (24/38) showed a difference of >20% between the best and the second best response (average 26.86 ± 19.60%), while the difference between the best and the worst object was >20% in all cases (average 65.82 ± 21.72%).

Figure 6.

Strength of object and grip encoding in the entire population of selective V6A neurons. A, Object selectivity (VIS); B, grip selectivity (R-to-G). A, B, Arrows indicate the cells shown in Figure 4. All conventions are as in Figure 3C. C, Similarity of the degree of selectivity in VIS and R-to-G: PIs calculated for VIS and R-to-G for the visuomotor object and grip neurons (N = 22). Each point represents one neuron. Closed circles, neurons whose bootstrap-estimated confidence intervals cross the diagonal; open circles, neurons whose bootstrap-estimated confidence intervals do not cross the diagonal. Most of the visuomotor object and grip neurons show a similar degree of selectivity in the two epochs of the grasping task.

The motor selectivity of V6A neurons in the Reach-to-Grasp task was similarly quantified and the results are reported in Figure 6B. The distributions of the PIs and the differences obtained during object presentation are not statistically different from the corresponding ones obtained during grip execution (Kolmogorov–Smirnov tests, n.s.). Thus, motor encoding is as selective as object encoding.

To examine the degree of object and grip selectivity in the visuomotor object and grip cells, we compared the PIs in the VIS and R-to-G epochs, as shown in Figure 6C. Proximity to the diagonal, whose significance was assessed with a bootstrap procedure, measures the similarity of selectivity in the two epochs. This analysis showed that the vast majority of visuomotor object and grip neurons have similar degrees of selectivity during object vision and during reach-to-grasp execution, as reflected in the corresponding PIs.
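The bootstrap test of proximity to the diagonal can be sketched as follows. The details are assumptions, not taken from the text: trials are resampled with replacement within each object or grip condition, the PI is taken to be (n − Σ r_i / r_max) / (n − 1) (restated in the block so that it is self-contained), and a neuron "crosses the diagonal" when the 95% percentile interval of PI(VIS) − PI(R-to-G) contains zero.

```python
import random


def preference_index(responses):
    """Assumed PI = (n - sum(r_i / r_max)) / (n - 1); 0 if the cell is silent."""
    n, r_max = len(responses), max(responses)
    if r_max <= 0:
        return 0.0
    return (n - sum(r / r_max for r in responses)) / (n - 1)


def bootstrap_pi_difference(vis_trials, rtg_trials, n_boot=2000, seed=0):
    """95% percentile interval of PI(VIS) - PI(R-to-G) from trial resampling.

    vis_trials, rtg_trials: one list of per-trial firing rates per object/grip.
    """
    rng = random.Random(seed)

    def resampled_pi(trials_by_condition):
        # Resample trials with replacement within each condition, then take means.
        means = []
        for trials in trials_by_condition:
            sample = rng.choices(trials, k=len(trials))
            means.append(sum(sample) / len(sample))
        return preference_index(means)

    diffs = sorted(resampled_pi(vis_trials) - resampled_pi(rtg_trials)
                   for _ in range(n_boot))
    return diffs[int(0.025 * n_boot)], diffs[int(0.975 * n_boot) - 1]


def crosses_diagonal(vis_trials, rtg_trials):
    """True if the interval includes 0, i.e., similar selectivity in the two epochs."""
    lo, hi = bootstrap_pi_difference(vis_trials, rtg_trials)
    return lo <= 0.0 <= hi
```

A cell that responds to one object only during VIS but equally to all grips during R-to-G has a PI difference near 1 in every resample, so its interval excludes zero and it would be drawn as an open circle in Figure 6C.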

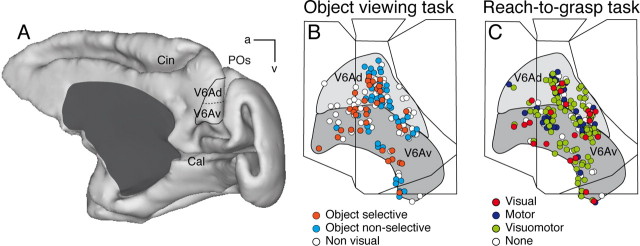

Spatial distribution of recorded neurons within V6A

The anatomical reconstruction of the recording sites was performed using the cytoarchitectonic criteria described by Luppino et al. (2005) and the functional criteria detailed by Galletti et al. (1999a) and Gamberini et al. (2011), summarized in Materials and Methods, Histological reconstruction of electrode penetrations. Figure 7A shows the location of the parieto-occipital sulcus, where area V6A is located, in a 3D reconstruction of one hemisphere, as well as the extent of V6Ad and V6Av, where all the neurons of this study were recorded. Figure 7B,C superimposes the individual flattened maps of the two animals to show the location of the cells recorded in area V6A. The maps of the left hemispheres were flipped so that all recording sites were projected onto the maps of the right hemispheres. The maps also show the average extent of the dorsal and ventral parts of area V6A (V6Ad and V6Av, respectively). Recorded neurons were located in both V6Ad and V6Av. Figure 7B shows the distribution of cells tested in the Object viewing task: visual neurons selective for objects are intermixed with nonselective visual neurons and with cells not activated by the sight of the 3D graspable objects. This occurs in both V6Ad and V6Av, with no significant trend toward clustering (χ² test, p > 0.05).

Figure 7.

Spatial distribution of recorded neurons in V6A. A, The location of area V6A in the parieto-occipital sulcus (POs) is shown in a postero-medial view of a hemisphere reconstructed in 3D using Caret software (http://brainmap.wustl.edu/caret/). Cal, calcarine sulcus; Cin, cingulate sulcus; a, anterior; v, ventral. B, C, Summary flattened maps of the superior parietal lobule of the two cases; each dot represents the location in V6A of a neuron tested in the Object viewing task (B) or in the Reach-to-Grasp task (C). As is evident, the recording sites covered the entire V6A (Gamberini et al., 2011), and all cell categories are distributed throughout the recorded region.

The different cell categories found in the Reach-to-Grasp task are distributed across V6Ad and V6Av with no apparent order and no significant segregation, as documented in Figure 7C (χ² test, p > 0.05). Overall, Figure 7 shows that no cell class encountered in either task is spatially segregated.
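The χ² test of spatial segregation compares the observed counts of each cell category in V6Ad and V6Av with the counts expected if category and subdivision were independent. A dependency-free sketch of the Pearson χ² statistic and its degrees of freedom follows. The p-value lookup is left to a statistics library (e.g., `scipy.stats.chi2_contingency`), and any counts passed to the function in an example are invented, not the recorded distribution.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for a contingency table.

    table: rows = cell categories, columns = subdivisions (e.g., V6Ad, V6Av).
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand  # under independence
            stat += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof
```

If every category splits between V6Ad and V6Av in the same proportion, the statistic is exactly zero; a lack of segregation, as reported above, corresponds to a small statistic relative to the χ² distribution with the returned degrees of freedom.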

Object description codes in V6A

In an attempt to unravel the factors determining grip and object selectivity, and to compare grip and object encoding, we performed HCA and MDS.

Based on the discharge during object presentation in the Reach-to-Grasp task (Fig. 8A), both HCA and MDS reveal that the objects with a hole (ring and handle) are separated from the solid objects (ball, stick-in-groove, plate); thus, the objects that require insertion of the fingers into the hole for grasping are grouped separately from those grasped by wrapping the fingers around the object. In addition, MDS reveals that the round objects (ball, stick-in-groove, and ring) are separated from the flat ones (plate and handle). We propose that the latter grouping follows a visual rule, whereas the former contains motor-related elements.

The clustering of the responses during prehension (Fig. 8B) suggests that the use of the index finger is a critical factor (see also Fattori et al., 2010, for similar results on grasp-related discharges). The hook grip (ring) and the precision grips (plate, stick-in-groove), for which the use of the index finger is indispensable, are very close, whereas the finger prehension (handle) and the whole-hand prehension (ball), which are performed without the index finger, lie far from the other grips. The clustering of motor responses clearly differs from that of the visual responses (Fig. 8, compare A,B).
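Hierarchical cluster analysis groups objects (or grips) whose population response vectors are similar. The distance metric and linkage rule used in the study are specified in Materials and Methods, not in this section; the sketch below assumes Euclidean distance between vectors of mean responses (one entry per neuron) and average linkage, and returns the order in which clusters merge. MDS is not sketched. Any response vectors used with it are invented and only demonstrate the mechanics.

```python
from itertools import combinations
from math import dist


def average_linkage(vectors):
    """Agglomerative clustering with average linkage on Euclidean distances.

    vectors: dict mapping label (object or grip) -> responses, one value per neuron.
    Returns a list of (cluster_a, cluster_b, distance) merges, closest first.
    """
    clusters = [frozenset([label]) for label in vectors]
    merges = []

    def linkage(a, b):
        # Average of all pairwise distances between members of the two clusters.
        pairs = [(x, y) for x in a for y in b]
        return sum(dist(vectors[x], vectors[y]) for x, y in pairs) / len(pairs)

    while len(clusters) > 1:
        a, b = min(combinations(clusters, 2), key=lambda pair: linkage(*pair))
        merges.append((sorted(a), sorted(b), linkage(a, b)))
        clusters = [c for c in clusters if c not in (a, b)] + [a | b]
    return merges
```

With toy two-neuron vectors in which the ring and handle responses are close to each other and far from those of the ball, plate, and stick-in-groove, the final merge joins {handle, ring} with {ball, plate, stick-in-groove}, mirroring the "objects with a hole" split described for Figure 8A.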

Because the same neurons in the same task followed two different rules, we investigated when the code switched from one rule to the other. To this end, we also analyzed the responses during the delay epoch, which follows object presentation and precedes grasping execution. The object was not visible during the delay, and the arm was motionless with the hand pressing the home button (Fig. 1C). The reach-to-grasp movement was likely prepared, but not executed, during this time. The results of the HCA and MDS in DELAY for the neurons selective in the delay epoch (Fig. 8C) are very similar to those obtained during action performance, suggesting that soon after object presentation, and before movement onset, the encoding follows a motor rule.

Cluster analysis based on the activity during object presentation in the Object viewing task (Fig. 8D) revealed that the object grouping is different from the one obtained during object presentation in the Reach-to-Grasp task (Fig. 8, compare A,D). In the Object viewing task (Fig. 8D) the round objects (stick-in-groove, ring and ball) are separated from the flat ones (handle and plate). The clustering appears to follow a visual encoding based on the physical features of the objects.

The fact that the response to object presentation in the two tasks follows different clustering indicates that object encoding by V6A neurons depends on the task context. In other words, when grasping of the presented object is not a task requirement, the object encoding is only visual, but when the presented object has to be grasped, a visuomotor transformation occurs and the elicited object description also contains motor-related elements.

Discussion

The main finding of this work is that ∼60% of V6A neurons respond to the presentation of 3D objects in peripersonal space, and that approximately half of these neurons are selective for object type. Object-selective cells are spread throughout V6A, without any evident segregation. The clustering of neural responses to object presentation follows a visual rule (i.e., the physical attributes of the objects) when the object is presented outside a grasping context, whereas, when the object is presented in a grasping context, the rule contains motor elements. In other words, the object representation in area V6A is dynamically transformed to subserve action. The results of the cluster analysis strongly support the view that the “visual” response to object presentation is actually a “visuomotor” representation of the action to be performed. The sustained tonic activity following the brisk phasic response to object presentation, and in particular the maintenance of this tonic discharge well beyond the end of visual stimulation when the task also required grasping the object (Fig. 5C,D), further supports this view.

Object and grip encoding in V6A

V6A hosts visual neurons activated by 3D objects of different shapes, motor neurons activated by the execution of reach-to-grasp actions, and visuomotor neurons activated by both 3D objects and grips. Some of the visual and visuomotor cells are selective for objects, and some of the motor and visuomotor cells are selective for grips. Grip-selective cells are more numerous than object-selective ones, but otherwise the indexes quantifying the strength of object and grip selectivity are comparable (Fig. 6A,B). The different cell categories are intermingled throughout the recorded region (Fig. 7C, V6Ad and V6Av). The V6A population does not prefer any specific object or grip: all objects and grips are almost equally represented (Fig. 5A,B). However, the neural population is able to discriminate among objects of different shapes and among different grips (Fig. 5C,D).

Approximately 30% of selective cells (22/80) are visuomotor neurons encoding both objects and grips: they associate the visual representation of graspable objects with the motor representation of how to reach and grasp these objects. These cells may continuously update the state of the effector and be useful in the on-line control of hand shaping as the hand approaches the target object during reaching and grasping.

Comparison of object processing in areas of the macaque dorsal stream

Area AIP, considered a central area in a dedicated grasping circuit (Jeannerod et al., 1995), codes object properties coarsely (Murata et al., 1996; Sakaguchi et al., 2010), unlike the detailed object representation achieved in the ventral visual stream (e.g., area TE in inferotemporal cortex). Object encoding in AIP has been shown to be relevant for object manipulation (Srivastava et al., 2009).

The types of selective neurons found in V6A (selective for object or grip only, or for both) are very similar to those observed in AIP, to which area V6A is directly connected (Borra et al., 2008; Gamberini et al., 2009). Because quantitative population data on object selectivity in AIP are lacking, the strength of object selectivity cannot be compared between the two areas. Areas AIP and V6A have a similar incidence of visual responsiveness to 3D objects, although object-selective neurons are twice as frequent in AIP as in V6A (Murata et al., 2000). The smaller population of object-selective cells in V6A compared with AIP may explain why, in functional magnetic resonance imaging studies in monkeys, area V6A did not show any activation for object viewing (Durand et al., 2007, 2009). This difference is not surprising, considering that area AIP has strong direct connections with areas involved in object processing: the inferior temporal cortex and the upper bank of the superior temporal sulcus (Perrett et al., 1989; Janssen et al., 2000, 2001; Borra et al., 2008; Verhoef et al., 2010). Area V6A, in contrast, has no direct connections with the ventral stream (Gamberini et al., 2009; Passarelli et al., 2011).

The HCA of visual and motor responses reveals an interesting difference between the encoding processes in areas AIP and V6A. In AIP, the cluster analysis showed that the grouping of visual responses depended on the geometric features shared by the objects, in both fixation and grasping tasks (Murata et al., 2000). In V6A, by contrast, the clustering of responses to object presentation follows a visual rule in the Object viewing task and a visuomotor rule in the Reach-to-Grasp task. Considering also the higher incidence of grip-selective than object-selective neurons in V6A, this area seems to be more directly involved in the visuomotor control of the action than in elaborating visual information on object geometry. This is in line with the view that V6A is involved in monitoring ongoing prehension movements (Galletti et al., 2003).
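To make the distinction between a visual and a visuomotor clustering rule more concrete, the following is a minimal Python sketch of hierarchical cluster analysis applied to object responses. It assumes that each object is described by the vector of mean firing rates of the recorded neurons, that dissimilarity is Euclidean distance, and that clusters are joined by average linkage. These choices, the object labels (A to E) and the firing rates are all hypothetical; they are not the data or the exact procedure of the present study or of Murata et al. (2000). Objects whose population responses are most similar merge first, so the order of merges is what one would compare against shared object geometry (a visual rule) or against the grip each object requires (a visuomotor rule).

```python
import math
from itertools import combinations

# Hypothetical mean firing rates (spikes/s) of three neurons for five objects.
# Labels and values are illustrative only, not recorded data.
PROFILES = {
    "A": [10.0, 2.0, 5.0],
    "B": [11.0, 2.0, 5.0],
    "C": [2.0, 10.0, 4.0],
    "D": [2.0, 12.0, 4.0],
    "E": [6.0, 6.0, 15.0],
}


def euclidean(a, b):
    """Euclidean distance between two population response vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def average_linkage(profiles):
    """Agglomerative clustering with average linkage (assumed choice).

    Returns the sequence of merges as (cluster_a, cluster_b, distance),
    where each cluster is a frozenset of object labels.
    """
    clusters = [frozenset([name]) for name in profiles]

    def cluster_distance(c1, c2):
        # Mean pairwise distance between members of the two clusters.
        pairs = [(p, q) for p in c1 for q in c2]
        return sum(euclidean(profiles[p], profiles[q]) for p, q in pairs) / len(pairs)

    merges = []
    while len(clusters) > 1:
        # Join the two closest clusters (the first pair found wins on ties).
        a, b = min(combinations(clusters, 2), key=lambda pair: cluster_distance(*pair))
        merges.append((a, b, cluster_distance(a, b)))
        clusters = [c for c in clusters if c not in (a, b)] + [a | b]
    return merges


merges = average_linkage(PROFILES)
for a, b, d in merges:
    print(sorted(a), "+", sorted(b), f"at {d:.2f}")
```

With these illustrative values, A and B merge first, then C and D, and E joins the A-B group before the final merge. In a real analysis, the question would be whether such groupings track shared object shape or shared grip type.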

Another area involved in encoding reaching and grasping is the dorsal premotor area F2 (Raos et al., 2004), which is strongly connected with area V6A (Matelli et al., 1998; Shipp et al., 1998; Gamberini et al., 2009). F2 grasp-related neurons show specific responses to object presentation that are congruent with the type of prehension required to grasp the objects. It has been proposed that the visual responses of F2 neurons should be interpreted as an object representation in motor terms (Raos et al., 2004). A similar interpretation can be advanced for the object-selective responses in V6A. The tonic discharges that we observed during the delay between object presentation and the onset of arm movement, whose intensity scaled with grip preference (Fig. 5D), strongly support this view.

Object processing in human dorsomedial parietal cortex

An involvement of the dorsomedial parietal cortex in object processing within the context of visuomotor tasks has also been reported recently in humans (Konen and Kastner, 2008). The region around the dorsal aspect of the parieto-occipital sulcus is active when graspable objects are presented (Maratos et al., 2007), preferentially when they lie within reachable space (Gallivan et al., 2009) and when the action has been previously experienced (Króliczak et al., 2008). All these findings suggest that activations for object presentation in this cortical sector play a role in computations related to grasping (Maratos et al., 2007; Grafton, 2010).

The putative human V6A is active during grasp planning. It is sensitive to the slant of the object to be grasped and insensitive to the type of depth cue presented, suggesting that this region is involved in planning prehension on the basis of previously elaborated visual information (Verhagen et al., 2008).

Some neuropsychological findings can also be interpreted along these lines. Object selectivity of the kind found in V6A can account for the ability of patients with an extensive lesion of the ventral stream but an almost intact dorsal stream (such as patient D.F. in James et al., 2003) to perform visual-form discrimination within the context of a visuomotor task (Carey et al., 1996; Schenk and Milner, 2006). Conversely, a patient with optic ataxia caused by lesions of the medial parieto-occipital region that likely include the human V6A (patient A.T. in Jeannerod et al., 1994) is impaired in grasping a geometric object such as a cylinder, yet she can accurately reach and grasp a familiar lipstick of the same size and shape presented in the same position. This indicates that the integrity of the medial posterior parietal areas is needed to analyze the visual features of novel objects and to perform the visuomotor transformations needed to reach and grasp them. Because optic ataxia deficits can be overcome by drawing on previous experience based on object knowledge (Milner et al., 2001), object knowledge is presumably acquired in other brain areas (i.e., the ventral visual stream), whereas the medial posterior parietal cortex uses visual information for the online control of hand actions. This view is also supported by brain imaging work demonstrating that the dorsomedial stream adapts motor behavior to the actual conditions by integrating perceptual information acquired before motor execution (processed in the ventral stream) into a prehension plan (Verhagen et al., 2008).

All of these data support the view that V6A uses visual information for the on-line control of goal-directed hand actions (Galletti et al., 2003; Bosco et al., 2010). The present findings are consistent with the hypothesis that the visual mechanisms mediating the control of skilled actions directed at target objects are functionally and neurally distinct from those mediating the perception of objects (Goodale et al., 1994).

Conclusions

Area V6A contains an action-oriented visual representation of the objects present in peripersonal space. As suggested for other dorsal stream areas encoding object properties (area 5, Gardner et al., 2007b; Chen et al., 2009; LIP, Janssen et al., 2008; AIP, Srivastava et al., 2009; Verhoef et al., 2010), we suggest that V6A also has its own representation of object shape, that this representation is oriented to action, and that it is suitable for the on-line control of object prehension.

Footnotes

This research was supported by EU FP6-IST-027574-MATHESIS, and by Ministero dell'Università e della Ricerca and Fondazione del Monte di Bologna e Ravenna, Italy. We are grateful to Marco Ciavarro for helping in performing the quantitative analyses, to Giulia Dal Bò for helping with the figures, and to Michela Gamberini for reconstruction of penetrations.

The authors declare no competing financial interests.

References

- Batista AP, Santhanam G, Yu BM, Ryu SI, Afshar A, Shenoy KV. Reference frames for reach planning in macaque dorsal premotor cortex. J Neurophysiol. 2007;98:966–983. doi: 10.1152/jn.00421.2006.

- Borra E, Belmalih A, Calzavara R, Gerbella M, Murata A, Rozzi S, Luppino G. Cortical connections of the macaque anterior intraparietal (AIP) area. Cereb Cortex. 2008;18:1094–1111. doi: 10.1093/cercor/bhm146.

- Bosco A, Breveglieri R, Chinellato E, Galletti C, Fattori P. Reaching activity in the medial posterior parietal cortex of monkeys is modulated by visual feedback. J Neurosci. 2010;30:14773–14785. doi: 10.1523/JNEUROSCI.2313-10.2010.

- Breveglieri R, Hadjidimitrakis K, Bosco A, Sabatini SP, Galletti C, Fattori P. Eye-position encoding in three-dimensional space: integration of version and vergence signals in the medial posterior parietal cortex. J Neurosci. 2012;32:159–169. doi: 10.1523/JNEUROSCI.4028-11.2012.

- Carey DP, Harvey M, Milner AD. Visuomotor sensitivity of shape and orientation in a patient with visual form agnosia. Neuropsychologia. 1996;34:329–337. doi: 10.1016/0028-3932(95)00169-7.

- Chen J, Reitzen SD, Kohlenstein JB, Gardner EP. Neural representation of hand kinematics during prehension in posterior parietal cortex of the macaque monkey. J Neurophysiol. 2009;102:3310–3328. doi: 10.1152/jn.90942.2008.

- Durand JB, Nelissen K, Joly O, Wardak C, Todd JT, Norman JF, Janssen P, Vanduffel W, Orban GA. Anterior regions of monkey parietal cortex process visual 3D shape. Neuron. 2007;55:493–505. doi: 10.1016/j.neuron.2007.06.040.

- Durand JB, Peeters R, Norman JF, Todd JT, Orban GA. Parietal regions processing visual 3D shape extracted from disparity. Neuroimage. 2009;46:1114–1126. doi: 10.1016/j.neuroimage.2009.03.023.

- Fattori P, Gamberini M, Kutz DF, Galletti C. ‘Arm-reaching’ neurons in the parietal area V6A of the macaque monkey. Eur J Neurosci. 2001;13:2309–2313. doi: 10.1046/j.0953-816x.2001.01618.x.

- Fattori P, Kutz DF, Breveglieri R, Marzocchi N, Galletti C. Spatial tuning of reaching activity in the medial parieto-occipital cortex (area V6A) of macaque monkey. Eur J Neurosci. 2005;22:956–972. doi: 10.1111/j.1460-9568.2005.04288.x.

- Fattori P, Breveglieri R, Marzocchi N, Filippini D, Bosco A, Galletti C. Hand orientation during reach-to-grasp movements modulates neuronal activity in the medial posterior parietal area V6A. J Neurosci. 2009;29:1928–1936. doi: 10.1523/JNEUROSCI.4998-08.2009.

- Fattori P, Raos V, Breveglieri R, Bosco A, Marzocchi N, Galletti C. The dorsomedial pathway is not just for reaching: grasping neurons in the medial parieto-occipital cortex of the macaque monkey. J Neurosci. 2010;30:342–349. doi: 10.1523/JNEUROSCI.3800-09.2010.

- Galletti C, Squatrito S, Maioli MG, Riva Sanseverino E. Single unit responses to visual stimuli in cat cortical areas 17 and 18. II. Responses to stationary stimuli of variable duration. Arch Ital Biol. 1979;117:231–247.

- Galletti C, Battaglini PP, Fattori P. Eye position influence on the parieto-occipital area PO (V6) of the macaque monkey. Eur J Neurosci. 1995;7:2486–2501. doi: 10.1111/j.1460-9568.1995.tb01047.x.

- Galletti C, Fattori P, Battaglini PP, Shipp S, Zeki S. Functional demarcation of a border between areas V6 and V6A in the superior parietal gyrus of the macaque monkey. Eur J Neurosci. 1996;8:30–52. doi: 10.1111/j.1460-9568.1996.tb01165.x.

- Galletti C, Fattori P, Kutz DF, Gamberini M. Brain location and visual topography of cortical area V6A in the macaque monkey. Eur J Neurosci. 1999a;11:575–582. doi: 10.1046/j.1460-9568.1999.00467.x.

- Galletti C, Fattori P, Gamberini M, Kutz DF. The cortical visual area V6: brain location and visual topography. Eur J Neurosci. 1999b;11:3922–3936. doi: 10.1046/j.1460-9568.1999.00817.x.

- Galletti C, Gamberini M, Kutz DF, Fattori P, Luppino G, Matelli M. The cortical connections of area V6: an occipito-parietal network processing visual information. Eur J Neurosci. 2001;13:1572–1588. doi: 10.1046/j.0953-816x.2001.01538.x.

- Galletti C, Kutz DF, Gamberini M, Breveglieri R, Fattori P. Role of the medial parieto-occipital cortex in the control of reaching and grasping movements. Exp Brain Res. 2003;153:158–170. doi: 10.1007/s00221-003-1589-z.

- Galletti C, Breveglieri R, Lappe M, Bosco A, Ciavarro M, Fattori P. Covert shift of attention modulates the ongoing neural activity in a reaching area of the macaque dorsomedial visual stream. PLoS ONE. 2010;5:e15078. doi: 10.1371/journal.pone.0015078.

- Gallivan JP, Cavina-Pratesi C, Culham JC. Is that within reach? fMRI reveals that the human superior parieto-occipital cortex encodes objects reachable by the hand. J Neurosci. 2009;29:4381–4391. doi: 10.1523/JNEUROSCI.0377-09.2009.

- Gamberini M, Passarelli L, Fattori P, Zucchelli M, Bakola S, Luppino G, Galletti C. Cortical connections of the visuomotor parietooccipital area V6Ad of the macaque monkey. J Comp Neurol. 2009;513:622–642. doi: 10.1002/cne.21980.

- Gamberini M, Galletti C, Bosco A, Breveglieri R, Fattori P. Is the medial posterior-parietal area V6A a single functional area? J Neurosci. 2011;31:5145–5157. doi: 10.1523/JNEUROSCI.5489-10.2011.

- Gardner EP, Debowy DJ, Ro JY, Ghosh S, Babu KS. Sensory monitoring of prehension in the parietal lobe: a study using digital video. Behav Brain Res. 2002;135:213–224. doi: 10.1016/s0166-4328(02)00167-5.

- Gardner EP, Babu KS, Reitzen SD, Ghosh S, Brown AS, Chen J, Hall AL, Herzlinger MD, Kohlenstein JB, Ro JY. Neurophysiology of prehension. I. Posterior parietal cortex and object-oriented hand behaviors. J Neurophysiol. 2007a;97:387–406. doi: 10.1152/jn.00558.2006.

- Gardner EP, Babu KS, Ghosh S, Sherwood A, Chen J. Neurophysiology of prehension. III. Representation of object features in posterior parietal cortex of the macaque monkey. J Neurophysiol. 2007b;98:3708–3730. doi: 10.1152/jn.00609.2007.

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8.

- Goodale MA, Meenan JP, Bülthoff HH, Nicolle DA, Murphy KJ, Racicot CI. Separate neural pathways for the visual analysis of object shape in perception and prehension. Curr Biol. 1994;4:604–610. doi: 10.1016/s0960-9822(00)00132-9.

- Grafton ST. The cognitive neuroscience of prehension: recent developments. Exp Brain Res. 2010;204:475–491. doi: 10.1007/s00221-010-2315-2.

- Haggard P, Wing A. Coordination of hand aperture with the spatial path of hand transport. Exp Brain Res. 1998;118:286–292. doi: 10.1007/s002210050283.

- James TW, Culham J, Humphrey GK, Milner AD, Goodale MA. Ventral occipital lesions impair object recognition but not object-directed grasping: an fMRI study. Brain. 2003;126:2463–2475. doi: 10.1093/brain/awg248.

- Janssen P, Vogels R, Orban GA. Selectivity for 3D shape that reveals distinct areas within macaque inferior temporal cortex. Science. 2000;288:2054–2056. doi: 10.1126/science.288.5473.2054.

- Janssen P, Vogels R, Liu Y, Orban GA. Macaque inferior temporal neurons are selective for three-dimensional boundaries and surfaces. J Neurosci. 2001;21:9419–9429. doi: 10.1523/JNEUROSCI.21-23-09419.2001.

- Janssen P, Srivastava S, Ombelet S, Orban GA. Coding of shape and position in macaque lateral intraparietal area. J Neurosci. 2008;28:6679–6690. doi: 10.1523/JNEUROSCI.0499-08.2008.

- Jeannerod M. Intersegmental coordination during reaching at natural visual objects. In: Long J, Baddeley A, editors. Attention and performance. Hillsdale: Erlbaum; 1981. pp. 153–168.

- Jeannerod M, Decety J, Michel F. Impairment of grasping movements following a bilateral posterior parietal lesion. Neuropsychologia. 1994;32:369–380. doi: 10.1016/0028-3932(94)90084-1.