Abstract

Reduced focus on the eyes is a characteristic of atypical gaze on emotional faces in autism spectrum disorders (ASD). Along with atypical gaze, aberrant amygdala activity during face processing, compared with neurotypically developed (NT) participants, has repeatedly been reported in ASD. It remains unclear whether the dysfunctional amygdalar response patterns previously reported in ASD underlie an active avoidance of direct eye contact or rather a lack of social attention. Using a recently introduced emotion classification task, we investigated eye movements and changes in blood oxygen level-dependent (BOLD) signal in the amygdala with a 3T MRI scanner in 16 autistic and 17 control adult human participants. By modulating the initial fixation position on faces, we investigated changes triggered by the eyes compared with the mouth. Between-group interaction effects revealed different patterns of gaze and amygdalar BOLD changes in ASD and NT: individuals with ASD gazed more often away from than toward the eyes, whereas the NT group showed the reverse tendency. An interaction contrast of group and initial fixation position further yielded a significant cluster of amygdala activity. Extracted parameter estimates showed a greater response to eye fixation in ASD, whereas the NT group showed an increase for mouth fixation.

The differing patterns of amygdala activity, combined with the differing patterns of gaze behavior between groups triggered by direct eye contact and mouth fixation, suggest a dysfunctional profile of the amygdala in ASD involving an interplay of both eye-avoidance processing and reduced orientation.

Introduction

The specific functional role of the amygdala within human social cognition remains a topic of debate. Recent findings suggest a crucial role in detecting and processing environmental features (Adolphs et al., 1995; Whalen, 2007; De Martino et al., 2010). Consistent with this, neuroimaging and lesion studies suggest amygdalar sensitivity to the eyes, which are themselves cues for processing social information (Kawashima et al., 1999; Morris et al., 2002; Whalen et al., 2004). In line with this framework of amygdala function, typically developed participants show increased amygdala activity underlying a focus on the eyes (Gamer and Büchel, 2009).

In contrast, individuals with autism spectrum disorders (ASD) show atypical gaze on emotional faces, marked by a reduced focus on the eyes (Klin et al., 2002; Pelphrey et al., 2002; Kliemann et al., 2010). Within the social symptomatology of ASD, processing information from the eyes seems to be specifically impaired (Leekam et al., 1998; Baron-Cohen et al., 2001b). Previous research has highlighted two potential explanations. One hypothesis proposes a general lack of social attention, resulting in a missing orientation toward social cues such as the eyes (Dawson et al., 1998; Grelotti et al., 2002; Schultz, 2005; Neumann et al., 2006). Another hypothesis proposes that eye contact is aversive, leading to an avoidance of eye fixation (Richer and Coss, 1976; Kylliäinen and Hietanen, 2006). These processes need not be mutually exclusive (Spezio et al., 2007).

On a neural level, atypical gaze in ASD has repeatedly been reported together with aberrant amygdala activity. The exact relationship between gaze and brain findings in ASD, however, remains unclear. Previous studies reported both amygdalar hyperactivation (Dalton et al., 2005) and hypoactivation (Baron-Cohen et al., 1999; Critchley et al., 2000; Corbett et al., 2009; Kleinhans et al., 2011) in response to faces. In typical functioning, an increase in amygdala activation seems to be associated with immediate orientation toward the eyes (Gamer and Büchel, 2009; Gamer et al., 2010). Along the same lines, patients with bilateral amygdala lesions fail to reflexively gaze toward the eyes (Spezio et al., 2007). Thus, if the amygdala triggers orientation toward salient social cues such as the eyes, a decreased amygdalar response to faces in ASD would support the reduced orientation hypothesis. In a study by Dalton et al. (2005), however, increased amygdala activation correlated positively with the duration of eye contact, which was interpreted as overarousal indicating that eye fixation is aversive. These results would rather favor the avoidance hypothesis, consistent with findings indicating amygdalar involvement in aversion processing.

To further define the amygdala's functional role in atypical gaze in ASD, we combined eye tracking and fMRI during a facial emotion classification task in which the initial fixation position on the face was varied. We hypothesized that, compared with controls, individuals with ASD would show (1) fewer eye movements toward the eyes together with decreased amygdala activity when starting fixation on the mouth (consistent with reduced orientation) and/or (2) more eye movements away from the eyes together with increased amygdala activity when starting fixation on the eyes (consistent with avoidance).

Materials and Methods

Participants.

Seventeen neurotypically developed (NT) male controls and 16 male participants with autism spectrum disorders (ASD) participated in the current study. Controls were recruited through databases of the Max Planck Institute for Human Development, Berlin, Germany, and the Freie Universität Berlin, Berlin, Germany. Participants with ASD were recruited through psychiatrists specialized in autism in the Berlin area and through the outpatient clinic for autism in adulthood of the Charité University Medicine, Berlin, Germany. Controls were asked twice, during recruitment and again before study onset, to report any psychiatric or neurological disorder, and were excluded from the study if they reported one. Diagnoses were made according to DSM-IV (American Psychiatric Association, 1994) criteria for Asperger syndrome and autism without mental retardation, using an in-house semistructured interview tapping the diagnostic criteria for autism (Dziobek et al., 2006, 2008) and the Asperger Syndrome (and High-Functioning Autism) Diagnostic Interview (ASDI; Gillberg et al., 2001), which is specifically targeted toward adults with autism spectrum disorders. In 13 participants with available parental informants, diagnoses were additionally confirmed with the Autism Diagnostic Interview-Revised (ADI-R; Lord et al., 1994). All diagnoses were made by two clinical psychologists [I.D. and J.K. (not an author on this paper)], trained and certified in making ASD diagnoses using the ADI-R based on DSM-IV (American Psychiatric Association, 1994) criteria.

All participants had normal or corrected-to-normal vision, were native German speakers, received payment for participation, and gave written informed consent, according to the requirements of the ethics committee of the Charité University Medicine, Berlin, Germany.

Experimental stimuli.

We chose 120 faces (20 female and 20 male identities, each displaying happy, fearful, and neutral expressions) from a standardized dataset (Lundqvist et al., 1998) for the emotion classification task, based on a recent validation study (Goeleven et al., 2008). Each image was rotated so that, when displayed, the eyes were at the same vertical height. Additionally, an elliptic mask was applied so that the images contained only the face (Fig. 1). All images were converted to grayscale, and the cumulative brightness was normalized across images.
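The image preparation can be sketched with NumPy as follows. This is a minimal sketch under explicit assumptions, not the authors' pipeline: inputs are RGB arrays of equal size with values in [0, 1], the eye-alignment rotation has already been applied, grayscale conversion uses ITU-R BT.601 luminance weights, the mask is the ellipse inscribed in the image frame, and "cumulative brightness" is taken to mean the summed intensity inside the mask. None of these details are specified in the text, and the function names are hypothetical.

```python
import numpy as np

def elliptic_mask(height: int, width: int) -> np.ndarray:
    """Boolean mask of the ellipse inscribed in a height x width image."""
    yy, xx = np.mgrid[0:height, 0:width]
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    return ((yy - cy) / (height / 2.0)) ** 2 + ((xx - cx) / (width / 2.0)) ** 2 <= 1.0

def to_grayscale(rgb: np.ndarray) -> np.ndarray:
    """Luminance-weighted grayscale using BT.601 weights (an assumption)."""
    return rgb[..., :3] @ np.array([0.299, 0.587, 0.114])

def preprocess_faces(images, background=0.5):
    """Convert to grayscale, apply the elliptic mask, and rescale each face so
    that its summed intensity inside the mask equals the mean across images.
    Pixels outside the mask are set to a uniform (hypothetical) background."""
    gray = [to_grayscale(np.asarray(img, dtype=float)) for img in images]
    mask = elliptic_mask(*gray[0].shape)  # assumes all images share one size
    sums = np.array([g[mask].sum() for g in gray])
    target = sums.mean()
    faces = [np.where(mask, g * (target / s), background) for g, s in zip(gray, sums)]
    return faces, mask
```

Scaling can push individual pixels beyond the display range; clipping them afterwards would slightly undo the equalization, so the value range is worth checking before display.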

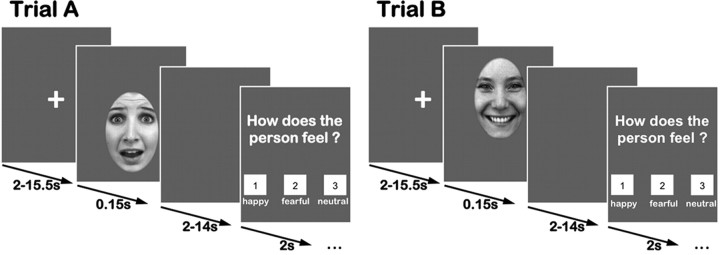

Figure 1.

Emotion classification task. Trials started with a fixation cross (2–15.5 s), followed by an emotional face presented for 150 ms. After a blank screen, participants indicated the displayed emotional expression via button press. Faces were shifted vertically on the screen so that participants initially fixated the eyes (Trial A) or the mouth (Trial B).

Task and procedures.

In each trial, a fixation cross was presented first (jittered, 2–15.5 s), followed by the presentation of a face (150 ms) (Fig. 1) (Gamer and Büchel, 2009; Gamer et al., 2010; Kliemann et al., 2010). After a blank gray screen (jittered, 2–14 s), participants were asked to indicate the emotional expression via button press on a fiber optic response device (Current Designs Inc.). The emotion classification experiment followed a 2 × 3 within-subject design with the factors Initial Fixation (eyes, mouth) and Emotion (happy, fearful, neutral), plus the between-subject factor Group (NT, ASD). To manipulate Initial Fixation, half of the faces within each emotion category were shifted downward (Fig. 1, Trial A) and the other half upward (Fig. 1, Trial B), so that the eyes or the mouth appeared at the location of the preceding fixation cross. The task thus allowed us to investigate changes in amygdalar blood oxygen level-dependent (BOLD) signal in response to direct eye contact and to initial fixation of the mouth, in combination with the respective eye movements.
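The vertical shift that places the eyes or the mouth on the fixation cross reduces to a subtraction. A minimal sketch, assuming screen coordinates in pixels with y increasing downward, the fixation cross at the screen center, and hypothetical feature offsets relative to the image center (real offsets would be measured on each rotated face image); the function names are hypothetical.

```python
SCREEN_H = 600  # vertical resolution of the LCD goggles (800 x 600)

def face_center_y(cross_y: float, feature_dy: float) -> float:
    """Screen y (pixels, increasing downward) at which to center the face so that
    a feature lying feature_dy pixels below the image center (negative = above)
    falls exactly on the fixation cross at cross_y."""
    return cross_y - feature_dy

def shift_direction(cross_y: float, feature_dy: float,
                    screen_center: float = SCREEN_H / 2) -> str:
    """Direction in which the face moves relative to a screen-centered position."""
    offset = face_center_y(cross_y, feature_dy) - screen_center
    if offset > 0:
        return "down"
    if offset < 0:
        return "up"
    return "none"

# Hypothetical feature positions relative to the image center (pixels).
EYES_DY, MOUTH_DY = -60.0, 70.0
cross_y = SCREEN_H / 2  # assumes the fixation cross sits at screen center
print(shift_direction(cross_y, EYES_DY))   # eyes trial (Trial A): down
print(shift_direction(cross_y, MOUTH_DY))  # mouth trial (Trial B): up
```

Because the eyes lie above and the mouth below the image center, eye trials require a downward shift and mouth trials an upward one, matching Trials A and B.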

Presentation order and timing of the six experimental conditions were optimized using the AFNI toolbox (3dDeconvolve, make_random_timing; http://afni.nimh.nih.gov/afni/). Visual stimuli were presented in the scanner using Presentation (Version 12.4, Neurobehavioral Systems Inc.) running on Microsoft Windows XP, via MR-compatible LCD goggles (800 × 600 pixel resolution; Resonance Technology).

Eye movement data analysis.

Eye movements were recorded during scanning using a 60 Hz embedded infrared camera (ViewPoint Eye Tracker, Arrington Research). The resulting time series were split into trials starting 150 ms before and ending 1 s after stimulus presentation. To suppress impulse noise, each time series was filtered using a recursive median filter (Nodes and Gallagher, 1982; see Juhola, 1991, for median filtering of saccadic eye movements; Stork, 2003, for a discussion of recursive median filters). The filter was applied iteratively with increasing window sizes of 3, 5, 7, 9, and 11 data points to eliminate spikes of varying widths caused by improper pupil recognition. This technique successfully suppressed impulse noise while preserving the edge information necessary for proper recognition of saccades, where previously used linear filtering techniques had failed. Gaze points outside the screen (not within 5° of the fixation cross) were discarded. Trials were included in further analysis if all gaze points within the baseline period were valid and within 2° of the fixation cross, and if the total amount of invalid samples due to blinks or other data defects in the remainder of the trial did not exceed 250 ms. The baseline period started 150 ms before and ended 150 ms after stimulus presentation. The first fixation change was defined as the first deviation in gaze position of at least 1° from the mean gaze position during the baseline period. If the first fixation change occurred during the baseline period, the trial was classified as containing a baseline saccade and was excluded from further analysis. As a result, only trials with a steady fixation close to the fixation cross, without blinks or saccades during the whole baseline period, and with <250 ms of missing data during the remainder of the trial were considered.
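The filtering and trial-selection steps above can be sketched in Python as follows. This is a minimal sketch, not the authors' code: it assumes gaze already converted to degrees of visual angle relative to the fixation cross, NaN for blinks or other missing samples, and the 60 Hz sampling rate. Function names are hypothetical, the filter leaves the first and last window // 2 samples of each trace unchanged, and the 5° off-screen rejection is not shown. The filter would be applied to the horizontal and vertical traces separately before fixation-change detection.

```python
import numpy as np

def recursive_median(x, window):
    """Recursive median filter (after Nodes and Gallagher, 1982): the left half of
    each window holds already-filtered outputs, the right half raw samples."""
    if window < 3 or window % 2 == 0:
        raise ValueError("window must be an odd integer >= 3")
    k = window // 2
    y = np.asarray(x, dtype=float).copy()
    for i in range(k, len(y) - k):
        # y[i-k:i] was overwritten in earlier iterations; y[i:i+k+1] is still raw
        y[i] = np.median(y[i - k:i + k + 1])
    return y

def despike(x, windows=(3, 5, 7, 9, 11)):
    """Apply the recursive median filter with increasing window sizes."""
    y = np.asarray(x, dtype=float)
    for w in windows:
        y = recursive_median(y, w)
    return y

def first_fixation_change(t, gx, gy, rate_hz=60.0, baseline=(-0.150, 0.150),
                          min_shift_deg=1.0, max_baseline_dev_deg=2.0,
                          max_missing_s=0.250):
    """Return (time, status) of the first gaze deviation >= min_shift_deg from
    the mean baseline position, applying the trial validity criteria."""
    t, gx, gy = (np.asarray(a, dtype=float) for a in (t, gx, gy))
    base = (t >= baseline[0]) & (t <= baseline[1])
    bx, by = gx[base], gy[base]
    if not np.all(np.isfinite(bx) & np.isfinite(by)):
        return None, "excluded: invalid baseline samples"
    if np.any(np.hypot(bx, by) > max_baseline_dev_deg):
        return None, "excluded: baseline not near fixation cross"
    missing = ~(np.isfinite(gx[~base]) & np.isfinite(gy[~base]))
    if missing.sum() / rate_hz > max_missing_s:
        return None, "excluded: too much missing data"
    dev = np.hypot(gx - bx.mean(), gy - by.mean())
    hits = np.flatnonzero(dev >= min_shift_deg)  # NaN comparisons are False
    if hits.size == 0:
        return None, "no fixation change"
    if base[hits[0]]:
        return None, "excluded: saccade during baseline"
    return float(t[hits[0]]), "ok"
```

Unlike a linear smoother, the iterated median removes narrow spikes while leaving step-like saccade edges intact, which is the property the text relies on.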
Four participants from the ASD group had to be excluded from further analysis because of the number of invalid trials due to blinks, motion, or other artifacts. Since calibration was performed separately for each run and each participant, runs with <30% valid trials were excluded individually from further analysis. No significant differences in the percentage of valid trials were found between the groups (NT: 45.6%; ASD: 47.2%; p > 0.1). For some participants, the number of valid trials fell below 30% for individual conditions even though the total number of valid trials per run exceeded 30%. To ensure reliable percentages without excluding whole participants, those values were replaced by the respective group means. No significant difference in the number of valid conditions was found between the groups (NT: 91.1%; ASD: 88.9%; p > 0.1). For each emotion separately, the percentage of trials containing a fixation change downward from the eyes or upward from the mouth was computed, depending on whether the eyes or the mouth was presented at the fixation cross. Because of a recurring buffer overflow error in the eye tracker data acquisition computer, a substantial portion of the eye tracker trials were not recorded, randomly across the experiment. There was no significant effect of missing trials for the factors Group, Emotion, or Initial Fixation, but within individual participants, missing trials were unevenly distributed across conditions. To retain the data from conditions with a sufficient number of valid trials, a total of 17 conditions in 9 participants (8 conditions in the ASD group and 9 in the NT group) were interpolated, which allowed the inclusion of more participants and consequently increased power. Overall, 9.8% of a total of 29 × 6 = 174 cases were interpolated. This manipulation may have introduced a bias toward false positives in the subsequent mean difference-based statistics.
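The group-mean replacement of sparse conditions can be sketched as follows, assuming one percentage per participant and condition (2 initial fixations × 3 emotions = 6 columns) and the same 30% criterion used for runs. The function name and array layout are hypothetical, and the run-level exclusion is not shown.

```python
import numpy as np

def fill_sparse_conditions(pct, n_valid, n_total, group, min_frac=0.30):
    """Replace condition cells based on fewer than min_frac valid trials with the
    mean of the remaining (non-sparse) cells of the same group and condition.

    pct     : (subjects, conditions) percentage of trials with a fixation change
    n_valid : (subjects, conditions) number of valid trials per cell
    n_total : total trials per cell (scalar or array of the same shape)
    group   : length-subjects sequence of group labels, e.g. "NT" / "ASD"
    """
    pct = np.asarray(pct, dtype=float)
    sparse = np.asarray(n_valid, dtype=float) / np.asarray(n_total, dtype=float) < min_frac
    group = np.asarray(group)
    filled = pct.copy()
    for g in np.unique(group):
        rows = group == g
        for c in range(pct.shape[1]):
            donors = rows & ~sparse[:, c]   # non-sparse cells of this group
            targets = rows & sparse[:, c]   # cells to be replaced
            if targets.any():
                filled[targets, c] = pct[donors, c].mean()  # NaN if no donors
    return filled, sparse
```

The fraction of replaced cells is `sparse.mean()`; with 17 replaced cells out of 29 × 6 = 174, this gives 17/174 ≈ 9.8%.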
Correlation analyses with the eye movement data were performed using non-interpolated trials only, because interpolation was performed within group and per condition, so the interpolated values are orthogonal to the remaining variables within each subject.

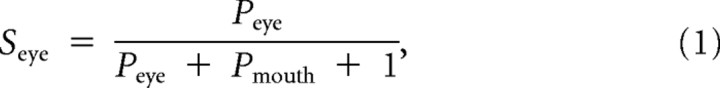

To further investigate potential group effects when combining eye movements away from and toward the eyes into a single measure, we calculated an eye preference index. The index measures the directionality of gaze shifts toward the eyes compared with the mouth while correcting for between-subject variability in the overall proportion of trials containing gaze shifts. Comparable indices have been used in two previous studies using the same experimental paradigm (Gamer and Büchel, 2009; Kliemann et al., 2010). The proportions of gaze shifts toward the mouth and toward the eyes are each corrected by dividing them by the sum of the gaze shifts toward both features in each emotion plus one, which offsets the divisor away from zero:

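$$\tilde{p}_{\mathrm{eyes}} = \frac{p_{\mathrm{eyes}}}{p_{\mathrm{eyes}} + p_{\mathrm{mouth}} + 1}, \qquad \tilde{p}_{\mathrm{mouth}} = \frac{p_{\mathrm{mouth}}}{p_{\mathrm{eyes}} + p_{\mathrm{mouth}} + 1},$$

where $p_{\mathrm{eyes}}$ denotes the proportion of trials with a gaze shift toward the eyes (starting from the mouth) and $p_{\mathrm{mouth}}$ the proportion of trials with a gaze shift toward the mouth (starting from the eyes), each expressed as a fraction between 0 and 1.

The eye preference index (J) is defined as the difference of the corrected gaze shifts,

$$J = \tilde{p}_{\mathrm{eyes}} - \tilde{p}_{\mathrm{mouth}} = \frac{p_{\mathrm{eyes}} - p_{\mathrm{mouth}}}{p_{\mathrm{eyes}} + p_{\mathrm{mouth}} + 1}.$$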

The eye preference index lies in the interval [−0.5, +0.5] and is first computed for each emotion separately and then additionally combined over all emotions. Positive values indicate a stronger preference for the eyes (i.e., increased orientation to the eyes, as expected for the NT group), and negative values indicate a stronger preference for the mouth (i.e., avoidance of the eyes, as expected for the ASD group). To control for possible discrepancies in the number of trials between groups and among conditions, we performed an ANOVA on the overall proportion of trials containing fixation changes and found no significant effects or interactions for diagnosis or emotion. In addition, independent-samples t tests between the groups for each emotion separately showed no significant effects.
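The index can be computed directly from the two proportions described above: each is divided by their sum plus one, and the corrected values are subtracted. A minimal sketch, assuming the proportions are fractions between 0 and 1 (which is what makes the stated [−0.5, +0.5] range hold), with `p_eyes` counting shifts from the mouth toward the eyes and `p_mouth` shifts from the eyes toward the mouth; the function name is hypothetical.

```python
def eye_preference_index(p_eyes: float, p_mouth: float) -> float:
    """J = p_eyes/(p_eyes + p_mouth + 1) - p_mouth/(p_eyes + p_mouth + 1).

    p_eyes  : proportion (0-1) of mouth-start trials with a gaze shift to the eyes
    p_mouth : proportion (0-1) of eye-start trials with a gaze shift to the mouth
    """
    for p in (p_eyes, p_mouth):
        if not 0.0 <= p <= 1.0:
            raise ValueError("proportions must lie in [0, 1]")
    denom = p_eyes + p_mouth + 1.0  # the +1 keeps the divisor away from zero
    return (p_eyes - p_mouth) / denom

# Extremes of the range: all shifts toward the eyes, or all toward the mouth.
print(eye_preference_index(1.0, 0.0))  # 0.5
print(eye_preference_index(0.0, 1.0))  # -0.5
```

Plugging in the neutral-face group means from Table 2 as fractions gives about 0.095 for NT (0.222 vs 0.097) and −0.028 for ASD (0.094 vs 0.128). These differ from the reported means of per-participant indices (0.085 and −0.035), in part because the index is nonlinear, so the index of the means is not the mean of the indices.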

Statistical analysis.

Since we were primarily interested in the effects and interaction of the two factors Initial Fixation and Emotion within and between groups, all data collected during the task were first analyzed with a 2 × 3 (Initial Fixation [Eyes vs Mouth] × Emotion [Happy vs Fear vs Neutral]) repeated-measures ANOVA with the between-subject factor Group [ASD vs NT], unless otherwise specified. Additionally, post hoc within-group ANOVAs, independent-samples t tests, and paired-samples t tests were conducted. All statistics used a significance level of p < 0.05, unless otherwise specified. Data were analyzed using PASW (version 18.0 for Mac, SPSS Inc., an IBM Company).

fMRI data acquisition.

Participants were scanned using a Siemens Magnetom Tim Trio 3T system equipped with a 12-channel head coil at the Dahlem Institute for Neuroimaging of Emotion (D.I.N.E., http://www.dine-berlin.de/) at the Freie Universität Berlin, Germany. For each participant, we acquired 4 functional runs of 180 BOLD-sensitive T2*-weighted EPIs (TR, 2000 ms; TE, 25 ms; flip angle, 70°; FOV, 204 × 204 mm²; matrix, 102 × 102; voxel size, 2 × 2 × 2 mm). To achieve higher in-plane resolution and reduce susceptibility effects, and in view of our specific hypotheses, we collected 33 axial slices covering a 6.6 cm slab that included both amygdalae. For registration of the functional images, high-resolution T1-weighted structural images were collected (TR, 1900 ms; TE, 2.52 ms; flip angle, 9°; 176 sagittal slices; slice thickness, 1 mm; matrix, 256 × 256; FOV, 256 mm; voxel size, 1 × 1 × 1 mm).

fMRI data analysis.

The fMRI data were preprocessed and analyzed using FEAT (FMRI Expert Analysis Tool) within the FSL toolbox (FMRIB's Software Library, Oxford Centre for Functional MRI of the Brain, www.fmrib.ox.ac.uk/fsl; Smith et al., 2004). Preprocessing included nonbrain tissue removal, slice-timing correction, motion correction, and spatial smoothing with a 5 mm FWHM Gaussian kernel. To remove low-frequency artifacts, we applied a high-pass temporal filter (Gaussian-weighted straight-line fitting, σ = 50 s) to the data. Functional data were first registered to the T1-weighted structural image and then transformed into standard space (Montréal Neurological Institute, MNI) using 7- and 12-parameter affine transformations, respectively (FLIRT; Jenkinson and Smith, 2001).
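The smoothing and filtering parameters above are stated in different units. The sketch below shows the standard conversions, assuming the TR of 2 s given under fMRI data acquisition: a Gaussian's FWHM equals 2√(2 ln 2) ≈ 2.355 times its σ, and a temporal σ in seconds divided by TR gives volumes. To our understanding, a volume-based σ is what FSL's `fslmaths -bptf` expects, and FEAT derives it from a cutoff period equal to 2σ (here 100 s); treat that mapping as an assumption. Function names are hypothetical.

```python
import math

def fwhm_to_sigma(fwhm: float) -> float:
    """Standard deviation of a Gaussian kernel with the given FWHM (same units)."""
    return fwhm / (2.0 * math.sqrt(2.0 * math.log(2.0)))

def seconds_to_volumes(seconds: float, tr_s: float) -> float:
    """Express a duration in seconds as a number of volumes at repetition time tr_s."""
    return seconds / tr_s

print(round(fwhm_to_sigma(5.0), 3))        # spatial kernel sigma in mm: 2.123
print(seconds_to_volumes(50.0, tr_s=2.0))  # high-pass sigma in volumes: 25.0
```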

We then modeled the time series individually for each participant and run with seven event-related regressors: six regressors represented face onsets for the six conditions of our 2 × 3 within-subject design, and one additional regressor represented the onset of the emotion recognition question. Regressors were generated by convolving the impulse function at the onsets of the events of interest with a Gamma HRF. Contrast images were computed for each participant, transformed into standard space, and then submitted to a second-level within-subject fixed-effects analysis across runs. Because of our specific hypotheses regarding amygdala functioning in ASD, we used an anatomically defined mask to restrict our analyses to the amygdala. The mask included voxels with at least a 10% probability of belonging to the bilateral amygdalae, as specified by the Harvard-Oxford subcortical atlas (http://www.cma.mgh.harvard.edu/fsl_atlas.html) provided in the FSL atlas tool. Higher-level mixed-effects analyses across participants were applied to the resulting contrast images using the FMRIB Local Analysis of Mixed Effects tool provided by FSL (FLAME, stages 1 and 2). We report clusters of maximally activated voxels that survived statistical thresholding with a z-value of 1.7 and FWE correction (corresponding to p < 0.05, corrected for multiple comparisons) (see Table 3). For visualization purposes, the resulting z-images were thresholded with z-values from 1.7 to 2.6 and displayed on a standard brain (MNI template) (see Fig. 5). To further characterize possible interactions, we extracted parameter estimates (PEs) from the voxels of the identified cluster for the relevant task conditions.
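A minimal sketch of how such an event-related regressor can be built with NumPy and SciPy, assuming a gamma HRF with a mean lag of 6 s and a standard deviation of 3 s (to our knowledge the FEAT defaults for its Gamma basis function, which the text does not confirm), a 0.1 s modeling grid, and sampling at the start of each volume. FEAT additionally handles details such as temporal filtering of the design and slice timing, which are omitted here; function names are hypothetical.

```python
import numpy as np
from scipy.stats import gamma

def gamma_hrf(dt: float, mean_lag: float = 6.0, sd: float = 3.0,
              length: float = 32.0) -> np.ndarray:
    """Gamma-variate HRF sampled every dt seconds and normalized to unit sum.
    A gamma with mean m and standard deviation s has shape (m/s)**2 and scale s**2/m."""
    shape, scale = (mean_lag / sd) ** 2, sd ** 2 / mean_lag
    t = np.arange(0.0, length, dt)
    h = gamma.pdf(t, a=shape, scale=scale)
    return h / h.sum()

def event_regressor(onsets_s, n_vols: int, tr: float = 2.0, dt: float = 0.1) -> np.ndarray:
    """Convolve a stick (impulse) function at the event onsets with the gamma HRF
    on a fine time grid, then sample it once per volume."""
    n_fine = int(round(n_vols * tr / dt))
    sticks = np.zeros(n_fine)
    for onset in onsets_s:
        idx = int(round(onset / dt))
        if 0 <= idx < n_fine:
            sticks[idx] += 1.0
    fine = np.convolve(sticks, gamma_hrf(dt))[:n_fine]
    return fine[:: int(round(tr / dt))]
```

One such column would be built per condition (six face regressors plus the question regressor) for each 180-volume run.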
In contrast to previous studies using the same design (Gamer and Büchel, 2009; Gamer et al., 2010) we did not focus our analyses on potential interactions of emotional expression and initial fixation position because (1) eye tracking data strongly suggested that gaze behavior (specifically gaze toward or away from the eyes) in autistic individuals was not modulated by the emotional expression on the face (Kliemann et al., 2010) and (2) previous studies suggest that amygdala activity is influenced by the face per se rather than by the emotional expression (Dalton et al., 2005). Thus, to gain more power in analyses of neuronal data with respect to between-group effects, we collapsed data across emotional expressions.

Table 3.

Results of Interaction Contrast Initial Fixation Position and Group in the Amygdala

| Hemisphere | Multiple comparison correction | Voxels | Z-max | MNI X (mm) | MNI Y (mm) | MNI Z (mm) |
|---|---|---|---|---|---|---|
| Left | FWE corrected, p = 0.05 | 148 | 3 | −26 | 4 | −20 |

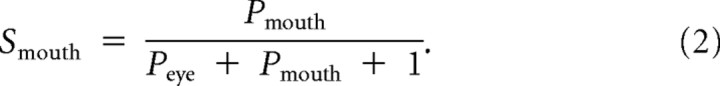

Figure 5.

Amygdala region showing a significant interaction of Initial Fixation position and Group. The upper two panels show statistical maps in coronal and left (L) sagittal planes. The lower bar graph shows the extracted β values of the cluster reported in Table 3 (p = 0.05, FWE corrected). Error bars represent SE.

Results

Demographics

Groups were matched with respect to age (NT: mean 30.47, SD 6.23, range 24–46; ASD: mean 30.44, SD 6.34, range 22–42; t(31) = −0.015, p = 0.99) and intelligence level (IQ), assessed with a verbal multiple-choice vocabulary test [Mehrfachwahl-Wortschatztest (MWT); Lehrl et al., 1995] and a nonverbal strategic thinking test [Leistungsprüfsystem (LPS), subtest 4, in 16 ASD; Horn, 1962] (Table 1). We additionally administered the Autism Spectrum Quotient (AQ) (Baron-Cohen et al., 2001a) in both groups to control for clinically significant levels of autistic traits in the NT group. Groups differed significantly in AQ scores (NT: 11.82, ASD: 36.06; t(31) = 11.41, p < 0.001). None of the controls scored above the cutoff score of 32; in fact, the highest score was 22, indicating a very low level of autistic traits in the NT group.

Table 1.

Demographic variables, IQ measures, and diagnostic scores

| | ASD | NT | p |
|---|---|---|---|
| n | 16 | 17 | |
| Age (years) | 30.44 ± 6.34 | 30.47 ± 6.24 | 0.99 |
| MWT-IQ | 108.06 ± 7.38 | 108.12 ± 14.76 | 0.99 |
| LPS-IQ | 128.47 ± 10.82 (in 15 ASD) | 126.4 ± 8.94 | 0.55 |
| AQ | 36.06 ± 1.85 | 11.82 ± 1.11 | <0.001 |

p values reflect levels of significance from independent-samples t tests. Values are given as mean ± SD. n, sample size; MWT-IQ, multiple-choice vocabulary IQ test; LPS-IQ, strategic thinking IQ test; AQ, Autism Spectrum Quotient.

Behavioral results

Reaction times

We analyzed participants' reaction times (RT) during the emotion classification task by condition with respect to the onset of the face stimuli. Over all conditions, individuals with ASD showed a tendency toward slower responses than control participants [ASD: 706.31 ms, NT: 623.86 ms; t(31) = 1.66, p = 0.11]. The 2 × 3 ANOVA with between-subject factor Group yielded a main effect of emotion over both groups [F(2,62) = 4.9, p = 0.01, partial η2 = 0.14], but no further main or interaction effects. Over both groups, participants were faster in responding to happy compared with fearful [happy: 643.2 ms, fearful: 676.6 ms; t(32) = −3.8, p = 0.001] and neutral faces [happy: 643.2 ms, neutral: 670.1 ms; t(32) = −2.1, p = 0.043].

Emotion classification performance

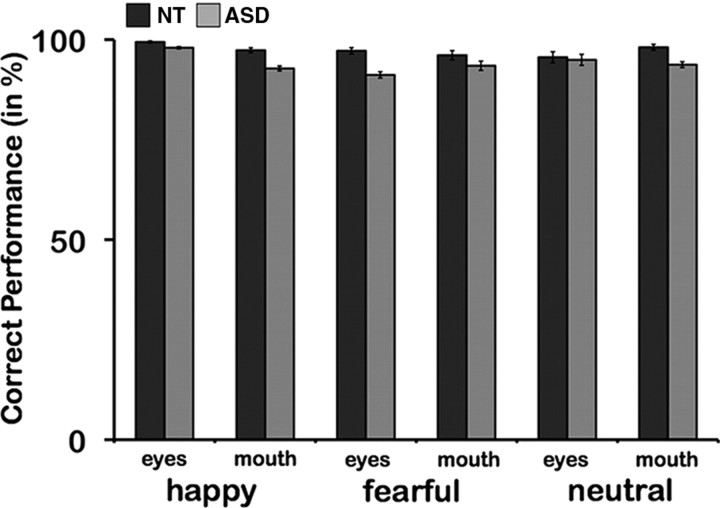

For the emotion classification task, the NT group showed a higher percentage of correct emotion classification than the ASD group in a post hoc t test [NT: 97.3; ASD: 93.8; t(31) = −2.8; p = 0.01]. Performance was further modulated by a significant two-way interaction of Initial Fixation and Emotion [F(2,62) = 9.4; p < 0.001, partial η2 = 0.2]. Across both groups, there were trends toward a main effect of Emotion [F(2,62) = 2.5; p = 0.09, partial η2 = 0.07] and toward a three-way interaction of Initial Fixation, Emotion, and Group [F(2,62) = 2.6; p = 0.08, partial η2 = 0.08].

Within each group, performance was influenced by an interaction of Emotion and Initial Fixation (NT: [F(2,32) = 46.9; p = 0.014, partial η2 = 0.24]; ASD: [F(2,30) = 6.2; p = 0.006, partial η2 = 0.3]). Both groups classified more happy than fearful expressions correctly, although only on trend level for the NT group [NT: happy: 98.4; fear: 96.7; t(16) = 2.0; p = 0.06; ASD: happy: 95.4; fear: 92.3; t(15) = 2.9; p = 0.01]. Nonetheless, the NT group classified both happy and fearful expressions more accurately than the ASD group (NT: happy: 98.4; ASD: happy: 95.4; t(31) = −2.2, p = 0.034; NT: fearful: 96.7; ASD: fearful: 92.3; t(31) = −2.3; p = 0.03). For happy faces, the group difference was mainly driven by the mouth conditions (p = 0.05), whereas for fearful faces it was larger for the eyes conditions (p = 0.008) (Fig. 2). For happy faces, both groups were also more accurate when the eyes were initially fixated [NT: eyes: 99.4; mouth: 97.4; t(16) = 2.9; p = 0.01; ASD: eyes: 97.9; mouth: 92.8; t(15) = 2.6; p = 0.02]. Interestingly, for neutral faces both groups showed a higher rate of emotion recognition when initially fixating the mouth, though only on trend level for the NT group [NT: eyes: 95.6; mouth: 98.1; t(16) = −1.8; p = 0.09; ASD: eyes: 92.5; mouth: 94.9; t(15) = −2.1; p = 0.05].

Figure 2.

Emotion classification performance. On average, the ASD group (lighter) classified emotions less accurately than the NT group (darker) (p = 0.01). For happy faces, group differences were mainly driven by the mouth condition (p = 0.05), whereas for fearful faces, the difference was largest for the eyes conditions (p = 0.008).

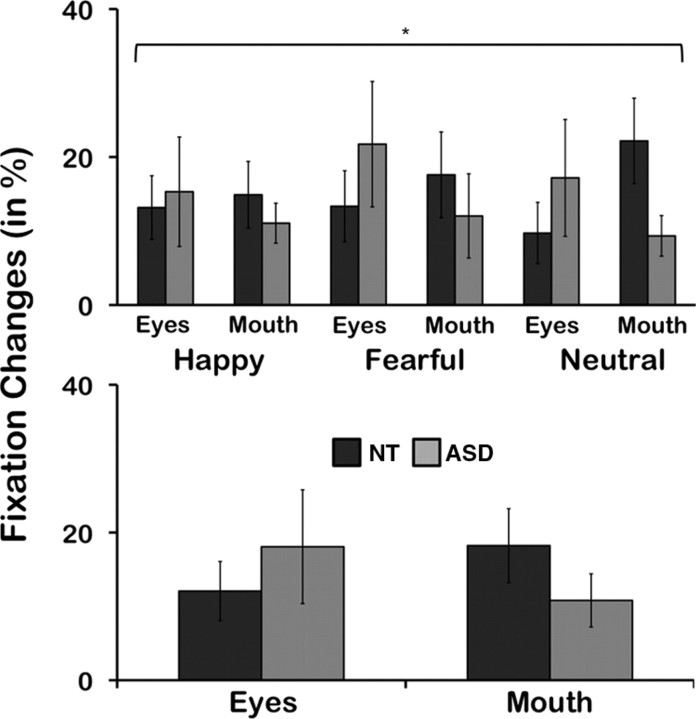

Eye Tracking results

The repeated-measures ANOVA yielded a significant three-way interaction of all three factors [F(2,54) = 3.87; p = 0.027, partial η2 = 0.125]. Group differences for neutral faces when initially fixating the mouth were marginally significant [NT: 22.2, ASD: 9.4; t(22.4) = −2, p = 0.057]. The NT group's gaze was further modulated by a significant interaction of Emotion and Initial Fixation [F(2,32) = 6.2; p = 0.005, partial η2 = 0.28]. For neutral faces, participants in the NT group showed a trend toward more fixation changes from the mouth to the eyes than from the eyes to the mouth [mouth: 22.2, eyes: 9.7; t(16) = −1.86, p = 0.08]. When starting fixation at the mouth, NT participants made more fixation changes to the eyes for neutral than for happy faces [neutral: 22.2, happy: 14.9; t(16) = −2.7, p = 0.016] and showed a trend in the same direction for neutral compared with fearful faces [neutral: 22.2, fearful: 17.6; t(16) = −1.77, p = 0.097]. We further examined the group means descriptively: although the differences did not reach statistical significance, across emotions and for each emotion separately, the ASD group showed more eye movements away from the eyes than toward the eyes (from the mouth), whereas the NT group showed the opposite pattern (Fig. 3, Table 2). These descriptive differences were largest for neutral and fearful faces, both between and within groups.
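Partial η² can be recovered from an F statistic and its degrees of freedom as η²p = F·df_effect / (F·df_effect + df_error), which provides a quick consistency check on reported effect sizes; by construction the value lies between 0 and 1. A minimal sketch (function name hypothetical):

```python
def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared from an F statistic:
    eta_p^2 = F * df_effect / (F * df_effect + df_error)."""
    return f * df_effect / (f * df_effect + df_error)

print(round(partial_eta_squared(6.692, 2, 32), 3))  # 0.295
print(round(partial_eta_squared(3.87, 2, 54), 3))   # 0.125
```

For example, the NT one-way ANOVA on the eye preference index, F(2,32) = 6.692, gives 0.295, matching the value reported for that test; F(2,54) = 3.87 gives 0.125.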

Figure 3.

Eye movements as a function of Initial Fixation, Emotion, and Group (top) and of Initial Fixation and Group (bottom). Eye movements were significantly modulated by the three-way interaction of Group, Emotion, and Initial Fixation (p = 0.027). Descriptively, the ASD group showed more eye movements away from the eyes than toward the eyes, whereas the NT group showed more eye movements from the mouth toward the eyes than away from the eyes (Table 2).

Table 2.

Descriptives of Eye Movements per Emotion, Initial Fixation, and Group

| Emotion | Initial fixation | NT | ASD |
|---|---|---|---|
| Happy | Eyes | 13.2 (4.3) | 15.3 (7.5) |
| Happy | Mouth | 14.9 (4.5) | 11.1 (2.7) |
| Fearful | Eyes | 13.4 (4.9) | 21.7 (8.5) |
| Fearful | Mouth | 17.6 (5.9) | 12.04 (5.8) |
| Neutral | Eyes | 9.7 (4.2) | 12.8 (7.9) |
| Neutral | Mouth | 22.2 (5.8) | 9.4 (2.8) |

Values are mean percentages of trials containing a fixation change, with SE in parentheses. Eyes and Mouth denote the initial fixation position.

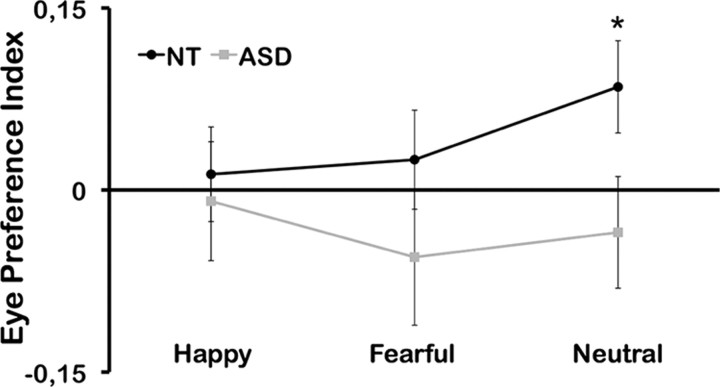

A Group × Emotion ANOVA on the eye preference index revealed a significant Group × Emotion interaction [F(2,54) = 4.388; p < 0.05; partial η2 = 0.140] (Fig. 4). Within the NT group, a one-way ANOVA revealed a significant effect of Emotion [F(2,32) = 6.692; p < 0.01; partial η2 = 0.295]. No effect of Emotion was found in the ASD group, replicating the previously reported lack of an emotion effect on eye movements in ASD (Kliemann et al., 2010). Within each emotion, participants in the control group showed a consistently higher preference (positive index) for the eyes, whereas participants in the ASD group showed a consistently lower preference (negative index) for the eyes (Fig. 4). The differences were again most pronounced for neutral faces, for which controls showed an eye preference index of 0.085 (SD 0.160) and participants in the ASD group −0.035 (SD 0.163) (t(27) = 1.970, p = 0.05). In addition, the eye preference index differed significantly from zero in the control group for neutral faces (t(16) = 2.185, p < 0.05) but not in the ASD group. This strong effect for neutral faces most likely reflects the fact that the main effect of Emotion in the NT group is most pronounced in this condition.

Figure 4.

Eye preference index as a function of Emotion and Group. The ASD group showed consistently diminished eye preference (negative values) over all emotions, whereas controls show pronounced eye preference (positive values). The group difference was significant for neutral faces (p < 0.05).

Relationship between eye movements and emotion recognition performance

In the ASD group, but not in the NT group, the number of eye movements away from the eyes was negatively correlated with performance on the emotion recognition task (eyes trials: r = −0.62, p = 0.076; mouth trials: r = −0.74, p = 0.021; all trials: r = −0.73, p = 0.026). This group difference in correlations (assessed with Fisher's r-to-z transformation) was significant for mouth trials (z = −2.19, p = 0.029, two-tailed) and marginally significant for all trials (z = −1.9, p = 0.057, two-tailed). In other words, the more participants with ASD gazed away from the eyes, the fewer emotions they identified correctly, especially in the mouth conditions.
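The Fisher r-to-z comparison of two independent correlations can be sketched as follows: each r is transformed with atanh, and the difference is divided by sqrt(1/(n1 − 3) + 1/(n2 − 3)). The NT correlations and the exact sample sizes entering this analysis are not reported here, so any inputs are hypothetical, as is the function name.

```python
import math

def compare_correlations(r1: float, n1: int, r2: float, n2: int):
    """Two-tailed test for a difference between two independent Pearson
    correlations via Fisher's r-to-z transformation. Returns (z, p)."""
    z1, z2 = math.atanh(r1), math.atanh(r2)
    se = math.sqrt(1.0 / (n1 - 3) + 1.0 / (n2 - 3))
    z = (z1 - z2) / se
    p = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

A negative z indicates that the first correlation is more negative than the second; the p value is two-tailed, as in the analysis above.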

fMRI results

We investigated how amygdala activity is differentially affected by initial fixation of the eyes compared with the mouth. For this purpose, we analyzed the fMRI data in predefined regions of interest (bilateral amygdalae, see Materials and Methods) with respect to the main hypotheses: (1) increased BOLD response within the amygdala for ASD compared with NT in trials where the eyes were fixated initially compared with trials where the mouth was fixated initially (ASD > NT; eyes > mouth, i.e., avoidance) and (2) increased BOLD response within the amygdala for NT compared with ASD in trials where the mouth was fixated initially compared with trials where the eyes were fixated initially (NT > ASD; mouth > eyes, i.e., orientation). The resulting 2 × 2 interaction contrast (Initial Fixation [eyes vs mouth] × Group [ASD vs NT]) revealed a significant cluster of activation within the left amygdala (p < 0.05, FWE corrected) (Fig. 5, Table 3). Extracted PEs averaged across emotions further illustrate the direction of the interaction effect: the ASD group showed increased amygdala activity at initial fixation of the eyes compared with initial fixation of the mouth and compared with the NT group. In contrast, the NT group showed increased amygdala activity at initial fixation of the mouth compared with initial fixation of the eyes and compared with the ASD group (Fig. 5).
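For a 2 × 2 design, the Initial Fixation × Group interaction on extracted PEs corresponds to contrast weights [+1, −1, −1, +1] on the cell means (ASD eyes, ASD mouth, NT eyes, NT mouth), i.e., the difference of the eyes-minus-mouth effects between groups. A minimal sketch with hypothetical names, assuming one PE per participant and condition, already averaged across emotions. Because the cluster was selected with this same contrast, such extracted values illustrate the direction of the effect rather than providing an independent test.

```python
import numpy as np

def interaction_from_pes(pe_eyes, pe_mouth, group, g1="ASD", g2="NT"):
    """Summarize an Initial Fixation x Group interaction from per-subject PEs:
    (mean g1 eyes-minus-mouth) - (mean g2 eyes-minus-mouth)."""
    diff = np.asarray(pe_eyes, dtype=float) - np.asarray(pe_mouth, dtype=float)
    group = np.asarray(group)
    m1 = float(diff[group == g1].mean())  # eyes-minus-mouth effect in group g1
    m2 = float(diff[group == g2].mean())  # eyes-minus-mouth effect in group g2
    return {g1: m1, g2: m2, "interaction": m1 - m2}
```

A positive interaction with a positive g1 effect and a negative g2 effect corresponds to the pattern described above: higher amygdala PEs for eye fixation in ASD and for mouth fixation in NT.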

There were no significantly activated clusters showing a three-way interaction of Emotion × Initial Fixation × Group in the amygdala.

Discussion

To further define the role of the amygdala in atypical gaze in ASD, we investigated eye movements and amygdala activity when participants initially fixated the mouth or the eyes of faces during an emotion recognition task. Eye movements were modulated by a significant interaction of Emotion, Initial Fixation, and Group. Within this interaction, ASD participants showed more eye movements away from than toward the eyes, whereas NT participants gazed more frequently toward than away from the eyes. fMRI data analyses revealed a significant interaction effect of Initial Fixation and Group in the amygdala, reflecting reversed group patterns in response to eye and mouth fixation: ASD participants exhibited a relatively greater amygdala response when initially fixating the eyes, whereas NT participants showed a relative increase when initially fixating the mouth, compared with the other facial feature and group, respectively.

Social functioning requires recognizing and orienting toward important environmental cues. During communication, the eyes carry information about the other agent's inner state as well as information about the environment. Early in development, neurotypically developed individuals already focus immediately on the eyes (Nation and Penny, 2008). Our data support this by revealing relatively more eye movements toward than away from the eyes, as additionally indicated by the positive eye preference index (Fig. 4). At the same time, NT participants classified more fearful faces correctly when fixating the eyes. Thus, the previously reported orientation toward the eyes may lead to increased performance when only information from the eyes is available, potentially reflecting a developmental expertise in processing information from the eyes that results from the eye preference. Recently, orientation toward (fearful) eyes has been associated with increased amygdala activity (Gamer and Büchel, 2009; Gamer et al., 2010), suggesting that the amygdala triggers reflexive orientation in controls. In other words, the greater the orientation toward the eyes (from the mouth), the greater the increase in underlying amygdala activity. Here, we replicated these findings, showing a relative increase of amygdala activity while orienting gaze to the eyes (Fig. 5). Our data therefore support the proposed functional profile of the amygdala as mediating (social) salience and thereby triggering reflexive orientation toward the eyes in NT participants.

In contrast, autistic individuals' gaze on faces is strongly characterized by a reduced eye focus (Klin et al., 2002; Pelphrey et al., 2002). Two explanations, which are not mutually exclusive, have been highlighted in the literature. First, previous research suggested that eye contact might be aversive (Hutt and Ounsted, 1966; Richer and Coss, 1976; Kylliäinen and Hietanen, 2006), based on behavioral and psychophysiological findings (Joseph et al., 2008). Furthermore, a previous study using the same paradigm showed that, compared with controls, individuals with ASD gazed markedly more often away from the eyes when initial fixation was on the eyes (Kliemann et al., 2010). The present group interaction effect replicated those findings (Fig. 3), showing relatively more eye movements away from than toward the eyes in autism compared with controls.

The correlation between gaze away from the eyes and performance could further reflect a behavioral significance of actively gazing away from the eye region. The magnitude of this effect in mouth trials does not imply a behavioral benefit for processing information from the mouth, consistent with previous studies (Kliemann et al., 2010; Kirchner et al., 2011). If the present eye movements were explained by a greater interest in the mouth in ASD, this should have led to increased exposure to the mouth across the lifespan and, in turn, to better performance when looking at the mouth. In ASD, however, performance was not superior when participants were directed to fixate the mouth rather than the eyes.

Dalton et al. (2005) linked the duration of eye fixation to the magnitude of amygdala activity in ASD. This amygdalar hyperresponsiveness to direct gaze has been suggested to represent a neural indicator of heightened (and negatively valenced) emotional arousal triggered by eye contact. This is in accordance with our data and with the suggested influence of avoidance: when directed to fixate the eyes rather than the mouth, ASD participants showed increased amygdala activity compared with controls in the interaction contrast. This aversion may then result in avoidance of eye contact, as reflected in the number of gazes away from the eyes. Notably, several studies have shown amygdalar involvement in the processing of aversive or threat-related stimuli in general (Gallagher and Holland, 1994; Adolphs et al., 1995; LeDoux, 1996; Whalen, 2007). In light of these findings, increased amygdala activity in response to eye contact, as shown in the interaction contrast, together with the observed gaze patterns could be interpreted as reflecting an avoidance reaction to eye contact in ASD.

Another explanation for the reduced eye focus in ASD is diminished social attention (Grelotti et al., 2002; Schultz, 2005; Neumann et al., 2006). On this account, ASD participants would fail to actively orient toward the eyes. Support for amygdalar involvement in the lack of attention toward the eyes comes from elegant studies in patients with amygdala lesions. These patients showed reduced reflexive orientation toward the eyes when looking at faces in photographs or videos (Adolphs et al., 2005; Adolphs, 2007; Spezio et al., 2007). Thus, if reduced orientation to the eyes in autism were mediated by the amygdala, it should be reflected in decreased amygdala activity. Indeed, we found reduced amygdala activity in ASD compared with control participants when initially fixating the mouth, accompanied by fewer eye movements toward the eyes.

Amygdalar activation patterns in combination with atypical gaze also complement our behavioral findings: emotion recognition performance was particularly impaired in ASD participants when looking at the most discriminative region for the respective emotion (eyes for fearful, mouth for happy faces). Atypical gaze and the underlying amygdala activity may thus lead to reduced expertise in emotion recognition in ASD.

In sum, the results provide new insights into aberrant amygdala functioning during social information processing in autism: the increase in amygdala activity triggered by the eyes, along with the previously reported increase in gaze away from the eyes, supports the hypothesis of active avoidance of eye contact, modulated via amygdalar avoidance processing. The decrease in amygdala activity when starting gaze at the mouth further underlines amygdalar dysfunction in social salience detection and orientation. ASD is, however, of multifactorial nature, with interacting risk factors (e.g., genetic variants, epigenetic and environmental factors) producing the autistic phenotype and symptom heterogeneity (Abrahams and Geschwind, 2010; Scherer and Dawson, 2011). Variance within the autistic sample thus likely reflects more pronounced avoidance of eye contact than reduced orientation in some individuals, and the reverse in others. We propose that both components may coexist, yet to varying intra- and interindividual degrees, as indicated by the eye preference index. However, the relative contributions of avoidance and reduced orientation within the ASD group cannot be disentangled here. These contributions should be tested specifically in future studies that include measurements of arousal and anxiety. Along these lines, the integration of other types of data, such as genetic information, may help to better disentangle subtypes of (social) symptomatology associated with different phenotypes (Yoshida et al., 2010; Chakrabarti and Baron-Cohen, 2011).

Both the gaze and the neuronal effects have been consistently reported in healthy samples. Thus, the effects observed in the present study may partly be due to greater homogeneity of individual gaze behavior and associated amygdalar response in NT samples than in ASD samples. Although ASD participants showed increased amygdala activity for eyes > mouth trials (peak voxel: x: −16, y: −12, z: −14; z-max = 2.8), the respective cluster did not survive adequate cluster correction. Within-group effects in ASD should be carefully addressed in future studies to further specify the mechanisms behind the reported group interactions.

Importantly, the amygdala is not a (cyto-)anatomically homogeneous structure but consists of several subnuclei (Gloor, 1997; Freese and Amaral, 2009; Saygin et al., 2011) with specific connections to other brain regions (Price and Amaral, 1981; McDonald, 1992; Kleinhans et al., 2008; Dziobek et al., 2010) and presumably different functional profiles (Ball et al., 2007; Straube et al., 2008). Avoidance and orientation may therefore be modulated via distinct amygdalar subnuclei. Further, the functional specialization of the subnuclei may follow an atypical developmental trajectory in ASD. The current spatial resolution of functional images, together with registration to standard brain templates, however, makes it hard to functionally localize subnucleus activation patterns. Future research and advances in neuroimaging methods will help to further define the (dys-)functions of amygdalar subnuclei.

Our data suggest a specific dysfunctional profile of the amygdala in autism: whereas the amygdala in controls accompanies social salience mediation (such as orientation toward the eyes), this process seems to be dysfunctional in ASD. Orientation processing is not absent or replaced; instead, it seems to interact with aberrant aversion processing of eye contact. This interpretation further supports the emerging view that the amygdala is not the cause of the autistic pathophysiology but rather a dysfunctional node within the neuronal network underlying effective social functioning (Paul et al., 2010).

Footnotes

This work was supported by the German Federal Ministry of Education and Research (01GW0723), the Max Planck Society, and the German Research Foundation (Cluster of Excellence “Language of Emotion,” EXC 302). We thank Zeynep M. Saygin and Claudia Preuschhof for comments on the manuscript, Hannah Bruehl for discussion of data analysis, and Rosa Steimke for help in data acquisition.

References

- Abrahams BS, Geschwind DH. Connecting genes to brain in the autism spectrum disorders. Arch Neurol. 2010;67:395–399. doi: 10.1001/archneurol.2010.47.

- Adolphs R. Looking at other people: mechanisms for social perception revealed in subjects with focal amygdala damage. Novartis Found Symp. 2007;278:146–159.

- Adolphs R, Tranel D, Damasio H, Damasio AR. Fear and the human amygdala. J Neurosci. 1995;15:5879–5891. doi: 10.1523/JNEUROSCI.15-09-05879.1995.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders. 4th ed. Washington, DC: American Psychiatric Association; 1994.

- Ball T, Rahm B, Eickhoff SB, Schulze-Bonhage A, Speck O, Mutschler I. Response properties of human amygdala subregions: evidence based on functional MRI combined with probabilistic anatomical maps. PLoS One. 2007;2:e307. doi: 10.1371/journal.pone.0000307.

- Baron-Cohen S, Ring HA, Wheelwright S, Bullmore ET, Brammer MJ, Simmons A, Williams SC. Social intelligence in the normal and autistic brain: an fMRI study. Eur J Neurosci. 1999;11:1891–1898. doi: 10.1046/j.1460-9568.1999.00621.x.

- Baron-Cohen S, Wheelwright S, Skinner R, Martin J, Clubley E. The autism-spectrum quotient (AQ): evidence from Asperger syndrome/high-functioning autism, males and females, scientists and mathematicians. J Autism Dev Disord. 2001a;31:5–17. doi: 10.1023/a:1005653411471.

- Baron-Cohen S, Wheelwright S, Hill J, Raste Y, Plumb I. The “Reading the Mind in the Eyes” Test revised version: a study with normal adults, and adults with Asperger syndrome or high-functioning autism. J Child Psychol Psychiatry. 2001b;42:241–251.

- Chakrabarti B, Baron-Cohen S. Variation in the human cannabinoid receptor CNR1 gene modulates gaze duration for happy faces. Mol Autism. 2011;2:10. doi: 10.1186/2040-2392-2-10.

- Corbett BA, Carmean V, Ravizza S, Wendelken C, Henry ML, Carter C, Rivera SM. A functional and structural study of emotion and face processing in children with autism. Psychiatry Res. 2009;173:196–205. doi: 10.1016/j.pscychresns.2008.08.005.

- Critchley HD, Daly EM, Bullmore ET, Williams SC, Van Amelsvoort T, Robertson DM, Rowe A, Phillips M, McAlonan G, Howlin P, Murphy DG. The functional neuroanatomy of social behaviour: changes in cerebral blood flow when people with autistic disorder process facial expressions. Brain. 2000;123:2203–2212. doi: 10.1093/brain/123.11.2203.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, Alexander AL, Davidson RJ. Gaze fixation and the neural circuitry of face processing in autism. Nat Neurosci. 2005;8:519–526. doi: 10.1038/nn1421.

- Dawson G, Meltzoff AN, Osterling J, Rinaldi J, Brown E. Children with autism fail to orient to naturally occurring social stimuli. J Autism Dev Disord. 1998;28:479–485. doi: 10.1023/a:1026043926488.

- De Martino B, Camerer CF, Adolphs R. Amygdala damage eliminates monetary loss aversion. Proc Natl Acad Sci U S A. 2010;107:3788–3792. doi: 10.1073/pnas.0910230107.

- Dziobek I, Fleck S, Kalbe E, Rogers K, Hassenstab J, Brand M, Kessler J, Woike JK, Wolf OT, Convit A. Introducing MASC: a movie for the assessment of social cognition. J Autism Dev Disord. 2006;36:623–636. doi: 10.1007/s10803-006-0107-0.

- Dziobek I, Rogers K, Fleck S, Bahnemann M, Heekeren HR, Wolf OT, Convit A. Dissociation of cognitive and emotional empathy in adults with Asperger syndrome using the Multifaceted Empathy Test (MET). J Autism Dev Disord. 2008;38:464–473. doi: 10.1007/s10803-007-0486-x.

- Dziobek I, Bahnemann M, Convit A, Heekeren HR. The role of the fusiform-amygdala system in the pathophysiology of autism. Arch Gen Psychiatry. 2010;67:397–405. doi: 10.1001/archgenpsychiatry.2010.31.

- Freese JL, Amaral DG. Neuroanatomy of the primate amygdala. In: Whalen PJ, Phelps EA, editors. The human amygdala. New York: Guilford; 2009. pp. 3–43.

- Gallagher M, Holland PC. The amygdala complex: multiple roles in associative learning and attention. Proc Natl Acad Sci U S A. 1994;91:11771–11776. doi: 10.1073/pnas.91.25.11771.

- Gamer M, Büchel C. Amygdala activation predicts gaze toward fearful eyes. J Neurosci. 2009;29:9123–9126. doi: 10.1523/JNEUROSCI.1883-09.2009.

- Gamer M, Zurowski B, Büchel C. Different amygdala subregions mediate valence-related and attentional effects of oxytocin in humans. Proc Natl Acad Sci U S A. 2010;107:9400–9405. doi: 10.1073/pnas.1000985107.

- Gillberg C, Råstam M, Wentz E. The Asperger Syndrome (and high-functioning autism) Diagnostic Interview (ASDI): a preliminary study of a new structured clinical interview. Autism. 2001;5:57–66. doi: 10.1177/1362361301005001006.

- Gloor P. The amygdaloid system. In: Gloor P, editor. The temporal lobe and limbic system. New York: Oxford UP; 1997. pp. 591–721.

- Goeleven E, De Raedt R, Leyman L, Verschuere B. The Karolinska Directed Emotional Faces: a validation study. Cogn Emot. 2008;22:1094–1118.

- Grelotti DJ, Gauthier I, Schultz RT. Social interest and the development of cortical face specialization: what autism teaches us about face processing. Dev Psychobiol. 2002;40:213–225. doi: 10.1002/dev.10028.

- Horn W. Das Leistungsprüfsystem (L-P-S). Göttingen: Hogrefe; 1962.

- Hutt C, Ounsted C. Biological significance of gaze aversion with particular reference to syndrome of infantile autism. Behav Sci. 1966;11:346–356. doi: 10.1002/bs.3830110504.

- Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5:143–156. doi: 10.1016/s1361-8415(01)00036-6.

- Joseph RM, Ehrman K, McNally R, Keehn B. Affective response to eye contact and face recognition ability in children with ASD. J Int Neuropsychol Soc. 2008;14:947–955. doi: 10.1017/S1355617708081344.

- Juhola M. Median filtering is appropriate to signals of saccadic eye movements. Comput Biol Med. 1991;21:43–49. doi: 10.1016/0010-4825(91)90034-7.

- Kawashima R, Sugiura M, Kato T, Nakamura A, Hatano K, Ito K, Fukuda H, Kojima S, Nakamura K. The human amygdala plays an important role in gaze monitoring. A PET study. Brain. 1999;122:779–783. doi: 10.1093/brain/122.4.779.

- Kirchner J, Hatri A, Heekeren HR, Dziobek I. Autistic symptomatology, face processing abilities, and eye fixation patterns. J Autism Dev Disord. 2011;41:158–167. doi: 10.1007/s10803-010-1032-9.

- Kleinhans NM, Richards T, Sterling L, Stegbauer KC, Mahurin R, Johnson LC, Greenson J, Dawson G, Aylward E. Abnormal functional connectivity in autism spectrum disorders during face processing. Brain. 2008;131:1000–1012. doi: 10.1093/brain/awm334.

- Kleinhans N, Richards T, Johnson LC, Weaver KE, Greenson J, Dawson G, Aylward E. fMRI evidence of neural abnormalities in the subcortical face processing system in ASD. Neuroimage. 2011;54:697–704. doi: 10.1016/j.neuroimage.2010.07.037.

- Kliemann D, Dziobek I, Hatri A, Steimke R, Heekeren HR. Atypical reflexive gaze patterns on emotional faces in autism spectrum disorders. J Neurosci. 2010;30:12281–12287. doi: 10.1523/JNEUROSCI.0688-10.2010.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Kylliäinen A, Hietanen JK. Skin conductance responses to another person's gaze in children with autism. J Autism Dev Disord. 2006;36:517–525. doi: 10.1007/s10803-006-0091-4.

- LeDoux J. Emotional networks and motor control: a fearful view. Prog Brain Res. 1996;107:437–446. doi: 10.1016/s0079-6123(08)61880-4.

- Leekam SR, Hunnisett E, Moore C. Targets and cues: gaze-following in children with autism. J Child Psychol Psychiatry. 1998;39:951–962.

- Lehrl S, Triebig G, Fischer B. Multiple choice vocabulary test MWT as a valid and short test to estimate premorbid intelligence. Acta Neurol Scand. 1995;91:335–345. doi: 10.1111/j.1600-0404.1995.tb07018.x.

- Lord C, Rutter M, Le Couteur A. Autism Diagnostic Interview-Revised: a revised version of a diagnostic interview for caregivers of individuals with possible pervasive developmental disorders. J Autism Dev Disord. 1994;24:659–685. doi: 10.1007/BF02172145.

- Lundqvist D, Flykt A, Öhman A. The Karolinska Directed Emotional Faces (KDEF). CD-ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet; 1998.

- McDonald AJ. Cell types and intrinsic connections of the amygdala. In: Aggleton J, editor. The amygdala: neurobiological aspects of emotion, memory, and mental dysfunction. New York: Wiley; 1992. pp. 67–96.

- Morris JS, deBonis M, Dolan RJ. Human amygdala responses to fearful eyes. Neuroimage. 2002;17:214–222. doi: 10.1006/nimg.2002.1220.

- Nation K, Penny S. Sensitivity to eye gaze in autism: is it normal? Is it automatic? Is it social? Dev Psychopathol. 2008;20:79–97. doi: 10.1017/S0954579408000047.

- Neumann D, Spezio ML, Piven J, Adolphs R. Looking you in the mouth: abnormal gaze in autism resulting from impaired top-down modulation of visual attention. Soc Cogn Affect Neurosci. 2006;1:194–202. doi: 10.1093/scan/nsl030.

- Nodes T, Gallagher N. Median filters: some modifications and their properties. IEEE Trans ASSP. 1982;30:739–746.

- Paul LK, Corsello C, Tranel D, Adolphs R. Does bilateral damage to the human amygdala produce autistic symptoms? J Neurodev Disord. 2010;2:165–173. doi: 10.1007/s11689-010-9056-1.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. J Autism Dev Disord. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Price JL, Amaral DG. An autoradiographic study of the projections of the central nucleus of the monkey amygdala. J Neurosci. 1981;1:1242–1259. doi: 10.1523/JNEUROSCI.01-11-01242.1981.

- Richer JM, Coss RG. Gaze aversion in autistic and normal children. Acta Psychiatr Scand. 1976;53:193–210. doi: 10.1111/j.1600-0447.1976.tb00074.x.

- Saygin ZM, Osher DE, Augustinack J, Fischl B, Gabrieli JD. Connectivity-based segmentation of human amygdala nuclei using probabilistic tractography. Neuroimage. 2011;56:1353–1361. doi: 10.1016/j.neuroimage.2011.03.006.

- Scherer SW, Dawson G. Risk factors for autism: translating genomic discoveries into diagnostics. Hum Genet. 2011;130:123–148. doi: 10.1007/s00439-011-1037-2.

- Schultz RT. Developmental deficits in social perception in autism: the role of the amygdala and fusiform face area. Int J Dev Neurosci. 2005;23:125–141. doi: 10.1016/j.ijdevneu.2004.12.012.

- Smith SM, Jenkinson M, Woolrich MW, Beckmann CF, Behrens TE, Johansen-Berg H, Bannister PR, De Luca M, Drobnjak I, Flitney DE, Niazy RK, Saunders J, Vickers J, Zhang Y, De Stefano N, Brady JM, Matthews PM. Advances in functional and structural MR image analysis and implementation as FSL. Neuroimage. 2004;23(Suppl 1):S208–S219. doi: 10.1016/j.neuroimage.2004.07.051.

- Spezio ML, Huang PY, Castelli F, Adolphs R. Amygdala damage impairs eye contact during conversations with real people. J Neurosci. 2007;27:3994–3997. doi: 10.1523/JNEUROSCI.3789-06.2007.

- Stork M. Median filters theory and applications. Third International Conference on Electrical and Electronics Engineering Papers-Chamber of Electrical Engineering; December 3–7; Bursa, Turkey. 2003.

- Straube T, Pohlack S, Mentzel HJ, Miltner WHR. Differential amygdala activation to negative and positive emotional pictures during an indirect task. Behav Brain Res. 2008;191:285–288. doi: 10.1016/j.bbr.2008.03.040.

- Whalen PJ. The uncertainty of it all. Trends Cogn Sci. 2007;11:499–500. doi: 10.1016/j.tics.2007.08.016.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, Johnstone T. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.

- Yoshida W, Dziobek I, Kliemann D, Heekeren HR, Friston KJ, Dolan RJ. Cooperation and heterogeneity of the autistic mind. J Neurosci. 2010;30:8815–8818. doi: 10.1523/JNEUROSCI.0400-10.2010.