Abstract

A central controversy in the field of attention is how the brain deals with emotional distractors and to what extent they capture attentional processing resources reflexively due to their inherent significance for guidance of adaptive behavior and survival. In particular, the time course of competitive interactions in early visual areas, and whether masking of briefly presented emotional stimuli can inhibit biasing of processing resources in these areas, are currently unknown. We recorded frequency-tagged potentials evoked by a flickering target detection task in the foreground of briefly presented emotional or neutral pictures that were followed by a mask in human subjects. We observed greater competition for processing resources in early visual cortical areas with briefly presented emotional relative to neutral pictures ∼275 ms after picture offset. This was paralleled by a reduction of target detection rates in trials with emotional pictures ∼400 ms after picture offset. Our finding that briefly presented emotional distractors are able to bias attention well after their offset provides evidence for a rather slow feedback or reentrant neural competition mechanism for emotional distractors that continues after the offset of the emotional stimulus.

Introduction

Our study focuses on a central controversy in the field of attention, namely to what extent emotional distractors bias competition for processing resources in visual cortex (cf. Öhman et al., 2001; Vuilleumier et al., 2001; Pessoa et al., 2002). The current debate is fed by experimental findings suggesting that emotional stimuli can capture attention reflexively: for example, emotional stimuli are detected very rapidly in visual search tasks (cf. Öhman et al., 2001) or activate subcortical structures, in particular the amygdala, in spatial orienting tasks when they are presented at the to-be-ignored location (cf. Vuilleumier et al., 2001). This view was challenged by the observation of equal amygdala activation for emotional and neutral faces under certain conditions in a spatial attention task (Pessoa et al., 2002), leading to the claim that emotional stimuli are under top-down control (Holmes et al., 2003).

To further detail the temporal dynamics of such emotion–attention interactions, we recorded frequency-tagged evoked potentials elicited by flickering stimuli that also formed the attentional task while emotional or neutral pictures from the International Affective Picture System (IAPS; Lang et al., 1997) were briefly and unpredictably presented in the background. We used a short picture presentation time of 200 ms, which allows one fixation only (Christianson et al., 1991) but is sufficient for emotional content categorization (Thorpe et al., 1996; Schupp et al., 2004). Thus, brief presentations and masking may be more suitable for assessing the immediate affective impact of a stimulus without further elaborative and (emotional) regulating processes inherent to longer presentation times (Larson et al., 2005).

We measured the time course of distraction from the foreground target detection task as a function of emotional content of background pictures by recording steady-state visual evoked potentials (SSVEPs) elicited by flickering dots as well as the time course of detection rates of coherent motion targets of these dots. The SSVEP is the oscillatory field potential generated by visual cortical neurons in response to a flickering stimulus that indexes neural activity related to stimulus processing continuously, thereby providing a sensitive and direct neuronal measure of the time course of the allocation of processing resources (Müller et al., 1998; Müller, 2008; Andersen and Müller, 2010). The sources of the SSVEP signal are in early visual processing areas (Müller et al., 2006; Di Russo et al., 2007; Andersen et al., 2008), which are similar to the ones activated by IAPS pictures (Bradley et al., 2003; Moratti et al., 2004). This should result in maximal competition for processing resources given overlap in neural activation patterns (Desimone and Duncan, 1995).

Materials and Methods

Subjects and EEG recording

Twenty right-handed subjects between the ages of 19 and 29 years (mean age of 24.25 years, 10 female) with normal or corrected-to-normal vision gave informed written consent, and the experiment was conducted in accordance with the Declaration of Helsinki. EEG was recorded from 64 Ag–AgCl scalp electrodes that were mounted in an elastic cap at a sampling rate of 256 Hz using a BioSemi ActiveTwo amplifier system (BioSemi). Lateral eye movements were monitored with a bipolar outer canthus montage (horizontal electrooculogram). Vertical eye movements and blinks were monitored with a bipolar montage positioned below and above the right eye (vertical electrooculogram).

Stimuli and procedure

Stimuli were presented centrally on a black background on a 19 inch computer screen set to a refresh rate of 60 Hz and viewed at a distance of 80 cm. Each picture subtended 12.8° × 9.6° of visual angle. The luminance of the experimental display was 30 cd/m² on average and did not differ between picture sets. For each valence category, 45 pictures were chosen on the basis of normative valence and arousal ratings and were presented twice throughout the experiment in randomized order (no repetition within the next three pictures), resulting in 90 trials per condition. Picture categories differed on normative Self-Assessment Manikin ratings of valence and arousal (neutral: 5.70 and 3.76; pleasant: 7.30 and 5.60; unpleasant: 2.22 and 6.21, respectively). The average RGB values of the three picture sets were equalized in a two-step process. First, pictures were chosen so as to minimize differences in average RGB values. Second, any remaining differences were removed by multiplying the RGB values of each picture in a set by the ratio of the average RGB values across all pictures to the average RGB values for that set. For a baseline measure and a control condition, we used scrambled versions of these pictures. Pictures were scrambled by applying a Fourier transform to each image, yielding the amplitude and phase components. The original phase spectrum was then replaced by random phase values before the scrambled image was rebuilt with an inverse Fourier transform. As a consequence, scrambled pictures had the same amplitude spectrum as the original pictures; i.e., they were equal in global physical properties, while any content-related information was removed.
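The scrambling procedure described above can be illustrated with a minimal sketch that phase-scrambles a single image channel using NumPy. This is not the code used in the study: the function name `phase_scramble`, the use of a real-valued noise image as the source of the random phases (which keeps the rebuilt image real-valued), and the random stand-in picture are all assumptions made for this example.

```python
import numpy as np

def phase_scramble(image, rng):
    """Keep the amplitude spectrum of `image`, replace its phase spectrum."""
    amplitude = np.abs(np.fft.fft2(image))
    # Assumption: random phases are taken from the spectrum of a real noise
    # image, so they are conjugate-symmetric and the inverse FFT stays real.
    noise = rng.random(image.shape)
    random_phase = np.angle(np.fft.fft2(noise))
    scrambled = np.fft.ifft2(amplitude * np.exp(1j * random_phase))
    return scrambled.real

rng = np.random.default_rng(0)
picture = rng.random((64, 64))          # hypothetical stand-in for one channel
scrambled = phase_scramble(picture, rng)

# The amplitude spectra of original and scrambled image should agree.
same_amplitude = np.allclose(np.abs(np.fft.fft2(scrambled)),
                             np.abs(np.fft.fft2(picture)))
```

For a color picture, one plausible choice would be to apply the same random phase spectrum to each channel, but the source does not specify how color channels were handled.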

In each trial, 100 yellow dots flickered at a rate of 10 Hz (each dot had a size of 0.6° × 0.6° of visual angle) and were presented together with the background picture for a period of 4100 ms (i.e., 41 cycles, each with 3 frames “on” and 3 frames “off”). Every frame of refresh (i.e., every 16.67 ms), each dot moved in a random direction (up, down, left, or right) by 0.04°. This entirely random motion was interrupted by short intervals (3 cycles of 10 Hz or 300 ms) of coherent motion (35% coherence) in one of the four cardinal directions (targets). Subjects were instructed to press a button upon detection of such coherent motion targets. In each trial, between zero and four coherent motion targets could occur unpredictably for the subject. Over the entire experiment, the occurrence of such targets was equally distributed across all experimental conditions. Furthermore, to allow for time-sensitive analysis of the behavioral data before and after picture change, targets were equally distributed across the entire trial duration within small time segments of 1 cycle or 100 ms each.
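The frame-based timing given above can be verified with a short calculation. The sketch below only restates the numbers from the text (60 Hz refresh, 3 frames on and 3 frames off, 41 cycles, targets lasting 3 cycles); the variable names are illustrative.

```python
REFRESH_HZ = 60
FRAMES_ON, FRAMES_OFF = 3, 3
N_CYCLES = 41
TARGET_CYCLES = 3

frames_per_cycle = FRAMES_ON + FRAMES_OFF
flicker_hz = REFRESH_HZ / frames_per_cycle                 # flicker rate of the dots
n_frames = N_CYCLES * frames_per_cycle                     # frames per trial
trial_ms = n_frames * 1000 / REFRESH_HZ                    # trial duration in ms
target_frames = TARGET_CYCLES * frames_per_cycle           # frames per target
target_ms = target_frames * 1000 / REFRESH_HZ              # target duration in ms

# On/off state of the dots for every frame of one trial.
dots_visible = [(f % frames_per_cycle) < FRAMES_ON for f in range(n_frames)]

print(flicker_hz, trial_ms, target_ms)
```

With these values the flicker rate is 10 Hz, a trial spans 246 frames (4100 ms), and each coherent motion target spans 18 frames (300 ms).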

Each trial started with the simultaneous presentation of a scrambled version of the IAPS picture and the yellow dots (Fig. 1). To ensure the unpredictability of the first picture change, the time point of change was randomly assigned to an early (13% of trials, 300–1200 ms), middle (60% of trials, 1300–2100 ms), or late (27% of trials, 2200–4100 ms) time window after stimulus onset. The trials in the early and late time windows, which made up the smaller proportion of trials, served as “catch trials” and were not included in the analysis. In sum, the experiment consisted of 10 experimental blocks of 60 trials each, but only the 360 trials with a picture change in the middle time window entered the analysis.

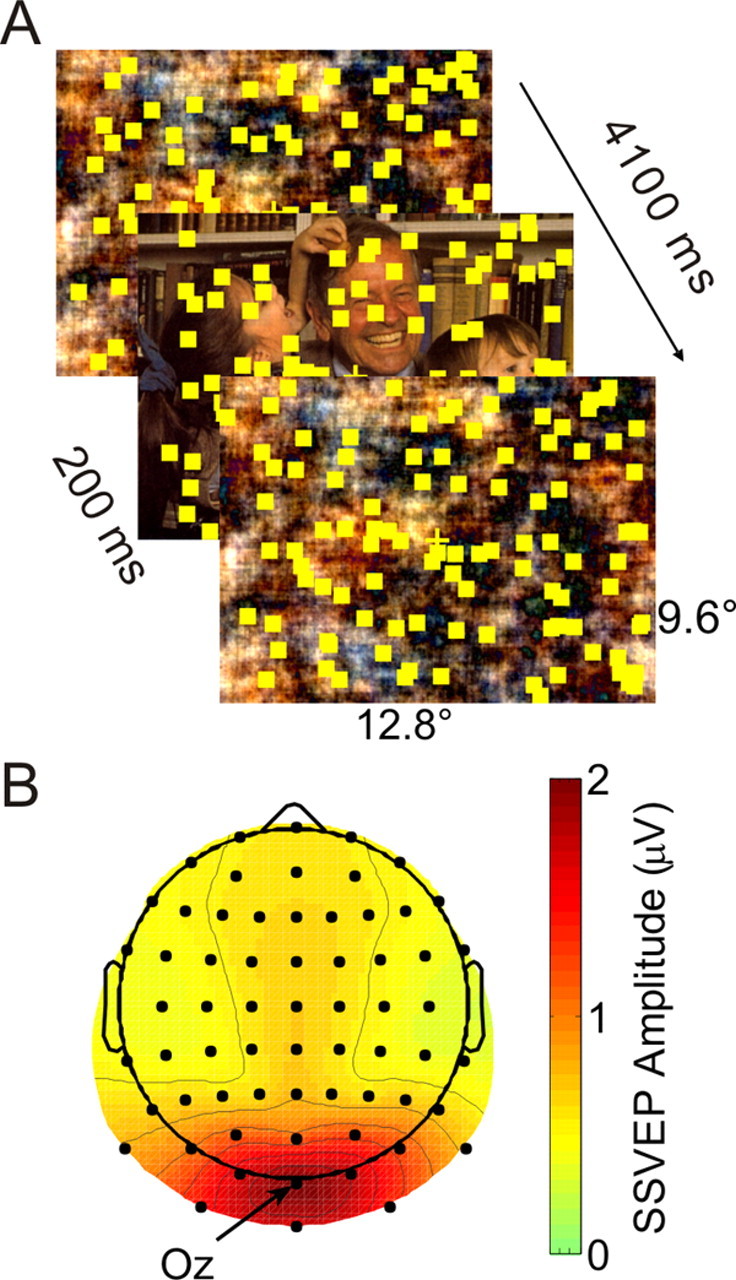

Figure 1.

Schematic stimulus design and SSVEP topography. A, Schematic stimulus design. Each trial lasted for 4100 ms and started with the presentation of a scrambled version of an IAPS picture. After a variable interval, the scrambled picture changed to the concrete version for 200 ms in four steps and back to a new scrambled form again in four steps. Subjects attended to the flickering and moving dots to detect and to respond to intervals of coherent motion of a subset of dots. B, Grand mean spline interpolated voltage map of SSVEP amplitude across all subjects and experimental conditions averaged across the whole analysis epoch. Electrode Oz with greatest SSVEP amplitude is indicated.

To avoid an abrupt onset or offset of the background pictures that might have elicited a visual evoked potential that could have interfered with the SSVEP amplitude, the picture change (back and forth) was done in a stepwise manner. Over four frames, a weighted average of scrambled and original picture was presented with the weight for the picture increasing by 20% per frame (i.e., 20%, 40%, 60%, and 80%). The onset of the picture was defined as the first time point at which the weight for the picture exceeded 50% (i.e., the third frame with a weight of 60%). For picture offset, the reverse sequence of weighted images was presented. Thus, a full 100% view of the picture was present for 133 ms only.
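The 133 ms figure follows from the cross-fade schedule, as the following sketch shows. It assumes, by symmetry with the stated onset definition, that picture offset is the first frame at which the picture weight falls below 50% again, so that the 200 ms presentation corresponds to 12 frames with a weight above 50%. The `blend` helper and all variable names are illustrative.

```python
FRAME_MS = 1000 / 60
RAMP = [0.2, 0.4, 0.6, 0.8]        # picture weight during the four fade-in frames
PRESENTATION_FRAMES = 12           # 200 ms between onset and offset at 60 Hz

def blend(scrambled_px, picture_px, weight):
    """Weighted average of a scrambled and an original pixel value."""
    return (1 - weight) * scrambled_px + weight * picture_px

# Fade frames that already count as "picture present" (weight above 50%).
fade_in_counted = sum(w > 0.5 for w in RAMP)
fade_out_counted = sum(w > 0.5 for w in reversed(RAMP))

full_frames = PRESENTATION_FRAMES - fade_in_counted - fade_out_counted
full_view_ms = full_frames * FRAME_MS

# Complete weight sequence for one picture change, frame by frame.
weights = RAMP + [1.0] * full_frames + RAMP[::-1]

print(full_frames, round(full_view_ms, 1))
```

Under this assumption, two fade-in frames and two fade-out frames fall inside the 200 ms window, leaving 8 frames (about 133 ms) at full weight.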

To test whether a change in the background per se distracts attention from the foreground task, we included a condition in which the scrambled picture briefly changed in the same manner as concrete pictures to another scrambled picture and then changed back to a new scrambled view. A separate set of pictures for all valence categories was used for catch trials and during training.

Data analysis

EEG data.

Epochs were extracted from 500 ms before to 1600 ms after picture change, and the mean and any linear trend were subtracted from each trial. Trials with eye blinks or movements were rejected using automated in-house routines, and the result was verified by manual inspection. The remaining trials were subjected to automated artifact rejection and correction using an established method (SCADS, Junghöfer et al., 2000) and then transformed to average reference. On average, 11.7% of all trials were rejected from further analysis. All remaining trials of the same condition were averaged and the time course of SSVEP amplitudes was extracted by means of a Gabor filter (Gabor, 1946) with a time resolution of ±88.25 ms (full-width at half-maximum) at the central occipital electrode Oz, which exhibited the greatest amplitudes in all conditions (Fig. 1B).
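To make the amplitude extraction concrete, the following is a minimal sketch of a Gabor filter, i.e., a Gaussian-windowed complex exponential at the flicker frequency, applied to an averaged time course. It is not the authors' implementation. The sketch assumes that ±88.25 ms corresponds to a Gaussian window with a total full-width at half-maximum of 176.5 ms, truncates the window at ±3 standard deviations, and scales the output so that a pure sinusoid returns its own amplitude; the function name `gabor_amplitude` is hypothetical.

```python
import cmath
import math

def gabor_amplitude(signal, fs, freq, fwhm_s):
    """Amplitude time course of `signal` at `freq` (Hz), sampled at `fs` (Hz)."""
    sigma = fwhm_s / (2 * math.sqrt(2 * math.log(2)))   # Gaussian SD in seconds
    half = int(round(3 * sigma * fs))                   # assumption: +/- 3 SD window
    lags = range(-half, half + 1)
    window = [math.exp(-0.5 * (k / fs / sigma) ** 2) for k in lags]
    kernel = [w * cmath.exp(-2j * math.pi * freq * k / fs)
              for w, k in zip(window, lags)]
    norm = sum(window) / 2      # a cosine of amplitude A yields A/2 * sum(window)
    out = []
    for n in range(half, len(signal) - half):           # skip incomplete edges
        acc = sum(signal[n + k] * kernel[k + half] for k in lags)
        out.append(abs(acc) / norm)
    return out

# Synthetic check: a 10 Hz cosine of amplitude 2.0 sampled at 256 Hz.
fs = 256
signal = [2.0 * math.cos(2 * math.pi * 10 * n / fs) for n in range(fs)]
amplitude = gabor_amplitude(signal, fs, freq=10.0, fwhm_s=0.1765)
```

Because of the temporal extent of the window, each output sample mixes information from neighboring time points, which is why the baseline interval has to be placed well before the picture change.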

A baseline from −300 to −200 ms before picture change was subtracted. This baseline range was chosen so as to strictly exclude any contamination of the baseline by poststimulus processes due to the temporal smearing of the Gabor filter. We found no differences between pleasant and unpleasant background pictures, neither in SSVEP amplitudes nor in target detection rates. Therefore, we averaged SSVEP amplitudes across emotional pictures and tested the averaged signal against the time course for neutral background pictures by means of running two-sided paired t tests against zero for each sampling point. A threshold of α = 0.05 was adopted and only intervals with a minimum of 10 consecutive significant sampling points were reported (Andersen and Müller, 2010).
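The running t test with a minimum run length can be sketched as follows. This is an illustration under stated assumptions, not the analysis code of the study: significance is decided by comparing |t| with the two-sided critical value for 19 degrees of freedom (2.093 at α = 0.05, matching the 20 subjects), and the function names are hypothetical.

```python
import math

T_CRIT_DF19 = 2.093   # two-sided critical t value, alpha = 0.05, df = 19

def paired_t(a, b):
    """Paired t statistic for two equally long lists of per-subject values."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean = sum(diffs) / n
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(var / n)   # raises ZeroDivisionError if var == 0

def significant_runs(t_values, t_crit, min_len=10):
    """Return (first, last) sample indices of runs with |t| > t_crit."""
    runs, start = [], None
    for i, t in enumerate(t_values):
        if abs(t) > t_crit:
            if start is None:
                start = i
        else:
            if start is not None and i - start >= min_len:
                runs.append((start, i - 1))
            start = None
    if start is not None and len(t_values) - start >= min_len:
        runs.append((start, len(t_values) - 1))
    return runs

# Toy t-value time course: one run of 12 samples and one run of 4 samples.
toy_t = [0.0] * 5 + [3.0] * 12 + [0.0] * 3 + [3.0] * 4
runs = significant_runs(toy_t, T_CRIT_DF19)
print(runs)
```

In the toy example only the 12-sample run is reported, while the 4-sample run is discarded as too short. In practice, `paired_t` would be evaluated once per sampling point on the per-subject emotional and neutral amplitudes.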

Source analysis.

Cortical sources of SSVEPs were localized by subjecting the complex valued SSVEP amplitudes in the time range from 450 to 750 ms after stimulus onset to variable resolution electromagnetic tomography (Bosch-Bayard et al., 2001) and statistically comparing the results between emotional and neutral conditions (α = 0.001, Bonferroni corrected for multiple comparisons).

Behavioral data.

Only button presses in a time window between 250 and 900 ms after target onset were considered as correct responses. Hit rates were averaged across two successive time bins, resulting in a temporal resolution of 200 ms. As with SSVEP amplitudes, we averaged across pleasant and unpleasant background pictures and tested these time bins by means of paired t tests to determine (1) when hit rates dropped compared to baseline and (2) in which time bin hit rates differed between emotional and neutral background pictures. Given the nature of the task, we observed practically no false alarms (i.e., button press in the absence of a coherent motion event), which restricted our analysis to hit rates instead of d′ values.
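The response scoring and binning described above might be implemented along the following lines. The sketch assumes that target onsets are expressed in milliseconds relative to picture change, that hit rates are first computed in 100 ms bins, and that each pair of successive bins is then averaged with equal weight; the source does not state whether averaging or pooling was used. All function names and the example data are hypothetical.

```python
def is_hit(target_onset_ms, press_times_ms, lo=250, hi=900):
    """A target counts as detected if a press falls 250-900 ms after its onset."""
    return any(lo <= p - target_onset_ms <= hi for p in press_times_ms)

def hit_rates(events, bin_ms=100):
    """events: list of (target_onset_ms, press_times_ms); returns rate per bin start."""
    counts = {}
    for onset, presses in events:
        start = onset // bin_ms * bin_ms            # floor to the bin start
        n, h = counts.get(start, (0, 0))
        counts[start] = (n + 1, h + int(is_hit(onset, presses)))
    return {start: h / n for start, (n, h) in sorted(counts.items())}

def average_pairs(rates):
    """Average successive pairs of bins (assumes bins are contiguous)."""
    starts = sorted(rates)
    return {starts[i]: (rates[starts[i]] + rates[starts[i + 1]]) / 2
            for i in range(0, len(starts) - 1, 2)}

# Made-up example: four targets, three of which were detected in time.
events = [(600, [900]), (650, [1700]), (700, [1000]), (720, [1400])]
rates_100 = hit_rates(events)
rates_200 = average_pairs(rates_100)
print(rates_100, rates_200)
```

In this example the hit rate is 0.5 in the bin starting at 600 ms and 1.0 in the bin starting at 700 ms, giving 0.75 for the combined 200 ms bin.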

Results

Figure 2A depicts SSVEP amplitudes at electrode Oz as a function of time after onset of emotional and neutral background pictures. SSVEP amplitudes dropped with the change to a concrete neutral or emotional picture. The divergence in SSVEP amplitude between emotional and neutral background pictures started very late at 474 ms and lasted until 744 ms (all p values <0.05) after picture onset (see vertical lines in Fig. 2A). Given an overall picture presentation time of 200 ms, emotional pictures withdrew more attentional resources from the foreground task than neutral pictures for 270 ms beginning 274 ms after offset and masking of the picture. No significant differences in SSVEP amplitudes compared to baseline (i.e., before the change) were found at any time point in trials in which the scrambled picture changed to a new scrambled version and back to a new scrambled version.

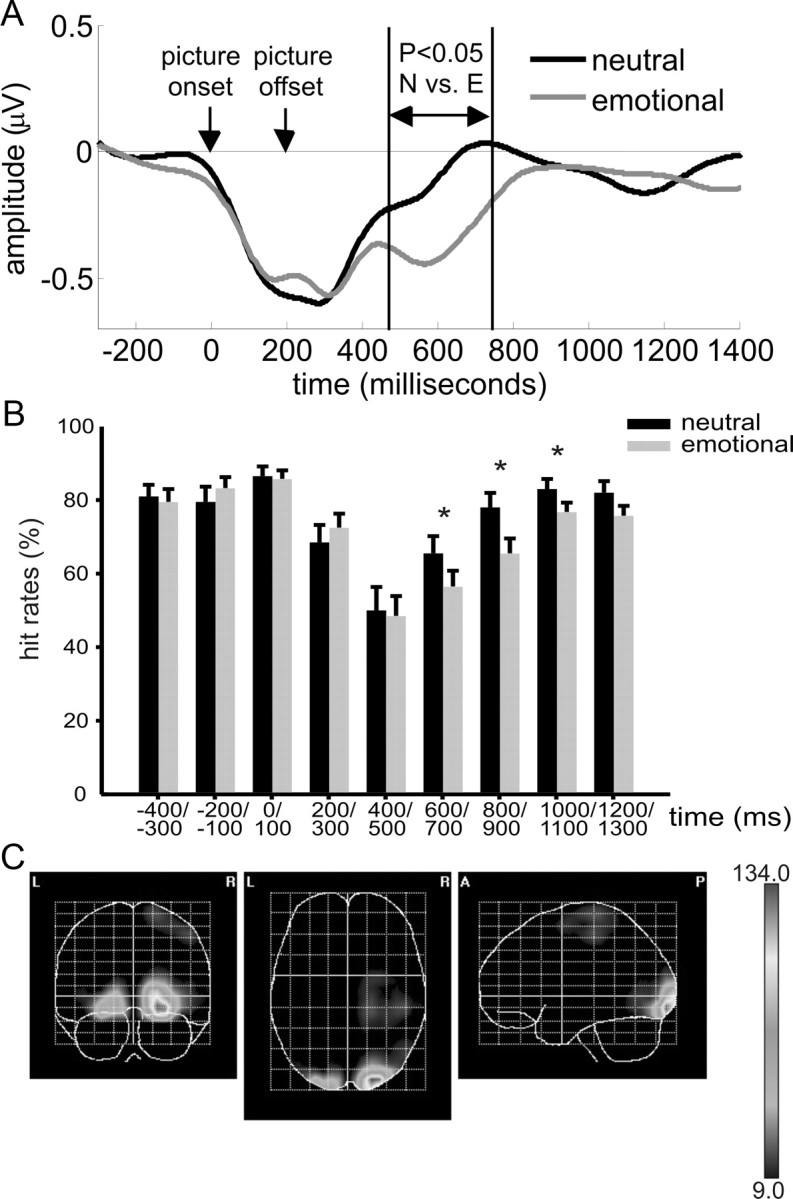

Figure 2.

Time course of SSVEP amplitudes and hit rates and source localization of SSVEP emotion effects. A, Baseline corrected time course of SSVEP amplitudes across all subjects at electrode location Oz for trials with neutral (black line) and emotional (gray line) background pictures. Time point zero indicates the onset of a concrete picture. Picture offset is also indicated. Vertical lines indicate the time window from 474 to 744 ms after onset of the concrete picture with significant differences between neutral and emotional background pictures. B, Time course of mean target hit rates across subjects before (first two windows) and after the change. The change covered the time windows from 0 to 100 ms and ended right with the start of the window from 200 to 300 ms. Significant differences in hit rates between trials with neutral and emotional background pictures are indicated with * (p < 0.05). Error bars correspond to 95% within-subject confidence intervals. C, Statistical parametric maps of the cortical current-density distributions giving rise to the SSVEP-amplitude differences between conditions with neutral and emotional background pictures in the time range from 450 to 750 ms after picture onset. The scale represents t² values, and the threshold of 9.0 corresponds to p < 0.001, corrected for multiple comparisons.

Figure 2B depicts the time course of correctly detected coherent motion targets (i.e., hit rates) before and after picture change for trials with change to neutral and emotional background pictures. Similar to SSVEP amplitudes, hit rates dropped significantly (t(19) = −2.11, p < 0.05) in the time bin 200–300 ms after onset of a concrete picture regardless of emotional valence. The difference in hit rates between emotional and neutral pictures became significant well after the emotional picture was masked with the scrambled version. We found significant differences beginning in the time bin that started 600 ms after initial picture onset (t(19) = 3.02, p < 0.01). The significant drop in hit rates for emotional compared to neutral preceding pictures lasted until the time segment that ended at 1100 ms after initial picture onset. Thus, behavioral costs related to emotional background pictures started 400 ms after disappearance of the emotional picture and lasted for 500 ms. These costs were quite substantial and averaged to ∼10% reduction (t(19) = −6.06, p < 0.0001) in hit rates during that time range. No differences in hit rates compared to baseline were found for any time bin in trials in which the scrambled picture changed to a new scrambled version and back to another scrambled version. Thus, a change in the background alone without concrete picture content did not withdraw attentional resources from the foreground task.

Source localization revealed maximal differences between SSVEPs with neutral or emotional background pictures in posterior medial occipital cortex. This region contains the early visual areas V1, V2, and V3 (Fig. 2C) and was found to be the cortical source of nonspatial attention effects on the SSVEP in previous studies, which used overlapping random dot stimuli (Müller et al., 2006; Andersen et al., 2008; Andersen and Müller, 2010).

Discussion

Affective bias in visual processing during performance of a demanding detection task was triggered by brief presentation of emotional background pictures that were masked by scrambled versions of the same pictures. The short presentation time was chosen to allow for only one fixation of the pictures (Christianson et al., 1991), which affects initial orientation to emotional pictures (Calvo and Lang, 2004; Larson et al., 2005). We were able to show that competition for processing resources in early visual areas took place even after the offset of emotional stimuli, i.e., when pictures were already masked for several hundred milliseconds. Thus, competitive interactions seem to be “ballistic” in the sense that once they are activated, they continue after the disappearance and masking of the affective stimulus.

The present study was not designed to directly test the possibility of fast feedforward modulation of early visual areas by means of subcortical structures such as the amygdala. However, although the amygdala has direct projections to visual cortical areas (Freese and Amaral, 2005), recent intracranial recordings in human amygdala have questioned fast amygdala activation by affective stimuli (Krolak-Salmon et al., 2004; Pourtois et al., 2010) (but see Luo et al., 2010). In these studies, amygdala activation to fearful compared to neutral faces peaked in a latency range of ∼200 ms, which is not in accordance with fast amygdala activation through a shortcut thalamus connection (LeDoux, 1996). Furthermore, intracranial recordings in human fusiform gyrus showed sustained negative activity at ∼350 ms after onset of emotional faces (Pourtois et al., 2010). This latency range is similar to the time window in which we found that emotional distractors demand processing resources from the foreground task in the present study. Such a long latency is difficult to explain with fast feedforward projections to fusiform gyrus or to early visual areas given that our source analysis confirmed that greatest competitive interactions took place in early visual areas including V1–V3. However, neither result excludes a possible fast projection to inferotemporal cortex (IT) that receives dense projections from the amygdala (Freese and Amaral, 2005). Nevertheless, together these results suggest the involvement of top-down feedback mechanisms (Pessoa et al., 2002) or reentrant projections possibly with limbic contributions (cf. Keil et al., 2009) or a mixture of both (for review, see Vuilleumier, 2005) in these competitive interactions. Possible alternative processing pathways that result in greater neocortical control upon the processing of emotional stimuli have recently been put forward (Pessoa and Adolphs, 2010) with the major idea that emotional stimulus processing might be performed in waves of activation which include many visual pathways.

In a recent study, we investigated the neural mechanisms of voluntary attentional shifts to one of two spatially superimposed flickering random dot kinematograms (RDKs) of either red or blue dots (Andersen and Müller, 2010). Shifting attention to one color resulted in an enhanced processing of the attended color RDK, which in turn caused a suppression of SSVEP amplitudes driven by the to-be-ignored RDK. Based on this previous study, we suggest that the reduction of SSVEP amplitude observed in the present study is the consequence of shifting attention to the background pictures. Interestingly, in our previous study suppression of the unattended stimulus was significant from 360 ms after cue onset onwards. Hence, using similar stimuli and methods, we found suppressive effects of voluntary attention, which preceded the earliest affective modulations of SSVEP amplitudes in the present study by >100 ms. The fact that voluntary attentional shifts can occur faster than the affective modulation observed here supports the idea that top-down influence on competitive interactions cannot entirely be excluded as one of the underlying neural mechanisms of the observed results of the present study.

We observed a consistent pattern of affective modulation of SSVEP amplitudes and hit rates, albeit with a slightly later onset for hit rates and a somewhat longer duration. Given the lower temporal resolution of our behavioral data, which were analyzed in bins of 200 ms, an exact correspondence of the time courses of both measures is not to be expected. Although SSVEP amplitudes and behavioral data are different in nature, in previous studies in which we investigated the time course of SSVEP amplitudes, we always found a close relation between the two measures, even with correlations in the 0.8 range, suggesting that the two measures are closely linked to each other (cf. Müller et al., 1998, 2008; Andersen et al., 2008; Müller, 2008; Hindi Attar et al., 2010).

Taken together, the time courses of SSVEP amplitudes and hit rates suggest two successive mechanisms. The initial drop might be related to the capture of attention by the onset of a new concrete object (Yantis and Hillstrom, 1994; Hindi Attar et al., 2010), with no difference between neutral and emotional pictures. Following that, SSVEP amplitudes and hit rates differed between emotional and neutral background pictures, clearly suggesting affective content-related resource withdrawal from the foreground task. Event-related potential studies on the processing of briefly presented IAPS pictures revealed an early posterior negativity relative to neutral pictures that occurs in a time window between 200 and 350 ms after the onset of the picture (Schupp et al., 2004), and is seen as the neural signature of early selective processing of affective cues and emotional content (cf. Bradley et al., 2007). In the present study, affective modulation of SSVEP amplitude started right after that processing stage, which might indicate that even briefly presented and subsequently masked complex pictures require some amount of cortical processing to extract the emotional content.

In conclusion, our data show that the processing bias to emotional pictures follows a time course that includes a number of coordinated processing steps, but once triggered, it continues. While detecting and processing emotional stimuli is fast, the ensuing changes in attention and behavior are rather slow.

Footnotes

This research was supported by the Deutsche Forschungsgemeinschaft. We thank Renate Zahn for help in data recording.

The authors declare no competing financial interests.

References

- Andersen SK, Müller MM. Behavioral performance follows time-course of neural facilitation and suppression during cued shifts of feature-selective attention. Proc Natl Acad Sci U S A. 2010;107:13878–13882. doi: 10.1073/pnas.1002436107.

- Andersen SK, Hillyard SA, Müller MM. Attention facilitates multiple stimulus features in parallel in human visual cortex. Curr Biol. 2008;18:1006–1009. doi: 10.1016/j.cub.2008.06.030.

- Bosch-Bayard J, Valdés-Sosa P, Virues-Alba T, Aubert-Vázquez E, John ER, Harmony T, Riera-Díaz J, Trujillo-Barreto N. 3D statistical parametric mapping of EEG source spectra by means of variable resolution electromagnetic tomography (VARETA). Clin Electroencephalogr. 2001;32:47–61. doi: 10.1177/155005940103200203.

- Bradley MM, Sabatinelli D, Lang PJ, Fitzsimmons JR, King W, Desai P. Activation of the visual cortex in motivated attention. Behavioral Neuroscience. 2003;117:369–380. doi: 10.1037/0735-7044.117.2.369.

- Bradley MM, Hamby S, Löw A, Lang PJ. Brain potentials in perception: picture complexity and emotional arousal. Psychophysiology. 2007;44:364–373. doi: 10.1111/j.1469-8986.2007.00520.x.

- Calvo MC, Lang PJ. Gaze patterns when looking at emotional pictures: motivationally biased attention. Motiv Emot. 2004;28:221–243.

- Christianson SA, Loftus EF, Hoffman H, Loftus GR. Eye fixations and memory for emotional events. J Exp Psychol Learn Mem Cogn. 1991;17:693–701. doi: 10.1037//0278-7393.17.4.693.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Di Russo F, Pitzalis S, Aprile T, Spitoni G, Patria F, Stella A, Spinelli D, Hillyard SA. Spatiotemporal analysis of the cortical sources of the steady-state visual evoked potential. Hum Brain Mapp. 2007;28:323–334. doi: 10.1002/hbm.20276.

- Freese JL, Amaral DG. The organization of projections from the amygdala to visual cortical areas TE and V1 in the macaque monkey. J Comp Neurol. 2005;486:295–317. doi: 10.1002/cne.20520.

- Gabor D. Theory of communication. Proc Inst Electr Eng. 1946;93:429–441.

- Hindi Attar C, Andersen SK, Müller MM. Time-course of affective bias in visual attention: convergent evidence from steady-state visual evoked potentials and behavioral data. Neuroimage. 2010;53:1326–1333. doi: 10.1016/j.neuroimage.2010.06.074.

- Holmes A, Vuilleumier P, Eimer M. The processing of emotional facial expression is gated by spatial attention: evidence from event-related brain potentials. Brain Res Cogn Brain Res. 2003;16:174–184. doi: 10.1016/s0926-6410(02)00268-9.

- Junghöfer M, Elbert T, Tucker DM, Rockstroh B. Statistical correction of artifacts in dense array EEG/MEG studies. Psychophysiology. 2000;37:523–532.

- Keil A, Sabatinelli D, Ding M, Lang PJ, Ihssen N, Heim S. Re-entrant projections modulate visual cortex in affective perception: evidence from Granger causality analysis. Hum Brain Mapp. 2009;30:532–540. doi: 10.1002/hbm.20521.

- Krolak-Salmon P, Hénaff M-A, Vighetto A, Bertrand O, Mauguière F. Early amygdala reaction to fear spreading in occipital, temporal, and frontal cortex: a depth electrode ERP study in human. Neuron. 2004;42:665–676. doi: 10.1016/s0896-6273(04)00264-8.

- Lang PJ, Bradley MM, Cuthbert BN. International affective picture system (IAPS): technical manual and affective ratings. Gainesville, FL: University of Florida, The Center for Research in Psychophysiology; 1997.

- Larson CL, Ruffalo D, Nietert JY, Davidson RJ. Stability of emotion-modulated startle during short and long picture presentation. Psychophysiology. 2005;42:604–610. doi: 10.1111/j.1469-8986.2005.00345.x.

- LeDoux J. The emotional brain: the mysterious underpinning of emotional life. New York: Simon and Schuster; 1996.

- Luo Q, Holroyd T, Majestic C, Cheng X, Schechter J, Blair RJ. Emotional automaticity is a matter of timing. J Neurosci. 2010;30:5825–5829. doi: 10.1523/JNEUROSCI.BC-5668-09.2010.

- Moratti S, Keil A, Stolarova M. Motivated attention in emotional picture processing is reflected by activity in cortical attention networks. Neuroimage. 2004;21:954–964. doi: 10.1016/j.neuroimage.2003.10.030.

- Müller MM. Location and features of instructive spatial cues do not influence the time course of covert shifts of visual spatial attention. Biological Psychology. 2008;77:292–303. doi: 10.1016/j.biopsycho.2007.11.003.

- Müller MM, Teder-Sälejärvi W, Hillyard SA. The time course of cortical facilitation during cued shifts of spatial attention. Nat Neurosci. 1998;1:631–634. doi: 10.1038/2865.

- Müller MM, Andersen S, Trujillo NJ, Valdés-Sosa P, Malinowski P, Hillyard SA. Feature-selective attention enhances color signals in early visual areas of the human brain. Proc Natl Acad Sci U S A. 2006;103:14250–14254. doi: 10.1073/pnas.0606668103.

- Müller MM, Andersen SK, Keil A. The time course of competition for visual processing resources between emotional pictures and foreground task. Cereb Cortex. 2008;18:1892–1899. doi: 10.1093/cercor/bhm215.

- Öhman A, Flykt A, Esteves F. Emotion drives attention: detecting the snake in the grass. J Exp Psychol Gen. 2001;130:466–478. doi: 10.1037//0096-3445.130.3.466.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat Rev Neurosci. 2010;11:773–783. doi: 10.1038/nrn2920.

- Pessoa L, McKenna M, Gutierrez E, Ungerleider LG. Neural processing of emotional faces requires attention. Proc Natl Acad Sci U S A. 2002;99:11458–11463. doi: 10.1073/pnas.172403899.

- Pourtois G, Spinelli L, Seeck M, Vuilleumier P. Temporal precedence of emotion over attention modulations in the lateral amygdala: Intracranial ERP evidence from a patient with temporal lobe epilepsy. Cognitive, affective and behavioral neuroscience. 2010;10:83–93. doi: 10.3758/CABN.10.1.83.

- Schupp HT, Junghöfer M, Weike AI, Hamm AO. The selective processing of briefly presented affective pictures: an ERP analysis. Psychophysiology. 2004;41:441–449. doi: 10.1111/j.1469-8986.2004.00174.x.

- Thorpe S, Fize D, Marlot C. Speed of processing in the human visual system. Nature. 1996;381:520–522. doi: 10.1038/381520a0.

- Vuilleumier P. How brains beware: neural mechanisms of emotional attention. Trends Cogn Sci. 2005;9:585–594. doi: 10.1016/j.tics.2005.10.011.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Yantis S, Hillstrom AP. Stimulus-driven attentional capture: evidence from equiluminant visual objects. J Exp Psychol Hum Percept Perform. 1994;20:95–107. doi: 10.1037//0096-1523.20.1.95.