Abstract

Interest has increased recently in correlations across brain regions in the resting-state fMRI blood oxygen level-dependent (BOLD) response, but little is known about the functional significance of these correlations. Here we directly test the behavioral relevance of the resting-state correlation between two face-selective regions in human brain, the occipital face area (OFA) and the fusiform face area (FFA). We found that the magnitude of the resting-state correlation, henceforth called functional connectivity (FC), between OFA and FFA correlates with an individual's performance on a number of face-processing tasks, not non-face tasks. Further, we found that the behavioral significance of the OFA/FFA FC is independent of the functional activation and the anatomical size of either the OFA or FFA, suggesting that face processing depends not only on the functionality of individual face-selective regions, but also on the synchronized spontaneous neural activity between them. Together, these findings provide strong evidence that the functional correlations in the BOLD response observed at rest reveal functionally significant properties of cortical processing.

Introduction

Recently a number of functional magnetic resonance imaging (fMRI) studies have investigated neural activity in the human brain during periods of rest (when no stimuli are presented and no tasks are performed), and found that the spontaneous blood oxygenation level-dependent (BOLD) fluctuations are not random, but correlated across cortical regions with similar functional properties [for review, see Fox and Raichle (2007) and Greicius (2008)]. Further, these functional correlations are thought to reflect functional relationships mediated by anatomical connections (e.g., Vincent et al., 2007; Greicius et al., 2009; Honey et al., 2009). However, despite the abundance of work finding such correlations across cortical regions, little is known about the functional significance of these correlations: is synchronized spontaneous neural activity across cortical regions relevant for behavior, or is it merely epiphenomenal? Here we addressed this question by directly testing the behavioral significance of the resting-state correlations between two face-selective regions in the occipitotemporal cortex that are primarily involved in recognition of individual identity (Haxby et al., 2000; Calder and Young, 2005; Ishai, 2008)—the occipital face area (OFA) (Gauthier et al., 2000) and the fusiform face area (FFA) (Kanwisher et al., 1997)—found to be functionally correlated using resting-state fMRI (Nir et al., 2006; Zhang et al., 2009).

First, we calculated the correlation in spontaneous BOLD fluctuations between OFA and FFA during the resting state in participants, and then behaviorally tested the same participants outside the scanner on a number of face and non-face tasks. If the correlation in spontaneous BOLD fluctuations between OFA and FFA, henceforth referred to as functional connectivity (FC), is behaviorally relevant, then we predict that the magnitude of the OFA/FFA FC will correlate with an individual's performance on the face-processing tasks, not non-face tasks.

Materials and Methods

Participants

Nineteen participants (age: 18–23; 13 female) were recruited for this experiment. All participants were right handed, had normal or corrected-to-normal visual acuity, and gave informed consent. Both the behavioral and fMRI protocol were approved by the Institutional Review Board of Beijing Normal University, Beijing, China.

Experimental design

We used a region of interest (ROI) approach, in which we localized the OFA and FFA (as well as two scene-selective regions, used as control regions) (Localizer runs), and then using an independent set of resting-state data calculated correlations between these predefined category-selective regions (Resting-state runs). Unlike in traditional fMRI studies, the resting-state fMRI data were acquired while participants were instructed to lie still, keep their eyes closed, and think of whatever they would like. Importantly, the Resting-state runs were conducted before the Localizer runs to eliminate the possibility that participants might imagine any stimulus seen in the Localizer runs.

A few days after the scan, the same participants were again tested (outside the scanner) on a set of computerized behavioral tests examining several aspects of face and object processing. Specifically, there were three tests on faces (the face-recognition task, the face-inversion task, and the whole-part task) and four tests on non-face object processing (the object-recognition task, the global-form task, the global-motion task, and the global-local task). One participant participated only in the face-inversion task and the whole-part task. Finally, we investigated how the resting-state correlation between the OFA and FFA related to an individual's performance on the above face- and object-processing tasks.

fMRI scanning

Scanning was done on a 3 T Siemens Trio scanner at the Beijing Normal University Imaging Center for Brain Research, Beijing, China. Functional images were acquired using a standard 12-channel head matrix coil and a gradient-echo single-shot echo planar imaging sequence [25 slices, repetition time (TR) = 1.5 s, echo time (TE) = 30 ms, voxel size = 3.1 × 3.1 × 4.0 mm, 0.8 mm interslice gap]. Slices were oriented parallel to each participant's temporal cortex covering the whole brain. High-resolution anatomical images were also acquired for each participant for reconstruction of the cortical surface.

For the Localizer runs, we used a standard localizer method to identify ROIs. Specifically, participants viewed two runs during which 15 s blocks (20 stimuli per block) of faces, objects, scenes, or scrambled objects were presented. Each image was presented for 300 ms, followed by a 450 ms interstimulus interval (ISI). Each run contained 21 blocks (four blocks of each of the four different stimulus categories, and five blocks of fixation only), totaling 5 min and 15 s. During the scan, participants performed a one-back task (i.e., responding via a button press when two consecutive images were identical).

For the Resting-state runs, all scanning parameters were the same as those for the Localizer runs except that (1) participants were instructed to lie still in the scanner without performing any tasks, and (2) the total scan time was 10 min 30 s, yielding a continuous time course consisting of 420 data points (TR = 1.5 s) (Cole et al., 2010).
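The timing figures quoted for the Localizer and Resting-state runs can be cross-checked with a few lines of arithmetic. The sketch below only restates numbers given in the text; the function and constant names are illustrative and are not part of the original analysis code.

```python
# Timing values quoted in the text, in milliseconds to keep the arithmetic exact.
STIM_ON_MS = 300            # image duration
ISI_MS = 450                # interstimulus interval
STIMS_PER_BLOCK = 20
BLOCKS_PER_RUN = 21         # 16 stimulus blocks + 5 fixation-only blocks
TR_MS = 1500                # repetition time (1.5 s)
REST_MS = (10 * 60 + 30) * 1000   # 10 min 30 s of resting-state data


def block_duration_ms():
    """Duration of one stimulus block: 20 images, each followed by an ISI."""
    return STIMS_PER_BLOCK * (STIM_ON_MS + ISI_MS)


def run_duration_ms():
    """Duration of one Localizer run, assuming all 21 blocks last 15 s."""
    return BLOCKS_PER_RUN * block_duration_ms()


def n_volumes(duration_ms, tr_ms=TR_MS):
    """Number of volumes (time points) acquired in a scan of the given length."""
    return duration_ms // tr_ms


minutes, seconds = divmod(run_duration_ms() // 1000, 60)
print(block_duration_ms() / 1000, "s per block")      # 15.0 s per block
print(minutes, "min", seconds, "s per Localizer run")  # 5 min 15 s per Localizer run
print(n_volumes(REST_MS), "resting-state volumes")     # 420 resting-state volumes
```

The three results agree with the 15 s blocks, the 5 min 15 s run length, and the 420 data points reported above.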

fMRI data analysis

Data preprocessing.

Functional data were analyzed with the Freesurfer functional analysis stream (CorTechs) (Dale et al., 1999; Fischl et al., 1999), the fROI (http://froi.sourceforge.net), and in-house Matlab code. The preprocessing consisted of motion correction, intensity normalization, and spatial smoothing (Gaussian kernel, 5 mm full width at half maximum). Then, voxel time courses for each individual subject were fitted by a general linear model, with each condition modeled by a boxcar regressor matching its time course that was then convolved with a gamma function (δ = 2.25, τ = 1.25). In addition, six motion correction parameters and slow signal drifts (linear and quadratic) were also removed from the functional data.

ROI selection.

To define the OFA and FFA (in each hemisphere in each participant), we located the peak voxel within a region that responded more strongly to faces than objects and scenes (p < 10⁻², uncorrected), and then selected a set of 27 contiguous significantly activated voxels (p < 10⁻⁴, uncorrected) within a 9-mm-radius sphere centered at the peak voxel. The reasons to limit the number of voxels in an ROI rather than to select all voxels within a face-selective region are (1) to remove the possible overlap between ROIs that may cause unwanted correlations from shared voxels, and (2) to keep the signal-to-noise ratio consistent across ROIs while averaging. Two scene-selective regions, the transverse occipital sulcus (TOS) and the parahippocampal place area (PPA), were defined as those regions that responded more strongly to scenes than objects and faces; otherwise, all selection criteria were identical to those described above. These regions served as control regions.

In addition, three characteristics of the OFA and FFA were calculated: (1) the interregional distance between two regions, (2) the face selectivity, and (3) the size of each ROI. The interregional distance between the OFA and FFA was estimated as the distance between the peak voxels of each ROI in the native volume space of each participant. The selectivity of the OFA and FFA was calculated as the average of the t scores of all voxels within an ROI with the contrast of faces versus scenes and objects. Thus, the larger the value, the greater the degree of selectivity. The size of the OFA and FFA was calculated in two steps. First, we defined the ROIs in each participant as a contiguous cluster of voxels peaking in the fusiform gyrus (for the FFA) or the inferior occipital gyrus (for the OFA) that responded more to faces than objects and scenes (p < 10⁻², uncorrected). Second, we counted the number of voxels in that ROI. Because the OFA and FFA sometimes overlap, especially at this threshold, anatomical constraints were used in determining the border between any overlapping OFAs and FFAs. Specifically, the lateral occipitotemporal gyrus (i.e., the fusiform gyrus) and sulcus derived from FreeSurfer parcellation were used for the boundary of the FFA, whereas the inferior and middle occipital gyrus and sulcus were used for that of the OFA.
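Two of the ROI characteristics described above reduce to simple computations. The sketch below assumes that peak coordinates are already expressed in millimeters and that a plain Euclidean distance is meant; the text does not state the exact distance measure, and the function names are illustrative. The example inputs are the group-mean Talairach peaks from Table 1, whereas the study measured distance in each participant's native space, so the printed value is only indicative.

```python
import math


def interregional_distance(peak_a, peak_b):
    """Euclidean distance between two peak coordinates given in mm (assumed measure)."""
    return math.dist(peak_a, peak_b)


def mean_selectivity(t_scores):
    """Average t score over the voxels of one ROI (faces vs. scenes and objects)."""
    return sum(t_scores) / len(t_scores)


# Group-mean Talairach peaks from Table 1, used here only as illustrative inputs.
right_ffa = (40, -59, -13)
right_ofa = (37, -82, -4)
print(round(interregional_distance(right_ffa, right_ofa), 1))  # 24.9
```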

Resting-state correlation.

In addition to the aforementioned standard preprocessing of fMRI data, several other preprocessing steps were used to reduce spurious variance unlikely to reflect neural activity in resting-state data. These steps included using a temporal bandpass filter (0.01–0.08 Hz) to retain low-frequency signals only (Cordes et al., 2001), regression of the time course obtained from rigid-body head motion correction, and regression of the mean time course of whole-brain BOLD fluctuations.

After the preprocessing, a continuous time course for each ROI was extracted by averaging the time courses of all 27 voxels in each of the ROIs. Thus, we obtained a time course consisting of 420 data points for each ROI and for each participant. Temporal correlation coefficients between the extracted time course from a given ROI and those from other ROIs were calculated to determine which regions were functionally correlated at rest. Correlation coefficients (r) were transformed to Gaussian-distributed z scores via Fisher's transformation to improve normality, and these z scores were then used for further analyses (Fox et al., 2006).
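As a minimal sketch of the steps just described (averaging voxel time courses within an ROI, correlating two ROI time courses, and applying Fisher's transformation), the following uses only the Python standard library. It is not the in-house Matlab code used in the study, and all function names are assumptions made for illustration.

```python
import math


def mean_time_course(voxel_series):
    """Average several equal-length voxel time courses point by point."""
    n_vox = len(voxel_series)
    return [sum(values) / n_vox for values in zip(*voxel_series)]


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def fisher_z(r):
    """Fisher's r-to-z transformation, z = atanh(r); defined for -1 < r < 1."""
    return math.atanh(r)


def functional_connectivity(roi_a_voxels, roi_b_voxels):
    """Fisher z of the correlation between two ROI-averaged time courses."""
    a = mean_time_course(roi_a_voxels)
    b = mean_time_course(roi_b_voxels)
    return fisher_z(pearson_r(a, b))
```

In the study each ROI would contribute 27 voxel time courses of 420 points each. For orientation, a correlation of r = 0.69 corresponds to a Fisher z of about 0.85.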

Behavioral tests

Face- and object-recognition tasks.

In the face-recognition task, 60 face images on a natural background were used to measure participants' ability in recognizing familiar faces (Fig. 1A, top). In each trial, a face image was presented for 33 ms, immediately masked by the scrambled version of that image (mosaic-like) for the rest of the trial. Participants then performed a two-alternative forced-choice (2AFC) task, reporting whether the image was a prespecified target (e.g., Chiu-Wai Leung, a Chinese movie star), or another exemplar from the same basic-level category (e.g., another Chinese movie star). There were 60 trials, half of which contained targets. In the object-recognition task, three object categories were tested in separate blocks: (1) chrysanthemum versus other flowers, (2) jeep versus other cars, and (3) pigeon versus other birds (Fig. 1A, bottom). There were 20 exemplars and thus 20 trials for each object category. In each trial, an object image was presented for 50 ms, while the rest of the experimental parameters were identical to those in the face-recognition task. The order of the face- and object-recognition tasks was counterbalanced across participants. Accuracy for face and object recognition was calculated separately, and was corrected for guessing: Accuracy = (Hit − False Alarm)/(1 − False Alarm).
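The guessing correction above can be written as a small function. The sketch assumes that hit and false-alarm rates are computed as simple proportions of target and non-target trials; the trial counts in the example are hypothetical and are not data from the study.

```python
def corrected_accuracy(hit_rate, false_alarm_rate):
    """Accuracy corrected for guessing: (Hit - False Alarm) / (1 - False Alarm)."""
    if false_alarm_rate >= 1:
        raise ValueError("false-alarm rate must be below 1")
    return (hit_rate - false_alarm_rate) / (1 - false_alarm_rate)


def rates_from_counts(hits, n_targets, false_alarms, n_nontargets):
    """Convert raw response counts into hit and false-alarm rates."""
    return hits / n_targets, false_alarms / n_nontargets


# Hypothetical participant: 30 target and 30 non-target trials, as in the face task.
hit_rate, fa_rate = rates_from_counts(hits=24, n_targets=30,
                                      false_alarms=6, n_nontargets=30)
print(round(corrected_accuracy(hit_rate, fa_rate), 2))  # 0.75
```

A participant who responds "target" on every trial has a hit rate and a false-alarm rate that are equal, so the corrected accuracy is zero whenever the two rates match.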

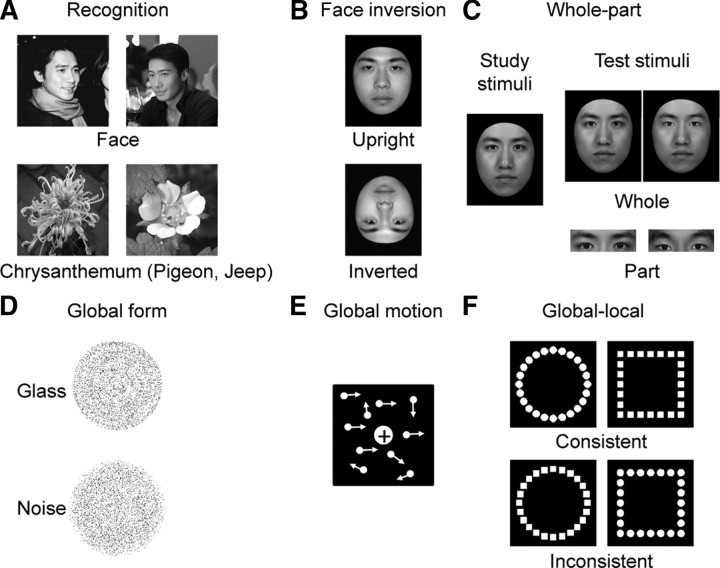

Figure 1.

Stimulus exemplars of the behavioral tasks. A, Face- and object-recognition tasks. Participants reported the prespecified targets at the subordinate level: Chiu-Wai Leung (a famous Chinese movie star), chrysanthemum, pigeon, and jeep. B, Face-inversion task. Participants performed a successive same-different matching task on upright and inverted faces, respectively. C, Whole-part task. Participants identified a face part of one individual (nose, mouth, or eyes) that was presented either in the context of the rest face (whole) or in isolation (part). D, Global-form task. Participants differentiated stimuli containing the Glass pattern from stimuli comprised solely of noise dots. E, Global-motion task. Participants reported the overall direction of motion by integrating local motion cues. F, Global-local task. Participants identified the shape at either the global or local level either when the global and the local shapes shared identity (consistent) or not (inconsistent).

Face-inversion task.

Twenty-five face images were used, which were gray-scale adult Chinese male faces, with all external information (e.g., hair) removed (Fig. 1B). Pairs of face images were presented sequentially, either both upright or both inverted, with upright- and inverted-face trials randomly interleaved. Each trial started with a blank screen for 1 s, followed by the first face image presented at the center of the screen for 0.5 s. Then, after an ISI of 0.5 s, the second image was presented until a response was made. Participants were instructed to judge whether the two sequentially presented faces were identical. There were 50 trials for each condition, half of which consisted of face pairs that were identical, and half of which consisted of face pairs from different individuals. The face-inversion effect (FIE), a classic and reliable behavioral marker for face-specific processing, was calculated as the difference in performance on upright versus inverted faces: FIE = (Upright − Inverted)/(Upright + Inverted).
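The FIE is a normalized difference score, and the whole-part effect defined in the next subsection has the same form. A minimal sketch follows, with an illustrative function name. The example plugs in the group-mean accuracies from Table 2; because the study computes the index per participant, this only approximates the reported mean.

```python
def contrast_index(a, b):
    """Normalized difference (a - b) / (a + b), e.g. FIE with a=Upright, b=Inverted."""
    if a + b == 0:
        raise ValueError("a + b must be non-zero")
    return (a - b) / (a + b)


# Group means from Table 2 (Upright = 0.89, Inverted = 0.68), for illustration only.
print(round(contrast_index(0.89, 0.68), 2))  # 0.13
```

Dividing by the sum scales the upright-minus-inverted difference by the participant's overall accuracy, so the index reflects the relative, not the absolute, cost of inversion.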

Whole-part task.

This task was used to measure participants' tendency to process faces as integrated wholes, rather than as sets of independent components. It consisted of two segments: a study segment and a test segment (Fig. 1C). In the study segment, participants were instructed to memorize three faces and their corresponding names. Each face–name pair was shown for 5 s with an ISI of 1 s. Only when the participants could correctly identify all face–name pairs were they allowed to enter the test segment. In the test segment, a question like “Which is Xiao Zhang's nose (or mouth, or eyes)?” was presented, followed by two images presented to the left and right sides of the screen. The display remained on the screen until the participants made a 2AFC response. There were two conditions, the Part condition and the Whole condition. For the Part condition, the display contained two images of an individual face part (e.g., two noses): one was from the target face (i.e., Xiao Zhang's face), and the other from another one of the studied faces. For the Whole condition, the display contained two whole faces, with the target and a foil face differing only with respect to the individual face part that had been tested in the Part condition; the rest of the face parts were the same. The Part and Whole conditions were randomly interleaved, and each consisted of 36 trials. The whole-part effect (WPE), a behavioral marker for holistic processing, was calculated as the improvement in accuracy when a face part was presented in the context of the rest of the face relative to when it was presented in isolation: WPE = (Whole − Part)/(Whole + Part).

Global-form task.

Concentric Glass patterns (i.e., concentric swirls) were used to assess participants' sensitivity to the global structure in an image (Fig. 1D). There were two types of stimuli: Glass patterns and noise patterns. For Glass patterns, pairs of black dots were placed tangentially to concentric circles, some of which were then replaced by randomly oriented dot pairs. There were 10 levels of concentricity, ranging from 15% to 33% of the aligned dot pairs in steps of 2%. For noise patterns, all aligned dot pairs were replaced with randomly oriented dot pairs. In each trial, either a Glass pattern or a noise pattern was presented for 1 s, and participants were instructed to report whether the Glass pattern or the noise pattern had appeared. There were 80 trials, half of which contained Glass patterns. The order of two types of trials was randomized. The overall accuracy was used as a measure of participants' ability to integrate local elements into a global form.

Global-motion task.

Random dot kinematograms were used to examine participants' ability to integrate local motion cues to produce a percept of global motion (Fig. 1E). In each trial, white dots, against a black background, moved randomly at a speed of 6.18° of visual angle per second within a square region for 1.5 s, with a proportion moving coherently either leftward or rightward. The percentage of dots that moved coherently, ranging from 4% to 11.5% in steps of 0.5%, varied across trials, and participants reported whether the overall direction of motion was leftward or rightward. There were 128 trials in total. The accuracy in detecting the global motion was used as a measure of participants' ability to integrate local motion cues.

Global-local task.

This task, a variant of Navon's task, was used to measure participants' ability to globally process visual information. The stimuli were four hierarchical shapes of two types: consistent shapes in which the global and the local shapes shared identity (e.g., local circles forming a global circle), and inconsistent shapes for which the shapes at the two levels had different identities (e.g., local squares forming a global circle) (Fig. 1F). There were two blocks, each of which contained 80 trials, preceded by instructions to identify shapes at either the local or global level. In each block, there were 40 trials of consistent shapes and 40 trials of inconsistent shapes, which were randomly interleaved. Each trial started with a central fixation cross for 0.7 s, followed by one of the four possible stimuli presented for 0.15 s. Participants were instructed to respond as quickly and accurately as possible whether they saw a circle or a square. The global-to-local interference (GLI), a measure of the tendency for global visual information to be privileged attentionally over local information, was calculated based on reaction time: GLI = [Consistent (Global − Local) − Inconsistent (Global − Local)]/[Consistent (Global + Local) + Inconsistent (Global + Local)].
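The GLI formula can be read as the inconsistency cost in the local task minus the inconsistency cost in the global task, normalized by the summed reaction times. The sketch below makes that reading explicit. The argument names and the example reaction times (in milliseconds) are hypothetical and are not data from the study.

```python
def gli(con_global, con_local, inc_global, inc_local):
    """Global-to-local interference index computed from mean reaction times.

    Arguments are mean RTs for the four block x consistency cells:
    consistent/global, consistent/local, inconsistent/global, inconsistent/local.
    """
    numerator = (con_global - con_local) - (inc_global - inc_local)
    denominator = (con_global + con_local) + (inc_global + inc_local)
    return numerator / denominator


# Hypothetical RTs: inconsistency slows local judgments (400 -> 450 ms)
# more than global judgments (360 -> 370 ms), giving a positive GLI.
print(round(gli(con_global=360, con_local=400, inc_global=370, inc_local=450), 3))  # 0.025
```

The numerator equals (inc_local − con_local) − (inc_global − con_global), so a positive GLI means that inconsistent global shapes delay local identification more than inconsistent local shapes delay global identification.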

Results

OFA and FFA are functionally connected

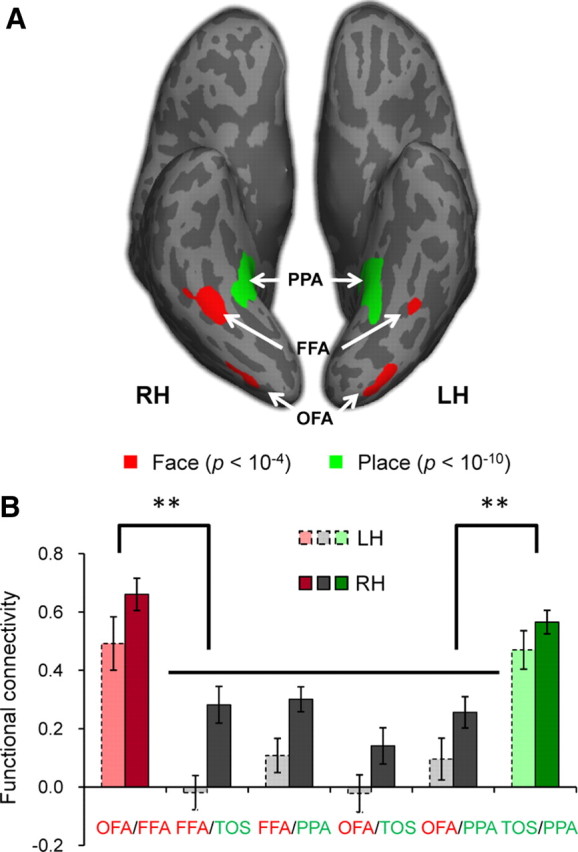

Two face-selective (OFA and FFA) and two scene-selective (TOS and PPA) regions were localized within the occipital-temporal cortex of both hemispheres in 16 participants scanned (for Talairach coordinates, see Table 1). The other three participants who did not show all ROIs were excluded from further analyses. Figure 2A shows an activation map for faces and scenes on an inflated cortical surface of a typical participant.

Table 1.

Talairach coordinates of ROIs averaged across participants (mean ± SD)

| ROI | Hemisphere | x | y | z |
|---|---|---|---|---|
| FFA | Right | 40 ± 4 | −59 ± 7 | −13 ± 5 |
| FFA | Left | −40 ± 2 | −56 ± 4 | −15 ± 4 |
| OFA | Right | 37 ± 6 | −82 ± 4 | −4 ± 4 |
| OFA | Left | −36 ± 6 | −84 ± 7 | −5 ± 4 |
| PPA | Right | 28 ± 4 | −44 ± 6 | −8 ± 3 |
| PPA | Left | −27 ± 5 | −47 ± 4 | −7 ± 4 |
| TOS | Right | 37 ± 4 | −79 ± 6 | 25 ± 6 |
| TOS | Left | −34 ± 7 | −82 ± 6 | 22 ± 5 |

Figure 2.

The hierarchical structure of face-selective and scene-selective network. A, Face- and scene-selective regions of a typical subject. Face-selective regions, the OFA and FFA (p < 10⁻⁴, uncorrected, red), and scene-selective region, the PPA (p < 10⁻¹⁰, uncorrected, green), from the Localizer scan are shown on an inflated brain. Sulci are shown in dark gray and gyri in light gray. The scene-selective region, the TOS, is not shown. B, The hierarchical structure of functional connectivity. The functional connectivity between regions that were selective to the same perceptual objects (faces, red; scenes, green) was larger than those between regions that were selective to different object categories respectively (gray). In addition, the functional connectivity between ROI pairs in the right hemisphere (dark) was in general larger than that in the left hemisphere (light). Error bars indicate SEM. **p < 0.001.

A two-way ANOVA of FC between all ROI pairs by hemisphere shows that the FC in the right hemisphere (RH) was significantly larger than that in the left hemisphere (LH) (F(1,15) = 20.91, p < 0.001); however, there was no interaction (F(5,75) = 1.07, p = 0.38) (Fig. 2B). Thus, to simplify analyses, the FCs were averaged across hemisphere for each ROI pair.

Importantly, the FC between two face-selective regions (OFA/FFA FC: r = 0.69) was significantly larger than the correlation between a face-selective and a scene-selective region (FFA/TOS FC: r = 0.25; FFA/PPA FC: r = 0.15; OFA/TOS FC: r = 0.18; OFA/PPA FC: r = 0.06) (F(4,12) = 9.64, p < 0.001), demonstrating a “functional connection” between OFA and FFA (Fig. 2B). This finding is consistent with two other studies reporting functional correlations across face-selective regions (Nir et al., 2006; Zhang et al., 2009).

Face processing, not object processing, is correlated with OFA/FFA FC

Next, we asked whether the OFA/FFA FC was correlated with performance on three face-processing tasks: the face-recognition task, the face-inversion task, and the whole-part task. If the OFA/FFA FC is behaviorally relevant, then we predict that individuals' performance on a number of face tasks, not non-face tasks, will be correlated with the magnitude of their OFA/FFA FC.

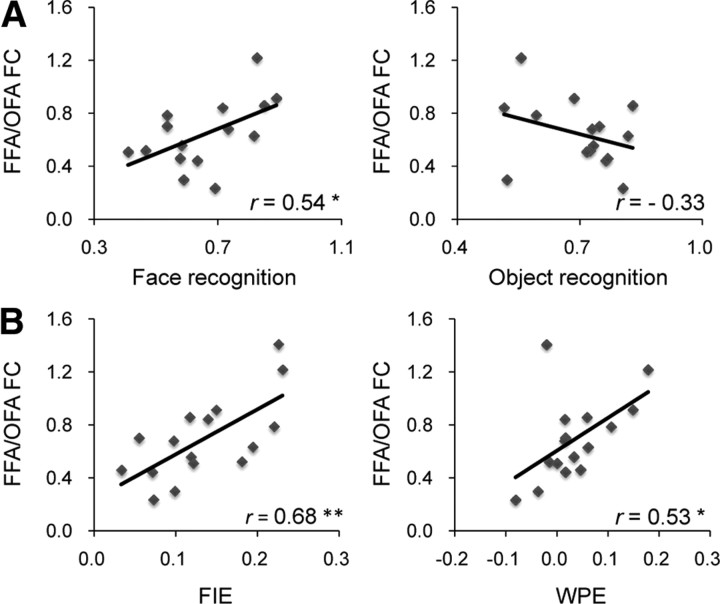

In the face-recognition task, participants were briefly presented famous faces, immediately followed by a mask, and then asked to respond to the face of a particular individual (Grill-Spector et al., 2004) (Fig. 1A). As predicted, we found that individuals' accuracy of recognizing familiar faces was correlated with their OFA/FFA FC (r = 0.54, p = 0.04) (Fig. 3A, left) (for behavioral results, see Table 2). Similar results were obtained from Spearman's rank correlation analysis (Spearman's ρ = 0.51, p = 0.05), ruling out the possibility that the behavior–OFA/FFA FC correlation was driven by outlier participants.
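The logic of the rank-based check can be illustrated in a few lines: Spearman's ρ is the Pearson correlation of the ranks, so a single extreme value cannot dominate it. The sketch below is a plain standard-library version with average ranks for ties. It is not the statistics software used in the study, and the function names are illustrative.

```python
import math


def average_ranks(values):
    """Ranks starting at 1, with tied values sharing the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to the end of the run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation computed on ranks."""
    return pearson_r(average_ranks(x), average_ranks(y))
```

When the Pearson and Spearman coefficients are of similar size, as reported above, the linear correlation is unlikely to rest on one or two extreme participants.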

Figure 3.

Behavioral correlates of the OFA/FFA FC. A, The OFA/FFA FC was positively correlated with the accuracy in recognizing the face of a famous movie star from other movie stars (left) (*p < 0.05; **p < 0.01). No such correlation was found between the OFA/FFA FC and participants' performance in recognizing exemplars of the within-category target (e.g., chrysanthemum) among other exemplars from the same basic-level category (e.g., other flowers) (right). Note that the accuracy shown here was the average of performance on three object categories. B, The OFA/FFA FC was also correlated with two behavioral markers of face-specific processing: the FIE (left) and the WPE (right). y-axis is the functional connectivity between OFA and FFA indexed by the correlation coefficients after Fisher's transformation (Fisher's z scores), and x-axis is the behavioral measure of each task.

Table 2.

Means and SDs for each of the behavioral tests

| Behavioral Tests | Mean (SD) |
|---|---|
| Recognition | |
| Faces | 0.66 (0.15) |
| Birds | 0.57 (0.20) |
| Cars | 0.75 (0.23) |
| Flowers | 0.78 (0.11) |
| Face-inversion | |
| Upright | 0.89 (0.08) |
| Inverted | 0.68 (0.11) |
| FIE | 0.13 (0.06)** |
| Whole-part | |
| Whole | 0.82 (0.14) |
| Part | 0.77 (0.14) |
| WPE | 0.03 (0.07)* |
| Global-form | 0.71 (0.12) |
| Global-motion | 0.71 (0.11) |
| Global-local (RT) | |
| Consistent | 0.38 (0.12) |
| Inconsistent | 0.39 (0.11) |
| GLI | 0.005 (0.03) |

RT, Reaction time.

*p < 0.05;

**p < 0.001.

By contrast, no significant behavior–OFA/FFA FC correlation was found when the same participants had to distinguish pigeons from other birds (r = −0.07, p = 0.81; Spearman's ρ = −0.14, p = 0.63), jeeps from other cars (r = −0.33, p = 0.23; Spearman's ρ = −0.03, p = 0.92), or chrysanthemums from other flowers (r = −0.14, p = 0.62; Spearman's ρ = −0.01, p = 0.99). Crucially, the correlation between face recognition and the OFA/FFA FC was significantly larger than the correlation between the average performance on objects and the OFA/FFA FC (r = −0.33) (Steiger's Z = 2.27, p = 0.02) (Fig. 3A, right).

While the above findings suggest that the OFA/FFA FC may be behaviorally relevant for face recognition, not for object recognition, images of faces and objects used in the above experiment differed in many ways (e.g., low-level visual properties, prior knowledge on familiar faces and objects), any of which might account for the behavioral significance of the OFA/FFA FC. Thus, a more direct test would be to contrast performance on upright novel faces with inverted ones (Fig. 1B), which share virtually all visual properties of faces yet are not processed as faces (Yin, 1969). The FIE—the difference in discrimination performance on upright versus inverted faces—is one of the most classic and reliable behavioral markers for face-specific processing. We found a significant correlation between participants' FIE scores and their OFA/FFA FC (r = 0.68, p = 0.004; Spearman's ρ = 0.63, p = 0.009) (Fig. 3B, left). Consistent with our hypothesis, this result suggests that it is face processing, in particular, that is related to the synchronized spontaneous neural activity between the OFA and FFA.

To further investigate the face-specific nature of the behavior–OFA/FFA FC correlation, we asked whether the OFA/FFA FC was related to the holistic representation of faces. A large body of evidence consistently demonstrates that the key difference in the way that faces are processed, compared to objects, is that faces are represented as integrated wholes, rather than as sets of independent components. One classic test of holistic processing is the whole-part task, in which the participants were instructed to recognize a face part either in the context of the whole face (Whole) or in isolation (Part) (Tanaka and Farah, 1993) (Fig. 1C). The WPE then reflects the participants' ability to recognize a face part in the context of the rest of the face compared to the same face part in isolation. We found a significant correlation between participants' WPE scores and their OFA/FFA FC (r = 0.53, p = 0.03; Spearman's ρ = 0.49, p = 0.05) (Fig. 3B, right). Further, the Whole condition is most responsible for the positive correlation, because after regressing out the variance of the Part condition from the Whole condition, the residual variance was still correlated with the OFA/FFA FC (Pearson r = 0.48, p = 0.06; Spearman ρ = 0.55, p = 0.03). Therefore, the synchronized spontaneous neural activity between OFA and FFA is related to the mechanisms that are engaged specifically in the holistic processing of faces.

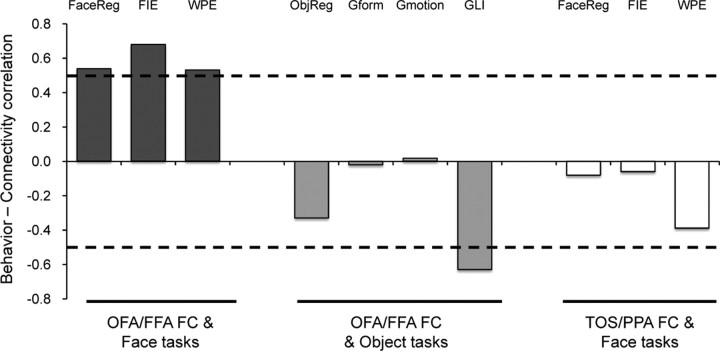

Global processing, in general, is not correlated with OFA/FFA FC

Might it be the case that the above behavior–OFA/FFA FC correlation is being driven by general cognitive mechanisms such as global processing of visual stimuli, rather than face-specific mechanisms? To test this possibility, we examined participants' ability (1) to integrate local elements into a global form (Fig. 1D), and (2) to integrate local motion cues to produce a percept of global motion (Le Grand et al., 2006) (Fig. 1E). No significant behavior–OFA/FFA FC correlation was found in either the global-form task (r = −0.02, p = 0.94; Spearman's ρ = −0.08, p = 0.79) or the global-motion task (r = 0.02, p = 0.95; Spearman's ρ = 0.12, p = 0.67) (Fig. 4).

Figure 4.

Behavior–FC correlations. The OFA/FFA FC positively correlated with participants' performance in all three face tasks tested (dark gray). Critically, no positive correlation was found either between the OFA/FFA FC and the object tasks (light gray) or between the TOS/PPA FC and the face tasks (white). The dashed lines indicate the significance level in the behavior–FC correlation analysis (p = 0.05). The y-axis denotes the correlation coefficient for the behavior–FC correlation. FaceReg, Face-recognition task; ObjReg, object recognition task; Gform, global-form task; Gmotion, global-motion task.

Perhaps an even more direct test of an individual's ability to globally process visual (non-face) information is the global-local task (Barton et al., 2002; Mevorach et al., 2006; Bentin et al., 2007), where participants report the shape of compound objects at either the global or local level (Fig. 1F). The GLI reflects the fact that inconsistent global shapes have a larger effect in delaying local identification than inconsistent local shapes have on global identification. We found that the GLI scores were not positively correlated with the OFA/FFA FC (Fig. 4). Instead, the correlation was negative (r = −0.63, p = 0.01; Spearman's ρ = −0.67, p = 0.006). This negative correlation was most strongly accounted for by the local identification task (i.e., the global-interfering-with-local task) (r = −0.56, p = 0.03), not the global identification task (r = 0.42, p = 0.11), suggesting that individuals with larger OFA/FFA FC have a tendency for local information to be privileged attentionally over global information when the visual stimuli are not faces. This dissociation further confirms that the OFA/FFA FC is behaviorally relevant for individuals' performance in holistic processing of faces only.

The behavior–OFA/FFA FC correlation is cortically specific

Another possibility is that the face-specific behavior–OFA/FFA FC correlation may reflect a general property of synchronized spontaneous BOLD fluctuations in the high-level visual cortex. To rule out this possibility, we examined whether the FC between two scene-selective regions (i.e., TOS and PPA) is related to participants' performance on faces as well. Although the TOS/PPA FC was as large as the OFA/FFA FC (t(15) = 1.1, p = 0.28) (Fig. 2B), it was not correlated with the behavioral performance in recognizing familiar faces (r = −0.08, p = 0.77; Spearman's ρ = −0.09, p = 0.75), discriminating upright faces (vs inverted faces) (FIE) (r = −0.06, p = 0.82; Spearman's ρ = −0.09, p = 0.74), or recognizing face parts in the context of the rest of the face (vs in isolation) (WPE) (r = −0.39, p = 0.14; Spearman's ρ = −0.40, p = 0.13) (Fig. 4). In addition, to rule out the possibility that the behavioral relevance of the OFA/FFA FC may be accounted for by some physiological noise embedded in the resting data (e.g., cardiac and respiratory artifacts), we calculated the partial correlation between the OFA/FFA FC and the behavioral performance while controlling for spontaneous fluctuations observed in TOS and PPA. If fluctuations in OFA and FFA were affected by physiological noise, so would those in TOS and PPA; thus, by partialing out the spontaneous fluctuations in TOS and PPA, we filter out any possible physiological noise. As expected, after controlling for any physiological noise the behavior–OFA/FFA FC correlations remained (Face recognition: r = 0.58, p = 0.02; FIE: r = 0.69, p = 0.003; WPE: r = 0.54, p = 0.03).

The OFA/FFA FC reflects a network-level property in face processing

Could the correlation between behavior and the OFA/FFA FC be explained by functional properties of its constituent parts? A previous study has shown that one measure of face perception performance (i.e., FIE) was correlated with the response in FFA when participants performed the same task in the scanner (Yovel and Kanwisher, 2005). Therefore, one may expect that participants who exhibit a strong OFA/FFA FC (and hence better performance in the face tasks) also exhibit a greater face-selective response in their OFA, FFA, or both. We addressed this possibility by examining the relationship between the OFA/FFA FC and a standard measure of the functional property of the OFA and FFA (i.e., face selectivity determined by the contrast of faces vs non-face objects during the localizer task). We found that the OFA/FFA FC was not correlated with the face-selective response of either the OFA (r = 0.09, p = 0.74) or FFA (r = −0.15, p = 0.58), or the average response of the two regions (r = −0.03, p = 0.90). In addition to the functional activation, we also tested whether the size of the ROIs (Golarai et al., 2007, 2010) was correlated with the OFA/FFA FC, and found no significant correlations between the OFA/FFA FC and OFA size (r = −0.16, p = 0.56), OFA/FFA FC and FFA size (r = 0.12, p = 0.65), or OFA/FFA FC and the averaged size of both regions (r = −0.09, p = 0.75). Therefore, the OFA/FFA FC is unlikely to be accounted for by either the functional activation or the anatomical size of its constituent parts.

Second, the face-selective response of the OFA and FFA in the right hemisphere was significantly higher than that in the left hemisphere (F(1,15) = 6.2, p = 0.03), consistent with previous findings (for a review, see Kanwisher and Yovel, 2006); however, there was no hemispheric difference in the behavior–OFA/FFA FC correlations (Face recognition, LH/RH: r = 0.49/0.37, Steiger's Z = 0.4, p = 0.37; FIE, LH/RH: r = 0.51/0.53, Steiger's Z = 0.1, p = 0.40; WPE, LH/RH: r = 0.45/0.27, Steiger's Z = 0.6, p = 0.33). Finally, the OFA/FFA FC was negatively correlated with the interregional distance between the OFA and FFA (r = −0.50, p = 0.05; Spearman's ρ = −0.47, p = 0.07), as expected (Honey et al., 2009); however, after controlling for interregional distance, the correlation between the OFA/FFA FC and the perception of upright faces (vs inverted faces), for example, remained (Partial correlation, r = 0.75, p = 0.001). Together, these results provide evidence that the OFA/FFA FC possesses a functional property that corresponds to the face network better than to any of its constituent parts.
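For readers unfamiliar with comparing two dependent correlations, the following sketch shows one common Fisher-z-based form of Steiger's Z for two correlations that share a variable (here, behavior correlated with the left- and right-hemisphere FC). The text does not state which variant of the test was used, and the correlation between the two hemispheres' FC values (`r23`) is not reported in this section, so the value passed below is purely hypothetical and the output is not expected to reproduce the reported statistics.

```python
import math

def steiger_z(r12, r13, r23, n):
    """Steiger's Z for two dependent correlations sharing variable 1.

    r12, r13: the two correlations being compared.
    r23: correlation between the two non-shared variables.
    n: sample size.
    Returns (Z, two-sided p under a standard normal reference).
    """
    z12 = math.atanh(r12)  # Fisher r-to-z transform
    z13 = math.atanh(r13)
    r_bar = (r12 + r13) / 2.0  # pooled estimate of the two correlations
    psi = (r23 * (1 - 2 * r_bar**2)
           - 0.5 * r_bar**2 * (1 - 2 * r_bar**2 - r23**2))
    c = psi / (1 - r_bar**2) ** 2  # covariance between z12 and z13
    z = (z12 - z13) * math.sqrt(n - 3) / math.sqrt(2 - 2 * c)
    p_two_sided = math.erfc(abs(z) / math.sqrt(2))
    return z, p_two_sided

# r12 and r13 are the face-recognition LH/RH correlations quoted above;
# r23 = 0.6 and n = 16 are assumptions made only for illustration.
z_stat, p_val = steiger_z(0.49, 0.37, 0.6, 16)
print(z_stat, p_val)
```

The test is needed because both correlations are computed on the same participants, so the usual independent-samples comparison of Fisher-transformed correlations would misstate the standard error.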

Discussion

Here we asked whether synchronized spontaneous neural activity across cortical regions is relevant for behavior, or merely epiphenomenal. We addressed this question by directly testing the behavioral significance of the functional connectivity between two face-selective regions that are involved in the recognition of individual identity: the OFA and FFA. We found that the OFA/FFA FC was correlated with individuals' performance on a variety of face tasks focusing on different aspects of face processing, such as recognition of familiar faces (the face-recognition task), perceptual discrimination of novel faces (the face-inversion task), and holistic processing of faces (the whole-part task), suggesting that synchronized spontaneous neural activity between the OFA and FFA is behaviorally relevant. Second, the behavioral significance of the OFA/FFA FC is independent of the functional properties of either the OFA or FFA alone, suggesting that the OFA/FFA FC reflects a network-level property in face processing. Finally, we found that this functionally connected face network was relatively encapsulated, not related to individuals' performance on similar cognitive processes on non-face objects, such as recognition of familiar objects, perception of global form or global motion, and global processing of visual stimuli. Together, these findings provide strong evidence that the synchronized spontaneous neural activity across cortical regions is functionally significant, not epiphenomenal.

Prior work with fMRI has identified multiple face-selective regions in the brain, with each involved in a different aspect of face processing (Haxby et al., 2000; Calder and Young, 2005). Specifically, the OFA and FFA, which are primarily involved in recognition of individual identity (Grill-Spector et al., 2004), are sensitive to different aspects of faces (Liu et al., 2010). The OFA is sensitive to the presence of face parts (Pitcher et al., 2007; Harris and Aguirre, 2008; Andrews et al., 2010), whereas the FFA is preferentially involved in analyzing the configuration among them (Barton et al., 2002; Yovel and Kanwisher, 2004; Schiltz and Rossion, 2006; Rotshtein et al., 2007; Schiltz et al., 2010). Further, typical face processing requires the interaction of the OFA and FFA, because the FFA, for example, can be preserved in an individual with a lesion to the OFA and suffering from prosopagnosia (i.e., severe deficits in face recognition) (Rossion et al., 2003; Steeves et al., 2006). However, exactly how anatomically distributed regions with different functionality work in concert to give rise to a unified representation of faces remains unknown. Our study suggests that one way of coordinating multiple regions may rely on synchronized neural activity (Fries, 2009), and the breakdown of synchronized propagation of information among face-selective regions may result in selective deficits in face processing (Baker, 2008; Fox et al., 2008; Avidan and Behrmann, 2009). This hypothesis dovetails with a recent study showing that after extensive behavioral training in holistic processing, the functional connectivity between the OFA and FFA, not the selectivity of each individual region, increases along with improvement in face recognition (DeGutis et al., 2007). 
Therefore, typical face recognition likely depends not only on the intact functionality of individual face-selective regions (e.g., Barton et al., 2002; Rossion et al., 2003; Steeves et al., 2006; Riddoch et al., 2008) but also on synchronized spontaneous neural activity across these regions.

Our finding of a significant correlation in the synchronized spontaneous neural activity between the OFA and FFA might reflect a functional relationship mediated by anatomical connections between these two regions (Vincent et al., 2007; Greicius et al., 2009; Honey et al., 2009). This idea fits nicely with a recent finding showing a hierarchically organized anatomical network for face processing, as electrical microstimulation of one face-selective region in the macaque brain induces activation only in other face-selective regions (Moeller et al., 2008). In our study, we found a similar hierarchical structure formed by the FC, with the OFA/FFA FC being larger than the FC between a face-selective region and a scene-selective region. On the other hand, FC can be disrupted in the absence of anatomical damage to the underlying connections (He et al., 2007), suggesting that intact anatomical connections may not be sufficient for normal FC. Second, the OFA/FFA FC cannot directly result in the behavioral performance; instead, its behavioral relevance must be indirect, possibly via its role in organizing and coordinating neural activation of each individual region during tasks (DeGutis et al., 2007). Future studies are needed to investigate the link among anatomical connections across regions, synchronized neural activity across regions at rest, and the neural activation of the same regions during tasks.

The behavioral significance of the synchronized spontaneous neural activity between OFA and FFA demonstrated here invites a broader investigation of whether other functionally connected networks, including visual areas sensitive to other object categories and even cortical regions found in other cognitive domains (e.g., auditory, memory, language, and attention), also play a role in their corresponding cognitive processes (Hampson et al., 2006a,b; He et al., 2007; Seeley et al., 2007; Tambini et al., 2010). In addition, this work may also help elucidate the mechanisms through which regions interact with each other to bring about a unified representation of the human mind.

Footnotes

This study was funded by the 100 Talents Program of the Chinese Academy of Sciences, the National Basic Research Program of China (2010CB833903 and 2011CB505402), and the Fundamental Research Funds for the Central Universities. We thank Nancy Kanwisher and Joshua Julian for comments on the manuscript and Xiangyu Long, Zonglei Zhen, and Siyuan Hu for data analysis.

References

- Andrews TJ, Davies-Thompson J, Kingstone A, Young AW. Internal and external features of the face are represented holistically in face-selective regions of visual cortex. J Neurosci. 2010;30:3544–3552. doi: 10.1523/JNEUROSCI.4863-09.2010.

- Avidan G, Behrmann M. Functional MRI reveals compromised neural integrity of the face processing network in congenital prosopagnosia. Curr Biol. 2009;19:1146–1150. doi: 10.1016/j.cub.2009.04.060.

- Baker CI. Face to face with cortex. Nat Neurosci. 2008;11:862–864. doi: 10.1038/nn0808-862.

- Barton JJ, Press DZ, Keenan JP, O'Connor M. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology. 2002;58:71–78. doi: 10.1212/wnl.58.1.71.

- Bentin S, Degutis JM, D'Esposito M, Robertson LC. Too many trees to see the forest: performance, event-related potential, and functional magnetic resonance imaging manifestations of integrative congenital prosopagnosia. J Cogn Neurosci. 2007;19:132–146. doi: 10.1162/jocn.2007.19.1.132.

- Calder AJ, Young AW. Understanding the recognition of facial identity and facial expression. Nat Rev Neurosci. 2005;6:641–651. doi: 10.1038/nrn1724.

- Cole DM, Smith SM, Beckmann CF. Advances and pitfalls in the analysis and interpretation of resting-state FMRI data. Front Syst Neurosci. 2010;4:8. doi: 10.3389/fnsys.2010.00008.

- Cordes D, Haughton VM, Arfanakis K, Carew JD, Turski PA, Moritz CH, Quigley MA, Meyerand ME. Frequencies contributing to functional connectivity in the cerebral cortex in “resting-state” data. AJNR Am J Neuroradiol. 2001;22:1326–1333.

- Dale AM, Fischl B, Sereno MI. Cortical surface-based analysis: I. Segmentation and surface reconstruction. Neuroimage. 1999;9:179–194. doi: 10.1006/nimg.1998.0395.

- DeGutis JM, Bentin S, Robertson LC, D'Esposito M. Functional plasticity in ventral temporal cortex following cognitive rehabilitation of a congenital prosopagnosic. J Cogn Neurosci. 2007;19:1790–1802. doi: 10.1162/jocn.2007.19.11.1790.

- Fischl B, Sereno MI, Dale AM. Cortical surface-based analysis: II. Inflation, flattening, and a surface-based coordinate system. Neuroimage. 1999;9:195–207. doi: 10.1006/nimg.1998.0396.

- Fox CJ, Iaria G, Barton JJ. Disconnection in prosopagnosia and face processing. Cortex. 2008;44:996–1009. doi: 10.1016/j.cortex.2008.04.003.

- Fox MD, Raichle ME. Spontaneous fluctuations in brain activity observed with functional magnetic resonance imaging. Nat Rev Neurosci. 2007;8:700–711. doi: 10.1038/nrn2201.

- Fox MD, Corbetta M, Snyder AZ, Vincent JL, Raichle ME. Spontaneous neuronal activity distinguishes human dorsal and ventral attention systems. Proc Natl Acad Sci U S A. 2006;103:10046–10051. doi: 10.1073/pnas.0604187103.

- Fries P. Neuronal gamma-band synchronization as a fundamental process in cortical computation. Annu Rev Neurosci. 2009;32:209–224. doi: 10.1146/annurev.neuro.051508.135603.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform “face area” is part of a network that processes faces at the individual level. J Cogn Neurosci. 2000;12:495–504. doi: 10.1162/089892900562165.

- Golarai G, Ghahremani DG, Whitfield-Gabrieli S, Reiss A, Eberhardt JL, Gabrieli JDE, Grill-Spector K. Differential development of high-level visual cortex correlates with category-specific recognition memory. Nat Neurosci. 2007;10:512–522. doi: 10.1038/nn1865.

- Golarai G, Hong S, Haas BW, Galaburda AM, Mills DL, Bellugi U, Grill-Spector K, Reiss AL. The fusiform face area is enlarged in Williams syndrome. J Neurosci. 2010;30:6700–6712. doi: 10.1523/JNEUROSCI.4268-09.2010.

- Greicius M. Resting-state functional connectivity in neuropsychiatric disorders. Curr Opin Neurol. 2008;21:424–430. doi: 10.1097/WCO.0b013e328306f2c5.

- Greicius MD, Supekar K, Menon V, Dougherty RF. Resting-state functional connectivity reflects structural connectivity in the default mode network. Cereb Cortex. 2009;19:72–78. doi: 10.1093/cercor/bhn059.

- Grill-Spector K, Knouf N, Kanwisher N. The fusiform face area subserves face perception, not generic within-category identification. Nat Neurosci. 2004;7:555–562. doi: 10.1038/nn1224.

- Hampson M, Driesen NR, Skudlarski P, Gore JC, Constable RT. Brain connectivity related to working memory performance. J Neurosci. 2006a;26:13338–13343. doi: 10.1523/JNEUROSCI.3408-06.2006.

- Hampson M, Tokoglu F, Sun Z, Schafer RJ, Skudlarski P, Gore JC, Constable RT. Connectivity-behavior analysis reveals that functional connectivity between left BA39 and Broca's area varies with reading ability. Neuroimage. 2006b;31:513–519. doi: 10.1016/j.neuroimage.2005.12.040.

- Harris A, Aguirre GK. The representation of parts and wholes in face-selective cortex. J Cogn Neurosci. 2008;20:863–878. doi: 10.1162/jocn.2008.20509.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4:223–233. doi: 10.1016/s1364-6613(00)01482-0.

- He BJ, Snyder AZ, Vincent JL, Epstein A, Shulman GL, Corbetta M. Breakdown of functional connectivity in frontoparietal networks underlies behavioral deficits in spatial neglect. Neuron. 2007;53:905–918. doi: 10.1016/j.neuron.2007.02.013.

- Honey CJ, Sporns O, Cammoun L, Gigandet X, Thiran JP, Meuli R, Hagmann P. Predicting human resting-state functional connectivity from structural connectivity. Proc Natl Acad Sci U S A. 2009;106:2035–2040. doi: 10.1073/pnas.0811168106.

- Ishai A. Let's face it: it's a cortical network. Neuroimage. 2008;40:415–419. doi: 10.1016/j.neuroimage.2007.10.040.

- Kanwisher N, Yovel G. The fusiform face area: a cortical region specialized for the perception of faces. Philos Trans R Soc Lond B Biol Sci. 2006;361:2109–2128. doi: 10.1098/rstb.2006.1934.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Le Grand R, Cooper PA, Mondloch CJ, Lewis TL, Sagiv N, de Gelder B, Maurer D. What aspects of face processing are impaired in developmental prosopagnosia? Brain Cogn. 2006;61:139–158. doi: 10.1016/j.bandc.2005.11.005.

- Liu J, Harris A, Kanwisher N. Perception of face parts and face configurations: an FMRI study. J Cogn Neurosci. 2010;22:203–211. doi: 10.1162/jocn.2009.21203.

- Mevorach C, Humphreys GW, Shalev L. Opposite biases in salience-based selection for the left and right posterior parietal cortex. Nat Neurosci. 2006;9:740–742. doi: 10.1038/nn1709.

- Moeller S, Freiwald WA, Tsao DY. Patches with links: a unified system for processing faces in the macaque temporal lobe. Science. 2008;320:1355–1359. doi: 10.1126/science.1157436.

- Nir Y, Hasson U, Levy I, Yeshurun Y, Malach R. Widespread functional connectivity and fMRI fluctuations in human visual cortex in the absence of visual stimulation. Neuroimage. 2006;30:1313–1324. doi: 10.1016/j.neuroimage.2005.11.018.

- Pitcher D, Walsh V, Yovel G, Duchaine B. TMS evidence for the involvement of the right occipital face area in early face processing. Curr Biol. 2007;17:1568–1573. doi: 10.1016/j.cub.2007.07.063.

- Riddoch MJ, Johnston RA, Bracewell RM, Boutsen L, Humphreys GW. Are faces special? A case of pure prosopagnosia. Cogn Neuropsychol. 2008;25:3–26. doi: 10.1080/02643290801920113.

- Rossion B, Caldara R, Seghier M, Schuller AM, Lazeyras F, Mayer E. A network of occipito-temporal face-sensitive areas besides the right middle fusiform gyrus is necessary for normal face processing. Brain. 2003;126:2381–2395. doi: 10.1093/brain/awg241.

- Rotshtein P, Geng JJ, Driver J, Dolan RJ. Role of features and second-order spatial relations in face discrimination, face recognition, and individual face skills: behavioral and functional magnetic resonance imaging data. J Cogn Neurosci. 2007;19:1435–1452. doi: 10.1162/jocn.2007.19.9.1435.

- Schiltz C, Rossion B. Faces are represented holistically in the human occipito-temporal cortex. Neuroimage. 2006;32:1385–1394. doi: 10.1016/j.neuroimage.2006.05.037.

- Schiltz C, Dricot L, Goebel R, Rossion B. Holistic perception of individual faces in the right middle fusiform gyrus as evidenced by the composite face illusion. J Vis. 2010;10:25.1–16. doi: 10.1167/10.2.25.

- Seeley WW, Menon V, Schatzberg AF, Keller J, Glover GH, Kenna H, Reiss AL, Greicius MD. Dissociable intrinsic connectivity networks for salience processing and executive control. J Neurosci. 2007;27:2349–2356. doi: 10.1523/JNEUROSCI.5587-06.2007.

- Steeves JKE, Culham JC, Duchaine BC, Pratesi CC, Valyear KF, Schindler I, Humphrey GK, Milner AD, Goodale MA. The fusiform face area is not sufficient for face recognition: evidence from a patient with dense prosopagnosia and no occipital face area. Neuropsychologia. 2006;44:594–609. doi: 10.1016/j.neuropsychologia.2005.06.013.

- Tambini A, Ketz N, Davachi L. Enhanced brain correlations during rest are related to memory for recent experiences. Neuron. 2010;65:280–290. doi: 10.1016/j.neuron.2010.01.001.

- Tanaka JW, Farah MJ. Parts and wholes in face recognition. Q J Exp Psychol A. 1993;46:225–245. doi: 10.1080/14640749308401045.

- Vincent JL, Patel GH, Fox MD, Snyder AZ, Baker JT, Van Essen DC, Zempel JM, Snyder LH, Corbetta M, Raichle ME. Intrinsic functional architecture in the anaesthetized monkey brain. Nature. 2007;447:83–86. doi: 10.1038/nature05758.

- Yin RK. Looking at upside-down faces. J Exp Psychol. 1969;81:141–145.

- Yovel G, Kanwisher N. Face perception: domain specific, not process specific. Neuron. 2004;44:889–898. doi: 10.1016/j.neuron.2004.11.018.

- Yovel G, Kanwisher N. The neural basis of the behavioral face-inversion effect. Curr Biol. 2005;15:2256–2262. doi: 10.1016/j.cub.2005.10.072.

- Zhang H, Tian J, Liu J, Li J, Lee K. Intrinsically organized network for face perception during the resting state. Neurosci Lett. 2009;454:1–5. doi: 10.1016/j.neulet.2009.02.054.