Abstract

How and where in the human brain high-level sensorimotor processes such as intentions and decisions are coded remain important yet essentially unanswered questions. This is in part because, to date, decoding intended actions from brain signals has been largely confined to invasive neural recordings in nonhuman primates. Here we demonstrate, using functional MRI (fMRI) pattern recognition techniques, that movement intentions, specifically object-directed grasp and reach movements, can also be decoded from human brain signals moments before their initiation. Subjects performed an event-related delayed movement task toward a single centrally located object (consisting of a small cube attached atop a larger cube). For each trial, after visual presentation of the object, one of three hand movements was instructed: grasp the top cube, grasp the bottom cube, or reach to touch the side of the object (without preshaping the hand). We found that, despite an absence of fMRI signal amplitude differences between the planned movements, the spatial activity patterns in multiple parietal and premotor brain areas accurately predicted upcoming grasp and reach movements. Furthermore, the patterns of activity in a subset of these areas also predicted which of the two cubes was to be grasped. These findings offer new insights into the detailed movement information contained in human preparatory brain activity and advance our present understanding of sensorimotor planning processes through a unique description of parieto-frontal regions according to the specific types of hand movements they can predict.

Introduction

Significant developments in understanding the neural underpinnings of highly cognitive and abstract processes such as intentions and decision-making have predominantly come from neurophysiological investigations in nonhuman primates. Principal among these has been the ability to predict or decode upcoming sensorimotor behaviors (such as movements of the arm or eyes) based on changes in parieto-frontal neural activity that precede movement onset (Andersen and Buneo, 2002; Gold and Shadlen, 2007; Andersen and Cui, 2009; Cisek and Kalaska, 2010). To date, the ability to predict goal-directed movements based on intention-related cortical signals has almost entirely been constrained to invasive neural recordings in nonhuman primates. Recently, however, advances in neuroimaging using pattern classification, a multivariate statistical technique used to discriminate classes of stimuli by assessing differences in the elicited spatial patterns of functional magnetic resonance imaging (fMRI) signals, have made it possible to probe the cognitive contents of the human mind with a level of sensitivity previously unavailable. Indeed, pattern classification has provided a wealth of knowledge within the domain of sensory-perceptual processing, showing that visual stimuli being viewed (Haxby et al., 2001; Kamitani and Tong, 2005), imagined (Stokes et al., 2009), or remembered (Harrison and Tong, 2009) and that categories of presented auditory stimuli (Formisano et al., 2008) can be accurately decoded from the spatial pattern of signals in visual and auditory cortex, respectively.

Few pattern classification experiments to date, however, have examined the primary purpose of perceptual processing: the planning of complex object-directed actions. Given the rather poor understanding of the human sensorimotor planning processes that guide target-directed behavior, this experiment had two goals. The first was to examine whether object-directed grasp and reach actions with the hand can be decoded from intention-related activity recorded before movement execution, something previously shown only with neural recordings in monkeys (Andersen and Buneo, 2002). The second goal, contingent on success of the first, was to determine whether different parieto-frontal brain areas can be characterized according to the types of planned movements they can decode. For instance, we asked whether plan-related activity in interconnected reach-related areas, such as superior parietal cortex, middle intraparietal sulcus (IPS), and dorsal premotor (PMd) cortex (Andersen and Cui, 2009), can predict an upcoming reach movement. Similarly, we asked whether preparatory signals in interconnected hand-related areas, such as posterior (pIPS) and anterior (aIPS) IPS and ventral premotor (PMv) cortex (Rizzolatti and Matelli, 2003; Grafton, 2010), can predict upcoming grasp movements and even discriminate between different precision grasps. Perhaps most revealingly, we wondered whether the increased sensitivity of decoding approaches would enable us to predict an upcoming movement from brain regions not previously implicated in coding particular hand actions.
Given that conventional fMRI analyses in humans have shown widespread, highly overlapping, and essentially undifferentiated activations for different movements (Culham et al., 2006), combined with mounting evidence that standard fMRI methods may ignore the neural information contained in distributed activity patterns (Harrison and Tong, 2009), we expected that our pattern classification approach might offer a new understanding of how various parieto-frontal brain regions contribute to the planning of goal-directed hand actions.

Materials and Methods

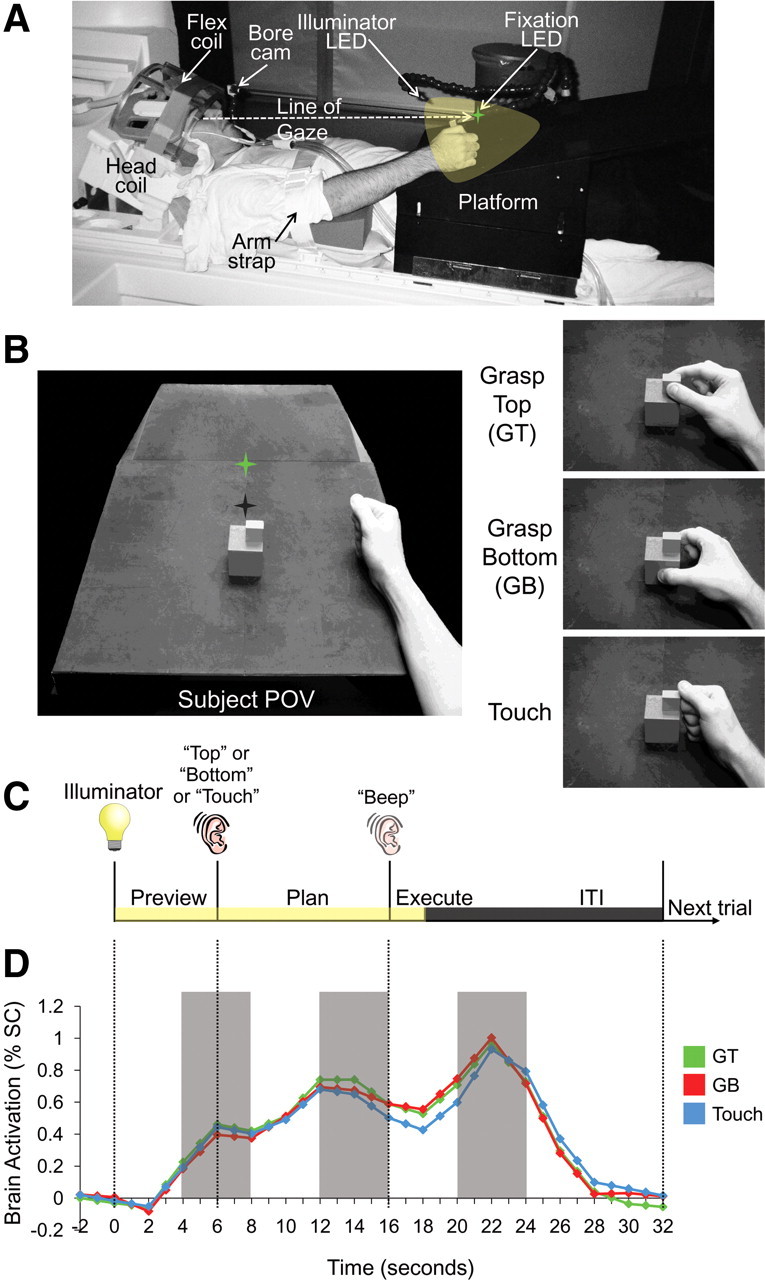

To address these two main questions, we measured activity across the whole brain using fMRI while human subjects performed a delayed object-directed movement task. The task required three different hand actions to be performed on a target object comprising a small block attached atop a larger block (Fig. 1): grasping the top block (GT), grasping the bottom block (GB), or touching the side of the object (touch) (Fig. 1B). This delayed movement task allowed us to separate, in time, the transient neural activity associated with visual (preview phase) and movement execution (execute phase) responses from the more sustained plan-related responses that evolve before the movement (plan phase) (Andersen and Buneo, 2002; Beurze et al., 2007) (Fig. 1C,D). This design permits a direct test of whether pattern classifiers applied to activity from the planning phase of an action in a given brain area can decode (1) upcoming grasp versus touch actions (GT vs touch and GB vs touch), two general types of hand movements that differ slightly in wrist orientation and hand preshaping, and (2) upcoming grasp movements from each other (GT vs GB), which are performed on different blocks and differ far more subtly in object size and location. Focusing on decoding during the planning phase has the added advantage that the activity patterns are uncontaminated by the subject's limb movement. Importantly, because all actions are performed on a centrally located object that never changes position from trial to trial, any movement decoding during planning would be independent of simple retinotopic and general attention-related differences across trial types.

Figure 1.

Experiment setup, conditions, timing, and trial-related brain activity. A, Setup from side view. The participant's head is tilted to permit direct viewing of objects on the platform. B, Experimental apparatus and graspable object shown from the participant's point of view. The same object (consisting of a smaller cube attached atop a larger cube) was always presented at the same location on the platform for every trial. Green star with dark shadow represents the fixation LED and its location in depth. Hand is positioned at its starting location. Right, The three different hand movements. C, Timing of one event-related trial. Trials began with the 3D graspable object being illuminated while the subject maintained fixation (preview phase; 6 s). Subjects were then instructed via headphones to perform one of three hand actions: grasp the top cube (Top), grasp the bottom cube (Bottom), or touch both cubes with their knuckles (Touch). This cue initiated the plan phase of the trial, in which, in addition to having visual information about the object, subjects also knew which hand action they were to perform. After a delay interval (10 s), subjects were cued (via an auditory beep) to perform the instructed hand movement (execute phase). Two seconds after the go cue, the object's illumination was extinguished and participants waited for the start of the following trial (14 s, ITI). D, Example event-related BOLD activity from parietal cortex (posterior IPS) over the length of a trial. Events in D are time locked to the events in C. Pattern classification analysis was performed on single trials based on the windowed average of the percentage signal change (% SC) within the three time windows denoted by the gray shaded bars (each corresponding to activity elicited during one of the three trial phases: preview, plan, and execute).

First, to localize common brain areas across individuals in which to perform pattern analyses, we searched at the group level for regions preferentially involved in movement planning. To do this, we contrasted activity elicited by the planning of a hand action (i.e., after movement instruction) with the transient activity elicited by visual presentation of the object before the instruction (plan > preview). We reasoned that, relative to the response when the object was illuminated but the subject did not yet know which action to perform (preview phase), areas involved in movement planning should respond more strongly once the movement instruction had been given (plan phase), even though the object was visible in both phases. This rationale extends recent fMRI movement studies that examined planning-related responses to temporally spaced instructions about target location, effector, and grasp type (Beurze et al., 2007, 2009; Chapman et al., 2011). This group contrast allowed us to define 14 well-documented action-related regions of interest (ROIs) as well as three sensory-related ROIs that could then be reliably identified in single subjects with the same contrast. In each subject, we then iteratively trained and tested pattern classifiers in each predefined ROI to determine whether its preparatory spatial activity patterns, measured before movement, predicted the hand movement to be performed.

Subjects.

Nine right-handed volunteers participated in this study (five males; mean age, 26.2 years) and were recruited from the University of Western Ontario (London, ON, Canada). One subject was excluded because of head motion exceeding 1 mm translation or 1° rotation in their experimental runs (see below, MRI acquisition and preprocessing). Informed consent was obtained in accordance with procedures approved by the University of Western Ontario Health Sciences Research Ethics Board.

Setup and apparatus.

Each subject's workspace within the MRI scanner consisted of a black platform placed over the waist and tilted away from the horizontal at an angle (∼10–15°) that maximized comfort and target visibility. To facilitate direct viewing of the workspace, we also tilted the head coil (∼20°) and used foam cushions to give an approximate overall head tilt of 30° from supine (Fig. 1A). Participants performed actions with the right hand and had the right upper arm braced such that arm movement was limited to the elbow and wrist, creating an arc of reachability [movements of the upper arm have been shown to cause perturbations in the magnetic field and induce artifacts in the participant's data (Culham, 2004)]. The target object was made up of a smaller cube atop a larger cube (bottom block, 5 × 5 × 5 cm; top block, 2.5 × 2.5 × 1.5 cm) and was secured to the workspace at a location along the arc of reachability for the right hand, at the point corresponding to the participant's sagittal midline. The exact placement of the object on the platform was adjusted to each participant's arm length so that all required movements were comfortable. During the experiment, the object was illuminated from the front by a bright yellow light-emitting diode (LED) attached to flexible plastic stalks (Loc-Line; Lockwood Products). To control for eye movements, a small green fixation LED was placed immediately above the target object. Subjects were asked to always foveate the fixation LED during functional scans. Experimental timing and lighting were controlled with in-house software created with Matlab (MathWorks).

For each trial, the subjects were required to perform one of three actions on the object: GT, grasping the top cube with a precision grip, with the thumb and index finger placed on opposing surfaces of the cube; GB, grasping the bottom cube with the same grip; or touch, touching the side of the object with the knuckles (transporting the hand to the object without hand preshaping). Importantly, the graspable object never changed its central position from trial to trial.

Experiment design and timing.

To isolate the visuomotor planning response from the visual and motor execution responses, we used a slow event-related planning paradigm with 32 s trials, each consisting of three distinct phases: preview, plan, and execute (Fig. 1C). We adapted this paradigm from one of our previous studies (Chapman et al., 2011) and from previous work on eye movements and working memory that successfully separated delay activity from the transient responses that follow the onset of visual input and movement execution (Curtis and D'Esposito, 2003; Curtis et al., 2004, 2005). Each trial was preceded by a period in which participants were in complete darkness except for the fixation LED on which they maintained their gaze. The trial began with the preview phase and illumination of the workspace and centrally located object. After 6 s of the preview phase, an auditory voice cue (0.5 s; “top,” “bottom,” or “touch”) instructed the corresponding upcoming movement, marking the onset of the plan phase. Although participants had visual information about the object during the preview phase, only in the plan phase did they know which action was to be performed and thus have all the information necessary to prepare the upcoming movement. Critically, the visual information during the preview and plan phases was constant across all trials; only the planned movements changed. After 10 s of the plan phase, a 0.5 s auditory beep instructed participants to immediately execute the planned action (lasting ∼2 s), initiating the execute phase of the trial. Two seconds after the onset of this go cue, the illuminator was extinguished, providing 14 s of darkness/fixation that allowed the blood oxygenation level-dependent (BOLD) response to return to baseline before the next trial [intertrial interval (ITI) phase].
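As a consistency check on the timing above, the phase durations can be converted into imaging volumes using the 2 s TR reported in the acquisition section. The sketch below is illustrative only: the helper name `phase_volumes` and the assumption that every phase starts exactly on a volume boundary are ours, not part of the original analysis software.

```python
TR_S = 2.0  # repetition time (2000 ms), from the acquisition parameters

# Phase durations in seconds, taken from the trial description.
PHASES_S = [
    ("preview", 6.0),
    ("plan", 10.0),
    ("execute", 2.0),   # go cue to light-off
    ("iti", 14.0),
]

def phase_volumes(phases=PHASES_S, tr=TR_S):
    """Map each phase to (first_volume, last_volume), 1-based and counted
    from trial onset, assuming phases start on volume boundaries."""
    out = {}
    start = 0.0
    for name, duration in phases:
        first = int(start // tr) + 1
        last = int((start + duration) // tr)
        out[name] = (first, last)
        start += duration
    return out

vols = phase_volumes()
total_volumes = int(sum(d for _, d in PHASES_S) // TR_S)
print(vols, total_volumes)
```

Under these assumptions the 32 s trial spans 16 volumes, the plan phase occupies volumes 4–8, and its final two volumes (7–8) end just before the go cue at the start of volume 9.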
Other than the execution phase of each action, throughout the other phases of the trial (preview, plan, and ITI) the hand was to remain still and in a relaxed ‘home’ position on the platform to the right of the object. The three trial types, with six repetitions per condition (18 trials total per run), were pseudorandomized within a run and balanced across all runs so that each trial type was preceded and followed equally often by every other trial type across the entire experiment.
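The first-order counterbalancing described above (each trial type preceded and followed equally often by every other type) can be verified by counting ordered transitions. This is a minimal sketch with hypothetical helper names; it counts transitions only within runs and, as an assumption, ignores same-type repeats unless `include_self=True`.

```python
from collections import Counter

TRIAL_TYPES = ("GT", "GB", "touch")

def transition_counts(runs):
    """Count ordered (previous, next) trial-type pairs within each run.
    Transitions across run boundaries are not counted."""
    counts = Counter()
    for run in runs:
        for prev, nxt in zip(run, run[1:]):
            counts[(prev, nxt)] += 1
    return counts

def is_first_order_balanced(counts, trial_types=TRIAL_TYPES, include_self=False):
    """True if every ordered pair of trial types occurs equally often (at least once)."""
    pairs = [(a, b) for a in trial_types for b in trial_types
             if include_self or a != b]
    observed = {counts.get(pair, 0) for pair in pairs}
    return len(observed) == 1 and 0 not in observed

# Toy sequence (not the experiment's actual trial order).
example_run = ["GT", "GB", "touch", "GT", "touch", "GB", "GT"]
counts = transition_counts([example_run])
print(is_first_order_balanced(counts))  # True
```

In the toy sequence each of the six ordered pairs of distinct trial types occurs exactly once; in a real design the same check would be run on all runs pooled across the experiment.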

During the anatomical scan (collected at the beginning of every experiment) and before entering the scanner, participants completed brief practice sessions (equivalent in length to one experimental functional run) to familiarize themselves with the paradigm, especially the delay timing, which required performing the cued action only at the beep (go) cue. A testing session for one participant included setup time (∼45 min), eight or nine functional runs, and one anatomical scan, and lasted ∼2.5–3 h. We did not conduct eye tracking during the scan session because no MR-compatible eye trackers could monitor gaze in the head-tilted configuration (because of occlusion from the eyelids). Nevertheless, multiple behavioral control experiments from our laboratory have shown that subjects can maintain fixation well under these testing conditions.

MRI acquisition and preprocessing.

Imaging was performed on a 3 tesla Siemens TIM MAGNETOM Trio MRI scanner. The T1-weighted anatomical image was collected using an ADNI MPRAGE sequence [repetition time (TR), 2300 ms; echo time (TE), 2.98 ms; field of view, 192 × 240 × 256 mm; matrix size, 192 × 240 × 256; flip angle, 9°; 1 mm isotropic voxels]. Functional MRI volumes were collected using a T2*-weighted single-shot gradient-echo echo-planar imaging acquisition sequence [TR, 2000 ms; slice thickness, 3 mm; in-plane resolution, 3 × 3 mm; TE, 30 ms; field of view, 240 × 240 mm; matrix size, 80 × 80; flip angle, 90°; and an integrated parallel acquisition technologies (IPAT) acceleration factor of 2 with generalized autocalibrating partially parallel acquisitions (GRAPPA) reconstruction]. We used a combination of parallel imaging coils to achieve a good signal-to-noise ratio and to enable direct viewing without mirrors or occlusion. We tilted (∼20°) the posterior half (six channels) of the 12-channel receive-only head coil and suspended a four-channel receive-only flex coil over the anterosuperior part of the head. Each volume comprised 34 contiguous (no gap) oblique slices acquired at a ∼30° caudal tilt with respect to the anterior-to-posterior commissure (ACPC) line, providing near whole-brain coverage.
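The functional voxel geometry follows directly from the reported parameters. The short calculation below (plain arithmetic, no imaging library) makes the relationships explicit: a 240 mm field of view sampled on an 80 × 80 matrix gives 3 mm in-plane voxels, and 34 contiguous 3 mm slices cover a slab roughly 10 cm thick.

```python
# Functional (EPI) acquisition parameters reported above.
FOV_MM = 240.0            # in-plane field of view
MATRIX = 80               # in-plane matrix size
SLICE_THICKNESS_MM = 3.0
N_SLICES = 34             # contiguous, no gap

in_plane_mm = FOV_MM / MATRIX                       # in-plane voxel size
coverage_mm = N_SLICES * SLICE_THICKNESS_MM         # thickness of the oblique slab
voxel_mm3 = in_plane_mm ** 2 * SLICE_THICKNESS_MM   # volume of one functional voxel

print(in_plane_mm, coverage_mm, voxel_mm3)  # 3.0 102.0 27.0
```

The 27 mm³ voxel volume is a useful reference when converting ROI sizes between cubic millimeters and voxel counts.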

Preprocessing included slice scan-time correction, 3D motion correction (each volume was aligned to the functional volume acquired closest in time to the anatomical scan), and high-pass temporal filtering (three cycles per run). We also performed functional-to-anatomical coregistration, aligning both data sets so that their axial plane passed through the anterior and posterior commissures (ACPC space); the data were then transformed into Talairach space (Talairach and Tournoux, 1988). Other than the incidental smoothing arising from sinc interpolation in all transformations, no additional spatial smoothing was performed. Talairach-transformed data were used only for group voxelwise analyses to define the planning-related ROIs common across all subjects. These same areas were then defined anatomically within each subject's ACPC data. We defined ROIs within the ACPC data because multivoxel classification analysis discriminates spatial patterns across voxels, and the additional spatial interpolation inherent in normalization may hinder such analyses. Indeed, pattern classification on Talairach-transformed data from three of the subjects yielded decoding accuracies that were, on average, ∼1–2% lower during both the plan and execute phases than on the corresponding subjects' ACPC data. Using the ACPC data also had the advantage that each region of interest could be reliably identified in single subjects regardless of variations in slice planes, a particular problem given the sulcal variability of parietal cortex. The cortical surface from one subject was reconstructed from a high-resolution anatomical image, a procedure that included segmenting the gray and white matter and inflating the surface at the boundary between them. This inflated cortical surface was used to display group activation for figure presentation (Fig. 2).

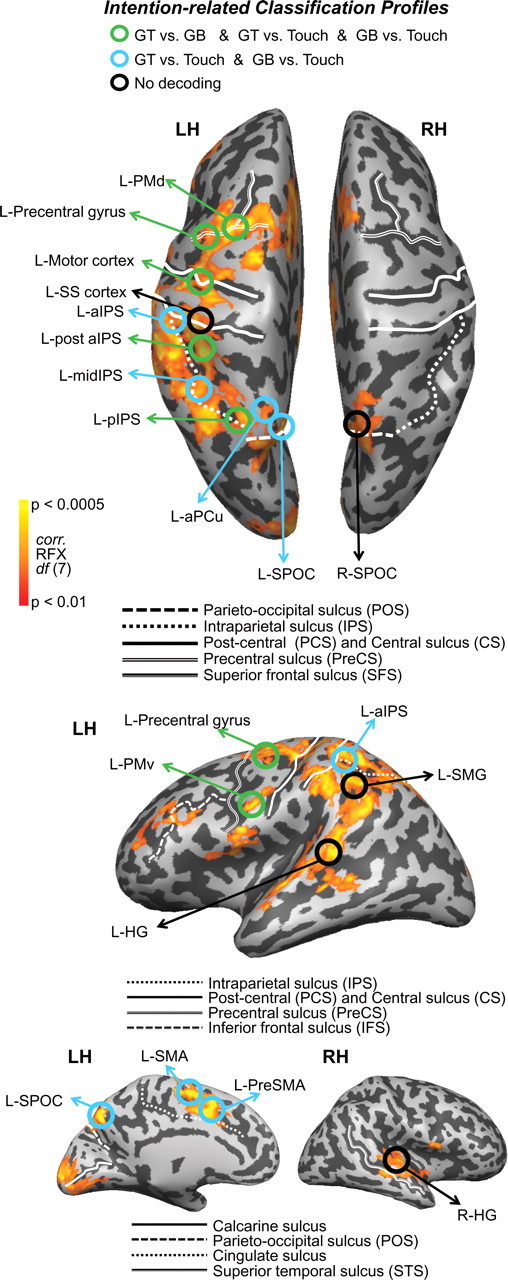

Figure 2.

Decoding of object-directed movement intentions across the parieto-frontal network. Cortical areas that exhibited larger responses during movement planning than during the preceding visual phase (plan > preview) are shown in orange/yellow. Results calculated across all subjects (random-effects GLM) are displayed on one representative subject's inflated hemispheres. The general locations of the selected ROIs are outlined in circles (actual ROIs were anatomically defined separately in each subject). Each ROI is color coded according to the pairwise discriminations it can decode during the plan phase (shown in Fig. 4); see color legend at top for classification profiles (colors denote significant decoding accuracies for upcoming actions with respect to 50% chance). Sulcal landmarks are denoted by white lines (stylized according to the corresponding legends below each brain). LH, Left hemisphere; RH, right hemisphere.

For each participant, functional data from each session were screened for motion and/or magnet artifacts by examining the time course movies and the motion plots created with the motion correction algorithms. Any runs that exceeded 1 mm translation or 1° rotation within the run were discarded, leading to the removal of all runs from one subject and one run from another subject. Action performance was examined offline from videos recorded using an MR-compatible infrared-sensitive camera that was optimally positioned to record the participant's movements during functional runs (MRC Systems). No errors were observed, likely because, by the time they actually performed the required movements in the scanner, subjects were well trained in the delay task. All preprocessing and analyses were performed using Brain Voyager QX version 2.12 (Brain Innovation).
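The run-exclusion rule can be written as a simple check on the motion-correction estimates. This sketch is hypothetical: it assumes that a run is discarded when the absolute value of any single translation or rotation parameter, relative to the reference volume, exceeds its limit. An alternative reading would use the peak-to-peak range of each parameter within the run.

```python
def run_passes_motion_check(translations_mm, rotations_deg,
                            max_translation_mm=1.0, max_rotation_deg=1.0):
    """Return True if no motion estimate exceeds the exclusion limits.

    translations_mm, rotations_deg: per-volume (x, y, z) estimates from
    motion correction, relative to the reference volume (assumed format).
    """
    worst_translation = max(abs(v) for vol in translations_mm for v in vol)
    worst_rotation = max(abs(v) for vol in rotations_deg for v in vol)
    return (worst_translation <= max_translation_mm
            and worst_rotation <= max_rotation_deg)

# Hypothetical motion traces for two short runs.
still = run_passes_motion_check([(0.1, -0.2, 0.3), (0.2, -0.1, 0.4)],
                                [(0.1, 0.0, -0.2), (0.2, 0.1, -0.3)])
moved = run_passes_motion_check([(0.1, -0.2, 0.3), (1.2, -0.1, 0.4)],
                                [(0.1, 0.0, -0.2), (0.2, 0.1, -0.3)])
print(still, moved)  # True False
```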

Regions of interest.

To localize specific planning-related areas in which to implement pattern recognition analyses, we used a general linear model (GLM) group random-effects (RFX) voxelwise analysis (on the Talairach-transformed data). Predictors were defined at the onset of the preview, plan, and execute periods for each individual trial, with a value of 1 for (1) three volumes during the preview phase, (2) five volumes during the plan phase, and (3) one volume during the execute phase, and 0 for the remainder of the trial period (ITI). Each of these predictors was then convolved with a Boynton hemodynamic response function (Boynton et al., 1996). Data were converted to percentage signal change.
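To make the predictor construction concrete, the sketch below builds the three boxcars for a single 16-volume trial (3 preview, 5 plan, and 1 execute volume) and convolves each with a gamma-variate HRF of the form used by Boynton et al. (1996). The HRF parameters (n = 3, τ = 1.25 s, δ = 2.5 s) are assumed values, not ones reported here, and sampling the HRF only at the 2 s TR is a simplification; BrainVoyager's own implementation may differ.

```python
import math

TR_S = 2.0    # repetition time (s)
N_VOLS = 16   # one 32 s trial

def boynton_hrf(t, n=3, tau=1.25, delta=2.5):
    """Gamma-variate HRF value at time t (s); parameter values are assumptions."""
    if t < delta:
        return 0.0
    s = (t - delta) / tau
    return s ** (n - 1) * math.exp(-s) / (tau * math.factorial(n - 1))

def boxcar(n_vols, onset, duration):
    """1 for volumes onset .. onset + duration - 1 (0-based), 0 elsewhere."""
    return [1.0 if onset <= i < onset + duration else 0.0 for i in range(n_vols)]

def convolve_with_hrf(x, tr=TR_S, hrf_length_s=30.0):
    """Causal discrete convolution of a boxcar with the HRF sampled at the TR."""
    kernel = [boynton_hrf(k * tr) for k in range(int(hrf_length_s / tr) + 1)]
    return [sum(kernel[k] * x[i - k] for k in range(len(kernel)) if i - k >= 0)
            for i in range(len(x))]

predictors = {
    "preview": convolve_with_hrf(boxcar(N_VOLS, 0, 3)),  # volumes 1-3
    "plan": convolve_with_hrf(boxcar(N_VOLS, 3, 5)),     # volumes 4-8
    "execute": convolve_with_hrf(boxcar(N_VOLS, 8, 1)),  # volume 9
}
# 0-based index of each predictor's maximum within the trial.
peak = {name: max(range(N_VOLS), key=pred.__getitem__)
        for name, pred in predictors.items()}
print(peak)
```

With these assumed parameters the sampled HRF peaks three volumes (6 s) after onset, so the single-volume execute predictor reaches its maximum at 0-based index 11.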

Using the GLM, we contrasted activity for movement planning with the simple visual response to object presentation [plan > preview: (GT plan + GB plan + touch plan) vs (GT preview + GB preview + touch preview)]. This plan > preview statistical map of all positively active voxels (RFX, t(7) = 3.5, p < 0.01) was then used to define 17 ROIs [foci of activity selected within a (15 mm)³ cube centered on a particular anatomical landmark; only clusters of voxels larger than 297 mm³ were used (minimum cluster size estimated by 1000 Monte Carlo simulations for p < 0.05 corrected, implemented in the cluster threshold plug-in for BVQX)], which could then be localized in single subjects. Fourteen of these ROIs (across parietal, motor, and premotor cortex) were selected based on their well documented and highly reliable coactivations across several movement-related tasks and paradigms (Andersen and Buneo, 2002; Chouinard and Paus, 2006; Culham et al., 2006; Filimon et al., 2009; Cisek and Kalaska, 2010; Filimon, 2010; Grafton, 2010), and the other three ROIs (somatosensory cortex and left and right Heschl's gyrus) were selected as regions known to respond to transient stimuli (i.e., somatosensory and auditory events) that are often activated in experimental contexts but not necessarily expected to participate in sustained movement planning or intention-related processes (i.e., to serve as sensory control regions). Importantly, all these ROIs are easily defined according to anatomical landmarks (sulci and gyri) and functional activations in each individual subject's ACPC data (see below, ROI selection). Critically, given the contrast used to select these 17 areas (i.e., plan > preview), their activity is not biased to show any plan-related pattern differences between any of the experimental conditions (for confirmation of this fact, see the univariate analyses in Fig. 5).
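The random-effects step can be sketched as follows: at each voxel, every subject contributes one plan > preview contrast value, and a one-sample t test across the eight remaining subjects (df = 7) is compared with the t = 3.5 threshold. This hypothetical sketch works on precomputed beta estimates and omits the cluster-size correction; it is not BrainVoyager's implementation.

```python
import math
from statistics import mean, stdev

CONDITIONS = ("GT", "GB", "touch")

def plan_minus_preview(betas):
    """Contrast for one subject and voxel:
    (GT plan + GB plan + touch plan) - (GT preview + GB preview + touch preview).
    betas maps (condition, phase) -> GLM beta estimate."""
    plan = sum(betas[(c, "plan")] for c in CONDITIONS)
    preview = sum(betas[(c, "preview")] for c in CONDITIONS)
    return plan - preview

def rfx_t(contrast_values):
    """One-sample t statistic across subjects (random effects) and its df."""
    n = len(contrast_values)
    se = stdev(contrast_values) / math.sqrt(n)
    return mean(contrast_values) / se, n - 1

# Hypothetical contrast values for 8 subjects at one voxel.
values = [0.4, 0.6, 0.5, 0.3, 0.7, 0.5, 0.4, 0.6]
t, df = rfx_t(values)
print(df, t > 3.5)  # 7 True
```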

Figure 5.

No fMRI signal amplitude differences found within the parieto-frontal regions used for pattern classification. Responses are averaged across voxels within each ROI and across subjects (2-volume averaged windows corresponding to preview, plan, and execute phases). Note that only one significant univariate difference is observed, in R-SPOC, a non-decoding region. Error bars represent SEM across subjects.

Voxels submitted for pattern classification analysis were selected from the plan > preview GLM contrast on single-subject ACPC data and comprised all activity within a (15 mm)³ cube centered on defined anatomical landmarks for each of the 17 ROIs (for details, see below, ROI selection). We chose (15 mm)³ cubes for our ROIs not only to include numerous functional voxels for pattern classification (an important consideration) but also to ensure that adjacent ROIs did not overlap. These ROIs were selected at a threshold of t = 3, p < 0.003, from an overlay of each subject's activation map (cluster threshold corrected, p < 0.05, so that only voxels passing a minimum cluster size were selected; average minimum cluster size across subjects, 110 mm³). All univariate statistical tests used the Greenhouse–Geisser correction, and for post hoc tests (two-tailed paired t tests) we used a threshold of p < 0.05 (see Fig. 5). Only significant results are reported.
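The cube-based voxel selection can be sketched as a filter over voxel-center coordinates. The function name and data layout are hypothetical, and cluster-size correction is not applied here. With 3 mm functional voxels, a (15 mm)³ cube can hold at most 5 × 5 × 5 = 125 voxels, which the toy grid below reproduces.

```python
def cube_roi(voxel_centers_mm, t_values, center_mm, edge_mm=15.0, t_threshold=3.0):
    """Indices of voxels whose centers lie inside an axis-aligned cube
    (edge_mm on a side, centered on center_mm) and whose t >= t_threshold.
    Cluster-size correction is not applied in this sketch."""
    half = edge_mm / 2.0
    selected = []
    for i, (xyz, t) in enumerate(zip(voxel_centers_mm, t_values)):
        inside = all(abs(c - c0) <= half for c, c0 in zip(xyz, center_mm))
        if inside and t >= t_threshold:
            selected.append(i)
    return selected

# Hypothetical 3 mm grid of voxel centers from -9 to 9 mm on each axis, all with t = 4.
grid = [(x, y, z) for x in range(-9, 10, 3)
        for y in range(-9, 10, 3) for z in range(-9, 10, 3)]
roi = cube_roi(grid, [4.0] * len(grid), center_mm=(0, 0, 0))
print(len(roi))  # 125
```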

ROI selection.

The following ROIs were chosen: left and right superior parieto-occipital cortex (SPOC), defined by selecting voxels located medially and directly anterior to the parieto-occipital sulcus on the left and right (Gallivan et al., 2009); left anterior precuneus (L-aPCu), defined by selecting voxels farther anterior and superior to the L-SPOC ROI, near the transverse parietal sulcus (in most subjects, this activity was located medially, within the same sagittal plane as SPOC, but in a few subjects, it was located slightly more laterally) (Filimon et al., 2009); left pIPS (L-pIPS), defined by selecting activity at the caudal end of the IPS (Beurze et al., 2009); left middle IPS (L-midIPS), defined by selecting voxels approximately halfway up the length of the IPS, on the medial bank (Calton et al., 2002), near a characteristic “knob” landmark that we observed consistently within each subject; a left region located posterior to L-aIPS (L-post aIPS), defined by selecting voxels just posterior to the junction of the IPS and postcentral sulcus (PCS), on the medial bank of the IPS (Culham, 2004); left aIPS (L-aIPS), defined by selecting voxels directly at the junction of the IPS and PCS (Culham et al., 2003); left supramarginal gyrus (L-SMG), defined by selecting voxels on the supramarginal gyrus (SMG), lateral to the anterior segment of the IPS (Lewis, 2006); left motor cortex, defined by selecting voxels around the left “hand knob” landmark in the central sulcus (CS) (Yousry et al., 1997); left PMd (L-PMd), defined by selecting voxels at the junction of the precentral sulcus (PreCS) and superior frontal sulcus (SFS) (Picard and Strick, 2001); left precentral gyrus, defined by selecting voxels lateral to the junction of the PreCS and SFS, encompassing the precentral gyrus and posterior edge of the PreCS; left PMv (L-PMv), defined by selecting voxels slightly inferior and posterior to the junction of the inferior frontal sulcus (IFS) and PreCS (Tomassini et al., 2007); left presupplementary motor area (L-PreSMA), defined by selecting bilateral voxels (although mostly left-lateralized) superior to the middle/anterior segment of the cingulate sulcus, anterior to the plane of the anterior commissure and more anterior and inferior than those selected for the left supplementary motor area (Picard and Strick, 2001); left supplementary motor area (L-SMA), defined by selecting voxels bilaterally (although mostly left-lateralized) adjacent and anterior to the medial end of the CS and posterior to the plane of the anterior commissure (Picard and Strick, 2001); left somatosensory cortex (L-SS cortex), defined by selecting voxels medial and anterior to the aIPS, encompassing the postcentral gyrus and PCS; and left (L-HG) and right (R-HG) Heschl's gyri, defined by selecting voxels halfway along the superior temporal sulcus (STS), on the superior temporal gyrus (between the insular cortex and outer lateral edge of the superior temporal gyrus) (Meyer et al., 2010). See Table 1 for details about ROI coordinates and sizes and Figure 2 for anatomical locations on one representative subject's brain.

Table 1.

ROIs with corresponding Talairach coordinates (x, y, and z center of mass mean and SD)

| ROI name | Talairach mean x | Talairach mean y | Talairach mean z | Talairach SD x | Talairach SD y | Talairach SD z | ROI size (mm³) | ROI size (n voxels) |
|---|---|---|---|---|---|---|---|---|
| R-SPOC | 4 | −70 | 38 | 4.6 | 3.5 | 4.1 | 1782 | 66 |
| L-SPOC | −6 | −74 | 36 | 4 | 3.9 | 3.6 | 2189 | 81 |
| L-aPCu | −14 | −74 | 44 | 3.7 | 3.8 | 3.4 | 1895 | 70 |
| L-pIPS | −16 | −63 | 50 | 3.3 | 4 | 3 | 1996 | 74 |
| L-midIPS | −35 | −57 | 42 | 3.6 | 4 | 4.3 | 2053 | 76 |
| L-post aIPS | −36 | −44 | 46 | 3.9 | 3.9 | 3.8 | 2094 | 78 |
| L-aIPS | −49 | −34 | 44 | 3.8 | 4 | 4 | 1926 | 71 |
| L-SMG | −58 | −41 | 29 | 3.6 | 3.3 | 4.2 | 1782 | 66 |
| L-motor cortex | −33 | −19 | 56 | 4.5 | 4.4 | 3.6 | 1278 | 47 |
| L-PMd cortex | −23 | −9 | 58 | 4.1 | 4 | 3.6 | 1914 | 71 |
| L-precentral gyrus | −39 | −11 | 55 | 3.5 | 3 | 3.2 | 1679 | 62 |
| L-PMv cortex | −53 | 4 | 31 | 3.1 | 2.7 | 2.9 | 1617 | 60 |
| L-preSMA | −8 | 4 | 41 | 3.2 | 4 | 4 | 1896 | 70 |
| L-SMA | −7 | −3 | 51 | 3.5 | 3.9 | 3.4 | 2026 | 75 |
| L-SS cortex | −41 | −32 | 54 | 3.8 | 3.9 | 4.2 | 1528 | 57 |
| L-HG | −57 | −26 | 6 | 4 | 3.7 | 4.1 | 1956 | 72 |
| R-HG | 57 | −21 | 7 | 3.9 | 3.6 | 3.2 | 1627 | 60 |

Mean ROI sizes across subjects from ACPC data (in cubic millimeters and functional voxels).
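Because each functional voxel occupies 27 mm³ (3 mm isotropic), the two size columns of Table 1 should agree up to rounding. The check below encodes the table values and recomputes the voxel counts; it is a reader-side sanity check, not part of the original analysis.

```python
VOXEL_MM3 = 3 * 3 * 3  # assumes 3 mm isotropic functional voxels

# (mean size in mm^3, mean number of voxels) from Table 1
TABLE_1 = {
    "R-SPOC": (1782, 66), "L-SPOC": (2189, 81), "L-aPCu": (1895, 70),
    "L-pIPS": (1996, 74), "L-midIPS": (2053, 76), "L-post aIPS": (2094, 78),
    "L-aIPS": (1926, 71), "L-SMG": (1782, 66), "L-motor cortex": (1278, 47),
    "L-PMd cortex": (1914, 71), "L-precentral gyrus": (1679, 62),
    "L-PMv cortex": (1617, 60), "L-preSMA": (1896, 70), "L-SMA": (2026, 75),
    "L-SS cortex": (1528, 57), "L-HG": (1956, 72), "R-HG": (1627, 60),
}

# ROIs whose voxel count disagrees with size / 27 after rounding.
mismatches = [name for name, (mm3, n_vox) in TABLE_1.items()
              if round(mm3 / VOXEL_MM3) != n_vox]
print(mismatches)  # []
```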

Non-brain control regions.

To demonstrate classifier performance outside our plan network ROIs, we defined two additional control ROIs in which no BOLD signal was expected and thus no reliable classification should be possible. To select these ROIs in individual subjects, we further reduced our statistical threshold (after specifying the plan > preview network within each subject) to t = 0, p = 1 and selected all positive activation within a (15 mm)³ cube centered on two consistent points: (1) within each subject's right ventricle and (2) just outside the skull, near right visual cortex in the ACPC plane.

Multivoxel pattern analysis.

Multivoxel pattern analysis (MVPA) was performed with a combination of in-house software (using Matlab) and the Princeton MVPA Toolbox for Matlab (http://code.google.com/p/princeton-mvpa-toolbox/) using a support vector machine (SVM) binary classifier (libSVM, http://www.csie.ntu.edu.tw/∼cjlin/libsvm/). The SVM model used a linear kernel function and a constant cost parameter, C = 1 [congruent with many other fMRI studies (LaConte et al., 2003; Mitchell et al., 2003; Mourão-Miranda et al., 2005; Haynes et al., 2007; Pessoa and Padmala, 2007)], to compute the hyperplane that best separated the trial responses.
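For readers unfamiliar with the classifier, the sketch below shows what a linear soft-margin SVM with C = 1 optimizes: it minimizes ½‖w‖² + C Σᵢ max(0, 1 − yᵢ(w·xᵢ + b)) over trials i, where xᵢ is a trial's voxel pattern and yᵢ ∈ {−1, +1} its condition label. It uses plain full-batch subgradient descent rather than libSVM's solver, so it only approximates the libSVM solution; the function names, learning rate, and iteration count are illustrative assumptions.

```python
def train_linear_svm(X, y, C=1.0, lr=0.01, epochs=2000):
    """Minimize 0.5*||w||^2 + C * sum(max(0, 1 - y_i (w.x_i + b)))
    by full-batch subgradient descent.
    X: list of feature vectors (one per trial); y: labels in {-1, +1}."""
    d = len(X[0])
    w = [0.0] * d
    b = 0.0
    for _ in range(epochs):
        gw = w[:]   # gradient of the 0.5*||w||^2 term
        gb = 0.0
        for xi, yi in zip(X, y):
            margin = yi * (sum(wj * xj for wj, xj in zip(w, xi)) + b)
            if margin < 1:  # trial violates the margin: hinge term is active
                for j in range(d):
                    gw[j] -= C * yi * xi[j]
                gb -= C * yi
        w = [wj - lr * g for wj, g in zip(w, gw)]
        b -= lr * gb
    return w, b

def predict(w, b, X):
    """Assign each pattern to the side of the hyperplane it falls on."""
    return [1 if sum(wj * xj for wj, xj in zip(w, xi)) + b >= 0 else -1 for xi in X]

# Toy two-voxel patterns for two hypothetical conditions (+1 vs -1).
X = [[2.0, 0.0], [3.0, 1.0], [-2.0, 0.0], [-3.0, -1.0]]
y = [1, 1, -1, -1]
w, b = train_linear_svm(X, y)
print(predict(w, b, X))  # [1, 1, -1, -1]
```

A linear kernel keeps the decision rule a weighted sum of voxel values, which is one reason it is common in fMRI decoding where the number of voxels is large relative to the number of trials.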

Voxel pattern preparation.

For each voxel within a region and each trial, we extracted the average percentage signal change activation corresponding to the 4 s time windows specified by each of the gray shaded bars in Figures 1D and 3 (i.e., the activity elicited by each distinct phase of the trial: plan, preview, and execute) and entered these as data points for pattern classification. Beyond allowing us to characterize which types of movements within an area could be accurately decoded, this time-specific approach also allowed us to investigate when predictive information pertaining to a particular movement was available (i.e., within the preview, plan, or execute phase).

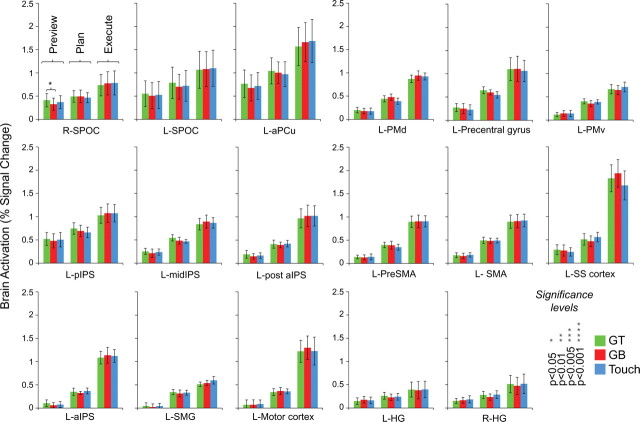

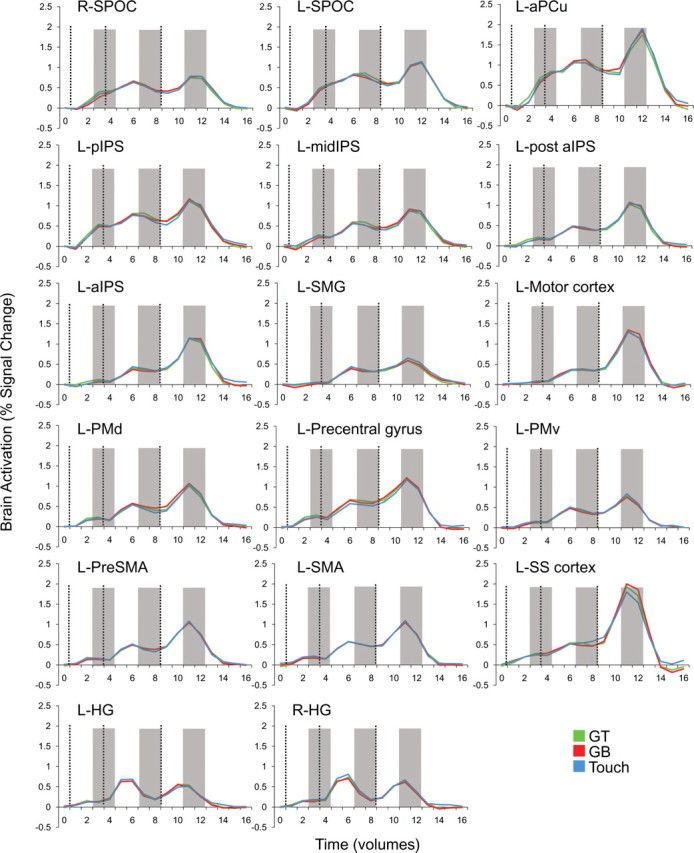

Figure 3.

Trial-related percentage signal change fMRI activations from each of the 14 plan-network ROIs and three sensory control ROIs. Activity in each plot is averaged across voxels within each ROI and across subjects. Plots show the profiles of typical preparatory activity found throughout parieto-frontal network areas. Vertical dashed lines correspond to the onset of the preview, plan, and execute phases of each trial (from left to right). Shaded gray bars highlight the two-volume (4 s) windows that were averaged and extracted for pattern classification analysis. Note that time is given in imaging volumes (TR = 2 s), not seconds.

The baseline window was defined as the average of volumes 1 and 2 with respect to the start of the trial (a window that avoids contamination from the previous trial and in which response amplitude differences do not exist). For the preview phase time points, we extracted the mean of volumes 3–4, time points that correspond to the peak of the visual transient response (Fig. 3). For the execute phase time points, we extracted the average of volumes 11–12, which correspond to the peak of the transient movement response after the subject's action (Fig. 3). Last, for the plan phase, the time points of critical interest for decoding subjects' intentions, we extracted the average of volumes 7–8 (the final two volumes of the plan phase), corresponding to the sustained activity of a planning response (Fig. 3). After each trial's percentage signal change was extracted, these values were z-scored across the run for each voxel within an ROI.
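The window extraction and normalization described above can be sketched in a few lines of Python. The sketch assumes a hypothetical data layout, `run_psc[trial][voxel]`, holding each trial's percentage-signal-change time course as a list of volumes (volume 1 first, TR = 2 s), and it uses the population standard deviation for z-scoring; the text does not say whether the population or sample standard deviation was used, so that choice is an assumption.

```python
from statistics import mean, pstdev

# 1-indexed, inclusive volume windows taken from the text (TR = 2 s).
WINDOWS = {"baseline": (1, 2), "preview": (3, 4), "plan": (7, 8), "execute": (11, 12)}


def window_mean(time_course, window):
    """Average percentage signal change over an inclusive, 1-indexed volume window."""
    first, last = window
    return mean(time_course[first - 1:last])


def zscore(values):
    """z-score a list of values (population SD; an assumption, see lead-in)."""
    mu, sd = mean(values), pstdev(values)
    return [0.0 if sd == 0 else (v - mu) / sd for v in values]


def prepare_run(run_psc, phase):
    """Return patterns[trial][voxel]: window means for one phase, z-scored per voxel across the run."""
    n_trials, n_voxels = len(run_psc), len(run_psc[0])
    raw = [[window_mean(run_psc[t][v], WINDOWS[phase]) for v in range(n_voxels)]
           for t in range(n_trials)]
    # z-score each voxel (column) across all trials of this run
    columns = [zscore([raw[t][v] for t in range(n_trials)]) for v in range(n_voxels)]
    return [[columns[v][t] for v in range(n_voxels)] for t in range(n_trials)]
```

Each row of the returned list is one trial's z-scored voxel pattern for the chosen phase, which is the form of input a classifier would be trained and tested on.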

Our reasoning for using the average of volumes 7–8 (the final two volumes of the plan phase) for pattern classification is straightforward: planning is a sustained rather than a transient process. Whereas simple visual or motor execution responses typically show transient neural activity (Andersen et al., 1997; Andersen and Buneo, 2002), in which the hemodynamic response peaks approximately 6 s after the event and then falls, planning responses generally remain elevated throughout the delay preceding the intended movement (Curtis and D'Esposito, 2003; Curtis et al., 2004; Chapman et al., 2011). With this rationale, we reasoned that, if pattern differences were to arise during movement planning, they would more likely occur during the sustained planning response after the hemodynamic response had reached its peak. For these reasons, we selected the final two volumes of the plan phase to serve as our data points of interest: a critical two-volume window in which the hemodynamic response had already plateaued, any non-plan-related transient responses associated with the auditory cue would be diminishing, and, most importantly, the subject had not yet initiated any movement.

Single-trial classification.

For each subject and for each of the 17 ROIs (14 plan-network and three sensory control ROIs; Fig. 3), nine different binary SVM classifiers were estimated for MVPA, one for each combination of trial phase (preview, plan, and execute) and pairwise comparison (GT vs GB, GT vs touch, and GB vs touch). We used “leave-eight-trials-out” cross-validation to test the accuracy of the binary SVM classifiers, meaning that eight trials from each condition (i.e., 16 trials total) were reserved for testing the classifier and the remaining trials were used to train it (i.e., 40 or 46 remaining trials per condition, depending on whether the subject participated in eight or nine experimental runs, respectively). Although full cross-validation is not feasible with a leave-eight-trials-out design because of the ∼10⁸ possible iterations, a minimum of 1002 train/test iterations were performed for each classification.
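The scale of the full cross-validation problem can be checked with a short calculation. The snippet below counts the distinct ways of holding out eight test trials from a single condition with 48 trials (eight runs) or 54 trials (nine runs) and compares that count with the number of iterations actually run; the constant name `ITERATIONS_RUN` is hypothetical.

```python
from math import comb

ITERATIONS_RUN = 1002  # minimum number of train/test iterations used in the text

for n_trials in (48, 54):  # trials per condition for eight- and nine-run subjects
    n_test_sets = comb(n_trials, 8)  # distinct ways to hold out 8 test trials from one condition
    print(f"{n_trials} trials: {n_test_sets:,} possible test sets "
          f"({n_test_sets / ITERATIONS_RUN:,.0f} times more than {ITERATIONS_RUN} iterations)")
```

For 48 trials, comb(48, 8) = 377,348,994, roughly 3.8 × 10⁸ possible test sets for one condition alone, which is why exhaustive cross-validation was replaced by a large but finite number of iterations.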

A critical assumption underlying single-trial classification analysis is that each individual trial and condition type, beyond being randomly selected for each iteration, be equally represented in classifier training and testing across the total number of iterations. To meet this assumption, given differences in the total number of trials across subjects, the number of iterations was increased for some subjects. For instance, for subjects with eight runs, 1002 iterations were used (each trial was used exactly 167 times to test the classifier and, therefore, 835 times to train it). For subjects with nine runs, a perfect solution was not achievable; for these subjects, the number of iterations was increased to 1026 to ensure that each trial was used 152 ± 1 times to test the classifier. This high number of train-and-test iterations produces a precise estimate and highly representative sample of true classification accuracies (this method showed a test–retest reliability within ±0.5%, based on multiple simulations of 1026 iterations conducted in three subjects). Given the noise inherent in single trials and the fact that each training trial could be randomly selected from any point throughout the experiment, single-trial classification provides a highly conservative but robust measure of decoding accuracy. Moreover, much of the motivation for the present work is to determine the feasibility of predicting specific motor intentions from single fMRI trials, an approach that could then be applied to human movement-impaired patient populations.
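One simple way to obtain the exact balance described above is to draw repeated random partitions of each condition's trials into disjoint test sets of eight. The sketch below is an assumption about how such a scheme could be built, not necessarily the procedure the authors used; `balanced_test_sets` is a hypothetical helper. For 48 trials, each partition yields six test sets, so 167 partitions give 1002 iterations in which every trial is tested exactly 167 times. For 54 trials no such exact partition exists, so the helper refuses that case.

```python
import random
from collections import Counter


def balanced_test_sets(n_trials, test_size, n_repeats, seed=0):
    """Yield test sets (lists of trial indices) for one condition.

    Each repeat is one random partition of all trials into disjoint test sets of
    size test_size, so after n_repeats every trial has been tested exactly
    n_repeats times. Exact balance requires n_trials to be a multiple of test_size.
    """
    if n_trials % test_size:
        raise ValueError("exact balance needs n_trials to be a multiple of test_size")
    rng = random.Random(seed)
    for _ in range(n_repeats):
        order = list(range(n_trials))
        rng.shuffle(order)
        for start in range(0, n_trials, test_size):
            yield order[start:start + test_size]


# Eight-run subject: 48 trials per condition, eight held out per iteration.
test_sets = list(balanced_test_sets(48, 8, n_repeats=167))
counts = Counter(i for test_set in test_sets for i in test_set)
print(len(test_sets))            # 1002 iterations (167 partitions x 6 test sets)
print(set(counts.values()))      # {167}: each trial tested exactly 167 times
print(len(test_sets) - 167)      # 835: times each trial was used for training
```

In a pairwise classification, the same helper would be run separately for each of the two conditions and the resulting test sets paired iteration by iteration.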

Decoding accuracies were computed separately for each subject, as an average across iterations. The average across subjects for each ROI is shown in Figure 4. To assess the statistical significance of decoding accuracies, we performed one-sample t tests across subjects in each of the ROIs to test whether the decoding accuracy for each pairwise discrimination was significantly higher than 50% chance (Fig. 4, black asterisks, two-tailed tests) (Chen et al., 2011).
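The group-level test against chance amounts to a one-sample t statistic on the eight subjects' mean accuracies. The pure-Python sketch below computes it and compares it with the two-tailed critical value for α = 0.05 and 7 degrees of freedom (≈2.365). The accuracies are hypothetical, and a full analysis would compute an exact p value with a statistics library rather than use a fixed threshold.

```python
from math import sqrt
from statistics import mean, stdev

T_CRIT_DF7 = 2.365  # two-tailed critical t, alpha = 0.05, df = 7 (eight subjects)


def one_sample_t(accuracies, chance=50.0):
    """t statistic for H0: mean decoding accuracy equals chance (sample SD, df = n - 1)."""
    n = len(accuracies)
    return (mean(accuracies) - chance) / (stdev(accuracies) / sqrt(n))


# Hypothetical per-subject mean accuracies (%) for one ROI and pairwise comparison.
accs = [55.0, 58.0, 52.0, 60.0, 54.0, 57.0, 53.0, 59.0]
t = one_sample_t(accs)
print(f"t(7) = {t:.2f}; significant: {abs(t) > T_CRIT_DF7}")
```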

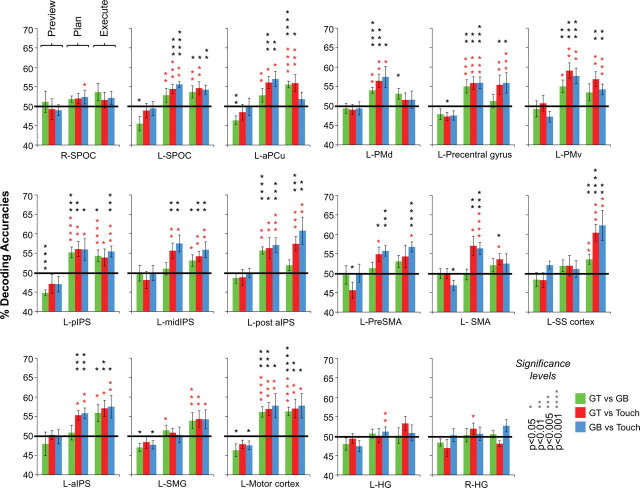

Figure 4.

Classifier decoding accuracies for each ROI for the three trial phases (preview, plan, and execute; the middle three bars correspond to accuracies elicited during the plan phase). Error bars represent SEM across subjects. Solid black lines indicate chance accuracy (50%). Black asterisks denote statistical significance with two-tailed t tests across subjects with respect to 50%. Note that no above-chance decoding is shown during the preview phase, when subjects were unaware which movement they were going to perform. Red asterisks denote statistical significance with paired two-tailed t tests across subjects comparing decoding accuracies in the plan and execute phases with the within-trial decoding accuracies found during the preceding preview phase (i.e., assessing whether accuracies exceed those for simple visual presentation of the object, when subjects were unaware which action they would be performing). Importantly, any areas showing significant decoding during the plan phase with respect to 50% also show significant decoding with respect to the permutation tests (see Materials and Methods) and the preview phase. Note that accurate classification can only be attributed to the spatial response patterns of different planned movement types and not to the overall signal amplitudes within each ROI (see Fig. 5). Also note that decoding accuracies are color coded according to pairwise discriminations and not trial types.

SVMs are designed to discriminate between two classes, and libSVM (the SVM implementation used here) handles problems with more than two classes with the so-called “one-against-one” method, training one binary classifier for each pair of classes. Often, these pairwise results are combined to produce a multiclass discrimination (Hsu and Lin, 2002) (i.e., to distinguish among more than two stimuli). For this particular experiment, however, examining the individual pairwise discriminations was valuable because it reveals which particular type(s) of planned movements could be decoded within each brain area. For instance, a brain region that decodes grasps versus touches (GT vs touch and GB vs touch) but not the two grasps (GT vs GB), an interesting theoretical finding here, would be very difficult to identify with multiclass discrimination approaches.
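The bookkeeping behind the nine classifiers per subject and ROI, and the one-against-one voting that a multiclass approach would add on top, can be sketched as follows. The voting function is a generic illustration of how pairwise decisions are commonly combined, not code from libSVM; the decisions in the example are hypothetical.

```python
from collections import Counter
from itertools import combinations, product

CONDITIONS = ("GT", "GB", "touch")
PHASES = ("preview", "plan", "execute")

# Three pairwise comparisons x three trial phases = nine binary classifiers per subject and ROI.
pairs = list(combinations(CONDITIONS, 2))
classifiers = list(product(PHASES, pairs))
print(len(classifiers))  # 9


def one_vs_one_vote(pairwise_winners):
    """Combine pairwise decisions {(a, b): winner} into one label by majority vote.

    Ties are broken by the order in which labels were first encountered.
    """
    votes = Counter(pairwise_winners.values())
    return votes.most_common(1)[0][0]


# Hypothetical pairwise decisions for a single test trial.
decision = one_vs_one_vote({("GT", "GB"): "GT", ("GT", "touch"): "GT", ("GB", "touch"): "touch"})
print(decision)  # GT
```

The single multiclass label hides which pairwise distinctions a region actually supports, for example whether it separated the two grasps from each other; keeping the three pairwise accuracies preserves that information.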

Permutation tests.

In addition to the t test, we separately assessed statistical significance with nonparametric randomization tests (Golland and Fischl, 2003; Etzel et al., 2008; Smith and Muckli, 2010; Chen et al., 2011), which also showed that the chance distribution of decoding accuracies was approximately normal with a mean of ∼50%. For each subject, after classifier training (and testing) with the true trial identities, we also performed 100 random permutations of the test trial identities before testing the classifier. That is, to empirically test the statistical significance of our findings with the true data labels, we examined how a model trained on true data labels would perform when tested on randomized trial labels. For each of the 100 permuted groupings of test labels, we ran the same cross-validation procedure 1002 or 1026 times (depending on the number of runs per subject). As in the standard analysis, we averaged across the 1002/1026 cross-validation iterations to generate 100 mean accuracies. We then used these 100 random mean accuracies plus the real mean accuracy (the one correct labeling) in each subject to estimate the statistical significance of our group mean accuracy for the eight subjects (see the following paragraph for details of how this was accomplished). This procedure was performed separately for each ROI and pairwise discrimination.

The data plotted in Figure 4 represent the average across each subject's mean accuracy, calculated from the cross-validation procedure. Thus, the empirical statistical significance of this “true” group mean accuracy is given by the probability that it lies outside a population of random group mean accuracies (Chen et al., 2011). This population was generated from 1000 random group accuracies, in which each sample was the average (n = 8) of accuracies drawn at random from each subject's 101 mean accuracies (100 permuted labelings plus the one true labeling). The percentile of the true group mean accuracy was then determined from its place in a rank ordering of the permuted population accuracies [thus, the peak percentile of significance (p < 0.001) is limited by the number of samples producing the randomized probability distribution]. The important result of these randomization tests is that brain areas showing significant decoding with one-sample parametric t tests (vs 50%) also show significant decoding (at p < 0.001) with empirical nonparametric tests (data not shown). In general, this nonparametric randomization test yielded considerably higher significance than the parametric t test (a finding also noted by Smith and Muckli, 2010; Chen et al., 2011), which may indicate that the t test group analysis (as shown in Fig. 4) is a rather conservative estimate of significant decoding accuracies. Nearly identical results were produced when trial identities were shuffled before classifier training (data not shown).
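The group-level randomization test can be sketched as follows. For each subject, the pool of 101 accuracies (100 permuted labelings plus the true one) is sampled once per draw, the eight draws are averaged, and this is repeated 1000 times to build a null population of group means; the p value is the fraction of that population at or above the observed group mean. The one-tailed direction, the function name `group_permutation_p`, and the simulated inputs are assumptions for illustration.

```python
import random
from statistics import mean


def group_permutation_p(null_accs, true_accs, n_samples=1000, seed=0):
    """Empirical p value for the group mean decoding accuracy.

    null_accs[s]: subject s's mean accuracies from permuted test labels (100 here)
    true_accs[s]: subject s's mean accuracy with the correct labels
    A returned p of 0.0 means p < 1 / n_samples (resolution is limited by n_samples).
    """
    rng = random.Random(seed)
    observed = mean(true_accs)
    pools = [list(null) + [true] for null, true in zip(null_accs, true_accs)]  # 101 per subject
    null_groups = [mean([rng.choice(pool) for pool in pools]) for _ in range(n_samples)]
    p = sum(g >= observed for g in null_groups) / n_samples  # one-tailed: as good or better
    return observed, p


# Simulated inputs: 8 subjects, 100 chance-level accuracies each, true accuracy 58%.
sim = random.Random(1)
null = [[sim.gauss(50.0, 2.0) for _ in range(100)] for _ in range(8)]
observed, p = group_permutation_p(null, [58.0] * 8)
print(observed, p)
```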

Within-trial tests.

We also performed a within-trial test for the significance of our decoding accuracies by examining whether classification accuracies found during the plan and execute phases of the trial were significantly higher than the decoding accuracies found during the preceding preview phase. In other words, we wanted to assess whether significant pattern classifications observed in the plan and execute phases could unequivocally be attributed to movement intentions (and executions) rather than to simple visual pattern differences that begin to arise during object presentation, when subjects had no prior knowledge of which action they would be performing (preview phase). To do this, we ran paired t tests in each ROI to determine whether the decoding accuracies discriminating between trial types during the plan and execute phases were significantly higher than the preview phase decoding accuracies occurring earlier within the same trials (Fig. 4, two-tailed tests, red asterisks). For all parametric tests, we additionally verified with Lilliefors tests that the mean accuracies across subjects were consistent with an underlying normal distribution.
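The within-trial comparison reduces to a paired t test across subjects, which is simply a one-sample t test on each subject's plan-minus-preview accuracy difference. A minimal sketch with hypothetical accuracies follows; the critical value (≈2.365 for a two-tailed α = 0.05 with 7 degrees of freedom) stands in for an exact p value.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(plan_accs, preview_accs):
    """Paired t statistic (df = n - 1) for plan vs preview accuracies from the same subjects."""
    diffs = [plan - preview for plan, preview in zip(plan_accs, preview_accs)]
    return mean(diffs) / (stdev(diffs) / sqrt(len(diffs)))


# Hypothetical per-subject accuracies (%) for one ROI and pairwise comparison.
plan = [56.0, 58.0, 55.0, 60.0, 57.0, 59.0, 54.0, 61.0]
preview = [50.0, 51.0, 49.0, 52.0, 50.0, 48.0, 51.0, 50.0]
t = paired_t(plan, preview)
print(f"t(7) = {t:.2f}; plan > preview: {t > 2.365}")
```

Pairing matters here because the preview and plan accuracies come from the same subjects and trials, so between-subject differences in overall decodability cancel out in the differences.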

Results

The voxel patterns within several of the plan-related ROIs enabled accurate decoding of grasp versus touch comparisons (GT vs touch and GB vs touch) and, in some cases, all three comparisons (also GT vs GB) with respect to 50% chance (for the corresponding plan-related decoding accuracies, see Fig. 4). For instance, pattern classification in all of the following ROIs successfully decoded movement plans for the grasp versus touch conditions (GT vs touch and GB vs touch): L-SPOC, L-aPCu, L-midIPS, L-aIPS, L-SMA, and L-PreSMA. Given that we found overlapping and indistinguishable response amplitudes for the three different movement types in all of these areas for each of the different time phases (preview, plan, and execute) (Fig. 3), this decoding result suggests that each of these regions differentially contributes to both grasp and reach planning (instead of coding one action vs the other) but, importantly, not to the planning of the two different grasp movements. Instead, the decoding of movement plans for precision grasps on the differently sized objects (in addition to the grasp vs touch discriminations, i.e., all three comparisons: GT vs GB, GT vs touch, and GB vs touch) was constrained to a different set of ROIs: L-pIPS, L-post aIPS, L-motor cortex, L-precentral gyrus, L-PMd, and L-PMv (Fig. 4). This pattern of results across these parieto-frontal areas suggests that regions can be functionally classified according to whether their preparatory signals are predictive of upcoming grasp versus reach movements only or, in addition, of different precision grasps (for a color coding of the ROIs according to the types of movements they can predict, see Fig. 2). 
Note that decoding accuracies were based on single-trial classifications and, as such, demonstrate that the spatial voxel patterns generated during movement planning (and used for classifier training) were robust and consistent enough across the full experiment (all eight to nine experimental runs) to allow for successful prediction.

As anticipated, the three sensory control areas (L-SS cortex, L-HG, and R-HG) showed no significant decoding during planning, highlighting the fact that predictive information can be specifically localized to particular nodes of the parieto-frontal network. This is particularly intuitive in the case of somatosensory cortex: it should not be expected to decode anything until the mechanoreceptors of the hand are stimulated at movement onset (Fig. 4). Likewise, L-HG and R-HG are primary auditory structures and thus are not expected to carry sustained plan-related predictive information. Null results should always be interpreted with caution in pattern classification [because they may reflect limitations in the classification algorithms rather than the data (Pereira and Botvinick, 2011)]; nevertheless, the absence of decoding during planning in these areas is certainly consistent with expectations.

Importantly, our results also show that plan-related decoding can only be attributed to the intention to perform a specific movement, because we find no significant decoding above 50% chance in the preceding preview phase (i.e., when movement-planning information was unavailable). Moreover, when we additionally tested whether above-chance decoding during planning was also significantly higher than the within-trial decoding found during the preceding preview phase, we found this to be the case in every region (Fig. 4, red asterisks). Critically, accurate classification reflects only the spatial response pattern profiles of different planned movement types and not the overall fMRI signal amplitudes within each ROI. When we averaged the trial responses across all voxels and subjects in each ROI (as done in conventional fMRI ROI analyses), we found no significant differences among the three hand movements in any phase of the trial (preview, plan, or execute) (see the trial time courses in Fig. 3 and, for confirmation, a univariate analysis of signal amplitudes for the same time windows as those extracted for MVPA in Fig. 5). As an additional type I error control for our classification accuracies, we ran the same pattern discrimination analysis on two noncortical ROIs outside of our plan-related network in which accurate classification should not be possible: the right ventricle and a region outside the brain. As expected, pattern classification revealed no significant decoding in these two areas for any phase of the trial (Fig. 6).
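Why spatial patterns can carry information that ROI-averaged amplitudes do not is easiest to see with a toy example. In the hypothetical four-voxel ROI below, two planned movements produce identical mean responses, so a univariate comparison finds nothing, yet their spatial patterns are anticorrelated. A simple correlation-based nearest-template classifier (used here instead of an SVM purely for brevity) still assigns a noisy test trial to the correct movement.

```python
from statistics import mean

# Hypothetical voxel patterns (% signal change) for two planned movements in one ROI.
templates = {
    "grasp": [1.5, 0.5, 1.5, 0.5],
    "touch": [0.5, 1.5, 0.5, 1.5],
}


def pearson(a, b):
    """Pearson correlation between two equal-length voxel patterns."""
    ma, mb = mean(a), mean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    var_a = sum((x - ma) ** 2 for x in a)
    var_b = sum((y - mb) ** 2 for y in b)
    return cov / (var_a * var_b) ** 0.5


def classify(trial, templates):
    """Assign the trial to the template whose spatial pattern it correlates with most."""
    return max(templates, key=lambda name: pearson(trial, templates[name]))


# Univariate view: ROI-averaged amplitudes are identical for the two movements.
print({name: mean(pattern) for name, pattern in templates.items()})
# Multivariate view: a noisy hypothetical test trial is still classified correctly.
print(classify([1.4, 0.6, 1.3, 0.7], templates))  # grasp
```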

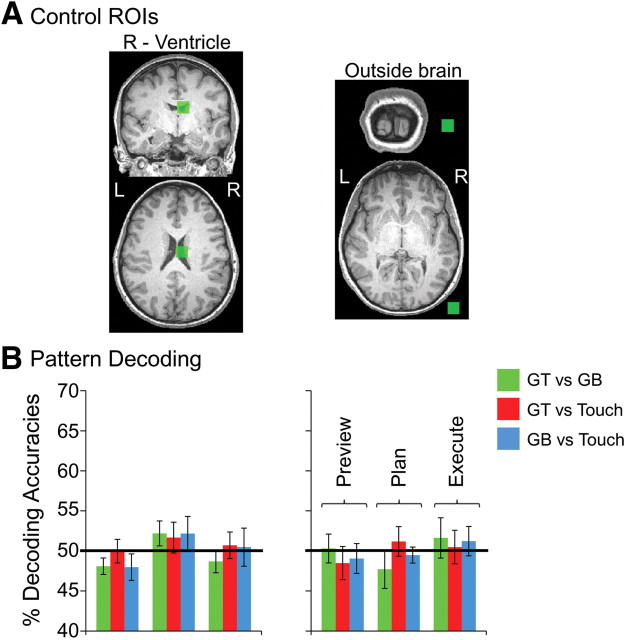

Figure 6.

Classifier decoding accuracies in non-brain control regions. A, Non-brain control ROIs defined in each subject (denoted in green; example subject shown). B, Classifier accuracies for the three trial phases for the right ventricle (left) and outside the brain ROI (right). Error bars represent SEM across subjects. Solid lines show chance classification accuracy (50%). Importantly, no significant differences were found with t tests across subjects with respect to 50% chance.

In addition to using the spatial voxel activity patterns to predict upcoming hand movements, we performed a voxel weight analysis for each ROI (for example, see Kamitani and Tong, 2005) to directly determine whether any structured spatial relationship of voxel activity according to the action being planned could be found (data not shown). To do this, for each iteration of the cross-validation procedure (1002 or 1026 iterations, depending on the number of runs per subject), a different SVM discriminant function was estimated from the subset of trials included for training. We calculated the voxel weights for each function and then averaged across all iterations to produce a set of mean voxel weights; this procedure was repeated for each pairwise comparison, ROI, and subject (note that the weight of each voxel provides a measure of its relationship with the class label as learned by the classifier; in this case, GT, GB, or touch planned actions) (for details, see Pereira and Botvinick, 2011). Both across and within subjects, for each ROI and pairwise comparison, we found little structured relationship of voxel weights according to the action being planned. For instance, no correspondence was found between the spatial arrangements of GT versus touch and GB versus touch voxel weights in each ROI, despite the two grasp actions being highly similar and the two touch actions being exactly the same. We did, however, notice that, within individual ROIs, despite the inconsistency of voxel weight patterns across subjects and across pairwise comparisons, voxels that discriminated one planned movement from another tended to cluster. That is, voxels coding for one particular movement (reflected by the positive or negative direction of the weight) tended to lie adjacent to one another within the ROI, although these sub-ROI clusters were not necessarily consistent between comparisons. 
Although caution should be applied to interpreting the magnitude of the voxel weights assigned by any classifier (Pereira and Botvinick, 2011), this general result is to be expected based on the structure of the surrounding vasculature and spatial resolution of the BOLD response (Logothetis and Wandell, 2004), further reinforcing the notion that spatial voxel patterns directly reflect underlying physiological changes. Furthermore, and more generally, the findings from this voxel weight analysis are highly consistent with expectations from monkey neurophysiology. The neural organization of macaque parieto-frontal cortex is highly distributed and multiplexed, with neurons containing different sensorimotor frames of reference and separate response properties (e.g., for effector or location) residing in close anatomical proximity (Snyder et al., 1997; Andersen and Buneo, 2002; Calton et al., 2002; Andersen and Cui, 2009; Chang and Snyder, 2010). As such, combined with the fact that we are able to accurately predict upcoming hand actions from the trained pattern classifiers, the primarily unstructured arrangement of voxel weights appears to have a well-documented anatomical basis.
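For a linear SVM, each voxel's weight is the corresponding component of the hyperplane's normal vector w. The averaging across cross-validation iterations, and one simple way of quantifying correspondence between two weight maps (the fraction of voxels whose mean weights share a sign, which is about 0.5 for unrelated maps), can be sketched as below. The agreement measure and all numbers are assumptions for illustration; the text does not specify how correspondence between weight maps was assessed.

```python
from statistics import mean


def mean_weights(weight_vectors):
    """Average voxel weights across cross-validation iterations (one vector per iteration)."""
    return [mean(voxel_ws) for voxel_ws in zip(*weight_vectors)]


def sign_agreement(a, b):
    """Fraction of voxels whose weights in two maps are both nonzero and share a sign."""
    return sum(x * y > 0 for x, y in zip(a, b)) / len(a)


# Hypothetical weights from two iterations for a four-voxel ROI.
# The sign indicates which class of the pair a voxel favors (depends on label coding).
gt_vs_touch = mean_weights([[0.5, -0.25, 0.75, -1.0], [0.25, -0.75, 0.25, -0.5]])
gb_vs_touch = mean_weights([[-0.5, 0.25, 0.5, -0.25], [0.0, 0.75, -0.25, -0.75]])
print(gt_vs_touch)                               # [0.375, -0.5, 0.5, -0.75]
print(sign_agreement(gt_vs_touch, gb_vs_touch))  # 0.5
```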

Additional univariate analyses

Although not shown, we also performed a univariate contrast of [(GT execute + GB execute) vs 2 * (touch execute)] to define left aIPS, a brain area that studies from our laboratory have consistently shown to be preferentially involved in grasping actions (Culham et al., 2003; Gallivan et al., 2009; Cavina-Pratesi et al., 2010). We localized this region in six of eight subjects (t = 2.4, p < 0.05; in four subjects these clusters did not survive cluster threshold correction), allowing a direct comparison of its general anatomical location with the left aIPS regions we defined in single subjects for pattern analyses according to the contrast of plan > preview (which instead shows no univariate differences between grasp and touch trials during the execute phase) (Fig. 5). We found a good degree of overlap between the (plan > preview)-defined left aIPS and the left aIPS defined by the contrast of [(GT execute + GB execute) vs 2 * (touch execute)], with the latter being much smaller. For instance, in the six subjects who showed activity in aIPS with the [(GT execute + GB execute) vs 2 * (touch execute)] contrast, this area shared the following percentage of its total voxels with the larger aIPS area defined by the plan > preview contrast: subject 1 (13.7%; size of grasps vs touch defined aIPS, 66 voxels; size of plan > preview defined aIPS, 100 voxels), subject 2 (25%; size of grasps vs touch defined aIPS, 4 voxels; size of plan > preview defined aIPS, 97 voxels), subject 3 (75%; size of grasps vs touch defined aIPS, 4 voxels; size of plan > preview defined aIPS, 62 voxels), subject 4 (33.3%; size of grasps vs touch defined aIPS, 6 voxels; size of plan > preview defined aIPS, 59 voxels), subject 5 (33.3%; size of grasps vs touch defined aIPS, 3 voxels; size of plan > preview defined aIPS, 92 voxels), and subject 6 (75%; size of grasps vs touch defined aIPS, 4 voxels; size of plan > preview defined aIPS, 84 voxels). 
The discrepancy in size and signal amplitude differences between these two regions can be explained as a difference of contrasts. Specifically, a directed search for grasps > touches reveals a much smaller subset of aIPS voxels, each of which individually shows the specified effect. In comparison, the aIPS defined by the more general contrast of plan > preview (used for pattern analyses here) additionally includes voxels outside this smaller subset; thus, when response amplitudes are averaged across this larger cluster (as shown in Figs. 3, 5), the contribution of each individual voxel to the overall ROI signal is diminished. It is worth mentioning that, in addition to the small left aIPS activations found in six subjects with the univariate contrast of [(GT execute + GB execute) vs 2 * (touch execute)], small clusters of voxels in three other areas (left pIPS, left motor cortex, and left PMd) were also reliably coactivated; these are areas that pattern classification revealed to decode all three planned movements. Apart from distinguishing univariate and multivariate approaches (for additional explanations and examples, see Mur et al., 2009; Pereira et al., 2009; Raizada and Kriegeskorte, 2010), these findings, more than anything, highlight the additional plan-related information contained in voxel spatial patterns.
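Two quantities in this comparison can be written down explicitly: the balanced contrast [(GT execute + GB execute) vs 2 * (touch execute)], whose weights (1, 1, −2) sum to zero, and the overlap percentage, i.e., the fraction of the smaller grasp-defined aIPS that falls within the plan > preview aIPS. The helper names, voxel coordinates, and response estimates below are hypothetical.

```python
# Weights for [(GT execute + GB execute) vs 2 * (touch execute)]; they sum to zero, so the
# contrast is zero whenever all three movements evoke the same response.
CONTRAST = {"GT": 1, "GB": 1, "touch": -2}


def contrast_value(responses):
    """Weighted sum of execute-phase response estimates for GT, GB, and touch trials."""
    return sum(weight * responses[cond] for cond, weight in CONTRAST.items())


def overlap_percent(small_roi, large_roi):
    """Percentage of the smaller ROI's voxels that also belong to the larger ROI."""
    small = set(small_roi)
    return 100.0 * len(small & set(large_roi)) / len(small)


# Hypothetical voxel coordinates (x, y, z): a 4-voxel grasp-defined ROI, 3 of whose voxels are shared.
grasp_roi = [(-40, -40, 45), (-41, -40, 45), (-42, -40, 45), (-50, -30, 45)]
plan_roi = [(-40, -40, 45), (-41, -40, 45), (-42, -40, 45), (-43, -40, 45)]
print(overlap_percent(grasp_roi, plan_roi))  # 75.0
print(contrast_value({"GT": 1.2, "GB": 1.0, "touch": 0.8}))
```

Because the percentage is taken relative to the smaller ROI, a small grasp-defined cluster can overlap heavily with the larger plan > preview ROI while covering only a small fraction of it, consistent with the sizes reported above.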

Discussion

Here, for the first time, fMRI signal decoding is used to reveal predictive neural signals underlying the planning and implementation of real object-directed hand actions in humans. We show that this predictive information is revealed not in preparatory response amplitudes but in the spatial pattern profiles of voxels. This finding may explain why previous attempts to characterize plan-related activity in parieto-frontal networks with traditional fMRI subtraction methods have met with mixed success. From a theoretical perspective, these results provide new insights into the different roles played by various regions within the human parieto-frontal network; they add to our previous understanding of the predictive movement information contained in parietal preparatory responses (Andersen and Buneo, 2002; Cisek and Kalaska, 2010) and advance previous notions of motor and premotor contributions to movement planning (Tanné-Gariépy et al., 2002; Filimon, 2010).

Decoding in parietal cortex

A particularly notable finding from this study is that preparatory activity along the dorsomedial circuit (L-SPOC, L-aPCu, and L-midIPS) decodes planned grasp versus touch movements. Although these areas are well known to be involved in the planning and execution of reaching movements in both humans and monkeys (Andersen and Buneo, 2002; Culham et al., 2006; Beurze et al., 2007), there has been remarkably little evidence to suggest their particular involvement during grasp planning. To our knowledge, the only evidence to date in support of this notion comes from neural recordings in monkeys showing that parieto-occipital neurons, in addition to being sensitive for reach direction, are also sensitive to grip/wrist orientation and grip type (Fattori et al., 2009, 2010). Based on our similar findings in SPOC, it now seems clear that fMRI pattern analysis in humans can provide a new tool for capturing neural representations only previously detected with invasive electrode recordings in monkeys. Moreover, our present results advance these previous findings by showing for the first time that motor plans requiring hand preshaping or precise object-directed interactions extend farther anteriorly into both the precuneus and midIPS.

The pIPS in the human and macaque monkey appears to serve a variety of visuomotor and attention-related functions: it is involved in the orienting of visual selection and attention (Szczepanski et al., 2010), encodes the 3D visual features of objects for hand actions (Sakata et al., 1998), and integrates both target and effector-specific information for movements (Beurze et al., 2009). pIPS preparatory activity in our task may primarily reflect the combined coding of all these properties, given that differences in finger precision, hand orientation, and attention to 3D object shape are required across the three hand movements. Because attention is often directed toward a target location before movement, these particular findings might provide additional evidence for the integration of visuomotor and attention-related processes within common brain areas during movement planning (Moore and Fallah, 2001; Baldauf and Deubel, 2010).

Area aIPS in both the human and monkey shows selective activity for the execution of grasping movements (Murata et al., 2000; Culham et al., 2003). Here we show that both aIPS and an immediately posterior division, post-aIPS, are selective for the planning of grasp versus reach movements. Moreover, aIPS discriminates between similar grasps on objects of different sizes during execution, whereas post-aIPS performs such discriminations during planning. These results are consistent with the object size tuning expected from macaque anterior intraparietal area (AIP) (Murata et al., 2000) and provide additional support for a homology between AIP and human aIPS. Importantly, the distinction here between the two human divisions of aIPS provides evidence for a gradient of grasp-related function, with an anterior division perhaps more related to somatosensory feedback (Culham, 2004) and the online control of grip force (Ehrsson et al., 2003) and a posterior division more related to visual object features (Culham, 2004; Durand et al., 2007) and object–action associations (Valyear et al., 2007). In fact, these functionally distinct regions may correspond to anatomically distinct regions defined by cytoarchitectonics (Choi et al., 2006).

Decoding in motor and premotor cortex

Although motor cortex, traditionally speaking, is predominantly engaged near the moment of movement execution and presumed, at least compared with the higher-level cognitive processing observed in parietal and premotor cortex, to be a relatively lower-level motor output structure [i.e., given its direct connections with corticospinal neurons (Chouinard and Paus, 2006) and that much of its activity can be explained in simple muscle control terms (Todorov, 2000)], such descriptions likely capture only part of its complexity. For instance, microstimulation of motor cortex structures can produce a complex array of ecologically relevant movements [e.g., grasping, feeding, etc. (Graziano, 2006)], and recent evidence also suggests that its outputs reflect whether an action goal is present or not (Cattaneo et al., 2009). The fact that we can decode each particular hand movement from the preparatory responses in motor cortex several moments before action execution might additionally speak to a more prominent role in movement planning processes. Alternatively, it might reflect the fact that higher-level signals from other regions must often pass through motor cortex before reaching the spinal cord.

In addition to motor cortex, areas in premotor cortex have direct (albeit weaker) anatomical connections to the spinal cord (Chouinard and Paus, 2006) but, importantly, are also highly interconnected with frontal, parietal, and motor cortical regions (Andersen and Cui, 2009), making them ideally situated to receive, influence, and communicate high-level cognitive movement-related information. Beyond forming a critical node in the visuomotor planning network, different premotor areas (e.g., PMd and PMv) may, according to recent evidence, serve dissociable functions. For instance, experiments in both humans and monkeys suggest that PMv is more involved in hand preshaping and grip-specific responses (distal components), whereas PMd is more involved in power-grip or reach-related hand movements (proximal components) (Tanné-Gariépy et al., 2002; Davare et al., 2006). These findings are consistent with the suggestion that PMv and PMd form the anterior components of dissociable parieto-frontal networks involved in visuomotor control: the dorsolateral circuit (involving connections from pIPS to AIP and then to PMv) is thought to be specialized for grasping, and the dorsomedial circuit (involving connections from V6A/aPCu to midIPS and then to PMd) is thought to be specialized for reaching (for review, see Rizzolatti and Matelli, 2003; Grafton, 2010). Given that most of these previous distinctions are based on characterizations of activity evoked during the movement itself, the accurate decoding of different planned hand movements shown here adds a significant dimension to such descriptions. Indeed, although our finding that PMv can discriminate different upcoming hand movements (grasps and reaches) may be congruent with this parallel-pathway view, the same finding in PMd (more traditionally implicated in reach planning) seems essentially incompatible with it. 
There are several reasons, however, to suspect that PMd, as shown here, may also be involved in grasp-related movement planning. For instance, both PMd and PMv contain distinct hand digit representations (Dum and Strick, 2005), PMd activity is modulated during object grasping (Raos et al., 2004), by grasp-relevant object properties (Grol et al., 2007; Verhagen et al., 2008) and the grip force scaling required (Hendrix et al., 2009), and multiunit responses in PMd (as well as PMv) are highly predictive of the current reach and grasp movement (Stark and Abeles, 2007). Furthermore, previous work from our laboratory has found differences in PMd between grasping and reaching during the execution phase of the movement (Culham et al., 2003; Cavina-Pratesi et al., 2010). Our current findings with fMRI in humans add to an emerging view that simple grasp (distal) versus reach (proximal) descriptions cannot directly account for the preparatory responses in PMd and PMv and that significant coordination between the two regions is a requirement for complex object-directed behavior.

Implications

Here we have demonstrated that MVPA can decode surprisingly subtle distinctions between actions across a larger network of areas than would be expected from past human neuroimaging research. Based on nonhuman primate neurophysiology, one might expect decoding of more pronounced differences between trials (such as the effector used or the target location acted on). Here, however, effector and object location remained constant, yet we found decoding of slight differences in the planning of actions: in several areas, we were able to discriminate upcoming grasp versus reach hand movements, and, in a subset of these areas, even more surprisingly, we could additionally discriminate upcoming precision grasps on objects of subtly different sizes. These findings suggest that neural implants within several of the reported predictive regions may eventually enable the reconstruction of highly specific planned actions in movement-impaired human patient populations. A critical consideration for cognitive neural prosthetics is the optimal positioning of electrode arrays to capture the appropriate intention-related signals (Andersen et al., 2010). Here, we highlight a number of promising candidate regions that can be further explored in nonhuman primates, both to assist the development of such prosthetics and to expand our understanding of the intention-related signals underlying complex sensorimotor behaviors.

Footnotes

This work was supported by Canadian Institutes of Health Research Operating Grant MOP84293 (J.C.C.). We are grateful to Fraser Smith for assistance with multivoxel pattern analysis methods, Mark Daley for helpful discussions, and Fraser Smith and Cristiana Cavina-Pratesi for their valuable comments on this manuscript.

References

- Andersen RA, Buneo CA. Intentional maps in posterior parietal cortex. Annu Rev Neurosci. 2002;25:189–220. doi: 10.1146/annurev.neuro.25.112701.142922.

- Andersen RA, Cui H. Intention, action planning, and decision making in parietal-frontal circuits. Neuron. 2009;63:568–583. doi: 10.1016/j.neuron.2009.08.028.

- Andersen RA, Snyder LH, Bradley DC, Xing J. Multimodal representation of space in the posterior parietal cortex and its use in planning movements. Annu Rev Neurosci. 1997;20:303–330. doi: 10.1146/annurev.neuro.20.1.303.

- Andersen RA, Hwang EJ, Mulliken GH. Cognitive neural prosthetics. Annu Rev Psychol. 2010;61:169–190. C1–C3. doi: 10.1146/annurev.psych.093008.100503.

- Baldauf D, Deubel H. Attentional landscapes in reaching and grasping. Vision Res. 2010;50:999–1013. doi: 10.1016/j.visres.2010.02.008.

- Beurze SM, de Lange FP, Toni I, Medendorp WP. Integration of target and effector information in the human brain during reach planning. J Neurophysiol. 2007;97:188–199. doi: 10.1152/jn.00456.2006.

- Beurze SM, de Lange FP, Toni I, Medendorp WP. Spatial and effector processing in the human parietofrontal network for reaches and saccades. J Neurophysiol. 2009;101:3053–3062. doi: 10.1152/jn.91194.2008.

- Boynton GM, Engel SA, Glover GH, Heeger DJ. Linear systems analysis of functional magnetic resonance imaging in human V1. J Neurosci. 1996;16:4207–4221. doi: 10.1523/JNEUROSCI.16-13-04207.1996.

- Calton JL, Dickinson AR, Snyder LH. Non-spatial, motor-specific activation in posterior parietal cortex. Nat Neurosci. 2002;5:580–588. doi: 10.1038/nn0602-862.

- Cattaneo L, Caruana F, Jezzini A, Rizzolatti G. Representation of goal and movements without overt motor behavior in the human motor cortex: a transcranial magnetic stimulation study. J Neurosci. 2009;29:11134–11138. doi: 10.1523/JNEUROSCI.2605-09.2009.

- Cavina-Pratesi C, Monaco S, Fattori P, Galletti C, McAdam TD, Quinlan DJ, Goodale MA, Culham JC. Functional magnetic resonance imaging reveals the neural substrates of arm transport and grip formation in reach-to-grasp actions in humans. J Neurosci. 2010;30:10306–10323. doi: 10.1523/JNEUROSCI.2023-10.2010.

- Chang SW, Snyder LH. Idiosyncratic and systematic aspects of spatial representations in the macaque parietal cortex. Proc Natl Acad Sci U S A. 2010;107:7951–7956. doi: 10.1073/pnas.0913209107.

- Chapman CS, Gallivan JP, Culham JC, Goodale MA. Mental blocks: fMRI reveals top-down modulation of early visual cortex when planning a grasp movement that is interfered with by an obstacle. Neuropsychologia. 2011;49:1703–1717. doi: 10.1016/j.neuropsychologia.2011.02.048.

- Chen Y, Namburi P, Elliott LT, Heinzle J, Soon CS, Chee MW, Haynes JD. Cortical surface-based searchlight decoding. Neuroimage. 2011;56:582–592. doi: 10.1016/j.neuroimage.2010.07.035.

- Choi HJ, Zilles K, Mohlberg H, Schleicher A, Fink GR, Armstrong E, Amunts K. Cytoarchitectonic identification and probabilistic mapping of two distinct areas within the anterior ventral bank of the human intraparietal sulcus. J Comp Neurol. 2006;495:53–69. doi: 10.1002/cne.20849.

- Chouinard PA, Paus T. The primary motor and premotor areas of the human cerebral cortex. Neuroscientist. 2006;12:143–152. doi: 10.1177/1073858405284255.

- Cisek P, Kalaska JF. Neural mechanisms for interacting with a world full of action choices. Annu Rev Neurosci. 2010;33:269–298. doi: 10.1146/annurev.neuro.051508.135409.

- Culham JC. Human brain imaging reveals a parietal area specialized for grasping. In: Kanwisher N, Duncan J, editors. Attention and performance XX: functional brain imaging of human cognition. Oxford: Oxford UP; 2004.

- Culham JC, Danckert SL, DeSouza JF, Gati JS, Menon RS, Goodale MA. Visually guided grasping produces fMRI activation in dorsal but not ventral stream brain areas. Exp Brain Res. 2003;153:180–189. doi: 10.1007/s00221-003-1591-5.

- Culham JC, Cavina-Pratesi C, Singhal A. The role of parietal cortex in visuomotor control: what have we learned from neuroimaging? Neuropsychologia. 2006;44:2668–2684. doi: 10.1016/j.neuropsychologia.2005.11.003.

- Curtis CE, D'Esposito M. Persistent activity in the prefrontal cortex during working memory. Trends Cogn Sci. 2003;7:415–423. doi: 10.1016/s1364-6613(03)00197-9.

- Curtis CE, Rao VY, D'Esposito M. Maintenance of spatial and motor codes during oculomotor delayed response tasks. J Neurosci. 2004;24:3944–3952. doi: 10.1523/JNEUROSCI.5640-03.2004.

- Curtis CE, Cole MW, Rao VY, D'Esposito M. Canceling planned action: an FMRI study of countermanding saccades. Cereb Cortex. 2005;15:1281–1289. doi: 10.1093/cercor/bhi011.

- Davare M, Andres M, Cosnard G, Thonnard JL, Olivier E. Dissociating the role of ventral and dorsal premotor cortex in precision grasping. J Neurosci. 2006;26:2260–2268. doi: 10.1523/JNEUROSCI.3386-05.2006.

- Dum RP, Strick PL. Frontal lobe inputs to the digit representations of the motor areas on the lateral surface of the hemisphere. J Neurosci. 2005;25:1375–1386. doi: 10.1523/JNEUROSCI.3902-04.2005.

- Durand JB, Nelissen K, Joly O, Wardak C, Todd JT, Norman JF, Janssen P, Vanduffel W, Orban GA. Anterior regions of monkey parietal cortex process visual 3D shape. Neuron. 2007;55:493–505. doi: 10.1016/j.neuron.2007.06.040.

- Ehrsson HH, Fagergren A, Johansson RS, Forssberg H. Evidence for the involvement of the posterior parietal cortex in coordination of fingertip forces for grasp stability in manipulation. J Neurophysiol. 2003;90:2978–2986. doi: 10.1152/jn.00958.2002.

- Etzel JA, Gazzola V, Keysers C. Testing simulation theory with cross-modal multivariate classification of fMRI data. PLoS One. 2008;3:e3690. doi: 10.1371/journal.pone.0003690.

- Fattori P, Breveglieri R, Marzocchi N, Filippini D, Bosco A, Galletti C. Hand orientation during reach-to-grasp movements modulates neuronal activity in the medial posterior parietal area V6A. J Neurosci. 2009;29:1928–1936. doi: 10.1523/JNEUROSCI.4998-08.2009.

- Fattori P, Raos V, Breveglieri R, Bosco A, Marzocchi N, Galletti C. The dorsomedial pathway is not just for reaching: grasping neurons in the medial parieto-occipital cortex of the macaque monkey. J Neurosci. 2010;30:342–349. doi: 10.1523/JNEUROSCI.3800-09.2010.

- Filimon F. Human cortical control of hand movements: parietofrontal networks for reaching, grasping, and pointing. Neuroscientist. 2010;16:388–407. doi: 10.1177/1073858410375468.

- Filimon F, Nelson JD, Huang RS, Sereno MI. Multiple parietal reach regions in humans: cortical representations for visual and proprioceptive feedback during on-line reaching. J Neurosci. 2009;29:2961–2971. doi: 10.1523/JNEUROSCI.3211-08.2009.

- Formisano E, De Martino F, Bonte M, Goebel R. “Who” is saying “what?” Brain-based decoding of human voice and speech. Science. 2008;322:970–973. doi: 10.1126/science.1164318.

- Gallivan JP, Cavina-Pratesi C, Culham JC. Is that within reach? fMRI reveals that the human superior parieto-occipital cortex encodes objects reachable by the hand. J Neurosci. 2009;29:4381–4391. doi: 10.1523/JNEUROSCI.0377-09.2009.

- Gold JI, Shadlen MN. The neural basis of decision making. Annu Rev Neurosci. 2007;30:535–574. doi: 10.1146/annurev.neuro.29.051605.113038.

- Golland P, Fischl B. Permutation tests for classification: towards statistical significance in image-based studies. Inf Process Med Imaging. 2003;18:330–341. doi: 10.1007/978-3-540-45087-0_28.

- Grafton ST. The cognitive neuroscience of prehension: recent developments. Exp Brain Res. 2010;204:475–491. doi: 10.1007/s00221-010-2315-2.

- Graziano M. The organization of behavioral repertoire in motor cortex. Annu Rev Neurosci. 2006;29:105–134. doi: 10.1146/annurev.neuro.29.051605.112924.

- Grol MJ, Majdandzić J, Stephan KE, Verhagen L, Dijkerman HC, Bekkering H, Verstraten FA, Toni I. Parieto-frontal connectivity during visually guided grasping. J Neurosci. 2007;27:11877–11887. doi: 10.1523/JNEUROSCI.3923-07.2007.

- Harrison SA, Tong F. Decoding reveals the contents of visual working memory in early visual areas. Nature. 2009;458:632–635. doi: 10.1038/nature07832.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Haynes JD, Sakai K, Rees G, Gilbert S, Frith C, Passingham RE. Reading hidden intentions in the human brain. Curr Biol. 2007;17:323–328. doi: 10.1016/j.cub.2006.11.072.

- Hendrix CM, Mason CR, Ebner TJ. Signaling of grasp dimension and grasp force in dorsal premotor cortex and primary motor cortex neurons during reach to grasp in the monkey. J Neurophysiol. 2009;102:132–145. doi: 10.1152/jn.00016.2009.

- Hsu CW, Lin CJ. A comparison of methods for multi-class support vector machines. IEEE Trans Neural Netw. 2002;13:415–425. doi: 10.1109/72.991427.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- LaConte S, Anderson J, Muley S, Ashe J, Frutiger S, Rehm K, Hansen LK, Yacoub E, Hu X, Rottenberg D, Strother S. The evaluation of preprocessing choices in single-subject BOLD fMRI using NPAIRS performance metrics. Neuroimage. 2003;18:10–27. doi: 10.1006/nimg.2002.1300.

- Lewis JW. Cortical networks related to human use of tools. Neuroscientist. 2006;12:211–231. doi: 10.1177/1073858406288327.

- Logothetis NK, Wandell BA. Interpreting the BOLD signal. Annu Rev Physiol. 2004;66:735–769. doi: 10.1146/annurev.physiol.66.082602.092845.

- Meyer K, Kaplan JT, Essex R, Webber C, Damasio H, Damasio A. Predicting visual stimuli on the basis of activity in auditory cortices. Nat Neurosci. 2010;13:667–668. doi: 10.1038/nn.2533.

- Mitchell TM, Hutchinson R, Just MA, Niculescu RS, Pereira F, Wang X. Classifying instantaneous cognitive states from FMRI data. AMIA Annu Symp Proc. 2003:465–469.

- Moore T, Fallah M. Control of eye movements and spatial attention. Proc Natl Acad Sci U S A. 2001;98:1273–1276. doi: 10.1073/pnas.021549498.

- Mourão-Miranda J, Bokde AL, Born C, Hampel H, Stetter M. Classifying brain states and determining the discriminating activation patterns: support Vector Machine on functional MRI data. Neuroimage. 2005;28:980–995. doi: 10.1016/j.neuroimage.2005.06.070.

- Mur M, Bandettini PA, Kriegeskorte N. Revealing representational content with pattern-information fMRI: an introductory guide. Soc Cogn Affect Neurosci. 2009;4:101–109. doi: 10.1093/scan/nsn044.

- Murata A, Gallese V, Luppino G, Kaseda M, Sakata H. Selectivity for the shape, size, and orientation of objects for grasping in neurons of monkey parietal area AIP. J Neurophysiol. 2000;83:2580–2601. doi: 10.1152/jn.2000.83.5.2580.

- Pereira F, Botvinick M. Information mapping with pattern classifiers: a comparative study. Neuroimage. 2011;56:476–496. doi: 10.1016/j.neuroimage.2010.05.026.

- Pereira F, Mitchell T, Botvinick M. Machine learning classifiers and fMRI: a tutorial overview. Neuroimage. 2009;45:S199–S209. doi: 10.1016/j.neuroimage.2008.11.007.