Abstract

Conventional neuroscientific methods are inadequate for separating the brain responses related to the simultaneous processing of different parts of a natural scene. In the present human electroencephalogram (EEG) study, we overcame this limitation by tagging concurrently presented backgrounds and objects with different presentation frequencies. As a result, background and object elicited distinct steady-state visual evoked potentials (SSVEPs), which were quantified separately in the frequency domain. We analyzed the effects of semantic consistency and inconsistency between background and object on SSVEP amplitudes, topography, and tomography [variable resolution electromagnetic tomography (VARETA)]. SSVEPs related to background processing showed higher amplitudes in the consistent than in the inconsistent condition, whereas object-related SSVEPs showed the reverse pattern. Given the sensitivity of SSVEPs to visual attention, these results indicate that semantic inconsistency draws more attention to the object. When all image parts are semantically related, attention is instead directed more toward the background. The attentional advantage for inconsistent objects in a scene is likely the result of a mismatch between background-based expectations and semantic object information. A clear lateralization of the consistency effect in the anterior temporal lobes indicates functional hemispheric asymmetries in processing background- and object-related semantic information. In summary, the present study is the first to demonstrate that SSVEPs can be used to unravel the respective contributions of concurrent neuronal processes involved in the perception of background and object.

Introduction

Most of our real-life perception involves natural scenes in which one or more objects (e.g., a tree) are embedded in a context (e.g., woods in the background). While object recognition has been widely investigated with various neuroscientific methods (Logothetis and Sheinberg, 1996; Farah and Aguirre, 1999), little is known about the neuronal basis of the interplay between simultaneous background and object identification. This gap stems from the difficulty of segregating brain responses elicited by the background from those elicited by the object: conventional measures [e.g., blood oxygen level-dependent (BOLD) responses, event-related potentials (ERPs)] typically reflect the overlapping brain responses to the whole scene.

In the present study, we separately tracked the neuronal activity elicited by the constituent elements of a scene: background and object. Specifically, we used high-density electroencephalography (EEG) to measure steady-state visual evoked potentials (SSVEPs). The SSVEP is the oscillatory response of the cortex to a flickering stimulus, at the same temporal frequency as the driving stimulus. It provides a continuous measure of cortical activation patterns related to the processing of a frequency-tagged stimulus (Müller and Hillyard, 2000). Importantly, stimuli presented at different driving frequencies (in our case, background and object) evoke unique and separable brain responses (Appelbaum et al., 2006). SSVEPs have been used to investigate fundamental visual processes (Nakayama and Mackeben, 1982), visual attention (Müller et al., 2003, 2006), and object recognition (Kaspar et al., 2010). It has been proposed that the SSVEP originates in the primary visual cortex and propagates to downstream areas within the network responsible for processing task-relevant stimuli (Vialatte et al., 2010). The propagation of SSVEP activity to higher cognitive regions has been demonstrated in two independent studies of working memory (Silberstein et al., 2001; Perlstein et al., 2003).

Building on these findings, the present study investigated how these networks are modulated during the perception of natural scenes. Our approach was designed to drive the “object network” and the “background network” at two different frequencies (8.6 and 12 Hz). These frequencies have been applied successfully in previous studies using multiple-image displays (Morgan et al., 1996; Müller et al., 2003). Moreover, they lie close to 10 Hz, where the SSVEP signal is largest (Herrmann, 2001). To investigate the interplay between background and object, objects were presented either semantically consistent or inconsistent with the background (e.g., a tree in the woods or a tree in the kitchen). We expected specific modulations of the SSVEPs at the respective driving frequencies within the networks associated with object and background processing.

Materials and Methods

Participants

Eighteen participants volunteered for the experiment. Data from two participants were excluded because of excessive EEG artifacts and antidepressant intake. The mean age of the 16 participants contributing data was 21.4 years (range 19–27 years; 3 male; 14 right-handed). The study conformed to the principles of the Declaration of Helsinki.

Stimuli and procedure

Fifty color photographs of backgrounds and 50 photographs of objects served as stimuli. They were taken from personal collections and Photo-Object databases (Hemera Technologies). The square backgrounds subtended a visual angle of 8.3°. In the consistent condition, each object was presented at a central position superimposed on a semantically related background. In the inconsistent condition, the same objects were presented on a semantically unrelated background (Fig. 1a). The 100 different object–background combinations were each presented twice, resulting in 200 trials, which were divided by breaks into four blocks of 50 trials. Semantic consistency was randomized within each block, with consistent and inconsistent trials occurring equally often. Each trial started with a fixation point presented for 500–900 ms, followed by the stimulus (background and object) for 3000 ms. The participants' task was to detect a magenta dot that appeared for 67 ms on 15% of the trials, at a random position within a central area (4.1 × 4.1° around the center). The dot was superimposed on the background/object image and could appear between 100 and 2700 ms after stimulus onset. This detection task was introduced to keep the participants' attention on the scenes. Participants responded as quickly as possible by pressing the space bar.
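The trial structure described above can be sketched as follows. This is a hypothetical Python illustration, not the authors' presentation code. The function name and dictionary keys are invented. The sketch also assumes two things the text does not state: each object–background combination is shown once with the object at 8.6 Hz and once at 12 Hz, and the 30 target trials (15% of 200) are drawn at random from all trials.

```python
import random

# Hypothetical sketch of the trial list (not the authors' code).
# Assumptions: each object-background combination is shown once with the object
# at 8.6 Hz and once at 12 Hz; target trials are drawn at random from all trials.
N_OBJECTS = 50
N_BLOCKS = 4
TARGET_RATE = 0.15


def build_trials(seed=0):
    rng = random.Random(seed)
    by_condition = {True: [], False: []}  # True = consistent, False = inconsistent
    for obj in range(N_OBJECTS):
        for consistent in (True, False):
            # Two presentations per combination, with swapped tagging frequencies.
            for obj_hz, bg_hz in ((8.6, 12.0), (12.0, 8.6)):
                by_condition[consistent].append({
                    "object": obj, "consistent": consistent,
                    "object_hz": obj_hz, "background_hz": bg_hz,
                })
    for pool in by_condition.values():
        rng.shuffle(pool)

    # Equal numbers of consistent and inconsistent trials per block (25 + 25),
    # presented in random order within each block.
    per_block = len(by_condition[True]) // N_BLOCKS
    blocks = []
    for b in range(N_BLOCKS):
        sl = slice(b * per_block, (b + 1) * per_block)
        block = by_condition[True][sl] + by_condition[False][sl]
        rng.shuffle(block)
        blocks.append(block)

    # Timing from the text; 15% of all trials carry a target dot.
    all_trials = [t for block in blocks for t in block]
    n_targets = round(TARGET_RATE * len(all_trials))
    target_ids = set(rng.sample(range(len(all_trials)), n_targets))
    for i, trial in enumerate(all_trials):
        trial["fixation_ms"] = rng.uniform(500, 900)
        trial["stimulus_ms"] = 3000
        if i in target_ids:
            trial["target_onset_ms"] = rng.uniform(100, 2700)
            # Position within the central 4.1 x 4.1 deg area (uniform: an assumption).
            trial["target_xy_deg"] = (rng.uniform(-2.05, 2.05), rng.uniform(-2.05, 2.05))
        else:
            trial["target_onset_ms"] = None
    return blocks
```

Calling `build_trials(seed=1)` returns four lists of 50 trial dictionaries. Fixing the seed makes the randomized order reproducible.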

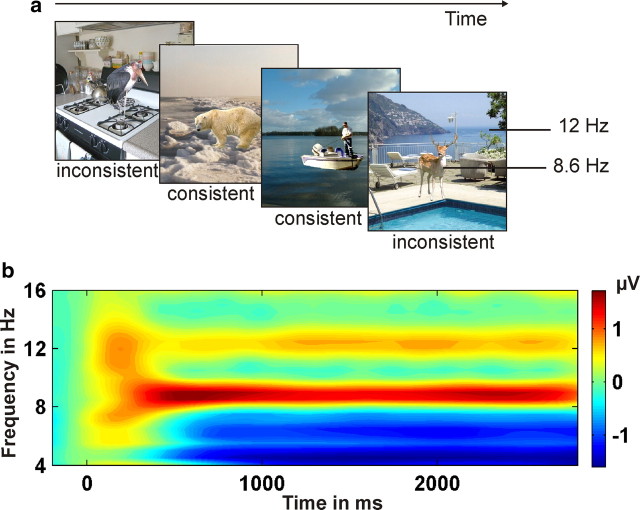

Figure 1.

a, Examples of consistent and inconsistent background–object pairings. The background was presented at a different frequency (e.g., 12 Hz) from the object (e.g., 8.6 Hz). b, Time-frequency (TF) plot averaged across all conditions and occipital electrodes. Clear SSVEPs at 8.6 and 12 Hz are visible.

To elicit distinct SSVEPs, objects were flickered at 8.6 Hz and backgrounds at 12 Hz, or vice versa. Stimulus- and frequency-specific effects were controlled by counterbalancing both factors across conditions. The Fourier amplitude and phase spectra, as well as the luminance, did not differ significantly between consistent and inconsistent background–object pairings.
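Flicker frequencies on a computer display are usually realized as whole numbers of video frames per cycle. The refresh rate is not reported here. The sketch below therefore assumes a 60 Hz display, at which 12 Hz corresponds to 5 frames per cycle and 8.6 Hz is approximated by 7 frames (60/7 ≈ 8.57 Hz). At that rate, the 67 ms target would last 4 frames; this is consistent with, but does not establish, a 60 Hz display. The square-wave on/off schedule and the function names are illustrative assumptions.

```python
# Hypothetical sketch: frame-based square-wave flicker.
# Assumption: a 60 Hz display (the refresh rate is not reported).
REFRESH_HZ = 60


def frames_per_cycle(target_hz, refresh_hz=REFRESH_HZ):
    """Nearest whole number of video frames per flicker cycle."""
    return round(refresh_hz / target_hz)


def achieved_hz(target_hz, refresh_hz=REFRESH_HZ):
    """Flicker frequency actually produced by the frame-based approximation."""
    return refresh_hz / frames_per_cycle(target_hz, refresh_hz)


def on_off_schedule(target_hz, duration_s, refresh_hz=REFRESH_HZ):
    """Per-frame visibility (True = drawn); odd cycles give the extra frame to 'on'."""
    n = frames_per_cycle(target_hz, refresh_hz)
    on_frames = (n + 1) // 2
    total_frames = round(duration_s * refresh_hz)
    return [(frame % n) < on_frames for frame in range(total_frames)]


for hz in (8.6, 12.0):
    print(hz, frames_per_cycle(hz), round(achieved_hz(hz), 3))
# 8.6 7 8.571
# 12.0 5 12.0
```

Over the 3000 ms stimulus, `on_off_schedule(12, 3.0)` yields 180 frames, or 36 complete 12 Hz cycles.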

Electrophysiological recording

EEG was recorded from 128 electrodes with a BioSemi ActiveTwo amplifier system (sampling rate, 512 Hz). Eye movements and blinks were monitored with vertical and horizontal electro-oculograms (EOG). The EEG was segmented into epochs from −500 to 3000 ms relative to stimulus onset (baseline −200 to 0 ms). It was then artifact-corrected by means of “statistical control of artifacts in dense array studies” (SCADS; Junghöfer et al., 2000). Epochs with excessive eye movements or blinks were discarded, as were epochs with >20 artifact-contaminated channels. On average, ∼20% of the epochs were rejected. Finally, the data were re-referenced to the average of all electrodes. Target trials (those in which the magenta dot was present) were excluded from further EEG analyses.
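The epoching, baseline correction, rejection rule, and average re-referencing described above are sketched below with plain Python lists (channels × samples). This is a hypothetical illustration, and the function names are invented. SCADS itself and the EOG-based rejection are not reimplemented; the per-channel artifact flags are assumed to come from an earlier step.

```python
# Hypothetical sketch of the preprocessing steps (not the authors' pipeline).
# Data layout: a list of channels, each a list of samples at 512 Hz.
# Assumption: per-channel artifact flags come from an earlier step (e.g., SCADS),
# which is not reimplemented here.
FS = 512


def ms_to_samples(ms, fs=FS):
    return round(ms * fs / 1000)


def extract_epoch(continuous, onset, start_ms=-500, end_ms=3000):
    """Cut one epoch (-500 to 3000 ms) around the sample index of stimulus onset."""
    a = onset + ms_to_samples(start_ms)
    b = onset + ms_to_samples(end_ms)
    return [ch[a:b] for ch in continuous]


def baseline_correct(epoch, start_ms=-500, baseline_ms=(-200, 0)):
    """Subtract each channel's mean over the -200 to 0 ms baseline."""
    i0 = ms_to_samples(baseline_ms[0] - start_ms)
    i1 = ms_to_samples(baseline_ms[1] - start_ms)
    corrected = []
    for ch in epoch:
        mean = sum(ch[i0:i1]) / (i1 - i0)
        corrected.append([v - mean for v in ch])
    return corrected


def keep_epoch(bad_channel_flags, max_bad=20):
    """Reject epochs with more than 20 artifact-contaminated channels."""
    return sum(bad_channel_flags) <= max_bad


def average_reference(epoch):
    """Re-reference each sample to the mean across all channels."""
    n_ch = len(epoch)
    means = [sum(epoch[c][t] for c in range(n_ch)) / n_ch for t in range(len(epoch[0]))]
    return [[v - m for v, m in zip(ch, means)] for ch in epoch]
```

At 512 Hz, each −500 to 3000 ms epoch spans 1792 samples, and the baseline occupies samples 154 to 255 of the epoch.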

Data analysis

Behavioral data.

The detection error rate for targets (i.e., missed magenta dots) was measured to verify that participants attended to the pictures. The effect of semantic consistency on the detection error rate was tested with a paired t test comparing consistent and inconsistent stimuli.
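The paired comparison amounts to a one-sample t test on the within-participant differences. The minimal sketch below uses only the Python standard library; the function name is illustrative, and the analysis in the study was run in MATLAB.

```python
import math
import statistics


def paired_t(x, y):
    """Paired-samples t statistic and degrees of freedom.

    x[i] and y[i] are the same participant's values in the two conditions
    (e.g., detection error rates for consistent and inconsistent scenes).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long samples with at least 2 pairs")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    se = statistics.stdev(diffs) / math.sqrt(n)  # stdev uses the n - 1 denominator
    return statistics.mean(diffs) / se, n - 1
```

With 16 participants the statistic has 15 degrees of freedom, matching the t(15) reported in the Results. The two-tailed p-value follows from the Student t distribution with n − 1 degrees of freedom. If SciPy is available, `scipy.stats.ttest_rel` returns both the statistic and the p-value.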

SSVEPs in electrode space.

To determine the time-varying magnitude of the SSVEP at 8.6 and 12 Hz, the event-related response was spectrally decomposed by means of Morlet wavelet analysis (Bertrand and Pantev, 1994). To confirm that the responses were specific to the applied driving frequencies, spectral decompositions were calculated for a family of wavelets ranging from 1 to 30 Hz (∼12 cycles per wavelet). This method yields a time × frequency (TF) representation (Fig. 1b; spectral bandwidth 1.4 Hz at 8.6 Hz and 2 Hz at 12 Hz). For further analyses, the decompositions obtained with the 8.6 and 12 Hz wavelets were used. Because spectral amplitudes generally decrease with frequency, SSVEPs were normalized to a mean of 0 and an SD of 1 (across time samples and conditions). This normalization was done separately for each participant, electrode, and frequency. The final dataset consisted of normalized SSVEP amplitudes subdivided according to the factors IMAGE CONTENT (background vs object) and semantic CONSISTENCY (consistent vs inconsistent). The 128 recording sites were grouped into six regions (Fig. 2, upper left). Statistical analyses were based on the average amplitude within each region in a latency range from 800 to 2300 ms after stimulus onset. This time window was chosen to avoid overlap between SSVEPs and conventional ERPs (see Müller et al., 2003, and Keil et al., 2003, for a similar approach).
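The amplitude estimation, normalization, and window averaging can be sketched as follows. The sketch assumes the common complex Morlet form w(t, f) = A·exp(−t²/2σ_t²)·exp(2iπft), with A = (σ_t√π)^(−1/2) and σ_t = 1/(2πσ_f). It reads the “∼12 cycles” as f/σ_f = 12 and the quoted bandwidth as 2σ_f; this reading reproduces the stated 1.4 Hz (2 × 8.6/12 ≈ 1.43) and 2 Hz values. The ±3σ_t truncation and all function names are illustrative choices, not taken from the source.

```python
import cmath
import math
import statistics

FS = 512        # sampling rate (Hz) of the recording
N_CYCLES = 12   # read here as f / sigma_f = 12 (assumption about the "~12 cycles")


def morlet_params(freq, n_cycles=N_CYCLES):
    """Spectral (sigma_f) and temporal (sigma_t) SD of the Gaussian envelope."""
    sigma_f = freq / n_cycles
    sigma_t = 1.0 / (2 * math.pi * sigma_f)
    return sigma_f, sigma_t


def morlet_kernel(freq, fs=FS, n_cycles=N_CYCLES):
    """Complex Morlet wavelet sampled at fs and truncated at +/- 3 sigma_t."""
    _, sigma_t = morlet_params(freq, n_cycles)
    a = (sigma_t * math.sqrt(math.pi)) ** -0.5
    half = int(3 * sigma_t * fs)
    return [a * math.exp(-(k / fs) ** 2 / (2 * sigma_t ** 2))
            * cmath.exp(2j * math.pi * freq * k / fs)
            for k in range(-half, half + 1)]


def amplitude_at(signal, kernel, idx, fs=FS):
    """|(signal * wavelet)(idx)|, i.e., the wavelet amplitude at one sample."""
    half = len(kernel) // 2
    acc = 0j
    for m, w in enumerate(kernel):
        j = idx - (m - half)  # convolution: signal[idx - k] * w[k]
        if 0 <= j < len(signal):
            acc += signal[j] * w
    return abs(acc) / fs


def zscore_across(conditions):
    """Normalize to mean 0, SD 1 jointly across time samples and conditions."""
    pooled = [v for series in conditions.values() for v in series]
    mu, sd = statistics.mean(pooled), statistics.pstdev(pooled)
    return {name: [(v - mu) / sd for v in series] for name, series in conditions.items()}


def window_mean(series, start_ms=800, end_ms=2300, epoch_start_ms=-500, fs=FS):
    """Mean of a per-sample series within the 800-2300 ms analysis window."""
    i0 = round((start_ms - epoch_start_ms) * fs / 1000)
    i1 = round((end_ms - epoch_start_ms) * fs / 1000)
    return statistics.mean(series[i0:i1])
```

With a pure 8.6 Hz test signal, the 8.6 Hz wavelet returns a far larger amplitude than the 12 Hz wavelet. This illustrates why two tagging frequencies 3.4 Hz apart can be read out separately at these bandwidths.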

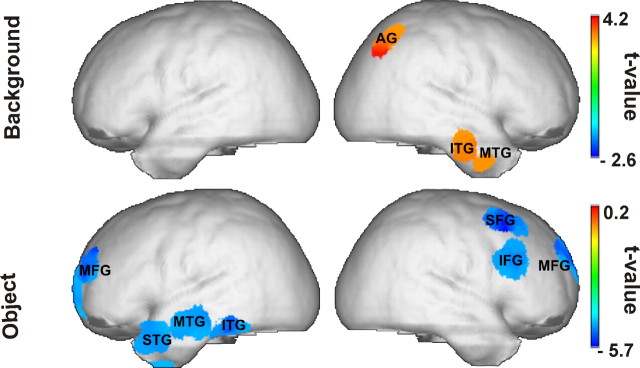

Figure 2.

The normalized background-related (left) and object-related (right) SSVEPs (800–2300 ms). Top, Topographical distributions averaged across consistent and inconsistent stimuli. Regions for statistical analyses are depicted in the left topography (F: frontal, C: central, TO: temporo-occipital). Middle, Difference topographies (consistent minus inconsistent). Bottom, Background- and object-related normalized SSVEPs elicited by consistent and inconsistent stimuli at the right central region (left) and at the bilateral frontal region (right).

Next, a repeated-measures ANOVA was performed with the factors REGION (six levels), IMAGE CONTENT, and CONSISTENCY. Subsequently, two-way ANOVAs (REGION × CONSISTENCY) were calculated separately for each IMAGE CONTENT to circumvent the confounding influence of stimulus size (background > object). Finally, one-way ANOVAs for the factor CONSISTENCY were performed for each region to characterize the topographical distribution of this effect.
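The follow-up one-way ANOVAs can be sketched with the textbook partition of sums of squares for a single within-subject factor. This is a minimal illustration in plain Python, not the MATLAB code used in the study, and it omits any sphericity correction. With 16 participants, a six-level factor such as REGION yields F(5,75). A two-level factor such as CONSISTENCY yields F(1,15), for which F equals the square of the paired t statistic.

```python
def rm_anova_oneway(data):
    """One-way repeated-measures ANOVA.

    data: one list per participant, holding that participant's value at each
    level of the within-subject factor. Returns (F, df_effect, df_error).
    """
    n, k = len(data), len(data[0])
    if any(len(row) != k for row in data):
        raise ValueError("every participant needs a value for every level")
    grand = sum(sum(row) for row in data) / (n * k)
    level_means = [sum(row[j] for row in data) / n for j in range(k)]
    subject_means = [sum(row) / k for row in data]

    ss_effect = n * sum((m - grand) ** 2 for m in level_means)
    ss_subjects = k * sum((m - grand) ** 2 for m in subject_means)
    ss_total = sum((v - grand) ** 2 for row in data for v in row)
    ss_error = ss_total - ss_effect - ss_subjects  # subject x level residual

    df_effect, df_error = k - 1, (k - 1) * (n - 1)
    F = (ss_effect / df_effect) / (ss_error / df_error)
    return F, df_effect, df_error
```

For two levels, `rm_anova_oneway([[1, 0], [2, 0], [3, 0], [4, 0]])` gives F = 15 with (1, 3) degrees of freedom, the square of the paired t of √15 for the same data.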

We plotted spherical spline-interpolated topographical distributions of the consistency effect in the time window from 800 to 2300 ms. These show the difference in activity between the consistent and inconsistent conditions for the background- and object-related SSVEPs, respectively. Topographies were generated with EEGLAB (Delorme and Makeig, 2004). All data and statistical analyses were performed in MATLAB (The MathWorks).

SSVEPs in source space.

To localize the cortical generators of the consistency effect, we applied variable resolution electromagnetic tomography (VARETA) (Bosch-Bayard et al., 2001) separately to the background- and the object-related SSVEP. This procedure provides the spatially smoothest intracranial distribution of current densities in source space that is most compatible with the amplitude distribution in electrode space (Gruber et al., 2006). The inverse solution consisted of 3244 grid points (“voxels”) of a 3D grid (7 mm grid spacing). This grid and the arrangement of the 128 electrodes were registered to the average probabilistic MRI brain atlas (“average brain”) produced by the Montreal Neurological Institute (MNI) (Evans et al., 1993). To localize the activation difference between consistent and inconsistent stimuli separately for the background and the object signal, voxelwise paired t tests were performed. Activation thresholds were corrected for multiple comparisons across voxels by means of the false discovery rate (Benjamini and Hochberg, 1995). The thresholds for all statistical parametric maps were set at a significance level of p < 0.001 for the object signal and p < 0.005 for the background signal. Different thresholds were chosen because inconsistency resulted in stronger and more extended activations than consistency. Object-related signals showed higher activations in the inconsistent than in the consistent condition, whereas background-related signals showed the reverse.

Finally, the significant voxels were projected to the cortical surface constructed on the basis of the MNI average brain.
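The false discovery rate correction can be illustrated with the standard Benjamini–Hochberg step-up rule. The source does not specify how the FDR procedure was combined with the reported p < 0.001 and p < 0.005 thresholds. The sketch below therefore shows only how an FDR-based p-value cut-off is obtained from a set of voxelwise p-values; the function names and the q value are illustrative. The p-values are sorted, the largest rank k with p_(k) ≤ (k/m)·q is found, and every test up to that p-value is declared significant. The original procedure's guarantee assumes independent or positively dependent tests.

```python
def bh_threshold(p_values, q=0.05):
    """Benjamini-Hochberg step-up rule.

    Returns the largest sorted p-value p_(k) satisfying p_(k) <= (k / m) * q,
    or None if no test survives. All tests with p <= the returned value are
    declared significant at false discovery rate q.
    """
    m = len(p_values)
    threshold = None
    for k, p in enumerate(sorted(p_values), start=1):
        if p <= k / m * q:
            threshold = p  # step-up: keep the largest rank that passes
    return threshold


def significant(p_values, q=0.05):
    """Boolean mask of tests surviving the Benjamini-Hochberg threshold."""
    t = bh_threshold(p_values, q)
    return [t is not None and p <= t for p in p_values]
```

For example, `significant([0.045, 0.01, 0.04])` marks all three tests as significant. The value 0.04 fails its own rank-2 criterion (0.04 > 0.033), but the step-up rule is anchored at the largest passing rank, and rank 3 passes (0.045 ≤ 0.05).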

Results

Behavioral data

Overall, only 4.8% of the target dots were missed, with no significant difference between the consistent and inconsistent conditions (t(15) < 1.7). This suggests that participants attended equally well to the stimuli in both conditions.

SSVEPs in electrode space

The TF plot in Figure 1b demonstrates that the frequency-tagged stimuli elicited two distinct SSVEPs with peaks at 8.6 and 12 Hz, respectively. Furthermore, the topographical distributions were characterized by an occipital maximum (Fig. 2, top), which was more widespread for the background-related SSVEP than for the object-related SSVEP.

Differences in the topographical distributions between consistent and inconsistent images are depicted in Figure 2 (middle row), separately for the SSVEPs to backgrounds and objects. As the figure illustrates, there was a significant interaction between IMAGE CONTENT and CONSISTENCY (F(1,15) = 12.3, p < 0.003). This interaction reflected a reversed effect of consistency on the amplitude of the background-related SSVEP compared with that of the object-related SSVEP. Moreover, the factor REGION interacted with CONSISTENCY (F(5,75) = 2.9, p < 0.016) and showed a trend toward an interaction with IMAGE CONTENT (F(5,75) = 2.2, p < 0.064). Further analyses were performed separately for the background- and the object-related SSVEP. Semantic consistency significantly modulated the background-related SSVEP amplitude over right central and left temporo-occipital regions (REGION × CONSISTENCY: F(5,75) = 2.9, p < 0.017; CONSISTENCY at right central electrodes: F(1,15) = 15.2, p < 0.001; CONSISTENCY at left temporo-occipital electrodes: F(1,15) = 7.7, p < 0.014). Over these regions, consistent background images elicited stronger SSVEPs than inconsistent background images (Fig. 2, left middle and bottom).

The object-related SSVEP was significantly modulated by CONSISTENCY (F(1,15) = 6.5, p < 0.023) and by REGION (F(5,75) = 2.5, p < 0.035). The interaction of both factors did not reach statistical significance (F < 1). In contrast to the consistency effect on the background signal, the SSVEP amplitude to consistent embedded objects was smaller than that to inconsistent objects. The largest, marginally significant differences were at frontal regions (left frontal: F(1,15) = 3.8, p < 0.071; right frontal: F(1,15) = 4.4, p < 0.053; Fig. 2, right middle and bottom panels).

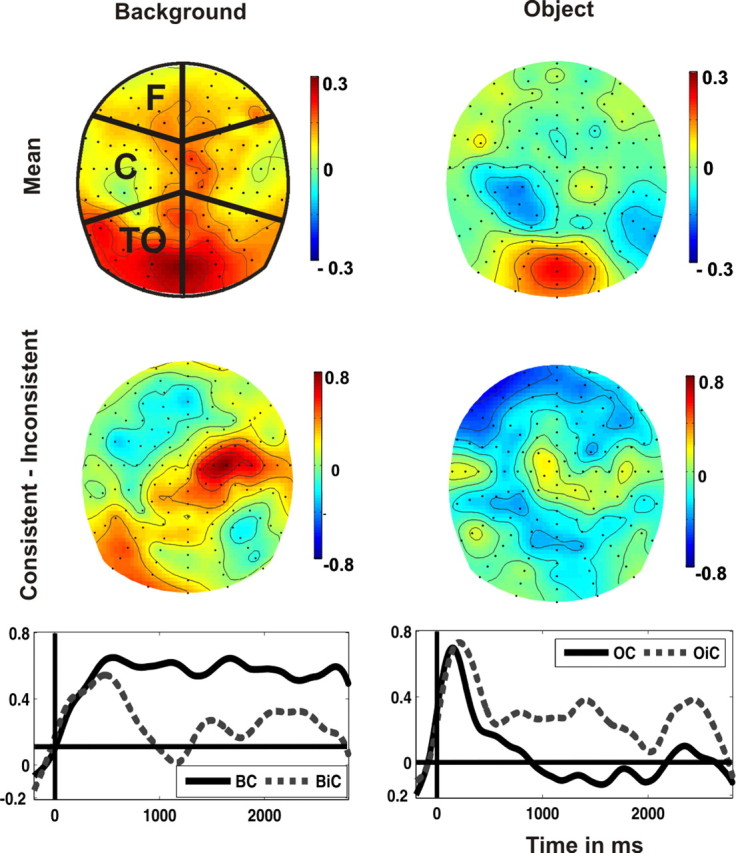

SSVEPs in source space

Background SSVEPs related to consistent as compared to inconsistent scenes were accompanied by significantly higher current densities in right middle, inferior-temporal, and angular gyri (Fig. 3). In contrast, the SSVEP consistency effect related to objects was localized to left temporal and bilateral frontal generators. In this case, we found higher current densities for inconsistent as compared to consistent objects (Fig. 3). A complete list of activated cortical areas and the respective MNI coordinates of their local maxima is given in Table 1.

Figure 3.

Statistically significant SSVEP sources (consistent minus inconsistent) for the background (top) and for the object (bottom) panels.

Table 1.

Significant (*p < 0.005, **p < 0.001) sources of the consistency effect (consistent − inconsistent) and the MNI coordinates of the source maximum

| Signal | Brain region | x | y | z |
|---|---|---|---|---|
| Background (consistent > inconsistent) | Right middle temporal gyrus* | 57 | −11 | −26 |
| | Right inferior temporal gyrus* | 58 | −17 | −24 |
| | Right angular gyrus** | 36 | −61 | 42 |
| Object (inconsistent > consistent) | Right superior frontal gyrus** | 12 | 61 | 27 |
| | Right middle frontal gyrus** | 41 | 17 | 55 |
| | Right inferior frontal gyrus** | 50 | 24 | 27 |
| | Left middle frontal gyrus** | −21 | 55 | 19 |
| | Left superior temporal gyrus** | −50 | 16 | −28 |
| | Left middle temporal gyrus** | −64 | −15 | −17 |
| | Left inferior temporal gyrus** | −57 | −40 | −24 |

For background-related SSVEP, significant brain regions showed stronger activity in the consistent than in the inconsistent condition, while for the object-related SSVEP, significant brain regions showed stronger activity in the inconsistent than in the consistent condition.
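The temporal-lobe lateralization discussed below can be read directly from the x coordinates in Table 1. The following sketch is an illustrative check, not part of the original analysis. It transcribes the table and groups the source maxima by hemisphere, using the MNI convention that negative x denotes the left hemisphere. The function names are invented for this example.

```python
# Source maxima transcribed from Table 1 (MNI coordinates in mm).
TABLE_1 = [
    ("background", "Right middle temporal gyrus", 57, -11, -26),
    ("background", "Right inferior temporal gyrus", 58, -17, -24),
    ("background", "Right angular gyrus", 36, -61, 42),
    ("object", "Right superior frontal gyrus", 12, 61, 27),
    ("object", "Right middle frontal gyrus", 41, 17, 55),
    ("object", "Right inferior frontal gyrus", 50, 24, 27),
    ("object", "Left middle frontal gyrus", -21, 55, 19),
    ("object", "Left superior temporal gyrus", -50, 16, -28),
    ("object", "Left middle temporal gyrus", -64, -15, -17),
    ("object", "Left inferior temporal gyrus", -57, -40, -24),
]


def hemisphere(x):
    """MNI convention: negative x is left, positive x is right."""
    if x < 0:
        return "left"
    if x > 0:
        return "right"
    return "midline"


def tally(table, keyword=None):
    """Count source maxima per (signal, hemisphere), optionally filtering region names."""
    counts = {}
    for signal, region, x, _y, _z in table:
        if keyword is not None and keyword not in region:
            continue
        key = (signal, hemisphere(x))
        counts[key] = counts.get(key, 0) + 1
    return counts


print(tally(TABLE_1, "temporal"))
# {('background', 'right'): 2, ('object', 'left'): 3}
```

Restricting the tally to temporal sources isolates the pattern at issue: both background temporal maxima lie in the right hemisphere, and all three object temporal maxima lie in the left.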

Discussion

The present study investigated the perception of natural scenes formed by combining a background and an object image. The object was either semantically consistent or inconsistent with the background. The brain responses to background and object were tracked simultaneously by means of frequency-tagged SSVEPs. Most importantly, we demonstrated that this approach successfully separated the brain responses to background and object processing. SSVEP topographies and tomographies were significantly modulated by semantic consistency and image content. Below, we discuss these physiological changes in light of psychological constructs.

The most prominent finding was a double dissociation of cortical activity linked to image content (i.e., object or background) and semantic consistency. SSVEPs related to background processing showed higher amplitudes in the consistent than in the inconsistent condition. This effect was driven by higher activation of the cortical generators of the background-related SSVEP in the consistent condition. In contrast, the sources of the object-related SSVEP were more active in the inconsistent condition. In sensor space, this effect was mirrored by higher object-related SSVEP amplitudes for inconsistent than for consistent background–object pairings. Numerous studies on attention show that SSVEP amplitudes increase for attended as compared with ignored stimuli (e.g., Morgan et al., 1996; Müller et al., 2003). The observed amplitude effect might therefore reflect the allocation of visual selective attention to the background when background and object were semantically related and the object was hence perceived as integrated with the background. In contrast, when the object deviated semantically from the background context, it drew attention toward itself. This explanation is consistent with eye-tracking studies reporting that objects that are semantically inconsistent with the global scene context attract more attention than semantically consistent objects (Henderson and Hollingworth, 1999; Võ and Henderson, 2009). Our SSVEP source reconstructions support attentional mechanisms as the basis of the observed effects. In particular, the right inferior and middle frontal areas, which were activated in the inconsistent condition, have been associated with attentional reorienting to previously unattended stimuli (Corbetta and Shulman, 2002). In other words, when a scene is semantically inconsistent, attention might be reoriented from the background to the misplaced object.

Note that our results do not imply that attentional selection begins only 800 ms after stimulus onset. Many studies indicate that attentional selection takes place at early stages of the processing hierarchy (Hillyard and Anllo-Vento, 1998). Because early SSVEP activity overlaps with conventional ERPs, we did not analyze these early time windows. It remains for future studies to determine to what extent SSVEP modulations reflect a downstream consequence of early attentional selection. An alternative, though unlikely, explanation for the SSVEP amplitude modulation is that participants made more exploratory eye movements in the consistent than in the inconsistent condition. However, trials contaminated with EOG artifacts had been removed before data analysis, and behavioral results did not differ between the two conditions.

Another important observation was the lateralization of neuronal activity in the temporal cortex. The consistency effect for the object-related SSVEP was located in left temporal regions, whereas that of the background-related SSVEP was located in right temporal regions. Various studies have reported functional hemispheric asymmetries in processing the global and local features of incoming sensory information (Fink et al., 1997; Yamaguchi et al., 2000; Weissman and Woldorff, 2005; Flevaris et al., 2010). In a recent study, Studer and Hübner (2008) presented pictures of living objects that had to be identified on the basis of either their global shape or their local texture. They found a right-hemispheric processing advantage for global shape and a left-hemispheric advantage for local features. These findings resemble those from studies using hierarchical letters in so-called “Navon tasks” (Navon, 1981). They are also in line with the lateralization observed here: the background consistency effect (i.e., the global image aspect) mainly activated right temporal regions, whereas the object consistency effect (i.e., the local image aspect) mainly activated left temporal regions. Previous imaging studies reported hemispheric asymmetries in temporoparietal (Yamaguchi et al., 2000; Weissman and Woldorff, 2005) or occipital (Fink et al., 1997) regions rather than in anterior temporal areas. However, those studies used simple hierarchical letters, whereas we used complex images. To our knowledge, such global/local effects have not previously been demonstrated with complex stimuli at a semantic-processing stage.

The localization of the present SSVEP effects is in general agreement with various studies ascribing object processing to temporal areas (e.g., Chao et al., 1999). Moreover, the ventral temporal lobe in both hemispheres seems to be part of a network that stores semantic representations of objects (Martin et al., 1996; Chao et al., 1999). Additionally, the N400 ERP component has been found to originate in the ventral temporal lobes (McCarthy et al., 1995; Nobre and McCarthy, 1995). This component is elicited by semantic inconsistency and likely reflects the activation of semantic representations.

These findings are in line with a model of semantic processing proposed by Jung-Beeman (2005), according to which initial access to semantic representations is localized in the posterior parts of the middle temporal gyri. When semantic processing becomes more complex, however, anterior regions of the middle and superior temporal gyri become activated. These areas are assumed to elaborate the semantic input and to refine higher-order semantic relations. In this so-called “semantic integration process,” Jung-Beeman posits functional differences between the hemispheres that resemble the global/local processing asymmetries discussed above. Both hemispheres are involved in each stage of semantic processing. However, the left hemisphere seems to be more sensitive to local, fine-grained semantic information (e.g., specific objects), whereas the right hemisphere maintains the broader, “coarse” meaning of semantic activations and integrates concepts (e.g., the background context).

Taking into account the findings from our study and from research on global/local, object, and semantic representations, we propose the following processes underlying the perception of scenes with a consistent or inconsistent embedded object. When the object and background form a semantically coherent scene, attention is focused more on the background than on the object, and the scene is semantically coded. The neuronal correlate of this process could be the present right anterior-temporal activations. When the object and background form a semantically incoherent scene, a selection between two semantically competing concepts is required. This process is associated with the activated right middle and inferior frontal attentional network. The conflict seems to be resolved by reevaluating the unexpected object (Jung-Beeman, 2005). The larger activity of the left superior, middle, and inferior temporal gyri in the inconsistent condition might indicate the activation of object-specific perceptual and semantic representations. Note that this scenario implies that expectations are elicited by the background (top-down) and that these expectations influence the salience of objects (bottom-up) (Corbetta and Shulman, 2002). Several findings indicate that scene perception is based on two processes. The first is an ultrafast bottom-up scene categorization (so-called “gist” perception), which occurs in parallel to object processing (e.g., Oliva and Torralba, 2001; Mack and Palmeri, 2010). The second is a top-down process that guides attention to relevant image parts (Torralba et al., 2006). Our SSVEP results likely reflect the latter process. Further investigations should address the temporal architecture of background and object processing. We demonstrated that SSVEPs can be used to unravel the contributions of simultaneous neuronal processes involved in the perception of scenes. Future studies should combine experimental designs that allow the analysis of SSVEPs and of markers of ultrafast categorization processes (Müller and Hillyard, 2000; Oppermann et al., 2011).

Footnotes

We thank Steve Hillyard and two anonymous reviewers for their helpful comments on a previous version of this manuscript.

References

- Appelbaum LG, Wade AR, Vildavski VY, Pettet MW, Norcia AM. Cue-invariant networks for figure and background processing in human visual cortex. J Neurosci. 2006;26:11695–11708. doi: 10.1523/JNEUROSCI.2741-06.2006.

- Benjamini Y, Hochberg Y. Controlling the false discovery rate: a practical and powerful approach to multiple testing. J R Stat Soc Series B Stat Methodol. 1995;57:289–300.

- Bertrand O, Pantev C. Stimulus frequency dependence of the transient oscillatory auditory evoked response (40 Hz) studied by electric and magnetic recordings in human. In: Pantev C, Elbert T, Lütkenhöner B, editors. Oscillatory event-related brain dynamics. New York: Plenum; 1994. pp. 231–242.

- Bosch-Bayard J, Valdés-Sosa P, Virues-Alba T, Aubert-Vázquez E, John ER, Harmony T, Riera-Díaz J, Trujillo-Barreto N. 3D statistical parametric mapping of variable resolution electromagnetic tomography (VARETA). Clin Electroencephalogr. 2001;32:47–61. doi: 10.1177/155005940103200203.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Delorme A, Makeig S. EEGLAB: an open source toolbox for analysis of single-trial EEG dynamics including independent component analysis. J Neurosci Methods. 2004;134:9–21. doi: 10.1016/j.jneumeth.2003.10.009.

- Evans AC, Collins DL, Mills SR, Brown ED, Kelly RL, Peters TM. 3D statistical neuroanatomical models from 305 MRI volumes. Proc IEEE Nuclear Science Symposium and Medical Imaging Conference; 1993. pp. 1813–1817.

- Farah MJ, Aguirre GK. Imaging visual recognition: PET and fMRI studies of the functional anatomy of human visual recognition. Trends Cogn Sci. 1999;3:179–186. doi: 10.1016/s1364-6613(99)01309-1.

- Fink GR, Halligan PW, Marshall JC, Frith CD, Frackowiak RS, Dolan RJ. Neural mechanisms involved in the processing of global and local aspects of hierarchically organized visual stimuli. Brain. 1997;120:1779–1791. doi: 10.1093/brain/120.10.1779.

- Flevaris AV, Bentin S, Robertson LC. Local or global? Attentional selection of spatial frequencies binds shapes to hierarchical levels. Psychol Sci. 2010;21:424–431. doi: 10.1177/0956797609359909.

- Gruber T, Trujillo-Barreto NJ, Giabbiconi CM, Valdés-Sosa PA, Müller MM. Brain electrical tomography (BET) analysis of induced gamma band responses during a simple object recognition task. Neuroimage. 2006;29:888–900. doi: 10.1016/j.neuroimage.2005.09.004.

- Henderson JM, Hollingworth A. High-level scene perception. Annu Rev Psychol. 1999;50:243–271. doi: 10.1146/annurev.psych.50.1.243.

- Herrmann CS. Human EEG responses to 1–100 Hz flicker: resonance phenomena in visual cortex and their potential correlation to cognitive phenomena. Exp Brain Res. 2001;137:346–353. doi: 10.1007/s002210100682.

- Hillyard SA, Anllo-Vento L. Event-related brain potentials in the study of visual selective attention. Proc Natl Acad Sci U S A. 1998;95:781–787. doi: 10.1073/pnas.95.3.781.

- Jung-Beeman M. Bilateral brain processes for comprehending natural language. Trends Cogn Sci. 2005;9:512–518. doi: 10.1016/j.tics.2005.09.009.

- Junghöfer M, Elbert T, Tucker DM, Rockstroh B. Statistical control of artifacts in dense array EEG/MEG studies. Psychophysiology. 2000;37:523–532.

- Kaspar K, Hassler U, Martens U, Trujillo-Barreto N, Gruber T. Steady-state visually evoked potential correlates of object recognition. Brain Res. 2010;1343:112–121. doi: 10.1016/j.brainres.2010.04.072.

- Keil A, Gruber T, Müller MM, Moratti S, Stolarova M, Bradley MM, Lang PJ. Early modulation of visual perception by emotional arousal: Evidence from steady-state visual evoked brain potentials. Cogn Affect Behav Neurosci. 2003;3:195–206. doi: 10.3758/cabn.3.3.195.

- Logothetis NK, Sheinberg DL. Visual object recognition. Annu Rev Neurosci. 1996;19:577–621. doi: 10.1146/annurev.ne.19.030196.003045.

- Mack ML, Palmeri TJ. Modeling categorization of scenes containing consistent versus inconsistent objects. J Vis. 2010;10:11.1–11.11. doi: 10.1167/10.3.11.

- Martin A, Wiggs CL, Ungerleider LG, Haxby JV. Neural correlates of category-specific knowledge. Nature. 1996;379:649–652. doi: 10.1038/379649a0.

- McCarthy G, Nobre AC, Bentin S, Spencer DD. Language-related field potentials in the anterior-medial temporal lobe: I. Intracranial distribution and neural generators. J Neurosci. 1995;15:1080–1089. doi: 10.1523/JNEUROSCI.15-02-01080.1995.

- Morgan ST, Hansen JC, Hillyard SA. Selective attention to stimulus location modulates the steady-state visual evoked potential. Proc Natl Acad Sci U S A. 1996;93:4770–4774. doi: 10.1073/pnas.93.10.4770.

- Müller MM, Hillyard SA. Concurrent recording of steady-state and transient event-related potentials as indices of visual-spatial selective attention. Clin Neurophysiol. 2000;111:1544–1552. doi: 10.1016/s1388-2457(00)00371-0.

- Müller MM, Malinowski P, Gruber T, Hillyard SA. Sustained division of the attentional spotlight. Nature. 2003;424:309–312. doi: 10.1038/nature01812.

- Müller MM, Andersen S, Trujillo NJ, Valdés-Sosa P, Malinowski P, Hillyard SA. Feature-selective attention enhances color signals in early visual areas of the human brain. Proc Natl Acad Sci U S A. 2006;103:14250–14254. doi: 10.1073/pnas.0606668103.

- Nakayama K, Mackeben M. Steady state visual evoked potentials in the alert primate. Vision Res. 1982;22:1261–1271. doi: 10.1016/0042-6989(82)90138-9.

- Navon D. The forest revisited: more on global precedence. Psychol Res. 1981;43:1–32.

- Nobre AC, McCarthy G. Language-related field potentials in the anterior-medial temporal lobe: II. Effects of word type and semantic priming. J Neurosci. 1995;15:1090–1098. doi: 10.1523/JNEUROSCI.15-02-01090.1995.

- Oliva A, Torralba A. Modeling the shape of the scene: a holistic representation of the spatial envelope. Int J Comput Vis. 2001;42:145–175.

- Oppermann F, Haßler U, Jescheniak JD, Gruber T. The rapid extraction of gist – early neural correlates of high-level visual processing. J Cogn Neurosci. 2011. doi: 10.1162/jocn_a_00100.

- Perlstein WM, Cole MA, Larson M, Kelly K, Seignourel P, Keil A. Steady-state visual evoked potentials reveal frontally-mediated working memory activity in humans. Neurosci Lett. 2003;342:191–195. doi: 10.1016/s0304-3940(03)00226-x.

- Silberstein RB, Nunez PL, Pipingas A, Harris P, Danieli F. Steady state visually evoked potential (SSVEP) topography in a graded working memory task. Int J Psychophysiol. 2001;42:219–232. doi: 10.1016/s0167-8760(01)00167-2.

- Studer T, Hübner R. The direction of hemispheric asymmetries for object categorization at different levels of abstraction depends on the task. Brain Cogn. 2008;67:197–211. doi: 10.1016/j.bandc.2008.01.003.

- Torralba A, Oliva A, Castelhano MS, Henderson JM. Contextual guidance of eye movements and attention in real-world scenes: the role of global features in object search. Psychol Rev. 2006;113:766–786. doi: 10.1037/0033-295X.113.4.766.

- Vialatte FB, Maurice M, Dauwels J, Cichocki A. Steady-state visually evoked potentials: focus on essential paradigms and future perspectives. Prog Neurobiol. 2010;90:418–438. doi: 10.1016/j.pneurobio.2009.11.005.

- Võ ML-H, Henderson JM. Does gravity matter? Effects of semantic and syntactic inconsistencies on the allocation of attention during scene perception. J Vis. 2009;9:24.1–24.15. doi: 10.1167/9.3.24.

- Weissman DH, Woldorff MG. Hemispheric asymmetries for different components of global/local attention occur in distinct temporo-parietal loci. Cereb Cortex. 2005;15:870–876. doi: 10.1093/cercor/bhh187.

- Yamaguchi S, Yamagata S, Kobayashi S. Cerebral asymmetry of the “top-down” allocation of attention to global and local features. J Neurosci. 2000;20(RC72):1–5. doi: 10.1523/JNEUROSCI.20-09-j0002.2000.