Abstract

Emotional responses are regulated by basic motivational systems (appetitive and defensive), which allow for adaptive behavior when opportunities and threats are detected. It has been suggested that specific ranges of spatial frequencies of the visual input may contain information that is diagnostic for discriminating emotionally relevant from less relevant contents, and that specialized neural modules may analyze these spatial frequencies to allow efficient detection and response to life-threatening or life-sustaining stimuli. However, there is no evidence supporting this possibility regarding natural scenes, which are highly varied in terms of pictorial composition. The present study examines how low and high spatial frequency filtering affects the understanding of natural scene contents and modulates the amplitude of the late positive potential (LPP), a well-known component of the event-related potentials that reflects motivational significance. The content of an initially degraded (low- or high-passed) picture was progressively revealed in a sequence of steps by adding high or low spatial frequencies. At each step, human participants reported whether they identified the gist of the image. The results showed that the affective modulation of the LPP varied with picture identification similarly for low-passed and high-passed pictures. The engagement of corticolimbic appetitive and defensive systems, reflected in the LPP affective modulation, varied with picture identification, and did not critically or preferentially depend on either low or high spatial frequencies.

Introduction

It is unclear whether specific compositional features of natural scenes support the emotional response. It has been suggested that global spatial features that are revelatory of the presence of highly relevant contents exist, and that specialized neural modules may detect these features (Öhman, 2005). However, no conclusive data have been obtained regarding the role of global features in the emotional response to natural scenes.

During evolution, specific mechanisms developed to optimize the detection of threats and opportunities (Lang et al., 1997). In particular, a preparedness module facilitates the detection of highly relevant stimuli even under degraded conditions (Seligman, 1971; Öhman and Mineka, 2001). A number of studies have investigated which perceptual features may support this mechanism and have pointed to the global outline of the visual input as a candidate (Vuilleumier et al., 2003). More specifically, an emotional response might be elicited under conditions that allow the viewer to perceive only the global shape of a scene, but not when only fine-grained details are presented.

The manipulation of the spatial frequency content of a scene shares similarities with the manipulation of its exposure time (Bar, 2004). Visual processing proceeds in a global-to-local sequence, and limited exposure time acts as a low-pass filter, hindering the perception of local details (De Cesarei and Loftus, 2011). Recent studies have demonstrated that emotional pictures elicit cortical and autonomic changes even under perceptually challenging conditions, in which stimuli are degraded by very brief exposure durations, but only to the extent that participants can discriminate among emotional picture contents (Codispoti et al., 2009). These findings suggest that scene identification is a conditio sine qua non of affective processing and emotion elicitation (Codispoti et al., 2009; Nummenmaa et al., 2010).

The present study investigated the role of scene identification and spatial frequencies in the emotional response. We focused on the late positive potential (LPP), because one of the best-established findings in the literature on scene perception is that emotionally arousing (pleasant and unpleasant) pictures elicit a larger LPP than neutral pictures (Schupp et al., 2004; Codispoti et al., 2006a). Several studies suggest that the emotional modulation of the LPP reflects the engagement of corticolimbic appetitive and defensive systems to facilitate perception and ultimately promote adaptive behavior (Lang et al., 1997; Bradley, 2009). Scene content identification and event-related potentials (ERPs) were assessed across sequential stages of high- or low-pass filtered picture presentations, beginning with highly degraded pictures and progressively revealing the contents of pictures (Viggiano and Kutas, 2000). As picture categories differ markedly in their spatial frequency statistics and in how they are identified when spatially filtered (Vannucci et al., 2001; Torralba and Oliva, 2003), the present design allowed us to test whether the activation of motivational systems is similarly pronounced when gist identification is achieved on the basis of low or high spatial frequencies. If low spatial frequencies have an advantage compared with high spatial frequencies in the elicitation of the emotional response, then a more pronounced affective modulation of the LPP should be observed in the low- compared with the high-passed condition, suggesting a preferential or critical role of low spatial frequencies. Alternatively, if identification is critical for emotional response, and spatial frequencies only matter to the extent to which they allow scene identification, then the emotional response should covary similarly with picture identification in the low- and high-passed conditions.

Materials and Methods

Participants.

Thirty-five participants (18 females, 17 males; mean age, 25.26 years, SD = 4.79) with no diagnosed psychological or neurological disorders took part in the study. Vision was normal or corrected-to-normal. Participants had no previous experience with experiments investigating emotional reaction to affective pictures.

Stimuli and equipment.

Images were selected from various sources, including the International Affective Picture System (Lang et al., 2008), the Internet, and scanned documents. The pictures were converted to grayscale and balanced for contrast and brightness (0.6 and 0.1, respectively, on a 0–1 linear scale). Three picture categories were selected (N = 20 each, 60 pictures in total): erotic couples, people in neutral contexts, and mutilated bodies. These categories were chosen on the basis of previous studies (Schupp et al., 2004; De Cesarei and Codispoti, 2011) showing that the affective modulation of the LPP is most pronounced for erotic and mutilation pictures. Five additional pictures of people in a neutral context were used for a training phase.

Thirty-two versions of each picture were created by applying a low- or high-pass spatial frequency filter that varied in the cutoff level. Pictures were presented on a 21” CRT monitor 1.5 m away from the observer. The monitor subtended 15 (horizontal) × 11 (vertical) degrees of visual angle.

Filter parameters.

The filters applied were those used in previous research (De Cesarei and Loftus, 2011). The low-pass filter passed all spatial frequencies lower than 1/3 × cutoff and eliminated all spatial frequencies equal to or higher than the cutoff value, with a parabolic slope between these two values. The high-pass filter was identical to the low-pass filter, mirror-imaged on the frequency axis: it passed all spatial frequencies higher than 3 × cutoff and eliminated all spatial frequencies lower than the cutoff value. Through the remainder of the paper, all filters will be described by their direction (low-pass or high-pass) and their cutoff value.
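The filter shape described above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the authors' code: the text specifies only the passband edge (1/3 × cutoff), the stopband edge (the cutoff), and a parabolic transition, so the exact parabola (here 1 − t², with t running from 0 to 1 across the transition band on a log-frequency axis), the use of radial frequency in cycles per image, and mirroring about the cutoff on a log axis (which places the high-pass passband edge at 3 × cutoff) are all assumptions. The function names are hypothetical.

```python
import numpy as np


def lowpass_gain(f, cutoff):
    """Hypothetical low-pass gain: 1 below cutoff/3, 0 at or above cutoff.

    ASSUMPTION: the roll-off between the two edges is the parabola 1 - t**2,
    where t goes from 0 (at cutoff/3) to 1 (at cutoff) on a log-frequency axis.
    """
    f = np.asarray(f, dtype=float)
    gain = np.zeros_like(f)
    gain[f < cutoff / 3] = 1.0
    band = (f >= cutoff / 3) & (f < cutoff)
    t = np.log(3 * f[band] / cutoff) / np.log(3)
    gain[band] = 1.0 - t ** 2
    return gain


def highpass_gain(f, cutoff):
    """Mirror image of lowpass_gain about the cutoff on a log-frequency axis.

    Frequency f is mapped to cutoff**2 / f, so the gain is 0 at or below the
    cutoff and 1 at or above 3 * cutoff. The DC term (f = 0) maps to infinity
    and is therefore removed.
    """
    f = np.asarray(f, dtype=float)
    with np.errstate(divide="ignore"):
        mirrored = np.where(f > 0, cutoff ** 2 / f, np.inf)
    return lowpass_gain(mirrored, cutoff)


def filter_image(img, cutoff, kind="low"):
    """Apply the radial filter to a 2-D grayscale image in the Fourier domain."""
    h, w = img.shape
    fy = np.fft.fftfreq(h) * h  # vertical frequency in cycles per image
    fx = np.fft.fftfreq(w) * w  # horizontal frequency in cycles per image
    fxx, fyy = np.meshgrid(fx, fy)  # both arrays have shape (h, w)
    radius = np.hypot(fxx, fyy)
    gain = lowpass_gain(radius, cutoff) if kind == "low" else highpass_gain(radius, cutoff)
    # The gain is real and symmetric, so the inverse transform is real up to rounding.
    return np.real(np.fft.ifft2(np.fft.fft2(img) * gain))
```

Because the high-pass gain also removes the DC component, a high-passed image has zero mean and would need to be rescaled for display; how the authors handled this is not stated.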

Sixteen cutoff levels were selected for each of the high- and low-pass spatial frequency filters. The cutoff ranges were chosen on the basis of pilot data from 11 participants (data not shown). For the low-pass filter, the cutoffs ranged from 4 to 724 cycles per image (cpi; 4, 6, 8, 11, 16, 23, 32, 45, 64, 91, 128, 181, 256, 362, 512, 724), while for the high-pass filter they ranged from 362 to 2 cpi (362, 256, 181, 128, 91, 64, 45, 32, 23, 16, 11, 8, 6, 4, 3, 2).
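The listed cutoffs are consistent with half-octave spacing, i.e., successive powers of √2 rounded to the nearest integer. This is our reading of the values rather than a rule stated by the authors; the short Python sketch below regenerates both sequences under that assumption.

```python
# ASSUMPTION: each cutoff is 2 ** (k / 2) rounded to the nearest integer (half-octave steps).
LOW_PASS_CUTOFFS = [round(2 ** (k / 2)) for k in range(4, 20)]       # most degraded (4 cpi) first
HIGH_PASS_CUTOFFS = [round(2 ** (k / 2)) for k in range(17, 1, -1)]  # most degraded (362 cpi) first

print(LOW_PASS_CUTOFFS)   # [4, 6, 8, 11, 16, 23, 32, 45, 64, 91, 128, 181, 256, 362, 512, 724]
print(HIGH_PASS_CUTOFFS)  # [362, 256, 181, 128, 91, 64, 45, 32, 23, 16, 11, 8, 6, 4, 3, 2]
```

Under this reading, each step in the presentation sequence widens the available frequency range by half an octave, in both filter conditions.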

Procedure.

Each participant viewed the entire 65-picture set. For each participant, each of the 65 pictures was presented in either the low- or the high-pass filter condition, but not both, to prevent identification of one filtered version of a picture from influencing identification of the other version. Across participants, each picture was presented equally often in the low- and high-pass versions.
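The text does not say how pictures were assigned to the two filter conditions. One simple scheme that satisfies the stated constraints is sketched below; it is a hypothetical illustration, not the authors' procedure: within each category, pictures alternate between the low- and high-pass condition, and the assignment is flipped for every other participant.

```python
def assign_filters(pictures_by_category, participant_index):
    """HYPOTHETICAL two-list counterbalancing scheme (not described in the text).

    Within each category, pictures alternate between the low- and high-pass
    condition; the assignment is flipped for every other participant, so each
    participant sees each picture in only one filter condition and, over any
    pair of consecutive participants, every picture appears once in each.
    """
    flip = participant_index % 2
    assignment = {}
    for picture_ids in pictures_by_category.values():
        for i, picture_id in enumerate(picture_ids):
            assignment[picture_id] = "low" if (i + flip) % 2 == 0 else "high"
    return assignment


# Usage with placeholder picture IDs (20 per category, as in the study).
pictures = {
    "pleasant": [f"P{i}" for i in range(20)],
    "neutral": [f"N{i}" for i in range(20)],
    "unpleasant": [f"U{i}" for i in range(20)],
}
first_participant = assign_filters(pictures, 0)
```

Balancing within category keeps half of each category in each filter condition for every participant; how the five training pictures were handled is not stated.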

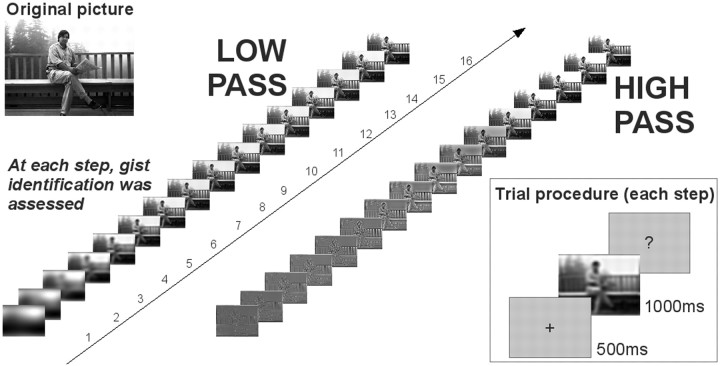

The procedure for each picture is presented in Figure 1. Presentation of each picture began with the most degraded (either low- or high-passed) version. A 500 ms fixation cross alerted participants that a picture would shortly be presented. The image was then presented and remained visible for 1 s. After picture offset, participants indicated whether they identified the gist of the image or not, using a response box. No instructions regarding response speed were given, and the procedure paused until the participant responded. After a response was given, and following an additional 1 s delay, the same picture was presented in a less degraded (higher low-pass cutoff or lower high-pass cutoff) version. This procedure was repeated for all 16 versions of a picture.

Figure 1.

Experimental procedure. A sequence of low- or high-pass filtered pictures was presented, beginning with the most degraded version and proceeding with the scene becoming progressively more visible. At each presentation step (inset), the picture was presented for 1 s and the participants were asked whether they understood the gist of the scene.

When the identification procedure was completed for all 16 picture versions, participants were asked whether their first “yes” response had corresponded to the first time they could correctly identify the gist of the picture, or whether that report of gist understanding had been incorrect. Then the identification procedure for the next picture started.

EEG recording and processing.

EEG was recorded at a sampling rate of 256 Hz from 257 active sites using an ActiveTwo system (Biosemi). The EEG was referenced to an additional active electrode during recording. A hardware fifth-order low-pass filter with a −3 dB attenuation factor at 50 Hz was applied online.

Off-line analysis was performed using EMEGS (freely available at http://www.emegs.org/) (Peyk et al., 2011) and included filtering (0.1 Hz high-pass and 40 Hz low-pass), removal of eye movement artifacts (Schlögl et al., 2007), artifact detection and sensor interpolation, and re-referencing to the average reference. A baseline correction based on the 100 ms before stimulus onset was performed. Based on previous studies and on the maximal amplitude of the arousing–neutral differential, the LPP was scored over centroparietal sensors (1–5, 18, 31–34, 55–57, 64–66, 96, 111, 128, 129, 149–152, 257) as the average ERP amplitude in the 600–800 ms time interval.
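The scoring step can be made concrete with a short NumPy sketch. It is a hedged illustration, not the EMEGS implementation: it assumes each epoch is stored as a (257 sensors × samples) array starting 100 ms before stimulus onset, that the listed sensor labels are 1-based row positions, and that latencies are rounded to the nearest sample (at 256 Hz, 100 ms is 25.6 samples). The helper names are hypothetical.

```python
import numpy as np

FS_HZ = 256                 # sampling rate reported in the text
BASELINE_MS = 100           # pre-stimulus baseline reported in the text
LPP_WINDOW_MS = (600, 800)  # LPP scoring window reported in the text
# Centroparietal cluster from the text; ASSUMED to be 1-based row positions.
LPP_SENSORS = [1, 2, 3, 4, 5, 18, 31, 32, 33, 34, 55, 56, 57, 64, 65, 66,
               96, 111, 128, 129, 149, 150, 151, 152, 257]


def ms_to_samples(ms, fs=FS_HZ):
    """Convert a latency in ms to the nearest whole number of samples."""
    return int(round(ms * fs / 1000))


def average_reference(epoch):
    """Re-reference to the average of all sensors at each time point."""
    return epoch - epoch.mean(axis=0, keepdims=True)


def score_lpp(epoch, fs=FS_HZ):
    """Mean LPP amplitude of one average-referenced epoch.

    epoch: array of shape (257, n_samples) whose first sample is at -BASELINE_MS.
    """
    n_base = ms_to_samples(BASELINE_MS, fs)
    # Baseline correction: subtract each sensor's mean pre-stimulus amplitude.
    corrected = epoch - epoch[:, :n_base].mean(axis=1, keepdims=True)
    start = n_base + ms_to_samples(LPP_WINDOW_MS[0], fs)
    stop = n_base + ms_to_samples(LPP_WINDOW_MS[1], fs)
    rows = np.asarray(LPP_SENSORS) - 1  # 1-based labels -> 0-based row indices
    return float(corrected[rows, start:stop].mean())
```

Averaging across sensors and samples in a single step gives one amplitude per epoch (or per condition average), which is the quantity entered into the ANOVA.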

Data analysis.

Pictures, which were either high- or low-pass filtered at one of 16 cutoff levels, were presented while ERPs were recorded. We estimated, for each participant and picture, the threshold cutoff level that allowed the participant to identify the gist of the scene. The interindividual variability in threshold estimation was low: thresholds differed across participants by less than one step (SD = 0.94 steps). The analysis focused on the effects of gist identification on ERPs, and all data were coded relative to the identification threshold. The identification threshold, operationalized as the first “yes” response, was taken as identification level zero (+0). Picture versions from −4 (four steps before the first “yes” response) to +8 (eight steps after the first “yes” response) were considered. ERPs were averaged in each of the 78 conditions defined by identification × type of filter × picture category (i.e., 13 × 2 × 3). Trials in which participants' report of gist identification was incorrect were discarded; on average, 12.9% of trials (7.7 pictures) per participant were discarded.
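The recoding of presentation steps into identification levels can be sketched as follows. This is a minimal illustration, assuming one list of 16 yes/no responses per picture (most degraded version first); the function and constant names are hypothetical, and pictures whose identification was later reported as incorrect would be removed before this step.

```python
from itertools import product

LEVELS = range(-4, 9)  # identification levels -4 ... +8 relative to the first "yes"
FILTERS = ("low", "high")
CATEGORIES = ("pleasant", "neutral", "unpleasant")


def code_relative_to_threshold(responses, levels=LEVELS):
    """Map the presentation steps of one picture to identification levels.

    responses: list of booleans (True = "yes, I got the gist") for the 16 steps
    of one picture, most degraded first. Returns {step_index: level}, keeping
    only the levels in `levels`; returns {} if the answer was never "yes".
    """
    if True not in responses:
        return {}
    first_yes = responses.index(True)  # identification threshold = level 0
    return {step: step - first_yes for step in range(len(responses))
            if (step - first_yes) in levels}


# Every identification x filter x category cell in which ERPs are averaged.
CONDITIONS = list(product(LEVELS, FILTERS, CATEGORIES))
```

For a picture first identified at the sixth step, steps 2 to 14 map onto levels −4 to +8, and the remaining steps fall outside the analysis window.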

An ANOVA was performed (identification × type of filter × picture category). Whenever an interaction reached significance, we proceeded with lower-order ANOVAs (e.g., at each identification level) and with pairwise comparisons. The Huynh–Feldt correction was applied to correct for violations of sphericity. The partial η squared statistic (ηp2), indicating the variance explained by one experimental factor relative to the sum of that variance and its associated error variance, was calculated and is reported.
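Partial η squared can be recovered directly from a reported F ratio and its degrees of freedom, which gives readers a quick consistency check on the statistics below. Since F = (SS_effect/df_effect)/(SS_error/df_error), the ratio SS_effect/(SS_effect + SS_error) reduces to F·df_effect/(F·df_effect + df_error). The Huynh–Feldt correction multiplies both degrees of freedom by the same ε, which cancels, so the uncorrected values can be used. The function name is hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom.

    eta_p^2 = SS_effect / (SS_effect + SS_error). Because
    F = (SS_effect / df_effect) / (SS_error / df_error), this equals
    F * df_effect / (F * df_effect + df_error).
    """
    num = f_value * df_effect
    return num / (num + df_error)


# Main effect of identification on the LPP, F(12,384) = 15.22.
print(round(partial_eta_squared(15.22, 12, 384), 2))  # 0.32
```

The result matches the ηp2 = 0.32 reported for that effect in the Results.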

Results

Identification performance

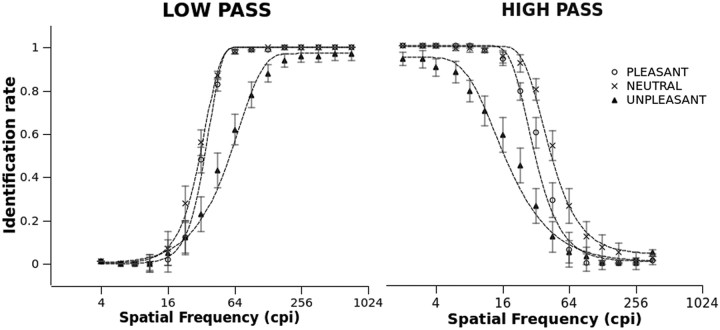

Identification performance is reported in Figure 2. In general, identification improved as the filter became less severe, i.e., as more high spatial frequencies were included in the low-pass condition and more low spatial frequencies in the high-pass condition. Additionally, the psychometric function varied with picture category. For both low- and high-passed pictures, identification of unpleasant pictures was less accurate at all cutoff levels compared with pleasant and neutral scenes.

Figure 2.

Identification of low- and high-pass filtered pictures as a function of emotional category and filter cutoff. Identification indicates the proportion of participants who understood the gist of the scene. Symbols are actual observations, and dashed lines represent the best-fitting psychometric functions. Error bars represent SEM.

To substantiate these descriptive findings, the best-fitting psychometric function was calculated using a Weibull cumulative function (Klein, 2001). This analysis allows for estimation of the horizontal (frequency) shift of the psychometric function. For both low- and high-pass filters, the category effect was highly significant (Fs(2,68) > 96.85, ps < 0.001, ηp2s > 0.74), and all categories differed significantly from one another. For both types of filters, neutral pictures required a significantly narrower range of spatial frequencies (lower low-pass or higher high-pass cutoff) to be identified compared with both pleasant and unpleasant pictures, whereas unpleasant pictures required a substantially wider range of spatial frequencies compared with both neutral and pleasant pictures.
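The Weibull cumulative function is P(x) = 1 − exp(−(x/α)^β), where α sets the horizontal position and β the steepness, so the category effect above is an effect on α. The sketch below fits it with a simple linearized least-squares regression, log(−log(1 − P)) = β log x − β log α. This is a substitute technique chosen for brevity: the text does not describe the fitting procedure beyond citing Klein (2001), and a maximum-likelihood fit would be the more rigorous choice. It also assumes x increases with identification; for low-pass pictures x can be the cutoff in cpi, whereas high-pass cutoffs would first need to be re-expressed (for example, as a reversed step index). Function names are hypothetical.

```python
import numpy as np


def weibull_cdf(x, alpha, beta):
    """Weibull cumulative function P(x) = 1 - exp(-(x / alpha) ** beta).

    alpha is the x at which P = 1 - 1/e (about 0.63); beta sets the steepness.
    """
    x = np.asarray(x, dtype=float)
    return 1.0 - np.exp(-(x / alpha) ** beta)


def fit_weibull_linearized(x, p, eps=1e-6):
    """Estimate (alpha, beta) by ordinary least squares on the linearized form
    log(-log(1 - p)) = beta * log(x) - beta * log(alpha).

    Points with p at (or numerically indistinguishable from) 0 or 1 are
    dropped, because the transform is undefined there.
    """
    x = np.asarray(x, dtype=float)
    p = np.asarray(p, dtype=float)
    keep = (x > 0) & (p > eps) & (p < 1 - eps)
    slope, intercept = np.polyfit(np.log(x[keep]), np.log(-np.log(1 - p[keep])), 1)
    beta = slope
    alpha = np.exp(-intercept / slope)
    return float(alpha), float(beta)
```

In use, `p` would hold the proportion of participants identifying a category at each cutoff, and comparing the fitted α across categories quantifies the horizontal shift.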

LPP

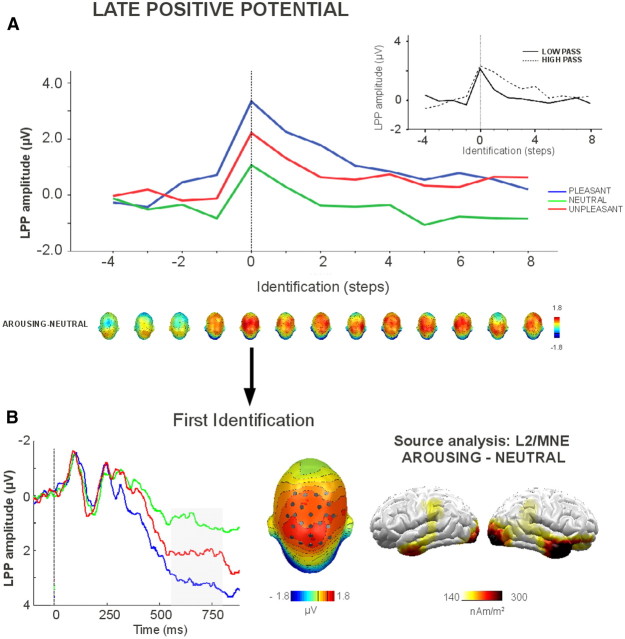

Figure 3 shows the effects of picture identification on emotional modulation of the LPP. The amplitude of the LPP varied with picture identification (F(12,384) = 15.22, p < 0.001, ηp2 = 0.32). This effect was further qualified by a two-way interaction between identification and filter type (F(12,384) = 4.57, p < 0.001, ηp2 = 0.13). The LPP was significantly more positive the first time participants identified a picture compared with the step immediately preceding identification. In the low-pass condition, the amplitude of the LPP decreased one step after identification and returned to preidentification values two steps after identification. In the high-pass condition, this decrease happened later, and no difference was observed between the preidentification LPP amplitude and the LPP elicited three steps after identification. Overall, the LPP was more positive for high-passed than for low-passed pictures (F(1,32) = 14.7, p < 0.001, ηp2 = 0.32).

Figure 3.

The effects of identification and picture category on the LPP, averaged across the low- and high-pass filtered conditions. A, The effects of picture category across levels of identification. Topographies represent the affective modulation of the LPP. The inset shows overall LPP amplitude in the low- and high-pass conditions. B, Affective modulation of the LPP at the first identification. The head plot displays the topography of the arousing–neutral modulation, and the sensors where the LPP was scored. Brain plots show the difference of the L2 minimum norm estimates (MNE) for arousing and neutral contents.

A significant interaction of identification and category was found, revealing effects that were independent of the filtering condition (F(24,768) = 2.74, p < 0.001, ηp2 = 0.08). Two steps before the first identification, a significantly larger positivity for pleasant contents, compared with neutral and unpleasant contents, was observed (F(2,68) = 3.93, p < 0.05, ηp2 = 0.1). One step before identification, a larger positivity for arousing (pleasant and unpleasant) compared with neutral pictures was observed (F(2,68) = 9.97, p < 0.001, ηp2 = 0.23), and this difference was observed for all successive steps (Fs(2, 68) > 5.71, ps < 0.05, ηp2s > 0.15). Additionally, pleasant pictures elicited a more positive LPP compared with unpleasant pictures, starting from two steps before identification until two steps after identification.

A source analysis was conducted by calculating L2-minimum-norm solutions (350 dipoles, 8 cm shell radius) for the LPP in each of the experimental conditions (Hämäläinen and Ilmoniemi, 1994). As shown in Figure 3B, more pronounced activity over the anterior temporal and occipital areas was estimated for arousing compared with neutral pictures.
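The L2 minimum-norm estimate has a closed form: for a lead field matrix L (sensors × sources) and a measured topography m, the Tikhonov-regularized solution is ĵ = Lᵀ(LLᵀ + λI)⁻¹m, the source distribution with the smallest L2 norm that (nearly) reproduces the data. The NumPy sketch below implements only this formula. The head model used here (350 dipoles on an 8 cm shell, computed in EMEGS), the regularization value, and the source orientations are not reproduced, and the random lead field in the usage line is purely an illustrative assumption.

```python
import numpy as np


def minimum_norm_estimate(leadfield, data, lam):
    """L2 minimum-norm (Tikhonov-regularized) inverse solution.

    leadfield: (n_sensors, n_sources) forward model.
    data: (n_sensors,) topography or (n_sensors, n_times) array.
    lam: regularization parameter (larger values give smoother, weaker sources).
    Returns j = L^T (L L^T + lam * I)^(-1) m.
    """
    n_sensors = leadfield.shape[0]
    gram = leadfield @ leadfield.T + lam * np.eye(n_sensors)
    # Solve the linear system instead of forming an explicit inverse.
    return leadfield.T @ np.linalg.solve(gram, data)


# Illustrative only: a random lead field with 257 sensors and 350 sources.
rng = np.random.default_rng(0)
leadfield = rng.standard_normal((257, 350))
j_true = rng.standard_normal(350)
j_hat = minimum_norm_estimate(leadfield, leadfield @ j_true, lam=1e-9)
```

A contrast such as arousing minus neutral can be computed by applying the same inverse to each condition's LPP topography and subtracting the resulting source amplitudes.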

Decision times

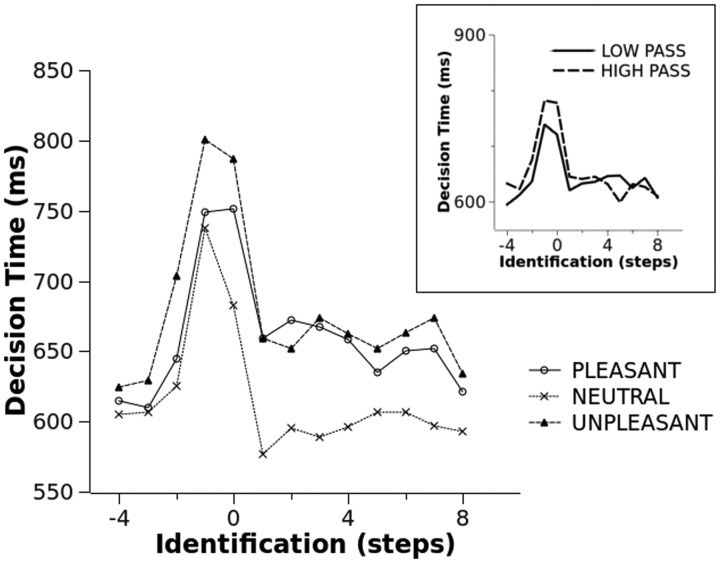

Decision time data are reported in Figure 4. No main effect or interaction involving type of filter was observed. A significant main effect of identification level was observed (F(12,384) = 10.91, p < 0.001, ηp2 = 0.25), indicating a response time slowing between the third and second step and between the second and first step before picture identification. Following picture identification, response time was considerably faster, and a significant increase in speed was observed between the identification level and the first step after identification.

Figure 4.

The effects of identification and picture category on decision times. The inset shows the main effect of scene identification on decision times for low- and high-pass filtered pictures.

The identification × category interaction approached significance (F(24,768) = 1.87, p = 0.08, ηp2 = 0.06). At individual identification levels, no significant effect of category was observed four steps before identification. From three steps before identification until the first “yes” response, responses were significantly slower for unpleasant pictures than for all other categories. At the first “yes” response, and at all subsequent identification levels, slower responses to arousing (pleasant and unpleasant) compared with neutral pictures were observed. No difference between pleasant and unpleasant contents was observed following picture identification.

Discussion

The present study asked whether specific spatial frequency information may be critical for emotional response to natural scenes. The results were straightforward: the emotional response varied with picture identification and no critical or preferential role for either low or high frequencies was observed.

Picture identification improved as the range of spatial frequencies widened, and the psychometric functions for low-passed and high-passed pictures were symmetric (De Cesarei and Loftus, 2011). Emotional pictures required a wider range of spatial frequencies (higher cutoff in the low-pass condition, and lower cutoff in the high-pass condition) to be identified, and this effect was particularly pronounced for unpleasant scenes. For all categories, identification improved in both the low- and high-pass filtered conditions when intermediate spatial frequencies were added, and no category relied critically on extremely low or high spatial frequencies for identification.

This study showed that the emotional response, as revealed by the LPP, did not depend on the type of information on which identification relied. Rather, a similar emotional response was observed following the radically different types of information conveyed by low and high spatial frequencies. This result indicates that the emotional response did not depend on the compositional content of the natural scenes, but was secondary to the identification of the affective content of the pictures. We observed larger positivity for arousing (pleasant and unpleasant) compared with neutral pictures, based on whatever information, high- or low-pass filtered, was presented. Affective modulation of the LPP was observed at threshold identification and extended one step before identification and for all subsequent steps, similarly for high- and low-pass filtered pictures. This result shows that, one step before reported identification, the available sensory data were already sufficient to detect that a significant content was present in the scene, and to activate the motivational systems accordingly.

These results are consistent with previous studies that indicate that the LPP modulation presumably reflects the semantic identification of motivationally significant scenes and does not rely on bottom-up perceptual factors, such as complexity, picture size, color, exposure time, or spatial frequencies (De Cesarei and Codispoti, 2006, 2011; Bradley et al., 2007; Codispoti et al., 2009, 2011). Additionally, once affective modulation of the LPP and of decision times was first observed, it was maintained despite the repeated presentations. This result replicates a number of previous studies that showed that the emotional modulation of this component remains even after massive repetition (Codispoti et al., 2006b, 2007; Ferrari et al., 2011), suggesting that it reflects motivational significance and not only attention allocation, since the latter would be expected to decline with repetition. The generators of the LPP were estimated in the anterior temporal and occipital areas (Fig. 3B), consistent with the view that the affective modulation of the LPP is attributable to re-entrant projections from the brain's motivational circuits to the areas most involved in object recognition (Amaral and Price, 1984; Sabatinelli et al., 2005, 2007).

The present data do not support the view that any spatial frequency range contains information that is particularly diagnostic of the presence of an emotional content at the level of explicit identification and of ERP signatures of emotional response. Natural scenes are extremely complex in terms of composition, and it seems unlikely that any specific spatial frequency range is predictive of the emotional content of a scene.

When pictures were extremely degraded or extremely vivid, the decision time was similarly fast. Before picture identification, a pronounced slowing in decision times was observed for all types of stimuli and in particular for those that were unpleasant, possibly reflecting the development of a hypothesis, which was matched against the bottom-up information (Bruner and Potter, 1964). This slowing of decision time was first observed two steps before identification for both low- and high-pass filtered scenes, suggesting that this is when the first hypotheses regarding scene contents are generated. At the following step, affective modulation of the LPP was first observed, showing that enough of the picture was understood to activate the motivational systems that modulate the LPP. Interestingly, both overall amplitude and affective modulation of the LPP tracked the identification process and were maximal when the top-down hypothesis and the bottom-up information matched for the first time (Schupp et al., 2007; Ferrari et al., 2008).

Affective modulation of the LPP was first observed two steps before identification for pleasant pictures, and one step before identification for unpleasant scenes. Therefore, the threshold for affective modulation of the LPP was lower than the threshold for confident gist understanding. This result is presumably related to the present study's specific method of assessing identification, that is, by directly asking participants whether they understood what the scene was about. However, perceptual thresholds vary with the task being carried out. A recent study showed that a gist understanding task was associated with more severe thresholds compared with tasks that aid identification by presenting a verbal descriptor (De Cesarei and Loftus, 2011). The present results extend previous findings, showing that, when the evidence available is not sufficient to allow the subject to confidently claim that the gist of the scene has been understood, affective modulation of the LPP or more sensitive tasks may nonetheless reveal that some understanding about the scene content has been achieved. It is possible that another type of task, or a nonsequential presentation of pictures, may reveal different identification thresholds for arousing as well as for neutral categories. Therefore, future studies could explore the extent to which the type of task determines the use of information in the processing of affective stimuli (Schyns and Oliva, 1999).

Conclusion

The present study asked whether specific ranges of spatial frequencies are critical for emotional response at the level of electrocortical activity. The engagement of corticolimbic appetitive and defensive systems, reflected in the LPP, varied with picture identification and did not depend on either low or high spatial frequencies.

Footnotes

We thank Serena Mastria for her assistance in data acquisition and all participants for having taken part in the study.

The authors declare no financial conflicts of interest.

References

- Amaral DG, Price JL. Amygdalo-cortical projections in the monkey (Macaca fascicularis). J Comp Neurol. 1984;230:465–496. doi: 10.1002/cne.902300402.

- Bar M. Visual objects in context. Nat Rev Neurosci. 2004;5:617–629. doi: 10.1038/nrn1476.

- Bradley MM. Natural selective attention: orienting and emotion. Psychophysiology. 2009;46:1–11. doi: 10.1111/j.1469-8986.2008.00702.x.

- Bradley MM, Hamby S, Löw A, Lang PJ. Brain potentials in perception: picture complexity and emotional arousal. Psychophysiology. 2007;44:364–373. doi: 10.1111/j.1469-8986.2007.00520.x.

- Bruner JS, Potter MC. Interference in visual recognition. Science. 1964;144:424–425. doi: 10.1126/science.144.3617.424.

- Codispoti M, Ferrari V, De Cesarei A, Cardinale R. Implicit and explicit categorization of natural scenes. Prog Brain Res. 2006a;156:53–65. doi: 10.1016/S0079-6123(06)56003-0.

- Codispoti M, Ferrari V, Bradley MM. Repetitive picture processing: autonomic and cortical correlates. Brain Res. 2006b;1068:213–220. doi: 10.1016/j.brainres.2005.11.009.

- Codispoti M, Ferrari V, Bradley MM. Repetition and ERPs: distinguishing between early and late processes in affective picture perception. J Cogn Neurosci. 2007;19:577–586. doi: 10.1162/jocn.2007.19.4.577.

- Codispoti M, Mazzetti M, Bradley MM. Unmasking emotion: exposure duration and emotional engagement. Psychophysiology. 2009;46:731–738. doi: 10.1111/j.1469-8986.2009.00804.x.

- Codispoti M, De Cesarei A, Ferrari V. The influence of color on emotional perception of natural scenes. Psychophysiology. 2011. doi: 10.1111/j.1469-8986.2011.01284.x.

- De Cesarei A, Codispoti M. When does size not matter? Effects of stimulus size on affective modulation. Psychophysiology. 2006;43:207–215. doi: 10.1111/j.1469-8986.2006.00392.x.

- De Cesarei A, Codispoti M. Affective modulation of the LPP and α-ERD during picture viewing. Psychophysiology. 2011;48:1397–1404. doi: 10.1111/j.1469-8986.2011.01204.x.

- De Cesarei A, Loftus GR. Global and local vision in natural scene identification. Psychon Bull Rev. 2011;18:840–847. doi: 10.3758/s13423-011-0133-6.

- Ferrari V, Codispoti M, Cardinale R, Bradley MM. Directed and motivated attention during processing of natural scenes. J Cogn Neurosci. 2008;20:1753–1761. doi: 10.1162/jocn.2008.20121.

- Ferrari V, Bradley MM, Codispoti M, Lang PJ. Repetitive exposure: brain and reflex measures of emotion and attention. Psychophysiology. 2011;48:515–522. doi: 10.1111/j.1469-8986.2010.01083.x.

- Hämäläinen MS, Ilmoniemi RJ. Interpreting magnetic fields of the brain: minimum norm estimates. Med Biol Eng Comput. 1994;32:35–42. doi: 10.1007/BF02512476.

- Klein SA. Measuring, estimating, and understanding the psychometric function: a commentary. Percept Psychophys. 2001;63:1421–1455. doi: 10.3758/bf03194552.

- Lang PJ, Bradley MM, Cuthbert BN. Motivated attention: affect, activation, and action. In: Lang PJ, Simons RF, Balaban M, editors. Attention and orienting. Mahwah, NJ: Erlbaum; 1997. pp. 97–135.

- Lang PJ, Bradley MM, Cuthbert BN. International affective picture system (IAPS): affective ratings of pictures and instruction manual. Technical Report A-8. Gainesville, FL: University of Florida; 2008.

- Nummenmaa L, Hyönä J, Calvo MG. Semantic categorization precedes affective evaluation of visual scenes. J Exp Psychol Gen. 2010;139:222–246. doi: 10.1037/a0018858.

- Öhman A. The role of the amygdala in human fear: automatic detection of threat. Psychoneuroendocrinology. 2005;30:953–958. doi: 10.1016/j.psyneuen.2005.03.019.

- Öhman A, Mineka S. Fears, phobias, and preparedness: toward an evolved module of fear and fear learning. Psychol Rev. 2001;108:483–522. doi: 10.1037/0033-295x.108.3.483.

- Peyk P, De Cesarei A, Junghöfer M. ElectroMagnetoEncephaloGraphy software (EMEGS): overview and integration with other EEG/MEG toolboxes. Comput Intell Neurosci. 2011;2011:861705. doi: 10.1155/2011/861705.

- Sabatinelli D, Bradley MM, Fitzsimmons JR, Lang PJ. Parallel amygdala and inferotemporal activation reflect emotional intensity and fear relevance. Neuroimage. 2005;24:1265–1270. doi: 10.1016/j.neuroimage.2004.12.015.

- Sabatinelli D, Lang PJ, Keil A, Bradley MM. Emotional perception: correlation of functional MRI and event-related potentials. Cereb Cortex. 2007;17:1085–1091. doi: 10.1093/cercor/bhl017.

- Schlögl A, Keinrath C, Zimmermann D, Scherer R, Leeb R, Pfurtscheller G. A fully automated correction method of EOG artifacts in EEG recordings. Clin Neurophysiol. 2007;118:98–104. doi: 10.1016/j.clinph.2006.09.003.

- Schupp HT, Cuthbert BN, Bradley MM, Hillman CH, Hamm AO, Lang PJ. Brain processes in emotional perception: motivated attention. Cogn Emot. 2004;18:593–611.

- Schupp HT, Stockburger J, Codispoti M, Junghöfer M, Weike AI, Hamm AO. Selective visual attention to emotion. J Neurosci. 2007;27:1082–1089. doi: 10.1523/JNEUROSCI.3223-06.2007.

- Schyns PG, Oliva A. Dr. Angry and Mr. Smile: when categorization flexibly modifies the perception of faces in rapid visual presentations. Cognition. 1999;69:243–265. doi: 10.1016/s0010-0277(98)00069-9.

- Seligman ME. Phobias and preparedness. Behav Ther. 1971;2:307–321. doi: 10.1016/j.beth.2015.08.005.

- Torralba A, Oliva A. Statistics of natural image categories. Network. 2003;14:391–412.

- Vannucci M, Viggiano MP, Argenti F. Identification of spatially filtered stimuli as function of the semantic category. Brain Res Cogn Brain Res. 2001;12:475–478. doi: 10.1016/s0926-6410(01)00086-6.

- Viggiano MP, Kutas M. Covert and overt identification of fragmented objects. J Exp Psychol Gen. 2000;129:107–125. doi: 10.1037//0096-3445.129.1.107.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci. 2003;6:624–631. doi: 10.1038/nn1057.