Abstract

All models of attention include the concept of an attentional template (or a target or search template). The template is conceptualized as target information held in memory that is used for prioritizing sensory processing and determining if an object matches the target. It is frequently assumed that the template contains a veridical copy of the target. However, we review recent evidence showing that the template encodes a version of the target that is adapted to the current context (e.g., distractors, task, etc.); information held within the template may include only a subset of target features, real world knowledge, pre-existing perceptual biases, or even be a distorted version of the veridical target. We argue that the template contents are customized in order to maximize the ability to prioritize information that distinguishes targets from distractors. We refer to this as template-to-distractor distinctiveness and hypothesize that it contributes to visual search efficiency by exaggerating target-to-distractor dissimilarity.

A. Introduction

All models of attention include the concept of the attentional template (or equivalently, a target or search template or attentional set). It refers to the representation of target information held in memory during visual search [1–5]. Template information is used to locate targets by selectively prioritizing relevant sensory information and by serving as the pattern for determining target-matches or non-matches. Importantly, the template reflects expectations of what information will be useful in locating the target. The idea of the template is central to models of attention because it reflects the source of information to constrain search processes and determine the relevance of information for current behavior. However, despite its ubiquitous presence in the study of attention, there are still many open questions regarding what information is stored in the template and how its contents affect visual search efficiency. Here, we review studies that demonstrate the flexibility of the template contents and propose that template-to-distractor distinctiveness is an underappreciated factor in predicting visual search efficiency.

B. Historical perspective

The concept of the attentional template predates the actual term. In some of the earliest formal studies of visual search, it was discovered that changing what subjects know about the target can radically change visual search efficiency. For example, Green and Anderson (1956) asked subjects to find a colored two-digit number among colored distractor digits, some of which shared the target color [7]. They found that search times were proportional to the number of distractor digits that shared the target color when observers knew the target digit’s color in advance, but search times depended on the total number of digits when the target’s color was not known in advance. These early demonstrations [8, 9] showed that visual search times are determined by the type of information observers have about the target and how well that information distinguishes targets from distractors.

Despite similar ideas in other theories [10–18], Duncan and Humphreys (1989) appear to be the first to use the term “template” in the human literature to describe the hypothesized internal representation of target information that modulates sensory gain and is used to determine if visual inputs match the target. The idea that the template contributes to two processes - proactive setting of sensory priority and reactive decisions about the target match - is consistent with brain imaging studies that find separate “source” and “site” regions engaged during attentionally demanding tasks [19–32], and behavioral studies that find early and late effects of target-to-distractor and distractor-to-distractor similarity in visual search [33–35].

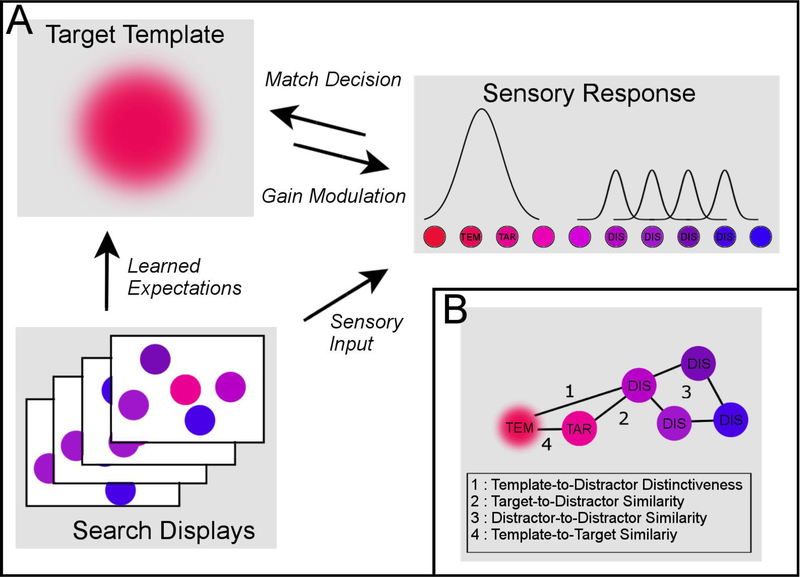

The template is the stored memory representation of the target, and it is frequently assumed to be an exact replica of the target. In more recent years, however, it has become increasingly clear that the template contents are not always a veridical copy of the target. Sometimes, the template may be poorly specified (e.g., when looking for a dog, without knowing its specific features), contain extra-sensory knowledge (e.g., using contextual predictors of the target), or may even be strategically shifted to exaggerate the dissimilarities between targets and distractors. This has led us to the view that the template is a highly dynamic and flexible representation that is customized with all the available information about the target given the observer and context. We propose that the customization of the target template is an overlooked source of visual search efficiency that is described by template-to-distractor similarity, which is separate from target-to-distractor similarity, distractor-to-distractor similarity, and even the template-to-target relationship (Figure 1).

Figure 1:

A) A cartoon illustration of how template information is shaped by learned expectations about the search context and impacts sensory processing. The target is the pink circle within the search displays. Multiple search displays over time contribute to the learned expectations of distractor properties. Because the target appears with “bluer” distractors, the template encodes a “redder” target than veridical. This produces larger “top-down” gain enhancements and sensory responses that prioritize the off-veridical “reddish” color over the true target “pinkish” color. Despite being slightly off-veridical, the shift in the template is advantageous because it prioritizes target features that are more distinct from distractors. B) Illustration of the representational geometry of the target template and different objects in the visual display. All four relationships (labeled 1–4) are important for predicting visual search efficiency. The specific geometry here is just an illustrative case of the more general thesis that the target template is rarely an exact replica of the searched-for target and is instead an adapted version that accentuates information that best differentiates the target from distractors. The specific geometry (illustrated distances 1–4) is expected to change according to stimulus context and task goals.
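The four relationships named in the text (target-to-distractor, distractor-to-distractor, template-to-target, and template-to-distractor) can be made concrete with a small calculation. The following is a minimal sketch under stated assumptions: the two-dimensional feature space, the Euclidean distance metric, the coordinates, and the function name `search_geometry` are all hypothetical choices made for illustration and do not come from the reviewed studies or from the numbering used in Figure 1B.

```python
import math

def search_geometry(template, target, distractors):
    """Return the four pairwise relationships as mean Euclidean distances.

    All points are coordinates in a hypothetical feature space.
    """
    def mean(values):
        values = list(values)
        return sum(values) / len(values) if values else 0.0

    n = len(distractors)
    return {
        "target_to_distractor": mean(math.dist(target, d) for d in distractors),
        "distractor_to_distractor": mean(
            math.dist(distractors[i], distractors[j])
            for i in range(n) for j in range(i + 1, n)
        ),
        "template_to_target": math.dist(template, target),
        "template_to_distractor": mean(math.dist(template, d) for d in distractors),
    }

# Illustrative coordinates only: distractors lie to one side of the target.
target = (0.0, 0.0)
distractors = [(-2.0, 0.0), (-3.0, 1.0), (-3.0, -1.0)]

veridical = search_geometry(template=target, target=target, distractors=distractors)
shifted = search_geometry(template=(1.0, 0.0), target=target, distractors=distractors)

for name in veridical:
    print(f"{name}: veridical={veridical[name]:.2f}, shifted={shifted[name]:.2f}")
```

In this toy case, moving the template away from the distractors increases the template-to-distractor distance at the cost of a nonzero template-to-target distance, while the two stimulus-defined distances are unchanged.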

C. Content Specificity

If the attentional template serves to filter stimuli entering the visual system and working memory, it follows that more precise templates should produce faster attentional guidance to the correct target object(s), and faster target-match decisions. For example, Hout and Goldinger (2015) provided observers with target cues that were either an exact visual match, the same object from a different viewpoint, or a different exemplar from the same category. They found that less specific cues resulted in longer saccade scan paths as well as longer fixation durations on the target after it was fixated [36–40]. More specific templates also produce larger N2pc ERPs, an index of visual attentional selection, than more general ones, suggesting precise templates lead to more effective guidance [41].

These studies argue for the advantage of template specificity, but search in all these cases involved a specific object whose true features could be known in advance. Real world situations often require tolerance for uncertainty in visual properties of the target either because the target itself is variable (e.g., the clothes on a friend) or the target comes from a category (e.g., cars on the road). Bravo and Farid (2012, 2016) asked observers to find a tropical fish (of any kind) in naturalistic undersea scenes [42, 43]. Critically, during training, one group saw targets that were identical to the cue; another group saw targets that were from the same species but possibly a different exemplar. A day later, the observers that only saw exact cue-target pairs showed poorer performance than the group that underwent cue-category training when a new target fish was introduced. The enhanced generalization of the template to the new exemplar was attributed to the fact that the subjects in the cue-category group learned to extract target templates that contained information common across a species, whereas subjects in the cue-image group learned to store specific visual features that were most useful when cues were exact. This suggests the utility of precise details in the template is task-dependent and sparser representations may be more optimal when generalizing over variable targets.

In addition to being more generalizable, categorical templates have the advantage of being less demanding to encode and maintain in memory as a verbal heuristic. It is therefore judicious to trade precision for category information whenever broad templates are sufficient for distinguishing targets from distractors [44, 45]. In an elegant example of this, Schmidt and Zelinsky (2017) asked subjects to find a teddy bear target image embedded within non-teddy bear objects (easy search) or other teddy bears (difficult search). The contralateral delay activity, an ERP index of the number of items held in working memory during a delay [46, 47], had a larger amplitude during blocks of difficult search [48]. This suggested that more target details were held in the target template when target selection was expected to be difficult. The template contents depended on the expected number of visual details necessary for the task, not the target stimulus, per se. The ability to hold only necessary information within the template to discriminate the target from distractors maximizes the efficiency of target selection while minimizing memory demands [41, 49].

In real-world scenes, there is evidence that prior knowledge about object regularities and likely actions is also brought into the template to aid search [36, 37, 50–52]. Thus, search templates may have more information than just the visual properties of the target. For example, Malcolm and Henderson (2009) found that prior knowledge, such as that clocks are more likely on walls than floors, produced additive advantages during search with cue specificity (i.e., cued with the exact visual image vs. a verbal label). The advantages were seen in both the guidance of attention (saccade scan paths) and decision times to verify the target once fixated. That target templates in the real world contain learned associations demonstrates the importance of accounting for prior knowledge [50, 51, 53] and individual differences on visual search [25, 54].

Together these studies suggest that the optimal level of specificity in a target template depends on the environmental context. More precise templates are better for targets in complex scenes with many similar distractors, but efficiency can be increased even for precise templates by including extra-sensory information such as learned probabilities and semantic relatedness. However, only a subset of (or coarser) information may be sufficient when the distractor context is dissimilar or when targets have uncertain or variable features.

D. Optimal Feature Tuning

Perhaps some of the most compelling evidence for the idea that the template contents are based on template-to-distractor distinctiveness, rather than a veridical feature value, comes from studies in which the target representation shifts “off-veridical” to optimize behavior. Even when the target features are available to the observer, the target representation may be shifted in order to optimize the ability to distinguish targets from distractors, sacrificing template-to-target accuracy (Figure 1B). Navalpakkam and Itti (2007) gave the intuition of looking for a tiger in the grasslands: if the tiger is orange-yellow, but the grasslands are generally more yellow than the tiger, it may be more optimal to only bias top-down sensory enhancement of neurons encoding “orange-reddish” features [14]. This is because those features are unique to the tiger whereas “yellowish” elements are shared with the grassland. Shifting of target features has been found for varied stimulus dimensions including orientation, size, and color and has been measured using a number of methods including set size estimates of distractor costs, irrelevant cues to probe for attentional capture, working memory estimation probes, and two-alternative forced-choice identification tasks [14, 55–60]. These studies suggest that linearly separable distractors (i.e., distractor sets that can be distinguished from targets by a single line within feature space) produce efficient visual search because the template can be shifted away from distractors, which increases the template-to-distractor distinctiveness [61].
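The tiger intuition can be illustrated with a toy calculation. The sketch below is not the model of Navalpakkam and Itti; it is a simplified illustration that assumes Gaussian tuning curves, equal noise in every feature channel (so that the response difference stands in for signal-to-noise ratio), and a hypothetical one-dimensional hue axis in arbitrary units. The function names and all numeric values are placeholders chosen for the example.

```python
import math

def tuning_response(preferred, stimulus, width):
    """Response of a Gaussian-tuned channel with preferred value `preferred`."""
    return math.exp(-((stimulus - preferred) ** 2) / (2 * width ** 2))

def most_distinctive_channel(target, distractor, width, candidates):
    """Return the preferred value whose response best separates target from distractor.

    Assumes equal noise in all channels, so separation is the raw
    difference between the target response and the distractor response.
    """
    def separation(preferred):
        return (tuning_response(preferred, target, width)
                - tuning_response(preferred, distractor, width))
    return max(candidates, key=separation)

# Hypothetical hue axis: the distractor lies 10 units below the target.
target_hue = 0.0
distractor_hue = -10.0
tuning_width = 10.0
channels = [step / 10 for step in range(-300, 301)]  # -30.0 to 30.0 in 0.1 steps

best = most_distinctive_channel(target_hue, distractor_hue, tuning_width, channels)
print(f"target hue = {target_hue}, most distinctive channel = {best}")
```

Under these assumptions the channel that best separates the target from the distractor is tuned to a value on the far side of the target from the distractor, not to the target value itself, because a channel centered on the target also responds appreciably to the nearby distractor.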

Although it is clear that this behavior is efficient when searching for a target among linearly separable distractors, different underlying mechanisms have been proposed. Earlier models of selective attention hypothesized this effect was due to weighting parallel features that are less likely to select distractors [2, 18, 45]. Navalpakkam and Itti (2007) used signal detection theory and empirical data to demonstrate that the shift in target representation was due to “optimal gains” modulation of neurons that encode the most distinctive target features [62, 63]. Becker and colleagues have proposed the “relational theory of visual attention” that hypothesizes the target is encoded relationally (e.g., as the “reddest” or “shortest” object) rather than a specific value [64, 65]. The relational theory does not posit changes in sensory gain for specific feature values in advance of the search display; instead, the stimulus that best fits the relational rule (e.g., “reddest”) is automatically selected, even if it is distant from the actual target. Furthermore, others have found that the similarity of the expected distractor set to the target may produce asymmetrical sharpening of the template in addition to overall shifting [55, 58]. It is interesting to consider that these strategies may not be mutually exclusive but depend upon task goals. While the exact mechanisms are still to be determined, these studies emphasize that the goal of the attentional template is to prioritize information that distinguishes targets from distractors, i.e., template-to-distractor distinctiveness, rather than to represent the veridical target directly.

In addition to shifting target representations away from specific features within a stimulus dimension, it is possible to selectively enhance or suppress feature dimensions in sensory cortex analogous to other models of feature-based attentional selection [66, 67]. Reeder et al. (2017) used a conjunction Gabor target composed of two feature dimensions (spatial frequency, orientation) but only one feature dimension distinguished targets from distractors (i.e., informative dimension) [68]. They found that the neural similarity of expected targets during the post-cue delay period reflected the informative dimension. Biasing template representations in memory towards feature dimensions that are expected to be informative has also been found using retro-cues [60]. Together these studies show that optimal template representations encode information that is expected to maximally differentiate targets from distractors and that this information may involve shifting, supplementing, or even reducing information about the veridical target object. However, the use of optimal strategies may depend on individual differences in perceived effort [69, 70].

E. Definitions of Similarity

The concept of similarity underlies all attentional theories [5] and is a core concept in understanding the template contents, but similarity can be defined based on physical, categorical, semantic, contextual, or even idiosyncratic characteristics. Even stimuli from single continuous feature dimensions, such as color and orientation, have strong nonlinear categorical boundaries that vary across observers and tasks [71–73]. One challenge for studies of attention is that the architecture of similarity must be measured outside of concurrent visual search tasks if they are used to predict visual search efficiency. Estimating similarity within visual search (or any attentionally demanding task) risks circularity by theorizing that target-similar stimuli interfere with target selection, and concluding that a distractor is target-similar because it interferes with attentional selection.

Some attention researchers have begun to use independent measurements of similarity using stimulus pair-wise similarity ratings, multidimensional scaling, and computational models [74–79]. Studies employing these techniques have found visual search dynamics depend on visual similarity, categorical membership, conceptual relatedness, and stored world knowledge about scenes [25, 33, 40, 52, 76, 80–85]. For example, search performance for a target category (e.g., teddy bears) depended on the rank ordered similarity of distractor objects obtained in an independent task (e.g., toys, clothing, tools, etc.) [86]. The similarity task provided an objective prediction of what objects would interfere with target selection without relying on preconceived notions of visual or semantic structure. Such independent ratings can reveal surprising consistency between the organization of knowledge and visual search performance. For example, we found that idiosyncratic differences in the perceptual categorization of facial identities predicted visual search efficiency when those faces were targets and distractors in visual search [25]. There is no doubt that target-similar distractors are more likely to capture attention and are harder to reject, but our understanding of how similarity is defined is still emerging. The challenge now is to understand how stable visual, semantic, and episodic representational structures are transformed into attentional templates during visual search that maximize the ability to distinguish targets from distractors [21–23, 27].
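The logic of testing whether an independent similarity measure predicts search performance can be sketched as a rank correlation. The example below is an assumption-laden illustration rather than the analysis used in any of the cited studies: the Spearman coefficient is one possible choice of statistic, the helper names are hypothetical, and the ratings and search times are made-up placeholder numbers, not data.

```python
def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks

def spearman(x, y):
    """Spearman rank correlation: Pearson correlation of the ranks.

    Undefined (division by zero) if either input is constant.
    """
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mean_x, mean_y = sum(rx) / n, sum(ry) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(rx, ry))
    sd_x = sum((a - mean_x) ** 2 for a in rx) ** 0.5
    sd_y = sum((b - mean_y) ** 2 for b in ry) ** 0.5
    return cov / (sd_x * sd_y)

# Placeholder values only: similarity of four distractor categories to the
# target, rated in a separate task, and search times when each is present.
similarity_to_target = [6.1, 4.0, 2.5, 1.2]
search_time_ms = [820.0, 700.0, 640.0, 600.0]

rho = spearman(similarity_to_target, search_time_ms)
print(f"rank correlation between rated similarity and search time: {rho:.2f}")
```

Because the similarity ratings come from a task other than the search itself, a relationship of this kind avoids the circularity of inferring similarity from interference.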

F. Conclusions

The idea of templates is ubiquitous in theories of attention. Most hypothesize that template information is used to set sensory gain in order to enhance processing of task-relevant information, and to compute if an object matches the target (Figure 1A). Much of the literature has assumed that the best target template is one that perfectly matches the veridical target, but encoding a perfectly veridical target might be the exception instead of the rule in real-world situations. We have argued here that the template should be understood as a custom set of information that will best differentiate the target from non-targets in the current environment. Sometimes this might mean holding only a subset of target features, including extra-sensory knowledge about the target, or even shifting the target representation. A better understanding of what optimal templates contain will require a more systematic mapping of similarity between stimuli within a context. The concept of the template-to-distractor distinctiveness captures the relationship between these ideas and describes how the internal representation of the target is customized to contain the best available information that distinguishes the target from distractors in the current moment of time.

Highlights

The attentional template encodes information about the target and is held in working or long-term memory.

The attentional template does not contain a veridical copy of the target but a version that maximizes the ability to distinguish targets from distractors.

Template-to-distractor distinctiveness describes the relationship between the version of the target held in memory and the current environment.

Acknowledgements

We would like to thank John Henderson and Bo-Yeong Won for their comments on this manuscript. This work was supported by NSF BCS-201502778 and NIH-RO1-MH113855–01A1 to JJG.

Footnotes

Declarations of interest: none

The authors confirm that there are no known conflicts of interest associated with this publication.


References

- 1.Carlisle NB, et al., Attentional templates in visual working memory. J Neurosci, 2011. 31(25): p. 9315–22.

- 2.Duncan J and Humphreys GW, Visual search and stimulus similarity. Psychological Review, 1989. 96(3): p. 433–458.

- 3.Eimer M, The neural basis of attentional control in visual search. Trends Cogn Sci, 2014. 18(10): p. 526–35.

- 4.Olivers CN, et al., Different states in visual working memory: when it guides attention and when it does not. Trends Cogn Sci, 2011. 15(7): p. 327–34.

- 5.Wolfe JM and Horowitz TS, Five factors that guide attention in visual search. Nature Human Behaviour, 2017. 1(3).

- 6.Johnston JC and Pashler H, Close binding of identity and location in visual feature perception. Journal of Experimental Psychology: Human Perception and Performance, 1990. 16(4): p. 843–856.

- 7.Green BF and Anderson LK, Color coding in a visual search task. Journal of Experimental Psychology, 1956. 51(1): p. 19–24.

- 8.Egeth HE, Virzi RA, and Garbart H, Searching for conjunctively defined targets. Journal of Experimental Psychology: Human Perception and Performance, 1984. 10(1).

- 9.Eriksen C, Location of objects in a visual display as a function of the number of dimensions on which the objects differ. Journal of Experimental Psychology, 1952. 44(1): p. 56–60.

- 10.Bundesen C, A theory of visual attention. Psychological Review, 1990. 97(4): p. 523–547.

- 11.Bundesen C, Habekost T, and Kyllingsbaek S, A neural theory of visual attention: bridging cognition and neurophysiology. Psychol Rev, 2005. 112(2): p. 291–328.

- 12.Dent K, et al., Parallel distractor rejection as a binding mechanism in search. Front Psychol, 2012. 3: p. 278.

- 13.Desimone R and Duncan J, Neural mechanisms of selective attention. Annual Review of Neuroscience, 1995. 18: p. 193–222.

- 14.Navalpakkam V and Itti L, Search goal tunes visual features optimally. Neuron, 2007. 53(4): p. 605–17. * This paper provides a model and psychophysical evidence for the optimal feature gain modulation theory, which describes how target and distractor information is combined to maximize target distinctiveness.

- 15.Trapp S, Schroll H, and Hamker FH, Open and closed loops: A computational approach to attention and consciousness. Adv Cogn Psychol, 2012. 8(1): p. 1–8.

- 16.Treisman AM and Gelade G, A feature-integration theory of attention. Cogn Psychol, 1980. 12(1): p. 97–136.

- 17.Wolfe JM, Cave KR, and Franzel SL, Guided search: An alternative to the feature integration model for visual search. Journal of Experimental Psychology: Human Perception and Performance, 1989. 15(3): p. 419–433.

- 18.Wolfe JM, Guided search 4.0. 2007.

- 19.Baldauf D and Desimone R, Neural mechanisms of object-based attention. Science, 2014. 344(6182): p. 424–7.

- 20.Rossi AF, et al., The prefrontal cortex and the executive control of attention. Exp Brain Res, 2009. 192(3): p. 489–97.

- 21.Bettencourt KC and Xu Y, Decoding the content of visual short-term memory under distraction in occipital and parietal areas. Nat Neurosci, 2016. 19(1): p. 150–7.

- 22.Ester EF, et al., Feature-Selective Attentional Modulations in Human Frontoparietal Cortex. J Neurosci, 2016. 36(31): p. 8188–99.

- 23.Jigo M, Gong M, and Liu T, Neural Determinants of Task Performance during Feature-Based Attention in Human Cortex. eNeuro, 2018. 5(1).

- 24.Feredoes E, et al., Causal evidence for frontal involvement in memory target maintenance by posterior brain areas during distracter interference of visual working memory. Proc Natl Acad Sci U S A, 2011. 108(42): p. 17510–5.

- 25.Lee J and Geng JJ, Idiosyncratic Patterns of Representational Similarity in Prefrontal Cortex Predict Attentional Performance. J Neurosci, 2017. 37(5): p. 1257–1268. * This paper found that idiosyncratic representational structure of stimuli in right prefrontal cortex during a categorization task predicted performance in an independent visual search task.

- 26.Moore T and Zirnsak M, The What and Where of Visual Attention. Neuron, 2015. 88(4): p. 626–8.

- 27.Long NM and Kuhl BA, Bottom-up and top-down factors differentially influence stimulus representations across large-scale attentional networks. J Neurosci, 2018. * This fMRI study found that goal-relevant stimulus features could be decoded in multiple brain networks, but that the exact information encoded about the features differed.

- 28.van Diepen RM, et al., The Role of Alpha Activity in Spatial and Feature-Based Attention. eNeuro, 2016. 3(5).

- 29.Gunseli E, Olivers CN, and Meeter M, Effects of search difficulty on the selection, maintenance, and learning of attentional templates. J Cogn Neurosci, 2014. 26(9): p. 2042–54.

- 30.van Driel J, et al., Local and interregional alpha EEG dynamics dissociate between memory for search and memory for recognition. Neuroimage, 2017. 149: p. 114–128.

- 31.Grubert A and Eimer M, The timecourse of target template activation processes during preparation for visual search. Journal of Neuroscience, 2018. 38(44): p. 9527–9538.

- 32.Weaver MD, van Zoest W, and Hickey C, A temporal dependency account of attentional inhibition in oculomotor control. Neuroimage, 2017. 147: p. 880–894.

- 33.Avraham T, Yeshurun Y, and Lindenbaum M, Predicting visual search performance by quantifying stimuli similarities. J Vis, 2008. 8(4): p. 9 1–22.

- 34.Geng JJ and Diquattro NE, Attentional capture by a perceptually salient non-target facilitates target processing through inhibition and rapid rejection. J Vis, 2010. 10(6): p. 5.

- 35.Mulckhuyse M, Van der Stigchel S, and Theeuwes J, Early and late modulation of saccade deviations by target distractor similarity. J Neurophysiol, 2009. 102(3): p. 1451–8.

- 36.Malcolm GL and Henderson JM, The effects of target template specificity on visual search in real-world scenes: evidence from eye movements. J Vis, 2009. 9(11): p. 8 1–13.

- 37.Malcolm GL and Henderson JM, Combining top-down processes to guide eye movements during real-world scene search. J Vis, 2010. 10(2): p. 4 1–11.

- 38.Smith PL and Ratcliff R, An integrated theory of attention and decision making in visual signal detection. Psychol Rev, 2009. 116(2): p. 283–317.

- 39.Anderson BA, On the precision of goal-directed attentional selection. J Exp Psychol Hum Percept Perform, 2014. 40(5): p. 1755–62.

- 40.Hout MC and Goldinger SD, Target templates: the precision of mental representations affects attentional guidance and decision-making in visual search. Atten Percept Psychophys, 2015. 77(1): p. 128–49. * This study found that the precision of information in target templates affected both the time to locate the target as well as the time to determine it was the target (akin to Malcolm and Henderson, 2009). Additionally, the template precision was manipulated by using multidimensional scaling measures of similarity between objects.

- 41.Nako R, Wu R, and Eimer M, Rapid guidance of visual search by object categories. J Exp Psychol Hum Percept Perform, 2014. 40(1): p. 50–60.

- 42.Bravo MJ and Farid H, Task demands determine the specificity of the search template. Atten Percept Psychophys, 2012. 74(1): p. 124–31.

- 43.Bravo MJ and Farid H, Observers change their target template based on expected context. Atten Percept Psychophys, 2016. 78(3): p. 829–37.

- 44.Reeder RR and Peelen MV, The contents of the search template for category-level search in natural scenes. J Vis, 2013. 13(3): p. 13.

- 45.Wolfe JM, et al., The role of categorization in visual search for orientation. Journal of Experimental Psychology: Human Perception and Performance, 1992. 18(1): p. 34–49.

- 46.Luria R, et al., The contralateral delay activity as a neural measure of visual working memory. Neurosci Biobehav Rev, 2016. 62: p. 100–8.

- 47.Vogel EK, Woodman GF, and Luck SJ, Storage of features, conjunctions, and objects in visual working memory. Journal of Experimental Psychology: Human Perception and Performance, 2001. 27(1): p. 92–114.

- 48.Schmidt J and Zelinsky GJ, Adding details to the attentional template offsets search difficulty: Evidence from contralateral delay activity. J Exp Psychol Hum Percept Perform, 2017. 43(3): p. 429–437. * This study used ERPs to demonstrate that the number of object details held in memory during visual search for real-world objects depends on the expected similarity of distractors, not the target itself. Expectations of categorically different distractors resulted in fewer target details held in working memory than when categorically similar distractors were expected.

- 49.Nako R, et al. , Item and category-based attentional control during search for real-world objects: Can you find the pants among the pans? J Exp Psychol Hum Percept Perform, 2014. 40(4): p. 1283–8. [DOI] [PubMed] [Google Scholar]

- 50.Castelhano MS and Witherspoon RL, How You Use It Matters: Object Function Guides Attention During Visual Search in Scenes. Psychol Sci, 2016. 27(5): p. 606–21. [DOI] [PubMed] [Google Scholar]

- 51.Pereira EJ and Castelhano MS, Peripheral guidance in scenes: The interaction of scene context and object content. J Exp Psychol Hum Percept Perform, 2014. 40(5): p. 2056–72. [DOI] [PubMed] [Google Scholar]

- 52.Vo ML and Wolfe JM, The role of memory for visual search in scenes. Ann N Y Acad Sci, 2015. 1339: p. 72–81. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Biederman I, Perceiving real-world scenes. Science, 1972. 177: p. 77–80. [DOI] [PubMed] [Google Scholar]

- 54.Harel A, What is special about expertise? Visual expertise reveals the interactive nature of real-world object recognition. Neuropsychologia, 2016. 83: p. 88–99. [DOI] [PubMed] [Google Scholar]

- 55.Yu X and Geng JJ, The Attentional Template is Shifted and Asymmetrically Sharpened by Distractor Context. Journal of Experimental Psychology: Human Perception and Performance, 2018.* This study modeled template representation of the target acquired with a probe task interleaved between visual search trials. The results showed that the central tendency and variance of the target template were flexibly adjusted in response to different aspects of the distractor set.

- 56.Bauer B, Jolicoeur P, and Cowan WB, Visual search for colour targets that are or are not linearly separable from distractors. Vision Research, 1996. 36(10): p. 1439–1465.

- 57.Becker SI, Folk CL, and Remington RW, The role of relational information in contingent capture. J Exp Psychol Hum Percept Perform, 2010. 36(6): p. 1460–76.

- 58.Geng JJ, DiQuattro NE, and Helm J, Distractor probability changes the shape of the attentional template. Journal of Experimental Psychology: Human Perception and Performance, 2017. 43(12): p. 1993–2007.* This study used two different memory probe tasks during visual search to assess how the target template was affected by probabilistic expectations of the distractor.

- 59.Hodsoll J and Humphreys GW, Driving attention with the top down: the relative contribution of target templates to the linear separability effect in the size dimension. Percept Psychophys, 2001. 63(5): p. 918–26.

- 60.Niklaus M, Nobre AC, and van Ede F, Feature-based attentional weighting and spreading in visual working memory. Sci Rep, 2017. 7: p. 42384.

- 61.Vighneshvel T and Arun SP, Does linear separability really matter? Complex visual search is explained by simple search. J Vis, 2013. 13(11).

- 62.Scolari M and Serences JT, Adaptive allocation of attentional gain. J Neurosci, 2009. 29(38): p. 11933–42.

- 63.Scolari M, Byers A, and Serences JT, Optimal deployment of attentional gain during fine discriminations. J Neurosci, 2012. 32(22): p. 7723–33.

- 64.Becker SI, Oculomotor capture by colour singletons depends on intertrial priming. Vision Res, 2010. 50(21): p. 2116–26.

- 65.Becker SI, et al. , Visual search for color and shape: when is the gaze guided by feature relationships, when by feature values? J Exp Psychol Hum Percept Perform, 2014. 40(1): p. 264–91.* This paper reports saccadic eye-movement and manual behaviors showing attentional capture by distractors that matched the relative attributes of the target (in relation to the distractor set) rather than by distractors that were more similar to the target itself.

- 66.Muller HJ, Heller D, and Ziegler J, Visual search for singleton feature targets within and across feature dimensions. Perception & Psychophysics, 1995. 57(1): p. 1–17.

- 67.Pollmann S, et al. , Neural correlates of visual dimension weighting. Visual Cognition, 2006. 14(4–8): p. 877–897.

- 68.Reeder RR, Hanke M, and Pollmann S, Task relevance modulates the cortical representation of feature conjunctions in the target template. Sci Rep, 2017. 7(1): p. 4514.

- 69.Irons JL and Leber AB, Characterizing individual variation in the strategic use of attentional control. J Exp Psychol Hum Percept Perform, 2018. 44(10): p. 1637–1654.

- 70.Irons JL and Leber AB, Choosing attentional control settings in a dynamically changing environment. Atten Percept Psychophys, 2016. 78(7): p. 2031–48.

- 71.Bae GY, et al. , Stimulus-specific variability in color working memory with delayed estimation. J Vis, 2014. 14(4).

- 72.Brouwer GJ and Heeger DJ, Categorical clustering of the neural representation of color. Journal of Neuroscience, 2013. 33(39): p. 15454–15465.

- 73.Nagy AL and Sanchez RR, Critical color differences determined with a visual search task. J Opt Soc Am A, 1990. 7(7): p. 1209.

- 74.Charest I, et al. , Unique semantic space in the brain of each beholder predicts perceived similarity. Proc Natl Acad Sci U S A, 2014. 111(40): p. 14565–70.

- 75.Goldstone RL and Barsalou LW, Reuniting perception and conception. Cognition, 1998. 65: p. 231–262.

- 76.Hout MC, et al. , Using multidimensional scaling to quantify similarity in visual search and beyond. Attention Perception & Psychophysics, 2016. 78(1): p. 3–20.

- 77.Mur M, et al. , Human Object-Similarity Judgments Reflect and Transcend the Primate-IT Object Representation. Front Psychol, 2013. 4: p. 128.

- 78.Kriegeskorte N and Mur M, Inverse MDS: Inferring Dissimilarity Structure from Multiple Item Arrangements. Front Psychol, 2012. 3: p. 245.

- 79.Nosofsky RM, Attention, similarity, and the identification-categorization relationship. J Exp Psychol Gen, 1986. 115(1): p. 39–61.

- 80.Yu CP, Maxfield JT, and Zelinsky GJ, Searching for Category-Consistent Features: A Computational Approach to Understanding Visual Category Representation. Psychol Sci, 2016. 27(6): p. 870–84.

- 81.Cohen MA, et al. , Visual search for object categories is predicted by the representational architecture of high-level visual cortex. J Neurophysiol, 2017. 117(1): p. 388–402.

- 82.Becker SI, Determinants of dwell time in visual search: similarity or perceptual difficulty? PLoS One, 2011. 6(3): p. 1–5.

- 83.Moores E, Laiti L, and Chelazzi L, Associative knowledge controls deployment of visual selective attention. Nat Neurosci, 2003. 6(2): p. 182–9.

- 84.Seidl-Rathkopf KN, Turk-Browne NB, and Kastner S, Automatic guidance of attention during real-world visual search. Atten Percept Psychophys, 2015. 77(6): p. 1881–95.

- 85.Torralba A, et al. , Contextual guidance of eye movements and attention in real-world scenes: the role of global features in object search. Psychol Rev, 2006. 113(4): p. 766–86.

- 86.Alexander RG and Zelinsky GJ, Visual similarity effects in categorical search. J Vis, 2011. 11(8).