Abstract

Radiographic assessment with magnetic resonance imaging (MRI) is widely used to characterize gliomas, which represent 80% of all primary malignant brain tumors. Unfortunately, glioma biology is marked by heterogeneous angiogenesis, cellular proliferation, cellular invasion, and apoptosis. This translates into varying degrees of enhancement, edema, and necrosis, making reliable imaging assessment challenging. Deep learning, a subset of machine learning, has gained traction as an effective method for solving image-based problems, including those in medical imaging. This review summarizes current deep learning applications in glioma detection and outcome prediction, focusing on (1) pre- and post-operative tumor segmentation, (2) genetic characterization of tissue, and (3) prognostication. We demonstrate that deep learning methods of segmenting, characterizing, grading, and predicting survival in gliomas are promising opportunities that may enhance both research and clinical activities.

Keywords: glioma, glioblastoma, machine learning, artificial intelligence, deep learning, neural network

1. Introduction

Radiographic assessment with magnetic resonance imaging (MRI) is widely used to characterize gliomas, which represent a third of all brain tumors and 80% of all primary malignant brain tumors [1]. From a clinical perspective, imaging is often used preoperatively for diagnosis and prognostication, and post-operatively for surveillance. From a research perspective, MRI assessment provides a standardized method with which to establish patient baselines and identify endpoints for monitoring response to therapy during clinical trial enrollment and participation. Unfortunately, obtaining reliable, quantitative imaging assessment is complicated by the variegation of glioma biology, which is marked by heterogeneous angiogenesis, cellular proliferation, cellular invasion, and apoptosis [2].

Several approaches have emerged to standardize visual interpretation of malignant gliomas for tissue classification. For example, the Visually AcceSAble Rembrandt Images (VASARI) feature set is a rules-based lexicon to improve reproducibility of glioma interpretation [3]. Gutman et al. [4] successfully implemented this analysis for routine structural images within 75 glioblastoma multiforme (GBM) patients from The Cancer Genome Atlas (TCGA) portal and observed lower levels of contrast enhancement within proneural GBMs and lower levels of the non-enhanced tumor for mesenchymal GBMs. Human-designed rule-based systems such as VASARI have improved the reproducibility of glioma interpretation [3,5,6], but a small number of numeric descriptors are inadequate to capture the complexity of a typical MRI scan with over a million voxels, constituting a “Big Data” problem.

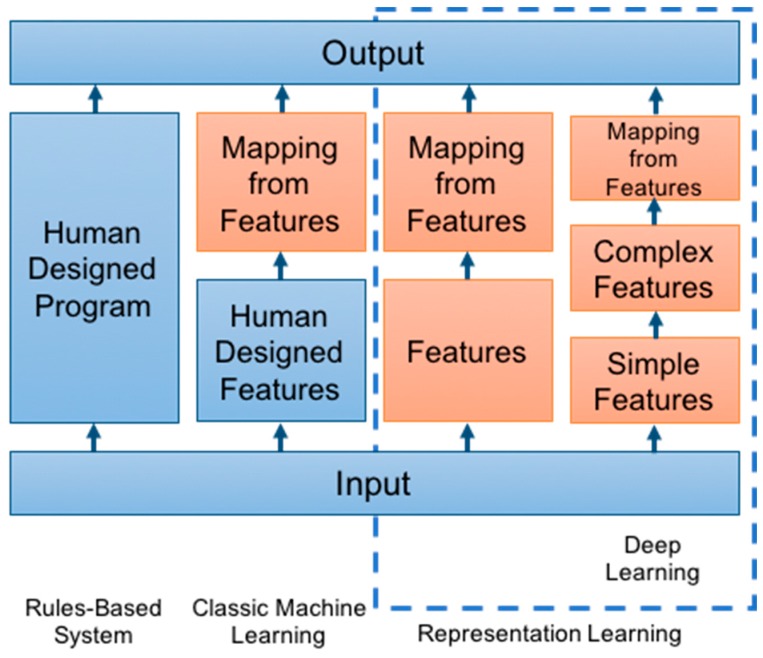

Machine learning approaches are uniquely suited to tackle such Big Data challenges. They utilize computational algorithms to parse and learn from data, and ultimately make a determination given the input variables. Machine learning has been used to train computers for pattern recognition, a task that usually requires human intelligence (Figure 1) [7]. Classic machine learning approaches employ human-designed feature extraction to distinguish tumor characteristics, and this has improved the accuracy of identifying tumor features on imaging [8]. For example, Hu et al. [9] utilized hand-crafted features derived from textural metrics to first characterize 48 biopsies from 13 patients, which were ultimately used as input to a decision tree classifier to predict underlying tumor molecular alterations. In Hu et al.’s example, the textural analysis takes advantage of manually identified features reflecting a priori (pre-selected) human expert assumptions about imaging metrics relevant to tumor biology [9]. While this exploratory study provided a framework for future studies evaluating image-based signatures of intra-tumoral variability, the performance of this classic machine learning approach is limited by the ability of the a priori features alone to capture all the relevant image variance needed to predict tumor mutations [10].

Figure 1.

Adapted from Goodfellow et al. [7]. Flowchart of the varying machine learning components across different disciplines, increasing in sophistication from left to right. Orange boxes denote trainable components.

By contrast, deep learning approaches do not require pre-selection of features and can, instead, learn which features are most relevant for classification and/or prediction. Deep learning, a subset of machine learning, can extract features, analyze patterns, and classify information by learning multiple levels of lower and higher-order features [11]. Lower-order features, for example, would include corners, edges, and other basic shapes. Higher-order features would include different gradations of image texture, more refined shapes, and image-specific patterns [12]. Furthermore, deep learning neural networks are capable of determining more abstract and higher-order relationships between features and data [11]. The state-of-the-art approach for image classification is currently deep learning through convolutional neural networks (CNNs). For reference, CNN approaches represent all recent winning entries within the annual ImageNet Classification Challenge, consisting of over one million photographs in 1000 object categories, with a 3.6% classification error rate to date [13,14]. CNN approaches model the animal visual cortex by applying a feed-forward artificial neural network to simulate multiple layers of inputs where the individual neurons are organized to respond to overlapping regions within a visual field [15]. Because it functions similarly to a human brain in recognizing, processing, and analyzing visual image features, a CNN approach is very effective for image recognition applications [16].
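To make the notion of a lower-order feature concrete, the following is a minimal sketch of a single convolution pass in plain Python. It is an illustration only: the toy image, the hand-set vertical-edge kernel, and the function name `convolve2d_valid` are assumptions introduced here and are not taken from the cited works. In a CNN the kernel weights would be learned from data rather than fixed by hand.

```python
def convolve2d_valid(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map.

    As in most deep learning libraries, this is technically cross-correlation:
    the kernel is not flipped before it is applied.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# Toy 4x6 "image": dark region (0) on the left, bright region (1) on the right.
toy_image = [[0, 0, 0, 1, 1, 1] for _ in range(4)]

# Hand-set vertical-edge kernel (illustrative); a CNN would learn such weights.
vertical_edge_kernel = [[-1, 0, 1],
                        [-1, 0, 1],
                        [-1, 0, 1]]

response = convolve2d_valid(toy_image, vertical_edge_kernel)
print(response)  # non-zero responses appear only where the window spans the edge
```

The response is non-zero only where the kernel window straddles the dark-to-bright boundary, which is the sense in which an early layer "detects" an edge. Deeper layers combine many such maps into the higher-order features described above.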

The purpose of this review is to summarize deep learning applications used in the field of glioma detection, characterization, and outcome prediction, with a focus on methods that seek to (1) quantify disease burden, (2) determine textural and genetic characterization of tumor and surrounding tissue, and (3) predict prognosis from imaging information.

2. Pre- and Post-Operative Tumor Segmentation: Quantification of Disease Burden

Quantitative metrics are needed for therapy guidance, risk stratification, and outcome prognostication, both pre- and post-operatively [17]. Simple radiographic monitoring with freehand measurements of the amount of contrast-enhancing tumor in 2 or 3 planes is commonly used for estimating disease burden; however, single-dimensional techniques may be inaccurate and may not reflect change in actual tumor burden [18], particularly given the propensity of high-grade tumors to grow in a non-uniform and unpredictable fashion. Manual brain tumor segmentation represents a potential solution and involves separating tumor tissues such as edema and necrosis from normal brain tissue such as gray matter, white matter, and cerebrospinal fluid [19]. However, manual segmentation is both time-consuming and subject to reader variability, making fast and reproducible segmentations challenging. Machine learning approaches represent a potential solution to meet these challenges. A summary of recent machine learning architectures and approaches used to segment both pre- and post-operative GBMs is listed in Table 1.

Table 1.

Machine learning architectures and approaches used to segment both pre-operative and post-operative GBMs. Dice score (Sørensen-Dice coefficient) is a statistic used for comparing the spatial overlap of two binary images and is routinely used for tissue classification assessment. Dice scores closer to 1 indicate stronger overlap and accuracy [20].

| Author | Approach | Feature | Training Size | Results |
|---|---|---|---|---|
| Chen et al. [21] | Connected CNN | Necrotic and non-enhancing tumor, peritumoral edema, and Gd-enhancing tumor | 210 patients | Dice scores: 0.72 whole tumor, 0.81 enhancing tumor, 0.83 core |
| Havaei et al. [22] | Two Pathway CNN | Local and global features | 65 patients | Dice scores: 0.81 whole tumor, 0.58 enhancing tumor, 0.72 core |
| Yi et al. [23] | 3D CNN | Tumor edges | 274 patients | Dice scores: 0.89 whole tumor, 0.80 enhancing tumor, 0.76 core |
| Rao et al. [24] | CNN | Non-tumor, necrosis, edema, non-enhancing tumor, enhancing tumor | 10 patients | Accuracy: 67% |

The Multimodal Brain Tumor Image Segmentation (BraTS) dataset, which was created in 2012, has been extensively used to demonstrate the efficacy of deep learning applications in segmenting pre-operative GBMs [25]. The BraTS dataset gave deep learning designers and programmers access to hundreds of GBM images and has become a benchmark for GBM segmentation performance [26]. For example, using the 2017 BraTS data [27], Chen et al. [21] developed a neural network which hierarchically segmented the necrotic and non-enhancing tumor, peritumoral edema, and enhancing tumor, resulting in mean Dice coefficients for whole tumor, enhancing tumor, and core of 0.72, 0.81, and 0.83, respectively. The Dice coefficient is a statistic used for comparing the spatial overlap of two binary images and is routinely used for tissue classification assessment [20]. It ranges between 0 and 1, where 0 indicates no overlap and 1 indicates exact overlap. In contrast to Chen et al., Havaei et al. [22] exploited both local features and global contextual features simultaneously by using a CNN that can extract both small image details and the broader context of the image. They achieved mean Dice coefficients of 0.81, 0.58, and 0.72 for whole tumor, enhancing tumor, and core, respectively. More importantly, every winning entry for the BraTS competition has since been a neural network implementation.
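The Dice coefficient described above can be sketched in a few lines of plain Python. This is an illustrative implementation under stated assumptions: masks are given as flat lists of 0/1 voxel labels, the function name `dice_coefficient` and the toy masks are invented for the example, and the handling of two empty masks is a convention chosen here rather than one specified in the cited studies.

```python
def dice_coefficient(predicted, reference):
    """Dice = 2 * |A and B| / (|A| + |B|) for two binary masks of equal length."""
    if len(predicted) != len(reference):
        raise ValueError("masks must contain the same number of voxels")
    intersection = sum(1 for p, r in zip(predicted, reference) if p and r)
    total = sum(1 for p in predicted if p) + sum(1 for r in reference if r)
    if total == 0:
        return 1.0  # convention chosen here: two empty masks count as identical
    return 2.0 * intersection / total


# Toy flattened masks (1 = tumor voxel, 0 = background); values are hypothetical.
predicted_mask = [0, 1, 1, 1, 0, 0, 1, 0]
reference_mask = [0, 1, 1, 0, 0, 1, 1, 0]

score = dice_coefficient(predicted_mask, reference_mask)
print(f"Dice = {score:.2f}")
```

In this toy case each mask labels four voxels and three of them coincide, so the score is 2 × 3 / (4 + 4) = 0.75. In practice the same computation is applied separately to each sub-region (whole tumor, enhancing tumor, core), which is why the studies above report three values.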

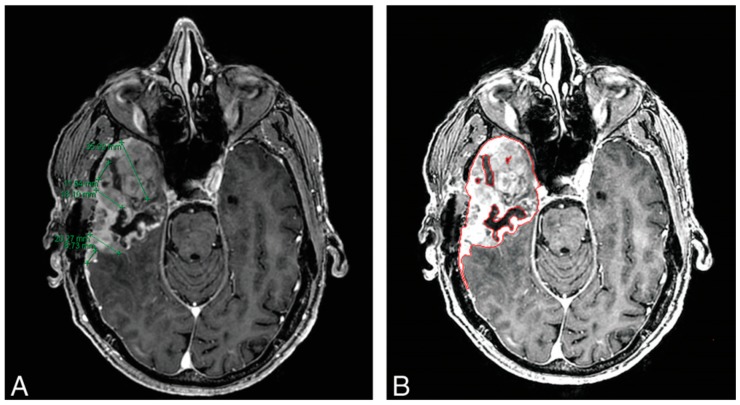

In post-operative surveillance, MRI with gadolinium contrast is the standard for determining tumor growth (denoted by an increase in contrast-enhancing tumor) and tumor response (denoted by a decrease in contrast-enhancing tumor size) [3,28]. Both the Response Assessment in Neuro-Oncology (RANO) and Macdonald criteria for GBM assessment rely on two-dimensional measurements of the contrast-enhanced area, namely the product of the two maximum diameters of the enhancing tissue [3]. Although this approach is simple, widely available, and requires minimal training, there are concerns about its reproducibility and accuracy. For example, while linear measurements may be sufficient for rounded nodular lesions, these measurements may be inaccurate for tumors with irregular margins, a feature common in high-grade gliomas given their propensity for necrosis and eccentric growth [11]. The variegation of glioma pathogenesis and subsequent response to therapy contributes to myriad patterns observed on imaging and may result in classifying effective treatments as ineffective or ineffective treatments as effective, underscoring the need for reliable, reproducible, and accurate tools of surveillance [29]. Volumetric assessment techniques have shown better accuracy in determining tumor size when compared to linear methods in several studies [30,31,32]. Additionally, Dempsey et al. [33] demonstrated that volumetric analysis of tumor size served as a stronger predictor of survival compared to linear-based techniques. Kanaly et al. [34] demonstrated that a semi-automatic approach to brain tumor volume assessment reduced inter-observer variability while being highly reproducible. Quantifying the entire tumor in three dimensions would therefore provide more precise measurements of tumor size (Figure 2) [35].

Figure 2.

Comparison of linear 1D measurements (A) and machine learning volumetric analysis (B) in a 64-year-old man with GBM, 11 weeks following resection. Chow et al. [35] found volumetric analysis preferable given the irregularity of recurrence. Panel A indicates the challenges of selecting greatest dimensions in 2D, while panel B shows how a semi-automated volumetric approach can accurately capture greatest dimensions.
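The difference between a bidimensional measurement and a volumetric one can be illustrated with a short sketch. Everything numeric below is hypothetical: the two lesions, their diameters, their voxel counts, and the assumed 1 mm isotropic voxel size are invented for the example, and the function names are not drawn from any cited tool.

```python
def bidimensional_product_mm2(longest_diameter_mm, perpendicular_diameter_mm):
    """Product of the two maximum perpendicular diameters of enhancing tissue."""
    return longest_diameter_mm * perpendicular_diameter_mm


def segmented_volume_ml(voxel_count, voxel_size_mm):
    """Volume of a segmentation mask: number of labelled voxels times voxel volume."""
    dx, dy, dz = voxel_size_mm
    return voxel_count * dx * dy * dz / 1000.0  # 1 mL = 1000 mm^3


# Hypothetical lesions: identical greatest diameters, different shapes.
nodular = {"diameters_mm": (40.0, 30.0), "voxels": 12000}
irregular = {"diameters_mm": (40.0, 30.0), "voxels": 6000}
voxel_size_mm = (1.0, 1.0, 1.0)  # assumed isotropic 1 mm voxels

for name, lesion in (("nodular", nodular), ("irregular", irregular)):
    product = bidimensional_product_mm2(*lesion["diameters_mm"])
    volume = segmented_volume_ml(lesion["voxels"], voxel_size_mm)
    print(f"{name}: product = {product:.0f} mm^2, volume = {volume:.1f} mL")
```

Both hypothetical lesions yield the same 1200 mm² product, yet their segmented volumes differ twofold (12 mL versus 6 mL). This is the kind of discrepancy that motivates volumetric assessment for irregularly shaped tumors.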

For accurate quantification, deep learning has been applied to estimate tumor volume in post-operative GBMs. Yi et al. [23] used the 2015 BraTS dataset, which contained post-operative GBMs [26], and implemented a CNN that combined 4 imaging modalities (T1 pre-contrast, T1 post-contrast, T2, and FLAIR) at the beginning of the CNN architecture. Yi et al. also used special detection of the tumor edges for faster training and achieved Dice scores of 0.89, 0.80, and 0.76 for whole tumor, enhancing tumor, and core, respectively. Rao et al. [24] examined post-operative GBMs by training with the 2015 BraTS dataset and applied a deep learning network combined with a random forest for separately classifying non-tumor tissue, necrosis, edema, non-enhancing tumor, and enhancing tumoral tissue. These studies demonstrated the capabilities of deep learning approaches to improve quantification of disease burden.

3. Characterization: Pseudoprogression

Distinguishing pseudoprogression from true tumor progression is important for identifying appropriate treatment options for glioma patients. Presently, the only accepted methods to distinguish true progression of disease (PD) from treatment-related pseudoprogression of disease (psPD) are invasive tissue sampling and short-interval clinical follow-up with imaging, which may delay and compromise disease management in an aggressive tumor [36,37]. The RANO criteria include methods for psPD evaluation but remain limited [38]. For example, Nasseri et al. [39] demonstrated that many psPD cases were not accurately identifiable with RANO criteria. In addition, Abbasi et al.’s [40] meta-analysis demonstrated that cases of psPD in high-grade gliomas were more frequent than most studies reported, comprising 36% of all cases identified by post-treatment MRI contrast enhancement.

Classic machine learning methods have been more robustly explored than deep learning models for characterizing psPD on imaging. Hu et al.’s [41] Support Vector Machine (SVM) approach (a traditional linear machine learning technique) examining multiparametric MRI data from 31 patients yielded an optimized classifier for psPD with a sensitivity of 89.9%, specificity of 93.7%, and area under the ROC curve of 0.944. Other machine learning methods explored in assessing psPD have included unsupervised clustering [42] and spatio-temporal discriminative dictionary learning schemes [43]. Though deep learning methods have been used less often, they are showing promise for distinguishing psPD from true PD. Jang et al. [44] assessed a hybrid approach that coupled a deep learning CNN algorithm to a long short-term memory (LSTM) network, a recurrent neural network architecture, to form a CNN-LSTM method for determining psPD versus tumor PD in GBM. Their dataset consisted of clinical and MRI data from two institutions, with 59 patients in the training cohort and 19 patients in the testing cohort. Their CNN-LSTM structure, utilizing both clinical and MRI data, outperformed the two comparison models of CNN-LSTM with MRI data alone and a Random Forest structure with clinical data alone, yielding an AUC (area under the curve) of 0.83, an AUPRC (area under the precision-recall curve) of 0.87, and an F-1 score of 0.74 [44]. This example indicates that a deep learning approach can outperform a more traditional machine learning approach in analyzing images.
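The performance measures quoted in this section (sensitivity, specificity, F-1 score, and AUC) can be computed as in the sketch below. It is a minimal illustration assuming binary labels where 1 marks the positive class; the toy labels, scores, 0.5 decision threshold, and function names are all hypothetical and unrelated to the cited datasets. The AUC is computed with the pairwise-ranking definition rather than by integrating a curve.

```python
def confusion_counts(y_true, y_pred):
    """Return (tp, tn, fp, fn) for binary labels where 1 is the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp, tn, fp, fn


def sensitivity_specificity_f1(y_true, y_pred):
    """Assumes both classes are present and at least one positive is predicted."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    sensitivity = tp / (tp + fn)  # also called recall
    specificity = tn / (tn + fp)
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return sensitivity, specificity, f1


def roc_auc(y_true, scores):
    """Probability that a random positive scores higher than a random negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Hypothetical classifier outputs for six cases.
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.7, 0.3, 0.2]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold

sens, spec, f1 = sensitivity_specificity_f1(y_true, y_pred)
auc = roc_auc(y_true, scores)
print(f"sensitivity={sens:.3f} specificity={spec:.3f} F1={f1:.3f} AUC={auc:.3f}")
```

Sensitivity, specificity, and F-1 depend on the chosen threshold, whereas AUC summarizes ranking quality across all thresholds. That difference is one reason studies such as those above report several measures side by side.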

4. Characterization: Radiogenomics

Characterizing genetic features of gliomas is important for both prognosis and predicting response to therapy. For example, isocitrate dehydrogenase (IDH)-mutant GBMs are characterized by significantly longer survival than IDH-wild-type GBMs (31 months vs. 15 months) [45,46]. Recognition of the importance of genetic information has led the World Health Organization (WHO) to place considerable emphasis on the integration of genetic information, including IDH status, into its classification schemes in its 2016 update [47]. Regarding treatment response, it is becoming increasingly evident that GBMs’ differing genetic attributes also result in mixed responses [48]. One of the early alterations discovered was O6-methylguanine-DNA methyltransferase (MGMT) promoter silencing, which reduces tumor cells’ ability to repair DNA damage from alkylating agents such as temozolomide (TMZ). Hegi et al. [49] subsequently observed MGMT promoter methylation in 45% of GBM patients, who demonstrated a survival benefit when treated with a combination of TMZ and radiotherapy versus radiotherapy alone (21.7 months versus 15.3 months).

Currently, genomic profiling is performed on tissue samples from enhancing tumoral components. However, securing tumor-rich biopsies is challenging and a TCGA report observed that only 35% of submitted biopsy samples contained adequate tumoral content [50]. In addition, tumors may be surgically inaccessible when eloquent areas of the brain are involved. Furthermore, biospecimens are typically required for clinical trial entry, which may be delayed as patients wait weeks before and after resection for genetic results to return. The growing field of radiomics—the extraction and detection of quantitative features from radiographic imaging through computerized algorithms—seeks to address this problem by extracting quantitative imaging features that may lead to a better understanding of the characteristics of a particular disease state [51,52,53]. Imaging features of gliomas have been linked to genetic features [54] and are strongly correlated with particular subtypes of glioma and overall patient survival [4,28]. This has led to the creation of tools and methods such as VASARI, which seek to standardize glioma characterization through identification of outlined imaging features [3]. Radiogenomics, a branch of radiomics, holds particular promise as a non-invasive means of determining tumor genomics through non-labeled radiographic imaging. This push towards utilizing MR imaging to classify and characterize gliomas has made deep learning applications an especially appealing means of quickly and automatically characterizing gliomas.

Levner et al. [55] were one of the earliest groups to use neural networks to predict tumoral genetic subtypes from imaging features. Their model sought to predict MGMT promoter methylation status in newly diagnosed GBM patients using features extracted by space-frequency texture analysis based on the S-transform of brain MRIs. Levner’s group achieved an accuracy of 87.7% across 59 patients, among which 31 patients had biopsy-confirmed MGMT promoter methylated tumors [55]. Korfiatis et al. [56] compared 3 different Residual CNN methods to predict MGMT promoter methylation status on 155 brain MRIs without a distinct tumor segmentation step. Residual CNNs employ many more layers than the traditional CNN architectures for training data [13,56,57]. The ResNet50 architecture outperformed ResNet34 and ResNet18, with accuracies of 94.90%, 80.72%, and 76.75%, respectively, despite the absence of a tumor segmentation step [56]. Ken Chang et al. [58] applied a Residual CNN to 406 preoperative brain MRIs (T1 pre- and post-contrast, T2, and FLAIR) of Grade II to Grade IV gliomas, acquired from 3 different institutions, to predict IDH mutation status. Their group achieved IDH prediction accuracy of 82.8% for the training set, 83.0% for the validation set, and 85.7% for the testing set. They noted slight increases in each when patient age at diagnosis was included [58].

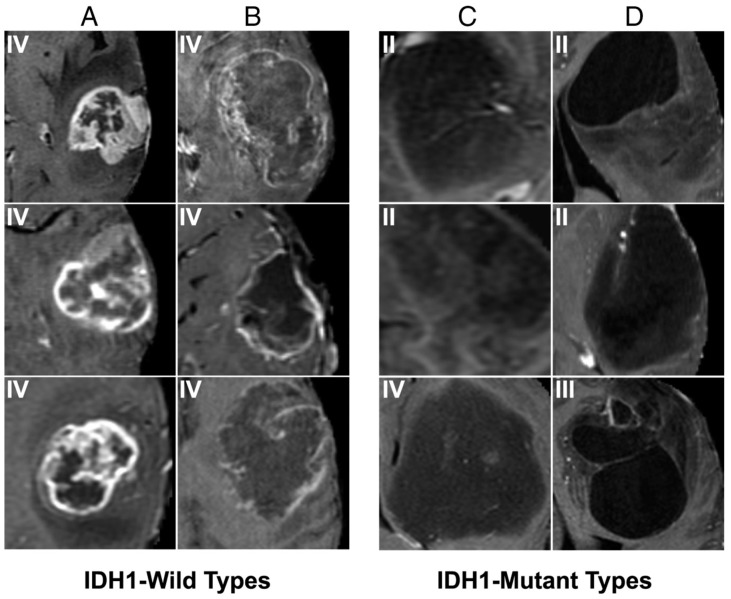

Many deep learning approaches have also successfully characterized single tumoral genetic mutations on brain imaging. Chang et al. [59] described a CNN for accurate prediction of IDH1, MGMT methylation, and 1p/19q co-deletion status from 256 brain MRIs from The Cancer Imaging Archive dataset. They achieved an accuracy of 94% for IDH status, 92% for 1p/19q co-deletion status, and 83% for MGMT promoter methylation status. In addition, they applied a dimensionality reduction approach (principal component analysis) to the final CNN layer to visually display the highest ranking features for each category, an important step towards explainable deep learning approaches (Figure 3) [59].

Figure 3.

Prototypical imaging features associated with IDH mutation status [59]. Our CNN demonstrated that T1 post-contrast features predict IDH1 mutation status. Specifically, IDH wild types are characterized by thick and irregular enhancement (A) or thin, irregular, poorly-margined, peripheral enhancement (B). In contrast, patients with IDH mutations show minimal enhancement (C) and well-defined tumor margins (D).

A variety of other deep learning methods have been employed to assess genetic and cellular character on radiographic imaging. Several other studies, in addition to those discussed, are listed in Table 2 [55,60,61,62,63,64,65,66]. With the advent of these new technologies, radiogenomic characterization of tumors continues to grow as a means of non-invasive assessment of brain tumors.

Table 2.

A summary of deep learning methods in the characterization of gliomas on MR imaging.

| Author | Year | Character Assessed | Type of DL | Patient Number | MRI Number | Accuracy | AUC | AUPRC | F-1 |
|---|---|---|---|---|---|---|---|---|---|
| Bum-Sup Jang et al. [44] | 2018 | Pseudoprogression | Hybrid deep and machine learning CNN-LSTM | 78 | | | 0.83 | 0.87 | 0.74 |
| Zeynettin Akkus et al. [66] | 2015 | 1p/19q Co-Deletion | Multi-Scale CNN | 159 | | 87.70% | | | |
| Panagiotis Korfiatis et al. [56] | 2017 | MGMT Promoter Methylation Status | ResNet50 | 155 | | 94.90% | | | |
| | | | ResNet34 | | | 80.72% | | | |
| | | | ResNet18 | | | 76.75% | | | |
| Ken Chang et al. [58] | 2018 | IDH Mutant Status | Residual CNN (ResNet34) | 406 | | 82.8% training | | | |
| | | | | | | 83.0% validation | | | |
| | | | | | | 85.7% testing | | | |
| Peter Chang et al. [59] | 2018 | IDH Mutant Status | CNN | 256 | | 94% | | | |
| | | 1p/19q Co-Deletion | | | | 92% | | | |
| | | MGMT Promoter Methylation Status | | | | 83% | | | |
| Sen Liang et al. [60] | 2018 | IDH Mutant Status | Multimodal 3D DenseNet | 167 | | 84.60% | 85.70% | | |
| | | | Multimodal 3D DenseNet with transfer learning | | | 91.40% | 94.80% | | |
| Jinhua Yu et al. [61] | 2017 | IDH Mutant Status | CNN Segmentation | 110 | | 0.80 | | | |
| Lichy Han and Maulik Kamdar [62] | 2018 | MGMT Promoter Methylation Status | Convolutional Recurrent Neural Network (CRNN) | 262 | 5235 | 0.62 testing | | | |
| | | | | | | 0.67 validation | | | |
| | | | | | | 0.97 training | | | |
| Zeju Li et al. [65] | 2017 | IDH1 Mutation Status | CNN | 151 | | | 92% | | |
| | | | | | | | 95% (multi-modal MRI) | | |
| Chenjie Ge et al. [63] | 2018 | High Grade vs. Low Grade Glioma | 2D-CNN | 285 | 285 | 91.93% training | | | |
| | | | | | | 93.25% validation | | | |
| | | | | | | 90.87% test | | | |
| | | 1p/19q Co-Deletion | | 159 | 159 | 97.11% training | | | |
| | | | | | | 90.91% validation | | | |
| | | | | | | 89.39% test | | | |
| Peter Chang et al. [64] | 2017 | Heterogeneity/Cellularity | CNN | 39 | 36 MRI, 91 biopsies | r = 0.74 | | | |
| Ilya Levner et al. [55] | 2009 | MGMT Promoter Methylation Status | ANN | 59 | | 87.70% | | | |

5. Prognostication

Machine learning approaches are increasingly being explored as methods of automatically and accurately grading and predicting prognosis in glioma patients. Various machine learning approaches, including Support Vector Machine (SVM) classifiers, have been utilized in grading and evaluating the prognosis of gliomas. These strategies predominantly rely on extracting distinguishing features of gliomas from pre-existing patient data to build a prototype model. Both tasks, grading and prognostication, inform important clinical considerations such as monitoring disease progression and recurrence, developing personalized surgical and chemo-radiotherapy treatment plans, and examining treatment response.

For example, Zhang et al. [67] explored the use of SVM in grading gliomas in 120 patients. These investigators combined SVM with the Synthetic Minority Over-sampling Technique (i.e., over-sampling the abnormal class and under-sampling the normal class) and were able to classify low-grade and high-grade gliomas with 94–96% accuracy. More recently, SVM classifiers have been applied in evaluating glioma patients’ prognosis. In a study with 105 high-grade glioma patients, Macyszyn et al. [68] demonstrated that their SVM model could classify patients’ survival into short or long-term categories with an accuracy range of 82–88%. In another study with 235 patients, Emblem et al. [69] developed an SVM classifier utilizing histogram data of whole tumor relative cerebral blood volume (rCBV) to predict pre-operative glioma patient overall survival (OS). The sensitivity, specificity, and AUC were 78%, 81%, and 0.79 at 6 months and 85%, 86%, and 0.85 at 3 years for OS prediction [69]. A systematic literature review by Sarkiss et al. [70] provides evidence of the many applications of machine learning in exploring this topic. Twenty-nine studies from 2000 to 2018 using machine learning in neuro-oncology, totaling 5346 patients, were included; among the 2483 patients with prediction outcomes, sensitivity ranged from 78–93% and specificity from 76–95% [70]. These studies highlight the efficacy and accuracy of machine learning-based models in determining patients’ OS compared to human readers (board-certified radiologists), who are prone to the variability and subjectivity inherent in human perception and interpretation.
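The over-sampling idea behind the Synthetic Minority Over-sampling Technique can be sketched as follows. This is a simplified illustration of the general interpolation step, not the procedure used by Zhang et al. [67]: the function name `smote_like_oversample`, the two-dimensional toy feature vectors, the neighbour count `k`, and the fixed random seed are all assumptions made for the example.

```python
import random


def smote_like_oversample(minority, n_new, k=2, seed=0):
    """Create `n_new` synthetic minority samples by interpolating between a
    randomly chosen minority sample and one of its k nearest minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # Rank the other minority samples by squared Euclidean distance to `base`.
        others = [p for p in minority if p is not base]
        others.sort(key=lambda p: sum((a - b) ** 2 for a, b in zip(p, base)))
        neighbour = rng.choice(others[:k])
        u = rng.random()  # interpolation fraction in [0, 1)
        synthetic.append([a + u * (b - a) for a, b in zip(base, neighbour)])
    return synthetic


# Hypothetical two-feature vectors for an under-represented class.
minority_samples = [[1.0, 2.0], [1.5, 1.8], [2.0, 2.5], [1.2, 2.2]]
new_samples = smote_like_oversample(minority_samples, n_new=6)
print(len(new_samples), "synthetic samples generated")
```

Because every synthetic point lies on a line segment between two real minority samples, the class is enlarged without simply duplicating existing cases. The balanced set would then be passed to the classifier, an SVM in the study above.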

Deep learning-based radiomics models have also been proposed for survival prediction of glioma patients. For example, Nie et al. [71] hybridized a traditional SVM approach with a deep learning architecture. This deep learning architecture involved a three-dimensional CNN that extracted defining features from pre-existing brain tumors. When combined with SVM, this two-step method achieved an accuracy of 89% in predicting OS in a cohort of 69 patients with high-grade gliomas. Their findings suggest that deep learning methods coupled with linear machine learning classifiers can result in the accurate prediction of OS.

While still in their introductory stages, deep learning approaches are promising tools for accurate and expeditious interpretation of complex data that minimize human error and bias.

6. Challenges

Several substantive challenges remain for deep learning radiographic analysis, including the relative lack of annotated data [72], limited algorithm generalizability, and barriers to integration into the clinical workflow. Proper training and convergence of deep learning algorithms currently requires a tremendous volume of high-quality, well-annotated data; however, such datasets are difficult to aggregate in large part due to regulatory bottlenecks that limit sharing of patient data between institutions. In addition, retrospective data acquired during routine clinical care may be inconsistent (e.g., scanned at variable time intervals and/or follow-up). Finally, even if large cohorts across many hospitals can be aggregated, annotation is a time-consuming process requiring a high level of expertise. Future development of customized semi-automated labeling tools and iterative re-annotation strategies may provide an effective solution by relying on machine learning techniques to provide initial ground-truth estimates, which are then refined by human experts [15]. This may allow for faster production of the better-quality annotated input data necessary to effectively train deep learning algorithms.

Related to the problem of small datasets is the well-documented capacity of a deep learning algorithm with millions of parameters to over-fit to a single, specific training cohort, resulting in an artificially inflated algorithm accuracy [73]. This is especially true given the relatively limited curated datasets currently observed in radiographic research. In addition to striving for large, heterogeneous datasets, there have been several methods developed that attempt to address this limitation, including the addition of feature dropout described by Srivastava et al. [73], L2 regularization [74], and batch normalization [75].
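The three techniques named above can each be written in a few lines. The sketch below uses plain Python and common textbook formulations (inverted dropout, a squared-weight penalty, and batch normalization without the learned scale and shift); it is not the implementation from [73,74,75], and the function names, drop probability, weight-decay value, and epsilon are illustrative assumptions.

```python
import math
import random


def inverted_dropout(activations, drop_prob, rng):
    """Zero each activation with probability `drop_prob` during training and
    rescale the survivors so the expected activation is unchanged."""
    if not 0.0 <= drop_prob < 1.0:
        raise ValueError("drop_prob must be in [0, 1)")
    keep_prob = 1.0 - drop_prob
    return [a / keep_prob if rng.random() < keep_prob else 0.0 for a in activations]


def l2_penalty(weights, weight_decay):
    """Term added to the training loss to discourage large weights."""
    return weight_decay * sum(w * w for w in weights)


def batch_normalize(values, epsilon=1e-5):
    """Shift and scale one feature across a mini-batch to zero mean and unit variance."""
    mean = sum(values) / len(values)
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(variance + epsilon) for v in values]


rng = random.Random(0)  # fixed seed so the sketch is repeatable
dropped = inverted_dropout([1.0] * 10, drop_prob=0.5, rng=rng)
penalty = l2_penalty([1.0, -2.0, 3.0], weight_decay=0.01)
normalized = batch_normalize([2.0, 4.0, 6.0, 8.0])
print(dropped, penalty, normalized)
```

Each technique limits over-fitting in a different way: dropout prevents units from co-adapting, the L2 term penalizes large weights, and batch normalization stabilizes the distribution of layer inputs during training. None of them substitutes for evaluating a model on an independent cohort.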

Additional challenges relate to the deployment of deep learning applications in a clinical setting. Challenges to employing computer-aided diagnosis (CAD) within the radiology workflow mirror expected challenges to the integration of AI methods. Radiologists are likely to reject newer technologies that prove disruptive to their workflow or have interfaces which are difficult to access and are not readily available on a normal PACS viewer [76,77]. A study by Karssemeijer et al. [78] examining the efficacy of CAD in breast mass detection on mammogram demonstrated overall lower performance of the CAD system compared with expert readers due to the larger number of false positives recorded for CAD. To be clinically useful, CAD (and by extension, deep learning) approaches would need to provide improved diagnostic capabilities while also optimizing normal workflow [79]. FDA regulatory restrictions also constrain the deployment of deep learning tools into a clinician’s toolbox. Currently there are few acceptable regulatory pathways for approval of deep learning for clinical use. One attempt to address this by the FDA is through the creation of the Digital Health Software Precertification (Pre-Cert) program [80]. More recently, the FDA released a proposed regulatory framework that seeks to allow for oversight of machine learning and other continuous learning models as medical devices [81]. While steps are underway to address the regulatory gap between deep learning research and clinical utility, this still exists as a barrier to effective clinical deployment.

7. Conclusions

Deep learning applications continue to provide effective solutions to problems in medical image analysis. Radiological sciences and the growing field of radiomics are well poised to incorporate deep learning techniques suited to rapid image analysis. Many opportunities are ripe for exploration in deep learning analysis of gliomas on radiographic imaging, including determining tumor heterogeneity, more extensive identification of tumor genotype, distinguishing progression from pseudoprogression, tumor grading, and survival prediction. This type of imaging analysis naturally bolsters the possibility for precision medicine initiatives in glioma treatment and management. Deep learning methods for segmenting, characterizing, grading, and predicting survival in glioma patients have helped lay the foundations for a more precise and accurate understanding of a patient’s unique tumoral characteristics. Better insights into both the quantitative and qualitative aspects of a patient’s disease may help open opportunities for enhanced patient care and outcomes in the future.

Funding

This research received no external funding. The APC was funded by CANON MEDICAL SYSTEMS CORPORATION, grant title: “Machine Learning Classification of Glioblastoma Genetic Heterogeneity”.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

References

- 1. Ostrom Q.T., Gittleman H., Fulop J., Liu M., Blanda R., Kromer C., Wolinsky Y., Kruchko C., Barnholtz-Sloan J.S. CBTRUS Statistical Report: Primary Brain and Central Nervous System Tumors Diagnosed in the United States in 2008–2012. Neuro Oncol. 2015;17(Suppl. 4):iv1–iv62. doi: 10.1093/neuonc/nov189.

- 2. Belden C.J., Valdes P.A., Ran C., Pastel D.A., Harris B.T., Fadul C.E., Israel M.A., Paulsen K., Roberts D.W. Genetics of glioblastoma: A window into its imaging and histopathologic variability. Radiographics. 2011;31:1717–1740. doi: 10.1148/rg.316115512.

- 3. Wiki for the VASARI Feature Set the National Cancer Institute Web Site. Available online: https://wiki.cancerimagingarchive.net/display/Public/VASARI+Research+Project (accessed on 10 April 2019).

- 4. Gutman D.A., Cooper L.A., Hwang S.N., Holder C.A., Gao J., Aurora T.D., Dunn W.D., Jr., Scarpace L., Mikkelsen T., Jain R., et al. MR imaging predictors of molecular profile and survival: Multi-institutional study of the TCGA glioblastoma data set. Radiology. 2013;267:560–569. doi: 10.1148/radiol.13120118.

- 5. Mazurowski M.A., Desjardins A., Malof J.M. Imaging descriptors improve the predictive power of survival models for glioblastoma patients. Neuro-Oncol. 2013;15:1389–1394. doi: 10.1093/neuonc/nos335.

- 6. Velazquez E.R., Meier R., Dunn W.D., Jr., Alexander B., Wiest R., Bauer S., Gutman D.A., Reyes M., Aerts H.J. Fully automatic GBM segmentation in the TCGA-GBM dataset: Prognosis and correlation with VASARI features. Sci. Rep. 2015;5:16822. doi: 10.1038/srep16822.

- 7. Goodfellow I., Bengio Y., Courville A. Deep Learning. The MIT Press; Cambridge, MA, USA: 2016.

- 8. Zacharaki E.I., Wang S., Chawla S., Soo Yoo D., Wolf R., Melhem E.R., Davatzikos C. Classification of brain tumor type and grade using MRI texture and shape in a machine learning scheme. Magn. Reson. Med. Off. J. Int. Soc. Magn. Reson. Med. 2009;62:1609–1618. doi: 10.1002/mrm.22147.

- 9. Hu L.S., Ning S., Eschbacher J.M., Baxter L.C., Gaw N., Ranjbar S., Plasencia J., Dueck A.C., Peng S., Smith K.A., et al. Radiogenomics to characterize regional genetic heterogeneity in glioblastoma. Neuro Oncol. 2017;19:128–137. doi: 10.1093/neuonc/now135.

- 10. Kassner A., Thornhill R.E. Texture analysis: A review of neurologic MR imaging applications. AJNR Am. J. Neuroradiol. 2010;31:809–816. doi: 10.3174/ajnr.A2061.

- 11. Deng L., Yu D. Deep learning: Methods and applications. Found. Trends® Signal Process. 2014;7:197–387. doi: 10.1561/2000000039.

- 12. Zeiler M.D., Fergus R. European Conference on Computer Vision. Springer; Cham, Switzerland: 2014. Visualizing and understanding convolutional networks; pp. 818–833.

- 13. He K., Zhang X., Ren S., Sun J. Deep Residual Learning for Image Recognition. arXiv. 2015. arXiv:1512.03385.

- 14. Krizhevsky A., Sutskever I., Hinton G. Imagenet classification with deep convolutional neural networks; Proceedings of the 25th International Conference on Neural Information Processing Systems-Volume 1; Lake Tahoe, NV, USA. 3–6 December 2012; pp. 1097–1105.

- 15. LeCun Y., Bengio Y., Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539.

- 16. LeCun Y., Huang F.J., Bottou L. Learning methods for generic object recognition with invariance to pose and lighting; Proceedings of the 2004 IEEE Computer Society Conference on Computer Vision and Pattern Recognition; Washington, DC, USA. 27 June–2 July 2004; pp. 97–104.

- 17. Reardon D.A., Galanis E., DeGroot J.F., Cloughesy T.F., Wefel J.S., Lamborn K.R., Lassman A.B., Gilbert M.R., Sampson J.H., Wick W., et al. Clinical trial end points for high-grade glioma: The evolving landscape. Neuro Oncol. 2011;13:353–361. doi: 10.1093/neuonc/noq203.

- 18. Henson J.W., Ulmer S., Harris G.J. Brain tumor imaging in clinical trials. Am. J. Neuroradiol. 2008;29:419–424. doi: 10.3174/ajnr.A0963.

- 19. Işın A., Direkoğlu C., Şah M. Review of MRI-based brain tumor image segmentation using deep learning methods. Procedia Comput. Sci. 2016;102:317–324. doi: 10.1016/j.procs.2016.09.407.

- 20. Zijdenbos A.P., Dawant B.M., Margolin R.A., Palmer A.C. Morphometric analysis of white matter lesions in MR images: Method and validation. IEEE Trans. Med. Imaging. 1994;13:716–724. doi: 10.1109/42.363096.

- 21. Chen L., Wu Y., DSouza A.M., Abidin A.Z., Wismüller A., Xu C. Medical Imaging 2018: Image Processing. Volume 10574. International Society for Optics and Photonics; Bellingham, WA, USA: 2018. MRI tumor segmentation with densely connected 3D CNN; p. 105741F.

- 22. Havaei M., Davy A., Warde-Farley D., Biard A., Courville A., Bengio Y., Pal C., Jodoin P.-M., Larochelle H. Brain tumor segmentation with deep neural networks. Med. Image Anal. 2017;35:18–31. doi: 10.1016/j.media.2016.05.004.

- 23. Yi D., Zhou M., Chen Z., Gevaert O. 3-D convolutional neural networks for glioblastoma segmentation. arXiv. 2016. arXiv:1611.04534.

- 24. Rao V., Sarabi M.S., Jaiswal A. Brain tumor segmentation with deep learning. MICCAI Multimodal Brain Tumor Segm. Chall. (BraTS) 2015:56–59.

- 25. Menze B.H., Jakab A., Bauer S., Kalpathy-Cramer J., Farahani K., Kirby J., Burren Y., Porz N., Slotboom J., Wiest R. The multimodal brain tumor image segmentation benchmark (BRATS). IEEE Trans. Med. Imaging. 2015;34:1993–2024. doi: 10.1109/TMI.2014.2377694.

- 26. Bakas S., Reyes M., Jakab A., Bauer S., Rempfler M., Crimi A., Shinohara R.T., Berger C., Ha S.M., Rozycki M. Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the BRATS challenge. arXiv. 2018. arXiv:1811.02629.

- 27. Huang G., Liu Z., Van Der Maaten L., Weinberger K.Q. Densely connected convolutional networks; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Honolulu, HI, USA. 21–26 July 2017; pp. 4700–4708.

- 28. Liu Y., Xu X., Yin L., Zhang X., Li L., Lu H. Relationship between glioblastoma heterogeneity and survival time: An MR imaging texture analysis. Am. J. Neuroradiol. 2017;38:1695–1701. doi: 10.3174/ajnr.A5279.

- 29. He K., Zhang X., Ren S., Sun J. Deep residual learning for image recognition; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition; Las Vegas, NV, USA. 27–30 June 2016; pp. 770–778.

- 30. Warren K.E., Patronas N., Aikin A.A., Albert P.S., Balis F.M. Comparison of one-, two-, and three-dimensional measurements of childhood brain tumors. J. Natl. Cancer Inst. 2001;93:1401–1405. doi: 10.1093/jnci/93.18.1401.

- 31. Sorensen A.G., Patel S., Harmath C., Bridges S., Synnott J., Sievers A., Yoon Y.-H., Lee E.J., Yang M.C., Lewis R.F. Comparison of diameter and perimeter methods for tumor volume calculation. J. Clin. Oncol. 2001;19:551–557. doi: 10.1200/JCO.2001.19.2.551.

- 32. Sorensen A.G., Batchelor T.T., Wen P.Y., Zhang W.-T., Jain R.K. Response criteria for glioma. Nat. Rev. Clin. Oncol. 2008;5:634–644. doi: 10.1038/ncponc1204.

- 33. Dempsey M.F., Condon B.R., Hadley D.M. Measurement of tumor “size” in recurrent malignant glioma: 1D, 2D, or 3D? Am. J. Neuroradiol. 2005;26:770–776.

- 34. Kanaly C.W., Mehta A.I., Ding D., Hoang J.K., Kranz P.G., Herndon J.E., Coan A., Crocker I., Waller A.F., Friedman A.H. A novel, reproducible, and objective method for volumetric magnetic resonance imaging assessment of enhancing glioblastoma. J. Neurosurg. 2014;121:536–542. doi: 10.3171/2014.4.JNS121952.

- 35. Chow D.S., Qi J., Guo X., Miloushev V.Z., Iwamoto F.M., Bruce J.N., Lassman A.B., Schwartz L.H., Lignelli A., Zhao B., et al. Semiautomated volumetric measurement on postcontrast MR imaging for analysis of recurrent and residual disease in glioblastoma multiforme. Am. J. Neuroradiol. 2014;35:498–503. doi: 10.3174/ajnr.A3724.

- 36. Hygino da Cruz L.C., Jr., Rodriguez I., Domingues R.C., Gasparetto E.L., Sorensen A.G. Pseudoprogression and pseudoresponse: Imaging challenges in the assessment of posttreatment glioma. Am. J. Neuroradiol. 2011;32:1978–1985. doi: 10.3174/ajnr.A2397.

- 37. Brandsma D., Stalpers L., Taal W., Sminia P., van den Bent M.J. Clinical features, mechanisms, and management of pseudoprogression in malignant gliomas. Lancet Oncol. 2008;9:453–461. doi: 10.1016/S1470-2045(08)70125-6.

- 38. Wen P.Y., Macdonald D.R., Reardon D.A., Cloughesy T.F., Sorensen A.G., Galanis E., Degroot J., Wick W., Gilbert M.R., Lassman A.B., et al. Updated response assessment criteria for high-grade gliomas: Response assessment in neuro-oncology working group. J. Clin. Oncol. 2010;28:1963–1972. doi: 10.1200/JCO.2009.26.3541.

- 39. Nasseri M., Gahramanov S., Netto J.P., Fu R., Muldoon L.L., Varallyay C., Hamilton B.E., Neuwelt E.A. Evaluation of pseudoprogression in patients with glioblastoma multiforme using dynamic magnetic resonance imaging with ferumoxytol calls RANO criteria into question. Neuro Oncol. 2014;16:1146–1154. doi: 10.1093/neuonc/not328.

- 40. Abbasi A.W., Westerlaan H.E., Holtman G.A., Aden K.M., van Laar P.J., van der Hoorn A. Incidence of Tumour Progression and Pseudoprogression in High-Grade Gliomas: A Systematic Review and Meta-Analysis. Clin. Neuroradiol. 2018;28:401–411. doi: 10.1007/s00062-017-0584-x.

- 41. Hu X., Wong K.K., Young G.S., Guo L., Wong S.T. Support vector machine multiparametric MRI identification of pseudoprogression from tumor recurrence in patients with resected glioblastoma. J. Magn. Reson. Imaging. 2011;33:296–305. doi: 10.1002/jmri.22432.

- 42. Kebir S., Khurshid Z., Gaertner F.C., Essler M., Hattingen E., Fimmers R., Scheffler B., Herrlinger U., Bundschuh R.A., Glas M. Unsupervised consensus cluster analysis of [18F]-fluoroethyl-L-tyrosine positron emission tomography identified textural features for the diagnosis of pseudoprogression in high-grade glioma. Oncotarget. 2017;8:8294–8304. doi: 10.18632/oncotarget.14166.

- 43. Qian X., Tan H., Zhang J., Zhao W., Chan M.D., Zhou X. Stratification of pseudoprogression and true progression of glioblastoma multiform based on longitudinal diffusion tensor imaging without segmentation. Med. Phys. 2016;43:5889–5902. doi: 10.1118/1.4963812.

- 44. Jang B.-S., Jeon S.H., Kim I.H., Kim I.A. Prediction of pseudoprogression versus progression using machine learning algorithm in glioblastoma. Sci. Rep. 2018;8:12516. doi: 10.1038/s41598-018-31007-2.

- 45. Nobusawa S., Watanabe T., Kleihues P., Ohgaki H. IDH1 mutations as molecular signature and predictive factor of secondary glioblastomas. Clin. Cancer Res. 2009;15:6002–6007. doi: 10.1158/1078-0432.CCR-09-0715.

- 46. Yan H., Parsons D.W., Jin G., McLendon R., Rasheed B.A., Yuan W., Kos I., Batinic-Haberle I., Jones S., Riggins G.J., et al. IDH1 and IDH2 mutations in gliomas. N. Engl. J. Med. 2009;360:765–773. doi: 10.1056/NEJMoa0808710.

- 47. Louis D.N., Perry A., Reifenberger G., von Deimling A., Figarella-Branger D., Cavenee W.K., Ohgaki H., Wiestler O.D., Kleihues P., Ellison D.W. The 2016 World Health Organization Classification of Tumors of the Central Nervous System: A summary. Acta Neuropathol. 2016;131:803–820. doi: 10.1007/s00401-016-1545-1.

- 48. Bartek J., Jr., Ng K., Bartek J., Fischer W., Carter B., Chen C.C. Key concepts in glioblastoma therapy. J. Neurol. Neurosurg. Psychiatry. 2012;83:753–760. doi: 10.1136/jnnp-2011-300709.

- 49. Hegi M.E., Diserens A.C., Gorlia T., Hamou M.F., de Tribolet N., Weller M., Kros J.M., Hainfellner J.A., Mason W., Mariani L., et al. MGMT gene silencing and benefit from temozolomide in glioblastoma. N. Engl. J. Med. 2005;352:997–1003. doi: 10.1056/NEJMoa043331.

- 50. Cancer Genome Atlas Research Network. Comprehensive genomic characterization defines human glioblastoma genes and core pathways. Nature. 2008;455:1061–1068. doi: 10.1038/nature07385.

- 51. Lambin P., Leijenaar R.T.H., Deist T.M., Peerlings J., de Jong E.E.C., van Timmeren J., Sanduleanu S., Larue R.T.H.M., Even A.J.G., Jochems A., et al. Radiomics: The bridge between medical imaging and personalized medicine. Nat. Rev. Clin. Oncol. 2017;14:749–762. doi: 10.1038/nrclinonc.2017.141.

- 52. Kumar V., Gu Y., Basu S., Berglund A., Eschrich S.A., Schabath M.B., Forster K., Aerts H.J., Dekker A., Fenstermacher D., et al. Radiomics: The process and the challenges. Magn. Reson. Imaging. 2012;30:1234–1248. doi: 10.1016/j.mri.2012.06.010.

- 53. Gillies R.J., Kinahan P.E., Hricak H. Radiomics: Images Are More than Pictures, They Are Data. Radiology. 2016;278:563–577. doi: 10.1148/radiol.2015151169.

- 54. Gevaert O., Mitchell L.A., Achrol A.S., Xu J., Echegaray S., Steinberg G.K., Cheshier S.H., Napel S., Zaharchuk G., Plevritis S.K. Glioblastoma multiforme: Exploratory radiogenomic analysis by using quantitative image features. Radiology. 2014;273:168–174. doi: 10.1148/radiol.14131731.

- 55. Levner I., Drabycz S., Roldan G., De Robles P., Cairncross J.G., Mitchell R. International Conference on Medical Image Computing and Computer-Assisted Intervention. Volume 12. Springer; Berlin/Heidelberg, Germany: 2009. Predicting MGMT methylation status of glioblastomas from MRI texture; pp. 522–530.

- 56. Korfiatis P., Kline T.L., Lachance D.H., Parney I.F., Buckner J.C., Erickson B.J. Residual Deep Convolutional Neural Network Predicts MGMT Methylation Status. J. Digit. Imaging. 2017;30:622–628. doi: 10.1007/s10278-017-0009-z.

- 57. Veit A., Wilber M., Belongie S. Residual Networks Behave Like Ensembles of Relatively Shallow Networks. arXiv. 2016. arXiv:1605.06431.

- 58. Chang K., Bai H.X., Zhou H., Su C., Bi W.L., Agbodza E., Kavouridis V.K., Senders J.T., Boaro A., Beers A., et al. Residual Convolutional Neural Network for the Determination of IDH Status in Low- and High-Grade Gliomas from MR Imaging. Clin. Cancer Res. 2018;24:1073–1081. doi: 10.1158/1078-0432.CCR-17-2236.

- 59. Chang P., Grinband J., Weinberg B.D., Bardis M., Khy M., Cadena G., Su M.Y., Cha S., Filippi C.G., Bota D., et al. Deep-Learning Convolutional Neural Networks Accurately Classify Genetic Mutations in Gliomas. Am. J. Neuroradiol. 2018;39:1201–1207. doi: 10.3174/ajnr.A5667.

- 60. Liang S., Zhang R., Liang D., Song T., Ai T., Xia C., Xia L., Wang Y. Multimodal 3D DenseNet for IDH Genotype Prediction in Gliomas. Genes. 2018;9:382. doi: 10.3390/genes9080382.

- 61. Yu J., Shi Z., Lian Y., Li Z., Liu T., Gao Y., Wang Y., Chen L., Mao Y. Noninvasive IDH1 mutation estimation based on a quantitative radiomics approach for grade II glioma. Eur. Radiol. 2017;27:3509–3522. doi: 10.1007/s00330-016-4653-3.

- 62. Han L., Kamdar M.R. MRI to MGMT: Predicting methylation status in glioblastoma patients using convolutional recurrent neural networks. Pac Symp. Biocomput. 2018;23:331–342.

- 63. Ge C., Gu I.Y., Jakola A.S., Yang J. Deep Learning and Multi-Sensor Fusion for Glioma Classification Using Multistream 2D Convolutional Networks; Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Honolulu, HI, USA. 18–21 July 2018; pp. 5894–5897.

- 64. Chang P.D., Malone H.R., Bowden S.G., Chow D.S., Gill B.J.A., Ung T.H., Samanamud J., Englander Z.K., Sonabend A.M., Sheth S.A., et al. A Multiparametric Model for Mapping Cellularity in Glioblastoma Using Radiographically Localized Biopsies. Am. J. Neuroradiol. 2017;38:890–898. doi: 10.3174/ajnr.A5112.

- 65. Li Z., Wang Y., Yu J., Guo Y., Cao W. Deep Learning based Radiomics (DLR) and its usage in noninvasive IDH1 prediction for low grade glioma. Sci. Rep. 2017;7:5467. doi: 10.1038/s41598-017-05848-2.

- 66. Akkus Z., Sedlar J., Coufalova L., Korfiatis P., Kline T.L., Warner J.D., Agrawal J., Erickson B.J. Semi-automated segmentation of pre-operative low grade gliomas in magnetic resonance imaging. Cancer Imaging. 2015;15:12. doi: 10.1186/s40644-015-0047-z.

- 67. Zhang X., Yan L.-F., Hu Y.-C., Li G., Yang Y., Han Y., Sun Y.-Z., Liu Z.-C., Tian Q., Han Z.-Y. Optimizing a machine learning based glioma grading system using multi-parametric MRI histogram and texture features. Oncotarget. 2017;8:47816. doi: 10.18632/oncotarget.18001.

- 68. Macyszyn L., Akbari H., Pisapia J.M., Da X., Attiah M., Pigrish V., Bi Y., Pal S., Davuluri R.V., Roccograndi L. Imaging patterns predict patient survival and molecular subtype in glioblastoma via machine learning techniques. Neuro-Oncol. 2015;18:417–425. doi: 10.1093/neuonc/nov127.

- 69. Emblem K.E., Pinho M.C., Zöllner F.G., Due-Tonnessen P., Hald J.K., Schad L.R., Meling T.R., Rapalino O., Bjornerud A. A generic support vector machine model for preoperative glioma survival associations. Radiology. 2015;275:228–234. doi: 10.1148/radiol.14140770.

- 70. Sarkiss C.A., Germano I.M. Machine Learning in Neuro-Oncology: Can Data Analysis from 5,346 Patients Change Decision Making Paradigms? World Neurosurg. 2019;124:287–294. doi: 10.1016/j.wneu.2019.01.046.

- 71. Nie D., Zhang H., Adeli E., Liu L., Shen D. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; Cham, Switzerland: 2016. 3D deep learning for multi-modal imaging-guided survival time prediction of brain tumor patients; pp. 212–220.

- 72. Choy G., Khalilzadeh O., Michalski M., Do S., Samir A.E., Pianykh O.S., Geis J.R., Pandharipande P.V., Brink J.A., Dreyer K.J. Current applications and future impact of machine learning in radiology. Radiology. 2018;288:318–328. doi: 10.1148/radiol.2018171820.

- 73. Srivastava N., Hinton G., Krizhevsky A., Sutskever I., Salakhutdinov R. Dropout: A simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 2014;15:1929–1958.

- 74. Moore R., DeNero J. L1 and L2 regularization for multiclass hinge loss models; Proceedings of the Symposium on Machine Learning in Speech and Language Processing; Bellevue, WA, USA. 27 June 2011.

- 75. Ioffe S., Szegedy C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. arXiv. 2015. arXiv:1502.03167.

- 76. Boone J.M. Radiological interpretation 2020: Toward quantitative image assessment. Med. Phys. 2007;34:4173–4179. doi: 10.1118/1.2789501.

- 77. Summers R.M. Road maps for advancement of radiologic computer-aided detection in the 21st century. Radiology. 2003;229:11–13. doi: 10.1148/radiol.2291030010.

- 78. Karssemeijer N., Otten J.D., Verbeek A.L., Groenewoud J.H., de Koning H.J., Hendriks J.H., Holland R. Computer-aided detection versus independent double reading of masses on mammograms. Radiology. 2003;227:192–200. doi: 10.1148/radiol.2271011962.

- 79. Van Ginneken B., Schaefer-Prokop C.M., Prokop M. Computer-aided diagnosis: How to move from the laboratory to the clinic. Radiology. 2011;261:719–732. doi: 10.1148/radiol.11091710.

- 80. Fröhlich H., Balling R., Beerenwinkel N., Kohlbacher O., Kumar S., Lengauer T., Maathuis M.H., Moreau Y., Murphy S.A., Przytycka T.M. From hype to reality: Data science enabling personalized medicine. BMC Med. 2018;16:150. doi: 10.1186/s12916-018-1122-7.

- 81. U.S. Food & Drug Administration. Artificial Intelligence and Machine Learning in Software as a Medical Device. Available online: https://www.fda.gov/medical-devices/software-medical-device-samd/artificial-intelligence-and-machine-learning-software-medical-device (accessed on 5 June 2019).