Abstract

Introduction

Robust evaluation of service models can improve the quality and efficiency of care while articulating the models for potential replication. Although evaluation is an essential part of learning health systems, evaluations that benchmark and sustain models serving adults with developmental disabilities are lacking, which keeps pilot programs from becoming official care pathways. Here, we describe the development of a program evaluation for a specialized medical‐dental community clinic serving adults with autism and intellectual disabilities in Montreal, Canada.

Method

Using a Participatory Action‐oriented approach, researchers and staff co‐designed an evaluation for a primary care service for this population. We performed an evaluability assessment to identify the processes and outcomes that were feasible to capture and elicited perspectives at both clinical and health system levels. The RE‐AIM framework was used to categorize and select tools to capture data elements that would inform practice at the clinic.

Results

We detail the process of conceptualizing the evaluation framework and operationalizing the domains using a mixed‐methods approach. Our experience demonstrated (1) the utility of a comprehensive framework that captures contextual factors in addition to clinical outcomes, (2) the need for validated measures that are not cumbersome for everyday practice, (3) the importance of understanding the functional needs of the organization and building a sustainable data infrastructure that addresses those needs, and (4) the need to commit to an evolving, “living” evaluation in a dynamic health system.

Conclusions

Evaluation employing rigorous patient‐centered and systems‐relevant metrics can help organizations effectively implement and continuously improve service models. Using an established framework and a collaborative approach provides an important blueprint for a program evaluation in a learning health system. This work provides insight into the process of integrating care for vulnerable populations with chronic conditions into health care systems and into integrated knowledge generation processes between research and health systems.

Keywords: autism, community clinic, primary care, program evaluation, RE‐AIM

1. INTRODUCTION

The development of a learning health system (LHS) relies on robust and continuous evaluation.1, 2 Because an LHS can be conceptualized as a series of practices that cycle between knowing what is being done, what ought to be done, and how change can occur, collecting data that characterize the outputs of service delivery is a critical component. The infrastructure that supports evaluation efforts will vary with the scale of the LHS. But to complete the LHS cycle at any scale, systematic and comprehensive capture of relevant data is a powerful tool to improve the quality of care, identify key ingredients of programs, and facilitate optimal scale and spread.3 In academia, evaluation of clinical interventions instinctively conjures up the traditional methods of randomized controlled trials and comparative effectiveness research; however, for organizations, pragmatic and developmental evaluation approaches require a different mindset.4, 5 These approaches share with the traditional evaluation literature the questions of for whom an intervention is most effective and, importantly, in what contexts and circumstances it works.6, 7 Moreover, pragmatic and developmental approaches are better suited to an LHS context, as they are more likely to be responsive to practitioners' needs8 and to deliver feedback on the necessary timescale.

1.1. Population

Autism is a developmental disability characterized by differences in social communication and interaction across multiple contexts and repetitive interests, behaviors, or activities that challenge functioning in typical environments.9 In the past, relatively more attention has been given to the health and service needs of children with autism; however, recent research highlights the specific challenges that adults face10 around both clinical need11 and adaptive functioning.12 Similarly, intellectual disability, defined as having deficits in both intellectual and adaptive function, is another developmental condition that is associated with these challenges. Compared with the general population, adults with developmental disabilities (including both autism and intellectual disabilities) have more healthcare needs,11, 13, 14 often presenting with concurrent behavioral, mental health, and physical health conditions.15, 16

In healthcare settings, the communication challenges that adults with developmental disabilities often have are exacerbated by the environment,17 even though these adults are heavier users of the health care system. They receive more specialist care11, 18 and are more likely to visit the emergency department and be admitted to hospital.18, 19 For instance, adults with developmental disabilities are more than twice as likely to be hospitalized for ambulatory care‐sensitive conditions, such as diabetes‐related concerns, that could have been avoided if managed adequately in primary care.20 These data suggest that the current system is not managing clinical needs sufficiently to avoid unnecessary emergency department visits.21, 22

Access to healthcare is not just a point of care but a continuum with determinants at both the user and provider ends. In a synthesis of the literature by Levesque et al,23 the healthcare user begins the access journey with the ability to perceive needs, seek and then reach services, followed by the ability to pay for and engage with services. For providers, the corresponding concepts that describe the services at each step are approachability, acceptability, availability and accommodation, affordability, and appropriateness.23 Thus, service provision models need to address the gaps in access for this population and avoid unnecessary overload at each stage of the system, ensuring optimal health and mitigating caregiver burden. Crucial to these processes is the role of primary care as the medical home and the gatekeeper to other services.24 However, primary care providers report barriers to supporting patients with developmental disabilities. For instance, there is a lack of adequate mechanisms to attend to the needs of resource‐intensive patients while balancing volume and compensation constraints.25, 26 In addition, providers report a lack of adequate training, resulting in discomfort when attending to this population.27 Together, these barriers result in decreased quality of care and system inefficiency.

Extensive work to produce evidence‐based guidelines for managing the care of different populations reflects a growing interest in developing an LHS in the rehabilitation and medical care of people with developmental disabilities.28 Moreover, even though family‐centered care is common practice in the field, integrating the needs and wishes of families while responding efficiently to system constraints and complex, multilevel care remains a challenge. Multidisciplinary primary care would increase the likelihood of continuity of care.29 Implementing an LHS would benefit the delivery of care and strengthen research capacity while forming a network of primary care centers in this area. Examples of LHS implementation in the childhood disability care system exist30 but are still in their infancy. For instance, in a care program for children with cerebral palsy, the parallel development of electronic health records (EHRs) strengthens evaluation by aligning metrics with practice.30 Integrating a systematic evaluation framework into such initiatives can facilitate the continuous evolution and scaling of these systems.

1.2. RE‐AIM framework

The RE‐AIM framework was initially designed for public health interventions.31 The acronym represents the domains of Reach, Efficacy, Adoption, Implementation, and Maintenance. The framework has been used in contexts ranging from clinical interventions to community programs and corporate settings32 and in multiple project phases, from planning through implementation to summative evaluation.32, 33 In summative evaluations, RE‐AIM has been employed in settings ranging from a summer camp targeting pediatric obesity34 to decision aids coaching patients in a cancer clinic.35 Others have employed it during the development phase for ongoing learning and improvement (for example, Finlayson et al36); in that study, the framework structured discussions with stakeholders about feasibility during the design process and about which factors would improve adoption and encourage widespread implementation. We therefore used the RE‐AIM framework to guide the development and growth of the service model in designing a responsive program evaluation.

2. OBJECTIVE AND RESEARCH INTERESTS

This paper describes the design of a comprehensive program evaluation using the RE‐AIM framework for a newly established developmental disability service consisting of medical and dental services for adults in Montreal, Canada. The objective of the service is to improve the quality of care offered to patients and their families in this population and to identify processes that can inform better access to services for people with developmental disabilities at large. The evaluation aims to support program goals of the organization to (1) enable replication of the service model and (2) catalyze public health system action. The work provides insights into the process of integrating care for marginalized populations with chronic conditions in health systems and the possibilities for creating an LHS in this context, through knowledge translation and integrated knowledge generation strategies between researchers and the health care system players.

2.1. Local setting

The See Things My Way (STMW) medical‐dental clinic was founded in 2016 by the Centre for Innovation in Autism and Intellectual Disabilities (CIAID). This specialized interdisciplinary clinic provides primary care for adults with developmental disabilities. Its goal is to optimize care for this population by creating a sustainable and replicable service model through a public‐private partnership. At the time of writing, the medical clinic runs once a month for a full day with a doctor and two nurses, alongside nurse‐only visits as needed. Dental services are provided approximately 1.5 days a week by one dentist and a dental hygienist. Because these patients generally have complex health needs, a large proportion of resources is allocated to building a comprehensive patient profile and to supporting patients and their families as they navigate the system between appointments. The organization aims to be a catalyst in the health system and influence policy while enabling a seamless system of services for its clients. To do so, CIAID supports research and evaluation as a means to optimize, replicate, and enable uptake of this model in the public health sector. CIAID has committed to implementing a program evaluation as part of collaborative efforts to create a learning health care system for individuals with developmental disabilities in Quebec, Canada.

3. METHODS

The program evaluation was co‐designed using a Participatory Action‐oriented approach with researchers and stakeholders from the clinical setting at CIAID. A participatory research approach strengthens the relevance and utility of research findings37 and aligns with the principles of integrated knowledge translation, whereby research designed in collaboration with stakeholders is also implemented and evaluated collaboratively.38 In this sense, the development of the project itself contributed to establishing an LHS. The first author was funded by a CIHR Health System Impact Fellowship39 that enabled him to be an on‐site, “embedded” researcher in a nonacademic organization. This positioned the researcher as a core member of the organization, with expertise complementary to that of the managers and clinicians.40 Given his experience in the autism field and his understanding of the service needs of this population, this evaluation opportunity, initiated by the organization, was an ideal fit for better understanding the nature of the program, the delivery context, and the program's potential external validity.41, 42

Our process of developing this program evaluation consisted of five steps: (1) conducting an evaluability assessment to understand what constructs could and should be evaluated based on organizational goals; (2) identifying the key questions for the organization and potential indicators for these constructs; (3) operationalizing a framework to support the evaluation domains and activities; (4) identifying relevant and valid measurement tools; and (5) consolidating and implementing the evaluation in phases based on organizational priorities.

3.1. Conducting an evaluability assessment

We performed an initial assessment to identify which processes and outcomes should be evaluated. The first author held multiple meetings with leaders in the organization and analyzed organizational administrative documentation to learn about the organization's structure and the context of the current public health system. The sequence of the evaluability assessment is detailed below:

The first author conducted individual, face‐to‐face interviews, each on a separate day, with the members of the management team (multiple interviews with the president of the organization and the senior project manager) and with all the clinicians involved (one doctor, one dentist, and two nurses) to understand the goals, processes, and challenges of the service. These initial interviews followed a semistructured interview protocol43 with open‐ended questions and were used to create a logic model of the service describing the clinic flow for both the medical and dental services. The questions were developed by the researchers and queried the chain of inputs, activities, outputs, and outcomes. Documents related to the current service structure and the policies guiding the formulation of the clinical services were retrieved.

The documentation shared by the president of the organization and the senior project manager of the clinic during the interviews was reviewed and analyzed. It ranged from consulting reports to board meeting materials, internal meeting notes, and dashboards tracking the clinic's current operations.

The first author also conducted semistructured interviews with researchers and program evaluators in other organizations serving similar populations to gain insight into their research and evaluation methods. These consultations included medical and dental health clinics serving this population across Canada as well as organizations in other sectors serving the same population (eg, employment training). In total, seven researchers and practitioners from different organizations were consulted by phone and email over a 2‐month period. Pertinent documents (eg, evaluation reports and clinical forms) were requested and analyzed.

To contextualize the documents retrieved from other organizations, the first author observed both the medical and dental clinics at CIAID for a total of 3 days over 3 months. During those times and in subsequently scheduled interactions, all the clinicians (doctor, dentist, and nurses) were interviewed about their professional trajectory (training, motivations), key successes and challenges on a day‐to‐day basis in implementing the model, and outcomes they see as important to capture.

3.2. Identify key questions and potential indicators

Equipped with a thorough understanding of the organization, its goals, and the challenges faced on the ground, the researcher and the organization's administrators worked to identify the key questions that were priorities for the organization. This process was carried out iteratively with the organization's administration, the clinicians, and the researcher. A final meeting with the president was held to align the key questions of the evaluation with the aims of the organization.

3.3. Establishing and operationalizing a framework

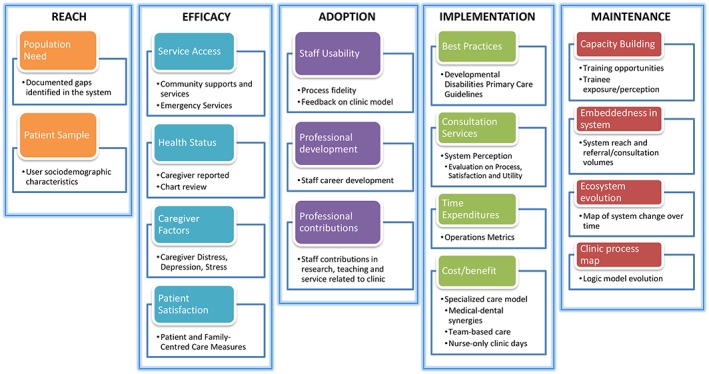

To organize the evaluation, we searched for a framework that would guide the process. After a review of evaluation tools, domains, and frameworks using the Training Institute for Dissemination and Implementation Research in Health (TIDIRH) website (http://www.dissemination‐implementation.org/select.aspx), the RE‐AIM framework31 was selected to guide the evaluation based on its relevance to the key questions identified and its demonstrated utility in the literature across settings.32 Potential indicators were placed as domains of evaluation under the five axes of the framework (see Figure 1). Since data and practice are integrated in an LHS, an extensive literature search and conversations with experts in the field (from the organization's six‐member research advisory panel) were used to identify validated tools for each domain that could assess the outcomes of interest while being feasible for use in clinical practice.

Figure 1.

Program evaluation of a medical‐dental clinic in a primary care setting based on the RE‐AIM framework

3.4. Conducting a phased implementation

Once the framework was chosen, the domains developed, and the approaches and tools identified, the implementation had to be phased in. Priorities were set through discussion with the leadership (ie, president and senior manager). Many of the considerations were related to the current challenges that the organization was facing and what data would be most helpful at this time to support and overcome those barriers.

4. RESULTS

4.1. Evaluability assessment

Interviews with the staff provided information about the internal workings of the organization and the actual (versus perceived) outcomes of each task identified as critical for service implementation. The documentation received during these interviews consisted of board meeting presentations, minutes, and notes. Altogether, the initial interviews and documentation provided insight into the context, the external factors and setting, and the hypothesized effects and theory of change of the service model.44 The theory of change was as follows: access to appropriate care for this population will lead to less unnecessary use of system resources and more coordinated specialized care, resulting in better health outcomes at lower cost.

Documents reflecting the implementation of similar services and structures were requested and received from four other organizations across Canada (a national employment organization for people with autism and healthcare clinics that provide care for people with developmental disabilities). Program evaluation reports from the national employment organization gave an initial sense of areas to focus on in serving individuals with autism and related disabilities. The most salient points were indicators specific to this population and the methodological challenges of collecting data in a clinic outside the clinical workflow. Specifically, the areas identified included barriers to other community services and the individual's level of function. From the health clinics, documents reflecting best practices for this population were reviewed (eg, intake forms, medical review of systems forms, and behavioral checklists).

Structured observations of the clinical services were necessary to ensure correspondence between what was proposed or described in the received documentation and what was occurring in practice. A first‐person perspective of the service and interviews with frontline clinical staff also informed how feasible various parts of the evaluation would be to implement, given the staff's constraints and timelines, the physical environment, and the interruptions that occur in a clinical setting. The interviews also provided information on the service capacity and its alignment with the vision of the organization. Potential barriers to implementing the evaluation framework, which were taken into account in the next phases of the project, were the influx of patients, staff capacity, the time needed to complete extra questionnaires, and the volume of documentation already in place.

4.2. Key questions and potential indicators

The initial findings from the evaluability assessment were presented to senior administration to inform the development of indicators. In that discussion, many of the questions of interest to the researchers had to be set aside to focus on the questions and outcomes prioritized by the organization. Table 1 presents the final key questions identified for the program. These main questions were categorized by theme and broken down into more detailed questions. Once the questions were established, the researcher reviewed the literature to identify potential indicators and research approaches related to them.

Table 1.

Key questions from stakeholders and potential indicators for evaluation

| Theme | Main Question | Details | Potential Indicators |
|---|---|---|---|
| Population | Who comes to the program? | Who are the patients seen in the program compared with the potential population targeted? Who has access to the program? | Sociodemographic and clinical factors of patients, caregiver characteristics |
| Outcomes | What changes because of the program? | How are things different because of the program? If we see changes, which individuals are affected, which families, and which parts of the health system does the program impact? | Patient health outcomes, satisfaction with service, enabling self‐empowerment in healthcare, change in acute emergency visits |
| Evidence base | Is the program consistent with the evidence base? | Does the program adhere to best practices? What guidelines and existing evidence support this model of care? | Clinic workflow and use of guidelines and tools |
| Sustainability | Can the program last? | What is the current and optimal funding model? How does this model scale and/or spread? What partnerships are built with the public health system? | Operations and cost‐effectiveness, clinician satisfaction, referral volumes, public health system constraints, policy mapping, other relevant agents in the sector |

For each indicator, an evaluation approach was co‐created with the organization's administrative team. For some indicators, validated outcome assessment tools were selected to support a sound evaluation framework that can be generalized and applied to other contexts. For others, qualitative approaches such as narrative inquiry were more helpful. For instance, activities such as mapping the players in the public health system and process modeling of the service lent themselves to qualitative methods.

4.3. Operationalizing the framework

The RE‐AIM framework was chosen for its comprehensiveness, as it focuses on both clinical and long‐term outcomes, which aligned with the objectives of the organization. The indicators were further developed and placed within this framework (Figure 1). Multiple domains were then conceptualized under each of the five axes to allow for continual improvement and for tracking system change (Table 2). Based on the characteristics of the program, the challenges encountered in practice, and the constructs in the framework, validated scales and measures were chosen through a review of the literature (see Fleiszer et al45 and Murphy et al46) and through discussions with international experts from the organization's research advisory panel, who were consulted specifically to find practical evaluation tools.

Table 2.

Operational definitions and description of domains using the RE‐AIM framework

| Axes | Operational Definition | Domains and Description |
|---|---|---|
| Reach | Reach refers to the representation of the population targeted: the current patients who participate compared with the population catchment for this intervention. | Population need—review government documentation and internal reports about rates |
| | | Patient characteristics—compare the sociodemographic characteristics of the patients and families that accessed care with those of the population |
| Efficacy | Efficacy refers to the impact the intervention has on key outcomes: patient health outcomes, caregiver burden and distress, and patient and caregiver satisfaction with the services provided. | Service access—track how patients' access to other community services and supports changes over time |
| | | Caregiver factors—track how caregiver burden, enablement, depression, and stress symptoms change over time |
| | | Health status—as a long‐term outcome, EMR data will be used to track the health outcomes of patients over time |
| | | Patient satisfaction—track how caregiver satisfaction with the services provided changes over time |
| Adoption | Adoption refers to how feasible the program is in practice. It is a measure of how well the service providers have used the intervention as planned and addresses issues such as adherence, usability, and acceptability. Additional measures of provider professional development and contributions to this field of work are included. | Usability—continual feedback from staff about their satisfaction with the current model and how to improve it |
| | | Professional development and contributions—examine the professional development of staff and their contributions to the field (eg, research, service committees, teaching, and training) |
| Implementation | Implementation refers to process fidelity, process evolution, costs for effectiveness, and interactions with the extant health care system over time. | Adherence to best practices—fidelity to guidelines in clinical operations |
| | | System reach and acceptability—evaluation of the consultation services process by other service providers |
| | | Time expenditure—clinic flow obtained and analyzed using Salesforce, a platform with the ability to capture operations metrics |
| | | Cost‐benefit analysis—understand the synergies between medical and dental care, team‐based care approaches, and nurse‐only clinic days |
| Maintenance | Maintenance refers to the sustainability of the program, comprising factors such as capacity, public sector response, embeddedness in the system, and the ecosystem evolution that this intervention produces. | Ecosystem evolution—documentation, interviews that elicit the narrative of how things have changed, and public reports will be analyzed to gain insight into the impact the clinic has made over time |
| | | Capacity building—examine staff training opportunities and the exposure and perceptions of trainees to the service and population; assess the extent to which other providers gain interest and/or confidence in treating this population |
| | | Embeddedness in system—examine how the program is incorporated into the public system by tracking referrals and consultation requests over time, as well as partnerships with academic faculties for research and training |
| | | Clinic process map—track how the theory of change and logic model evolve with the environment in the complexity of the public health system |

A description of how each axis was conceptualized, the domains, and some examples of the tools used is provided below. Please see Appendix A for a comprehensive list of tools and a detailed description of proposed delivery methods.

The “reach” axis of the framework assesses how well the clinic (as the intervention) represents the targeted population in the local context. Two domains were identified and described: population need, which would be assessed by reviewing the documentation that initiated the creation of this clinic, and patient characteristics, which would allow the patients and families who accessed this clinic to be compared with those in the general population. One may hypothesize that certain sociodemographic or clinical need factors (eg, length of residence in the country, mental health need, and access to other services) are associated with greater access to the intervention. Such data would inform how the clinic ought to develop and grow.
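In RE‐AIM, reach is commonly summarized as the proportion of the eligible target population that participates, together with a comparison of participant characteristics against that population. The following Python sketch illustrates both calculations under stated assumptions: the class and function names, the characteristic labels, and all numbers are hypothetical and are not data from the clinic.

```python
from dataclasses import dataclass


@dataclass
class ReachSummary:
    """Hypothetical container for a reach estimate (illustrative only)."""

    participants: int         # patients seen by the clinic in the period
    eligible_population: int  # assumed size of the catchment (target) population

    @property
    def proportion(self) -> float:
        # Reach expressed as the share of the eligible population that participates.
        if self.eligible_population <= 0:
            raise ValueError("eligible_population must be positive")
        return self.participants / self.eligible_population


def representation_gaps(patients: dict[str, float],
                        population: dict[str, float]) -> dict[str, float]:
    """Patient share minus population share for each characteristic present in both.

    Positive values suggest over-representation among clinic patients,
    negative values suggest under-representation.
    """
    return {key: round(patients[key] - population[key], 3)
            for key in population if key in patients}


if __name__ == "__main__":
    # Illustrative numbers only; not data from the clinic.
    reach = ReachSummary(participants=150, eligible_population=3000)
    print(f"Reach: {reach.proportion:.1%}")
    print(representation_gaps(
        patients={"recent_immigrant": 0.10, "mental_health_need": 0.45},
        population={"recent_immigrant": 0.20, "mental_health_need": 0.40},
    ))
```

A real analysis would depend heavily on how the catchment population is defined and on which characteristics are available for both patients and the population.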

The “efficacy” axis was conceptualized as the impact the intervention has on key outcomes, which were informed by the potential indicators around patient health. Since the duration of the project may not be sufficient to detect direct health outcomes, measures of service access and experience were selected to be collected over time as short‐term indicators. These were grouped as follows: service access (how much access the patient had to other services in the community); caregiver factors (caregiver burden, enablement, self‐efficacy, depression, and stress); patient health status (the overall health status of the patient); and satisfaction with the services provided. Regarding tools, the Measure of Processes of Care (MPOC‐20)47 for caregiver satisfaction with services and the Patient Enablement Instrument (PEI)48, 49 for patient enablement were validated tools deemed relevant for this service (see Appendix A for detailed descriptions and selection rationale).
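Tracking whether caregiver and patient measures change over time implies comparing repeated administrations for the same respondents. A minimal Python sketch, assuming hypothetical respondent IDs and total scores from any questionnaire given at baseline and follow‐up; it computes only a descriptive paired change and is not the analysis plan described in Appendix A.

```python
from statistics import mean


def paired_change(baseline: dict[str, float],
                  follow_up: dict[str, float]) -> dict[str, float]:
    """Follow-up minus baseline score for respondents measured at both time points.

    Respondents missing either measurement are excluded rather than imputed.
    """
    shared = sorted(baseline.keys() & follow_up.keys())
    return {rid: follow_up[rid] - baseline[rid] for rid in shared}


def summarize_change(baseline: dict[str, float],
                     follow_up: dict[str, float]) -> dict:
    """Number of paired respondents and their mean change (None if no pairs)."""
    changes = paired_change(baseline, follow_up)
    if not changes:
        return {"n": 0, "mean_change": None}
    return {"n": len(changes), "mean_change": mean(list(changes.values()))}


if __name__ == "__main__":
    # Hypothetical caregiver IDs and total scores; not study data.
    baseline = {"c01": 12, "c02": 8, "c03": 15}
    follow_up = {"c01": 10, "c02": 9, "c04": 11}
    print(summarize_change(baseline, follow_up))
```

Whether a negative mean change is an improvement depends on the direction of the scale (for a burden scale, lower is better; for enablement or satisfaction, higher is better).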

“Adoption” was framed as the feasibility of the intervention from the practitioner's perspective (usability, acceptability) and whether the service providers were using the intervention as planned (adherence). This axis also includes measures of how the intervention has aided the professional development of the providers and their contributions to their field of work.

“Implementation” refers to the fidelity and evolution of the processes over time. Since the context and constraints of the health system are continually changing, we expect the clinical process to change as well, so measures of adherence to best practices and of interactions with other services are needed. For instance, clinicians external to the organization who receive a clinical consultation from this clinic will receive a questionnaire alongside the consultation to evaluate its quality and utility (Consultation Services Survey on Process, Satisfaction and Utility50). The number of referrals to this clinic from various settings will also be tracked as a proxy for system embeddedness. Time expenditure throughout the clinical workflow will be measured using Salesforce to streamline operations. A cost‐benefit analysis was conducted to understand potential synergies between medical and dental care from an organizational perspective, as it is unclear whether a completely integrated medical‐dental clinic is the most effective approach for the organization at this time.
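Using referral volume as a proxy for system embeddedness requires aggregating a referral log over time and by source. A minimal Python sketch, assuming a hypothetical log of (referral date, referral source) pairs and calendar quarters as the reporting period; the source categories and dates are illustrative, not the clinic's records.

```python
from collections import Counter
from datetime import date


def quarter_label(day: date) -> str:
    # Calendar quarter, e.g. a referral on 2019-05-02 falls in "2019-Q2".
    return f"{day.year}-Q{(day.month - 1) // 3 + 1}"


def referrals_by_quarter(referrals: list[tuple[date, str]]) -> dict[str, Counter]:
    """Count referrals per calendar quarter, broken down by referral source."""
    counts: dict[str, Counter] = {}
    for referral_date, source in referrals:
        counts.setdefault(quarter_label(referral_date), Counter())[source] += 1
    # "YYYY-Qn" labels sort chronologically as strings for four-digit years.
    return dict(sorted(counts.items()))


def quarterly_totals(by_quarter: dict[str, Counter]) -> dict[str, int]:
    """Total referrals per quarter, the simplest trend to track over time."""
    return {quarter: sum(sources.values()) for quarter, sources in by_quarter.items()}


if __name__ == "__main__":
    # Hypothetical referral log; source categories are illustrative.
    log = [
        (date(2019, 1, 15), "family physician"),
        (date(2019, 2, 3), "hospital"),
        (date(2019, 4, 20), "family physician"),
        (date(2019, 5, 2), "family physician"),
    ]
    by_quarter = referrals_by_quarter(log)
    print(by_quarter)
    print(quarterly_totals(by_quarter))
```

Keeping the breakdown by source, rather than only totals, would show which parts of the system are beginning to route patients to the clinic.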

Lastly, “maintenance,” or sustainability, is heavily informed by the program goals of the organization. The program goals are to improve access to appropriate care for this population and catalyze further action by the public sector in the provincial health system. Documenting the evolution of the ecosystem due to this new clinic as a systems map (eg, referral corridors, partnerships with other organizations, other similar initiatives, and policy changes) will provide insight into the impact over time. Also, capacity building through linkages and partnership with academic health centers and training opportunities for other clinicians will be documented.

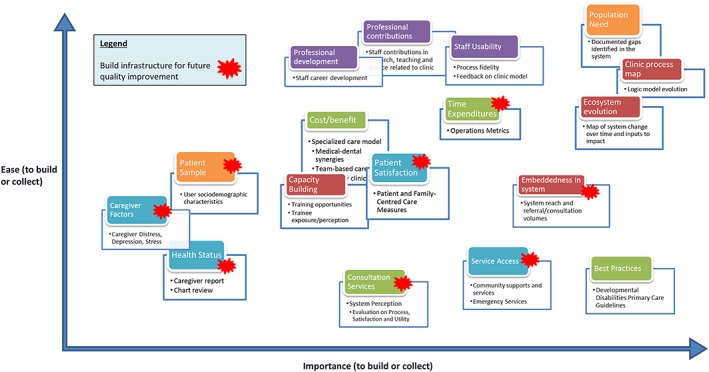

4.4. Conducting a phased implementation

In collaboration with the organization's administration, we established how the evaluation should be implemented, considering the internal and external challenges faced, the practices and areas of growth emerging in the organization, and the conceptual framework adopted. On the basis of the current environment and the existing infrastructure, two factors were deemed most relevant in establishing an evaluative structure: the ease of building the infrastructure to collect data in each domain and the importance of those data for meeting the organization's current goals. These factors were used to prioritize the domains and phase in the evaluation plan (Figure 2). Specific clinician feedback was sought to validate these rankings and identify contingencies from the clinicians' vantage point (eg, hurdles in the clinical workflow). This informed the planning of the implementation phases.
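The importance-versus-ease mapping can be expressed as a simple quadrant rule. The Python sketch below is a hypothetical illustration, not the procedure the authors used: the 1 to 5 ratings, the threshold of 3, and the ordering of phases 2 and 3 (important but hard before easy but less important) are all assumptions, and the domain names are borrowed from Table 2 with invented ratings.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DomainRating:
    name: str
    importance: int  # hypothetical 1-5 rating of importance to current goals
    ease: int        # hypothetical 1-5 rating of ease of building data infrastructure


def assign_phase(rating: DomainRating, threshold: int = 3) -> int:
    """Map a domain to an implementation phase by importance/ease quadrant.

    Phase 1: important and easy; phase 2: important but hard;
    phase 3: easy but less important; phase 4: neither (assumed ordering).
    """
    important = rating.importance >= threshold
    easy = rating.ease >= threshold
    if important and easy:
        return 1
    if important:
        return 2
    if easy:
        return 3
    return 4


def phase_plan(ratings: list[DomainRating]) -> dict[int, list[str]]:
    """Group domain names by phase, highest importance (then ease) first."""
    plan: dict[int, list[str]] = {}
    for r in sorted(ratings, key=lambda r: (-r.importance, -r.ease, r.name)):
        plan.setdefault(assign_phase(r), []).append(r.name)
    return dict(sorted(plan.items()))


if __name__ == "__main__":
    ratings = [  # invented ratings for illustration only
        DomainRating("Ecosystem evolution", importance=2, ease=1),
        DomainRating("Patient satisfaction", importance=5, ease=4),
        DomainRating("Time expenditure", importance=2, ease=4),
        DomainRating("Cost-benefit analysis", importance=4, ease=2),
    ]
    print(phase_plan(ratings))
```

In practice, clinician feedback could override the quadrant assignment, for example when a domain that looks easy would disrupt the clinical workflow.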

Figure 2.

Mapping of stakeholder priorities of evaluation domains based on importance and ease of implementation
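The prioritization described above combines two ratings per domain, importance and ease of implementation. As a minimal sketch, assuming hypothetical 1 to 5 ratings and an illustrative cut‐off of 3 (neither the rating scale nor the cut‐off appears in the original, and the ratings below are invented, not the ranks in Figure 2), domains could be sorted into phases as follows: high on both goes first, high on one goes second, and the rest go last.

```python
from dataclasses import dataclass


@dataclass
class Domain:
    name: str
    importance: int  # hypothetical 1-5 stakeholder rating of importance
    ease: int        # hypothetical 1-5 rating of ease of building data infrastructure


def assign_phase(d: Domain, threshold: int = 3) -> int:
    """Phase 1: important and easy; phase 2: only one of the two; phase 3: neither."""
    high_importance = d.importance >= threshold
    high_ease = d.ease >= threshold
    if high_importance and high_ease:
        return 1
    if high_importance or high_ease:
        return 2
    return 3


def phase_plan(domains, threshold: int = 3) -> dict[int, list[str]]:
    """Group domain names by phase, listing more important (then easier) domains first."""
    plan = {1: [], 2: [], 3: []}
    for d in sorted(domains, key=lambda d: (-d.importance, -d.ease, d.name)):
        plan[assign_phase(d, threshold)].append(d.name)
    return plan


# Invented ratings for illustration only.
domains = [
    Domain("patient characteristics", 5, 4),
    Domain("patient satisfaction", 4, 4),
    Domain("adherence to best practices", 4, 2),
    Domain("time expenditure", 2, 4),
    Domain("ecosystem evolution", 2, 2),
]

for phase, names in phase_plan(domains).items():
    print(phase, names)
```

In practice, clinician feedback such as that described above would be used to revisit both the ratings and the cut‐off rather than treating the output as fixed.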

Other practical opportunities for integrating evaluation capacity into operations were identified. For instance, the clinic is currently transitioning to an integrated EHR, which creates an opportunity to build in data elements that are extractable and usable for research and learning purposes. The metrics collected would align with what is important for clinical practice and inform further growth. In parallel, tools such as a new patient intake form and end‐of‐visit satisfaction questionnaires are being redesigned to feed into the EHR platform and align with the evaluation framework.

5. DISCUSSION

Evaluation is a crucial part of building an LHS. In this paper, we document the development of an evaluation framework for a medical‐dental service for adults with developmental disabilities in Montreal, Canada. Our experience demonstrated (1) the utility of a comprehensive framework that captures contextual factors in addition to clinical outcomes, (2) the need for validated measurement tools that are not cumbersome for everyday practice, (3) the importance of understanding the functional needs of the organization and building a sustainable data infrastructure that addresses those needs, and (4) the need to commit to an evolving, “living” evaluation in a dynamic health system. Overall, we provide insights into evaluating the integration of processes of care for vulnerable populations with chronic conditions in health care systems, and into embedding knowledge generation processes in operational systems to develop an LHS infrastructure.

Adapting an established framework such as RE‐AIM to develop an LHS provides the opportunity to build on previous knowledge. Because RE‐AIM has shown utility across multiple contexts and settings32 and remains relevant across the life cycle of an intervention, from development and adaptation to summative evaluation,34, 35, 36 we chose it to guide our thinking around factors of implementation, particularly as information management and data intake platforms were being refined. Similarly, others have used RE‐AIM as an assessment tool to guide the adaptation of existing community programs.51 Because the framework emphasizes maintenance, or sustainability, this approach can support the optimal scale‐up and spread of a systems intervention, guiding the understanding of how effective, evidence‐informed practices are taken up by the larger system in which the intervention is situated. In our case, this would be a way to scale the service to other similar settings within the province and nationally and to support continued funding for the program. Altogether, we found this framework useful in developing an LHS, as its axes guide future action by emphasizing adoption, implementation, and the context required to sustain the intervention over time.

5.1. Measurement tools

Many measurement tools are used in clinical research studies, but most are not suited to use in a learning system. As we were conceptualizing each domain and identifying tools to capture data, finding validated instruments that would rigorously assess the clinical outcomes of interest while remaining feasible to use in everyday practice was a challenge.52 Mindful of the risk of collecting data for its own sake, we sought patient‐ and provider‐centered methods of collecting outcome data that would drive change (see Appendix A for our selection of tools and rationale). In many domains, the standardized, psychometrically tested tools were impractical, being too lengthy or not directly relevant to quality improvement. In addition, limited financial and human resources constrain the scope of an evaluation and require organizations to streamline it to what is practical.

In an era of big data, there are many opportunities to apply powerful analytics to quantitative datasets,53 linking population‐ and clinical‐level data in a single system. However, clinical models are typically introduced into complex systems with multiple interdependent agents,54 and thus outcomes are affected by multiple factors rather than produced directly and linearly.55 Quantitative data may therefore provide limited insight into the complex processes and contextual elements surrounding the implementation of the service. Qualitative approaches can enrich an LHS by capturing these contextual elements and the nuances of stakeholders' perspectives (for example, see Munoz‐Plaza56). In our setting, the implementation and sustainability axes relied heavily on qualitative data: mapping partnerships, specialist referral corridors for patients, and other key players in the health system to engage all depended on narrative inquiry and interview data with stakeholders. Indeed, others have recently highlighted the use of RE‐AIM in qualitative evaluation.57 Overall, the comprehensiveness of an evaluation is strengthened by employing mixed‐methods approaches.58

5.2. Infrastructure for functional needs

EHRs carry the potential to transform care, evaluation, and clinician practice.59, 60 Integrated records across multiple points of care are especially important for community health providers serving people with complex health needs.61 However, integrating these data into an iterative quality improvement cycle has been a systemic challenge.62 Amid privacy and safety concerns,63, 64 uptake of these data collection systems has been slow,65 and a major challenge is the lack of interoperability across sites.66

At the same time, building an evaluation for an LHS requires infrastructure support. As a program adapts and optimizes its workflow and as organizations grow in capacity and scope, it is important that functional needs guide the development of such infrastructure. Identifying those needs, rather than collecting data out of convenience or habit, requires close, iterative coordination between leadership, researchers, and data managers. In this intervention, an EHR system and other IT infrastructure were being implemented concomitantly as the evaluation was rolled out, creating opportunities to embed research and evaluation infrastructure into the clinical environment.67 In the near future, patient‐ or person‐centered technologies (eg, phone apps), in which health data are housed and managed by the individual, will change the infrastructure and granularity of program evaluation.

5.3. Sustained learning through iteration

Organizations need the capacity to map out a comprehensive evaluation plan initially and the resources to iteratively assess how the plan should evolve. Because contexts are ever‐changing and health systems are complex, organizations that want to stay relevant over time need to embed evaluation within their delivery system and iteratively update their evaluation plan to remain a learning system. Traditional evaluations have been thought of as formative or summative, respectively informing the adaptations needed during implementation or providing a final report after a project is complete. In the learning cycle, however, a learning68 or developmental5 approach to evaluation may be more suitable. For instance, public health system constraints, changes in political direction and partnering organizations, and internal changes such as staffing growth, EHR implementation, and physical space for this clinic will drive the need to review these domains and to decide when to implement each part of the evaluation.

Depending on the pace and direction of growth in an organization, certain components may be measured, adapted, or no longer measured at different times to ensure the evaluation supports the goals of the organization. This learning mindset requires an alignment of values among leadership, practitioners, and the clientele.69 An organizational culture that values learning can foster collaborations between program managers/administrators and evaluators/researchers that are more effective in sustaining a learning system. Structures that build internal research capacity and support embedded researchers enable the organization to achieve the tenets of an LHS.70 At the same time, relying entirely on academic funding may undermine the sustainability of the LHS. Given the need to integrate local practice with systematically derived knowledge,71 organizations ought to spearhead these collaborations through incentive structures that attract research staff.

5.4. Limitations

Limitations of this work include the following. This work was done in one organization in the public health system in Montreal, Canada, and therefore, may not apply equally in other settings. However, the principles, learnings, and process of adapting an evaluation framework are still relevant regardless of context. This work focuses on a specific population with unique differences in communication that shaped how services were structured and delivered. This model itself, therefore, may not apply to all chronic conditions. At the same time, as demonstrated in other pediatric populations with disabilities,72 focusing on the barriers in various vulnerable populations may bring to light solutions that will strengthen our health system in general (eg, better support for transitions, navigation of multiple sectors, and appropriate provider‐patient communication). Lastly, organization leadership and culture welcomed and supported evaluation efforts extensively. Other initiatives may receive more pushback if there is a lack of understanding of the benefits of research and evaluation for organizational objectives. Organizational awareness of the benefits of building an LHS will lead to greater capacity and ease in this area.

6. CONCLUSION

Creating an LHS for complex care populations requires a multilevel approach that considers different stakeholders' needs and ongoing evaluation. Responsive evaluation that aligns with organizational needs and goals is a critical component of the LHS cycle. The RE‐AIM framework offers a structure for considering meaningful axes to support evaluation within an LHS, given its comprehensiveness and its flexibility across time and settings. Continuous engagement between researchers, leadership, and clinicians is necessary for developing such an evaluation. Identifying feasible system‐ and stakeholder‐relevant measures to support evaluation is a challenge, pointing to the need for approaches that capture important clinical outcomes while remaining easy to apply when collecting continuous, high‐volume data. Lastly, a sustainable infrastructure that is lean enough to withstand fluctuations in financial and human resources is essential to ensure the longevity of such programs.

CONFLICT OF INTEREST

The authors have no conflicts of interest to declare.

ACKNOWLEDGMENTS

We wish to acknowledge the staff at CIAID for their time and participation in this work. Particular thanks go to the research advisory panel at See Things My Way Health Services, especially Dr Yona Lunsky for her insights regarding measurement tools and data collection methods in this population. We would also like to thank Drs Ebele Mọgọ, Shaun Cleaver, Anne Hudon, and Srikanth Reddy for their review of the manuscript. All phases of this study were funded by a CIHR Health System Impact Fellowship to JL (#HI5‐154962; Canadian Institutes of Health Research, co‐funded by MITACS and CIAID).

APPENDIX A.

Approaches and tools used to operationalize domains of RE‐AIM evaluation framework for a primary medical‐dental service for adults with developmental disabilities

| Axis, Domain | Description of Approach and Tools | Delivery |
|---|---|---|
| Reach, population need | Assessed by interviewing the president of the organization to identify documents that pertain to the initiation of the clinic and to map out existing services. These documents include government ombudsman reports made available on public websites, press releases, and internal consulting reports and environmental scans that show the gap this clinic was created to address. These were both publicly available documents and documents internal to the organization. | Interview |
| Reach, patient characteristics | Assessed by questionnaires asking about ethnicity, time in Canada (recent immigrant), income bracket, and education, modified from a Canadian Autism National Needs Assessment Survey published by Lai and Weiss. 73 This survey uses multiple‐choice options that were developed with stakeholders (community service organization leaders and families in the ASD field), along with questions selected from, and validated in, other research. | Questionnaire and clinical intake forms |
| Efficacy, service access | Assessed by asking which health and social services were used in the past 6 months, which emergency health services were accessed in the past 6 months, and the type of emergency department visits. 73 | Clinical intake forms |
| Efficacy, caregiver factors | We explored the use of the Brief Family Distress Scale (BFDS), 74 the Revised Caregiver Appraisal Scale (RCAS), 75 and the Depression Anxiety Stress Scales (DASS‐21). 76 After consultation with experts in this domain, we decided to use the BFDS and the DASS‐21 based on usability and brevity. From the DASS‐21, we specifically used the stress and depression subscales, since these have previously been shown to be affected in caregivers (Lunsky, personal communication, 2018). Further consultation with service providers led us to administer these measures not as part of the intake form but as an additional survey later on, to avoid giving the impression that caregivers were being studied while they were seeking and accessing services at the clinic. The RCAS was not used because of limited feasibility in this setting and the length of the overall evaluation. | Questionnaire and clinical intake forms |
| Efficacy, health status | Overall health status was measured by caregiver report (Idler and Benyamini, 77 Patient Enablement Inventory used previously in Lai and Weiss 73). Specific questions on oral health with both clinical and research utility were co‐designed with the dentist. In addition, chart review of electronic medical record data is used to obtain the full clinical picture and to provide cases that illustrate changes in health. Data from the initial intake form and active diagnoses will be collected in the EHR to track the medical complexity of each patient. Specific variables include concurrent diagnoses per patient, quantified by type and severity to better describe and subdivide our sample. | Questionnaire and clinical intake forms |
| Efficacy, patient satisfaction | To have a patient‐centered measure of service quality, after a review of the Patient‐Reported Outcome Measures (PROMs) literature, we decided to use the Measure of Processes of Care‐20 (MPOC‐20 47) and the Patient Enablement Inventory (PEI 48, 49) with an open‐ended section for comments (Bayliss et al78). The MPOC‐20 will be administered once a year to all patients, and the PEI with a comment section will be given after each visit as a proxy for self‐care and self‐efficacy, a short‐term indicator of program efficacy. | Questionnaire and clinical end‐of‐visit forms |
| Adoption, usability | Assessed by continual feedback from staff about their satisfaction with the current model and how to improve it. | Interviews |
| Adoption, professional development and contributions | Assessed by interviews with the service providers in the clinic to track their career development, contributions to the field at large, and teaching of trainees. This was measured in part by the number of professional collaborations and trainees reached directly and indirectly (eg, teaching and knowledge transfer activities). Individual semistructured interviews with providers ask about the benefits they see in this specialized clinic. Interviews will target user perceptions of the efficacy and impact of the clinic and potential areas of development. In addition, we will ask about impacts on their career development, for example, “how has your involvement in the clinic impacted your professional and research trajectory?”, “what are the benefits and synergies you see in this model of care?”, and “how many additional trainees have you taught as part of this clinic and what do you see as the impact of those exposures?” | Interviews |
| Adoption, adherence to best practices | Assessed by EMR data that examine the number of times clinical guidelines or specific algorithms were used appropriately in an evidence‐informed fashion. | EMR analytics |
| Implementation, system reach and acceptability | Assessed using a Consultation Services Survey on Process, Satisfaction and Utility. 50 This survey will be sent to external physicians to gain their perspective on the quality and value of the services this clinic offers, and will accompany each consultation report that the clinic provides. | Questionnaire |
| Implementation, time expenditure | Assessed using Salesforce, a platform able to capture operational metrics in the clinic to optimize scheduling and workflow. | IT analytics |
| Implementation, cost‐benefit analysis | Assessed by interviews with managers and clinicians to explore the synergies between medical and dental care, team‐based care approaches, and nurse‐only clinic days, including the regulatory and financial constraints in the local public health system. | Interviews |
| Maintenance, capacity building | Assessed by a survey sent electronically via email to the requesting service provider after a consult report has been given, and by surveys to trainees (eg, residents) gauging their comfort and confidence working with this population. | Questionnaire |
| Maintenance, system embeddedness | Assessed by tracking referrals and consultation requests over time through reports from the EMR. These data will be quantified to increase our knowledge of the embeddedness of this clinic in the greater health system and of how changes in volume relate to clinic development. | EMR analytics |
| Maintenance, clinic process map and ecosystem evolution | Individual semistructured interviews will be completed with management staff to understand the development of the clinic and its surrounding context. Sample questions include “what were the hurdles in implementing this model?”, “what were the key ingredients to integrate this model into the current health system?”, and “what challenges were unforeseen and how did you overcome those?” Pertinent documents will be analyzed to trace the path from inputs to impact in the development of the clinic. We will also identify partners and providers in external organizations who may provide insights into how the landscape has changed because of the clinic, and map out the formal and informal corridors established with the public sector services network. | Interviews |
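For the caregiver factors domain, only the stress and depression subscales of the DASS‐21 were retained. As a minimal sketch, assuming the standard DASS‐21 scoring key (21 items rated 0 to 3; depression items 3, 5, 10, 13, 16, 17, 21; stress items 1, 6, 8, 11, 12, 14, 18; subscale sums doubled to match the full DASS‐42 metric), the two subscale scores could be computed as below. The function names are hypothetical, severity cut‐offs are deliberately left out, and any real use should be checked against the official DASS scoring manual.

```python
# Item numbers (1-based) of the DASS-21 depression and stress subscales,
# assuming the standard DASS-21 scoring key.
DEPRESSION_ITEMS = (3, 5, 10, 13, 16, 17, 21)
STRESS_ITEMS = (1, 6, 8, 11, 12, 14, 18)


def subscale_score(responses, items):
    """Sum the 0-3 ratings for the given items and double the sum.

    Doubling puts DASS-21 subscale scores on the same metric as the DASS-42.
    `responses` is a sequence of 21 integer ratings in questionnaire order.
    """
    if len(responses) != 21:
        raise ValueError("expected 21 item responses")
    if any(r not in (0, 1, 2, 3) for r in responses):
        raise ValueError("each response must be an integer from 0 to 3")
    return 2 * sum(responses[i - 1] for i in items)


def caregiver_dass_scores(responses):
    """Return only the two subscales retained in this evaluation (hypothetical helper)."""
    return {
        "depression": subscale_score(responses, DEPRESSION_ITEMS),
        "stress": subscale_score(responses, STRESS_ITEMS),
    }


print(caregiver_dass_scores([1] * 21))  # {'depression': 14, 'stress': 14}
```

Scoring only the retained subscales keeps the caregiver survey short, which matches the brevity rationale given in the appendix.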

Lai J, Klag M, Shikako‐Thomas K. Designing a program evaluation for a medical‐dental service for adults with autism and intellectual disabilities using the RE‐AIM framework. Learn Health Sys. 2019;3:e10192 10.1002/lrh2.10192

REFERENCES

- 1. Friedman C, Rubin J, Brown J, et al. Toward a science of learning systems: a research agenda for the high‐functioning learning health system. J Am Med Inform Assoc. 2014;22(1):43‐50.

- 2. Riley WT, Glasgow RE, Etheredge L, Abernethy AP. Rapid, responsive, relevant (R3) research: a call for a rapid learning health research enterprise. Clinical and translational medicine. 2013;2(1):10.

- 3. Greene SM, Reid RJ, Larson EB. Implementing the learning health system: from concept to action. Ann Intern Med. 2012;157(3):207‐210.

- 4. Hall JN. Pragmatism, evidence, and mixed methods evaluation. New Directions for Evaluation. 2013;2013(138):15‐26.

- 5. Patton MQ. Developmental evaluation. Evaluation Practice. 1994;15(3):311‐319.

- 6. Green LW, Glasgow RE. Evaluating the relevance, generalization, and applicability of research: issues in external validation and translation methodology. Eval Health Prof. 2006;29(1):126‐153.

- 7. Tunis SR, Stryer DB, Clancy CM. Practical clinical trials: increasing the value of clinical research for decision making in clinical and health policy. JAMA. 2003;290(12):1624‐1632.

- 8. Key KD, Lewis EY. Sustainable community engagement in a constantly changing health system. Learning Health Systems. 2018;2(3):e10053.

- 9. American Psychiatric Association. Diagnostic and Statistical Manual of Mental Disorders (DSM‐5®). Washington, DC: American Psychiatric Publishing; 2013.

- 10. Gerhardt PF, Lainer I. Addressing the needs of adolescents and adults with autism: a crisis on the horizon. J Contemp Psychother. 2011;41(1):37‐45.

- 11. Croen LA, Zerbo O, Qian Y, et al. The health status of adults on the autism spectrum. Autism. 2015;19(7):814‐823.

- 12. Shattuck PT, Seltzer MM, Greenberg JS, et al. Change in autism symptoms and maladaptive behaviors in adolescents and adults with an autism spectrum disorder. J Autism Dev Disord. 2007;37(9):1735‐1747.

- 13. Havercamp SM, Scott HM. National health surveillance of adults with disabilities, adults with intellectual and developmental disabilities, and adults with no disabilities. Disabil Health J. 2015;8(2):165‐172.

- 14. Lunsky Y, Gracey C, Bradley E. Adults with autism spectrum disorders using psychiatric hospitals in Ontario: clinical profile and service needs. Res Autism Spectr Disord. 2009;3(4):1006‐1013.

- 15. Cashin A, Buckley T, Trollor JN, Lennox N. A scoping review of what is known of the physical health of adults with autism spectrum disorder. J Intellect Disabil. 2018;22(1):96‐108.

- 16. LoVullo SV, Matson JL. Comorbid psychopathology in adults with autism spectrum disorders and intellectual disabilities. Res Dev Disabil. 2009;30(6):1288‐1296.

- 17. McNeil K, Gemmill M, Abells D, et al. Circles of care for people with intellectual and developmental disabilities: communication, collaboration, and coordination. Canadian Family Physician. 2018;64(Suppl 2):S51‐S56.

- 18. Weiss JA, Isaacs B, Diepstra H, et al. Health concerns and health service utilization in a population cohort of young adults with autism spectrum disorder. J Autism Dev Disord. 2018;48(1):36‐44.

- 19. Durbin A, Balogh R, Lin E, Wilton AS, Lunsky Y. Emergency department use: common presenting issues and continuity of care for individuals with and without intellectual and developmental disabilities. Journal of Autism and Developmental Disorders. 2018;48(10):3542‐3550.

- 20. Balogh RS, Lake JK, Lin E, Wilton A, Lunsky Y. Disparities in diabetes prevalence and preventable hospitalizations in people with intellectual and developmental disability: a population‐based study. Diabet Med. 2015;32(2):235‐242.

- 21. Muskat B, Burnham Riosa P, Nicholas DB, Roberts W, Stoddart KP, Zwaigenbaum L. Autism comes to the hospital: the experiences of patients with autism spectrum disorder, their parents and health‐care providers at two Canadian paediatric hospitals. Autism. 2015;19(4):482‐490.

- 22. Vogan V, Lake JK, Tint A, Weiss JA, Lunsky Y. Tracking health care service use and the experiences of adults with autism spectrum disorder without intellectual disability: a longitudinal study of service rates, barriers and satisfaction. Disabil Health J. 2017;10(2):264‐270.

- 23. Levesque JF, Harris MF, Russell G. Patient‐centred access to health care: conceptualising access at the interface of health systems and populations. International Journal for Equity in Health. 2013;12(1):18.

- 24. Starfield B, Shi L, Macinko J. Contribution of primary care to health systems and health. Milbank Q. 2005;83(3):457‐502.

- 25. Lennox N, Van Driel ML, van Dooren K. Supporting primary healthcare professionals to care for people with intellectual disability: a research agenda. Journal of Applied Research in Intellectual Disabilities. 2015;28(1):33‐42.

- 26. Saqr Y, Braun E, Porter K, Barnette D, Hanks C. Addressing medical needs of adolescents and adults with autism spectrum disorders in a primary care setting. Autism. 2018;22(1):51‐61.

- 27. Wilkinson J, Dreyfus D, Cerreto M, Bokhour B. “Sometimes I feel overwhelmed”: educational needs of family physicians caring for people with intellectual disability. Intellect Dev Disabil. 2012;50(3):243‐250.

- 28. Sullivan WF, Diepstra H, Heng J, et al. Primary care of adults with intellectual and developmental disabilities: 2018 Canadian consensus guidelines. Canadian Family Physician. 2018;64(4):254‐279.

- 29. Gulliford M, Naithani S, Morgan M. What is 'continuity of care'? J Health Serv Res Policy. 2006;11(4):248‐250.

- 30. Lowes LP, Noritz GH, Newmeyer A, et al. ‘Learn From Every Patient’: implementation and early results of a learning health system. Developmental Medicine & Child Neurology. 2017;59(2):183‐191.

- 31. Glasgow RE, Vogt TM, Boles SM. Evaluating the public health impact of health promotion interventions: the RE‐AIM framework. Am J Public Health. 1999;89(9):1322‐1327.

- 32. Harden SM, Smith ML, Ory MG, Smith‐Ray RL, Estabrooks PA, Glasgow RE. RE‐AIM in clinical, community, and corporate settings: perspectives, strategies, and recommendations to enhance public health impact. Frontiers in Public Health. 2018;6:71.

- 33. Glasgow RE, Estabrooks PE. Pragmatic applications of RE‐AIM for health care initiatives in community and clinical settings. Prev Chronic Dis. 2018;15:E02.

- 34. Burke SM, Shapiro S, Petrella RJ, et al. Using the RE‐AIM framework to evaluate a community‐based summer camp for children with obesity: a prospective feasibility study. BMC obesity. 2015;2(1):21.

- 35. Belkora J, Volz S, Loth M, et al. Coaching patients in the use of decision and communication aids: RE‐AIM evaluation of a patient support program. BMC Health Services Research. 2015;15(1):209.

- 36. Finlayson M, Cattaneo D, Cameron M, et al. Applying the RE‐AIM framework to inform the development of a multiple sclerosis falls‐prevention intervention. International Journal of MS Care. 2014;16(4):192‐197.

- 37. Mercer SL, DeVinney BJ, Fine LJ, Green LW, Dougherty D. Study designs for effectiveness and translation research: identifying trade‐offs. Am J Prev Med. 2007;33(2):139‐154.

- 38. Gagliardi AR, Berta W, Kothari A, Boyko J, Urquhart R. Integrated knowledge translation (IKT) in health care: a scoping review. Implementation Science. 2015;11(1):38.

- 39. Tamblyn R, McMahon M, Nadigel J, Dunning B. Health system transformation through research innovation. Healthcare Papers. 2016;16 (Special Issue).

- 40. Marshall M, Pagel C, French C, et al. Moving improvement research closer to practice: the researcher‐in‐residence model. BMJ Qual Saf. 2014. pp.bmjqs‐2013

- 41. Green LW. Making research relevant: if it is an evidence‐based practice, where's the practice‐based evidence? Family Practice. 2008;25(suppl_1):i20‐i24.

- 42. Pawson R, Tilley N. Realistic evaluation. Thousand Oaks, CA: Sage Publications, Inc.; 1997.

- 43. Gugiu PC, Rodriguez‐Campos L. Semi‐structured interview protocol for constructing logic models. Eval Program Plann. 2007;30(4):339‐350.

- 44. Moore GF, Audrey S, Barker M, et al. Process evaluation of complex interventions: Medical Research Council guidance. BMJ. 2015;350:h1258.

- 45. Fleiszer AR, Semenic SE, Ritchie JA, Richer MC, Denis JL. The sustainability of healthcare innovations: a concept analysis. J Adv Nurs. 2015;71(7):1484‐1498.

- 46. Murphy M, Hollinghurst S, Salisbury C. Identification, description and appraisal of generic PROMs for primary care: a systematic review. BMC family practice. 2018;19(1):41.

- 47. King S, King G, Rosenbaum P. Evaluating health service delivery to children with chronic conditions and their families: development of a refined measure of processes of care (MPOC‐20). Child Health Care. 2004;33(1):35‐57.

- 48. Howie JG, Heaney DJ, Maxwell M, Walker JJ. A comparison of a patient enablement instrument (PEI) against two established satisfaction scales as an outcome measure of primary care consultations. Fam Pract. 1998;15(2):165‐171.

- 49. Hudon C, Fortin M, Rossignol F, Bernier S, Poitras ME. The Patient Enablement Instrument‐French version in a family practice setting: a reliability study. BMC family practice. 2011;12(1):71.

- 50. Forrest CB, Glade GB, Baker AE, Bocian A, von Schrader S, Starfield B. Coordination of specialty referrals and physician satisfaction with referral care. Arch Pediatr Adolesc Med. 2000;154(5):499‐506.

- 51. Altpeter M, Gwyther LP, Kennedy SR, Patterson TR, Derence K. From evidence to practice: using the RE‐AIM framework to adapt the REACHII caregiver intervention to the community. Dementia. 2015;14(1):104‐113. [DOI] [PubMed] [Google Scholar]

- 52. Funderburk JS, Shepardson RL. Real‐world program evaluation of integrated behavioral health care: improving scientific rigor. Families, Systems, & Health. 2017;35(2):114. [DOI] [PubMed] [Google Scholar]

- 53. Krumholz HM. Big data and new knowledge in medicine: the thinking, training, and tools needed for a learning health system. Health Aff. 2014;33(7):1163‐1170. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54. Plsek PE, Greenhalgh T. Complexity science: the challenge of complexity in health care. BMJ: British Medical Journal. 2001;323(7313):625‐628. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55. Lipsitz LA. Understanding health care as a complex system: the foundation for unintended consequences. Jama. 2012;308(3):243‐244. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 56. Munoz‐Plaza CE, Parry C, Hahn EE, et al. Integrating qualitative research methods into care improvement efforts within a learning health system: addressing antibiotic overuse. Health Research Policy and Systems. 2016;14(1):63. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57. Holtrop JS, Rabin BA, Glasgow RE. Qualitative approaches to use of the RE‐AIM framework: rationale and methods. BMC Health Serv Res. 2018;18(1):177.

- 58. Sale JE, Lohfeld LH, Brazil K. Revisiting the quantitative‐qualitative debate: implications for mixed‐methods research. Qual Quant. 2002;36(1):43‐53.

- 59. Carey DJ, Fetterolf SN, Davis FD, et al. The Geisinger MyCode community health initiative: an electronic health record–linked biobank for precision medicine research. Genet Med. 2016;18(9):906‐913.

- 60. Hatef E, Kharrazi H, VanBaak MF, et al. A state‐wide health IT infrastructure for population health: building a community‐wide electronic platform for Maryland's all‐payer global budget. Online J Public Health Inform. 2017;9(3):e195.

- 61. Osborn R, Moulds D, Schneider EC, Doty MM, Squires D, Sarnak DO. Primary care physicians in ten countries report challenges caring for patients with complex health needs. Health Aff. 2015;34(12):2104‐2112.

- 62. Schneeweiss S. Learning from big health care data. N Engl J Med. 2014;370(23):2161‐2163.

- 63. Roski J, Bo‐Linn GW, Andrews TA. Creating value in health care through big data: opportunities and policy implications. Health Aff. 2014;33(7):1115‐1122.

- 64. Singh H, Sittig DF. Measuring and improving patient safety through health information technology: the health IT safety framework. BMJ Qual Saf. 2015;bmjqs‐2015.

- 65. Furukawa MF, King J, Patel V, Hsiao CJ, Adler‐Milstein J, Jha AK. Despite substantial progress in EHR adoption, health information exchange and patient engagement remain low in office settings. Health Aff. 2014;33(9):1672‐1679.

- 66. Chang F, Gupta N. Progress in electronic medical record adoption in Canada. Can Fam Physician. 2015;61(12):1076‐1084.

- 67. Kohli R, Tan SSL. Electronic health records: how can IS researchers contribute to transforming healthcare? MIS Q. 2016;40(3):553‐573.

- 68. Balasubramanian BA, Cohen DJ, Davis MM, et al. Learning evaluation: blending quality improvement and implementation research methods to study healthcare innovations. Implement Sci. 2015;10(1):31.

- 69. Akhnif E, Macq J, Fakhreddine MI, Meessen B. Scoping literature review on the learning organisation concept as applied to the health system. Health Res Policy Syst. 2017;15(1):16.

- 70. Reid RJ. Embedding research in the learning health system. Healthc Pap. 2016;16(Special Issue):30‐35.

- 71. Guise JM, Savitz LA, Friedman CP. Mind the gap: putting evidence into practice in the era of learning health systems. J Gen Intern Med. 2018;33(12):2237‐2239.

- 72. Moore JL, Shikako‐Thomas K, Backus D. Knowledge translation in rehabilitation: a shared vision. Pediatr Phys Ther. 2017;29:S64‐S72.

- 73. Lai JK, Weiss JA. Priority service needs and receipt across the lifespan for individuals with autism spectrum disorder. Autism Res. 2017;10(8):1436‐1447.

- 74. Weiss JA, Lunsky Y. The Brief Family Distress Scale: a measure of crisis in caregivers of individuals with autism spectrum disorders. J Child Fam Stud. 2011;20(4):521‐528.

- 75. Steffen AM, McKibbin C, Zeiss AM, Gallagher‐Thompson D, Bandura A. The Revised Scale for Caregiving Self‐Efficacy: reliability and validity studies. J Gerontol B Psychol Sci Soc Sci. 2002;57(1):P74‐P86.

- 76. Osman A, Wong JL, Bagge CL, Freedenthal S, Gutierrez PM, Lozano G. The Depression Anxiety Stress Scales‐21 (DASS‐21): further examination of dimensions, scale reliability, and correlates. J Clin Psychol. 2012;68(12):1322‐1338.

- 77. Idler EL, Benyamini Y. Self‐rated health and mortality: a review of twenty‐seven community studies. J Health Soc Behav. 1997;38:21‐37.

- 78. Bayliss EA, Bonds DE, Boyd CM, et al. Understanding the context of health for persons with multiple chronic conditions: moving from what is the matter to what matters. Ann Fam Med. 2014;12(3):260‐269.