Abstract

The p value has been widely used to summarise statistical significance in data analysis. However, misuse and misinterpretation of the p value are common in practice. Our results show that if the model is misspecified, the distribution of the p value may be far from the uniform distribution it should have under the null hypothesis, which makes decisions based on the p value invalid.

Keywords: asymptotic distribution, hypothesis testing, linear regression

Introduction

In 2016, Ronald Wasserstein and Nicole Lazar1 released a statement on behalf of the American Statistical Association warning against the misuse and misinterpretation of statistical significance and p values in scientific research. The statement offers six principles for using p values. Controversies around p values have in fact arisen repeatedly, both in the statistical community and in other fields. For example, in 2015 the editors of Basic and Applied Social Psychology decided to ban p values from papers published in that journal. A recent paper in Nature again raised the issue of using p values in scientific discovery.2 Although the p value has a history almost as long as that of modern statistics and has been used in millions of scientific publications, ironically it has never been rigorously defined in the statistical literature. Consequently, the p values reported in publications rest on fairly intuitive interpretations, which may be one reason the p value has been so widely misused and misunderstood. A formal discussion of a rigorous definition of the p value is beyond the scope of this paper; our discussion follows the current conventional interpretation of p values.

Suppose X1, X2, …, Xn is a random sample from some probability distribution and T=T(X1, X2, …, Xn) is a statistic used to test some null hypothesis. Suppose the observed data are x1, x2, …, xn, so that the calculated value of the test statistic is T(x1, x2, …, xn). Informally speaking, the p value is the probability, under the null hypothesis, that T(X1, X2, …, Xn), as a random variable, takes values more ‘extreme’ than the observed value T(x1, x2, …, xn). If larger values are more ‘extreme’, then the p value (given the data) is the probability that T(X1, X2, …, Xn)≥T(x1, x2, …, xn), that is,

$$p(x_1, x_2, \ldots, x_n) = P\{T(X_1, X_2, \ldots, X_n) \ge T(x_1, x_2, \ldots, x_n)\}. \qquad (1)$$
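When the null distribution of T has no convenient closed form but data can be generated under the null hypothesis, equation (1) can be approximated by simulation. The following is a minimal Python sketch under stated assumptions: the names `monte_carlo_p_value` and `null_sample_mean` are hypothetical, and the null model (25 independent N(0, 1) observations with T equal to their mean) is chosen only because its exact p value is known, P(Z ≥ 0.4·√25) = P(Z ≥ 2) ≈ 0.0228.

```python
import random
import statistics


def monte_carlo_p_value(t_observed, simulate_null_statistic, n_sim=10_000, seed=0):
    """Approximate p = P{T >= t_observed} under H0, as in equation (1).

    simulate_null_statistic(rng) is a hypothetical callback that generates one
    data set under H0 and returns the value of T computed from it.
    """
    rng = random.Random(seed)
    exceed = sum(simulate_null_statistic(rng) >= t_observed for _ in range(n_sim))
    return exceed / n_sim


def null_sample_mean(rng, n=25):
    # Illustrative null model: n i.i.d. N(0, 1) observations; T is their mean.
    return statistics.fmean([rng.gauss(0.0, 1.0) for _ in range(n)])


# Exact answer for comparison: P(Z >= 0.4 * sqrt(25)) = P(Z >= 2), about 0.0228.
p_hat = monte_carlo_p_value(0.4, null_sample_mean, n_sim=20_000)
```

The estimate is simply the fraction of simulated statistics at least as large as the observed one; its Monte Carlo error shrinks like 1/√n_sim.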

To calculate the p value, we need to know the distribution of T under the null hypothesis. For example, the two-sample t-test is widely used to test whether two independent samples have the same mean. If both samples are normally distributed with the same variance, the test statistic has a central t distribution under the null hypothesis. If the variances differ, the null distribution of the test statistic does not have a closed form. This is the so-called Behrens–Fisher problem.3 However, if the sample sizes in both groups are large enough, the null distribution of the test statistic can be safely approximated by the standard normal distribution (by the central limit theorem).
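The large-sample route for unequal variances can be sketched as follows: each group's variance is estimated separately and the resulting statistic is referred to N(0, 1). This is a minimal illustration, not an exact solution of the unequal-variance problem; the function names are hypothetical, and the normal tail probability is computed with `math.erfc`.

```python
import math
import statistics


def normal_upper_tail(z):
    """P(Z >= z) for a standard normal Z, via the complementary error function."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def large_sample_two_sample_test(x, y):
    """Two-sided large-sample test of equal means without assuming equal variances.

    T = (mean(x) - mean(y)) / sqrt(s_x^2 / n_x + s_y^2 / n_y) is referred to
    N(0, 1); this is justified only when both samples are large.
    """
    se = math.sqrt(statistics.variance(x) / len(x) + statistics.variance(y) / len(y))
    t = (statistics.fmean(x) - statistics.fmean(y)) / se
    return t, 2.0 * normal_upper_tail(abs(t))
```

For example, `large_sample_two_sample_test([2, 4, 6, 8], [1, 2, 3, 4])` gives T = √3; such tiny samples are used only to check the arithmetic, since the normal reference would be unreliable there.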

In the case of a discrete outcome variable, for example the treatment outcome (success or failure) in a randomised clinical trial, the results are often presented in a contingency table (see table 1).

Table 1.

Outcome of a randomised clinical trial

| | Group 1 | Group 2 | Total |
|---|---|---|---|
| Successes | S1 | S2 | S=S1+S2 |
| Failures | F1 | F2 | F=F1+F2 |
| Total | n1 | n2 | n=n1+n2 |

where Si in group i (i=1, 2) follows the binomial distribution with sample size ni and probability of success pi. The hypothesis that is usually of interest is

$$H_0: p_1 = p_2 \quad \text{versus} \quad H_1: p_1 \ne p_2.$$

Pearson’s χ2 test measures the departure from H0 by calculating an expected frequency table (see table 2). This table is constructed by conditioning on the marginal totals and filling in the cells according to H0, that is, assuming p1=p2:

Table 2.

Expected frequencies in contingency tables

| Expected frequencies | Group 1 | Group 2 | Total |
|---|---|---|---|
| Successes | n1S/n | n2S/n | S |
| Failures | n1F/n | n2F/n | F |
| Total | n1 | n2 | n |

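Using this expected frequency table, a statistic is calculated by going through the four cells of the table and computing

$$\chi^2 = \sum_{\text{cells}} \frac{(O - E)^2}{E},$$

where O is the observed frequency in a cell (table 1) and E is the corresponding expected frequency (table 2). Under H0, this statistic follows the χ2 distribution with 1 df asymptotically. Thus, the p value it yields is

$$p = P\{\chi^2_1 \ge \chi^2_{\text{obs}}\},$$

where $\chi^2_{\text{obs}}$ is the observed value of the statistic and $\chi^2_1$ follows the χ2 distribution with 1 df.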
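The Pearson calculation for a 2×2 table laid out as in table 1 can be sketched in a few lines. This is an illustration under our own naming (`pearson_chi2_2x2` is hypothetical), without a continuity correction; it uses the identity P(χ²₁ ≥ x) = P(|Z| ≥ √x) = erfc(√(x/2)) for a standard normal Z, so no statistics library is needed.

```python
import math


def pearson_chi2_2x2(s1, s2, f1, f2):
    """Pearson chi-square statistic and asymptotic p value (1 df) for a 2x2 table."""
    observed = [[s1, s2], [f1, f2]]
    row_totals = [s1 + s2, f1 + f2]     # S and F
    col_totals = [s1 + f1, s2 + f2]     # n1 and n2
    n = sum(row_totals)
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n  # e.g. n1 * S / n
            chi2 += (observed[i][j] - expected) ** 2 / expected
    # With 1 df, chi2_1 = Z**2, so P(chi2_1 >= x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value
```

For counts S1=10, S2=20, F1=30, F2=20, the expected frequencies are 15, 15, 25 and 25, giving χ2 = 16/3 ≈ 5.33 and p ≈ 0.021.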

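Fisher’s exact test is preferred over Pearson’s χ2 test when the cell counts in table 1 are very small. Consider testing the hypothesis

$$H_0: p_1 = p_2 \quad \text{versus} \quad H_1: p_1 > p_2.$$

One can show that S=S1+S2 is a sufficient statistic for the common success probability under H0. Given the value of S, it is reasonable to use S1 as the test statistic and to reject H0 in favour of H1 for large values of S1, because, with S fixed, large values of S1 correspond to small values of S2. The conditional distribution of S1 given S=s is hypergeometric(n, n1, s). Thus, the conditional p value is

$$p = P(S_1 \ge s_1 \mid S = s) = \sum_{j = s_1}^{\min(n_1, s)} f(j; n, n_1, s),$$

where s1 is the observed value of S1 and

$$f(j; n, n_1, s) = \frac{\binom{n_1}{j}\binom{n_2}{s - j}}{\binom{n}{s}}$$

is the probability mass function of the hypergeometric(n, n1, s) distribution.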
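The one-sided conditional p value of Fisher’s exact test, which rejects for large S1 given S=s, can be computed exactly with integer binomial coefficients. A minimal sketch, with hypothetical function names and the argument order (S1, S2, F1, F2) of table 1:

```python
from math import comb


def hypergeom_pmf(j, n, n1, s):
    """P(S1 = j | S = s): j of the s successes fall in group 1, s - j in group 2."""
    return comb(n1, j) * comb(n - n1, s - j) / comb(n, s)


def fisher_one_sided_p(s1, s2, f1, f2):
    """Conditional p value P(S1 >= s1 | S = s) for H1: p1 > p2."""
    n1, n2 = s1 + f1, s2 + f2
    n, s = n1 + n2, s1 + s2
    upper = min(n1, s)  # S1 cannot exceed the group size or the total successes
    return sum(hypergeom_pmf(j, n, n1, s) for j in range(s1, upper + 1))
```

For example, three successes out of three in group 1 and none out of three in group 2 give p = 1/20 = 0.05, the smallest value attainable with these margins.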

It is well known that, because of randomness, repeating the same experiment under the same design may give different results. Equation (1) shows that the p value depends explicitly on the observed data x1, x2, …, xn. Hence, the p value is itself a random variable taking values in [0,1]. In this paper we study the behaviour of this random variable. Our results show that under some conditions the distribution of the p value may be far from uniform, which makes results based on the p value uninterpretable.

The distribution of p values under H0

As discussed in the last section, the p value changes with the observations; hence, it is a random variable. Let FT denote the cumulative distribution function of the test statistic T under the null hypothesis. The p value is 1−FT(T). If FT is continuous, the p value is uniformly distributed on [0,1].4
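The uniformity follows from the probability integral transform: when FT is continuous, FT(T) is itself uniform on [0,1], that is, P{FT(T) < v} = v for 0 ≤ v ≤ 1. Hence, for any 0 ≤ u ≤ 1,

$$P(p \le u) = P\{1 - F_T(T) \le u\} = P\{F_T(T) \ge 1 - u\} = 1 - (1 - u) = u.$$

When FT is discrete and the p value is defined as P{T ≥ t} as in equation (1), the same argument yields only P(p ≤ u) ≤ u, typically with strict inequality.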

For example, the null distribution of the two-sample t statistic is continuous, so its p value has a uniform [0,1] distribution. For Pearson’s χ2 test, although the test statistic has a discrete distribution, the p value is calculated from its asymptotic χ2 distribution; hence, the p value is approximately uniformly distributed when the sample size is large enough.

The p value of Fisher’s exact test is different: it can take only finitely many values. When the sample size is small, its distribution may be far from uniform. When the sample size is large enough, however, the p value of Fisher’s exact test is close to that of Pearson’s χ2 test, and computing the exact p value is then unnecessary.
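The effect of discreteness can be computed exactly for the one-sided conditional Fisher test that rejects for large S1 given S=s. The sketch below (the function name `fisher_p_value_size` is hypothetical) enumerates every possible value of S1 for fixed margins, computes its p value, and adds up the null probability of the outcomes whose p value is at most α. Because only finitely many p values are attainable, this probability is at most α and is often strictly smaller, so the p value cannot be uniform.

```python
from math import comb


def fisher_p_value_size(n1, n2, s, alpha):
    """Exact conditional P(p <= alpha | S = s) under H0 for the one-sided Fisher test.

    Under H0, S1 given S = s is hypergeometric(n, n1, s); the p value of an
    observed value j is P(S1 >= j | S = s).
    """
    n = n1 + n2
    lo, hi = max(0, s - n2), min(n1, s)
    pmf = {j: comb(n1, j) * comb(n2, s - j) / comb(n, s) for j in range(lo, hi + 1)}
    size = 0.0
    for j in pmf:
        p_value = sum(pmf[k] for k in pmf if k >= j)
        if p_value <= alpha:
            size += pmf[j]  # H0 would be rejected if S1 = j were observed
    return size
```

With n1=n2=3 and s=3, the only attainable p values are 0.05, 0.5, 0.95 and 1, so for any α between 0 and 0.05 the test never rejects at all.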

In regression analysis, the significance of the coefficient of each covariate is usually tested with the Wald test.5 When the sample size is large enough, the null distribution of the Wald statistic is close to a standard normal distribution, which makes it convenient to calculate the asymptotic p value.
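For a simple linear regression fitted by ordinary least squares, the Wald statistic for the slope is the estimate divided by its estimated standard error. A minimal sketch, assuming the conventional constant-variance standard error and a two-sided N(0, 1) reference (the function name `wald_slope_test` is ours):

```python
import math
import statistics


def wald_slope_test(x, y):
    """Fit y = a0 + a1 * x by least squares and test H0: a1 = 0 with a Wald z test.

    The standard error assumes a correctly specified linear model with constant
    error variance; the p value uses the large-sample N(0, 1) reference.
    """
    n = len(x)
    xbar, ybar = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    intercept = ybar - slope * xbar
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(rss / (n - 2) / sxx)
    z = slope / se
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # equals 2 * P(Z >= |z|)
    return slope, se, p_value
```

For x = (0, 1, 2, 3, 4) and y = (1, 3, 2, 5, 4), the slope is 0.8 with standard error √0.12 ≈ 0.346, so z ≈ 2.31 and p ≈ 0.021. With only five points the normal reference is crude; the example only checks the arithmetic.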

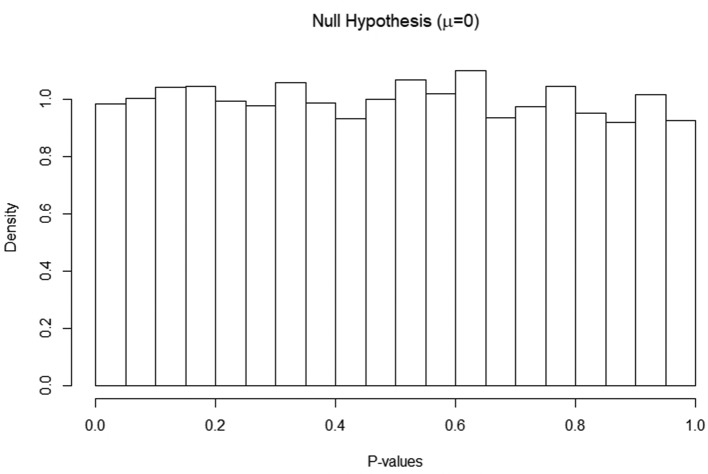

Figure 1 shows the histogram of the p values of the one-sample t-test of the hypothesis H0: μ=0.

Figure 1.

Histogram of p values of the one-sample t-test of the hypothesis H0: μ=0.

The test statistic is T=√n X̄/S, where X̄ is the sample mean, S is the sample SD and n is the sample size. Under H0, T follows a t distribution with n−1 df.

We simulated 10 000 replicates of the data from the standard normal distribution with sample size n. The histogram shows that the p values are approximately uniformly distributed.
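A simulation of this kind can be sketched with the Python standard library alone. The sample size is not stated here, so the sketch assumes n=10 and a two-sided alternative; the Student t tail probability is computed from the classical finite series for integer degrees of freedom, with θ = arctan(|t|/√df). All function names are hypothetical. If the p value is uniform, the proportion of simulated p values at or below any cut-off u should be close to u.

```python
import math
import random
import statistics


def t_two_sided_p(t, df):
    """Two-sided p value P(|T| >= |t|) for Student's t with integer df >= 1.

    Uses the classical finite series for P(|T| <= |t|) with
    theta = atan(|t| / sqrt(df)); even and odd df use different series.
    """
    theta = math.atan(abs(t) / math.sqrt(df))
    cos2 = math.cos(theta) ** 2
    if df % 2 == 0:
        # sin(theta) * [1 + (1/2)cos^2 + (1*3)/(2*4)cos^4 + ...] up to cos^(df-2)
        term, total = 1.0, 1.0
        for k in range(1, (df - 2) // 2 + 1):
            term *= (2 * k - 1) / (2 * k) * cos2
            total += term
        inside = math.sin(theta) * total
    else:
        # (2/pi) * [theta + sin(theta) * (cos + (2/3)cos^3 + ...)]; df = 1 is Cauchy
        total = 0.0
        if df > 1:
            term = math.cos(theta)
            total = term
            for k in range(1, (df - 3) // 2 + 1):
                term *= (2 * k) / (2 * k + 1) * cos2
                total += term
        inside = 2.0 / math.pi * (theta + math.sin(theta) * total)
    return 1.0 - inside


def simulate_t_test_p_values(n=10, replicates=10_000, seed=1):
    """p values of the one-sample t-test of H0: mu = 0 on N(0, 1) samples of size n."""
    rng = random.Random(seed)
    p_values = []
    for _ in range(replicates):
        x = [rng.gauss(0.0, 1.0) for _ in range(n)]
        t = math.sqrt(n) * statistics.fmean(x) / statistics.stdev(x)
        p_values.append(t_two_sided_p(t, n - 1))
    return p_values
```

A histogram of the values returned by `simulate_t_test_p_values()` should be roughly flat.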

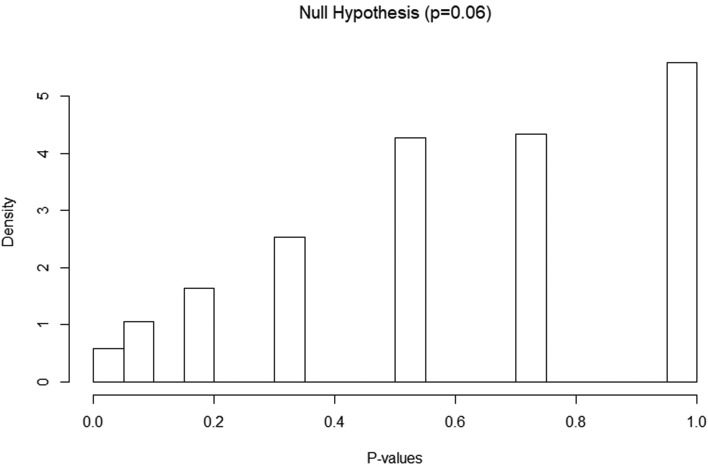

Figure 2 shows the histogram of the p values of the one-sample Fisher’s exact test. The data are generated from the binomial distribution with size 40 and success probability 0.06, and we simulated 10 000 replicates of the data.

Figure 2.

Histogram of p values of one-sample Fisher’s exact test.

The distribution of p values under misspecified models

As discussed above, in regression analysis the p value is used to decide whether a covariate is significant. For example, in many medical papers the authors first run a univariate analysis for each candidate covariate before fitting the multiple regression model; if the univariate p value is below some prespecified cut-off, the covariate is included in the multiple regression analysis.6 This screening strategy assumes that the ‘p-value’ from the univariate analysis is a valid p value, meaning that its distribution under the null hypothesis is close to uniform. Otherwise, decisions based on an invalid p value may be misleading. In this section, we use a very simple multiple linear regression model to show that this can happen.

Assume the following true linear model:

where , , , and is independent of . Then for the regression model defined through a conditional expectation,4 we will have

Note that the univariate regression of on no longer satisfies a univariate linear regression model, and .

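The univariate analysis proceeds by fitting a simple linear regression of the outcome on X1 alone, with slope α1, as if that model were correct.

The significance of X1 is then judged by the Wald-test ‘p-value’ for testing H0: α1=0.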

We simulated 10 000 replicates with sample sizes n=30, 50, 100, 200, 500 and 1000. Table 3 summarises the sample mean and sample SD of the estimate of α1, together with the proportions of p values larger than 0.2 and larger than 0.1.

Table 3.

Results from a univariate analysis (regression of the outcome on X1; 10 000 replicates per sample size)

| n | Mean estimate of α1 | SD of estimate | Proportion of p values >0.2 | Proportion of p values >0.1 |
|---|---|---|---|---|
| 30 | 0.190 | 1.057 | 0.410 | 0.514 |
| 50 | 0.111 | 0.868 | 0.414 | 0.522 |
| 100 | 0.055 | 0.644 | 0.399 | 0.507 |
| 200 | 0.032 | 0.461 | 0.408 | 0.508 |
| 500 | 0.014 | 0.297 | 0.408 | 0.510 |
| 1000 | 0.008 | 0.210 | 0.409 | 0.504 |

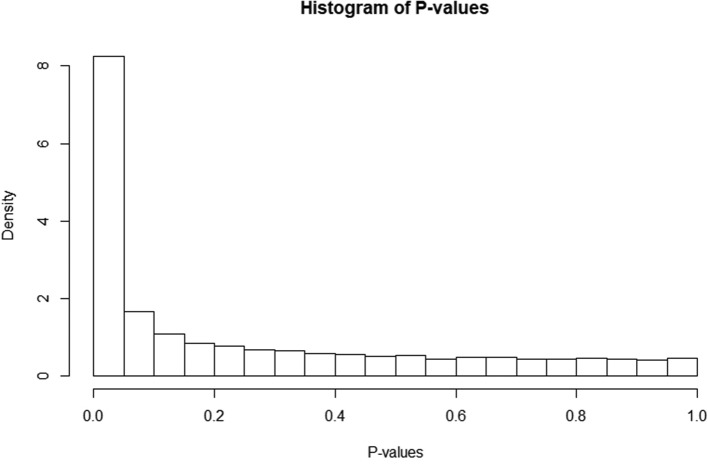

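Both table 3 and figure 3 show that the distribution of the p value obtained from the univariate analysis is far from uniform, even when the sample size is very large: only about 41% of the p values exceed 0.2 and about 51% exceed 0.1, whereas a uniformly distributed p value would exceed these cut-offs with probability 0.8 and 0.9, respectively. Decisions based on such an invalid p value make the univariate analysis uninterpretable.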

Figure 3.

Histogram of the ‘p-value’ when the sample size is 10 000.
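The true model behind table 3 is not reproduced here, so the following sketch uses a different, purely hypothetical data-generating model to illustrate the same kind of failure: Y = X1X2 + ε with X1, X2 and ε independent N(0, 1). Then E(Y | X1) = 0, so the null hypothesis of the univariate analysis (zero slope on X1) is true, but Var(Y | X1) = X1² + 1 is not constant, and the usual Wald standard error of the working model is too small. In large samples the actual variance of the slope estimate is about twice the reported one, so roughly 17% rather than 5% of the ‘p-values’ fall at or below 0.05. Function names, n=200 and the number of replicates are our assumptions.

```python
import math
import random
import statistics


def naive_wald_p_value(x, y):
    """Two-sided Wald p value for the OLS slope of y on x.

    The standard error assumes a correctly specified linear model with
    constant error variance, as in the univariate working model.
    """
    n = len(x)
    xbar, ybar = statistics.fmean(x), statistics.fmean(y)
    sxx = sum((xi - xbar) ** 2 for xi in x)
    slope = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y)) / sxx
    intercept = ybar - slope * xbar
    rss = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    z = slope / math.sqrt(rss / (n - 2) / sxx)
    return math.erfc(abs(z) / math.sqrt(2.0))


def simulate_hypothetical_model(n=200, replicates=2_000, seed=2):
    """Hypothetical true model Y = X1 * X2 + e, with X1, X2, e i.i.d. N(0, 1).

    E(Y | X1) = 0, so H0 (zero slope on X1) holds, but Var(Y | X1) = X1**2 + 1
    is not constant, which the univariate working model ignores.
    """
    rng = random.Random(seed)
    p_values = []
    for _ in range(replicates):
        x1 = [rng.gauss(0.0, 1.0) for _ in range(n)]
        # a * gauss() is X1 * X2; X2 is generated but left out of the working model.
        y = [a * rng.gauss(0.0, 1.0) + rng.gauss(0.0, 1.0) for a in x1]
        p_values.append(naive_wald_p_value(x1, y))
    return p_values


p_values = simulate_hypothetical_model()
share_at_or_below_005 = sum(p <= 0.05 for p in p_values) / len(p_values)
```

The mechanism differs from the paper’s example, but the symptom is the same: a ‘p-value’ whose null distribution is not uniform, so a fixed cut-off no longer has its nominal meaning.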

Conclusion

The p value is probably the most widely reported statistical quantity in scientific publications. However, it has also caused confusion and controversy when used to declare ‘significance’ in data analysis. By the definition of the p value, a valid p value should have a distribution close to the standard uniform distribution when the null hypothesis is true. A distribution of the p value far from uniform may indicate that the model is misspecified.
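One simple way to quantify how far a collection of simulated p values is from the standard uniform distribution is the Kolmogorov–Smirnov distance between their empirical distribution function and the uniform CDF. A minimal sketch (the function name is ours, and no significance threshold is implied):

```python
def ks_uniform_distance(p_values):
    """Kolmogorov-Smirnov distance sup_u |F_m(u) - u| between the empirical
    distribution function of the p values and the standard uniform CDF."""
    u = sorted(p_values)
    m = len(u)
    # The supremum is attained just before or at one of the order statistics.
    return max(max((i + 1) / m - ui, ui - i / m) for i, ui in enumerate(u))
```

Values near 0 are consistent with uniformity; for p values piled up near 0, as in a misspecified model, the distance is large.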

Biography

Bokai Wang obtained his BS in Statistics from Nankai University, China, in 2010 and his MS in Applied Statistics from Bowling Green State University (Bowling Green, Ohio, USA) in 2012. He has been a PhD candidate in Statistics at the Department of Biostatistics and Computational Biology, University of Rochester, since 2014 and is currently in his fifth year. His research interests include, but are not limited to, survival analysis, causal inference and variable selection in biomedical research. To date, he has published 13 papers in peer-reviewed journals.

Footnotes

Contributors: CF and HW derived the theoretical results. BW and ZZ constructed the examples and graphs. XMT drafted the manuscript.

Funding: The authors have not declared a specific grant for this research from any funding agency in the public, commercial or not-for-profit sectors.

Competing interests: None declared.

Patient consent for publication: Not required.

Provenance and peer review: Commissioned; internally peer reviewed.

References

- 1. Wasserstein RL, Lazar NA. The ASA's Statement on p-Values: Context, Process, and Purpose. Am Stat 2016;70:129–33. doi:10.1080/00031305.2016.1154108

- 2. Amrhein V, Greenland S, McShane B. Scientists rise up against statistical significance. Nature 2019;567:305–7. doi:10.1038/d41586-019-00857-9

- 3. Richards LE, Byrd J. Fisher's randomization test for two small independent samples. Journal of the Royal Statistical Society Series C 1996;45:394–8.

- 4. Durrett R. Probability: theory and examples. 4th edn. Cambridge: Cambridge University Press, 2010.

- 5. Agresti A. Categorical data analysis. 2nd edn. New York: Wiley, 2002.

- 6. Karcutskie CA, Meizoso JP, Ray JJ, et al. Association of mechanism of injury with risk for venous thromboembolism after trauma. JAMA Surg 2017;152:35–40. doi:10.1001/jamasurg.2016.3116