Abstract

Introduction

Listening effort may be defined as the cognitive resources needed to understand an auditory message. A sustained requirement for listening effort is known to have a negative impact on individuals’ sense of social connectedness, well-being and quality of life. A number of hearing-specific patient-reported outcome measures (PROMs) exist currently; however, none adequately assess listening effort as it is experienced in the listening situations of everyday life. The Listening Effort Questionnaire-Cochlear Implant (LEQ-CI) is a new, hearing-specific PROM designed to assess perceived listening effort as experienced by adult CI patients. It is the aim of this study to conduct the first psychometric evaluation of the LEQ-CI’s measurement properties.

Methods and analysis

This study is a phased, prospective, multi-site validation study in a UK population of adults with severe-profound sensorineural hearing loss who meet local candidacy criteria for CI. In phase 1, 250 CI patients from four National Health Service CI centres will self-complete a paper version of the LEQ-CI. Factor analysis will establish unidimensionality and Rasch analysis will evaluate item fit, differential item functioning, response scale ordering, targeting of persons and items, and reliability. Classical test theory methods will assess acceptability/data completeness, scaling assumptions, targeting and internal consistency reliability. Phase 1 results will inform refinements to the LEQ-CI. In phase 2, a new sample of adult CI patients (n=100) will self-complete the refined LEQ-CI, the Speech, Spatial and Qualities of Hearing Scale, the Nijmegen Cochlear Implant Questionnaire and the Fatigue Assessment Scale to assess construct validity.

Ethics and dissemination

This study was approved by the Abertawe Bro Morgannwg University Health Board/Swansea University Joint Study Review Committee and the Newcastle and North Tyneside 2 Research Ethics Committee, Ref: 18/NE/0320. Dissemination will be in high-quality journals, conference presentations and SEH’s doctoral dissertation.

Keywords: hearing loss, cochlear implant, patient-reported outcome measure, validation, listening effort, PROM

Strengths and limitations of this study.

The Listening Effort Questionnaire-Cochlear Implant (LEQ-CI) is the first patient-reported outcome measure developed specifically to assess perceived listening effort in cochlear implant candidates and recipients.

The proposed study conforms to international consensus standards on best practice of studies of instrument development and validation—the COnsensus-based Standards for the selection of health-status Measurement INstruments (COSMIN).

The use of classical test theory and Rasch analysis will enable a robust initial assessment of the LEQ-CI’s measurement characteristics at both item and scale level.

The conceptual framework underpinning the LEQ-CI is based on an explanatory model developed from current theoretical frameworks and the patient perspective. Assessment of the LEQ-CI’s measurement properties will provide early evidence of the validity of the proposed model.

Instrument validation is an iterative process to build a body of evidence relating to the quality of an instrument’s measurement properties. Further studies that assess the measurement characteristics of LEQ-CI will be required.

Introduction

Hearing loss is a top 10 burden of disease. It affects approximately one in every six people in the UK population and the economic burden is estimated to be over £30 billion annually.1 Management of hearing loss is typically focused on the provision of hearing technologies such as hearing aids or cochlear implants (CI). However, even with appropriate provision of devices, individuals continue to report a sustained requirement for listening effort.2 Listening effort may be defined as the mental exertion (the attentional and cognitive resources) needed to understand an auditory signal.3 A sustained requirement for high listening effort is known to impact on the everyday listening activities of adults with hearing loss with negative implications for their social functioning, work recovery, social connectedness, well-being and quality of life.4–7

In the context of audiological clinical practice, many current routine assessments are capable of providing insight into audibility of the acoustic signal but are unable to supply information relating to the underlying processes and mechanisms, such as listening effort, that inform the measured performance. In the era of person-centred care, well-validated measures that assess these underlying factors are needed if hearing healthcare professionals are to adopt a more holistic approach to the management of hearing loss. Validated self-report instruments, such as patient-reported outcome measures (PROMs), have the potential to be viable clinical measures of an individual’s listening effort in everyday listening situations.

PROMs are self-report tools that assess an individual’s perception of their disease severity, symptoms and functioning, quality of life or well-being.8–10 PROMs are being used increasingly in routine clinical practice and are already well established in the field of audiology.11 12 PROMs enable clinicians to gain insight into the patient’s perspective of their condition and the treatment they receive. Importantly, these instruments provide insight into those aspects of a disease or condition that are not observable, but rather, are knowable only to the patient themselves. PROMs offer a complementary method to current behavioural (eg, dual-task paradigms) and physiological measures (eg, pupillometry, functional MRI (fMRI), electroencephalography (EEG)) of listening effort. There is a growing body of research to suggest that listening effort is a multidimensional construct and that these different measures may evaluate different aspects of this phenomenon.4 13–16 Using factor analysis, Alhanbali et al have shown that hearing level, signal to noise ratio (SNR), dual-task paradigms, pupillometry and EEG (ie, alpha power during speech recognition and retention) and self-reported effort tap into different underlying dimensions of listening effort.14 Reflecting on this work, it may be argued that PROMs, as a measure of self-reported effort, have the potential to assess a dimension of listening effort that is not captured by current behavioural and physiological measures.

Several hearing-specific PROMs have been developed that include items considered to measure listening effort. A systematic review by the authors identified two PROMs that measured listening effort and cognitive effort in listening, respectively.17 18 Several PROMs assessing listening effort at either the item or subscale level (eg, Speech, Spatial and Qualities of Hearing Scale (SSQ), Profile of Hearing Aid Benefit (PHAB), Communication Profile for the Hearing Impaired (CPHI)) were also identified.19–22 Overall, the review found limited evidence of these PROMs’ psychometric measurement properties. The SSQ was identified as the current best candidate for use as a listening effort PROM based on the extent and quality of its validation when assessed against the COnsensus-based Standards for the selection of health-status Measurement INstruments (COSMIN) criteria.23 However, one drawback of the SSQ as a measure of listening effort is a high response burden with only 6% of its items measuring listening effort. Notably, all of the PROMs identified in this systematic review were developed prior to publication of the theoretical frameworks that inform current conceptualisations of listening effort.2 24–26 Lack of congruence between these instruments and current theoretical frameworks is a limitation of the content validity of existing PROMs. It is unlikely that these instruments fully capture listening effort as presented in these recently published models and, as such, there is growing support in the literature for a new PROM that comprehensively measures self-reported listening effort in hearing loss as it is conceptualised currently.14 26 To address this situation, the Listening Effort Questionnaire-Cochlear Implant (LEQ-CI) has been developed. The LEQ-CI is a new hearing-specific PROM measuring perceived listening effort in adults who receive CIs.

Aims

To have confidence that a PROM is providing meaningful information, psychometric evaluation of its measurement properties must be undertaken to satisfy rigorous criteria.23 27 This includes assessment of an instrument’s validity (ie, to what extent does the instrument measure the construct it purports to measure), its reliability (ie, the degree to which measurement is free from error) and its responsiveness (ie, the ability of an outcome measure to detect change over time in the construct to be measured).28 There are several measurement properties that require assessment and each property needs its own type of study to assess it. The process of psychometric validation is iterative and represents an accumulation of evidence over time from multiple studies.29

The aim of this study is to conduct the first psychometric validation of the LEQ-CI in accordance with the internationally recognised COSMIN guidelines.28 30 Building on previous work undertaken by the authors to establish the LEQ-CI’s content validity,2 31 the current study represents a further step towards the provision of a robust self-report measure of perceived listening effort for use in research and clinical practice.

Objectives

To refine the items, response categories, and scale structure of the new LEQ-CI using Rasch measurement theory in an English-speaking sample of adult CI candidates and recipients in the UK.

To assess acceptability, scaling assumptions, targeting and reliability using classical test theory (CTT) methods.

To assess the construct validity of the refined LEQ-CI in the population of adults with severe-profound, postlingual hearing loss who are CI candidates or recipients.

Methods and analysis

Study setting and patient involvement

This prospective study is a UK-based multiphase study to validate the measurement properties of a new PROM, the LEQ-CI. The planned study will take place over a 12-month period and has been coproduced by the study team with input from two lay members, both CI recipients, from the study’s Research Management Group. Lay members reviewed and provided feedback on study design, participant documents and iterations of the LEQ-CI.

Development of the LEQ-CI

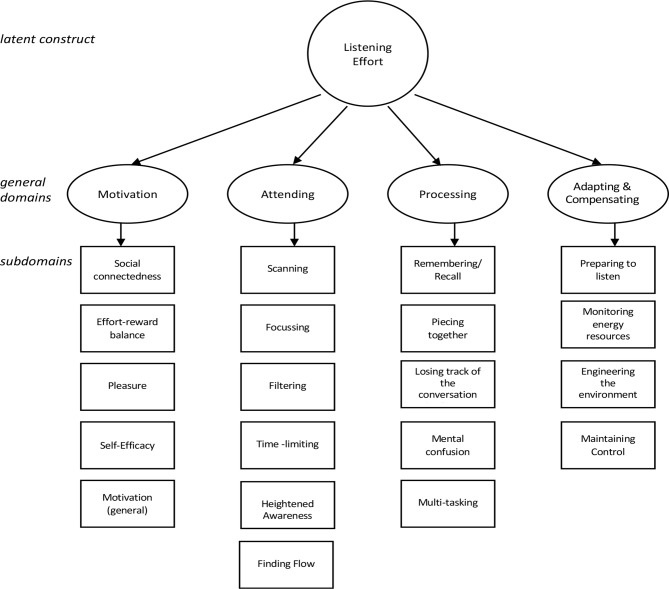

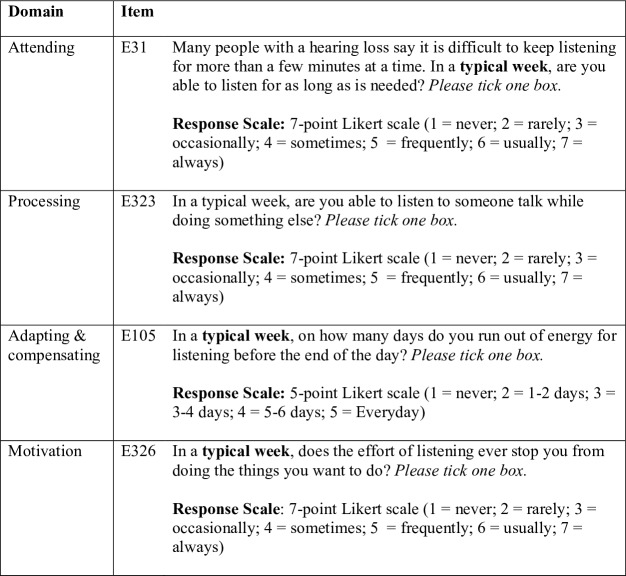

The LEQ-CI is a hearing-specific PROM measuring listening effort in adult CI candidates and recipients. It is composed of 29 items across four domains. Five-point or seven-point Likert scales with absolute anchors and labelled categories ensure a broad range of response options. Item responses are summed to produce a simple total score. The LEQ-CI’s conceptual framework, presented in figure 1, was developed from a mixed-methods qualitative study involving focus groups and a postal survey.2 An item bank was constructed that included new items and items harvested from extant PROMs considered to measure listening effort or associated constructs.17 18 Exemplar items are presented in figure 2. Preliminary testing was completed to identify and rectify problems with items and response scales prior to undertaking psychometric evaluation.31 Preliminary testing involved the use of multiple datasets to assess reliability.32 Item quality was estimated using the online Survey Quality Predictor (SQP V.2.1, http://sqp.upf.edu/). An expert review panel of academics, researchers and clinicians (n=7) and a series of cognitive interviews with a purposive sample of CI candidates and recipients (n=12) were completed to elicit feedback on the relevance, clarity and acceptability of the LEQ-CI.

Figure 1.

The conceptual framework of the Listening Effort Questionnaire-Cochlear Implant (LEQ-CI).

Figure 2.

Example items from the Listening Effort Questionnaire-Cochlear Implant (LEQ-CI).

Sample size

The study sample will be representative of the population of adults with acquired, postlingual severe-to-profound sensorineural hearing loss (SNHL) referred for cochlear implantation in the UK. A total study sample of 350 participants will be recruited. In phase 1, a cohort of 250 participants will be recruited from four National Health Service (NHS) CI centres. To minimise burden on implant centre staff and to ensure representation from different regions of the UK, each centre will send questionnaire packs to 125 CI candidates or recipients who meet the study inclusion criteria (n=500). If necessary, additional participants will be recruited until such time as 250 completed LEQ-CI forms with no missing data are returned. There are no general criteria for determination of sample sizes in studies of PROM validation and sample sizes are, in part, dependent on the psychometric characteristics being assessed.23 27 Mokkink et al recommend greater than 200 respondents when undertaking Rasch analysis (RA) and seven times the number of items for purposes of undertaking assessment of unidimensionality.23 Linacre recommends a minimum sample size of 250 respondents for definitive item calibration using RA.33 The LEQ-CI is composed of 29 items that have been selected to minimise respondent burden while allowing for adequate sampling of relevant constructs associated with listening effort. Therefore, a minimum sample of 250 participants is considered sufficient for undertaking both assessment of unidimensionality and RA of the LEQ-CI. In phase 2, a new cohort of 100 participants fulfilling the same eligibility criteria will be recruited from two CI centres. Each centre will recruit 125 participants initially. If necessary, further participants will be recruited until the required sample size is achieved. Hobart et al recommend a minimum sample size of 80 participants for assessment of construct validity.34
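The sample size reasoning for phase 1 can be checked with a few lines of arithmetic. The sketch below simply encodes the three rules of thumb quoted above; the function and constant names are illustrative and not part of the protocol.

```python
# Rules of thumb quoted in the protocol (names are illustrative only).
N_ITEMS = 29               # number of LEQ-CI items
RASCH_MORE_THAN = 200      # Mokkink et al: more than 200 respondents for Rasch analysis
RESPONDENTS_PER_ITEM = 7   # Mokkink et al: seven times the number of items
LINACRE_MINIMUM = 250      # Linacre: definitive item calibration

def minimum_phase1_sample(n_items: int) -> int:
    """Smallest sample that satisfies all three rules at once."""
    return max(RASCH_MORE_THAN + 1,
               RESPONDENTS_PER_ITEM * n_items,
               LINACRE_MINIMUM)

def implied_return_rate(target: int, packs_sent: int) -> float:
    """Return rate needed for the mailed packs to reach the target."""
    return target / packs_sent

print(RESPONDENTS_PER_ITEM * N_ITEMS)      # 203 respondents for unidimensionality
print(minimum_phase1_sample(N_ITEMS))      # 250, so Linacre's rule is the binding one
print(implied_return_rate(250, 4 * 125))   # 0.5
```

With 29 items, the seven-per-item rule asks for 203 respondents, so the planned 250 covers all three recommendations; reaching it from 500 mailed packs assumes that about half are returned complete.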

Recruitment and data collection

The participant eligibility criteria are the same for both phases of the study and are presented in table 1.

Table 1.

Study eligibility criteria for recruitment of participants

| Inclusion criteria | Exclusion criteria |
| --- | --- |

SNHL, sensorineural hearing loss.

In phases 1 and 2, participants meeting the study inclusion criteria will be sent an invitation letter, an information sheet describing the study in detail, the LEQ-CI, a demographic questionnaire and comparator questionnaires (phase 2 participants only). A reply-paid envelope for the return of the completed questionnaires will be provided. Informed consent is presumed if the questionnaires are completed and returned to the study team. To maintain participant anonymity, eligibility screening will be carried out, and the study documents mailed to prospective participants, by a member of the clinical team at each participating CI centre. Each questionnaire pack will be coded with a unique identifier and no personal identifiable information will be retained by the study team.

Statistical analysis

Two schools of psychometric measurement theory dominate the field of PROM development: classical test theory (CTT) and Rasch measurement theory.29 35 Traditional psychometric analyses (eg, Cronbach’s alpha as a measure of internal consistency reliability) are underpinned by CTT. CTT seeks to evaluate reliability and validity of a scale and has been the dominant approach used in the development and validation of outcome measures.36 However, modern measurement techniques such as RA are increasingly being reported alongside traditional analyses in studies of PROM development and validation (eg, refs 37 38).

CTT is based on the assumption that every observed score is a function of an individual’s true score and random error.39 The assumptions underpinning CTT differ from those underpinning the Rasch model. For example, it has been argued that CTT cannot be tested adequately as it is based on definitions rather than assumptions which can be proven true or false. This is in contrast to modern measurement theory (ie, RA) which generates assumptions that can be proven true or false.40 Whereas CTT methods focus on the total score of a measure, RA enables instrument developers to focus more specifically on the characteristics of individual items.41 For example, RA, unlike CTT methods, can be used to establish whether an item’s response scale is functioning as expected and, if not, suggest improvements.

The Rasch model allows for ordering persons (ie, patients) according to the amount of the latent target construct (ie, listening effort) they possess and for ordering items that measure the target construct according to their difficulty.35 This method allows non-linear (ie, ordinal) raw data to be converted to a linear (ie, interval) scale, which can then be evaluated through the use of parametric statistical tests.42 By contrast, CTT methods yield measures that produce ordinal rather than interval level data. This principle has implications for the interpretation of test scores since difference scores and change scores are most meaningful when interval level of measurement is used.35 40
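For orientation, the simplest (dichotomous) form of the Rasch model can be written as below. The notation is an assumption introduced here, not taken from the protocol: \(\theta_n\) is the location of person \(n\) on the latent trait and \(\delta_i\) is the difficulty of item \(i\), both in logits. The LEQ-CI uses polytomous response scales, so its analysis would rely on a polytomous extension of this expression.

```latex
\[
P(X_{ni}=1 \mid \theta_n, \delta_i)
  = \frac{\exp(\theta_n - \delta_i)}{1 + \exp(\theta_n - \delta_i)},
\qquad
\ln\frac{P(X_{ni}=1)}{P(X_{ni}=0)} = \theta_n - \delta_i
\]
```

The second expression shows why the resulting measures are treated as interval level: the log-odds of endorsing an item depend only on the difference between person and item locations on a common logit scale.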

A further limitation of CTT is that the performance of a test is dependent on the sample in which that test is assessed.40 This renders its psychometric properties (ie, reliability and validity) dependent on the sample rather than characteristics of the test itself. By contrast, RA produces item and test statistics that are sample independent rendering the test valid across groups. Any discrepancies between the scale data and the Rasch model requirements are indicative of anomalies in the scale as a measurement instrument. These discrepancies provide diagnostic information that serves as a basis for understanding and empirical improvement of the instrument at both item and scale level.43

Despite these limitations, CTT methods continue to be widely used in studies of instrument validation and are included in the COSMIN standards.28 40 Indeed, some properties (eg, acceptability, scaling assumptions) can only be evaluated using CTT methods.36 For these reasons, this study will use both CTT and RA in a complementary fashion to ensure rigorous validation of the LEQ-CI at both item and scale level.

Study data will be managed using the online clinical data management programme, REDCap (V.7.2.1, Vanderbilt University), licensed to the Swansea Trials Unit, Swansea University. RA will be used to evaluate the LEQ-CI’s psychometric properties and refine the item and scale structure of the LEQ-CI using Winsteps (V.4.1.0) software. Psychometric analyses applying CTT will be conducted using SPSS V.22.0 licensed to Swansea University.

Phase 1: item and scale refinement using Rasch measurement theory

Assessing unidimensionality

The Rasch measurement model assumes unidimensionality which is defined as the measurement of a single latent construct.35 44 Therefore, prior to undertaking RA, factor analysis will be undertaken to assess the underlying structure of the LEQ-CI and establish the unidimensionality of its (sub)scales.45

Assessing item fit

In RA, item fit refers to the degree of mismatch between the pattern of actual observed responses and the Rasch modelled expectations. Specifically, it refers to the pattern for each item across persons investigated by examining item infit and outfit statistics.35 Mean square standardised residuals (MNSQ) will be used to assess fit with MNSQ residuals within the 0.5–1.5 range considered acceptable for productive measurement. Mean square values less than 0.5 indicate overfit (ie, the items are too predictable relative to the Rasch model), while mean square values greater than 1.5 are indicative of too much noise (randomness) relative to the Rasch model.46
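To make the fit statistics concrete, the following is a minimal sketch of how infit and outfit mean squares are formed for a single item. It assumes dichotomous (0/1) responses and already-estimated person and item measures for simplicity; the LEQ-CI items are polytomous and the study itself will obtain these statistics from Winsteps, so all function names here are illustrative.

```python
import math

def rasch_probability(theta: float, delta: float) -> float:
    """Modelled probability of endorsing an item (dichotomous Rasch model)."""
    return 1.0 / (1.0 + math.exp(-(theta - delta)))

def item_fit(responses, thetas, delta):
    """Return (infit MNSQ, outfit MNSQ) for one item.

    responses: observed 0/1 responses, one per person
    thetas:    person measures in logits, same order as responses
    delta:     item difficulty in logits
    """
    squared_residuals = 0.0
    model_variance = 0.0
    standardised_squares = 0.0
    for x, theta in zip(responses, thetas):
        p = rasch_probability(theta, delta)
        variance = p * (1.0 - p)
        residual_sq = (x - p) ** 2
        squared_residuals += residual_sq
        model_variance += variance
        standardised_squares += residual_sq / variance
    infit = squared_residuals / model_variance       # information-weighted
    outfit = standardised_squares / len(responses)   # unweighted, outlier-sensitive
    return infit, outfit

def classify_fit(mnsq: float, low: float = 0.5, high: float = 1.5) -> str:
    """Apply the 0.5-1.5 acceptance range stated in the protocol."""
    if mnsq < low:
        return "overfit"
    if mnsq > high:
        return "underfit"
    return "acceptable"
```

Both statistics have an expected value of 1 when the data fit the model, which is why the acceptance range is centred on 1.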

Assessing differential item functioning

Differential item functioning (DIF) is an indication of the loss of invariance across subsamples of respondents. The presence of DIF will be an indicator of potential problems with an item since item and person measures on a unidimensional instrument should remain invariant (ie, within error) across all appropriate measurement conditions.35 DIF will be examined for key demographic variables such as age and sex. The standard threshold of >1 logit will be used as an indicator of DIF.44 Zumbo’s logistic regression method will also be applied: it tests the significance of DIF (ie, a χ² test with two df) and quantifies its magnitude by computing the R-squared effect size, for both uniform and non-uniform DIF.47 If items are found to have DIF, they will either be considered candidates for removal or examined for adjustment of DIF and re-evaluated, thus reflecting the iterative nature of instrument validation.35 48
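The logit threshold can be illustrated with a small screening sketch. It covers only the >1 logit contrast, not the logistic regression tests, and it assumes that item difficulties have already been estimated separately in two subgroups; the item labels and values are hypothetical.

```python
DIF_THRESHOLD_LOGITS = 1.0  # threshold stated in the protocol

def flag_dif(item_difficulties, threshold=DIF_THRESHOLD_LOGITS):
    """Return the items whose difficulty differs between two subgroups
    by more than the threshold.

    item_difficulties: {item label: (difficulty in group A, difficulty in group B)},
    all values in logits.
    """
    return sorted(
        item
        for item, (group_a, group_b) in item_difficulties.items()
        if abs(group_a - group_b) > threshold
    )

# Hypothetical estimates for two subgroups, for illustration only.
example = {
    "item_01": (0.2, 0.5),    # contrast 0.3 logits
    "item_02": (-0.8, 0.6),   # contrast 1.4 logits
}
print(flag_dif(example))  # ['item_02']
```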

Assessing response scale ordering

The response options of an instrument (ie, number of categories and their definitions) are critical to its reliability and validity.49 The Rasch model will enable us to show empirically how respondents use the LEQ-CI’s rating scale informing future iterations of the LEQ-CI to ensure it yields high-quality data.35 Response category ordering will be assessed using Rasch probability curves and there will be an examination of the data for category disordering and threshold disordering.50 These investigations will show whether the response options selected for the LEQ-CI are sufficient or should be adjusted to provide better coverage of the latent trait.
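The idea behind category probability curves and threshold ordering can be sketched as follows. This assumes an Andrich rating scale parameterisation, in which all items share one set of thresholds; the protocol does not state which polytomous Rasch model will be fitted, so this choice and the function names are assumptions made for illustration.

```python
import math

def category_probabilities(theta, delta, thresholds):
    """Probability of each response category under a rating scale model.

    theta:      person measure (logits)
    delta:      item difficulty (logits)
    thresholds: step thresholds tau_1..tau_m (logits); m + 1 categories result
    """
    # Category k has log-numerator sum_{j<=k} (theta - delta - tau_j); category 0 has 0.
    log_numerators = [0.0]
    running = 0.0
    for tau in thresholds:
        running += theta - delta - tau
        log_numerators.append(running)
    largest = max(log_numerators)  # subtract the maximum for numerical stability
    weights = [math.exp(value - largest) for value in log_numerators]
    total = sum(weights)
    return [w / total for w in weights]

def thresholds_ordered(thresholds):
    """True when each threshold is higher than the one before it."""
    return all(a < b for a, b in zip(thresholds, thresholds[1:]))
```

Evaluating `category_probabilities` over a grid of `theta` values traces the probability curves; if `thresholds_ordered` returns `False`, at least one category is never the most probable response anywhere on the trait, which is the usual prompt for collapsing or relabelling categories.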

Assessing the targeting of persons and items

Targeting using RA explores whether the instrument has a distribution of items that matches the range of the respondents’ latent trait. This will be done by examining the item-person threshold distribution map, which illustrates a relative position of ‘item difficulty’ to ‘person ability’.44 The means and SD of items and persons should match closely.35

Assessing reliability

The reliability of the LEQ-CI will be examined by observing the Person Separation Index (PSI). The PSI is an estimate of the spread or separation of persons on the measured variable35 and is considered to be a measure of internal consistency reliability.51 It is a measure of the scale’s ability to separate the study sample. A PSI >0.7 will be considered an adequate measure of reliability.52
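A separation-based reliability of this kind is commonly computed as the share of observed variance in person measures that is not attributable to measurement error. The sketch below follows that general definition; the use of the population variance and the function name are assumptions, and the software used in the study may differ in detail.

```python
import statistics

def person_separation_reliability(measures, standard_errors):
    """(observed variance - mean error variance) / observed variance.

    measures:        person measures in logits
    standard_errors: standard error of each person measure
    """
    observed_variance = statistics.pvariance(measures)
    error_variance = statistics.fmean([se ** 2 for se in standard_errors])
    true_variance = max(observed_variance - error_variance, 0.0)
    return true_variance / observed_variance

# Made-up measures, for illustration only.
reliability = person_separation_reliability(
    [-2.0, -1.0, 0.0, 1.0, 2.0], [0.5, 0.5, 0.5, 0.5, 0.5]
)
print(reliability)        # 0.875
print(reliability > 0.7)  # True: meets the criterion stated in the protocol
```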

Phase 1: psychometric evaluation using CTT

Assessing acceptability and data completeness

Acceptability and data completeness will establish the extent to which scale items are scored and total scores can be computed. Assessment of the completeness of item and scale-level data (ie, missing or incomplete data for items and sample) including frequency of endorsement will be completed. Score distributions including skew of scale scores and presence of floor and ceiling effects will be examined.29

Assessing scaling assumptions

Examination of scaling assumptions involves assessment of whether it is legitimate to group items into a scale to produce a scale score. Tests of scaling assumptions examine item-total correlations, mean scores and SD. When checking homogeneity of the LEQ-CI’s scales, the heuristic that items should correlate with the total score above 0.20 will be applied. Item-total correlations will be calculated using the Pearson product-moment correlation.29
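The item-total check can be sketched in plain Python. Here each item is correlated with the sum of the remaining items (a "corrected" item-total correlation); the protocol does not say whether the item is removed from the total, so that detail is an assumption, as are the function names.

```python
import math

def pearson(x, y):
    """Pearson product-moment correlation of two equal-length sequences.
    Raises ZeroDivisionError if either sequence has no variance."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def item_total_correlations(data):
    """data: one row per respondent, one column per item (complete cases only)."""
    n_items = len(data[0])
    correlations = []
    for i in range(n_items):
        item_scores = [row[i] for row in data]
        rest_scores = [sum(row) - row[i] for row in data]  # total without item i
        correlations.append(pearson(item_scores, rest_scores))
    return correlations

def items_below(correlations, threshold=0.20):
    """Indices of items that fall below the 0.20 heuristic."""
    return [i for i, r in enumerate(correlations) if r < threshold]
```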

Assessing targeting

Targeting may be defined as ‘the extent to which the range of the variable measured by the scale matches the range of that variable in the study sample’ (p.4).53 Targeting will be assessed following item refinement using RA. CTT will be used to determine whether the LEQ-CI scale scores span the entire scale range, skewness, and whether floor and ceiling effects are low, defined as <15% of the sample.27
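The floor and ceiling criterion reduces to two proportions. A minimal sketch follows, assuming total scores and the scale's minimum and maximum possible scores are supplied by the analyst; the values in the example are made up.

```python
def floor_ceiling(scores, min_score, max_score):
    """Proportion of respondents at the lowest and highest possible score."""
    n = len(scores)
    floor = sum(1 for s in scores if s == min_score) / n
    ceiling = sum(1 for s in scores if s == max_score) / n
    return floor, ceiling

def effects_are_low(scores, min_score, max_score, limit=0.15):
    """Apply the <15% criterion stated in the protocol to both ends of the scale."""
    floor, ceiling = floor_ceiling(scores, min_score, max_score)
    return floor < limit and ceiling < limit

# Made-up total scores on a hypothetical 0-10 scale.
scores = [0, 1, 2, 3, 4, 5, 6, 7, 8, 10]
print(floor_ceiling(scores, 0, 10))    # (0.1, 0.1)
print(effects_are_low(scores, 0, 10))  # True
```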

Assessing internal consistency reliability

Assessment of internal consistency establishes the inter-relatedness among items and is an assessment of the unidimensionality of a scale or subscale.23 Internal consistency will be assessed by calculating inter-item and item-total correlations and Cronbach’s alpha.

Inter-item correlation—Calculating inter-item correlations will provide an indication whether an item is part of a (sub)scale. Correlations should fall between 0.2 and 0.5. Items which have a correlation greater than 0.7 may be considered to measure the same thing, making one item a candidate for deletion.27

Item-total correlation—Calculating item-total correlations will assess whether the LEQ-CI’s items discriminate patients on the listening effort construct. Items that show an item-total correlation of less than 0.3 will be considered as contributing little to the LEQ-CI in terms of discriminating between individuals with high versus low levels of listening effort.27 These items will be considered as candidates for deletion.

Cronbach’s alpha—Internal consistency will be calculated for each subscale of the LEQ-CI by calculating Cronbach’s alpha. Alpha values ≥0.70 and ≤0.95 will be considered good evidence of internal consistency.29
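Cronbach’s alpha follows directly from the item variances and the variance of the total score. The sketch below uses the standard formula with sample variances and assumes complete data; in the study itself the statistic will be produced in SPSS, and the function names here are illustrative.

```python
import statistics

def cronbach_alpha(data):
    """data: one row per respondent, one column per item (complete cases only)."""
    k = len(data[0])
    item_variances = [
        statistics.variance([row[i] for row in data]) for i in range(k)
    ]
    total_variance = statistics.variance([sum(row) for row in data])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)

def alpha_in_target_range(alpha, low=0.70, high=0.95):
    """Apply the 0.70-0.95 range stated in the protocol."""
    return low <= alpha <= high
```

The upper bound matters as much as the lower one: values above 0.95 suggest that some items are redundant rather than that the scale is especially reliable.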

Phase 2: establishing construct validity

Construct validity may be defined as the extent to which the scores of an instrument are a valid measure of the latent construct.27 Construct validity of the refined LEQ-CI will be assessed by applying criteria specified by the COSMIN group. COSMIN guidance specifies that construct validity may be assessed by testing a priori hypotheses based on the literature and the experience of the study team.23 Hypotheses are generated by the study team and founded on the assumption that the LEQ-CI validly measures the target construct (ie, listening effort). These state the relationship between the instrument and other measures, as well as the expected differences between the scores attained by different subgroups of the target population. To establish the construct validity of an instrument, Mokkink et al recommend at least 75% of the stated hypotheses are endorsed.23

Assessing convergent validity

Convergent construct validity will be assessed by examining the correlation between scores on the LEQ-CI and the summed score on the three items considered to measure listening effort on the Speech, Spatial and Qualities of Hearing Scale (SSQ). As no validated measure of listening effort has been identified as a suitable comparator PROM, these items were selected to assess construct validity as the SSQ has good evidence of being a well-validated instrument across multiple studies.17 As the LEQ-CI and these SSQ items are measuring the same construct, we hypothesise that a strong positive correlation >0.50 will be observed between measures as suggested by Mokkink et al.23 We further predict a moderate positive correlation (0.30–0.50) between the LEQ-CI and SSQ total score as listening effort (LE) may be considered to be a component of hearing disability, the construct measured by the SSQ.11

Assessing discriminant validity

Discriminant (ie, divergent) validity is an assessment of a measure’s ability to discriminate between dissimilar constructs.29 It will be assessed by examining the correlation between scores on the LEQ-CI and the Fatigue Assessment Scale,54 a measure of fatigue used in other studies investigating listening effort and fatigue in individuals with hearing loss.55 As listening effort and fatigue are similar but unrelated constructs,16 we anticipate a moderate positive correlation between 0.30 and 0.50.

Further assessment of discriminant validity will be undertaken by examining the correlation between scores on the LEQ-CI and the Nijmegen Cochlear Implant Questionnaire,56 a measure of quality of life in CI patients. A moderate positive correlation of between 0.30 and 0.50 is anticipated as these measures may be considered to assess similar, but unrelated constructs.23
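The hypothesis-testing logic for construct validity can be summarised in a short sketch. The correlation bands are those stated above and the 75% rule is the COSMIN recommendation cited earlier; the observed correlations in the example are placeholders chosen purely for illustration and are not study results. The direction in which each comparator is scored is not handled here.

```python
REQUIRED_PROPORTION = 0.75  # at least 75% of hypotheses supported

# (comparison, lower bound, upper bound, lower bound is exclusive?)
HYPOTHESES = [
    ("LEQ-CI vs SSQ listening effort items", 0.50, 1.00, True),   # strong, >0.50
    ("LEQ-CI vs SSQ total score", 0.30, 0.50, False),
    ("LEQ-CI vs Fatigue Assessment Scale", 0.30, 0.50, False),
    ("LEQ-CI vs Nijmegen Cochlear Implant Questionnaire", 0.30, 0.50, False),
]

def is_supported(r, low, high, strict_low):
    """True when the observed correlation falls inside the hypothesised band."""
    above_lower = r > low if strict_low else r >= low
    return above_lower and r <= high

def proportion_supported(observed):
    """observed: {comparison label: observed correlation}."""
    hits = sum(
        is_supported(observed[label], low, high, strict)
        for label, low, high, strict in HYPOTHESES
    )
    return hits / len(HYPOTHESES)

# Placeholder correlations for illustration only (NOT study data).
placeholder = {
    "LEQ-CI vs SSQ listening effort items": 0.62,
    "LEQ-CI vs SSQ total score": 0.41,
    "LEQ-CI vs Fatigue Assessment Scale": 0.35,
    "LEQ-CI vs Nijmegen Cochlear Implant Questionnaire": 0.55,
}
share = proportion_supported(placeholder)
print(share)                         # 0.75
print(share >= REQUIRED_PROPORTION)  # True
```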

Ethics and dissemination

Study findings will be disseminated through publication in peer-reviewed journals, conference presentations and in the lead author’s (SEH) doctoral dissertation.

Footnotes

Contributors: SEH, HAH, AW and FR conceived the study. SEH wrote the protocol and this manuscript. HAH, AW, FR, IB and CMM provided critical review of the protocol and this manuscript. All authors read and approved the final manuscript.

Funding: This project is supported by an Abertawe Bro Morgannwg University Health Board Pathway to Portfolio grant (R&D Pathway June002) awarded to SEH.

Competing interests: None declared.

Ethics approval: This study has received ethical approval from the NHS Research Ethics Committee (REC): Newcastle and North Tyneside 2 (Ref: 18/NE/0320).

Provenance and peer review: Not commissioned; externally peer reviewed.

Patient consent for publication: Not required.

References

- 1. Wilson BS, Tucci DL, Merson MH, et al. . Global hearing health care: new findings and perspectives. Lancet 2017;390:2503–15. 10.1016/S0140-6736(17)31073-5 [DOI] [PubMed] [Google Scholar]

- 2. Hughes SE, Hutchings HA, Rapport FL, et al. . Social connectedness and perceived listening effort in adult cochlear implant users: a Grounded Theory to establish content validity for a new patient-reported outcome measure. Ear Hear 2018;39:922–34. 10.1097/AUD.0000000000000553 [DOI] [PubMed] [Google Scholar]

- 3. McGarrigle R, Munro KJ, Dawes P, et al. . Listening effort and fatigue: what exactly are we measuring? A British Society of Audiology Cognition in Hearing Special Interest Group ’white paper'. Int J Audiol 2014;53:433–45. 10.3109/14992027.2014.890296 [DOI] [PubMed] [Google Scholar]

- 4. Mackersie CL, MacPhee IX, Heldt EW. Effects of hearing loss on heart rate variability and skin conductance measured during sentence recognition in noise. Ear Hear 2015;36:145–54. 10.1097/AUD.0000000000000091 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5. Zekveld AA, George EL, Houtgast T, et al. . Cognitive abilities relate to self-reported hearing disability. J Speech Lang Hear Res 2013;56:1364–72. 10.1044/1092-4388(2013/12-0268) [DOI] [PubMed] [Google Scholar]

- 6. Ramage-Morin PL. Hearing difficulties and feelings of social isolation among Canadians aged 45 or older. Health Rep 2016;27:3–12. [PubMed] [Google Scholar]

- 7. Nachtegaal J, Kuik DJ, Anema JR, et al. . Hearing status, need for recovery after work, and psychosocial work characteristics: results from an internet-based national survey on hearing. Int J Audiol 2009;48:684–91. 10.1080/14992020902962421 [DOI] [PubMed] [Google Scholar]

- 8. Hutchings HA, Alrubaiy L. Patient-Reported Outcome Measures in Routine Clinical Care: The PROMise of a Better Future? Dig Dis Sci 2017;62:1841–3. 10.1007/s10620-017-4658-z [DOI] [PubMed] [Google Scholar]

- 9. Ousey K, Cook L. Understanding patient reported outcome measures (PROMs). Br J Community Nurs 2011;16:80–2. 10.12968/bjcn.2011.16.2.80 [DOI] [PubMed] [Google Scholar]

- 10. Meadows KA. Patient-reported outcome measures: an overview. Br J Community Nurs 2011;16:146–51. 10.12968/bjcn.2011.16.3.146 [DOI] [PubMed] [Google Scholar]

- 11. Noble W. Self-Assessment of Hearing. 2nd edn San Diego: Plural Publishing, 2013. [Google Scholar]

- 12. Devlin NJ, Appleby J. Getting the most out of PROMs: putting health outcomes at the heart of NHS decision-mkaing. 2010. http://www.kingsfund.org.uk/sites/files/kf/Getting-the-most-out-of-PROMs-Nancy-Devlin-John-Appleby-Kings-Fund-March-2010.pdf (accessed 16 Feb 2014).

- 13. Hornsby BW. The effects of hearing aid use on listening effort and mental fatigue associated with sustained speech processing demands. Ear Hear 2013;34:523–34. 10.1097/AUD.0b013e31828003d8 [DOI] [PubMed] [Google Scholar]

- 14. Alhanbali S, Dawes P, Millman RE, et al. . Measures of listening effort are multidimensional. Ear Hear 2019:1 10.1097/AUD.0000000000000697 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Miles K, McMahon C, Boisvert I, et al. . Objective assessment of listening effort: coregistration of pupillometry and EEG. Trends Hear 2017;21:1–13. 10.1177/2331216517706396 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16. Alhanbali S, Dawes P, Lloyd S, et al. . Hearing handicap and speech recognition correlate with self-reported listening effort and fatigue. Ear Hear 2018;39:470–4. 10.1097/AUD.0000000000000515 [DOI] [PubMed] [Google Scholar]

- 17. Hughes SE, Hutchings HA, Dobbs TD, et al. A systematic review and narrative synthesis of the measurement properties of patient-reported outcome measures (PROMs) used to assess listening effort in hearing loss [poster presentation]. British Society of Audiology 2017.

- 18. Hughes SE, Rapport FL, Boisvert I, et al. Patient-reported outcome measures (PROMs) for assessing perceived listening effort in hearing loss: protocol for a systematic review. BMJ Open 2017;7:e014995. doi:10.1136/bmjopen-2016-014995

- 19. Gatehouse S, Noble W. The speech, spatial and qualities of hearing scale (SSQ). Int J Audiol 2004;43:85–99. doi:10.1080/14992020400050014

- 20. Cox RM, Gilmore C, Alexander GC. Comparison of two questionnaires for patient-assessed hearing aid benefit. J Am Acad Audiol 1991;2:134–45.

- 21. Cox RM, Alexander GC. The Abbreviated Profile of Hearing Aid Benefit. Ear Hear 1995;16:176–86. doi:10.1097/00003446-199504000-00005

- 22. Demorest ME, Erdman SA. Development of the communication profile for the hearing impaired. J Speech Hear Disord 1987;52:129–43. doi:10.1044/jshd.5202.129

- 23. Mokkink LB, Prinsen CAC, Patrick DL, et al. COSMIN Methodology for Systematic Reviews of Patient-Reported Outcome Measures (PROMs) User Manual Version 1.0, 2018:1–78.

- 24. Strauss DJ, Francis AL. Toward a taxonomic model of attention in effortful listening. Cogn Affect Behav Neurosci 2017;17:809–25. doi:10.3758/s13415-017-0513-0

- 25. Peelle JE. Listening effort: how the cognitive consequences of acoustic challenge are reflected in brain and behavior. Ear Hear 2018;39:204–14. doi:10.1097/AUD.0000000000000494

- 26. Pichora-Fuller MK, Kramer SE, Eckert MA, et al. Hearing impairment and cognitive energy. Ear Hear 2016;37:5S–27. doi:10.1097/AUD.0000000000000312

- 27. de Vet H, Terwee CB, Mokkink LB, et al. Measurement in Medicine: A Practical Guide. Cambridge: Cambridge University Press, 2011.

- 28. Mokkink LB, de Vet HCW, Prinsen CAC, et al. COSMIN Risk of bias checklist for systematic reviews of patient-reported outcome measures. Qual Life Res 2018;27:1171–9. doi:10.1007/s11136-017-1765-4

- 29. Streiner DL, Norman GR. Health Measurement Scales: A Practical Guide to Their Development and Use. 4th ed. Oxford University Press, 2008.

- 30. Prinsen CAC, Mokkink LB, Bouter LM, et al. COSMIN guideline for systematic reviews of patient-reported outcome measures. Qual Life Res 2018;27:1147–57. doi:10.1007/s11136-018-1798-3

- 31. Hughes SE, Boisvert I, Rapport F, et al. Measuring the experience of listening effort in cochlear implant candidates and recipients: Development and pretesting of the LEQ-CI [poster presentation]. Southampton: British Cochlear Implant Group Academic Meeting, 2019.

- 32. Maitland A, Presser S. How accurately do different evaluation methods predict the reliability of survey questions? J Surv Stat Methodol 2016;4:362–81. doi:10.1093/jssam/smw014

- 33. Linacre JM. Sample size and item calibration or person measure stability. Rasch Meas Trans 1994;7:328.

- 34. Hobart JC, Cano SJ, Warner TT, et al. What sample sizes for reliability and validity studies in neurology? J Neurol 2012;259:2681–94. doi:10.1007/s00415-012-6570-y

- 35. Bond TG, Fox CM. Applying the Rasch Model: Fundamental Measurement in the Human Sciences. 3rd ed. London: Routledge, 2015.

- 36. Petrillo J, Cano SJ, McLeod LD, et al. Using Classical Test Theory, Item Response Theory, and Rasch Measurement Theory to evaluate patient-reported outcome measures: a comparison of worked examples. Value Health 2015;18:25–34. doi:10.1016/j.jval.2014.10.005

- 37. Barker BA, Donovan NJ, Schubert AD, et al. Using Rasch analysis to examine the item-level psychometrics of the Infant-Toddler Meaningful Auditory Integration Scales. Speech Lang Hear 2017;20:130–43. doi:10.1080/2050571X.2016.1243747

- 38. Heffernan E, Maidment DW, Barry JG, et al. Refinement and validation of the Social Participation Restrictions Questionnaire: an application of Rasch analysis and traditional psychometric analysis techniques. Ear Hear 2019;40:328–39. doi:10.1097/AUD.0000000000000618

- 39. Tractenberg RE. Classical and modern measurement theories, patient reports, and clinical outcomes. Contemp Clin Trials 2010;31:1–3. doi:10.1016/S1551-7144(09)00212-2

- 40. Cano SJ, Hobart JC. The problem with health measurement. Patient Prefer Adherence 2011;5:279–90. doi:10.2147/PPA.S14399

- 41. da Rocha NS, Chachamovich E, de Almeida Fleck MP, et al. An introduction to Rasch analysis for psychiatric practice and research. J Psychiatr Res 2013;47:141–8. doi:10.1016/j.jpsychires.2012.09.014

- 42. Boone WJ. Rasch analysis for instrument development: why, when, and how? CBE Life Sci Educ 2016;15:rm4. doi:10.1187/cbe.16-04-0148

- 43. Ball S, Vickery J, Hobart J, et al. The Cannabinoid Use in Progressive Inflammatory brain Disease (CUPID) trial: a randomised double-blind placebo-controlled parallel-group multicentre trial and economic evaluation of cannabinoids to slow progression in multiple sclerosis. Health Technol Assess 2015;19:1–188. doi:10.3310/hta19120

- 44. Tadić V, Cooper A, Cumberland P, et al. Measuring the quality of life of visually impaired children: first stage psychometric evaluation of the novel VQoL_CYP instrument. PLoS One 2016;11:e0146225. doi:10.1371/journal.pone.0146225

- 45. Floyd FJ, Widaman KF. Factor analysis in the development and refinement of clinical assessment instruments. Psychol Assess 1995;7:286–99. doi:10.1037/1040-3590.7.3.286

- 46. Wright L. Reasonable mean-square fit values. Rasch Meas Trans 1994;8.

- 47. Zumbo B. A handbook on the theory and methods of differential item functioning (DIF): logistic regression modeling as a unitary framework for binary and likert-type (ordinal) item scores. 1999. www.researchgate.net/publication/236596822_A_handbook_on_the_theory_and_methods_of_differential_item_functioning_(DIF)_Logistic_regression_modeling_as_a_unitary_framework_for_binary_and_Likert-type_(ordinal)_item_scores/file/60b7d51830c07e4cbc.pdf (accessed Dec 2018).

- 48. Teresi JA, Ramirez M, Jones RN, et al. Modifying measures based on differential item functioning (DIF) impact analyses. J Aging Health 2012;24:1044–76. doi:10.1177/0898264312436877

- 49. Johnston M, Dixon D, Hart J, et al. Discriminant content validity: a quantitative methodology for assessing content of theory-based measures, with illustrative applications. Br J Health Psychol 2014;19:240–57. doi:10.1111/bjhp.12095

- 50. Linacre JM. Categories Disordering (disordered categories) vs. Threshold Disordering (disordered thresholds). Rasch Meas Trans 1999;13:675.

- 51. Gorecki CA. The development and validation of a patient-reported outcome measure of health-related quality of life for patients with pressure ulcers: PUQOL Project: University of Leeds, 2011.

- 52. Tennant A, Conaghan PG. The Rasch measurement model in rheumatology: what is it and why use it? When should it be applied, and what should one look for in a Rasch paper? Arthritis Care Res 2007;57:1358–62. doi:10.1002/art.23108

- 53. Gorecki C, Brown JM, Cano S, et al. Development and validation of a new patient-reported outcome measure for patients with pressure ulcers: the PU-QOL instrument. Health Qual Life Outcomes 2013;11:95. doi:10.1186/1477-7525-11-95

- 54. Michielsen HJ, De Vries J, Van Heck GL, et al. Examination of the dimensionality of fatigue. Eur J Psychol Assess 2004;20:39–48. doi:10.1027/1015-5759.20.1.39

- 55. Alhanbali S, Dawes P, Lloyd S, et al. Self-reported listening-related effort and fatigue in hearing-impaired adults. Ear Hear 2017;38:e39–e48. doi:10.1097/AUD.0000000000000361

- 56. Hinderink JB, Krabbe PF, Van Den Broek P. Development and application of a health-related quality-of-life instrument for adults with cochlear implants: the Nijmegen Cochlear Implant Questionnaire. Otolaryngol Head Neck Surg 2000;123:756–65. doi:10.1067/mhn.2000.108203

- 57. National Institute for Health and Clinical Excellence (NICE). NICE technology appraisal guidance 166: Cochlear implants for children and adults with severe to profound deafness. 2009. www.guidance.nice.org.uk/TA166/pdf/English (accessed Dec 2018).

Associated Data
