Abstract

The dopaminergic system is known to play a central role in reward-based learning (Schultz, 2006), yet it was also observed to be involved when only cognitive feedback is given (Aron et al., 2004). Within the domain of information-integration category learning, in which information from several stimulus dimensions has to be integrated predecisionally (Ashby and Maddox, 2005), the importance of contingent feedback is well established (Maddox et al., 2003). We examined the common neural correlates of reward anticipation and prediction error in this task. Sixteen subjects performed two parallel information-integration tasks within a single event-related functional magnetic resonance imaging session but received a monetary reward only for one of them. Similar functional areas including basal ganglia structures were activated in both task versions. In contrast, a single structure, the nucleus accumbens, showed higher activation during monetary reward anticipation compared with the anticipation of cognitive feedback in information-integration learning. Additionally, this activation was predicted by measures of intrinsic motivation in the cognitive feedback task and by measures of extrinsic motivation in the rewarded task. Our results indicate that, although all other structures implicated in category learning are not significantly affected by altering the type of reward, the nucleus accumbens responds to the positive incentive properties of an expected reward depending on the specific type of the reward.

Introduction

Learning which action leads to the most beneficial outcome in a given situation is one of the central components of adaptive behavior. The dopaminergic system with its projections to striatal and medial prefrontal areas is known to play a crucial role in reward learning (O'Doherty, 2004; Schultz, 2006). In humans, it is often studied by using gambling paradigms, in which subjects learn probabilistic stimulus–reward contingencies by trial and error, the reward being earnings in money (Abler et al., 2006; Dreher et al., 2006; Smith et al., 2009). However, there are indications that the dopaminergic system is also involved in tasks in which only cognitive feedback is provided (Aron et al., 2004; Rodriguez et al., 2006).

Behavioral studies of category learning with cognitive feedback suggest that tasks that force subjects to rely on gradually acquired stimulus–outcome contingencies are sensitive to the nature and timing of feedback (Maddox et al., 2003, 2008). During trial-and-error learning in these tasks, striatal areas are activated (Poldrack et al., 2001; Cincotta and Seger, 2007) and patients with Parkinson's disease, which is characterized by the loss of dopaminergic input to the striatum, are impaired (Filoteo and Maddox, 2007). These results therefore indicate that the functional neuronal substrates underlying learning based on reward and cognitive feedback are very similar in certain task domains.

However, differences have to be expected because the dopaminergic system is known to respond differentially to rewards of different magnitude and value in the insula, amygdala, orbitofrontal cortex, and striatum (Gottfried et al., 2003; Tobler et al., 2007; Smith et al., 2009). Also, subcomponents of the striatum coding motivational aspects, such as the nucleus accumbens, are assumed to respond to the modulation of reward characteristics, whereas subcomponents involved in executive processes, such as the caudate head, should be less affected.

To test these assumptions, we conducted a functional magnetic resonance imaging (fMRI) experiment comparing the effects of cognitive feedback and reward in an information-integration category learning task (Ashby et al., 1998). Each subject performed two parallel versions, in one of which correct answers were rewarded with a monetary gain, whereas in the other, only information about the correctness of the answer was given. Although there are indications that both negative and positive feedback contribute to information-integration learning (Ashby and O'Brien, 2007), the dopaminergic substrates of reinforcement learning are well established, whereas research on avoidance learning mainly focused on the amygdaloid–hippocampal basis of fear conditioning (LeDoux, 2003). Therefore, in the present study, no monetary punishment was delivered. Because with training the dopaminergic response is known to shift backwards in time (Schultz and Dickinson, 2000), we expected to find an effect of the reward manipulation already during stimulus presentation. If reward manipulation also has an influence on the prediction error signal (Schultz, 2007), differential responses between the tasks to both negative and positive feedback are predicted, as long as performance is not perfect. Additionally, motivational states were shown to modulate the fMRI response in the striatum (Mizuno et al., 2008). We assessed the motivation of each subject (Ryan, 1982) and anticipated that the effect of monetary reward is primarily predicted by measures of extrinsic motivation, whereas the effect of cognitive feedback is more responsive to measures of intrinsic motivation.

Materials and Methods

Participants.

Sixteen subjects with a mean age ± SD of 23.1 ± 2.9 years (range 18–29) recruited from the Otto-von-Guericke University community participated in the experiment. None of them reported a history of drug abuse, neurological or psychiatric diseases, or injuries, and all were without pathological findings on a psychiatric screening questionnaire [SCL-90-R (Franke, 1995)]. The participants were right-handed as confirmed by the Edinburgh Handedness Inventory (Oldfield, 1971) and reported normal or corrected-to-normal vision. Informed written consent was obtained in accordance with the protocols approved by the local ethics committee before the experiment, and the participants received a mean ± SD payment of €29.3 ± €2.5 (range €26–33) based on their performance.

Materials.

The perceptual categorization task used in this study was developed by using the randomization technique introduced by Ashby and Gott (1988). Two sets of stimuli were presented in the present experiment, either circles with an opening of 30° width or two parallel lines, both in white on black background. All stimuli varied on two dimensions, line width and orientation, and categories were specified based on the location of the stimuli within this two-dimensional perceptual space (Fig. 1). Each category was based on a bivariate normal distribution from which all category members were sampled. Two parallel category structures for each set of stimuli were specified by means of rotating the category boundary by 90°, resulting in the same within-category scatter, difference between category means, and absolute value of covariances of the two dimensions in both category structures. The exact parameter values for the resulting four categories are summarized in Table 1. From each distribution, 25 ordered pairs (x, y) were randomly sampled for each experimental block and linearly transformed so that the sample mean vector and variance–covariance matrix equaled those of the population. The x and y values obtained in this way were used to determine line width (x/2 pixels) and orientation (y × π/600 radians) of the stimuli. The scale factors were chosen in an attempt to equalize the salience of both dimensions. Because line width only increased to the center of the stimuli, the overall dimensions of the images were not changed by the manipulation. The optimal decision bound for both category structures is depicted along with examples for both stimulus sets in Figure 1. A pilot study with 17 subjects confirmed that the resulting four task versions (2 sets of stimuli × 2 category structures) do not differ significantly in terms of learning speed and error rates.
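The sampling step described above can be illustrated with a short sketch. It assumes a Cholesky-based whitening and recoloring of standard normal draws and an n − 1 normalization of the sample covariance; the text states only that the samples were linearly transformed to match the population moments, so the function names (`sample_category`, `to_stimulus`) and the exact transform are illustrative assumptions and not necessarily the procedure that was actually used.

```python
import math
import random


def chol2(var_x, var_y, cov_xy):
    """Lower-triangular Cholesky factor (l11, l21, l22) of a 2x2 covariance matrix."""
    l11 = math.sqrt(var_x)
    l21 = cov_xy / l11
    l22 = math.sqrt(var_y - l21 ** 2)
    return l11, l21, l22


def sample_moments(points):
    """Sample means, variances, and covariance (n - 1 normalization, an assumption)."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points) / (n - 1)
    syy = sum((p[1] - my) ** 2 for p in points) / (n - 1)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points) / (n - 1)
    return mx, my, sxx, syy, sxy


def sample_category(mean, var_x, var_y, cov_xy, n=25, rng=None):
    """Draw n points whose sample mean and covariance equal the requested values."""
    rng = rng or random.Random()
    raw = [(rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)) for _ in range(n)]
    mx, my, sxx, syy, sxy = sample_moments(raw)
    a11, a21, a22 = chol2(sxx, syy, sxy)          # factor of the sample covariance
    l11, l21, l22 = chol2(var_x, var_y, cov_xy)   # factor of the target covariance
    points = []
    for x, y in raw:
        # Whiten: forward substitution so the sample has zero mean, identity covariance.
        z1 = (x - mx) / a11
        z2 = ((y - my) - a21 * z1) / a22
        # Recolor with the target factor and shift to the target mean.
        points.append((mean[0] + l11 * z1, mean[1] + l21 * z1 + l22 * z2))
    return points


def to_stimulus(x, y):
    """Map a sampled pair to line width (pixels) and orientation (radians)."""
    return x / 2.0, y * math.pi / 600.0
```

With the category A parameters for the positive-slope bound in Table 1, `sample_category((500, 700), 44100, 44100, 43500)` returns 25 pairs whose sample mean and covariance match the requested values up to floating-point error.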

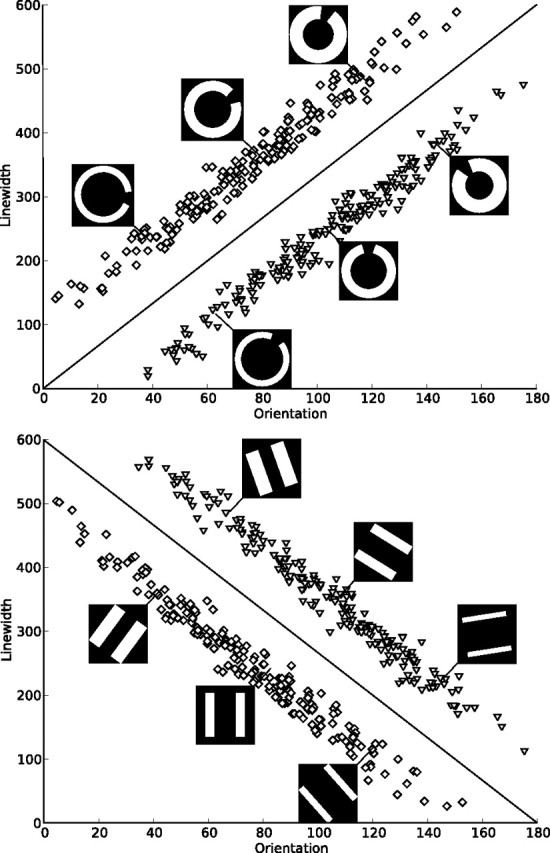

Figure 1.

Category structures and sample stimuli. Each square denotes the orientation and line width of a stimulus from category A, and each triangle denotes those of a stimulus from category B. The lines represent the optimal decision bound. Two types of category structures were presented, one with a positive slope of the optimal decision bound and one with a negative slope. For both types of stimuli used in the experiment (circles and lines), examples of three stimuli from each category are shown. Both types of stimuli were used with both types of decision bounds. Note that 0° does not correspond to a horizontal alignment of the stimuli to make the verbalization of a categorization rule more difficult.

Table 1.

Category distribution

| Slopeᵃ | μx (A) | μy (A) | σx (A) | σy (A) | covxy (A) | μx (B) | μy (B) | σx (B) | σy (B) | covxy (B) |
|---|---|---|---|---|---|---|---|---|---|---|
| Positive | 500 | 700 | 44,100 | 44,100 | 43,500 | 700 | 500 | 4410 | 44,100 | 43,500 |
| Negative | 500 | 500 | 44,100 | 44,100 | −43,500 | 700 | 700 | 4410 | 44,100 | −43,500 |

(A), Category A; (B), category B; μ, mean for each dimension; σ, variance for each dimension; cov, covariance between dimensions.

ᵃOf the optimal decision bound.

Procedure.

Each participant performed two tasks. In the first task, one type of stimuli (lines or circles) was presented with one category structure (positive or negative slope of the optimal decision bound), and in the second task, the other type of stimuli was presented with the other category structure. One of these two tasks was rewarded with €0.20 for each percentage of right answers, resulting in eight possible task combinations [2 (stimulus type) × 2 (category structure) × 2 (first or second task rewarded)], each of which was presented to two of the 16 participants. Subjects were instructed to learn about the two categories in each task by relying on the feedback they would receive after each decision and were informed that perfect performance was possible. Because we were not interested in the early performance on this task, while subjects might still use suboptimal verbal strategies, all participants were trained to criterion (80% correct answers) on the day before the fMRI session. The training was performed in a dimly lit room using the Presentation (Neurobehavioral Systems) software. All participants were presented with the two tasks alternatingly in blocks of 50 trials with equal base rates for both categories. Each trial consisted of the presentation of a stimulus spanning a visual angle of 12° at the center of the screen for 2 s. Subjects were requested to make a decision about category membership during stimulus presentation. After a random delay that was sampled from an exponential distribution with a mean of 2 s (range of 0.5–6 s), they received both auditory and visual feedback. Auditory feedback was provided via a tone of 0.25 s duration and had a frequency of 900 Hz for right and of 350 Hz for wrong answers, whereas visual feedback was presented for 1 s at a visual angle of 10°. Positive visual feedback consisted of a filled green circle in the cognitive feedback task and of a picture of a 20¢ coin in the monetary reward task. 
Negative feedback was indicated in both tasks by a filled red circle. When the subject failed to respond, a filled yellow circle was presented. Except for the intertrial interval (ITI), which was sampled from an exponential distribution with a mean of 3.5 s (range of 0.5–8 s), all parameters were equivalent to the fMRI session (Fig. 2). Training ended independently for each task after the criterion of 80% correct answers in a single block was reached. Two subjects did not reach the criterion after 5 blocks and were therefore not included in the study. Training was followed by the fMRI experiment on the next day. During the fMRI experiment, subjects completed the two tasks they had trained alternatingly in four blocks. Each block incorporated 50 trials and lasted ∼11 min. All stimuli originated from the same bivariate distributions as the training stimuli but were sampled independently of them. To exclude the possibility that activation differences between the rewarded and unrewarded task are exclusively attributable to differing visual stimulation, for half of the subjects, the 20¢ coin signaling a correct answer in the rewarded task was replaced by a green circle with the instruction that they would nevertheless gain €0.20 for each correct answer. The single trials were separated by a variable ITI sampled from an exponential distribution with a mean of 6 s (range of 1–12 s). An overview of the experimental procedure is given in Figure 3. After completing the testing session, all subjects filled out a questionnaire based on the postexperimental scale of the Intrinsic Motivation Inventory (Ryan, 1982; McAuley et al., 1989) for both tasks. The included subscales were interest/enjoyment, perceived competence, perceived choice, pressure/tension, effort/importance, and value/usefulness.
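The jittered delays and intertrial intervals described above can be sketched as draws from a range-limited exponential distribution. The text does not state how the range was enforced; the sketch below assumes simple rejection sampling, under which the realized mean differs somewhat from the nominal mean. The function name `sample_interval` is hypothetical.

```python
import random


def sample_interval(mean_s, low_s, high_s, rng=None):
    """Draw one interval (seconds) from an exponential distribution with the
    given nominal mean, redrawing until the value lies within [low_s, high_s]."""
    rng = rng or random.Random()
    while True:
        value = rng.expovariate(1.0 / mean_s)  # rate parameter = 1 / mean
        if low_s <= value <= high_s:
            return value


def sample_trial_timing(rng=None):
    """Delay before feedback and ITI for one trial of the fMRI session."""
    rng = rng or random.Random()
    return {
        "delay_s": sample_interval(2.0, 0.5, 6.0, rng),  # stimulus-feedback delay
        "iti_s": sample_interval(6.0, 1.0, 12.0, rng),   # intertrial interval
    }
```

For the training session, the ITI call would instead use a mean of 3.5 s and a range of 0.5–8 s.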

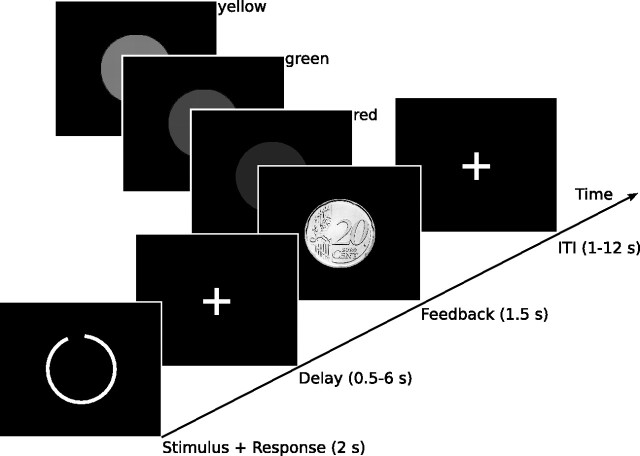

Figure 2.

Trial structure. Each trial started with the presentation of a stimulus for 2 s. Subjects were instructed to respond during this period by pressing one of two buttons. The stimulus was followed by a delay that was randomly sampled from an exponential distribution with a mean of 2 s (range of 0.5–6 s), after which feedback was presented for 1.5 s. Positive feedback was given by showing a green circle or, in the rewarded condition, a 20¢ coin, together with a high tone. Negative feedback consisted of a red circle and a low tone. If the subject failed to respond, a yellow circle was presented together with the low tone. Trials were separated by an interval that was randomly sampled from an exponential distribution with a mean of 6 s (range of 1–12 s).

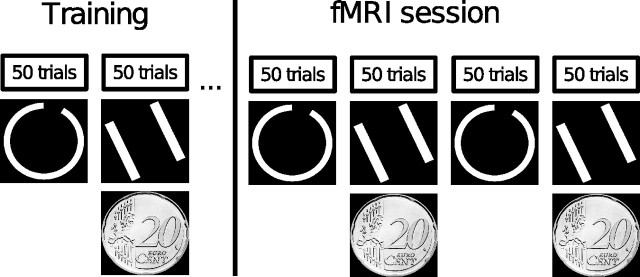

Figure 3.

Session structure. Each subject was trained on both tasks on the day before the fMRI session. Whether the first task was rewarded or not, whether it contained circle or line stimuli, and whether the optimal decision bound had a positive or negative slope was randomized across subjects. Training ended independently for both tasks after the subject reached an accuracy rate of 80% within a single block. During the fMRI session, the two trained tasks were presented alternatingly in four blocks of 50 trials each.
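The counterbalancing scheme (2 stimulus types × 2 category structures × 2 reward orders, each combination given to two of the 16 participants) can be sketched as follows. The shuffling with a fixed seed and the names `assign_conditions` and `is_balanced` are assumptions for illustration; the text does not describe how subjects were allocated to combinations.

```python
import random
from collections import Counter
from itertools import product

STIMULUS_FIRST_TASK = ("circles", "lines")
SLOPE_FIRST_TASK = ("positive", "negative")
REWARDED_TASK = ("first", "second")


def assign_conditions(n_subjects=16, seed=0):
    """Return one (stimulus, slope, rewarded task) combination per subject,
    using every combination equally often and in random order."""
    combos = list(product(STIMULUS_FIRST_TASK, SLOPE_FIRST_TASK, REWARDED_TASK))
    if n_subjects % len(combos) != 0:
        raise ValueError("n_subjects must be a multiple of the number of combinations")
    slots = combos * (n_subjects // len(combos))
    random.Random(seed).shuffle(slots)
    return slots


def is_balanced(assignments):
    """True if every combination occurs equally often."""
    counts = Counter(assignments)
    return len(set(counts.values())) == 1
```

The second task of each subject then uses the other stimulus type and the other category structure.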

fMRI image acquisition.

Functional magnetic resonance imaging data were acquired on a Siemens MAGNETOM Trio whole-body 3T MRI scanner equipped with an eight-channel head coil. First, structural images of the brain were recorded using a T1-weighted magnetization-prepared rapid acquisition gradient echo (MP-RAGE) sequence with a 256 × 256 matrix, a field of view (FOV) of 256 mm, and 192 sagittal slices of 1 mm thickness. A total of 1360 functional volumes were obtained in a single session using a whole-brain T2*-weighted echo planar imaging (EPI) sequence. The parameters for the functional measurements were as follows: echo time, 30 ms; repetition time, 2000 ms; slice thickness, 3 mm; slice gap thickness, 0.3 mm; number of slices, 30 (interleaved order); flip angle, 80°; FOV, 192 × 192 mm; and a matrix size of 64 × 64, resulting in an in-plane resolution of 3 × 3 mm.
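The acquisition parameters can be cross-checked with simple arithmetic. The helper names below are hypothetical, and the slab coverage assumes that the gap applies between adjacent slices only.

```python
def scan_duration_min(n_volumes, tr_s):
    """Total functional scan time in minutes."""
    return n_volumes * tr_s / 60.0


def in_plane_resolution_mm(fov_mm, matrix_size):
    """Voxel edge length within a slice."""
    return fov_mm / matrix_size


def slab_coverage_mm(n_slices, thickness_mm, gap_mm):
    """Extent covered along the slice direction (gaps between slices only)."""
    return n_slices * thickness_mm + (n_slices - 1) * gap_mm


duration = scan_duration_min(1360, 2.0)        # about 45.3 min
resolution = in_plane_resolution_mm(192, 64)   # 3.0 mm
coverage = slab_coverage_mm(30, 3.0, 0.3)      # about 98.7 mm
```

The resulting scan time of roughly 45 min is in line with four blocks of ∼11 min each.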

Image processing.

The functional images were preprocessed with the statistical parametric mapping software SPM5 (Wellcome Department of Cognitive Neurology, London, UK). Preprocessing included slice timing correction using the first slice as reference and three-dimensional motion correction, i.e., rigid body realignment to the mean of all images. The six estimated movement parameters were saved to be included in the statistical analysis. It was ensured that head movement was below 3 mm and 3° for each participant. Images were normalized to Montreal Neurological Institute (MNI) space (Evans et al., 1993) using the standard EPI template of SPM5. The data were spatially smoothed using a Gaussian filter of 6 mm full-width at half-maximum, and a temporal high-pass filter of Hz was applied to remove low-frequency confounds.

Statistical analysis.

The regressors for within-subject modeling were convolved with a model of the hemodynamic response function and represented right and wrong answers of each subject for both the period of categorization (i.e., stimulus presentation and response) and feedback presentation. An additional regressor for delay between categorization and feedback was included, and all regressors were fit to the data separately for the rewarded and not rewarded condition using the general linear model. Contrast images of the condition-specific estimates for each subject were then submitted to the second-level group analyses with subject as the random-effect variable. A 2 × 2 × 2 ANOVA was conducted with the factors trial part (stimulus or feedback), condition (rewarded or nonrewarded), and success (right or wrong answer). All fMRI activation maps were thresholded at p < 0.05 (spatial extent of more than five contiguous voxels), and all reported clusters survived correction for multiple comparisons at the whole-brain level using familywise error rate (FWE) correction (Worsley et al., 1996). Because an analysis comparing the group of subjects receiving visual positive feedback in the rewarded condition in the form of a coin with those receiving it in the form of a green circle did not reveal any significant differences, the data of both groups were collapsed.
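How an event regressor is obtained by convolution with a hemodynamic response model can be sketched in a few lines. The analysis itself was run in SPM5; the double-gamma shape used here (gamma densities with shapes 6 and 16, undershoot ratio 1/6) is a commonly used approximation and is an assumption, as are the onset rounding to the nearest scan and the function names.

```python
import math

TR_S = 2.0  # repetition time in seconds, from the acquisition parameters


def double_gamma_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=6.0):
    """Double-gamma response at time t (seconds); zero for t <= 0."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - under / ratio


def make_regressor(onsets_s, n_scans, tr_s=TR_S, hrf_length_s=32.0):
    """Convolve a stick function of event onsets with the sampled response."""
    kernel = [double_gamma_hrf(i * tr_s) for i in range(int(hrf_length_s / tr_s) + 1)]
    sticks = [0.0] * n_scans
    for onset in onsets_s:
        idx = int(round(onset / tr_s))  # simplification: snap onset to nearest scan
        if 0 <= idx < n_scans:
            sticks[idx] += 1.0
    regressor = [0.0] * n_scans
    for i, amplitude in enumerate(sticks):
        if amplitude == 0.0:
            continue
        for j, weight in enumerate(kernel):
            if i + j < n_scans:
                regressor[i + j] += amplitude * weight
    return regressor
```

In this scheme, one such regressor would be built per event type (for example, correct categorization in the rewarded condition) and entered into the general linear model together with the movement parameters.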

Results

Behavioral results

Accuracy measures

Subjects fulfilled the criterion of 80% correct answers after an average ± SE of 131.25 ± 20.09 training trials in the rewarded task and after an average of 129.69 ± 17.56 training trials in the unrewarded task. An ANOVA of the behavioral data collected during the fMRI session with repeated measures on condition (rewarded/not rewarded) and block (first vs second block of the experiment) showed a significant main effect of block (F(1,15) = 5.5, p < 0.05) and a significant interaction of block by reward (F(1,15) = 5.5, p < 0.05). Paired samples t tests revealed that the interaction was attributable to the fact that, for the rewarded condition, error rates significantly decreased from the first to the second block (t(15) = 3.3, p < 0.05), although they did not change in the unrewarded condition (t(15) = 0.5, NS). The average ± SE error rates were 23.7 ± 1.6% in the monetary reward condition and 23.4 ± 1.4% in the cognitive feedback condition.

Model-based analysis

Because previous experiments have shown that tasks that can be solved by applying a simple verbal rule do not recruit the dopaminergic system, it is crucial for the interpretation of the results of the current experiment to determine which strategies subjects used to solve the task, information that cannot be provided by measures of overall performance. Therefore, based on the location of each subject's responses in the two-dimensional stimulus space, four rule-based models and an information-integration model were separately fit to each of the 32 datasets (two tasks for each of the 16 subjects) using model-fitting procedures as established by Maddox and Ashby (1993). Two of the estimated rule-based models build on the assumption that subjects based their decisions on either only the orientation or only the line width of the stimuli. The other two rule-based models assume that subjects separately evaluated the stimuli against each of the two dimensions and then combined the result of these decisions in a conjunctive rule like “Respond A if the circles are open to the right and are thick, otherwise respond B” (or, respectively, “Respond B if the circles are open to the left and are thin, otherwise respond A”). Results suggest that, for 9 of the 16 subjects, at least one dataset is best fit by the information-integration model, which assumes that the subject applied the optimal decision bound depicted in Figure 1, and they therefore integrated the information of both dimensions. Given the few data points the model fits are based on, these results have to be interpreted with care. Nevertheless, the modeling results might indicate that not all subjects engaged the procedural-learning-based system mediated by subcortical structures. The influence of individual strategies on brain activation is addressed below (see Functional imaging results). Additional details on model fitting and results are provided in supplemental material (available at www.jneurosci.org).
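The logic of comparing decision-bound models can be illustrated with a simplified sketch. It assumes that the probability of responding "A" is a cumulative normal function of the signed distance of the stimulus from a linear bound, and it compares models by the Akaike information criterion (AIC = 2k − 2 ln L); parameter estimation is omitted. This is not the fitting procedure of Maddox and Ashby (1993), and all names and parameter values are illustrative.

```python
import math


def phi(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def neg_log_likelihood(trials, bound, noise_sd):
    """Negative log-likelihood of responses under a linear decision bound.

    trials: iterable of (x, y, response) with response "A" or "B".
    bound:  (a, b, c); respond "A" when a*x + b*y + c > 0 in the noise-free case.
    """
    a, b, c = bound
    norm = math.hypot(a, b)
    nll = 0.0
    for x, y, response in trials:
        distance = (a * x + b * y + c) / norm
        p_a = phi(distance / noise_sd)
        p_a = min(max(p_a, 1e-9), 1.0 - 1e-9)  # guard against log(0)
        nll -= math.log(p_a if response == "A" else 1.0 - p_a)
    return nll


def aic(nll, n_params):
    """Akaike information criterion; lower values indicate a better trade-off."""
    return 2 * n_params + 2 * nll


# Two candidate models for a positive-slope structure (assumed parameter values):
INTEGRATION_BOUND = (-1.0, 1.0, 0.0)       # y - x > 0 -> "A", uses both dimensions
UNIDIMENSIONAL_BOUND = (-1.0, 0.0, 600.0)  # x < 600 -> "A", uses one dimension only
```

For a set of responses, the model with the lower AIC would be taken as the better description; here the integration bound is counted with three free parameters (slope, intercept, noise) and the unidimensional rule with two (criterion, noise), which is also an assumption of this sketch.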

Questionnaire data

ANOVAs were performed with repeated measures on condition (rewarded/not rewarded) with the subscales of the postexperimental motivation inventory as dependent measures. No significant effects were observed.

Functional imaging results

Effect of monetary reward versus cognitive feedback

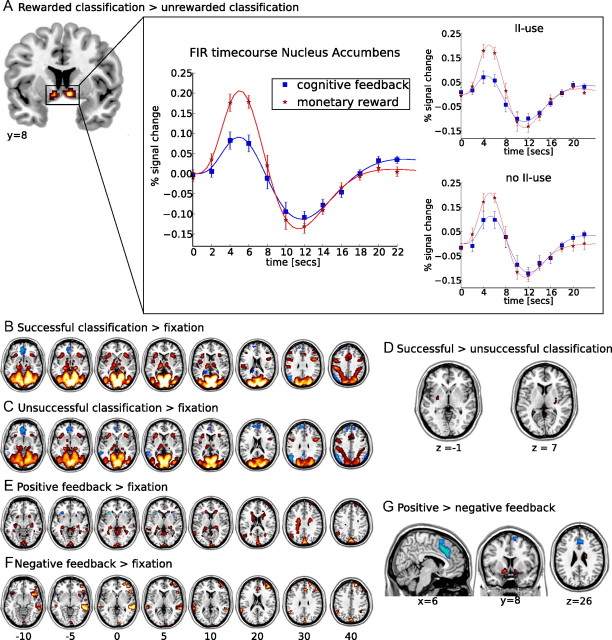

Comparing the effect of monetary reward and cognitive feedback revealed a significantly higher activation for monetary reward bilaterally in the nucleus accumbens (MNI: x = 9, y = 6, z = −9; maximum T, 6.59; and x = −6, y = 0, z = 6; maximum T, 5.52) during categorization (Fig. 4A). No areas were significantly more activated during categorization in the cognitive feedback task nor were any differences observed during the receipt of feedback in both tasks. When the effects of feedback in the rewarded and the cognitive feedback task were compared separately for positive and negative feedback, no significant differences were observed.

Figure 4.

fMRI results. A, Activation in the contrast of monetary reward minus cognitive feedback during stimulus presentation. The time course represents the finite impulse response (FIR) to both monetary reward and cognitive feedback during stimulus presentation, extracted using MarsBar and an anatomical ROI of the nucleus accumbens from the Harvard–Oxford subcortical structural atlas. For each subject, individual functional ROIs within this anatomical ROI were defined based on the areas in which the main effect of stimulus presentation exceeded an uncorrected threshold of p ≤ 0.1. Error bars represent the SEM. Results of this analysis are also plotted separately for subjects with use of the optimal decision bound in at least one condition and those with no information-integration use (no II use). A significant peak activation difference between the task conditions is only observed in the group of subjects with information-integration use (II use). B, C, E, F, Activations (yellow to red) and deactivations (white to blue) for contrasts against fixation. B, Successful categorization minus fixation. Activations are observed in occipital and parietal cortices, as well as in subcortical areas. C, Unsuccessful categorization minus fixation. Activations are mainly observed in occipital and parietal cortices. D, Activations in the contrast of successful minus unsuccessful categorization. No voxel showed higher activations for unsuccessful categorization, whereas bilateral clusters of higher activation during successful categorization were observed in the putamen. E, Positive feedback minus fixation. Both the caudate nuclei and the hippocampi are activated. F, Negative feedback minus fixation. Activations include the rostral cingulate zone and right prefrontal areas. G, Activations in the contrast of positive minus negative feedback. 
Voxels that were more activated during the processing of negative feedback include the RCZ and anterior insula, whereas voxels more activated during the processing of positive feedback are observed in the nucleus accumbens and right caudate body. All maps are thresholded at a level of pFWE < 0.05. Left hemisphere is presented at the left.

Examining the nucleus accumbens activation

Predicting the blood oxygenation level-dependent signal by questionnaire data.

To assess the effect of individual motivation on dopaminergic activations, the estimated signal change (percentage) within the nucleus accumbens was extracted to both monetary reward and cognitive feedback during categorization using MarsBar (http://marsbar.sourceforge.net) and an anatomical region of interest (ROI) of the nucleus accumbens from the Harvard–Oxford subcortical structural atlas as implemented in the Oxford University Centre for Functional MRI of the Brain Software Library (http://www.fmrib.ox.ac.uk). For each subject, individual functional ROIs within this anatomical ROI were defined based on the areas in which the main effect of stimulus presentation exceeded an uncorrected threshold of p < 0.1. These data were then submitted as dependent variable to a multiple stepwise regression with backward elimination of the individual scores on the six motivation questionnaire subscales. In each task, two predictors were left in the final model (cognitive feedback: R² = 0.06; monetary reward: R² = 0.37): in the cognitive feedback task, perceived competence best predicted activation within the nucleus accumbens (β = 0.077, p < 0.05), whereas in the monetary reward task, it was predicted by pressure/tension (β = 0.055, p < 0.05).

Influence of individual strategies.

Because the model-based analyses indicate that not all subjects used the optimal information-integration decision bound, the dataset was split into subjects whose data were fit best by an information-integration model in at least one task and those subjects for whom this was not the case. A stronger blood oxygenation level-dependent (BOLD) signal in response to the expectation of monetary reward compared with cognitive feedback in the nucleus accumbens was only present in the group of subjects using an information-integration rule (T = 2.93, df = 8, p < 0.05). For subjects putatively using a rule-based strategy (i.e., a conjunctive rule), peak activations in the nucleus accumbens did not differ between the expectation of monetary reward and cognitive feedback (T = 1.67, df = 6, p < 0.15) (Fig. 4A).
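The within-group comparisons reported above can be sketched as a paired t statistic on per-subject peak signal values. A paired test is an assumption here, chosen because it matches the reported degrees of freedom (df = n − 1, i.e., 8 for nine subjects and 6 for seven subjects); the function name `paired_t` is hypothetical.

```python
import math
import statistics


def paired_t(condition_a, condition_b):
    """Paired t statistic and degrees of freedom for two matched samples."""
    if len(condition_a) != len(condition_b) or len(condition_a) < 2:
        raise ValueError("need two samples of equal length with at least two subjects")
    diffs = [a - b for a, b in zip(condition_a, condition_b)]
    mean_diff = statistics.mean(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(len(diffs))
    return mean_diff / standard_error, len(diffs) - 1
```

Here `condition_a` and `condition_b` would hold one value per subject for the monetary reward and cognitive feedback conditions, respectively.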

Successful and unsuccessful categorization versus fixation

To ensure that the lack of differential activation in response to cognitive feedback and monetary reward in areas other than the nucleus accumbens was not attributable to a general failure of our paradigm to activate dopaminergic structures, we compared both successful and unsuccessful categorization separately to fixation. We observed widespread common bilateral cortical activations extending from the visual cortices [Brodmann area (BA) 17/18/19] ventrally to the posterior temporal cortex (BA 37), as well as dorsally to the posterior parietal cortex (BA 39/40/7). Also, superior frontal (BA 6), ventrolateral prefrontal (BA 45), medial prefrontal (BA 24/32), and anterior insular activations were observed in both contrasts. Signal decreases were found for both contrasts in medial orbitofrontal areas (BA 9/10/11), in the angular gyrus (BA 39), and in the middle temporal gyrus (BA 21).

Activations during successful categorization were observed in the parahippocampi, the thalamus, the head of the caudate, and the anterior pallidum. Moreover, a cluster of activation was found bilaterally in the midbrain (MNI: x = 9/−9, y = −15, z = 15) at or near the substantia nigra, for both successful and unsuccessful categorization. Additional activations detected when comparing unsuccessful categorization with baseline were located bilaterally within the parahippocampi, the thalamus, and a cluster in the left anterior pallidum but not the caudate (Fig. 4B,C).

Successful versus unsuccessful categorization

When comparing successful and unsuccessful categorization with fixation, we observed different activation patterns in our areas of interest, i.e., subcortical dopaminergic projection sites. We therefore directly compared the two. Bilateral activations within the putamen were observed along with a cluster in the left posterior parietal cortex (BA 40) to show significantly higher activations during successful compared with unsuccessful categorization. No areas showed significantly higher activations for unsuccessful compared with successful categorization (Table 2, Fig. 4D).

Table 2.

Areas of activation when comparing successful and unsuccessful classification and positive and negative feedback

| Region | L/R | BA | k | Maximum T | MNI x | MNI y | MNI z |
|---|---|---|---|---|---|---|---|
| Successful classification–unsuccessful classification | | | | | | | |
| Putamen | L | | 10 | 5.35 | −27 | −6 | −3 |
| | R | | 6 | 5.32 | 30 | −12 | 12 |
| Posterior parietal cortex | L | 40 | 7 | 4.89 | −54 | −54 | 39 |
| Unsuccessful classification–successful classification | – | – | – | – | – | – | – |
| Positive–negative feedback | | | | | | | |
| Nucleus accumbens | L | | 21 | 8.05 | −12 | 6 | −12 |
| | R | | 7 | 6.74 | 15 | −6 | −15 |
| Caudate body | R | | 9 | 5.54 | 21 | −12 | 27 |
| Parahippocampal gyrus | R | | 8 | 5.37 | 36 | −42 | −6 |
| Medial orbitofrontal cortex | M | 9/10/11 | 13 | 5.80 | −3 | 33 | 69 |
| | M | | 10 | 5.39 | 0 | 51 | −6 |
| Precentral gyrus | L | 4/6 | 11 | 5.57 | −15 | −33 | 69 |
| Negative–positive feedback | | | | | | | |
| Dorsal anterior cingulate | M | 32/8 | 262 | 7.09 | 6 | 21 | 42 |
| Anterior insula | R | | 121 | 7.15 | 33 | 21 | −15 |
| | L | | 70 | 6.92 | −33 | 21 | −15 |
| Middle temporal gyrus | R | 21 | 8 | 5.67 | 54 | −36 | −3 |

Included regions exceeded an extent threshold of five contiguous voxels and p < 0.05 (FWE-corrected). For each region, the voxel with the maximum T value is described. The voxel coordinates refer to the MNI template. The laterality (L/R) of the clusters is described [left (L), right (R), medial (M)] and corresponding anatomical labels (Region), BAs, and cluster sizes (k) are listed.

Positive and negative feedback versus fixation

Because performance on the task was not perfect, even after training, both positive and negative feedback are not fully predicted and are therefore expected to elicit a prediction error signal. To examine this effect, we compared both events separately to fixation. Positive feedback significantly activated cortical areas in the (pre-)cuneus, middle temporal gyrus (BA 20/21), angular gyrus (BA 39), posterior parietal cortex (BA 7/40), and superior frontal areas (BA 9/10). Deactivations were detected bilaterally in the anterior insula. Activations in this contrast included bilaterally all parts of the caudate (head, body, and tail), as well as both hippocampi. When comparing negative feedback with fixation, no subcortical activations were found at the chosen threshold. Cortical activations included left prefrontal cortex extending from dorsolateral to frontopolar areas (BA 9/10/45/46), the precuneus, bilaterally the middle/superior temporal gyri (BA 21/22), as well as a cluster in the right posterior parietal cortex (BA 40). Also, both anterior insulae, the right supplementary motor area, and the dorsal anterior cingulate cortex (BA 32) were activated (Fig. 4E,F).

Positive versus negative feedback

The direct comparison of positive with negative feedback allows a differential assessment of positive versus negative prediction errors. Areas activated significantly more by positive than negative feedback included bilaterally the nucleus accumbens, the body of the caudate and paracentral areas (BA 4/6), as well as the right parahippocampus and a medial orbitofrontal focus (BA 11). Areas activated significantly more by negative than by positive feedback included the rostral cingulate zone (RCZ) (BA 32/8) and bilaterally the anterior insula, as well as a locus in the right middle temporal gyrus (BA 21) (Table 2, Fig. 4G).

Effects of training

Because the behavioral data indicated significant effects for the main effect of block (first vs second block of the task) and the interaction between block and condition (cognitive feedback vs monetary reward), we ran the first-level fMRI analysis again with separate regressors for the first versus second block to check for neuronal substrates of the behavioral effect. No training effect or block × condition interaction was observed in the functional data.

Discussion

Differential activations during categorization

We compared monetary reward with cognitive feedback in information-integration learning. The anticipation of monetary reward led to higher activation than the anticipation of cognitive feedback in a single structure, the nucleus accumbens. Activation in the nucleus accumbens has been shown previously to increase with both reward magnitude and reward probability (Knutson et al., 2001; Abler et al., 2006) during reward expectation and was therefore suggested to code for expected reward value, which is defined as the product of these (Knutson et al., 2005). Because in our experiment error rates between the rewarded and cognitive feedback task did not differ, probability of reward was constant across the tasks, and the differential activation in the nucleus accumbens is likely to represent an effect of reward magnitude.
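The argument above can be made concrete with a small calculation of expected reward value as the product of magnitude and probability. The sketch assumes that the probability of reward equals the observed proportion of correct answers and that cognitive feedback carries a monetary magnitude of zero; the function name is hypothetical.

```python
def expected_value(magnitude_eur, p_reward):
    """Expected reward value: magnitude multiplied by probability."""
    return magnitude_eur * p_reward


# Probability of positive feedback, from the reported error rates.
P_CORRECT_MONETARY = 1.0 - 0.237   # 0.763
P_CORRECT_COGNITIVE = 1.0 - 0.234  # 0.766

ev_monetary = expected_value(0.20, P_CORRECT_MONETARY)    # about EUR 0.15 per trial
ev_cognitive = expected_value(0.00, P_CORRECT_COGNITIVE)  # no monetary value
```

Because the two probabilities are nearly identical, the difference in expected monetary value between the tasks comes almost entirely from the magnitude term.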

Previous results indicate that subcortical dopaminergic structures are implicated in implicit but not explicit category learning (Nomura and Reber, 2008). Although in the current study the task was designed so that optimal performance is only possible when information from both stimulus dimensions is integrated predecisionally, this design does not guarantee that subjects refrained from using explicit rules. Therefore, a series of models describing the location of responses in the two-dimensional stimulus space was fit to each subject's behavioral data (Maddox and Ashby, 1993). The anticipation of monetary reward compared with cognitive feedback led to higher activation in the nucleus accumbens only in the group of subjects whose behavioral data were better fit by the information-integration model than by the rule-based models, a result that further underscores the dissociation between verbal and implicit systems for category learning.

We did not observe any additional significant differences during reward expectation between the two task versions. This was not attributable to a lack of activation in the dopaminergic pathways and their cortical target structures. Activations against baseline within the head of the caudate nucleus, the pallidum, and midbrain were observed for categorization. Also, differential activations were present in a dopaminergic target structure, the putamen. It was significantly more activated during successful compared with unsuccessful categorization. This fits in well with previous studies on classification learning, which reported activation within the putamen (Cincotta and Seger, 2007) that correlated with accuracy and increased with training (Seger and Cincotta, 2005). With its connections to premotor areas, the putamen has been suggested to be central to action selection (Seger, 2008) and to be implicated in the skilled performance of a task (Poldrack et al., 2005).

Differential activations during feedback processing

In addition to reward expectation, we also investigated activation related to another central aspect of learning, the prediction error. Two areas implicated previously in processing reward prediction errors were differentially activated during the processing of negative and positive feedback: the RCZ was more active during the processing of negative feedback, whereas the nucleus accumbens was more active during the processing of positive feedback. The finding of an RCZ activation in response to negative feedback is in accordance with a large body of research (for an overview, see Ridderinkhof et al., 2004a). This activation is often interpreted as reflecting the transmission of a prediction error signal conveyed by the mesencephalic dopaminergic system (Holroyd and Coles, 2002), which in turn signals to other brain areas the increased need for control to induce behavioral adjustments and thereby maximize performance (Ridderinkhof et al., 2004b). Activation within the nucleus accumbens was shown previously to reflect the positive prediction error depending on both the probability (Abler et al., 2006) and the magnitude (Breiter et al., 2001) of reward. The observed activations both within the RCZ and the nucleus accumbens only reflected reward valence and were not further modulated by the different types of reward presented. Concerning the RCZ activation, this is in line with studies on the error- or feedback-related negativity, an event-related EEG component, which is thought to be generated in the RCZ (Debener et al., 2005) and has been shown to be modulated only by feedback valence but not by the magnitude of the (not received) reward (Yeung and Sanfey, 2004; Hajcak et al., 2006). Previous experiments on reward in humans showed increasing activation in the ventral striatum during the anticipation of increasing monetary rewards (Knutson et al., 2001; Tobler et al., 2007), whereas effects of reward magnitude on the processing of actual reward are less clear. In studies in which the size of the reward was subject to a prediction error, a positive relationship between the magnitude of the outcome and activation was observed in the ventral striatum (Breiter et al., 2001), whereas Delgado et al. (2003) only observed dorsal striatal responses.

During the processing of feedback, we also observed differential activation in the body of the caudate nucleus. This structure is part of the visual corticostriatal loop and receives both highly compressed input from visual cortices and a dopaminergic learning signal. Therefore, the caudate body and tail are regarded as the central structures for the establishment of stimulus–response contingencies in information-integration category learning (Ashby and Maddox, 2005; Nomura and Reber, 2008; Seger, 2008). This interpretation is supported by our finding that the caudate body is significantly more activated during the processing of positive than during the processing of negative feedback. Again, the activation within the body of the caudate nucleus was not significantly modulated by the type of reward, i.e., by whether monetary reward or cognitive feedback was presented.

Additionally, we observed an activation in the right posterior parahippocampus that was stronger for positive compared with negative feedback. No differential medial temporal lobe activations were observed for successful compared with unsuccessful task performance. This result is in line with Seger and Cincotta (2005), who reported hippocampal and parahippocampal activation during implicit category learning associated with the processing of positive feedback but not with correct classification. In summary, we observed several differential activations in dopaminergic projection areas during the processing of feedback, but none of those was modulated by our reward manipulation. The reward magnitude within a given condition of our paradigm was fixed, leaving no prediction error concerning the reward magnitude during feedback but only the question of whether reward is delivered or not.
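The last point can be made concrete with a small sketch. It assumes the common formulation of a prediction error as the received outcome minus the expected outcome; this is an illustrative assumption, not a model fitted in this study, and the magnitude and probability values are hypothetical.

```python
def prediction_error(received: float, expected: float) -> float:
    """Prediction error as received outcome minus expected outcome."""
    return received - expected


magnitude = 1.0   # hypothetical reward magnitude, fixed within a condition
p_reward = 0.75   # hypothetical probability of positive feedback
expected = p_reward * magnitude

# Reward delivered: positive prediction error.
pe_positive = prediction_error(received=magnitude, expected=expected)
# Reward omitted: negative prediction error.
pe_negative = prediction_error(received=0.0, expected=expected)
print(pe_positive, pe_negative)
```

Because the magnitude is fixed within a condition, the prediction error on a given trial takes one of only two values, and its sign is determined solely by whether the reward is delivered.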

Commonalities of reward and cognitive feedback-based learning

The idea that reward and cognitive feedback-based information-integration learning share similar functional substrates was first proposed a decade ago (Ashby et al., 1998). It has received considerable support (Ashby and Valentin, 2005; Nomura and Reber, 2008) since then, but no study has directly compared the processes within a single fMRI experiment. In this comparison, we observed several differential activations within dopaminergic projection areas in the striatum during information-integration learning, including activation in the putamen for successful compared with unsuccessful categorization, in the nucleus accumbens and in the body of the caudate nucleus for positive compared with negative feedback. However, none of these activations was significantly modulated by the type of feedback, whether cognitive or monetary. The only difference between monetary reward and cognitive feedback we observed was a quantitative effect within the nucleus accumbens during categorization, i.e., while subjects anticipated the reward. Similarly, previous experiments on monetary rewards showed that activation in the nucleus accumbens during reward anticipation increases with the magnitude of potential gains (Knutson et al., 2001, 2005). Together with our results, this may, at first sight, indicate that cognitive feedback and monetary reward are processed very similarly, with the subjective incentive magnitude as the only difference. This interpretation would predict that, when parametrically varying the magnitude of the monetary reward, the anticipation of low monetary gains elicits a response very similar to that elicited by the anticipation of cognitive feedback. However, when correlating the individual motivation with the BOLD signal change, we observed that the activation within the nucleus accumbens increased with the subject's perceived competence during the expectation of cognitive feedback and with the subjective pressure/tension during the expectation of monetary reward. Perceived competence is presumed to be a predictor of intrinsic motivation, whereas pressure and tension are predictors of extrinsic motivation (Deci et al., 1994; Ryan and Deci, 2000). Observing this distinct pattern within the same subjects suggests that, although the accumbens parametrically codes for the incentive value of a potential reward, it does so distinctly for different kinds of motivation. Following this line of argumentation, it is expected that even small monetary rewards may alter motivational processes. Whether these motivational changes are associated with different neural activation patterns within dopaminergic structures may be an interesting topic for additional studies.

Conclusions

Cognitive feedback and monetary reward activated dopaminergic structures in a very similar way during information-integration category learning. This result supports the assumption that forms of learning that depend on response-contingent feedback rely on neuronal substrates related to those of reward learning (Ashby and Maddox, 2005; Nomura and Reber, 2008; Seger, 2008). However, one structure, the nucleus accumbens, showed markedly higher activation in expectation of monetary reward compared with the expectation of cognitive feedback in the group of subjects who used implicit strategies. Moreover, the activation strength in the nucleus accumbens was predicted by intrinsic motivation when cognitive feedback was expected and by extrinsic motivation when monetary reward was expected. Previous findings on monetary rewards suggest that the nucleus accumbens codes for the expected positive incentive properties of a reward (Knutson et al., 2001; Cooper and Knutson, 2008). Our observations complement these findings by showing that the accumbens also responds differentially when comparing different types of reward, i.e., cognitive feedback and monetary reward. Additional studies may investigate whether the activation strength in the nucleus accumbens represents only the common strength of (intrinsic or extrinsic) reward expectation or whether it is modulated differentially by different forms of motivation.

Footnotes

This work was supported by the Deutsche Forschungsgemeinschaft (Sonderforschungsbereich 779, TP A4). We thank Angela A. Manginelli for support with the development of the experimental design and Jana Tegelbeckers for assistance in acquiring the data.

References

- Abler B, Walter H, Erk S, Kammerer H, Spitzer M. Prediction error as a linear function of reward probability is coded in human nucleus accumbens. Neuroimage. 2006;31:790–795. doi: 10.1016/j.neuroimage.2006.01.001.

- Aron AR, Shohamy D, Clark J, Myers C, Gluck MA, Poldrack RA. Human midbrain sensitivity to cognitive feedback and uncertainty during classification learning. J Neurophysiol. 2004;92:1144–1152. doi: 10.1152/jn.01209.2003.

- Ashby FG, Alfonso-Reese LA, Turken AU, Waldron EM. A neuropsychological theory of multiple systems in category learning. Psychol Rev. 1998;105:442–481. doi: 10.1037/0033-295x.105.3.442.

- Ashby FG, Gott RE. Decision rules in the perception and categorization of multidimensional stimuli. J Exp Psychol Learn Mem Cogn. 1988;14:33–53. doi: 10.1037//0278-7393.14.1.33.

- Ashby FG, Maddox WT. Human category learning. Annu Rev Psychol. 2005;56:149–178. doi: 10.1146/annurev.psych.56.091103.070217.

- Ashby FG, O'Brien JB. The effects of positive versus negative feedback on information-integration category learning. Percept Psychophys. 2007;69:865–878. doi: 10.3758/bf03193923.

- Ashby FG, Valentin VV. Multiple systems of perceptual category learning: theory and cognitive tests. In: Cohen H, Lefebvre C, editors. Categorization in cognitive science. New York: Elsevier; 2005.

- Breiter HC, Aharon I, Kahneman D, Dale A, Shizgal P. Functional imaging of neural responses to expectancy and experience of monetary gains and losses. Neuron. 2001;30:619–639. doi: 10.1016/s0896-6273(01)00303-8.

- Cincotta CM, Seger CA. Dissociation between striatal regions while learning to categorize via feedback and via observation. J Cogn Neurosci. 2007;19:249–265. doi: 10.1162/jocn.2007.19.2.249.

- Cooper JC, Knutson B. Valence and salience contribute to nucleus accumbens activation. Neuroimage. 2008;39:538–547. doi: 10.1016/j.neuroimage.2007.08.009.

- Debener S, Ullsperger M, Siegel M, Fiehler K, von Cramon DY, Engel AK. Trial-by-trial coupling of concurrent electroencephalogram and functional magnetic resonance imaging identifies the dynamics of performance monitoring. J Neurosci. 2005;25:11730–11737. doi: 10.1523/JNEUROSCI.3286-05.2005.

- Deci EL, Eghrari H, Patrick BC, Leone DR. Facilitating internalization: the self-determination theory perspective. J Pers. 1994;62:119–142. doi: 10.1111/j.1467-6494.1994.tb00797.x.

- Delgado MR, Locke HM, Stenger VA, Fiez JA. Dorsal striatum responses to reward and punishment: effects of valence and magnitude manipulations. Cogn Affect Behav Neurosci. 2003;3:27–38. doi: 10.3758/cabn.3.1.27.

- Dreher JC, Kohn P, Berman KF. Neural coding of distinct statistical properties of reward information in humans. Cereb Cortex. 2006;16:561–573. doi: 10.1093/cercor/bhj004.

- Evans AC, Collins DL, Mills SR, Brown ED, Kelly RL, Peters TM. 3D statistical neuroanatomical models from 305 MRI volumes. IEEE Conf Rec; Nuclear Science Symposium and Medical Imaging Conference; 1993. pp. 1813–1817.

- Filoteo JV, Maddox WT. Category learning in Parkinson's disease. In: Sun M-K, editor. Research progress in Alzheimer's disease and dementia. Vol 3. New York: Nova Science Publishers; 2007. pp. 339–365.

- Franke G. Die Symptom-Checkliste von Derogatis. Göttingen, Germany: Beltz Test Gesellschaft; 1995.

- Gottfried JA, O'Doherty J, Dolan RJ. Encoding predictive reward value in human amygdala and orbitofrontal cortex. Science. 2003;301:1104–1107. doi: 10.1126/science.1087919.

- Hajcak G, Moser JS, Holroyd CB, Simons RF. The feedback-related negativity reflects the binary evaluation of good versus bad outcomes. Biol Psychol. 2006;71:148–154. doi: 10.1016/j.biopsycho.2005.04.001.

- Holroyd CB, Coles MGH. The neural basis of human error processing: reinforcement learning, dopamine, and the error related negativity. Psychol Rev. 2002;109:679–709. doi: 10.1037/0033-295X.109.4.679.

- Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J Neurosci. 2001;21:RC159(1–5). doi: 10.1523/JNEUROSCI.21-16-j0002.2001.

- Knutson B, Taylor J, Kaufman M, Peterson R, Glover G. Distributed neural representation of expected value. J Neurosci. 2005;25:4806–4812. doi: 10.1523/JNEUROSCI.0642-05.2005.

- LeDoux J. The emotional brain, fear, and the amygdala. Cell Mol Neurobiol. 2003;23:727–738. doi: 10.1023/A:1025048802629.

- Maddox WT, Ashby FG. Comparing decision bound and exemplar models of categorization. Percept Psychophys. 1993;53:49–70. doi: 10.3758/bf03211715.

- Maddox WT, Ashby FG, Bohil CJ. Delayed feedback effects on rule-based and information-integration category learning. J Exp Psychol Learn Mem Cogn. 2003;29:650–662. doi: 10.1037/0278-7393.29.4.650.

- Maddox WT, Love BC, Glass BD, Filoteo JV. When more is less: feedback effects in perceptual category learning. Cognition. 2008;108:578–589. doi: 10.1016/j.cognition.2008.03.010.

- McAuley E, Duncan T, Tammen VV. Psychometric properties of the intrinsic motivation inventory in a competitive sport setting: a confirmatory factor analysis. Res Q Exerc Sport. 1989;60:48–58. doi: 10.1080/02701367.1989.10607413.

- Mizuno K, Tanaka M, Ishii A, Tanabe HC, Onoe H, Sadato N, Watanabe Y. The neural basis of academic achievement motivation. Neuroimage. 2008;42:369–378. doi: 10.1016/j.neuroimage.2008.04.253.

- Nomura EM, Reber PJ. A review of medial temporal lobe and caudate contributions to visual category learning. Neurosci Biobehav Rev. 2008;32:279–291. doi: 10.1016/j.neubiorev.2007.07.006.

- O'Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr Opin Neurobiol. 2004;14:769–776. doi: 10.1016/j.conb.2004.10.016.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Poldrack RA, Clark J, Paré-Blagoev EJ, Shohamy D, Creso Moyano J, Myers C, Gluck MA. Interactive memory systems in the human brain. Nature. 2001;414:546–550. doi: 10.1038/35107080.

- Poldrack RA, Sabb FW, Foerde K, Tom SM, Asarnow RF, Bookheimer SY, Knowlton BJ. The neural correlates of motor skill automaticity. J Neurosci. 2005;25:5356–5364. doi: 10.1523/JNEUROSCI.3880-04.2005.

- Ridderinkhof KR, Ullsperger M, Crone EA, Nieuwenhuis S. The role of the medial frontal cortex in cognitive control. Science. 2004a;306:443–447. doi: 10.1126/science.1100301.

- Ridderinkhof KR, van den Wildenberg WP, Segalowitz SJ, Carter CS. Neurocognitive mechanisms of cognitive control: the role of prefrontal cortex in action selection, response inhibition, performance monitoring, and reward based learning. Brain Cogn. 2004b;56:129–140. doi: 10.1016/j.bandc.2004.09.016.

- Rodriguez PF, Aron AR, Poldrack RA. Ventral-striatal/nucleus-accumbens sensitivity to prediction errors during classification learning. Hum Brain Mapp. 2006;27:306–313. doi: 10.1002/hbm.20186.

- Ryan RM. Control and information in the intrapersonal sphere: an extension of cognitive evaluation theory. J Pers Soc Psychol. 1982;43:450–461.

- Ryan RM, Deci EL. Intrinsic and extrinsic motivations: classic definitions and new directions. Contemp Educ Psychol. 2000;25:54–67. doi: 10.1006/ceps.1999.1020.

- Schultz W. Behavioral theories and the neurophysiology of reward. Annu Rev Psychol. 2006;57:87–115. doi: 10.1146/annurev.psych.56.091103.070229.

- Schultz W. Behavioral dopamine signals. Trends Neurosci. 2007;30:203–210. doi: 10.1016/j.tins.2007.03.007.

- Schultz W, Dickinson A. Neural coding of prediction errors. Annu Rev Neurosci. 2000;23:473–500. doi: 10.1146/annurev.neuro.23.1.473.

- Seger CA. How do the basal ganglia contribute to categorization? Their roles in generalization, response selection, and learning via feedback. Neurosci Biobehav Rev. 2008;32:265–278. doi: 10.1016/j.neubiorev.2007.07.010.

- Seger CA, Cincotta CM. The roles of the caudate nucleus in human classification learning. J Neurosci. 2005;25:2941–2951. doi: 10.1523/JNEUROSCI.3401-04.2005.

- Smith BW, Mitchell DG, Hardin MG, Jazbec S, Fridberg D, Blair RJ, Ernst M. Neural substrates of reward magnitude, probability, and risk during a wheel of fortune decision-making task. Neuroimage. 2009;44:600–609. doi: 10.1016/j.neuroimage.2008.08.016.

- Tobler PN, O'Doherty JP, Dolan RJ, Schultz W. Reward value coding distinct from risk attitude-related uncertainty coding in human reward systems. J Neurophysiol. 2007;97:1621–1632. doi: 10.1152/jn.00745.2006.

- Worsley KJ, Marrett S, Neelin P, Vandal AC, Friston KJ, Evans AC. A unified statistical approach for determining significant signals in images of cerebral activation. Hum Brain Mapp. 1996;4:58–73. doi: 10.1002/(SICI)1097-0193(1996)4:1<58::AID-HBM4>3.0.CO;2-O.

- Yeung N, Sanfey AG. Independent coding of reward magnitude and valence in the human brain. J Neurosci. 2004;24:6258–6264. doi: 10.1523/JNEUROSCI.4537-03.2004.