Abstract

The involvement of facial mimicry in different aspects of human emotional processing is widely debated. However, little is known about relationships between voluntary activation of facial musculature and conscious recognition of facial expressions. To address this issue, we assessed severely motor-disabled patients with complete paralysis of voluntary facial movements due to lesions of the ventral pons [locked-in syndrome (LIS)]. Patients were required to recognize others' facial expressions and to rate their own emotional responses to presentation of affective scenes. LIS patients were selectively impaired in recognition of negative facial expressions, thus demonstrating that the voluntary activation of mimicry represents a high-level simulation mechanism crucially involved in explicit attribution of emotions.

Introduction

Darwin (1872) claimed that emotional experience is intensified by its behavioral expression and inhibited by its suppression. Several modern lines of evidence support this claim. For instance, subjects required to produce facial muscle contractions that covertly facilitate or inhibit smiling (e.g., holding a pen between the teeth or between the lips) report subjective experiences, or judge external stimuli, consistently with the facial mimicry imposed on them (for review, see Niedenthal, 2007; Oberman et al., 2007). Moreover, observing happy and angry faces has been shown to elicit a corresponding mimic response in the observer (Dimberg et al., 2000). This facial response is spontaneous (i.e., it occurs without external prompting or a goal to mimic) (Dimberg and Lundquist, 1988), unconscious (i.e., it occurs even when faces are presented subliminally), and rapid (i.e., it emerges within 1 s of face presentation) (Dimberg et al., 2000). Spontaneous mimicry thus differs from voluntary facial expressions, which are effortful and slow (Dimberg et al., 2002), are affected by contextual demands (Ekman, 1992), and involve different neurofunctional mechanisms (Ekman, 1992; Tassinary and Cacioppo, 2000; Morecraft et al., 2004).

The influence of facial mimicry on different aspects of emotional experience is consistent with theories positing that perceiving others' emotions requires reactivation of the sensorimotor system (embodied cognition) (Gallese, 2003; Goldman and Sripada, 2005; Adolphs, 2006; Niedenthal, 2007). For instance, spontaneous facial mimicry may facilitate immediate empathy through internal simulation of the observed emotion (Niedenthal, 2007). However, autistic individuals, whose spontaneous mimicry is impaired, perform as well as normal controls on tasks requiring recognition of facial expressions (Oberman et al., 2009). These findings may suggest that voluntary facial motility, which is spared in autistic individuals, contributes to explicit recognition of emotional facial expressions. This hypothesis fits with theoretical frameworks according to which understanding others' emotional states calls for both conscious and unconscious cognitive processes (Keysers and Gazzola, 2006; Goldman, 2009).

To directly test whether voluntary mimicry is involved in explicit recognition of emotional facial expressions, we studied patients with severe impairment of voluntary motility [i.e., locked-in syndrome (LIS)]. LIS patients show quadriplegia, mutism, and bilateral facial palsy with preserved vertical gaze and upper eyelid movements; consciousness and cognitive abilities are spared (Schnakers et al., 2008). LIS patients can interact with the environment only through eye-coded communication; despite this extreme degree of motor disability, they appear emotionally adapted to their severe physical condition (Cappa et al., 1985; Lulé et al., 2009). The ventral pontine lesion that causes LIS usually spares the neural structures involved in producing spontaneous facial expressions (Hopf et al., 1992; Töpper et al., 1995). Given this dissociation between voluntary (abolished) and involuntary (spared) facial mimicry, our hypothesis predicts that LIS patients should be impaired in consciously recognizing emotional facial expressions, despite preserved emotional responses to evocative scenes not involving faces.

Materials and Methods

Participants.

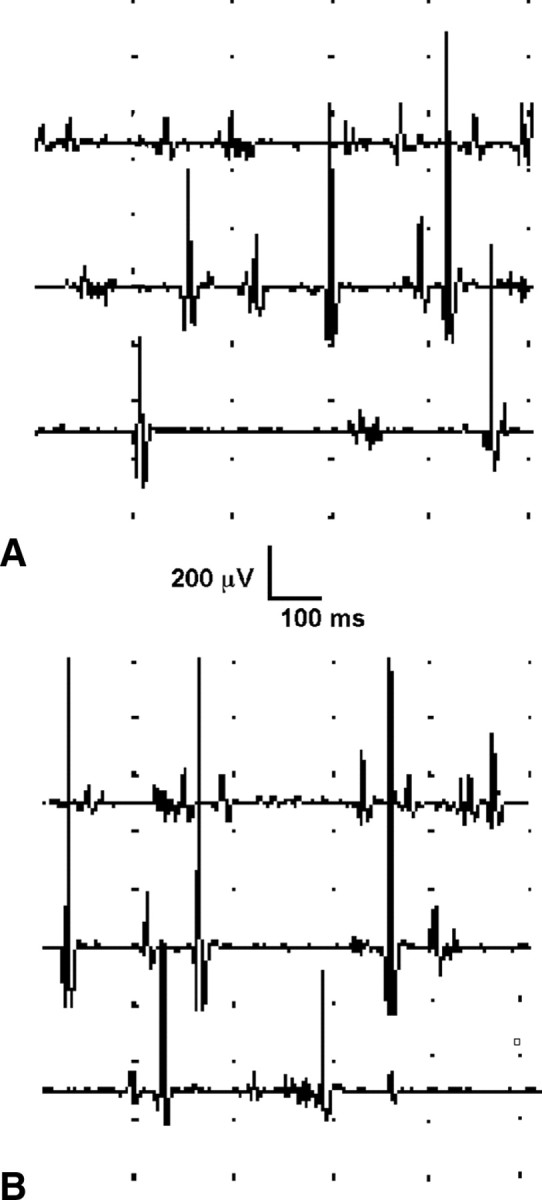

Seven LIS inpatients (mean age = 49 years; SD = 13.7; range, 26–66; mean education = 11 years; SD = 3.8; range, 5–17) of the Post Coma and Rehabilitation Care San Raffaele Unit (Cassino, Italy) were included in the study; all patients were tested several months after onset (Table 1) and were in stable clinical condition. Consistent with the diagnosis of pure LIS (Bauer et al., 1979), all patients showed complete loss of voluntary verbal and motor output, with the exception of vertical eye movements and blinking. In particular, LIS patients could not produce voluntary facial movements; this clinical impression was confirmed in all patients by electromyographic recordings from facial muscles obtained according to standard procedures. At rest, all patients showed diffuse fibrillation potentials compatible with a lower motor neuron lesion; when patients were asked to perform facial movements voluntarily, no appreciable recruitment of motor unit action potentials could be recorded, consistent with damage to the descending motor pathways (Fig. 1). Because none of the enrolled patients showed gaze deviation or involuntary ocular movements, all could communicate reliably via an eye-response code (upward eye movements meaning affirmative responses and downward movements meaning negative responses). By these means, we could ascertain that they had preserved vision on confrontation testing, a finding further confirmed by normal visual-evoked potentials. Disorders of visual cognition that could interfere with the experimental tasks were excluded by patients' normal performance on tests of visuospatial intelligence (Raven's colored progressive matrices; Caltagirone et al., 1995) and face perception (Benton facial recognition test; Benton et al., 1992) (Table 1).

Table 1.

Demographic and cognitive characteristics of patients

| Patient no. | Sex | Age (years) | Cause | Time from injury (months) | Education (years) | RCPM | BFRT |
|---|---|---|---|---|---|---|---|
| 1 | F | 33 | Posttraumatic BA thrombosis | 13 | 8 | 25 | 45 |
| 2 | M | 46 | Spontaneous BA thrombosis | 39 | 17 | 27 | 47 |
| 3 | F | 59 | Brainstem hemorrhage | 11 | 13 | 26 | 43 |
| 4 | M | 57 | Spontaneous BA thrombosis | 9 | 13 | 23 | 46 |
| 5 | F | 26 | Spontaneous BA thrombosis | 6 | 13 | 26 | 49 |
| 6 | F | 57 | Spontaneous BA thrombosis | 16 | 5 | 21 | 44 |
| 7 | M | 66 | Spontaneous BA thrombosis | 9 | 17 | 23 | 48 |

All scores were within the normal range after adjustment for age and education according to Italian normative studies. BA, basilar artery; RCPM, Raven's colored progressive matrices; BFRT, Benton facial recognition test.

Figure 1.

A, B, Electromyographic recording from the orbicularis oris in subject 7, obtained at rest (A) and during intended contraction (B). Like all patients, subject 7 showed fibrillation potentials at rest, without appreciable recruitment of motor units during intended contraction.

Although LIS patients could not produce voluntary facial movements on verbal request or by imitation, environmental stimuli could sometimes trigger laughing or crying responses consistent with the context in all of them. These spontaneous emotional expressions were not pathological laughing and crying episodes, in which stereotyped and uncontrollable facial mimicry is precipitated by nonspecific stimuli and does not correlate with the patient's feelings (Sacco et al., 2008). At the time of testing, no patient showed signs of anxiety or depression, as assessed on clinical grounds and by means of an adapted version of the Hospital Anxiety and Depression Scale (HADS) (Zigmond and Snaith, 1983), a questionnaire specifically designed to assess psychological distress in patients with severe disabilities. The HADS provides separate anxiety and depression scores, each ranging from 0 to 21: scores of 7 or less are considered non-cases, scores of 8–10 indicate possible distress, and scores of 11 or above correspond to clinical levels of distress (Zigmond and Snaith, 1983). In the present study, both HADS anxiety and depression scores were well within the normal range (mean anxiety score = 5.57, SD = 1.13; mean depression score = 6, SD = 1.19). Accordingly, no patient was receiving psychopharmacological treatment.
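The three HADS bands described above amount to a simple threshold rule on each subscale. The sketch below applies that rule to a single integer subscale score; the function name `hads_band` and the band labels are illustrative choices, not part of the HADS manual. The bands classify individual scores, so the group means reported above are summaries rather than classifications.

```python
def hads_band(score: int) -> str:
    """Map one HADS subscale score (anxiety or depression, 0-21) to its band."""
    if not 0 <= score <= 21:
        raise ValueError("HADS subscale scores range from 0 to 21")
    if score <= 7:
        return "non-case"           # 0-7
    if score <= 10:
        return "possible distress"  # 8-10
    return "clinical distress"      # 11-21


# Illustrative boundary scores only, not patient data
print([hads_band(s) for s in (0, 7, 8, 10, 11, 21)])
```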

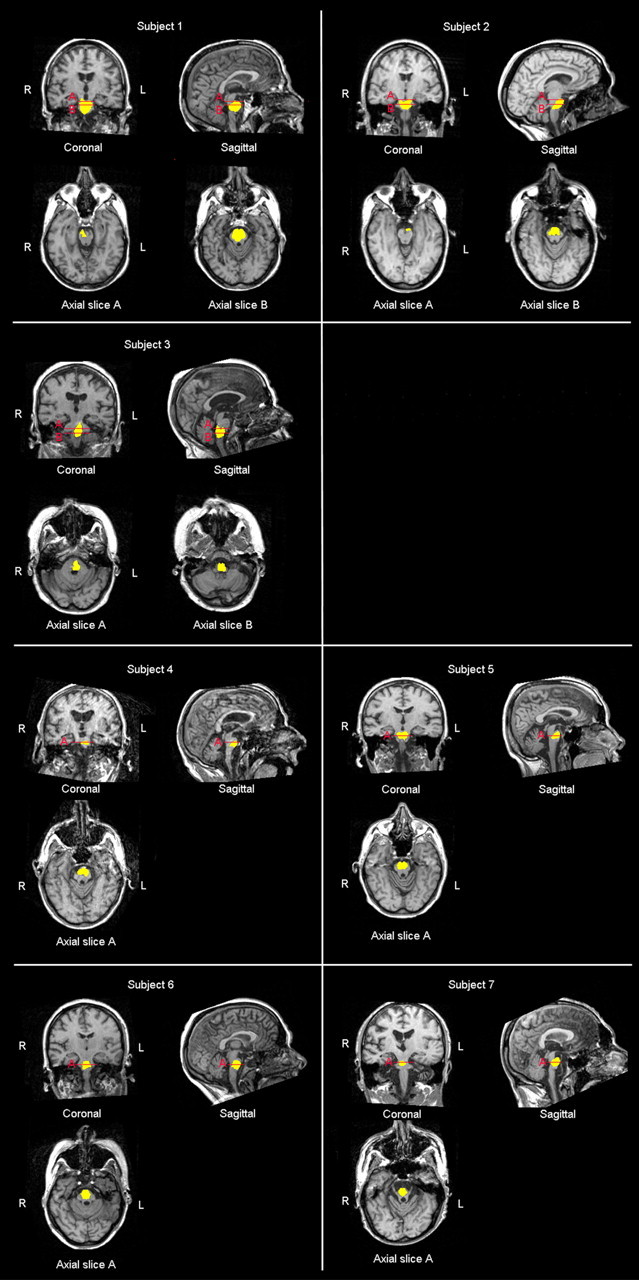

Whole-brain magnetic resonance (MR) imaging showed that all enrolled patients had a lesion confined to the brainstem. The pontine lesion responsible for LIS was ischemic in six cases, due to posttraumatic (patient 1) or spontaneous (patients 2, 4, 5, 6, and 7) basilar artery thrombosis; in one case it was due to bleeding from a brainstem cavernous malformation (patient 3) (Fig. 2). Although arterial hypertension had been previously diagnosed in five cases (patients 2, 3, 4, 6, and 7) and diabetes mellitus in one (patient 7), no patient showed additional cortical or subcortical lesions.

Figure 2.

MR scans showing that subjects 1 and 2 had brainstem lesions involving the midbrain and the pons, subject 3 had a lesion involving the pons and the medulla oblongata, and the remaining subjects had lesions selectively involving the pons.

Quantitative analysis of MR data (ImageJ 1.41; National Institutes of Health) revealed that patients 1 and 2 had brainstem lesions involving the midbrain and the pons, patient 3 had a lesion extending over the pons and the medulla oblongata, and the remaining patients had a selective pontine lesion. Volumetric measurements of the lesion in each patient are listed in Table 2.

Table 2.

Quantitative analysis of MR data in single patients

| Subject | Brainstem substructure | Lesion volume (mm³) | Healthy volume (mm³) | Cumulative volume (mm³) | Lesion volume percentage (%) |
|---|---|---|---|---|---|
| 1 | Midbrain | 253 | 5286 | 5539 | 4.57 |
| | Pons | 8199 | 6988 | 15,187 | 53.99 |
| | Medulla oblongata | 0 | 4015 | 4015 | — |
| 2 | Midbrain | 248 | 9745 | 9993 | 2.48 |
| | Pons | 4696 | 8568 | 13,264 | 35.40 |
| | Medulla oblongata | 0 | 5081 | 5081 | — |
| 3 | Midbrain | 0 | 5263 | 5263 | — |
| | Pons | 3119 | 14,148 | 17,267 | 18.06 |
| | Medulla oblongata | 2120 | 6939 | 9059 | 23.40 |
| 4 | Midbrain | 0 | 4707 | 4707 | — |
| | Pons | 2417 | 11,289 | 13,706 | 17.63 |
| | Medulla oblongata | 0 | 3310 | 3310 | — |
| 5 | Midbrain | 0 | 6844 | 6844 | — |
| | Pons | 2950 | 11,035 | 13,985 | 21.09 |
| | Medulla oblongata | 0 | 3249 | 3249 | — |
| 6 | Midbrain | 0 | 6288 | 6288 | — |
| | Pons | 3610 | 7952 | 11,562 | 31.22 |
| | Medulla oblongata | 0 | 3209 | 3209 | — |
| 7 | Midbrain | 0 | 3964 | 3964 | — |
| | Pons | 3500 | 7083 | 10,583 | 33.07 |
| | Medulla oblongata | 0 | 4593 | 4593 | — |
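The last column of Table 2 is consistent with lesion volume divided by cumulative (lesion + healthy) volume, times 100; the text does not state this formula, so it is inferred from the tabulated values. The sketch below recomputes the percentages for every nonzero lesion in Table 2; the dictionary name `TABLE2` and the function `lesion_percentage` are hypothetical.

```python
# (lesion, healthy) volumes in mm^3, copied from Table 2 (nonzero lesions only)
TABLE2 = {
    (1, "Midbrain"): (253, 5286),
    (1, "Pons"): (8199, 6988),
    (2, "Midbrain"): (248, 9745),
    (2, "Pons"): (4696, 8568),
    (3, "Pons"): (3119, 14148),
    (3, "Medulla oblongata"): (2120, 6939),
    (4, "Pons"): (2417, 11289),
    (5, "Pons"): (2950, 11035),
    (6, "Pons"): (3610, 7952),
    (7, "Pons"): (3500, 7083),
}


def lesion_percentage(lesion_mm3: float, healthy_mm3: float) -> float:
    """Lesion volume as a percentage of the cumulative (lesion + healthy) volume."""
    cumulative = lesion_mm3 + healthy_mm3
    return 100.0 * lesion_mm3 / cumulative


for (subject, region), (lesion, healthy) in TABLE2.items():
    print(f"Subject {subject}, {region}: cumulative = {lesion + healthy} mm^3, "
          f"lesion = {lesion_percentage(lesion, healthy):.2f}%")
```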

Twenty right-handed healthy subjects, matched to patients for age, sex, and education (mean age = 48.6 years; SD = 12.8; range, 27–65; mean education = 12.5 years; SD = 4.9; range, 5–18) and free from neurological or psychiatric disorders, performed the experimental tasks as normal controls.

The study was conducted in accordance with the ethical standards of the 1964 Declaration of Helsinki, and informed consent was obtained from all participants.

Experimental tasks

Task 1: Recognition of facial expressions.

Photographs of 10 actors (5 males, 5 females) were taken from the Ekman and Friesen (1976) set of pictures of facial affect. Each model posed facial expressions corresponding to six basic emotions, i.e., happiness, sadness, anger, fear, disgust, and surprise; the complete image set included 60 stimuli (10 items × 6 emotions).

For each stimulus, subjects were required to choose the expressed emotion among six labels (i.e., happiness, sadness, anger, fear, disgust, and surprise) and then to rate its intensity on a 1–9 Likert scale (1 = none, 5 = moderate, 9 = extreme).

Task 2: Judgment of emotionally evocative scenes.

Stimuli consisted of complex pictures selected from the International Affective Picture System (IAPS) (Lang et al., 1997). Stimulus selection was based on the results of a pilot study in which 80 university students assigned each of 200 IAPS scenes to one of six basic emotion labels (i.e., happiness, sadness, anger, fear, disgust, and surprise). In the present study, we used only images that at least 70% of pilot participants assigned to the same emotion; on this basis, we had to exclude stimuli intended to elicit surprise, because no item in this category reached the required consistency level. The resulting image set included 30 stimuli (6 items × 5 emotions): happiness was represented by scenes involving babies or sporting events, sadness by cemeteries or funerals, anger by guns or scenes of human violence, fear by snakes or spiders, and disgust by rubbish or rats. Each stimulus was presented twice, for a total of 60 trials.
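The selection rule above (keep a scene only if at least 70% of pilot raters chose the same label, and build a set of six scenes per emotion) can be sketched as follows. The text does not say how the six items were picked among qualifying scenes or how a category with too few qualifying scenes was handled; this sketch assumes the most consistently labeled scenes are kept and categories with fewer than six are dropped, as happened to surprise. The function `build_stimulus_set`, scene IDs, and rater data are hypothetical.

```python
from collections import Counter, defaultdict


def build_stimulus_set(assignments, threshold=0.70, per_category=6):
    """assignments: {scene_id: [label chosen by each pilot rater]}.

    Returns {emotion: [scene_id, ...]} with `per_category` scenes per emotion,
    using only scenes whose modal label reached `threshold` agreement.
    """
    by_label = defaultdict(list)
    for scene_id, labels in assignments.items():
        label, count = Counter(labels).most_common(1)[0]
        agreement = count / len(labels)
        if agreement >= threshold:
            by_label[label].append((agreement, scene_id))

    stimulus_set = {}
    for label, items in by_label.items():
        # Assumption: drop categories with too few qualifying scenes and keep
        # the most consistently labeled ones otherwise.
        if len(items) >= per_category:
            items.sort(reverse=True)
            stimulus_set[label] = [scene_id for _, scene_id in items[:per_category]]
    return stimulus_set
```

With 80 real raters and 200 scenes the same function applies unchanged; only the `assignments` dictionary grows.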

Subjects were required to indicate which emotion each stimulus evoked in them by choosing one of five options (i.e., happiness, sadness, anger, fear, and disgust), and to rate the strength of their emotional experience on a 1–9 Likert scale (1 = not at all, 5 = moderately, 9 = extremely).

Procedure.

During both experimental tasks, participants were seated 60 cm from a computer screen in a quiet room. Care was taken to make the stimuli of the two tasks as similar as possible in subtended visual angle, with their widest axis arranged vertically (maximum visual angle: 12°); all stimuli remained on the monitor until subjects gave their response. For each stimulus, subjects had to select one of the emotion labels appearing in a single row at the bottom of the screen; the horizontal order of the labels changed across stimuli. For the intensity rating, participants chose the number corresponding to the perceived intensity among nine numbers displayed horizontally below the row of emotion labels; for data analysis, only ratings on correctly recognized emotions were taken into account. Each task was preceded by specific verbal instructions and by four practice trials (not included in the data analysis).
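The 12° limit at a 60 cm viewing distance translates into a physical stimulus height through the standard visual-angle relation, angle = 2·atan(size / (2·distance)). The sketch below computes both directions; the function names are hypothetical, and the physical stimulus size is not reported in the text, so the output is only the size implied by these two numbers (about 12.6 cm).

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an object of a given size."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Object size (cm) that subtends a given visual angle."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


max_size = size_for_angle_cm(12.0, 60.0)
print(f"{max_size:.1f} cm")  # 12.6 cm
```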

Stimulus presentation was identical for patients and healthy controls, but normal subjects gave their responses verbally, whereas patients used the eye communication code (upward eye movements meaning a “yes” response and downward movements meaning a “no” response) to choose among the alternatives pointed to by the examiner (all alternatives were pointed to on every trial, to ensure that patients had explored the whole array and chosen the response they preferred). Patients' responses were recorded by an expert examiner who was specifically trained to decode eye movements and blind to the purposes and predictions of the experiment. For both patients and controls, the examiner recorded responses by pressing the corresponding keys on the computer keyboard.

Each patient completed the two tasks in separate sessions each lasting ∼50 min, whereas control participants were tested in a single session lasting ∼1 h; task order was counterbalanced across subjects.

Results

Task 1: Recognition of facial expressions

Percentages of correct responses are shown in Figure 3A. A two-way mixed ANOVA, with emotion (disgust, happiness, fear, anger, surprise, and sadness) as a within-subject factor and group as a between-subject factor, revealed a significant main effect of emotion [F(5,125) = 28.405, p = 0.0001], with recognition of fear (0.57) being worse than that of all other emotions (disgust = 0.79; happiness = 0.99; anger = 0.75; surprise = 0.91; sadness = 0.72). There was also a main effect of group [F(1,25) = 19.608, p = 0.0001], with overall accuracy being lower in patients (0.71) than in normal controls (0.87). Importantly, we found a significant interaction between emotion and group [F(5,125) = 4.085, p = 0.002].
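A two-way mixed ANOVA partitions variance into a between-subject part (group vs subjects within groups) and a within-subject part (emotion, emotion × group, residual error). The pure-Python sketch below shows that partition; it assumes every subject has one score per emotion, applies no sphericity correction (none is mentioned in the text), and returns only F ratios with their degrees of freedom. With 7 patients, 20 controls, and six emotions it yields the df reported above, (1, 25) and (5, 125). p values would additionally need an F-distribution survival function such as `scipy.stats.f.sf(F, dfn, dfd)`, which the standard library lacks. The function `mixed_anova` and its input layout are hypothetical.

```python
from statistics import fmean


def mixed_anova(groups):
    """Two-way mixed ANOVA (one between-subject, one within-subject factor).

    groups: list of groups; each group is a list of subjects; each subject is a
    list of scores, one per within-subject level, in the same order for all.
    Returns {effect: (F, df_effect, df_error)}. No sphericity correction.
    """
    subjects = [s for g in groups for s in g]
    a, n, b = len(groups), len(subjects), len(subjects[0])
    grand = fmean(x for s in subjects for x in s)

    ss_total = sum((x - grand) ** 2 for s in subjects for x in s)
    # Between-subject partition: group effect + subjects-within-groups error
    ss_subjects = b * sum((fmean(s) - grand) ** 2 for s in subjects)
    ss_group = b * sum(len(g) * (fmean(x for s in g for x in s) - grand) ** 2
                       for g in groups)
    ss_err_between = ss_subjects - ss_group
    # Within-subject partition: within factor + interaction + residual error
    level_means = [fmean(s[j] for s in subjects) for j in range(b)]
    ss_within = n * sum((m - grand) ** 2 for m in level_means)
    ss_cells = sum(len(g) * (fmean(s[j] for s in g) - grand) ** 2
                   for g in groups for j in range(b))
    ss_inter = ss_cells - ss_group - ss_within
    ss_err_within = ss_total - ss_subjects - ss_within - ss_inter

    df_g, df_eb = a - 1, n - a
    df_w, df_i, df_ew = b - 1, (a - 1) * (b - 1), (n - a) * (b - 1)
    ms_eb, ms_ew = ss_err_between / df_eb, ss_err_within / df_ew
    return {
        "group": (ss_group / df_g / ms_eb, df_g, df_eb),
        "within": (ss_within / df_w / ms_ew, df_w, df_ew),
        "interaction": (ss_inter / df_i / ms_ew, df_i, df_ew),
    }
```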

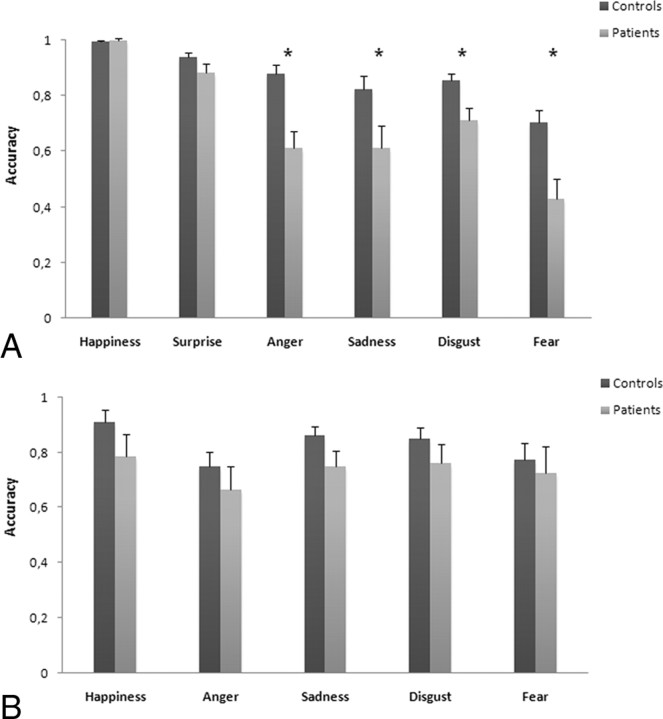

Figure 3.

A, B, Mean accuracy (SEs) of controls and patients on explicit recognition of six facial expressions (A) and on judgments of emotionally evocative scenes (B). *Significant differences at p < 0.05.

Post hoc comparisons (paired t tests) performed on the main effect of emotion showed that recognition of happiness was significantly easier than all other emotions (p < 0.0001), followed by surprise (p < 0.001 vs remaining emotions). No significant differences were detected in recognition of anger, sadness, or disgust (p > 0.05), whereas recognition of fear was significantly worse than all other emotions (p < 0.0001).

Post hoc comparisons (unpaired t tests) on the emotion × group interaction showed that patients were significantly less accurate than normal controls in recognizing disgust (t = 2.755; p = 0.011), fear (t = 3.348; p = 0.003), anger (t = 4.041; p = 0.0001), and sadness (t = 2.261; p = 0.033), whereas the two groups did not differ in recognizing happiness (t = −0.584; p = 0.564) or surprise (t = 1.562; p = 0.131).
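The post hoc tests above use two t statistics: a paired t for within-subject contrasts between emotions and an unpaired t for patient vs control contrasts (df = 7 + 20 − 2 = 25). The sketch below assumes Student's pooled-variance form for the unpaired test; the text does not say whether a Welch correction was used. Function names are hypothetical, and p values would again need a t-distribution CDF.

```python
import math
from statistics import mean, stdev, variance


def unpaired_t(x, y):
    """Student's two-sample t with pooled variance; returns (t, df)."""
    nx, ny = len(x), len(y)
    pooled = ((nx - 1) * variance(x) + (ny - 1) * variance(y)) / (nx + ny - 2)
    t = (mean(x) - mean(y)) / math.sqrt(pooled * (1 / nx + 1 / ny))
    return t, nx + ny - 2


def paired_t(x, y):
    """Paired t on within-subject differences x[i] - y[i]; returns (t, df)."""
    if len(x) != len(y):
        raise ValueError("paired samples must have equal length")
    diffs = [a - b for a, b in zip(x, y)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1
```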

Because apparently selective impairments in recognizing specific emotions may reflect differences in task difficulty across emotions (Rapcsak et al., 2000), we checked whether such a bias could account for LIS patients' impairment in recognizing negative emotions. This required well-established difficulty ratings for facial emotion stimuli; the percentage of correct judgments for each stimulus reported in the original study by Ekman and Friesen (1976) can be considered the most solid data for this purpose. We entered these data as a covariate in separate one-way ANCOVAs comparing correct responses of LIS patients and normal controls for each of the six emotion types (disgust, happiness, fear, anger, surprise, and sadness). LIS patients were significantly less accurate than controls in recognizing fear [F(1,19) = 10.517, p = 0.005], anger [F(1,19) = 12.637, p = 0.002], and sadness [F(1,19) = 4.839, p = 0.042], but not disgust [F(1,19) = 2.284, p = 0.149], happiness [F(1,19) = 0.987, p = 0.334], or surprise [F(1,19) = 1.027, p = 0.325].
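The text does not state the unit of analysis for these ANCOVAs, so the sketch below only illustrates the classic one-way ANCOVA with a common slope: the adjusted group effect is the drop in residual sum of squares when group intercepts are added to a covariate-only regression. In this study the covariate would be the per-stimulus accuracy from Ekman and Friesen (1976); the function names and the toy values in the test are hypothetical.

```python
from statistics import fmean


def _sums(pairs):
    """Centered sums of squares and products (Sxx, Sxy, Syy) for (x, y) pairs."""
    mx = fmean(x for x, _ in pairs)
    my = fmean(y for _, y in pairs)
    sxx = sum((x - mx) ** 2 for x, _ in pairs)
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    syy = sum((y - my) ** 2 for _, y in pairs)
    return sxx, sxy, syy


def one_way_ancova(groups):
    """F test for a group effect adjusted for one covariate (common slope).

    groups: list of groups, each a list of (covariate, outcome) pairs.
    Returns (F, df_effect, df_error).
    """
    all_pairs = [p for g in groups for p in g]
    n, k = len(all_pairs), len(groups)
    sxx_t, sxy_t, syy_t = _sums(all_pairs)
    within = [_sums(g) for g in groups]
    sxx_w = sum(w[0] for w in within)
    sxy_w = sum(w[1] for w in within)
    syy_w = sum(w[2] for w in within)
    # Residual SS of the covariate-only model and of the group + covariate model
    ss_reduced = syy_t - sxy_t ** 2 / sxx_t
    ss_full = syy_w - sxy_w ** 2 / sxx_w
    df_effect, df_error = k - 1, n - k - 1
    f = ((ss_reduced - ss_full) / df_effect) / (ss_full / df_error)
    return f, df_effect, df_error
```

A group difference fully explained by the covariate yields a small F, whereas a difference that persists after adjustment yields a large one.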

Intensity ratings (on correctly recognized emotions) were analyzed by a two-way mixed ANOVA, with emotion (disgust, happiness, fear, anger, surprise, and sadness) as a within-subject factor and group as a between-subject factor. Results showed a significant main effect of emotion [F(5,125) = 6.154, p = 0.0001], with fearful faces (6.50) being rated as more intense than all other expressions (happiness = 6.16, disgust = 5.72, anger = 5.50, surprise = 5.94, sadness = 5.65). In contrast, neither the main effect of group [F(1,25) = 0.392, p = 0.537] nor the emotion × group interaction [F(5,125) = 1.081, p = 0.374] was statistically significant.

Post hoc comparisons (paired t tests) on the main effect of emotion showed that intensity ratings were significantly higher for fear than for all other emotions (p < 0.015), followed by happiness and surprise, which were rated as significantly more intense than anger, sadness, or disgust (p < 0.040). Finally, ratings of sad and disgusted expressions did not differ significantly from each other (p > 0.05).
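Because intensity ratings were analyzed only on correctly recognized trials, each subject's per-emotion intensity score is a conditional mean. The sketch below builds those means from trial records; the tuple layout and the function name are hypothetical. An emotion with no correct trial for a subject simply has no entry, which would leave a missing cell in the ANOVA; the text does not say how such cases were handled.

```python
from collections import defaultdict
from statistics import fmean


def mean_intensity_on_correct(trials):
    """trials: iterable of (true_emotion, chosen_emotion, intensity_1_to_9).

    Returns {emotion: mean intensity over correctly recognized trials}.
    """
    ratings = defaultdict(list)
    for true_emotion, chosen, intensity in trials:
        if chosen == true_emotion:  # keep only correctly recognized trials
            ratings[true_emotion].append(intensity)
    return {emotion: fmean(vals) for emotion, vals in ratings.items()}
```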

Finally, to verify associations among emotion recognition performance, demographic (age and education) and clinical (time from injury) variables, volumetric measurements, and cognitive functioning (performance on Raven's colored progressive matrices and the Benton facial recognition test), we performed Spearman's rank correlation tests. No significant correlations emerged among the considered factors (p > 0.05).
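Spearman's rho is the Pearson correlation of the ranks, with tied values given their average rank. A minimal standard-library sketch follows (Python 3.12+ also offers `statistics.correlation(x, y, method="ranked")`); the function names are hypothetical and no p value is computed.

```python
import math
from statistics import fmean


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average 1-based rank of the tie block
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Pearson correlation computed on average ranks."""
    rx, ry = average_ranks(x), average_ranks(y)
    mx, my = fmean(rx), fmean(ry)
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / math.sqrt(var_x * var_y)
```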

Task 2: Judgment of emotionally evocative scenes

Percentages of correct responses are shown in Figure 3B. A two-way mixed ANOVA, with emotion (disgust, happiness, fear, anger, and sadness) as a within-subject factor and group as a between-subject factor, revealed no significant main effect of emotion [F(4,100) = 1.812, p = 0.132] or group [F(1,25) = 2.727, p = 0.111]. Likewise, the interaction between emotion and group was not significant [F(1,25) = 0.187, p = 0.669]. Thus, patients and normal controls judged the emotionally evocative scenes with equivalent accuracy, and accuracy did not differ among the five emotions.

Intensity ratings (on correct responses) were analyzed by a two-way mixed ANOVA, with emotion (disgust, happiness, fear, anger, and sadness) as a within-subject factor and group as a between-subject factor. As with accuracy, there were no significant main effects of emotion [F(4,100) = 1.183, p = 0.323] or group [F(1,25) = 0.308, p = 0.584], and the interaction between emotion and group was not significant [F(4,100) = 0.851, p = 0.496].

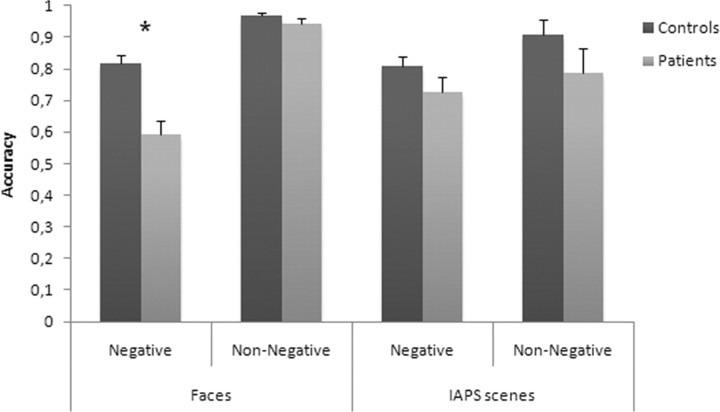

Analysis of face versus scene tasks

To confirm that LIS patients had a selective impairment in recognizing negative facial emotions, we performed an overall ANOVA with task as a within-subject factor. For this analysis, we collapsed emotion types into two categories, negative (disgust, fear, anger, and sadness) and non-negative (happiness and surprise) (Ashwin et al., 2006), because the number of basic emotions differed between the face and scene tasks. The resulting design was a three-way mixed ANOVA with group (LIS vs control) as a between-subject factor, and emotion valence (negative vs non-negative) and task (faces vs scenes) as within-subject factors. This analysis showed significant main effects of emotion valence [F(1,25) = 32.733, p = 0.0001], with recognition of negative emotions being worse (0.83) than that of non-negative emotions (0.91), and of group [F(1,25) = 10.968, p = 0.003], with patients being less accurate (0.76) than normal controls (0.88). In contrast, the main effect of task was not significant [F(1,25) = 0.405, p = 0.530], since overall accuracy did not differ between the face (0.83) and scene (0.81) tasks. Moreover, we found a significant first-order interaction between emotion valence and task [F(1,25) = 19.645, p = 0.0001], but not between task and group [F(1,25) = 0.089, p = 0.767] or between emotion valence and group [F(1,25) = 1.909, p = 0.179]. More relevant here, the second-order interaction among emotion valence, task, and group was statistically significant [F(1,25) = 9.486, p = 0.005].
Post hoc comparisons (unpaired t tests) on this task × emotion valence × group interaction showed that LIS patients were significantly less accurate than normal controls in recognizing negative (t = 4.488, p = 0.0001) but not non-negative (t = 1.345, p = 0.191) facial expressions, whereas LIS patients did not differ from controls in recognizing either negative (t = 1.485, p = 0.150) or non-negative (t = 1.326, p = 0.197) IAPS scenes (see Fig. 4).
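The collapsing step above turns per-emotion accuracies into one negative and one non-negative score per subject and task before the three-way ANOVA. The sketch below assumes an unweighted mean over the emotions present, which equals the pooled proportion here because each emotion has the same number of trials within a task; in the scene task, where surprise is absent, the non-negative score reduces to happiness alone. Names are hypothetical.

```python
from statistics import fmean

NEGATIVE = {"disgust", "fear", "anger", "sadness"}
NON_NEGATIVE = {"happiness", "surprise"}


def collapse_by_valence(accuracy_by_emotion):
    """accuracy_by_emotion: {emotion: proportion correct} for one subject and task.

    Returns {"negative": mean, "non-negative": mean} over the emotions present.
    """
    out = {}
    for valence, members in (("negative", NEGATIVE), ("non-negative", NON_NEGATIVE)):
        present = [acc for emotion, acc in accuracy_by_emotion.items() if emotion in members]
        out[valence] = fmean(present)
    return out
```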

Figure 4.

Mean scores (SEs) of controls and patients on recognition of facial expressions and IAPS scenes for the negative and non-negative valence emotions. *Significant differences at p < 0.05.

To characterize the errors made by patients in recognizing facial emotions, we also checked whether patients tended to misattribute facial expressions within a valence category (negative or positive, e.g., misidentifying fear as disgust or happiness as surprise) or between the positive and negative categories (e.g., misidentifying fear as surprise). In patients, within-category errors on negative facial emotions (70 of 114; 61%) were more frequent than between-category errors (39%), whereas within- and between-category errors were similarly frequent in healthy controls (51% vs 49%). However, the distribution of these errors did not differ significantly between patients and controls (two-tailed Fisher's exact test, p = 0.11). The distribution of within- and between-category errors for positive emotions was virtually identical in patients and controls.
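A two-tailed Fisher's exact test on a 2 × 2 table (group × error type) fixes the row and column totals and sums the hypergeometric probabilities of all tables no more likely than the observed one. In the analysis above the patients' row would be 70 within-category and 44 between-category errors, but the controls' counts are not reported, so the sketch is checked on textbook tables instead. The function name is hypothetical; `scipy.stats.fisher_exact` provides the same test.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    With row and column totals fixed, the top-left cell follows a
    hypergeometric distribution; the p value sums the probabilities of all
    tables that are no more likely than the observed one.
    """
    row1, row2, col1 = a + b, c + d, a + c
    total = comb(row1 + row2, col1)

    def prob(x):
        # P(top-left cell == x | fixed margins)
        return comb(row1, x) * comb(row2, col1 - x) / total

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are counted
    return sum(p for p in map(prob, range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))
```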

Discussion

In the present study, LIS patients were significantly less accurate than normal controls in recognizing negative emotional facial expressions and tended to confuse negative emotions with each other; the dissociation between recognition of negative and non-negative emotions was not found with IAPS scenes. Some authors have suggested that a selective defect in recognizing specific emotional faces could reflect different difficulty levels across emotions (Rapcsak et al., 2000). However, an analysis taking into account stimulus difficulty, as reported by Ekman and Friesen (1976), confirmed that patients were significantly less accurate than controls in recognizing negative expressions (with the exception of disgust). Moreover, differences in difficulty between the face and scene tasks could not explain our findings, because the three-way ANOVA did not reveal a significant effect of task but did reveal a significant interaction among task, group, and emotion valence. Therefore, the impaired explicit recognition of negative facial expressions in LIS patients appears to be a solid finding that can elucidate the role of sensorimotor activation in emotion attribution.

Simulative theories posit that reenactment of the sensory and motor components of emotional experience contributes to the attribution of emotions to others (Niedenthal, 2007). A fundamental assumption of simulative models is that brain-damaged patients should show co-occurring deficits in producing and recognizing the very same emotion (i.e., paired deficits) (Goldman and Sripada, 2005; Niedenthal, 2007). However, this is not a systematic finding, suggesting that simulation alone is not sufficient to explain emotion attribution (Keysers and Gazzola, 2006). Indeed, it has been argued that emotion attribution cannot be restricted to simulation and implies additional explicit cognitive computations (Keysers and Gazzola, 2006). These cognitive processes are well captured by the concept of theory of mind (or mentalizing), a set of cognitive abilities that allow subjects to think about the minds (e.g., beliefs, desires, emotions) of others (Frith and Frith, 1999).

The present demonstration that impaired voluntary mimicry affects conscious recognition of facial expressions, without hampering adequate emotional responses to complex scenes, does not fit with the assumption of paired deficits predicted by “pure” simulative theories (Goldman and Sripada, 2005). Instead, it is consistent with hybrid theories according to which simulation and mentalizing contribute to different aspects of mind-reading (Keysers and Gazzola, 2006; Goldman, 2009). In such frameworks, implicit sharing and understanding of others' states (low-level mind-reading) is ensured by simulation, likely subserved by the mirror neuron system (Gallese, 2003), whereas conscious, explicit reflection on these contents would require high-level mind-reading processes (Keysers and Gazzola, 2006; Goldman, 2009). Our findings provide direct support for the existence of high-level simulation mechanisms playing a crucial role in the explicit attribution of emotions.

It remains to be determined whether central or peripheral motor mechanisms are involved in emotion attribution (Calder et al., 2000; Keillor et al., 2002). A lesion of the ventral pons, as in LIS patients, interrupts efferent pathways but also interferes with functional connections linking frontal, parietal, and limbic cortex with the cerebellum via the pontine nuclei. Indeed, cerebellar dysfunction has been demonstrated in chronic LIS patients (Laureys et al., 2005), and cerebellar dysfunction can give rise to a complex syndrome characterized by cognitive and affective disorders (Schmahmann and Sherman, 1998). The functional interruption of parieto-cerebellar connections might abolish forward mechanisms that predict the consequences of movements (Blakemore and Decety, 2001; Blakemore and Sirigu, 2003), a defect contributing to severe motor imagery deficits in LIS patients (Conson et al., 2008). Analogously, functional impairment of corticocerebellar circuits could account for the selective impairment in explicit recognition of emotional facial expressions, a process that mainly requires activation of frontal structures (Lewis et al., 2007; Yamada et al., 2009). An impairment of these central neural mechanisms would therefore interfere with the high-level simulative processes involved in emotion attribution. This hypothesis would also account for the lack of emotion recognition defects in patients with either congenital (Mobius syndrome) (Calder et al., 2000) or acquired (due to Guillain-Barré syndrome) (Keillor et al., 2002) bilateral peripheral facial palsy. From this perspective, it is not mimicry per se but its central mechanisms that would play a critical role in conscious emotion attribution. In contrast, rating the intensity of emotions has been ascribed to activation of the amygdala (Adolphs et al., 1994, 1999; Lewis et al., 2007), which could explain the normal performance of LIS patients on this task; indeed, no metabolic sign of reduced function has been demonstrated in the amygdala of chronic LIS patients (Laureys et al., 2005).

The dissociation between impaired recognition of emotional valence and spared intensity rating of facial expressions fits with available functional neuroimaging data in LIS patients (Laureys et al., 2005), whereas the mechanisms that might account for the selective defect in recognizing negative versus non-negative facial expressions remain uncertain. Among visual stimuli with emotional valence, facial expressions play an important role in social interaction and survival (Blair, 2003; Schutter et al., 2008). Indeed, processing emotional faces prompts a series of physiological changes related to action preparedness (Dalgleish, 2004; Schutter et al., 2008) that vary as a function of the valence of the expressed emotion. Although a general relationship exists between emotional processing and the motor system (Oliveri et al., 2003), Schutter et al. (2008) showed that transcranial magnetic stimulation applied over the left primary motor cortex during presentation of emotional facial expressions selectively increased motor-evoked potentials to fearful, but not to happy or neutral, expressions. This selective motor activation to fearful faces seems to reflect action preparedness to threat (Schutter et al., 2008), mediated by frontal cortical structures specifically involved in processing negative emotional signals (van Honk et al., 2002; Anders et al., 2009). Within this framework, we speculate that in LIS patients the functional disconnection of corticocerebellar circuits could more specifically impair recognition of negative facial expressions, thus preventing them from consciously detecting threats in the environment.

References

- Adolphs R. How do we know the minds of others? Domain-specificity, simulation, and enactive social cognition. Brain Res. 2006;1079:25–35. doi: 10.1016/j.brainres.2005.12.127.

- Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expression following bilateral damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- Adolphs R, Tranel D, Hamann S, Young AW, Calder AJ, Phelps EA, Anderson A, Lee GP, Damasio AR. Recognition of facial emotion in nine individuals with bilateral amygdala damage. Neuropsychologia. 1999;37:1111–1117. doi: 10.1016/s0028-3932(99)00039-1.

- Anders S, Eippert F, Wiens S, Birbaumer N, Lotze M, Wildgruber D. When seeing outweighs feeling: a role for prefrontal cortex in passive control of negative affect in blindsight. Brain. 2009;132:3021–3031. doi: 10.1093/brain/awp212.

- Ashwin C, Chapman E, Colle L, Baron-Cohen S. Impaired recognition of negative basic emotions in autism: a test of the amygdala theory. Soc Neurosci. 2006;1:349–363. doi: 10.1080/17470910601040772.

- Bauer G, Gerstenbrand F, Rumpl E. Varieties of the locked-in syndrome. J Neurol. 1979;221:77–91. doi: 10.1007/BF00313105.

- Benton AL, De Hamsher K, Varney NR, Spreen O. Test di riconoscimento di volti ignoti. Firenze: Organizzazioni Speciali; 1992.

- Blair RJ. Facial expressions, their communicatory functions and neuro-cognitive substrates. Philos Trans R Soc Lond B Biol Sci. 2003;358:561–572. doi: 10.1098/rstb.2002.1220.

- Blakemore SJ, Decety J. From the perception of action to the understanding of intention. Nat Rev Neurosci. 2001;2:561–567. doi: 10.1038/35086023.

- Blakemore SJ, Sirigu A. Action prediction in the cerebellum and in the parietal lobe. Exp Brain Res. 2003;153:239–245. doi: 10.1007/s00221-003-1597-z.

- Calder AJ, Keane J, Cole J, Campbell R, Young AW. Facial expression recognition by people with Mobius syndrome. Cogn Neuropsychol. 2000;17:73–87. doi: 10.1080/026432900380490.

- Caltagirone C, Gainotti G, Carlesimo GA, Parnetti L. Batteria per la valutazione del deterioramento mentale (parte I): descrizione di uno strumento per la diagnosi neuropsicologica. Arch Psicol Neurol Psichiat. 1995;55:461–470.

- Cappa SF, Pirovano C, Vignolo LA. Chronic “locked-in” syndrome: psychological study of a case. Eur Neurol. 1985;24:107–111. doi: 10.1159/000115769.

- Conson M, Sacco S, Sarà M, Pistoia F, Grossi D, Trojano L. Selective motor imagery defect in patients with locked-in syndrome. Neuropsychologia. 2008;46:2622–2628. doi: 10.1016/j.neuropsychologia.2008.04.015.

- Dalgleish T. The emotional brain. Nat Rev Neurosci. 2004;5:583–589. doi: 10.1038/nrn1432.

- Darwin C. The expression of the emotions in man and animals. London: John Murray; 1872.

- Dimberg U, Lundquist O. Facial reactions to facial expressions: sex differences. Psychophysiology. 1988;25:442–443.

- Dimberg U, Thunberg M, Elmehed K. Unconscious facial reactions to emotional facial expressions. Psychol Sci. 2000;11:86–89. doi: 10.1111/1467-9280.00221.

- Dimberg U, Thunberg M, Grunedal S. Facial reactions to emotional stimuli: automatically controlled emotional responses. Cogn Emot. 2002;16:449–471.

- Ekman P. An argument for basic emotions. Cogn Emot. 1992;6:169–200.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists; 1976.

- Frith CD, Frith U. Interacting minds: a biological basis. Science. 1999;286:1692–1695. doi: 10.1126/science.286.5445.1692.

- Gallese V. The manifold nature of interpersonal relations: the quest for a common mechanism. Philos Trans R Soc Lond B Biol Sci. 2003;358:517–528. doi: 10.1098/rstb.2002.1234.

- Goldman AI. Mirroring, mindreading and simulation. In: Pineda JA, editor. Mirror neuron systems: the role of mirroring processes in social cognition. New York: Humana; 2009.

- Goldman AI, Sripada CS. Simulationist models of face-based emotion recognition. Cognition. 2005;94:193–213. doi: 10.1016/j.cognition.2004.01.005.

- Hopf HC, Müller-Forell W, Hopf NJ. Localization of emotional and volitional facial paresis. Neurology. 1992;42:1918–1923. doi: 10.1212/wnl.42.10.1918.

- Keillor JM, Barrett AM, Crucian GP, Kortenkamp S, Heilman KM. Emotional experience and perception in the absence of facial feedback. J Int Neuropsychol Soc. 2002;8:130–135.

- Keysers C, Gazzola V. Towards a unifying neural theory of social cognition. Prog Brain Res. 2006;156:379–401. doi: 10.1016/S0079-6123(06)56021-2.

- Lang PJ, Bradley MM, Cuthbert BN. International affective picture system (IAPS): technical manual and affective ratings. Gainesville, FL: NIMH Center for the Study of Emotion and Attention, University of Florida; 1997.

- Laureys S, Pellas F, Van Eeckhout P, Ghorbel S, Schnakers C, Perrin F, Berré J, Faymonville ME, Pantke KH, Damas F, Lamy M, Moonen G, Goldman S. The locked-in syndrome: what is it like to be conscious but paralyzed and voiceless? Prog Brain Res. 2005;150:495–511. doi: 10.1016/S0079-6123(05)50034-7.

- Lewis PA, Critchley HD, Rotshtein P, Dolan RJ. Neural correlates of processing valence and arousal in affective words. Cereb Cortex. 2007;17:742–748. doi: 10.1093/cercor/bhk024.

- Lulé D, Zickler C, Häcker S, Bruno MA, Demertzi A, Pellas F, Laureys S, Kübler A. Life can be worth living in locked-in syndrome. Prog Brain Res. 2009;177:339–351. doi: 10.1016/S0079-6123(09)17723-3.

- Morecraft RJ, Stilwell-Morecraft KS, Rossing WR. The motor cortex and facial expression: new insights from neuroscience. Neurologist. 2004;10:235–249. doi: 10.1097/01.nrl.0000138734.45742.8d.

- Niedenthal PM. Embodying emotion. Science. 2007;316:1002–1005. doi: 10.1126/science.1136930.

- Oberman LM, Winkielman P, Ramachandran VS. Face to face: blocking facial mimicry can selectively impair recognition of emotional expressions. Soc Neurosci. 2007;2:167–178. doi: 10.1080/17470910701391943.

- Oberman LM, Winkielman P, Ramachandran VS. Slow echo: facial EMG evidence for the delay of spontaneous, but not voluntary, emotional mimicry in children with autism spectrum disorders. Dev Sci. 2009;12:510–520. doi: 10.1111/j.1467-7687.2008.00796.x.

- Oliveri M, Babiloni C, Filippi MM, Caltagirone C, Babiloni F, Cicinelli P, Traversa R, Palmieri MG, Rossini PM. Influence of the supplementary motor area on primary motor cortex excitability during movements triggered by neutral or emotionally unpleasant visual cues. Exp Brain Res. 2003;149:214–221. doi: 10.1007/s00221-002-1346-8.

- Rapcsak SZ, Galper SR, Comer JF, Reminger SL, Nielsen L, Kaszniak AW, Verfaellie M, Laguna JF, Labiner DM, Cohen RA. Fear recognition deficits after focal brain damage: a cautionary note. Neurology. 2000;54:575–581. doi: 10.1212/wnl.54.3.575.

- Sacco S, Sarà M, Pistoia F, Conson M, Albertini G, Carolei A. Management of pathologic laughter and crying in patients with locked-in syndrome: a report of 4 cases. Arch Phys Med Rehabil. 2008;89:775–778. doi: 10.1016/j.apmr.2007.09.032.

- Schmahmann JD, Sherman JC. The cerebellar cognitive affective syndrome. Brain. 1998;121:561–579. doi: 10.1093/brain/121.4.561.

- Schnakers C, Majerus S, Goldman S, Boly M, Van Eeckhout P, Gay S, Pellas F, Bartsch V, Peigneux P, Moonen G, Laureys S. Cognitive function in the locked-in syndrome. J Neurol. 2008;255:323–330. doi: 10.1007/s00415-008-0544-0.

- Schutter DJ, Hofman D, Van Honk J. Fearful faces selectively increase corticospinal motor tract excitability: a transcranial magnetic stimulation study. Psychophysiology. 2008;45:345–348. doi: 10.1111/j.1469-8986.2007.00635.x.

- Tassinary LG, Cacioppo JT. The skeletomotor system: surface electromyography. In: Cacioppo JT, Tassinary LG, Berntson GG, editors. Handbook of psychophysiology. Ed 2. Cambridge: Cambridge UP; 2000. pp. 163–199.

- Töpper R, Kosinski C, Mull M. Volitional type of facial palsy associated with pontine ischaemia. J Neurol Neurosurg Psychiatry. 1995;58:732–734. doi: 10.1136/jnnp.58.6.732.

- van Honk J, Schutter DJ, d'Alfonso AA, Kessels RP, de Haan EH. 1 Hz rTMS over the right prefrontal cortex reduces vigilant attention to unmasked but not to masked fearful faces. Biol Psychiatry. 2002;52:312–317. doi: 10.1016/s0006-3223(02)01346-x.

- Yamada M, Ueda K, Namiki C, Hirao K, Hayashi T, Ohigashi Y, Murai T. Social cognition in schizophrenia: similarities and differences of emotional perception from patients with focal frontal lesions. Eur Arch Psychiatry Clin Neurosci. 2009;259:227–233. doi: 10.1007/s00406-008-0860-5.

- Zigmond AS, Snaith RP. The hospital anxiety and depression scale. Acta Psychiatr Scand. 1983;67:361–370. doi: 10.1111/j.1600-0447.1983.tb09716.x.