Abstract

Recent studies have suggested that the extent to which primary task demands draw on attentional resources determines whether or not task-irrelevant emotional stimuli are processed. Another important factor that can bias the competition between task-relevant and task-irrelevant stimuli is the bottom-up factor of stimulus salience. Here, we investigated the effect of stimulus salience associated with a primary motion task on the processing of emotional face distractors. Faces of different emotional valences were presented within a context of randomly moving dots. Subjects had to detect short intervals of coherent motion while ignoring the background faces. Task salience was manipulated by the level of motion coherence of the dots, with high motion coherence being associated with high salience. Using functional magnetic resonance imaging, we show that emotional faces, compared with neutral faces, more strongly interfered with the primary task, as reflected in significant signal decreases in task-related motion area V5/hMT+. In addition, these faces elicited significant signal increases in the left amygdala. Most importantly, high task salience was found to further increase amygdala activity when the task was presented together with an emotional face. Our data support a more general role of the amygdala as a behavioral relevance detector, which flexibly integrates behaviorally relevant, salient context information to decode the emotional content of a visual scene.

Introduction

Emotional stimuli compared with other visual events can act as potent distractors that involuntarily attract attention. Selective attention, as defined by explicit task relevance, may help to counteract these detrimental effects of emotional interference. For example, increasing the load of the primary task is assumed to attenuate the processing of competing task-irrelevant information because resources are exhausted (Lavie, 1995, 2005). In the context of emotional distractors, several functional magnetic resonance imaging (fMRI) studies reported emotion effects under low load that vanished under more resource-absorbing conditions (Pessoa et al., 2002, 2005; Bishop et al., 2007; Mitchell et al., 2007; Silvert et al., 2007; but see Gläscher et al., 2007).

However, the processing of task-irrelevant objects is also determined by bottom-up factors reflecting the sensory stimulation, i.e., stimulus salience (Desimone and Duncan, 1995). To date, stimulus salience in studies on emotional interference has commonly been associated with the emotional signature of the emotional stimuli per se rather than with the physical characteristics of the task-relevant, non-emotional stimuli that accompany the emotional content and therefore establish the overall perceptual context (Vuilleumier et al., 2001; Pessoa et al., 2002; Anderson et al., 2003; Pourtois et al., 2006; Bishop et al., 2007; Gläscher et al., 2007; Hsu and Pessoa, 2007). Recent findings indicate that the amygdala, a well-known candidate brain region for the processing of emotional stimuli, is further modulated by experimental manipulations of task salience, current goals, and contextual demands (Hsu and Pessoa, 2007; Cunningham et al., 2008; Ousdal et al., 2008). This, together with cumulative evidence for amygdala involvement in the processing of novelty and ambiguity (Wright and Liu, 2006; Whalen, 2007), unpredictability (Herry et al., 2007), and social cognitive and interactive processes (Adams et al., 2003; Adolphs, 2003; Kennedy et al., 2009), supports a more generalized function of the amygdala beyond emotional processing per se.

The aim of this functional MRI study was to further delineate the operating characteristics of the amygdala by focusing on its role in processing contextual stimulus salience. Our experimental design comprised task-irrelevant faces of different emotional valences which were presented in the context of a primary motion task whose salience was varied by the level of coherent motion (Rosenholtz, 1999). Thus, we were able to analyze the effects of perceptual context and emotional valence in functionally defined brain areas: the V5/hMT+ complex activated by the primary task (Britten et al., 1992, 1996; Patzwahl and Zanker, 2000; Rees et al., 2000) and the amygdala in response to the emotional signature of the face distractors. We hypothesized that task-irrelevant emotional faces more strongly interfere with the primary task compared with neutral faces, as reflected in reduced activity in V5/hMT+, whereas neural activity in the amygdala should be enhanced in response to these stimuli. Importantly, if the amygdala is further responsive to the general contextual salience, high task salience should have a modulatory effect on this region too, either independently or in interaction with the emotional valence of the face.

Materials and Methods

Participants.

Twenty healthy, right-handed adults with normal or corrected-to-normal vision (11 females; mean ± SD age, 26.3 ± 4.9 years) participated in the study. All participants gave written informed consent before the experiment according to the Declaration of Helsinki. They had no history of neurological problems and were not taking medications. All participants were remunerated for participation.

Stimuli.

Stimuli for the task consisted of yellow dots (each 0.3° × 0.3°) that were arranged within the borders of an oval mask. For task-irrelevant distractors we used 12 different face stimuli (12° × 14°) from the Ekman series (Ekman and Friesen, 1976) with neutral, fearful, and happy expressions. All peripheral features (e.g., hair, clothes) were removed. We further used scrambled pictures, obtained by phase scrambling the original face stimuli. The resulting stimuli were characterized by equal global low-level properties of the original face stimuli (luminance, spectral energy) with any content-related information deleted.

Task.

Subjects were required to attend to the dots that were continuously moving in random directions (3°/s) within the borders of the oval mask and in the foreground of the face stimuli. During each trial, they had to detect short intervals (300 ms) of coherent motion (i.e., targets). Within one trial, zero, two, or three coherent motion intervals could occur, and the direction of each coherent motion was randomly chosen in the range from 0° to 360°. Task salience was modulated by varying the number of dots moving coherently. We used two levels of salience: high task salience (80% of dots moved coherently) and low task salience (50% of dots moved coherently), varying on a trial-by-trial basis. No cue was presented at the beginning of the trials, so subjects were not aware of the upcoming salience level until they observed coherent motion. Subjects were instructed to focus on the task and to respond accurately to each target via button press. Throughout the experiment, fixation on a central yellow cross was required. Although the dot array was superimposed over the entire face, the facial expression was easily recognizable (for a graphical example, see Fig. 1). To avoid attentive tracking of single dots, all dots had a limited lifetime of, on average, 800 ms, after which they disappeared and reappeared at new, randomly chosen positions.
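The dot display described above can be illustrated with a minimal sketch of a single frame update. It is not the stimulus code used in the study. The frame rate, the number of dots, the rectangular wrap-around field (in place of the oval mask), and the per-frame replacement probability that yields an 800 ms mean lifetime are all assumptions made for illustration, and the names `make_dots` and `step_dots` are hypothetical.

```python
import math
import random

FIELD_DEG = (12.0, 14.0)   # assumed rectangular field; the study used an oval mask


def make_dots(n, rng=random):
    """Create n dots with random positions and random individual headings."""
    return [{"x": rng.uniform(0, FIELD_DEG[0]),
             "y": rng.uniform(0, FIELD_DEG[1]),
             "theta": rng.uniform(0, 2 * math.pi)} for _ in range(n)]


def step_dots(dots, coherence, direction_deg, speed_deg_s=3.0,
              dt=1 / 60, mean_lifetime_s=0.8, rng=random):
    """Advance all dots by one frame and return the indices of coherent dots.

    coherence is the fraction of dots that share the common direction
    (0.5 or 0.8 in the study; 0.0 outside target intervals).
    dt = 1/60 s assumes a 60 Hz display, which the source does not state.
    """
    common = math.radians(direction_deg)
    n_coherent = round(coherence * len(dots))
    coherent_ids = set(rng.sample(range(len(dots)), n_coherent))
    step = speed_deg_s * dt
    for i, dot in enumerate(dots):
        # Limited lifetime: replace a dot with probability dt / mean lifetime,
        # which gives an average lifetime of about mean_lifetime_s.
        if rng.random() < dt / mean_lifetime_s:
            dot["x"] = rng.uniform(0, FIELD_DEG[0])
            dot["y"] = rng.uniform(0, FIELD_DEG[1])
            dot["theta"] = rng.uniform(0, 2 * math.pi)
            continue
        heading = common if i in coherent_ids else dot["theta"]
        dot["x"] = (dot["x"] + step * math.cos(heading)) % FIELD_DEG[0]
        dot["y"] = (dot["y"] + step * math.sin(heading)) % FIELD_DEG[1]
    return coherent_ids
```

In this sketch a 300 ms target would correspond to calling `step_dots` with `coherence=0.8` (or `0.5`) and one fixed direction for the frames spanning that interval, and with `coherence=0.0` otherwise.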

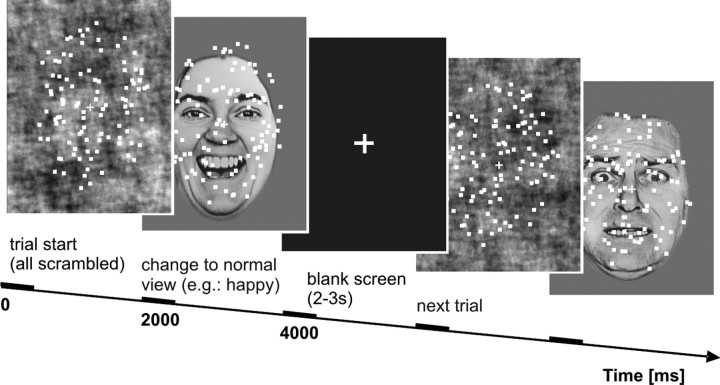

Figure 1.

Schematic illustration of a trial sequence. In each trial, subjects were confronted with randomly moving dots superimposed on a face picture. They were instructed to attend to the dots and to detect short intervals of coherent motion (up to three during one trial). Task salience was varied in two levels of motion coherence. Emotional relevance was varied as to the emotional valence of the faces. Each face was initially presented in scrambled view and changed after 2000 ms to normal view (here: happy, fearful) or to another scrambled view. Faces were presented in grayscale, superimposed dots were yellow.

Our 2 × 4 factorial design with the within-subjects factors task salience (50 or 80% motion coherence) and distractor salience associated with the facial expressions of the background images (neutral, fearful, happy, or scrambled faces) yielded a total of eight conditions.

The experiment consisted of three successive runs (∼15 min each). Each run started with a gray background and a central yellow fixation cross for 2 s. One trial lasted 4 s and was followed by an intertrial interval that was randomly jittered between 2 and 3 s in 80% of cases and 6–8 s in 20% of cases, equally distributed across trials and conditions. All conditions were presented in randomized order with the same condition not repeated more than twice. To allow for an appropriate analysis of behavioral data, coherent motion targets were randomly distributed across small time windows of 300 ms each. The minimum distance between two subsequent targets within one trial was therefore 900 ms.
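The trial structure can be sketched as follows. This is an illustrative reconstruction, not the authors' presentation script. It assumes that "not repeated more than twice" means at most two consecutive trials of the same condition, that intertrial intervals are drawn uniformly within each range, and that the number of repetitions per condition (not given in the text) is a free parameter. The function names are hypothetical.

```python
import itertools
import random

SALIENCE = ("low_50", "high_80")
DISTRACTOR = ("neutral", "fearful", "happy", "scrambled")
CONDITIONS = list(itertools.product(SALIENCE, DISTRACTOR))  # 2 x 4 = 8 cells


def sample_iti(rng):
    """Draw one intertrial interval: 2-3 s in 80% of cases, 6-8 s in 20%."""
    if rng.random() < 0.8:
        return rng.uniform(2.0, 3.0)
    return rng.uniform(6.0, 8.0)


def max_run_length(seq):
    """Length of the longest run of identical consecutive items."""
    longest = run = 0
    prev = object()  # sentinel that equals no condition
    for item in seq:
        run = run + 1 if item == prev else 1
        prev = item
        longest = max(longest, run)
    return longest


def make_schedule(reps_per_condition, rng, max_consecutive=2):
    """Shuffle trials until no condition occurs more than max_consecutive
    times in a row, then attach a jittered ITI to every trial."""
    trials = CONDITIONS * reps_per_condition
    while True:
        rng.shuffle(trials)
        if max_run_length(trials) <= max_consecutive:
            break
    return [(cond, sample_iti(rng)) for cond in trials]
```

Random sampling only approximates the 80/20 split within a finite run. A design that distributes the two interval ranges equally across conditions, as described above, would instead assign them from a fixed, counterbalanced list.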

At trial onset, all background faces were presented in a scrambled version and changed after half of the trial (i.e., after 2 s) to the normal presentation of the image or to another scrambled view in the control condition. In the last case we used a randomly selected sample of all faces, counterbalanced for the three facial expressions (neutral, fearful, and happy) across trials. To ensure that the scrambled condition also contained a change, scrambling was performed twice for the selected face.

All stimuli were presented on a gray background and were back-projected onto a translucent screen positioned on top of the head coil, visible via an angled mirror placed above the participant's head. The luminance of the experimental display was 34.6 cd/m² on average and did not differ between conditions. To familiarize subjects with the task, they underwent a training session before scanning. On average, they performed three blocks of 20 trials each, and training ended when the target detection rate was >60% in all primary task conditions (i.e., high and low task salience). In addition, subjects completed the Spielberger State-Trait Anxiety Inventory (STAI) (Spielberger, 1983) and the Toronto Alexithymia Scale (TAS) (Bagby et al., 1994) before the fMRI session. After the scanning session, subjects were asked to rate the faces in terms of valence and arousal on the self-assessment manikin rating scales (Bradley and Lang, 1994) of the International Affective Picture System (Lang et al., 1999). Valence ratings ranged from 1 (very unpleasant) to 9 (very pleasant) and arousal ratings from 1 (very calm) to 9 (very excited).

Data acquisition.

Functional imaging was performed on a 3 T Siemens system. In each volume, 38 contiguous descending transversal slices of echo-planar T2*-weighted images were acquired (3 mm slice thickness, no gap; repetition time, 2.5 s; echo time, 30 ms; flip angle, 80°; field of view, 216 mm; image matrix, 64 × 64). A total of 380 volumes were collected per run. The first four volumes of each run were discarded to allow for T1 equilibration effects.
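As a quick consistency check, the acquisition parameters above imply the following derived quantities. The in-plane voxel size assumes a square field of view, which the text does not state explicitly.

```python
TR_S = 2.5             # repetition time in seconds
VOLUMES_PER_RUN = 380
DISCARDED = 4          # volumes dropped for T1 equilibration
N_SLICES = 38
SLICE_MM = 3.0         # slice thickness, no gap
FOV_MM = 216.0         # assumed square field of view
MATRIX = 64

run_duration_s = VOLUMES_PER_RUN * TR_S      # 950.0 s, about 15.8 min
analyzed_volumes = VOLUMES_PER_RUN - DISCARDED   # 376
in_plane_mm = FOV_MM / MATRIX                # 3.375 mm
coverage_mm = N_SLICES * SLICE_MM            # 114.0 mm

print(f"run duration: {run_duration_s:.0f} s ({run_duration_s / 60:.1f} min)")
print(f"analyzed volumes per run: {analyzed_volumes}")
print(f"in-plane voxel size: {in_plane_mm} mm, slab coverage: {coverage_mm:.0f} mm")
```

The run duration of 950 s is in line with the run length of roughly 15 min given in the task description.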

Data processing.

Imaging data were analyzed using SPM5 software (http://www.fil.ion.ucl.ac.uk/spm). Each echo-planar imaging (EPI) volume was realigned to the first volume, spatially normalized to a standard EPI template (SPM5) using third-degree B-spline interpolation, and spatially smoothed by an 8 mm full-width at half maximum isotropic Gaussian kernel.

Statistical analysis.

The onsets of the background image change (after 2 s) of the eight experimental conditions were modeled as separate events for all three image acquisition runs using a canonical hemodynamic response function with its temporal derivative. Brain activity was high-pass filtered at 1/128 Hz and corrected for serial autocorrelation. Trials in which subjects showed poor task performance (button presses in trials with zero coherent motion intervals, or fewer than two button presses in trials with two or three coherent motion intervals) were modeled as a separate nuisance regressor to avoid confounding brain activations. The mean error rate across subjects was 11.8%.
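The event-related model can be illustrated with a simplified sketch of how a single-condition regressor is built. This is not SPM5 code. It assumes the commonly used double-gamma shape (response peaking near 5 s, undershoot component with shape 16, undershoot ratio 1/6), treats each event as an instantaneous impulse, samples the prediction at scan onsets, and approximates the temporal derivative by a 1 s finite difference. All function names are hypothetical.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)) / (
        math.gamma(shape) * scale ** shape)


def canonical_hrf(t):
    """Double-gamma response: positive lobe minus a scaled undershoot."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def hrf_temporal_derivative(t, delta=1.0):
    """Finite-difference approximation of the temporal derivative."""
    return (canonical_hrf(t) - canonical_hrf(t - delta)) / delta


def event_regressor(onsets_s, n_scans, tr_s=2.5, kernel=canonical_hrf):
    """Predicted response at each scan time for impulse events at onsets_s."""
    return [sum(kernel(k * tr_s - onset) for onset in onsets_s)
            for k in range(n_scans)]


# Example: one trial starting at t = 0 s, with the image change at 2 s.
hrf_reg = event_regressor([2.0], n_scans=10)
deriv_reg = event_regressor([2.0], n_scans=10, kernel=hrf_temporal_derivative)
```

Each of the eight conditions, plus the nuisance regressor for poorly performed trials, would contribute one such pair of columns per run to the design matrix.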

Regression coefficients were estimated, and linear contrasts for each experimental condition were created by averaging the same experimental conditions across the three runs. For the group analysis, these single-subject contrast images were entered into a repeated-measures ANOVA, allowing for an appropriate nonsphericity correction.

As we had an a priori hypothesis for the amygdala, we based correction for multiple comparisons in this region on the small volume correction as implemented in SPM. The central coordinates for the amygdala were taken from the main effect of facial emotion (fear > neutral) used in the fMRI study of Vuilleumier et al. (2001). The small volume correction was based on an 8 mm sphere. For all other brain areas, we used a statistical threshold of p < 0.05, corrected for multiple comparisons across the whole brain (family-wise error rate).

Performance of the primary task was analyzed by means of target detection rates and reaction times, which were entered into repeated-measures ANOVAs comprising the factors of task salience (low and high) and distractor valence (neutral, fearful, happy, and scrambled faces). Greenhouse-Geisser corrections were applied whenever appropriate. To test for significant differences between conditions, paired t tests were subsequently calculated. Only responses between 200 and 900 ms after the onset of a coherent motion interval were regarded as correct responses. Responses outside that time window were considered false alarms and were excluded from further analysis.
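The response-window rule can be made concrete with a short sketch. It is an illustration under stated assumptions rather than the analysis code used here: window boundaries are treated as inclusive, each target can be credited with at most one response, and `score_trial` is a hypothetical name.

```python
def score_trial(target_onsets_ms, press_times_ms, window_ms=(200, 900)):
    """Classify button presses in one trial.

    Returns (hits, false_alarms, detection_rate), where hits maps each
    detected target onset to its reaction time in ms.
    """
    hits = {}
    false_alarms = []
    for press in sorted(press_times_ms):
        matched = False
        for onset in target_onsets_ms:
            rt = press - onset
            # A press counts once, for the first undetected target whose
            # response window contains it.
            if window_ms[0] <= rt <= window_ms[1] and onset not in hits:
                hits[onset] = rt
                matched = True
                break
        if not matched:
            false_alarms.append(press)
    rate = len(hits) / len(target_onsets_ms) if target_onsets_ms else None
    return hits, false_alarms, rate


# Hypothetical trial: targets at 2300 and 3200 ms, three button presses.
hits, false_alarms, rate = score_trial([2300, 3200], [2750, 3150, 3700])
# hits == {2300: 450, 3200: 500}, false_alarms == [3150], rate == 1.0
```

Because successive targets were separated by at least 900 ms, the response windows of neighboring targets do not overlap, so each press can be assigned to at most one target.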

Valence and arousal ratings were tested by paired t tests between the three face categories (neutral, happy, and fearful). Scrambled images were not included in the rating.
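For reference, the paired t statistic used for these comparisons can be computed as in the sketch below. The ratings in the example are made-up values for illustration only and are not data from this study.

```python
import math
import statistics


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a and b.

    The sign of t follows the subtraction order a - b.
    """
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)          # sample SD of the differences
    t = mean_d / (sd_d / math.sqrt(n))
    return t, n - 1


# Hypothetical ratings from four subjects (illustration only).
neutral = [1, 2, 3, 4]
happy = [2, 4, 5, 8]
t_value, df = paired_t(neutral, happy)
print(f"t({df}) = {t_value:.2f}")   # t(3) = -3.58
```

With 20 participants the test has 19 degrees of freedom, and a negative t value simply reflects that the second condition in the subtraction received the higher ratings.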

Results

Behavioral results

Rating

Valence ratings for happy faces (mean = 6.94, SD = 1.21) were significantly higher (t(19) = −9.14, p < 0.0001) and valence ratings for fearful faces (mean = 2.54, SD = 0.57) were significantly lower (t(19) = 12.13, p < 0.0001) than valence ratings for neutral faces (mean = 4.45, SD = 0.45). Self-reported arousal was also higher for happy (mean = 4.94, SD = 1.38; t(19) = −4.86, p < 0.0001) and fearful faces (mean = 6.61, SD = 1.14; t(19) = −11.59, p < 0.0001) compared with neutral faces (mean = 3.37, SD = 0.99). In addition, fearful faces were rated as more arousing than happy faces (t(19) = −4.51, p < 0.0001).

Task performance

Differences between conditions were mainly observed in reaction times (RTs). A 2 (task salience: low, high) × 4 (emotional valence: fearful, happy, neutral, scrambled) repeated measures ANOVA for the time period after picture change revealed significant main effects of task salience (Hits, F(1,19) = 61.15, p < 0.0001; RTs, F(1,19) = 193.28, p < 0.0001) and emotional valence (Hits, F(3,57) = 11.31, p < 0.0001; RTs, F(3,57) = 10.28, p < 0.0001).

When task salience was high, subjects reacted significantly faster when neutral compared with fearful or happy faces were presented in the background (neutral vs happy faces: t(19) = −2.13, p < 0.05; neutral vs fearful faces: t(19) = −2.08, p < 0.05). In addition, scrambled background faces yielded the shortest RTs (scrambled vs neutral faces: t(19) = 3.66, p < 0.005; scrambled vs happy faces: t(19) = 4.90, p < 0.0001; and scrambled vs fearful faces: t(19) = 6.05, p < 0.0001). When task salience was low, scrambled background faces similarly yielded the shortest reaction times; however, the difference was significant only in comparison with fearful face distractors (t(19) = 2.20, p < 0.05).

Questionnaires

For all participants, the scores on the STAI and the TAS were within the normal range (STAI: state scale, mean = 35.10, SD = 6.72; trait scale, mean = 35.30, SD = 9.15; TAS: scale 1, mean = 12.35, SD = 4.15; scale 2, mean = 12.65, SD = 3.70; scale 3, mean = 12.85, SD = 3.18). Thus, we could exclude any possible influence on our results due to pathological levels of anxiety or alexithymia.

Imaging data

We first analyzed the imaging data by identifying the brain areas responding to the main effect of task salience. This was done by the comparison “high > low task salience.” Based on previous imaging studies, which demonstrated a positive correlation between responses of area V5/hMT+ and motion coherence (Rees et al., 2000; Nakamura et al., 2003; Aspell et al., 2005; Siegel et al., 2007), we expected higher activity in this area for targets with more coherently moving dots. Accordingly, we observed significant effects in bilateral motion area V5/hMT+ (thresholded at p < 0.001). In addition, we observed widespread activation in the angular gyrus, the precuneus, inferior and middle temporal regions, and the fusiform gyrus, among others (summarized in Table 1). We also computed the converse comparison, that is “low > high task salience,” because trials of low task salience were further characterized by a higher degree of difficulty. Indeed, this comparison elicited activation of the frontoparietal attention network, including the inferior, middle, and superior frontal gyrus and the inferior parietal lobe (Table 1).

Table 1.

Peak voxel and corresponding t values of the effects of task salience and emotional valence of the face distractors

| Experimental effect | Left x | Left y | Left z | Left t value | Right x | Right y | Right z | Right t value |
|---|---|---|---|---|---|---|---|---|
| Main effect task salience (high > low) | | | | | | | | |
| SFG | −15 | 39 | 51 | 7.43 | | | | |
| Angular G | −48 | −69 | 33 | 9.17 | 54 | −63 | 27 | 5.38 |
| Precuneus | −6 | −60 | 27 | 7.47 | 6 | −54 | 27 | 7.09 |
| Rolandic Operculum | −45 | −30 | 21 | 5.40 | 51 | −24 | 18 | 7.41 |
| MTG | −60 | −9 | −21 | 7.87 | 60 | −6 | −21 | 6.04 |
| V5/MT+ | −48 | −69 | 3 | 3.26 | 48 | −63 | −9 | 4.63 |
| ITG | −42 | 9 | −45 | 7.05 | | | | |
| Amygdala | −24 | −6 | −24 | 5.78 | 21 | −9 | −21 | 7.75 |
| Hippocampus | −27 | −15 | 21 | 7.91 | | | | |
| SOG | | | | | 39 | 18 | −45 | 6.27 |
| FG | | | | | 30 | −33 | 21 | 5.49 |
| Main effect task salience (low > high) | | | | | | | | |
| IFG | −33 | 24 | −6 | 14.21 | 33 | 27 | −6 | 14.61 |
| MFG | −30 | 48 | 15 | 5.54 | 42 | 33 | 18 | 5.66 |
| SFG | −3 | 27 | 39 | 11.93 | | | | |
| Nucleus Caudate | −9 | 6 | −3 | 7.01 | 9 | 9 | 0 | 7.16 |
| IPC | −42 | −45 | 45 | 7.12 | 42 | −45 | 48 | 6.04 |
| Precentral G | −36 | 3 | 36 | 6.55 | | | | |
| Main effect emotion (emotional > neutral) | | | | | | | | |
| Amygdala | −30 | −3 | −27 | 3.67* | | | | |
| OFG | −3 | 42 | −21 | 4.50+ | | | | |
| MTG | | | | | 42 | −3 | 27 | 3.54+ |
| Main effect emotion (emotional < neutral) | | | | | | | | |
| V5/MT+ | −42 | −63 | −6 | 4.48 | 48 | −57 | −6 | 3.53 |
| Interaction effect of task salience (high > low) with normal > scrambled faces | | | | | | | | |
| Amygdala | −27 | 0 | −27 | 3.31* | | | | |
| Conjunction of task salience (high > low) and emotional valence (emotional > neutral) | | | | | | | | |
| Amygdala | −27 | −3 | −27 | 3.50* | | | | |

FG, Frontal gyrus; SFG, superior FG; MFG, middle FG; IFG, inferior FG; OFG, orbitofrontal gyrus; IPC, inferior parietal cortex; ITG, inferior temporal gyrus; MTG, middle temporal gyrus; SOG, superior occipital gyrus.

*p < 0.05 (corrected for small volume); +p < 0.001 (uncorrected); p < 0.05 (corrected for whole-brain volume for all other peaks).

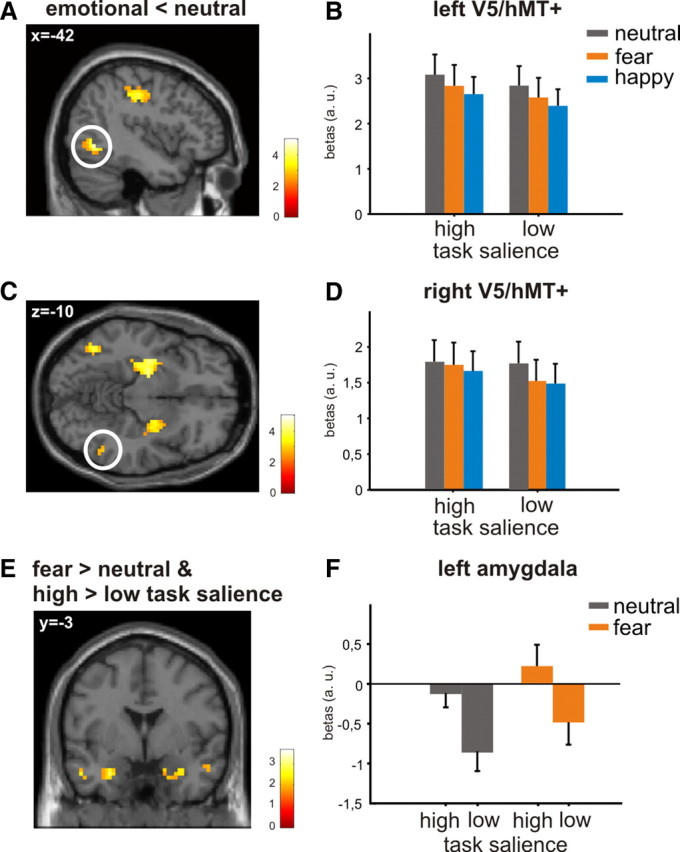

After verifying that the primary task activated motion area V5/hMT+, we next tested the influence of emotional valence on the activation pattern within this area. According to our hypothesis, the blood oxygen level-dependent (BOLD) signal in area V5/hMT+ should be reduced when emotional faces are concurrently presented in the background because of their greater behavioral relevance. To test this hypothesis, we compared “emotional < neutral faces” masked with the comparison “high > low task salience.” As expected, we observed a significant decrease of the BOLD signal for emotional relative to neutral faces across all task conditions (i.e., high and low task salience) within bilateral area V5/hMT+, indicating that emotional faces exerted greater interference with the primary task (Fig. 2A–D, Table 1). Notably, as shown in Figure 2, B and D, this main effect of emotion was slightly stronger for happy than for fearful face distractors.

Figure 2.

V5/hMT+ activity in response to the emotional valence of the face distractors. A, C, Statistical map of the main effect of emotional valence masked with the comparison “high versus low task salience” on the neural activity in bilateral motion area V5/hMT+ (thresholded at p < 0.05; overlaid on canonical single subject T1 images). B, D, Mean parameter estimates for the peak voxels from A and C for neutral, fearful, and happy face distractors show decreased neural activity for emotional faces independent of the different levels of task salience (error bars indicate SEM). E, F, Effects of the statistical conjunction analysis showing significantly activated voxels within the amygdala for both comparisons “high versus low task salience” and “fearful versus neutral faces.” E, Statistical map of the conjunction analysis on the basis of the two main effects of task salience and emotional valence (thresholded at p < 0.05; overlaid on a canonical single subject T1 image). F, Mean parameter estimates for the significant right peak voxel of the conjunction analysis from E for fearful and neutral face distractors show increased neural activity for emotional relative to neutral faces as well as for high salient relative to low salient task conditions (error bars indicate SEM). a.u., Arbitrary unit.

We finally tested for a two-way interaction of task salience and emotional valence within V5/hMT+, which did not yield significant effects even at an uncorrected threshold of p < 0.001.

To test the influence of emotion on neural activity within the amygdala, we compared emotional with neutral faces across all task conditions. Fearful relative to neutral faces elicited a significantly higher BOLD signal in the left amygdala (x, y, z = −30, −3, −27; t = 3.67, p < 0.05) (Table 1), whereas a similar effect in the right amygdala failed to reach significance. Happy faces did not activate the amygdala, and a two-way interaction of emotional valence (fearful > neutral face) and task salience (high > low) also failed to reach significance when formally tested.

The results reported so far clearly demonstrate that emotional faces were capable of influencing neural activity in the amygdala and area V5/hMT+ independent of manipulations of task salience.

To address our main question of whether the amygdala incorporates overall contextual salience when processing emotional faces, we next tested the two-way interaction between task salience and distractor type. This elicited significant activation in the left amygdala (x, y, z = −27, 0, −27; t = 3.31, p < 0.05) with higher BOLD responses to high salient task conditions in the foreground of a meaningful face (neutral, happy, fearful) relative to conditions in which a high salient task was presented together with a scrambled face. Activation in response to face distractors was thus potentiated when the accompanying perceptual context was also characterized by highly salient yet not biologically relevant features. In a further control test, we only included scrambled faces into the analysis of task salience, which did not reveal any significant effects.

In a last step, we tested whether the effects of task salience and emotional valence exhibit a common effect on neural activity within the same region of the amygdala. This was formally tested by a conjunction analysis on the basis of the contrasts “high > low task salience” and “fearful > neutral faces” (real conjunction testing for significant effects in both contrasts). We observed a significant effect in the left amygdala (x, y, z = −27, −3, −27; t = 3.50, p < 0.05) (Fig. 2E,F) whereas a similar effect in the right amygdala failed to reach significance (x, y, z = 30, 0, −27; t = 2.21, p > 0.05) (Fig. 2E,F). Importantly, the signal maximum of this last analysis, which we located at the left lateral border of the ventral amygdala, was almost identical to the signal maxima for the interaction effect of task salience × emotional valence and the main effect of emotion. Together, the interaction between task salience and emotion with this last conjunction analysis shows that the amygdala is also sensitive to non-biologically relevant context information when processing emotional faces. The identical region for both effects of task salience and emotion further indicates that the process that evaluates the faces is modulated by contextual salience.

Discussion

The present study examined the influence of salient contextual information on the processing of emotional face distractors. We observed specific influences of emotional valence, which were reflected in significant signal decreases in task-related area V5/hMT+ and signal increases in the amygdala in response to emotional versus neutral faces. Importantly, high contextual salience in combination with a clearly detectable face was found to potentiate the BOLD signal within the amygdala, providing support for a more general role of this region in the detection of behaviorally relevant stimuli (Sander et al., 2003).

One important aspect of this study is that we explicitly manipulated task salience in addition to the emotional valence of the face distractors. We used dynamic motion stimuli as targets, which have previously been shown to be powerful in capturing attention (Hillstrom and Yantis, 1994). Task salience varied according to the level of motion coherence associated with the target stimuli. In particular, increasing task salience by higher levels of motion coherence should facilitate the rapid perceptual grouping of visual items and, as a consequence, decrease reaction times (Patzwahl and Zanker, 2000). Indeed, behavioral data show that subjects were faster to detect high coherent relative to low coherent targets, which we interpreted as direct evidence that our manipulation of task salience was effective.

Independent of the salience level associated with the primary task, we found that fearful relative to neutral face distractors elicited increased neural responses in the amygdala. This is in line with ample evidence that highly salient emotional stimuli receive prioritized processing when compared with other visual items of less behavioral significance (Whalen et al., 2001; Dolan and Vuilleumier, 2003; Hariri et al., 2003). In addition, emotional relative to neutral faces more strongly decreased neural signals in V5/hMT+, which was implicated in the processing of task-relevant motion targets. The emotional interference effect was also reflected in the behavioral data, where subjects showed longer response latencies to highly salient motion targets when these were presented in the foreground of an emotional face.

To investigate whether the amygdala is responsive not only to the emotional information but also to the accompanying perceptual context of the emotional stimulus, we investigated the effect of task salience on the BOLD signal within this region. Indeed, we found an effect of task salience that varied with respect to the underlying distractor face. That is, high task salience only increased responses in the amygdala when it was associated with a biologically relevant, meaningful face. In addition, robust amygdala activation was revealed when testing for the common influence of task salience and emotional valence. These findings strongly support the notion that the amygdala is not only responsive to the emotional signature of a stimulus but incorporates the general perceptual context that accompanies this stimulus. In fact, the perceptual context might provide the primary basis upon which further emotional processing takes place. Based on its functional and anatomical connectivity, the amygdala is uniquely positioned to act as an integral component of a vigilance system devoted to the detection of salient environmental events that are not necessarily restricted to the emotional value of a stimulus (Whalen, 1998, 2007; Sander et al., 2003). According to Whalen (1998), the amygdala constantly monitors the environment for signals of behavioral relevance and modulates the moment-to-moment vigilance level of an organism. If environmental signals are uncertain in their predictive outcome or, as in the present case, consist of unpredictable, salient motion targets, the amygdala can boost vigilance in response to these events to determine any causal relationships between them (Whalen, 1998). Once activated by a salient contextual environment, the amygdala might then subsequently decode other salient information of high behavioral relevance, such as the threatening faces in the present case.

The finding that both task salience and emotional valence activated a similar region with overlapping signal maxima in the left amygdala further supports the assumption that these activations represent a single integrative process. The unilateral left-sided location of the significant activation foci is corroborated by numerous meta-analyses of studies of emotion processing (Murphy et al., 2003; Wager et al., 2003; Baas et al., 2004; Sergerie et al., 2008) and indicates a local processing mode sensitive to specific threatening (Hardee et al., 2008) and other behaviorally relevant, salient features (e.g., motion).

Our current results are consistent with other recent data that demonstrated amygdala responsivity to manipulations of goal relevance by shifting stimulus salience to specific stimulus categories (Goldstein et al., 2007; Cunningham et al., 2008; Ousdal et al., 2008). Other studies supporting the extended role of the amygdala in mediating non-emotional behavioral relevance reported its activation by socially relevant stimuli (Adams et al., 2003; Adolphs, 2003; Kennedy et al., 2009), unpredictable auditory stimuli (Herry et al., 2007), or rising sound intensity (Bach et al., 2008). All of these stimuli signal potential relevance, and the amygdala is thought to flexibly integrate these stimulus valences with current goals, motivations, and contextual demands (Cunningham and Zelazo, 2007; Cunningham et al., 2008).

One might argue that the decreased responses in the amygdala that we observed during conditions of low task salience are rather due to a top-down attentional suppression effect. Indeed, low salient targets were more difficult to detect than high salient targets and thus increased the load on the attentional system. Accordingly, when comparing low with high task salience, we observed activation in a frontoparietal network that has previously been proposed to engage top-down attentional effects (Kastner and Ungerleider, 2000; Corbetta and Shulman, 2002). Other studies that directly assessed the effect of attentional load on the processing of emotional distractors (Pessoa et al., 2002, 2005; Bishop et al., 2004, 2007; Mitchell et al., 2007; Silvert et al., 2007; Lim et al., 2008) have consistently reported decreased amygdala activation to emotional stimuli when attentional load was high (but see Gläscher et al., 2007). Here we also investigated the effect of task condition when only combined with scrambled faces. The fact that during the presentation of a scrambled face the identical attentional load did not result in a modulation of amygdala activation further indicates an integration of information that depends on emotional face processing.

We cannot determine whether the effect of task salience we observed in the amygdala is a general phenomenon or is instead restricted to the use of motion stimuli, which might themselves bear emotional relevance. In fact, the processing of motion and emotional stimuli is characterized by more similarities than appear at first sight. For example, motion stimuli, compared with other physical features such as luminance and color, can be detected without attentional scrutiny (Franconeri and Simons, 2003, 2005), an advantage that also holds for emotional stimuli (Morris et al., 1998). In biological terms, enhanced sensitivity to visual motion and emotion would involve clear adaptive advantages for the survival of prey and predator. Although direct evidence is lacking (Palermo and Rhodes, 2007), it is argued that emotional stimuli are more rapidly processed via the subcortical route of the magnocellular pathway than via the cortical pathway of the parvocellular system. One functional difference between magnocellular and parvocellular cells (for review, see Livingstone and Hubel, 1987; Schiller et al., 1990) is that magnocellular cells are specialized in analyzing low spatial frequency (LSF) information (Vuilleumier et al., 2003; Pourtois et al., 2005), which is also crucial for the processing of motion stimuli (Maunsell and Newsome, 1987; DeYoe and Van Essen, 1988; Merigan and Maunsell, 1993; Chapman et al., 2004). Specifically, it has been shown that the amygdala responds more strongly to LSF stimuli of emotional (Vuilleumier et al., 2003) and neutral valence (Kveraga et al., 2007) while being comparatively “blind” to high spatial frequency features (Vuilleumier et al., 2003). As a hub on the magnocellular processing stream, characterized by its widespread functional and anatomical connectivity, the amygdala might therefore be ideally suited to integrate salient motion information in the processing of emotional relevance.

In conclusion, the present study provides clear evidence that the task-irrelevant emotional background of a scene directly modulates neural processing in V5/hMT+ and the amygdala, regardless of the manipulations of a primary motion detection task. We also demonstrated that motion targets of increased salience further modulate neural activity in the amygdala when presented together with a biologically relevant face stimulus. Our findings thus emphasize a broader role of the amygdala as a hub that integrates perceptually salient information, on the basis of which further emotional stimulus processing takes place.

Footnotes

This work was supported by a grant from the German Research Foundation as part of the graduate program “Function of attention in cognition” (DFG 1182).

References

- Adams RB, Jr, Gordon HL, Baird AA, Ambady N, Kleck RE. Effects of gaze on amygdala sensitivity to anger and fear faces. Science. 2003;300:1536. doi: 10.1126/science.1082244.

- Adolphs R. Is the human amygdala specialized for processing social information? Ann N Y Acad Sci. 2003;985:326–340. doi: 10.1111/j.1749-6632.2003.tb07091.x.

- Anderson AK, Christoff K, Panitz D, De Rosa E, Gabrieli JD. Neural correlates of the automatic processing of threat facial signals. J Neurosci. 2003;23:5627–5633. doi: 10.1523/JNEUROSCI.23-13-05627.2003.

- Aspell JE, Tanskanen T, Hurlbert AC. Neuromagnetic correlates of visual motion coherence. Eur J Neurosci. 2005;22:2937–2945. doi: 10.1111/j.1460-9568.2005.04473.x.

- Baas D, Aleman A, Kahn RS. Lateralization of amygdala activation: a systematic review of functional neuroimaging studies. Brain Res Rev. 2004;45:96–103. doi: 10.1016/j.brainresrev.2004.02.004.

- Bach DR, Schächinger H, Neuhoff JG, Esposito F, Di Salle F, Lehmann C, Herdener M, Scheffler K, Seifritz E. Rising sound intensity: an intrinsic warning cue activating the amygdala. Cereb Cortex. 2008;18:145–150. doi: 10.1093/cercor/bhm040.

- Bagby RM, Parker JD, Taylor GJ. The twenty-item Toronto Alexithymia Scale. I. Item selection and cross-validation of the factor structure. J Psychosom Res. 1994;38:23–32. doi: 10.1016/0022-3999(94)90005-1.

- Bishop SJ, Duncan J, Lawrence AD. State anxiety modulation of the amygdala response to unattended threat-related stimuli. J Neurosci. 2004;24:10364–10368. doi: 10.1523/JNEUROSCI.2550-04.2004.

- Bishop SJ, Jenkins R, Lawrence AD. Neural processing of fearful faces: effects of anxiety are gated by perceptual capacity limitations. Cereb Cortex. 2007;17:1595–1603. doi: 10.1093/cercor/bhl070.

- Bradley MM, Lang PJ. Measuring emotion: the Self-Assessment Manikin and the Semantic Differential. J Behav Ther Exp Psychiatry. 1994;25:49–59. doi: 10.1016/0005-7916(94)90063-9.

- Britten KH, Shadlen MN, Newsome WT, Movshon JA. The analysis of visual motion: a comparison of neuronal and psychophysical performance. J Neurosci. 1992;12:4745–4765. doi: 10.1523/JNEUROSCI.12-12-04745.1992.

- Britten KH, Newsome WT, Shadlen MN, Celebrini S, Movshon JA. A relationship between behavioral choice and the visual responses of neurons in macaque MT. Vis Neurosci. 1996;13:87–100. doi: 10.1017/s095252380000715x.

- Chapman C, Hoag R, Giaschi D. The effect of disrupting the human magnocellular pathway on global motion perception. Vision Res. 2004;44:2551–2557. doi: 10.1016/j.visres.2004.06.003.

- Corbetta M, Shulman GL. Control of goal-directed and stimulus-driven attention in the brain. Nat Rev Neurosci. 2002;3:201–215. doi: 10.1038/nrn755.

- Cunningham WA, Zelazo PD. Attitudes and evaluations: a social cognitive neuroscience perspective. Trends Cogn Sci. 2007;11:97–104. doi: 10.1016/j.tics.2006.12.005.

- Cunningham WA, Van Bavel JJ, Johnsen IR. Affective flexibility: evaluative processing goals shape amygdala activity. Psychol Sci. 2008;19:152–160. doi: 10.1111/j.1467-9280.2008.02061.x.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- DeYoe EA, Van Essen DC. Concurrent processing streams in monkey visual cortex. Trends Neurosci. 1988;11:219–226. doi: 10.1016/0166-2236(88)90130-0.

- Dolan RJ, Vuilleumier P. Amygdala automaticity in emotional processing. Ann N Y Acad Sci. 2003;985:348–355. doi: 10.1111/j.1749-6632.2003.tb07093.x.

- Ekman P, Friesen WV. Pictures of facial affect. Palo Alto, CA: Consulting Psychologists; 1976.

- Franconeri SL, Simons DJ. Moving and looming stimuli capture attention. Percept Psychophys. 2003;65:999–1010. doi: 10.3758/bf03194829.

- Franconeri SL, Simons DJ. The dynamic events that capture visual attention: a reply to Abrams and Christ (2005). Percept Psychophys. 2005;67:962–966. doi: 10.3758/bf03193623.

- Gläscher J, Rose M, Büchel C. Independent effects of emotion and working memory load on visual activation in the lateral occipital complex. J Neurosci. 2007;27:4366–4373. doi: 10.1523/JNEUROSCI.3310-06.2007.

- Goldstein M, Brendel G, Tuescher O, Pan H, Epstein J, Beutel M, Yang Y, Thomas K, Levy K, Silverman M, Clarkin J, Posner M, Kernberg O, Stern E, Silbersweig D. Neural substrates of the interaction of emotional stimulus processing and motor inhibitory control: an emotional linguistic go/no-go fMRI study. Neuroimage. 2007;36:1026–1040. doi: 10.1016/j.neuroimage.2007.01.056.

- Hardee JE, Thompson JC, Puce A. The left amygdala knows fear: laterality in the amygdala response to fearful eyes. Soc Cogn Affect Neurosci. 2008;3:47–54. doi: 10.1093/scan/nsn001.

- Hariri AR, Mattay VS, Tessitore A, Fera F, Weinberger DR. Neocortical modulation of the amygdala response to fearful stimuli. Biol Psychiatry. 2003;53:494–501. doi: 10.1016/s0006-3223(02)01786-9.

- Herry C, Bach DR, Esposito F, Di Salle F, Perrig WJ, Scheffler K, Lüthi A, Seifritz E. Processing of temporal unpredictability in human and animal amygdala. J Neurosci. 2007;27:5958–5966. doi: 10.1523/JNEUROSCI.5218-06.2007.

- Hillstrom AP, Yantis S. Visual motion and attentional capture. Percept Psychophys. 1994;55:399–411. doi: 10.3758/bf03205298.

- Hsu SM, Pessoa L. Dissociable effects of bottom-up and top-down factors on the processing of unattended fearful faces. Neuropsychologia. 2007;45:3075–3086. doi: 10.1016/j.neuropsychologia.2007.05.019.

- Kastner S, Ungerleider LG. Mechanisms of visual attention in the human cortex. Annu Rev Neurosci. 2000;23:315–341. doi: 10.1146/annurev.neuro.23.1.315.

- Kennedy DP, Gläscher J, Tyszka JM, Adolphs R. Personal space regulation by the human amygdala. Nat Neurosci. 2009;12:1226–1227. doi: 10.1038/nn.2381.

- Kveraga K, Boshyan J, Bar M. Magnocellular projections as the trigger of top-down facilitation in recognition. J Neurosci. 2007;27:13232–13240. doi: 10.1523/JNEUROSCI.3481-07.2007.

- Lang PJ, Bradley MM, Cuthbert BN. International affective picture system (IAPS): technical manual and affective ratings. Gainesville, FL: University of Florida; 1999.

- Lavie N. Perceptual load as a necessary condition for selective attention. J Exp Psychol Hum Percept Perform. 1995;21:451–468. doi: 10.1037//0096-1523.21.3.451.

- Lavie N. Distracted and confused?: Selective attention under load. Trends Cogn Sci. 2005;9:75–82. doi: 10.1016/j.tics.2004.12.004.

- Lim SL, Padmala S, Pessoa L. Affective learning modulates spatial competition during low-load attentional conditions. Neuropsychologia. 2008;46:1267–1278. doi: 10.1016/j.neuropsychologia.2007.12.003.

- Livingstone MS, Hubel DH. Psychophysical evidence for separate channels for the perception of form, color, movement, and depth. J Neurosci. 1987;7:3416–3468. doi: 10.1523/JNEUROSCI.07-11-03416.1987.

- Maunsell JH, Newsome WT. Visual processing in monkey extrastriate cortex. Annu Rev Neurosci. 1987;10:363–401. doi: 10.1146/annurev.ne.10.030187.002051.

- Merigan WH, Maunsell JH. How parallel are the primate visual pathways? Annu Rev Neurosci. 1993;16:369–402. doi: 10.1146/annurev.ne.16.030193.002101.

- Mitchell DG, Nakic M, Fridberg D, Kamel N, Pine DS, Blair RJ. The impact of processing load on emotion. Neuroimage. 2007;34:1299–1309. doi: 10.1016/j.neuroimage.2006.10.012.

- Morris JS, Friston KJ, Büchel C, Frith CD, Young AW, Calder AJ, Dolan RJ. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Murphy FC, Nimmo-Smith I, Lawrence AD. Functional neuroanatomy of emotions: a meta-analysis. Cogn Affect Behav Neurosci. 2003;3:207–233. doi: 10.3758/cabn.3.3.207.

- Nakamura H, Kashii S, Nagamine T, Matsui Y, Hashimoto T, Honda Y, Shibasaki H. Human V5 demonstrated by magnetoencephalography using random dot kinematograms of different coherence levels. Neurosci Res. 2003;46:423–433. doi: 10.1016/s0168-0102(03)00119-6.

- Ousdal OT, Jensen J, Server A, Hariri AR, Nakstad PH, Andreassen OA. The human amygdala is involved in general behavioral relevance detection: evidence from an event-related functional magnetic resonance imaging Go-NoGo task. Neuroscience. 2008;156:450–455. doi: 10.1016/j.neuroscience.2008.07.066.

- Palermo R, Rhodes G. Are you always on my mind?: A review of how face perception and attention interact. Neuropsychologia. 2007;45:75–92. doi: 10.1016/j.neuropsychologia.2006.04.025.

- Patzwahl DR, Zanker JM. Mechanisms of human motion perception: combining evidence from evoked potentials, behavioural performance and computational modelling. Eur J Neurosci. 2000;12:273–282. doi: 10.1046/j.1460-9568.2000.00885.x.

- Pessoa L, McKenna M, Gutierrez E, Ungerleider LG. Neural processing of emotional faces requires attention. Proc Natl Acad Sci U S A. 2002;99:11458–11463. doi: 10.1073/pnas.172403899.

- Pessoa L, Padmala S, Morland T. Fate of unattended fearful faces in the amygdala is determined by both attentional resources and cognitive modulation. Neuroimage. 2005;28:249–255. doi: 10.1016/j.neuroimage.2005.05.048.

- Pourtois G, Dan ES, Grandjean D, Sander D, Vuilleumier P. Enhanced extrastriate visual response to bandpass spatial frequency filtered fearful faces: time course and topographic evoked-potentials mapping. Hum Brain Mapp. 2005;26:65–79. doi: 10.1002/hbm.20130.

- Pourtois G, Schwartz S, Seghier ML, Lazeyras F, Vuilleumier P. Neural systems for orienting attention to the location of threat signals: an event-related fMRI study. Neuroimage. 2006;31:920–933. doi: 10.1016/j.neuroimage.2005.12.034.

- Rees G, Friston K, Koch C. A direct quantitative relationship between the functional properties of human and macaque V5. Nat Neurosci. 2000;3:716–723. doi: 10.1038/76673.

- Rosenholtz R. A simple saliency model predicts a number of motion popout phenomena. Vision Res. 1999;39:3157–3163. doi: 10.1016/s0042-6989(99)00077-2.

- Sander D, Grafman J, Zalla T. The human amygdala: an evolved system for relevance detection. Rev Neurosci. 2003;14:303–316. doi: 10.1515/revneuro.2003.14.4.303.

- Schiller PH, Logothetis NK, Charles ER. Role of the color-opponent and broad-band channels in vision. Vis Neurosci. 1990;5:321–346. doi: 10.1017/s0952523800000420.

- Sergerie K, Chochol C, Armony JL. The role of the amygdala in emotional processing: a quantitative meta-analysis of functional neuroimaging studies. Neurosci Biobehav Rev. 2008;32:811–830. doi: 10.1016/j.neubiorev.2007.12.002.

- Siegel M, Donner TH, Oostenveld R, Fries P, Engel AK. High-frequency activity in human visual cortex is modulated by visual motion strength. Cereb Cortex. 2007;17:732–741. doi: 10.1093/cercor/bhk025.

- Silvert L, Lepsien J, Fragopanagos N, Goolsby B, Kiss M, Taylor JG, Raymond JE, Shapiro KL, Eimer M, Nobre AC. Influence of attentional demands on the processing of emotional facial expressions in the amygdala. Neuroimage. 2007;38:357–366. doi: 10.1016/j.neuroimage.2007.07.023.

- Spielberger CD. Manual for the State-Trait Anxiety Inventory. Palo Alto, CA: Consulting Psychologists; 1983.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci. 2003;6:624–631. doi: 10.1038/nn1057.

- Wager TD, Phan KL, Liberzon I, Taylor SF. Valence, gender, and lateralization of functional brain anatomy in emotion: a meta-analysis of findings from neuroimaging. Neuroimage. 2003;19:513–531. doi: 10.1016/s1053-8119(03)00078-8.

- Whalen PJ. Fear, vigilance, and ambiguity: initial neuroimaging studies of the human amygdala. Curr Direct Psychol Sci. 1998;7:177–188.

- Whalen PJ. The uncertainty of it all. Trends Cogn Sci. 2007;11:499–500. doi: 10.1016/j.tics.2007.08.016.

- Whalen PJ, Shin LM, McInerney SC, Fischer H, Wright CI, Rauch SL. A functional MRI study of human amygdala responses to facial expressions of fear vs. anger. Emotion. 2001;1:70–83. doi: 10.1037/1528-3542.1.1.70.

- Wright P, Liu Y. Neutral faces activate the amygdala during identity matching. Neuroimage. 2006;29:628–636. doi: 10.1016/j.neuroimage.2005.07.047.