Abstract

To investigate the neural basis of the associative aspects of facial identification, we recorded neuronal activity from the ventral, anterior inferior temporal cortex (AITv) of macaque monkeys performing an asymmetrical paired-association (APA) task. The task required associative pairing between an abstract pattern and five different facial views of a single person. In the APA task, after one element of a pair (either an abstract pattern or a face) was presented as a sample cue, the reward-seeking monkey had to identify the other element of the pair among successively presented test stimuli (faces or patterns) separated by interstimulus delays. A substantial number of AITv neurons responded to both faces and abstract patterns, and the majority of these neurons responded selectively to a particular associative pair. In addition to the view-invariant identity of the faces used in the APA task, the population of AITv neurons also represented the associative pairing between faces and abstract patterns that was acquired through training in the task. However, the effect of associative pairing was not strong enough for an abstract pattern to be represented like one of the series of faces belonging to a single identity. Together, these findings indicate that the AITv plays a crucial role both in facial identification and in semantic associations with facial identities.

Introduction

The activity of certain neurons in the temporal cortex has been shown to increase selectively in response to the sight of faces, as opposed to all other classes of objects and forms; these neurons have therefore been referred to in the literature as “face neurons” (Bruce et al., 1981; Perrett et al., 1982, 1985; Desimone et al., 1984; Hasselmo et al., 1989; Heywood and Cowey, 1992; Young and Yamane, 1992; Sugase et al., 1999). Together with recent findings from functional brain imaging studies (Kanwisher et al., 1997; Gauthier et al., 2000; Haxby et al., 2001), the existence of face neurons demonstrates that the primate brain contains neural circuitry specialized for processing faces. Recent reports have suggested that face neurons are organized into approximately six discrete face “patches” in the temporal cortex, although how these patches differ functionally remains unclear (Tsao et al., 2003, 2006; Moeller et al., 2008). It is well known that damage to the face-specific neural circuitry in humans causes a characteristic disability, namely, prosopagnosia (Farah, 1990). In the narrow sense, prosopagnosia refers to an inability to identify familiar individuals by their faces. Previous studies have suggested that there are two basic types of prosopagnosia (Damasio et al., 1982; De Renzi et al., 1991; Barton et al., 2002; Barton and Cherkasova, 2003). The first, apperceptive prosopagnosia, implies a deficit in face recognition itself. The second, associative prosopagnosia, implies a deficit in recognizing the semantic information associated with particular faces. However, few studies to date have attempted to elucidate the neural substrate of the associative aspects of facial identification that are disturbed in associative prosopagnosia.

The purpose of the present study was to investigate the neural correlates of the associative aspects of facial identification. To this end, we recorded single-neuron activity in the ventral part of the anterior inferior temporal cortex (AITv) of macaque monkeys performing an asymmetrical paired-association (APA) task that required associative pairing between facial identities and abstract patterns. In our previous study, we found that a population of face-responsive neurons in the AITv can represent the identity of faces in a view-invariant manner (Eifuku et al., 2004). Recent reports have shown that a population of visual neurons in the anterior inferior temporal cortex (AIT) can represent various perceptual categories of visual stimuli, including faces (Kiani et al., 2007). Other studies have reported that AITv neurons respond selectively to particular paired associations of nonfacial, complex pictorial patterns acquired through training (Sakai and Miyashita, 1991; Naya et al., 2001, 2003). On the basis of these findings, we focused on how the AITv represents different faces and the semantic associations of particular facial identities.

Materials and Methods

Subjects and ethics

Three monkeys (three female Macaca fuscata, 4.6–8.4 kg) were used for the experiment.

All experimental protocols used in the present study were approved by the Animal Care and Use Committee of University of Toyama; the protocols also conformed to the National Institutes of Health guidelines for the humane care and use of laboratory animals.

Cognitive task

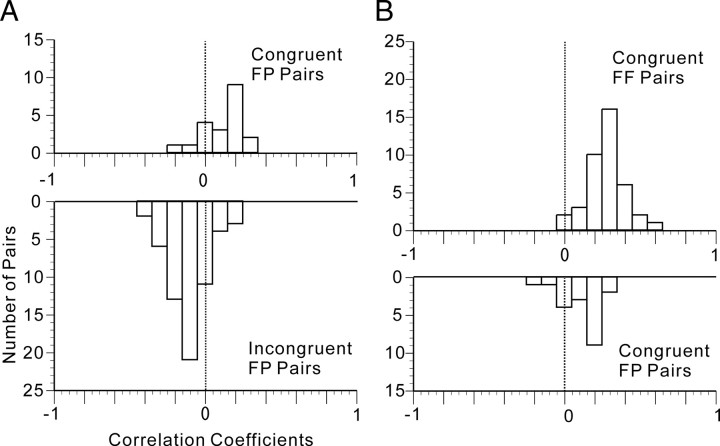

In the APA task, four paired associations between faces and abstract patterns were used. Images of each individual's face were prepared from five different perspectives (left profile, −90°; left oblique, −45°; frontal, ±0°; right oblique, +45°; right profile, +90°), and all five views of that face were associated with a specific abstract pattern to form a paired association (Fig. 1A).

Figure 1.

APA task. A, Four paired associations used in the APA task. Monkeys learned to associatively pair a pattern with a facial identity. Each face was presented in five images taken from five different perspectives. B, Task sequence of a typical “pattern-to-face” trial. During fixation, the pattern cue of a paired association was presented to the animal. Then, after interstimulus delays (ISI), distractors were presented zero to three times until a face target finally appeared, to which the monkey had to respond by pushing a lever to obtain juice. C, Task sequence of a typical “face-to-pattern” trial, in which the temporal order is the reverse of that in the pattern-to-face trial. B and C depict cases in which two distractors were presented.

The temporal structure of the APA task was a variant of a sequential delayed pair-comparison task (Fig. 1B,C). After one element of a pair (either an abstract pattern or a face) was presented as a sample cue (496 ms), the reward-seeking monkey had to identify the other element of the pair among successively presented test stimuli (faces or patterns, 496 ms each) separated by interstimulus delays (992 ms). Before the correct test stimulus (the target) appeared, incorrect test stimuli (distractors) were presented zero to three times in a pseudorandomized manner. In one-half of the distractor presentations in each session, the distractor face or pattern was chosen from the 20 faces (four identities by five perspectives) or four abstract patterns used as paired associates in the task; in the other half, it was chosen from 80 faces or 16 patterns that were not used as paired associates. When the test stimulus was the target, the monkey was trained to push a lever within 800 ms of target onset. All correct trials were rewarded: after a further 1 s delay, approximately 0.2 ml of orange juice was delivered over 2 s. If the monkey failed to respond correctly, a 620 Hz buzzer tone sounded and the reward was withheld. An intertrial interval of 2–4 s was imposed before the onset of the next trial. During the APA task, eye position was monitored with a scleral search coil (Judge et al., 1980), and the eye control window was ±2°. There were thus two types of trials in the APA task. In “pattern-to-face” trials, an abstract-pattern cue was followed by face test stimuli, and the target was one of the five views of the paired face; in “face-to-pattern” trials, a face cue was followed by abstract-pattern test stimuli.

Associative pairing in the APA task was bidirectional, because face-to-pattern and pattern-to-face trials were counterbalanced with equal probability. Nevertheless, the associative pairing was asymmetrical with respect to the following three characteristics. First, one member of each pair was an abstract pattern whereas the other was a face, so the pairing crossed different classes of visual stimuli. Second, one abstract pattern was associated with five different views of a face, so the pairings were based on a one-to-five correspondence. Finally, whereas each abstract pattern was a single fixed image, it was associated with images of one individual's face (i.e., a specific view-invariant identity) seen from five different perspectives, so the visual stimulus was associated with the perceptual category of individual identity.
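The trial timeline described above can be sketched in a few lines. This is a hypothetical Python illustration, not the REX implementation used in the study: the `Event` record, the names `build_trial` and `response_is_correct`, and the independent 50% draw per distractor (an approximation of the exact per-session half/half split) are assumptions; only the durations and the zero-to-three distractor range come from the text.

```python
import random
from dataclasses import dataclass

# Durations (ms) taken from the task description.
SAMPLE_MS = 496
TEST_MS = 496
ISI_MS = 992
RESPONSE_WINDOW_MS = 800


@dataclass
class Event:
    kind: str         # "sample", "delay", "distractor", or "target"
    stimulus: str     # stimulus label ("" for delays)
    onset_ms: int     # onset relative to sample-cue onset
    duration_ms: int


def build_trial(sample, target, associate_pool, novel_pool, rng):
    """Hypothetical sketch of one APA trial timeline.

    The pools must already exclude every correct target (e.g., the other
    views of the target identity). Each distractor is drawn from the
    paired-associate pool or the non-associate pool with probability 0.5.
    """
    n_distractors = rng.randint(0, 3)  # 0 to 3 distractors, inclusive
    events = [Event("sample", sample, 0, SAMPLE_MS)]
    t = SAMPLE_MS
    for i in range(n_distractors + 1):
        events.append(Event("delay", "", t, ISI_MS))
        t += ISI_MS
        if i < n_distractors:
            pool = associate_pool if rng.random() < 0.5 else novel_pool
            events.append(Event("distractor", rng.choice(pool), t, TEST_MS))
        else:
            events.append(Event("target", target, t, TEST_MS))
        t += TEST_MS
    return events


def response_is_correct(target_onset_ms, press_ms):
    """A lever press counts only if it occurs within 800 ms of target onset."""
    return 0 <= press_ms - target_onset_ms <= RESPONSE_WINDOW_MS
```

For example, `build_trial("pattern1", "identity1_+45", ["identity2_0"], ["novel_face_07"], random.Random(1))` returns a list of events whose onsets are contiguous and whose last element is the target.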

Visual stimuli

The visual stimuli used in this study were digitized 256-level grayscale images of the faces of people familiar to each monkey, namely, members of the laboratory involved in the daily care of the subjects. The stimuli were displayed at a resolution of 640 × 480 pixels on a cathode ray tube monitor placed 57 cm from the eyes while the monkey fixated. All stimuli were presented within the center of the receptive field (RF) of each recorded neuron, which was mapped before the experiment (see below, Recording); stimuli were usually centered on the fixation point and subtended 10–15 × 10–15°. Across all stimuli, the luminances of the brightest white and the darkest black (background) were 32.8 and 0.9 cd/m2, respectively. The computer generating the visual stimuli was controlled by the standard laboratory real-time experimental system REX (Hays et al., 1982) running on a dedicated personal computer.
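A viewing distance of 57 cm is conventional because 1 cm on the screen then subtends almost exactly 1° of visual angle, so a 10–15° stimulus spans roughly 10–15 cm on the monitor. The small Python sketch below shows this arithmetic; the function names are illustrative, and no pixel conversion is attempted because the physical screen size is not reported.

```python
import math

VIEWING_DISTANCE_CM = 57.0  # monitor distance stated in the text


def stimulus_size_cm(angle_deg, distance_cm=VIEWING_DISTANCE_CM):
    """On-screen extent (cm) that subtends angle_deg at the eye."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


def visual_angle_deg(size_cm, distance_cm=VIEWING_DISTANCE_CM):
    """Visual angle (deg) subtended by an on-screen extent of size_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))
```

At 57 cm, `stimulus_size_cm(10)` is about 9.97 cm and `stimulus_size_cm(15)` about 15.01 cm, which is why the 1 cm ≈ 1° rule of thumb holds over this range.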

Behavioral training

All monkeys were first trained to perform the behavioral task without head fixation or eye-position measurement. After the monkeys reached ∼80% correct performance on the APA task, they underwent surgery (see below, Surgery) and were prepared for single-cell recording. After the monkeys recovered from surgery, we retrained them to perform the APA task with head fixation and eye-position measurement. Recording of neuronal activity began once the monkeys performed the APA task at >85% correct. The total training period was 9, 8, and 6 months for the three monkeys, respectively.

Training before surgery proceeded in the following order; the criterion for advancing to each subsequent stage was 80% correct responses in 10 successive trials. First, each of the three monkeys was trained on the face-to-pattern trials of the APA task using the four facial identities but only the frontal view as face cues. Each monkey was then transferred to face-to-pattern trials using all five views of the four facial identities. Next, each monkey was trained on pattern-to-face trials using the four facial identities but only the frontal view as the face target, and was then transferred to pattern-to-face trials using all five views. In the next stage, both trial types (directions), i.e., face-to-pattern and pattern-to-face trials, were presented in separate blocks. Finally, both trial types were intermixed in a pseudorandomized order.

In the (re)training after surgery, the same sequence as used for the training before the surgery was repeated, but the criterion for each transfer was 85% correct responses in 10 successive trials.

Surgery

With the animal under general anesthesia with sodium pentobarbital (35 mg/kg, i.m.), a recording chamber was implanted on the surface of the skull over the AIT, and a head holder was embedded in a dental acrylic cap covering the top of the skull; the implant was compatible with magnetic resonance (MR) imaging (Crist et al., 1988). The cylinders and head holders were plastic, and the skull screws were made of titanium. In addition, scleral search coils were implanted in the eyes to record eye movements. During surgery, heart and respiratory function and rectal temperature were monitored on a polygraph (Nihon Kohden), and rectal temperature was maintained at 37 ± 0.5°C with a heating blanket. Antibiotics were administered topically and systemically during the recovery period to protect the animals against infection.

Recording

For recording neuronal activity, a grid was placed within the recording cylinder to guide the insertion of stainless-steel guide tubes through the dura to a depth of ∼10 mm above the AIT. At the beginning of each recording session, the guide-tube stylet was removed and an epoxy-coated tungsten microelectrode (FHC; 1.0–1.5 MΩ at 1 kHz) was inserted. The electrode was advanced with a stepping microdrive (MO95I; Narishige), and neuronal activity was monitored to establish the relative depths of landmarks (including the layers of gray and white matter) and to determine characteristic neuronal responses. We first isolated single-neuron activity in the AIT. Before recording in the task, the size and location of the excitatory RF region were mapped with a mouse-controlled stimulus during a visual-fixation task. We then recorded neuronal activity during performance of the APA task. Successively studied cells were spaced at 100 μm intervals. The behavioral task, stimulus timing, storage of single-neuron activity, and eye position were controlled by the REX system. Single-neuron activity was digitized with a standard spike sorter based on a template-matching algorithm (MSD; Alpha Omega) at a sampling rate of 1 kHz. The data were stored together with information about the stimuli and associated behavioral events. During the experiment, an online raster display showed single-neuron discharges aligned to stimulus and behavioral events. Eye position was monitored by the REX system for behavioral control throughout all experiments and was also recorded.

Data analysis

In this study, we primarily analyzed single-neuron activity in response to the sample cue stimuli, i.e., during the period 64–560 ms after the onset of each stimulus (the 64 ms lag was based on the minimum response latency of the neurons). Control (baseline) firing was measured during the 208 ms period before presentation of the sample cue. Data were included only if at least six trials were available for each stimulus. Off-line analysis included examination of spike density histograms, which were created by replacing each spike with a Gaussian pulse with an SD of 10 ms, using the method of MacPherson and Aldridge (1979) as implemented by Richmond et al. (1987).
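The spike density function described above can be sketched as follows. This is a generic Gaussian-kernel estimate in plain Python, offered as an illustration under the stated parameters (σ = 10 ms, spike times in ms aligned to stimulus onset); it is not claimed to reproduce the exact implementation of MacPherson and Aldridge (1979) or Richmond et al. (1987), and the function name is hypothetical.

```python
import math


def spike_density(trials, t_start_ms, t_stop_ms, sigma_ms=10.0, step_ms=1.0):
    """Trial-averaged firing rate (spikes/s) from Gaussian-smoothed spikes.

    trials: list of lists of spike times (ms), aligned to stimulus onset.
    Each spike is replaced by a unit-area Gaussian with SD sigma_ms, so the
    integral of the curve equals the mean spike count per trial.
    """
    n_steps = int(round((t_stop_ms - t_start_ms) / step_ms)) + 1
    times = [t_start_ms + k * step_ms for k in range(n_steps)]
    norm = 1.0 / (sigma_ms * math.sqrt(2.0 * math.pi))
    rates = []
    for t in times:
        total = 0.0
        for spikes in trials:
            for s in spikes:
                z = (t - s) / sigma_ms
                total += norm * math.exp(-0.5 * z * z)
        # Sum is in spikes per ms per trial; convert to spikes per second.
        rates.append(1000.0 * total / len(trials))
    return times, rates
```

A single spike at 0 ms produces a peak of about 39.9 spikes/s (1000 / (10·√(2π))) at t = 0, and the area under the curve over a wide window is one spike.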

Receiver operating characteristic analyses.

We analyzed receiver operating characteristic (ROC) curves based on the firing of each neuron during the period 64–560 ms after the onset of each stimulus. For a facial identity i, the ROC curve was computed from two distributions: R_i, the distribution of firing in response to identity i, and R_¬i, the distribution of firing in response to identities other than i. The area under the ROC curve (AUC) was then calculated for each of the four identities, and the maximum was designated as AUCbest identity. To estimate the significance of the original ROC curve, we also analyzed ROC curves based on surrogate data generated by randomly shuffling the relationship between responses and stimuli. Similarly, we calculated AUCbest view for the facial views and AUCbest pattern for the abstract patterns.
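The ROC procedure above can be sketched in plain Python. The sketch relies on the standard equivalence between the AUC and the probability that a randomly chosen response to identity i exceeds a randomly chosen response to the other identities (ties counted as one-half). The surrogate test follows the z test against 20 label-shuffled surrogates reported in Results; computing the surrogates only for the best label, and all function names, are assumptions made for illustration.

```python
import random
import statistics


def spike_rate(spikes_ms, start_ms=64.0, stop_ms=560.0):
    """Firing rate (spikes/s) in [start_ms, stop_ms) after stimulus onset."""
    n = sum(1 for s in spikes_ms if start_ms <= s < stop_ms)
    return 1000.0 * n / (stop_ms - start_ms)


def auc(pos, neg):
    """AUC = P(pos > neg) + 0.5 * P(pos == neg) over all response pairs."""
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))


def split_by_label(rates, labels, label):
    """Responses to `label` (R_i) and to all other labels (R_not_i)."""
    pos = [r for r, lab in zip(rates, labels) if lab == label]
    neg = [r for r, lab in zip(rates, labels) if lab != label]
    return pos, neg


def best_label_auc(rates, labels):
    """One-vs-rest AUC for every label; return (best_label, best_auc)."""
    scores = {lab: auc(*split_by_label(rates, labels, lab))
              for lab in sorted(set(labels))}
    best = max(scores, key=scores.get)
    return best, scores[best]


def surrogate_z(rates, labels, label, n_surrogates=20, seed=0):
    """z score of the observed AUC against label-shuffled surrogate AUCs."""
    rng = random.Random(seed)
    observed = auc(*split_by_label(rates, labels, label))
    null = []
    for _ in range(n_surrogates):
        shuffled = list(labels)
        rng.shuffle(shuffled)  # destroys the stimulus-response relationship
        null.append(auc(*split_by_label(rates, shuffled, label)))
    return (observed - statistics.mean(null)) / statistics.stdev(null)
```

The same functions apply to facial views or abstract patterns by passing view or pattern labels instead of identity labels.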

To investigate temporal changes in the neuronal representations, we also performed ROC analyses on 64 ms time windows starting at −128, −64, 0, 64, 128, 192, 256, 320, 384, 448, and 512 ms after cue-face onset. The best identity, best view, and best pattern determined from the responses in the full window (64–560 ms) were used unchanged in the analyses of these shorter windows.
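The windowed analysis can be sketched as follows: spikes are counted in each 64 ms window, and the AUC is computed per window for a best label fixed in advance from the full 64–560 ms window. Half-open windows [start, start + 64) and the function names are assumptions of this Python sketch; the compact `auc` repeats the pairwise-comparison definition so that the block stands alone.

```python
WINDOW_MS = 64
WINDOW_STARTS_MS = list(range(-128, 513, 64))  # -128, -64, 0, ..., 512


def auc(pos, neg):
    """AUC as P(pos > neg) + 0.5 * P(pos == neg)."""
    return sum((p > q) + 0.5 * (p == q) for p in pos for q in neg) / (len(pos) * len(neg))


def windowed_rates(spikes_ms, starts=WINDOW_STARTS_MS, width=WINDOW_MS):
    """Firing rate (spikes/s) in each [start, start + width) window."""
    return [1000.0 * sum(1 for s in spikes_ms if t0 <= s < t0 + width) / width
            for t0 in starts]


def windowed_auc(trials, labels, best_label):
    """AUC per window; best_label stays fixed (taken from the full window)."""
    per_trial = [windowed_rates(spikes) for spikes in trials]
    out = []
    for w in range(len(WINDOW_STARTS_MS)):
        pos = [r[w] for r, lab in zip(per_trial, labels) if lab == best_label]
        neg = [r[w] for r, lab in zip(per_trial, labels) if lab != best_label]
        out.append(auc(pos, neg))
    return out
```

Windows in which no response differs between stimuli yield an AUC of 0.5, so the time course shows when label information emerges after cue onset.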

Correlation and cluster analyses.

To investigate how the population of neurons represented the stimuli, we performed correlation and cluster analyses. Neuronal responses to the 24 cue stimuli (20 faces, i.e., four identities by five views, plus four abstract patterns) were used for this multivariate analysis. Note that all associative-pair-responsive neurons recorded in the AITv that satisfied the criteria (i.e., showed significant visual responses to both face and pattern cues) were included in the analysis; no further selection was made. To minimize the influence of differences in firing rate between neurons, responses were normalized: for each neuron, the averaged response to each stimulus was divided by the norm of that neuron's averaged responses to all 24 stimuli. For every pair of the 24 visual stimuli (24C2 = 276 pairs), the correlation coefficient (r) between the arrays of normalized responses of all associative-pair-responsive neurons in the population was calculated. Student's t test with Welch's correction (significance level p = 0.05) was used to test whether the mean of the z′-transformed r values for pairs of a particular stimulus type differed from zero, or whether the means of the z′-transformed r values differed between pairs of particular stimulus types. The dissimilarity between two stimuli was defined as 1 − r, and the matrix of dissimilarities for all pairs of faces and patterns was computed. A cluster analysis was performed on this dissimilarity matrix, and the results were displayed as a dendrogram.
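The population analysis above can be sketched in plain Python: normalize each neuron's response vector by its norm, describe each stimulus by its vector of normalized responses across neurons, correlate stimuli pairwise, and cluster on 1 − r. The text does not state the linkage rule, so average linkage (UPGMA) is an assumption here; in practice `scipy.cluster.hierarchy.linkage` with `method="average"` would do the same job. Function names are illustrative.

```python
import math

N_STIMULI = 24
N_PAIRS = math.comb(N_STIMULI, 2)  # 276 stimulus pairs


def normalize_rows(responses):
    """Divide each neuron's response vector by its Euclidean norm."""
    out = []
    for row in responses:
        norm = math.sqrt(sum(x * x for x in row))
        out.append([x / norm for x in row])
    return out


def pearson(x, y):
    mx, my = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def stimulus_correlations(responses):
    """responses[n][s]: baseline-subtracted response of neuron n to stimulus s.

    Returns the S x S matrix of Pearson r between stimulus population vectors.
    """
    columns = list(zip(*normalize_rows(responses)))  # one vector per stimulus
    return [[pearson(ci, cj) for cj in columns] for ci in columns]


def fisher_z(r):
    """Fisher z' transform, applied to off-diagonal r values before t tests."""
    return math.atanh(r)


def average_linkage(dissim):
    """Naive UPGMA clustering (assumed linkage); returns merges in order."""
    clusters = {i: [i] for i in range(len(dissim))}
    merges = []
    while len(clusters) > 1:
        keys = list(clusters)
        best = None
        for a in range(len(keys)):
            for b in range(a + 1, len(keys)):
                ca, cb = clusters[keys[a]], clusters[keys[b]]
                d = sum(dissim[i][j] for i in ca for j in cb) / (len(ca) * len(cb))
                if best is None or d < best[0]:
                    best = (d, keys[a], keys[b])
        d, ka, kb = best
        merges.append((sorted(clusters[ka]), sorted(clusters[kb]), d))
        clusters[ka] = clusters[ka] + clusters.pop(kb)
    return merges
```

Usage: `r = stimulus_correlations(responses)`, then `average_linkage([[1 - x for x in row] for row in r])`; the merge list is the information a dendrogram displays.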

Recording sites

For all monkeys, MR imaging was used to guide the placement of electrodes into the AITv, and the positions of the recorded neurons and recording electrodes were confirmed by MR imaging during the experiment. All MR images included a marker (tungsten, 500 μm in diameter), and the calculated recording sites were verified with reference to the coordinates of the marker. After the final recording session, the locations of the neurons analyzed in this study were reconstructed from histological investigation and MR images. Several small marker lesions were made in the AITv by passing 20–30 μA of anodal current for 40 s through an electrode placed stereotaxically, whose position was monitored by MR imaging. All three animals were then deeply anesthetized with an overdose of sodium pentobarbital (50 mg/kg, i.m.) and perfused transcardially with 0.9% saline followed by 10% buffered formalin. The brains were removed and cut into 50 μm sections through the target areas. The sections were stained with cresyl violet, and the sites of the electrically induced lesions were determined microscopically. The location of each recording site was then calculated by comparing its stereotaxic coordinates with those of the lesions. All visually responsive neurons in the present study were recorded between 17 and 23 mm anterior to the interaural line, where the anterior middle temporal sulcus (AMTS) is located. Many neurons in the AITv sample were near the AMTS, in a region that roughly corresponds to area TEav (Tamura and Tanaka, 2001) and area AITv (Felleman and Van Essen, 1991).

Results

Correct-response rates in the APA task ranged from 78.87 to 99.52% across the three monkeys. We compared behavioral performance between the face-to-pattern trials and the pattern-to-face trials. For the sessions analyzed in the present study (n = 120 sessions), the mean ± SD percentage correct was 92.38 ± 5.54% in face-to-pattern trials and 93.24 ± 4.83% in pattern-to-face trials. The difference between the two trial types was not significant (two-tailed paired t test; p = 0.0640).

Neuronal data: individual

A total of 432 neurons were recorded over the entire recording period in the three monkeys. Of these, 156 were cue responsive, i.e., showed significantly increased activity in response to at least one of the 24 possible cue pictures (20 faces and four patterns; paired t test, significance level p = 0.05); these neurons were recorded in four hemispheres of the three monkeys during performance of the APA task. Because we searched for cue-responsive neurons in the initial stage of the experiments, the sample may be subject to sampling bias. Recording sites were confirmed to lie in the AITv both by MR imaging and by histological investigation (see Materials and Methods). Of the cue-responsive neurons, 120 showed significant increases in activity in response to at least one cue face (64–560 ms after cue onset; paired t test, significance level p = 0.05) on the face-to-pattern trials; these neurons were classified as face responsive. Of the face-responsive neurons, 80 (66.7%) also showed significant increases in response to at least one cue abstract pattern (64–560 ms after cue onset; paired t test, significance level p = 0.05) on the pattern-to-face trials; these neurons were designated as associative-pair responsive and were subjected to further analysis. A two-way repeated-measures ANOVA (factors: facial identity and facial view; significance level p = 0.05) applied to the response magnitudes to cue faces (i.e., activity in the cue-face phase minus spontaneous activity) revealed, among the associative-pair-responsive neurons, a significant main effect of facial identity in 43 (53.8%), a significant main effect of facial view in 27 (33.8%), and a significant facial identity × facial view interaction in 19 (23.8%).

Of the associative-pair-responsive neurons, 34 (42.5%) also exhibited a significant main effect of cue abstract pattern in a one-way repeated-measures ANOVA (factor: abstract pattern; significance level p = 0.05) applied to the response magnitudes to cue abstract patterns (i.e., activity in the cue-pattern phase minus spontaneous activity). For the majority of associative-pair-responsive neurons, the best facial identity (the identity that elicited the largest cue response magnitude in the face-to-pattern trials) and the best abstract pattern (the pattern that elicited the largest cue response magnitude in the pattern-to-face trials) formed one of the associative pairs that the monkeys had learned in the APA task. These neurons were classified as associative-pair-selective neurons (n = 63). Note that the associative-pair-selective neurons included neurons with relatively broad tuning across abstract patterns; for some of these neurons, the main effect of abstract pattern in the one-way ANOVA was not significant.
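The associative-pair-selective criterion reduces to a simple comparison, sketched below in Python. Ranking identities by their response averaged over the five views (as in the per-identity summaries of Fig. 2D) is an assumption, as are the function names and the default pairing of identity k with pattern k.

```python
def best_identity(face_resp):
    """face_resp[i][v]: mean cue-response magnitude to identity i in view v.

    Assumption: identities are ranked by their response averaged over views.
    """
    means = [sum(views) / len(views) for views in face_resp]
    return max(range(len(means)), key=lambda i: means[i])


def best_pattern(pattern_resp):
    """pattern_resp[p]: mean cue-response magnitude to abstract pattern p."""
    return max(range(len(pattern_resp)), key=lambda p: pattern_resp[p])


def is_associative_pair_selective(face_resp, pattern_resp, pairing=None):
    """True if the best identity and the best pattern form a trained pair.

    pairing maps identity index -> paired pattern index; by default,
    identity k is assumed to be paired with pattern k.
    """
    if pairing is None:
        pairing = {k: k for k in range(len(face_resp))}
    return pairing[best_identity(face_resp)] == best_pattern(pattern_resp)
```

Note that this criterion needs no significant main effect of pattern, which is consistent with the observation that some associative-pair-selective neurons were broadly tuned across patterns.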

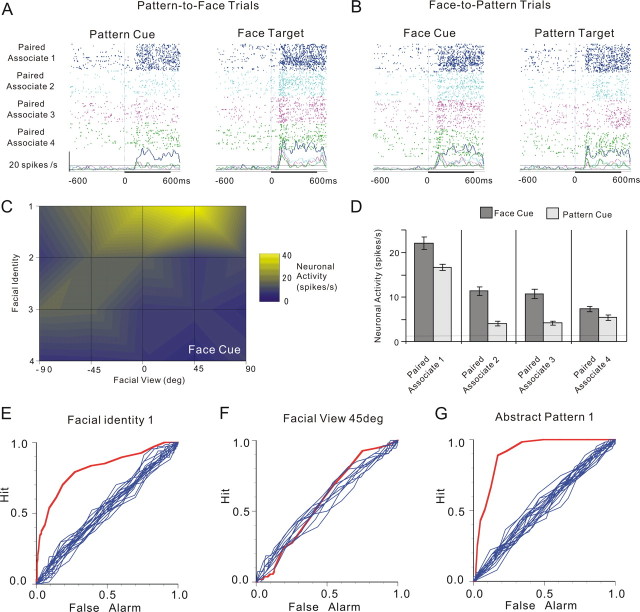

An example of an associative-pair-selective neuron is depicted in Figure 2. In the pattern-to-face trials (Fig. 2A, left, D, light bars), this neuron was selective for patterns; responses to cue abstract patterns showed a significant main effect of pattern in a one-way repeated-measures ANOVA (factor: pattern, F(3,246) = 60.57; p = 5.9613 × 10−32). Specifically, this neuron responded best to the cue presentation of pattern 1 (Fisher's PLSD post hoc test at a significance level of p = 0.05). In the face-to-pattern trials (Fig. 2B, left, C,D, dark bars), this neuron was selective for both facial identity and facial view; responses to cue faces showed significant main effects of facial identity (F(3,246) = 60.57; p = 2.3380 × 10−25) and facial view (F(4,246) = 5.27; p = 4.3582 × 10−4), as well as a significant interaction of facial identity by facial view (F(12,246) = 9.44; p = 5.3291 × 10−15), in a two-way repeated-measures ANOVA (factors: facial identity and facial view). This neuron responded best to the cue presentation of identity 1 in the +45° view (Fig. 2C) (Fisher's PLSD post hoc test at a significance level of p = 0.05). We also performed ROC analysis of the neuronal firing in response to cue faces or patterns; the results for the best facial identity (identity 1), the best facial view (+45°), and the best abstract pattern (pattern 1) are depicted in Figure 2E–G. The corresponding AUC values were 0.81, 0.57, and 0.90. The difference between each AUC and the AUCs obtained from the surrogate ROC curves (n = 20) was tested with a z test; the p values for the three configurations were 8.39 × 10−171, 0.26, and 0.00 × 10−250, respectively.

Figure 2.

An example of associative-pair-selective neurons. A, Neuronal activity in the pattern-to-face trials of an associative-pair-selective neuron is displayed in rasters and spike-density functions (σ = 10 ms). All graphs are aligned to the onset of the cue (left) and target (right) presentation (time 0). Raster colors indicate the four paired associations; the five views are intermixed in the rasters tinted according to the paired associates. Dashed lines indicate the mean firing rate during the control period (208 ms before cue presentation) ± 2 SD. B, Neuronal activity in the face-to-pattern trials of the same neuron. The figure conventions are the same as those in A. C, Summary of cue-face responses. The mean firing rates of cue-face responses to the five facial views of the four facial identities are coded by color. D, Summary of neuronal responses to cue faces (cue-face responses) in face-to-pattern trials and to cue patterns (cue-pattern responses) in pattern-to-face trials. The cue-face panel shows the mean firing rates ± SEM during the period 64–560 ms after cue onset (cue period) for each facial identity (averaged over the five facial views). The cue-pattern panel shows the mean firing rates ± SEM in the cue period for each pattern. E, ROC curve of neuronal responses to cue faces that best discriminates a particular facial identity. Of the four identities, the ROC curve for identity 1, which produced the largest AUC value, is depicted (red). Ten ROC plots based on surrogate data in which the relationship between stimulus and response was destroyed are also shown in blue. F, ROC curve of neuronal responses to cue faces that best discriminates a particular facial view (the +45° view). The figure conventions are the same as those in E.
G, ROC curve of neuronal responses to cue patterns that best discriminates a particular pattern (pattern 1). The figure conventions are the same as those in E.

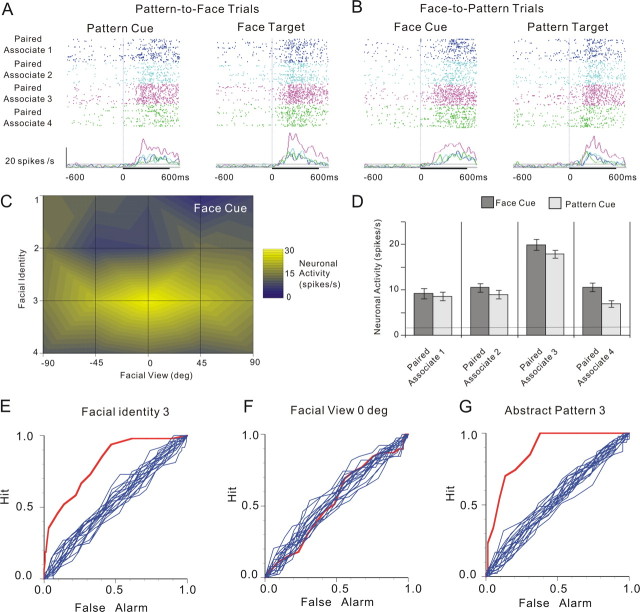

Figure 3 depicts another associative-pair-selective neuron, one with relatively broad selectivity for facial views. This neuron responded best to the cue presentation of pattern 3 in the pattern-to-face trials and to identity 3 in the 0° view in the face-to-pattern trials. In the pattern-to-face trials (Fig. 3A, left, D, light bars), responses to cue patterns showed a significant main effect of pattern in a one-way repeated-measures ANOVA (factor: pattern; F(3,199) = 31.3; p = 1.1102 × 10−16), and the neuron responded best to the cue presentation of pattern 3 (Fisher's PLSD post hoc test at a significance level of p = 0.05). In the face-to-pattern trials (Fig. 3B, left, C,D, dark bars), responses to cue faces showed a significant main effect of facial identity (F(3,186) = 24.22; p = 2.820 × 10−13) and a significant interaction of facial identity by facial view (F(12,186) = 2.35; p = 0.008) in a two-way repeated-measures ANOVA (factors: facial identity and facial view); the main effect of facial view was not significant (F(4,186) = 2.35; p = 0.7724). This neuron responded best to the cue presentation of identity 3 (Fisher's PLSD post hoc test at a significance level of p = 0.05). ROC analysis of the neuronal firing in response to cue faces or patterns was also performed; the results for the best facial identity (identity 3), the best facial view (0°), and the best abstract pattern (pattern 3) are depicted in Figure 3E–G. The corresponding AUC values were 0.81, 0.50, and 0.88. The difference between each AUC and the AUCs obtained from the surrogate ROC curves (n = 20) was tested with a z test; the p values for the three configurations were 5.39 × 10−105, 0.33, and 8.25 × 10−228, respectively.

Figure 3.

An example of associative-pair-selective neurons. A–G, The figure conventions are the same as those in Figure 2. The ROC curves are obtained for facial identity 3, facial view ±0°, and abstract pattern 3. Error bars indicate mean ± SEM.

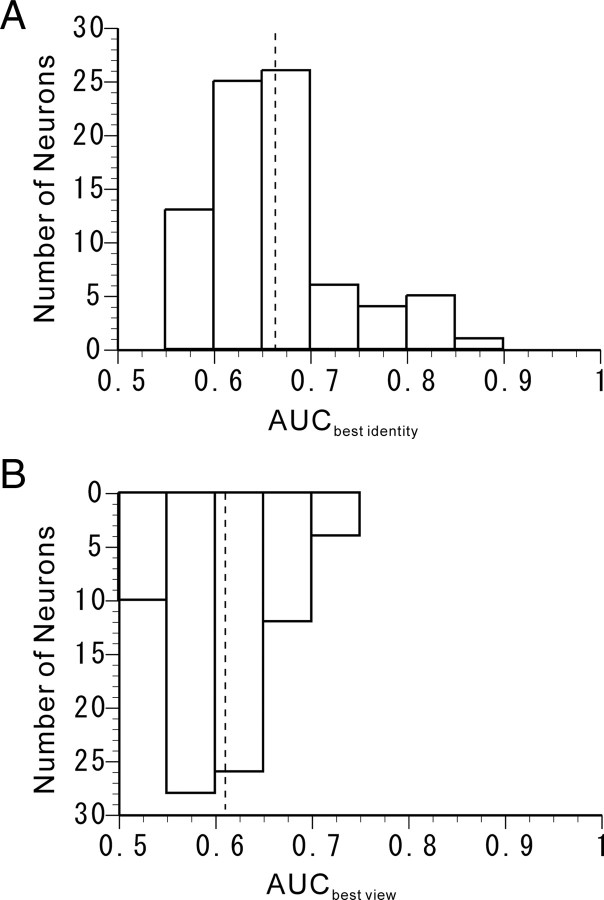

We then calculated AUC values for the best facial identity and for the best facial view of all associative-pair-responsive neurons. The distributions of these values are displayed in Figure 4, A and B. The mean ± SD of AUCbest identity was 0.6626 ± 0.0679, and that of AUCbest view was 0.6094 ± 0.0491. The mean AUCbest identity was significantly larger than the mean AUCbest view (two-tailed paired t test; p = 3.2300 × 10−14). These results indicate that the associative-pair-responsive neurons were more selective for facial identities than for facial views.

Figure 4.

ROC analysis of individual associative-pair-responsive neurons. A, Distribution of the AUCbest identity obtained from the ROC curve to best discriminate facial identity. The dashed line on the graph indicates the mean AUCbest identity. B, Distribution of the AUCbest view obtained from the ROC curve to best discriminate facial views. The dashed line on the graph indicates the mean AUCbest view.

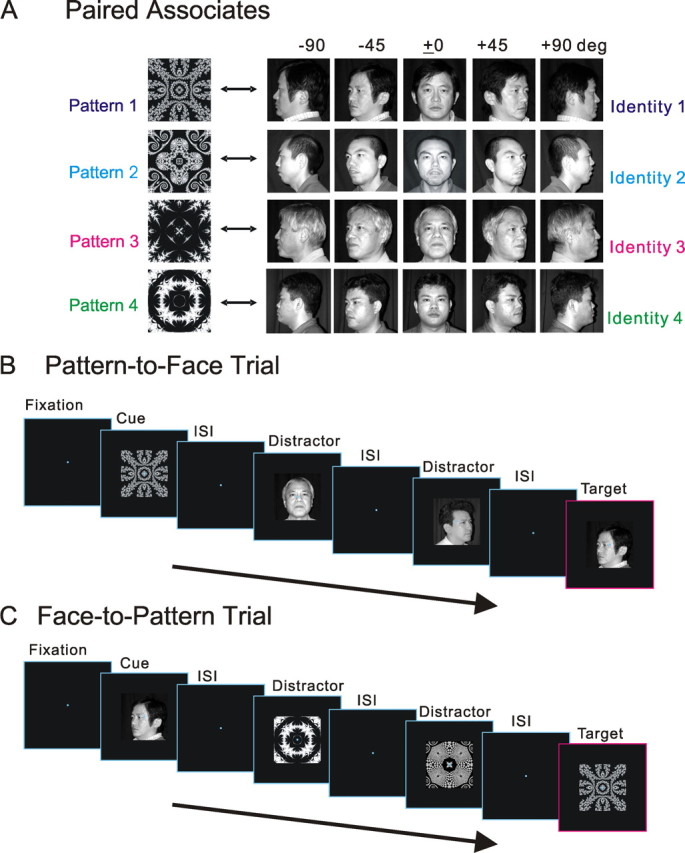

Subsequently, to investigate the degree of learned associative pairing reflected in the cue responses, we compared, for all associative-pair-responsive neurons, the responses to cue patterns in the pattern-to-face trials with those to cue faces in the face-to-pattern trials (scatter plots in Fig. 5A). The correlation coefficients between the responses to cue faces and cue patterns in these scatter plots are summarized in Table 1. Figure 5B shows the relationship between the best cue pattern in the pattern-to-face trials and the best cue face in the face-to-pattern trials for all associative-pair-responsive neurons. In 63 (78.8%) of the associative-pair-responsive neurons, the best cue face and the best cue pattern matched (neurons on the diagonal), and these neurons were thus classified as associative-pair selective. Because the marginal distributions in Figure 5B indicated that the probability of a neuron's best response falling on each stimulus was not uniform, we performed a χ2 test that took this uneven distribution into account. Associative-pair-selective neurons occurred among the associative-pair-responsive neurons significantly more often than predicted by chance (χ2 test, df = 9; χ2 = 116.217; p = 7.9341 × 10−21), suggesting that these neurons plausibly reflect paired-associate learning.
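One plausible reading of this test is a Pearson χ2 test of independence on the 4 × 4 table of best cue pattern versus best face identity (Fig. 5B): expected counts are built from the row and column marginals, which accounts for the uneven probability of each stimulus being a neuron's best, and a 4 × 4 table gives df = (4 − 1)(4 − 1) = 9, matching the reported value. The Python sketch below computes the statistic and degrees of freedom under that assumption; `scipy.stats.chi2_contingency` returns the same statistic together with a p value. Function names are illustrative.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic for an R x C contingency table.

    table[i][j]: number of neurons whose best pattern is i and best face
    identity is j. Expected counts come from the row and column marginals,
    so every row and column total must be nonzero.
    Returns (statistic, degrees_of_freedom).
    """
    row_tot = [sum(row) for row in table]
    col_tot = [sum(col) for col in zip(*table)]
    n = sum(row_tot)
    stat = 0.0
    for i, row in enumerate(table):
        for j, obs in enumerate(row):
            expected = row_tot[i] * col_tot[j] / n
            stat += (obs - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return stat, dof


def diagonal_count(table):
    """Neurons whose best pattern and best identity form a trained pair."""
    return sum(table[k][k] for k in range(len(table)))
```

With a 4 × 4 table the function returns df = 9; a large statistic driven by excess counts on the diagonal corresponds to the pair-selective pattern described in the text.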

Figure 5.

Associative-pair selectivity of individual associative-pair-responsive neurons. A, Scatter plots of cue-face responses versus cue-pattern responses for paired associations 1 to 4. Spontaneous activity was subtracted from the responses, which were then normalized by the norm of the responses to the 24 visual stimuli. Different facial views are indicated by different colors. B, Comparison between best-cue patterns and best-cue faces of the associative-pair-selective (dark; n = 63) and other responsive [white; n = 17 (80 − 63)] neurons. Diagonal positions indicate agreement between the best-cue pattern and the best-cue face.
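The normalization described in the Figure 5 legend can be sketched as follows. The sketch assumes that the norm is the Euclidean (L2) norm of a neuron's 24 baseline-subtracted responses (20 faces plus 4 patterns). The function name and example values are hypothetical.

```python
import numpy as np

def normalize_responses(responses, spontaneous):
    """Baseline-subtract and unit-normalize one neuron's cue responses.

    responses   : mean firing rates to the 24 stimuli (20 faces + 4 patterns)
    spontaneous : the neuron's spontaneous firing rate
    Returns a vector with unit Euclidean (L2) norm (assumed norm).
    """
    r = np.asarray(responses, dtype=float) - spontaneous
    norm = np.linalg.norm(r)
    if norm == 0:
        raise ValueError("responses equal the spontaneous rate for all stimuli")
    return r / norm

# Hypothetical neuron: rates (spikes/s) to 24 stimuli, spontaneous rate 2 spikes/s.
rates = [5.0, 6.0] + [2.0] * 22
v = normalize_responses(rates, spontaneous=2.0)
print(v[:3])
```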

Table 1.

Correlation between face-cue response and pattern-cue response

| Correlation coefficients | −90° | −45° | 0° | 45° | 90° |
|---|---|---|---|---|---|
| Identity 1 | 0.1696 | 0.2652* | 0.4389** | 0.1772 | 0.4367** |
| Identity 2 | 0.3689** | 0.4602** | 0.5483** | 0.5004** | 0.4137** |
| Identity 3 | 0.5114** | 0.4449** | 0.5198** | 0.2725* | 0.4958** |
| Identity 4 | 0.4454** | 0.5017** | 0.5251** | 0.3877** | 0.5889** |

*p < 0.05;

**p < 0.001.

In addition, to investigate whether the preference for the abstract pattern associated with an individual face was present only during correct performance of the APA task or also during erroneous trials, we analyzed neuronal responses during erroneous trials to cue presentation of the best abstract pattern, as defined in the correct trials. We also analyzed the activity of some associative-pair-selective neurons for which recordings under a passive viewing condition were available, to examine whether this preference was present only during performance of the APA task or also during passive fixation. Neuronal activity under both conditions was consistent with that during the correct trials of the APA task (supplemental PDF, supplemental Fig. 1, available at www.jneurosci.org as supplemental material).

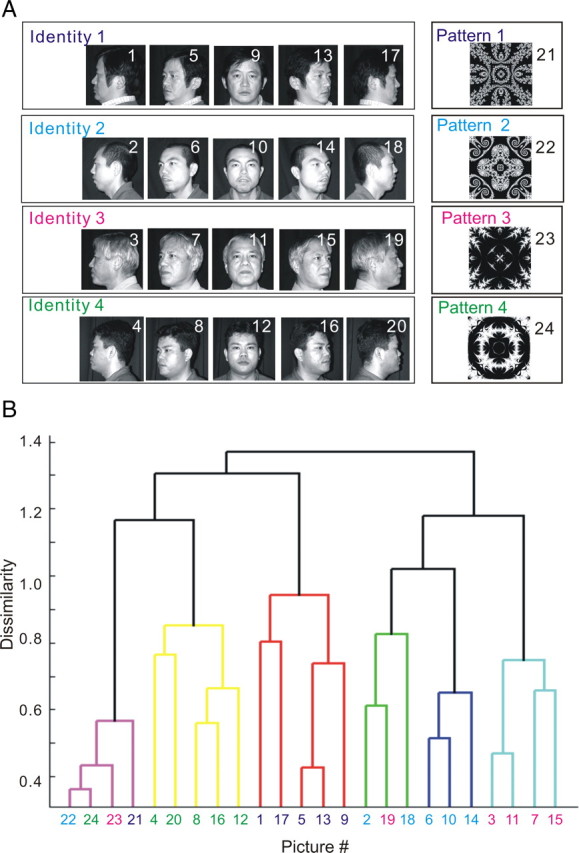

Neuronal data: population

Using multivariate analysis of the population data from all of the associative-pair-responsive neurons in the AITv area (n = 80), we analyzed the representations of 20 different faces (four identities by five views) and four abstract patterns in this area, using methods similar to those of our previous study (Eifuku et al., 2004). The cue responses of all associative-pair-responsive neurons to either faces or patterns were registered. Dissimilarities for all possible stimulus pairs (C(24, 2) = 276 pairs) were defined on the basis of the correlation coefficients between the cue response magnitudes to each stimulus in the pair (see Materials and Methods). A cluster analysis was then performed on these dissimilarity measures. Figure 6 shows the resulting dendrogram, which depicts the dissimilarity/similarity relationships among the representations of the 20 faces (four identities by five views) and the four abstract patterns. In the dendrogram, the four abstract pictorial patterns were segregated into a separate cluster, and the faces belonging to each of the four facial identities formed four nearly distinct clusters.
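The clustering step can be sketched with SciPy. Each stimulus is represented by the vector of the 80 neurons' cue responses. The sketch assumes that the dissimilarity is 1 − r, where r is the Pearson correlation between two stimulus vectors (this is what `pdist(..., metric="correlation")` computes). It also assumes average linkage. Both are assumptions, because the exact definitions are in Materials and Methods. The simulated responses are hypothetical.

```python
import numpy as np
from scipy.cluster.hierarchy import linkage
from scipy.spatial.distance import pdist

def cluster_stimuli(responses, method="average"):
    """Hierarchical clustering of stimuli from population response vectors.

    responses : array of shape (n_stimuli, n_neurons); row i holds the cue
                response magnitudes of every neuron to stimulus i.
    Dissimilarity is assumed to be 1 - Pearson r between two rows; the
    linkage method ("average") is also an assumption.
    """
    d = pdist(np.asarray(responses, dtype=float), metric="correlation")
    return d, linkage(d, method=method)

# Hypothetical data: 24 stimuli (20 faces + 4 patterns) x 80 neurons.
rng = np.random.default_rng(0)
fake = rng.gamma(shape=2.0, scale=5.0, size=(24, 80))
d, Z = cluster_stimuli(fake)
print(len(d))   # one dissimilarity per stimulus pair, C(24, 2) = 276
print(Z.shape)  # 23 merge steps for 24 leaves
```

The linkage matrix `Z` can be passed to `scipy.cluster.hierarchy.dendrogram` to draw a tree like the one in Figure 6B.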

Figure 6.

Dendrogram obtained by cluster analysis of the population of associative-pair-responsive neurons in the AITv area. A, The 20 faces (4 identities by 5 views) and four abstract patterns used as visual stimuli in the APA task. A picture number was assigned to each visual stimulus. The four paired associates are indicated by different colors. B, The dendrogram obtained by the cluster analysis depicts the dissimilarity/similarity relationships among all visual stimuli used in the cognitive task, consisting of 20 faces (4 identities by 5 views) and four abstract patterns. The cluster analysis used the dissimilarity of all possible stimulus pairs, defined on the basis of the correlation coefficients between the magnitudes of the cue responses to each stimulus in the pair, for all of the associative-pair-responsive neurons recorded in the AITv (n = 80).

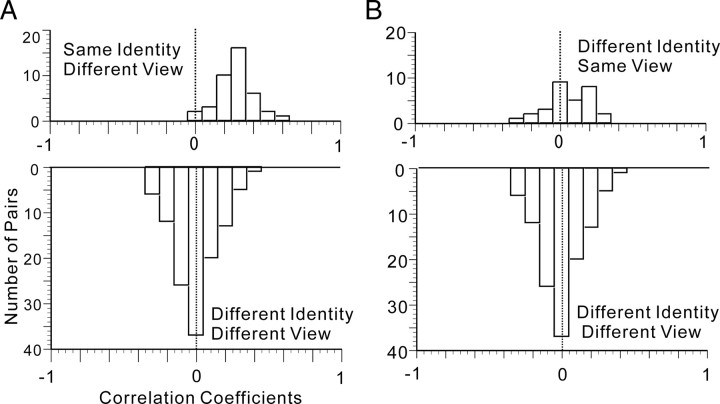

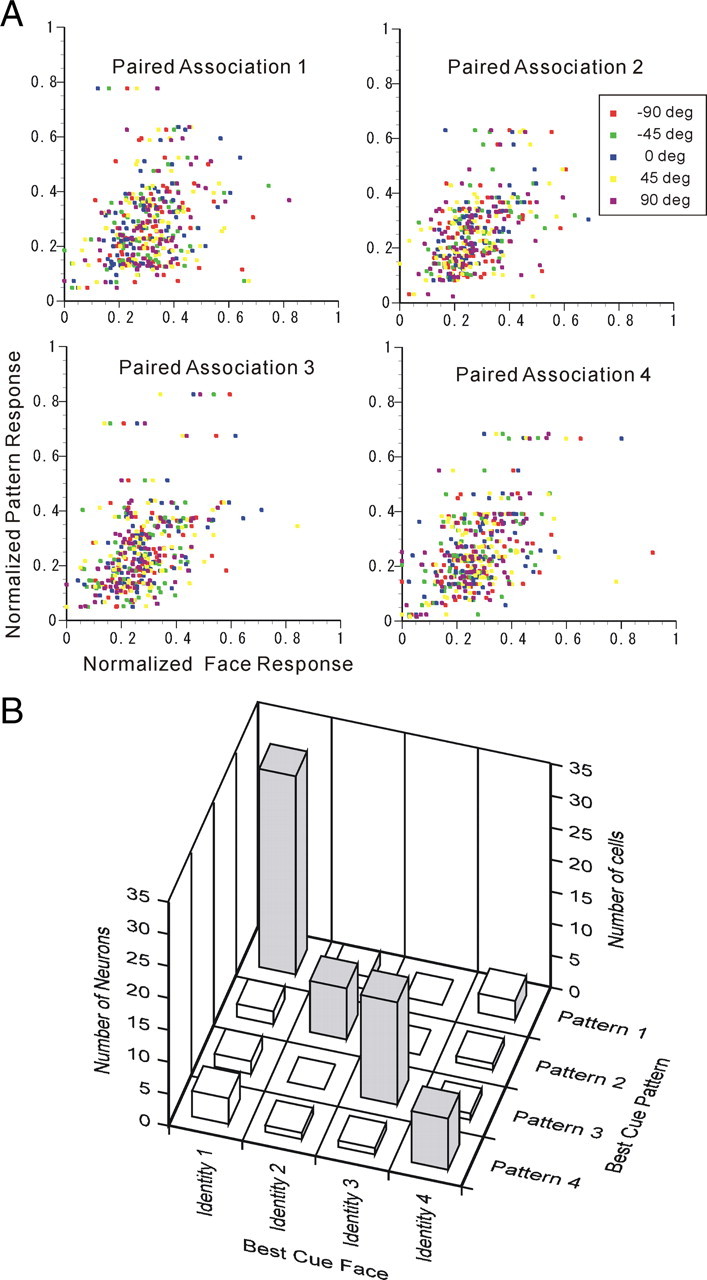

We then examined the neural representation of the faces in more detail, using t statistics on the correlation coefficients. We first compared the correlation coefficients (r) between pairs of faces of the same individual seen from different views (40 pairs) with those between pairs of faces of different identities seen from different views (C(20, 2) − 30 − 40 = 120 pairs). The frequency distribution of the correlation coefficients between faces of the same identity seen from different views is shown in Figure 7A (top). The mean ± SD of the correlation coefficients was 0.2802 ± 0.1257, and the mean differed significantly from zero (two-tailed, z′-transformed Student's t test; t = 13.21355; df = 39; p = 5.5423 × 10⁻¹⁶). The frequency distribution of the correlation coefficients between faces of different identities seen from different views is shown in Figure 7A (bottom). The mean ± SD of the correlation coefficients was −0.0359 ± 0.1594, and the mean did not differ significantly from zero (two-tailed, z′-transformed Student's t test; t = −0.1425; df = 119; p = 0.8869).
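The z′-transformed one-sample t test used above can be sketched as follows. Each correlation coefficient is first passed through Fisher's transformation, z′ = arctanh(r), which makes its sampling distribution approximately normal. The transformed values are then tested against zero with a two-tailed one-sample t test, with df = n − 1 (e.g., 39 for 40 pairs). The function name and the example coefficients are hypothetical. `scipy.stats.ttest_1samp` is the standard SciPy routine.

```python
import numpy as np
from scipy import stats

def z_transformed_ttest(r, popmean=0.0):
    """Two-tailed one-sample t test on Fisher z'-transformed correlations.

    z' = arctanh(r) requires -1 < r < 1 for every coefficient.
    """
    z = np.arctanh(np.asarray(r, dtype=float))
    return stats.ttest_1samp(z, popmean=np.arctanh(popmean))

# Hypothetical correlation coefficients for same-identity face pairs.
r_same_identity = [0.31, 0.18, 0.42, 0.25, 0.29, 0.12]
res = z_transformed_ttest(r_same_identity)
print(f"t = {res.statistic:.3f}, df = {len(r_same_identity) - 1}, p = {res.pvalue:.3g}")
```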

Figure 7.

Representation of faces by the population of associative-pair-responsive neurons in the AITv area. A, The frequency distribution of correlation coefficients (r) between faces of the same identity but seen from different viewpoints (top), and of correlation coefficients (r) between faces of different identities and seen from different viewpoints (bottom). B, Frequency distribution of correlation coefficients (r) between faces seen from the same angle but of different identities (top), and of correlation coefficients (r) between faces shown from different perspectives and of different identities (bottom).

Next, we compared the correlation coefficients between pairs of faces of different identities seen from the same viewpoint (30 pairs) with those between pairs of faces of different identities seen from different viewpoints (C(20, 2) − 40 − 30 = 120 pairs). The frequency distribution of the correlation coefficients between faces seen from the same angle but with different identities is shown in Figure 7B (top). The mean ± SD of the correlation coefficients was 0.0550 ± 0.1510, and the mean did not differ significantly from zero (two-tailed, z′-transformed Student's t test with Welch's correction; t = 1.9901; df = 29; p = 0.0561). The frequency distribution of the correlation coefficients between faces with different identities seen from different viewpoints is shown in Figure 7B (bottom); Figure 7A (bottom) and Figure 7B (bottom) are identical. These results suggest that the population of associative-pair-responsive neurons can represent facial identity independently of facial view, but cannot represent facial view independently of facial identity.
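The pair counts used in these comparisons follow from the 4 identities × 5 views design. The short enumeration below labels each face with a hypothetical (identity, view) tuple, using the views listed in Table 1. It sorts the C(20, 2) = 190 face pairs into the three categories.

```python
from itertools import combinations

# Hypothetical labelling of the 20 face stimuli as (identity, view) tuples.
identities = range(1, 5)
views = (-90, -45, 0, 45, 90)
faces = [(i, v) for i in identities for v in views]

counts = {"same identity, different view": 0,
          "same view, different identity": 0,
          "different identity, different view": 0}
for (i1, v1), (i2, v2) in combinations(faces, 2):
    if i1 == i2:          # distinct faces of one identity differ in view
        counts["same identity, different view"] += 1
    elif v1 == v2:
        counts["same view, different identity"] += 1
    else:
        counts["different identity, different view"] += 1
print(counts)  # 40, 30 and 120 pairs, as in the text
```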

We then examined the effect of paired-association learning on the neural representation, again using t statistics on the correlation coefficients. First, we compared the correlation coefficients between pairs consisting of a pattern and a face belonging to the same paired association (designated congruent face-pattern (FP) pairs; 20 pairs) with those between pairs consisting of a pattern and a face not belonging to the same paired association (designated incongruent FP pairs; 60 pairs). The frequency distribution of the correlation coefficients for congruent FP pairs is shown in Figure 8A (top). The mean ± SD of the correlation coefficients was 0.1212 ± 0.1374; the mean was positive and differed significantly from zero (two-tailed, z′-transformed Student's t test; t = 3.9312; df = 19; p = 0.0009). The frequency distribution of the correlation coefficients for incongruent FP pairs is shown in Figure 8A (bottom). The mean ± SD of the correlation coefficients was −0.1001 ± 0.1307; the mean was negative and differed significantly from zero (two-tailed, z′-transformed Student's t test; t = −6.0250; df = 59; p = 1.1800 × 10⁻⁷). Moreover, the means of the two distributions differed significantly (two-tailed, z′-transformed Student's t test with Welch's correction; t = 6.3664; df = 32; p = 3.7735 × 10⁻⁷). Thus, the representation of an abstract pattern associatively paired with a facial identity was significantly closer to the representations of the faces possessing that identity than was the representation of an abstract pattern not paired with it. These results indicate a substantial impact of associative pairing on the neural representation of faces and abstract patterns.
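The comparison between congruent and incongruent FP pairs uses Welch's t test on z′-transformed coefficients. The sketch below assumes SciPy's `ttest_ind(..., equal_var=False)` as the implementation and computes the Welch-Satterthwaite degrees of freedom explicitly. The study reports integer df values, possibly rounded from this non-integer quantity. The simulated correlations are hypothetical and are not the study's data.

```python
import numpy as np
from scipy import stats

def welch_z_test(r_a, r_b):
    """Two-tailed Welch t test on Fisher z'-transformed correlations.

    Returns the t statistic, the p value and the Welch-Satterthwaite df.
    """
    za = np.arctanh(np.asarray(r_a, dtype=float))
    zb = np.arctanh(np.asarray(r_b, dtype=float))
    res = stats.ttest_ind(za, zb, equal_var=False)
    va = za.var(ddof=1) / za.size  # squared standard error, group a
    vb = zb.var(ddof=1) / zb.size  # squared standard error, group b
    df = (va + vb) ** 2 / (va ** 2 / (za.size - 1) + vb ** 2 / (zb.size - 1))
    return res.statistic, res.pvalue, df

# Hypothetical correlations for 20 congruent and 60 incongruent FP pairs.
rng = np.random.default_rng(1)
r_congruent = np.tanh(rng.normal(0.10, 0.15, size=20))
r_incongruent = np.tanh(rng.normal(-0.10, 0.15, size=60))
t, p, df = welch_z_test(r_congruent, r_incongruent)
print(f"t = {t:.3f}, df = {df:.1f}, p = {p:.2g}")
```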

Figure 8.

Representation of paired associations by a population of associative-pair-responsive neurons in the AITv area. A, Frequency distribution of correlation coefficients (r) between a face and a pattern paired as associates (congruent FP pair; top) and of correlation coefficients (r) between a face and a pattern not paired as associates (incongruent FP pair; bottom). B, Frequency distribution of correlation coefficients (r) between images of the same face seen from different viewpoints (congruent FF pair; top) and of correlation coefficients (r) between a face and a pattern paired as associates (congruent FP pair; bottom).

Second, we compared the correlation coefficients between pairs of faces of the same identity shown from different views (designated congruent face-face (FF) pairs; 4 × C(5, 2) = 40 pairs) with the correlation coefficients for congruent FP pairs. The frequency distribution of the correlation coefficients for congruent FF pairs is shown in Figure 8B (top); this graph is identical to Figure 7A (top). The frequency distribution of the correlation coefficients for congruent FP pairs is shown in Figure 8B (bottom); this graph is identical to Figure 8A (top). The means of the two distributions differed significantly (two-tailed, z′-transformed Student's t test with Welch's correction; t = 4.4030; df = 38; p = 8.3980 × 10⁻⁵). Thus, the representation of a face of a given identity seen from one perspective was significantly closer to the representation of another face of the same identity seen from a different perspective than to the representation of the abstract pictorial pattern associatively paired with that identity.

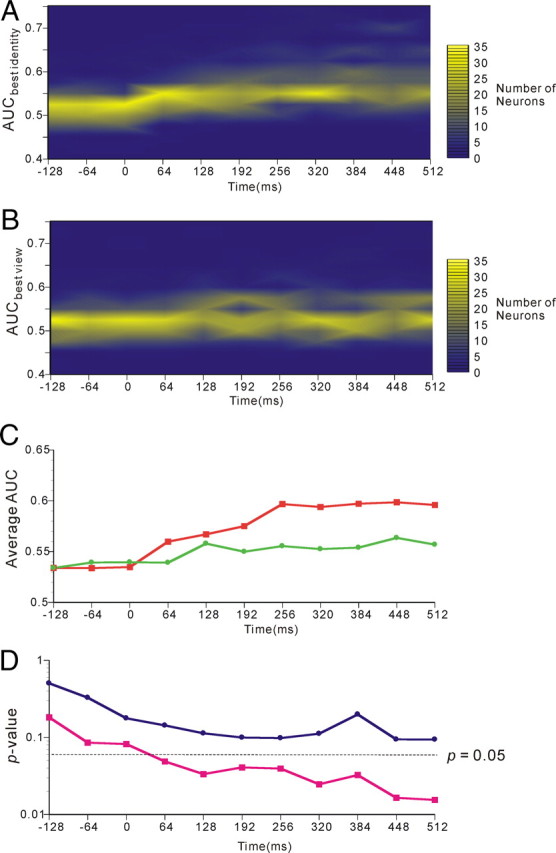

Finally, we investigated the temporal changes in the AITv representations of facial identities and of paired associations. We performed the same ROC analysis as for Figure 4 and the same correlation analysis as for Figures 7 and 8, but with a 64 ms time window, which is shorter than the window used for the previous figures. The temporal changes in the AITv representations of facial identities and facial views are depicted in Figure 9. In this figure, the firing of individual neurons was analyzed in 64 ms time windows starting −128, −64, 0, 64, 128, 192, 256, 320, 384, 448, and 512 ms after cue-face onset. Figure 9 presents the temporal changes in the distributions of AUCbest identity (A) and AUCbest view (B) computed from the ROC analyses, and in the means of AUCbest identity and AUCbest view (C). In Figure 9C, AUCbest identity and AUCbest view rose in the 64–128 and 128–192 ms time segments (64 ms width), respectively, and then remained almost stationary. Figure 9D shows the time course of the p values for testing whether the correlation coefficients differed from zero. One trace is for faces of the same identity seen from different views (magenta); the other is for faces of different identities seen from different views (blue). The p value for faces of the same facial identity reached the 0.05 significance level in the 64–128 ms time segment (64 ms width) and gradually decreased thereafter.
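The sliding-window analysis can be sketched as follows. Spike times from a single trial, aligned to cue onset, are binned into the eleven 64 ms windows listed above. The resulting rates would then enter the same ROC and correlation analyses as before. Treating each window as half-open, [start, start + 64) ms, and expressing counts as spikes/s are assumptions. The function and constant names are hypothetical.

```python
import numpy as np

# Window onsets (ms relative to cue onset) listed in the text; width 64 ms.
WINDOW_STARTS = np.arange(-128, 513, 64)  # -128, -64, ..., 448, 512
WIDTH = 64

def windowed_rates(spike_times_ms, starts=WINDOW_STARTS, width=WIDTH):
    """Firing rate (spikes/s) of one trial in each window [start, start + width)."""
    t = np.asarray(spike_times_ms, dtype=float)
    counts = np.array([np.count_nonzero((t >= s) & (t < s + width)) for s in starts])
    return counts * 1000.0 / width

# Hypothetical spike times (ms) from one trial, aligned to cue onset.
rates = windowed_rates([-100, 10, 20, 70, 600])
print(rates)
```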

Figure 9.

Temporal changes in AITv-area representations of facial identities. In each panel, statistical analysis was performed using a 64 ms window starting −128, −64, 0, 64, 128, 192, 256, 320, 384, 448, or 512 ms after the onset of cue-face presentation. A, Temporal course of the distribution of the AUCbest identity obtained by the ROC analysis of individual neurons' responses to cue faces. B, Temporal course of the distribution of the AUCbest view obtained by the ROC analysis of individual neurons' responses to cue faces. C, Temporal course of the mean AUCbest identity (red) and AUCbest view (green). D, Temporal course of the p values for the differences from zero of the correlation coefficients between neural responses to faces of the same identity seen from different views (magenta) and between neural responses to faces of different identities seen from different views (blue).

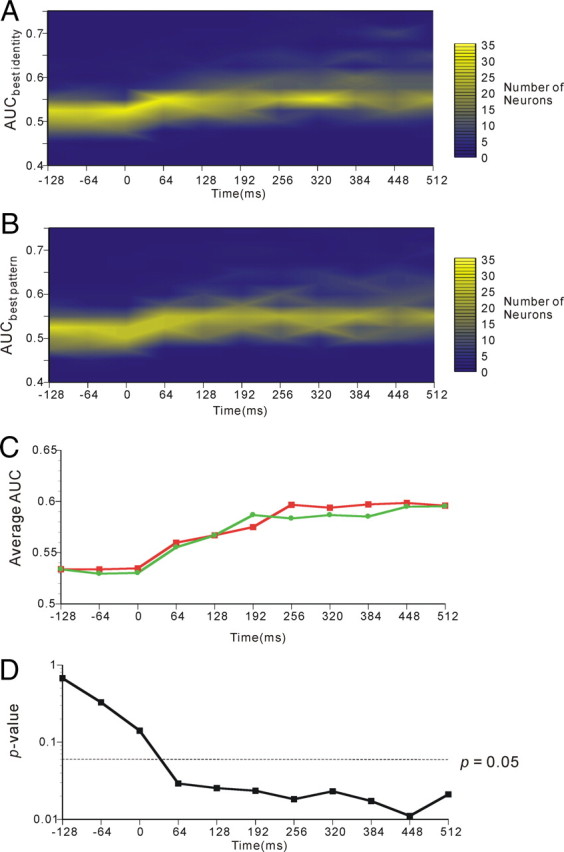

Next, the temporal changes in the AITv representations of paired associations were determined (Fig. 10). Again, the firing of individual neurons was analyzed in 64 ms time windows starting −128, −64, 0, 64, 128, 192, 256, 320, 384, 448, and 512 ms after cue-face or cue-pattern onset. Figure 10 presents the temporal changes in the distributions of AUCbest identity (A) and AUCbest pattern (B) computed from the ROC analyses, and in the means of AUCbest identity and AUCbest pattern (C). Figure 10D shows the time course of the p values for the difference between the mean correlation coefficient for congruent FP pairs and that for incongruent FP pairs. This p value dropped in the 64–128 ms time segment (64 ms width), reaching the 0.05 significance level in that segment. Thereafter, the correlation coefficients gradually increased and the p value gradually decreased.

Figure 10.

Temporal changes in AITv-area representations of paired associations. In each panel, statistical analysis was performed using a 64 ms window starting −128, −64, 0, 64, 128, 192, 256, 320, 384, 448, or 512 ms after the onset of cue-face or cue-pattern presentation. A, Temporal course of the distribution of the AUCbest identity obtained by the ROC analysis of individual neurons' responses to cue faces. B, Temporal course of the distribution of the AUCbest pattern obtained by the ROC analysis of individual neurons' responses to cue patterns. C, Temporal course of the mean AUCbest identity (red) and AUCbest pattern (green). D, Temporal course of the p values for the difference between the mean correlation coefficient for congruent FP pairs and that for incongruent FP pairs.

Discussion

Our results demonstrated that a significant number of face-responsive neurons that selectively responded to a specific facial identity also responded to a specific pattern used in the paired-associative learning. In the majority of these neurons, the best-responding pattern was the counterpart of the best-responding facial identity in the paired associates (Figs. 2, 3). The proportion of associative-pair-selective neurons among the associative-pair-responsive neurons was significantly larger than expected by chance, as shown by a χ² test that took into account the uneven distribution of the probability for each stimulus. This indicates that the selectivity of the associative-pair-selective neurons plausibly reflected associative pairing acquired (or learned) by the monkeys (Fig. 5B). The present results are consistent with previous reports of neural correlates of paired-associative learning of pictorial patterns in monkeys (Sakai and Miyashita, 1991; Naya et al., 2001, 2003). They also demonstrate that paired-associative learning can influence neuronal activity even across different classes of visual stimuli (i.e., in this case, abstract patterns and familiar faces).

In our previous study (Eifuku et al., 2004), 52, 10, and 21 face neurons showed, respectively, a significant main effect of facial identity, a significant main effect of facial view, and a significant interaction between facial view and facial identity in the two-way ANOVA. The corresponding numbers were somewhat different in the present study (43, 27, and 19 neurons), although we recorded from the cortical area around the AMTS, which is very close to the area recorded in that previous study. However, the number of neurons with a significant main effect of facial identity but without a significant main effect of facial view was comparable between the two studies: 34 (21.8% of 156 cue-responsive neurons) in the present study and 43 (21.1% of 204 visually responsive neurons) in the previous study. The neurons described in Figure 3 of the present study and the neuron described in Figure 6 of Eifuku et al. (2004) belonged to this group.

The present results revealed that the correlation coefficients between neuronal responses to faces of the same identity viewed from different angles were significantly larger than zero, whereas those between responses to faces viewed from the same angle but of different identities were not. This implies that a population of face-responsive neurons in the inferior temporal area AITv was able to represent facial identity in a view-invariant manner (Fig. 7A) but did not represent facial view independently of facial identity (Fig. 7B), consistent with our previous report (Eifuku et al., 2004). Studies from other laboratories have also suggested that information about facial identity is reflected in the neural activity of the face-dedicated areas, or “face patches,” in the anterior temporal cortical areas of monkeys (Hasselmo et al., 1989; Tsao et al., 2006). In this context, it should be noted that visual neurons in the AIT area can represent various perceptual categories of visual stimuli, including faces (Kiani et al., 2007). Meanwhile, a substantial number of previous reports have described view-specific neural responses to objects or faces in the inferior temporal areas of the monkey brain (Perrett et al., 1985, 1998; Oram and Perrett, 1992; Logothetis et al., 1995). Consistent with these reports, the view-specificity indices of face neuronal responses in our AITv sample were distributed over a wide, continuous range, including some neurons with relatively precise view specificity (supplemental PDF, supplemental Fig. 2, available at www.jneurosci.org as supplemental material).

Thus, at the level of the individual neuron, face-responsive neurons that showed selectivity for facial identity often showed selectivity for facial views as well. However, when the responses of the face-responsive neurons in the AITv area were pooled, we found representations of facial identities that could be interpreted as view invariant. Recently, optical and multiunit activity recordings performed in the inferior temporal cortex of monkeys revealed one or possibly more properties shared by the neurons in a column (Sato et al., 2009). That important study implied that the dimensions of information represented by inferior temporal neuron activity can differ between individual neurons and a population of pooled neurons. Although our study examined only the functional aspects of neuronal activity and lacked a strong structural basis, our data still support the notion of a difference between the individual and pooled levels of neuronal activity. Additional studies will be needed to elucidate the computations underlying such pooling.

Our analysis of population data from AITv neurons revealed that the representation of an abstract pattern associated with a facial identity was significantly closer to the representations of the faces of that identity than was the representation of an abstract pattern not associated with it (Fig. 8A). These results demonstrate a significant effect of associative pairing (or learning) between faces and abstract patterns on the neural representations formed by the population of face-responsive neurons in the AITv. At first glance, the cluster analysis in Figure 6B may seem inconsistent with the presence of neurons that responded selectively to abstract patterns. If a significant fraction of neurons were selective for paired associates, the associated stimuli (i.e., the five views of a single identity and its paired abstract pattern) would be expected to form a cluster. However, the correlation analysis also showed that the effect of pairing was not so strong that the abstract pattern was treated in a manner similar to a series of faces belonging to a single identity (Fig. 8B). Thus, in the AITv, an abstract pattern associated with a facial identity was represented differently from the faces of that identity shown from different views. This result is consistent with the finding that, in the dendrogram of Figure 6B, the abstract patterns associated with facial identities formed a separate cluster, distinct from the clusters of faces. It has been reported that some semantic categories can be represented by the activity of certain visual neurons in the AITv (Tomita et al., 1999). Our results suggest that the semantic association measured in the present study differs from the perceptual association underlying the perception of a view-invariant facial identity. At this point, the reason for this difference between semantic and perceptual associations remains unclear. One possibility is that the relatively short period of paired-associative training in this study yielded different results compared with the long-term exposure to the particular faces with which these monkeys were very familiar.

As depicted in Figures 9 and 10, the p value for the view-invariant facial identity reached the 0.05 significance level as early as the 64–128 ms time segment (64 ms width) of the neuronal responses and then decreased gradually and monotonically. Importantly, the p value for the paired associations also reached the 0.05 significance level in the same early 64–128 ms segment and then decreased gradually and monotonically. These findings are compatible with the observation that the very early segment of face neuronal responses transmits substantial information about faces (Oram and Perrett, 1992). It may be interesting to compare our results with those of Naya et al. (2001), who showed that responses to a learned associate were greatly delayed in the AITv compared with the perirhinal cortex. Note that their results concerned sustained neuronal activity during the interstimulus intervals after the cue was turned off, which they associated with the retrieval of the upcoming target stimuli. In contrast, our data concern neuronal responses to the cue presentation, which likely reflect the processing of cue stimuli; the two studies may therefore have addressed different aspects of the pair-association memory task.

In humans, the apperceptive subtype of prosopagnosia (i.e., a deficit in recognizing a face) is generally attributed to damage involving the fusiform face area. In contrast, the associative subtype of prosopagnosia (i.e., a deficit in recognizing associations between a facial identity and related attributes, such as certain types of semantic information) is generally attributed to damage involving the anterior temporal cortical areas (Damasio et al., 1982; De Renzi et al., 1991; Barton et al., 2002; Barton and Cherkasova, 2003). The APA task used in the present study was designed to functionally simulate semantic associations with facial identities, as such associations appear to be disrupted in patients with the associative subtype of prosopagnosia. We found that facial identities and semantic associations with facial identities (examined here using abstract patterns paired with facial identities) were both represented by the same population of AITv neurons. The associative combination of faces with abstract patterns observed in the AITv provides a plausible neural basis for semantic associations with facial identities and may partially account for the associative subtype of prosopagnosia.

Footnotes

This work was supported by Grants-in-Aid for Scientific Research from the Ministry of Education, Culture, Sports, Science and Technology, Japan (Grants 18020012, 19500348, and 20119006). We thank Yoshio Daimon and Takashi Kitamura for providing technical assistance and care for the animals.

The authors declare no competing financial interests.

References

- Barton JJ, Cherkasova M. Face imagery and its relation to perception and covert recognition in prosopagnosia. Neurology. 2003;61:220–225. doi: 10.1212/01.wnl.0000071229.11658.f8. [DOI] [PubMed] [Google Scholar]

- Barton JJ, Press DZ, Keenan JP, O'Connor M. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology. 2002;58:71–78. doi: 10.1212/wnl.58.1.71. [DOI] [PubMed] [Google Scholar]

- Bruce CJ, Desimone R, Gross CG. Visual properties of neurons in a polysensory area in superior temporal sulcus of the macaque. J Neurophysiol. 1981;46:369–384. doi: 10.1152/jn.1981.46.2.369. [DOI] [PubMed] [Google Scholar]

- Crist CF, Yamasaki DSG, Komatsu H, Wurtz RH. A grid systems and a microsyringe for single cell recording. J Neurosci Methods. 1988;26:117–122. doi: 10.1016/0165-0270(88)90160-4. [DOI] [PubMed] [Google Scholar]

- Damasio AR, Damasio H, Van Hoesen GW. Prosopagnosia: anatomic basis and behavioral mechanisms. Neurology. 1982;32:331–341. doi: 10.1212/wnl.32.4.331. [DOI] [PubMed] [Google Scholar]

- De Renzi E, Faglioni P, Grossi P, Nichelli P. Apperceptive and associative forms of prosopagnosia. Cortex. 1991;27:213–222. doi: 10.1016/s0010-9452(13)80125-6. [DOI] [PubMed] [Google Scholar]

- Desimone R, Albright TD, Gross CG, Bruce CJ. Stimulus-selective properties of inferior temporal neurons in the macaque. J Neurosci. 1984;8:2051–2062. doi: 10.1523/JNEUROSCI.04-08-02051.1984. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Eifuku S, De Souza WC, Tamura R, Nishijo H, Ono T. Neuronal correlates of face identification in the monkey anterior temporal cortical areas. J Neurophysiol. 2004;91:358–371. doi: 10.1152/jn.00198.2003. [DOI] [PubMed] [Google Scholar]

- Farah MJ. Cambridge, MA: MIT; 1990. Visual agnosia: disorders of object recognition and what they tell us about normal vision. [Google Scholar]

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate visual cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a. [DOI] [PubMed] [Google Scholar]

- Gauthier I, Skudlarski P, Gore JC, Anderson AW. Expertise for cars and birds recruits brain areas involved in face recognition. Nat Neurosci. 2000;3:191–197. doi: 10.1038/72140. [DOI] [PubMed] [Google Scholar]

- Hasselmo ME, Rolls ET, Baylis GC. The role of expression and identity in the face selective response of neurons in the temporal visual cortex of the monkey. Behav Brain Res. 1989;32:203–218. doi: 10.1016/s0166-4328(89)80054-3. [DOI] [PubMed] [Google Scholar]

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;28:2425–2430. doi: 10.1126/science.1063736. [DOI] [PubMed] [Google Scholar]

- Hays AV, Richmond BJ, Optican LM. A UNIX-based multiple process system for real-time data acquisition and control. WESCON Conf Proc. 1982;2:1–10. [Google Scholar]

- Heywood CA, Cowey A. The role of the “face-cell” area in the discrimination and recognition of faces by monkeys. Philos Trans R Soc Lond B Biol Sci. 1992;335:31–38. doi: 10.1098/rstb.1992.0004. [DOI] [PubMed] [Google Scholar]

- Judge SJ, Richmond BJ, Chu FC. Implantation of magnetic search coils for measurement of eye position: an improved method. Vision Res. 1980;20:535–538. doi: 10.1016/0042-6989(80)90128-5. [DOI] [PubMed] [Google Scholar]

- Kanwisher N, Mcdermott J, Chun MM. The fusiform face areas: a module in human extra striate cortex specialized for face perception. J Neurosci. 1997;17:4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kiani R, Esteky H, Mirpour K, Tanaka K. Object category structure in response patterns of neuronal population in monkey inferior temporal cortex. J Neurophysiol. 2007;97:4296–4309. doi: 10.1152/jn.00024.2007. [DOI] [PubMed] [Google Scholar]

- Logothetis NK, Pauls J, Poggio T. Shape representation in the inferior temporal cortex of monkeys. Curr Biol. 1995;5:552–563. doi: 10.1016/s0960-9822(95)00108-4. [DOI] [PubMed] [Google Scholar]

- MacPherson JM, Aldridge JW. A quantitative method of computer analysis of spike train data collected from behaving animals. Brain Res. 1979;175:183–187. doi: 10.1016/0006-8993(79)90530-4. [DOI] [PubMed] [Google Scholar]

- Moeller S, Freiwald WA, Tsao DY. Patches with links: a unified system for processing faces in the macaque temporal lobe. Science. 2008;320:1355–1359. doi: 10.1126/science.1157436. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Naya Y, Yoshida M, Miyashita Y. Backward spreading of memory retrieval signal in the primate temporal cortex. Science. 2001;291:661–664. doi: 10.1126/science.291.5504.661. [DOI] [PubMed] [Google Scholar]

- Naya Y, Yoshida M, Miyashita Y. Forward processing of long-term associative memory in monkey inferotemporal cortex. J Neurosci. 2003;23:2861–2871. doi: 10.1523/JNEUROSCI.23-07-02861.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oram MW, Perrett DI. Time course of neural responses discriminating different view of the face and head. J Neurophysiol. 1992;68:70–84. doi: 10.1152/jn.1992.68.1.70. [DOI] [PubMed] [Google Scholar]

- Perrett DI, Rolls ET, Caan W. Visual neurons responsive to faces in the monkey temporal cortex. Exp Brain Res. 1982;47:29–342. doi: 10.1007/BF00239352. [DOI] [PubMed] [Google Scholar]

- Perrett DI, Smith PA, Potter DD, Mistlin AJ, Head AS, Milner AD, Jeeves MA. Visual cells in the temporal cortex sensitive to face view and gaze direction. Proc R Soc Lond B Biol Sci. 1985;223:293–317. doi: 10.1098/rspb.1985.0003. [DOI] [PubMed] [Google Scholar]

- Perrett DI, Oram MW, Ashbridge E. Evidence accumulation in cell populations responsive to faces: an account of generalization of recognition without mental transformations. Cognition. 1998;67:115–145. doi: 10.1016/s0010-0277(98)00015-8.

- Richmond BJ, Optican LM, Podell M, Spitzer H. Temporal encoding of two-dimensional patterns by single units in primate inferior temporal cortex. I. Response characteristics. J Neurophysiol. 1987;57:132–146. doi: 10.1152/jn.1987.57.1.132.

- Sakai K, Miyashita Y. Neural organization for the long-term memory of paired associates. Nature. 1991;354:152–155. doi: 10.1038/354152a0.

- Sato T, Uchida G, Tanifuji M. Cortical columnar organization is reconsidered in inferior temporal cortex. Cereb Cortex. 2009;19:1870–1888. doi: 10.1093/cercor/bhn218.

- Sugase Y, Yamane S, Ueno S, Kawano K. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400:869–873. doi: 10.1038/23703.

- Tamura H, Tanaka K. Visual response properties of cells in the ventral and dorsal parts of the macaque inferotemporal cortex. Cereb Cortex. 2001;11:384–399. doi: 10.1093/cercor/11.5.384.

- Tomita H, Ohbayashi M, Nakahara K, Hasegawa I, Miyashita Y. Top down signal from prefrontal cortex in executive control of memory retrieval. Nature. 1999;401:699–703. doi: 10.1038/44372.

- Tsao DY, Freiwald WA, Knutsen TA, Mandeville JB, Tootell RB. Faces and objects in macaque cerebral cortex. Nat Neurosci. 2003;6:989–995. doi: 10.1038/nn1111.

- Tsao DY, Freiwald WA, Tootell RBH, Livingstone MS. A cortical region consisting entirely of face-selective cells. Science. 2006;311:670–674. doi: 10.1126/science.1119983.

- Young MP, Yamane S. Sparse population coding of faces in the inferotemporal cortex. Science. 1992;256:1327–1331. doi: 10.1126/science.1598577.