Abstract

Behavioral studies have demonstrated that learning to read and write affects the processing of spoken language. The present study investigates the neural mechanism underlying the emergence of such orthographic effects during speech processing. Transcranial magnetic stimulation (TMS) was used to tease apart two competing hypotheses that consider this orthographic influence to be either a consequence of a change in the nature of the phonological representations during literacy acquisition or a consequence of online coactivation of the orthographic and phonological representations during speech processing. Participants performed an auditory lexical decision task in which the orthographic consistency of spoken words was manipulated and repetitive TMS was used to interfere with either phonological or orthographic processing by stimulating left supramarginal gyrus (SMG) or left ventral occipitotemporal cortex (vOTC), respectively. The advantage for consistently spelled words was removed only when the stimulation was delivered to SMG and not to vOTC, providing strong evidence that this effect arises at a phonological, rather than an orthographic, level. We propose a possible mechanistic explanation for the role of SMG in phonological processing and how this is affected by learning to read.

Introduction

Learning to read is a lengthy and difficult process with far-reaching effects that go beyond simply establishing spelling-to-sound links. Not only does it induce structural brain changes in both gray and white matter (Castro-Caldas et al., 1999; Carreiras et al., 2009), it also improves visuo-spatial abilities (Reis and Castro-Caldas, 1997a) and spoken language skills (Morais et al., 1979; Reis and Castro-Caldas, 1997b). In fact, literacy even affects the purely auditory perception of speech by introducing subtle influences related to spelling. Seidenberg and Tanenhaus (1979), for example, found that participants were faster to recognize two spoken words as rhymes when they were spelled similarly (e.g., tie-pie) than when they were spelled differently (tie-rye). Since this first striking observation, similar findings have been reported in many subsequent studies (Tanenhaus et al., 1980; Donnenwerth-Nolan et al., 1981). More recently, it has been demonstrated that recognizing spoken words ending with rimes (i.e., the final vowel-consonant cluster) that have only one possible spelling (e.g., “must”) is faster than recognizing those ending with rimes that can have many spellings (e.g., “break”). In other words, the speed of recognizing a spoken word depends, in part, on its spelling. This has become known as the orthographic consistency effect (Ziegler and Ferrand, 1998; Pattamadilok et al., 2007; Ziegler et al., 2008; Pattamadilok et al., 2009; Peereman et al., 2009). We investigated the origin of this effect.

There are two main hypotheses. The first claims that learning to read alters preexisting phonological representations (Taft and Hambly, 1985; Harm and Seidenberg, 1999, 2004; Muneaux and Ziegler, 2004; Taft, 2006). For instance, literates are better than illiterates at repeating spoken nonsense words (Reis and Castro-Caldas, 1997b; Castro-Caldas et al., 1998), adding or deleting sounds from words (Morais et al., 1979), and producing words beginning with a particular sound (i.e., fluency tasks: Reis and Castro-Caldas, 1997b). By this account, literacy restructures phonological representations and introduces an advantage for words with consistent spelling that arises at a purely phonological level. The second, and more common, explanation is that strong functional links between spoken and written word forms automatically activate visual representations of words (Grainger and Ferrand, 1996; Ziegler and Ferrand, 1998; Grainger et al., 2003). For inconsistent spellings, this gives rise to competition at the visual level which slows responses relative to words with consistent spellings. The two hypotheses need not be mutually exclusive, although they have proven difficult to disentangle, especially at a purely behavioral level.

Here we investigated this question using transcranial magnetic stimulation (TMS) to selectively interfere with either phonological or orthographic processing. If orthographic consistency effects result from phonological restructuring, then stimulation of the left supramarginal gyrus (SMG)—an area involved in phonological processing—will reduce the effect. On the contrary, if orthographic consistency is due to coactivation of visual information, then stimulation of left ventral occipitotemporal cortex (vOTC)—an area involved in orthographic processing—will reduce the effect. If both SMG and vOTC stimulation affect the orthographic consistency advantage, then both contribute to the overall effect.

Materials and Methods

Design.

The TMS experiments reported here used a mixed design with two within-subject factors, Consistency (consistent vs inconsistent spellings) and TMS (stimulation vs none), and a between-subjects factor, Site (test vs control). Practical considerations prevented us from testing each participant on all three experiments. Specifically, there are not enough monosyllabic words in English that can be suitably matched across the necessary range of psycholinguistic factors to avoid repeating stimuli across conditions. In addition, participating in all three experiments would significantly exceed the recommended safety guidelines for TMS (Wassermann, 1998; Rossi et al., 2009). As a result, a mixed design was used. Note that the difference between consistent and inconsistent spellings was always assessed within-subject. In contrast, the between-subjects component assessed whether stimulation of different cortical fields modulated this basic orthographic consistency effect. Consequently, it was necessary to test separate groups of participants and ensure they were carefully matched. Only data from subjects who met two criteria were included in the TMS analyses to ensure the validity of the results. First, individuals needed to demonstrate an orthographic consistency effect without TMS in order for the comparison between groups to be meaningful. Second, if we were unable to adequately functionally localize the stimulation site in an individual, then those data were not included in the final analyses. These two criteria helped to ensure that any differential effects of TMS could not be the result of sampling bias. In addition to these precautions, the raw RT data were also z-transformed to equate the baseline RT performance in all 4 stimulation sites.
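The z-transformation mentioned above can be illustrated with a minimal sketch. The text does not say whether RTs were standardized per participant, per group, or per site, so the per-participant standardization below is an assumption, and the function name `z_transform` and the example values are hypothetical.

```python
from statistics import mean, stdev


def z_transform(rts):
    """Standardize a list of RTs (ms) to zero mean and unit sample SD.

    Assumption: standardization is applied to one participant's RTs at a time;
    the source does not specify the grouping.
    """
    m = mean(rts)
    s = stdev(rts)  # sample standard deviation (n - 1 denominator)
    return [(rt - m) / s for rt in rts]


# Hypothetical RTs (ms) for one participant; values are made up for illustration.
example_rts = [850.0, 900.0, 950.0, 1000.0, 800.0]
example_z = z_transform(example_rts)
```

After this step every participant's scores are on a common scale, which is one way differences in baseline speed between groups can be removed before comparing sites.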

Participants.

A total of five separate groups of monolingual British English speakers took part in the three experiments. The purely behavioral experiment involved one group of 18 participants (12 female, aged 18–37, M = 24) while each TMS experiment involved two groups, one per stimulation site. The first TMS experiment tested 22 participants, of which 18 were included in the final analyses [SMG site: 4 male (M), 6 female (F), aged 19–38, M = 27.0; intraparietal sulcus (IPS), a control site for SMG: 3 M, 5 F, aged 20–38, M = 25.9]. The second TMS experiment tested 31 participants of which 18 were included in the final analyses [vOTC site: 5 M, 4 F, aged 23–39, M = 27.7; lateral occipital complex (LOC), a control site for vOTC: 4 M, 5 F, aged 19–39, M = 26.9]. Participants were included in the final analyses if they met the two inclusion criteria and the full details of this process are provided below. All subjects were right-handed and none reported any history of language or neurological disorders. The experiments were approved by the Berkshire National Health Service Research Ethics Committee and participants were paid for their participation.

Experimental procedures.

In each of the experiments, the main task used to elicit an orthographic consistency effect was auditory lexical decision. Participants were instructed to listen carefully to a spoken stimulus and to indicate, as quickly and accurately as possible, whether it was a real English word or not. Participants responded by pressing a button using either their right index or middle finger, and these were counterbalanced across subjects. Response latency was measured from the onset of the stimulus to the button-press response. Each trial started with a fixation cross displayed for 1000 ms, followed by an auditory stimulus presented at a comfortable sound pressure level through headphones. There was 2500 ms from stimulus onset to the start of the next trial during which responses were recorded, leading to a total trial duration of 3500 ms. A practice session before the main task ensured familiarity with the task requirements.

The stimuli for this task were those used by Ziegler et al. (2008) in their third experiment. They consisted of 40 words ending with a phonological rime that could be spelled in only one way (e.g., “crab”), 40 words ending with a phonological rime that could be spelled in several ways (e.g., “soap”) and 80 pseudowords (e.g., “brike”). All stimuli were monosyllabic. Consistent and inconsistent words were matched for word frequency (Baayen et al., 1993), number of letters, number of phonemes, duration, orthographic neighborhood, number of higher frequency neighbors (Grainger, 1990), body neighbors (Ziegler and Perry, 1998) and phonological neighborhood (Goldinger et al., 1989). [See Ziegler et al. (2008) for full details of the matching.] The consistency ratio [friends/(friends + enemies)] of inconsistent words was 0.21 (range: 0.05–0.50). Following testing, four items were excluded from subsequent analyses due to their high error rates (>40% errors): malt, puss, salve and squaw. Note that the stimuli remained matched across all psycholinguistic factors after excluding these items.
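The consistency ratio quoted above can be written as a one-line function. This is only a sketch: it assumes simple counts of friends (words sharing both the rime and its spelling) and enemies (words sharing the rime but spelled differently), whereas the original norms may have been computed differently (for example, frequency weighted). The counts used in the example are hypothetical.

```python
def consistency_ratio(friends, enemies):
    """Return friends / (friends + enemies) for one rime.

    Assumption: `friends` and `enemies` are plain type counts.
    """
    total = friends + enemies
    if total == 0:
        raise ValueError("a rime needs at least one friend or enemy")
    return friends / total


# Hypothetical counts: a rime with 3 friends and 9 enemies.
example_ratio = consistency_ratio(3, 9)  # 0.25
```

A fully consistent rime has no enemies and therefore a ratio of 1.0, while the inconsistent words described above fall between 0.05 and 0.50.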

The TMS experiments began by functionally localizing a test and a control site in each individual, although this process was different in the two experiments. The first experiment tested two sites within the inferior parietal lobe. An anterior region of the left SMG sensitive to phonological processing (Price et al., 1997; Devlin et al., 2003; Seghier et al., 2004; Zevin and McCandliss, 2005; Prabhakaran et al., 2006; Raizada and Poldrack, 2007) served as the main testing site while an adjacent region of IPS served as the control site (see Fig. 1). To identify a specific region of left SMG involved in phonological processing, we used a two-stage localization procedure in each participant. The first stage used a BrainSight frameless stereotaxy system (Rogue Research) to anatomically identify potential stimulation targets within each participant's left SMG using their high-resolution T1-weighted anatomical MRI scan. Before the TMS session, potential target sites were marked on the individual's structural scan in three regions of the anterior SMG. The first was located just superior to the termination of the posterior ascending ramus of the Sylvian fissure. The second was placed at the ventral end of the anterior SMG, superior to the Sylvian fissure, posterior to the postcentral sulcus and anterior to the posterior ascending ramus of the Sylvian fissure. The third was approximately half-way between these sites and ∼10–15 mm from the other two. These sites were then tested in a second stage to determine whether stimulation of any of them disrupted phonological processing.

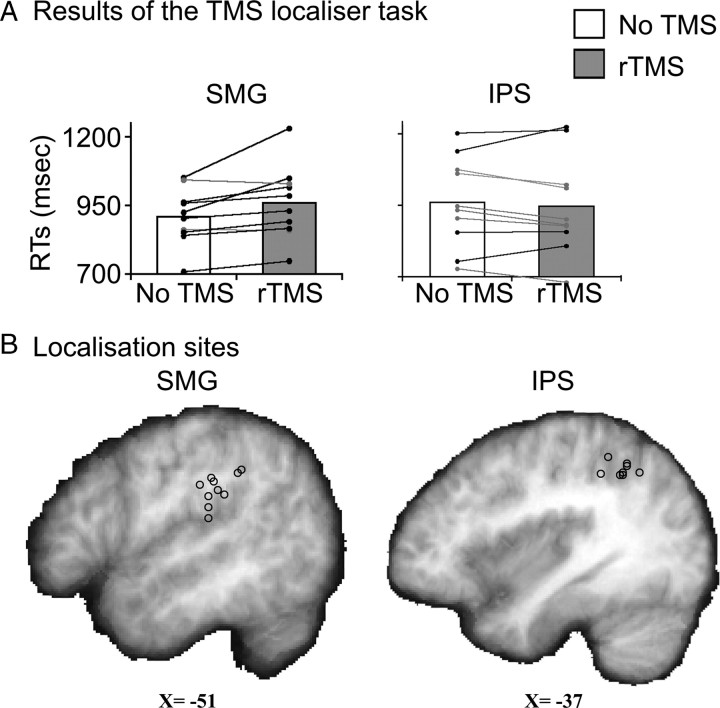

Figure 1.

Results of the TMS-based localizer task. A, The RT data for the SMG and IPS groups are shown as bar plots with the white bars indicating no TMS and gray indicating TMS trials. Overlaid on this group effect are the data from individual participants. Black lines connect the points from subjects who showed a net increase in RT due to rTMS while gray lines connect those with a net decrease. B, Stimulation sites for individual participants are shown as circles on a parasagittal slice through the group mean brain.

This second stage used short bursts of rTMS to temporarily interfere with regional information processing to determine whether the region was engaged in phonological processing. A rhyme judgment task was used to focus attention on the sounds of the words. A trial began with a centrally presented fixation cross which remained on the screen for 500 ms before two words appeared, above and below the cross, for a further 500 ms. Participants had to decide whether or not the words rhymed (e.g., chair–pear) and indicate their responses by pressing a button with either their index or middle finger. This forced subjects to focus on the phonological form of the two words. Moreover, the congruency between the sound and spelling of the stimuli was manipulated such that it was impossible for the participants to perform the task by relying solely on the words' spellings. For both rhyming and nonrhyming trials, the two words had similar spellings in half of the cases (rhyming: house-mouse vs kite-night; nonrhyming: mint-pint vs desk-ball). There was a 2500 ms intertrial interval before the next trial began and each run consisted of 42 trials presented in a random order, for a total duration of 2.45 min per run. The first two trials were dummies to get the participant past anticipating the first rTMS trial. Half of the trials had concurrent rTMS (10 Hz for 400 ms at 100% MT) starting with the onset of the word pair. Reaction times (RTs) were recorded from the onset of the stimulus and only correct responses were analyzed.
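The stated run length follows directly from the trial timing. The short check below assumes the three phases of a trial (fixation, word pair, intertrial interval) run back to back without overlap; the constant and function names are illustrative.

```python
FIXATION_MS = 500      # fixation cross before the word pair
WORD_PAIR_MS = 500     # word pair on screen
INTERTRIAL_MS = 2500   # interval before the next trial
TRIALS_PER_RUN = 42


def run_duration_min(n_trials, *phase_ms):
    """Total run duration in minutes, assuming sequential, non-overlapping phases."""
    return n_trials * sum(phase_ms) / 1000 / 60


localizer_run_min = run_duration_min(
    TRIALS_PER_RUN, FIXATION_MS, WORD_PAIR_MS, INTERTRIAL_MS
)
```

Each trial lasts 3500 ms under this assumption, so 42 trials take 147 s, which matches the 2.45 min per run reported above.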

Testing began with a practice run where no TMS was delivered. When participants were comfortable with the task, TMS was introduced by placing the coil on the scalp such that the line of maximum magnetic flux intersected one of the anatomically marked SMG sites. All participants tolerated TMS with no discomfort, although in a few cases there was peripheral stimulation of the temporalis muscle. After each run, RTs were analyzed and if TMS led to a numerically smaller median RT (i.e., facilitation), the next site was tested. When there was inhibition, the site was retested to ensure the effect was repeatable. Inhibition effects of >100 ms were considered physiologically unlikely, and were retested. If, after 10 runs, we were unable to identify a suitable testing site, the experiment ended. On average, it took five localizer runs to identify a site where stimulation consistently interfered with rhyme judgments and this site was used as the test site for the main auditory lexical decision task. In short, a TMS-based functional localizer was used to identify the main testing site (Ashbridge et al., 1997; Devlin et al., 2003; Gough et al., 2005; Stoeckel et al., 2009). The same basic procedure was used with the IPS site, but because this was a control condition, TMS was not predicted to consistently slow reaction times and no subjects were excluded based on “localizing” the IPS site. Instead, the effects in individual runs varied from facilitation through inhibition, and as expected, there was no overall reliable effect of IPS stimulation on visual rhyme judgments (see Fig. 1). Consequently, we conducted an average of four runs per subject to approximately match the number of runs needed to find the SMG site. The main purpose of these runs was simply to complete the same procedure at each site so as not to implicitly bias participants by testing the sites differently.
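The per-run decision rule described above can be summarized in a small sketch. It is not the authors' software: the function names and return strings are hypothetical, and the handling of an effect of exactly 0 ms (treated here like facilitation) is an assumption, since the text does not cover that case.

```python
from statistics import median


def tms_effect_ms(rts_tms, rts_no_tms):
    """Median RT on TMS trials minus median RT on no-TMS trials (correct trials only)."""
    return median(rts_tms) - median(rts_no_tms)


def next_step(effect_ms, implausible_ms=100):
    """Decide what to do after one localizer run at a candidate SMG site."""
    if effect_ms <= 0:
        # Facilitation (or, by assumption, no change): move to the next marked site.
        return "test next site"
    if effect_ms > implausible_ms:
        # Slowing above 100 ms was considered physiologically unlikely.
        return "retest site (implausibly large effect)"
    # Plausible inhibition: repeat the run to confirm the effect.
    return "retest site (confirm repeatability)"


# Hypothetical RTs (ms) from one run, for illustration only.
decision = next_step(tms_effect_ms([700, 760, 720], [690, 700, 710]))
```

In the procedure described above, this loop would stop once a site showed repeatable slowing or after 10 runs without a suitable site.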

The second TMS experiment tested two sites within left extrastriate visual cortex: a vOTC region sensitive to written words (McCandliss et al., 2003; Price and Mechelli, 2005; Devlin et al., 2006) and an adjacent region within the LOC sensitive to visual objects (Malach et al., 1995; Grill-Spector et al., 2001). Unlike inferior parietal stimulation, TMS to occipitotemporal sites produces peripheral enervation of the temporalis muscle which can be uncomfortable in some subjects. To minimize this discomfort, we chose to avoid the extra stimulation involved in functionally localizing with TMS and instead used fMRI to identify the two sites in each participant. To functionally identify the two areas (see Fig. 3), a one-back task was used with four categories of visual stimuli: written words, pictures of common objects, scrambled pictures of the same objects, and faces. Data from the Face condition were not used in the current study. Subjects were instructed to press a button if the stimulus was identical to the preceding stimulus and 12.5% of the stimuli were targets. A block design was used to maximize statistical sensitivity. Each block consisted of 16 trials from a single category presented one every second. A trial began with a 650 ms fixation cross, followed by the stimulus for 350 ms. In between blocks, subjects viewed a fixation cross for 16 s. The stimuli were divided equally into two lists, with the order counterbalanced across subjects such that 50% of subjects saw the first list of stimuli during run 1 and the remaining 50% during run 2. In total there were 192 stimuli per category including targets. Using a one-back task has the advantage that stimulus category can be varied without changing the task, maintaining a constant cognitive set—the specific stimuli are almost incidental to the task. 
In addition, it is commonly used for functional localization (Kanwisher et al., 1999; Gazzaley et al., 2005; Peelen and Downing, 2005; Baker et al., 2007; Downing et al., 2007; Duncan et al., 2009).

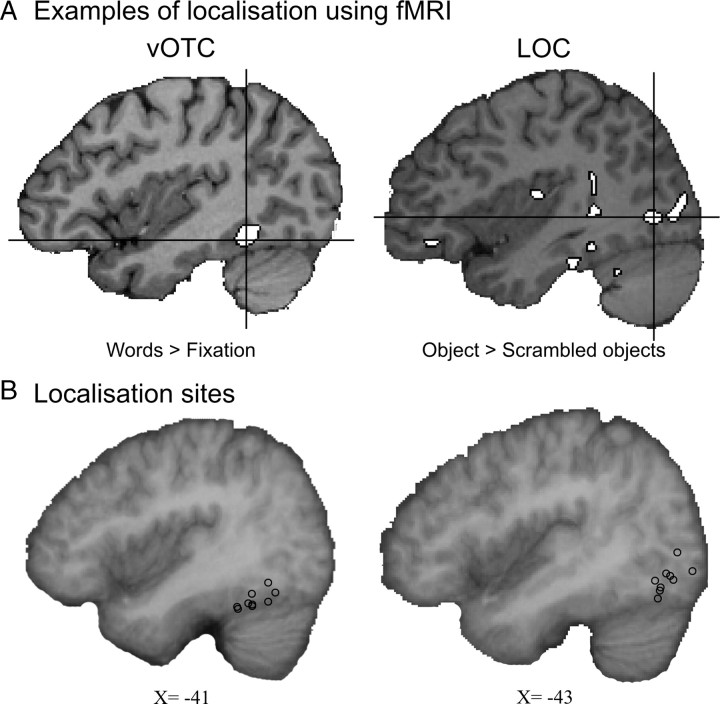

Figure 3.

Results of the fMRI-based localizer task. A, Activation in the one-back task is shown in white with black outline. The comparison of words relative to fixation was used to identify the peak voxel within vOTC while objects relative to scrambled objects was used to identify the peak voxel in LOC. The peaks are shown under the cross-hairs for two typical participants in the top row of the figure. B, The complete set of stimulation sites for individual participants is shown as circles on a parasagittal slice through the group mean brain.

Word stimuli (n = 168) were obtained from the MRC Psycholinguistic database (Coltheart, 1981) and consisted of 4 or 5 letter words with regular spellings (e.g., “hope”). All words had familiarity ratings between 300 and 500 (Coltheart, 1981), were either one or two syllables, and had a British English written word frequency value of 40 or less (Baayen et al., 1993). The stimuli in the two runs were fully matched for frequency, familiarity, imageability, number of letters, and number of syllables. Object stimuli consisted of black and white pictures (200 × 250 pixels) of easily recognizable objects such as a boat, tent, nail, etc. The scrambled objects were generated by dividing the pictures into 10 × 10 pixel squares and permuting their placement within the image. None of the resulting images were recognizable after scrambling.
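The scrambling step (cutting each picture into 10 × 10 pixel squares and permuting them) can be sketched as follows. This is an illustrative reimplementation under stated assumptions, not the original stimulus-generation code: images are represented as plain lists of pixel rows, and the function name `scramble` and the `seed` parameter are hypothetical.

```python
import random


def scramble(image, tile=10, seed=None):
    """Return a copy of `image` with its tile x tile squares randomly permuted.

    `image` is a list of rows, each a list of pixel values; both dimensions
    must be multiples of `tile` (true for 200 x 250 pixel pictures).
    """
    height, width = len(image), len(image[0])
    if height % tile or width % tile:
        raise ValueError("image dimensions must be multiples of the tile size")
    # Top-left corner of every tile, in reading order.
    corners = [(r, c) for r in range(0, height, tile) for c in range(0, width, tile)]
    sources = corners[:]
    random.Random(seed).shuffle(sources)
    out = [[None] * width for _ in range(height)]
    # Copy each source tile, row by row, into its new destination.
    for (dst_r, dst_c), (src_r, src_c) in zip(corners, sources):
        for dr in range(tile):
            out[dst_r + dr][dst_c:dst_c + tile] = image[src_r + dr][src_c:src_c + tile]
    return out
```

Because the tiles are only moved, never altered, the scrambled image keeps the same pixel values as the original while losing its recognizable global shape.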

Whole-brain imaging was performed on a Siemens 1.5 Tesla MR scanner at the Birkbeck-UCL Neuroimaging Centre in London. The functional data were acquired with a gradient-echo EPI sequence [repetition time (TR) = 3000 ms; echo time (TE) = 50 ms, field of view = 192 × 192, matrix = 64 × 64] giving a notional resolution of 3 × 3 × 3 mm. Each run consisted of 164 volumes and as a result, the two runs together took 16.4 min. In addition, a high-resolution anatomical scan was acquired (T1-weighted FLASH, TR = 12 ms; TE = 5.6 ms; 1 mm³ resolution) for anatomically localizing activations in individuals.

To restrict the analyses to the ventral and lateral OTC, two anatomical masks were drawn in standard space. The ventral OTC mask encompassed the posterior portion of the left fusiform gyrus, occipitotemporal sulcus (OTS), and medial parts of the inferior temporal gyrus (ITG)—areas consistently activated by visual word recognition tasks (Price et al., 1994, 1996; Herbster et al., 1997; Rumsey et al., 1997; Fiez and Petersen, 1998; Fiez et al., 1999; Shaywitz et al., 2004). The standard space coordinates were: X = −30 to −54, Y = −45 to −70 and Z = −30 to −4. This region is sometimes referred to as the “visual word form area” (McCandliss et al., 2003; Dehaene et al., 2005), although the term is misleading as it suggests a functional specificity which is not present (Price and Devlin, 2003, 2004). The lateral OTC mask encompassed lateral posterior fusiform gyrus, posterior OTS and lateral parts of posterior ITG—areas consistently activated by visual objects and collectively known as the “lateral occipital complex” (Malach et al., 1995; Grill-Spector et al., 1999). The standard space coordinates were X = −33 to −56, Y = −67 to −89 and Z = −20 to +4. Within each mask, only voxels with at least a 20% chance of being gray matter were included based on an automatic tissue segmentation algorithm (Zhang et al., 2001). The contrasts [words—fixation] and [objects—scrambled objects] were inclusively masked by the anatomical regions of interest and the voxel within the mask with the highest activation was marked on the participant's anatomical scan using BrainSight and then targeted with TMS in the main auditory lexical decision experiment. The word-sensitive region was used as the test site for the main experiment and the object-sensitive region served as the control site.
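The target-selection rule described above (take the most strongly activated voxel inside the anatomical mask, considering only voxels with at least a 20% gray-matter probability) can be sketched in a few lines. This is a simplification for illustration: the real masks were drawn anatomically, whereas the sketch treats the reported coordinate ranges as a rectangular box, and the dictionary-based data structures, function names, and example values are all hypothetical.

```python
# Coordinate ranges reported for the ventral OTC mask, treated here as a box.
VOTC_BOX = {"x": (-54, -30), "y": (-70, -45), "z": (-30, -4)}


def in_box(coord, box):
    """True if an (x, y, z) coordinate lies inside the box (inclusive bounds)."""
    x, y, z = coord
    return (box["x"][0] <= x <= box["x"][1]
            and box["y"][0] <= y <= box["y"][1]
            and box["z"][0] <= z <= box["z"][1])


def peak_voxel(stat_map, gm_prob, box, gm_threshold=0.20):
    """Return the coordinate with the highest statistic inside the mask.

    `stat_map` maps (x, y, z) -> contrast statistic; `gm_prob` maps
    (x, y, z) -> gray-matter probability. Returns None if nothing qualifies.
    """
    candidates = [c for c in stat_map
                  if in_box(c, box) and gm_prob.get(c, 0.0) >= gm_threshold]
    if not candidates:
        return None
    return max(candidates, key=lambda c: stat_map[c])


# Hypothetical statistics and tissue probabilities for four voxels.
stats = {(-42, -56, -14): 4.1, (-44, -58, -12): 5.3,
         (-20, -56, -14): 9.0, (-46, -60, -10): 6.0}
gray = {(-42, -56, -14): 0.8, (-44, -58, -12): 0.6,
        (-20, -56, -14): 0.9, (-46, -60, -10): 0.1}
target = peak_voxel(stats, gray, VOTC_BOX)
```

In this made-up example the strongest voxel overall lies outside the box and the second strongest fails the gray-matter threshold, so the selected target is the third candidate.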

Although the experiments used different methods to localize the stimulation sites, the net result was the same. In both cases, stimulation for the test site was individually targeted to a region where TMS disrupted either phonological or orthographic processing, as demonstrated in an independent dataset. Importantly, functional localization of this type reduces the intersubject variance present for other (more heuristic) targeting procedures, optimizing the number of participants needed (Sparing et al., 2008; Sack et al., 2009).

After localizing the stimulation sites, participants performed the main auditory lexical decision experiment. rTMS was pseudorandomly delivered on half of the trials. The number of TMS trials was equal across conditions and no more than three TMS trials occurred sequentially. Two versions of the stimuli were created per task to guarantee that each stimulus was equally presented in TMS and no-TMS trials across participants. Because each discharge of the stimulator produces a click, participants always wore an ear plug in their left ear (the one nearest the stimulating coil) and auditory stimuli were delivered directly to their right ear via an earphone (Sennheiser, CX-300). This facilitated hearing the stimuli by reducing the TMS noise and by introducing a spatial separation between the noise and speech signal (Dirks and Wilson, 1969; Zurek, 1993).
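One simple way to build a trial order that satisfies the constraints above (TMS on half of the trials, never more than three TMS trials in a row) is to reshuffle until the constraint holds. The sketch below takes that approach as an assumption; the source does not describe how the pseudorandom order was generated, and the balancing of TMS trials across conditions and stimulus versions is not modeled here. Function names are hypothetical.

```python
import random


def longest_tms_run(flags):
    """Length of the longest run of consecutive TMS (True) trials."""
    longest = current = 0
    for is_tms in flags:
        current = current + 1 if is_tms else 0
        longest = max(longest, current)
    return longest


def tms_schedule(n_trials, max_run=3, seed=None, max_attempts=100_000):
    """Return a list of booleans (True = TMS trial) with half the trials stimulated."""
    if n_trials % 2:
        raise ValueError("n_trials must be even to stimulate exactly half")
    rng = random.Random(seed)
    flags = [True] * (n_trials // 2) + [False] * (n_trials // 2)
    # Rejection sampling: reshuffle until no TMS run exceeds max_run.
    for _ in range(max_attempts):
        rng.shuffle(flags)
        if longest_tms_run(flags) <= max_run:
            return list(flags)
    raise RuntimeError("no valid schedule found; increase max_attempts")


# 160 trials = 40 consistent words + 40 inconsistent words + 80 pseudowords.
schedule = tms_schedule(160, seed=1)
```

Rejection sampling is easy to verify but discards most shuffles at this sequence length; a constructive method that distributes TMS trials between no-TMS trials would be faster if many schedules were needed.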

Finally, for the second TMS experiment only, we ran an additional TMS experiment after the main task to determine whether we stimulated the correct region of vOTC to successfully disrupt orthographic processing. If our targeting of vOTC was off, possibly due to spatial biases between fMRI and TMS, then a null effect in the main experiment could be due to simply stimulating the wrong area. Consequently, participants performed a visual lexical decision task with short trains of rTMS (10 Hz for 500 ms) pseudorandomly delivered on half of the trials. The stimuli consisted of 40 monosyllabic words and pseudowords. The number of TMS trials was equal across conditions and no more than three TMS trials occurred sequentially. Two versions of stimuli were created to guarantee that each stimulus was equally presented in TMS and no-TMS trials across participants. Previously, we have shown that rTMS to vOTC increased reaction times (RTs) in this task whereas stimulation of LOC had no significant effect on RTs (Duncan et al., 2010). Consequently, subjects who did not show a disruptive effect of ventral occipitotemporal rTMS on words were excluded from the analysis of the main experiment on the assumption that we had targeted the site incorrectly in these participants.

In summary, we began with a purely behavioral experiment that consisted solely of the main auditory lexical decision task. Then the two TMS experiments were conducted in stages: (1) functionally localizing the test and control stimulation sites within either the inferior parietal lobe or occipitotemporal cortex, (2) running the main auditory lexical decision task, and for the second TMS experiment only, (3) verifying the accuracy of the fMRI-based stimulation sites with a visual lexical decision task. Participants had the opportunity to practice each task before actual testing began.

TMS.

We used a frameless stereotaxy system to position the TMS coil on the scalp to stimulate the precise anatomical region-of-interest. All participants in the TMS experiments participated in a separate MRI session where a high-resolution anatomical scan was acquired (T1-weighted FLASH, TR = 12 ms; TE = 5.6 ms; 1 mm³ resolution). During the TMS sessions, a Polaris infrared camera (Northern Digital) tracked the participant's head, and BrainSight software (Rogue Research) registered the participant's head to his/her MRI scan. Neuronavigation was used to both target and record stimulation sites.

Stimulation was performed using a MagStim Rapid2 TMS system and a 70 mm figure-of-eight coil. The stimulation intensity was individually determined based on the threshold necessary to observe a visible contralateral hand twitch. This ranged from 42% to 65% of the maximum stimulator output (mean = 55%). In all of the experiments, short trains of 10 Hz pulses were delivered starting at the onset of the stimuli. Stimulation parameters were well within international safety guidelines (Wassermann, 1998).

Analyses.

For the auditory lexical decision data, reaction times were measured from the onset of the auditory stimulus. Only correct responses from word trials were analyzed and individual trials with RTs longer or shorter than the mean RT ± 3 SD were discarded, removing 0.89% of the RT data from the behavioral pretest and 0.84% of the RT data from the two TMS experiments. For the behavioral experiment, paired t tests were used to compare reaction times and accuracy scores for consistent and inconsistent words. The TMS experiments used three-way ANOVAs with Consistency (yes/no) and TMS (yes/no) as within-subject factors and Site (SMG/IPS or vOTC/LOC) as a between-subjects factor. When a significant interaction with Site was present, the data from the two sites were analyzed separately to characterize the interaction. Post hoc comparisons used the Bonferroni method to correct for multiple comparisons. It is worth noting that there were no significant main effects or interactions when the accuracy data were analyzed in an equivalent fashion, so the only analyses reported here used RT as their dependent measure. The full set of accuracy data is summarized in Table 1.
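The ± 3 SD trimming rule can be illustrated with a short sketch. The text does not state whether the mean and SD were computed per participant, per condition, or over the whole data set, so the sketch simply trims whatever list of RTs it is given; the function name and example values are hypothetical.

```python
from statistics import mean, stdev


def trim_rts(rts, n_sd=3.0):
    """Keep only RTs within mean +/- n_sd sample standard deviations.

    Assumption: `rts` holds correct word trials from one grouping unit
    (for example, one participant); the source does not specify the unit.
    """
    m, s = mean(rts), stdev(rts)
    low, high = m - n_sd * s, m + n_sd * s
    return [rt for rt in rts if low <= rt <= high]


# Hypothetical data: 29 ordinary trials and one very slow response (ms).
example = [900.0] * 29 + [5000.0]
kept = trim_rts(example)
```

Here the single 5000 ms response lies more than 3 SD above the mean and is dropped, while the 29 ordinary trials are kept.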

Table 1.

Accuracy results (in percent correct) for all three experiments

| | Consistent: No TMS | Consistent: TMS | Inconsistent: No TMS | Inconsistent: TMS |
|---|---|---|---|---|
| Behavioral pretest | 89.2% | | 90.1% | |
| SMG stimulation | 90.3% | 88.3% | 91.0% | 85.8% |
| IPS stimulation | 94.8% | 93.3% | 93.4% | 93.4% |
| vOTC stimulation | 84.5% | 88.9% | 88.8% | 90.1% |
| LOC stimulation | 88.1% | 88.9% | 90.1% | 90.1% |

Data from the localizer tasks were analyzed separately. For the TMS-based localizer used in the first TMS experiment, the median RTs for correct responses were compared for trials with and without rTMS. The mean of these (median) RTs per condition over all runs at the final testing site was used to plot the TMS effects in Figure 1A. The second TMS experiment used data from a short fMRI experiment to localize occipitotemporal regions sensitive to words and objects. Image processing was performed using FSL 4.0 (www.fmrib.ox.ac.uk/fsl). To allow for T1 equilibrium, the initial two images of each run were discarded. The data were then realigned to remove small head movements (Jenkinson et al., 2002), smoothed with a 6 mm full-width half-maximum Gaussian kernel and prewhitened to remove temporal autocorrelation (Woolrich et al., 2001). The resulting images were entered into a general linear model with four conditions of interest corresponding to the four categories of visual stimuli. Blocks were convolved with a double gamma “canonical” hemodynamic response function (Glover, 1999) to generate the main regressors. In addition, the estimated motion parameters were entered as covariates of no interest to reduce structured noise due to minor head motion. Linear contrasts of [words > fixation] and [objects > scrambled objects] identified reading- and object-sensitive areas, respectively. First level results were registered to the MNI-152 template using a 12-DOF affine transformation (Jenkinson and Smith, 2001) and a subsequent second level, fixed-effects model combined the two first level runs into a single, subject-specific analysis. This was then transformed into the participant's native structural space and used to target stimulation in the TMS experiment.

Results

Behavioral pretest

Overall accuracy on this task was 90% and did not differ between spoken words with consistent or inconsistent spellings (t(17) = 1.3, p = 0.225; Table 1). There was, however, an expected difference in RTs. Responses to words with consistent spellings were significantly faster than for words with inconsistent spellings (866 vs 926 ms, t(17) = 5.8, p < 0.001), replicating previous studies (Ziegler and Ferrand, 1998; Ventura et al., 2004; Pattamadilok et al., 2007; Ziegler et al., 2008). This advantage for consistent words was not observed in all participants, although it was present in the majority (16 of 18). In other words, these results confirm that the auditory lexical decision task and stimuli used here were appropriate for eliciting a robust orthographic consistency effect over the group, but demonstrate that the effect was only present in ∼89% of the participants. This finding highlights the importance of our first inclusion criterion, namely determining whether individual participants display this consistency advantage before assessing whether TMS affected it.

Inferior parietal TMS

Using the visual rhyme localizer task, we were able to identify a testing site within SMG where rTMS consistently slowed rhyme judgments in 8 of 11 participants. Two participants showed a net speed up for TMS due to a single run where stimulation produced a large facilitation effect even though two other runs showed typical rTMS-induced slowdowns. Because both participants had two out of three runs with typical slowdowns, they were included in the main experiment despite a net speed up during localization. The final participant was unable to perform the task due to difficulty hearing the stimuli, so all testing was stopped. On average, five localizer runs per subject were required to identify the main SMG testing site. For IPS, where localizer runs were included to approximate the subjective experience and amount of practice with TMS across participants, an average of four runs per subject was used. Figure 1A illustrates the differential effects of rTMS on the two sites. For SMG, stimulation increased RTs by +50 ms (paired t test, t(9) = 3.1, p = 0.012) while for IPS, there was a small (and nonsignificant) reduction in RTs of −15 ms (t(10) = 0.8, n.s.). The precise location where stimulation interfered with phonological processing varied slightly across individuals, but was consistently located between the ventral limb of the postcentral sulcus and the posterior ascending ramus of the Sylvian fissure. In other words, the stimulation site consistently lay on the crest of the anterior portion of SMG. These sites are shown in the left-hand column of Figure 1B for each participant (circles) on a parasagittal slice through the group mean brain in standard (i.e., MNI-152) space. The average coordinates were [−51, −32, +26], a region previously implicated in phonological processing (Price et al., 1997; Devlin et al., 2003; Seghier et al., 2004; Zevin and McCandliss, 2005; Prabhakaran et al., 2006; Raizada and Poldrack, 2007). In contrast, the IPS stimulation sites for each participant are shown in the right column with a mean location of [−37, −52, +41]. These two stimulation sites were separated by ∼3 cm on the cortical surface and were used as the testing and control sites for the main TMS experiment.

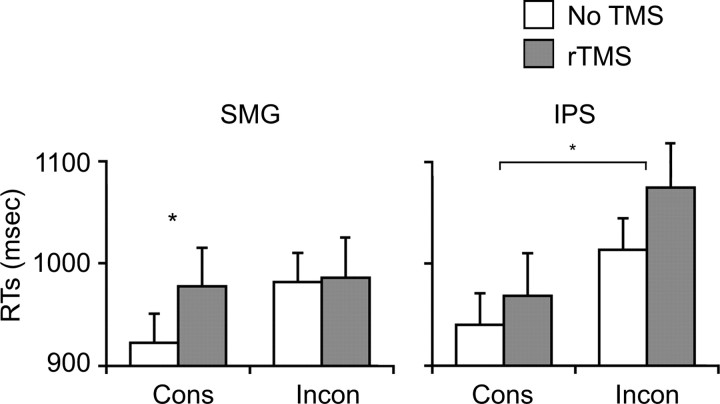

Accuracy in the main experiment was similar to the behavioral pretest (91%) and did not significantly differ across conditions, indicating that participants were able to perform the task well (Table 1). To evaluate whether interfering with phonological processing modified the orthographic consistency effect, we first identified participants who showed the basic orthographic consistency effect, i.e., a numeric RT advantage for consistent words, on non-TMS trials. As in the behavioral experiment, most participants (18 of 21, 86%) responded faster to consistently than inconsistently spelled words, with 10 participants in the SMG group and 8 in the IPS group. The raw RT data for these subjects are shown in Figure 2. There was a significant three-way interaction (Consistency × TMS × Site; F(1,16) = 7.6, p = 0.014) indicating that when rTMS was applied to SMG but not to IPS, the orthographic consistency benefit disappeared. In other words, stimulation had different effects on the two sites. Within SMG, a significant Consistency × TMS interaction (F(1,9) = 10.2, p = 0.011) indicated that stimulation removed the RT advantage by slowing consistent words (t(9) = 3.1, p = 0.013) without affecting the inconsistent words (t(9) = 0.2, p = 0.858). In contrast, within IPS there was a robust main effect of Consistency (F(1,7) = 23.0, p = 0.002) but no significant main effect of TMS (F(1,7) = 1.9, p = 0.212) and no significant interaction (F(1,7) = 1.4, p = 0.271). In other words, rTMS delivered to a region of SMG involved in phonological processing selectively removed the RT advantage for consistent relative to inconsistent words in this auditory lexical decision task.

Figure 2.

Behavioral results of the first TMS experiment showing RTs for the auditory lexical decision experiment. White bars indicate trials without TMS and gray bars are trials with rTMS. The orthographic consistency effect can be seen as an increase in RTs between the consistent and inconsistent trials without TMS. Stimulation delivered to the SMG eliminated this effect by increasing response times for consistent trials without affecting inconsistent trials. In contrast, stimulation of the IPS had no significant effect, resulting in a significant orthographic consistency advantage for both TMS and non-TMS trials.

Occipitotemporal TMS

Testing began with 31 participants and in 30 the fMRI data sufficed to identify word- and object-sensitive regions within vOTC and LOC, respectively. Examples of individual activations are shown in Figure 3A. In one subject, the word-sensitive activation within occipitotemporal cortex was too posterior and data from this participant were excluded from further analyses.

An additional seven participants were excluded after analyzing their data from the visual lexical decision TMS experiment. Eleven of the 18 participants who received vOTC stimulation showed the expected slowdown on words, with an average increase of 40 ms for TMS relative to no-TMS trials (t(10) = 4.0, p = 0.003), similar to that seen previously (Duncan et al., 2010). The remaining seven either showed no effect of TMS or had faster RTs with stimulation, probably due to a nonspecific intersensory facilitation. In contrast, stimulation of LOC did not significantly affect RTs (t(11) = 1.5, n.s.), consistent with our previous findings (Duncan et al., 2010). Therefore, only data from these 23 (11 vOTC, 12 LOC) participants were further considered in the study.

Finally, of the remaining participants, 78% showed an orthographic consistency effect in the auditory lexical decision experiment for no-TMS trials, leaving 9 subjects in each of the vOTC and LOC conditions. In summary, these 18 participants were the ones who demonstrated both the basic orthographic consistency effect without stimulation and accurate targeting of vOTC or LOC stimulation (sites shown in Fig. 3B). The average vOTC stimulation coordinates were [−41, −56, −20], a region consistently implicated in orthographic processing (McCandliss et al., 2003; Price and Mechelli, 2005; Devlin et al., 2006). The average LOC coordinates were [−43, −77, −11], an area within the lateral occipital complex associated with visual object processing (Malach et al., 1995; Grill-Spector et al., 2001). These two stimulation sites were separated by ∼2.5 cm on the cortical surface.
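As a rough plausibility check on the reported site separations, the straight-line (Euclidean) distance between the mean MNI coordinates can be computed directly. This is only a sketch: the separations in the text were measured along the cortical surface, so the Euclidean value should be read as a lower bound rather than a replication of that measurement. The dictionary name `SITES` and the helper `euclidean_mm` are illustrative choices, not part of the original analysis.

```python
import math

# Mean stimulation coordinates in MNI-152 space (mm), as reported in the text.
SITES = {
    "SMG": (-51, -32, 26),
    "IPS": (-37, -52, 41),
    "vOTC": (-41, -56, -20),
    "LOC": (-43, -77, -11),
}


def euclidean_mm(a, b):
    """Straight-line distance in mm between two MNI coordinates."""
    return math.dist(a, b)


# Straight-line distances; surface distances can only be equal or longer.
smg_ips = euclidean_mm(SITES["SMG"], SITES["IPS"])
votc_loc = euclidean_mm(SITES["vOTC"], SITES["LOC"])

print(f"SMG-IPS:  {smg_ips:.1f} mm")
print(f"vOTC-LOC: {votc_loc:.1f} mm")
```

Both straight-line values fall slightly below the surface separations given in the text (∼3 cm and ∼2.5 cm), as expected for distances measured along a folded surface.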

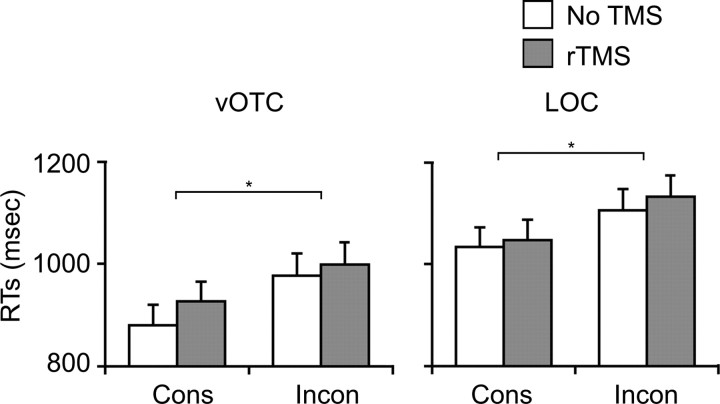

Unlike the previous TMS experiment, the analyses performed on the RT data showed that the basic orthographic consistency advantage was not affected by stimulation of either the vOTC or LOC sites (Fig. 4). The only significant effects on RTs were the main effects of Consistency (F(1,16) = 35.6, p < 0.001) and Site (F(1,16) = 6.5, p = 0.021). On average, responses to consistent words were faster than responses to inconsistent words (972 vs 1054 ms) and responses in the vOTC group were faster than those in the LOC group (946 vs 1080 ms). There was also a (nonsignificant) trend for a main effect of TMS (F(1,16) = 3.2, p = 0.094) with faster responses on trials without TMS compared with those with rTMS (1000 vs 1026 ms). This numeric slowdown for TMS was present for both consistent and inconsistent words at both stimulation sites and is therefore a nonspecific effect. Finally, none of the interaction terms were significant (all F < 1). As in the previous experiments, accuracy on the task was high (88.2%) and did not differ across conditions, with no reliable main effects or interactions (Table 1). In other words, despite the fact that vOTC stimulation disrupted orthographic processing sufficiently to slow visual lexical decisions, it had no effect on the orthographic consistency effect in the auditory lexical decision task.

Figure 4.

Behavioral results of the second TMS experiment showing RTs for the auditory lexical decision experiment. White bars indicate trials without TMS and gray bars are trials with rTMS. The orthographic consistency effect can be seen as an increase in RTs between the consistent and inconsistent trials without TMS. In this case, neither vOTC nor LOC stimulation had an effect on the orthographic consistency advantage, which is present for both TMS and non-TMS trials.

Additional analyses

Given that the statistical comparisons reported above were done on the basis of a mixed design (with orthographic consistency and TMS as within-subject factors and stimulation site as a between-subjects factor), we Z-transformed the raw RT data to equate the baseline RT performance across all four stimulation sites. In both TMS experiments, ANOVAs performed on the standardized RT data confirmed the results obtained in the analyses performed on the raw RT data.
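The effect of such a standardization can be illustrated with a minimal sketch. The text does not state the unit over which RTs were standardized, so the example assumes a Z-transform within each participant using the population standard deviation. The RT values and the function name `z_transform` are hypothetical and serve only to show how baseline differences are removed.

```python
from statistics import mean, pstdev


def z_transform(rts):
    """Standardize one participant's RTs to mean 0 and SD 1.

    Assumption: standardization is done within participant and uses the
    population SD; the original analysis may have differed on both points.
    """
    m = mean(rts)
    sd = pstdev(rts)
    return [(rt - m) / sd for rt in rts]


# Hypothetical RTs (ms) for two participants whose baselines differ by 200 ms
# but whose condition differences are identical.
fast_participant = [820.0, 860.0, 900.0, 940.0]
slow_participant = [1020.0, 1060.0, 1100.0, 1140.0]

z_fast = z_transform(fast_participant)
z_slow = z_transform(slow_participant)

print([round(z, 3) for z in z_fast])
print([round(z, 3) for z in z_slow])
```

After standardization the two participants have the same scores, so any difference in overall speed between groups tested at different sites no longer contributes to the analysis, while within-participant condition differences are preserved.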

For the first TMS experiment (inferior parietal TMS), there was a significant three-way interaction (Consistency × TMS × Site; F(1,16) = 8.1, p = 0.012) showing that the consistency effect disappeared only when rTMS was applied to SMG. Within this site, the Consistency × TMS interaction remained essentially unchanged (F(1,9) = 10.1, p = 0.011), indicating that stimulation removed the RT advantage by slowing consistent words (t(9) = 3.1, p = 0.013) without affecting the inconsistent words (t(9) = 0.2, p = 0.856). In contrast, within IPS there was a robust main effect of Consistency (F(1,7) = 23.3, p = 0.002) but no significant main effect of TMS (F(1,7) = 1.9, p = 0.211) and no significant interaction (F(1,7) = 1.1, p = 0.33).

The same analyses performed on the standardized RTs obtained in the second TMS experiment (occipitotemporal TMS) changed the results slightly, but did not alter the key findings. Specifically, there was again a significant main effect of Consistency (F(1,16) = 28.5, p < 0.001) and the previous main effect of Site was removed by standardizing the data (by definition). In the new analysis, the main effect of TMS also became significant (F(1,16) = 4.6, p = 0.047) whereas it was a trend before. Critically, though, no significant interactions were observed. In other words, the application of TMS did not alter the orthographic consistency advantage.

The mechanism of action of the main TMS effect observed here is different from the one reported in the visual lexical decision task (see also Duncan et al., 2010), where only the stimulation applied to vOTC (but not LOC) affected visual word recognition. The nonspecific TMS effect observed in the current auditory lexical decision task is more likely to have resulted from interference from the sound of the discharging coil, due to the proximity of both vOTC and LOC to the ear.

Discussion

These results demonstrate that stimulation of the left SMG, but not vOTC, selectively abolished the advantage for auditory words with consistent spellings. In the first TMS experiment, SMG stimulation increased RTs to consistent words without affecting inconsistent words. In contrast, stimulation over the adjacent IPS did not affect the consistency advantage, ruling out nonspecific TMS effects. A different pattern of results was seen in the second TMS experiment, where neither vOTC nor LOC stimulation affected the advantage for consistent words. Stimulation of vOTC (but not LOC) did, however, significantly increase RTs in the visual lexical decision task, indicating that TMS successfully interfered with orthographic processing.

These findings receive additional support from a recent high-density EEG study that investigated orthographic consistency effects in a spoken word recognition task (Perre et al., 2009). The authors observed a larger negative-going potential for inconsistent relative to consistent words. They then used standardized low resolution electromagnetic tomography (sLORETA) to determine the possible cortical generators of the effect and found it was localized in left SMG with no additional contribution from vOTC. Given the many differences between this and the current experiment (e.g., different subjects, languages, and methodologies), the consistency of the results is striking. Together, these findings provide strong evidence that “orthographic” influences in speech perception arise at a phonological, rather than orthographic, level.

To what extent, though, do these results support the phonological restructuring hypothesis? The inference rests on SMG's involvement in phonological processing. Interestingly, this region is not included in two influential models of speech processing (Hickok and Poeppel, 2007; Rauschecker and Scott, 2009; but see Price, 2010) presumably because listening to speech is not typically sufficient to activate the area (Harris et al., 2009; Leff et al., 2009; Adank and Devlin, 2010). Instead, tasks with a more explicit phonological component such as rhyme (Booth et al., 2004; Seghier et al., 2004; Yoncheva et al., 2010) or syllable judgments (Price et al., 1997; Callan et al., 2003; Devlin et al., 2003; Zevin and McCandliss, 2005; Raizada and Poldrack, 2007) activate the region. One thing that may be common across these tasks is a covert articulation of the stimulus, consistent with the strong anatomical connections linking SMG to ventral premotor cortex (PMv) (Catani et al., 2005; Petrides and Pandya, 2009) where neurons control oro-facial movements of the lips, tongue, and larynx and play an important role in articulation (Petrides et al., 2005; Sereno and Dick, 2008).

These reciprocal connections between PMv and SMG may form a processing loop for acting on reproducible sound patterns that would have two valuable functions. First, it would provide a simple resonance circuit for temporarily storing these patterns (McClelland and Elman, 1986; Botvinick and Plaut, 2006). Indeed, studies of verbal working memory commonly implicate these regions (Paulesu et al., 1993; Romero et al., 2006; Koelsch et al., 2009). It is worth noting that reproducible sounds are not limited to verbal information—when subjects attend to reproducible sequences of tones, for instance, activation is also seen in these areas (Gelfand and Bookheimer, 2003; Yoncheva et al., 2010). Second, this type of anatomical loop provides a computational mechanism for encoding statistical regularities among components of the representation which help to “clean up” aberrant patterns of activity via attractor dynamics (Pearlmutter, 1989; Amit, 1992; Lundqvist et al., 2006). Recently, Raizada and Poldrack (2007) reported clear evidence of attractor dynamics when perceiving auditory syllables artificially morphed between /ba/ and /da/. Activation in anterior SMG was largest when participants perceived minimally contrasting pairs as different syllables suggesting that greater SMG engagement was necessary to force each auditory input into a different attractor corresponding to one of the two canonical syllables. By this account, then, auditory speech input instantiates a noisy phono-articulatory pattern of activity in anterior SMG that is then cleaned-up via attractor dynamics into a stored pattern (for additional examples, see Callan et al., 2003; Jacquemot et al., 2003; Zevin and McCandliss, 2005). Different voices, accents, and speech rates as well as masking background sounds, acoustic distortion, etc. all contribute to the noise present in the initial pattern of activity, thus requiring a clean-up mechanism. 
In sum, we hypothesize that the PMv–SMG circuit plays an integral role in representing and processing the phono-articulatory patterns that contribute to “phonological processing.”
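The notion of attractor-based clean-up can be made concrete with a toy example. The sketch below uses a small Hopfield-style network, which is a generic textbook attractor model and not the model of any study cited here, nor a claim about how SMG is actually implemented. The two stored patterns, the function names `train_hopfield` and `settle`, and the synchronous update rule are all assumptions chosen for illustration.

```python
def train_hopfield(patterns):
    """Hebbian weights for +1/-1 patterns, with no self-connections."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def settle(w, state, max_steps=10):
    """Update all units synchronously until the state stops changing.

    Returns the final state and the number of updates that changed it.
    """
    state = list(state)
    for step in range(1, max_steps + 1):
        new_state = []
        for i, row in enumerate(w):
            h = sum(wij * s for wij, s in zip(row, state))
            # Keep the current value if the net input is exactly zero.
            new_state.append(1 if h > 0 else -1 if h < 0 else state[i])
        if new_state == state:
            return state, step - 1
        state = new_state
    return state, max_steps


# Two hypothetical stored patterns standing in for canonical sound patterns.
P1 = [1, 1, 1, 1, -1, -1, -1, -1]
P2 = [1, -1, 1, -1, 1, -1, 1, -1]
weights = train_hopfield([P1, P2])

# A noisy input: the first stored pattern with one unit flipped.
noisy = [-1] + P1[1:]
cleaned, n_updates = settle(weights, noisy)
print(cleaned == P1, n_updates)
```

In this toy network the corrupted input settles back onto the stored pattern, which is the sense in which recurrent dynamics can "clean up" a noisy initial pattern of activity.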

It is worth noting that anterior SMG is both anatomically and functionally different from two adjacent regions involved in language processing: the angular gyrus (ANG) and area Spt. ANG is posterior to SMG and has a different pattern of anatomical connectivity (Rushworth et al., 2006), yielding numerous functional differences between the two (Göbel et al., 2001; Schacter et al., 2007). It is thought to play a role assembling phonological information from visual (i.e., orthographic) inputs (Dejerine, 1891; Horwitz et al., 1998; Pugh et al., 2000; Shaywitz et al., 2002; Booth et al., 2004) while SMG is believed to contribute more generally to phonological processing (Price et al., 1997; Devlin et al., 2003; Seghier et al., 2004; Zevin and McCandliss, 2005; Prabhakaran et al., 2006; Raizada and Poldrack, 2007). In contrast, the audio-motor integration area Spt (Okada and Hickok, 2006; Hickok et al., 2009) is found in the ventral bank of the Sylvian fissure where it forms the posterior part of the planum temporale and receives direct projections from caudal regions of auditory cortex (Seltzer and Pandya, 1978, 1991; Kaas and Hackett, 2000). In addition, it is directly linked to PMv via the fibers of the arcuate fasciculus (Catani et al., 2005; Petrides and Pandya, 2009). Thus the region is anatomically well situated to integrate sensorimotor signals related to producing and perceiving speech. In contrast, anterior SMG does not receive direct projections from auditory cortex (Seltzer and Pandya, 1978, 1984; Petrides and Pandya, 1988; Seltzer and Pandya, 1994; Kaas and Hackett, 2000). Instead, auditory information presumably arrives via PMv, suggesting that it is first transformed into a motor pattern. Therefore, neuronal activity in SMG would be expected to encode patterns of movements over the articulators (i.e., mouth, lips, tongue, and larynx) capable of producing verbal output for both speech and nonspeech such as humming.

So how would such a system contribute to orthographic effects in speech perception? Before learning to read, an auditory input needs a certain amount of clean-up processing to settle into a specific attractor. Literacy introduces a novel input route that maps visual (i.e., orthographic) stimuli onto phono-articulatory codes. For words with consistent spellings, this is essentially as efficient as mapping an auditory input. For words with rimes that can be spelled in multiple ways, however, the mapping is less efficient. Orthographic inconsistency moves a word further from its attractor state, requiring stronger attractor dynamics to clean it up. Presumably this additional processing is the source of the longer RTs and greater ERP signal for inconsistent words. In a computational simulation of this process, Harm and Seidenberg (1999, 2004) showed that learning to read enhanced the attractor basins for rimes which helped to compensate for the additional noise present for inconsistently spelled words. This effect was present for both written and spoken words which, due to Hebbian learning, mapped onto essentially identical initial patterns. Consequently, learning to read alters the initial state of phono-articulatory representations and strengthens segmental attractor dynamics, thus introducing an orthographic influence into these phono-articulatory representations that is independent of the input modality. Presumably these changes are the neural correlate of segmental phonological representations that underlie “phonological awareness” skills (Morais et al., 1979, 1986; Goswami et al., 2005).

If true, this provides a mechanistic account of the current TMS findings. Assuming a distributed population coding of the phono-articulatory representations, stimulation introduces noise into the pattern of activation by preferentially affecting less active neurons (Silvanto et al., 2008). This increases the distance from stored attractor trajectories, requiring additional processing to clean up the noise, effectively turning a consistent word into an inconsistent one. It has less effect on inconsistent words due to the nonlinear nature of attractor dynamics where small perturbations have larger effects near the attractor state. This is precisely the result pattern observed when stimulating anterior SMG: stimulation increased RTs for consistent words without affecting inconsistent words.

One important implication of the finding that orthographic consistency effects rely on phonological reorganization is that the development of segmental phonological representations is dependent on exposure to an alphabetic writing system (Morais et al., 1979; Harm and Seidenberg, 1999, 2004). In other words, the awareness of phonemes appears to result from reading experience. Consequently, phonemic deficits observed in developmental dyslexics or poor readers are likely to be a consequence of their reading difficulties rather than a cause (Metsala, 1997; Ziegler and Goswami, 2005; Bruno et al., 2007).

Finally, although our results indicate that “orthographic effects” in speech perception arise at a phono-articulatory, rather than a visual level, this may in part reflect the task we chose. Listening to speech is automatic and does not typically engage vOTC (Cohen et al., 2004) but more explicit phonological tasks such as auditory rhyme judgments do (Booth et al., 2002, 2004; Cao et al., 2009; Yoncheva et al., 2010). It is possible that on-line coactivation of visual information may also contribute to orthographic effects on speech processing that involves strategic (as opposed to purely automatic) phonological processing (Damian and Bowers, 2009). Future TMS experiments using rhyme judgments would help to determine whether on-line coactivation of visual information can also contribute to orthographic effects on speech processing.

Footnotes

This research was funded by a grant from the Belgian French community (Action de Recherche Concertée 06/11-342) and the Région de Bruxelles-Capitale (Institut d'Encouragement de la Recherche Scientifique et de l'Innovation de Bruxelles, Brains Back to Brussels program) to C.P., as well as funding from the Biotechnology and Biological Sciences Research Council (K.J.K.D.) and the Wellcome Trust (J.T.D.). We thank Johannes Ziegler for providing the stimuli for the auditory lexical decision task and Cathy Price for helpful discussions.

References

- Adank P, Devlin JT. On-line plasticity in spoken sentence comprehension: adapting to time-compressed speech. Neuroimage. 2010;49:1124–1132. doi: 10.1016/j.neuroimage.2009.07.032.

- Amit DJ. Modeling brain function: the world of attractor neural networks. New York: Cambridge UP; 1992.

- Ashbridge E, Walsh V, Cowey A. Temporal aspects of visual search studied by transcranial magnetic stimulation. Neuropsychologia. 1997;35:1121–1131. doi: 10.1016/s0028-3932(97)00003-1.

- Baayen RH, Piepenbrock R, Van Rijn H. The celex lexical database (cd-rom) Philadelphia: Linguistic Data Consortium, University of Pennsylvania; 1993.

- Baker CI, Liu J, Wald LL, Kwong KK, Benner T, Kanwisher N. Visual word processing and experiential origins of functional selectivity in human extrastriate cortex. Proc Natl Acad Sci U S A. 2007;104:9087–9092. doi: 10.1073/pnas.0703300104.

- Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TB, Mesulam MM. Functional anatomy of intra- and cross-modal lexical tasks. Neuroimage. 2002;16:7–22. doi: 10.1006/nimg.2002.1081.

- Booth JR, Burman DD, Meyer JR, Gitelman DR, Parrish TB, Mesulam MM. Development of brain mechanisms for processing orthographic and phonologic representations. J Cogn Neurosci. 2004;16:1234–1249. doi: 10.1162/0898929041920496.

- Botvinick MM, Plaut DC. Short-term memory for serial order: a recurrent neural network model. Psychol Rev. 2006;113:201–233. doi: 10.1037/0033-295X.113.2.201.

- Bruno JL, Manis FR, Keating P, Sperling AJ, Nakamoto J, Seidenberg MS. Auditory word identification in dyslexic and normally achieving readers. J Exp Child Psychol. 2007;97:183–204. doi: 10.1016/j.jecp.2007.01.005.

- Callan DE, Tajima K, Callan AM, Kubo R, Masaki S, Akahane-Yamada R. Learning-induced neural plasticity associated with improved identification performance after training of a difficult second-language phonetic contrast. Neuroimage. 2003;19:113–124. doi: 10.1016/s1053-8119(03)00020-x.

- Cao F, Peng D, Liu L, Jin Z, Fan N, Deng Y, Booth JR. Developmental differences of neurocognitive networks for phonological and semantic processing in Chinese word reading. Hum Brain Mapp. 2009;30:797–809. doi: 10.1002/hbm.20546.

- Carreiras M, Seghier ML, Baquero S, Estévez A, Lozano A, Devlin JT, Price CJ. An anatomical signature for literacy. Nature. 2009;461:983–986. doi: 10.1038/nature08461.

- Castro-Caldas A, Petersson KM, Reis A, Stone-Elander S, Ingvar M. The illiterate brain. Learning to read and write during childhood influences the functional organization of the adult brain. Brain. 1998;121:1053–1063. doi: 10.1093/brain/121.6.1053.

- Castro-Caldas A, Miranda PC, Carmo I, Reis A, Leote F, Ribeiro C, Ducla-Soares E. Influence of learning to read and write on the morphology of the corpus callosum. Eur J Neurol. 1999;6:23–28. doi: 10.1046/j.1468-1331.1999.610023.x.

- Catani M, Jones DK, ffytche DH. Perisylvian language networks of the human brain. Ann Neurol. 2005;57:8–16. doi: 10.1002/ana.20319.

- Cohen L, Jobert A, Le Bihan D, Dehaene S. Distinct unimodal and multimodal regions for word processing in the left temporal cortex. Neuroimage. 2004;23:1256–1270. doi: 10.1016/j.neuroimage.2004.07.052.

- Coltheart M. The MRC psycholinguistic database. Q J Exp Psychol Sec A. 1981;33:497–505.

- Damian MF, Bowers JS. Orthographic effects in rhyme monitoring tasks: are they automatic? Eur J Cogn Psychol. 2009;22:106–116.

- Dehaene S, Cohen L, Sigman M, Vinckier F. The neural code for written words: a proposal. Trends Cogn Sci. 2005;9:335–341. doi: 10.1016/j.tics.2005.05.004.

- Dejerine J. Sur un cas de cécité verbale avec agraphie, suivi d'autopsie. CR Soc Biol. 1891;43:197–201.

- Devlin JT, Matthews PM, Rushworth MF. Semantic processing in the left inferior prefrontal cortex: a combined functional magnetic resonance imaging and transcranial magnetic stimulation study. J Cogn Neurosci. 2003;15:71–84. doi: 10.1162/089892903321107837.

- Devlin JT, Jamison HL, Gonnerman LM, Matthews PM. The role of the posterior fusiform gyrus in reading. J Cogn Neurosci. 2006;18:911–922. doi: 10.1162/jocn.2006.18.6.911.

- Dirks DD, Wilson RH. The effect of spatially separated sound sources on speech intelligibility. J Speech Hear Res. 1969;12:5–38. doi: 10.1044/jshr.1201.05.

- Donnenwerth-Nolan S, Tanenhaus MK, Seidenberg MS. Multiple code activation in word recognition: evidence from rhyme monitoring. J Exp Psychol Hum Learn. 1981;7:170–180.

- Downing PE, Wiggett AJ, Peelen MV. Functional magnetic resonance imaging investigation of overlapping lateral occipitotemporal activations using multi-voxel pattern analysis. J Neurosci. 2007;27:226–233. doi: 10.1523/JNEUROSCI.3619-06.2007.

- Duncan KJ, Pattamadilok C, Knierim I, Devlin JT. Consistency and variability in functional localisers. Neuroimage. 2009;46:1018–1026. doi: 10.1016/j.neuroimage.2009.03.014.

- Duncan KJ, Pattamadilok C, Devlin JT. Investigating occipito-temporal contributions to reading with TMS. J Cogn Neurosci. 2010;22:739–750. doi: 10.1162/jocn.2009.21207.

- Fiez JA, Petersen SE. Neuroimaging studies of word reading. Proc Natl Acad Sci U S A. 1998;95:914–921. doi: 10.1073/pnas.95.3.914.

- Fiez JA, Balota DA, Raichle ME, Petersen SE. Effects of lexicality, frequency, and spelling-to-sound consistency on the functional anatomy of reading. Neuron. 1999;24:205–218. doi: 10.1016/s0896-6273(00)80833-8.

- Gazzaley A, Cooney JW, McEvoy K, Knight RT, D'Esposito M. Top-down enhancement and suppression of the magnitude and speed of neural activity. J Cogn Neurosci. 2005;17:507–517. doi: 10.1162/0898929053279522.

- Gelfand JR, Bookheimer SY. Dissociating neural mechanisms of temporal sequencing and processing phonemes. Neuron. 2003;38:831–842. doi: 10.1016/s0896-6273(03)00285-x.

- Glover GH. Deconvolution of impulse response in event-related BOLD fMRI. Neuroimage. 1999;9:416–429. doi: 10.1006/nimg.1998.0419.

- Göbel S, Walsh V, Rushworth MF. The mental number line and the human angular gyrus. Neuroimage. 2001;14:1278–1289. doi: 10.1006/nimg.2001.0927.

- Goldinger SD, Luce PA, Pisoni DB. Priming lexical neighbors of spoken words: effects of competition and inhibition. J Mem Lang. 1989;28:501–518. doi: 10.1016/0749-596x(89)90009-0.

- Goswami U, Ziegler JC, Richardson U. The effects of spelling consistency on phonological awareness: a comparison of English and German. J Exp Child Psychol. 2005;92:345–365. doi: 10.1016/j.jecp.2005.06.002.

- Gough PM, Nobre AC, Devlin JT. Dissociating linguistic processes in the left inferior frontal cortex with transcranial magnetic stimulation. J Neurosci. 2005;25:8010–8016. doi: 10.1523/JNEUROSCI.2307-05.2005.

- Grainger J. Word frequency and neighborhood frequency effects in lexical decision and naming. J Mem Lang. 1990;29:228–244.

- Grainger J, Ferrand L. Masked orthographic and phonological priming in visual word recognition and naming: cross-task comparisons. J Mem Lang. 1996;35:623–647.

- Grainger J, Diependaele K, Spinelli E, Ferrand L, Farioli F. Masked repetition and phonological priming within and across modalities. J Exp Psychol Learn Mem Cogn. 2003;29:1256–1269. doi: 10.1037/0278-7393.29.6.1256.

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R. Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron. 1999;24:187–203. doi: 10.1016/s0896-6273(00)80832-6.

- Grill-Spector K, Kourtzi Z, Kanwisher N. The lateral occipital complex and its role in object recognition. Vision Res. 2001;41:1409–1422. doi: 10.1016/s0042-6989(01)00073-6.

- Harm MW, Seidenberg MS. Phonology, reading acquisition, and dyslexia: insights from connectionist models. Psychol Rev. 1999;106:491–528. doi: 10.1037/0033-295x.106.3.491.

- Harm MW, Seidenberg MS. Computing the meanings of words in reading: cooperative division of labor between visual and phonological processes. Psychol Rev. 2004;111:662–720. doi: 10.1037/0033-295X.111.3.662.

- Harris KC, Dubno JR, Keren NI, Ahlstrom JB, Eckert MA. Speech recognition in younger and older adults: a dependency on low-level auditory cortex. J Neurosci. 2009;29:6078–6087. doi: 10.1523/JNEUROSCI.0412-09.2009.

- Herbster AN, Mintun MA, Nebes RD, Becker JT. Regional cerebral blood flow during word and nonword reading. Hum Brain Mapp. 1997;5:84–92. doi: 10.1002/(sici)1097-0193(1997)5:2<84::aid-hbm2>3.0.co;2-i.

- Hickok G, Poeppel D. The cortical organization of speech processing. Nat Rev Neurosci. 2007;8:393–402. doi: 10.1038/nrn2113.

- Hickok G, Okada K, Serences JT. Area Spt in the Human planum temporale supports sensory-motor integration for speech processing. J Neurophysiol. 2009;101:2725–2732. doi: 10.1152/jn.91099.2008.

- Horwitz B, Rumsey JM, Donohue BC. Functional connectivity of the angular gyrus in normal reading and dyslexia. Proc Natl Acad Sci U S A. 1998;95:8939–8944. doi: 10.1073/pnas.95.15.8939.

- Jacquemot C, Pallier C, LeBihan D, Dehaene S, Dupoux E. Phonological grammar shapes the auditory cortex: a functional magnetic resonance imaging study. J Neurosci. 2003;23:9541–9546. doi: 10.1523/JNEUROSCI.23-29-09541.2003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Jenkinson M, Smith S. A global optimisation method for robust affine registration of brain images. Med Image Anal. 2001;5:143–156. doi: 10.1016/s1361-8415(01)00036-6. [DOI] [PubMed] [Google Scholar]

- Jenkinson M, Bannister P, Brady M, Smith S. Improved optimization for the robust and accurate linear registration and motion correction of brain images. Neuroimage. 2002;17:825–841. doi: 10.1016/s1053-8119(02)91132-8. [DOI] [PubMed] [Google Scholar]

- Kaas JH, Hackett TA. Subdivisions of auditory cortex and processing streams in primates. Proc Natl Acad Sci U S A. 2000;97:11793–11799. doi: 10.1073/pnas.97.22.11793. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Kanwisher N, Stanley D, Harris A. The fusiform face area is selective for faces not animals. Neuroreport. 1999;10:183–187. doi: 10.1097/00001756-199901180-00035. [DOI] [PubMed] [Google Scholar]

- Koelsch S, Schulze K, Sammler D, Fritz T, Müller K, Gruber O. Functional architecture of verbal and tonal working memory: an FMRI study. Hum Brain Mapp. 2009;30:859–873. doi: 10.1002/hbm.20550. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leff AP, Iverson P, Schofield TM, Kilner JM, Crinion JT, Friston KJ, Price CJ. Vowel-specific mismatch responses in the anterior superior temporal gyrus: an fMRI study. Cortex. 2009;45:517–526. doi: 10.1016/j.cortex.2007.10.008.

- Lundqvist M, Rehn M, Djurfeldt M, Lansner A. Attractor dynamics in a modular network model of neocortex. Network. 2006;17:253–276. doi: 10.1080/09548980600774619.

- Malach R, Reppas JB, Benson RR, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RB. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci U S A. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- McCandliss BD, Cohen L, Dehaene S. The visual word form area: expertise for reading in the fusiform gyrus. Trends Cogn Sci. 2003;7:293–299. doi: 10.1016/s1364-6613(03)00134-7.

- McClelland JL, Elman JL. The TRACE model of speech perception. Cogn Psychol. 1986;18:1–86. doi: 10.1016/0010-0285(86)90015-0.

- Metsala JL. Spoken word recognition in reading disabled children. J Educ Psychol. 1997;89:159–169.

- Morais J, Cary L, Alegria J, Bertelson P. Does awareness of speech as a sequence of phones arise spontaneously? Cognition. 1979;7:323–331.

- Morais J, Bertelson P, Cary L, Alegria J. Literacy training and speech segmentation. Cognition. 1986;24:45–64. doi: 10.1016/0010-0277(86)90004-1.

- Muneaux M, Ziegler JC. Locus of orthographic effects in spoken word recognition: novel insights from the neighbour generation task. Lang Cogn Proc. 2004;19:641–660.

- Okada K, Hickok G. Identification of lexical-phonological networks in the superior temporal sulcus using functional magnetic resonance imaging. Neuroreport. 2006;17:1293–1296. doi: 10.1097/01.wnr.0000233091.82536.b2.

- Pattamadilok C, Morais J, Ventura P, Kolinsky R. The locus of the orthographic consistency effect in auditory word recognition: Further evidence from French. Lang Cogn Proc. 2007;22:700–726.

- Pattamadilok C, Morais J, De Vylder O, Ventura P, Kolinsky R. The orthographic consistency effect in the recognition of French spoken words: an early developmental shift from sublexical to lexical orthographic activation. Appl Psycholinguistics. 2009;30:441–462.

- Paulesu E, Frith CD, Frackowiak RS. The neural correlates of the verbal component of working memory. Nature. 1993;362:342–345. doi: 10.1038/362342a0.

- Pearlmutter BA. Learning state space trajectories in recurrent neural networks. Neural Comput. 1989;1:263–269.

- Peelen MV, Downing PE. Within-subject reproducibility of category-specific visual activation with functional MRI. Hum Brain Mapp. 2005;25:402–408. doi: 10.1002/hbm.20116.

- Peereman R, Dufour S, Burt JS. Orthographic influences in spoken word recognition: the consistency effect in semantic and gender categorization tasks. Psychon Bull Rev. 2009;16:363–368. doi: 10.3758/PBR.16.2.363.

- Perre L, Pattamadilok C, Montant M, Ziegler JC. Orthographic effects in spoken language: on-line activation or phonological restructuring? Brain Res. 2009;1275:73–80. doi: 10.1016/j.brainres.2009.04.018.

- Petrides M, Pandya DN. Association fiber pathways to the frontal cortex from the superior temporal region in the rhesus monkey. J Comp Neurol. 1988;273:52–66. doi: 10.1002/cne.902730106.

- Petrides M, Pandya DN. Distinct parietal and temporal pathways to the homologues of Broca's area in the monkey. PLoS Biol. 2009;7:e1000170. doi: 10.1371/journal.pbio.1000170.

- Petrides M, Cadoret G, Mackey S. Orofacial somatomotor responses in the macaque monkey homologue of Broca's area. Nature. 2005;435:1235–1238. doi: 10.1038/nature03628.

- Prabhakaran R, Blumstein SE, Myers EB, Hutchison E, Britton B. An event-related fMRI investigation of phonological–lexical competition. Neuropsychologia. 2006;44:2209–2221. doi: 10.1016/j.neuropsychologia.2006.05.025.

- Price CJ. The anatomy of language: a review of 100 fMRI studies published in 2009. Ann N Y Acad Sci. 2010;1191:62–88. doi: 10.1111/j.1749-6632.2010.05444.x.

- Price CJ, Devlin JT. The myth of the visual word form area. Neuroimage. 2003;19:473–481. doi: 10.1016/s1053-8119(03)00084-3.

- Price CJ, Devlin JT. The pro and cons of labelling a left occipitotemporal region. Neuroimage. 2004;22:477–479. doi: 10.1016/j.neuroimage.2004.01.018.

- Price CJ, Mechelli A. Reading and reading disturbance. Curr Opin Neurobiol. 2005;15:231–238. doi: 10.1016/j.conb.2005.03.003.

- Price CJ, Wise RJ, Watson JD, Patterson K, Howard D, Frackowiak RS. Brain activity during reading. The effects of exposure duration and task. Brain. 1994;117:1255–1269. doi: 10.1093/brain/117.6.1255.

- Price CJ, Wise RJ, Frackowiak RS. Demonstrating the implicit processing of visually presented words and pseudowords. Cereb Cortex. 1996;6:62–70. doi: 10.1093/cercor/6.1.62.

- Price CJ, Moore CJ, Humphreys GW, Wise RJS. Segregating semantic from phonological processes during reading. J Cogn Neurosci. 1997;9:727–733. doi: 10.1162/jocn.1997.9.6.727.

- Pugh KR, Mencl WE, Jenner AR, Katz L, Frost SJ, Lee JR, Shaywitz SE, Shaywitz BA. Functional neuroimaging studies of reading and reading disability (developmental dyslexia). Mental Retardation Dev Dis Res Rev. 2000;6:207–213. doi: 10.1002/1098-2779(2000)6:3<207::AID-MRDD8>3.0.CO;2-P.

- Raizada RD, Poldrack RA. Selective amplification of stimulus differences during categorical processing of speech. Neuron. 2007;56:726–740. doi: 10.1016/j.neuron.2007.11.001.

- Rauschecker JP, Scott SK. Maps and streams in the auditory cortex: nonhuman primates illuminate human speech processing. Nat Neurosci. 2009;12:718–724. doi: 10.1038/nn.2331.

- Reis A, Castro-Caldas A. Learning to read and write increases the efficacy of reaching a target in two dimensional space. J Int Neuropsychol Soc. 1997a;3:213.

- Reis A, Castro-Caldas A. Illiteracy: A cause for biased cognitive development. J Int Neuropsychol Soc. 1997b;3:444–450.

- Romero L, Walsh V, Papagno C. The neural correlates of phonological short-term memory: a repetitive transcranial magnetic stimulation study. J Cogn Neurosci. 2006;18:1147–1155. doi: 10.1162/jocn.2006.18.7.1147.

- Rossi S, Hallett M, Rossini PM, Pascual-Leone A. Safety, ethical considerations, and application guidelines for the use of transcranial magnetic stimulation in clinical practice and research. Clin Neurophysiol. 2009;120:2008–2039. doi: 10.1016/j.clinph.2009.08.016.

- Rumsey JM, Horwitz B, Donohue BC, Nace K, Maisog JM, Andreason P. Phonological and orthographic components of word recognition. A PET-rCBF study. Brain. 1997;120:739–759. doi: 10.1093/brain/120.5.739.

- Rushworth MF, Behrens TE, Johansen-Berg H. Connection patterns distinguish 3 regions of human parietal cortex. Cereb Cortex. 2006;16:1418–1430. doi: 10.1093/cercor/bhj079.

- Sack AT, Cohen Kadosh R, Schuhmann T, Moerel M, Walsh V, Goebel R. Optimizing functional accuracy of TMS in cognitive studies: a comparison of methods. J Cogn Neurosci. 2009;21:207–221. doi: 10.1162/jocn.2009.21126.

- Schacter DL, Addis DR, Buckner RL. Remembering the past to imagine the future: the prospective brain. Nat Rev Neurosci. 2007;8:657–661. doi: 10.1038/nrn2213.

- Seghier ML, Lazeyras F, Pegna AJ, Annoni JM, Zimine I, Mayer E, Michel CM, Khateb A. Variability of fMRI activation during a phonological and semantic language task in healthy subjects. Hum Brain Mapp. 2004;23:140–155. doi: 10.1002/hbm.20053.

- Seidenberg MS, Tanenhaus MK. Orthographic effects on rhyme monitoring. J Exp Psychol Hum Learn. 1979;5:546–554.

- Seltzer B, Pandya DN. Afferent cortical connections and architectonics of the superior temporal sulcus and surrounding cortex in the rhesus monkey. Brain Res. 1978;149:1–24. doi: 10.1016/0006-8993(78)90584-x.

- Seltzer B, Pandya DN. Further observations on parieto-temporal connections in the rhesus monkey. Exp Brain Res. 1984;55:301–312. doi: 10.1007/BF00237280.

- Seltzer B, Pandya DN. Post-rolandic cortical projections of the superior temporal sulcus in the rhesus monkey. J Comp Neurol. 1991;312:625–640. doi: 10.1002/cne.903120412.

- Seltzer B, Pandya DN. Parietal, temporal, and occipital projections to cortex of the superior temporal sulcus in the rhesus monkey: a retrograde tracer study. J Comp Neurol. 1994;343:445–463. doi: 10.1002/cne.903430308.

- Sereno MI, Dick F. Mapping place of consonant articulation in human somatomotor cortex. Soc Neurosci Abstr. 2008;34:873.14.

- Shaywitz BA, Shaywitz SE, Pugh KR, Mencl WE, Fulbright RK, Skudlarski P, Constable RT, Marchione KE, Fletcher JM, Lyon GR, Gore JC. Disruption of posterior brain systems for reading in children with developmental dyslexia. Biol Psychiatry. 2002;52:101–110. doi: 10.1016/s0006-3223(02)01365-3.

- Shaywitz BA, Shaywitz SE, Blachman BA, Pugh KR, Fulbright RK, Skudlarski P, Mencl WE, Constable RT, Holahan JM, Marchione KE, Fletcher JM, Lyon GR, Gore JC. Development of left occipitotemporal systems for skilled reading in children after a phonologically-based intervention. Biol Psychiatry. 2004;55:926–933. doi: 10.1016/j.biopsych.2003.12.019.

- Silvanto J, Muggleton N, Walsh V. State-dependency in brain stimulation studies of perception and cognition. Trends Cogn Sci. 2008;12:447–454. doi: 10.1016/j.tics.2008.09.004.

- Sparing R, Buelte D, Meister IG, Paus T, Fink GR. Transcranial magnetic stimulation and the challenge of coil placement: a comparison of conventional and stereotaxic neuronavigational strategies. Hum Brain Mapp. 2008;29:82–96. doi: 10.1002/hbm.20360.

- Stoeckel C, Gough PM, Watkins KE, Devlin JT. Supramarginal gyrus involvement in visual word recognition. Cortex. 2009;45:1091–1096. doi: 10.1016/j.cortex.2008.12.004.

- Taft M. Orthographically influenced abstract phonological representation: Evidence from non-rhotic speakers. J Psycholinguistic Res. 2006;35:67–78. doi: 10.1007/s10936-005-9004-5.

- Taft M, Hambly G. The influence of orthography on phonological representations in the lexicon. J Mem Lang. 1985;24:320–335.

- Tanenhaus MK, Flanigan HP, Seidenberg MS. Orthographic and phonological activation in auditory and visual word recognition. Mem Cogn. 1980;8:513–520. doi: 10.3758/bf03213770.

- Ventura P, Morais J, Pattamadilok C, Kolinsky R. The locus of the orthographic consistency effect in auditory word recognition. Lang Cogn Proc. 2004;19:57–95.

- Wassermann EM. Risk and safety of repetitive transcranial magnetic stimulation: report and suggested guidelines from the International Workshop on the Safety of Repetitive Transcranial Magnetic Stimulation, June 5–7, 1996. Electroencephalogr Clin Neurophysiol. 1998;108:1–16. doi: 10.1016/s0168-5597(97)00096-8.

- Woolrich MW, Ripley BD, Brady M, Smith SM. Temporal autocorrelation in univariate linear modeling of FMRI data. Neuroimage. 2001;14:1370–1386. doi: 10.1006/nimg.2001.0931.

- Yoncheva YN, Zevin JD, Maurer U, McCandliss BD. Auditory selective attention to speech modulates activity in the visual word form area. Cereb Cortex. 2010;20:622–632. doi: 10.1093/cercor/bhp129.

- Zevin JD, McCandliss BD. Dishabituation of the BOLD response to speech sounds. Behav Brain Funct. 2005;1:4. doi: 10.1186/1744-9081-1-4.

- Zhang Y, Brady M, Smith S. Segmentation of brain MR images through a hidden Markov random field model and the expectation-maximization algorithm. IEEE Trans Med Imaging. 2001;20:45–57. doi: 10.1109/42.906424.

- Ziegler JC, Ferrand L. Orthography shapes the perception of speech: the consistency effect in auditory word recognition. Psychon Bull Rev. 1998;5:683–689.

- Ziegler JC, Goswami U. Reading acquisition, developmental dyslexia, and skilled reading across languages: a psycholinguistic grain size theory. Psychol Bull. 2005;131:3–29. doi: 10.1037/0033-2909.131.1.3.

- Ziegler JC, Perry C. No more problems in Coltheart's neighborhood: resolving neighborhood conflicts in the lexical decision task. Cognition. 1998;68:53–62. doi: 10.1016/s0010-0277(98)00047-x.

- Ziegler JC, Petrova A, Ferrand L. Feedback consistency effects in visual and auditory word recognition: where do we stand after more than a decade? J Exp Psychol Learn Mem Cogn. 2008;34:643–661. doi: 10.1037/0278-7393.34.3.643.

- Zurek PM. Binaural advantages and directional effects in speech intelligibility. In: Studebaker GA, Hochberg I, editors. Acoustical factors affecting hearing aid performance. Ed 2. Needham Heights, MA: Allyn and Bacon; 1993.