Abstract

Picking up a cup requires transporting the arm to the cup (transport component) and preshaping the hand appropriately to grasp the handle (grip component). Here, we used functional magnetic resonance imaging to examine the human neural substrates of the transport component and its relationship with the grip component. Participants were shown three-dimensional objects placed either at a near location, adjacent to the hand, or at a far location, within reach but not adjacent to the hand. Participants performed three tasks at each location as follows: (1) touching the object with the knuckles of the right hand; (2) grasping the object with the right hand; or (3) passively viewing the object. The transport component was manipulated by positioning the object in the far versus the near location. The grip component was manipulated by asking participants to grasp the object versus touching it. For the first time, we have identified the neural substrates of the transport component, which include the superior parieto-occipital cortex and the rostral superior parietal lobule. Consistent with past studies, we found specialization for the grip component in bilateral anterior intraparietal sulcus and left ventral premotor cortex; now, however, we also found grasp-related activity even when no transport was involved. In addition to finding areas specialized for the transport and grip components in parietal cortex, we found an integration of the two components in dorsal premotor cortex and supplementary motor areas, two regions that may be important for the coordination of reach and grasp.

Introduction

Although the everyday act of reaching out to pick up a coffee cup seems like a single fluid action, it is arguably composed of two dissociable components. For example, some neuropsychological patients are able to reach the cup accurately but then fail to preshape the hand appropriately for grasping the handle (Binkofski et al., 1998), while other patients (with optic ataxia) reach to an incorrect location even though they can form a proper grip under some circumstances (Cavina-Pratesi et al., 2010). Although functional magnetic resonance imaging (fMRI) has been used extensively to study the neural substrates of hand grasping (for review, see Culham et al., 2006), the human neural substrates of reaching with the arm are not well established, although a number of regions have been implicated in patients with optic ataxia (Karnath and Perenin, 2005). Which areas of the human brain enable us to move the hand to interact directly with objects within reach?

In an influential model, Jeannerod (1981) proposed that reach-to-grasp actions can be broken into a transport component, moving the hand toward the goal object, and a grip component, shaping the hand to reflect the shape and size of the object. According to the model, the transport component is driven by “extrinsic” properties of objects (i.e., location) and relies on the proximal arm/shoulder muscles, whereas the grip component is driven by “intrinsic” properties of objects (i.e., shape and size) and relies on the distal hand/finger muscles (Arbib, 1981). Although the grip and transport channels must be closely choreographed (Jeannerod, 1999), the model nevertheless proposes that they are largely independent.

Almost 30 years later, the theory of independent transport and grip components remains quite controversial. In humans, supporting evidence comes from neuroimaging (Culham and Valyear, 2006; Castiello and Begliomini, 2008), neuropsychology (Jeannerod et al., 1994; Binkofski et al., 1998; Shallice et al., 2005), transcranial magnetic stimulation (Rice et al., 2006; Davare et al., 2007), and developmental (von Hofsten, 1982) studies. In macaques, supporting evidence comes from neurophysiological (Sakata et al., 1995; Andersen and Buneo, 2002; Buneo et al., 2002; Fattori et al., 2005), neuroanatomical (Rizzolatti and Matelli, 2003) and inactivation (Gallese et al., 1994) studies. In both species, the anterior intraparietal sulcus (human aIPS; macaque AIP) is thought to be specialized for hand grip, whereas the superior parieto-occipital cortex (human SPOC; macaque V6A) and medial intraparietal cortex are specialized for arm transport. These areas then project to the ventral premotor cortex (vPM) and dorsal premotor cortex (dPM), respectively, with similar specializations (Tanné-Gariépy et al., 2002). However, evidence challenging the independence of the two components comes from theoretical models (Smeets and Brenner, 1999), kinematic covariance of transport and grip (Jeannerod et al., 1998), neurons selective to both reaching and grasping (Stark et al., 2007; Fattori et al., 2010), and lesions compromising both components (Jeannerod, 1986; Perenin and Vighetto, 1988; Battaglini et al., 2002; Karnath and Perenin, 2005).

Regardless of whether or not the two components are completely independent, the transport component has scarcely been studied separately from the grip component, particularly in human neuroimaging. Because of movement-related artifacts (Culham, 2006), many neuroimaging studies purportedly interested in "reaching" have used actions in which no transport occurs; for example, joystick/stylus movements (e.g., Grefkes et al., 2004) or pointing movements where the index finger is aimed toward the target but the arm is not extended (for a discussion, see Culham et al., 2006). Other studies (Culham, 2004; Prado et al., 2005; Filimon et al., 2007) have investigated reaching (transport without grip) and grasping (transport with grip) compared with rest or passive viewing conditions, but subtractions among these conditions do not isolate the transport component from other factors (e.g., response selection, motor attention, sensory feedback, etc.). Here, we developed a novel paradigm to examine arm transport independently of grip and of these confounding factors. Using fMRI, we were thus able to isolate the neural substrates of arm transport for the first time and to investigate the relationship between the transport and grip components in the normal human brain.

Materials and Methods

Experimental design

We designed a paradigm in which grip and transport components were factorially manipulated in two separate experiments (Fig. 1). In experiment 1, participants performed grasping, touching, or passive viewing (here called looking) tasks upon peripheral objects in near (immediately adjacent to the hand) or far space (at the furthest reachable extent of the arm) with respect to the hand's starting location while fixating. The logic was that grasping but not touching involves the grip component, while actions in far but not near space involve the transport component. Localizing the transport component by comparing actions performed toward the far position versus actions performed toward the near one allowed us to control for confounds present in previous studies. Indeed, actions executed toward both the far and the near positions shared the same cognitive components, including visual object recognition, movement intention/attention/preparation, and sensorimotor interactions associated with action execution. The passive viewing conditions served as a control for differences in the retinal locations of the objects. In experiment 1, actions were performed in closed loop (i.e., with vision available throughout the movement). In experiment 2, we investigated whether or not the activations observed in experiment 1 were truly related to the transport component and not simply a consequence of the visual feedback, target location, or the direction of transport. Thus, we had participants perform the actions in open loop (i.e., vision available only before movement onset) from two different starting locations (near the body vs away from the body). Unless otherwise stated, methods for experiment 2 were the same as in experiment 1.
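The factorial logic can be written as contrast weights over the six conditions of experiment 1. The sketch below is illustrative only: the helper names (`contrast`, `evaluate`) and the exact weights are our own choices, not those used in the original analysis, which relied on the ANOVAs described under Logic of statistical analyses and classification of patterns.

```python
# Six conditions of experiment 1, labeled as in Fig. 1.
CONDITIONS = ["Gf", "Tf", "Lf", "Gn", "Tn", "Ln"]


def contrast(positive, negative):
    """Balanced contrast weights: +1/len(positive) for each positive condition,
    -1/len(negative) for each negative condition, and 0 elsewhere."""
    weights = []
    for cond in CONDITIONS:
        if cond in positive:
            weights.append(1.0 / len(positive))
        elif cond in negative:
            weights.append(-1.0 / len(negative))
        else:
            weights.append(0.0)
    return weights


# Grip component: grasping vs touching, pooled over both locations.
grip = contrast({"Gf", "Gn"}, {"Tf", "Tn"})
# Transport component: far vs near, for the two action tasks only.
transport = contrast({"Gf", "Tf"}, {"Gn", "Tn"})
# Retinal-location control: far vs near during passive viewing.
retinal = contrast({"Lf"}, {"Ln"})
# Far-vs-near effect for actions over and above the one for looking.
transport_beyond_retinal = [t - r for t, r in zip(transport, retinal)]


def evaluate(weights, values):
    """Weighted sum of per-condition values (e.g., beta weights)."""
    return sum(w * values[c] for w, c in zip(weights, CONDITIONS))
```

Every contrast sums to zero, so a condition-independent offset in an area's response cancels out; only the grip or transport difference survives.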

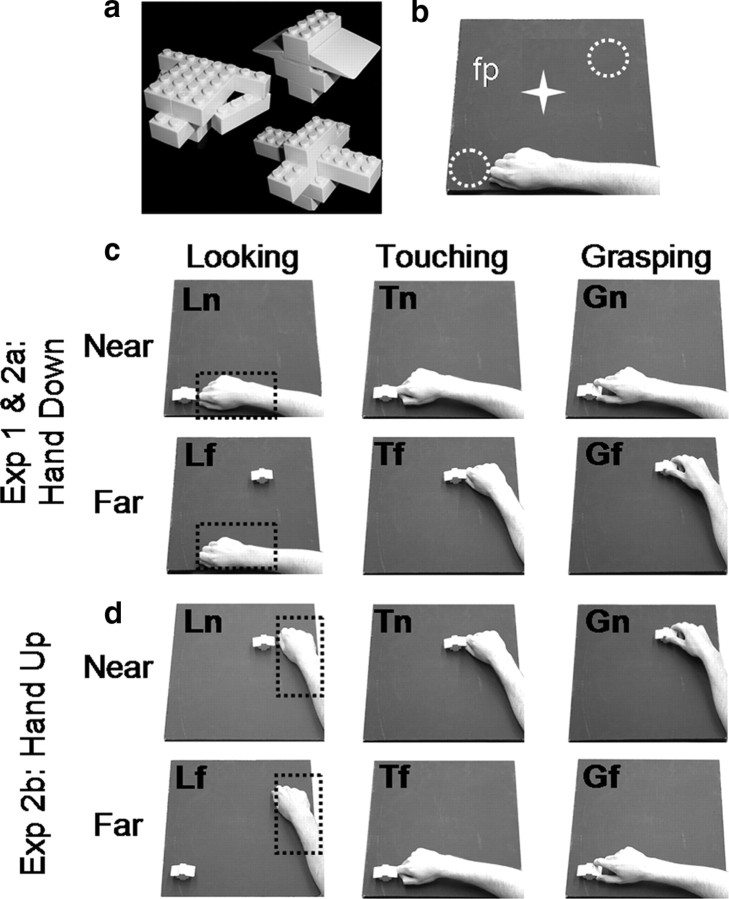

Figure 1.

Schematic illustration of the stimuli and setup used for experiments 1 and 2. a, Stimuli were Lego pieces assembled to create ∼10 different novel 3D objects. b, The setup required participants to gaze at the fixation point (fp) while performing the tasks at two possible object locations: near and far from the hand (white dotted circles). The white star represents the fixation point, which was located midway between the two objects. c, d, For both experiments, the setup is illustrated from the point of view of the participants. The starting positions of the hand are highlighted by black dotted rectangles. In experiment 1, only one starting position was used (c). In experiment 2, two starting positions were used (c, d). At trial onset, participants were asked to perform one of the following tasks: (1) looking (left column): passively viewing the objects; (2) touching (middle column): touching the object with the knuckles; or (3) grasping (right column): using a precision grip (with the index finger and the thumb) to grasp and lift the object. Actions performed at the location furthest from the starting position required arm transport. The hand down starting position (c) involved a rotation of the elbow to extend (abduct) the arm while keeping the palm down (pronated). The hand up starting position (d) involved a rotation of the elbow to flex (adduct) the arm while keeping the palm down (pronated). Grasping at the near locations required hand displacement but no arm transport. Overall, there were six conditions: Gf, grasping the far object; Tf, touching the far object; Lf, passive viewing of the far object; Gn, grasping the near object; Tn, touching the near object; Ln, passive viewing of the near object.

Participants

Participants were students recruited from the University of Western Ontario (London, Ontario, Canada). Eleven students (age range: 24–34 years; 5 female) participated in experiment 1 and 14 students (age range: 23–35 years; 5 female) participated in experiment 2. Data from one participant in each experiment were discarded because of motion artifacts, leaving 10 and 13 participants, respectively. All participants had normal or corrected-to-normal vision and were fully right-handed as measured by the Edinburgh Handedness Inventory (Oldfield, 1971). All participants performed repeated functional scans and one anatomical scan during the same session lasting up to 2 h. Eight additional right-handed volunteers (mean age: 25.6 years; 5 female) were recruited from Durham University (Durham, UK) to participate in a behavioral control experiment measuring kinematic parameters of the reach-to-grasp and reach-to-touch movements in a setup similar to that used within the scanner. Informed consent was given before the experiments in accordance with the University of Western Ontario Health Sciences and the Durham University Review Ethics Boards.

Imaging experiments

Tasks

Real three-dimensional (3D) objects (Fig. 1a) were presented at one of two different spatial locations (near the hand and far from the hand), and participants were required to perform one of three possible tasks depending on the auditory instructions. The auditory instructions consisted of a recorded voice saying “grasp,” “touch,” or “look.” In the grasping condition (G), participants were asked to grasp the object using a precision grip with their index finger and thumb, lift it up slightly, put it back in place, and return to the starting position (Fig. 1c, experiment 1, right column). In the touching condition (T), participants were asked to contact the objects with their knuckles (without forming a grip) and return to the starting position (Fig. 1c, experiment 1, middle column). G and T tasks differed depending on the spatial location of the target objects. Objects were presented at one of two different locations: a near location (n), immediately beside the hand at the starting position, or a far location (f), away from the starting position. When the stimulus was presented near the hand, no arm extension was required and the participants were able to grasp and touch the objects by a simple, small displacement of the hand. When the stimulus was presented far from the hand, the extension of the arm was necessary to either grasp or touch the target object. In the look condition (L), participants were asked not to perform any action and to keep looking at the fixation point (Fig. 1c, experiment 1, left column).

We used a slow event-related design with trials spaced every 16 s. After an auditory instruction (8 s before trial onset), the experimenter placed the object on the platform (6 s before trial onset). Each trial began with the illumination of the platform with a bright light-emitting diode (LED), cueing participants to initiate the task. The length of the illumination period was manipulated between experiment 1 and experiment 2 (see below, Timing and experimental conditions: experiment 1 and Timing and experimental conditions: experiment 2). After offset of the illumination LED, the participant received the auditory instruction for the following trial and the experimenter replaced the object with another. Participants could not see the experimenter placing the stimuli, given that the bore was completely dark (except for the fixation point, which was not bright enough to illuminate the experimenter's movements).
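The trial sequence can be sketched as an event schedule. This is a minimal sketch, not the SuperLab script that actually drove the LEDs; the helper `schedule` and the time of the first trial onset are hypothetical, while the 16 s spacing, the 8 s and 6 s leads, and the illumination durations come from the text.

```python
from dataclasses import dataclass

TRIAL_SPACING_S = 16.0    # one trial every 16 s
INSTRUCTION_LEAD_S = 8.0  # auditory instruction 8 s before trial onset
PLACEMENT_LEAD_S = 6.0    # object placed on the platform 6 s before onset


@dataclass
class TrialEvents:
    instruction: float  # auditory cue ("grasp", "touch", or "look")
    placement: float    # experimenter places the object
    light_on: float     # illuminator LED on = trial onset
    light_off: float    # illuminator LED off


def schedule(n_trials, illumination_s, first_onset_s=INSTRUCTION_LEAD_S):
    """Event times in seconds from run start. first_onset_s is hypothetical;
    it only needs to leave room for the first trial's instruction."""
    events = []
    for i in range(n_trials):
        onset = first_onset_s + i * TRIAL_SPACING_S
        events.append(TrialEvents(
            instruction=onset - INSTRUCTION_LEAD_S,
            placement=onset - PLACEMENT_LEAD_S,
            light_on=onset,
            light_off=onset + illumination_s,
        ))
    return events


exp1 = schedule(24, illumination_s=2.0)  # experiment 1: 2 s of full vision
exp2 = schedule(24, illumination_s=0.4)  # experiment 2: 400 ms preview
```

With either illumination duration, the next trial's instruction (8 s after the current onset) falls after the illuminator has gone off, matching the order of events described above.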

Apparatus, stimuli, and viewing conditions

During the experiments, each participant lay supine within the magnet with the torso and head coil tilted (∼5–10°) and the head tilted within the coil at an additional angle (∼20°) to permit direct viewing of the stimuli without mirrors. A wooden platform was placed above the participant's pelvis to enable presentation of real 3D stimuli at different spatial locations that could be comfortably reached by the participant. Each participant rested the right hand at the starting position, which varied between experiment 1 and experiment 2 (see below, Timing and experimental conditions: experiment 1 and Timing and experimental conditions: experiment 2). The upper right arm was held by a hemicylindrical brace, which prevented movements of the shoulder and head but allowed the arm to rotate along an arc by pivoting at the elbow and allowed the wrist to bend freely. The wooden platform had a flat surface (50 × 50 cm) that could be tilted by an adjustable angle, typically ∼25°, such that the edge closest to the participant was lower than the far edge, enabling participants to see all three dimensions of the object. Two plastic tracks, not shown in Fig. 1, were mounted atop the platform to allow the experimenter to slide the stimuli to one of the two locations. Pieces of Lego were assembled to form 10 objects, each measuring ∼5 × 2 × 1.5 cm in length, depth, and height, respectively (Fig. 1a). Objects were randomly assigned to each task and each spatial location.

The participant maintained fixation on a dim LED (masked by a 0.1° aperture) that was positioned ∼10° of visual angle above the platform and equidistant from the two locations (Fig. 1b). A bright LED (illuminator) was used to briefly illuminate the working space at the onset of each trial. Both the fixation LED and the illuminator LED were independently mounted on a flexible stalk (made of Loc-line, Lockwood Products) attached to the wooden platform. Another set of LEDs was mounted at the end of the platform, visible to the experimenter but not the participant, to instruct the experimenter to place an object at the correct location at the appropriate time. All of the LEDs were controlled by SuperLab software (Cedrus) on a laptop personal computer that received a signal from the MRI scanner at the start of each trial.

Timing and experimental conditions: experiment 1

In experiment 1, participants were required to rest the right hand at a starting position in the lower left of the platform (Fig. 1c, left column, dotted rectangle). In the near condition, the objects were presented in the lower left portion of the platform immediately adjacent to the starting position of the hand (Fig. 1c, experiment 1, top row, left), while stimuli for the far condition were presented in the upper right portion of the platform at the furthest comfortable extent of the arm (Fig. 1c, experiment 1, bottom row, left). As a consequence, actions including arm transport required an outward movement of the arm toward the upper right.

At trial onset, the platform was illuminated for 2 s, enabling the participants to detect the stimuli and perform the instructed tasks in full vision. During these 2 s, the experimenter, who was standing at the end of the magnet bore, could monitor the participant's performance and signal any errors online via a button press; these signals were observed and recorded by a second experimenter in the operating room.

The combination of three tasks (G, T, and L) and two spatial locations (n and f) gave rise to a 3 × 2 design consisting of six different experimental conditions: grasp near (Gn); grasp far (Gf); touch near (Tn); touch far (Tf); look near (Ln); look far (Lf).

Each run consisted of 24 trials, and each experimental condition was repeated four times in a random order for a total running time of ∼7 min. Each participant performed four runs for a total of 16 observations per experimental condition and a total time ∼40 min.
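One way to generate such a run is a uniform shuffle of the 24 trials. The text states only that the order was random, so the shuffle, the seed, and the helper `make_run` below are assumptions; the arithmetic reproduces the 16 observations per condition.

```python
import random
from collections import Counter

CONDITIONS = ["Gn", "Gf", "Tn", "Tf", "Ln", "Lf"]
REPEATS_PER_RUN = 4
N_RUNS = 4
TRIAL_SPACING_S = 16


def make_run(rng):
    """One run: each condition REPEATS_PER_RUN times, in shuffled order."""
    trials = [c for c in CONDITIONS for _ in range(REPEATS_PER_RUN)]
    rng.shuffle(trials)
    return trials


rng = random.Random(2010)  # hypothetical seed, for reproducibility only
runs = [make_run(rng) for _ in range(N_RUNS)]

trials_per_run = len(runs[0])                             # 6 x 4 = 24
observations_per_condition = REPEATS_PER_RUN * N_RUNS     # 4 x 4 = 16
trial_time_per_run_s = trials_per_run * TRIAL_SPACING_S   # 384 s = 6.4 min
```

The 24 trials alone occupy 384 s (6.4 min), in line with the ∼7 min run length.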

Timing and experimental conditions: experiment 2

Experiment 2 used a 2 × 3 × 2 design with three factors: hand starting position [hand down (HD) vs hand up (HU)] × task (G, T, and L) × location (n vs f objects, with respect to the hand's starting location), as shown in Figure 1 (experiment 2). The starting position was changed between runs to avoid mass motion artifacts related to arm position (Culham, 2006) and to avoid an excessive number of experimental conditions within each run. During odd-numbered runs (experiment 2a), the participant began with the hand in the starting position in the lower left portion of the platform (HD), as in experiment 1, and directed the arm outward and rightward to act upon objects at the f location (Fig. 1c, experiment 2a). During even-numbered runs (experiment 2b), the participant began with the hand in the starting position in the upper right portion of the platform (HU) and directed the arm inward and leftward to act upon objects at the f location (Fig. 1d, experiment 2b, bottom row). In both experiments 2a and 2b, actions executed toward the objects in the n location (Fig. 1c, experiment 2a, top row; Fig. 1d, experiment 2b, top row) were performed by a simple hand displacement to a location immediately adjacent to the starting position of the hand.

The timing used in experiments 2a and 2b was identical to that in experiment 1, except that the platform was illuminated for only 400 ms. Behavioral piloting (Cavina-Pratesi et al., 2007a) indicated that 400 ms was shorter than the typical range of reaction times (as confirmed by our kinematic control experiment). Between the first and second experiments we obtained a magnetic resonance-compatible, infrared-sensitive camera that was positioned on the head coil to record the participant's actions (MRC Systems). Videos of the runs were then screened offline, and trials containing errors were cut from the data (see below, Preprocessing). To see examples of the actions performed in the scanner, please watch the videos provided, available at www.jneurosci.org as supplemental material. In addition, the 3D Lego stimuli were painted white to increase their contrast with respect to the black background of the platform.

Each run consisted of 24 trials, 4 trials for each of the 6 conditions at a given starting position (HD in experiment 2a and HU in experiment 2b) in a random order for a total run time of ∼7 min. Each participant performed at least 3 runs per starting position for a minimum total number of 12 trials per experimental condition.
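The 2 × 3 × 2 design of experiment 2 and its split across runs can be enumerated as follows. The cell labels (e.g., `HD-Gn`) and the helper `hand_for_run` are hypothetical conventions introduced for illustration; the counts follow the text.

```python
from itertools import product

HANDS = ["HD", "HU"]     # starting position, varied between runs
TASKS = ["G", "T", "L"]  # grasp, touch, look
LOCATIONS = ["n", "f"]   # relative to the hand's starting position

# Full 2 x 3 x 2 design: 12 cells, labeled e.g. "HD-Gn".
cells = [f"{h}-{t}{l}" for h, t, l in product(HANDS, TASKS, LOCATIONS)]

# Within a run only one starting position is used, so each run holds 6 cells.
cells_by_hand = {h: [c for c in cells if c.startswith(h)] for h in HANDS}


def hand_for_run(run_number):
    """Odd-numbered runs: HD (experiment 2a); even-numbered runs: HU (2b)."""
    return "HD" if run_number % 2 == 1 else "HU"


TRIALS_PER_CELL_PER_RUN = 4
MIN_RUNS_PER_HAND = 3
trials_per_run = TRIALS_PER_CELL_PER_RUN * len(cells_by_hand["HD"])  # 24
min_trials_per_cell = TRIALS_PER_CELL_PER_RUN * MIN_RUNS_PER_HAND    # 12
```

Note that "f" denotes a different physical position on the platform in HD and HU runs, because location is coded relative to where the hand starts.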

Imaging parameters: experiments 1 and 2

All imaging was performed at the Robarts Research Institute (London, Ontario, Canada) using a 4 tesla whole-body MRI system (Varian; Siemens). In experiment 1, we used a transmit–receive, cylindrical birdcage radiofrequency head coil. Functional MRI volumes based on the blood oxygenation level-dependent (BOLD) signal (Ogawa et al., 1992) were collected using an optimized segmented T2*-weighted gradient echo echoplanar imaging sequence [19.2 cm field of view with 64 × 64 matrix size for an in-plane resolution of 3 mm; repetition time (TR) = 1 s with two segments/plane for a volume acquisition time of 2 s; time to echo (TE) = 15 ms; flip angle (FA) = 45°, navigator corrected]. Each volume comprised 14 contiguous slices of 6 mm thickness, angled at ∼30° from axial to sample occipital, parietal, posterior temporal, and posterior/superior frontal cortices. A constrained 3D phase shimming procedure was performed to optimize the magnetic field homogeneity over the prescribed functional planes (Klassen and Menon, 2004). During each experimental session, a T1-weighted anatomic reference volume was acquired along the same orientation as the functional images using a 3D acquisition sequence (256 × 256 × 64 matrix size; 3.0 mm reconstructed slice thickness; time for inversion = 600 ms; TR = 11.5 ms; TE = 5.2 ms; FA = 11°).
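As a worked check, the derived acquisition quantities for experiment 1 follow directly from the stated parameters; nothing below goes beyond the values given in the text, and the variable names are only labels.

```python
FOV_MM = 192.0          # 19.2 cm field of view
MATRIX = 64             # 64 x 64 in-plane matrix
TR_S = 1.0              # repetition time per segment
SEGMENTS = 2            # two segments per plane
N_SLICES = 14           # experiment 1
SLICE_MM = 6.0          # contiguous 6 mm slices (no gap)
TRIAL_SPACING_S = 16.0  # one trial every 16 s

in_plane_mm = FOV_MM / MATRIX                        # 3.0 mm
volume_time_s = TR_S * SEGMENTS                      # 2.0 s per volume
voxel_mm3 = in_plane_mm ** 2 * SLICE_MM              # 3 x 3 x 6 = 54 mm^3
slab_mm = N_SLICES * SLICE_MM                        # 84 mm of coverage
volumes_per_trial = TRIAL_SPACING_S / volume_time_s  # 8 volumes per trial
```

The 2 s volume time is also why the GLM predictors described under Preprocessing span exactly one image volume.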

In experiment 2, data were collected using a four-channel phased-array “clamshell” coil built in-house (see supplemental Fig. 1, available at www.jneurosci.org as supplemental material). The coil consisted of two fixed occipital elements and two hinged temporal elements. The clamshell forms a ¾ cylinder with an open face providing an unobstructed view of the stimuli. The hinged temporal elements allowed the coil to be adjusted to tightly but comfortably enclose (with the addition of foam) the participant's head for optimal signal-to-noise while also providing additional head stabilization. Because phased array coils consist of multiple elements with different orientations, they experience less signal loss in the tilted position compared with the single channel head coil used in experiment 1; thus, we were able to tilt the coil up to 45° (though the coil was typically tilted only by ∼30°). Data from the coil were combined using a sum-of-squares reconstruction method. Each volume comprised 17 contiguous slices of 5 mm thickness at the same orientation as in experiment 1.
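Sum-of-squares reconstruction combines the per-channel signals voxel by voxel as the square root of the summed squared magnitudes. The sketch below assumes each coil element's image is available as a flat list of (possibly complex) voxel values; the function names are hypothetical, and this is not the scanner's reconstruction software.

```python
import math


def sum_of_squares(channel_values):
    """Root-sum-of-squares combination for one voxel:
    sqrt(sum over channels of |s_k|^2). Complex inputs are reduced to magnitude."""
    return math.sqrt(sum(abs(s) ** 2 for s in channel_values))


def combine_image(channels):
    """channels: one flat list of voxel values per coil element (e.g., the two
    occipital and two temporal elements). Returns the combined magnitude image."""
    n_voxels = len(channels[0])
    if any(len(ch) != n_voxels for ch in channels):
        raise ValueError("all channel images must have the same number of voxels")
    return [sum_of_squares([ch[v] for ch in channels]) for v in range(n_voxels)]
```

Because it uses magnitudes only, the combination needs no estimate of each element's phase or sensitivity profile, which suits a coil whose elements have different orientations.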

Preprocessing

For data analysis, we used the BrainVoyager software package (QX, version 1.8, Brain Innovation). Functional data were superimposed on anatomical brain images, aligned on the plane between the anterior commissure and posterior commissure, and transformed into Talairach space (Talairach and Tournoux, 1988). Functional data were preprocessed with temporal high-pass filtering (to remove frequencies <3 cycles/run). Data were analyzed using a general linear model (GLM) with separate predictors for each trial type for each subject. The model included six predictors for Gn, Gf, Tn, Tf, Ln, and Lf. Predictors were modeled using a 2 s (or 1 image volume) rectangular wave for each trial and then convolved with a standard hemodynamic response function. This time window was chosen because it covered stimulus presentation and participant response for actions executed in both the near and far locations. The remaining 14 s were considered the intertrial interval. Trials in which an error occurred (e.g., the experimenter or participant dropped or fumbled the object) were cut from the data using MATLAB software. We cut a total of 1 and 4% of the trials in experiment 1 and experiment 2, respectively. We chose to exclude these data from analysis rather than to model the errors with predictors of no interest because the errors could vary in amplitude, duration, and onset, such that a single hemodynamic predictor would not fully account for their effects (and would thus increase residual variance and reduce statistical power). Both experiments used random effects analyses, which do not require correction for temporal autocorrelation (because the sample size is determined by the number of subjects rather than the number of time points). Thus, although excluding data points following error trials may affect the magnitude of serial correlations, it should have a negligible effect on the statistics.
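The predictor construction can be sketched as a 1-volume boxcar at each trial onset convolved with a hemodynamic response function. A two-gamma HRF is assumed here with illustrative parameters (a positive gamma with shape 6 and an undershoot gamma with shape 16 scaled by 1/6); these are not necessarily BrainVoyager's defaults, and the function names are hypothetical. One such predictor would be built per condition from that condition's trial onsets.

```python
import math


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density at time t (s); zero for t <= 0."""
    if t <= 0:
        return 0.0
    return (t ** (shape - 1) * math.exp(-t / scale)
            / (math.gamma(shape) * scale ** shape))


def two_gamma_hrf(t):
    """Assumed HRF: a gamma peaking near 5 s minus a smaller, later gamma
    for the post-stimulus undershoot. Parameters are illustrative."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0


def trial_predictor(onsets_vol, n_vols, vol_s=2.0, duration_vols=1, hrf_len_s=32.0):
    """Rectangular wave (1 for `duration_vols` volumes from each onset, else 0)
    convolved with the HRF sampled once per volume."""
    boxcar = [0.0] * n_vols
    for onset in onsets_vol:
        for k in range(duration_vols):
            if onset + k < n_vols:
                boxcar[onset + k] = 1.0
    kernel = [two_gamma_hrf(i * vol_s) for i in range(int(hrf_len_s / vol_s))]
    peak = max(kernel)
    kernel = [h / peak for h in kernel]  # an isolated event peaks at 1.0
    return [
        sum(boxcar[t - j] * kernel[j] for j in range(len(kernel)) if t - j >= 0)
        for t in range(n_vols)
    ]
```

With 2 s volumes, a single event at volume 5 yields a predictor peaking at volume 8 (6 s after onset), inside the 4–8 s window used later for %BSC.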

The data were z-transformed before GLM analysis, such that the β weights were in units of z-scores (i.e., SDs). Because the z-scores derived within each run are based on the same mean and SD, comparisons between conditions within the same runs (all of our condition differences except the hand starting position in experiment 2) reflect differences in the magnitude of activation. However, z-scores derived from different sets of runs, as for the HU and HD conditions in experiment 2, cannot be easily compared because differences could arise from normalization. Here, our goal was to show that the basic pattern of results was replicable despite differences in starting position HU and HD (as it was) rather than to directly compare HU versus HD (which showed no statistical differences).
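A minimal sketch of the two preprocessing steps, assuming that high-pass filtering removes the mean plus the sine and cosine components of 1 and 2 cycles per run (i.e., frequencies below 3 cycles/run) and that z-scores use the population SD; BrainVoyager's exact implementation may differ, and the function names are hypothetical. Because each run ends with mean 0 and SD 1, a GLM β fitted to it is in SD units, which is why within-run comparisons are straightforward while comparisons across the HU and HD runs are not.

```python
import math


def highpass_fourier(series, max_cycles=2):
    """Remove the mean and the sine/cosine components completing 1..max_cycles
    cycles per run. Over a full run of N samples these discrete Fourier basis
    functions are mutually orthogonal, so each is removed by projection."""
    n = len(series)
    mean = sum(series) / n
    out = [x - mean for x in series]
    for k in range(1, max_cycles + 1):
        for basis in (
            [math.cos(2 * math.pi * k * i / n) for i in range(n)],
            [math.sin(2 * math.pi * k * i / n) for i in range(n)],
        ):
            coef = sum(o * b for o, b in zip(out, basis)) / sum(b * b for b in basis)
            out = [o - coef * b for o, b in zip(out, basis)]
    return out


def zscore(series):
    """Scale one run's time course to mean 0 and SD 1 (population SD)."""
    n = len(series)
    mean = sum(series) / n
    sd = math.sqrt(sum((x - mean) ** 2 for x in series) / n)
    return [(x - mean) / sd for x in series]


def preprocess_run(series):
    """High-pass filter, then z-transform, one run at a time."""
    return zscore(highpass_fourier(series))
```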

For each participant, functional data from each session were screened for motion or magnet artifacts with cine-loop animation. The arm movements, which followed an arc atop the platform, did not lead to any detectable artifacts, presumably because the motions of the upper arm and shoulder were less problematic than in past studies in which the lower arm was also raised to grasp objects (e.g., Cavina-Pratesi et al., 2007b). Data were discarded from two participants who had abrupt head motion artifacts. In all remaining subjects, there was no abrupt movement exceeding 1 mm or 1° and no obvious artifactual activation in the statistical maps for the contrasts performed for that subject (e.g., no apparent clusters along the edge of the brain or in the ventricles). Because our participants were highly experienced and the motion was very limited, no motion correction was applied (Freire and Mangin, 2001).
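The text does not specify how "abrupt movement" was quantified. One plausible reading, sketched below under that assumption, flags any volume whose estimated head position changes from the previous volume by more than 1 mm in translation or 1° in rotation on any axis. The input format (six parameters per volume) and the function name are hypothetical.

```python
def abrupt_motion(params, max_mm=1.0, max_deg=1.0):
    """params: per-volume tuples (tx, ty, tz, rx, ry, rz), translations in mm
    and rotations in degrees (hypothetical format). Returns the indices of
    volumes whose change from the previous volume exceeds either threshold
    on any single axis."""
    flagged = []
    for i in range(1, len(params)):
        delta = [abs(a - b) for a, b in zip(params[i], params[i - 1])]
        if max(delta[:3]) > max_mm or max(delta[3:]) > max_deg:
            flagged.append(i)
    return flagged
```

An empty result for every run corresponds to the criterion met by all retained participants; the estimates would be used for screening only, since no motion correction was applied.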

Data analysis: experiment 1

We performed two types of analyses for experiment 1. First, because we had definite hypotheses about specific areas responsive to the grip and the transport component within the parietal lobe, we performed a single subject analysis using a region of interest (ROI) approach. The ROI approach offers the advantages that each area can be identified in individual subjects regardless of variations in stereotaxic location and, moreover, that specific areas are not blurred with adjacent areas because of interindividual variability. Second, to investigate other areas within the brain, we conducted a voxelwise analysis (in which data were smoothed using a 6 mm Gaussian filter).

Region of interest analyses.

For each individual, an aIPS ROI was identified by a comparison of Gf versus Tf, which has been typical in past studies of the region (Binkofski et al., 1998; Culham, 2004; Frey et al., 2005; Begliomini et al., 2007a,b; Cavina-Pratesi et al., 2007b). Voxel selection was constrained by anatomical landmarks; aIPS included only significant voxels near the junction of the anterior portion of the IPS and the postcentral sulcus (PCS). A contrast of Tf versus Tn identified two transport-related areas in the SPOC in most subjects. Again, voxels were selected in the vicinity of a reliable anatomical landmark, in this case the dorsal end of the parietal-occipital sulcus (POS). ROIs were defined using a voxelwise contrast in each individual at a threshold ranging from p = 0.001 to p = 0.01, uncorrected. Given intersubject variability in activation strength, these slight variations in threshold allowed us to select an ROI at the appropriate anatomical location without spilling into adjacent regions (for example, we know from years of experience that at liberal thresholds, aIPS merges with more somatosensory areas in the superior and inferior postcentral sulcus). From each ROI and each participant, we then extracted the event-related time course from all voxels in the ROI and computed the percentage BOLD signal change (%BSC) for the peak response (4–8 s after trial onset). These %BSC data were then analyzed using ANOVAs and t tests (see below, Logic of statistical analyses and classification of patterns).
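The %BSC computation can be sketched as an event-related average followed by a percentage change over the peak window. The text does not state the baseline, so using the averaged signal at trial onset is an assumption, as are the function names; with 2 s volumes, the 4–8 s window covers the volumes acquired at 4, 6, and 8 s.

```python
def event_related_average(series, onsets_vol, n_post=8):
    """Average an ROI time course across the trials of one condition, from each
    onset to n_post volumes later (16 s at 2 s per volume = 8 volumes)."""
    epochs = [series[o:o + n_post] for o in onsets_vol if o + n_post <= len(series)]
    return [sum(values) / len(epochs) for values in zip(*epochs)]


def peak_percent_bsc(series, onsets_vol, vol_s=2.0, window_s=(4.0, 8.0), baseline_vol=0):
    """Mean %BSC over the 4-8 s peak window. The baseline (the averaged
    signal `baseline_vol` volumes after onset) is an assumption."""
    avg = event_related_average(series, onsets_vol)
    baseline = avg[baseline_vol]
    first, last = int(window_s[0] / vol_s), int(window_s[1] / vol_s)
    window = avg[first:last + 1]  # volumes at 4, 6, and 8 s
    return sum(100.0 * (v - baseline) / baseline for v in window) / len(window)
```

Calling `peak_percent_bsc` once per condition, with that condition's onsets, gives the six values per ROI and participant entered into the ANOVAs.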

Voxelwise analyses.

To examine whether additional areas would display interesting activation patterns, we also performed voxelwise contrasts between conditions in our group data after transformation into stereotaxic space. These contrasts were performed using random effects (RFX) analysis with a repeated-measures ANOVA using task and location as main factors (using the AN(C)OVA module of BrainVoyager). Statistical activation maps excluded voxels outside a mask based upon the average functional volume that was sampled within the group of subjects. To correct for multiple comparisons, we used a minimum statistical threshold of p < 0.001 combined with BrainVoyager's cluster-level statistical threshold estimator plug-in. This algorithm uses Monte Carlo simulations (1000 iterations) to estimate the probability of clusters of a given size arising purely by chance (adapted for three-dimensional data from Forman et al., 1995). Because the minimum cluster size for a corrected p value is estimated separately for each contrast map (based on smoothness estimates), cluster sizes can vary across comparisons. Nevertheless, all of the clusters reported have a minimum size of 7 voxels of 27 mm³ (3 × 3 × 3 mm) each, totaling 189 mm³ or greater. Note that the assumption of uniform smoothness (i.e., stationarity) in fMRI data has been challenged (Hayasaka et al., 2004); our approach may therefore have produced more false positives in zones with above-average smoothness and lost statistical power in zones with below-average smoothness. To further evaluate data patterns, for each area we extracted the β weights (βs) for each participant in each condition. These βs were used to generate bar graphs showing activation across conditions and to perform post hoc t tests where appropriate (see next section, Logic of statistical analyses and classification of patterns).

Logic of statistical analyses and classification of patterns.

Although a factorial ANOVA is appropriate for our 2 × 3 design, our hypotheses predict a complex pattern of statistical differences beyond simple main effects and interactions. Thus, areas that showed significant interactions in the 2 × 3 ANOVA were further dissected by performing two 2 × 2 ANOVAs on activation levels (%BSC for ROI analyses; βs for areas identified through voxelwise contrasts) extracted from each area. First, we conducted a 2 × 2 ANOVA comparing action (collapsed between grasp and touch) versus look conditions across near versus far locations. We call this the action/look × location ANOVA. Second, we conducted a 2 × 2 ANOVA comparing grasp versus touch conditions across near versus far locations. We call this the grasp/touch × location ANOVA.
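For a 2 × 2 within-subject design, each effect has 1 numerator df, and its F value equals the squared one-sample t statistic of a per-subject contrast score, with df = (1, n − 1). The sketch below uses that identity; it returns F values only (p values would need an F distribution CDF, e.g., from SciPy), and the cell-coding helpers are hypothetical.

```python
def one_df_F(scores):
    """F(1, n-1) for a single-df within-subject effect: the squared
    one-sample t statistic of the per-subject contrast scores."""
    n = len(scores)
    mean = sum(scores) / n
    var = sum((s - mean) ** 2 for s in scores) / (n - 1)
    return n * mean ** 2 / var


def anova_2x2(data):
    """data: one dict per subject keyed by (task_level, location_level), levels 0/1.
    Returns F values for task, location, and task x location."""
    task = [(d[0, 0] + d[0, 1]) - (d[1, 0] + d[1, 1]) for d in data]
    loc = [(d[0, 0] + d[1, 0]) - (d[0, 1] + d[1, 1]) for d in data]
    inter = [(d[0, 0] - d[0, 1]) - (d[1, 0] - d[1, 1]) for d in data]
    return {"task": one_df_F(task), "location": one_df_F(loc),
            "task x location": one_df_F(inter)}


def action_look_cells(subj):
    """Action/look x location cells; grasp and touch are averaged into 'action'.
    Task 0 = action, 1 = look; location 0 = far, 1 = near."""
    return {(0, 0): (subj["Gf"] + subj["Tf"]) / 2, (0, 1): (subj["Gn"] + subj["Tn"]) / 2,
            (1, 0): subj["Lf"], (1, 1): subj["Ln"]}


def grasp_touch_cells(subj):
    """Grasp/touch x location cells. Task 0 = grasp, 1 = touch."""
    return {(0, 0): subj["Gf"], (0, 1): subj["Gn"], (1, 0): subj["Tf"], (1, 1): subj["Tn"]}
```

Usage: `anova_2x2([grasp_touch_cells(s) for s in subjects])`, where each `s` maps the six condition labels to that participant's %BSC or β values.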

Areas with a grip effect would be expected to show a main effect of task in the 2 × 3 ANOVA. Moreover, they should also show a main effect of task (G > T) in the grasp/touch × location ANOVA. Areas with a transport effect would be expected to show an interaction of task × location in the 2 × 3 ANOVA. Moreover, they should also show a main effect of location (f > n) in the grasp/touch × location ANOVA and an interaction of task × location in the action/look × location ANOVA (f > n for action but not look tasks). In addition, areas could show an interaction of task × location in the grasp/touch × location ANOVA, which would indicate a grip/transport interaction. Results from both ANOVAs are summarized in Tables 3 and 6 for experiments 1 and 2, respectively.
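The classification criteria can be summarized as a decision rule. This is a sketch under stated assumptions: α = 0.05 and the effect names are our own, and the directions of the effects (G > T; f > n for action but not look) are passed in as flags here rather than computed from the condition means.

```python
def classify_area(p, grasp_gt_touch, far_gt_near_for_action_only, alpha=0.05):
    """Apply the criteria in the text to one area.

    p maps hypothetical effect names to p values:
      "task_2x3", "interaction_2x3"               (2 x 3 ANOVA)
      "task_gt", "location_gt", "interaction_gt"  (grasp/touch x location ANOVA)
      "interaction_al"                            (action/look x location ANOVA)
    grasp_gt_touch: True if the grasp/touch task effect goes G > T.
    far_gt_near_for_action_only: True if f > n holds for action but not look.
    """
    sig = {name: value < alpha for name, value in p.items()}
    labels = []
    if sig["task_2x3"] and sig["task_gt"] and grasp_gt_touch:
        labels.append("grip")
    if (sig["interaction_2x3"] and sig["location_gt"] and sig["interaction_al"]
            and far_gt_near_for_action_only):
        labels.append("transport")
    if sig["interaction_gt"]:
        labels.append("grip/transport interaction")
    return labels
```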

Table 3.

Brain areas, F values, and p values for the action/look × location and the grasp/touch × location ANOVAs for the areas found in the task × location interaction in experiment 1

| Brain area | Action/look × location: task | Action/look × location: location | Action/look × location: task × location | Grasp/touch × location: task | Grasp/touch × location: location | Grasp/touch × location: task × location |
|---|---|---|---|---|---|---|
| *ROI analysis* | | | | | | |
| Left aIPS | F(1,9) = 117.44, p < 0.0001 | F(1,9) = 0.01, p = 0.909 | F(1,9) = 4.02, p = 0.076 | F(1,9) = 157.99, p < 0.0001 | F(1,9) = 5.92, p = 0.038 | F(1,9) = 11.53, p = 0.008 |
| Left aSPOC | F(1,8) = 129.28, p < 0.0001 | F(1,8) = 56.12, p < 0.0001 | F(1,8) = 118.40, p < 0.0001 | F(1,8) = 1.81, p = 0.216 | F(1,8) = 131.9, p < 0.0001 | F(1,8) = 2.11, p = 0.184 |
| Left pSPOC | F(1,9) = 16.07, p = 0.003 | F(1,9) = 46.93, p < 0.0001 | F(1,9) = 21.2, p = 0.001 | F(1,9) = 4.21, p = 0.07 | F(1,9) = 49.53, p < 0.0001 | F(1,9) = 0.1, p = 0.763 |
| *Voxelwise analysis* | | | | | | |
| Left aSPOCᵃ | F(1,9) = 61.94, p < 0.0001 | F(1,9) = 3.36, p = 0.1 | F(1,9) = 21.11, p = 0.001 | F(1,9) = 0.37, p = 0.254 | F(1,9) = 20.93, p = 0.001 | F(1,9) = 0.99, p = 0.346 |
| Left pSPOCᵃ | F(1,9) = 11.07, p = 0.009 | F(1,9) = 18.66, p = 0.002 | F(1,9) = 6.06, p = 0.036 | F(1,9) = 4.21, p = 0.56 | F(1,9) = 37.43, p < 0.0001 | F(1,9) = 1.94, p = 0.197 |
| Left SPL/area 5Lᵃ | F(1,9) = 34.45, p < 0.0001 | F(1,9) = 2.70, p = 0.135 | F(1,9) = 20.37, p = 0.001 | F(1,9) = 1.29, p = 0.286 | F(1,9) = 17.82, p = 0.002 | F(1,9) = 0.70, p = 0.425 |
| Right SPL/area 5Lᵃ | F(1,9) = 41.91, p < 0.0001 | F(1,9) = 0.85, p = 0.381 | F(1,9) = 14.22, p = 0.004 | F(1,9) = 1.23, p = 0.297 | F(1,9) = 25.34, p = 0.001 | F(1,9) = 0.18, p = 0.740 |
| Left dPMᵇ | F(1,9) = 84.60, p < 0.0001 | F(1,9) = 2.49, p = 0.149 | F(1,9) = 5.67, p = 0.041 | F(1,9) = 15.29, p = 0.004 | F(1,9) = 14.81, p = 0.004 | F(1,9) = 4.01, p = 0.076 |
| Left M1/S1ᵇ | F(1,9) = 94.31, p < 0.0001 | F(1,9) = 2.50, p = 0.148 | F(1,9) = 57.77, p < 0.0001 | F(1,9) = 14.19, p = 0.004 | F(1,9) = 21.03, p = 0.001 | F(1,9) = 1.87, p = 0.205 |
| SMAᵇ | F(1,9) = 49.85, p < 0.0001 | F(1,9) = 4.36, p = 0.096 | F(1,9) = 5.04, p = 0.043 | F(1,9) = 12.34, p = 0.007 | F(1,9) = 28.49, p < 0.0001 | F(1,9) = 0.11, p = 0.751 |
| Left S2ᶜ | F(1,9) = 29.78, p < 0.0001 | F(1,9) = 3.28, p = 0.104 | F(1,9) = 4.33, p = 0.067 | F(1,9) = 1.17, p = 0.308 | F(1,9) = 72.06, p < 0.0001 | F(1,9) = 5.6, p = 0.042 |

ᵃ Interaction driven by action/look × location; no grip effect.

ᵇ Interaction driven by action/look × location; grip effect.

ᶜ Interaction partially explained by grasp/touch × location.

Table 6.

Brain areas, F values, and p values for the action/look × location and the grasp/touch × location ANOVAs for the areas found in the task × location interaction in experiment 2

| Brain area | Action/look × location: task | Action/look × location: location | Action/look × location: task × location | Grasp/touch × location: task | Grasp/touch × location: location | Grasp/touch × location: task × location |
|---|---|---|---|---|---|---|
| Left aSPOCᵃ | F(1,12) = 23.31, p < 0.0001 | F(1,12) = 9.79, p = 0.009 | F(1,9) = 21.11, p = 0.001 | F(1,12) = 2.15, p = 0.168 | F(1,12) = 29.64, p < 0.0001 | F(1,12) = 2.07, p = 0.175 |
| Left paraCGᵃ | F(1,12) = 13.79, p = 0.003 | F(1,12) = 11.97, p = 0.005 | F(1,12) = 58.08, p < 0.0001 | F(1,12) = 2.6, p = 0.133 | F(1,12) = 37.01, p < 0.0001 | F(1,9) = 0.16, p = 0.902 |
| Left SPL/area 5Lᵃ | F(1,12) = 95.96, p < 0.0001 | F(1,12) = 17.93, p = 0.001 | F(1,12) = 60.12, p < 0.0001 | F(1,12) = 0.612, p = 0.449 | F(1,12) = 59.46, p < 0.0001 | F(1,12) = 2.24, p = 0.16 |
| Left S2ᵇ | F(1,12) = 133.1, p < 0.0001 | F(1,12) = 5.7, p = 0.034 | F(1,12) = 61.78, p < 0.0001 | F(1,12) = 10.9, p = 0.006 | F(1,12) = 32.55, p < 0.0001 | F(1,12) = 4.25, p = 0.062 |
| Left pSPOCᶜ | F(1,12) = 8.78, p = 0.012 | F(1,12) = 14.47, p = 0.003 | F(1,12) = 42.1, p < 0.0001 | F(1,12) = 0.3, p = 0.597 | F(1,12) = 43.31, p < 0.0001 | F(1,12) = 9.42, p = 0.01 |
| Vvenᶜ | F(1,12) = 27.33, p < 0.0001 | F(1,12) = 4.7, p = 0.056 | F(1,12) = 67.58, p < 0.0001 | F(1,12) = 2.55, p = 0.136 | F(1,12) = 24.47, p < 0.0001 | F(1,12) = 10.36, p = 0.007 |
| Vdorᶜ | F(1,12) = 27.26, p < 0.0001 | F(1,12) = 7.15, p = 0.02 | F(1,12) = 46.11, p < 0.0001 | F(1,12) = 3.09, p = 0.104 | F(1,12) = 36.73, p < 0.0001 | F(1,12) = 8.47, p = 0.013 |
| Left dPMᵇ | F(1,12) = 40.03, p < 0.0001 | F(1,12) = 9.3, p = 0.01 | F(1,12) = 30.81, p < 0.0001 | F(1,12) = 13.57, p = 0.003 | F(1,12) = 24.29, p < 0.0001 | F(1,12) = 11.72, p = 0.005 |
| Left M1/S1ᵇ | F(1,12) = 222.9, p < 0.0001 | F(1,12) = 7.16, p = 0.02 | F(1,12) = 36.27, p < 0.0001 | F(1,12) = 22.9, p < 0.0001 | F(1,12) = 22.41, p < 0.0001 | F(1,12) = 13.56, p = 0.003 |
| SMAᵇ | F(1,12) = 50.20, p < 0.0001 | F(1,12) = 20.73, p = 0.001 | F(1,12) = 34.45, p < 0.0001 | F(1,12) = 5.31, p = 0.04 | F(1,12) = 34.82, p < 0.0001 | F(1,12) = 8.84, p = 0.012 |

ᵃ Interaction driven by action/look × location; no grip effect.

ᵇ Interaction driven by action/look × location; grip effect.

ᶜ Interaction driven by both action/look × location and grasp/touch × location; no grip effect.

To further dissect patterns within areas showing a task × location interaction in the 2 × 3 ANOVA, we also computed differences in activation between key conditions, along with their 95% confidence limits. Given the difficulty of computing appropriate error bars for within-subjects designs (Loftus and Masson, 1994; Masson and Loftus, 2004) and common misconceptions about the interpretation of error bars (Belia et al., 2005), this is a straightforward way to illustrate statistical differences graphically: if the error bar on a difference score does not include zero, that difference is statistically significant (p < 0.05); otherwise it is not. Difference scores and confidence limits were computed for the critical comparisons Gf − Gn, Tf − Tn, Lf − Ln, Gf − Tf, and Gn − Tn. We also computed one a priori contrast to distinguish between two possible accounts of the data. Where a transport effect is found, it could be driven by the transport component per se. Alternatively, a transport-like pattern could simply reflect a visual response to the motion of the arm during transport (or visual imagery of that motion in experiment 2). Presumably, transport-related areas that are truly visuomotor should respond more during any motor task than during passive viewing (i.e., the look tasks), whereas purely visual areas may not show such a difference. Thus, we also computed a contrast of the two visuomotor tasks in near space (Gn, Tn) versus the two passive viewing conditions (Ln, Lf), which we call GTn > Lnf.
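A minimal sketch of the difference-score approach follows, assuming one activation value per subject for each of the six conditions. The 95% confidence limits use the t distribution with n − 1 df, so limits that exclude zero are equivalent to a two-tailed paired t test with p < 0.05. Averaging Gn with Tn and Ln with Lf to form the GTn > Lnf contrast, and the function names, are assumptions made for illustration.

```python
import numpy as np
from scipy import stats


def diff_ci(x, y, level=0.95):
    """Mean within-subject difference x - y and its confidence limits."""
    d = np.asarray(x, float) - np.asarray(y, float)
    n = d.size
    sem = d.std(ddof=1) / np.sqrt(n)
    half_width = stats.t.ppf(0.5 + level / 2, n - 1) * sem
    return d.mean(), (d.mean() - half_width, d.mean() + half_width)


def excludes_zero(ci):
    """True if the interval lies entirely above or entirely below zero."""
    lo, hi = ci
    return lo > 0 or hi < 0


def key_differences(cond):
    """cond maps 'Gn', 'Gf', 'Tn', 'Tf', 'Ln', 'Lf' to per-subject arrays."""
    c = {k: np.asarray(v, float) for k, v in cond.items()}
    return {
        "Gf - Gn": diff_ci(c["Gf"], c["Gn"]),
        "Tf - Tn": diff_ci(c["Tf"], c["Tn"]),
        "Lf - Ln": diff_ci(c["Lf"], c["Ln"]),
        "Gf - Tf": diff_ci(c["Gf"], c["Tf"]),
        "Gn - Tn": diff_ci(c["Gn"], c["Tn"]),
        # Near-space actions (visuomotor) vs passive viewing at both locations.
        "GTn - Lnf": diff_ci((c["Gn"] + c["Tn"]) / 2, (c["Ln"] + c["Lf"]) / 2),
    }
```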

To simplify the interpretation of the many possible activation patterns, and following the logic above, we classified areas according to whether they showed a grip effect, a transport effect (considered visually driven when GTn > Lnf did not reach statistical significance), and a grip/transport interaction. Areas could, of course, show combinations of these effects.
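The classification rules above can be written as a small decision function. This is a simplified sketch: it assumes the inputs come from the two 2 × 2 ANOVAs and the GTn > Lnf contrast for an area that already showed a task × location interaction in the 2 × 3 ANOVA, it applies a single alpha of 0.05, and it checks the direction of effects with mean difference scores. The field names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class AreaStats:
    """Hypothetical summary of one area's p values and mean differences."""
    gt_task_p: float          # grasp/touch x location ANOVA: main effect of task
    grasp_minus_touch: float  # mean G - T, collapsed across location
    gt_location_p: float      # grasp/touch x location ANOVA: main effect of location
    far_minus_near: float     # mean far - near for the action tasks
    gt_interaction_p: float   # grasp/touch x location ANOVA: interaction
    al_interaction_p: float   # action/look x location ANOVA: interaction
    gtn_vs_lnf_p: float       # GTn > Lnf contrast
    gtn_minus_lnf: float      # mean GTn - Lnf


def classify_area(s: AreaStats, alpha: float = 0.05) -> list[str]:
    labels = []
    # Grip effect: grasp > touch in the grasp/touch x location ANOVA.
    if s.gt_task_p < alpha and s.grasp_minus_touch > 0:
        labels.append("grip")
    # Transport effect: far > near for actions, plus an action/look x location interaction.
    if (s.gt_location_p < alpha and s.far_minus_near > 0
            and s.al_interaction_p < alpha):
        visuomotor = s.gtn_vs_lnf_p < alpha and s.gtn_minus_lnf > 0
        labels.append("transport" if visuomotor else "transport (visually driven)")
    # Grip/transport interaction: task x location in the grasp/touch ANOVA.
    if s.gt_interaction_p < alpha:
        labels.append("grip/transport interaction")
    return labels
```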

Data analysis: experiment 2

Between the time the first and second experiments were run and analyzed, intrasession motion correction became available, BrainVoyager's previously suboptimal motion-correction algorithm was improved (Oakes et al., 2005), and new approaches were validated (Johnstone et al., 2006). We therefore changed our approach to motion correction. In experiment 2, the data were motion corrected to the functional volume closest in time to the anatomical image using six parameters (three translations and three rotations), and the motion parameters were added as predictors in the main GLM (Johnstone et al., 2006).
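Adding the six motion parameters as nuisance predictors means that each task β is estimated after variance shared with head motion has been accounted for. A minimal ordinary-least-squares sketch follows; it ignores temporal autocorrelation, filtering, and the other details of the BrainVoyager GLM, and the function name and array layout are assumptions.

```python
import numpy as np


def fit_glm_with_motion(y, task_regressors, motion_params):
    """OLS GLM with motion parameters as nuisance predictors.

    y: (n_vols,) voxel or ROI time course
    task_regressors: (n_vols, k) HRF-convolved condition predictors
    motion_params: (n_vols, 6) three translations and three rotations
    Returns the k task betas; nuisance and constant betas are discarded.
    """
    n = y.shape[0]
    X = np.column_stack([task_regressors, motion_params, np.ones(n)])
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas[: task_regressors.shape[1]]
```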

The RFX GLM for experiment 2 included 12 predictors, one for each trial type (HD vs HU × 6 conditions). In HD runs, the predictors for HU trials were flat, while in HU runs the predictors for HD trials were flat. The intertrial interval served as the baseline in both types of runs, enabling comparisons between the two types of runs. The interleaving of HD and HU runs makes it unlikely that any differences between the two conditions were caused by systematic changes such as fatigue. Group average voxelwise analyses were performed by using random effects analysis and implementing a three-factor repeated measures 2 × 3 × 2 ANOVA model, allowing us to measure the main effects of hand (HD vs HU), task (G, T, and L), location (n and f), and their interactions. As in experiment 1, we also conducted simpler (2 × 2) ANOVAs and difference scores to interpret statistical patterns.
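The run-wise layout of the 12 predictors can be sketched as follows. Each run contributes all 12 columns, but only the six belonging to that run's type (HD or HU) are nonzero, so both run types share the intertrial-interval baseline. The stick-function events, the caller-supplied HRF kernel, and the column names are illustrative assumptions.

```python
import numpy as np

CONDITIONS = ["Gn", "Gf", "Tn", "Tf", "Ln", "Lf"]
RUN_TYPES = ["HD", "HU"]
COLUMNS = [f"{r}_{c}" for r in RUN_TYPES for c in CONDITIONS]  # 12 predictors


def run_design(run_type, onsets, n_vols, hrf):
    """Design matrix (n_vols x 12) for a single run.

    run_type: "HD" or "HU".
    onsets: dict mapping a condition label to the volume indices of its trial onsets.
    hrf: 1-D haemodynamic response kernel sampled at the volume rate (assumed).
    Predictors for the other run type are left flat (all zeros).
    """
    X = np.zeros((n_vols, len(COLUMNS)))
    for cond, vols in onsets.items():
        events = np.zeros(n_vols)
        events[list(vols)] = 1.0
        col = COLUMNS.index(f"{run_type}_{cond}")
        X[:, col] = np.convolve(events, hrf)[:n_vols]  # truncate convolution tail
    return X
```

Run matrices built this way could then be stacked (e.g., with `np.vstack`) in the interleaved HD/HU order to form the full design.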

Kinematic control experiment

Procedure

Subjects lay comfortably in a mock wooden scanner, and data were collected using (1) a tilted platform similar to the one used in the imaging experiments; (2) objects of different shapes built from Lego pieces; (3) a tilted head position; (4) liquid crystal shutter goggles (Plato System, Translucent Technologies) to control visual feedback; and (5) an upper-arm immobilizer. Thus, kinematic data were collected under the same movement and visual constraints experienced in the imaging experiments. We adopted the design of fMRI experiment 2, in which touching and grasping actions were performed in open loop using both inward and outward movements. As in fMRI experiment 2, inward and outward movements were tested in separate blocks, while grasping and touching actions toward near or far objects were randomly interleaved within each block.

Data were collected in two separate blocks (one for each starting position) using 8 trials per condition per block (Gn, Gf, Tn, and Tf) for a total of 32 trials per block.

At the beginning of each trial, subjects were told via headphones which task to perform (grasp or touch); 2 to 3 s later, the shutter goggles opened for 400 ms, cueing the participant to act. Participants were asked to fixate an LED placed at the center of the platform, midway between the near and the far object (fixation was monitored by a second experimenter via a small camera focused on one eye). Whenever an eye movement was detected, the trial was discarded and repeated at the end of the block. Movements were recorded by sampling the positions of three markers placed on the thumb, index finger, and wrist at 85 Hz, using an electromagnetic motion analysis system (Minibird, Ascension Technology).
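The block structure (4 conditions × 8 trials = 32 randomized trials) and the rule for repeating trials with eye movements can be sketched as a simple queue. The fixation check is a hypothetical callback standing in for the experimenter's online monitoring, and the shuffling seed is arbitrary; note that the loop would never terminate if fixation were never maintained.

```python
import random
from collections import deque

CONDITIONS = ["Gn", "Gf", "Tn", "Tf"]  # grasp/touch x near/far


def make_block(trials_per_condition=8, seed=None):
    """Randomized trial order for one block (4 conditions x 8 trials = 32)."""
    trials = [c for c in CONDITIONS for _ in range(trials_per_condition)]
    random.Random(seed).shuffle(trials)
    return trials


def run_block(trials, fixation_held):
    """Run trials in order; a trial with an eye movement is repeated at the end.

    fixation_held(condition) -> bool is a hypothetical stand-in for the
    experimenter's online fixation check.
    """
    queue = deque(trials)
    completed = []
    while queue:
        cond = queue.popleft()
        if fixation_held(cond):
            completed.append(cond)
        else:
            queue.append(cond)  # discard now, repeat at the end of the block
    return completed
```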

Data analysis

While the thumb and index finger markers were used to calculate the grip variables, the wrist marker was used to extract the transport variables. Analyses were performed on reaction time (RT) and on traditional components of the transport (movement time, peak velocity, and time to peak velocity) and the grip (maximum grip aperture, percentage time to maximum grip aperture) phases of the actions.

Transport variables.

Movement time (MT) was computed as the interval between movement onset and movement offset, the moment at which hand velocity dropped below 50 mm/s as the hand reached the object. Although the transport movement toward the near target was negligible, the small wrist displacement needed to reach the objects was clearly captured by the wrist marker and analyzed using the same criteria as the transport to the far object.

Peak velocity (PV) was defined as the maximum velocity of the thumb marker during MT. Time to PV (TPV) is the time at which the PV was reached.
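The transport variables can be computed from a marker trajectory as sketched below. The sketch assumes positions in millimetres sampled at 85 Hz, speed obtained by numerical differentiation, onset at the first sample above 50 mm/s, offset at the first subsequent sample below it, PV searched between onset and offset, and TPV measured from onset. Filtering of the raw data and the choice of marker are left out, and the function names are hypothetical.

```python
import numpy as np

FS = 85.0        # Hz, sampling rate of the motion tracker
V_THRESH = 50.0  # mm/s, onset/offset velocity criterion


def marker_speed(xyz, fs=FS):
    """Tangential speed (mm/s) of an (n, 3) trajectory given in mm."""
    return np.linalg.norm(np.gradient(xyz, 1.0 / fs, axis=0), axis=1)


def transport_variables(xyz, fs=FS, thresh=V_THRESH):
    """MT, PV, and TPV for one outgoing movement (None if no movement)."""
    v = marker_speed(xyz, fs)
    above = np.flatnonzero(v > thresh)
    if above.size == 0:
        return None
    onset = above[0]
    below = np.flatnonzero(v[onset:] < thresh)
    offset = onset + below[0] if below.size else len(v) - 1
    peak = onset + int(np.argmax(v[onset:offset + 1]))
    return {
        "MT_s": (offset - onset) / fs,
        "PV_mm_s": float(v[peak]),
        "TPV_s": (peak - onset) / fs,  # time to PV, measured from onset
    }
```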

Grip variables.

Maximum grip aperture (MGA) was computed as the maximum distance in 3D space between thumb and index markers during the hand movement. Time to maximum grip aperture was computed as the percentage of the MT at which the MGA occurred.
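Grip aperture is the 3D distance between the thumb and index markers; the sketch below finds its maximum between movement onset and offset and expresses its timing as a percentage of MT. The onset and offset sample indices are assumed to come from the velocity criterion used for MT, and the function name is hypothetical.

```python
import numpy as np


def grip_variables(thumb, index, onset, offset):
    """MGA (mm) and time to MGA as a percentage of MT.

    thumb, index: (n, 3) marker positions in mm.
    onset, offset: sample indices bounding the movement (offset > onset).
    """
    aperture = np.linalg.norm(thumb - index, axis=1)[onset:offset + 1]
    i_max = int(np.argmax(aperture))             # samples after onset
    pct = 100.0 * i_max / (offset - onset)       # fraction of MT, in percent
    return {"MGA_mm": float(aperture[i_max]), "pct_time_to_MGA": pct}
```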

Other variables.

RTs were computed as the time of movement onset (the time at which the velocity of the thumb marker rose above 50 mm/s after the opening of the goggles).

We also measured one additional parameter that is not usually analyzed in standard kinematic studies but might affect the BOLD response: total MT (TMT), the time taken to perform the full action from the onset of the outgoing movement to the offset (velocity < 50 mm/s) of the return movement to the home position.
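RT and TMT follow from the same 50 mm/s criterion applied to a marker's speed. In the sketch below, the sample at which the goggles opened is passed in, the return-movement offset is taken as the first sub-threshold sample after the last supra-threshold one, and the trajectory is assumed to cover one complete out-and-back action; these choices and the function name are assumptions.

```python
import numpy as np


def rt_and_tmt(xyz, go_index, fs=85.0, thresh=50.0):
    """Reaction time and total movement time (s) from one marker trajectory.

    xyz: (n, 3) positions in mm; go_index: sample at which the goggles opened.
    RT = outgoing onset - go signal; TMT = outgoing onset to return offset.
    """
    v = np.linalg.norm(np.gradient(xyz, 1.0 / fs, axis=0), axis=1)
    above = np.flatnonzero(v[go_index:] > thresh) + go_index
    if above.size == 0:
        return None
    onset, last = above[0], above[-1]
    offset = min(last + 1, len(v) - 1)  # first sample back below threshold
    return {"RT_s": (onset - go_index) / fs, "TMT_s": (offset - onset) / fs}
```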

For the transport parameters, data were analyzed using repeated-measures ANOVAs with action (grasp versus touch), movement (outward versus inward), and position (near versus far) as within-subjects factors. For the grip parameters, data were analyzed using repeated-measures ANOVAs with movement (outward versus inward) and position (near versus far) as within-subjects factors.

Results

Results of experiment 1

Region of interest analyses

aIPS: grip effect plus transport/grip interaction.

The comparison of Gf versus Tf showed activation in the left aIPS in all 10 subjects. In particular, a clear focus of activation was found at the junction between the anterior IPS and the inferior segment of the PCS in the left hemisphere of all 10 participants. Averaged Talairach coordinates (x = −40; y = −30; z = 43) are in agreement with coordinates from previous fMRI experiments that also used real 3D stimuli (Frey et al., 2005; Culham et al., 2006; Castiello and Begliomini, 2008). Right aIPS was localized in only 6 of the 10 participants (averaged Talairach coordinates: x = 38; y = −30; z = 44). Results for left aIPS are shown for each participant in Figure 2a together with the average time courses and peak activation (Fig. 2b–d).

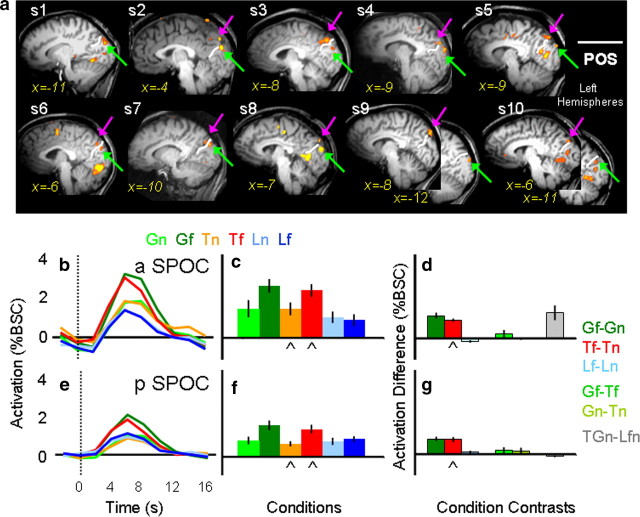

Figure 2.

Individual statistical maps and activation levels across conditions for the aIPS region of interest in experiment 1. a, The position of the left aIPS, localized by comparing Gf versus Tf, is shown in the clearest transverse slice (z value) for each of the 10 participants. In each participant, aIPS (highlighted by a yellow arrow) was identified at the junction of the anterior end of the intraparietal sulcus (dotted line) and the inferior segment of the PCS (solid line). b, Line graphs show the event-related average time courses for the six experimental conditions in the left aIPS, with time 0 indicating the onset of the visual stimuli. c, The bar graph displays the average magnitude of peak activation (%BSC) in each experimental condition for the group. d, The bar graph displays differences in average peak activations (%BSC) between key conditions. Error bars represent 95% confidence intervals such that difference scores with error bars that do not include zero indicate that the difference was significant at p < 0.05, two-tailed t test. The chevron (⋀) highlights the experimental conditions and contrast used to localize each ROI, indicating which conditions and contrasts may be susceptible to the nonindependence error. Statistical values for the %BSC peak activation differences are as follows: Gf > Gn, p = 0.0005; Tf = Tn, p = 0.2; Lf = Ln, p = 0.09; Gf > Tf, p = 0.0001; Gn > Tn, p = 0.0001; GTn > Lnf, p = 0.0001.

Having defined aIPS by contrasting Gf versus Tf, we then examined the activation patterns for the six experimental conditions with a 2 × 3 ANOVA. We found significant effects of task (F(1,9) = 64.07, p < 0.0001), location (F(1,9) = 20.3, p = 0.001), and task × location (F(2,18) = 6.126, p = 0.009). The 2 × 2 action/look × location ANOVA showed a main effect of task, indicating higher activation for the action tasks than the look tasks, but no main effect of location and no interaction. The grasp/touch × location ANOVA showed a main effect of task, indicating higher activation for grasp than touch tasks, modulated by a task × location interaction.

As necessitated by the contrast used to localize aIPS, activation was higher for grasping than for touching in the far location (Gf > Tf, p < 0.0001). As expected, activation was also higher for grasping than for touching in the near location (Gn > Tn, p < 0.0001). Importantly, we found no significant location differences for either the touch conditions (Tn vs Tf, p = 0.46) or the look conditions (Ln vs Lf, p = 0.24).

The task × location interaction arose from higher activation for far than for near locations in the grasp task (Gf > Gn, p = 0.001) but not in the touch (Tf = Tn, p = 0.46) or look (Lf = Ln, p = 0.24) tasks. This pattern may have arisen because the localizer contrast was biased toward voxels where Gf activation was high (Kriegeskorte et al., 2009; Vul and Kanwisher, 2010); alternatively, grasping in far space may tax the system more because hand preshaping unfolds over a longer duration (Cavina-Pratesi et al., 2007a).

SPOC: transport effect.

The contrast of Tf versus Tn revealed consistent activation across individual participants only in the vicinity of the superior parieto-occipital sulcus (Fig. 3). In 9 of the 10 participants we located one focus in anterior SPOC (aSPOC), anterior to the POS, in the precuneus, medial to the IPS. In all 10 participants, we located a second focus in posterior SPOC (pSPOC), posterior to POS, in the cuneus, medial to the transverse occipital sulcus. Both activations were in the left hemisphere. The averaged Talairach coordinates for aSPOC were x = −4, y = −79, and z = 32, and those for pSPOC were x = −8, y = −83, and z = 21.

Figure 3.

Individual statistical maps and activation levels across conditions for the anterior and posterior superior parietal occipital sulcus (SPOC) regions of interest in experiment 1. a, The positions of aSPOC and pSPOC, localized by comparing Tf versus Tn, are shown in the clearest sagittal slice (x value) within the left hemisphere for each of the 10 participants. In all 10 participants, posterior SPOC (highlighted by a green arrow) was identified posterior to the POS. In 9 of the 10 participants, activation in the anterior SPOC (highlighted by a pink arrow) was identified anterior to the POS. b, e, Line graphs indicate the event-related average time courses for the six experimental conditions in the anterior and posterior SPOC, with time 0 indicating the onset of the visual stimuli. c, f, The bar graphs display the average magnitude of peak activation in %BSC in each experimental condition for the group. d, g, The bar graphs display differences in the average peak activation (%BSC) between key comparisons. Conventions are as in Figure 2. Statistical values for the %BSC peak activation differences are as follows: aSPOC: Gf > Gn, p = 0.0001; Tf > Tn, p = 0.0001; Lf = Ln, p = 0.054; Gf = Tf, p = 0.085; Gn = Tn, p = 0.45; GTn > Lnf, p = 0.0053; pSPOC: Gf > Gn, p = 0.0001; Tf > Tn, p = 0.0001; Lf = Ln, p = 0.117; Gf = Tf, p = 0.083; Gn = Tn, p = 0.074; GTn > Lnf, p = 0.02.

Having defined aSPOC and pSPOC with the contrast of Tf versus Tn, we then examined the activation patterns for the six experimental conditions with the ANOVA. In the anterior SPOC, we found significant effects of task (F(1,8) = 12.97, p < 0.001), location (F(1,8) = 20.23, p = 0.004), and task × location (F(1,8) = 5.09, p = 0.025). Consistent with a transport effect but no transport × grip interaction, the two 2 × 2 ANOVAs showed a significant action/look × location interaction but no significant grasp/touch × location interaction. As necessitated by the contrast used to select these regions, post hoc t tests showed higher activation for Tf than for Tn (p < 0.0001). More importantly, activation was also higher for Gf than for Gn (p < 0.0001), whereas Lf and Ln did not differ significantly (p = 0.07). The two action tasks did not differ significantly at either location (Gf = Tf, p = 0.17; Gn = Tn, p = 0.9). Moreover, activation was higher for the action tasks than for the passive viewing tasks (p < 0.0001 for all comparisons).

Similar but not identical results were found in the posterior SPOC. Again, we found significant effects of task (F(1,9) = 7.59, p < 0.005), location (F(1,9) = 57.79, p < 0.0001), and task × location (F(1,9) = 20.45, p < 0.0001). As in the anterior SPOC, the 2 × 2 ANOVAs were consistent with a transport effect that did not interact with a grip effect. Activation was significantly higher for the far than for the near location only for the action tasks (Gf > Gn, p < 0.0001; Tf > Tn, p < 0.0001), not for the passive viewing task (Lf = Ln, p = 0.234). As in aSPOC, higher activation for Tf than for Tn is unsurprising given that the Tf > Tn comparison was used to select the region. Also as in anterior SPOC, the two action tasks did not differ significantly at either location (Gf = Tf, p = 0.09; Gn = Tn, p = 0.149). Unlike anterior SPOC, however, posterior SPOC did not show higher activation for actions in near space than for passive viewing (GTn = Lnf), raising the possibility of a visually driven transport effect.

Although eye movements cannot be monitored in the head-tilted configuration, the pattern of activity in SPOC suggests that our results were not contaminated by eye movements resulting from a failure to maintain fixation. Because previous work has shown increased SPOC activity when the eyes verge at a near (vs far) point (Quinlan and Culham, 2007), if participants had looked directly at the targets in the current experiment we should have observed higher activation for the near targets than for the far ones. Our results show the opposite pattern (higher activation in SPOC for far vs near targets), indicating that fixation errors were not a confound for SPOC or other areas.

Voxelwise analyses (averaged data).

Main effect of task.

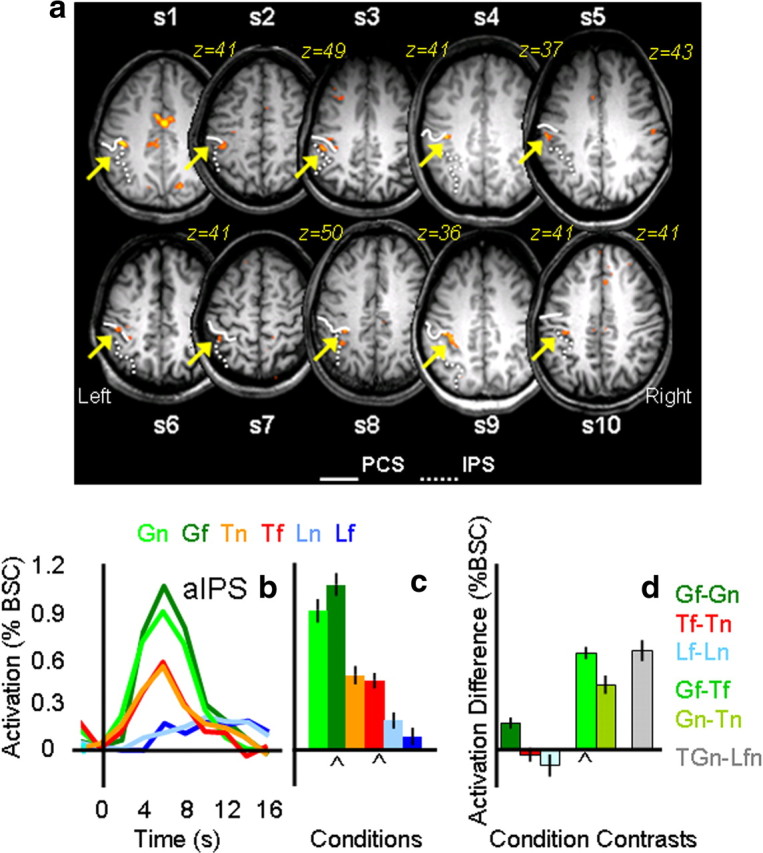

The main effect of task (F(2,18) = 99.8, p = 0.001) revealed activations in several areas within the left and the right hemisphere (see Fig. 4).

Figure 4.

Group statistical maps and activation levels for areas showing a main effect of task in experiment 1. a, Each region that showed a significant main effect of task in the voxelwise ANOVA for experiment 1 is color coded based on the pattern of activation, as indicated in the legend. The group activation map is based on the Talairach averaged group results shown on the averaged anatomical map. Talairach coordinates for the activated areas and p values for the relevant statistical comparisons are shown in Table 1. b, Brain areas surviving a more conservative threshold (p < 0.0001, Bonferroni corrected) for the same main effect of task revealed distinct foci of activation within left M1, left S1, and left aIPS. c–h, The bar graphs display averages and differences of β weights within key areas in the grasping network: right aIPS (c, f), left vPM (d, g), and left aIPS (e, h). Statistical values for the β weight differences in left vPM, left aIPS, and right aIPS are as follows: left vPM: Gf = Gn, p = 0.67; Tf = Tn, p = 0.31; Lf = Ln, p = 0.42; Gf > Tf, p = 0.007; Gn > Tn, p = 0.004; GTn > Lnf, p = 0.0001; left aIPS: Gf = Gn, p = 0.146; Tf = Tn, p = 0.185; Lf = Ln, p = 0.134; Gf > Tf, p = 0.0018; Gn > Tn, p = 0.005; GTn > Lnf, p = 0.0008; right aIPS: Gf = Gn, p = 0.53; Tf = Tn, p = 0.3; Lf = Ln, p = 0.55; Gf > Tf, p = 0.003; Gn > Tn, p = 0.003; GTn > Lnf, p = 0.0001. aIPS, Anterior intraparietal sulcus; CS, central sulcus; IPL, inferior parietal lobe; IPS, intraparietal sulcus; L, left; LOC, lateral occipital complex; M1, primary motor cortex; PCS, postcentral sulcus; R, right; S1, primary somatosensory cortex; SII (S2), secondary somatosensory area; SMA, supplementary motor area; SPL, superior parietal lobe; vPM, ventral premotor cortex.

Activity for the grasp, touch, and look tasks (β weights) was compared in each area using paired-sample t tests corrected for the number of comparisons (0.05/3 comparisons = 0.017). Results are reported in Table 1 and depicted in Figure 4, where areas showing a preference for grasp (grasp higher than touch, and both grasp and touch higher than look) are shown in green, areas showing a preference for grasp and touch (over look) in red, and areas showing a preference for look (over touch and grasp) in light blue. A preference for the grasp task was found in a large left cluster [which included the central sulcus (primary motor cortex or M1), the PCS (primary somatosensory area or S1), and the aIPS], the right postcentral sulcus (S1), the right aIPS, the bilateral junction of the postcentral sulcus and the Sylvian fissure (secondary somatosensory area or S2), the left inferior frontal gyrus (vPM), the left thalamus, the left cerebellum, and the left lateral occipital cortex (LO). A preference for the action tasks in general (grasp and touch higher than look) was found in the medial wall of the superior frontal gyrus [supplementary motor area (SMA)], the left superior parietal lobule (SPL) (medial to the IPS and just posterior to the PCS, probably corresponding to parietal area 5 and, given its distance from the cingulate sulcus, in particular to the more lateral portion of area 5L) (Scheperjans et al., 2008), and the right LO. Higher activation for grasp and touch in bilateral LO is likely caused by the extra visual information associated with seeing the arm, hand, and fingers interacting with the objects (Bracci et al., 2010). Interestingly, the left inferior parietal lobe (IPL) showed negative activation for grasp and touch. This negative response might reflect default mode network activity in IPL that is anticorrelated with activity in IPS, premotor, and posterior temporal areas (Fox and Raichle, 2007).
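The Bonferroni-corrected classification used for Figure 4 can be sketched as follows, assuming one β per subject for each task (averaged across near and far locations) and two-tailed paired t tests at α = 0.05/3. The color labels mirror the Figure 4 legend; the function name and the handling of areas that fit none of the three patterns are assumptions.

```python
import numpy as np
from scipy import stats

ALPHA = 0.05 / 3  # Bonferroni correction for three pairwise comparisons (~0.017)


def task_preference(grasp, touch, look, alpha=ALPHA):
    """Classify an area's task preference from per-subject betas."""
    def greater(a, b):
        res = stats.ttest_rel(a, b)  # two-tailed paired t test
        return res.statistic > 0 and res.pvalue < alpha

    if greater(grasp, touch) and greater(grasp, look) and greater(touch, look):
        return "grasp"         # green in Figure 4
    if greater(grasp, look) and greater(touch, look):
        return "action"        # red: grasp and touch over look
    if greater(look, grasp) and greater(look, touch):
        return "look"          # light blue
    return "unclassified"
```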

Table 1.

Talairach coordinates and statistical details for brain areas showing a main effect of task in experiment 1

| Brain area | x | y | z | Volume (mm3) | F | G > T | G > L | T > L | L > G & T |
|---|---|---|---|---|---|---|---|---|---|
| Left S1, aIPS, and M1 | −37 | −23 | 53 | 15,417 | 12 | 0.001 | 0.0001 | 0.0001 | n.a. |
| Left M1* | −36 | −20 | 53 | 650 | 36 | 0.0001 | 0.0001 | 0.0001 | n.a. |
| Left S1* | −55 | −25 | 51 | 240 | 36 | 0.0006 | 0.001 | 0.01 | n.a. |
| Left aIPS* | −39 | −33 | 48 | 271 | 36 | 0.005 | 0.0003 | 0.002 | n.a. |
| Left vPM | −47 | 4 | 12 | 494 | 12 | 0.005 | 0.0001 | 0.002 | n.a. |
| Right aIPS | 51 | −29 | 33 | 492 | 12 | 0.0006 | 0.0001 | 0.0001 | n.a. |
| Right S2 | 53 | −29 | 25 | 253 | 12 | 0.005 | 0.0001 | 0.0001 | n.a. |
| Left S2 | −57 | −28 | 27 | 520 | 12.9 | 0.009 | 0.0001 | 0.0001 | n.a. |
| Cerebellum | −8 | −50 | −15 | 3778 | 12 | 0.0002 | 0.0001 | 0.0001 | n.a. |
| Left LO | −45 | −62 | 2 | 1592 | 12 | 0.001 | 0.0002 | 0.008 | n.a. |
| Right S1 | 50 | −17 | 40 | 518 | 12 | 0.0001 | 0.0001 | 0.001 | n.a. |
| SMA | −1.6 | −3.6 | 49 | 3786 | 12 | 0.06 | 0.0001 | 0.0001 | n.a. |
| Left SPL/area 5L | −25 | −51 | 58 | 433 | 12 | 0.075 | 0.0003 | 0.0004 | n.a. |
| Right LO | 48 | −50 | 3 | 865 | 12 | 0.06 | 0.0001 | 0.009 | n.a. |
| Left IPL | −44 | −55 | 28 | 888 | 12 | 0.07 | 0.008 | | |

x, y, and z are Talairach coordinates; the last four columns give p values for paired t tests. Nonsignificant values are indicated in boldface. Area abbreviations are the same as those in the figure legends. G, grasp; T, touch; L, look; n.a., not applicable. Asterisk (*) denotes voxels selected using a threshold of p = 0.0001, Bonferroni corrected.

Smoothing the group data improves interindividual overlap in stereotaxic space, which is particularly important for RFX analyses, but has the drawback that nearby foci can merge into one large cluster even at corrected thresholds that would readily isolate separate foci. This can be seen in the large cluster of activation that likely includes left M1, left S1, and left aIPS (Fig. 4b). When we raised the threshold (p = 0.0001, Bonferroni corrected), we were able to isolate peaks within this cluster that likely correspond to the three foci. This was important for distinguishing group aIPS from the neighboring regions. We tested brain activity in all three areas but, for conciseness, report results for aIPS only. The 2 × 3 ANOVA on the six experimental conditions showed only a significant main effect of task (F(1,9) = 19.188, p < 0.0001), with grasp higher than touch (p = 0.006) and look (p = 0.003), and touch and look also differing from each other (p = 0.013). Importantly, Gf and Gn did not differ significantly (p = 0.146), suggesting that the higher response for Gf than for Gn in the ROI analysis reflected a bias in voxel selection (Kriegeskorte et al., 2009; Vul and Kanwisher, 2010). Complete statistics for aIPS are given in the legend of Figure 4.

Main effect of location.

No brain areas showed a significant effect of location, i.e., for an object presented in the upper right versus the lower left position of the platform regardless of the type of task. Recall, however, that a transport effect would be expected to be characterized by a task × location interaction and not necessarily by a main effect of location (because no location differences are expected in the look conditions).

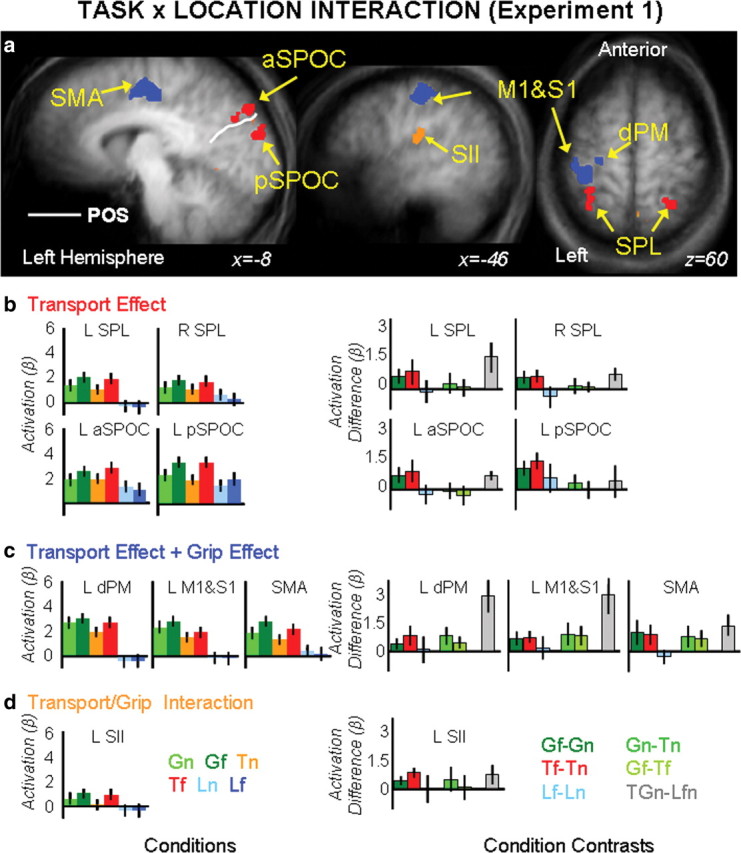

Interaction task × location.

The task × location interaction (F(2,18) = 7.27, p = 0.001) revealed activations in several areas, mostly in the left hemisphere: left M1, left dPM, left SMA, left S1, left S2, left medial SPL/area 5L, left aSPOC, and left pSPOC. In the right hemisphere, activation was found in the medial SPL/area 5L. Statistical maps and stereotaxic coordinates for all areas are reported in Figure 5b and Table 2, respectively.

Figure 5.

Group statistical maps and activation levels for areas showing an interaction of task × location in experiment 1. a, Each region that showed a significant interaction of task × location in the voxelwise ANOVA is color-coded based on the pattern of activations, as indicated by the color-coded headers for b, c, and d. b–d, Bar graphs show β weight averages (left panels) and β weight differences between key conditions (right panels) for each area. Labels and conventions are as in previous figures. dPM, Dorsolateral premotor cortex.

Table 2.

Talairach coordinates and statistical details for brain areas showing an interaction of task × location in experiment 1

| Brain area | x | y | z | Volume (mm3) | Gf > Gn | Tf > Tn | Lf > Ln | Gn − Tn | Gf − Tf | GTn − Lnf |
|---|---|---|---|---|---|---|---|---|---|---|
| Left SPL | −30 | −45 | 61 | 1220 | 0.002 | 0.01 | 0.635 | 0.111 | 0.341 | 0.001 |
| Right SPL/area 5L | 25 | −46 | 59 | 393 | 0.003 | 0.001 | 0.126 | 0.178 | 0.173 | 0.001 |
| Left aSPOC | −7 | −80 | 36 | 403 | 0.003 | 0.004 | 0.293 | 0.378 | 0.487 | 0.0001 |
| Left pSPOC | −8 | −87 | 19 | 199 | 0.0001 | 0.0001 | 0.093 | 0.045 | 0.39 | 0.292 |
| Left dPM | −24 | −14 | 69 | 932 | 0.01 | 0.008 | 0.789 | 0.002 | 0.006 | 0.0001 |
| Left M1/S1 | −31 | −24 | 51 | 3977 | 0.008 | 0.001 | 0.354 | 0.0001 | 0.028 | 0.0001 |
| SMA | −6 | −13 | 47 | 1514 | 0.003 | 0.004 | 0.283 | 0.008 | 0.254 | 0.0001 |
| Left S2 | −42 | −23 | 23 | 968 | 0.003 | 0.0001 | 0.999 | 0.054 | 0.003 | 0.007 |

x, y, and z are Talairach coordinates; the last six columns give p values for paired t tests. Nonsignificant values are indicated in boldface. Gf, Grasping the far object; Tf, touching the far object; Gn, grasping the near object; Tn, touching the near object; Ln, passive viewing the near object; Lf, passive viewing the far object.

Analyses of the β weights (Fig. 5b–g; Tables 2 and 3) revealed different patterns of activity, which can be summarized by sorting the brain areas into three groups: transport component-related areas, grip-plus-transport component-related areas, and areas showing a grip/transport interaction.

Areas responsive to the transport component (left and right SPL/area 5L, left aSPOC, and left pSPOC) are depicted in red. They showed a main effect of location in the 2 × 2 grasp/touch × location ANOVA, with actions executed in far space eliciting higher responses than actions executed in near space. In the 2 × 2 action/look × location ANOVA, they showed a task × location interaction, with only the action tasks eliciting higher responses for objects in the far location (Gf > Gn, Tf > Tn, and Lf = Ln) (Table 2; Fig. 5b). Interestingly, left pSPOC, but not left aSPOC or bilateral SPL/area 5L, showed a visually driven transport effect: activation for actions executed toward near space did not differ from passive viewing (GTn = Lnf).

Areas responsive to both the transport and the grip component (left dPM, left M1/S1, and SMA) are depicted in blue and showed main effects of task and location in the 2 × 2 grasp/touch × location ANOVA where grasp was higher than touch and where actions executed in the far location showed a higher response than actions executed toward the near location. An interaction of task × location was found for the 2 × 2 action/look ANOVA, where only action tasks elicited a higher response for objects presented in the far location (Gf > Gn, Tf > Tn and Lf = Ln) (Table 3; Fig. 5c).

One area, left S2 (Fig. 5a, orange), showed an interaction between the transport and the grip components. Main effects of task and location, as well as the task × location interaction, were significant in the 2 × 2 grasp/touch × location ANOVA. Analyses of the differences showed that the interaction was driven by the grasp task, which elicited a higher response than touch when executed toward the near space (Gn > Tn, Gf = Tf) (Fig. 5c). Only the main effect of task was significant in the action/look × location ANOVA, with action tasks eliciting a higher response than passive viewing.
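The 2 × 2 analyses described above can be illustrated with a minimal sketch. It assumes a fully within-subject design in which each single-df effect (task, location, and their interaction) is tested against its own effect × subject error term; under that assumption the repeated-measures F equals the square of a one-sample t test on per-subject contrast scores. The function names and the β values in the usage example are hypothetical and serve only to show the arithmetic; they are not data from this study. Treating "action" as the mean of grasp and touch in the action/look ANOVA is also an assumption.

```python
from math import sqrt
from statistics import mean, stdev


def f_from_contrast(scores):
    """Repeated-measures F(1, n - 1) for a single-df within-subject effect.

    Computed as the squared one-sample t statistic of the per-subject
    contrast scores against zero.
    """
    t = mean(scores) / (stdev(scores) / sqrt(len(scores)))
    return t ** 2


def grasp_touch_by_location(betas):
    """F values of the 2 x 2 grasp/touch x location ANOVA."""
    return {
        # Grasp versus touch, collapsed over location
        "task": f_from_contrast([b["Gf"] + b["Gn"] - b["Tf"] - b["Tn"] for b in betas]),
        # Far versus near, collapsed over task
        "location": f_from_contrast([b["Gf"] + b["Tf"] - b["Gn"] - b["Tn"] for b in betas]),
        # Does the grasp-minus-touch difference change with location?
        "task x location": f_from_contrast(
            [(b["Gf"] - b["Tf"]) - (b["Gn"] - b["Tn"]) for b in betas]
        ),
    }


def action_look_by_location(betas):
    """F value of the task x location interaction in the action/look ANOVA.

    'Action' is assumed here to be the mean of grasp and touch.
    """
    return f_from_contrast(
        [
            ((b["Gf"] + b["Tf"]) / 2 - b["Lf"]) - ((b["Gn"] + b["Tn"]) / 2 - b["Ln"])
            for b in betas
        ]
    )


# Hypothetical beta weights for three subjects (illustrative only, not study data)
example_betas = [
    {"Gf": 3, "Gn": 1, "Tf": 2, "Tn": 1, "Lf": 1, "Ln": 1},
    {"Gf": 4, "Gn": 2, "Tf": 3, "Tn": 1, "Lf": 2, "Ln": 1},
    {"Gf": 3, "Gn": 1, "Tf": 3, "Tn": 2, "Lf": 1, "Ln": 1},
]
print(grasp_touch_by_location(example_betas))
print(action_look_by_location(example_betas))
```

Because each effect has a single numerator degree of freedom, the scaling of a contrast (e.g., sum versus mean of two conditions) does not change its F value.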

Results of experiment 2

In experiment 1, tasks requiring arm transport yielded significantly higher activation than tasks that did not in both the anterior and the posterior SPOC. Because actions were performed with visual feedback of the moving arm (i.e., in closed loop), the transport-specific activation could merely reflect the visual motion of the moving arm. This explanation could fully account for the pattern of effects in pSPOC, where higher responses were observed only when the arm moved (Gf and Tf > Gn and Tn = Ln and Lf). It is less likely, however, to account for the pattern in aSPOC, where movements without arm transport still elicited higher activation than passive viewing (Gf and Tf > Gn and Tn > Ln and Lf). Moreover, because the response was confined to the left hemisphere, it is unclear whether SPOC activations reflect moving the contralateral effector (right arm/hand) or seeing the far objects, which were always presented in the contralateral (right) hemifield. In experiment 2, we controlled for these confounds by asking participants to perform actions in open loop (i.e., without seeing their own arm/hand moving) and by presenting stimuli requiring arm transport in either the right (experiment 2a) or the left (experiment 2b) visual field.

Voxelwise analyses

In experiment 2, data were analyzed using voxelwise analysis on group-averaged data only. A three-way factorial ANOVA (hand × task × location) allowed us to determine which brain areas were influenced by the position of the hand on the platform, the task performed, the location of the stimuli, and their interactions.

No main effect of hand or interactions involving hand.

No brain area showed a significant main effect of hand or any interaction between hand and the other variables (task and location). Because the starting position of the hand therefore had no detectable effect on the pattern of results, we followed the same analysis logic as for the 2 × 3 location × task ANOVA in experiment 1.

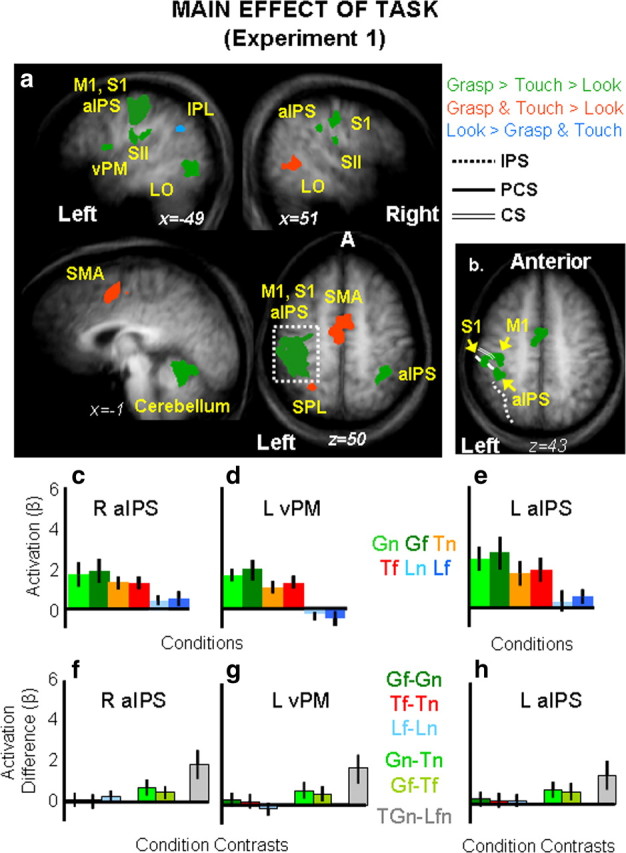

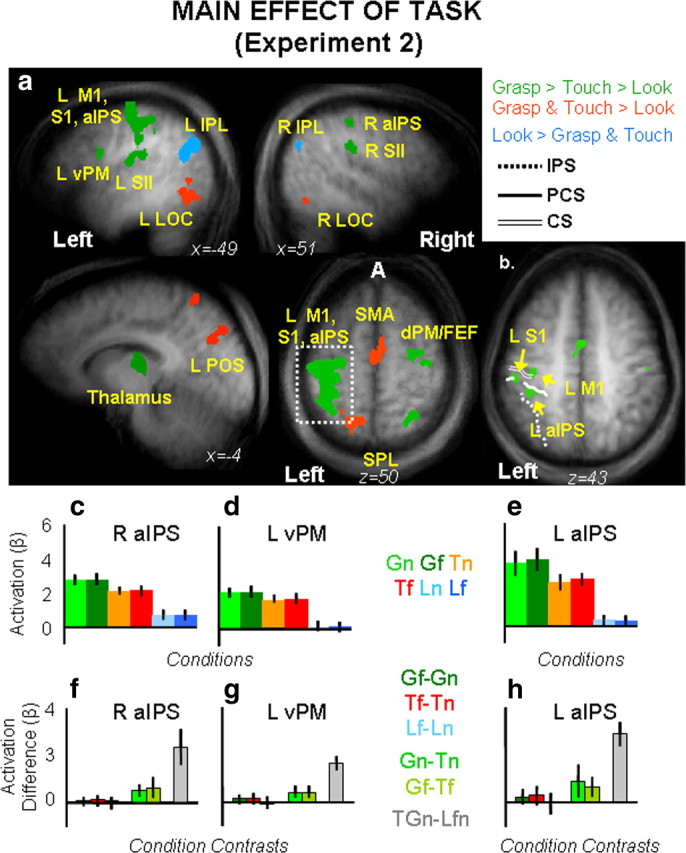

Main effect of task.

The main effect of task (F(2,24) = 110.5, p = 0.001) revealed activations in several areas within the left and the right hemispheres (Fig. 6).

Figure 6.

Group statistical maps and activation levels for areas showing a main effect of task in experiment 2. a, Each region that showed a significant main effect of task in the voxelwise ANOVA for experiment 2 is color coded based on its pattern of activations, as indicated in the legend. Talairach coordinates for the activated areas and p values for the relevant statistical comparisons are shown in Table 4. b, Brain areas that survived a more conservative threshold (p < 0.0001, Bonferroni corrected) for the same main effect of task revealed separate activations within left M1, left S1, and left aIPS. c–h, The bar graphs display the average β weights in each experimental condition and the differences between them within key areas of the grasping network: right aIPS (c, f), left vPM (d, g), and left aIPS (e, h). Labels and conventions are as in previous figures. β weight differences in left vPM, left aIPS, and right aIPS are as follows: left vPM: Gf = Gn, p = 0.75; Tf = Tn, p = 0.71; Lf = Ln, p = 0.43; Gf > Tf, p = 0.009; Gn > Tn, p = 0.007; GTn > Lnf, p = 0.0001; left aIPS: Gf = Gn, p = 0.094; Tf = Tn, p = 0.103; Lf = Ln, p = 0.409; Gf > Tf, p = 0.007; Gn > Tn, p = 0.003; GTn > Lnf, p = 0.0001; right aIPS: Gf = Gn, p = 0.73; Tf = Tn, p = 0.43; Lf = Ln, p = 0.65; Gf > Tf, p = 0.0003; Gn > Tn, p = 0.0031; GTn > Lnf, p = 0.0001.

Analyses of brain activity for the three tasks (β weights for grasp, touch, and look) were performed using paired-samples t tests in each area, Bonferroni corrected for the number of comparisons (α = 0.05/3 ≈ 0.017). Results are reported in Table 4 and depicted in Figure 6a, where areas showing a preference for grasp (grasp higher than touch, and both grasp and touch higher than look) are shown in green, areas showing a preference for grasp and touch over look are shown in red, and areas showing a preference for look over touch and grasp are shown in light blue. A preference for the grasp task only was found in the larger left cluster (including M1, S1, dPM, and aIPS) and in S2, vPM, and thalamus in the left hemisphere, as well as in dPM, aIPS, S2, and SPL/area 5L in the right hemisphere. A preference for the action tasks in general (grasp and touch) was found in SMA, left SPL/area 5L, left SPOC, and bilateral LO. The higher response for grasp and touch in bilateral LO might be caused by residual vision of the hand/arm/fingers at the very early stage of the movements (400 ms of illumination might have been enough to see the hand leaving the starting position, but not enough to see it approaching and interacting with the objects). Positive activation for look, together with negative activation for grasp and touch, was found bilaterally in IPL and in the left anterior temporal sulcus (ATs). As suggested for experiment 1, this negative response for grasp and touch might reflect the anticorrelation, described for the default resting-state network, between activity in IPL and ATs and activity within IPS, premotor, and posterior temporal areas (Fox and Raichle, 2007).
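The Bonferroni-corrected decision rule behind the green (grasp-preferring) and red (action-preferring) categories can be sketched as follows, using a subset of the G > T, G > L, and T > L p values from Table 4. This is a hypothetical reconstruction of the rule, not the authors' analysis code: it treats each p value as a test in the stated direction and, for brevity, omits the look-preferring (light blue) category. The names `ALPHA`, `TABLE4_P`, and `classify` are illustrative.

```python
# Bonferroni-corrected alpha for the three paired comparisons
# (grasp vs touch, grasp vs look, touch vs look): 0.05 / 3 ≈ 0.017
ALPHA = 0.05 / 3

# p values (G > T, G > L, T > L) copied from Table 4 for a subset of regions
TABLE4_P = {
    "Left vPM": (0.0001, 0.0001, 0.0001),
    "Right dPM": (0.013, 0.0001, 0.0001),
    "Thalamus": (0.005, 0.0001, 0.0001),
    "SMA": (0.58, 0.0001, 0.0001),
    "Left SPL/area 5L": (0.039, 0.0001, 0.0001),
    "Left SPOC": (0.42, 0.001, 0.0007),
    "Right LO": (0.08, 0.0001, 0.0001),
}


def classify(p_g_gt_t, p_g_gt_l, p_t_gt_l, alpha=ALPHA):
    """Hypothetical rule: 'grasp' (green), 'action' (red), or 'other'."""
    if p_g_gt_l < alpha and p_t_gt_l < alpha:
        # Both actions exceed look; grasp-specific only if grasp also beats touch
        return "grasp" if p_g_gt_t < alpha else "action"
    return "other"


for region, p_values in TABLE4_P.items():
    print(f"{region}: {classify(*p_values)}")
```

With the corrected α of about 0.017, left SPL/area 5L (G > T, p = 0.039) falls into the action group, as reported in the text, whereas at an uncorrected α of 0.05 the same rule would classify it as grasp-preferring.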

Table 4.

Brain areas, Talairach coordinates, volume, and p values for activations associated with the main effect of task in experiment 2

| Brain area | x | y | z | Volume (mm3) | F | G > T | G > L | T > L | L > G & T |
|---|---|---|---|---|---|---|---|---|---|
| Left M1, S1, aIPS | −39 | −27 | 50 | 23310 | 12.9 | 0.004 | 0.0001 | 0.0001 | n.a. |
| Left M1* | −38 | −25 | 52 | 1394 | 46.10 | 0.0001 | 0.0001 | 0.0001 | n.s. |
| Left S1* | −55 | −25 | 41 | 240 | 46.10 | 0.0001 | 0.0001 | 0.0001 | n.s. |
| Left aIPS* | −42 | −34 | 42 | 295 | 57.72 | 0.001 | 0.0001 | 0.0001 | n.s. |
| Right dPM | 29 | −13 | 63 | 3526 | 12.9 | 0.013 | 0.0001 | 0.0001 | n.s. |
| Right SPL/area 5L | 25 | −56 | 57 | 427 | 12.9 | 0.0004 | 0.0001 | 0.0001 | n.s. |
| Right aIPS | 40 | −34 | 43 | 1492 | 12.9 | 0.0006 | 0.0001 | 0.0001 | n.s. |
| Left S2 | −42 | −17 | 22 | 324 | 12.9 | 0.001 | 0.0001 | 0.0001 | |
| Right S2 | 58 | −29 | 26 | 2717 | 12.9 | 0.009 | 0.0001 | 0.0001 | n.s. |
| Left vPM | −42 | 3 | 19 | 820 | 12.9 | 0.0001 | 0.0001 | 0.0001 | n.s. |
| Thalamus | −13 | −20 | 11 | 4646 | 12.9 | 0.005 | 0.0001 | 0.0001 | n.s. |
| SMA | 0 | −5 | 48 | 4136 | 12.9 | 0.58 | 0.0001 | 0.0001 | |
| Left SPL/area 5L | −17 | −50 | 59 | 1115 | 12.9 | 0.039 | 0.0001 | 0.0001 | n.s. |
| Left SPOC | −10 | −75 | 33 | 1240 | 12.9 | 0.42 | 0.001 | 0.0007 | n.s. |
| Left LO | −50 | −61 | 7 | 1211 | 12.9 | 0.43 | 0.0002 | 0.0001 | n.s. |
| Right LO | 52 | −59 | 11 | 333 | 12.9 | 0.08 | 0.0001 | 0.0001 | n.s. |
| Left IPL | −46 | −62 | 26 | 2378 | 12.9 | 0.79 | 0.0001 | | |
| Right IPL | 50 | −64 | 29 | 193 | 12.9 | 0.67 | 0.0004 | | |
| Left AT | −59 | −29 | −2.9 | 508 | 12.9 | 0.051 | 0.0001 | | |

Nonsignificant values are indicated in boldface. AT, Anterior temporal cortex; n.a., not applicable; n.s., not significant. Asterisk (*) denotes voxels selected using a threshold of p = 0.0001, Bonferroni corrected.

As in experiment 1, to confirm that left aIPS was reliably activated, we applied a more conservative threshold (p = 0.0001, Bonferroni corrected), which yielded separate activations in left M1, left S1, and left aIPS. We tested brain activity in all of these areas but, for conciseness, report results for aIPS only (Fig. 6b–d). The 3 × 2 ANOVA on the six experimental conditions showed only a significant main effect of task (F(2,24) = 93.307, p < 0.0001), with grasp eliciting a higher response than touch (p = 0.006) and look (p = 0.0001), and with touch and look also differing from each other (p = 0.0001). As in experiment 1, activity levels for grasping actions executed toward the far and the near locations were statistically indistinguishable (Gf = Gn). A complete report of the statistical results for aIPS is given in the legend of Figure 6.

Main effect of location.

As in experiment 1, no brain area showed a significant main effect of location (objects presented immediately adjacent to the hand vs far from the hand) at the chosen threshold.

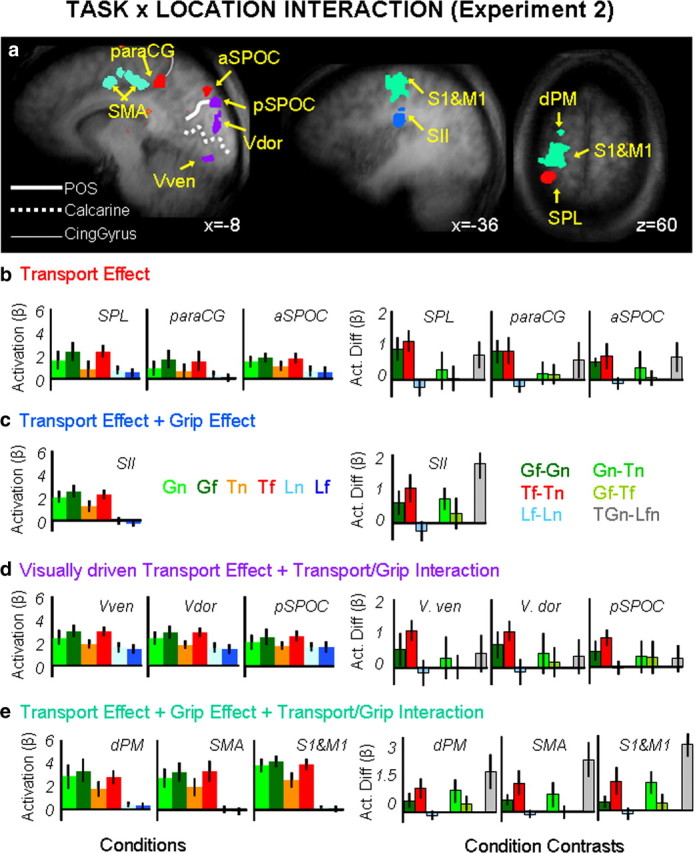

Interaction task × location.

The interaction of task × location (F(1,12) = 7.033, p = 0.001) revealed activations in several areas, mostly in the left hemisphere: left M1, left dPM, left SMA, the paracingulate gyrus (paraCG) anterior to the ascending ramus of the cingulate sulcus, left S1, left S2, left SPL/area 5L, left aSPOC, left pSPOC, and early visual areas dorsal and ventral to the calcarine fissure (Vdor and Vven). Statistical maps and stereotaxic coordinates for all areas are reported in Figure 7 and Table 5, respectively.

Figure 7.

Group statistical maps and activation levels for areas showing an interaction of task × location in experiment 2. a, Brain areas are color coded according to their specific pattern of activation, as indicated by the color labels for b, c, d, and e. The group activation map is based on the Talairach-averaged group results shown on one averaged anatomical map. b–e, Bar graphs show averaged β weights (left panels) and differences in β weights between key conditions (right panels) for transport-related areas (b), transport-plus-grip-related areas (c), visually driven transport-related areas (d), and areas related to transport, grip, and their interaction (e). Labels and conventions are as in previous figures. Vven, visual ventral; Vdor, visual dorsal.

Table 5.

Brain areas, Talairach coordinates, volume, and p values for activations associated with the task × location interaction in experiment 2

| Brain area | x | y | z | Volume | Gf > Gn | Tf > Tn | Lf > Ln | Gn − Tn | Gf − Tf | GTn − Lfn |
|---|---|---|---|---|---|---|---|---|---|---|
| Left paraCG | −12 | −41 | 43 | 771 | 0.0001 | 0.0001 | 0.039 | 0.335 | 0.284 | 0.009 |
| Left SPL/area 5L | −25 | −39 | 62 | 523 | 0.001 | 0.0001 | 0.134 | 0.544 | 0.363 | 0.0001 |
| Left aSPOC | −9 | −79 | 38 | 348 | 0.0001 | 0.002 | 0.265 | 0.069 | 0.329 | 0.007 |
| Left S2 | −42 | −23 | 23 | 968 | 0.006 | 0.0001 | 0.121 | 0.0003 | 0.102 | 0.0001 |
| Left pSPOC | −9 | −85 | 29 | 487 | 0.004 | 0.0001 | 0.838 | 0.098 | 0.108 | 0.205 |
| Vdor | −4 | −85 | 11 | 637 | 0.003 | 0.0001 | 0.479 | 0.073 | 0.197 | 0.018 |
| Vven | −5 | −84 | −12 | 177 | 0.008 | 0.0001 | 0.436 | 0.166 | 0.430 | 0.133 |
| Left dPM | −20 | −9 | 65 | 49 | 0.006 | 0.0001 | 0.156 | 0.0001 | 0.044 | 0.0001 |
| Left M1/S1 | −29 | −23 | 56 | 2964 | 0.008 | 0.001 | 0.214 | 0.0008 | 0.013 | 0.0001 |
| SMA | −2 | −18 | 48 | 6700 | 0.0001 | 0.0001 | 0.180 | 0.006 | 0.438 | 0.01 |

Nonsignificant values are indicated in boldface.

Analyses of the β weights, summarized in Figure 7b–e and in Tables 5 and 6, revealed different patterns of activity, which can be summarized by grouping the brain areas into four categories: (1) transport component-related areas; (2) grip-plus-transport component-related areas; (3) visual transport component-related areas; and (4) areas related to the interaction of grip and transport.

Areas responsive to the transport component (left aSPOC, left paraCG, and left SPL/area 5L) showed a main effect of location in the 2 × 2 grasp/touch × location ANOVA, with actions executed in the far space eliciting a higher response than actions executed in the near space. An interaction of task × location was found for the 2 × 2 action/look ANOVA, where only the action tasks elicited a higher response for objects presented in the far location (Gf > Gn, Tf > Tn, and Lf = Ln).

One area, left S2, responded to both the transport and the grip components, showing significant main effects of task and location in the 2 × 2 grasp/touch × location ANOVA (grasp was higher than touch, and actions executed in the far space elicited a higher response than actions executed in the near space). An interaction of task × location was found for the 2 × 2 action/look ANOVA, where only the action tasks elicited a higher response for objects presented in the far location (Gf > Gn, Tf > Tn, and Lf = Ln).