Abstract

Choices are often intertemporal, requiring a tradeoff between short-term and long-term outcomes. In such contexts, humans may prefer small rewards delivered immediately to larger rewards delivered after a delay, reflecting temporal discounting (TD) of delayed outcomes. The medial orbitofrontal cortex (mOFC) is consistently activated during intertemporal choice, yet its role remains unclear. Here, patients with lesions in the mOFC (mOFC patients), control patients with lesions outside the frontal lobe, and healthy individuals chose hypothetically between small-immediate and larger-delayed rewards. The type of reward varied across three TD tasks, including both primary (food) and secondary (money and discount vouchers) rewards. We found that damage to mOFC significantly increased the preference for small-immediate over larger-delayed rewards, resulting in steeper TD of future rewards in mOFC patients compared with the control groups. This held for both primary and secondary rewards. All participants, including mOFC patients, were more willing to wait for delayed money and discount vouchers than for delayed food, suggesting that mOFC patients' (impatient) choices were not due merely to poor motor impulse control or consideration of the goods at stake. These findings provide the first evidence in humans that mOFC is necessary for valuation and preference of delayed rewards for intertemporal choice.

Introduction

Humans and other animals are frequently faced with choices differing in the timing of their consequences (intertemporal choices; e.g., should I spend my money now or put it into a saving account?). In the laboratory, these situations may be modeled by manipulating the time at which rewards are delivered. For example, a subject might choose between $5 now and $15 in 1 week. Intertemporal choices require tradeoff of the value of one outcome that is temporally proximal with another outcome that is temporally distant. Individuals, and more so animals (Ainslie, 1974; Rosati et al., 2007), tend to prefer immediate over delayed rewards, even when waiting would yield larger payoffs. This phenomenon reflects the decrease in subjective value of a reward as the delay until its receipt increases, known as temporal discounting (TD) (Ainslie, 1975; Myerson and Green, 1995; Frederick et al., 2002).

The rate at which future rewards are discounted varies across individuals (Soman et al., 2005) and correlates with individual differences in real-world behavior. Steep TD of future rewards is associated with shortsighted, or patently impulsive, behavior. Addicted subjects (Kirby and Petry, 2004) and compulsive gamblers (Holt et al., 2003), for example, show increased TD rates compared with healthy controls, consistent with their inability to forgo immediate rewards (e.g., drug) to favor later rewards of larger value (e.g., health).

Research in cognitive neuroscience has begun to investigate the neural mechanisms governing TD (Luhmann, 2009). Functional neuroimaging [functional magnetic resonance imaging (fMRI)] studies in humans have consistently detected activity in brain structures such as the medial orbitofrontal cortex (mOFC) and adjacent medial prefrontal cortex (designated collectively as ventromedial prefrontal cortex) during intertemporal choices, especially when individuals weighted delayed rewards against immediately available rewards (McClure et al., 2004, 2007; Ballard and Knutson, 2009). Other fMRI studies have shown that activity in the mOFC and medial prefrontal cortex tracked the subjective value of rewards, over both short and long timescales (Kable and Glimcher, 2007; Peters and Büchel, 2009; Pine et al., 2009). Together, these findings identify the mOFC as an important structure for intertemporal choice.

fMRI evidence, however, cannot establish whether the mOFC is necessary for intertemporal choice. Lesion studies have the potential to test this claim directly. Several nonhuman animal studies have shown abnormal TD following lesion to the OFC (Cardinal et al., 2004; Winstanley et al., 2004; Rudebeck et al., 2006), providing evidence for a causal involvement in TD. The only study assessing TD in human patients with lesion to the ventromedial prefrontal cortex, however, found no deficit (Fellows and Farah, 2005). Crucially, some patients in that study had damage involving medial prefrontal cortex but sparing mOFC. Thus, whether mOFC plays a necessary role in TD in humans remains unknown.

Here, we investigated TD of hypothetical primary and secondary rewards in patients with lesions in mOFC, control patients with lesions outside the frontal lobe, and healthy individuals. If mOFC plays a crucial role during intertemporal choice, favoring valuation of long-term outcomes (Bechara et al., 1997; Schoenbaum et al., 2009), then patients with lesions involving this brain region should show increased TD of future rewards compared with control groups.

Materials and Methods

Participants

Participants included 16 patients with brain damage and 20 healthy individuals (see Table 1 for demographic and clinical information). Patients were recruited at the Centre for Studies and Research in Cognitive Neuroscience, Cesena, Italy. Patients were selected on the basis of the location of their lesion evident on magnetic resonance imaging (MRI) or computerized tomography (CT) scans.

Table 1.

Participant groups' demographic and clinical data

| Group | Sex (M/F) | Age (years) | Education (years) | MMSE | BIS-11 | Lesion volume (cc) | SRM | DS |
|---|---|---|---|---|---|---|---|---|
| mOFC (n = 7) | 6/1 | 57.7 (10.4) | 11.1 (6.4) | 27.6 (2.3) | 63.4 (6.1) | 49.3 (14.6) | 30.1 (5.8) | 4.9 (0.7) |
| non-FC (n = 9) | 7/2 | 57 (12.8) | 12.1 (4.0) | 28.1 (1.9) | 64.3 (10.9) | 46.8 (33.9) | 27.6 (4.5) | 5.1 (1.4) |
| HC (n = 20) | 18/2 | 58.2 (6.6) | 10.6 (4.6) | 28.6 (1.44) | 57.9 (7.5) | – | – | – |

The values in parentheses are SDs. M, Male; F, female; MMSE, Mini-Mental State Examination; SRM, Standard Raven Matrices (corrected score); DS, digit span forward (corrected score). Dashes indicate data are not available.

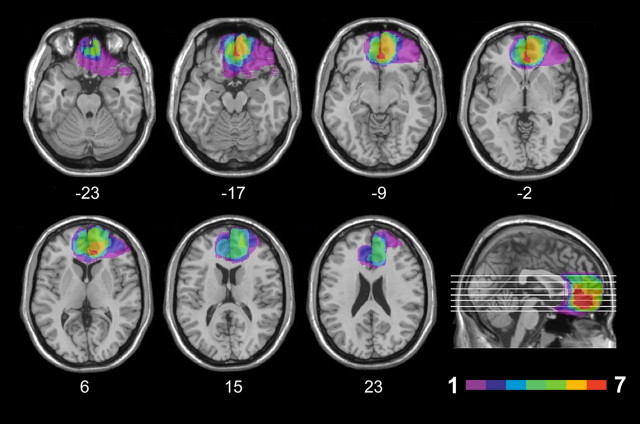

Seven patients (1 female) had lesions encompassing the medial one-third of the orbital surface and the ventral one-third of the medial surface of the frontal lobe, as well as the white matter subjacent to these regions (Fig. 1). Since lesions predominantly involved the mOFC (see Lesion analysis), we henceforth refer to this group as mOFC patients. Lesions resulted from the rupture of an aneurysm of the anterior communicating artery in 5 cases, and from traumatic brain injury in 2 cases. Lesions were bilateral in all cases, though often asymmetrically so.

Figure 1.

Location and overlap of brain lesions. The panel shows the lesions of the seven patients with mOFC damage projected on the same seven axial slices and on the mesial view of the standard Montreal Neurological Institute brain. The level of the axial slices has been marked by white horizontal lines on the mesial view of the brain. z-coordinates of each axial slice are given. The color bar indicates the number of overlapping lesions. In each axial slice, the left hemisphere is on the left side. Maximal overlap occurs in the medial orbitofrontal cortex (BAs 10, 11), and adjacent medial prefrontal cortex (BA 32).

Nine patients (two females) were selected on the basis of having damage that did not involve the mesial orbital/ventromedial prefrontal cortex and frontal pole, and also spared the amygdala in both hemispheres. We henceforth refer to this group of nonfrontal patients as non-FC patients. In this group, lesions were unilateral in all cases (in the left hemisphere in 6 cases, and in the right hemisphere in 3 cases), and were all caused by ischemic or hemorrhagic stroke. Lesion sites included the lateral aspect of the temporal lobe and adjacent white matter (in 5 cases), the inferior parietal lobule (in 1 case), the medial occipital area (in 1 case), and the lateral occipitoparietal junction (in 2 cases).

Included patients were in the stable phase of recovery (at least 12 months postmorbid), were not receiving psychoactive drugs, and had no other diagnosis likely to affect cognition or interfere with participation in the study (e.g., significant psychiatric disease, alcohol abuse, history of cerebrovascular disease). There was no significant difference in lesion volume between mOFC patients and non-FC patients (49.2 vs 46.9 cc; p = 0.88).

The healthy control (HC) group comprised 20 individuals (two females) matched to the patients on mean age, gender, and education. Control participants were not taking psychoactive drugs, and were free of current or past psychiatric or neurological illness as determined by history. Participants gave informed consent, according to the Declaration of Helsinki (International Committee of Medical Journal Editors, 1991) and the Ethical Committee of the Department of Psychology, University of Bologna.

Patients' general cognitive functioning was preserved, as indicated by their scores on the Mini-Mental State Examination (Folstein et al., 1975), the Raven Standard Matrices, and the digit span test, which were within the normal range in all cases (Spinnler and Tognoni, 1987) (Table 1).

Lesion analysis

For each patient, lesion extent and location were documented by using the most recent clinical CT or MRI scan. Lesions were traced by a neurologist with experience in image analysis on the T1-weighted template MRI scan from the Montreal Neurological Institute provided with the MRIcron software (Rorden and Brett, 2000; available at http://www.mricro.com/mricron). This scan is normalized to Talairach space and has become a popular template for normalization in functional brain imaging (Moretti et al., 2009). Superimposing each patient's lesion onto the standard brain allowed us to estimate the total brain lesion volume (in cubic centimeters). Furthermore, the location of the lesions was identified by overlaying the lesion area onto the Automated Anatomical Labeling template provided with MRIcron. Figure 1 shows the extent and overlap of brain lesions in mOFC patients. Brodmann's areas (BA) affected in the mOFC group were areas 10, 11, 47, 32 (subgenual portion), and 24, with the region of maximal overlap occurring in BA 11 [mean (M) = 21.0 cc, SD = 8.6], BA 10 (M = 12.6 cc, SD = 4.7), and BA 32 (M = 5.4 cc, SD = 4.0).

Temporal discounting tasks

In each of three computerized TD tasks, participants chose between an amount of a reward that could be received immediately and an amount of reward that could be received after some specified delay (Kirby and Herrnstein, 1995; Myerson et al., 2003). The nature of the reward changed across tasks. One task assessed TD for money, one task assessed TD for food (e.g., chocolate bars; see Procedures), and one task assessed TD for discount vouchers (e.g., discount vouchers for gym sessions; see Procedures). All rewards used were hypothetical.

In each task, participants made five choices at each of six delays: 2 d, 2 weeks, 1 month, 3 months, 6 months, and 1 year. The order of blocks of choices pertaining to different delays was randomly determined across participants. Within each block of five choices, the delayed amount was always 40 units (e.g., 40 €, 40 chocolate bars, a 40 € discount voucher for a gym session). The amount of the immediate reward, on the other hand, was adjusted based on the participant's choices, using a staircase procedure that converged on the amount of the immediate reward that was equal, in subjective value, to the delayed reward (Du et al., 2002). The first choice was between a delayed amount of 40 units and an immediate amount of 20 units. If the participant chose the immediate reward, then the amount of the immediate reward was decreased on the next trial; if the subject chose the delayed reward, then the amount of the immediate reward was increased on the next trial. The size of the adjustment in the immediate reward decreased with successive choices: the first adjustment was half of the difference between the immediate and the delayed reward, whereas for subsequent choices it was half of the previous adjustment (Myerson et al., 2003). This procedure was repeated until the subject had made five choices at one specific delay, after which the subject began a new series of choices at another delay. For each trial in a block, the immediate amount represents the best guess as to the subjective value of the delayed reward. Therefore, the immediate amount that would have been presented on the sixth trial of a delay block was taken as the estimate of the subjective value of the delayed reward at that delay.
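The adjusting-amount procedure described above can be sketched in a few lines of Python. This is an illustrative sketch, not the task software used in the study: the function name `staircase_indifference` and the `prefers_immediate` callback (a stand-in for the participant's button press) are hypothetical, whereas the starting amounts, the halving rule, and the use of the would-be sixth amount as the estimate follow the description in the text.

```python
def staircase_indifference(prefers_immediate, delayed=40.0, n_choices=5):
    """Estimate the immediate amount judged equal in value to `delayed`.

    `prefers_immediate(immediate, delayed)` is a hypothetical callback that
    returns True when the participant picks the immediate option.
    """
    immediate = delayed / 2.0             # first trial: 20 now vs 40 later
    step = (delayed - immediate) / 2.0    # first adjustment: half the difference
    for _ in range(n_choices):
        if prefers_immediate(immediate, delayed):
            immediate -= step             # immediate chosen: offer less next time
        else:
            immediate += step             # delayed chosen: offer more next time
        step /= 2.0                       # each adjustment is half the previous one
    # The amount that would be shown on the next (sixth) trial is the estimate.
    return immediate


# Simulated participant whose true indifference point is 32 units (made up).
estimate = staircase_indifference(lambda now, later: now >= 32.0)
print(estimate)  # 31.875
```

With five choices per delay, the estimate can take any of 32 values between 0.625 and 39.375 units, spaced 1.25 units apart, which bounds the resolution of each indifference point.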

Self-report impulsivity scales

Participants were administered the Barratt Impulsiveness Scale (BIS-11) (Fossati et al., 2001), a 30-item self-report questionnaire evaluating everyday behaviors reflecting impulsivity on a 4-point Likert scale. The BIS-11 assesses 3 facets of impulsivity: attentional impulsivity (AI subscale, e.g., “I am more interested in the present than the future”), motor impulsivity (MI subscale, e.g., “I do things without thinking”), and impulsive nonplanning (INP subscale, e.g., “I make up my mind quickly”). High BIS-11 scores indicate high levels of impulsivity.

Procedures

To ensure motivation across tasks, before starting the experiment, participants were invited to choose their favorite food and discount voucher among four alternatives, presented on the computer screen. Food alternatives included two sweet snacks (cookie and chocolate bar), and two salty snacks (cracker and breadstick). Discount voucher alternatives included discount vouchers for a museum tour, gym session, hairdresser/barber session, and book purchase. The favorite food and discount vouchers were used as the reward for the food task and the discount voucher task, respectively.

Participants then underwent the three TD tasks. The tasks were administered separately, and the order of tasks was randomly determined across participants. Participants were told that, on each trial, two amounts of a hypothetical reward would appear on the screen. One could be received right now, and one could be received after a delay. They were informed that there were no correct or incorrect choices, and were required to indicate the option they preferred by pressing one of two buttons (Estle et al., 2007).

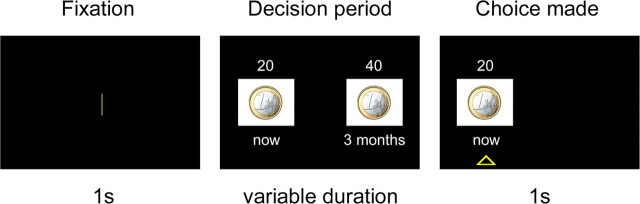

Figure 2 illustrates the experimental paradigm. Each trial began with a 1 s fixation screen, followed by a screen depicting the two available options. The two options appeared on the left and right side of the screen, and clearly indicated the type of reward, the amount of reward, and the delay of delivery of the reward. After the participants made their choices, the nonpreferred option disappeared, whereas the preferred option remained on the screen for 1 s, with a triangle underneath it. The intertrial interval was 1.5 s.

Figure 2.

Experimental paradigm. In each trial, after a 1 s fixation period, subjects chose between a small amount of reward delivered immediately and a larger amount of reward delivered after a delay. The preferred option remained highlighted for 1 s. Separate tasks involved different types of rewards, including money, discount vouchers, and food. All rewards used were hypothetical. The picture refers to a choice trial in the money task. See Materials and Methods for a more detailed explanation of procedures.

Data analysis

For each task, the rate at which the subjective value of a reward decays with delay (TD rate) was assessed through two indices: the temporal discounting parameter (k) (Mazur, 1987; Rachlin et al., 1991; Green and Myerson, 2004; Fellows and Farah, 2005), and the area under the empirical discounting curve (AUC) (Myerson et al., 2001).

Estimation of k.

The hyperbolic function SV = 1/(1+kD), where SV = subjective value (expressed as a fraction of the delayed amount), and D = delay (in days), was fit to the data to determine the k constant of the best fitting TD function, using a nonlinear, least-squares algorithm (as implemented in Statistica; Statsoft). The larger the value of k, the steeper the discounting function and the more participants were inclined to choose small-immediate rewards over larger-delayed rewards. Subjective preferences were well characterized by hyperbolic functions across groups. There were no significant differences in R² across participant groups in any of the tasks (money task: HC group = 0.72; mOFC group = 0.72; non-FC group = 0.68, F(2,33) = 0.11, p = 0.89; discount voucher task: HC group = 0.65; mOFC group = 0.70; non-FC group = 0.72, F(2,33) = 0.37, p = 0.68; food task: HC group = 0.71; mOFC group = 0.87; non-FC group = 0.80, F(2,33) = 1.74, p = 0.19).
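For readers who wish to reproduce this kind of fit, the following minimal sketch estimates k by least squares using only the Python standard library. It is not the Statistica routine used in the study: the search strategy (a coarse grid over log10 k followed by golden-section refinement), the function names, and the conversion of delays to days assuming 30-day months are all assumptions of the sketch, and the indifference points are synthetic.

```python
import math

# Delays in days; treating a month as 30 days is an assumption of this sketch.
DELAYS = [2, 14, 30, 90, 180, 360]


def hyperbolic(k, delay):
    """Subjective value as a fraction of the delayed amount: 1 / (1 + k*D)."""
    return 1.0 / (1.0 + k * delay)


def sse(k, delays, values):
    """Sum of squared errors between the hyperbolic model and the data."""
    return sum((hyperbolic(k, d) - v) ** 2 for d, v in zip(delays, values))


def fit_k(delays, values, lo=-6.0, hi=2.0, grid=200, iters=60):
    """Least-squares estimate of k, searched on a log10(k) scale."""
    width = (hi - lo) / grid
    # Coarse grid search to bracket the minimum.
    best = min(range(grid + 1),
               key=lambda i: sse(10 ** (lo + i * width), delays, values))
    a = lo + max(best - 1, 0) * width
    b = lo + min(best + 1, grid) * width
    # Golden-section refinement inside the bracket.
    ratio = (math.sqrt(5.0) - 1.0) / 2.0
    for _ in range(iters):
        x1 = b - ratio * (b - a)
        x2 = a + ratio * (b - a)
        if sse(10 ** x1, delays, values) < sse(10 ** x2, delays, values):
            b = x2
        else:
            a = x1
    return 10 ** ((a + b) / 2.0)


def r_squared(k, delays, values):
    """Proportion of variance in the indifference points explained by the fit."""
    mean_v = sum(values) / len(values)
    total = sum((v - mean_v) ** 2 for v in values)
    return 1.0 - sse(k, delays, values) / total


# Synthetic indifference points generated from k = 0.05 (not study data).
values = [hyperbolic(0.05, d) for d in DELAYS]
k_hat = fit_k(DELAYS, values)
print(k_hat, math.log10(k_hat), r_squared(k_hat, DELAYS, values))
```

Searching on the log10(k) scale mirrors the log-transformation applied to k before group comparisons and keeps the search well behaved across several orders of magnitude.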

For comparison purposes, we also assessed the fits to the data of an exponential discounting model. For each TD task, the exponential function SV = e^(−kD) was fit to the data to determine the k constant of the best fitting TD function. Although both the hyperbolic and the exponential functions fit the data well, the hyperbolic function fit better than the exponential across participant groups and reward types. We entered R² scores as the dependent variable in an ANOVA with group (mOFC patients, non-FC patients, and HC) and model (hyperbolic, exponential) as factors, for each reward separately. For the money task, there was a significant effect of model (F(1,33) = 10.16, p = 0.003), such that the hyperbolic model fit better than the exponential model (0.71 vs 0.64; p = 0.001), with no significant effect of group (p = 0.94) or group × model interaction (p = 0.91). Similar results were found for the discount voucher and food tasks. For the discount voucher task, there was a significant effect of model (F(1,33) = 5.83, p = 0.02), such that the hyperbolic model fit better than the exponential model (0.69 vs 0.63; p = 0.01), with no significant effect of group (p = 0.62) or group × model interaction (p = 0.61). Likewise, for the food task, there was a significant effect of model (F(1,33) = 4.43, p = 0.04), such that the hyperbolic model fit better than the exponential model (0.79 vs 0.73; p = 0.02), with no significant effect of group (p = 0.11) or group × model interaction (p = 0.77). It is worth noting that the superiority of the hyperbolic over the exponential model in describing discounting behavior applied to healthy participants as well as patients with mOFC lesions. This finding indicates that lesions to mOFC steepened the discounting function, but did not alter it in any other way (e.g., shape). Together with the lack of evidence for inconsistent preference in this patient population (see Results), this finding strongly suggests that mOFC patients' behavior was truly reflective of TD, and not poor task comprehension or idiosyncratic preferences. Given the superiority of the hyperbolic over the exponential model in describing discounting behavior, hyperbolic k values were adopted as measures of TD. The hyperbolic k constants were normally distributed after log-transformation (Kolmogorov–Smirnov d < 0.14, p > 0.2 in all cases), and therefore, comparisons were performed using parametric statistical tests.

Estimation of AUC.

Although hyperbolic functions captured participants' TD behavior relatively well, we also obtained AUC as an additional index of TD rate that, unlike k, does not depend on theoretical models regarding the shape of the discounting function (Myerson et al., 2001; Johnson and Bickel, 2008). Briefly, delays and subjective values were first normalized. Delays were expressed as a proportion of the maximum delay (360 d), and subjective values were expressed as a proportion of the delayed amount (40 units). Delays and subjective values were then plotted as x and y coordinates, respectively, to construct a discounting curve. Vertical lines were drawn from each x value to the curve, subdividing the area under the curve into a series of trapezoids. The area of each trapezoid was calculated as (x2 − x1)(y1 + y2)/2, where x1 and x2 are successive delays, and y1 and y2 are the subjective values associated with these delays (Myerson et al., 2001). The AUC is the sum of the areas of all the trapezoids. The AUC varies between 0 and 1. The smaller the AUC, the steeper the TD, the more participants were inclined to choose small-immediate rewards over larger-delayed rewards. The AUC scores were normally distributed (Kolmogorov–Smirnov d < 0.12, p > 0.2 in all cases), and therefore, comparisons were performed using parametric statistical tests.
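The trapezoid computation can be illustrated with the short Python sketch below. The function name and the example indifference points are hypothetical. The sketch also assumes that the curve is anchored at an undiscounted point (delay 0, value 1), which is what allows the AUC to reach 1 when no discounting occurs; the text above does not state this explicitly, so the anchor can be switched off with `include_origin=False`.

```python
# Delays in days; treating a month as 30 days is an assumption of this sketch.
DELAYS = [2, 14, 30, 90, 180, 360]


def discounting_auc(delays, subjective_values, max_delay=360.0,
                    delayed_amount=40.0, include_origin=True):
    """Area under the normalized empirical discounting curve (trapezoid rule)."""
    xs = [d / max_delay for d in delays]                  # proportion of maximum delay
    ys = [v / delayed_amount for v in subjective_values]  # proportion of delayed amount
    if include_origin:
        # Assumption: an undiscounted point at zero delay anchors the curve.
        xs, ys = [0.0] + xs, [1.0] + ys
    area = 0.0
    for i in range(1, len(xs)):
        # (x2 - x1)(y1 + y2)/2 for each pair of successive delays
        area += (xs[i] - xs[i - 1]) * (ys[i - 1] + ys[i]) / 2.0
    return area


# Made-up indifference points (in units of the 40-unit delayed reward).
shallow = discounting_auc(DELAYS, [39, 37, 34, 30, 26, 22])
steep = discounting_auc(DELAYS, [30, 18, 12, 6, 4, 2])
print(shallow, steep)  # the steeper discounter has the smaller area
```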

Results

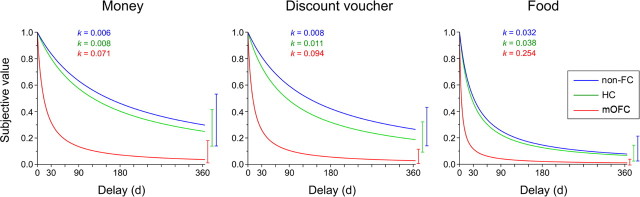

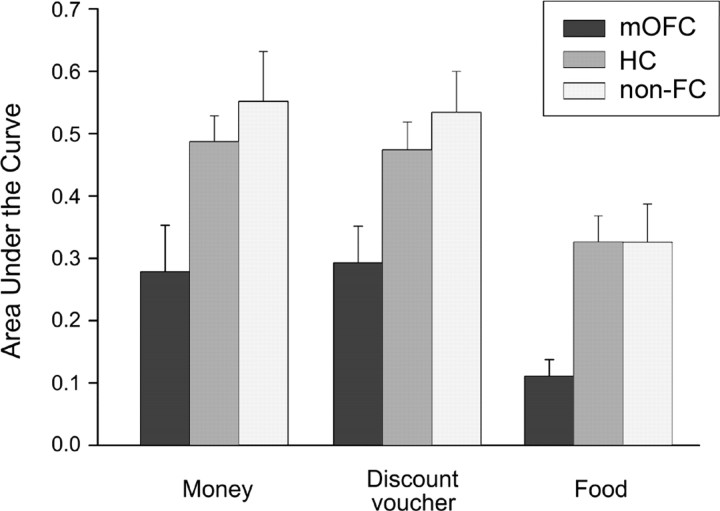

Figure 3 shows TD curves by participant group and delay. The k value for each curve reflects the geometric mean of the group, which corresponds to the mean of the log-transformed values, and thus provides a better measure of central tendency for positively skewed metrics, such as TD rates, than does the arithmetic mean. Figure 4 shows the AUC for each participant group and type of reward. As is evident, TD curves were steeper and the AUC was smaller in mOFC patients compared with non-FC patients and normal controls (HC), suggesting that mOFC patients had an increased tendency to discount future rewards compared with the control groups. For example, on average, 40 € delayed by 1 month were worth ∼32 € now for normal controls, but only 12 € for mOFC patients (losing ∼70% of their value). Figures 3 and 4 also highlight that TD of food was steeper than TD of money and discount vouchers across groups. These impressions were confirmed by ANOVA analyses.
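The relation between the geometric mean and the mean of log-transformed values can be made concrete with a brief sketch. The k values below are invented for illustration and the function name is hypothetical; the use of base-10 logarithms is also an assumption, although the result does not depend on the base.

```python
import math


def geometric_mean_k(k_values):
    """Back-transform the mean of log10(k) to obtain the geometric mean."""
    mean_log = sum(math.log10(k) for k in k_values) / len(k_values)
    return 10 ** mean_log


# Three made-up discounting rates spanning two orders of magnitude.
ks = [0.001, 0.01, 0.1]
geometric = geometric_mean_k(ks)
arithmetic = sum(ks) / len(ks)
print(geometric, arithmetic)  # the arithmetic mean is pulled toward the largest k
```

Here the geometric mean sits at the middle value (0.01), whereas the arithmetic mean (0.037) is dominated by the single steepest discounter, which is why the former is preferred for positively skewed rates.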

Figure 3.

Temporal discounting functions by participant group (mOFC, non-FC, HC) and type of reward. The hyperbolic curves describe the discounting of subjective value (expressed as a proportion of the delayed amount) as a function of time (days). The discounting parameter k reflects the geometric mean of the group (mean of the log-transformed values). Confidence intervals are 95% intervals.

Figure 4.

Area under the empirical discounting curve by participant group (mOFC, non-FC, HC) and type of reward. The error bars indicate the SEM.

k

An ANOVA on log-transformed k values with group (mOFC, non-FC, and HC) as a between-subject factor, and task (money, food, discount voucher) as a within-subject factor yielded a significant effect of group (F(2,33) = 8.56, p = 0.001). Post hoc comparisons, performed with the Newman–Keuls test, showed that TD was steeper in mOFC patients compared with non-FC patients (−0.92 vs −1.93; p = 0.0006) and HC (−0.92 vs −1.80; p = 0.0009), whereas no significant difference was detected between non-FC patients and HC (p = 0.61). Moreover, there was a significant effect of task (F(2,66) = 14.85, p = 0.000005), indicating that TD of food was steeper than TD of money (−1.16 vs −1.80; p = 0.0001) and discount vouchers (−1.16 vs −1.69; p = 0.0001), whereas no significant difference emerged between TD of money and discount vouchers (p = 0.30). There was no significant group × task interaction (p = 0.98). Group differences in TD were confirmed when the ANOVA was run on data from the money and discount voucher tasks only (see supplemental material, available at www.jneurosci.org), and using nonparametric tests (see supplemental material, available at www.jneurosci.org).

AUC

Similar results were obtained using AUC as the dependent variable. An ANOVA on AUC scores with group and task as factors yielded a significant effect of group (F(2,33) = 5.90, p = 0.006). Post hoc comparisons, performed with the Newman–Keuls test, showed that AUC was smaller (i.e., TD was steeper) in mOFC patients compared with non-FC patients (0.22 vs 0.47; p = 0.003) and HC (0.22 vs 0.42; p = 0.01), whereas no significant difference was detected between non-FC and HC (p = 0.54). There was a significant effect of task (F(2,66) = 16.86, p = 0.000001), indicating steeper TD of food than money (0.25 vs 0.44; p = 0.0001) and discount vouchers (0.25 vs 0.43; p = 0.0001), with no significant difference between TD of money and discount vouchers (p = 0.86). No significant group × task interaction emerged (p = 0.91). Group differences in TD were confirmed when the ANOVA was run on data from the money and discount voucher tasks only (see supplemental material, available at www.jneurosci.org), and using nonparametric tests (see supplemental material, available at www.jneurosci.org).

TD and mOFC

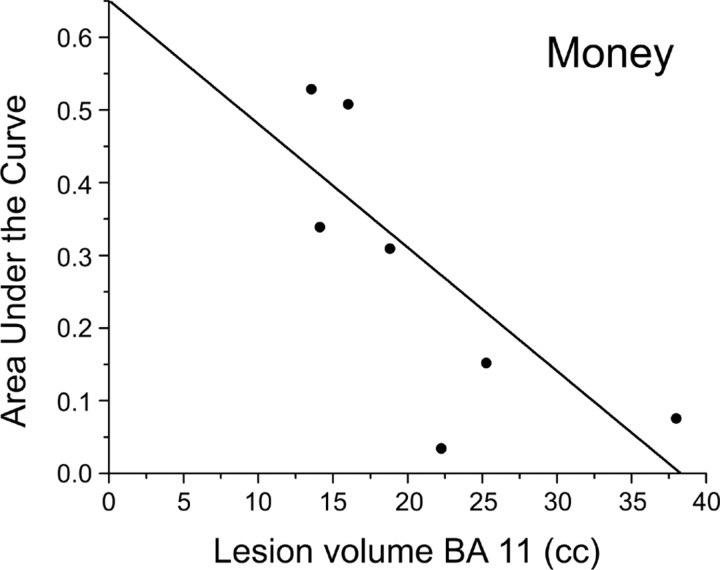

We investigated whether TD rate in mOFC patients correlated with lesion volume. As anticipated, brain lesions in mOFC patients overlapped maximally in BAs 11, 10, and 32. By using partial correlation analyses, we investigated the relation between AUC scores and lesion volume in each of the three BAs, partialing out the effect of lesion volume in the other two BAs. We found that lesion volume in BA 11 correlated significantly with AUC scores for money (r = −0.91; p = 0.01, two-tailed; Fig. 5), and, marginally, with AUC scores for food (r = −0.83; p = 0.056, two-tailed) and discount vouchers (r = −0.78; p = 0.08, two-tailed): the larger the lesion in BA 11, the steeper the TD. We also observed a marginal correlation between lesion volume in BA 32 and AUC scores for money (r = −0.81; p = 0.07, two-tailed). No other correlations were significant (p > 0.15 in all cases). Although these results should be taken with caution due to the small sample size, they suggest that BA 11 of mOFC may play a privileged role in governing TD across types of reward.
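The logic of partialing out lesion volume in the other two areas can be sketched with the standard recursive formula for partial correlations. This is an illustration under stated assumptions, not the analysis code used in the study: the function names are hypothetical, and the numbers are synthetic values constructed so that the outcome depends linearly on all three predictors, in which case the partial correlation with any one of them is exactly ±1.

```python
import math


def pearson(x, y):
    """Pearson product-moment correlation."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def partial_corr(x, y, controls):
    """Correlation of x and y with every variable in `controls` partialed out."""
    if not controls:
        return pearson(x, y)
    z, rest = controls[-1], controls[:-1]
    r_xy = partial_corr(x, y, rest)
    r_xz = partial_corr(x, z, rest)
    r_yz = partial_corr(y, z, rest)
    return (r_xy - r_xz * r_yz) / math.sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Synthetic lesion volumes (cc) for seven hypothetical patients; not study data.
ba11 = [21, 30, 12, 25, 18, 9, 32]
ba10 = [12, 10, 15, 9, 14, 16, 11]
ba32 = [5, 2, 9, 4, 1, 7, 6]
# Synthetic outcome built as an exact linear function of the three volumes.
auc = [1 - 0.02 * a + 0.01 * b + 0.005 * c for a, b, c in zip(ba11, ba10, ba32)]

r = partial_corr(ba11, auc, [ba10, ba32])
print(r)  # approximately -1, because the synthetic outcome is noise-free
```

With only seven observations and two covariates, a second-order partial correlation leaves few degrees of freedom, which is one reason such estimates warrant the caution noted above.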

Figure 5.

Scatter plot of the correlation between lesion volume in BA 11 and degree of TD for money (AUC scores).

Self-reports of impulsivity

mOFC patients' self-reports did not reflect significantly higher levels of impulsivity than those of non-FC patients and healthy controls (F(2,33) = 2.18; p = 0.13). Separate analysis on scores from the three subscales of the BIS-11 also failed to yield statistically significant group differences (AI subscale: F(2,33) = 1.91; p = 0.16; INP subscale: F(2,33) = 2.85; p = 0.08; MI subscale: F(2,33) = 2.64; p = 0.09), although the results trended toward more impulsive nonplanning and motor impulsivity in mOFC patients than in controls. This finding was surprising in light of mOFC patients' poor valuation of the future in the TD tasks. It is important to note, however, that self-reports assess awareness of a behavior, which may dissociate from more objective measures of the same behavior (Schooler, 2002), especially in patients with damage to ventromedial prefrontal regions (Ciaramelli and Ghetti, 2007; Modirrousta and Fellows, 2008), who may lack self-insight (Barrash et al., 2000; Beer et al., 2006). mOFC patients, therefore, may have had problems introspecting on their impulsive behaviors.

The results indicate that mOFC patients discounted delayed rewards more steeply than normal controls. Crucially, large TD rates were not a general consequence of brain damage. Patients with lesions outside the frontal lobe, indeed, did not exhibit TD rates different from normal controls. These findings argue for a necessary role of mOFC for valuation of future rewards. Before discussing these results further, it is important to rule out the possibility that differences in TD rates between mOFC patients and control groups depended on factors other than TD, such as poor comprehension of the task, or the presence of inconsistent preferences in mOFC patients. The fact that hyperbolic functions described TD behavior equally well in mOFC patients and control groups, and better than did exponential functions, argues against this possibility: mOFC patients' TD behavior obeyed the typical (hyperbolic) curves, though reflecting a reliable increase in the parameter k. As a more direct (and model-free) test for the ability to perform the task, we counted the number of inconsistent preferences participants had evinced. By definition, TD behavior should result in a monotonic decrease of the subjective value of the future outcome with delay (Johnson and Bickel, 2008). That is, if R1 is the subjective value of a reward R delivered at delay t1, R2 is the subjective value of R delivered at delay t2, and t2 > t1, then it is expected that R2 < R1. As a consequence, subjects exhibit inconsistent preference when the subjective value of the future outcome at a given delay is greater than that at the preceding delay, i.e., R2 > R1 (Johnson and Bickel, 2008). To allow variability in the data, we considered as indicative of inconsistent preferences only those data points in which the subjective value of a reward exceeded that at the preceding delay by >10% of the future outcome, i.e., R2 > R1 + R/10, as recommended by Johnson and Bickel (2008). The mean number of inconsistent preferences was small, and comparable across participant groups (money task: HC, 0.40; mOFC, 0.57; non-FC, 0.44, F(2,33) = 0.15, p = 0.86; discount voucher task: HC, 0.75; mOFC, 0.42; non-FC, 0.55, F(2,33) = 0.57, p = 0.56; food task: HC, 0.55; mOFC, 0.57; non-FC, 0.33, F(2,33) = 0.33, p = 0.71). This held even if all deviations from a monotonically decreasing function were counted as inconsistent preferences, regardless of their magnitude, i.e., R2 > R1 (see supplemental material, available at www.jneurosci.org). Moreover, no participant in any group followed a response heuristic, such as always selecting the larger-delayed amount or the smaller-immediate amount across delay and reward conditions, regardless of the options at stake. Together, the findings that lesion to mOFC did not result in changes to the shape of the discounting function aside from its steepness, inconsistent preferences, or response heuristics, strongly suggest that mOFC patients' behavior was indeed reflective of increased TD, and not poor task comprehension or idiosyncratic preferences.
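The counting rule for inconsistent preferences (R2 > R1 + R/10) can be written as a short function. The function name and the example indifference points are hypothetical; setting `tolerance=0.0` gives the stricter count in which every increase in subjective value from one delay to the next is treated as inconsistent.

```python
def count_inconsistent(subjective_values, delayed_amount=40.0, tolerance=0.10):
    """Count delays at which subjective value rises by more than the tolerance.

    `subjective_values` must be ordered from the shortest to the longest delay.
    """
    threshold = tolerance * delayed_amount   # R/10 when tolerance is 0.10
    pairs = zip(subjective_values, subjective_values[1:])
    return sum(1 for r1, r2 in pairs if r2 > r1 + threshold)


# Made-up indifference points across the six delays (units of the reward).
points = [35, 30, 33, 20, 26, 10]
print(count_inconsistent(points))                 # 1: only the rise from 20 to 26 exceeds 4 units
print(count_inconsistent(points, tolerance=0.0))  # 2: the rise from 30 to 33 now counts as well
```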

One further aspect of the present study deserves attention. Participants did not receive the actual consequences of their choices, but instead made choices about hypothetical rewards. Hypothetical outcomes have the advantage of allowing the use of reward amounts and delays that are large enough to be meaningful to participants, which are generally infeasible in studies involving real outcomes (Frederick et al., 2002; Jimura et al., 2009). Hypothetical outcomes, on the other hand, have the disadvantage that people may not be motivated to, or capable of, accurately predicting what they would do if outcomes were real (Frederick et al., 2002; Jimura et al., 2009). For this reason, although there is, as of yet, no evidence that hypothetical rewards are discounted differently from real rewards, either in terms of the degree of TD (Johnson and Bickel, 2002), the shape of TD curves (Kirby and Herrnstein, 1995; Kirby and Marakovic, 1995; Johnson and Bickel, 2002), or the neural bases of TD (Bickel et al., 2009), we conducted a corollary investigation of TD for money in mOFC patients and healthy controls using real rewards. We confirmed our results: mOFC patients discounted real monetary rewards more steeply than controls (see supplemental material, available at www.jneurosci.org).

Discussion

The present study investigated the role of mOFC in intertemporal choice. Patients with lesions in the mOFC, control patients with lesions outside the frontal lobe, and healthy individuals made a series of hypothetical choices between small-immediate rewards and larger-delayed rewards. Since decisions about money are not necessarily representative of all decisions, and to examine the role of the mOFC across a wide range of contexts, we varied the type of outcome, which included both primary (food) and secondary (money and discount vouchers) rewards. The study yielded two main findings. Lesions to the mOFC significantly increased the preference for small-immediate over larger-delayed rewards, resulting in steeper TD of future rewards in mOFC patients compared with the control groups. This finding held for both primary and secondary rewards. Damage to the mOFC, however, did not alter the normal tendency to discount different types of rewards at different rates, such that food was discounted more steeply than money and discount vouchers across groups.

Our primary finding that damage to mOFC caused steep TD of future outcomes is consistent with a large body of literature. Single-neuron studies of OFC in rodents (Roesch et al., 2006) show that the neural response to reward is affected by the delay preceding its delivery. Moreover, disruption of the OFC in animals affects discounting of future rewards (Mobini et al., 2002; Cardinal et al., 2004; Winstanley et al., 2004; Rudebeck et al., 2006). In humans, fMRI studies have detected consistent activation of mOFC and adjacent medial prefrontal cortex during intertemporal choice (McClure et al., 2004, 2007; Kable and Glimcher, 2007). Our results confirm and extend this work by providing, for the first time, evidence for a necessary role of mOFC in valuing delayed outcomes in humans.

Although fMRI studies have reported wide activation of ventromedial prefrontal areas during TD tasks, there may be heterogeneity in the causal involvement of different regions within ventromedial prefrontal cortex in TD behavior. A previous neuropsychological investigation in patients with lesions to ventromedial prefrontal cortex found no deficit in TD (Fellows and Farah, 2005). In that study, patients' lesions overlapped maximally in medial prefrontal cortex, and some patients had spared OFC. Together with our findings, this null effect raises the possibility that mOFC, but not medial prefrontal cortex, is necessary for normal discounting behavior. Consistent with this proposal, ablation studies in animals have found that lesions in the OFC, but not medial prefrontal cortex, affect reward-based decision-making (Noonan et al., 2010) and increase delay discounting (Rudebeck et al., 2006). Moreover, in our study, lesion volume in BA 11 of mOFC showed the strongest association with behavior. This proposal, of course, will need to be tested empirically in future studies.

The present results have important implications for current neurobiological models of intertemporal choice. According to the β–δ model (McClure et al., 2004, 2007), limbic areas, including the mOFC, ventral striatum, and posterior cingulate cortex, form an impulsive (β) system that places special weight on immediate rewards, whereas a more providential cognitive (δ) system, based in the lateral prefrontal cortex and posterior parietal cortex, is more engaged in patient choices. During intertemporal choice, activation of the β system would favor the immediate option, whereas activation of the δ system would favor the delayed option (McClure et al., 2004). Our finding that damage to the mOFC increases impatient choices is not in line with the hypothesis that mOFC acts as a neuroanatomical correlate of the impulsive system (McClure et al., 2004). Were this the case, lesions to mOFC should lead to a weakening of the β system relative to the δ system, and, consequently, more patient choices.
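The logic of this prediction can be sketched with the quasi-hyperbolic (β–δ) discount function on which the model is based: an immediate reward keeps its full value, whereas a reward delayed by t periods is weighted by β·δ^t, with β ≤ 1 capturing the extra weight on immediacy. The sketch below is a minimal illustration, not the authors' analysis. Treating a "weakened β system" as β moving closer to 1 is an interpretive assumption, and the function names and parameter values are hypothetical.

```python
def beta_delta_value(amount, delay, beta, delta):
    """Quasi-hyperbolic value: undiscounted if immediate, else beta * delta**delay * amount."""
    if delay == 0:
        return amount
    return beta * (delta ** delay) * amount


def chooses_delayed(immediate, delayed, delay, beta, delta):
    """True if the delayed option has the higher discounted value."""
    return beta_delta_value(delayed, delay, beta, delta) > beta_delta_value(immediate, 0, beta, delta)


DELTA = 0.99  # hypothetical per-day exponential discount factor

# $5 now vs $15 in 7 days, under strong vs weak present bias (hypothetical betas)
strong_present_bias = chooses_delayed(5, 15, 7, beta=0.3, delta=DELTA)
weak_present_bias = chooses_delayed(5, 15, 7, beta=0.9, delta=DELTA)
print(strong_present_bias, weak_present_bias)
```

With these assumed values, reducing present bias (β closer to 1) shifts the choice toward the delayed option. This is the more patient behavior that the β–δ account would predict after mOFC damage, and the opposite of what was observed.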

Our results can be understood in the context of an alternative model of intertemporal choice (Kable and Glimcher, 2007, 2010; Peters and Büchel, 2009), according to which a unitary system, encompassing mOFC and adjacent medial prefrontal cortex, ventral striatum, and posterior cingulate cortex, represents the value of both immediate and delayed rewards, and is subject to top-down control by lateral prefrontal cortex (Hare et al., 2009; Figner et al., 2010). Within this network, the mOFC is thought to signal the subjective value of expected outcomes during choice (Rudebeck et al., 2006; Schoenbaum et al., 2006, 2009; Murray et al., 2007; Rushworth et al., 2007; Talmi et al., 2009), by integrating different kinds of information and concerns (e.g., magnitude, delays) into a common “neural currency” (Montague and Berns, 2002).

We propose two possible mechanisms through which mOFC may contribute to valuation and preference of future outcomes. Ventromedial prefrontal cortex regions, including mOFC, are at the core of a network of brain regions involved in self-projection (Buckner and Carroll, 2007; Andrews-Hanna et al., 2010). During intertemporal choice, mOFC may allow individuals to simulate future experiences associated with rewards, and upregulate valuation of future options based on the resulting (positive) affective states (Bechara, 2005). Damage to mOFC would therefore lead to a degraded representation of the delayed reward. Further, mOFC is the target of top-down signals from lateral prefrontal cortex promoting “rational” decision-making and self-control over immediate gratification (Christakou et al., 2009; Hare et al., 2009; Figner et al., 2010). Damage to the mOFC, therefore, would prevent lateral prefrontal signals from influencing behavior. Poor mental time travel and/or poor self-control arguably result in problems anticipating, or adapting behavior to, the long-term consequences of decisions (i.e., “myopia for the future”) (Damasio, 1994; Bechara, 2005).

This suggestion is compatible with a theory of impulse control proposed by Bechara (Bechara, 2005; Bechara and Van Der Linden, 2005), according to which regions in the ventromedial prefrontal cortex, including mOFC, weight the long-term prospect of a given choice during decision-making (Schoenbaum et al., 2009), while the amygdala and ventral striatum signal the immediate prospect of pain or pleasure (Bechara and Damasio, 2005; Kringelbach, 2005). Impulsive behavior would emerge as the result of an imbalance between competing signals, favoring valuation of immediate over future outcomes. Indeed, the evidence of abnormally steep TD in mOFC patients is in accord with previous evidence that, in the Iowa Gambling Task, mOFC patients make impulsive, “shortsighted” choices that secure (monetary) gains in the short term but prove disadvantageous in the long term (Bechara et al., 1997; Berlin et al., 2004; Anderson et al., 2006).

Though generally more impulsive than controls, mOFC patients retained the same tendency as controls to discount food more steeply than money and discount vouchers. This finding confirms previous evidence that TD depends strongly on reward type (McClure et al., 2007): delayed monetary rewards are discounted less steeply than directly consumable rewards (Odum and Rainaud, 2003; Estle et al., 2007; Rosati et al., 2007; Charlton and Fantino, 2008). Why this difference occurs is not entirely clear. People may discount delayed money less steeply than consumable rewards because money can be stored, can be exchanged for other primary and secondary reinforcers (Catania, 1998), and retains its utility despite fluctuations of desire and changes in the internal state of the organism. In the present study, the interpretation of differences in TD for primary versus secondary rewards is complicated by the fact that the delays and amounts used, though comparable to those used in previous research (Odum and Rainaud, 2003; Estle et al., 2007; Charlton and Fantino, 2008), may have been better suited to the assessment of TD for money than for food (Jimura et al., 2009). For example, 40 chocolate bars may exceed the point of satiation and may therefore be less appetitive than 40 units of money.

Although further, ad hoc research is needed to clarify the reason for increased TD of food compared with money, our finding that mOFC patients discounted all types of reward more steeply than controls, and that differences in TD rates between mOFC patients and controls were not modulated by the type of reward, is important in several respects. First, it reinforces the suggestion that the role of the mOFC during valuation and choice is generalized across a wide range of stimuli and contexts, ranging from primary to secondary rewards (Chib et al., 2009; FitzGerald et al., 2009; Hare et al., 2010). Second, it is consistent with the hypothesis that the mOFC is necessary to encode the prospective value of available goods in order to choose between them (Padoa-Schioppa and Assad, 2006), but not to encode the incentive value of a stimulus per se, regardless of whether an economic choice is required (for review, see O'Doherty, 2004). Indeed, in experiments that compared conditions in which subjects did or did not make a choice, mOFC was significantly more active in the choice condition (Arana et al., 2003). By contrast, neural responses in the amygdala were related to incentive value, and independent of behavioral choice. Finally, the preserved effect of reward type on TD after mOFC lesion rules out the possibility that the increased choice of smaller-immediate rewards in mOFC patients simply resulted from poor motor impulse control (Bechara and Van Der Linden, 2005): mOFC patients in the money task faced a situation identical to that in the food task, yet showed increased willingness to wait for a larger reward.

To conclude, we have shown, for the first time in humans, that damage to mOFC causes abnormally steep TD of delayed rewards. This finding indicates that mOFC is necessary for optimal weighting of future outcomes during intertemporal choice. mOFC may be crucial to form vivid representations of future outcomes, capable of competing with immediate ones, or to incorporate top-down signals promoting resistance to immediate gratification in the valuation process, ultimately extending the reach of humans' choices into the future.

Footnotes

This work was supported by a Programmi di Ricerca Scientifica di Rilevante Interesse Nazionale (PRIN) grant (no. 2008YTNXXZ) from Ministero dell'Istruzione dell'Università e della Ricerca to G.d.P., and a Marie Curie individual fellowship (OIF 40575) to E.C. Comments of Andrea Serino, Julia Spaniol, Davide Dragone, and two anonymous reviewers improved an earlier version of this manuscript.

References

- Ainslie G. Specious reward: a behavioral theory of impulsiveness and impulse control. Psychol Bull. 1975;82:463–496. doi: 10.1037/h0076860.

- Ainslie GW. Impulse control in pigeons. J Exp Anal Behav. 1974;21:485–489. doi: 10.1901/jeab.1974.21-485.

- Anderson SW, Barrash J, Bechara A, Tranel D. Impairments of emotion and real-world complex behavior following childhood- or adult-onset damage to ventromedial prefrontal cortex. J Int Neuropsychol Soc. 2006;12:224–235. doi: 10.1017/S1355617706060346.

- Andrews-Hanna JR, Reidler JS, Sepulcre J, Poulin R, Buckner RL. Functional-anatomic fractionation of the brain's default network. Neuron. 2010;65:550–562. doi: 10.1016/j.neuron.2010.02.005.

- Arana FS, Parkinson JA, Hinton E, Holland AJ, Owen AM, Roberts AC. Dissociable contributions of the human amygdala and orbitofrontal cortex to incentive motivation and goal selection. J Neurosci. 2003;23:9632–9638. doi: 10.1523/JNEUROSCI.23-29-09632.2003.

- Ballard K, Knutson B. Dissociable neural representations of future reward magnitude and delay during temporal discounting. Neuroimage. 2009;45:143–150. doi: 10.1016/j.neuroimage.2008.11.004.

- Barrash J, Tranel D, Anderson SW. Acquired personality disturbances associated with bilateral damage to the ventromedial prefrontal region. Dev Neuropsychol. 2000;18:355–381. doi: 10.1207/S1532694205Barrash.

- Bechara A. Decision making, impulse control and loss of willpower to resist drugs: a neurocognitive perspective. Nat Neurosci. 2005;8:1458–1463. doi: 10.1038/nn1584.

- Bechara A, Damasio AR. The somatic marker hypothesis: a neural theory of economic decision. Games Econ Behav. 2005;52:336–372.

- Bechara A, Van Der Linden M. Decision-making and impulse control after frontal lobe injuries. Curr Opin Neurol. 2005;18:734–739. doi: 10.1097/01.wco.0000194141.56429.3c.

- Bechara A, Damasio H, Tranel D, Damasio AR. Deciding advantageously before knowing the advantageous strategy. Science. 1997;275:1293–1295. doi: 10.1126/science.275.5304.1293.

- Beer JS, John OP, Scabini D, Knight RT. Orbitofrontal cortex and social behavior: integrating self-monitoring and emotion-cognition interactions. J Cogn Neurosci. 2006;18:871–879. doi: 10.1162/jocn.2006.18.6.871.

- Berlin HA, Rolls ET, Kischka U. Impulsivity, time perception, emotion and reinforcement sensitivity in patients with orbitofrontal cortex lesions. Brain. 2004;127:1108–1126. doi: 10.1093/brain/awh135.

- Bickel WK, Pitcock JA, Yi R, Angtuaco EJ. Congruence of BOLD response across intertemporal choice conditions: fictive and real money gains and losses. J Neurosci. 2009;29:8839–8846. doi: 10.1523/JNEUROSCI.5319-08.2009.

- Buckner RL, Carroll DC. Self-projection and the brain. Trends Cogn Sci. 2007;11:49–57. doi: 10.1016/j.tics.2006.11.004.

- Cardinal RN, Winstanley CA, Robbins TW, Everitt BJ. Limbic corticostriatal systems and delayed reinforcement. Ann N Y Acad Sci. 2004;1021:33–50. doi: 10.1196/annals.1308.004.

- Catania AC. Learning. Ed 4. Upper Saddle River, NJ: Prentice Hall; 1998.

- Charlton SR, Fantino E. Commodity specific rates of temporal discounting: does metabolic function underlie differences in rates of discounting? Behav Processes. 2008;77:334–342. doi: 10.1016/j.beproc.2007.08.002.

- Chib VS, Rangel A, Shimojo S, O'Doherty JP. Evidence for a common representation of decision values for dissimilar goods in human ventromedial prefrontal cortex. J Neurosci. 2009;29:12315–12320. doi: 10.1523/JNEUROSCI.2575-09.2009.

- Christakou A, Brammer M, Giampietro V, Rubia K. Right ventromedial and dorsolateral prefrontal cortices mediate adaptive decisions under ambiguity by integrating choice utility and outcome evaluation. J Neurosci. 2009;29:11020–11028. doi: 10.1523/JNEUROSCI.1279-09.2009.

- Ciaramelli E, Ghetti S. What are confabulators' memories made of? A study of subjective and objective measures of recollection in confabulation. Neuropsychologia. 2007;45:1489–1500. doi: 10.1016/j.neuropsychologia.2006.11.007.

- Damasio AR. Descartes' error: emotion, reason, and the human brain. New York: Putnam; 1994.

- Du W, Green L, Myerson J. Cross-cultural comparisons of discounting delayed and probabilistic rewards. Psychol Rec. 2002;52:479–492.

- Estle SJ, Green L, Myerson J, Holt DD. Discounting of monetary and directly consumable rewards. Psychol Sci. 2007;18:58–63. doi: 10.1111/j.1467-9280.2007.01849.x.

- Fellows LK, Farah MJ. Dissociable elements of human foresight: a role for the ventromedial frontal lobes in framing the future, but not in discounting future rewards. Neuropsychologia. 2005;43:1214–1221. doi: 10.1016/j.neuropsychologia.2004.07.018.

- Figner B, Knoch D, Johnson EJ, Krosch AR, Lisanby SH, Fehr E, Weber EU. Lateral prefrontal cortex and self-control in intertemporal choice. Nat Neurosci. 2010;13:538–539. doi: 10.1038/nn.2516.

- FitzGerald TH, Seymour B, Dolan RJ. The role of human orbitofrontal cortex in value comparison for incommensurable objects. J Neurosci. 2009;29:8388–8395. doi: 10.1523/JNEUROSCI.0717-09.2009.

- Folstein MF, Folstein SE, McHugh PR. “Mini-mental state”: a practical method for grading the cognitive state of patients for the clinician. J Psychiatr Res. 1975;12:189–198. doi: 10.1016/0022-3956(75)90026-6.

- Fossati A, Di Ceglie A, Acquarini E, Barratt ES. Psychometric properties of an Italian version of the Barratt impulsiveness scale-11 (BIS-11) in nonclinical subjects. J Clin Psychol. 2001;57:815–828. doi: 10.1002/jclp.1051.

- Frederick S, Loewenstein G, O'Donoghue T. Time discounting and time preference: a critical review. J Econ Lit. 2002;40:351–401.

- Green L, Myerson J. A discounting framework for choice with delayed and probabilistic rewards. Psychol Bull. 2004;130:769–792. doi: 10.1037/0033-2909.130.5.769.

- Hare TA, Camerer CF, Rangel A. Self-control in decision-making involves modulation of the vmPFC valuation system. Science. 2009;324:646–648. doi: 10.1126/science.1168450.

- Hare TA, Camerer CF, Knoepfle DT, Rangel A. Value computations in ventral medial prefrontal cortex during charitable decision making incorporate input from regions involved in social cognition. J Neurosci. 2010;30:583–590. doi: 10.1523/JNEUROSCI.4089-09.2010.

- Holt DD, Green L, Myerson J. Is discounting impulsive? Evidence from temporal and probability discounting in gambling and non-gambling college students. Behav Processes. 2003;64:355–367. doi: 10.1016/s0376-6357(03)00141-4.

- International Committee of Medical Journal Editors. Statements from the Vancouver group. Br Med J. 1991;302:1194.

- Jimura K, Myerson J, Hilgard J, Braver TS, Green L. Are people really more patient than other animals? Evidence from human discounting of real liquid rewards. Psychon Bull Rev. 2009;16:1071–1075. doi: 10.3758/PBR.16.6.1071.

- Johnson MW, Bickel WK. Within-subject comparison of real and hypothetical money rewards in delay discounting. J Exp Anal Behav. 2002;77:129–146. doi: 10.1901/jeab.2002.77-129.

- Johnson MW, Bickel WK. An algorithm for identifying nonsystematic delay-discounting data. Exp Clin Psychopharmacol. 2008;16:264–274. doi: 10.1037/1064-1297.16.3.264.

- Kable JW, Glimcher PW. The neural correlates of subjective value during intertemporal choice. Nat Neurosci. 2007;10:1625–1633. doi: 10.1038/nn2007.

- Kable JW, Glimcher PW. An “as soon as possible” effect in human intertemporal decision making: behavioral evidence and neural mechanisms. J Neurophysiol. 2010;103:2513–2531. doi: 10.1152/jn.00177.2009.

- Kirby KN, Herrnstein RJ. Preference reversals due to myopic discounting of delayed reward. Psychol Sci. 1995;6:83–89.

- Kirby KN, Marakovic NN. Modeling myopic decisions: evidence for hyperbolic delay-discounting within subjects and amounts. Organ Behav Hum Dec. 1995;64:22–30.

- Kirby KN, Petry NM. Heroin and cocaine abusers have higher discount rates for delayed rewards than alcoholics or non-drug-using controls. Addiction. 2004;99:461–471. doi: 10.1111/j.1360-0443.2003.00669.x.

- Kringelbach ML. The human orbitofrontal cortex: linking reward to hedonic experience. Nat Rev Neurosci. 2005;6:691–702. doi: 10.1038/nrn1747.

- Luhmann CC. Temporal decision-making: insights from cognitive neuroscience. Front Behav Neurosci. 2009;3:39. doi: 10.3389/neuro.08.039.2009.

- Mazur JE. An adjusting procedure for studying delayed reinforcement. In: Commons ML, Mazur JE, Nevin JA, Rachlin H, editors. Quantitative analyses of behavior: the effect of delay and of intervening events on reinforcement value. Vol 5. Hillsdale, NJ: Erlbaum; 1987. pp. 55–73.

- McClure SM, Laibson DI, Loewenstein G, Cohen JD. Separate neural systems value immediate and delayed monetary rewards. Science. 2004;306:503–507. doi: 10.1126/science.1100907.

- McClure SM, Ericson KM, Laibson DI, Loewenstein G, Cohen JD. Time discounting for primary rewards. J Neurosci. 2007;27:5796–5804. doi: 10.1523/JNEUROSCI.4246-06.2007.

- Mobini S, Body S, Ho MY, Bradshaw CM, Szabadi E, Deakin JF, Anderson IM. Effects of lesions of the orbitofrontal cortex on sensitivity to delayed and probabilistic reinforcement. Psychopharmacology (Berl) 2002;160:290–298. doi: 10.1007/s00213-001-0983-0.

- Modirrousta M, Fellows LK. Medial prefrontal cortex plays a critical and selective role in ‘feeling of knowing’ meta-memory judgments. Neuropsychologia. 2008;46:2958–2965. doi: 10.1016/j.neuropsychologia.2008.06.011.

- Montague PR, Berns GS. Neural economics and the biological substrates of valuation. Neuron. 2002;36:265–284. doi: 10.1016/s0896-6273(02)00974-1.

- Moretti L, Dragone D, di Pellegrino G. Reward and social valuation deficits following ventromedial prefrontal damage. J Cogn Neurosci. 2009;21:128–140. doi: 10.1162/jocn.2009.21011.

- Murray EA, O'Doherty JP, Schoenbaum G. What we know and do not know about the functions of the orbitofrontal cortex after 20 years of cross-species studies. J Neurosci. 2007;27:8166–8169. doi: 10.1523/JNEUROSCI.1556-07.2007.

- Myerson J, Green L. Discounting of delayed rewards: models of individual choice. J Exp Anal Behav. 1995;64:263–276. doi: 10.1901/jeab.1995.64-263.

- Myerson J, Green L, Warusawitharana M. Area under the curve as a measure of discounting. J Exp Anal Behav. 2001;76:235–243. doi: 10.1901/jeab.2001.76-235.

- Myerson J, Green L, Hanson JS, Holt DD, Estle SJ. Discounting delayed and probabilistic rewards: processes and traits. J Econ Psychol. 2003;24:619–635.

- Noonan MP, Sallet J, Rudebeck PH, Buckley MJ, Rushworth MF. Does the medial orbitofrontal cortex have a role in social valuation? Eur J Neurosci. 2010;31:2341–2351. doi: 10.1111/j.1460-9568.2010.07271.x.

- O'Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr Opin Neurobiol. 2004;14:769–776. doi: 10.1016/j.conb.2004.10.016.

- Odum AL, Rainaud CP. Discounting of delayed hypothetical money, alcohol, and food. Behav Processes. 2003;64:305–313. doi: 10.1016/s0376-6357(03)00145-1.

- Padoa-Schioppa C, Assad JA. Neurons in the orbitofrontal cortex encode economic value. Nature. 2006;441:223–226. doi: 10.1038/nature04676.

- Peters J, Büchel C. Overlapping and distinct neural systems code for subjective value during intertemporal and risky decision making. J Neurosci. 2009;29:15727–15734. doi: 10.1523/JNEUROSCI.3489-09.2009.

- Pine A, Seymour B, Roiser JP, Bossaerts P, Friston KJ, Curran HV, Dolan RJ. Encoding of marginal utility across time in the human brain. J Neurosci. 2009;29:9575–9581. doi: 10.1523/JNEUROSCI.1126-09.2009.

- Rachlin H, Raineri A, Cross D. Subjective probability and delay. J Exp Anal Behav. 1991;55:233–244. doi: 10.1901/jeab.1991.55-233.

- Roesch MR, Taylor AR, Schoenbaum G. Encoding of time-discounted rewards in orbitofrontal cortex is independent of value representation. Neuron. 2006;51:509–520. doi: 10.1016/j.neuron.2006.06.027.

- Rorden C, Brett M. Stereotaxic display of brain lesions. Behav Neurol. 2000;12:191–200. doi: 10.1155/2000/421719.

- Rosati AG, Stevens JR, Hare B, Hauser MD. The evolutionary origins of human patience: temporal preferences in chimpanzees, bonobos, and human adults. Curr Biol. 2007;17:1663–1668. doi: 10.1016/j.cub.2007.08.033.

- Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nat Neurosci. 2006;9:1161–1168. doi: 10.1038/nn1756.

- Rushworth MF, Buckley MJ, Behrens TE, Walton ME, Bannerman DM. Functional organization of the medial frontal cortex. Curr Opin Neurobiol. 2007;17:220–227. doi: 10.1016/j.conb.2007.03.001.

- Schoenbaum G, Roesch MR, Stalnaker TA. Orbitofrontal cortex, decision-making and drug addiction. Trends Neurosci. 2006;29:116–124. doi: 10.1016/j.tins.2005.12.006.

- Schoenbaum G, Roesch MR, Stalnaker TA, Takahashi YK. A new perspective on the role of the orbitofrontal cortex in adaptive behaviour. Nat Rev Neurosci. 2009;10:885–892. doi: 10.1038/nrn2753.

- Schooler JW. Re-representing consciousness: dissociations between experience and meta-consciousness. Trends Cogn Sci. 2002;6:339–344. doi: 10.1016/s1364-6613(02)01949-6.

- Soman D, Ainslie G, Frederick S, Li X, Lynch J, Moreau P, Mitchell A, Read D, Sawyer A, Trope Y, Wertenbroch K, Zauberman G. The psychology of intertemporal discounting: why are distant events valued differently from proximal ones? Market Lett. 2005;16:347–360.

- Spinnler H, Tognoni G. Standardizzazione e taratura italiana di Test Neuropsicologici. Ital J Neurol Sci. 1987;6(Suppl 8):1–120.

- Talmi D, Dayan P, Kiebel SJ, Frith CD, Dolan RJ. How humans integrate the prospect of pain and reward during choice. J Neurosci. 2009;29:14617–14626. doi: 10.1523/JNEUROSCI.2026-09.2009.

- Winstanley CA, Theobald DE, Cardinal RN, Robbins TW. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci. 2004;24:4718–4722. doi: 10.1523/JNEUROSCI.5606-03.2004.