Abstract

The potential for developmental neurotoxicity (DNT) of environmental chemicals may be evaluated using specific test guidelines from the US Environmental Protection Agency or the Organisation for Economic Cooperation and Development (OECD). These guidelines generate neurobehavioral, neuropathological, and morphometric data that are evaluated by regulatory agencies globally. Data from these DNT guideline studies, or from the more recent OECD extended one-generation reproductive toxicity guideline, play a pivotal role in children’s health risk assessment in different world areas. Regulatory authorities in different countries may interpret data from the same study differently, and these inconsistent evaluations can lead to divergent risk assessment decisions internationally, resulting in regional differences in public health protection or in commercial trade barriers. These issues of data interpretation and reporting are also relevant to juvenile and pre- and postnatal studies conducted more routinely for pharmaceuticals and veterinary medicines. Recommendations are needed that are geared toward the operational needs of the regulatory scientific reviewers who apply these studies in risk assessments, as well as the scientists who generate DNT data sets. The workshops summarized here draw on the experience of authors representing government, industry, contract research organizations, and academia to discuss the scientific issues that have emerged from diverse regulatory evaluations. Although regulatory bodies make different risk management decisions and have labeling requirements that are difficult to harmonize, the workshops provided an opportunity to work toward more harmonized scientific approaches for evaluating DNT data within the context of different regulatory frameworks.
Five speakers and their coauthors with expertise in neurotoxicology, neuropathology, and regulatory toxicology discussed issues of variability, data reporting and analysis, and the expectations for DNT data that regulatory authorities encounter. In addition, principles for harmonized data evaluation were proposed, using guideline DNT data as case studies.

Keywords: Developmental neurotoxicity testing, Extended one-generation reproductive toxicity, Behavior, Learning and memory, Morphometry, Neuropathology

1. Introduction

There is concern globally about the potential for food-use pesticides, pharmaceuticals, and other chemicals to cause neurodevelopmental effects following perinatal exposures (Bjørling-Poulsen et al., 2008; Grandjean and Landrigan, 2014). Neurotoxicity evaluations from studies conducted according to test guidelines of the US Environmental Protection Agency (US EPA, 1998a) and the Organisation for Economic Cooperation and Development (OECD, 2007), as well as the recently finalized extended one-generation reproductive toxicity study (EOGRTS; OECD, 2012a) guideline, may play a pivotal role in children’s health risk assessment. Neurological endpoints such as auditory startle habituation, motor activity, and brain morphometry are now being used in regulatory hazard identification and risk assessments; however, data from the same study may be interpreted differently by regulatory authorities in different countries, leading to differences in public health protection, which may, in turn, lead to commercial trade barriers. The issues of test selection, data interpretation, and reporting for DNT studies are also relevant to juvenile and pre- and postnatal studies (ICH S5-R2, 2005) conducted more routinely for pharmaceuticals.

Previous efforts have provided excellent reviews of some of the important issues that are encountered in DNT studies (Crofton et al., 2008; Holson et al., 2008; Tyl et al., 2008; Raffaele et al., 2008). These reports provide general recommendations for improving data quality, statistical analysis, and interpretation. In addition, there have been useful publications on recommended practices for implementing and evaluating the OECD and US EPA DNT guidelines for neuropathology and morphometry (Garman et al., 2001, 2016; Bolon et al., 2006; Kaufmann and Gröters, 2006; Bolon et al., 2011; Kaufmann, 2011). Despite these resources, there is a need for further development of recommendations geared toward the operational needs of the regulatory scientific reviewers who use these studies in risk assessments, as well as the scientists who generate DNT data sets.

The workshops summarized here used the experience of the authors to discuss several of the functional evaluations and postmortem procedures included in DNT studies as well as a number of issues that have emerged from diverse regulatory evaluations of these studies. Important issues addressed are the need to: (a) articulate the primary biological hypotheses that are being tested by the DNT guidelines, (b) understand which dependent variables and potential interactions are of primary interest in addressing the biological questions, (c) understand inherent variability of measures from studies performed in conformance with regulatory guidelines, (d) identify practical considerations (e.g., test equipment and conduct) that influence the ability to test different biological hypotheses, and (e) illustrate approaches to evaluating DNT data that take into consideration inherent variability, historical control data, maternal toxicity, and appropriate statistical analyses.

These workshops (Regulatory Neurodevelopmental Testing: New Guiding Principles for Harmonization of Data Collection and Analysis) were held at the 2015 annual meeting of the Society of Toxicology (SOT) in San Diego, California, US, and at the 2015 joint meeting of the Teratology Society and the Neurobehavioral Teratology Society (NBTS, now named the Developmental Neurotoxicology Society [DNTS]) in Montreal, Quebec, Canada. Speakers and co-authors were selected to promote exchange of different perspectives and expertise among toxicologists, pathologists, and statisticians from government, industry, and academia (Table 1). This report summarizes issues identified with the performance and interpretation of DNT studies that were addressed at these workshops, with the intent that these discussions will assist in generating improved DNT study data, data analysis, and interpretation. The views expressed in each presentation are summarized in the corresponding section below; these views may not be shared by the other authors.

Table 1.

Contributors to the SOT and TS/NBTS [DNTS] workshops (in order of presentation).

| Name (abbreviation) | Areas of expertise | Affiliation at the time abstracts were submitted to SOT |
|---|---|---|
| Angela Hofstra (AH) | Regulatory Toxicology | Syngenta Canada |
| Francis Bailey (FB) | Regulatory Toxicology | Health Canada Pest Management Regulatory Agency |
| Larry Sheets (LS) | Neurotoxicology, Regulatory Toxicology | Bayer CropScience |
| Wayne Bowers (WB)ᵃ | Neurotoxicology | Health Canada (currently at Dept. Neuroscience, Carleton University) |
| Virginia Moser (VM) | Neurotoxicology | US EPA NHEERL ORD |
| Kathleen Raffaele (KR) | Neurotoxicology, Risk Assessment and Management | US EPA OSWER |
| Thomas Vidmar (TV) | Statistics | BioSTAT Consultants |
| Edmund Lau (EL) | Statistics | Exponent Health Sciences |
| Abby Li (AL)ᵃ | Neurotoxicology, Risk Assessment | Exponent Health Sciences |
| Robert Garman (RG) | Neuropathology | Consultants in Veterinary Pathology, Inc. |
| Brad Bolon (BB) | Neuropathology and Developmental Neurobiology | The Ohio State University, College of Veterinary Medicine (currently at GEMPath, Inc.) |
| Wolfgang Kaufmann (WK) | Neuropathology and Reproductive Toxicology | Merck KGaA, Germany |
| Roland Auer (RA) | Neuropathology and Medicine | Hôpital Ste-Justine, Département de Pathologie, Montreal, Quebec (currently at University of Saskatchewan, Canada) |
| Alan Hoberman (AMH)ᵃ | Developmental, Reproductive and Juvenile Toxicology (DART) | Charles River Laboratory |
| Susan Makris (SM) | Developmental and Reproductive Toxicology, Risk Assessment | US EPA NCEA ORD |

ᵃ Chairpersons for the Society of Toxicology or Teratology Society/NBTS meetings.

The workshops were introduced by brief remarks about the current regulatory need to improve and harmonize the analysis and evaluation of DNT studies. Dr. Angela Hofstra noted that the disparity in the regulatory interpretation of DNT study results is due, in part, to different expectations and interpretations by regulatory agencies about the variability and outcomes of DNT data. In addition, insufficient reporting of methods and data or inadequate data analysis can contribute to differences in regulatory interpretation between jurisdictions. Dr. Larry Sheets provided an overview of DNT study design requirements and the types of testing included in DNT studies, and discussed how experimental design features of regulatory guideline studies may also contribute to data variability. This presentation also provided historical control data for both motor activity and auditory startle from his laboratory, as well as approaches for qualitative evaluation of patterns of motor activity based on historical control data. Dr. Wayne Bowers extended this discussion of behavioral data variability to factors related to testing parameters (e.g., equipment and software settings), age-related differences in motor capacity, and quantitative approaches for analyzing motor activity data. Dr. Kathleen Raffaele collaborated with Drs. Thomas Vidmar, Edmund Lau, and Abby Li to present approaches for hypothesis-driven statistical analysis of continuous behavioral data, using auditory startle data as an example. Using learning and memory as an example, Drs. Virginia Moser and Angela Hofstra highlighted shortcomings in data reporting and analysis encountered by regulatory authorities that influence scientific evaluation. Dr. Moser also discussed approaches for conducting learning and memory tests, an area with little specific guidance. Dr. Li discussed three DNT topics that have affected risk assessment decisions regarding relative sensitivity: (1) quality of maternal toxicity assessment, (2) selection of an adverse-effect level, and (3) quality of linear morphometric methods and neuropathology evaluation (presented in collaboration with pathologists Drs. Robert Garman, Brad Bolon, Wolfgang Kaufmann, and Roland Auer). Portions of Dr. Raffaele’s and Dr. Li’s presentations are captured in separate manuscripts that provide detailed discussion and illustration of optimal approaches for quantitative analysis of behavioral data and for histopathology processing for morphometry measurements (Garman et al., 2016). Consequently, only brief highlights of these two presentations are included in this manuscript. The discussion section captures the main points discussed at the meeting.

Given the significant role of the DNT study in hazard identification and risk assessment to protect the developing fetus, infants, and children, the Health Canada Pest Management Regulatory Agency (PMRA) has been working in partnership with other Health Canada and US EPA DNT experts to develop internal guidance on how DNT studies conducted according to test guidelines should be analyzed and evaluated, based on the practical experience of those who have reviewed them (Table 1). The 2015 workshops at the SOT and Teratology Society/NBTS [DNTS] meetings held in the US and Canada provided an opportunity to extend this discussion to the broader scientific community.

2. Global regulatory perspective on harmonization of scientific approaches (Angela Hofstra)

The DNT study provides information on the potential of chemicals to cause toxicity to the developing nervous system following prenatal and postnatal exposure. Given the role of the DNT study in assessing risk to the developing fetus and the young, it is imperative that it be conducted and interpreted in a scientifically robust and defensible manner. However, both conduct and interpretation are challenging because of the complexity of this study and a lack of familiarity with it. DNT studies conducted according to current guidelines (Table 2) employ multiple assessments at various life stages, and their conduct requires knowledge of developmental toxicity, neurotoxicity, and sophisticated statistics. In addition, DNT studies are conditionally required and, therefore, are conducted less routinely than other standard toxicity studies; consequently, regulatory toxicologists in industry and government may be less familiar with this type of study.

Table 2.

EPA and OECD guidelines for developmental neurotoxicity studies (DNT) and extended one-generation reproductive toxicity studies (EOGRTS).

| Endpoint | EPA 870.6300 (1998a) | OECD 426 (2007) | OECD 443 (2012a) |
|---|---|---|---|
| Test species | Rat | Rat | Rat |
| Exposure parent/F1 | GD6 to PND11 (EPA OPP currently specifies exposure to weaning) | GD6 to weaning | 2 weeks pre-mating to weaning/adult |
| Motor activity | Pre-weaning ontogeny (PND 13, 17, 21) and PND 60 (± 2) | Pre-weaning and adult | Adult |
| Behavioral ontogeny | None (other than motor activity) | 2 measures (“e.g., PND 13, 17, 21” motor activity) | None |
| Clinical observations, body weight | Throughout | Throughout | Adult |
| Motor and sensory function | Auditory startle habituation specified; weaning and ~PND 60 | PND 25 (± 2) and adult | Auditory startle habituation specified; weaning |
| Sexual maturation | As appropriate | As appropriate | As appropriate |
| Learning and memory | Weaning and ~PND 60 | PND 25 (± 2) and adult (PND 60–70) | None |
| Brain weight, neuropathology, brain morphometric analysis | Weaningᵃ (brain only) and adult (brain, spinal cord and peripheral nerves) | PND 22ᵇ (brain only) and adult (brain, spinal cord and peripheral nerves) | Weaning (brain only) and adult (brain, spinal cord and peripheral nerves) |

ᵃ EPA guidelines state PND 11 but EPA accepts OECD guidance.

ᵇ EPA guidelines state PND 11 but EPA accepts PND 21.

The variability of behavioral endpoints in DNT studies is higher than regulators may be accustomed to seeing in the more frequently conducted adult standard toxicity or adult neurotoxicity studies (OECD, 2007 Appendix 1; OECD, 1997). In some cases, the variability range from adult neurotoxicity studies is used as a reference point for DNT studies, but such expectations are not necessarily appropriate. This has led to different interpretations of the biological relevance of changes, including differences from control groups that are not statistically significant, contributing further to divergent regulatory assessments of DNT endpoints from guideline studies around the globe.

Data from DNT studies can be used as the basis for the regulatory point of departure for risk assessment and to determine the magnitude of uncertainty factors. Of particular importance are vulnerable populations (e.g., infants and children), which are addressed on the regulatory/policy side through distinct mechanisms, such as the Food Quality Protection Act (FQPA) safety factor in the United States, the Pest Control Products Act (PCPA) factor in Canada, or hazard classification in the European Union.

Regulatory requirements dictate that Health Canada PMRA must apply the PCPA factor in addition to the traditional uncertainty factors (10× for interspecies and 10× for intraspecies), unless “the PMRA concludes, based on reliable data, that a different factor is appropriate for the protection of infants and children” (Health Canada, 2008). The final determination of the magnitude of the PCPA factor (whether to reduce or increase it) involves “evaluating the completeness of the data with respect to exposure of and toxicity to infants and children as well as potential for prenatal or postnatal toxicity” (Health Canada, 2008). The US EPA Office of Pesticide Programs (OPP) also must “consider the special susceptibility of children to pesticides by using an additional tenfold (10×) safety factor when setting and reassessing tolerances, unless adequate data are available to support a different factor” (US EPA, 2015).

A regulator in one region may conclude that the endpoint or point of departure for regulatory assessment is the highest dose with no increased sensitivity of the young, while a regulator in a different region may assess the same study and determine that it indicates findings in the young at doses that are not maternally toxic. This could lead to assessments based on points of departure that differ considerably among regulatory bodies. In addition, composite uncertainty factors that include the FQPA factor (US) or PCPA factor (Canada) may differ between jurisdictions by a factor of 3 or 10. Taken together, these differences can lead to very different risk assessments that affect commercial interests, such as uses, imports/exports, classification, labeling, and packaging. They could also lead to different health-protective values and to restricted or cancelled uses of a chemical in one country but not another. In the experience of the industry representatives, this situation has significantly affected international trade in more than a few chemicals.
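To make the compounding effect concrete, the following minimal Python sketch divides a point of departure (POD) by a composite uncertainty factor, built as the traditional 10× interspecies and 10× intraspecies factors multiplied by an FQPA- or PCPA-type children's factor. The POD values, the 1× versus 10× choices, and the function names are hypothetical and chosen only for illustration; actual reference values are derived under each agency's own procedures.

```python
# Illustrative only: every numeric input below is hypothetical.
INTERSPECIES_UF = 10  # traditional animal-to-human extrapolation factor
INTRASPECIES_UF = 10  # traditional human-variability factor


def composite_uf(children_factor: float) -> float:
    """Traditional 10x * 10x factors multiplied by an FQPA/PCPA-type factor."""
    return INTERSPECIES_UF * INTRASPECIES_UF * children_factor


def reference_value(pod_mg_kg_day: float, children_factor: float) -> float:
    """Reference value = point of departure / composite uncertainty factor."""
    return pod_mg_kg_day / composite_uf(children_factor)


# Regulator A (hypothetical): higher POD, children's factor reduced to 1x.
ref_a = reference_value(pod_mg_kg_day=10.0, children_factor=1)
# Regulator B (hypothetical): lower POD based on findings in the young,
# full 10x children's factor retained.
ref_b = reference_value(pod_mg_kg_day=3.0, children_factor=10)

print(f"A: {ref_a:.4f} mg/kg/day")
print(f"B: {ref_b:.4f} mg/kg/day")
print(f"A/B: {ref_a / ref_b:.1f}-fold")
```

With these made-up inputs, a roughly 3-fold difference in the POD combined with a 10-fold difference in the children's factor yields reference values about 33-fold apart.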

Harmonization of key elements will improve the conduct, reporting, interpretation, and assessment of DNT studies and should help reduce inconsistencies in regulatory decision-making. These key elements include selection of tests or specific behavioral measures, best practices in study conduct, and data assessment, including understanding the hypothesis being tested, addressing and understanding the variability, applying appropriate statistical analysis, and evaluating the weight of evidence.

3. Evaluating data variability for neurobehavioral measures: how much is too much? (Larry Sheets)

This presentation discussed how to evaluate data variability for neurobehavioral measures and set the stage for later presentations by using motor activity and auditory startle as examples of these principles. First, various sources of variability were discussed, including which are inherent to the behavior being evaluated and which the investigator can control. Second, logistical and practical circumstances that permit the investigator to produce data of consistently high quality were outlined. Finally, different ways to determine whether the data are excessively variable were discussed.

3.1. Sources of variability

In general, there are intrinsic and extrinsic sources of variability (Raffaele et al., 2008). For the purposes of this discussion, intrinsic, or biological, variability is defined as variability that is inherent in each measured endpoint (e.g., photobeam breaks for ambulatory activity, peak startle amplitude for auditory startle response), which the investigator has a limited ability to control. Extrinsic variability is defined as variability that is caused by experimental or environmental conditions that can be controlled within the context of US EPA and OECD DNT guidelines. Some procedures that may serve to reduce intrinsic variability (e.g., over-training the animal or selecting a test that is easy for the animal to solve) can also reduce the sensitivity of the test (Raffaele et al., 2008). This is why the title of this presentation asks “How much is too much?”: too little intrinsic variability is also not necessarily desirable.

Extrinsic variability is affected by factors, such as environmental conditions, that the investigator must manage in order to achieve consistent results. Some extrinsic factors that impact data variability include the time of day when animals are tested, how the equipment is cleaned between animals, and the manner in which the animals are handled by personnel on a daily basis and in preparation for testing on a given day (Li, 2005; Raffaele et al., 2008; Crofton et al., 2008; Slikker et al., 2005).

Individual animal factors may also have a considerable impact on variability, and this is considered “intrinsic” variability. For example, animals born within a predefined 24-h period are typically assigned the same date of birth (e.g., all animals born after 4 p.m. can be considered born on the next calendar day). At the early testing ages specified by DNT guidelines (e.g., PND 13), the resulting difference of up to a day in actual age can have a major impact on behavior, thereby increasing variability. Because it is impractical to determine the hour of birth for each of the 80–100 litters in a study and test the offspring accordingly, the variability due to this age difference is considered to be “intrinsic” variability.
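The birth-date convention can be sketched as follows. The 4 p.m. cutoff follows the example in the text; the function names, the treatment of a birth at exactly 4 p.m. (same day), and the timestamps are assumptions for illustration. The example shows two pups that receive the same PND on a test day even though they were born 23 h apart.

```python
from datetime import date, datetime, time, timedelta

# Hypothetical cutoff mirroring the text's example: births after 4 p.m.
# are credited to the next calendar day (a birth at exactly 16:00 counts
# as the same day in this sketch).
CUTOFF = time(16, 0)


def assigned_birth_date(born_at: datetime) -> date:
    """Calendar date used as PND 0 under the cutoff rule."""
    if born_at.time() > CUTOFF:
        return born_at.date() + timedelta(days=1)
    return born_at.date()


def postnatal_day(born_at: datetime, test_day: date) -> int:
    """Assigned PND on a test day (PND 0 = assigned birth date)."""
    return (test_day - assigned_birth_date(born_at)).days


# Two pups credited with the same birth date but born 23 h apart.
early = datetime(2015, 3, 1, 16, 30)  # after cutoff, credited to 2 March
late = datetime(2015, 3, 2, 15, 30)   # before cutoff, also 2 March
test = date(2015, 3, 15)

print(postnatal_day(early, test), postnatal_day(late, test))  # both PND 13
hours_apart = (late - early).total_seconds() / 3600
print(hours_apart)
```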

In studies conducted in my laboratory, preweaning assessment of some neurobehavioral measures such as motor activity can have variability of ~30% to >100%, expressed as the standard deviation (SD) relative to the group mean, i.e., the coefficient of variation (CV; Table 3). This is consistent with the variability reported in guideline DNT studies from other laboratories (Maurissen et al., 2000; Tyl et al., 2008; Stump et al., 2010; Sheets et al., 2016). A key question is whether this variability is too high for measures that are inherently more variable than other toxicity endpoints, such as body weight (Maurissen et al., 2000; Stump et al., 2010). Our data show that motor activity in PND 60 adult rats is less variable (~17–30%; Table 4), which agrees well with motor activity data derived from studies that were not part of a DNT assessment (e.g., Crofton et al., 1991). While there are important extrinsic sources of variability that should be controlled, there are also intrinsic sources of variability (differences between animals that are independent of any treatment) that are inherent to the behavioral endpoints or impractical to control within the context of DNT test guidelines.

Table 3.

Control figure-8 chamber motor activity from the Bayer laboratory (mean ± SD; coefficient of variation [CV]) from nine DNT studies using Wistar male rats (20/group).

| Study number | PND 13 (mean ± SD) | PND 13 CV | PND 17 (mean ± SD) | PND 17 CV | PND 21 (mean ± SD) | PND 21 CV | PND 60 (mean ± SD) | PND 60 CV |
|---|---|---|---|---|---|---|---|---|
| 1 | 79 ± 109 | 138% | 263 ± 153 | 58% | 341 ± 92 | 27% | 573 ± 128 | 22% |
| 2 | 65 ± 62 | 95% | 257 ± 128 | 50% | 282 ± 117 | 41% | 434 ± 113 | 26% |
| 3 | 80 ± 76 | 95% | 129 ± 80 | 62% | 270 ± 84 | 31% | 586 ± 149 | 25% |
| 4 | 64 ± 65 | 102% | 240 ± 160 | 67% | 334 ± 89 | 27% | 531 ± 200 | 38% |
| 5 | 90 ± 81 | 90% | 169 ± 103 | 61% | 344 ± 134 | 39% | 536 ± 91 | 17% |
| 6 | 77 ± 76 | 99% | 253 ± 143 | 57% | 343 ± 180 | 52% | 572 ± 142 | 25% |
| 7 | 49 ± 37 | 76% | 217 ± 115 | 53% | 307 ± 117 | 38% | 521 ± 104 | 20% |
| 8 | 46 ± 61 | 133% | 181 ± 129 | 71% | 314 ± 126 | 40% | 472 ± 98 | 21% |
| 9 | 49 ± 44 | 90% | 127 ± 85 | 67% | 331 ± 77 | 23% | 529 ± 157 | 30% |
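As a quick consistency check, the CV in Table 3 is the SD divided by the group mean, expressed as a percentage. The short Python sketch below recomputes it for a few rows of the table (studies 1, 5, and 9); the dictionary layout and function name are illustrative choices, not part of the original analysis.

```python
# Rows taken from Table 3: study -> {PND: (mean, SD, reported CV %)}
TABLE3_SUBSET = {
    1: {13: (79, 109, 138), 60: (573, 128, 22)},
    5: {13: (90, 81, 90), 60: (536, 91, 17)},
    9: {13: (49, 44, 90), 60: (529, 157, 30)},
}


def cv_percent(mean: float, sd: float) -> float:
    """Coefficient of variation: SD as a percentage of the mean."""
    return 100.0 * sd / mean


for study, by_pnd in TABLE3_SUBSET.items():
    for pnd, (mean, sd, reported) in by_pnd.items():
        cv = cv_percent(mean, sd)
        print(f"Study {study}, PND {pnd}: CV = {cv:.0f}% (reported {reported}%)")
```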

Table 4.

Control data (10 DNT studies) from the Bayer laboratory: Intersession Figure-8 Maze Motor Activity for PND 60 Wistar Rat Offspring (N = 20 male rats/group).

| Interval 1 (mean ± SD) | Interval 2 (mean ± SD) | Interval 3 (mean ± SD) | Interval 4 (mean ± SD) | Interval 5 (mean ± SD) | Interval 6 (mean ± SD) |
|---|---|---|---|---|---|
| 116 ± 32 | 105 ± 36 | 103 ± 35 | 94 ± 31 | 80 ± 23 | 76 ± 29 |
| 98 ± 19 | 72 ± 21 | 71 ± 32 | 76 ± 27 | 68 ± 31 | 50 ± 27 |
| 116 ± 35 | 96 ± 28 | 111 ± 40 | 98 ± 26 | 87 ± 22 | 78 ± 31 |
| 119 ± 47 | 84 ± 34 | 89 ± 38 | 91 ± 43 | 81 ± 37 | 67 ± 35 |
| 107 ± 20 | 92 ± 23 | 85 ± 22 | 92 ± 32 | 89 ± 23 | 71 ± 32 |
| 106 ± 27 | 94 ± 27 | 102 ± 38 | 105 ± 40 | 86 ± 30 | 79 ± 25 |
| 97 ± 15 | 93 ± 30 | 91 ± 31 | 85 ± 27 | 83 ± 32 | 73 ± 31 |
| 103 ± 17 | 84 ± 22 | 77 ± 24 | 72 ± 24 | 68 ± 31 | 68 ± 24 |
| 99 ± 24 | 75 ± 26 | 92 ± 51 | 89 ± 33 | 88 ± 37 | 86 ± 35 |
| 101 ± 17 | 88 ± 25 | 89 ± 22 | 79 ± 29 | 70 ± 37 | 76 ± 41 |

3.2. Logistical and practical considerations when conducting DNT studies

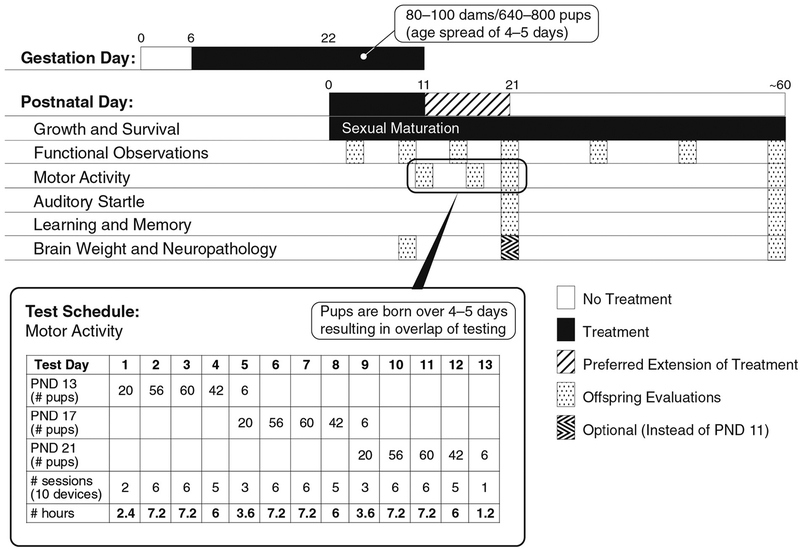

Speaking from personal experience, a considerable and constant challenge associated with DNT studies is managing the logistics associated with the test guideline. These include the number and variety of tests in the study design and the large number of animals that must be tested on specific days of age, preferably within a narrow time window during the day to reduce diurnal variability. In the OECD guideline for a DNT study (OECD, 2007), which is also accepted by the US EPA, the treatment is administered (e.g., by gavage or in the diet) from gestation day (GD) 6 to lactation day (LD) 21 (Fig. 1). Testing of the dams is limited to measures of food consumption, body weight, and detailed clinical observations during gestation and lactation, and the dams are euthanized on LD 21 without tissue collection. For pesticides, a DNT study is required when there is an indication of neurotoxicity or of increased sensitivity of the young compared with adults, and this study is performed after the results from prenatal developmental toxicity and multi-generation reproduction studies are available. The findings in these studies are used for dose selection and to indicate whether the chemical is a developmental or reproductive toxicant.

Fig. 1.

Diagram of EPA Developmental Neurotoxicity Study, OCSPP 870.6300 (adapted from Raffaele et al., 2010). Detailed clinical observations and body weights are recorded periodically in the F1 generation throughout the study, before weaning and after treatment is discontinued, until around postnatal day (PND) 70 (PND 0 is defined as the day of delivery). Neurobehavioral tests for the F1 generation include automated measures of activity, auditory startle habituation, and measures of cognition (US EPA, 1998a). The numbers in the table, including the total time needed to conduct motor activity testing (last row), are from an actual study in Dr. Sheets’ lab. The last row, in bold, is the approximate time required to run all the motor activity sessions. As illustrated in this example, some labs add extra pups distributed across groups to meet the guideline requirement of 20 offspring/dose group (1 pup/sex/litter from 20 litters/dose group) in case of mortalities, logistical errors, and equipment malfunction. The US EPA and OECD DNT study design requirements result in a particularly high workload at ~PND 21 (US EPA, 1998a; OECD, 2007). When the 80–100 females are mated over a period of 3–5 days (the duration of the estrous cycle in rats), litters may be born over a similar period. With this study design, a range of delivery days helps to reduce certain aspects of the workload on specific days of age. On the other hand, it increases the logistical complexity of managing the daily workload, because the various study endpoint assessments overlap. PND 21 is a particularly busy day, with the need to measure body weight, obtain detailed clinical observations, and evaluate motor activity, auditory startle, and cognition for F1 animals from 80 to 100 litters.
One consequence of the test guideline requirements is that animals must be tested over a long test day and across different days, increasing variability due to circadian rhythms and other environmental factors that can vary despite best attempts to control them.

Review of the study design clearly illustrates that the focus of the DNT study (US EPA, 1998a; OECD, 2007) is the testing of F1 animals using neurobehavioral and neuropathology endpoints (Fig. 1, Table 2). In general, the test strategy for these studies (Table 2) is to investigate whether treatment during gestation and lactation produces effects on the nervous system in F1 animals associated with ongoing treatment, and whether persistent or latent effects occur after treatment has been discontinued. Finally, the offspring are euthanized on PND 21 or ~PND 70, with perfusion fixation, gross and microscopic brain morphometry, and a thorough histopathological examination of the brain (PND 21 pups and PND 60 adults) and other neural tissues (spinal cord and peripheral nerves in adults only) (Table 2). Given the emphasis of the study on the offspring and neurodevelopmental endpoints, it is important to recognize that not every finding is evidence of DNT; rather, each finding should be interpreted in the context of all the available information for the test substance, including evidence of toxicity identified in other studies.

Given these circumstances, it is a constant challenge for the staff to manage the workload without making errors or handling the animals in an inconsistent or hurried manner, which would increase variability (Crofton et al., 2008). The logistics involve taking the correct first-generation offspring (F1) for each test from each litter (in the DNT case, for 80–100 litters), transporting the appropriate animals to the test room, placing each one into the proper test apparatus, performing the test appropriately, retrieving the animals and returning each to the correct cage, and then collecting the next set of animals for the next test session. In between, the technician may help other staff members by retrieving other animals for a different test being performed at the same time in another room. Further, the selection of tests and animals varies each day. In a repeated-testing study design, failure to test an animal on one of the required occasions may remove all of that animal’s data from the test data set for some statistical models. Although careful training of technicians can reduce “extrinsic” sources of variability, the logistical requirements of the DNT guidelines contribute to data variability.
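The consequence of a missed test occasion can be illustrated with listwise (complete-case) deletion, which is how some repeated-measures analyses, such as a classical repeated-measures ANOVA, handle incomplete records. In this sketch the animal IDs and counts are invented; a single missing PND 17 value removes that animal's PND 13 and PND 21 data as well.

```python
# Hypothetical motor activity counts per animal at each required test
# occasion (PND); None marks a missed test. All values are invented.
activity = {
    "pup_A": {13: 70, 17: 240, 21: 330},
    "pup_B": {13: 85, 17: None, 21: 310},  # missed the PND 17 session
    "pup_C": {13: 60, 17: 210, 21: 290},
}
OCCASIONS = (13, 17, 21)


def complete_cases(data, occasions=OCCASIONS):
    """Keep only animals with a value at every occasion (listwise deletion)."""
    return {
        animal: values
        for animal, values in data.items()
        if all(values.get(pnd) is not None for pnd in occasions)
    }


kept = complete_cases(activity)
print(sorted(kept))  # pup_B is dropped, including its PND 13 and PND 21 data
```

Likelihood-based approaches such as linear mixed-effects models can generally retain the observed values for such an animal, which is one reason the loss depends on the statistical model chosen.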

An important question that laboratories and regulators grapple with is whether a data set is excessively variable, after intrinsic variability is considered. There is no single best reference or “gold standard” for gauging variability; it depends on the nature of the data and the specific measures. In some cases, the lower variability of published academic studies has been compared with the higher variability in guideline DNT studies, raising the question of whether experimental and environmental variables are inadequately controlled (Raffaele et al., 2008). These comparisons may not be appropriate if the published studies were not conducted according to OECD or US EPA DNT guidelines, which require testing three different sets of 160 pups on multiple behavioral endpoints (OECD, 2007; US EPA, 1998a). For example, some of the academic studies cited in OECD (2007) and Raffaele et al. (2008) include studies in adult males (Crofton et al., 1991), acute age-relevant positive control studies that are not of DNT design (Crofton et al., 2008; Marable and Maurissen, 2004), studies that report standard errors across multiple studies (Buelke-Sam et al., 1985), and studies that used substantially fewer pups and/or a within-litter design with a total of 10 litters per test chemical (Ruppert et al., 1983, 1985; Crofton et al., 1993). Thus, direct comparisons of variability between these types of studies and DNT studies must be made with considerable caution.

To gauge data quality, it is useful to view data from the control animals from a variety of statistical perspectives, including the standard deviation (SD), standard error of the mean (SEM), coefficient of variation (CV), range, and distribution. In addition, the data set (e.g., group mean and SD) should be evaluated to determine whether it varies appropriately with repeated testing or with age and sex. Next, a data set can be compared with data from other laboratories, but it is important to consider whether the test circumstances are comparable. For example, data are generally more variable when circumstances require an extended test day, because of diurnal variation in activity (Raffaele et al., 2008), or require a cohort to be tested over multiple days, than when fewer animals are tested within a narrow time span on a single day. It is also useful to evaluate consistency for control animals across a number of studies performed under similar conditions (i.e., historical control data). If such comparisons indicate that the data are more variable than expected, the investigator should review the test conditions and procedures in detail and spend time watching how the animals are handled and how the test is performed. This should include detailed examination and testing of each test apparatus, because environmental conditions may vary or there may be differences in equipment function or defects in manufacture.
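The descriptive views listed above, together with a simple historical-control comparison, might be computed as in the following sketch using Python's standard `statistics` module. The individual control counts are made up for illustration; the historical range is taken from the PND 60 control means of the nine studies in Table 3. Falling inside such a range is only one input to a judgment about data quality, not a pass/fail criterion.

```python
import math
import statistics


def summarize(values):
    """Several descriptive views of one control group's data."""
    n = len(values)
    mean = statistics.mean(values)
    sd = statistics.stdev(values)  # sample SD (n - 1 denominator)
    return {
        "n": n,
        "mean": mean,
        "sd": sd,
        "sem": sd / math.sqrt(n),
        "cv_pct": 100.0 * sd / mean,
        "range": (min(values), max(values)),
    }


# Historical control range: PND 60 control means of the nine studies in Table 3.
PND60_MEANS = [573, 434, 586, 531, 536, 572, 521, 472, 529]
HIST_LOW, HIST_HIGH = min(PND60_MEANS), max(PND60_MEANS)


def within_historical_range(group_mean, low=HIST_LOW, high=HIST_HIGH):
    """True if a new control group mean lies within the historical range."""
    return low <= group_mean <= high


# Made-up individual counts for a new control group (illustration only).
new_controls = [480, 520, 610, 455, 590]
summary = summarize(new_controls)
print(summary)
print(within_historical_range(summary["mean"]))
```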

In general, each litter is represented by one male and one female, resulting in a sample size of 20/sex/dose group. In the US EPA (1998a) and OECD (2007) DNT guidelines, different cohorts of animals (one pup/sex/litter) may be assigned to different behavioral tests. In addition, some F1 animals may be selected for blood collection, while others are taken to necropsy for perfusion fixation and tissue collection. In the EOGRTS (OECD, 2012a), most DNT evaluations (behavior and neuropathology) are performed in the same cohort of one male or one female per litter (10 males and 10 females/group). The dams must also be attended to, and the surviving F1 animals reassigned to new housing.

For motor activity, a test day over the course of the study can range from one test session (e.g., 30 or 60 min) to six or more sessions, depending on the session duration and the number of devices used (Fig. 1). Consequently, testing may be completed between 8 and 9 a.m. on some days and continue until 4 p.m. on others, which would likely increase variability substantially because of diurnal patterns of activity (Raffaele et al., 2008) (Fig. 1). The results should be examined to determine whether this source of variability needs to be managed. For example, additional devices with the same calibration could reduce the number of test sessions needed over the course of several hours; however, the additional time required to load more animals could itself increase variability.

3.3. Approaches for evaluating motor activity and auditory startle data

The remainder of this presentation focused on motor activity and the auditory startle response, including a description of the test procedures and discussion of control data from guideline DNT studies performed in my former laboratory at Bayer. In this context, motor activity refers to spontaneous movement of the animal in a controlled environment. The commercial equipment commonly used may provide a variety of measures, but these generally include some measure of locomotor or ambulatory activity and possibly a measure of non-locomotor movement, which may include anything from rearing to tremor. The US EPA (1998a) and OECD (2007) DNT guidelines state that controls should show habituation over the course of the test session, as an indication of the animal’s ability to adapt to its environment, a simple form of learning. Motor activity is an apical test, because it can be altered through effects on a number of systems. Interpretation of results may take into account the nature of the effect, including persistence after treatment is discontinued, whether activity is increased or decreased, and whether habituation is affected. The device used for this study design must be automated and suitable for testing rats from PND 13 to 60. It must also be reliable and provide consistent results, including habituation over an appropriate session duration (approximately 30 min to 1 h). US EPA (1998a) specifies that the test session should be long enough for motor activity to reach asymptotic levels by the last 20% of the session. Testing laboratories most commonly use some type of open-field device, whereas figure-8 chambers (Ruppert et al., 1985) have been used in the Bayer laboratory.
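The guideline criterion that activity reach asymptotic levels by the last 20% of the session can be checked on the control block means. The rule below is only one possible operationalization; the 10% tolerance, the function name, and the example block means are assumptions made for illustration.

```python
import math

def reached_asymptote(block_means, final_fraction=0.2, tol=0.10):
    """One hypothetical check that activity is asymptotic late in the session.

    block_means: control-group mean activity for consecutive time blocks.
    Returns True if the spread of the block means in the final
    `final_fraction` of the session (at least two blocks) is no more than
    `tol` times the total decline from the first block to the lowest block.
    """
    k = max(2, math.ceil(len(block_means) * final_fraction))
    final = block_means[-k:]
    total_decline = block_means[0] - min(block_means)
    if total_decline <= 0:
        return False  # activity never declined, so there is no plateau to reach
    return max(final) - min(final) <= tol * total_decline

# Illustrative 60-min session in 5-min blocks, and the same session cut at 25 min.
full = [120, 95, 75, 60, 48, 40, 35, 31, 29, 28, 27, 27]
print(reached_asymptote(full))       # True
print(reached_asymptote(full[:5]))   # False
```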

The conditions and variables that should be controlled for a test of motor activity include background and intermittent sound, light and visual cues, vibration, time of day, and scent in the device. It is appropriate to attend to some of these issues when the room and test equipment are set up, while others are checked before testing begins on each test day or between test sessions. There is generally a practical limit to how much intermittent sound can be reduced in the test environment, so it is common practice to use a white noise generator to provide consistent background noise that is evenly distributed throughout the area. Scent is a very potent and important factor for rodents, so it is important to manage this variable carefully through the choice of procedures used to clean the equipment between animals, balancing the allocation to devices across treatment groups, and testing sexually mature males and females in different equipment or on different days.

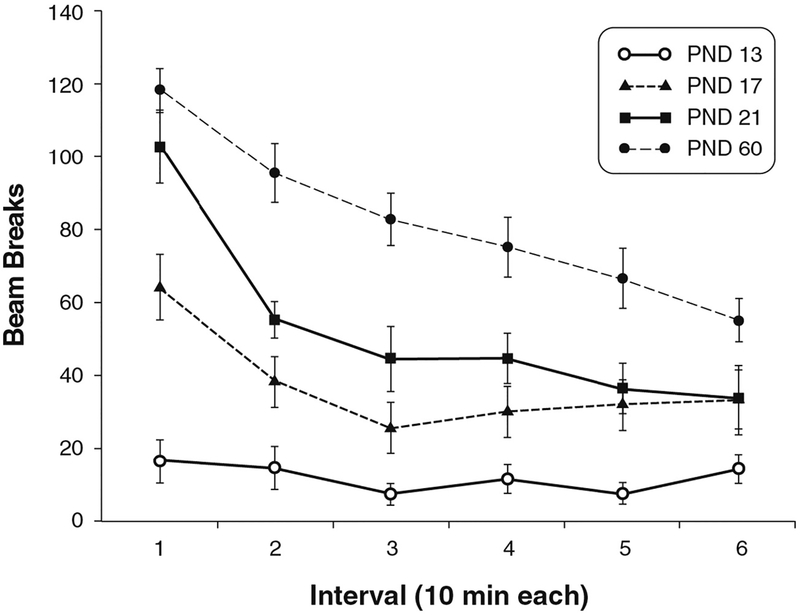

The level and type of activity vary considerably, depending on the size and shape of the device, as well as the distribution of photocells in the device. The figure-8 maze has eight pairs of emitters and detectors placed at specific locations, so activity is measured as beam interruptions when some part of the animal passes through that location. Motor activity is measured as any movement that interrupts a beam, while locomotor activity (ambulation) is measured when the animal breaks two different beams by moving from one location in the maze to another. Our results for control male Wistar rats tested in figure-8 chambers on PND 13, 17, 21, and 60 illustrate a consistent pattern of activity, both within a study and across several studies performed under the same conditions (Table 3). This pattern includes a progressive, age-related increase in motor activity. On PND 13, the level of motor activity is quite low, and the SD approximates or exceeds the group mean, resulting in coefficients of variation of 76% to 138%. Viewed this way, the results appear highly variable; however, evaluation within and across nine studies shows that they are very consistent, with group means ranging from 46 to 90 counts on PND 13, compared with 127 to 263 counts on PND 17 (Table 3). From such data, it is easy to distinguish the PND 13 results from those of all other age groups, and the adult results are also clearly recognizable. In my laboratory, the results within any given study also showed clear age-related differences in within-session habituation, which was present on PNDs 17, 21, and 60 but not on PND 13 (Fig. 2). Other laboratories using different equipment and test conditions do not always observe habituation at PND 17, but they consistently report the absence of habituation at PND 13 and its presence at PND 21 and later (Raffaele et al., 2003; Ruppert et al., 1985).
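The distinction drawn above between motor activity (any beam interruption) and locomotor activity (interruption of a different beam after moving between locations) can be expressed as a simple counting rule. The sketch assumes the raw data arrive as a chronological list of interrupted beam IDs; actual vendor software may apply additional timing rules, so this is an illustration, not the figure-8 system’s algorithm.

```python
def count_activity(beam_events):
    """Hypothetical counting rule for a figure-8 maze with 8 photobeams.

    beam_events: chronological list of beam IDs (1-8), one entry per beam
    interruption. Motor activity counts every interruption; locomotor
    activity counts an interruption only when it is of a different beam
    than the previous one, i.e., the animal moved between locations.
    """
    motor = len(beam_events)
    locomotor = sum(1 for prev, cur in zip(beam_events, beam_events[1:]) if cur != prev)
    return motor, locomotor

# Hypothetical sequence: a pup moving in place at beam 3, then crawling to beams 4 and 5.
events = [3, 3, 3, 3, 4, 5, 5]
print(count_activity(events))  # (7, 2)
```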
Furthermore, the results for adults show a consistent pattern of habituation in all nine studies (Table 4).

Fig. 2.

Representative intrasession motor activity (mean ± SEM) for control male Wistar rats at PNDs 13, 17, 21, and 60 from a guideline DNT study from Dr. Sheets’ laboratory.

The auditory startle response (ASR) is measured as the magnitude of the downward force a rat exerts in response to an abrupt acoustic stimulus of sufficient intensity (Davis, 1980; Davis and Eaton, 1984). The ASR is a well-characterized, reflex-based response with a short latency to onset following presentation of a startle-eliciting stimulus, and it is measured using either a load-cell force transducer or an accelerometer (Davis and Eaton, 1984). A load-cell force transducer measures both static (gravitational) force (e.g., the weight of the animal) and movement (typically at 1-ms intervals). This device is calibrated using static weights, and the response is measured in grams. The operator sets the sensitivity of the load cell to optimize resolution of the output within the device’s dynamic range (e.g., a maximum of 500 g) for young rats, or reduces it (e.g., to a maximum of 2000 g) for adults so that the peak response does not exceed the capacity of the device. By comparison, an accelerometer measures only the dynamic movement of the platform, and the ASR is generally reported in millivolts (mV) or arbitrary units. With this device, the sensitivity setting and the enclosure platform differ between young rats and adults. Because of these adjustments, accelerometer results are not directly comparable across age groups; in fact, the magnitude of the response (in mV or arbitrary units) may appear similar or even greater in young animals than in adults.

Important factors to manage include calibration of the equipment used both to generate the startle-eliciting stimulus and to measure the ASR. As with motor activity, the test guidelines specify a measure of habituation; to achieve this, test conditions (e.g., inter-trial interval) are optimized to produce habituation over the course of the 50-trial session (5 blocks of 10 trials each). The latency of the ASR is also assessed to verify that the response being measured is the primary startle response. This is generally a concern only when the ASR has decreased with habituation, so that the true peak startle response may be smaller than other downward movements occurring during the sample period.
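The latency check, together with the subtraction of pre-onset load-cell output to remove body weight, can be sketched for a single trial as follows. We assume the trace starts at stimulus onset, is sampled every 1 ms, and that the first 8 ms precede response onset; the 50-ms latency limit, the function name, and both example traces are hypothetical. The second trace mimics a habituated trial in which a late, non-startle movement is larger than the startle itself.

```python
def startle_peak(trace_g, baseline_ms=8, max_latency_ms=50, sample_ms=1):
    """Baseline-corrected peak and latency for one startle trial.

    trace_g: load-cell output in grams, one sample every `sample_ms` ms,
    starting at stimulus onset. The mean of the first `baseline_ms` of output
    (before response onset) is subtracted, removing static body weight.
    Returns (peak_g, latency_ms, plausible); `plausible` is a hypothetical
    flag that the peak fell within `max_latency_ms` of stimulus onset.
    """
    n_base = baseline_ms // sample_ms
    baseline = sum(trace_g[:n_base]) / n_base
    corrected = [x - baseline for x in trace_g]
    # Search for the peak only after the baseline window.
    i_peak = max(range(n_base, len(corrected)), key=corrected.__getitem__)
    latency = i_peak * sample_ms
    return corrected[i_peak], latency, latency <= max_latency_ms

trial_1 = [300] * 8 + [300, 310, 350, 420, 390, 340, 310, 300] + [300] * 60 + [330]
trial_2 = [300] * 8 + [300, 305, 312, 306, 300] + [300] * 60 + [360]
print(startle_peak(trial_1))  # (120.0, 11, True)
print(startle_peak(trial_2))  # (60.0, 73, False)
```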

Using data from the Bayer laboratory, Tables 5 and 6 show ASR results obtained with load-cell detectors, a 120-dB burst of broad-spectrum noise to elicit the response, and a 9-s inter-trial interval to produce habituation. Our results from nine DNT studies show modest habituation in PND 22 control male Wistar rats over the course of the 50-trial session, with a 6% to 35% decrease in group mean response amplitude from the first to the last block of trials (Table 5). The results from these studies show a consistent pattern, based on group means, standard deviations, and habituation. For the same set of studies, the ASR in PND 60 control males is much greater than on PND 22, and the magnitude of habituation is also much greater, with a 39% to 68% decrease from the first to the last block (Table 6). The absolute differences in total and within-session group means among these studies are greater on PND 60 than on PND 22, because of the much greater dynamic range of the response of individual animals and individual trials in adults relative to weanlings (Tables 5 and 6). For females, the response is comparable to that of males on PND 22 but much smaller than that of males on PND 60 (data not shown). This difference between adult males and females cannot be accounted for by differences in body weight, because the load-cell output during the first 8 ms after stimulus onset (before ASR onset) is subtracted from the measure.

Table 5.

Control data (9 DNT studies) from the Bayer laboratory: intrasession auditory startle response (ASR) data (grams) for PND 22 male Wistar rats (N = 20 male pups/group).

| Trials 1–10, mean peak ± SD | Trials 11–20, mean peak ± SD | Trials 21–30, mean peak ± SD | Trials 31–40, mean peak ± SD | Trials 41–50, mean peak ± SD | Session mean ± SD (CV) |
|---|---|---|---|---|---|
| 40 ± 20 | 39 ± 21 | 34 ± 17 | 32 ± 15 | 31 ± 18 | 35 ± 17 (49%) |
| 43 ± 16 | 36 ± 16 | 31 ± 12 | 30 ± 14 | 28 ± 12 | 34 ± 13 (38%) |
| 45 ± 17 | 44 ± 21 | 41 ± 20 | 39 ± 22 | 36 ± 15 | 41 ± 18 (44%) |
| 54 ± 22 | 53 ± 20 | 49 ± 26 | 41 ± 23 | 38 ± 25 | 47 ± 22 (47%) |
| 53 ± 18 | 52 ± 17 | 52 ± 22 | 46 ± 20 | 41 ± 15 | 49 ± 16 (33%) |
| 42 ± 10 | 42 ± 16 | 42 ± 17 | 35 ± 14 | 35 ± 15 | 39 ± 13 (33%) |
| 48 ± 21 | 44 ± 17 | 44 ± 21 | 44 ± 21 | 42 ± 24 | 45 ± 19 (42%) |
| 53 ± 21 | 54 ± 17 | 54 ± 15 | 52 ± 14 | 50 ± 20 | 53 ± 16 (30%) |
| 50 ± 23 | 44 ± 23 | 38 ± 19 | 36 ± 17 | 33 ± 14 | 40 ± 17 (43%) |

Table 6.

Control data (9 DNT studies) from the Bayer laboratory: intrasession auditory startle response (ASR) data (grams) for PND 60 male Wistar rats (N = 20 male pups/group).

| Trials 1–10, mean peak ± SD | Trials 11–20, mean peak ± SD | Trials 21–30, mean peak ± SD | Trials 31–40, mean peak ± SD | Trials 41–50, mean peak ± SD | Session mean ± SD (CV) |
|---|---|---|---|---|---|
| 278 ± 117 | 233 ± 165 | 215 ± 128 | 169 ± 94 | 141 ± 58 | 207 ± 102 (49%) |
| 303 ± 205 | 254 ± 196 | 213 ± 168 | 177 ± 138 | 146 ± 88 | 218 ± 152 (70%) |
| 299 ± 238 | 231 ± 164 | 188 ± 146 | 175 ± 127 | 149 ± 142 | 208 ± 156 (75%) |
| 383 ± 167 | 278 ± 160 | 197 ± 123 | 179 ± 94 | 122 ± 46 | 232 ± 100 (43%) |
| 249 ± 195 | 220 ± 173 | 180 ± 124 | 162 ± 115 | 120 ± 74 | 186 ± 128 (69%) |
| 171 ± 94 | 155 ± 148 | 124 ± 137 | 116 ± 103 | 105 ± 89 | 134 ± 111 (83%) |
| 255 ± 215 | 194 ± 159 | 162 ± 119 | 149 ± 105 | 113 ± 61 | 175 ± 119 (68%) |
| 303 ± 157 | 299 ± 135 | 234 ± 128 | 148 ± 91 | 164 ± 120 | 230 ± 115 (50%) |
| 239 ± 138 | 251 ± 194 | 155 ± 113 | 143 ± 88 | 111 ± 69 | 180 ± 112 (62%) |
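The habituation ranges for Tables 5 and 6 can be recomputed from the first- and last-block group means. The values below are transcribed from the tables; the percentage decline from trials 1–10 to trials 41–50 is one simple habituation index, not the statistical test used in the studies.

```python
# (trials 1-10, trials 41-50) group means in grams, transcribed from Tables 5 and 6.
PND22 = [(40, 31), (43, 28), (45, 36), (54, 38), (53, 41), (42, 35), (48, 42), (53, 50), (50, 33)]
PND60 = [(278, 141), (303, 146), (299, 149), (383, 122), (249, 120),
         (171, 105), (255, 113), (303, 164), (239, 111)]

def percent_decrease(first, last):
    """Habituation index: % decline from the first to the last trial block."""
    return 100 * (first - last) / first

for label, rows in (("PND 22", PND22), ("PND 60", PND60)):
    pct = [percent_decrease(first, last) for first, last in rows]
    print(f"{label}: {min(pct):.0f}% to {max(pct):.0f}% decrease")
# PND 22: 6% to 35% decrease
# PND 60: 39% to 68% decrease
```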

In summary, these examples emphasize that DNT data quality should be examined using a number of factors, and that results from a single study should be compared with other data, within and across laboratories, obtained under comparable conditions to determine whether variability is excessive. Where warranted, a number of steps can be taken to reduce variability and achieve high-quality, consistent results. However, the logistics of meeting DNT guideline specifications (e.g., sample size, ages of testing, habituation design) can contribute to an “intrinsic” variability in appropriately designed studies that may not be observed in other study designs.

Therefore, when reporting and reviewing DNT data, it is important to describe and understand the test conditions and procedures used and what the measures represent. It is also useful to provide historical control data for reference, with sufficient details provided to support the suitability of those data for comparison.

4. New insights into analysis of highly variable data: motor activity as a case study (Wayne J. Bowers)

This presentation offered opinions that differ from, and insights that add to, those presented by LS, focusing on measurement issues and on the test equipment and software factors that may affect motor activity measurement. In addition, the critical biological questions addressed by motor activity test data were described, as were statistical considerations in analyzing these data. Because PND 13 activity data appear excessively variable, as indicated by a higher CV at PND 13 than at other ages (see LS section above), particular emphasis was placed on the nature of pre-weaning activity data, especially PND 13 data from multiple laboratories, to illustrate some of the challenges in analyzing motor activity data from DNT studies.

4.1. Measurement of motor activity

As previously described, motor activity is an apical behavioral test that reflects multiple neural inputs, including motor, sensory, and cognitive/reactivity components. Motor activity data therefore do not indicate which specific functional capacity is affected by chemical exposure, only that some function is affected. This non-specificity is a disadvantage for characterizing the nature of treatment-related effects, but it is also an advantage, because multiple functional systems are assessed in a single test.

4.2. Activity equipment and software issues

Both the US EPA and OECD DNT test guidelines state that motor activity testing should be conducted with automated test equipment. A variety of test equipment is available. The most commonly used recording systems are photocell-based, but video-based systems are becoming more common. Test chambers are typically square or rectangular open-field-type chambers; chambers of other shapes are used less frequently (e.g., the figure-8 chamber; Ruppert et al., 1985; Crofton et al., 1991; Sheets et al., 2016). In photocell systems, banks of transmitters and receivers are mounted outside the activity chamber. These systems use beam breaks in X, Y, and sometimes Z coordinates to generate raw data, which are then aggregated by system-specific software (usually proprietary vendor software) into measures of motor activity such as ambulatory counts, ambulatory time, ambulatory distance, vertical movement counts, and vertical time. Some systems also provide measures of small movements such as grooming. In photocell-based systems, the number of photocells and the spacing between them affect sensitivity to movement, so this design feature can strongly influence the resulting measures. This is particularly important for small animals such as pre-weaning rodents, because wide spacing between photocells may cause young animals to go undetected by the system software. Video-based systems generate data by tracking changes in the location of the animal’s image within the test chamber, and the raw data are aggregated by system-specific software into motor activity measures similar to those of photocell-based systems. For video-based systems, ambient lighting must be optimized to ensure adequate contrast between the animals and the background.
It is important to recognize that software settings for both photocell and video systems play a critical role in determining the activity measures generated. The measures of motor activity reported in DNT studies (e.g., ambulatory counts, vertical counts) are in fact generated by equipment software, using algorithms that convert photocell beam-break sequences or image movement into activity measures. For this reason, most systems permit software settings to be customized for animals of different sizes.
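To illustrate how software settings, rather than the animal’s behavior alone, shape the reported measures, the sketch below applies a hypothetical minimum-step threshold to a video track of centroid positions. The threshold rule, the function name, and the track are assumptions for illustration; commercial systems use their own, usually proprietary, algorithms.

```python
import math

def track_distance(positions_cm, min_step_cm):
    """Hypothetical video-tracking rule: sum frame-to-frame displacements of
    the animal's centroid, ignoring steps shorter than `min_step_cm`
    (treated as jitter or non-locomotor movement)."""
    total = 0.0
    for (x0, y0), (x1, y1) in zip(positions_cm, positions_cm[1:]):
        step = math.hypot(x1 - x0, y1 - y0)
        if step >= min_step_cm:
            total += step
    return total

# The same raw track scored with two software settings.
track = [(0, 0), (0.5, 0), (1.0, 0), (1.5, 0), (4.5, 0), (4.5, 4.0)]
print(track_distance(track, 0.25))  # 8.5
print(track_distance(track, 1.0))   # 7.0
```

A small, slow-moving pup taking many short steps would be affected far more by the coarser setting than an adult, which is one way settings can differ in sensitivity across ages.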

Equipment and software settings can have a number of consequences. Because activity test systems can generate absolute activity counts using different algorithms, absolute activity measures might not be comparable among laboratories using different systems, or even within a laboratory where software settings vary among studies. Similarly, activity measures at different ages within the same study may not be directly comparable, because the same software parameters applied at different ages (e.g., when animals differ greatly in size) may differ in sensitivity to movement. Thus, absolute activity measures (e.g., ambulatory counts) may reflect actual differences in activity level combined with variations in the equipment’s sensitivity to movement. Fortunately, most systems permit software settings to be adjusted and the raw data to be re-analyzed. Equipment and system settings may also limit the ability to compare absolute values from concurrent controls with historical control values within a laboratory.

Motor activity testing addresses three primary biological questions: behavioral ontogeny, habituation, and motor function. First, behavioral ontogeny of motor activity is an important measure of neurobehavioral development over the pre-weaning period and is usually reflected by increases in motor activity from PND 13 to PNDs 17 and 21. Treatment-related alterations in the pattern of pre-weaning motor activity may be related to generalized, non-specific developmental delay, in which case they would be accompanied by other indicators of developmental delay (e.g., altered growth, delayed developmental landmarks). In the absence of other indicators of developmental delay, altered activity in the pre-weaning period is generally interpreted as a disturbance of nervous system development.

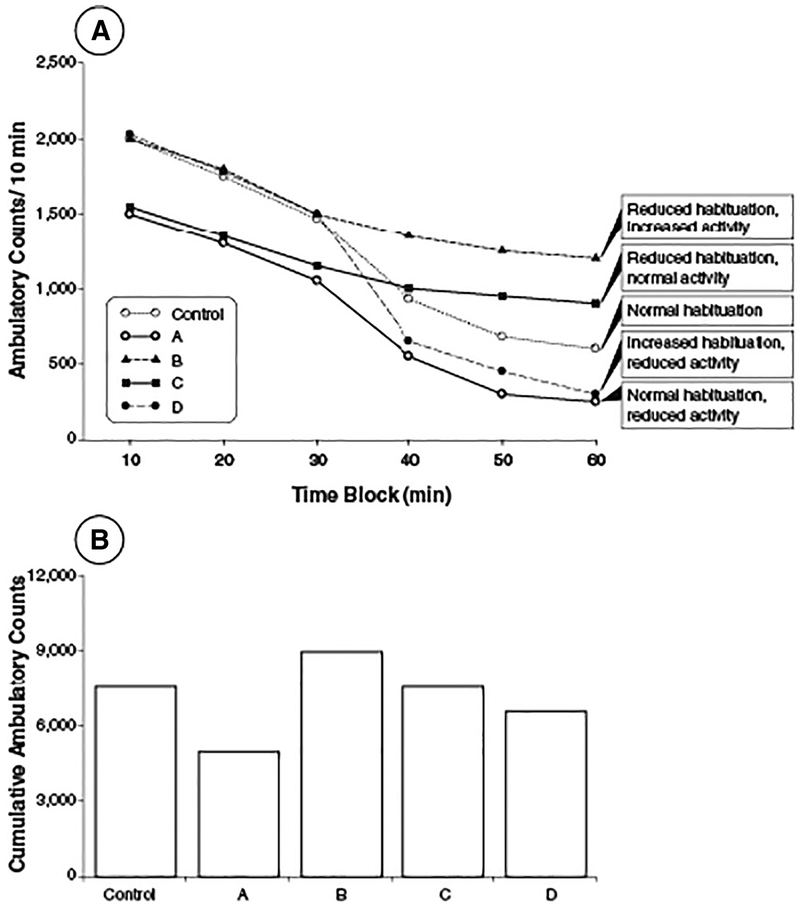

Second, motor activity data provide a measure of habituation, an assessment of non-associative learning that is critical for adaptive behavior. US EPA (1998a) and OECD (2007, 2012a) test guidelines state that habituation in motor activity should be evaluated. Habituation is characterized as a decrease in response to a stimulus following repeated exposure to that stimulus, and this short-term adaptation plays an important role in filtering the environmental stimuli that influence behavior. Habituation is not measured directly in motor activity testing, but rather is inferred by the pattern of activity over a test session. Under normal circumstances, habituation is reflected by a decrease in activity over the course of a test session (Fig. 3). Thus, the slope or rate of decline in activity measures provides an index of habituation. Treatment-related effects on habituation can either decrease habituation (flatter slope, compared to controls) or increase habituation (steeper slope), with either of these outcomes indicating a potential treatment-related effect on non-associative learning.
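One way to express the slope index described above is a least-squares slope of group mean activity on time-block number, sketched below in Python. A straight line is a simplification (intrasession curves are often nonlinear), the block means are illustrative, and in a guideline study habituation effects would normally be assessed through the treatment × time-block term of the RM-ANOVA rather than from this index alone.

```python
def habituation_slope(block_means):
    """Least-squares slope of activity on time-block index (counts per block).

    A negative slope indicates declining activity across the session; a
    flatter (less negative) slope than controls suggests reduced habituation.
    """
    n = len(block_means)
    xs = range(n)
    x_bar = (n - 1) / 2
    y_bar = sum(block_means) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, block_means))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    return sxy / sxx

control = [100, 80, 60, 40, 20, 10]   # illustrative values only
flatter = [70, 65, 60, 55, 50, 45]    # illustrative reduced habituation
print(round(habituation_slope(control), 2))  # -18.57
print(round(habituation_slope(flatter), 2))  # -5.0
```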

Fig. 3.

Theoretical data (mean) illustrating test-session motor activity patterns that reflect normal and altered habituation. Normal habituation in adult rats is shown by Vehicle Control and Treatment A. The specific temporal activity pattern of Control animals in a study will be determined by a range of factors, including the test equipment, test conditions, age, species, and strain. Regardless, habituation in control animals will be reflected by a decrease in activity measures over the course of the test session. Although Treatment A shows decreased ambulatory activity relative to Control, Treatment A animals exhibit habituation rates comparable to Control (note the parallel lines). In contrast, Treatment D exhibits an enhanced rate of habituation compared with all other groups, as illustrated by the more rapid decrease in ambulatory counts in the second half of the test session. Treatment B and C animals show reduced habituation, as indicated by the slower decrease in ambulation in the second half of the test session. Note that Treatment C and Control will have comparable total session ambulation counts despite dissimilar session activity patterns, illustrating how total session data can be misleading. Without time-block data, it would not be possible to determine that Treatment C alters habituation. Similarly, although total session activity is comparable among Control and Treatments C and D, using only total session activity would mask the fact that Treatments C and D produce opposite alterations in the habituation pattern. Treatments A through D are arbitrary, hypothetical treatments used to illustrate the issues to consider.

Third, motor activity testing provides a general measure of motor capacity and can assess whether treatment impairs overall motor function. Hypoactivity or hyperactivity can indicate disturbances in motor function or in reaction to environmental stimuli. Hyper- or hypoactivity without an effect on habituation would typically be reflected by a parallel upward or downward shift of the intrasession activity pattern. In contrast, altered habituation is characterized by a change in the shape of the intrasession activity pattern, which can result in increased, decreased, or unchanged cumulative total activity for a test session (Fig. 3).
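The distinction between a parallel shift and an altered intrasession pattern can be seen by comparing total session counts with the drop from the first to the last time block. The three curves below are illustrative values in the spirit of Fig. 3, not data from any study, and the function name is our own.

```python
def session_profile(block_means):
    """Return (total session count, drop from first to last time block)."""
    return sum(block_means), block_means[0] - block_means[-1]

control = [100, 80, 60, 40, 20]          # illustrative values only
hypoactive = [x - 15 for x in control]   # parallel downward shift
altered = [70, 65, 60, 55, 50]           # flatter curve, same total as control

for name, blocks in (("control", control), ("hypoactive", hypoactive), ("altered", altered)):
    print(name, session_profile(blocks))
# control (300, 80)
# hypoactive (225, 80)
# altered (300, 20)
```

The parallel shift changes the total but not the within-session drop; the altered pattern leaves the total unchanged while the drop shrinks, which is why time-block data are needed.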

4.3. Primary measures and statistical considerations

There are a number of important issues to consider when performing motor activity testing. Key measures that can be evaluated include locomotion (e.g., ambulatory counts, ambulatory time, ambulatory distance) and rearing (e.g., vertical counts, vertical time). Where a system reports photocell counts, these are usually treated as measures of ambulation (locomotion). Most activity measures are highly inter-correlated (e.g., ambulatory counts and ambulatory distance), and this should be considered in the data analysis. Some systems report total movement, which incorporates all forms of movement, including ambulation (horizontal), rearing (vertical) when measured, and small (localized) movements (e.g., grooming). This total movement measure should not be used by itself, because it could mask biologically meaningful alterations in specific motor measures. For instance, increases in small movements characteristic of stereotypy (i.e., increased grooming and small repetitive movements) are incompatible with locomotion, so summing all movements may indicate no motor change when small movements and locomotion have in fact changed in opposite directions. Note that some laboratories use similar terminology, such as “total ambulation for the test session,” to report cumulative ambulation over the entire test session. This total, or cumulative, value for one specific activity measure differs from the total movement described above, which sums all movement measures (e.g., ambulatory and fine movements).
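The masking problem with total movement can be shown with simple arithmetic. The group means below are hypothetical: ambulation decreases and small movements increase by equal absolute amounts, so total movement is unchanged.

```python
def percent_change(treated, control):
    return 100 * (treated - control) / control

# Illustrative group means (counts per session); not study data.
control = {"ambulatory": 400, "small_movements": 300}
treated = {"ambulatory": 250, "small_movements": 450}  # e.g., stereotypy

for measure in control:
    print(f"{measure}: {percent_change(treated[measure], control[measure]):+.1f}%")
print(f"total movement: {percent_change(sum(treated.values()), sum(control.values())):+.1f}%")
# ambulatory: -37.5%
# small_movements: +50.0%
# total movement: +0.0%
```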

In most cases, the generally accepted statistical approach for analyzing motor activity data is repeated-measures analysis of variance (RM-ANOVA), with treatment, sex, and test time block included as factors. Alternative approaches, such as trend analysis, multivariate analysis, and regression, may also be used, as long as they include an assessment of the time-block factor, which is critical for assessing habituation. Including data for male and female offspring in a single analysis, with sex as a factor, is preferred, because separate analyses of male and female data preclude any direct assessment of sex differences in response to treatment (i.e., the dose × sex interaction). When more than one pup/litter is tested, litter should also be included as a factor in the analysis. In addition, if one pup/litter is selected for testing (i.e., one male or one female per litter), combining both sexes in a single analysis may increase the power to detect treatment effects. It is also important to check the variability and distribution of the data to ensure that ANOVA is appropriately applied.
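For a balanced design, the sums of squares behind a repeated-measures (split-plot) ANOVA can be computed directly, as in the NumPy sketch below. For brevity it includes only dose (between animals) and time block (within animals); sex and litter, which should be included as described above, are omitted, no sphericity correction (e.g., Greenhouse–Geisser) is applied, and the simulated data are purely illustrative. p-values could be obtained from the F distribution (e.g., with `scipy.stats.f.sf`); in practice, a validated statistical package would be used.

```python
import numpy as np

def split_plot_anova(y):
    """Mixed (split-plot) ANOVA for a balanced design.

    y has shape (groups, animals_per_group, time_blocks). Returns
    ({effect: (F, df_num, df_den)}, {source: sum_of_squares}).
    """
    a, n, b = y.shape
    grand = y.mean()
    g = y.mean(axis=(1, 2))   # dose-group means, shape (a,)
    s = y.mean(axis=2)        # per-animal means, shape (a, n)
    t = y.mean(axis=(0, 1))   # time-block means, shape (b,)
    c = y.mean(axis=1)        # group x block cell means, shape (a, b)

    ss = {
        "dose": n * b * np.sum((g - grand) ** 2),
        "animal(dose)": b * np.sum((s - g[:, None]) ** 2),
        "block": a * n * np.sum((t - grand) ** 2),
        "dose x block": n * np.sum((c - g[:, None] - t[None, :] + grand) ** 2),
        "block x animal(dose)": np.sum(
            (y - s[:, :, None] - c[:, None, :] + g[:, None, None]) ** 2),
    }
    df = {
        "dose": a - 1,
        "animal(dose)": a * (n - 1),
        "block": b - 1,
        "dose x block": (a - 1) * (b - 1),
        "block x animal(dose)": a * (n - 1) * (b - 1),
    }
    ms = {k: ss[k] / df[k] for k in ss}
    err_between, err_within = "animal(dose)", "block x animal(dose)"
    results = {
        "dose": (ms["dose"] / ms[err_between], df["dose"], df[err_between]),
        "block": (ms["block"] / ms[err_within], df["block"], df[err_within]),
        "dose x block": (ms["dose x block"] / ms[err_within],
                         df["dose x block"], df[err_within]),
    }
    return results, ss

# Simulated data: controls habituate; the treated group has a flatter curve.
rng = np.random.default_rng(0)
blocks = np.arange(6)
means = np.stack([100 - 15 * blocks, 75 - 5 * blocks])  # shape (2, 6)
y = means[:, None, :] + rng.normal(0, 8, size=(2, 10, 6))
results, ss = split_plot_anova(y)
for effect, (F, d1, d2) in results.items():
    print(f"{effect}: F({d1}, {d2}) = {F:.1f}")
```

Here the two groups have the same mean session total, so the treatment effect appears mainly in the dose × block term, which is the term that addresses habituation.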

A number of statistical errors are commonly found in reports of DNT motor activity data. First, reporting only cumulative session data (sometimes referred to as total session data) precludes an evaluation of treatment effects on habituation. In addition, cumulative session results can be misleading when there are within-session interactions of treatment with time or sex. Second, as already mentioned, reporting results in which males and females are analyzed separately is problematic, because it precludes direct assessment of any interaction with sex. Third, some reports follow a significant sex × treatment interaction in the initial global ANOVA (i.e., both sexes combined) with completely independent, separate ANOVAs on male and female data. This is problematic, because the error terms used in these separate ANOVAs are not appropriate and can produce inaccurate p-values. Follow-up tests of sex × treatment interactions should be constructed within each sex using the original global model, so that subsequent analyses and contrasts are based on error terms from the initial global ANOVA. This approach also has greater power for each sex than completely separate, independent ANOVAs. Fourth, it is important to check data for extreme values, which can distort estimates of the group mean, inflate variability unnecessarily, and reduce the statistical power to detect treatment effects. If extreme values are encountered, comparing the results of analyses with and without them can help determine their impact and how robust the conclusions are. In addition, non-parametric statistics based on ranks can help reduce the impact of extreme values.
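The with-and-without comparison recommended for extreme values can be sketched with the Python standard library. Here extreme values are screened with Tukey’s 1.5 × IQR fences, which is our illustrative choice rather than a guideline requirement, and the group data are hypothetical. A rank-based test (e.g., Kruskal–Wallis, available as `scipy.stats.kruskal`) would be a natural next step for formal comparisons.

```python
import statistics

def iqr_flags(values, k=1.5):
    """Flag values outside Tukey fences (Q1 - k*IQR, Q3 + k*IQR)."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [v < lo or v > hi for v in values]

def with_and_without(values):
    """Compare summary statistics with and without flagged extreme values."""
    flags = iqr_flags(values)
    kept = [v for v, flagged in zip(values, flags) if not flagged]
    return {
        "n_flagged": sum(flags),
        "mean_all": statistics.mean(values),
        "mean_without_extremes": statistics.mean(kept),
        "median_all": statistics.median(values),
    }

group = [210, 190, 230, 205, 220, 198, 215, 640]  # hypothetical; one extreme animal
print(with_and_without(group))
```

The median is barely affected by the extreme animal, whereas the mean shifts markedly, which is the same property that makes rank-based methods robust.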

4.4. High variability in PND 13 motor activity data

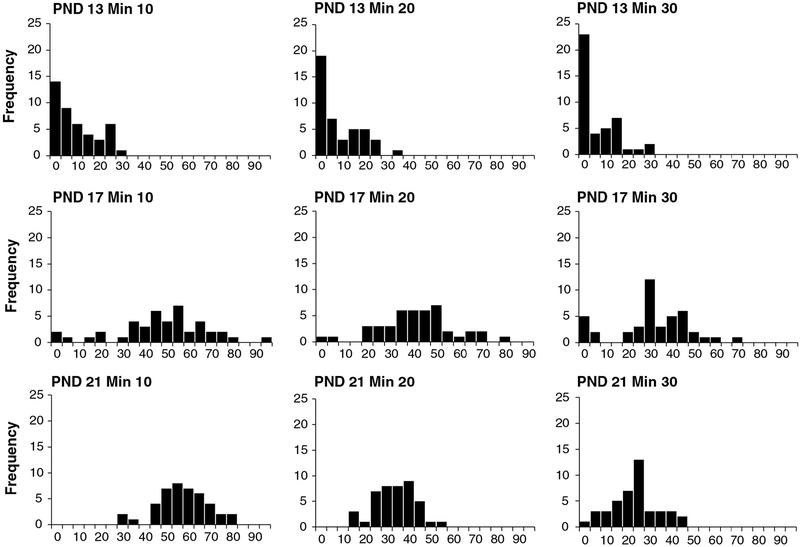

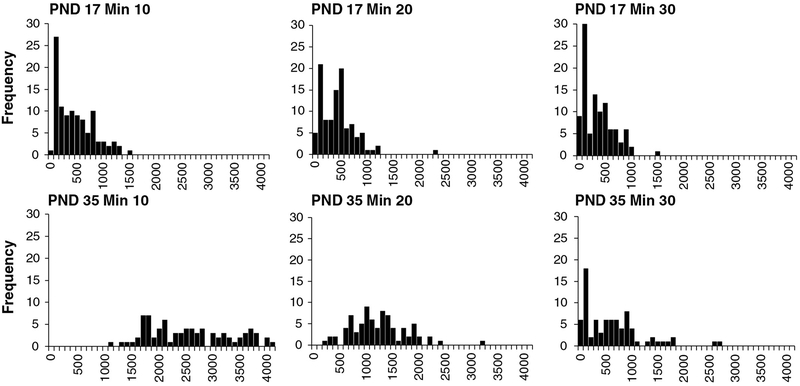

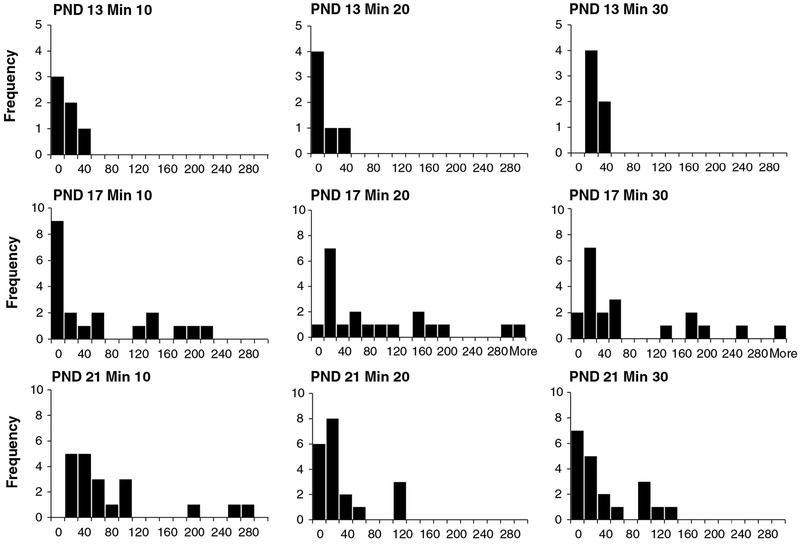

Many laboratories find that motor activity at PND 13 is highly variable. Assessments of the CV (a method of normalizing variability between data sets by expressing it relative to the group mean) indicate that PND 13 activity data typically have higher relative variability than activity data from older animals (Table 3). This higher variability at PND 13 reduces statistical power to detect treatment effects relative to older ages. However, it is not clear that the CV is an appropriate measure of relative variability at PND 13, because the CV is the group standard deviation divided by the group mean. Because the group mean is a reliable indicator of central tendency only when data are reasonably normally distributed, the CV is a reliable basis for comparing relative variability among data sets only when the data in all sets are normally distributed. As illustrated in Figs. 4–6, using control data from three independent laboratories, PND 13 activity data are unlikely to be normally distributed; rather, they are usually markedly skewed or bimodal. This is less likely to be the case for older animals. As a result, the CV is not a reliable means of comparing relative variability between PND 13 and older animals. Indeed, Table 3 shows that the raw standard deviations at PND 13 are numerically lower than those at other ages. Although the CV appears higher at PND 13, this is likely due to the highly skewed PND 13 data rather than an actual increase in relative variability. Thus, the question remains whether PND 13 data are actually more variable or simply have different properties from activity data of older animals. In addition, these distributional issues have implications for the statistical procedures used to analyze PND 13 data, as well as for any direct comparisons between PND 13 activity data and those of older animals.
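The effect of a pile-up of zero counts on the CV can be illustrated with two hypothetical samples: one resembling PND 13 data, with many animals showing no detected movement, and one resembling older animals. The values are invented for illustration; they are not taken from Table 3 or Figs. 4–6.

```python
import statistics

def cv_percent(values):
    return 100 * statistics.stdev(values) / statistics.mean(values)

def skewness(values):
    """Moment-based sample skewness (population moments)."""
    m = statistics.fmean(values)
    n = len(values)
    m2 = sum((v - m) ** 2 for v in values) / n
    m3 = sum((v - m) ** 3 for v in values) / n
    return m3 / m2 ** 1.5

# Invented values: many PND 13-like pups with 0 counts, plus a long right tail.
pnd13_like = [0] * 12 + [5, 10, 15, 25, 35, 50, 70, 90]
adult_like = [180, 210, 230, 250, 260, 270, 290, 300, 320, 340,
              200, 240, 255, 265, 280, 310, 225, 245, 275, 295]

for name, data in (("PND 13-like", pnd13_like), ("adult-like", adult_like)):
    print(f"{name}: SD = {statistics.stdev(data):.1f}, "
          f"CV = {cv_percent(data):.0f}%, skewness = {skewness(data):.2f}")
```

With these values, the PND 13-like sample has the smaller SD but a CV well above 100% and a strongly positive skewness, whereas the adult-like sample has a CV of roughly 16% and a skewness close to zero.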

Fig. 4.

Frequency histograms for PND 13 (top), PND 17 (middle), and PND 21 (bottom) motor activity data of control animals from the laboratory of Dr. Moser. Data were collected in the figure-8 chamber and include males and females combined. Charts within each age show data for successive 10-min time blocks, for a total of 30 min of data. The X-axis indicates the number of photocell counts, and the Y-axis indicates the frequency of counts (number of animals). At PND 13, a high proportion of animals show little or no detected movement (frequency of 0 counts), especially after the first time block, and the data set is highly skewed. In contrast, at PND 17 and 21, few animals show no movement, and the data are approximately normally distributed (n’s = 43).

Fig. 6.

Frequency histograms for PND 17 (top, n = 104) and PND 35 (bottom, n = 80) motor activity data of control animals (males and females combined) from Dr. Bowers’ laboratory. Data were collected using a Med Associates activity system, and values are ambulatory counts from control animals pooled across multiple studies. Charts within each age show data for successive 10-min time blocks, for a total of 30 min of data. The X-axis indicates the number of ambulatory counts, and the Y-axis indicates the frequency of counts (number of animals). At PND 17, the data distribution is skewed at 10 min but approaches a normal distribution at later time points. At PND 35, there is a low frequency of 0 counts, and the data are normally distributed in most time blocks. The increased proportion of animals exhibiting little movement (increased proportion of 0 counts) at 30 min reflects the onset of the expected reduction in activity associated with habituation at this age.

For PND 13 activity data, there are a number of biological reasons to expect a non-normal distribution. First, PND 13 animals do not have a fully developed neuromotor system and normally exhibit a different type of ambulation from that of older animals (Altman and Sudarshan, 1975; Bolles and Woods, 1987; Vinay et al., 2002; Vinay et al., 2000; Clarac et al., 2004). Because the neuromotor system is developing rapidly at this age, some PND 13 animals may have more advanced neuromotor function than others, resulting in subsets of animals with different motor capacities. Based on neuromotor development, a high proportion of PND 13 animals would be expected to show little locomotor (horizontal) or rearing (vertical) activity. Indeed, motor activity at PND 13 may largely reflect the proportion of animals that have reached the stage at which ambulatory capacity has developed (Altman and Sudarshan, 1975). Such differences in biological development will likely affect the data distribution at PND 13. Second, PND 13 rats normally have not completed eye opening (which usually occurs around PND 14–16, depending on the strain). As a result, the visual information available to PND 13 rats during motor activity testing differs from that available to older animals. Although the specific role of visual information in the motor activity of pre-weaning rats has not been clearly identified, and albino rats generally have poor visual acuity, the overall sensory environment clearly differs between PND 13 rats and older rats because of their developmental stage. As with neuromotor capacity, some animals may have achieved eye opening by the PND 13 test, so the activity data distribution at PND 13 may reflect subsets of animals at different stages of visual system development.

The impact of developmental age on DNT motor activity data is readily seen in pre-weaning rats. Fig. 4 shows motor activity data in 10-min time blocks for PND 13 (top), PND 17 (middle), and PND 21 (bottom) rats tested in a figure-8 chamber with photocells. While data for PND 17 animals are reasonably normally distributed in these time blocks, data for PND 13 rats are not normally distributed: they are positively (right) skewed, with a high proportion of zero values. This reflects a large proportion of PND 13 animals exhibiting little or no movement. The same pattern for PND 13 animals is evident in data from another laboratory using different activity equipment (Fig. 5 top), with many animals exhibiting no movement. However, the PND 17 data from that laboratory (Fig. 5 middle) also show a large proportion of animals with low activity levels. This non-normal pattern at PND 17 is probably related to equipment sensitivity, because similar results are evident from another laboratory using similar equipment (Fig. 6 top). While a full analysis of the role of test equipment is beyond the scope of this paper, differences in the software and photocell density used to derive activity counts may account for these results. Finally, data from PND 35 animals (Fig. 6 bottom) show the expected normal distribution at this age. Overall, these data indicate that pre-weaning activity data, especially PND 13 data, have a distribution different from that of post-weaning animals, consistent with the idea that developmental stage constrains the nature of pre-weaning motor activity. The analysis of pre-weaning data (especially PND 13 data) must therefore account for these distributional properties.
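Before an analysis is chosen, each time block can be screened for the features described above. The sketch below is a minimal illustration, assuming the ambulatory counts for one age and one 10-min time block are available as a list; the function name `describe_time_block` is hypothetical. It reports the proportion of zero counts and the sample skewness (adjusted Fisher-Pearson coefficient), where a large share of zeros together with positive skewness corresponds to the PND 13 pattern.

```python
import numpy as np


def describe_time_block(counts):
    """Summarize ambulatory counts for one age and one 10-min time block.

    Returns the number of animals, the proportion of zero counts, and the
    sample skewness (adjusted Fisher-Pearson coefficient, G1).
    """
    x = np.asarray(counts, dtype=float)
    n = x.size
    if n < 3:
        raise ValueError("need at least 3 animals to estimate skewness")
    prop_zero = float(np.mean(x == 0))

    # Central moments about the sample mean.
    d = x - x.mean()
    m2 = np.mean(d ** 2)
    m3 = np.mean(d ** 3)
    if m2 == 0:
        skew = 0.0  # all animals had identical counts
    else:
        g1 = m3 / m2 ** 1.5
        skew = float(g1 * np.sqrt(n * (n - 1)) / (n - 2))
    return {"n": n, "prop_zero": prop_zero, "skewness": skew}


# Hypothetical counts: many immobile pups plus a long right tail.
summary = describe_time_block([0, 0, 0, 0, 5, 40, 120])
```

A formal normality test (for example `scipy.stats.shapiro`) could be added, but with the small group sizes typical of pre-weaning tests such tests have little power, so inspecting histograms like those in Figs. 4–6 remains important.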

Fig. 5.

Frequency histograms for PND 13 (top, n = 6), PND 17 (middle, n = 20) and PND 21 (bottom, n = 20) motor activity data from Charles River Laboratories. Data were collected using a Coulbourn Instruments Tru Scan system, and values are ambulatory counts from combined male and female control animals. Each chart within each age contains data for one 10-min time block, for a total of 30 min of data. The X-axis indicates the ambulatory counts and the Y-axis indicates the frequency of counts (number of animals). For PND 13 animals, there is a high proportion of animals with no movement (0 counts), and the data are highly skewed. Unlike the figure-8 chamber data, the PND 17 activity data are also highly skewed, with a high proportion of animals showing little movement.

There is another consequence of the differences in developmental stage between PND 13 rats and older rats that was not considered in the previous section. Because of differences in neuromotor capacity, the activity measures collected may not be biologically comparable between PND 13 and older animals. Comparisons between activity measures at PND 13 and those gathered at older ages may therefore be comparing different motor functions, despite employing the same measurements, instruments, and procedures. By analogy, this resembles assessing crawling in infants and walking in children by measuring distance moved: while both yield a measure of distance or time in movement, they are fundamentally different behaviors. This raises the question of whether it is appropriate to compare PND 13 activity data directly with activity data from older animals.

Although direct comparison of activity data from PND 13 and older animals may be problematic, because of differences in developmental stage and capacity as well as in data distribution properties, PND 13 data may nevertheless provide useful information on motor system ontogeny. Indeed, the differences in biological capacity between PND 13 and older animals reflect features of motor ontogeny that are integral to nervous system development (Altman and Sudarshan, 1975; Bolles and Woods, 1987; Vinay et al., 2002; Vinay et al., 2000; Clarac et al., 2004). That PND 13 motor activity levels and patterns (including the statistical properties of the data) differ from those at later ages is consistent with the age-dependent development of motor function. Although there is a basis for expecting PND 13 activity to provide a measure of motor system development, further work is needed to determine the extent to which PND 13 data alone are sensitive in detecting biologically relevant effects on the ontogeny of motor function. This example makes clear, however, that data should be inspected carefully before any statistical approach is applied, including approaches that are generally well accepted for these data (e.g., RM-ANOVA; Ruppert et al., 1985; Holson et al., 2008). For PND 13 data specifically, preliminary examination of a limited data set from three different laboratories suggests that other statistical approaches, such as mixture models (Boos and Brownie, 1991; He et al., 2014), may prove useful in characterizing alterations in the ontogeny of motor development.
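As one illustration of the mixture idea, the sketch below fits a zero-inflated Poisson model by expectation-maximization (EM): a point mass at zero (animals not yet ambulating) mixed with a Poisson distribution of counts (ambulating animals). This is an assumed, simplified formulation chosen for illustration, not necessarily the model used in the cited work; real ambulatory counts are often overdispersed, in which case a zero-inflated negative binomial would be more appropriate. The function name `fit_zero_inflated_poisson` is hypothetical.

```python
import numpy as np


def fit_zero_inflated_poisson(counts, n_iter=500, tol=1e-10):
    """Fit a zero-inflated Poisson mixture by EM.

    Returns (pi, lam): pi is the estimated proportion of animals in the
    zero ("non-ambulating") component; lam is the mean count of the
    Poisson ("ambulating") component.
    """
    y = np.asarray(counts, dtype=float)
    if np.any(y < 0):
        raise ValueError("counts must be non-negative")
    is_zero = y == 0
    if not np.any(~is_zero):
        return 1.0, 0.0  # degenerate case: no animal moved

    # Starting values: observed zero fraction and mean of positive counts.
    pi = min(max(is_zero.mean(), 0.01), 0.99)
    lam = y[~is_zero].mean()
    for _ in range(n_iter):
        # E-step: posterior probability that each observed zero is a structural zero.
        p0 = pi / (pi + (1.0 - pi) * np.exp(-lam))
        z = np.where(is_zero, p0, 0.0)
        # M-step: update the mixing proportion and the Poisson mean.
        new_pi = z.mean()
        new_lam = np.sum((1.0 - z) * y) / np.sum(1.0 - z)
        converged = abs(new_pi - pi) < tol and abs(new_lam - lam) < tol
        pi, lam = new_pi, new_lam
        if converged:
            break
    return pi, lam
```

Fitting the model separately to each dose group yields two interpretable quantities, the proportion of non-ambulating animals (`pi`) and the mean activity of ambulating animals (`lam`), which could then be compared between groups, for example with a likelihood-ratio test (not shown).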

5. Optimizing experimental design and statistical analysis: auditory startle response (ASR) as a case study (Kathleen Raffaele, Thomas Vidmar, Edmund Lau, Abby Li)

DNT studies conducted for regulatory purposes incorporate multiple endpoints that vary in the specific dependent variables measured, as well as the metrics used to evaluate results. Regulatory guidelines provide flexibility in study design, placing responsibility on the individual investigator to precisely define the hypothesis being tested and design appropriate analyses. Available equipment can record multiple dependent variables, not all of which convey the most pertinent biological information for the purposes of the test guideline. In order to maximize the useful information obtained from each study, the experimental design and subsequent analysis of data should be optimized to test the key hypothesis of interest based on the test guidelines.

Only a brief overview and summary points are included in this manuscript. Although general guidance for statistical analysis of DNT studies is available (Holson et al., 2008), recommendations on the practical implementation of statistical approaches to DNT data sets have been limited. Differences in guideline specifications suggest that substantially different biological hypotheses can be applied within the general guidance provided. The ASR habituation test, as described above by Dr. Sheets, has been used here as a case study to illustrate specific issues regarding DNT study design and behavioral data analysis.

The US EPA DNT guideline (US EPA, 1998a) and the OECD EOGRTS (OECD, 2012a) specify that the ASR test should be designed to evaluate habituation in 10 males and 10 females drawn from 20 litters/dose group, so that all 20 litters in each dose group are represented (i.e., 1 male or 1 female from each litter; total of 80 animals). In contrast, the OECD DNT guideline (OECD, 2007) states only that some test of sensory and motor function should be conducted at two ages in 1 male and 1 female/litter (i.e., 20 males and 20 females/dose group; total of 160 animals); ASR habituation is one of several tests that can be used (Table 2). If ASR habituation is being tested, then, as described above by Dr. Sheets, the inter-trial interval should be constant so that the control animals will habituate.
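The allocation arithmetic above can be sketched as follows, assuming four dose groups (a control and three dose levels, which is what a total of 80 animals at 20 litters/group implies) and that every litter contributes at least one pup of each sex. The function `allocate_asr_pups` and the litter IDs are hypothetical: the function selects one pup per litter and alternates sex over a randomly shuffled litter order, giving 10 males and 10 females per group of 20 litters.

```python
import random

DOSE_GROUPS = 4          # assumed: control + 3 dose levels
LITTERS_PER_GROUP = 20

# Animals tested under each design (one pup vs. one pup of each sex per litter).
epa_eogrts_total = DOSE_GROUPS * LITTERS_PER_GROUP * 1   # 80
oecd_dnt_total = DOSE_GROUPS * LITTERS_PER_GROUP * 2     # 160


def allocate_asr_pups(litter_ids, seed=None):
    """Pick one pup per litter for ASR testing, alternating sex.

    Litters are shuffled first so that sex assignment is random with
    respect to litter identity. With 20 litters this yields 10 males
    and 10 females, and every litter is represented exactly once.
    """
    rng = random.Random(seed)
    litters = list(litter_ids)
    rng.shuffle(litters)
    return {litter: ("M" if i % 2 == 0 else "F") for i, litter in enumerate(litters)}


# Example: one allocation per dose group (litter IDs are illustrative only).
allocation = {
    dose: allocate_asr_pups(
        [f"D{dose}-L{k:02d}" for k in range(1, LITTERS_PER_GROUP + 1)], seed=dose
    )
    for dose in range(DOSE_GROUPS)
}
```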

Available ASR testing equipment can record data on many dependent variables, but two or three of these will capture most of the biologically relevant information within the context of a regulatory DNT study. We identified three variables of primary interest for this evaluation: mean peak amplitude for each of 5 blocks of 10 trials, change in peak amplitude across blocks of trials (habituation), and latency to peak amplitude. Average peak amplitude across all 50 trials is generally not of primary interest, because it is confounded by the habituation occurring across blocks (and therefore also has greater variability). By contrast, latency to peak amplitude is not expected to change across blocks, and is therefore also useful for verifying that the peak amplitude reflects the startle response rather than some other movement. For example, if the average latency to peak is longer than 35–40 ms, the trace recordings for individual animals should be checked to determine whether the peak amplitude selected by the computer system corresponds to the actual startle response or to some other movement.
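The per-animal summaries described above can be computed from trial-level output as sketched below. This assumes the equipment exports one peak amplitude and one latency (in ms) per trial for 50 trials; `summarize_asr_session` and its parameter names are hypothetical. Habituation is summarized here as the least-squares slope of block means across blocks, which is one reasonable choice among several (a first-to-last block difference would also work). The 40 ms default for flagging latency sits at the upper end of the 35–40 ms range given above and should be set by the laboratory.

```python
import numpy as np


def summarize_asr_session(peak_amplitude, latency_ms, block_size=10, latency_flag_ms=40.0):
    """Summarize one animal's ASR session.

    peak_amplitude, latency_ms: per-trial values in presentation order.
    Returns block means for amplitude and latency, a habituation slope,
    and a flag indicating that trace recordings should be reviewed.
    """
    amp = np.asarray(peak_amplitude, dtype=float)
    lat = np.asarray(latency_ms, dtype=float)
    if amp.shape != lat.shape or amp.ndim != 1 or amp.size % block_size != 0:
        raise ValueError("expect equal-length 1-D trial arrays divisible into blocks")
    n_blocks = amp.size // block_size

    # Mean peak amplitude and mean latency for each block of trials.
    amp_blocks = amp.reshape(n_blocks, block_size).mean(axis=1)
    lat_blocks = lat.reshape(n_blocks, block_size).mean(axis=1)

    # Habituation: slope of block means vs. block index (negative = decline).
    slope, _intercept = np.polyfit(np.arange(n_blocks), amp_blocks, 1)

    return {
        "amplitude_by_block": amp_blocks,
        "latency_by_block": lat_blocks,
        "habituation_slope": slope,
        # A long average latency suggests the selected peak may not be the startle response.
        "review_traces": bool(lat.mean() > latency_flag_ms),
    }
```

Because latency is not expected to change across blocks, a marked trend in `latency_by_block` would be another reason to review the traces.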

From a regulatory perspective, the biological question of interest is whether or not a treatment-related change occurs in the endpoints being measured. Although ASR habituation is a key endpoint of interest, treatment-related changes in ASR habituation are not always formally evaluated in submitted study reports, which generally evaluate only the absolute change in mean peak amplitude for each individual block of trials, and the average across all trials. Statistical analysis should thus be designed to evaluate treatment-related changes in all relevant endpoints, including the pattern of ASR habituation.

For behavioral data such as ASR habituation, in which each animal is tested repeatedly, the RM-ANOVA has many advantages over multiple one-way ANOVAs (a commonly reported statistical analysis): the effect of a given test article dose on all relevant factors can be evaluated in a single analysis and, in particular, the change in ASR across trial blocks (habituation) is tested directly. Separate one-way ANOVAs for each trial block do not directly test the effect of the test chemical on the pattern of habituation. Mixed-model approaches can accommodate different covariance structures, so that homogeneity of variance and constant correlation among repeated measures are no longer required (Holson et al., 2008). The RM-ANOVA for ASR habituation should always include trial block and treatment group as factors; the power of the analysis can be increased further by including sex and different time points (i.e., ages) as factors within the same analysis. As described in greater detail by Dr. Bowers, follow-up tests of sex by treatment interactions should be constructed within each sex so that subsequent analysis and contrast procedures are based on error terms from the initial global ANOVA. When both males and females from each litter are included in the test group, litter also needs to be included as a factor in the analysis. Given the additional issues regarding combining data across age groups (see below, and also the discussions by Drs. Sheets and Bowers), it may be better to analyze the data for each age group separately, limiting the number of factors to no more than three.
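To make the contrast with per-block one-way ANOVAs concrete, the sketch below computes a classical split-plot (repeated-measures) ANOVA with dose group as the between-animal factor and trial block as the within-animal factor; the group × block interaction is the direct test of altered habituation. It is a minimal illustration under stated assumptions (complete data, sphericity, and no sex, age, or litter factors), and the function name `split_plot_anova` is hypothetical. In practice, a validated statistics package, or a mixed model with an appropriate covariance structure, would be used.

```python
import numpy as np


def split_plot_anova(y, group):
    """Repeated-measures ANOVA: one between factor (group), one within factor (block).

    y     : array of shape (n_animals, n_blocks), e.g. mean peak amplitude per block
    group : length-n_animals sequence of dose-group labels
    Returns {effect: (F, df_numerator, df_denominator)}.
    Assumes every animal has every block and that sphericity holds.
    """
    y = np.asarray(y, dtype=float)
    group = np.asarray(group)
    n, b = y.shape
    labels = np.unique(group)
    g = labels.size
    gm = y.mean()

    ss_total = np.sum((y - gm) ** 2)
    ss_subjects = b * np.sum((y.mean(axis=1) - gm) ** 2)   # between-animal variation
    ss_block = n * np.sum((y.mean(axis=0) - gm) ** 2)      # trial-block main effect

    ss_group = 0.0
    ss_cells = 0.0
    for lab in labels:
        rows = y[group == lab]
        n_j = rows.shape[0]
        ss_group += b * n_j * (rows.mean() - gm) ** 2
        ss_cells += n_j * np.sum((rows.mean(axis=0) - gm) ** 2)

    ss_subj_within_group = ss_subjects - ss_group          # error term for group
    ss_interaction = ss_cells - ss_group - ss_block        # group x block
    ss_within_error = ss_total - ss_subjects - ss_block - ss_interaction

    df_group, df_subj = g - 1, n - g
    df_block = b - 1
    df_inter = (g - 1) * (b - 1)
    df_err = (n - g) * (b - 1)

    ms_subj = ss_subj_within_group / df_subj
    ms_err = ss_within_error / df_err
    return {
        "group": (ss_group / df_group / ms_subj, df_group, df_subj),
        "block": (ss_block / df_block / ms_err, df_block, df_err),
        "group_x_block": (ss_interaction / df_inter / ms_err, df_inter, df_err),
    }
```

p-values can be obtained from the F distribution, for example with `scipy.stats.f.sf(F, df1, df2)`. Adding sex, age, or litter terms, or relaxing sphericity, is where a mixed-model package becomes preferable to hand-written sums of squares.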

Interactions between dose and other factors are of primary interest in understanding the relationship between treatment and the measured effects, and different decision trees for selecting a suitable statistical approach are available. Combining different factors into a single global analysis requires the investigator to ensure that the equipment settings and experimental design support pooling all the data (Holson et al., 2008). For example, if equipment or equipment settings are varied according to the age and/or size of the animal, it may not be appropriate to combine the data into one analysis; the data for each test day (or size class) will then need to be analyzed separately. Similarly, if males and females are tested on different days or at different times of day, the interpretation of a sex effect or a dose by sex interaction is inextricably confounded by the divergent testing conditions (Maurissen, 2010). The most practical solution is to counterbalance the time of testing across dose levels and sexes.
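Counterbalancing can be planned before testing begins. The sketch below, using the hypothetical function `counterbalanced_schedule`, assumes equal numbers of animals in every dose × sex stratum (e.g., 10 males and 10 females per dose group under the US EPA/EOGRTS design) and builds testing sessions that each contain one animal from every stratum, rotating the stratum order between sessions so that no dose or sex is always tested first. How sessions map onto days or times of day is left to the laboratory.

```python
import random
from itertools import product


def counterbalanced_schedule(animals, doses, sexes=("M", "F"), seed=None):
    """Build counterbalanced testing sessions.

    animals: iterable of (animal_id, dose, sex) tuples.
    Returns a list of sessions; each session is a list of animal IDs
    containing exactly one animal from every dose x sex stratum.
    """
    rng = random.Random(seed)
    strata = list(product(doses, sexes))
    pools = {s: [] for s in strata}
    for animal_id, dose, sex in animals:
        pools[(dose, sex)].append(animal_id)
    sizes = {len(p) for p in pools.values()}
    if len(sizes) != 1:
        raise ValueError("all dose x sex strata must contain the same number of animals")
    for pool in pools.values():
        rng.shuffle(pool)  # random testing order within each stratum

    sessions = []
    for r in range(sizes.pop()):
        shift = r % len(strata)
        order = strata[shift:] + strata[:shift]  # rotate which stratum is tested first
        sessions.append([pools[s][r] for s in order])
    return sessions
```

Assigning successive sessions alternately to morning and afternoon slots would then spread time-of-day effects evenly across doses and sexes.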

In summary, the statistical analysis should be guided by the primary biological hypothesis being tested and supported by an appropriate experimental design. For ASR habituation, the primary biological hypothesis is the presence of treatment-related changes in startle amplitude, latency, and habituation. It is crucial to examine the raw data in order to select valid approaches for evaluating them. The RM-ANOVA has many advantages over other analyses commonly used in DNT studies, including increased statistical power, the ability to evaluate changes in response over time, and the ability to consider multiple factors in a single analysis. However, features of the equipment, the experimental design, and the properties of the resulting data must all be considered, because they determine which analyses can appropriately be performed.

6. How reported data and methods affect evaluation: learning and memory as case studies (Virginia Moser and Angela Hofstra)

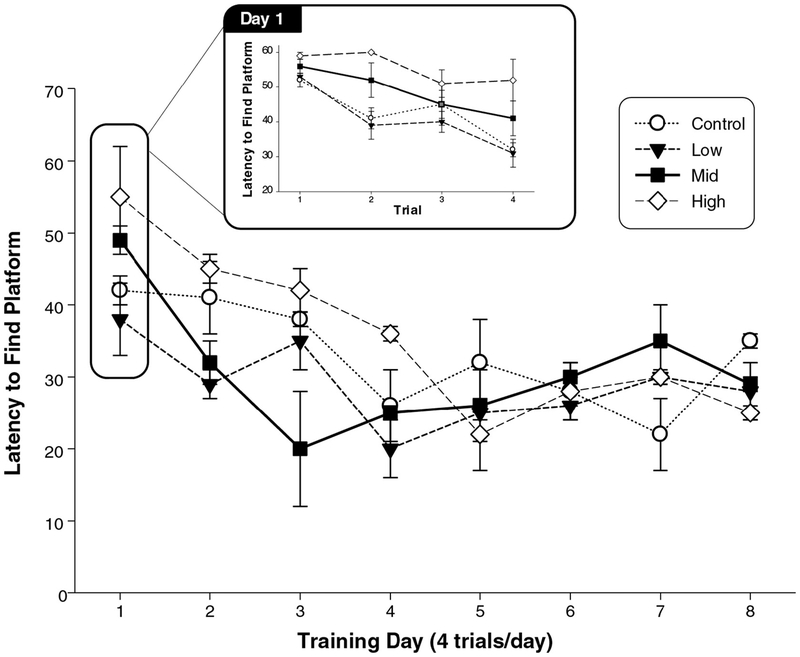

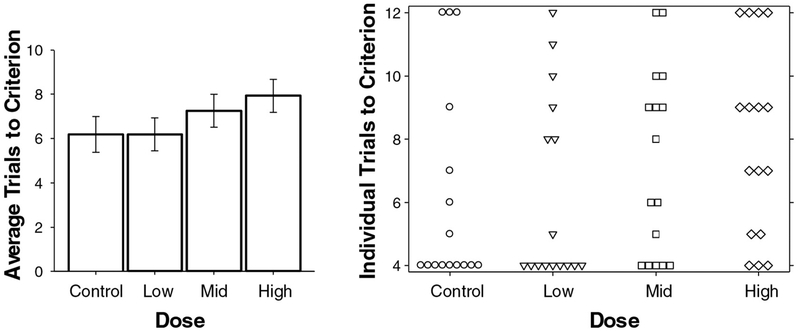

This presentation focused on tests of learning and memory used in laboratory animals in the context of DNT regulatory studies. Background on the testing and neurobiology of cognition was provided, along with considerations for test selection. Two case studies, using data from the Morris water maze (Morris, 1984) and a water M-maze, illustrated new approaches to examining detailed data to inform the interpretation of DNT data sets.