Abstract

Here we address the current issues of inefficiency and over-penalization in the massively univariate approach followed by the correction for multiple testing, and propose a more efficient model that pools and shares information among brain regions. Using Bayesian multilevel (BML) modeling, we control two types of error that are more relevant than the conventional false positive rate (FPR): incorrect sign (type S) and incorrect magnitude (type M). BML also aims to achieve two goals: 1) improving modeling efficiency by having one integrative model and thereby dissolving the multiple testing issue, and 2) turning the focus of conventional null hypothesis significance testing (NHST) on FPR into quality control by calibrating type S errors while maintaining a reasonable level of inference efficiency. The performance and validity of this approach are demonstrated through an application at the region of interest (ROI) level, with all the regions on an equal footing: unlike the current approaches under NHST, small regions are not disadvantaged simply because of their physical size. In addition, compared to the massively univariate approach, BML may simultaneously achieve increased spatial specificity and inference efficiency, and promote results reporting in totality and transparency. The benefits of BML are illustrated in performance and quality checking using an experimental dataset. The methodology also avoids the current practice of sharp and arbitrary thresholding in the p-value funnel to which the multidimensional data are reduced. The BML approach with its auxiliary tools is available as part of the AFNI suite for general use.

Introduction

The typical neuroimaging data analysis at the whole brain level starts with a preprocessing pipeline, and then the preprocessed data are fed into a voxel-wise time series regression model for each subject. An effect estimate is then obtained at each voxel as a regression coefficient that is, for example, associated with a task/condition or a contrast between two effects or a linear combination among multiple effects. Such effect estimates from individual subjects are next incorporated into a population model for generalization, which can be parametric (e.g., Student’s t-test, AN(C)OVA, univariate (Poline and Brett, 2012) or multivariate GLM (Chen et al., 2014), linear mixed-effects (LME) (Chen et al., 2013)) or nonparametric (e.g., permutations (Nichols and Holmes, 2001; Smith and Nichols, 2009), bootstrapping, rank-based testing). In either case, this generally involves one or more statistical tests at each spatial element separately.

Issues with controlling false positives

As in many scientific fields, the typical neuroimaging analysis has traditionally been conducted under the framework of null hypothesis significance testing (NHST). As a consequence, a big challenge when presenting the population results is to properly handle the multiplicity issue resulting from the tens of thousands of simultaneous inferences, but this undertaking is met with various subtleties and pitfalls due to the complexities involved: the number of voxels in the brain (or a restricting mask) or the number of nodes on the surface, spatial heterogeneity, violation of distributional assumptions, etc. The focus of the present work will be on developing an efficient approach from a Bayesian perspective to address the multiplicity issue as well as some of the pitfalls associated with NHST (Appendix A). We first describe the multiplicity issue and how it directly results from the NHST paradigm and inefficient modeling, and then translate many of the standard analysis features to the proposed Bayesian framework.

Following the conventional statistical procedure, the assessment for a BOLD effect is put forward through a null hypothesis H0 as the devil’s advocate; for example, an H0 can be formulated as having no activation at a brain region under, for example, the easy condition, or as having no activation difference between the easy and difficult conditions. It is under such a null setting that statistics such as Student’s t- or F-statistic are constructed, so that a standard distribution can be utilized to compute a conditional probability that is the chance of obtaining a result equal to, or more extreme than, the current outcome if H0 is imagined as the ground truth. The rationale is that if this conditional probability is small enough, one may feel comfortable in rejecting the straw man H0 and in accepting the alternative at a tolerable risk.

While NHST may be a reasonable formulation under some scenarios, there is a long history of arguments that emphasize the mechanical and interpretational problems with NHST (e.g., Cohen, 2014; Gelman, 2016) that might have perpetuated the reproducibility crisis across many disciplines (Loken and Gelman, 2017). Within neuroimaging specifically, there are strong indications that a large portion of task-related BOLD activations are usually unidentified at the individual subject level due to the lack of power (Gonzalez-Castillo et al., 2012). The detection failure, or false negative rate, at the population level would probably be at least as large. Therefore, it is likely far-fetched to claim that no activation or no activation difference exists anywhere in the whole brain, except for the regions of white matter and cerebrospinal fluid. In other words, the global null hypothesis in neuroimaging studies is virtually never true. The situation with resting-state data analysis is likely worse than with task-related data, as the same level of noise is more impactful on seed-based correlation analysis due to the lack of objective reference effect. Since no ground truth is readily available, dichotomous inferences under NHST as to whether an effect exists in a brain region are intrinsically problematic, and it is practically implausible to truly believe the validity of H0 as a building block when constructing a model. Furthermore, the dichotomous filtering under NHST paints a biased picture in the literature and leads to suboptimal meta analyses that are already compromised without the incorporation or availability of effect reporting; for instance, conjunction analysis in neuroimaging is such an artificial dichotomy of overlapping brain regions under two or more conditions based on arbitrary thresholding.

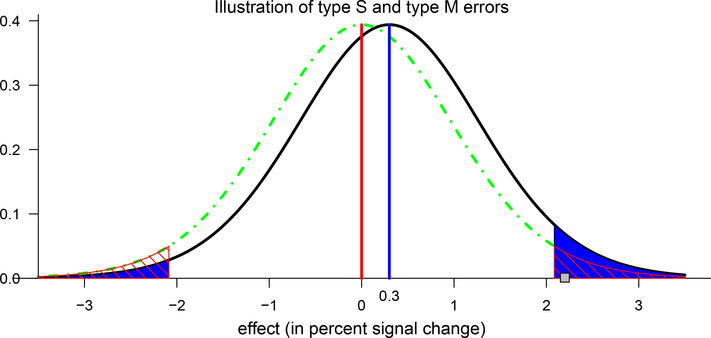

Achieving statistical significance has been widely used as the standard screening criterion in scientific results reporting as well as in the publication reviewing process. The difficulty in passing a commonly accepted threshold with noisy data may elicit a hidden misconception: A statistical result that survives the strict screening with a small sample size seems to gain an extra layer of strong evidence, as evidenced by phrases in the literature such as “despite the small sample size” or “despite limited statistical power.” However, when the statistical power is low, the inference risks can be perilous, as demonstrated by two types of error (illustrated in Appendix B) that differ from the conventional type I and type II errors: incorrect sign (type S) and incorrect magnitude (type M). The conventional concept of FPR controllability is not a well-balanced choice under all circumstances or combinations of effect and noise magnitudes. We consider a type S error to be more severe than a type M error, and thus we aim to control the former while at the same time reducing the latter as much as possible, parallel to the similarly lopsided strategy of strictly controlling type I errors at a tolerable level under NHST while minimizing type II errors.
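To make the two error types concrete, the following minimal sketch simulates many replications of a low-powered study and summarizes, among the results that reach significance, how often the sign is wrong (type S) and by how much the magnitude is exaggerated on average (type M). The function name `type_s_m`, the use of a z-test with a known standard deviation, and the numerical settings are illustrative assumptions, not the procedure of Appendix B.

```python
import random
import statistics


def type_s_m(true_effect, sd, n, z_crit=1.96, n_sim=20000, seed=1):
    """Simulate replicated studies and summarize power, type S and type M.

    Each replication draws a sample mean from N(true_effect, sd^2 / n) and is
    declared significant when |mean| / se exceeds z_crit (a two-sided z-test
    with known sd, chosen here only for simplicity).
    """
    rng = random.Random(seed)
    se = sd / n ** 0.5
    significant = []
    for _ in range(n_sim):
        estimate = rng.gauss(true_effect, se)
        if abs(estimate) / se > z_crit:
            significant.append(estimate)
    power = len(significant) / n_sim
    # Type S: share of significant estimates whose sign is opposite to the truth.
    type_s = sum(1 for e in significant if e * true_effect < 0) / len(significant)
    # Type M: average magnitude of significant estimates relative to the truth.
    type_m = statistics.mean(abs(e) for e in significant) / abs(true_effect)
    return power, type_s, type_m


power, type_s, type_m = type_s_m(true_effect=0.1, sd=1.0, n=20)
print(f"power={power:.3f}, type S rate={type_s:.3f}, type M ratio={type_m:.2f}")
```

In this setting the standard error is more than twice the true effect, so any estimate that passes the threshold must overstate the magnitude several-fold, and a non-negligible share of the surviving estimates point in the wrong direction.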

Issues with handling multiplicity

In statistics, multiplicity is more often referred to as the multiple comparisons or multiple testing problem when more than one statistical inference is made simultaneously. In neuroimaging, the multiplicity issue may sneak into data analysis through several channels (Appendix C), affecting expected FPRs in diverse ways. One widely recognized aspect of multiplicity, multiple testing, occurs when the investigator fits the same model for each voxel in the brain. However, multiplicity also occurs when the investigator conducts multiple comparisons within a model, tests two tails of a t-test separately when prior information is unavailable about the directionality, or branches in the analytic pipelines. The challenges of dealing with multiple testing at the voxel or node level have been recognized within the neuroimaging community almost as long as the history of FMRI. Substantial efforts have been devoted to ensuring that the actual type I error (or FPR) matches its nominal requested level under NHST. Due to the presence of spatial non-independence of noise, the classical approach to countering multiple testing through Bonferroni correction in general is highly conservative when applied to neuroimaging, so the typical correction efforts have been channeled into two main categories: 1) controlling for family-wise error (FWE), so that the overall FPR at the cluster or whole brain level is approximately at the nominal value, and 2) controlling for false discovery rate (FDR), which harnesses the expected proportion of identified items or discoveries that are incorrectly labeled (Benjamini and Hochberg, 1995). FDR can be used to handle a needle-in-haystack problem where a small number of effects exist among a sea of zero effects in, for example, bioinformatics. However, FDR is usually quite conservative for typical neuroimaging data and thus is not widely adopted. Therefore, we do not discuss it hereafter in the current context.
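The arithmetic behind the multiple testing concern can be sketched in a few lines. The example below assumes fully independent tests, which is exactly the assumption that spatially correlated neuroimaging data violate; the function names are hypothetical and chosen for illustration.

```python
def familywise_error(alpha, m):
    """FWE rate when m independent tests are each run at level alpha."""
    return 1.0 - (1.0 - alpha) ** m


def bonferroni_threshold(alpha, m):
    """Per-test level that keeps the FWE rate at or below alpha."""
    return alpha / m


for m in (1, 10, 100, 1000):
    per_test = bonferroni_threshold(0.05, m)
    print(m, round(familywise_error(0.05, m), 4), per_test,
          round(familywise_error(per_test, m), 4))
```

When the tests are positively correlated, the effective number of independent tests is smaller than m, so dividing by m overcorrects; this is the sense in which Bonferroni correction is conservative for spatially smooth data.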

Typical FWE correction methods for multiple testing include Monte Carlo simulations (Forman et al., 1995), random field theory (Worsley et al., 1992), and permutation testing (Nichols and Holmes, 2001; Smith and Nichols, 2009). Regardless of the specific FWE correction methodology, the common theme is to use the spatial extent, either explicitly or implicitly, as a pivotal factor. One recent study suggested that the nonparametric methods seem to have achieved a more uniformly accurate controllability for FWE than their parametric counterparts (Eklund et al., 2016), even though parametric methods may exhibit more flexibility in modeling capability (and some parametric methods can show reasonable FPR controllability; Cox et al., 2017). Because of this recent close examination (Eklund et al., 2016) of the practical difficulties of parametric approaches in controlling FWE, there is currently an implied rule of thumb (e.g., Yeung, 2018) that demands any parametric correction be based on a voxel-wise p-value threshold at 0.001 or less. Such a narrow modeling choice with a harsh cutoff could be highly limiting, depending on several parameters such as trial duration (event-related versus block design), and would definitely make small regions even more difficult to pass through the NHST filtering system. In other words, the leverage on spatial extent with a Procrustean criterion undoubtedly incurs collateral damage: small regions (e.g., amygdala) or subregions within a brain area are inherently placed in a disadvantageous position even if small regions have signal strength similar to that of larger ones; that is, to be able to surpass the same threshold bar, small regions would have to reach a much higher signal strength to survive a uniform criterion at the cluster threshold or whole brain level.

The concept of using contiguous spatial extent as a leveraging mechanism to control for multiplicity can be problematic from another perspective. For example, suppose that two anatomically separate regions are spatially distant and the statistical evidence (as well as signal strength) for each of their effects is not strong enough to pass the cluster correction threshold individually. However, if another two anatomically separate regions that have exactly the same statistical evidence (as well as signal strength) are adjacent, their spatial contiguity could elevate their combined volume to the survival of correction for FWE. Trade-offs are inherently involved in these final interpretations. One may argue that the sacrifice in statistical power under NHST is worth the cost in achieving the overall controllability of type I error, but it may be unnecessarily over-penalizing to stick to such an inflexible criterion rather than utilizing the neurological context or prior knowledge, as discussed below.

To summarize the debate surrounding cluster inferences, multiplicity is directly associated with the concept of false positives or type I errors under NHST, and the typical control for FWE at a preset threshold (e.g., 0.05, the implicitly accepted tolerance level in the field) is usually considered a safeguard for reproducibility. Imposing a threshold on cluster size (perhaps combined with signal strength) to protect against the overall FPR has the undesirable trade-off cost of inflating false negative rates or type II errors, which can greatly affect individual result interpretations as well as reproducibility across studies. The current practice of handling multiple testing through controlling the overall FPR in neuroimaging under the null hypothesis significance testing (NHST) paradigm excessively penalizes the statistical power with inflated type II errors. More fundamentally, the adoption of dichotomous decisions through sharp thresholding under NHST may not be appropriate when the null hypothesis itself is not pragmatically relevant because the effect of interest takes a continuum instead of discrete values and is not expected to be null in most brain regions. When the noise inundates the signal, two different types of error are more relevant than the concept of FPR: incorrect sign (type S) and incorrect magnitude (type M). In general, several multiplicity-related challenges in neuroimaging appear to be tied closely to the fundamental mechanisms of NHST approaches introduced to counterbalance between two counterfactual errors (type I and type II), which are the cornerstones of NHST. Therefore, we put forward a list of potential problems with NHST in Appendix A.

Structure of the work

In light of the aforementioned backdrop, we believe that the current modeling approach is inefficient. First, we question the appropriateness of the severe penalty currently levied on the voxel- or node-wise data analysis. Second, we endorse the ongoing statistical debate surrounding the ritualization of NHST and its dichotomous approach to results reporting and in the review process, and aim to raise the awareness of the issues embedded within NHST (Loken and Gelman, 2017) in the neuroimaging community. Third, with the intention of addressing some of the issues discussed above, we view multiple testing as a problem of inefficient modeling induced by the conventional massively univariate methodology. Specifically, the univariate approach starts, in the same vein as a null hypothesis setting, with a pretense of spatial independence, and proceeds with many isolated or segmented models. To avoid the severe penalty of Bonferroni correction while recovering from or compensating for the false presumption of spatial independence, the current practices deal with multiple testing by discounting the number of models due to spatial relatedness. However, the collateral damage incurred by this to-and-fro process is unavoidably the loss of modeling efficiency and the penalty for detection power under NHST.

Here, we propose a more efficient approach through BML that could be used to confirm, complement or replace the standard NHST method. As a first step, we adopt a group analysis strategy under the Bayesian framework through multilevel modeling on an ensemble of ROIs and use this to resolve two of the four multiplicity issues above: multiple testing and double sidedness (Appendix C). Those ROIs are determined independently from the current data at hand, and they can be selected through various methods such as previous studies, an anatomical or functional atlas, or parcellation of an independent dataset in a given study; the regions could be defined through masking, manual drawing, or balls about a center reported previously. The proposed BML approach dissolves multiple testing through a multilevel model that more accurately accounts for data structure as well as shared information, and it consequently improves inference efficiency. The modeling approach will be extended to other scenarios in our future work.

As a novel approach, BML here is applied to neuroimaging in dealing with multiplicity at the ROI level, with a potential extension to whole brain analysis in future work. We present this work in a purposefully (possibly overly) didactic style in the appendices, reflecting our own conceptual progression. Our goal is to convert the traditional voxel-wise GLM into an ROI-based BML through a step-wise progression of models (GLM → LME → BML). The paper is structured as follows. In the next section, we first formulate the population analysis at each ROI through univariate GLM (parallel to the typical voxel-wise population analysis), then turn multiple GLMs into one LME by pivoting the ROIs as the levels of a random-effects factor¹, and lastly convert the LME to a full BML. The BML framework does not make statistical inferences for each measuring entity (ROI in our context) in isolation. Instead, the BML weights and borrows the information based on the precision information across the full set of entities, striking a balance between data and prior knowledge; in a nutshell, the crucial feature here is that the ROIs, instead of being loose, are associated with each other through a Gaussian assumption under BML. As a practical exemplar, we apply the modeling approach to an experimental dataset and compare its performance with the conventional univariate GLM. In the Discussion section, we elaborate on the advantages, limitations, and prospects of BML in neuroimaging. Major acronyms and terms are listed in Table 1.

Table 1: Acronyms and terminology.

| Acronym | Meaning | Term | Meaning |
|---|---|---|---|
| BML | Bayesian multilevel | NHST | null hypothesis significance testing |
| FPR | false positive rate | NUTS | No-U-Turn sampler |
| FWE | family-wise error | power | chance of rejecting H0 when H0 is false |
| GLM | general linear model | PPC | posterior predictive check |
| HMC | Hamiltonian Monte Carlo | type I | chance of rejecting H0 when H0 is true (“false positive”) |
| LME | linear mixed-effects | type II | failing to reject H0 when H0 is false (“false negative”) |
| LOO | leave one out | type M | exaggerating the effect magnitude |
| MCMC | Markov chain Monte Carlo | type S | estimating the effect with an incorrect sign |

Theory: Bayesian multilevel modeling

Throughout this article, the word effect refers to a quantity of interest, usually embodied in a regression (or correlation) coefficient, the contrast between two such quantities, or the linear combination of two or more such quantities from individual subject analysis. Italic letters in lower case (e.g., α) stand for scalars and random variables; lowercase, boldfaced italic letters (a) for column vectors; Roman and Greek letters for fixed and random effects in the conventional statistics context, respectively, on the righthand side of a model equation (the Greek letter θ is reserved for the effect of interest); p(·) represents a probability density function.

Bayesian modeling for two-way random-effects ANOVA

As our main focus here is FMRI population analysis, we extend the BML approach for one-way ANOVA (Appendix D) to a two-way ANOVA structure, and elucidate the advantages of data calibration and partial pooling in more detail. At the population level, the variability across n subjects has to be accounted for; in addition, the within-subject correlation structure among the ROIs also needs to be maintained. The conventional approach formulates r separate GLMs each of which fits the data yij from the ith subject at the jth ROI,

$$y_{ij} = \theta_j + \epsilon_{ij}, \quad i = 1, 2, \ldots, n, \tag{1}$$

where $j = 1, 2, \ldots, r$, $\theta_j$ is the population effect at the $j$th ROI, and $\epsilon_{ij}$ is the residual term that is assumed to independently and identically follow $N(0, \sigma_j^2)$. Each of the r models in (1) essentially corresponds to a Student’s t-test, and the immediate challenge is the multiple testing issue among those r models: with the assumption of exchangeability among the ROIs, is Bonferroni correction the only valid solution? If so, most neuroimaging studies would have difficulty in adopting ROI-based analysis due to this severe penalty, which may be the major reason that discourages the use of region-level analysis with a large number of regions. Alternatively, the r separate GLMs in (1) can be merged into one GLM by pooling the variances across the r ROIs,

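$$y_{ij} = \theta_j + \epsilon_{ij}, \quad \epsilon_{ij} \sim N(0, \sigma^2), \quad i = 1, 2, \ldots, n, \; j = 1, 2, \ldots, r. \tag{2}$$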

The two approaches, (1) and (2), usually render similar inferences unless the sampling variances are dramatically different across the ROIs. To compare different models through information criteria (Vehtari et al., 2017), we can solve the GLM (2) in a Bayesian fashion,

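$$y_{ij} \mid \theta_j \sim N(\theta_j, \sigma^2), \quad i = 1, 2, \ldots, n, \; j = 1, 2, \ldots, r, \tag{3}$$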

where the effects θj are assigned with a noninformative prior so that no pooling is applied among the ROIs, leading to virtually identical inferences as the GLM (2).

The approach with model (1), (2), or (3) does not involve any pooling among the ROIs in the sense that the information at one ROI is assumed to reveal nothing about any other ROIs, and may lead to overfitting. To improve model fitting, we first adopt two-way random-effects ANOVA, and formulate the following platform with data from n subjects,

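$$y_{ij} = b_0 + \pi_i + \xi_j + \epsilon_{ij}, \quad i = 1, 2, \ldots, n, \; j = 1, 2, \ldots, r, \tag{4}$$

where $b_0$ represents the population effect, $\pi_i$ and $\xi_j$ code the deviation or random effect of the $i$th subject and $j$th ROI from the overall mean $b_0$, respectively, and they are assumed to be iid with² $\pi_i \sim N(0, \lambda^2)$ and $\xi_j \sim N(0, \tau^2)$, and $\epsilon_{ij}$ is the residual term that is assumed to follow $N(0, \sigma^2)$.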

Parallel to the situation with one-way ANOVA (Appendix D), the two-way ANOVA (4) can be conceptualized as an LME without changing its formulation. Specifically, the overall mean b0 is a fixed-effects parameter, while both the subject and ROI-specific effects, πi and ξj, are treated as random variables. When n, r ≥ 3, the number of data points nr is greater than the total number of model parameters, n+r+2, so both the ANOVA and LME frameworks are identifiable. In addition, we continue to define θj = b0 + ξj as the effect of interest at the jth ROI. The LME framework has been well developed over the past half century, under which we can estimate variance components such as λ² and τ², and fixed effects such as b0 in (4). Therefore, conventional inferences can be made by constructing an appropriate statistic for a null hypothesis. Its modeling applicability and flexibility have been substantiated by its adoption in FMRI group analysis (Chen et al., 2013). Furthermore, the LME formulation (4) has a special layout, a crossed random-effects (or cross-classified) structure, which has been applied to inter-subject correlation (ISC) analysis for naturalistic scanning (Chen et al., 2017a) and to ICC analysis for ICC(2,1) (Chen et al., 2017c). A hierarchical model is a particular multilevel model in which parameters are nested within one another, and the cross-classified structure here showcases the difference between the two conceptions: the two clusters (ROI and subjects) intertwine with each other and form a factorial structure (n subjects by r ROIs), distinct from a hierarchical or nested one.

However, LME cannot offer a solution in making inferences regarding the ROI effects θj: to estimate θj, the LME (4) would become over-parameterized (i.e., an over-fitting problem). To proceed for the sake of intuitive interpretations, we temporarily assume a known sampling variance σ², a known cross-subjects variance λ², and a known cross-ROI variance τ², and transform the ANOVA (4) to its Bayesian counterpart,

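$$y_{ij} \mid \pi_i, \theta_j \sim N(\pi_i + \theta_j, \sigma^2), \quad i = 1, 2, \ldots, n, \; j = 1, 2, \ldots, r. \tag{5}$$

Then the posterior distribution of $\theta_j$ with prior distributions, $\pi_i \sim N(0, \lambda^2)$ and $\theta_j \sim N(b_0, \tau^2)$, can be analytically derived (Appendix E) with the data $y = \{y_{ij}\}$,

$$\theta_j \mid b_0, \tau, \lambda, y \sim N(\hat{\theta}_j, V), \quad \hat{\theta}_j = \frac{\frac{n}{\lambda^2 + \sigma^2}\, \bar{y}_{\cdot j} + \frac{1}{\tau^2}\, b_0}{\frac{n}{\lambda^2 + \sigma^2} + \frac{1}{\tau^2}}, \quad V = \frac{1}{\frac{n}{\lambda^2 + \sigma^2} + \frac{1}{\tau^2}}, \quad \bar{y}_{\cdot j} = \frac{1}{n} \sum_{i=1}^{n} y_{ij}. \tag{6}$$

Similarly to the one-way ANOVA scenario (Appendix D), we have an intuitive interpretation for $V^{-1}$: the posterior precision for $\theta_j \mid b_0, \tau, \lambda, y$ is the sum of the cross-ROI precision $1/\tau^2$ and the combined sampling precision $n/(\lambda^2 + \sigma^2)$. Under the r completely separate GLMs in (1), the cross-subjects variance $\lambda^2$ and the sampling variance $\sigma^2$ could not be estimated separately. Interestingly, the following relationship,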

| (7) |

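reveals that the posterior precision lies somewhere among the precisions obtained from the r separate GLMs. Furthermore, the posterior mode $\hat{\theta}_j$ of $\theta_j$ in (6) can be expressed as a weighted average between the individual sample means $\bar{y}_{\cdot j} = \frac{1}{n} \sum_{i=1}^{n} y_{ij}$ and the overall mean $b_0$,

$$\hat{\theta}_j = w\, \bar{y}_{\cdot j} + (1 - w)\, b_0 = b_0 + w\, (\bar{y}_{\cdot j} - b_0) = \bar{y}_{\cdot j} - (1 - w)(\bar{y}_{\cdot j} - b_0), \tag{8}$$

where the weight $w = \frac{n/(\lambda^2 + \sigma^2)}{n/(\lambda^2 + \sigma^2) + 1/\tau^2}$, indicating the counterbalance of partial pooling between the individual mean $\bar{y}_{\cdot j}$ for the $j$th entity and the overall mean $b_0$, the adjustment of $\theta_j$ from the overall mean $b_0$ toward the observed mean $\bar{y}_{\cdot j}$, or the observed mean $\bar{y}_{\cdot j}$ being shrunk toward the overall mean $b_0$.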
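The partial pooling described around (6) and (8) can be sketched numerically. The function below assumes known variances and reads the prose literally: the cross-ROI precision is taken as 1/τ² and the combined sampling precision as n/(λ² + σ²). The function name `partial_pool` and its argument names are hypothetical, introduced only for this illustration.

```python
def partial_pool(ybar_j, b0, n, sigma2, lam2, tau2):
    """Posterior mean, variance and pooling weight for one ROI effect.

    ybar_j : observed mean across the n subjects at the ROI
    b0     : overall mean across ROIs
    sigma2, lam2, tau2 : sampling, cross-subjects and cross-ROI variances,
                         all treated as known
    """
    data_precision = n / (lam2 + sigma2)   # combined sampling precision
    prior_precision = 1.0 / tau2           # cross-ROI precision
    post_var = 1.0 / (data_precision + prior_precision)
    w = data_precision * post_var          # weight on the observed ROI mean
    post_mean = w * ybar_j + (1.0 - w) * b0
    return post_mean, post_var, w


# Equal data and prior precision: the estimate sits halfway between the two.
print(partial_pool(ybar_j=1.0, b0=0.0, n=20, sigma2=1.0, lam2=1.0, tau2=0.1))
# A much larger cross-ROI variance leaves the observed mean almost untouched.
print(partial_pool(ybar_j=1.0, b0=0.0, n=20, sigma2=1.0, lam2=1.0, tau2=100.0))
```

The weight moves toward 1 (no pooling) as the ROIs become more heterogeneous or the data more precise, and toward 0 (complete pooling) in the opposite case.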

Related to the concept of ICC, the correlation between two ROIs, j1 and j2, due to the fact that they are measured from the same set of subjects, can be derived in a Bayesian fashion as,

| (9) |

Similarly, the correlation between two subjects, i1 and i2, due to the fact that their effects are measured from the same set of ROIs, can be derived in a Bayesian fashion as,

The exchangeability assumption is crucial here as well for the BML system (4). Conditional on ξj (i.e., when the ROI is fixed at index j), the subject effects πi can be reasonably assumed to be exchangeable since the experiment participants are usually recruited randomly from a hypothetical population pool as representatives (thus the concept of coding them as dummy variables). As for the ROI effects ξj, here we simply assume the validity of exchangeability conditional on the subject effect πi (i.e., when subject is fixed at index i), and address the validity later in Discussion.

To summarize, the main difference between the conventional GLM and BML lies in the assumption about the brain regions: under GLM the effects (e.g., θj in (3)) are assumed to have a noninformative flat prior, whereas under BML they are assigned a Gaussian prior. In other words, the effect at each region is estimated independently from other regions under GLM, thus there is no information shared across regions. In contrast, the effects across regions are shared, regularized and partially pooled through the cross-region Gaussian assumption under BML; such a cross-region Gaussian assumption bears the same rationale as the cross-subject Gaussian assumption. So far, we have presented a “simplest” BML scenario. Specifically, we have: ignored the possibility of incorporating any explanatory variables such as subject-specific quantities (e.g., age, IQ) or behavioral data (e.g., reaction time); assumed known variances such as τ² and σ²; and presumed that the data yij have been directly measured without precision information available. Further extensions are needed and discussed for realistic applications in the next subsection.

Further extensions of Bayesian modeling for two-way random-effects ANOVA and full Bayesian implementations

To gain intuitive interpretations, we have so far assumed that the variances σ², λ² and τ² in (5) (and σ² in (23) of Appendix D) are known. In practice, those parameters for the prior distributions are not available. Approximate (or empirical) Bayesian approaches could be adopted to provide a computationally economical “workaround” solution. For example, one possibility is to first solve the corresponding LME and directly apply the estimated variances to the analytical formula (6) (and (24) in Appendix D). However, there are two limitations associated with approximate Bayesian approaches. First, the uncertainty of the estimated variances is not taken into consideration, which may result in inaccurate posterior distributions. Second, analytical formulas such as (6) (and (24) in Appendix D) are usually not available when we extend the prototypical models (5) (and (23) in Appendix D) to more generic scenarios, as shown below.
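As a rough illustration of the approximate (empirical) Bayesian workaround, the sketch below simulates data from the two-way random-effects layout (4), estimates the variance components, and plugs them into the partial pooling weight. Two substitutions are made for the sake of a self-contained example and should be read as assumptions: the variance components come from classical method-of-moments (mean-square) estimators rather than from a fitted LME, and the grand mean stands in for b0. All function names are hypothetical.

```python
import random
import statistics


def simulate(n, r, b0, lam, tau, sigma, seed=0):
    """Draw y[i][j] = b0 + pi_i + xi_j + eps_ij for n subjects and r ROIs."""
    rng = random.Random(seed)
    pi = [rng.gauss(0.0, lam) for _ in range(n)]
    xi = [rng.gauss(0.0, tau) for _ in range(r)]
    y = [[b0 + pi[i] + xi[j] + rng.gauss(0.0, sigma) for j in range(r)]
         for i in range(n)]
    return y, [b0 + x for x in xi]


def variance_components(y):
    """Method-of-moments estimates for the two-way layout without replication."""
    n, r = len(y), len(y[0])
    grand = statistics.mean(v for row in y for v in row)
    subj = [statistics.mean(row) for row in y]
    roi = [statistics.mean(y[i][j] for i in range(n)) for j in range(r)]
    ms_subj = r * sum((m - grand) ** 2 for m in subj) / (n - 1)
    ms_roi = n * sum((m - grand) ** 2 for m in roi) / (r - 1)
    ss_err = sum((y[i][j] - subj[i] - roi[j] + grand) ** 2
                 for i in range(n) for j in range(r))
    sigma2 = ss_err / ((n - 1) * (r - 1))
    lam2 = max((ms_subj - sigma2) / r, 0.0)   # E[MS_subj] = sigma2 + r * lam2
    tau2 = max((ms_roi - sigma2) / n, 0.0)    # E[MS_roi]  = sigma2 + n * tau2
    return grand, roi, sigma2, lam2, tau2


def empirical_bayes(y):
    """Shrink each ROI mean toward the grand mean with plug-in variances."""
    n = len(y)
    grand, roi, sigma2, lam2, tau2 = variance_components(y)
    if tau2 == 0.0:                 # no detectable ROI spread: pool completely
        return [grand] * len(roi)
    data_precision = n / (lam2 + sigma2)
    w = data_precision / (data_precision + 1.0 / tau2)
    return [w * m + (1.0 - w) * grand for m in roi]


y, theta = simulate(n=30, r=12, b0=0.5, lam=0.6, tau=0.3, sigma=1.0)
print([round(e, 3) for e in empirical_bayes(y)])
```

The plug-in step treats the estimated variances as if they were exact, which is precisely the first limitation noted above; a full Bayesian treatment instead propagates that uncertainty into the posterior.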

At the population level, one may incorporate one or more subject-specific covariates such as subject-grouping variables (patients vs. controls, genotypes, adolescents vs. adults), within-subject factors (e.g., multiple conditions such as positive, negative and neutral emotions) or quantitative explanatory variables (age, behavioral or biometric data). To accommodate such scenarios, we first need to expand the models considered previously with a simple intercept (Student’s t-test) to r separate GLMs and one GLM with pooled variances, generalizing the models (1) and (3), respectively, to

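$$y_{ij} = \boldsymbol{x}_i^T \boldsymbol{\theta}_j + \epsilon_{ij}, \quad \epsilon_{ij} \sim N(0, \sigma_j^2), \quad i = 1, 2, \ldots, n, \tag{10}$$

$$y_{ij} \mid \boldsymbol{\theta}_j \sim N(\boldsymbol{x}_i^T \boldsymbol{\theta}_j, \sigma^2), \quad i = 1, 2, \ldots, n, \tag{11}$$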

where the vector xi contains the subject-specific values of the covariates, with its first component 1 that is associated with the intercept, and the vector θj codes the effects associated with the covariates xi (and each component in θj is assigned with a noninformative prior in (11)), j = 1,2, …, r. In parallel, the conventional two-way random-effects ANOVA or LME (4) evolves to

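$$y_{ij} = \boldsymbol{x}_i^T \boldsymbol{b} + \pi_i + \boldsymbol{x}_i^T \boldsymbol{\xi}_j + \epsilon_{ij}, \quad i = 1, 2, \ldots, n, \; j = 1, 2, \ldots, r, \tag{12}$$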

where b and ξj represent the population effects and region-specific deviations corresponding to those covariates, respectively. Similarly, the BML counterpart can be formulated as

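$$y_{ij} \mid \pi_i, \boldsymbol{\xi}_j \sim N\big(\pi_i + \boldsymbol{x}_i^T (\boldsymbol{b} + \boldsymbol{\xi}_j), \sigma^2\big), \quad \pi_i \sim N(0, \lambda^2), \quad \boldsymbol{\xi}_j \sim N(\boldsymbol{0}, \boldsymbol{\tau}), \tag{13}$$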

where τ is a 2 × 2 variance-covariance matrix for ξj.

Under the BML (13), the effect of interest θj can be an element of b, the intercept (as in (5)) or the effect for one of the covariates xi. Similar to models with varying intercepts such as (4) and (5), both intercepts and slopes are assumed to be different across ROIs in the models (12) and (13), and they are usually referred to as models with varying intercepts and slopes. When there is only one covariate xi, the four models (10), (11), (12) and (13) simplify to, respectively,

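$$y_{ij} = \theta_{0j} + \theta_{1j} x_i + \epsilon_{ij}, \quad \epsilon_{ij} \sim N(0, \sigma_j^2), \tag{14}$$

$$y_{ij} \mid \theta_{0j}, \theta_{1j} \sim N(\theta_{0j} + \theta_{1j} x_i, \sigma^2), \tag{15}$$

$$y_{ij} = b_0 + b_1 x_i + \pi_i + \xi_{0j} + \xi_{1j} x_i + \epsilon_{ij}, \tag{16}$$

$$y_{ij} \mid \pi_i, \xi_{0j}, \xi_{1j} \sim N(b_0 + b_1 x_i + \pi_i + \xi_{0j} + \xi_{1j} x_i, \sigma^2), \quad \pi_i \sim N(0, \lambda^2), \quad (\xi_{0j}, \xi_{1j})^T \sim N(\boldsymbol{0}, \boldsymbol{\tau}), \tag{17}$$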

where τ is a 2 × 2 variance-covariance matrix for (ξ0j, ξ1j)T.

The discussion so far has assumed that data yij are directly collected without measurement errors. However, in some circumstances (including neuroimaging) the data are summarized through one or more analytical steps. For example, the data yij in FMRI can be the BOLD responses from subjects under a condition or task that are estimated through a time series regression model, and the estimates are not necessarily equally reliable. Therefore, a third extension is desirable to broaden our model (13) so that we can accommodate the situation where the separate variances of measurement errors for each ROI and subject are known and should be included in the model (13) as inputs, instead of being treated as one hyperparameter. Similarly to the conventional meta-analysis, a BML with known sampling variances can be effectively analyzed by simply treating the variances as known values.
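The role of known sampling variances can be illustrated with the simplest meta-analysis-style case. The sketch below deliberately ignores the subject effect and the covariates in (13) and assumes a known population mean `mu` and cross-ROI standard deviation `tau`; it only shows how an estimate with a larger known standard error is weighted less and shrunk more. The function and argument names are hypothetical.

```python
def shrink_with_known_se(estimates, std_errors, mu, tau):
    """Precision-weighted posterior means under a normal-normal model.

    Each estimate y_k with known standard error s_k is combined with the
    prior N(mu, tau^2); the weight on the data is (1/s_k^2) / (1/s_k^2 + 1/tau^2).
    """
    shrunk = []
    for y, s in zip(estimates, std_errors):
        data_precision = 1.0 / s ** 2
        w = data_precision / (data_precision + 1.0 / tau ** 2)
        shrunk.append(w * y + (1.0 - w) * mu)
    return shrunk


# Two identical estimates, one reliable (se = 0.1) and one noisy (se = 1.0).
print(shrink_with_known_se([1.0, 1.0], [0.1, 1.0], mu=0.0, tau=0.5))
```

Treating the standard errors as inputs rather than as a single hyperparameter is what allows the two identical point estimates to end up with different posterior means.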

Numerical implementations of BML

Since no analytical formula is generally available for the BML (13), we proceed with the full Bayesian approach hereafter, and adopt the algorithms implemented in Stan, a probabilistic programming language and a math library in C++ on which the language depends (Stan Development Team, 2017). In Stan, the main engine for Bayesian inferences is the No-U-Turn sampler (NUTS), a variant of Hamiltonian Monte Carlo (HMC) under the category of gradient-based Markov chain Monte Carlo (MCMC) algorithms.
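For readers unfamiliar with gradient-based MCMC, the following toy sketch shows the basic HMC mechanics on a one-dimensional standard normal target: a momentum draw, a leapfrog trajectory driven by the gradient of the negative log density, and a Metropolis acceptance step. It is plain HMC with a fixed trajectory length, not NUTS (which tunes the trajectory length adaptively), and it is unrelated to Stan's actual implementation; the function name and tuning values are assumptions made for illustration.

```python
import math
import random


def hmc_standard_normal(n_samples, step=0.2, n_leapfrog=10, seed=0):
    """Draw samples from N(0, 1) with basic Hamiltonian Monte Carlo."""
    rng = random.Random(seed)

    def potential(q):        # negative log density, up to a constant
        return 0.5 * q * q

    def grad_potential(q):
        return q

    q, samples = 0.0, []
    for _ in range(n_samples):
        p = rng.gauss(0.0, 1.0)                  # resample the momentum
        q_new, p_new = q, p
        # Leapfrog integration: half momentum step, alternating full steps,
        # and a closing half momentum step.
        p_new -= 0.5 * step * grad_potential(q_new)
        for k in range(n_leapfrog):
            q_new += step * p_new
            if k < n_leapfrog - 1:
                p_new -= step * grad_potential(q_new)
        p_new -= 0.5 * step * grad_potential(q_new)
        # Metropolis correction for the discretization error in the energy.
        h_old = potential(q) + 0.5 * p * p
        h_new = potential(q_new) + 0.5 * p_new * p_new
        if rng.random() < math.exp(min(0.0, h_old - h_new)):
            q = q_new
        samples.append(q)
    return samples


draws = hmc_standard_normal(5000)
mean = sum(draws) / len(draws)
var = sum((d - mean) ** 2 for d in draws) / len(draws)
print(round(mean, 3), round(var, 3))
```

Because each proposal follows the gradient over a long trajectory, successive draws are far less correlated than those of a random-walk sampler, which is the practical appeal of HMC-type algorithms for multilevel models with many parameters.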

Some conceptual and terminological clarifications are warranted here. Under the LME framework, the differentiation between fixed- and random-effects is clear-cut: fixed-effects parameters (e.g., b in (12)) are considered universal constants at the population level to be estimated; in contrast, random-effects variables (e.g., πi and ξj in (12)) are assumed to be varying and follow a presumed distribution. However, there is no such distinction between fixed and random effects in Bayesian formulations, and all effects are treated as parameters and are assumed to have prior distributions. Nevertheless, there is a loose correspondence between LME and BML: fixed effects under LME are usually termed as population effects under BML, while random effects in LME are typically referred to as entity effects³ under BML.

Essentially, the full Bayesian approach for the BML systems (5) and (13) can be conceptualized as assigning hyperpriors to the parameters in the LME or ANOVA counterparts (4) and (12). Our hyperprior distribution choices follow the general recommendations in Stan (Stan Development Team, 2017). Specifically, an improper flat (noninformative uniform) distribution over the real domain is adopted for the population parameters (e.g., b in (13)), since we can usually afford the vagueness thanks to the generally satisfactory amount of information available in the data at the population level. For the scaling parameters at the entity level, namely the variances for the cross-subject effects πi as well as those in the variance-covariance matrix for ξj in (13), we use a weakly informative prior such as a Student’s half-t(3,0,1)4 or half-Gaussian (restricting to the positive half of the respective distribution). For the covariance structure of ξj, the LKJ correlation prior5 is used with the parameter ζ = 1 (i.e., jointly uniform over all correlation matrices of the respective dimension). Lastly, the variance for the residuals ϵij is assigned a half-Cauchy prior with a scale parameter depending on the standard deviation of yij.
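To give a feel for how weakly informative a half-t(3,0,1) prior is, the following sketch draws from it using only the Python standard library. The construction (a standard Gaussian divided by the square root of a scaled chi-square draw) is the textbook one; the function name, seed, and number of draws are illustrative assumptions and are not part of the Stan implementation described above.

```python
import math
import random
import statistics

def half_t_draw(rng, df=3.0, scale=1.0):
    """One draw from a half-t(df, 0, scale) distribution."""
    z = rng.gauss(0.0, 1.0)
    # A chi-square(df) variable is Gamma(shape=df/2, scale=2)
    chi2 = rng.gammavariate(df / 2.0, 2.0)
    return abs(scale * z / math.sqrt(chi2 / df))

rng = random.Random(2024)  # arbitrary seed, chosen only for reproducibility
draws = [half_t_draw(rng) for _ in range(20000)]

prior_median = statistics.median(draws)
frac_above_5 = sum(d > 5.0 for d in draws) / len(draws)
print(f"prior median of the scale parameter: {prior_median:.2f}")
print(f"prior mass above 5: {frac_above_5:.3f}")
```

Most of the prior mass sits at modest values, while the heavy tail still leaves room for a larger standard deviation if the data call for it.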

To summarize, besides the Bayesian framework under which hyperpriors provide a computational convenience through numerical regularization, the major difference between BML and its univariate GLM counterpart is the Gaussian assumption for the ROIs (e.g., in the model (5)) that plays the pivotal role of pooling and sharing the information among the brain regions. It is this partial pooling that effectively takes advantage of the effect similarities among the ROIs and achieves higher modeling efficiency. In other words, we dissolve the multiple testing issue through borrowing information across the ROIs by incorporating the regions into one model with a prior assumption about their effects. In contrast, the inefficiency of the massively univariate approach lies in the fact that the modeler pretends that each voxel or ROI is unrelated to the others and then has to pay the penalty for the pretense.
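The mechanics of partial pooling can be sketched with the simplest conjugate case: each region's estimate is Gaussian with a known standard error, and the region effects share a Gaussian distribution with a known mean and standard deviation. This is a simplification rather than the model (13), in which the population mean and the cross-region standard deviation are estimated jointly with everything else; the numbers and the names `partial_pool`, `mu` and `tau` below are hypothetical.

```python
def partial_pool(estimates, std_errors, mu, tau):
    """Posterior means under y_j ~ N(theta_j, se_j^2), theta_j ~ N(mu, tau^2)."""
    pooled = []
    for y, se in zip(estimates, std_errors):
        w_data = 1.0 / se ** 2    # precision of the region's own estimate
        w_prior = 1.0 / tau ** 2  # precision of the cross-region distribution
        pooled.append((w_data * y + w_prior * mu) / (w_data + w_prior))
    return pooled

# Hypothetical ROI-level effect estimates and standard errors (not study data)
raw = [0.030, 0.012, -0.004, 0.021, 0.001]
se = [0.010, 0.006, 0.012, 0.008, 0.005]
mu, tau = 0.010, 0.008  # assumed population mean and cross-region SD

shrunk = partial_pool(raw, se, mu, tau)
for y, s, p in zip(raw, se, shrunk):
    print(f"raw={y:+.3f}  se={s:.3f}  partially pooled={p:+.3f}")
```

Each pooled value lies between the region's own estimate and the shared mean, and the less precise an estimate is, the more strongly it is pulled toward the center.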

Another distinct aspect of Bayesian inference is that it hinges on the whole posterior distribution of an effect. For practical considerations in results reporting, point summaries such as the mean and median are typically used to show the centrality, while a quantile-based (e.g., 95%) interval or highest posterior density interval provides a condensed and practically useful summary of the posterior distribution. The typical workflow to obtain the posterior distribution for an effect of interest is the following. Multiple (e.g., 4) Markov chains are usually run in parallel with each of them going through a predetermined number (e.g., 2000) of iterations, half of which are thrown away as warm-up (or “burn-in”) iterations while the rest are used as random draws from which posterior distributions are derived. To gauge the consistency of an ensemble of Markov chains, the split R̂ statistic (Gelman et al., 2014) is provided as a potential scale reduction factor on split chains and as a diagnostic parameter to assist the analyst in assessing the quality of the chains. Ideally, fully converged chains correspond to R̂ = 1.0, but in practice R̂ < 1.1 is considered acceptable. Another useful parameter, the number of effective sampling draws after warm-up, measures the number of independent draws from the posterior distribution that would be expected to produce the same standard deviation of the posterior distribution as is calculated from the dependent draws from HMC. As the sampling draws are not always independent of each other, especially when Markov chains proceed slowly, one should make sure that the effective sample size is large enough relative to the total sampling draws so that a reasonable accuracy can be achieved to derive the quantile intervals for the posterior distribution. For example, a 95% quantile interval requires an effective sample size of at least 100. As computing parallelization can only be executed for multiple chains of the HMC algorithms, the typical BML analysis can be effectively conducted on any system with at least 4 CPUs.
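As an illustration of the convergence diagnostic, the sketch below computes a split potential scale reduction factor from a set of chains. It follows the basic textbook formula (between-chain versus within-chain variance on half-chains); the version reported by Stan may differ in details such as rank normalization, and the simulated chains here are stand-ins for real sampler output.

```python
import math
import random
import statistics

def split_rhat(chains):
    """Split potential scale reduction factor for equal-length chains."""
    half = len(chains[0]) // 2
    # Split every chain in two so that within-chain drift is also detected
    parts = []
    for chain in chains:
        parts.append(chain[:half])
        parts.append(chain[half:2 * half])
    n = half
    means = [statistics.fmean(p) for p in parts]
    variances = [statistics.variance(p) for p in parts]
    between = n * statistics.variance(means)  # B: between-chain variance
    within = statistics.fmean(variances)      # W: mean within-chain variance
    var_plus = (n - 1) / n * within + between / n
    return math.sqrt(var_plus / within)

rng = random.Random(1)  # arbitrary seed
# Four chains exploring the same distribution
mixed = [[rng.gauss(0, 1) for _ in range(1000)] for _ in range(4)]
# Four chains stuck around different locations
stuck = [[rng.gauss(shift, 1) for _ in range(1000)] for shift in (0, 1, 2, 3)]

rhat_mixed = split_rhat(mixed)
rhat_stuck = split_rhat(stuck)
print(f"well-mixed chains: {rhat_mixed:.3f}")
print(f"stuck chains:      {rhat_stuck:.3f}")
```

Chains that agree with one another give a value close to 1, whereas chains that settle in different places push the statistic well above the 1.1 guideline.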

One important aspect of the Bayesian framework is model quality check through various prediction accuracy metrics. The aim of the quality check is not to reject the model, but rather to check whether it fits the data well. For instance, posterior predictive check (PPC) simulates replicated data under the fitted model and then graphically compares actual data yij to the model prediction. The underlying rationale is that, through drawing from the posterior predictive distribution, a reasonable model should generate new data that look similar to the acquired data at hand. As a model validation tool, PPC intuitively provides a visual tool to examine any systematic differences and potential misfit of the model, similar to the visual examination of plotting a fitted regression model against the original data. Leave-one-out (LOO) cross-validation using Pareto-smoothed importance sampling (PSIS) is another accuracy tool (Vehtari et al., 2017) that uses probability integral transformation (PIT) checks through a quantile-quantile (Q-Q) plot to compare the LOO-PITs to the standard uniform or Gaussian distribution.
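The idea behind a PIT check can be sketched without any modeling library: for each observation, compute the fraction of predictive draws at or below the observed value, and then compare those fractions with the standard uniform distribution. The sketch uses plain predictive draws from two hand-picked Gaussian distributions rather than leave-one-out draws obtained through PSIS, so it only illustrates the calibration logic; all names and settings are assumptions.

```python
import random

def pit_values(observed, predictive_draws):
    """For each observation, the fraction of predictive draws at or below it."""
    return [sum(d <= y for d in draws) / len(draws)
            for y, draws in zip(observed, predictive_draws)]

def ks_distance_from_uniform(pits):
    """Largest gap between the empirical CDF of the PITs and the uniform CDF."""
    xs = sorted(pits)
    n = len(xs)
    return max(max((i + 1) / n - x, x - i / n) for i, x in enumerate(xs))

rng = random.Random(7)  # arbitrary seed
n_obs, n_draws = 500, 200
observed = [rng.gauss(0, 1) for _ in range(n_obs)]

# Well-calibrated predictive distribution: the same N(0, 1) as the data
good = [[rng.gauss(0, 1) for _ in range(n_draws)] for _ in range(n_obs)]
# Overdispersed predictive distribution: N(0, 3), too wide for the data
wide = [[rng.gauss(0, 3) for _ in range(n_draws)] for _ in range(n_obs)]

ks_good = ks_distance_from_uniform(pit_values(observed, good))
ks_wide = ks_distance_from_uniform(pit_values(observed, wide))
print(f"calibrated model:    {ks_good:.3f}")
print(f"overdispersed model: {ks_wide:.3f}")
```

A well-calibrated model yields PIT values that track the uniform distribution closely, which corresponds to points near the diagonal in a Q-Q plot; a model whose predictions are too wide piles the PIT values up around 0.5.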

BML applied to an ROI-based group analysis

To demonstrate the performance of BML in comparison to the conventional univariate approach at the ROI level, we utilized an experimental dataset from a previous FMRI study (Xiao et al., 2018). Briefly, a cohort of 124 typically developing children (mean age = 6.6 years, SD = 1.4 years, range = 4 to 8.9 years; 54 males) was scanned while they watched Inscapes, a movie paradigm designed for collecting resting-state data to reduce potential head motion. In addition, a subject-level covariate was included in the analysis: the overall theory of mind ability based on a parent-report measure (the theory of mind inventory, or ToMI). FMRI images were acquired with the following EPI scan parameters: B0 = 3 T, flip angle = 70°, echo time = 25 ms, repetition time = 2000 ms, 36 slices, planar field of view = 192 × 192 mm², voxel size = 3.0 × 3.0 × 3.5 mm³, 210 volumes with a total scanning time of 426 seconds. Twenty-one ROIs (Table 3) were selected from the literature because of their potential relevance to the current study, and they were neither chosen nor defined per the whole brain analysis results of the current data. Mean Fisher-transformed z-scores were extracted at each ROI from the output of seed-based correlation analysis (seed: right temporo-parietal junction at the MNI coordinates of (50, −60, 18)) from each of the 124 subjects. The effect of interest at the population level is the relationship at each brain region between the behavioral measure of the overall ToMI and the region’s association with the seed. A whole brain analysis showed the difficulty of some clusters surviving FWE correction (Table 2).

Table 3:

MNI coordinates of the 21 ROIsᵃ

| No | ROI | Coordinates (x, y, z) |
|---|---|---|
| 1 | R PCC | (8, −59, 35) |
| 2 | R TPJp | (56, −56, 25) |
| 3 | R Insula | (49, −8, −11) |
| 4 | L IPL | (−55, −65, 27) |
| 5 | L SFG | (−7, 58, 21) |
| 6 | R IFG (BA45) | (47, 22, 6) |
| 7 | R IFG (BA9) | (60, 25, 19) |
| 8 | L MTG | (−51, −62, 5) |
| 9 | L CG | (−5, 8, 42) |
| 10 | L IFG | (−46, 24, 7) |
| 11 | ACC | (0, 38, 10) |
| 12 | SGC | (−2, 32, −8) |
| 13 | PCC/PrC | (−2, −52, 26) |
| 14 | dmPFC | (−2, 5, 14) |
| 15 | L TPJ | (−46, −66, 18) |
| 16 | L vBG | (−6, 10, −8) |
| 17 | R vBG | (6, 10, −8) |
| 18 | L aMTS/aMTG | (−54, −10, −20) |
| 19 | R Amy/Hippo | (24, −8, −22) |
| 20 | L Amy/Hippo | (−24, −10, −20) |
| 21 | vmPFC | (−2, 50, −10) |

ᵃ The 21 ROIs were chosen because of their potential involvement in the current experiment based on previous studies. Each ROI was created as a ball with a center at the coordinates (in millimeters) from the literature (Xiao et al., 2017) and a radius of 6 mm. ROI abbreviations: L, left hemisphere; R, right hemisphere; PCC/PrC, posterior cingulate cortex/precuneus; TPJp, posterior temporo-parietal junction; IPL, inferior parietal lobe; SFG, superior frontal gyrus; IFG, inferior frontal gyrus; aMTS/aMTG, anterior middle temporal sulcus/gyrus; CG, cingulate gyrus; ACC, anterior cingulate cortex; SGC, subgenual cingulate cortex; dmPFC, dorsomedial prefrontal cortex; vBG, ventral basal ganglia; Amy/Hippo, amygdala/hippocampus; vmPFC, ventromedial prefrontal cortex.

Table 2:

ROIs and FWE correction for their associated clustersᵃ

| voxel-wise p | cluster threshold | number of surviving ROIs | ROIs |
|---|---|---|---|
| 0.001 | 28 | 2 | R PCC, PCC/PrC |
| 0.005 | 66 | 4 | R PCC, PCC/PrC, L IPL, L TPJ |
| 0.01 | 106 | 4 | R PCC, PCC/PrC, L IPL, L TPJ |
| 0.05 | 467 | 4 | R PCC, PCC/PrC, L IPL, L TPJ |
| 0.05* | 467 | (4) | (L aMTS/aMTG, R TPJp, vmPFC, dmPFC) |

ᵃ Monte Carlo simulations were conducted using a mixed exponential spatial autocorrelation function (Cox et al., 2017) instead of FWHM to determine the cluster threshold (voxel size: 3 × 3 × 3 mm³). The ROI abbreviations are listed in Table 3.

Special note for the last row (voxel-wise p-value of 0.05): four ROIs including L IPL, L TPJ, R PCC, PCC/PrC survived together with their clusters from the FWE correction, and the other four ROIs listed here (L aMTS/aMTG, R TPJp, vmPFC, and dmPFC) did not survive with their clusters but showed some evidence of effect when the cluster size requirement was dropped.

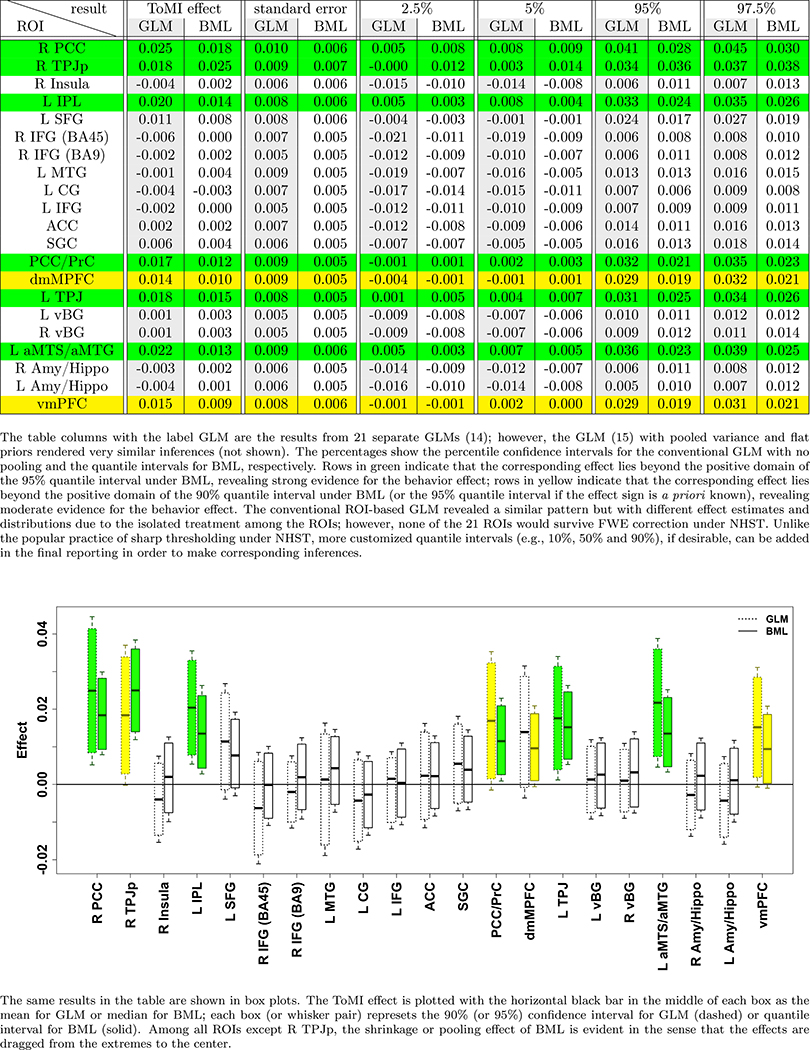

The data from the 21 ROIs were analyzed through the modeling triplets, GLMs (14) and (15), LME (16) and BML (17), with the effect of interest at the jth ROI being the relationship between ToMI and the ROI’s association with the seed: θ1j = b1 + ξ1j. The exchangeability assumption for LME and BML was deemed reasonable because, prior to the analysis, no specific information was available regarding the order and relatedness of the effects across subjects and ROIs. It is worth noting that the data were skewed with a longer right tail than left (black solid curve in Fig. 3a and Fig. 3b). When fitted at each ROI separately with GLM (simple regression in this case) using the overall ToMI as an explanatory variable, the model yielded lackluster fitting (Fig. 3a) in terms of skewness, the two tails, and the peak area. As shown in Fig. 1, five ROIs (R PCC, R TPJp, L IPL, L TPJ, and L aMTS/aMTG) reached a two-tailed significance level of 0.05, and two ROIs (PCC/PrC and vmPFC) achieved a two-tailed significance level of 0.1 (or one-tailed significance level of 0.05 if directionality was a priori known). However, the burden of FWE correction (e.g., Bonferroni) for the ROI-based approach with univariate GLM is so severe that none of the ROIs could survive the penalizing metric.
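The severity of the Bonferroni penalty for 21 ROIs can be made concrete with a few lines of arithmetic. The second quantity below, the chance of at least one false positive among 21 uncorrected tests, relies on the simplifying assumption that the tests are independent, which is not claimed for the actual ROI data.

```python
n_rois = 21
alpha = 0.05

# Per-ROI significance level required by the Bonferroni correction
bonferroni_threshold = alpha / n_rois

# Chance of at least one false positive across 21 uncorrected tests,
# under the simplifying assumption that the tests are independent
fwe_uncorrected = 1 - (1 - alpha) ** n_rois

print(f"Bonferroni per-ROI threshold: {bonferroni_threshold:.4f}")
print(f"uncorrected family-wise error rate: {fwe_uncorrected:.2f}")
```

Each ROI would therefore need a p-value of roughly 0.0024 or less to survive, a bar that none of the ROIs in this dataset cleared.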

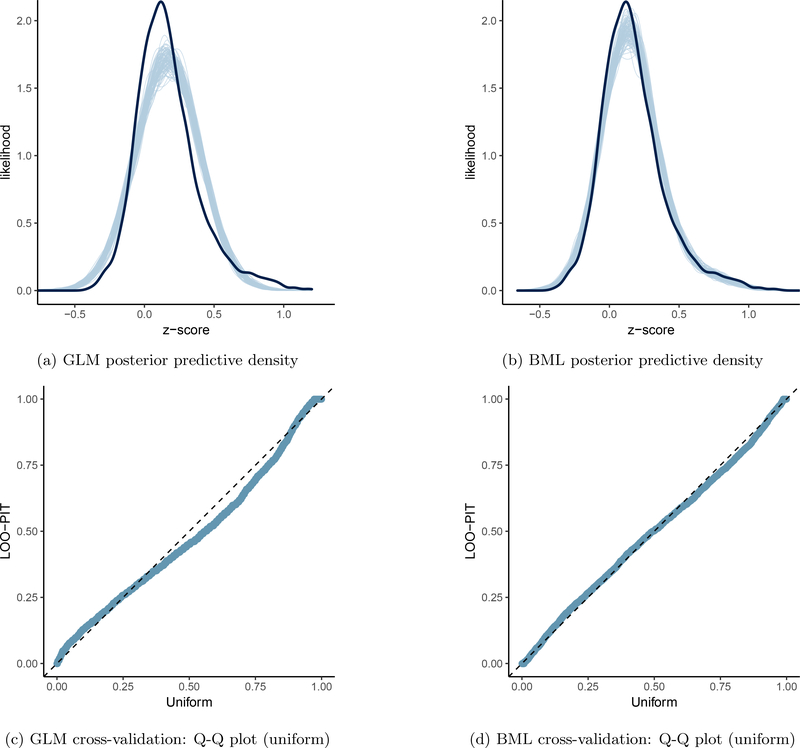

Figure 3:

Model performance comparisons through posterior predictive checks and cross-validations between conventional univariate GLM (a and c) and BML (b and d). The subfigures a and b show the posterior predictive density overlaid with the raw data from the 124 subjects at the 21 ROIs for GLM and BML, respectively: the solid black curve is the raw data at the 21 ROIs with linear interpolation, while the fat curve in light blue is composed of 100 sub-curves, each of which corresponds to one draw from the posterior distribution based on the respective model. The differences between the two curves indicate how well the respective model fits the raw data. BML fitted the data better than GLM at the peak and both tails as well as in skewness, because partial pooling shrinks the data from both ends toward the center; this better fit supports our adoption of BML. The subfigures c and d contrast GLM and BML through cross-validation with leave-one-out log predictive densities through the calibration of marginal predictions from 100 draws; the calibration is assessed by comparing probability integral transformation (PIT) checks to the standard uniform distribution. The diagonal dashed line indicates a perfect calibration: there is some suboptimal calibration for both models, but BML is clearly a substantial improvement over GLM. To simulate the posterior predictive data for the conventional ROI-based approach (a and c), the Bayesianized version of GLM (15) was adopted with a noninformative uniform prior for the population parameters.

Figure 1:

Comparisons of results between the conventional GLM and BML

The ROI data were fitted with LME (16) and BML (17) using the overall ToMI as an explanatory variable through, respectively, the R (R Core Team, 2017) package lme4 (Bates et al., 2015) and Stan with the code translated to C++ and compiled. Runtime for BML was 5 minutes including approximately 1 minute of code compilation on a Linux system (Fedora 25) with AMD Opteron 6376 at 1.4 GHz. All the parameter estimates at the population level were quite similar between the two models (Table 4(a)), indicating that the weakly informative priors we adopted for hyperparameters in BML had little impact on parameter estimation. However, of interest here are the effects at the entity (i.e., ROI), not population, level, which could be derived through BML but not LME. As for those effects at the ROI level, compared to the traditional ROI-based GLM, the shrinkage under BML can be seen in Fig. 1: most effect estimates were dragged toward the center. Similar to the ROI-based GLM without correction, BML demonstrated (Figures 1 and 2) strong evidence within 95% quantile interval of the overall ToMI effect at six ROIs (R PCC, R TPJp, L IPL, PCC/PrC, L TPJ, and L aMTS/aMTG), and within 90% (or 95% if directionality was a priori known) quantile interval at two additional ROIs (dmPFC and vmPFC).

Table 4:

Comparisons among GLM, LME and BML. (a) The seed-based correlation results at 21 ROIs from 124 subjects were fitted with LME (using the R package lme4) and BML (using Stan with 4 chains and 1,000 iterations) separately, in which overall ToMI was an explanatory variable. Random effects under LME correspond to group/entity-level effects plus family-specific parameters (standard deviation σ for the residuals ϵij) under BML, while fixed effects under LME correspond to population-level effects under BML. The column headers SD, QI, and ESS are short for standard deviation, quantile interval, and effective sample size, respectively. The parameter estimates from the LME and BML outputs (columns in gray) are very similar, even though priors were injected into BML. All R̂ values under BML were less than 1.1, indicating that all the 4 chains converged well. The effective sample sizes for the population- and group/entity-level effect of ToMI were 468 and 947, respectively, enough to warrant quantile accuracy in summarizing the posterior distributions. (b) Comparisons between GLM and BML. To directly compare with BML, the Bayesianized version of GLM (15) was fitted with the data, and the higher predictive accuracy of BML is seen here with its substantially lower out-of-sample deviance measured by the leave-one-out information criterion (LOOIC), the widely applicable (or Watanabe-Akaike) information criterion (WAIC) through leave-one-out cross-validation, and the corresponding standard error (SE).

(a) Comparisons: LME and BML

| Term | LME Estimate | LME SD | BML Estimate | BML SD | BML 95% QI | BML ESS | BML R̂ |
|---|---|---|---|---|---|---|---|
| sd(ξ0) | 0.153 | - | 0.162 | 0.027 | [0.118, 0.225] | 551 | 1.00 |
| sd(ξ1) | 0.008 | - | 0.009 | 0.002 | [0.005, 0.014] | 947 | 1.00 |
| corr(ξ0, ξ1) | 0.88 | - | 0.773 | 0.161 | [0.366, 0.985] | 1054 | 1.00 |
| sd(π) | 0.076 | - | 0.077 | 0.006 | [0.066, 0.091] | 500 | 1.01 |
| b0 | 0.168 | 0.034 | 0.167 | 0.036 | [0.094, 0.241] | 162 | 1.03 |
| b1 | 0.007 | 0.004 | 0.007 | 0.004 | [−0.001, 0.015] | 468 | 1.00 |
| σ | 0.153 | - | 0.153 | 0.002 | [0.149, 0.157] | 2000 | 1.00 |

(b) Comparisons: GLM and BML

| Model | LOOIC | SE |
|---|---|---|
| GLM | −300.39 | 98.25 |
| BML | −2247.06 | 86.42 |
| GLM - BML | 1946.67 | 96.35 |

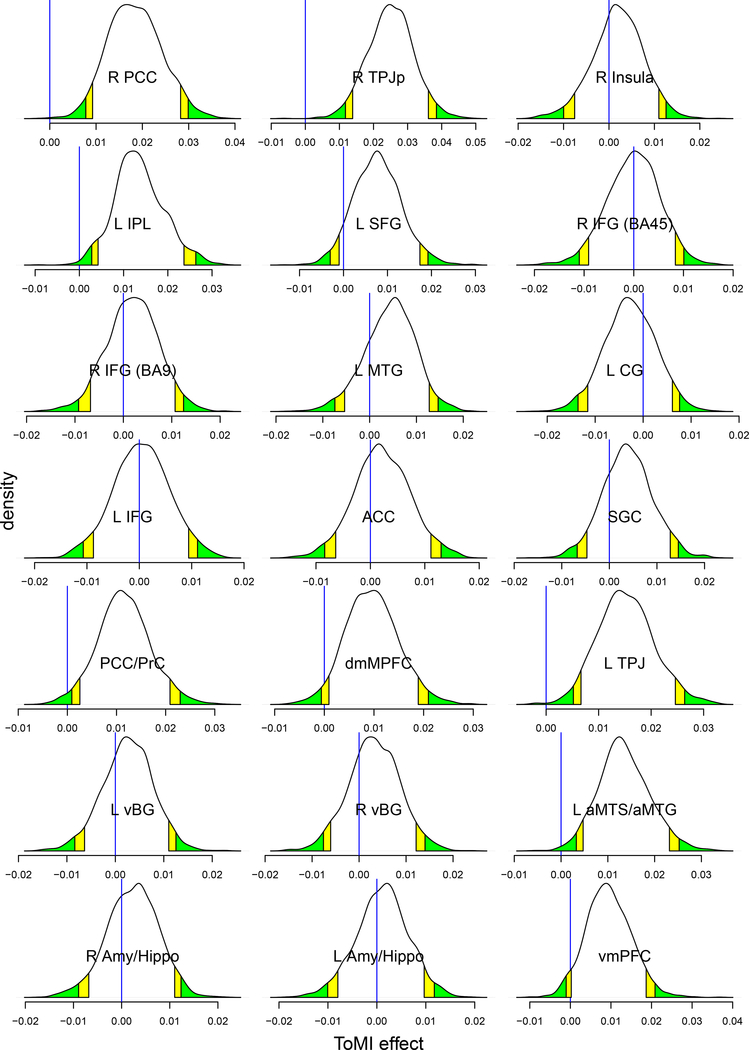

Figure 2:

Posterior density distributions based on 2000 draws from BML. The vertical blue line indicates zero ToMI effect, yellow and green tails mark the 90% and 95% quantile intervals, respectively, and the ROIs with strong evidence of ToMI effect can be identified as the blue line being within the color tails. Compared to the conventional confidence interval that is flat and inconvenient to interpret, the posterior density provides much richer information about each effect such as spread, shape and skewness.

One exception to the general shrinkage under BML is that the median effect, 0.025, at the region of R TPJp (second row in the table and box plot of Fig. 1) was actually higher than that under GLM, 0.018. Such an exception occurred because the final result is a combination of, or a tug of war between, the shrinkage impact as shown in (8) and the correlation structure among the ROIs as shown in (9). Noticeably, the quality and fitness of BML can be diagnosed and verified through posterior predictive check (Fig. 3a and Fig. 3b) that compares the observed data with the simulated data based on the model: not only did BML accommodate the skewness of the data better than GLM, but the partial pooling also rendered a much better fit for the peak and both tails. Cross-validation through LOO (Table 4(b), Fig. 3c and Fig. 3d) also manifested the advantage of BML fitting over GLM. Nevertheless, there is still room for the improvement of BML: the peak area could be fitted better, which may require nonlinearity or incorporating other potential covariates.

One aspect in which the ROI-based BML clearly excels is the completeness and transparency in results reporting: if the number of ROIs is not overwhelming (e.g., less than 100), the summarized results for every ROI can be completely presented in a tabular form (cf. Fig. 1) and in full distributions of posterior density (Fig. 2). It is worth emphasizing that Bayesian inferences focus less on the point estimate of an effect and its associated quantile interval, but more on the whole posterior density as shown in Fig. 2 that offers more detailed information about the effect uncertainty. Unlike the whole brain analysis in which the results are typically reported as the tips of icebergs above the water, posterior density reveals the spread, shape and skewness regardless of the statistical evidence. In addition, one does not have to stick to a single harsh thresholding when deciding a criterion on the ROIs for discussion; for instance, even if an ROI lies outside of, but close to, the 95% quantile interval (e.g., dmPFC and vmPFC in Figures 1 and 2), it can still be reported and discussed as long as all the details are revealed. Such flexibility and transparency are difficult to achieve through cluster thresholding at the whole brain level. As a counterpart to NHST, a probability metric could still be provided for each effect under BML in the sense as illustrated in Table 5; however, we opt not to do so for two reasons: 1) such a probability measure could be easily misinterpreted in the NHST sense, and, more importantly, 2) it is the predictive intervals shown in Fig. 1 and the complete posterior distributions illustrated in Fig. 2, not the single probabilities, that fully characterize the posterior distribution, providing richer information than just binary (“in or out”) thresholding.

Table 5:

Interpretation differences between NHST and Bayesian framework

| | Probability p | Effect Interval [L, U] |
|---|---|---|
| NHST | If H0 is true, the probability of having the current result or more extreme is p (based on what would have occurred under other possible datasets); e.g., P(\|T(y)\| > tc \| easy = difficult) = p, where T(y) is a statistic (e.g., Student’s t) based on data y and tc is a threshold. | If the study is exactly repeated an infinite number of times, the percentage of those confidence intervals that will cover the true effect is 1 − p; e.g., P(L ≤ easy − difficult ≤ U) = 1 − p, where “easy − difficult” is treated as being fixed while L and U are random. |
| Bayesian | The probability of having the current result being different from zero is p (given the dataset); e.g., P(easy − difficult < L or easy − difficult > U \| y) = p, where L and U are lower and upper bounds of the (1 − p)100% quantile interval. | The probability that the effect falls in the predictive interval is 1 − p (given the data); e.g., P(L ≤ easy − difficult ≤ U \| y) = 1 − p, where “easy − difficult” is considered random while L and U are known conditional on data y. |

Interestingly, those four regions (L IPL, L TPJ, R PCC, PCC/PrC) that passed the FWE correction at the voxel-wise p-cutoff of 0.005 (Table 2) in the whole brain analysis were confirmed with the ROI-based BML (Figures 1 and 2). Moreover, another four regions (L aMTS/aMTG, R TPJp, vmPFC, dmPFC) revealed some evidence of ToMI effect under BML. In contrast, these four regions did not stand out in the whole brain analysis after the application of FWE correction at the cluster level regardless of the voxel-wise p-threshold (Table 2), even though they would have been evident if the cluster size requirement were not as strictly imposed.

Discussion

Applied to the neuroimaging context, BML adopts partial pooling and can be considered as a trade-off between two extreme modeling choices (Appendix D): complete pooling and no pooling. Complete pooling assumes no variations among the entities (voxels, regions, or surface nodes); that is, all entities are assumed to be identical or homogeneous. Because of the omnipresence of regional heterogeneity in the brain, nobody would be interested in such a modeling strategy in neuroimaging, but it serves here as an extreme anchor for the convenience of comparison. In contrast, no pooling, currently adopted in massively univariate modeling, fully trusts the data, and offers the best fit separately for each individual entity to the current data at hand. As a consequence, each entity is considered autonomous and independent of the others in the analytical model. To some extent, the current approaches pool the information across the neighboring voxels in the step of controlling FPR through clusterization. However, there are two major disadvantages associated with no pooling: it carries the risk of overfitting and thus of poor inference or prediction for future data; and, to control for multiplicity and overconfidence, the current approaches compromise in efficiency by paying the price in over-penalizing small regions through leveraging the spatial extent.

Through an adaptively regularizing prior (e.g., Gaussian distribution among brain regions), partial pooling achieves a counterbalance between homogenization and autonomy. Specifically, BML treats each entity as an instantiation generated through a random process that adaptively regularizes the entities, and it conservatively pools the effect of each entity toward the center. In other words, the methodology sacrifices model performance in the form of a poorer fit in samples (observed data) for the sake of better inference and better fit (prediction) out of samples (future data) through partial pooling (McElreath, 2016). Therefore, BML may fit each region individually worse than univariate GLM, but BML excels in collective fitting and overall model performance. It is this counterbalance through regularization that effectively controls the errors of incorrect sign and incorrect magnitude; and as a byproduct, BML dissolves the multiplicity issue and treats all regions equally, purely based on their signal strength, regardless of their spatial size.

Current approaches to correcting for FPR

Arbitrariness is involved in the multiple testing correction of parametric methods. In the conventional statistics framework, the thresholding bar ideally plays the role of winnowing the wheat (true effect6) from the chaff (random noise), and a p-value of 0.05 is commonly adopted as a benchmark for comfort in most fields. However, one big problem facing the correction methods for multiple testing is the arbitrariness surrounding the thresholding, in addition to the arbitrariness of 0.05 itself. Both Monte Carlo simulations and random field theory start with a probability threshold (e.g., 0.01, 0.005, 0.001) at the voxel (or node) level, and a spatial threshold is determined in cluster size so that overall FPR can be properly controlled at the cluster level. If clusters are analogized as islands, each of them may be visible at a different sea level (voxel-wise p-value). As the cluster size based on statistical filtering plays a leveraging role, with a higher statistical threshold leading to a smaller cluster cutoff, a neurologically or anatomically small region can only gain ground with a low p-value while large regions with a relatively large p-value may fail to survive the criterion. Similarly, a lower statistical threshold (higher p) requires a higher cluster volume, so smaller regions have little chance of reaching the survival level. In addition, this arbitrariness in statistical threshold at the voxel level poses another challenge for the investigator: one may lose spatial specificity with a low statistical threshold since small regions that are contiguous may get swamped by the overlapping large spatial extent; on the other hand, sensitivity may have to be compromised for large regions with low statistic values when a high statistical threshold is chosen. 
A recent critique on the rigor of cluster formation through parametric modeling (Eklund et al., 2016) has resulted in a trend to require a higher statistical thresholding bar (e.g., with the voxel-wise threshold below 0.005 or even 0.001); however, the arbitrariness persists because this trend only shifts the probability threshold range.
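The dependence of cluster-based results on the primary threshold can be illustrated with a toy two-dimensional statistic map. In the sketch below, the map, the two voxel-level thresholds, and the matching minimum cluster sizes are all made up for illustration; in practice the cluster-size cutoff would come from Monte Carlo simulations or random field theory.

```python
def surviving_clusters(stat_map, voxel_threshold, min_cluster_size):
    """Return 4-connected suprathreshold clusters that meet the size cutoff."""
    rows, cols = len(stat_map), len(stat_map[0])
    seen = set()
    survivors = []
    for r in range(rows):
        for c in range(cols):
            if (r, c) in seen or stat_map[r][c] < voxel_threshold:
                continue
            # Flood fill over neighboring voxels that pass the threshold
            cluster, stack = [], [(r, c)]
            seen.add((r, c))
            while stack:
                i, j = stack.pop()
                cluster.append((i, j))
                for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    a, b = i + di, j + dj
                    if (0 <= a < rows and 0 <= b < cols
                            and (a, b) not in seen
                            and stat_map[a][b] >= voxel_threshold):
                        seen.add((a, b))
                        stack.append((a, b))
            if len(cluster) >= min_cluster_size:
                survivors.append(cluster)
    return survivors

# Toy 6 x 10 statistic map with two separate regions
stat_map = [[0.0] * 10 for _ in range(6)]
for r, c in ((1, 1), (1, 2)):   # small region with a strong effect
    stat_map[r][c] = 5.0
for r in range(2, 5):           # large region with a weaker effect
    for c in range(5, 9):
        stat_map[r][c] = 2.5

# Liberal voxel threshold paired with a large cluster cutoff
liberal = surviving_clusters(stat_map, voxel_threshold=2.0, min_cluster_size=10)
# Stringent voxel threshold paired with a small cluster cutoff
strict = surviving_clusters(stat_map, voxel_threshold=4.0, min_cluster_size=2)

print("liberal threshold keeps clusters of size:", [len(c) for c in liberal])
print("strict threshold keeps clusters of size:", [len(c) for c in strict])
```

With the liberal setting only the large, weaker region survives, whereas with the strict setting only the small, stronger region does, so the reported set of regions changes with the choice of primary threshold.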

Permutation testing is limited in modeling capability. For example, it shares the same limitations as univariate GLM in handling missing data and sophisticated random-effects structures; in addition, it does not have an effective approach to taking into consideration the reliability of effect estimates. Furthermore, it has its share of arbitrariness in multiple testing correction too. As an alternative to parametric methods, an early version of permutation testing (Nichols and Holmes, 2001) bears similar issues of arbitrariness. It starts with the construction of a null distribution through permutations in regard to a maximum statistic (either maximum testing statistic or maximum cluster size based on a predetermined threshold for the testing statistic). The original data are assessed against the null distribution, and the top winners at a designated rate (e.g., 5%) among the testing statistic values or clusters will be declared as the surviving ones. While the approach is effective in maintaining the nominal FWE level, two problems are embedded in the strategy. First of all, the spatial properties are not directly taken into consideration in the case of maximum testing statistic. For example, an extreme case to demonstrate the spatial extent issue is that a small cluster (or even a single voxel) might survive the permutation testing as long as its statistic value is high enough (e.g., t(20) = 6.0) while a large cluster with a relatively small maximum statistic value (e.g., t(20) = 2.5) would fail to pass the filtering. The second issue is the arbitrariness involved in the primary thresholding for the case of maximum cluster size: a different primary threshold may end up with a different set of clusters. That is, the reported results may depend strongly on an arbitrary threshold. 
Addressing these two problems, a later version of permutation testing (Smith and Nichols, 2009) takes an integrative consideration between signal strength and spatial relatedness, and thus solves both problems involving the earlier version of permutation testing7. Such an approach has been implemented in programs such as Randomise and PALM in FSL using threshold-free cluster enhancement (TFCE) (Smith and Nichols, 2009) and in 3dttest++ in AFNI using equitable thresholding and clusterization (ETAC) (Cox, 2018). Nevertheless, the adoption of permutations in neuroimaging, regardless of the specific version, is not directly about the concern of distribution violation as in the classical nonparametric setting (in fact, a pseudo-t value is still computed at each voxel in the process); rather, it is the randomization among subjects in permutations that creates a null distribution against which the original data can be tested at the whole brain level.
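The maximum-statistic construction of the earlier approach can be sketched for a one-sample design with simulated data. The sketch assumes a sign-flipping scheme (each subject's data are multiplied by +1 or −1 at random), which is one common way to permute in this setting; the sample sizes, effect size, seed, and number of permutations are arbitrary choices, and none of this reproduces the implementation of any particular package.

```python
import math
import random
import statistics

def t_stat(values):
    """One-sample t statistic against zero."""
    n = len(values)
    return statistics.fmean(values) / (statistics.stdev(values) / math.sqrt(n))

def max_abs_t(data):
    """Largest |t| across regions; data is a list of per-subject rows."""
    n_regions = len(data[0])
    return max(abs(t_stat([row[j] for row in data])) for j in range(n_regions))

rng = random.Random(11)  # arbitrary seed
n_subjects, n_regions = 20, 10
# Simulated data: region 0 carries a true effect, the others are pure noise
data = [[rng.gauss(2.0 if j == 0 else 0.0, 1.0) for j in range(n_regions)]
        for _ in range(n_subjects)]

# Null distribution of the maximum statistic via random sign flips per subject
null_max = []
for _ in range(1000):
    signs = [rng.choice((-1.0, 1.0)) for _ in range(n_subjects)]
    flipped = [[s * v for v in row] for s, row in zip(signs, data)]
    null_max.append(max_abs_t(flipped))

null_max.sort()
threshold = null_max[int(0.95 * len(null_max))]  # 95th percentile of the maxima
observed = [abs(t_stat([row[j] for row in data])) for j in range(n_regions)]
survivors = [j for j, t in enumerate(observed) if t > threshold]

print(f"max-statistic threshold: {threshold:.2f}")
print("surviving regions:", survivors)
```

Because every region is compared against the distribution of the maximum, the threshold is noticeably higher than the single-test critical value, and only signal strength, not spatial extent, decides which regions pass.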

We argue that spatial size as a correction leverage unnecessarily pays the cost of lower identification power to achieve the nominal false positive level. All of the current correction methods, parametric and nonparametric, are still meant to use spatial extent or the combination of spatial extent and signal strength as a filter to control the overall FPR at the whole brain level. They all share the same hallmark of sharp thresholding at a preset acceptance level (e.g., 5%) under NHST, and they all use spatial extent as a leverage, penalizing regions that are anatomically small and rewarding large smooth regions (Cremers et al., 2017). The combination of signal strength and spatial extent adopted in the recent permutation methods such as TFCE and ETAC is advantageous in addressing the issue of arbitrariness of primary thresholding in the cluster-based correction methods and the primary permutation approach. Nevertheless, such a method of “extent and height” still discriminates against spatially small regions, even though less so. For instance, between two brain regions with the same signal strength, the anatomically larger one would pass the current approaches, including TFCE and ETAC, more easily than its smaller counterpart; between one case with one isolated region and another with two or more contiguous regions, the former may fail to pass the current filtering methods even with a stronger signal strength. Due to the unforgiving penalty of correction for multiple testing, some workaround solutions have been adopted by focusing the correction on a reduced domain instead of the whole brain. For example, the investigator may limit the correction domain to gray matter or regions based on previous studies. Putting the justification for these practices aside, accuracy is a challenge in defining such masks; in addition, spatial specificity remains a problem, shared by the typical whole brain analysis, although to a lesser extent.

Questionable practices of correction for FPR under NHST

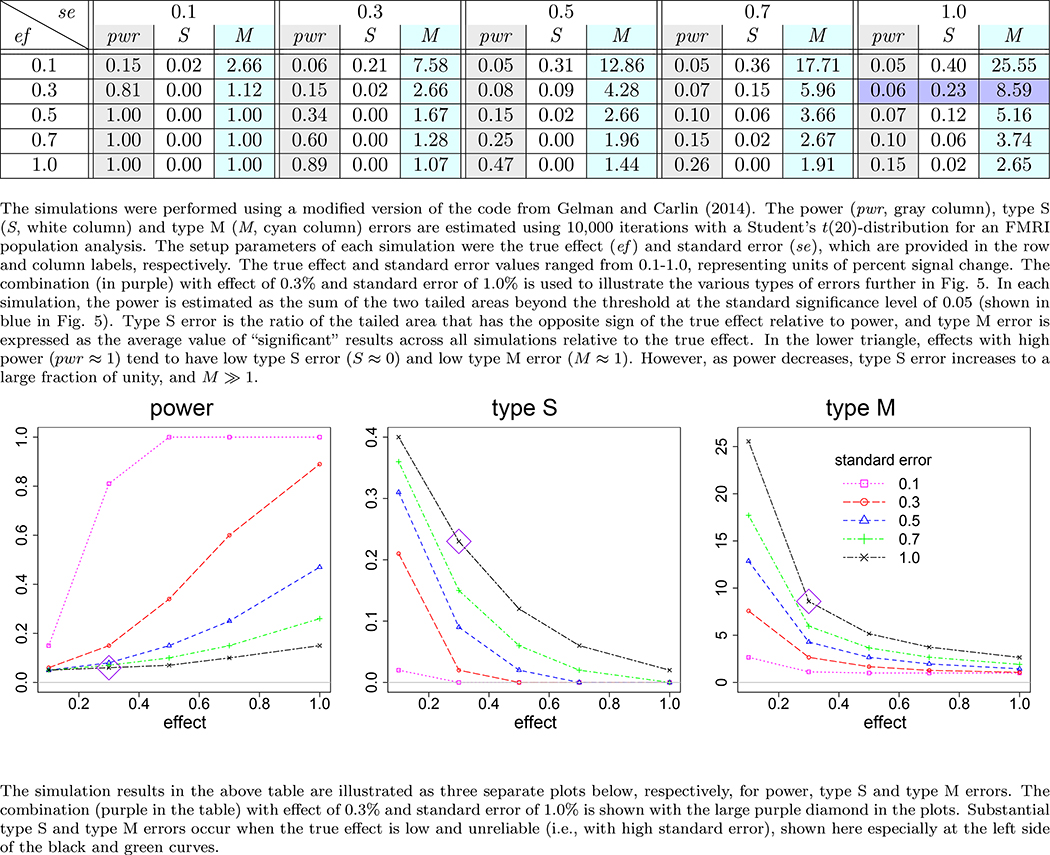

Univariate GLM is inefficient in handling neuroimaging data. It may work reasonably well if the following two conditions can be met: 1) no multiple testing, and 2) high signal-to-noise ratio (strong effect and high precision measurement) as illustrated in the lower triangular part of the table or right side of the curves in Fig. 4 (Appendix B). However, neither of the two conditions is likely satisfied with typical neuroimaging data. Due to the stringent requirements of correction for multiple testing across thousands of resolution elements in neuroimaging, a daunting challenge facing the community is the power inefficiency or high type II errors under NHST. Even if prior information is available as to which ROIs are potentially involved in a study, an ROI-based univariate GLM would still be obliged to share the burden of correction for multiplicity equally and agnostically to any such knowledge. The modeling approach usually does not have the luxury to survive the penalty, as shown with our experimental data in the table of Fig. 1, unless only a few ROIs are included in the analysis. Furthermore, with much of the low-hanging fruit with relatively strong signal strength (e.g., 0.5% signal change or above) having been largely plucked, the typical effect under investigation nowadays is usually subtle and likely small (e.g., in the neighborhood of 0.1% signal change). Compounded with the presence of a substantial amount of noise and suboptimal modeling (e.g., ignoring the HDR shape subtleties), detection power is usually low. It might be counterintuitive, but one should realize that the noisier or more variable the data, the less confident one should be about any inferences based on statistical significance, as illustrated with the type S and type M errors in Figure 5 (Appendix B). With fixed threshold correction approaches, small regions are hard to funnel through the FPR criterion even if their effect magnitude is the same as or even higher than that of larger regions. 
Even for approaches that take into consideration both spatial extent and effect magnitude (TFCE, ETAC), small regions remain disadvantaged when their effect magnitude is at the same level as their larger counterparts.

Figure 4:

Power, type S and type M errors estimated from simulations

Figure 5:

Illustration of the concept and interpretation for power, type I, type S and type M errors (Gelman, 2015). Suppose that there is a hypothetical Student’s t(20)-distribution (black curve) for a true effect (blue vertical line) of 0.3 and a corresponding standard error of 1.0 percent signal change, a scenario highlighted in purple in Fig. 4. Under the null hypothesis (red vertical line and dot-dashed green curve), two-tailed testing with a type I error rate of 0.05 leads to having thresholds at ±2.086; FPR = 0.05 corresponds to the null distribution’s total area beyond these two critical values (marked with red diagonal lines). The power is the total area of the t(20)-distribution for the true effect (black curve) beyond these thresholds, which is 0.06 (shaded in blue). The type S error is the ratio of the blue area in the true effect distribution’s left tail beyond the threshold of −2.086 to the area in both tails, which is 23% here (i.e., the ratio of the “significant” area in the wrong-signed tail to that of the total “significant” area). If a random draw from the t(20)-distribution under the true effect happens to be 2.2 (small gray square), it would be identified as statistically significant at the 0.05 level, and the resulting type M error would quantify the magnification of the estimated effect size as 2.2/0.3 ≈ 7.33, which is much larger than unity.
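The quantities described in the caption of Fig. 5 can be approximated with a short numerical sketch. The Python code below is an illustration under stated assumptions, not the code used to produce the figure: it assumes the estimate follows a t(20)-distribution shifted to the true effect (0.3) and scaled by the standard error (1.0), and it integrates the density with Simpson's rule. The helper names (`t_pdf`, `simpson`, `design_errors`) are hypothetical. Besides power and the type S error, it also computes an expected type M error, i.e., the average magnification among statistically significant draws, which generalizes the single-draw ratio 2.2/0.3 in the caption.

```python
import math


def t_pdf(x, df):
    """Density of the standard Student's t-distribution with df degrees of freedom."""
    log_norm = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
                - 0.5 * math.log(df * math.pi))
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def simpson(f, a, b, n=20000):
    """Composite Simpson's rule on [a, b]; n must be even."""
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def design_errors(true_effect, se, df, crit, far=200.0):
    """Power, type S error and expected type M error for a positive true effect.

    Assumption: the test statistic is shift + T with shift = true_effect / se
    and T ~ t(df); a result is 'significant' when |shift + T| > crit.
    The upper integration limit `far` stands in for infinity.
    """
    shift = true_effect / se
    z_hi = crit - shift  # T above z_hi  -> significant with the correct sign
    z_lo = crit + shift  # T below -z_lo -> significant with the wrong sign
    p_hi = simpson(lambda t: t_pdf(t, df), z_hi, far)
    p_lo = simpson(lambda t: t_pdf(t, df), z_lo, far)  # uses symmetry of t
    power = p_hi + p_lo
    type_s = p_lo / power
    # Expected |statistic| over the two significant tails, relative to the shift.
    m_hi = simpson(lambda t: (shift + t) * t_pdf(t, df), z_hi, far)
    m_lo = simpson(lambda t: (t - shift) * t_pdf(t, df), z_lo, far)
    type_m = (m_hi + m_lo) / power / shift
    return power, type_s, type_m


power, type_s, type_m = design_errors(true_effect=0.3, se=1.0, df=20, crit=2.086)
print(f"power = {power:.3f}, type S = {type_s:.3f}, expected type M = {type_m:.2f}")
```

Under these assumptions, the power and type S values should come out close to the 0.06 and 23% quoted in the caption. The expected type M error is an average over all significant draws, so it need not equal the single-draw value of 7.33.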

Furthermore, dichotomous thinking and decision-making under NHST are usually not fully compatible with the underlying mechanism under investigation. Current knowledge regarding brain activations has not reached a point where one can make accurate dichotomous claims as to whether a specific brain region under a condition is activated or not; lack of underlying “ground truth” has made it difficult to validate any but the most basic models. The same issue can be raised about the binary decision as to whether the responses under two conditions are the same or different. Therefore, a pragmatic mission is to detect activated regions in terms of practical, instead of statistical, significance. The conventional NHST runs against the idealistically null point H0, and declares a region as having no effect based on statistical significance with a safeguard set for type I error. When the power is low, not only will reproducibility suffer, but the chance of having an incorrect sign for a statistically significant effect will also be substantial (Fig. 4 and Fig. 5). Only when the power reaches 30% or above does the type S error rate become low. Publication bias due to the thresholding funnel contributes to type S and type M errors as well. The sharp thresholding imposed by the widely adopted NHST strategy uses a single threshold through which a high-dimensional dataset is funneled. An ongoing debate has been simmering for a few decades regarding the legitimacy of NHST, ranging from cautionary warnings against misuses of NHST (Wasserstein and Lazar, 2016), to tightening the significance level from 0.05 to 0.005 (Benjamin et al., 2017), to totally abandoning NHST as a gatekeeper (McShane et al., 2017; Amrhein and Greenland, 2017). The poor controllability of type S and type M errors is tied up with widespread problems across many fields.
Reporting the effect estimates is neither a common practice nor a requirement in neuroimaging; therefore, power analysis for a brain region under a task or condition is largely obscure and unavailable, let alone the assessment of type S and type M errors.

Lastly, reproducibility may deteriorate through inefficient modeling and dichotomous inferences. Relating the discussion to the neuroimaging context, the overall or global FPR is the probability of having data as extreme as or more extreme than the current result, under the assumption that the result was produced by some “random number generator,” which is built into algorithms such as Monte Carlo simulations, random field theory, and randomly shuffled data as pseudo-noise in permutations. It boils down to the question: are the data truly pure noise (even though spatially correlated to some extent) in most brain regions? Since controlling for FPR hinges on the null hypothesis of no effect, the p-value itself is a random variable that is a nonlinear continuous function of the data at hand, and therefore it has a sampling distribution (e.g., uniform(0,1) distribution if the null model is true). In other words, it might be under-appreciated that even identical experiments would not necessarily replicate an effect that is dichotomized in the first one as statistically significant (Lazzeroni et al., 2016). The common practice of reporting only the statistically significant brain regions and comparing them to the nonsignificant regions based on the imprimatur of a statistic- or p-threshold can be misleading: the difference between a highly significant region and a nonsignificant region could simply be explained by pure chance. The binary emphasis on statistical significance unavoidably leads to an investigator only focusing on the significant regions and diverting attention away from the nonsignificant ones. More importantly, the traditional whole brain analysis usually leads to selective reporting of surviving clusters conditional on statistical significance through dichotomous thresholding, potentially inducing type M errors and biasing estimates with exaggerated effect magnitude, as illustrated in Fig. 5. Rigor, stringency, and reproducibility are lifelines of science.
We think that there is more room to improve the current practice of NHST and to avoid information waste and inefficiency. Because of low power in FMRI studies and the questionable practice of binarized decisions under NHST, a Bayesian approach combined with integrative modeling offers a platform to more accurately account for the data structure and to leverage the information across multiple levels.
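The point that the p-value is itself a random variable can be illustrated with a small simulation. The Python sketch below is a simplified illustration, not an analysis from this study: it assumes a two-sided z-test with a known standard error, and the mean z-score of 2.0 in the second scenario is a hypothetical effect chosen so that a typical result sits near the 0.05 threshold. The function names are likewise hypothetical.

```python
import math
import random


def two_sided_p(z):
    """Two-sided p-value of a z-statistic under the standard normal null."""
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_p_values(mean_z, n_rep, seed=0):
    """p-values from n_rep identical experiments whose z-statistic is N(mean_z, 1)."""
    rng = random.Random(seed)
    return [two_sided_p(rng.gauss(mean_z, 1.0)) for _ in range(n_rep)]


# Scenario 1: the null model is true, so p-values are uniform on (0, 1).
null_p = simulate_p_values(mean_z=0.0, n_rep=20000, seed=1)
frac_null_sig = sum(p < 0.05 for p in null_p) / len(null_p)
mean_null_p = sum(null_p) / len(null_p)

# Scenario 2: a real effect whose expected z-score is 2.0 (hypothetical).
alt_p = simulate_p_values(mean_z=2.0, n_rep=20000, seed=2)
frac_alt_sig = sum(p < 0.05 for p in alt_p) / len(alt_p)

print(f"null: fraction p < 0.05 = {frac_null_sig:.3f}, mean p = {mean_null_p:.3f}")
print(f"effect with E[z] = 2.0: fraction p < 0.05 = {frac_alt_sig:.3f}")
```

Under the null, about 5% of the simulated p-values fall below 0.05 and their mean is near 0.5, as expected for a uniform distribution. In the second scenario, only about half of the exact replications cross the 0.05 threshold, even though the underlying effect is identical in every run.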

What Bayesian modeling offers

Bayesian inferences are usually more compatible with the research, not null, hypothesis. Almost all statistics consumers (including the authors of this paper) were a priori trained within the conventional NHST paradigm, and their mindsets are usually familiar with and entrenched within the concept and narratives of p-value, type I error, and dichotomous interpretations of results. Out of the past shadows cast by the theoretical and computational hurdles, as well as the image of subjectivity, Bayesian methods have gradually emerged into the light. One direct benefit of Bayesian inference, compared to NHST, is its concise, intuitive and straightforward interpretation, as illustrated in Table 5. For instance, among its controversies (Morey et al., 2016), the conventional confidence interval weighs equally all possible values a parameter could take within the interval, regardless of how implausible some of them are; in contrast, the quantile interval under the Bayesian framework is more subtly expressed through the corresponding posterior density (Fig. 2). Even though the NHST modeling strategy literally falsifies the straw man H0, the real intention is to confirm the alternative (or research) hypothesis through rejecting H0; in other words, the falsification of H0 is only considered an intermediate step under NHST, and the ultimate goal is the confirmation of the intended hypothesis. In contrast, under the Bayesian paradigm, the investigator’s hypothesis is directly placed under scrutiny through incorporating prior information, model checking and revision. Therefore, the Bayesian paradigm is more fundamentally aligned with the hypothetico-deductivism axis along the classic view of falsifiability or refutability by Karl Popper (Gelman and Shalizi, 2013).

In addition to interpretational convenience, Bayesian modeling is less vulnerable to the amount of data available and to the issue of multiple testing. Conventional statistics relies heavily on large sample sizes and asymptotic properties; in contrast, Bayesian inferences bear a direct interpretation conditional on the data regardless of sample size. Practically speaking, should we fully “trust” the effect estimate at each ROI or voxel at its face value? Some may argue that the effect estimate from the typical neuroimaging individual or population analysis has the desirable property of unbiasedness, as asymptotically promised by the central limit theorem. However, in reality the asymptotic behavior requires a very large sample size, a luxury difficult for most neuroimaging studies to reach. As the determination of a reasonable sample size depends on signal strength, brain region, and noise level, the typical sample size in neuroimaging tends to get overstretched, creating a hotbed for a low power situation and potentially high type S and type M errors. Another fundamental issue with the conventional univariate modeling approach in neuroimaging is the two-step process: first, pretend that the voxels or nodes are independent of each other, and build a separate model for each spatial element; then, handle the multiple testing issue using spatial relatedness to only partially, not fully, recoup the efficiency loss. In addition to the conceptual novelty for those with little experience outside the NHST paradigm, BML simplifies the traditional two-step workflow with one integrative model, fully shunning the multiple testing issue.

Through integrative incorporation across ROIs, the BML approach renders conservative effect estimation in place of controlling FPR. Unlike the p-value under NHST, which represents the probability of obtaining the current data generated by an imaginary machinery (e.g., assuming that no effect exists), a posterior distribution for an effect under the Bayesian framework directly and explicitly shows the uncertainty of our current knowledge conditional on the current data and the prior model. From the Bayesian perspective, we should not put too much faith in point estimates. In fact, not only should we not fully trust the point estimates from GLM with no pooling, but we should also not rely too much on the centrality (median, mean) of the posterior distribution from a Bayesian model. Instead, the focus needs to be placed more on the uncertainty, which can be visualized through the posterior distributions or characterized by the quantile intervals of the posterior distribution if summarization is required. Specifically, BML, as demonstrated here with the ROI data, is often more conservative in the sense that it does not “trust” the original effect estimates as much as GLM, as shown in Fig. 1; additionally, in doing so, it fits the data more accurately than the ROI-based GLM (Table 4(b) and Fig. 3). Furthermore, the original multiple testing issue under the massively univariate platform is deflected within one unified model: it is the type S, not type I, errors that are considered crucial and controlled under BML. Even though the posterior inferences at the 95% quantile interval in our experimental data were similar to the statistically significant results at the 0.05 level under NHST, BML in general is more statistically conservative than univariate GLM under NHST, as shown with the examples in Gelman et al. (2012).

We reiterate that the major difference is the assumption about the brain regions: a noninformative flat prior for the conventional GLM versus the Gaussian assumption for BML. With a uniform prior, all values on the real axis are equally likely; therefore, no information is shared across regions under GLM. On the other hand, it is worth mentioning that the Gaussian assumption for the priors including the likelihood under a Bayesian model is based on two considerations: one aspect is convention and pragmatism, and the other aspect is the fact that, per the maximum entropy principle, the most conservative distribution is Gaussian if the data have a finite variance (McElreath, 2016). However, a Bayesian model tends to be less sensitive to the model (likelihood or prior for the data in Bayesian terminology); in other words, even though the true data-generating process is always unknown, a model is only a convenient framework or prior knowledge with which to start the Bayesian updating process, so that a Bayesian model is usually less vulnerable to assumption violations. In contrast, statistical inferences with conventional approaches rely heavily on the sampling distribution assumptions. Through adaptive regularization, BML trades off poorer fit in sample for better inference and improved fit out of sample (McElreath, 2016); the amount of regularization is learned from the data through partial pooling that embodies the similarity assumption of effects among the brain regions. From the NHST perspective, BML can still commit type I errors, and its FPR could be higher under some circumstances than, for example, its GLM counterpart.
Such type I errors may sound alarmingly serious; however, the situation is not as severe as it appears for two reasons: 1) the concept of FPR and the associated model under NHST are framed with a null hypothesis, which is not considered pragmatically meaningful in the Bayesian perspective; and 2) in reality, inferences under BML most likely have the same directionality as the true effect because type S errors are well controlled across the board under BML (Gelman and Tuerlinckx, 2000). Just consider which of the following two scenarios is worse: (a) when power is low, the likelihood under NHST of mistakenly inferring that the BOLD response to the easy condition is higher than that to the difficult condition could be sizable (e.g., 30%), and (b) with the type S error rate controlled below, for example, 3.0%, BML might exaggerate the magnitude difference between the difficult and easy conditions by, for example, 2 times. While not celebrating scenario (b), we expect that most researchers would view scenario (a) as more problematic.
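The contrast between no pooling (flat prior) and partial pooling (Gaussian prior across regions) discussed above can be made concrete with a toy calculation. The Python sketch below is a deliberately simplified empirical-Bayes shortcut, not the full BML model, which estimates all parameters jointly and yields entire posterior distributions. The ROI estimates and standard errors are hypothetical numbers, and the between-region variance is obtained with a simple method-of-moments estimate. Each ROI estimate is assumed to follow N(theta_j, se_j^2) with theta_j drawn from a shared N(mu, tau^2).

```python
import statistics

# Hypothetical ROI effect estimates (percent signal change) and standard errors.
roi_est = [0.42, -0.15, 0.05, 0.30, -0.02, 0.18]
roi_se = [0.15, 0.12, 0.10, 0.20, 0.08, 0.11]


def partial_pool(est, se):
    """Shrink per-region estimates toward a shared mean (empirical-Bayes sketch)."""
    se2 = [s * s for s in se]
    # Method-of-moments estimate of the between-region variance, floored at zero.
    tau2 = max(0.0, statistics.variance(est) - statistics.fmean(se2))
    # Precision-weighted estimate of the shared mean mu.
    w = [1.0 / (v + tau2) for v in se2]
    mu = sum(wi * yi for wi, yi in zip(w, est)) / sum(w)
    # Shrinkage weight per region: noisier regions are pulled harder toward mu.
    shrink = [v / (v + tau2) for v in se2]
    pooled = [b * mu + (1.0 - b) * y for b, y in zip(shrink, est)]
    return mu, tau2, shrink, pooled


mu, tau2, shrink, pooled = partial_pool(roi_est, roi_se)
for y, s, b, p in zip(roi_est, roi_se, shrink, pooled):
    print(f"estimate {y:+.2f} (se {s:.2f}) -> pooled {p:+.3f} (shrinkage {b:.2f})")
```

Setting the between-region variance to infinity would reproduce the no-pooling GLM estimates (shrinkage of zero), whereas a finite value learned from the data pulls each estimate toward the shared mean in proportion to its uncertainty. This is the sense in which the amount of regularization adapts to the data.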