Abstract

Objective:

There is a clear need for improved quality of research publications in the area of cardiothoracic surgical education. With the goals of enhancing the power, rigor, and strength of educational investigations, the Thoracic Education Cooperative Group seeks to outline key concepts in successfully conducting such research.

Methods:

Literature and established guidelines for conduct of research in surgical education were reviewed, and recommendations were developed for investigators in thoracic surgical education.

Results:

Key steps in educational research are highlighted and discussed with regard to their application to cardiothoracic surgical education. Specifically, advice is provided in terms of developing a research question, educational methodology, ethical issues, and handling power and sample sizes. Additional caveats of educational research that are addressed include aspects of validity, survey conduct, and simulation research.

Conclusions:

Educational research can serve to enhance the practices and careers of current trainees, our scientific community, and thoracic surgical educators. To optimize the quality of such educational research, it is imperative that teachers, innovators, and contributors to academic scholarship in our field familiarize themselves with key steps in conducting educational studies.

Keywords: cardiothoracic surgery, surgical education, education research

In recent years, research in the realm of surgical education has evolved substantially. Scholarship in educational research has a frequent presence at the podium within our cardiothoracic surgical meetings, with entire sessions at times concentrating on topics of an educational nature. Certainly, outside of our niche subspecialty, surgical education research has grown exponentially over the last 2 decades, with surgeons devoting entire academic careers to educational pathways and attending national meetings focused on education alone. While interest in educational research has grown, there has been an inevitable lag in augmenting the strength of the educational research produced.

Claims abound regarding the frequent weaknesses of research studies in medical education. One example is the following pronouncement from an invited address to the American Research Association: “the quality of published studies in education and related disciplines, is, unfortunately, not high,” with the speaker going on to remark that methodology experts have found that more than 60% of such published research involves methods that are completely flawed.1,2 There are numerous current issues in improving research efforts in medical education, and they start with the most rudimentary of problems. For basic science, translational, and clinical investigators, it is obvious that research inquiries begin with a precisely defined research question; not surprisingly, this is also the key foundation of developing studies in medical education.3 Nonetheless, it has been shown that the most frequent reasons that reviewers reject articles submitted to conferences in medical education include the lack of a good research question, problem statement, or research hypothesis.4 Thus, there is clearly a need for more hypothesis-driven research, at the very minimum, without even delving into the means of evaluating said hypotheses.2

Similar findings have been drawn elsewhere, particularly within the realm of surgical education. A review of 292 published articles on surgical education found that the majority were editorial in nature, with less than 5% having an experimental basis.5 Frequent issues are, again, lack of explicitly stated and testable hypotheses, as well as lack of generalizability of issues arising from local, institutional concerns.5-9 Beyond the lack of appropriate research questions, a systematic review of medical education publications further found that additional issues with these articles included the absence of other key elements, such as missing results in a majority of articles (54%) and a lack of appropriate control group in others (56%).10

To ensure that research in medical and surgical education remains scientifically rigorous, there have been trends to both measure quality and develop standards for educational investigations. Although a number of authors have discussed the importance of appraising the quality of clinical research studies, few instruments are available to specifically appraise the quality of publications in medical education.11 Two tools, the Medical Education Research Study Quality Instrument and the Newcastle–Ottawa Scale-Education, have been found to be useful, reliable, complementary instruments for appraising quality of these types of studies.11 Such tools can allow for objective evaluation of educational research studies by providing quantifiable scores in specific domains related to various aspects of the methodology, aiming to ultimately discern higher-quality educational research studies from those that are not as strong in their design.

Beyond evaluating the quality of educational research, there have also been efforts to put forth standards for studies that will be published in the realms of curriculum, assessment, and training. In a proposal published in the Journal of General Internal Medicine, Cook and colleagues8 suggested that standards were necessary to ensure acceptable quality of educational scholarship. General standards from this proposal echo those previously published elsewhere, touching on the issues of quality questions, quality methods to match the questions, insightful interpretation of findings, unbiased reporting, and appropriate attention to human subjects’ protection.8,12,13

ENTER THE THORACIC EDUCATION COOPERATIVE GROUP

Recognizing the need to improve quality in surgical education research, and particularly in cardiothoracic surgical education, the Thoracic Education Cooperative Group (TECoG) was organized in 2014 on the premise that significant advances in thoracic surgical education would be made by a cooperative approach to the design and conduct of studies on trainees through multi-institutional research.14

Significant considerations in the conduct of TECoG’s research include the enhancement of excellence, innovation, scholarship, cost-effectiveness and appropriate use of resources, strict adherence to ethical research principles, and equal opportunities for both genders and all race/ethnicities in thoracic surgical education. TECoG has several studies under way, with some early successes published.15,16 TECoG aims not only to conduct high-quality, appropriately powered educational research but also to raise the bar on educational scholarship in the field of cardiothoracic surgery.

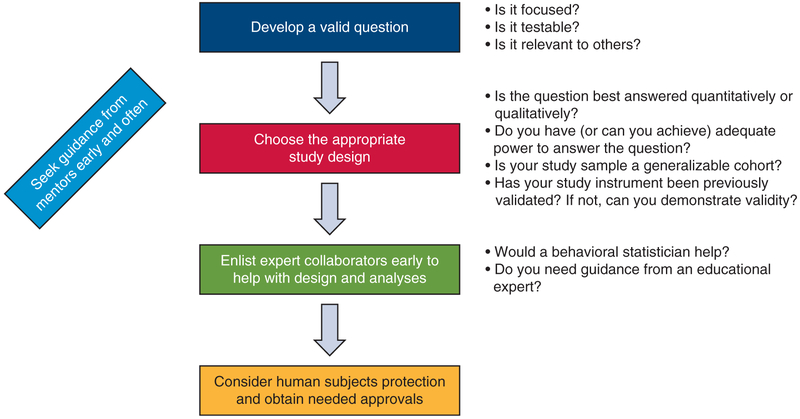

Thus, having highlighted a need for improved quality of publications in educational research, we, on behalf of TECoG, hope to assist investigators who want to design scientifically rigorous studies in cardiothoracic surgical education by providing a brief outline on how to do so (Figure 1).

FIGURE 1.

Key steps in educational research. There are a number of key steps to consider when embarking on an investigation in the area of education.

KEY STEPS IN EDUCATIONAL RESEARCH

Seeking to provide recommendations for best practices in conducting educational research, we conducted a literature review aiming to address key questions in the steps of performing studies in medical and surgical education. The results of this review are summarized below.

Where It All Begins: Developing the Research Question

Although any number of questions related to education may be interesting and important within a single institution, educational research serves the broader community of surgical educators and should provide lessons that are generalizable to other educational settings or subjects.17

The initial step in conducting high-quality research in education is the formulation of an appropriate research question. Research questions should be focused, discrete, and testable.18 Although many of these ideas may originate from anecdotal experiences within the researcher’s own practice, a careful review of the literature will greatly help to frame the question in the context of existing knowledge. Not only will this help to limit the introduction of bias in the study design, but also contextualization of the question in the literature will increase the generalizability of the eventual findings of the study. Of course, given the newness of the field of thoracic surgical education, the literature search may be limited by a lack of existing similar studies. Nonetheless, one should search for relevant concepts, perhaps in other fields of medicine, and for any literature that may be pertinent to the problem being addressed, even if the intervention may be novel. After conducting the literature search and refining the question, one should keep in mind that the scope of the question should also be narrow enough that a discretely identified and testable hypothesis can be generated.

You Have to Have a Plan: Educational Research Design

Although educational research can take a variety of forms, the basic principles are common among them. Once the research question is defined, the next step in the research process is to choose the appropriate study design to answer the question. The design of a study is often a critical determinant in the success of the project, and this is no less true in education research. Educational research can be conducted both prospectively and retrospectively using variations of studies that may be classified into 3 broad categories: quantitative, qualitative, and mixed-methods studies (Table 1). Quantitative research involves the analysis of numeric data to quantify trends and frequencies of a given phenomenon. In contrast, qualitative research gathers non-numeric data to holistically describe a given phenomenon in its natural setting. It seeks to explain “how” and “why” such phenomena occur through observation and interviews.21

TABLE 1.

Examples of key types of educational research

| Type of research | Example publication | Study aim |
|---|---|---|
| Quantitative | Antonoff and colleagues, 2016 | Comparing in-service exam scores before and after access to the national online curriculum19 |
| Qualitative | Vaporciyan and colleagues, 2017 | Using a Delphi approach to identify key steps of a coronary artery bypass procedureE16 |
| Mixed methods | Minter and colleagues, 2015 | Using structured surveys and open-ended reflective questions from surgical interns at multiple institutions to determine perceived preparedness for the transition from medical school to residencyE17 |
| Survey | Boffa and colleagues, 2012 | Querying graduates of thoracic residencies to determine self-reported thoracoscopic lobectomy proficiencyE18 |
| Simulation | Fann and colleagues, 2010 | Assessing impact of focused training on a porcine model and task station at the Boot Camp on subsequent scores in recorded videos of coronary anastomoses20 |

Quantitative studies are likely the most familiar to physicians and involve the collection and measurement of discrete data. These studies typically focus on a testable question or hypothesis that is determined a priori, and then use the data collected to support or refute this hypothesis.22 For example, if you have introduced a new simulation curriculum, you might hypothesize that residents who participate in this curriculum will improve their technical skills more rapidly than those who do not. Traditional statistical methods are then used to compare groups with one another. For example, in a 2010 article, Fann and colleagues20 described their experience with the thoracic surgery Boot Camp, which was organized by the Thoracic Surgery Directors Association and the American Board of Thoracic Surgery. In this investigation, the authors reported that focused training on a porcine model and task station ultimately resulted in improved scores on recorded videos of coronary anastomoses.
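As a minimal sketch of the kind of group comparison described above, the following Python example computes Welch's t statistic and a standardized effect size (Cohen's d) for two groups of residents. The ratings are invented for illustration and are not taken from any cited study, the function names are hypothetical, and the choice of Welch's test is our assumption rather than a method prescribed by the source. A full analysis would also derive a P value from the t distribution using a statistics package.

```python
import math
from statistics import mean, variance


def welch_t(group_a, group_b):
    """Return Welch's t statistic and its approximate degrees of freedom."""
    # Squared standard error of the mean for each group
    se2_a = variance(group_a) / len(group_a)
    se2_b = variance(group_b) / len(group_b)
    t = (mean(group_a) - mean(group_b)) / math.sqrt(se2_a + se2_b)
    # Welch-Satterthwaite approximation for degrees of freedom
    df = (se2_a + se2_b) ** 2 / (
        se2_a ** 2 / (len(group_a) - 1) + se2_b ** 2 / (len(group_b) - 1)
    )
    return t, df


def cohens_d(group_a, group_b):
    """Return Cohen's d using the pooled sample standard deviation."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / math.sqrt(pooled_var)


# Hypothetical technical skill ratings (illustrative values only)
curriculum = [70, 75, 80, 85, 90]
no_curriculum = [60, 65, 70, 75, 80]

t_stat, dof = welch_t(curriculum, no_curriculum)
effect = cohens_d(curriculum, no_curriculum)
print(f"t = {t_stat:.2f}, df = {dof:.1f}, Cohen's d = {effect:.2f}")
```

Reporting an effect size alongside the test statistic is useful because the effect size, unlike the P value, does not depend on the number of participants and can inform sample size planning for later studies.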

By contrast, qualitative studies focus on developing a better understanding of factors that motivate behavior and gathering non-numeric data to holistically describe a given phenomenon in its natural setting.23 They seek to explain “how” and “why” such phenomena occur through observation and interviews.21 As such, this study design is frequently not focused on testing a predetermined hypothesis, but rather on the generation of a conceptual model that explains the phenomenon or behavior of interest. Data collection in qualitative research typically takes the form of individual interviews or focus group sessions. The text of these sessions is then analyzed using an inductive approach to identify recurrent themes and to develop an explanatory conceptual framework for the relationships being studied.24 Research designs may seek to develop new frameworks to explain concepts or to fit the findings into an existing framework.25,26 Unfortunately, many clinicians have considered qualitative research inferior to quantitative research, with many assuming that the former is less rigorous and more susceptible to subjectivity, whereas quantitative analyses are more systematic and objective.27 This is an inaccurate assumption. One methodology is not better than the other; rather, each may be better suited for studying certain research questions. Ultimately, the research question should drive the choice of methodology.25,28

Questions based on how and why things occur are best answered by qualitative methods. Such questions can be conceptualized into 4 types: exploratory (studies poorly understood phenomena), descriptive (describes phenomena), explanatory (explains patterns related to phenomena), and predictive (predicts the outcomes of phenomena).29

The 3 primary techniques for collection of data in qualitative research are observation, interviews, and content analysis. Observation involves watching subjects in their natural environment to document their actual behaviors. Interviews are typically open-ended, verbal questionnaires used to uncover people’s thoughts and attitudes about a phenomenon. Content analysis involves analyzing written documents or other forms of communication to obtain further information about a phenomenon.30

As the name implies, mixed-methods research combines qualitative and quantitative research methodology. In mixed-methods studies, quantitative methods are used to identify measurable outcomes, for example, the difference in performance between 2 groups, such as comparing changes in in-service scores for trainees over time, from before they had access to an online curriculum and afterward.19 In this example, to complete a mixed-methods study, qualitative interviews could then be conducted to develop a better understanding of the factors that might have contributed to those differences. (Were they learning more from direct interaction with the curriculum? Did access to the curriculum reflect other aspects about the training environment or the learning style of the trainees?) Although multivariable quantitative methods can theoretically perform a similar function, these strategies are limited to the variables present in the dataset. Combining qualitative observations with these quantitative data provides an opportunity to understand unmeasured factors that may influence the outcome of interest. This may provide a more comprehensive understanding of a complex research question than either methodology alone. To ensure the academic rigor of mixed-methods studies, researchers must have clearly defined research questions and explicitly report the methodological components involved. Ideally, a powerful study should be a collaboration between experts in both methods of research given its complexity.31

Protecting Your Trainees: Ethical Issues in Surgical Education Research

Protection of the rights of human subjects is a key consideration for any study that seeks to create generalizable knowledge. In education research, as many as 3 distinct groups of subjects may need to be considered: students/trainees, who are the focus of educational interventions; teachers, who are often the individuals delivering the educational interventions; and patients, who are potentially affected by these interventions.18

Given these wide-ranging constituencies, proactive communication with the institutional ethics committee or Institutional Review Board is often valuable for the educational researcher. In cases in which data are to be collected prospectively, or when identification of the individual subject is important to the study, such as in longitudinal measures of performance, consent documentation may be required. However, when the impact of an educational initiative or intervention is being studied in an aggregated group, specific individual consent may not be necessary. One example of this scenario is a recent trial assessing the impact of work hour regulations for surgical residents on patient outcomes.32 In this study, the Institutional Review Board determined that the intervention was a policy experiment because it occurred at the institutional level and did not require specific consent from residents or patients who were affected by the change in policy.33

Sampling and Power: Do Your Findings Matter?

The generalizability of the research findings will hinge on 2 key facets of the study design. First, how were subjects for the study chosen (the sample)? Second, is the number of subjects or observations included in the study sufficient to provide adequate power to draw generalizable conclusions? If the sample is too specific to an individual group or setting or is too small to provide adequate power, the findings of the study cannot necessarily be applied to other settings. Although this does not invalidate the observations themselves, it does limit the utility of the research to others.17 When developing a study for publication, it is important to understand the ability to determine meaningful differences in the measured outcome and to develop findings that can be generalized beyond the studied population.18 This concept, referred to as the “statistical power” of the study, reflects the likelihood of detecting a difference between 2 groups if such a difference exists.22 The sample size calculation is influenced by the effect size of the intervention, the desired power, and the significance level. In most studies, power is set at a minimum threshold of 80% and the significance level (the threshold against which the P value is judged) at 5% (P = .05). The effect size is typically drawn from prior experience or from the literature and is an estimate of the magnitude of difference expected between groups due to the study intervention. By knowing these variables, a sample size can be calculated to determine the number of participants necessary to draw statistically meaningful conclusions.
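To illustrate how these 3 inputs combine, the following Python sketch estimates the number of participants needed per group for a two-sided comparison of means between 2 equally sized groups. It relies on the normal approximation, which is an assumption on our part and slightly underestimates the sample size that an exact t-test calculation would give. The function name and the standardized effect sizes shown (0.2, 0.5, and 0.8) are illustrative choices, not values taken from the source.

```python
import math
from statistics import NormalDist


def sample_size_per_group(effect_size, alpha=0.05, power=0.80):
    """Approximate participants needed per group (normal approximation).

    effect_size is the standardized difference between group means
    (difference divided by the common standard deviation).
    """
    if effect_size <= 0:
        raise ValueError("effect_size must be positive")
    standard_normal = NormalDist()
    z_alpha = standard_normal.inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = standard_normal.inv_cdf(power)          # quantile for desired power
    n = 2 * ((z_alpha + z_power) / effect_size) ** 2
    return math.ceil(n)


# Smaller expected effects require many more participants per group
for d in (0.2, 0.5, 0.8):
    print(f"effect size {d}: {sample_size_per_group(d)} per group")
```

With the conventional settings of 80% power and a 5% significance level, this approximation yields 393, 63, and 25 participants per group for the 3 effect sizes, respectively. The steep rise in required sample size for small effects is one reason why single-institution studies in small training programs are frequently underpowered.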

Of note, when considering statistical aspects of the study design, seek consultation with a biostatistician to assist with the methodology and planning of the analyses. Such expertise will not only help ensure adequate power but also enhance the rigor of the study in ways that may often be overlooked by educational researchers.

HIGHLIGHTED SPECIAL ISSUES IN EDUCATIONAL RESEARCH

Validity

Validity is a fundamental cornerstone of the scientific method. It refers to the credibility and accuracy of a study and its ability to measure what it intends to measure. There are 3 major types of validity: criterion-related validity, content validity, and construct validity (Table 2).29

TABLE 2.

Types of validity

| Validity type | Definition |
|---|---|
| Criterion-related validity | Correlation between outcomes of the assessment tool and defined criteria indicative of the ability being tested |
| Content validity | Extent to which the assessment method covers the subject matter |
| Construct validity | Ability of an assessment to evaluate traits that cannot be directly measured |
| Unitary concept of validity | Conclusions of assessments are judged for validity rather than the assessment tools themselves. Content validity serves as evidence of the subject matter, and criterion-related validity serves as evidence of relationship to other variables. |

Criterion-related validity refers to the correlation between test scores and some criterion believed to be an important indicator of the ability being tested. For example, criterion-related validity would be applicable in considering whether in-service scores adequately predict the likelihood of passing the actual qualifying exam. Content validity refers to the extent to which an assessment method covers the subject matter. Continuing the example of the in-service exam, content validity would consider whether the exam truly covers the breadth of cardiothoracic surgery; in other words, is the exam representative of the field of study? Construct validity is the ability of a test to assess traits, qualities, or other phenomena that cannot be directly measured.34,35,E1 Safety of a surgeon cannot be directly measured, but the certifying exam is believed to help distinguish between those who are safe to practice independently and those who are not—but it presumes a certain level of construct validity in the exam.
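As one hedged illustration of gathering criterion-related evidence, the Python sketch below computes the Pearson correlation between in-service scores and later qualifying exam scores for the same trainees. The scores are invented for illustration only, the function name is hypothetical, and the use of a simple Pearson coefficient is our assumption; a real validation study would involve far more trainees and would likely use additional methods.

```python
import math


def pearson_r(x, y):
    """Return the Pearson correlation coefficient between two score lists."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must be the same length, with 2 or more values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    # Sum of cross-products and sums of squares around each mean
    cross = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return cross / math.sqrt(ss_x * ss_y)


# Hypothetical paired scores for 5 trainees (illustrative values only)
in_service = [55, 60, 65, 70, 75]
qualifying = [60, 62, 70, 71, 80]

r = pearson_r(in_service, qualifying)
print(f"Pearson r = {r:.2f}")
```

A coefficient near 1 would support the claim that in-service performance tracks the criterion of interest, whereas a coefficient near 0 would argue against using the in-service exam as a predictor.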

Validity has recently been reconceptualized as a unitary concept to replace the 3 former types, with construct validity the predominant term used to incorporate all forms of validity under a single notion.E2 The major change under this concept is the idea that a test is not inherently valid or invalid; rather, the conclusions drawn from the results are judged for validity.E3 In this reconceptualization, what was once known as content validity can be described as evidence of the test content, whereas what was previously deemed criterion-related validity serves as the evidence of relationships to other variables. Viewing validity in such a way requires the test-user to approach validity as a hypothesis, critically evaluating a wide range of empirical evidence before accepting or rejecting the test results.

There are 5 subtypes of validity evidence that aim to address the central issues implicit to the unitary concept of validity. In contrast to the 3 categoric “types” of validity, these 5 components are interwoven and function as general validity criteria. They include test content, response process, internal structure, relationship to other variables, and consequences of testing.E4

Evidence based on test content refers to the specifications of an assessment (ie, tasks, wording, format) and how well it measures the construct in question. Evidence based on response process evaluates the extent to which the test requires the subject to demonstrate the trait or skill of interest. Evidence based on internal structure examines the properties of the assessment to determine its reliability and reproducibility. Evidence based on relationship to other variables refers to the generalizability of a test result across different sample groups and settings. Finally, evidence based on the consequences of testing evaluates whether test results are used as intended and in a meaningful way.E5
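For evidence based on internal structure, one commonly used reliability statistic is Cronbach's alpha, which summarizes how consistently the items of an assessment vary together. The source does not name a specific statistic, so its use here is our assumption, and the function name and ratings below are hypothetical. The Python sketch expects one row per trainee and one column per assessment item.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Return Cronbach's alpha for a table of item scores.

    scores: list of rows, one row per trainee, one column per item.
    """
    n_items = len(scores[0])
    if n_items < 2 or len(scores) < 2:
        raise ValueError("need 2 or more items and 2 or more trainees")
    # Variance of each item (column) across trainees
    item_variances = [variance(column) for column in zip(*scores)]
    # Variance of each trainee's total score
    total_variance = variance([sum(row) for row in scores])
    if total_variance == 0:
        raise ValueError("alpha is undefined when all total scores are equal")
    return n_items / (n_items - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical ratings of 5 trainees on a 3-item checklist (illustrative only)
ratings = [
    [4, 5, 4],
    [3, 3, 2],
    [5, 5, 5],
    [2, 3, 2],
    [4, 4, 3],
]

alpha = cronbach_alpha(ratings)
print(f"Cronbach's alpha = {alpha:.2f}")
```

Higher values indicate that the items behave consistently with one another, although a very high alpha on a short instrument may also reflect redundant items rather than a well-constructed assessment.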

There are 2 major issues that obstruct validity: content under-representation and construct-irrelevant variance.E6 Content under-representation occurs when an assessment contains insufficient items/tasks to assess the full scope of the construct in question. Construct-irrelevant variance refers to extraneous, uncontrolled, and unrelated variables that affect assessment outcomes.

Conducting Surveys

Before constructing a survey, the designer must ask the following: “What is the purpose of this survey? What do I want to learn? Who is the target population? How will I collect and present these data?” Establishing a clear plan and purpose will ultimately drive the production of a well-designed survey.29

In general, well-designed surveys are simple, yet complete (ie, they address all questions pertinent to the research project) and user-friendly.E7,E8 They should be short and conversational, using familiar vocabulary and avoiding double-barreled, double-negative, and biased questions. Multiple choice questions should include an option for “other (please explain)” with room for explanation, because this allows for collection of qualitative data. Overall, careful planning of question content will help to maximize respondent engagement and the reliability of survey responses.

The researcher should maintain consistent communication with the respondent, providing them with multiple opportunities to participate in the survey. Such strategies include sending reminders at regular intervals, which has been suggested to improve response rates.E9

Behavioral scientists can be extraordinarily helpful in both the design and the analysis of survey-type research, and their involvement should be enlisted early to ensure that the methodological approach is appropriate. To achieve a desired level of scientific rigor, one should consider strengthening the study by collaborating with field experts—a concept that holds true throughout educational research studies of all types.

Simulation Research

Simulation has been defined as “a technique, not a technology, to replace or amplify real experiences with guided experiences, often immersive in nature, that evoke or replicate substantial aspects of the real world in a fully interactive fashion.”E10 It is a useful tool in assessing, informing, and modifying behavior to improve real-world performance in a variety of domains. Simulation tools may be of low or high fidelity, with fidelity referring to the extent to which the details of the situation mimic real life. For example, a low-fidelity cannulation simulation may include all the necessary elements of an aorta, a cannula, and sutures, but may have little else. A higher-fidelity model may include a realistic-appearing patient and a simulated cardiopulmonary bypass machine. Low-fidelity models tend to be less expensive, but are not necessarily any less effective, because both types of simulation have been shown to be beneficial.

Despite the heavy and well-established use of simulation in commercial aviation and other nonmedical industries, simulation training has only gained interest and momentum in the medical profession in recent years. In particular, surgical simulation is now seen as a powerful tool in surgical training, providing a safe and realistic learning environment for surgeons to develop their skills and ultimately improve patient safety.

Access to simulation and skills laboratories is now a requirement for surgical residency programs; however, the extent to which skills learned in simulation transfer to the clinical setting is not well established. A recent meta-analysis of the limited literature on this topic found that the skills are indeed transferable; however, further research is needed to explore this critical question.E11 Furthermore, successful integration of simulation into surgical training programs is a challenging task with many other unanswered questions, specifically on the design of standardized curricula with robust metrics for performance evaluations.E12,E13 Any evaluation of simulation and its impact on subsequent performance must take into account the quality of the assessment tools being used. Moreover, many studies of simulation are underpowered, single-institution evaluations, further highlighting the importance of previously mentioned concepts in sample size and power.

Simulation training may have the capacity to rapidly revolutionize surgical education, but a number of aspects of simulation and its integration remain largely unknown. One major question is how best to establish criteria and passing scores for competency-based assessments using simulation. Even then, how transferable are these proficiencies to the clinical setting? Other questions surround issues of cost and accessibility of simulation training programs. Currently, there are more than 90 simulation centers accredited by the American College of Surgeons.E14 This provides a great opportunity for multicenter collaborations to address the many unanswered questions regarding simulation in surgical education.

Additional Resources

For those interested in conducting educational research, there are a number of resources available within thoracic surgery and within the greater scope of general surgery (Table 3). Joining TECoG is an easy means of entry to the field of surgical education, with opportunities to collaborate with experienced educational researchers and to participate in discussions critiquing and optimizing these types of studies. To gain knowledge from a larger population of experts in surgical education, there are entire tracks devoted to the topic within meetings such as those of the American College of Surgeons and Surgical Education Week, the collaboration of the Association for Surgical Education and the Association of Program Directors in Surgery. These can be of enormous benefit in broadening one’s understanding of the breadth of research opportunities available in surgical education. There are several excellent publications that may serve as primers for conducting educational research, such as the Association for Surgical Education’s Guide for Researchers in Surgical Education.29 For those interested in delving into scholarship in educational research to a greater extent, with dedicated time toward expanding knowledge in this realm, there are a number of programs available, ranging from the Association for Surgical Education Surgical Education Research Fellowship Program to Master’s programs throughout the country for health professionals interested in advanced degrees in health education.

TABLE 3.

Online resources for educational researchers

| Title | URL | Description |
|---|---|---|
| Thoracic Education Cooperative Group | www.tsda.org/education/tecog/ | Home page for TECoG, providing contact information for the group and its leadership, as well as the Project Proposal Form and organization bylaws |
| Getting Started in Educational Research, the Association for Surgical Education | https://surgicaleducation.com/getting-started-2/ | Links to helpful books, opportunities for earning educational certificates, funding for educational projects, and Master’s degrees in education research |
| Resources in Surgical Education | https://www.facs.org/education/division-of-education/publications/rise | Resource curated by the American College of Surgeons, including peer-reviewed articles relating to aspects of surgical education |
| Surgical Education Research Fellowship | https://surgicaleducation.com/surgical-education-research-fellowship-overview | Overview of the fellowship program, its objectives, and how to apply |
| Surgeons as Educators | https://www.facs.org/education/division-of-education/courses/surgeons-as-educators | Overview of the American College of Surgeons: Surgeons as Educators course and details for prospective participants |

TECoG, Thoracic Education Cooperative Group.

CONCLUSIONS AND FUTURE DIRECTIONS

By outlining the means of conducting appropriate educational research, we hope that we have inspired thoracic surgical educators to apply more rigorous methods to their current means of evaluating their interventions and perhaps motivated talented researchers to consider education as another path toward scholarship. In other surgical specialties, it has become acceptable and even desirable to develop academic careers that focus on educational scholarship, with surgeons, surgery residents, and medical students attaining advanced degrees in education-related fields.E15 Such growth of surgical education as a career focus in cardiothoracic surgery has yet to become popularized. However, perhaps this trend will extend into our specialty as well, with general thoracic, adult cardiac, and congenital heart surgeons choosing educational research as their academic career pathways.

Certainly, there are still several key limitations in thoracic surgical education research. Of utmost significance, our field lacks universal consensus regarding the goals of training and the expected end points upon entry into the workforce. Without a clearly defined expected outcome on which we can all agree, we remain challenged in our ability to measure the success of our efforts in achieving the desired goals. Moreover, although early efforts have been put forth to establish criteria for evaluating the quality of educational research, these tools are immature and limited in number, and further frameworks are needed for evaluating the success of educational innovations. Nonetheless, great strides have been made in advancing surgical education scholarship within cardiothoracic surgery, and the future leaves much room for ongoing growth.

As we aim to improve the quality of research in thoracic surgical education, we hope to see a subsequent impact on the quality of training for future generations in our specialty. Just as we study our clinical outcomes to improve patient care, we must acknowledge that it is necessary to study our educational interventions to improve the future of cardiothoracic surgical training. Certainly, there will remain challenges and limitations to our educational research studies, as is the case with clinical, basic science, or translational research. Nonetheless, high-quality educational research serves to enhance the practices and careers of current trainees, our scientific community, and thoracic surgical educators. Moreover, as we seek to optimize the quality of thoracic surgical education research, we may someday hope to influence medical education beyond the scope of our own specialty.

Central Message.

Research in cardiothoracic surgical education is of utmost importance, but adherence to standards of scientific rigor is critical.

Perspective.

There is a clear need for improved quality of research publications in the area of cardiothoracic surgical education. With the goals of enhancing the power, rigor, and strength of educational investigations, the TECoG seeks to outline key concepts in successfully conducting such research.

Abbreviation and Acronym

- TECoG = Thoracic Education Cooperative Group

Footnotes

Conflict of Interest Statement

Authors have nothing to disclose with regard to commercial support.

The authors thank the supporting members of TECoG for their ongoing participation in the conduct of high-quality educational research and the Thoracic Surgery Directors Association for their support of TECoG.

The Thoracic Education Cooperative Group received administrative support from the Thoracic Surgery Directors Association.

References

- 1. Thompson B. Five methodology errors in educational research: the pantheon of statistical significance and other faux pas. Invited address presented at the annual meeting of the American Educational Research Association, San Diego, California, April 12-17, 1998.

- 2. Wolf FM. Methodological quality, evidence, and research in medical education (RIME). Acad Med. 2004;79(10 Suppl):S68–9.

- 3. Morrison J. Developing research questions in medical education: the science and the art. Med Educ. 2002;36:596–7.

- 4. Bordage G. Reasons reviewers reject and accept manuscripts: the strengths and weaknesses in medical education reports. Acad Med. 2001;76:889–96.

- 5. Calhoun J. Manual for Researchers in Surgical Education. Springfield, IL: ASE Clearinghouse by the Committee on Educational Research; 1987.

- 6. Albert M, Hodges B, Regehr G. Research in medical education: balancing service and science. Adv Health Sci Educ Theor Pract. 2007;12:103–15.

- 7. Cook DA, Bordage G, Schmidt HG. Description, justification and clarification: a framework for classifying the purposes of research in medical education. Med Educ. 2008;42:128–33.

- 8. Cook DA, Bowen JL, Gerrity MS, Kalet AL, Kogan JR, Spickard A, et al. Proposed standards for medical education submissions to the journal of general internal medicine. J Gen Intern Med. 2008;23:908–13.

- 9. Regehr G. Trends in medical education research. Acad Med. 2004;79:939–47.

- 10. Cook DA, Beckman TJ, Bordage G. A systematic review of titles and abstracts of experimental studies in medical education: many informative elements missing. Med Educ. 2007;41:1074–81.

- 11. Cook DA, Reed DA. Appraising the quality of medical education research methods: the medical education research study quality instrument and the Newcastle-Ottawa Scale-education. Acad Med. 2015;90:1067–76.

- 12. Guidelines for evaluating papers on educational interventions. BMJ. 1999;318:1265–7.

- 13. Bordage G, Caelleigh AS, Steinecke A, Bland CJ, Crandall SJ, McGaghie WC, et al. Review criteria for research manuscripts. Acad Med. 2001;76:897–978.

- 14. Thoracic education cooperative group. Available at: http://www.tsda.org/education/tecog/. Accessed June 22, 2018.

- 15. Luc JGY, Nguyen TC, Fowler CS, Eisenberg SB, Wolf RK, Estrera AL, et al. Novel debate-style cardiothoracic surgery journal club: results of a pilot curriculum. Ann Thorac Surg. 2017;104:1410–6.

- 16. Loor G, Doud A, Nguyen TC, Antonoff MB, Morancy JD, Robich MP, et al. Development and evaluation of a three-dimensional multistation cardiovascular simulator. Ann Thorac Surg. 2016;102:62–8.

- 17. Pugh CM, Sippel RS, eds. Success in Academic Surgery: Developing a Career in Surgical Education. New York, NY: Springer; 2013.

- 18. Athanasiou T, Debas H, Darzi A. Key Topics in Surgical Education Research and Methodology. Heidelberg: Springer; 2010.

- 19. Antonoff MB, Verrier ED, Allen MS, Aloia L, Baker C, Fann JI, et al. Impact of Moodle-based online curriculum on thoracic surgery in-training examination scores. Ann Thorac Surg. 2016;102:1381–6.

- 20. Fann JI, Calhoon JH, Carpenter AJ, Merrill WH, Brown JW, Poston RS, et al. Simulation in coronary artery anastomosis early in cardiothoracic surgical residency training: the Boot Camp experience. J Thorac Cardiovasc Surg. 2010;139:1275–81.

- 21. Al-Busaidi ZQ. Qualitative research and its uses in health care. Sultan Qaboos Univ Med J. 2008;8:11–9.

- 22. Rosner B. Fundamentals of Biostatistics. 8th ed. Boston, MA: Cengage Learning; 2016.

- 23. Giacomini MK, Cook DJ. Users’ guides to the medical literature: XXIII. Qualitative research in health care B. What are the results and how do they help me care for my patients? Evidence-based medicine working group. JAMA. 2000;284:478–82.

- 24. Morse J. Completing a Qualitative Project: Details and Dialogue. Thousand Oaks, CA: Sage Publications; 1997.

- 25. Mays N, Pope C. Rigour and qualitative research. BMJ. 1995;311:109–12.

- 26. Mays N, Pope C. Qualitative research in health care. Assessing quality in qualitative research. BMJ. 2000;320:50–2.

- 27. Kohn L, Christiaens W. The Use of Qualitative Research Methods in KCE Studies. Brussels: Belgian Health Care Knowledge Centre, 2012 Report; 2012.

- 28. Mays N, Pope C. Qualitative research: Observational methods in health care settings. BMJ. 1995;311:182–4.

- 29. Capella J. Guide for Researchers in Surgical Education. Woodbury, CT: Cine-Med Publishing, Inc; 2010.

- 30. Creswell J. Qualitative Inquiry and Research Design: Choosing Among Five Approaches. Los Angeles, CA: Sage; 2007.

- 31. Wisdom JP, Cavaleri MA, Onwuegbuzie AJ, Green CA. Methodological reporting in qualitative, quantitative, and mixed methods health services research articles. Health Serv Res. 2012;47:721–45.

- 32. Bilimoria KY, Chung JW, Hedges LV, Dahlke AR, Love R, Cohen ME, et al. National cluster-randomized trial of duty-hour flexibility in surgical training. N Engl J Med. 2016;374:713–27.

- 33. Minami CA, Odell DD, Bilimoria KY. Ethical considerations in the development of the flexibility in duty hour requirements for surgical trainees trial. JAMA Surg. 2017;152:7–8.

- 34. Cronbach LJ, Meehl PE. Construct validity in psychological tests. Psychol Bull. 1955;52:281–302.

- 35. Gorlin J. Comments on Lissitz and Samuelson: reconsidering issues in validity theory. Educ Res. 2007;36:456–62.

E-References

- E1. Kane M. Validation. In: Brennan R, ed. Educational Measurement. 4th ed. New York, NY: American Council on Education/Praeger Series on Higher Education; 2006:17–64.

- E2. American Psychological Association, American Educational Research Association, and National Council on Measurement in Education: Joint Commission on Standards for Educational and Psychological Testing: Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association; 1985.

- E3. Downing SM. Validity: on meaningful interpretation of assessment data. Med Educ. 2003;37:830–7.

- E4. American Psychological Association, American Educational Research Association, and National Council on Measurement in Education: Joint Commission on Standards for Educational and Psychological Testing: Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association; 1999.

- E5. Sullivan GM. A primer on the validity of assessment instruments. J Grad Med Educ. 2011;3:119–20.

- E6. Messick S. Validity. In: Linn R, ed. Educational Measurement. New York, NY: American Council on Education; MacMillan; 1989:13–103.

- E7. Boynton PM. Administering, analyzing, and reporting your questionnaire. BMJ. 2005;328:1372–5.

- E8. Boynton PM, Greenhalgh T. Selecting, designing, and developing your questionnaire. BMJ. 2004;328:1312–5.

- E9. Gore-Felton C, Koopman C, Bridges E, Thoresen C, Spiegel D. An example of maximizing survey return rates-methodological issues for health professionals. Eval Health Prof. 2002;25:152–68.

- E10. Gaba DM. The future vision of simulation in health care. Qual Saf Health Care. 2004;13(Suppl 1):i2–10.

- E11. Sturm LP, Windsor JA, Cosman PH, Cregan P, Hewett PJ, Maddern GJ. A systematic review of skills transfer after surgical simulation training. Ann Surg. 2008;248:166–79.

- E12. McLaughlin SA, Doezema D, Sklar DP. Human simulation in emergency medicine training: a model curriculum. Acad Emerg Med. 2002;9:1310–8.

- E13. Pittini R, Oepkes D, Macrury K, Reznick R, Beyene J, Windrim R. Teaching invasive perinatal procedures: assessment of a high fidelity simulator-based curriculum. Ultrasound Obstet Gynecol. 2002;19:478–83.

- E14. Accredited Education Institutes. American College of Surgeons. Available at: www.facs.org/education/accreditation/aei. Accessed June 25, 2018.

- E15. Rogers DA. Foreword. In: Capella J, Kasten SJ, Steinemann S, Torbeck L, eds. Guide for Researchers in Surgical Education. 3rd ed. Woodbury, CT: Cine-Med Publishing; 2010:v–vi.

- E16. Vaporciyan AA, Fikfak V, Lineberry MC, Park YS, Tekian A. Consensus-derived coronary anastomotic checklist reveals significant variability among experts. Ann Thorac Surg. 2017;104:2087.

- E17. Minter RM, Amos KD, Bentz ML, Blair PG, Brandt C, D’Cunha J, et al. Transition to surgical residency: a multi-institutional study of perceived intern preparedness and the effect of a formal residency preparatory course in the fourth year of medical school. Acad Med. 2015;90:1116.

- E18. Boffa DJ, Gangadharan S, Kent M, Kerendi F, Onaitis M, Verrier E, et al. Self-perceived video-assisted thoracic surgery lobectomy proficiency by recent graduates of North American thoracic residencies. Interact Cardiovasc Thorac Surg. 2012;14:797.