Abstract

For a variety of reasons, including cheap computing, widespread adoption of electronic medical records, digitalization of imaging and biosignals, and rapid development of novel technologies, the amount of health care data being collected, recorded, and stored is increasing at an exponential rate. Yet despite these advances, methods for the valid, efficient, and ethical utilization of these data remain underdeveloped. Emergency care research, in particular, poses several unique challenges in this rapidly evolving field. A group of content experts was recently convened to identify research priorities related to barriers to the application of data science to emergency care research. These recommendations included: 1) developing methods for cross-platform identification and linkage of patients; 2) creating central, deidentified, open-access databases; 3) improving methodologies for visualization and analysis of intensively sampled data; 4) developing methods to identify and standardize electronic medical record data quality; 5) improving and utilizing natural language processing; 6) developing and utilizing syndrome- or complaint-based taxonomies of disease; 7) developing a practical and ethical framework to leverage electronic systems for controlled trials; 8) exploring technologies to help enable clinical trials in the emergency setting; and 9) training emergency care clinicians in data science and data scientists in emergency care medicine. The background, rationale, and conclusions of these recommendations are presented in this article.

The promise of big data and data science to revolutionize many facets of society, including the practice of medicine, has been a common refrain in the medical literature for the past several years, particularly within specialty and policy circles.1 These discussions have more recently begun to filter down to health care providers, making the topic increasingly relevant to clinicians, who are now more likely to encounter them. While the definition of big data varies, it generally refers to some combination of the increasing size and scope of data, including nondiscrete “natural language” data fields, and the novel methods and tools used to analyze such large and complex data sets. The potential for electronic data to improve patient care in the emergency department (ED) is particularly exciting in light of the critical nature of many decisions made there. Electronic data capture to promote a better understanding of health and disease has always been part of the argument for implementing electronic health records (EHRs). Despite widespread deployment of such systems, skepticism remains regarding their ability to actually deliver the promised value.2 Even with expansion of both infrastructure and computational power, significant barriers limit progress toward “learning health care systems.” For a variety of reasons, including the acuity of medical conditions and a fragmented health care system, many barriers that affect health care data science generally (e.g., limited interoperability, limited data availability, and data islands) are magnified in emergency care.3 Addressing these issues will streamline the use of these novel methods, yield more valid research conclusions, and provide new insights into pathophysiology, the comparative effectiveness of clinical interventions, and clinical systems and operations.
Failure to address the potential pitfalls will at best complicate the conduct of research and at worst contribute to fundamentally flawed conclusions, with widespread consequences such as the development of flawed quality metrics or ineffective interventions. For these reasons, identifying research and policy priorities for this field is particularly urgent and is the focus of this report.

Emergency departments are responsible for over 140 million patient encounters in the United States each year, compared with approximately 1 billion outpatient clinic visits and 39 million inpatient stays.4–6 Furthermore, EDs are the most common pathway for hospital admission in the United States. Thus, EDs are a critical interface between health care systems and the communities they serve. Rapid diagnosis, risk stratification, and determination of the need for inpatient admission are core emergency medicine activities.7 ED decisions have far-reaching consequences for patient morbidity and mortality, as well as health care costs.8

Data science and machine learning have the potential to augment clinician cognition in the ED by synthesizing the vast quantities of clinical data available in the EHR and cross-referencing them with the exponentially growing medical literature to identify subgroups of patients amenable to new, precision treatments.9 However, significant technical and systemic barriers impede collating, aggregating, and analyzing these data in a meaningful and actionable manner. Furthermore, algorithms designed solely to detect certain biologic phenomena can become idiosyncratic reflections of which tests doctors tend to order. Algorithms trained purely on existing data sets can also encode racial and sex biases, automating or magnifying such problems.10 Understanding the data surrounding these encounters is a tremendous opportunity to better characterize acute diseases, health care utilization, and, ultimately, public health.

In September 2017, the National Institutes of Health (NIH) released a request for information (RFI) regarding data science research priorities (NOT-LM-17–006). A joint committee consisting of members of both the Society for Academic Emergency Medicine and American College of Emergency Physicians Research Committees, as well as selected research and health policy experts, was assembled to respond to this RFI and highlight priorities for data science research relevant to emergency medicine. Content experts were recruited based on leadership positions in academic societies and clinical trial networks with current or a strong history of NIH research funding, prior publications or funding in projects leveraging “big data” in emergency medicine applications, and/or significant publications and leadership in emergency medicine health policy. If initial experts were not able to contribute, recommendations for their replacements were considered. Ultimately, the group consisted of 12 contributors from 12 unique institutions geographically spread across the United States. The group was convened rapidly on an ad hoc basis because of the short time frame between release of the RFI and the end of the comment period. As such, recommendations were developed via group e-mail roundtable discussion rather than a modified Delphi approach, with all authors contributing to and agreeing on the final recommendations (Table 1). Priority areas focus on themes of fragmentation, access, fidelity, and formatting. The goal of this report is to disseminate research and policy targets identified by this group that, if properly addressed, will help overcome identified barriers and move big data science from “promise” to “practice.” In June 2018, the NIH released its strategic plan (https://grants.nih.gov/grants/rfi/NIH-Strategic-Plan-for-Data-Science.pdf), which incorporated a number of our committee’s recommendations.

Table 1.

Summary of High-priority Research and Policy Recommendations, With Examples of Solutions and Potential Pitfalls to Implementation or Adoption

| Recommendation | Examples | Pitfalls |
|---|---|---|
| 1. Develop improved methods for cross-platform identification and linkage of patients | Global unique identifier (GUID) | Security/privacy |
| 2. Create central, deidentified, open-access databases | NIH -omics repositories | Unfunded mandates, system maintenance |
| 3. Improve methodologies for visualization and analysis of intensively sampled data | Continuous telemetry, fitness trackers | File size, data storage, proprietary restrictions |
| 4. Develop methods to identify and standardize electronic medical record data quality | Identification of template overuse, prevention of illogical data entry | Evolving, unreliable history |
| 5. Improve and utilize natural language processing | Leverage richness of natural language over discrete data fields | Clinician-level and regional variations |
| 6. Develop and utilize syndrome- or complaint-based taxonomies of disease | Chest pain rather than gastroesophageal reflux disease | Billing tied to diagnosis codes |
| 7. Develop a practical and ethical framework to leverage electronic systems for controlled trials | Patient-level or site-clustered randomization | Overreliance on statistical modeling and inference |
| 8. Explore technologies to help enable clinical trials in the emergency setting | National database of preencounter consent | Practical framework, time sensitivity |
| 9. Train emergency care clinicians in data science and data scientists in emergency care medicine | K08, K23, and K24 mechanisms | Dissociation of clinical practicalities from data analysis |

HIGH-PRIORITY AREAS FOR RESEARCH AND POLICY RELATED TO DATA SCIENCE IN THE ED

1. Develop Improved Methods for Cross-platform Identification and Linkage of Patients

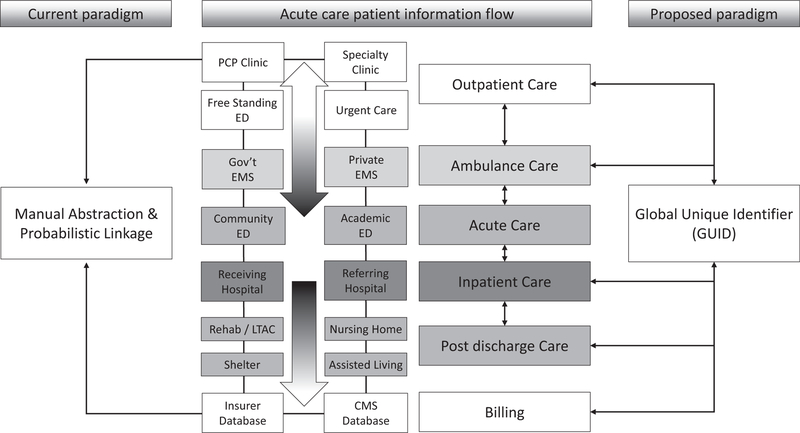

The need to ease cross-platform communication was identified by the group as both a clinical research priority and a critical clinical policy issue (which in turn has implications for observational, epidemiologic, and population research). Emergency, unscheduled patient care encounters involve multiple health care records, and the records generated often lack interoperability, leading to significant challenges in transitions of care.10,11 For example, a patient can easily generate three to five unique and unlinked medical records during a single emergency health care encounter (Figure 1). A patient often presents first to an independent outpatient setting that uses one electronic medical record system, is then transported by one of several emergency medical services agencies, each with its own electronic charting system, to an ED where a third unlinked electronic chart may be generated, and is ultimately admitted to a hospital that may employ yet another EHR product. Downstream effects of such care become even more opaque when the patient is transferred from one ED or hospital to another or to a postdischarge care setting (e.g., rehabilitation or nursing facilities). Such fragmentation of the medical record is the norm rather than the exception for most emergency care encounters, and the inability to access data from multiple settings can systematically bias research findings by introducing selection bias (depending on how patients are identified and tracked longitudinally) or measurement and verification bias (depending on clinicians’ use of testing). Ultimately, erroneous application of big data techniques has the potential to adversely affect care.
For example, if only data from a single, nonlinked source are used, filtering EHRs for “complete data” in certain fields can introduce significant bias compared with claims databases, which provide a more holistic view of longitudinal patient care.12 When such cross-platform data are collected at all, collection relies on labor-intensive manual chart abstraction or probabilistic linkage of records from multiple sources,13 which can also introduce selection bias that is difficult to identify.14,15 Future work in data science should identify scalable solutions to reduce fragmentation and promote access to data between systems or data aggregation across platforms, as well as ways for clinicians to easily view this information. Prescription drug monitoring programs represent one narrow example of how such systems may work. Future, broader programs would develop, deploy, and adopt standards for interoperability and secure, private keys shared between medical records to allow unique linkage (such as an encrypted globally unique identifier). Voluntary or mandated use of health information exchanges (HIEs) to create virtual complete records with adequate consideration of privacy protections16,17 represents a laudable goal in this regard, but requires investment. Issues that have so far limited the ability of HIEs to fill this gap include incomplete community penetration, which leads to biased patient samples. To maximize their effectiveness, federally mandated participation would be needed. Alternatively, a novel, unified, federal HIE could be developed, but would require significantly more investment. Finally, there are largely unexplored opportunities in combining standard medical care data with nontraditional sources of data such as environmental exposures, social determinants of health, or patient consumer activity. However, interoperability of data collection systems will be paramount for these types of efforts to be feasible.
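
To make the idea of a shared, privacy-preserving linkage key concrete, the following Python sketch derives a pseudonymous token from normalized name and date-of-birth fields using a keyed hash (HMAC-SHA256). It is an illustrative stand-in, not the encrypted identifier scheme of any existing program: the choice of fields, the normalization rules, and the assumption that a trusted party distributes a secret key to participating systems are all hypothetical. A plain, unkeyed hash of names and birth dates would be open to dictionary attacks, which is why a secret key is used. Because tokens match only on exact (normalized) agreement, a typo in any field defeats the link; that gap is what probabilistic linkage tries to close, at the cost of the linkage error noted above.

```python
import hashlib
import hmac
import unicodedata


def normalize(value: str) -> str:
    """Uppercase, strip accents, and keep only letters and digits."""
    decomposed = unicodedata.normalize("NFKD", value)
    ascii_only = decomposed.encode("ascii", "ignore").decode("ascii")
    return "".join(ch for ch in ascii_only.upper() if ch.isalnum())


def linkage_token(first: str, last: str, dob_iso: str, secret_key: bytes) -> str:
    """Derive a pseudonymous token from identifying fields with HMAC-SHA256.

    Every participating system computes the token locally with the same
    (hypothetical) shared secret key, so only tokens need to be exchanged.
    """
    message = "|".join([normalize(first), normalize(last), normalize(dob_iso)])
    return hmac.new(secret_key, message.encode("utf-8"), hashlib.sha256).hexdigest()


def link(records_a, records_b, secret_key: bytes):
    """Return (id_a, id_b) pairs whose tokens match exactly."""
    index = {}
    for rec in records_b:
        token = linkage_token(rec["first"], rec["last"], rec["dob"], secret_key)
        index.setdefault(token, []).append(rec["id"])
    pairs = []
    for rec in records_a:
        token = linkage_token(rec["first"], rec["last"], rec["dob"], secret_key)
        pairs.extend((rec["id"], id_b) for id_b in index.get(token, []))
    return pairs


# Example: an EMS run sheet and ED charts for fictional patients.
KEY = b"hypothetical-shared-secret"
ems = [{"id": "EMS-17", "first": "José", "last": "O'Neil", "dob": "1950-03-02"}]
ed = [
    {"id": "ED-902", "first": "JOSE", "last": "ONeil", "dob": "1950-03-02"},
    {"id": "ED-903", "first": "Jose", "last": "ONeil", "dob": "1950-03-03"},
]
print(link(ems, ed, KEY))  # [('EMS-17', 'ED-902')]
```

In this design, each system (EMS, ED, inpatient) would compute tokens locally and share only the tokens, so names and birth dates never leave the originating system.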

Figure 1.

Example of how a single emergency care encounter can generate multiple unique, unlinked health care records juxtaposing current and proposed paradigms of data collection. CMS = Centers for Medicare & Medicaid Services; LTAC = long-term acute care; PCP = primary care provider.

2. Create an NIH-managed and Maintained Central, Deidentified, Open-access Database for Research Purposes

With increasing data collection, there is a significant need for facile methods to seamlessly load increasingly granular, deidentified, patient-level data into open-access systems for the scientific community. The skeleton of such systems already exists in the Healthcare Cost and Utilization Project (HCUP) and various Centers for Disease Control and Prevention databases, but the limited data fields they collect constrain the hypotheses that can be tested using these resources. Privacy, ethical, and legal challenges also need to be surmounted. While the NIH has required public reporting of data for several years,18 there has been no single interoperable repository, no easy mechanism for this reporting, and no way to track when it is completed. The framework for such an approach exists in the NIH-supported genomics, proteomics, and metabolomics central repositories. Extending this approach to sharing clinical data and linking disparate sources would build capacity to study complex disease states, long-term outcomes, and rare diseases that cannot be adequately studied with current methodology. Open-access data sets also allow for improved reliability of research as well as external verification of statistical analyses. Recently, there has been increased concern regarding the reproducibility of research arising from genetic and microarray analyses.19,20 The issues at play are complex and include vague methods, poor quality control, inconsistent data reporting, and lack of statistical clarity, all of which culminate in an inability to reproduce research findings. Such “big data” problems are likely to affect clinical research as the deluge of information continues. Open-access databases would allow for external validation of study findings using similar or orthogonal data analysis methods. Before such repositories are created, however, an adequate framework must be developed for their proper use and maintenance.
Unless such systems remain facile and adequately supported, increased unfunded requirements of investigators (such as public reporting, ensuring data quality, and responsibility for responding to queries) may inadvertently threaten data integrity and public perception of research reliability, and may quell future research endeavors by investigators and patients alike. As evidence from across the information technology spectrum continues to demonstrate, data breaches seem to be a near inevitability for purely online data repositories. Expansive data sets may be better maintained on isolated mainframes, with lock-and-key approval for access, under a model similar to that of the HCUP database. We believe that the NIH is best poised to develop, operate, and maintain such a database to ensure a high-quality, high-fidelity, and secure data resource.
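
One widely discussed deidentification criterion for a repository of this kind is k-anonymity: every combination of quasi-identifiers (for example, age, ZIP code, and sex) in a released table must be shared by at least k records. The Python sketch below generalizes those fields (10-year age bands, 3-digit ZIP prefixes) and suppresses rows that would still fall in groups smaller than k. The field names and generalization choices are assumptions for illustration; k-anonymity alone is not a complete privacy guarantee and does not by itself satisfy any particular regulatory standard.

```python
from collections import Counter


def generalize(record):
    """Coarsen quasi-identifiers: 10-year age band, 3-digit ZIP prefix, sex.

    These generalization choices are illustrative assumptions.
    """
    decade = (record["age"] // 10) * 10
    return (f"{decade}-{decade + 9}", record["zip"][:3], record["sex"])


def k_anonymize(records, k):
    """Release generalized rows only from quasi-identifier groups of size >= k.

    Returns (released_rows, number_of_suppressed_rows).
    """
    keys = [generalize(r) for r in records]
    group_sizes = Counter(keys)
    released, suppressed = [], 0
    for record, key in zip(records, keys):
        if group_sizes[key] < k:
            suppressed += 1  # too few look-alike records: drop the row entirely
            continue
        age_band, zip3, sex = key
        released.append(
            {"age_band": age_band, "zip3": zip3, "sex": sex,
             "diagnosis": record["diagnosis"]}
        )
    return released, suppressed
```

Suppression is the bluntest option; coarser generalization of the offending groups is a common alternative that trades precision for retained rows.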

3. Improve Methodologies for Visualization and Analysis of Intensively Sampled Data

Increasingly, biometric data are accumulated by machines and recorded in an automated fashion. This can generate long streams of intensively sampled longitudinal data. Examples include long electrocardiographic recordings, as well as sequential blood pressure, heart rate, or other hemodynamic or biometric measurements. Patients often arrive with self-monitored data (e.g., heart rate from fitness monitors) as well. Standard methods and formats are needed to aggregate, synchronize, and annotate these time-varying data from multiple platforms. Methods to move, visualize, and analyze these data (particularly longitudinally) are also not well established. Future work should examine data management and analysis for such intensive longitudinal data. There should also be exploration of the meaning, significance, and reliability of patient self-monitoring data for making treatment decisions. Individuals have already begun hacking and modifying their own devices, particularly glucose monitoring devices, demonstrating a field in which the medical community, for a variety of reasons, is failing to meet patients’ needs.21
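
As a minimal sketch of the synchronization problem, the Python function below places several irregularly sampled streams (e.g., bedside monitor heart rate, cuff blood pressure, and a patient's wearable) on a shared time grid. It assumes timestamps have already been converted to a common clock in integer seconds, and it uses a last-observation-carried-forward rule with a staleness limit (`max_age`), leaving gaps as `None` rather than inventing values. The stream names and the carry-forward rule are illustrative choices; interpolation or event-based alignment may suit other analyses better.

```python
from bisect import bisect_right


def align_streams(streams, start, stop, step, max_age):
    """Resample irregular streams onto a shared time grid.

    streams: dict of name -> list of (timestamp_seconds, value), sorted by time.
    For each grid time t, take the latest sample at or before t, provided it is
    no older than max_age seconds; otherwise record None (an explicit gap).
    """
    grid = list(range(start, stop + 1, step))
    aligned = {"t": grid}
    for name, samples in streams.items():
        times = [ts for ts, _ in samples]
        column = []
        for t in grid:
            i = bisect_right(times, t) - 1  # index of last sample with ts <= t
            if i >= 0 and t - times[i] <= max_age:
                column.append(samples[i][1])
            else:
                column.append(None)
        aligned[name] = column
    return aligned


# Hypothetical streams from three devices, timestamps in seconds.
streams = {
    "hr_monitor": [(0, 88), (5, 90), (10, 93)],
    "nibp_sys": [(2, 132)],
    "wearable_hr": [(7, 91)],
}
table = align_streams(streams, start=0, stop=10, step=5, max_age=6)
```

With `max_age=6`, the blood pressure taken at 2 s fills the 5 s slot but not the 10 s slot, so the gap is explicit instead of a stale reading being silently propagated.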

Furthermore, akin to the Human Genome Project, there is a significant opportunity to create a human imaging project that links phenotypic data with anonymized imaging data that researchers across the globe could explore, enabling novel discovery from already-acquired resources with due consideration of privacy and ethical issues. Finally, as technology continues to develop, very large files are being generated. We expect that as the variety of data types and the intensity of their sampling increase, new compression techniques may be required for data transfer and/or storage. This may become particularly acute for aggregate storage of longitudinal data from large numbers of patients, illustrating the need to partner with technology experts to develop not only strategic approaches but also technical solutions.22
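
One simple lossless approach to the storage problem, shown here only as an illustration, is delta encoding followed by a general-purpose compressor: slowly varying integer signals (such as digitized waveform samples) tend to produce small, repetitive differences that compress well. The Python sketch below uses the standard `array` and `zlib` modules. It assumes integer samples that fit in a C `int` and stores them in the machine's native byte order, so a real interchange format would need to fix the byte order and record metadata such as sampling rate and units.

```python
import array
import zlib
from itertools import accumulate


def compress_samples(samples):
    """Losslessly compress integer samples: delta-encode, then zlib-compress."""
    if not samples:
        return zlib.compress(b"")
    # Keep the first sample as-is, then store successive differences.
    deltas = [samples[0]] + [b - a for a, b in zip(samples, samples[1:])]
    return zlib.compress(array.array("i", deltas).tobytes(), level=9)


def decompress_samples(blob):
    """Invert compress_samples: decompress, then cumulative-sum the deltas."""
    deltas = array.array("i")
    deltas.frombytes(zlib.decompress(blob))
    return list(accumulate(deltas))


# Example: a synthetic, slowly varying waveform (not real patient data).
waveform = [1000 + (i % 50) for i in range(10_000)]
blob = compress_samples(waveform)
raw_size = len(array.array("i", waveform).tobytes())
print(f"raw {raw_size} bytes -> compressed {len(blob)} bytes")
```

Because the scheme is lossless, diagnostic fidelity is preserved; lossy waveform or image compression could shrink files further but would need clinical validation.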

4. Develop Methods to Identify and Standardize Electronic Medical Record Data Quality

Improved access to clinical and administrative information offers substantial opportunities for data science researchers. However, the limited accuracy and reliability of such sources, especially those created in the emergency setting, may impair or misdirect such investigations.17 Documentation that includes templates, copied text,23 and automated advisories can lead to systemic misrepresentation and inconsistencies in medical records and administrative data sets. In the ED setting, rapidly evolving situations and a high flux of changing preliminary information contribute to inconsistently accurate records. Improving the ability to ensure the fidelity of clinical data sets is an area ripe for investigation that has received limited attention to date. Future work in data science and medical informatics should include improved methods to detect and reduce data quality problems in large data sets and the establishment of much-needed standards. Back-end approaches to ensuring fidelity include identifying data patterns indicative of potential systematic error, such as those that are repetitive, overly consistent, or anomalous in appearance. Front-end solutions that reduce error at the time of data creation, such as preventing entry of illogical or incompatible information, are also desirable.24 Examples might include automated prompting for clinical verification of a positive pregnancy test result in a biologic male patient (which could occur in the setting of testicular cancer) or of a normal mental status in a patient who is intubated (which may occur immediately prior to extubation). Such examples demonstrate the need for broad stakeholder input and the development of improved human–computer interfaces, with a commitment to record integrity. Without improved methods at the point of data entry, scientists are likely to work with poor-quality research data that are prone to erroneous findings and to irreproducible or systemically biased studies.
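
The front-end and back-end checks described above can be sketched in a few lines of Python. The `Encounter` record and its field names are hypothetical simplifications of an EHR schema. The front-end rules return verification prompts rather than rejecting entry because, as noted, each flagged combination is unusual but possible. The back-end check flags examination text that is identical across more than a chosen share of notes (the 20% default is arbitrary), one crude signal of template overuse or copied text.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Encounter:
    """Hypothetical, simplified encounter record (field names are illustrative)."""
    encounter_id: str
    sex: str                       # as recorded in the chart, e.g. "M" or "F"
    pregnancy_test: Optional[str]  # "positive", "negative", or None if not done
    intubated: bool
    mental_status: Optional[str]   # e.g. "normal", "altered", or None
    exam_text: str = ""


def verification_prompts(enc: Encounter) -> List[str]:
    """Front-end check: ask the clinician to confirm unusual combinations."""
    prompts = []
    if enc.sex == "M" and enc.pregnancy_test == "positive":
        prompts.append("Positive pregnancy test recorded for a male patient: please verify.")
    if enc.intubated and enc.mental_status == "normal":
        prompts.append("Normal mental status recorded for an intubated patient: please verify.")
    return prompts


def flag_repeated_text(encounters: List[Encounter], max_share: float = 0.2) -> Dict[str, int]:
    """Back-end check: exam text shared by more than max_share of non-empty notes."""
    texts = [e.exam_text.strip().lower() for e in encounters if e.exam_text.strip()]
    counts = Counter(texts)
    return {text: n for text, n in counts.items() if n / len(texts) > max_share}
```

Identical text is only a proxy: a normal examination is often legitimately worded the same way, so flagged patterns would be a starting point for review rather than proof of error.
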
Additional consideration should be given to creating “research-ready” documentation functionality within EHRs to ensure that critical elements are routinely collected in a structured data format. Such an approach would be particularly valuable for accreditation or certification programs, which rely on clear demonstration of process measures (e.g., door-to-electrocardiogram time or use of order sets for a given condition), and for quality reporting, which requires delineated numerators and denominators (e.g., proportion of low-risk chest pain patients who undergo stress testing) to derive accurate outcome data. Collecting information up front as structured data will improve accuracy and reduce the burden of back-end work. However, implementing this will require a willingness among EHR vendors to deviate from the status quo, something they have not displayed to date.
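
To illustrate why structured capture simplifies quality reporting, the Python sketch below computes the stress testing measure mentioned above as an explicit numerator and denominator, together with a median door-to-electrocardiogram time. It assumes hypothetical structured fields (`chief_complaint`, `heart_score`, `stress_test`, `arrival_time`, `first_ecg_time`) and defines “low risk” as a HEART score of 3 or less; both the field names and that cutoff are assumptions, not a published measure specification.

```python
from datetime import datetime
from statistics import median

LOW_RISK_HEART_MAX = 3  # assumed "low-risk" cutoff on a structured HEART score field


def low_risk_stress_test_rate(visits):
    """Return (numerator, denominator, rate) for low-risk chest pain stress testing."""
    denominator = [
        v for v in visits
        if v["chief_complaint"] == "chest pain"
        and v.get("heart_score") is not None
        and v["heart_score"] <= LOW_RISK_HEART_MAX
    ]
    numerator = [v for v in denominator if v["stress_test"]]
    rate = len(numerator) / len(denominator) if denominator else None
    return len(numerator), len(denominator), rate


def median_door_to_ecg_minutes(visits):
    """Median minutes from arrival to first ECG, skipping visits missing either time."""
    minutes = [
        (datetime.fromisoformat(v["first_ecg_time"])
         - datetime.fromisoformat(v["arrival_time"])).total_seconds() / 60
        for v in visits
        if v.get("arrival_time") and v.get("first_ecg_time")
    ]
    return median(minutes) if minutes else None
```

When the same quantities must instead be reconstructed from free text, each of these simple filters becomes an abstraction or natural language processing problem in its own right.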

5. Improve and Utilize Natural Language Processing for the More Robust Study of Patient, Provider, and Systems-level Challenges

Most data for ED encounters are contained in the history, physical examination, evaluation/management services, and imaging report components of chart documentation. Unfortunately, these data are rarely structured in current medical records and are most often entered as free or dictated text.25 This creates a barrier to large-scale exploration of electronic medical records. It is highly likely that these data elements are more reliable or more relevant to patient care, given the de facto emphasis clinicians place on communicating their thought process through free text. Absent the ability to implement structured data capture at intake, better methods are needed to work with unstructured data and seamlessly convert it to a usable format. While a number of technologic solutions have expanded the potential to achieve this, such an approach has yet to be integrated into the clinical arena for routine data management.3,26,27 Future work should examine how to structure abundant free-text data from encounters into analyzable forms that preserve the richness of these data, as opposed to forcing artifactual discrete data field entry. Novel methods, including two-step “smart” processing, should also be explored, whereby discrete data points that correspond to a diagnosis or criteria for study inclusion are automatically transformed (e.g., an echocardiogram report of an ejection fraction of 35% is converted to a diagnosis of heart failure with reduced ejection fraction, or a potassium level of 6.5 mmol/L is interpreted by the processor as hyperkalemia).
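
A toy version of the two-step “smart” processing described above is sketched below in Python: step 1 extracts discrete values from free text with regular expressions, and step 2 maps those values to study-relevant labels. The patterns and cutoffs (ejection fraction of 40% or less for reduced ejection fraction; potassium of 5.5 mmol/L or more for hyperkalemia) are assumptions that would need local validation. Regular expressions of this kind do not handle negation, ranges (e.g., “30-35%”), historical values, or unit variants, which is precisely why more robust natural language processing is needed.

```python
import re

# Step 1 patterns (illustrative): a keyword, up to 30 non-digit characters, then "NN%".
EF_PATTERN = re.compile(
    r"\b(?:left ventricular ejection fraction|ejection fraction|LVEF|EF)\b"
    r"[^0-9%\n]{0,30}(\d{1,2})\s*%",
    re.IGNORECASE,
)
POTASSIUM_PATTERN = re.compile(
    r"\b(?:potassium|K)\b[^0-9\n]{0,15}(\d+(?:\.\d+)?)", re.IGNORECASE
)

HFREF_EF_MAX = 40          # percent; assumed cutoff for reduced ejection fraction
HYPERKALEMIA_K_MIN = 5.5   # mmol/L; assumed cutoff, definitions vary


def extract_values(text):
    """Step 1: pull the first ejection fraction and potassium value from free text."""
    values = {}
    ef = EF_PATTERN.search(text)
    if ef:
        values["ef_percent"] = int(ef.group(1))
    k = POTASSIUM_PATTERN.search(text)
    if k:
        values["potassium_mmol_l"] = float(k.group(1))
    return values


def derive_labels(values):
    """Step 2: translate discrete values into study-relevant labels."""
    labels = []
    if values.get("ef_percent") is not None and values["ef_percent"] <= HFREF_EF_MAX:
        labels.append("heart failure with reduced ejection fraction")
    if values.get("potassium_mmol_l") is not None and values["potassium_mmol_l"] >= HYPERKALEMIA_K_MIN:
        labels.append("hyperkalemia")
    return labels


note = "Echo: left ventricular ejection fraction of 35%. Potassium level of 6.5 mmol/L."
labels = derive_labels(extract_values(note))
```

Separating extraction from interpretation lets the same extracted values feed different studies, each applying its own inclusion thresholds in step 2.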

6. Develop and Utilize Syndrome or Complaint-based Taxonomies of Disease

Patient encounters in emergency medicine are poorly characterized by common taxonomies of disease.28,29 As an example, a patient may be classified by a final diagnosis mapped to an ICD-10 code (e.g., gastroesophageal reflux). However, this code does not reflect the initial symptoms or physiologic syndrome that led to the ED visit (e.g., chest pain). ICD-10 and other diagnostic coding systems are therefore poor tools for assessing whether utilization or testing (e.g., stress testing or CT scan) was appropriate for a given presentation. The Centers for Disease Control and Prevention and the National Library of Medicine have developed or disseminated syndromic and clinical terminologies, including SNOMED-CT,30 that could serve as the basis for such taxonomies. Future work in the data sciences should develop standards for comparing research findings based on post hoc diagnoses, made after diagnostic testing and workup, with findings in an undifferentiated patient population. For instance, studies of patients with an ICD-10 diagnosis of sepsis could compare their results to an unselected cohort of patients who meet consensus criteria for sepsis in the ED or who present with a vague complaint (e.g., fever or body aches) that may or may not ultimately be coded as sepsis. The aforementioned improvements in natural language processing focused on the chief complaint may be particularly useful in this regard. This would enable a better understanding of diagnostic decision making at the provider level and help to interpret the accuracy of relatively nonspecific criteria that, by virtue of being tied to performance metrics, can trigger unnecessary or even inappropriate care (e.g., administering large amounts of fluid to a patient solely on the basis of sepsis criteria to avoid a perceived or actual penalty). The EM Common Core Model may also serve as a framework for such a system.
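
The Python sketch below illustrates the comparison proposed above: visits are grouped by a presenting syndrome inferred from the free-text chief complaint, and within each syndrome the code tabulates how many visits were ultimately coded as sepsis. The keyword map is a hypothetical placeholder for a validated complaint taxonomy, and the ICD-10-CM prefixes (A40, A41, R65.2) are an illustrative, not exhaustive, definition of a sepsis code set.

```python
from collections import Counter, defaultdict

# Hypothetical keyword map from free-text chief complaint to presenting syndrome.
SYNDROME_KEYWORDS = {
    "chest pain": ["chest pain", "chest pressure", "chest tightness"],
    "febrile illness": ["fever", "chills", "body aches"],
    "dyspnea": ["shortness of breath", "dyspnea"],
}
SEPSIS_CODE_PREFIXES = ("A40", "A41", "R65.2")  # illustrative ICD-10-CM prefixes


def syndrome_of(chief_complaint):
    """Return the first syndrome whose keywords appear in the complaint, else 'other'."""
    text = chief_complaint.lower()
    for syndrome, keywords in SYNDROME_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return syndrome
    return "other"


def crosswalk(visits):
    """Tabulate final sepsis coding within each presenting syndrome."""
    table = defaultdict(Counter)
    for v in visits:
        coded_sepsis = any(code.startswith(SEPSIS_CODE_PREFIXES) for code in v["icd10"])
        table[syndrome_of(v["chief_complaint"])]["sepsis" if coded_sepsis else "not sepsis"] += 1
    return table
```

Reading the table by row (syndrome) describes an undifferentiated presenting cohort, whereas selecting only visits coded as sepsis reproduces the post hoc diagnostic cohort; contrasting the two is the comparison the paragraph calls for.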

7. Develop a Practical and Ethical Framework to Leverage Electronic Systems for Data Collection in Controlled Trials

Data science can often use naturally occurring variability to infer differences between groups receiving different interventions, but such findings are limited by unrecognized confounding. Furthermore, systematic selection bias can easily be introduced and may not be adequately evaluated using typical methods to address missingness.31 More reliable and accurate confirmation of therapeutic effects within large, ongoing clinical and administrative data sets may require incorporating patient-level or site-clustered allocation to an intervention by random or quasi-random methods. Current methods of integration require labor-intensive manual data abstraction and impede the seamless conduct of clinical trials. This impediment is particularly important outside of academic medical centers and potentially contributes to systemic bias in research findings. Implementing such integration would enhance data capture, offer novel methods to increase protocol fidelity (e.g., pop-ups or text messages prompting patients to document pain levels or nurses to chart updated vital signs), and perhaps expand the types of effects that can be assessed. For example, by incorporating patient flow data into health records, we may be able to automate modeling of patient care efficiency (e.g., throughput times for service, waits in queue) and other data relevant to operational improvements in the ED, while providing a readily accessible test environment to study alternative approaches to care delivery (e.g., fast track, team triage).
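
For the randomized allocation discussed above, a minimal Python sketch shows two common designs: cluster randomization, in which whole sites are allocated to arms in equal numbers, and patient-level permuted block randomization, which keeps arms balanced within every block of consecutive enrollees. The arm labels, block size, and fixed seed (used so that the allocation list is reproducible and auditable) are illustrative assumptions; in practice, the allocation sequence would typically be generated and concealed by a trial's data coordinating center rather than inside the EHR.

```python
import random


def randomize_clusters(sites, arms=("usual care", "intervention"), seed=2018):
    """Allocate whole sites to arms in (near) equal numbers after a seeded shuffle."""
    rng = random.Random(seed)
    shuffled = list(sites)
    rng.shuffle(shuffled)
    return {site: arms[i % len(arms)] for i, site in enumerate(shuffled)}


def permuted_block_sequence(n_patients, arms=("usual care", "intervention"),
                            block_size=4, seed=2018):
    """Patient-level allocation list built from randomly permuted blocks."""
    if block_size % len(arms):
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)
    sequence = []
    while len(sequence) < n_patients:
        block = list(arms) * (block_size // len(arms))  # equal count of each arm
        rng.shuffle(block)
        sequence.extend(block)
    return sequence[:n_patients]


sites = ["Site A", "Site B", "Site C", "Site D", "Site E", "Site F"]
site_arms = randomize_clusters(sites)
patient_arms = permuted_block_sequence(10)
```

Fixed, small blocks make the next assignment partly predictable near the end of each block, which is one reason real trials often vary block sizes and conceal the sequence.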

8. Explore Technologies to Help Enable the Conduct of Clinical Trials in the Emergency Setting by Streamlining Subject Identification

Achieving the aforementioned defragmentation of electronic medical records could lead to improved methods to identify eligible subjects for clinical trials in the emergency care setting. Examples that could be explored include a national database of preencounter study consent (i.e., patients consent in advance to participate in an emergency care trial for which they may be unable to consent at the time of their acute illness), use of videotaped presentations to provide information necessary for informed consent, matching of patients to potential studies via background electronic medical record analysis, and automated notification of patients and providers about eligibility for studies.33–36 Registry-based randomized controlled trials,37 which leverage preexisting registries (which are relatively low cost and internally valid) to identify patients or institutions for randomization, may also be an option. Because high-quality data are already being collected, such trials reduce the need for new data collection, and therefore cost, and should be part of the pragmatic data science toolbox for the learning health systems of the future. However, at present, such methods remain underdeveloped and inconsistently applied. Funding for pilot studies could help optimize procedures, leveraging the strengths of novel data science applications.
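
Background matching of patients to studies can be sketched as a set of eligibility predicates evaluated against each new encounter, combined with a lookup in a preencounter consent registry. In the Python sketch below, the `Study` structure, the trial names, and the example criteria are hypothetical; a deployed system would also need exclusion criteria, auditing, and clinician review before any patient or provider is notified.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Set


@dataclass
class Study:
    """Hypothetical study definition: all inclusion predicates must be true."""
    name: str
    criteria: List[Callable[[dict], bool]]
    requires_prior_consent: bool = False


def eligible_studies(patient: dict, studies: List[Study],
                     consent_registry: Dict[str, Set[str]]) -> List[str]:
    """Return names of studies for which this encounter appears eligible.

    consent_registry maps a patient identifier to the set of study names the
    patient agreed to in advance (a hypothetical preencounter consent database).
    """
    matches = []
    for study in studies:
        if not all(rule(patient) for rule in study.criteria):
            continue
        consented = consent_registry.get(patient["patient_id"], set())
        if study.requires_prior_consent and study.name not in consented:
            continue
        matches.append(study.name)
    return matches


# Example studies (hypothetical names and criteria).
STUDIES = [
    Study("SEPSIS-FLUIDS (hypothetical)",
          [lambda p: p["age"] >= 18, lambda p: p.get("lactate", 0) >= 4.0]),
    Study("ARREST-X (hypothetical)",
          [lambda p: p.get("cardiac_arrest", False)], requires_prior_consent=True),
]
```

Keeping criteria as small, independent predicates makes it straightforward to audit why a patient was or was not flagged for a given study.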

9. Train Emergency Care Clinicians in Data Science and Data Scientists in Emergency Care Medicine

Development and funding of targeted K-level training grants beyond the K01 mechanism will be required to develop researchers, especially clinician-scientists, in data science. This data scientist workforce must be capable of both creating data science methodology and applying data science approaches to the emergency care setting. Application of data science approaches should include traditional bioinformatics, clinical health informatics, and public health informatics. Programs should also be developed to train a new type of clinician in data science. For success, clinicians must be intimately involved in curating data, choosing outcomes to predict, and ultimately building and rigorously testing algorithms to ensure that data science research remains firmly rooted in the realities of clinical care. Ideally, such training would begin at the undergraduate level and continue into graduate education in medical schools, as well as in computer science and engineering pre- and postdoctoral programs. However, to operationalize this in a meaningful way, dedicated fellowship training and ongoing faculty career development programs would be needed.

CONCLUSION

The exponential growth of health care data carries enormous promise for the better understanding of health and disease, with the potential for tangible benefits for patients and providers alike. Such advances are in danger of being stalled by a number of theoretical and practical barriers. Coordinated research and policy approaches may help lower some of these barriers to help fulfill the promise of big data in emergency care.

Footnotes

The authors have no relevant financial information or potential conflicts to disclose.

Contributor Information

Michael A. Puskarich, Department of Emergency Medicine, University of Mississippi Medical Center, Jackson, MS.

Clif Callaway, Department of Emergency Medicine, University of Pittsburgh, Pittsburgh, PA.

Robert Silbergleit, Department of Emergency Medicine, University of Michigan, Ann Arbor, MI.

Jesse M. Pines, Departments of Emergency Medicine and Health Policy & Management, George Washington University, Washington, DC.

Ziad Obermeyer, Brigham and Women’s Hospital, Harvard Medical School, Boston, MA.

David W. Wright, Departments of Emergency Medicine and Health Policy & Management, Emory University, Atlanta, GA.

Renee Y. Hsia, Department of Emergency Medicine and the Institute of Health Policy Studies, University of California San Francisco, San Francisco, CA.

Manish N. Shah, Department of Emergency Medicine, University of Wisconsin–Madison, Madison, WI.

Andrew A. Monte, Department of Emergency Medicine, University of Colorado School of Medicine, Aurora, CO.

Alexander T. Limkakeng, Jr., Division of Emergency Medicine, Duke University School of Medicine, Durham, NC.

Zachary F. Meisel, Perelman School of Medicine, University of Pennsylvania, Philadelphia, PA.

Phillip D. Levy, Department of Emergency Medicine and Integrative Biosciences Center, Wayne State University, Detroit, MI.

References

- 1.Raghupathi W, Raghupathi V. Big data analytics in healthcare: promise and potential. Health Inf Sci Syst 2014;2:3. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Kruse CS, Kothman K, Anerobi K, Abanaka L. Adoption factors of the electronic health record: a systematic review. JMIR Med Inform 2016;4:e19. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Janke AT, Overbeek DL, Kocher KE, Levy PD. Exploring the potential of predictive analytics and big data in emergency care. Ann Emerg Med 2016;67:227–36. [DOI] [PubMed] [Google Scholar]

- 4.National Hospital Ambulatory Medical Care Survey: 2015. Emergency Department Summary Tables Available at: https://www.cdc.gov/nchs/data/nhamcs/web_tables/2015_ed_web_tables.pdf. Accessed Jun 15, 2018.

- 5.National Hospital Ambulatory Medical Care Survey: 2014. State and National Summary Tables Available at: https://www.cdc.gov/nchs/data/ahcd/namcs_summary/2014_namcs_web_tables.pdf. Accessed Jun 15, 2018.

- 6. Agency for Healthcare Research and Quality. Trends in Hospital Inpatient Stays by Age and Payer, 2000–2015. Available at: https://www.hcup-us.ahrq.gov/reports/statbriefs/sb235-Inpatient-Stays-Age-Payer-Trends.pdf. Accessed Jun 15, 2018.

- 7. Pines JM, Lotrecchiano GR, Zocchi MS, et al. A conceptual model for episodes of acute, unscheduled care. Ann Emerg Med 2016;68:484–91.

- 8. Galarraga JE, Pines JM. Costs of ED episodes of care in the United States. Am J Emerg Med 2016;34:357–65.

- 9. Obermeyer Z, Lee TH. Lost in thought - the limits of the human mind and the future of medicine. N Engl J Med 2017;377:1209–11.

- 10. McMurray J, Hicks E, Johnson H, Elliott J, Byrne K, Stolee P. ‘Trying to find information is like hating yourself every day’: the collision of electronic information systems in transition with patients in transition. Health Inform J 2013;19:218–32.

- 11. Emergency Department Transitions of Care - A Quality Measurement Framework. Final Report. Washington, DC: National Quality Forum, 2017.

- 12. Weber GM, Adams WG, Bernstam EV, et al. Biases introduced by filtering electronic health records for patients with “complete data”. J Am Med Inform Assoc 2017;24:1134–41.

- 13. Newgard CD, Zive D, Jui J, Weathers C, Daya M. Electronic versus manual data processing: evaluating the use of electronic health records in out-of-hospital clinical research. Acad Emerg Med 2012;19:217–27.

- 14. Harron K, Wade A, Gilbert R, Muller-Pebody B, Goldstein H. Evaluating bias due to data linkage error in electronic healthcare records. BMC Med Res Methodol 2014;14:36.

- 15. Harron KL, Doidge JC, Knight HE, et al. A guide to evaluating linkage quality for the analysis of linked data. Int J Epidemiol 2017;46:1699–710.

- 16. Kum HC, Ahalt S. Privacy-by-design: understanding data access models for secondary data. AMIA Jt Summits Transl Sci Proc 2013;2013:126–30.

- 17. Balas EA, Vernon M, Magrabi F, Gordon LT, Sexton J. Big data clinical research: validity, ethics, and regulation. Stud Health Technol Inform 2015;216:448–52.

- 18. Zarin DA, Tse T, Williams RJ, Carr S. Trial reporting in ClinicalTrials.gov - The final rule. N Engl J Med 2016;375:1998–2004.

- 19. Allison DB, Cui X, Page GP, Sabripour M. Microarray data analysis: from disarray to consolidation and consensus. Nat Rev Genet 2006;7:55–65.

- 20. Bustin SA, Huggett JF. Reproducibility of biomedical research - The importance of editorial vigilance. Biomol Detect Quantif 2017;11:1–3.

- 21. Mann M. This Diabetes Activist Hacked Her Medical Device and Made an Artificial Pancreas. Available at: https://motherboard.vice.com/en_us/article/aekkyj/this-diabetes-activist-hacked-her-medical-device-and-made-an-artificial-pancreas. Accessed Jun 15, 2018.

- 22. Francescon R, Hooshmand M, Gadaleta M, Grisan E, Yoon SK, Rossi M. Toward lightweight biometric signal processing for wearable devices. Conf Proc IEEE Eng Med Biol Soc 2015;2015:4190–3.

- 23. Patterson ES, Sillars DM, Staggers N, et al. Safe practice recommendations for the use of copy-forward with nursing flow sheets in hospital settings. Jt Comm J Qual Patient Saf 2017;43:375–85.

- 24. Daymont C, Ross ME, Russell LA, Fiks AG, Wasserman RC, Grundmeier RW. Automated identification of implausible values in growth data from pediatric electronic health records. J Am Med Inform Assoc 2017;24:1080–7.

- 25. Kimia AA, Savova G, Landschaft A, Harper MB. An introduction to natural language processing: how you can get more from those electronic notes you are generating. Pediatr Emerg Care 2015;31:536–41.

- 26. Polnaszek B, Gilmore-Bykovskyi A, Hovanes M, et al. Overcoming the challenges of unstructured data in multisite, electronic medical record-based abstraction. Med Care 2016;54:e65–72.

- 27. Luo L, Li L, Hu J, Wang X, Hou B, Zhang T, Zhao LP. A hybrid solution for extracting structured medical information from unstructured data in medical records via a double-reading/entry system. BMC Med Inform Decis Mak 2016;16:114.

- 28. Griffey RT, Pines JM, Farley HL, et al. Chief complaint-based performance measures: a new focus for acute care quality measurement. Ann Emerg Med 2015;65:387–95.

- 29. Berdahl C, Schuur JD, Fisher NL, Burstin H, Pines JM. Policy measures and reimbursement for emergency medical imaging in the era of payment reform: proceedings from a panel discussion of the 2015 Academic Emergency Medicine Consensus Conference. Acad Emerg Med 2015;22:1393–9.

- 30. Lee D, de Keizer N, Lau F, Cornet R. Literature review of SNOMED CT use. J Am Med Inform Assoc 2014;21:e11–9.

- 31. Haneuse S, Daniels M. A general framework for considering selection bias in EHR-based studies: what data are observed and why? EGEMS (Wash DC) 2016;4:1203.

- 32. Saver JL, Starkman S, Eckstein M, et al. Methodology of the Field Administration of Stroke Therapy - Magnesium (FAST-MAG) phase 3 trial: Part 2 - prehospital study methods. Int J Stroke 2014;9:220–5.

- 33. Furyk J, McBain-Rigg K, Watt K, et al. Qualitative evaluation of a deferred consent process in paediatric emergency research: a PREDICT study. BMJ Open 2017;7:e018562.

- 34. O’Malley GF, Giraldo P, Deitch K, et al. A novel emergency department-based community notification method for clinical research without consent. Acad Emerg Med 2017;24:721–31.

- 35. Offerman SR, Nishijima DK, Ballard DW, Chetipally UK, Vinson DR, Holmes JF. The use of delayed telephone informed consent for observational emergency medicine research is ethical and effective. Acad Emerg Med 2013;20:403–7.

- 36. Brienza AM, Sylvester R, Ryan CM, et al. Success rates for notification of enrollment in exception from informed consent clinical trials. Acad Emerg Med 2016;23:772–5.

- 37. Mathes T, Buehn S, Prengel P, Pieper D. Registry-based randomized controlled trials merged the strength of randomized controlled trails and observational studies and give rise to more pragmatic trials. J Clin Epidemiol 2018;120–7.