Abstract

Importance: Along with growth in telerehabilitation, a concurrent need has arisen for standardized methods of tele-evaluation.

Objective: To examine the feasibility of using the Kinect sensor in an objective, computerized clinical assessment of upper limb motor categories.

Design: We developed a computerized Mallet classification using the Kinect sensor. Accuracy of computer scoring was assessed on the basis of reference scores determined collaboratively by multiple evaluators from reviewing video recordings of movements. In addition, using the reference scores, we assessed the accuracy of the typical clinical procedure in which scores were determined immediately on the basis of visual observation. The accuracy of the computer scores was compared with that of the typical clinical procedure.

Setting: Research laboratory.

Participants: Seven patients with stroke and 10 healthy adult participants. Healthy participants intentionally achieved predetermined scores.

Outcomes and Measures: Accuracy of the computer scores in comparison with accuracy of the typical clinical procedure (immediate visual assessment).

Results: The computerized assessment placed participants’ upper limb movements in motor categories as accurately as did typical clinical procedures.

Conclusions and Relevance: Computerized clinical assessment using the Kinect sensor promises to facilitate tele-evaluation and complement telehealth applications.

What This Article Adds: Computerized clinical assessment can enable patients to conduct evaluations remotely in their homes without therapists present.

Telerehabilitation interventions (i.e., the delivery of rehabilitation via an internet-connected telecommunication platform) are increasingly being used for a wide range of purposes (Charvet et al., 2017; Cotton et al., 2017; Cottrell et al., 2017; Seidman et al., 2017; Theodoros et al., 2016), including stroke rehabilitation (Chen et al., 2015; Linder et al., 2015). The growth of telerehabilitation is driven by the pressing need to provide care for patients who cannot access traditional in-clinic rehabilitation because they lack the resources (e.g., have limited insurance coverage; Pastora-Bernal et al., 2017) or live in rural areas (Cason, 2009). With this growth in telerehabilitation comes a concurrent need to develop methods to enable therapists to obtain patient evaluation data via telecommunication. These data are needed to appropriately plan and progress the rehabilitation program and to measure outcomes. However, very few standardized methods of tele-evaluation are available (Richardson et al., 2017; Truter et al., 2014; Ward et al., 2013). The purpose of this study was to test an objective evaluation method of assessing a stroke patient’s level of arm movement impairment, using the Kinect sensor (Microsoft Corp., Redmond, WA) to facilitate tele-evaluation.

The Kinect sensor is a low-cost motion tracking device (Zhang, 2012) that can measure upper limb movements (Hawi et al., 2014). Many telerehabilitation systems already use the Kinect sensor to detect and encourage limb movements (Bao et al., 2013; Brokaw et al., 2015; Chang et al., 2011; Lange et al., 2011; Lee, 2013; Levac et al., 2015; Seo, Arun Kumar, et al., 2016), thereby making the addition of a Kinect-based assessment feasible. A therapist can review Kinect-based assessment scores without the need for in-clinic, in-person observation, which thus increases access to therapy.

Whether the Kinect can provide valid assessment data for tele-evaluation of the upper limb is unknown. To date, the psychometric properties of Kinect kinematic measures have been both supported and questioned; specifically, Kinect measures such as reachable workspace volume and arm movement velocity have been shown to have strong test–retest reliability, with intraclass correlations (ICCs) of .96–.98 (Lowes et al., 2013). However, the Kinect is limited in measurement accuracy, and its agreement with measurements of active range of motion using hand-held goniometry is low (mean ICC = .48; Hawi et al., 2014). Compared with research-grade three-dimensional motion capture systems, the Kinect has been shown to have an approximately 10° error in estimating shoulder elevation and elbow angles (Bonnechère et al., 2014; Choppin & Wheat, 2013; Hawi et al., 2014; Kitsunezaki et al., 2013; Kurillo et al., 2013; Seo, Fathi, et al., 2016), and this error is higher for other joint angles (Seo, Fathi, et al., 2016). The Kinect has also been reported to overestimate movement velocity by 18%–23% (Lowes et al., 2013). Despite this limited accuracy, Kinect measures correlate with measures obtained from a research-grade three-dimensional motion capture system (Kurillo et al., 2013), can distinguish people with neuromuscular disorders from those without such disorders (Han et al., 2013; Lowes et al., 2013), and correlate with standard clinical assessments (Han et al., 2013, 2015; Lowes et al., 2013). Therefore, although the Kinect may not be sufficiently accurate for precise motion analysis, it may be sufficient to classify people into categories of movement ability.

Classification systems are widely used for many diagnoses. For example, in stroke rehabilitation, classification categories have been used to describe the overall independence of rehabilitation patients (Functional Independence Staging; Stineman et al., 2003), the amount of walking assistance needed (Functional Ambulation Categories; Mehrholz et al., 2007), or the level of poststroke arm motor impairment (Fugl-Meyer Assessment for the Upper Extremity [FMA–UE]; Fugl-Meyer et al., 1975). To be specific, on the FMA–UE, ratings of 0, 1, and 2 are given for no, partial, and near-normal movement ability, respectively. These ordinal ratings are, in essence, categories of patient ability. Using the Kinect to determine an item rating category may require less measurement precision and may thus take advantage of Kinect technology.

Two studies have used rating scales in examining the use of Kinect for categorizing the upper limb movements of patients with stroke (Kim et al., 2016; Olesh et al., 2014). In both studies, participants with stroke performed several FMA–UE movement items while being tracked with the Kinect sensor. Their performance was also rated by therapists. Good agreement was observed between the Kinect and therapist ratings (65%–87% agreement in Kim et al. [2016] and a strong linear relationship in Olesh et al. [2014]). Together, these studies provide preliminary evidence that Kinect measures can reliably assign rating categories to arm movements. However, because the Kinect cannot track finger motions, movements that involve object interaction, or movements out of its sight (e.g., with the hand behind the trunk), the Kinect can replicate only 10–13 of the assessment’s 33 items, and in-person observation is still needed for the remaining part of the assessment to formulate treatment targets and interpret treatment response (Woodbury et al., 2013). Thus, the burden of patient travel for assessment and therapist workload is not reduced.

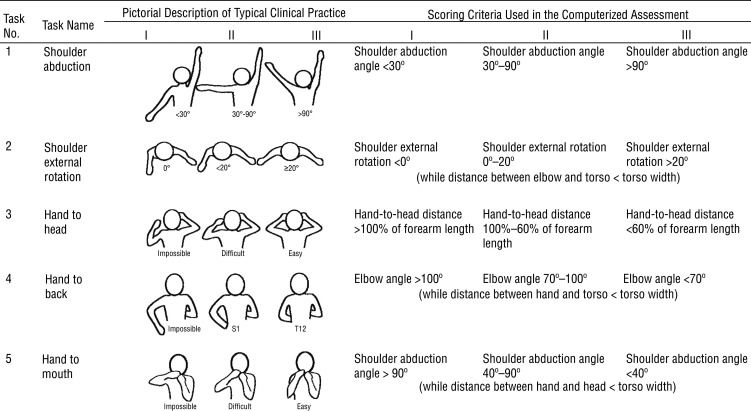

The purpose of this study was to determine the feasibility of using the Kinect sensor to perform a standard clinical assessment in its entirety to objectively classify level of arm gross motor impairment and enable tele-evaluation. Specifically, we used the Mallet system (de Luna Cabrai et al., 2015) to classify stroke survivors with the Kinect. The Mallet system is a standardized method commonly used to assess shoulder movement ability in people with brachial plexus injury. It consists of five movement items (shoulder abduction, shoulder external rotation, hand to head, hand to back, and hand to mouth), and each item is given an ordinal rating (Figure 1). The Mallet is not traditionally a stroke rehabilitation assessment. However, the five motions that comprise the items of this assessment are impaired in people with stroke (Beebe & Lang, 2008; Cirstea & Levin, 2000), and they are critical for recovery of functional tasks such as reaching high, opening a door, brushing hair, adjusting a zipper in the back, and eating. Because the Mallet is based on kinematic measurements and the movements tested do not require object handling, it is suitable for measurement with the Kinect. In this study, we tested the idea that a computerized assessment using the Kinect can categorize a patient’s upper limb impairment level as well as a therapist can. If this is the case, the results will add support for the use of Kinect assessment in telerehabilitation.

Figure 1.

Scoring criteria for the five tasks: typical clinical practice and computerized assessment.

Note. Torso width was defined as the distance between the two shoulders. Forearm length was defined as the distance between the elbow and the wrist.

Method

Development of a Computerized Assessment

We developed a computerized assessment program that used the Kinect sensor by means of the OpenNI Software Development Kit (SDK; http://structure.io/openni) and a custom-developed C++ program. This computer program prompted the user to perform each of the five tasks one by one while it obtained the user’s upper body position data in a three-dimensional space from the Kinect sensor. Specifically, the Kinect sensor detected the positions of the user’s hand, wrist, elbow, shoulder, center of torso, and head. Using these joint position data, the program computed joint angles and distances that were relevant to the scoring criteria (described in the next paragraphs) and determined the user’s score according to these criteria. The program monitored the user’s body positions for 10 s. The program displayed a mirror view of the user to clearly show the upper limb being monitored. The best score observed during the 10-s period was used as the score for a task. On completion of a task, the program prompted the user to perform the next task. On completion of all tasks, a summary of scores for all five tasks was displayed on the computer screen.
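The "best score during the monitoring window" rule can be illustrated with a minimal Python sketch. This is not the authors' C++ program; the function name `best_score`, the integer coding of scores I to III as 1 to 3, and the use of `None` for frames in which no score could be assigned are all assumptions made for illustration.

```python
from typing import Iterable, Optional

# Ordinal scores I, II, III are represented as the integers 1, 2, 3
# (an illustrative coding, not taken from the article).
ROMAN = {1: "I", 2: "II", 3: "III"}


def best_score(frame_scores: Iterable[Optional[int]]) -> Optional[int]:
    """Return the highest score observed in a monitoring window.

    Frames where no score could be assigned are passed as None and ignored
    (an assumption; the article does not say how such frames were handled).
    """
    valid = [s for s in frame_scores if s is not None]
    return max(valid) if valid else None


# Hypothetical per-frame scores for one task during the 10-s window.
window = [None, 1, 1, 2, 3, 2, None]
result = best_score(window)
print(ROMAN[result] if result is not None else "not scored")
```

Taking the maximum means that a brief moment at the highest category determines the task score, which matches the instruction to perform each movement to the best of one's ability.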

The scoring criteria (see Figure 1) were constructed to be consistent with the description of the Mallet classification (de Luna Cabrai et al., 2015). Specifically, on Task 1, shoulder abduction, a shoulder abduction angle of <30° resulted in a score of I, a shoulder abduction angle of 30°–90° resulted in a score of II, and a shoulder abduction angle of >90° resulted in a score of III. For Task 2, shoulder external rotation, shoulder external rotation angles of <0°, 0° to <20°, and ≥20° were assigned scores of I, II, and III, respectively. A shoulder external rotation score was assigned only if the distance between the elbow and the torso was less than the width of the torso.
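As an illustration, the thresholds for Tasks 1 and 2 can be written as two small functions. This is a hedged Python sketch rather than the study's code: the function and parameter names are hypothetical, scores I to III are coded as 1 to 3, and `None` stands for "no score assigned" when the elbow is too far from the torso.

```python
from typing import Optional


def score_shoulder_abduction(abduction_deg: float) -> int:
    """Task 1: <30 deg -> I, 30-90 deg -> II, >90 deg -> III."""
    if abduction_deg < 30.0:
        return 1
    if abduction_deg <= 90.0:
        return 2
    return 3


def score_external_rotation(
    rotation_deg: float, elbow_torso_dist: float, torso_width: float
) -> Optional[int]:
    """Task 2: <0 deg -> I, 0 to <20 deg -> II, >=20 deg -> III.

    A score is assigned only while the elbow stays within one torso width
    of the torso; otherwise None is returned. Distances share one unit.
    """
    if elbow_torso_dist >= torso_width:
        return None
    if rotation_deg < 0.0:
        return 1
    if rotation_deg < 20.0:
        return 2
    return 3


# Hypothetical measurements (degrees and metres).
print(score_shoulder_abduction(75.0))             # category II
print(score_external_rotation(25.0, 0.15, 0.38))  # category III
print(score_external_rotation(25.0, 0.45, 0.38))  # None: elbow too far out
```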

For the remaining three tasks, exact angle or distance criteria that the Kinect could follow were not available from the Mallet classification description. Thus, we developed specific criteria to best match the pictorial description of the scoring criteria (Figure 1). Specifically, for the hand-to-head task (Task 3), a distance from the hand to the head of >100%, 100%–60%, and <60% of the forearm length led to scores of I, II, and III, respectively. For the hand-to-back task (Task 4), elbow angles of >100°, 100°–70°, and <70° resulted in scores of I, II, and III, respectively. The distance between the hand and torso had to be less than the torso width to obtain any score. For the hand-to-mouth task (Task 5), shoulder abduction angles of >90°, 90°–40°, and <40° resulted in scores of I, II, and III, respectively. A shoulder abduction score was not assigned unless the distance between the hand and head was less than the width of the torso.
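The criteria for Tasks 3 to 5 share one pattern: a smaller measured value earns a higher score, and Tasks 4 and 5 additionally require a proximity condition. The Python sketch below expresses that pattern under stated assumptions: the helper `score_descending` and all function names are hypothetical, scores I to III are coded as 1 to 3, boundary values are placed in category II, and `None` means no score was assigned.

```python
from typing import Optional


def score_descending(value: float, upper: float, lower: float) -> int:
    """Score 1 above `upper`, 2 from `upper` down to `lower`, 3 below `lower`."""
    if value > upper:
        return 1
    if value >= lower:
        return 2
    return 3


def score_hand_to_head(hand_head_dist: float, forearm_len: float) -> int:
    """Task 3: hand-head distance as a fraction of forearm length."""
    if forearm_len <= 0.0:
        raise ValueError("forearm length must be positive")
    return score_descending(hand_head_dist / forearm_len, 1.0, 0.6)


def score_hand_to_back(
    elbow_deg: float, hand_torso_dist: float, torso_width: float
) -> Optional[int]:
    """Task 4: elbow angle, scored only if the hand is near the torso."""
    if hand_torso_dist >= torso_width:
        return None
    return score_descending(elbow_deg, 100.0, 70.0)


def score_hand_to_mouth(
    abduction_deg: float, hand_head_dist: float, torso_width: float
) -> Optional[int]:
    """Task 5: shoulder abduction, scored only if the hand is near the head."""
    if hand_head_dist >= torso_width:
        return None
    return score_descending(abduction_deg, 90.0, 40.0)


# Hypothetical measurements (metres and degrees).
print(score_hand_to_head(0.20, 0.25))         # ratio 0.8 -> category II
print(score_hand_to_back(65.0, 0.20, 0.38))   # category III
print(score_hand_to_mouth(30.0, 0.50, 0.38))  # None: hand not near the head
```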

We obtained position data for the hand, wrist, elbow, shoulder, head, and torso from the Kinect OpenNI skeleton tracking library. These position data were used to compute vectors for body segments. Then we computed shoulder abduction angle as the angle between the upper arm and the vertical axis, projected to the frontal plane. The shoulder external rotation angle represented a rotation of the forearm about the axis of the upper arm according to the literature (Soechting et al., 1995; Wu et al., 2005). The elbow angle was the angle between the upper arm and the forearm.
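The geometry behind two of these angles can be sketched in Python from three-dimensional joint positions. Several assumptions are made here and are not taken from the article: the participant faces the sensor so that the frontal plane is the sensor's x-y plane, the y axis points up, the elbow angle is measured at the elbow so that 180° is full extension, and abduction is reported unsigned. The external rotation angle is omitted because its exact construction is not spelled out in enough detail to reproduce.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def sub(a: Vec3, b: Vec3) -> Vec3:
    """Component-wise difference a - b."""
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def angle_between_deg(u: Vec3, v: Vec3) -> float:
    """Unsigned angle between two vectors, in degrees."""
    dot = sum(p * q for p, q in zip(u, v))
    norm_u = math.sqrt(sum(p * p for p in u))
    norm_v = math.sqrt(sum(q * q for q in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("zero-length segment")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cosine))


def shoulder_abduction_deg(shoulder: Vec3, elbow: Vec3) -> float:
    """Angle of the upper arm from the downward vertical in the x-y plane.

    0 deg = arm hanging down, 90 deg = horizontal, 180 deg = overhead.
    The depth (z) component is dropped, i.e. projected onto the frontal plane.
    """
    ux, uy, _ = sub(elbow, shoulder)
    if ux == 0.0 and uy == 0.0:
        raise ValueError("upper arm has no frontal-plane component")
    return math.degrees(math.atan2(abs(ux), -uy))


def elbow_angle_deg(shoulder: Vec3, elbow: Vec3, wrist: Vec3) -> float:
    """Angle at the elbow between upper arm and forearm (180 deg = straight)."""
    return angle_between_deg(sub(shoulder, elbow), sub(wrist, elbow))


# Hypothetical joint positions in metres (x right, y up, z away from sensor).
shoulder, elbow, wrist = (0.2, 0.4, 1.8), (0.5, 0.4, 1.8), (0.5, 0.65, 1.8)
print(round(shoulder_abduction_deg(shoulder, elbow), 1))
print(round(elbow_angle_deg(shoulder, elbow, wrist), 1))
```

With these example positions the upper arm is horizontal and the forearm points straight up, so both printed angles are 90 degrees.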

Evaluation of the Computerized Assessment

Participants.

Two experiments were conducted. Participants in the first experiment were 10 healthy adults with no history of neurological or orthopedic disorders affecting the upper limbs (age range = 20–37 yr). They used the right arm for evaluation. Participants in the second experiment were 7 stroke survivors with upper limb hemiparesis (age range = 34–81 yr) whose stroke had occurred 5–58 mo before the study. Two had the left side affected, and 5 had the right side affected. Their impairment level ranged from severe to mild, with FMA–UE scores of 12–55 out of 60 (Woodbury et al., 2013), representing a range of upper limb impairment levels. They used their affected arm for evaluation.

None of the participants had a language barrier or cognitive impairment that precluded them from following instructions. Evaluations were conducted at the Medical University of South Carolina and the University of Wisconsin–Milwaukee. The study protocols were approved by the institutional review boards of these universities. All participants provided informed consent.

Procedure.

We conducted two experiments to evaluate scoring by the computerized assessment. In the first experiment, healthy participants intentionally aimed to achieve each score for each task (see Figure 1). Thus, healthy participants performed the computerized assessment three times to obtain scores of I, II, and III on each of the five tasks. To help healthy participants move their arm correctly to achieve the target score, pictorial descriptions of the target range (see Figure 1) and a mirror view of the participant were shown on the computer screen. Review of the video recordings confirmed that participants moved their arm in a way that corresponded to the target (similar to the gold standard, described next).

The second experiment involved participants with stroke performing the computerized assessment. Each participant performed the computerized assessment one time and was instructed to try to achieve the movement in each task to the best of their ability. Their movements were simultaneously visually assessed by two occupational therapy students who had been trained in scoring this assessment. For the purposes of this article, we call the process of scoring on the basis of immediate visual assessment typical procedure, because it is how assessments are commonly scored in clinical practice. The participants’ movements were also videotaped. The video recordings were later reviewed by the two occupational therapy students to collaboratively determine the score. For the purposes of this article, we call the process of scoring by reviewing a video the gold standard, because scoring from a video offers ample opportunity to review, discuss, and collaboratively determine a score. For both experiments, the Kinect sensor was placed on a table approximately 1.8 m in front of the participant.

Analysis.

We evaluated the accuracy of the computerized scores as follows. For the first experiment, we compared the scores assigned by the computer program with the target scores. The percentage accuracy was computed as the percentage of trials for which the computer program gave the same score as the target score. In addition, we obtained Cohen’s κ coefficients for all tasks combined and for each task individually. Cohen’s κ coefficient represents interrater agreement for categorical items (Hallgren, 2012); a κ of 1 indicates perfect agreement; .81–.99, almost perfect agreement; .61–.80, substantial agreement; .41–.60, moderate agreement; .21–.40, fair agreement; and .01–.20, slight agreement. A κ of 0 indicates agreement by chance, and negative values indicate less-than-chance agreement (Viera & Garrett, 2005). In this study, Cohen’s κ coefficient represented the extent of agreement with the gold standard or the scores that were collaboratively determined on the basis of the video recordings.
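Both agreement measures can be computed in a few lines. The Python sketch below uses the standard unweighted Cohen's κ formula, κ = (p_o − p_e) / (1 − p_e), where p_o is the observed proportion of matching scores and p_e is the proportion expected by chance from each rater's category frequencies. The function names, the handling of values that fall between the published bands, and the example score lists are illustrative assumptions, not study data.

```python
from collections import Counter
from typing import Hashable, Sequence


def percent_agreement(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Percentage of trials on which the two raters gave the same score."""
    if len(a) != len(b) or not a:
        raise ValueError("need two non-empty, equal-length score lists")
    return 100.0 * sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: Sequence[Hashable], b: Sequence[Hashable]) -> float:
    """Unweighted Cohen's kappa for two raters scoring the same trials."""
    n = len(a)
    p_observed = percent_agreement(a, b) / 100.0
    count_a, count_b = Counter(a), Counter(b)
    categories = set(count_a) | set(count_b)
    p_chance = sum(count_a[c] * count_b[c] for c in categories) / (n * n)
    if p_chance == 1.0:
        raise ValueError("kappa is undefined when chance agreement is 1")
    return (p_observed - p_chance) / (1.0 - p_chance)


def interpret_kappa(kappa: float) -> str:
    """Label a kappa value using the bands cited in the text.

    Values between bands (e.g., .805) are assigned to the next band up,
    which is an assumption of this sketch.
    """
    if kappa < 0.0:
        return "less than chance"
    if kappa == 0.0:
        return "chance"
    for upper, label in [(0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")]:
        if kappa <= upper:
            return label
    return "almost perfect" if kappa < 1.0 else "perfect"


# Hypothetical scores (1 = I, 2 = II, 3 = III) for six trials.
reference = [1, 1, 2, 2, 3, 3]
computer = [1, 1, 2, 3, 3, 3]
kappa = cohens_kappa(reference, computer)
print(round(percent_agreement(reference, computer), 1), round(kappa, 2),
      interpret_kappa(kappa))
```

Applied to the combined coefficients reported in this article, `interpret_kappa` labels .78, .66, and .72 all as substantial agreement.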

For the second experiment, we compared the computerized scores with the gold standard (collaborative visual assessment using video recordings) using Cohen’s κ. Likewise, we compared the scores resulting from typical practice (based on the immediate visual assessment) with the gold standard. We then compared the κ coefficients for the computerized assessment and typical practice to evaluate whether the Kinect-based computerized assessment scored stroke survivors as accurately as occurred in typical practice.

Results

The results of the first experiment are shown in Figure 2A. Scores assigned by the computer program matched the target scores 85% of the time (the three large circles along the diagonal) for all five tasks combined. For the remaining approximately 15% of trials, the computer program gave scores that differed from the target scores. For example, for 3% of the trials, the computer program scored the movement as II when the target score was I (see Figure 2A). The percentages matching for Tasks 1–5 were 97%, 93%, 93%, 60%, and 83%, respectively. Cohen’s κ coefficient for all tasks combined was .78.

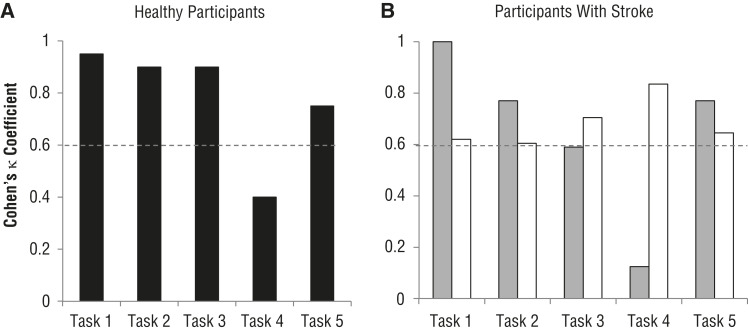

Figure 2.

Results of (A) the first experiment with healthy participants and (B–C) the second experiment with participants with stroke.

Note. The results of the first experiment show the comparison between the target scores and the scores obtained from the computerized assessment using the Kinect sensor. A review of the video recordings confirmed that participants moved their arm in a manner corresponding to the target score. The computer score matched the target score 85% of the time (three large circles along the diagonal) on all five tasks combined. Cohen’s κ coefficient was .78 for all five tasks combined. The results of the second experiment show the comparison between the computerized assessment using the Kinect sensor and the gold standard (B) and the comparison between typical practice and the gold standard (C). For the comparison with the Kinect sensor, the percentage matching was 77%, and Cohen’s κ coefficient was .66 for all five tasks combined. For the comparison with typical practice, the percentage matching was 82%, and Cohen’s κ coefficient was .72 for all five tasks combined (averaged across the two assessors).

The results of the second experiment are shown in Figures 2B and 2C for the computerized assessment and typical practice, respectively. The computer program gave the same scores as the gold standard 77% of the time (the three large circles along the diagonal in Figure 2B) for all five tasks combined. The percentages matching for Tasks 1–5 were 100%, 86%, 71%, 43%, and 86%, respectively. Typical practice (immediate visual assessment) matched the gold standard in scoring 82% of the time (the three large circles along the diagonal in Figure 2C) for all five tasks combined. The percentages matching for Tasks 1–5 were 83%, 75%, 83%, 92%, and 75%, respectively. Cohen’s κ coefficient for all tasks combined was .66 for the computerized assessment and .72 for typical practice (averaged across the two human assessors). These coefficient values are within the range for substantial agreement.

Cohen’s κ coefficients for individual tasks are shown in Figure 3. For the first experiment with healthy participants, Cohen’s κ coefficients for the computerized assessment indicated substantial to almost perfect agreement for all tasks except Task 4 (Figure 3A). This trend for a low level of agreement for Task 4 was seen again for the computerized assessment with participants with stroke (Figure 3B, gray bars). For Task 3, almost perfect agreement was obtained when the computerized assessment scored healthy participants (Figure 3A), whereas moderate agreement was obtained when it scored participants with stroke (Figure 3B, gray bar). Typical practice showed substantial to almost perfect agreement for all tasks (Figure 3B, white bars).

Figure 3.

Cohen’s κ coefficients for individual tasks for (A) the first experiment with healthy participants and (B) the second experiment with participants with stroke for the computerized assessment (gray bars) and typical practice (white bars).

Note. The dashed line at .6 indicates the level above which substantial (.61–.80) to almost perfect (.81–.99) and perfect agreement is obtained and the level below which lower levels of agreement are obtained.

Discussion

For all tasks combined, substantial agreement with the gold standard was achieved for the computerized assessment for both healthy participants (κ = .78) and participants with stroke (κ = .66). That level of agreement was similar to that for typical practice, in which evaluators scored stroke participants’ movements immediately as they saw the movements (κ = .72). These κ values are comparable with those reported in the literature (Bae et al., 2003). Thus, the data suggest that the computerized assessment using the Kinect can categorize arm gross motor impairment as accurately as can typical practice.

The level of agreement differed by task for the computerized assessment, whereas the level of agreement was similar for all tasks for typical practice; specifically, the computerized assessment worked well for the shoulder abduction, shoulder external rotation, and hand-to-mouth tasks (Tasks 1, 2, and 5). The substantial to perfect agreement for Tasks 1 and 5 may be related to the Kinect’s relatively small error in estimating the shoulder abduction angle (mean error of 7° reported in Seo, Fathi, et al., 2016). Substantial to almost perfect agreement was obtained for Task 2, although a previous study reported a large mean error of 36° for shoulder rotation angle determined by the Kinect (Seo, Fathi, et al., 2016). In this study, the elbow remained flexed during the shoulder external rotation task, whereas the previous study involved a pointing movement using a straight arm with complete elbow extension. Thus, in this study, error associated with estimating the plane of the arm (composed of the wrist, elbow, and shoulder points) and shoulder rotation angle was minimal.

The hand-to-head task (Task 3) was associated with almost perfect and moderate agreement for healthy participants and participants with stroke, respectively. Healthy participants typically swung their hand to the side in the frontal plane before putting their hand behind the head, which provided a clear view of the hand approaching the head from the side. However, participants with stroke kept their hand in front of their chest until they moved their hand to the back of the head, which gave little clearance between the hand and head for the Kinect to detect. This difference in movement pattern between healthy participants and participants with stroke may be the reason for the difference in level of agreement on the computerized assessment between the two participant groups for Task 3.

For the hand-to-back task (Task 4), only fair and slight agreement was obtained for healthy participants and participants with stroke, respectively. It appears that the hand and wrist positions were not well detected when they were behind and obstructed by the participant’s body. The discrepancies with the computerized assessment for Tasks 3 and 4 accounted for 75% of all discrepancies (see Figure 2B). A possible solution for this technical issue includes replacing the general skeletal tracking algorithm in the Kinect’s OpenNI SDK with a custom algorithm to account for stroke-specific and task-specific movement patterns.

Implications for Occupational Therapy Practice

The results of this study have the following implications for occupational therapy practice:

- A computerized assessment using the Kinect sensor has potential for use in tele-evaluation, reducing the burden of patient travel and increasing accessibility. The presence of a human rater is not required, thus reducing therapist workload.

- The computerized assessment may be integrated into Kinect-based rehabilitation systems (Bao et al., 2013; Brokaw et al., 2015; Chang et al., 2011; Lange et al., 2011; Lee, 2013; Levac et al., 2015; Seo, Arun Kumar, et al., 2016) to complete telerehabilitation. Patients may participate in therapeutic activity and be evaluated periodically, in the comfort of their own home, while therapists review patients’ adherence to the home program and computerized assessment scores to monitor progress over time and modify the therapy program accordingly, without the need for clinic space.

Limitations

To be comparable to typical practice, use of Kinect-based assessment in practice may be limited to scoring movements that have at least substantial agreement with the gold standard. As mentioned earlier, the Mallet classification is not typically used for patients with stroke, and future work may be needed to address this limitation. A drawback of the current Kinect system is that it monitors only body segments, not other objects. Thus, items in other standard clinical assessments that require object grasping and manipulation cannot be assessed using the Kinect (Kim et al., 2016; Olesh et al., 2014) unless additional sensors are used on the patient’s body or objects (Otten et al., 2015), which hampers feasibility.

The number of participants tested in this study was small. Our future research includes a larger study to assess the generalizability of the findings, integration of the computerized assessment into a telerehabilitation system, and evaluation of the system’s usability in a complete telerehabilitation system.

Conclusion

Computerized assessment using the Kinect sensor was able to place upper limb movements in the gross motor categories as accurately as typical practice (immediate visual assessment). Scoring agreement with the gold standard was substantial to perfect except when the hand and wrist were hidden behind the body. We obtained these findings with stroke patients with a wide range of upper limb impairment levels and with healthy adults aiming to achieve prescribed scores. The results suggest that the computerized gross motor categorization using the Kinect sensor has promise in facilitating tele-evaluation and being integrated into telerehabilitation programs to enhance patients’ access to health care.

Acknowledgment

This research was supported by the National Institutes of Health (Grants 5R24HD065688-04 and P20GM109040). Part of this work was presented at the 37th Annual Meeting of the American Society of Biomechanics, Omaha, Nebraska, and the Medical University of South Carolina 2015 Research Day student poster presentation. The authors declare no conflict of interest.

References

- Bae, D. S., Waters, P. M., & Zurakowski, D. (2003). Reliability of three classification systems measuring active motion in brachial plexus birth palsy. Journal of Bone and Joint Surgery, 85-A, 1733–1738. 10.2106/00004623-200309000-00012 [DOI] [PubMed] [Google Scholar]

- Bao, X., Mao, Y., Lin, Q., Qiu, Y., Chen, S., Li, L., . . . Huang, D. (2013). Mechanism of Kinect-based virtual reality training for motor functional recovery of upper limbs after subacute stroke. Neural Regeneration Research, 8, 2904–2913. 10.3969/j.issn.1673-5374.2013.31.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Beebe, J. A., & Lang, C. E. (2008). Absence of a proximal to distal gradient of motor deficits in the upper extremity early after stroke. Clinical Neurophysiology, 119, 2074–2085. 10.1016/j.clinph.2008.04.293 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bonnechère, B., Jansen, B., Salvia, P., Bouzahouene, H., Omelina, L., Moiseev, F., . . . Van Sint Jan, S. (2014). Validity and reliability of the Kinect within functional assessment activities: Comparison with standard stereophotogrammetry. Gait and Posture, 39, 593–598. 10.1016/j.gaitpost.2013.09.018 [DOI] [PubMed] [Google Scholar]

- Brokaw, E. B., Eckel, E., & Brewer, B. R. (2015). Usability evaluation of a kinematics focused Kinect therapy program for individuals with stroke. Technology and Health Care, 23, 143–151. [DOI] [PubMed] [Google Scholar]

- Cason, J. (2009). A pilot telerehabilitation program: Delivering early intervention services to rural families. International Journal of Telerehabilitation, 1, 29–38. 10.5195/IJT.2009.6007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chang, Y. J., Chen, S. F., & Huang, J. D. (2011). A Kinect-based system for physical rehabilitation: A pilot study for young adults with motor disabilities. Research in Developmental Disabilities, 32, 2566–2570. 10.1016/j.ridd.2011.07.002 [DOI] [PubMed] [Google Scholar]

- Charvet, L. E., Yang, J., Shaw, M. T., Sherman, K., Haider, L., Xu, J., & Krupp, L. B. (2017). Cognitive function in multiple sclerosis improves with telerehabilitation: Results from a randomized controlled trial. PLoS One, 12, e0177177. 10.1371/journal.pone.0177177 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chen, J., Jin, W., Zhang, X. X., Xu, W., Liu, X. N., & Ren, C. C. (2015). Telerehabilitation approaches for stroke patients: Systematic review and meta-analysis of randomized controlled trials. Journal of Stroke and Cerebrovascular Diseases, 24, 2660–2668. 10.1016/j.jstrokecerebrovasdis.2015.09.014 [DOI] [PubMed] [Google Scholar]

- Choppin, S., & Wheat, J. (2013). The potential of the Microsoft Kinect in sports analysis and biomechanics. Sports Technology, 6, 78–85. 10.1080/19346182.2013.819008 [DOI] [Google Scholar]

- Cirstea, M. C., & Levin, M. F. (2000). Compensatory strategies for reaching in stroke. Brain, 123, 940–953. 10.1093/brain/123.5.940 [DOI] [PubMed] [Google Scholar]

- Cotton, Z., Russell, T., Johnston, V., & Legge, J. (2017). Training therapists to perform pre-employment functional assessments: A telerehabilitation approach. Work, 57, 475–482. [DOI] [PubMed] [Google Scholar]

- Cottrell, M. A., Galea, O. A., O’Leary, S. P., Hill, A. J., & Russell, T. G. (2017). Real-time telerehabilitation for the treatment of musculoskeletal conditions is effective and comparable to standard practice: A systematic review and meta-analysis. Clinical Rehabilitation, 31, 625–638. 10.1177/0269215516645148 [DOI] [PubMed] [Google Scholar]

- de Luna Cabrai, J. R., Crepaldi, B. E., de Sambuy, M. T. C., da Costa, A. C., Abdouni, Y. A., & Chakkour, I. (2015). Evaluation of upper-limb function in patients with obstetric palsy after modified Sever-L’Episcopo procedure. Revista Brasileira de Ortopedia, 47, 451–454. 10.1016/S2255-4971(15)30127-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fugl-Meyer, A. R., Jääskö, L., Leyman, I., Olsson, S., & Steglind, S. (1975). The post-stroke hemiplegic patient: 1. A method for evaluation of physical performance. Scandinavian Journal of Rehabilitation Medicine, 7, 13–31. [PubMed] [Google Scholar]

- Hallgren, K. A. (2012). Computing inter-rater reliability for observational data: An overview and tutorial. Tutorials in Quantitative Methods for Psychology, 8, 23–34. 10.20982/tqmp.08.1.p023 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Han, J. J., Kurillo, G., Abresch, R. T., de Bie, E., Nicorici, A., & Bajcsy, R. (2015). Reachable workspace in facioscapulohumeral muscular dystrophy (FSHD) by Kinect. Muscle and Nerve, 51, 168–175. 10.1002/mus.24287 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Han, J. J., Kurillo, G., Abresch, R. T., Nicorici, A., & Bajcsy, R. (2013). Validity, reliability, and sensitivity of a 3D vision sensor-based upper extremity reachable workspace evaluation in neuromuscular diseases. PLoS Currents, 5. 10.1371/currents.md.f63ae7dde63caa718fa0770217c5a0e6 [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hawi, N., Liodakis, E., Musolli, D., Suero, E. M., Stuebig, T., Claassen, L., . . . Citak, M. (2014). Range of motion assessment of the shoulder and elbow joints using a motion sensing input device: A pilot study. Technology and Health Care, 22, 289–295. [DOI] [PubMed] [Google Scholar]

- Kim, W. S., Cho, S., Baek, D., Bang, H., & Paik, N. J. (2016). Upper extremity functional evaluation by Fugl-Meyer Assessment scoring using depth-sensing camera in hemiplegic stroke patients. PLoS One, 11, e0158640. 10.1371/journal.pone.0158640

- Kitsunezaki, N., Adachi, E., Masuda, T., & Mizusawa, J.-I. (2013). KINECT applications for the physical rehabilitation. In MeMeA: IEEE International Symposium on Medical Measurements and Applications (pp. 294–299). Piscataway, NJ: IEEE. 10.1109/MeMeA.2013.6549755

- Kurillo, G., Chen, A., Bajcsy, R., & Han, J. J. (2013). Evaluation of upper extremity reachable workspace using Kinect camera. Technology and Health Care, 21, 641–656.

- Lange, B., Chang, C. Y., Suma, E., Newman, B., Rizzo, A. S., & Bolas, M. (2011). Development and evaluation of low cost game-based balance rehabilitation tool using the Microsoft Kinect sensor. In 2011 Annual International Conference of the IEEE Engineering in Medicine and Biology Society (pp. 1831–1834). Piscataway, NJ: Engineering in Medicine and Biology Society. 10.1109/IEMBS.2011.6090521

- Lee, G. (2013). Effects of training using video games on the muscle strength, muscle tone, and activities of daily living of chronic stroke patients. Journal of Physical Therapy Science, 25, 595–597. 10.1589/jpts.25.595

- Levac, D., Espy, D., Fox, E., Pradhan, S., & Deutsch, J. E. (2015). “Kinect-ing” with clinicians: A knowledge translation resource to support decision making about video game use in rehabilitation. Physical Therapy, 95, 426–440. 10.2522/ptj.20130618

- Linder, S. M., Rosenfeldt, A. B., Bay, R. C., Sahu, K., Wolf, S. L., & Alberts, J. L. (2015). Improving quality of life and depression after stroke through telerehabilitation. American Journal of Occupational Therapy, 69, 6902290020. 10.5014/ajot.2015.014498

- Lowes, L. P., Alfano, L. N., Yetter, B. A., Worthen-Chaudhari, L., Hinchman, W., Savage, J., . . . Mendell, J. R. (2013). Proof of concept of the ability of the Kinect to quantify upper extremity function in dystrophinopathy. PLoS Currents, 5. 10.1371/currents.md.9ab5d872bbb944c6035c9f9bfd314ee2

- Mehrholz, J., Wagner, K., Rutte, K., Meissner, D., & Pohl, M. (2007). Predictive validity and responsiveness of the functional ambulation category in hemiparetic patients after stroke. Archives of Physical Medicine and Rehabilitation, 88, 1314–1319. 10.1016/j.apmr.2007.06.764

- Olesh, E. V., Yakovenko, S., & Gritsenko, V. (2014). Automated assessment of upper extremity movement impairment due to stroke. PLoS One, 9, e104487. 10.1371/journal.pone.0104487

- Otten, P., Kim, J., & Son, S. H. (2015). A framework to automate assessment of upper-limb motor function impairment: A feasibility study. Sensors, 15, 20097–20114. 10.3390/s150820097

- Pastora-Bernal, J. M., Martin-Valero, R., & Baron-Lopez, F. J. (2017). Cost analysis of telerehabilitation after arthroscopic subacromial decompression. Journal of Telemedicine and Telecare, 24, 553–559. 10.1177/1357633X17723367

- Richardson, B. R., Truter, P., Blumke, R., & Russell, T. G. (2017). Physiotherapy assessment and diagnosis of musculoskeletal disorders of the knee via telerehabilitation. Journal of Telemedicine and Telecare, 23, 88–95. 10.1177/1357633X15627237

- Seidman, Z., McNamara, R., Wootton, S., Leung, R., Spencer, L., Dale, M., . . . McKeough, Z. (2017). People attending pulmonary rehabilitation demonstrate a substantial engagement with technology and willingness to use telerehabilitation: A survey. Journal of Physiotherapy, 63, 175–181. 10.1016/j.jphys.2017.05.010

- Seo, N. J., Arun Kumar, J., Hur, P., Crocher, V., Motawar, B., & Lakshminarayanan, K. (2016). Usability evaluation of low-cost virtual reality hand and arm rehabilitation games. Journal of Rehabilitation Research and Development, 53, 321–334. 10.1682/JRRD.2015.03.0045

- Seo, N. J., Fathi, M. F., Hur, P., & Crocher, V. (2016). Modifying Kinect placement to improve upper limb joint angle measurement accuracy. Journal of Hand Therapy, 29, 465–473. 10.1016/j.jht.2016.06.010

- Soechting, J. F., Buneo, C. A., Herrmann, U., & Flanders, M. (1995). Moving effortlessly in three dimensions: Does Donders’ law apply to arm movement? Journal of Neuroscience, 15, 6271–6280. 10.1523/JNEUROSCI.15-09-06271.1995

- Stineman, M. G., Ross, R. N., Fiedler, R., Granger, C. V., & Maislin, G. (2003). Functional independence staging: Conceptual foundation, face validity, and empirical derivation. Archives of Physical Medicine and Rehabilitation, 84, 29–37. 10.1053/apmr.2003.50061

- Theodoros, D. G., Hill, A. J., & Russell, T. G. (2016). Clinical and quality of life outcomes of speech treatment for Parkinson’s disease delivered to the home via telerehabilitation: A noninferiority randomized controlled trial. American Journal of Speech-Language Pathology, 25, 214–232. 10.1044/2015_AJSLP-15-0005

- Truter, P., Russell, T., & Fary, R. (2014). The validity of physical therapy assessment of low back pain via telerehabilitation in a clinical setting. Telemedicine Journal and e-Health, 20, 161–167. 10.1089/tmj.2013.0088

- Viera, A. J., & Garrett, J. M. (2005). Understanding interobserver agreement: The kappa statistic. Family Medicine, 37, 360–363.

- Ward, E. C., Burns, C. L., Theodoros, D. G., & Russell, T. G. (2013). Evaluation of a clinical service model for dysphagia assessment via telerehabilitation. International Journal of Telemedicine and Applications, 2013, 918526. 10.1155/2013/918526

- Woodbury, M. L., Velozo, C. A., Richards, L. G., & Duncan, P. W. (2013). Rasch analysis staging methodology to classify upper extremity movement impairment after stroke. Archives of Physical Medicine and Rehabilitation, 94, 1527–1533. 10.1016/j.apmr.2013.03.007

- Wu, G., van der Helm, F. C., Veeger, H. E., Makhsous, M., Van Roy, P., Anglin, C., . . . Buchholz, B.; International Society of Biomechanics. (2005). ISB recommendation on definitions of joint coordinate systems of various joints for the reporting of human joint motion—Part II: Shoulder, elbow, wrist and hand. Journal of Biomechanics, 38, 981–992. 10.1016/j.jbiomech.2004.05.042

- Zhang, Z. (2012). Microsoft Kinect sensor and its effect. IEEE MultiMedia, 19, 4–10. 10.1109/MMUL.2012.24