Abstract

The rising penetration of smartphones now gives researchers the chance to collect data from smartphone users through passive mobile data collection via apps. Examples of passively collected data include geolocation, physical movements, online behavior and browser history, and app usage. However, to passively collect data from smartphones, participants need to agree to download a research app to their smartphone. This leads to concerns about nonconsent and nonparticipation. In the current study, we assess the circumstances under which smartphone users are willing to participate in passive mobile data collection. We surveyed 1,947 members of a German nonprobability online panel who own a smartphone using vignettes that described hypothetical studies where data are automatically collected by a research app on a participant’s smartphone. The vignettes varied the levels of several dimensions of the hypothetical study, and respondents were asked to rate their willingness to participate in such a study. Willingness to participate in passive mobile data collection is strongly influenced by the incentive promised for study participation but also by other study characteristics (sponsor, duration of data collection period, option to switch off the app) as well as respondent characteristics (privacy and security concerns, smartphone experience).

Introduction

With the rapid spread of smartphones, alternatives for survey data collection have emerged. In addition to including cell phones in CATI sampling frames (Lavrakas et al. 2017), asking questions on cell phones through texting (Conrad et al. 2017), and mobile surveys on browsers or apps (Couper, Antoun, and Mavletova 2017), researchers are now able to use smartphones for passive mobile data collection (Link et al. 2014). Data that can be collected on mobile devices include, for example, geolocation and physical movements, online search behavior and browser history, app usage, and call and text message logs. These data enable researchers to study, among other things, users’ mobility patterns, physical activity and health, consumer behavior, and social interactions.

Compared to surveys, which rely on self-reporting, passive mobile data collection has the potential to provide richer data (collected at much higher frequency), to decrease respondent burden (fewer survey questions), and to reduce measurement error (reduction in recall errors and social desirability). This approach also allows for combining survey data collection with passive collection of data about participants. Recent examples are studies on the role of geographical context and social networks for prospective employment of men recently released from prison (Sugie 2018), everyday activities and stress among older African Americans (Fritz et al. 2017), and the influence of activities and experiences in different locations on real-time fluctuations in well-being (York Cornwell and Cagney 2017).

However, many early studies that passively collected such mobile data relied on small groups of volunteers from special populations. So far, we lack general knowledge about how this new form of data collection works in larger populations and what it implies for data quality. Willingness to participate in passive mobile data collection might correlate with concerns about data privacy and security and with smartphone skills. Nonparticipation could lead to bias if participants who allow passive mobile data collection differ from nonparticipants on the variables of interest in a study. Thus, before researchers can use the new technology to answer substantive research questions, we need to better understand the error properties of passively collected mobile data.

The current study aims to estimate the willingness of smartphone users to participate in research that involves passive mobile data collection via a research app. We are particularly interested in identifying study and personal characteristics that drive willingness to participate. We review the current methodological literature on passive mobile data collection and identify factors in the participation decision that passive mobile data collection may share with traditional surveys, as well as factors that may be unique to the new technology. We then present a study involving vignettes of hypothetical research studies featuring passive mobile data collection via smartphones. Using a factorial survey design with vignettes, we simultaneously test the effects of several study characteristics on willingness to participate.

PREVIOUS RESEARCH

A growing body of literature assesses the use of smartphones for data collection. Recent reviews (Keusch and Yan 2016; Couper et al. 2017) show that most studies have focused on the use of mobile devices when filling out browser-based web surveys on a smartphone. Fewer methodological studies focus on the use of app-based mobile surveys and the combination of survey and passive data collection.

Emerging research on hypothetical and actual willingness to participate in passive mobile data collection studies shows that consent rates vary considerably. Couper, Antoun, and Mavletova (2017) reviewed seven studies from Europe and the United States that asked respondents to comply with special requests on their mobile devices, such as recording their GPS location or taking photos at the end of the survey, and found consent rates between 5 and 67 percent. Revilla and her colleagues (2016) showed that nonprobability panel members’ hypothetical willingness to perform additional tasks using smartphones varies across seven Spanish- and Portuguese-speaking countries and by type of sensor.

Research on hypothetical and actual willingness to perform passive data collection tasks includes studies in large-scale probability panels such as the Dutch LISS Panel (Sonck and Fernee 2013; Elevelt, Lugtig, and Toepoel 2017; Scherpenzeel 2017) and the Understanding Society Innovation Panel (IP) in the UK (Jäckle et al. 2017; Wenz, Jäckle, and Couper 2017). In the LISS Panel, respondents were invited to complete app-based time-use diaries, share GPS location, and provide access to collect the number of calls, text messages, and Internet messages. People who did not own a smartphone were provided with a smartphone. Thirty-seven percent of all invited panel members (smartphone owners and non-owners) indicated their willingness to participate, and 68 percent of the willing panelists actually participated (Scherpenzeel 2017). Based on these data, Elevelt and her colleagues (2017) reported that using an opt-out consent to collect the GPS location resulted in 74 percent of respondents sharing their GPS location. In another LISS Panel study on mobility, respondents were asked to share their GPS location on their smartphone or a loaner phone; 37 percent of the invited panel members were willing to participate, and 81 percent of them eventually participated (Scherpenzeel 2017).

Using data from the Understanding Society IP, Wenz, Jäckle, and Couper (2017) reported that willingness differs by task: among panelists who used smartphones, 65 percent were willing to take photos or scan bar codes, 61 percent to use the built-in accelerometer to record physical movements, 39 percent to share GPS location, and 28 percent to download a tracking app that collects anonymous data about phone usage. Jäckle and her colleagues (2017) asked members of the Understanding Society IP (irrespective of device ownership and use) to download an app that could be used for scanning receipts from purchases for a month. Seventeen percent of all invited panel members participated by downloading the app, and 13 percent actually used the app.

A UNIFIED FRAMEWORK

Previous research suggests that some of the factors that influence willingness or actual participation in passive mobile data collection are directly linked to participants’ smartphone-related usage and attitudes. For example, intensive users of mobile technology are more likely to express willingness (Elevelt, Lugtig, and Toepoel 2017; Jäckle et al. 2017; Wenz, Jäckle, and Couper 2017) and to actually participate (Elevelt, Lugtig, and Toepoel 2017; Jäckle et al. 2017), and respondents’ security concerns about transmitting the data via mobile apps lower willingness (Wenz, Jäckle, and Couper 2017) and actual participation (Jäckle et al. 2017). Other factors that influence willingness to participate in passive mobile data collection, however, are more general and not specifically tied to participants’ smartphone usage and attitudes. For example, general cooperativeness with a survey (i.e., low item nonresponse, providing consent to linkage to administrative records) is predictive of participation in a subsequent smartphone app study (Jäckle et al. 2017).

In this paper, we propose a framework for willingness to participate in smartphone passive measurement. This framework unifies established mechanisms of survey participation, relatively new mechanisms (similar to participation in web and mobile web surveys), and novel mechanisms specific to unique features of passive mobile data collection. Specifically, we suggest that participation in passive data collection will be influenced by three broad classes of variables. First, considerations to participate in passive data collection include factors that influence survey participation more generally. Perceived benefits and interest in the survey topic will increase participation, while perceived costs, such as the length of the survey, will decrease willingness. Second, passive data collection increases the salience of privacy and risk of disclosure. Therefore, respondent concerns about privacy and trust in the data collection organization will have particular salience. Third, willingness to participate in passive data collection via smartphone will be impacted by factors specifically related to the technology of data collection. This includes respondent comfort and experience with smartphone technology and feelings of control over the data collection process.

RESEARCH QUESTIONS AND HYPOTHESES

Based on this framework, we aim to answer three research questions: 1) To what extent are smartphone users willing to download to their phone a research app that passively collects data? 2) What are the reasons for and against participating in passive mobile data collection? 3) Which mechanisms (i.e., study and individual characteristics) drive willingness to participate in passive mobile data collection? For the third question, we formulate three sets of hypotheses. The first set states that factors influencing the general willingness to participate in surveys, such as survey sponsor, topic, survey burden, incentives, and general attitudes toward surveys and research, will also affect the willingness to participate in passive mobile data collection.

Previous studies have shown that familiarity with the survey sponsor, trust in the sponsor, reputation, and authority of the sponsor influence the participation decision (e.g., Keusch 2015). In line with this research, we therefore hypothesize:

H1.1: Smartphone users are more willing to participate in passive mobile data collection if the study is sponsored by a statistical agency or a university than by a market research company.

Studies of web surveys have shown that the topic of a survey influences participation and break-off rates (Galesic 2006; Marcus et al. 2007; Keusch 2013). We thus hypothesize:

H1.2: The topic of the study for which the data are collected will influence the willingness to participate in passive mobile data collection.

Depending on the specifics of passive mobile data collection, respondents may differ in perceived burden. Similar to interview length and frequency of being interviewed as indicators of survey burden (Bradburn 1978; Galesic and Bosnjak 2009; Yan et al. 2011), we expect the duration of the passive mobile data collection as well as asking additional survey questions to influence the willingness to participate.

H1.3: Smartphone users are less willing to participate in passive mobile data collection with a longer duration of data collection.

H1.4: Smartphone users are less willing to participate in passive mobile data collection if additional questions are administered through the app.

Independent of mode, (monetary) incentives increase willingness to participate in surveys (see Singer and Ye 2013 for a review). However, Jäckle and her colleagues (2017) find no significant effect of differential promised incentives of two versus six British pounds for downloading the app. On the other hand, additional incentives paid for participating in all waves of an eight-wave online panel study were shown to increase response rates in an online panel (Schaurer 2017).

H1.5: Promised incentives for downloading an app and additional incentives for participating in the study until the end of the study period increase the willingness to participate in passive mobile data collection over no incentive.

We also expect general attitudes toward surveys and research to influence the willingness to participate in passive mobile data collection, as positive attitudes toward surveys in general lead to higher web survey participation (Bosnjak, Tuten, and Wittmann 2005; Brüggen et al. 2011; Haunberger 2011; Keusch, Batinic, and Mayerhofer 2014).

H1.6: Smartphone users with more positive attitudes toward research and surveys are more willing to participate in passive mobile data collection.

The second set of hypotheses focuses on smartphone technology-specific factors. First, smartphones have the ability to collect richer and more detailed data than traditional surveys. For online surveys that collect process data, privacy concerns have been shown to influence the willingness to participate (Couper et al. 2008, 2010; Couper and Singer 2013). Revilla, Couper, and Ochoa (2018) and Wenz, Jäckle, and Couper (2017) found that security concerns predict lower willingness to allow smartphone data collection, which is especially relevant for activities that potentially threaten respondents’ privacy, such as sharing GPS location. Extending this logic to passive mobile data collection, we hypothesize that willingness to participate in passive mobile data collection is correlated with concerns about privacy and data security and with trust that the data collecting organization will protect the data.

H2.1: Smartphone users who are concerned about privacy and data security are less willing to participate in passive mobile data collection than users with lower concerns.

H2.2: Smartphone users who trust companies and organizations not to share users’ personal data with third parties are more willing to participate in smartphone data collection.

Second, Couper, Antoun, and Mavletova (2017) note that familiarity or comfort with the mobile device and the ways respondents use their devices may affect the willingness to participate in mobile web surveys. Respondents who use their phones less frequently or only for short browsing sessions respond at lower rates to mobile web surveys. The level of comfort and familiarity with a smartphone or apps, likewise, can affect the willingness to participate in passive mobile measurement. Pinter (2015) and Wenz, Jäckle, and Couper (2017) found that respondents who use smartphones more intensively have a higher willingness to complete smartphone data collection tasks. Elevelt, Lugtig, and Toepoel (2017) reported that participants who use their phone more often are more likely to share their GPS data. More generally, personal use of technology (i.e., frequency of mobile device use, mobile device skills, and use of features relevant to the study) is a strong predictor of participation in a mobile app study (Jäckle et al. 2017).

H2.3: Smartphone users who are more skilled at using their mobile devices are more willing to participate in passive mobile data collection.

H2.4: Smartphone users who are more familiar with tasks on mobile devices are more willing to participate in passive data collection.

The third hypothesis relates to mechanisms specific to performing additional tasks using mobile devices. Respondents may feel that they do not have control over the data they are asked to provide and thus be less willing to participate in a study involving passive mobile data collection. Revilla, Couper, and Ochoa (2018) showed that respondents are more willing to perform additional tasks in which they feel they are able to control the content (e.g., taking photos or scanning bar codes with their mobile devices) rather than perform passive data collection tasks in which they cannot control the content transmitted to researchers (e.g., sharing GPS location, URLs of websites visited, and Facebook profile content). Similarly, Wenz, Jäckle, and Couper (2017) reported higher willingness for tasks that actively involve respondents in smartphone data collection rather than passively collected data.

H3: Smartphone users are more willing to participate in passive mobile data collection if they have direct control over the data collection process.

Methods

Data for this study come from a two-wave web survey among German smartphone users 18 years and older. Respondents were recruited from a German nonprobability online panel.1 Although the exact levels of willingness observed in this sample may not generalize to the full population, the focus of our study is on randomization rather than representation, to use Kish’s (1987) terminology. Our main interest is in the relative effects of the experimental manipulations rather than the overall levels of willingness. In December 2016, a total of 2,623 respondents completed the Wave 1 online survey.2 Respondents could complete the questionnaire on a PC, tablet, or smartphone; 15 percent of the respondents completed the Wave 1 questionnaire on a smartphone. The questionnaire included items on smartphone use and skills, privacy and security concerns, and general attitudes toward survey research and research institutions (see Supplementary Online Material for full wording of all relevant questions). The median time for completing the questionnaire was 5 minutes and 56 seconds.

In January 2017, all respondents from Wave 1 were invited to participate in a second web survey. Of those, 1,947 or 74.8 percent (AAPOR RR1) completed the Wave 2 questionnaire.3 Again, respondents were free to complete the questionnaire on any device, and about 14 percent of the respondents completed the Wave 2 questionnaire on a smartphone. The Wave 2 questionnaire included a factorial survey design to investigate the effects of different study characteristics, as described in vignettes, on expressed willingness to participate in hypothetical studies involving passive mobile data collection (see Supplementary Online Material). The median response time for the Wave 2 questionnaire was 6 minutes and 7 seconds.

Vignette studies combine the advantages of survey research and multidimensional experimental designs. Participants judge hypothetical situations, objects, or subjects described in vignettes that vary in the level of characteristics (dimensions) of the described situations, objects, or subjects (Auspurg and Hinz 2015). Having each respondent judge a random subset of all possible vignettes allows us to include a large number of dimensions and levels in the design, thereby “enhancing the resemblance between real and experimental worlds” (Rossi and Anderson 1982, 16). Highly contextualized vignettes are thought to have high construct validity (Steiner, Atzmüller, and Su 2016). Factorial surveys are commonly used in the social sciences and are especially popular in sociology to measure social judgments, positive beliefs, and intended actions (Wallander 2009). In survey methodology, factorial surveys with vignettes have been used to measure the influence of topic sensitivity, disclosure risk, and disclosure harm on expressed willingness to participate in surveys (Singer 2003; Couper et al. 2008, 2010).

VIGNETTES

The vignettes in this study describe hypothetical research involving an app that participants would be asked to download to their smartphone to allow for passive mobile data collection by researchers. The vignettes varied six study characteristics (table 1): the sponsor (related to hypothesis H1.1) and the topic of the study (H1.2), the duration of data collection (H1.3), whether the app only collects data passively or administers additional survey questions once a week (H1.4), the incentives provided for participation (H1.5), and whether or not there was an option to switch off the app during the field period (H3).

Table 1.

Vignette dimensions, dimension levels, vignette text, and willingness to participate by vignette levels (n = 1,947)

| Dimension | Number of levels | Dimension level | Vignette text (translated into English) | Percent willing to participate |
|---|---|---|---|---|
| Sponsor | 3 | Statistical agency | …a German statistical agency… | 33.1% |
| | | University | …a German university… | 36.9% |
| | | Market research company | …a German market research company… | 35.6% |
| Topic | 3 | Consumer behavior | …about how consumers search for and buy products and services on their smartphones… | 36.1% |
| | | Mobility | …about how people move around in their everyday life… | 35.3% |
| | | Social interaction | …about how people interact with others through their smartphone… | 34.2% |
| Study duration | 2 | One month | …for one month… | 37.9% |
| | | Six months | …for six months… | 32.5% |
| Additional survey questions | 2 | No | Apart from the automatic data collection, the app will not send you any invitations to questionnaires. | 35.7% |
| | | Yes | In addition to automatically collecting data on the smartphone, the app will send you invitations to fill out short questionnaires with just one or two questions on your smartphone about once a week. | 34.7% |
| Incentives | 4 | None | No monetary incentive is provided for participating in the study. | 19.5% |
| | | 10 Euro for downloading | For downloading the app you will receive 10 Euro. | 38.3% |
| | | 10 Euro at end of study | For downloading the app and leaving it installed on your smartphone until the end of the study, you will receive 10 Euro. | 37.1% |
| | | Both 10 Euro for downloading and 10 Euro at end of study | For downloading the app you will receive 10 Euro. In addition, for leaving the app installed on your smartphone until the end of the study you will receive 10 Euro. | 46.1% |
| Option to switch off app | 2 | Yes | The research app allows you to switch it off at times when you do not want it to collect any data from your smartphone. However, it is important for this study that you use this option only in rare cases and for short periods. | 33.1% |
| | | No | There is no option to switch the research app on and off during the course of the study. | 37.3% |

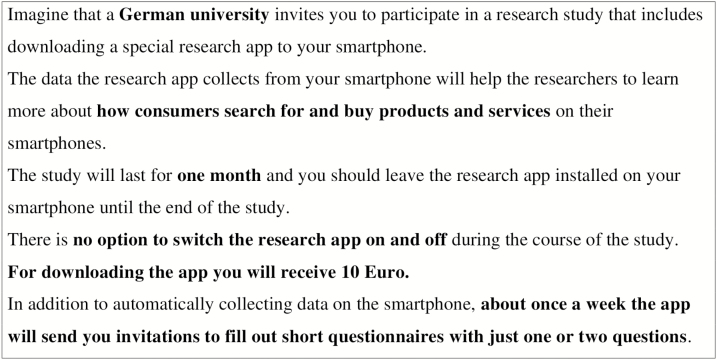

Figure 1 provides a vignette example. On the screen before the first vignette, respondents saw a brief description of the general features of the hypothetical studies that were not varied as part of the factorial survey design. The description included information on the number of vignettes respondents were about to see, general information on the type of data that would be collected through the app, and a data protection and privacy statement for the hypothetical studies (see Appendix A).

Figure 1.

Example of a vignette.

Combining the levels of the six experimental dimensions (3 × 3 × 2 × 2 × 4 × 2) produced a vignette universe of 288 unique study descriptions. Each respondent was assigned eight vignettes at random (without replacement) out of the vignette universe. After each vignette, respondents rated their willingness to participate in the described study (QB1: “How likely is it that you would download the app to participate in this research study?”) on a scale from 0 (“Definitely would not participate”) to 10 (“Definitely would participate”).
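The size of the vignette universe and the per-respondent draw can be sketched as follows. This is a minimal illustration, assuming simple random sampling of eight distinct vignettes for each respondent; the dimension keys and shortened level labels are hypothetical names chosen here, and the study's actual randomization software is not described in the text.

```python
import itertools
import random

# Dimension levels as listed in Table 1 (labels shortened; keys are hypothetical).
DIMENSIONS = {
    "sponsor": ["statistical agency", "university", "market research company"],
    "topic": ["consumer behavior", "mobility", "social interaction"],
    "duration": ["one month", "six months"],
    "extra_questions": ["no", "yes"],
    "incentive": ["none", "10 EUR download", "10 EUR end", "10 EUR download + 10 EUR end"],
    "switch_off": ["yes", "no"],
}


def vignette_universe(dimensions):
    """Return every combination of dimension levels (the full factorial)."""
    names = list(dimensions)
    return [dict(zip(names, combo)) for combo in itertools.product(*dimensions.values())]


def assign_vignettes(universe, n_per_respondent=8, rng=None):
    """Draw n distinct vignettes for one respondent (sampling without replacement)."""
    rng = rng or random.Random()
    return rng.sample(universe, n_per_respondent)


universe = vignette_universe(DIMENSIONS)
print(len(universe))  # 288
deck = assign_vignettes(universe, rng=random.Random(2017))
```

Because `random.sample` draws without replacement, no respondent sees the same study description twice, while different respondents draw independently from the full set of 288.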

Depending on their response to the first vignette, respondents were asked in an open-ended question (QB2) why they would participate (if QB1 was between 6 and 10) or would not participate (if QB1 was between 0 and 5) in this particular study. This question was asked only once, after the first vignette.

As a manipulation check, we asked respondents after they had rated the eight vignettes to indicate whether they had noticed any differences among the vignettes (QB10), which was followed up by an open-ended question on what differences they had noticed (QB11). About 90 percent of the respondents reported that they had noticed differences. We ran our analysis as described below with and without respondents who said that they did not notice any differences, and we also ran the analysis with and without respondents who took five seconds or less to read and respond to a vignette and were thus flagged as speeders. Since the results in both cases were highly comparable, we only present results for the entire sample here.

WILLINGNESS TO PARTICIPATE

The main dependent variable in this study is based on the responses to the 11-point willingness to participate scale (QB1). Following prior practice (Couper et al. 2008, 2010), we dichotomized the responses into “would not participate” in the described study (0 to 5 on the original scale) and “would participate” (6 to 10). We have two reasons for dichotomizing the dependent variable. First, the distribution of responses to the original 11-point scale is bimodal with heaps at the extreme points of the scale (see figure A in Supplementary Online Material).4 Second, although 11-point scales are the standard in vignette studies (Auspurg and Hinz 2015), dichotomizing the variable mirrors the binary nature of the decision to participate in a study, thus allowing us to estimate participation rates for the described studies.
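The cut point described above can be written as a one-line recode. This is a sketch; the function name `dichotomize` is hypothetical, and the range check is an addition for safety rather than a step reported by the authors.

```python
def dichotomize(rating, cutoff=6):
    """Map an 11-point rating (0-10) to 1 = would participate (6-10) or 0 = would not (0-5)."""
    if not 0 <= rating <= 10:
        raise ValueError(f"rating out of range: {rating}")
    return int(rating >= cutoff)


ratings = [0, 5, 6, 10, 3]
print([dichotomize(r) for r in ratings])  # [0, 0, 1, 1, 0]
```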

MEASURES

Six independent variables for our analysis come from the experimental variation of the study characteristics in the vignettes in Wave 2. In addition, we analyzed the influence of survey attitudes (H1.6) using one index measure, privacy and security concerns (H2.1) using four index measures, trust that organizations would not share respondents’ personal data (H2.2) using two index measures, self-reported smartphone skills (H2.3) using one measure, and familiarity with smartphone tasks (H2.4) using four measures on the willingness to participate in passive mobile data collection. These measures come from the Wave 1 questionnaire and were modeled after the questions used by Couper and his colleagues (2008, 2010) and Wenz, Jäckle, and Couper (2017). Table 2 shows the descriptive statistics of all measures used in the analysis and the questionnaire items on which they are based. To facilitate comparison of the different measures used in our analysis, we converted all scales and indexes to a common scale ranging from 0 to 10. A detailed description of how the measures were formed can be found in the Supplementary Online Material.
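One common way to put scales with different ranges on a common 0 to 10 metric is a linear (min-max) transformation. The sketch below assumes that approach, and it also assumes that an index is the mean of its items on a hypothetical 1 to 5 response scale; the exact construction used in the study is documented only in the Supplementary Online Material.

```python
def rescale_to_0_10(value, scale_min, scale_max):
    """Linearly map a score from [scale_min, scale_max] onto [0, 10]."""
    if scale_max <= scale_min:
        raise ValueError("scale_max must exceed scale_min")
    return 10 * (value - scale_min) / (scale_max - scale_min)


# Example: a respondent's mean across five items on a hypothetical 1-5 scale.
items = [4, 5, 3, 4, 4]
index = sum(items) / len(items)      # 4.0
print(rescale_to_0_10(index, 1, 5))  # 7.5
```

A linear map preserves the ordering and relative spacing of scores, so it changes only the units in which a regression coefficient is expressed, not the model fit.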

Table 2.

Descriptive statistics of measures used in the analysis (n = 1,947)

| Construct | Original items | Range | Median | Mean | Standard deviation | Missing values |
|---|---|---|---|---|---|---|
| Attitudes toward surveys and research (H1.6) | QA9a-e | 0–10 | 7.4 | 7.3 | 1.6 | 20 |
| Security concern research app (H2.1) | QA14c | 0–10 | 6.7 | 7.1 | 2.9 | 16 |
| General privacy concern (H2.1) | QA12 | 0–10 | 6.7 | 5.6 | 2.4 | 2 |
| No. perceived privacy violations offline (H2.1) | QA13a-d | 0–4 | 1 | 1.2 | 1.3 | 0 |
| No. perceived privacy violations online (H2.1) | QA13e-g | 0–3 | 3 | 2.2 | 1.2 | 0 |
| Trust data not shared by research organizations (H2.2) | QA10a-c | 0–10 | 6.7 | 6.8 | 2.1 | 22 |
| Trust data not shared by other organizations (H2.2) | QA10d,g,e,h | 0–10 | 3.3 | 3.9 | 2.3 | 16 |
| Smartphone skills (H2.3) | QA8 | 0–10 | 7.5 | 7.4 | 2.4 | 2 |
| No. of devices (H2.4) | QA4a-e | 1–5 | 3 | 3.1 | 0.9 | 0 |
| Frequency of smartphone use (H2.4) | QA6 | 0–10 | 10 | 9.0 | 2.1 | 4 |
| No. of smartphone activities (H2.4) | QA7a-l | 0–12 | 9 | 8.4 | 3.0 | 0 |

| Construct | Original items | Distribution | Missing values |
|---|---|---|---|
| Experience with research apps (H2.4) | QB13,14 | Invited, not downloaded: 22.8%; never invited: 62.8%; downloaded: 14.3% | 7 |
| Age | QB16 | Less than 30 years: 24.3%; 30–49 years: 50.3%; 50 years and older: 25.4% | 4 |
| Gender | QB15 | Female: 47.0%; male: 53.0% | 6 |
| Education | QB18 | With high school degree: 50.5%; without high school degree: 49.5% | 17 |

ANALYSIS PLAN

All analyses for this study were done in R version 3.5.2 (R Core Team 2018). To answer our first research question about the willingness of smartphone users to download a research app to their phone for passive mobile data collection, we calculated the proportion of respondents indicating that they are willing to participate for the eight vignette questions individually and across all vignettes.
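The descriptive quantities for the first research question can be sketched on dichotomized ratings as below. The authors worked in R; this Python version is only an illustration, and it assumes every respondent rated all eight vignettes with no missing ratings. The toy data are invented for the example and are not the study's data.

```python
def willingness_summary(responses):
    """Summarize dichotomized willingness (1 = willing, 0 = not willing).

    responses: one list per respondent with one 0/1 value per vignette
    position (assumes equal-length lists and no missing ratings).
    """
    n_resp = len(responses)
    n_pos = len(responses[0])
    # Proportion willing at each vignette position (1st, 2nd, ..., 8th vignette).
    by_position = [sum(r[k] for r in responses) / n_resp for k in range(n_pos)]
    # Proportion willing pooled across all vignette ratings.
    overall = sum(sum(r) for r in responses) / (n_resp * n_pos)
    # Respondents willing for every vignette, and for none of them.
    share_all = sum(all(r) for r in responses) / n_resp
    share_none = sum(not any(r) for r in responses) / n_resp
    return by_position, overall, share_all, share_none


# Toy data for four hypothetical respondents (not the study's data).
toy = [
    [1, 1, 1, 1, 1, 1, 1, 1],
    [0, 0, 0, 0, 0, 0, 0, 0],
    [1, 0, 1, 0, 0, 0, 0, 0],
    [1, 1, 0, 0, 0, 0, 0, 0],
]
by_pos, overall, share_all, share_none = willingness_summary(toy)
print(by_pos[0], overall, share_all, share_none)  # 0.75 0.375 0.25 0.25
```

With a balanced design like this one, the pooled proportion equals the mean of the eight position-specific proportions.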

To answer the second research question and identify reasons for and against participating in the described passive mobile data collection studies, we analyzed the responses to the open-ended question that was asked after the first vignette. Two independent coders grouped the responses into nine categories for reasons to participate and nine categories for reasons not to participate in the passive mobile data collection. All codes were compared (Cohen’s Kappa for reasons to participate: 0.64; reasons not to participate: 0.72), and any disagreement was resolved by the first author. We report the frequency of individual codes.
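Cohen's kappa compares the observed agreement between two coders with the agreement expected by chance from each coder's marginal category frequencies. The sketch below simplifies by assuming each response receives exactly one code. Because respondents could mention multiple reasons, the study's kappas may have been computed differently (e.g., per category), and the example codes are hypothetical.

```python
from collections import Counter


def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders assigning one category per response."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two equally long, non-empty code lists")
    n = len(coder_a)
    # Observed proportion of responses on which both coders agree.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement from the product of each coder's marginal frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / n**2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


a = ["privacy", "privacy", "incentive", "control", "privacy", "incentive"]
b = ["privacy", "incentive", "incentive", "control", "privacy", "privacy"]
print(round(cohens_kappa(a, b), 3))  # 0.455
```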

To answer the third research question and test the hypotheses specified above, we analyzed the influence of the experimentally varied study characteristics in the vignettes and of respondent characteristics regarding privacy and security concerns and familiarity and skills with smartphones on willingness to participate. We controlled for respondents’ age (measured in three groups: less than 30 years, 30–49 years, 50 years and older), gender, and education (without a high school degree vs. with a high school degree). To account for the hierarchical structure of the data, in which each respondent rated their willingness to participate for eight vignettes, we specified a multilevel logistic regression model, which we fitted with the glmer function from the R package lme4 (Bates et al. 2015). Specifically, we regressed the log odds that respondent j was willing to participate in the study described in vignette i on the p vignette characteristics and the q respondent characteristics. In equation (1), the betas (β1 to βp) denote the regression coefficients for variables Xijp on the vignette level (L1), and the gammas (γ1 to γq) denote the regression coefficients for variables Zjq on the respondent level (L2). uj is the random residual error term on the respondent level.

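$$\ln\left(\frac{\Pr(y_{ij}=1)}{1-\Pr(y_{ij}=1)}\right) = \beta_0 + \beta_1 X_{ij1} + \dots + \beta_p X_{ijp} + \gamma_1 Z_{j1} + \dots + \gamma_q Z_{jq} + u_j \qquad (1)$$

where y_ij = 1 if respondent j is willing to participate in the study described in vignette i, and 0 otherwise.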

Note that variables Xijp on the vignette level (L1) include the six experimental dimensions of the vignettes plus an indicator for the order of the vignettes (reference category: Vignette 1). Variables Zjq on the respondent level (L2) include the above described measures of privacy and security concern, measures of familiarity and skills with smartphones, and the controls for respondent sociodemographic characteristics.
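To make equation (1) concrete, the sketch below evaluates the model's predicted probability for one respondent and one vignette by applying the inverse logit to the linear predictor. It does not fit the model (the authors used glmer in R), and all coefficient values are hypothetical numbers chosen for illustration, not estimates from this study.

```python
import math


def inv_logit(eta):
    """Convert log odds into a probability."""
    return 1.0 / (1.0 + math.exp(-eta))


def predicted_willingness(x_vignette, z_respondent, beta0, beta, gamma, u_j=0.0):
    """Probability that respondent j is willing for vignette i under equation (1).

    x_vignette: vignette-level predictors X_ij1..X_ijp (e.g., dummy-coded dimensions)
    z_respondent: respondent-level predictors Z_j1..Z_jq
    u_j: respondent random intercept (0 corresponds to a 'typical' respondent)
    """
    if len(x_vignette) != len(beta) or len(z_respondent) != len(gamma):
        raise ValueError("predictor and coefficient lengths differ")
    eta = beta0
    eta += sum(b * x for b, x in zip(beta, x_vignette))
    eta += sum(g * z for g, z in zip(gamma, z_respondent))
    return inv_logit(eta + u_j)


# Hypothetical coefficients: one vignette dummy and one 0-10 respondent score.
p_typical = predicted_willingness([1], [5], beta0=-1.0, beta=[0.8], gamma=[-0.1])
p_high_u = predicted_willingness([1], [5], beta0=-1.0, beta=[0.8], gamma=[-0.1], u_j=1.0)
print(round(p_typical, 3), round(p_high_u, 3))  # 0.332 0.574
```

The random intercept u_j shifts all eight of a respondent's predictions in the same direction on the log-odds scale, which is how the model captures the fact that ratings from the same person are correlated.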

To facilitate interpretation of the regression coefficients in our model, we calculate average marginal effects (AMEs) and their standard errors using the margins function from the R package margins (Leeper 2018). AMEs are obtained by computing the marginal effect at every observed value of X and then averaging across the resulting effect estimates.
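For a 0/1 vignette dimension, the AME is the average, over all observed rows, of the change in predicted probability when that dummy is switched from 0 to 1 while every other predictor stays at its observed value. The sketch below illustrates that idea for point estimates only (no standard errors). It sets the random intercepts to 0, which is an assumption: how the margins package treats the random effects in the authors' glmer model is not stated here. Variable names and coefficients are hypothetical.

```python
import math


def inv_logit(eta):
    """Convert log odds into a probability."""
    return 1.0 / (1.0 + math.exp(-eta))


def ame_binary(rows, coef, intercept, var):
    """Average marginal effect of a 0/1 predictor `var`.

    For each observed row, predict with `var` set to 1 and to 0 (all other
    predictors at their observed values), take the difference, and average.
    Random intercepts are set to 0 here, which is an assumption.
    """
    diffs = []
    for row in rows:
        eta_rest = intercept + sum(coef[k] * v for k, v in row.items() if k != var)
        diffs.append(inv_logit(eta_rest + coef[var]) - inv_logit(eta_rest))
    return sum(diffs) / len(diffs)


coef = {"incentive_both": 1.2, "privacy_concern": -0.15}  # hypothetical values
rows = [
    {"incentive_both": 0, "privacy_concern": 2},
    {"incentive_both": 1, "privacy_concern": 8},
]
ame = ame_binary(rows, coef, intercept=-0.5, var="incentive_both")
```

Averaging over observed rows (rather than evaluating at the sample means) keeps the effect on the probability scale anchored to the actual mix of respondents and vignettes.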

Results

WILLINGNESS TO PARTICIPATE

Overall, an average of 35.2 percent of respondents indicated that they were willing to participate in the described studies across all eight vignettes (percentages of willingness by experimental condition are shown in the last column of table 1). Eleven percent of respondents consistently reported that they were willing to participate in all eight of the presented studies, and 39 percent reported that they would not participate in any of the eight studies. Twenty-seven percent of respondents chose the same response option on the 11-point willingness scale for all eight vignettes. Pairwise comparisons between all vignettes showed that willingness to participate was higher for the first vignette presented than for each of the seven other vignettes (p < 0.05 for all chi-squared tests between Vignette 1 and each other vignette after adjusting for multiple comparisons using Bonferroni correction).
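The position comparison can be sketched as a Pearson chi-squared test on a 2 × 2 table (willing vs. not willing, Vignette 1 vs. another position), followed by a Bonferroni adjustment that multiplies each p-value by the number of tests. This sketch makes several assumptions not stated in the text: no continuity correction is applied (R's chisq.test applies one to 2 × 2 tables by default), the two positions are treated as independent samples, as a standard chi-squared test does, and the counts in the example are hypothetical.

```python
import math


def chi2_2x2(yes_a, n_a, yes_b, n_b):
    """Pearson chi-squared test (no continuity correction) for two proportions.

    Returns (statistic, p_value). With 1 degree of freedom, the upper-tail
    probability equals erfc(sqrt(statistic / 2)).
    """
    table = [[yes_a, n_a - yes_a], [yes_b, n_b - yes_b]]
    total = n_a + n_b
    row = [n_a, n_b]
    col = [yes_a + yes_b, total - yes_a - yes_b]
    stat = sum(
        (table[i][j] - row[i] * col[j] / total) ** 2 / (row[i] * col[j] / total)
        for i in range(2)
        for j in range(2)
    )
    return stat, math.erfc(math.sqrt(stat / 2))


def bonferroni(p_values):
    """Multiply each p-value by the number of tests, capping at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# Hypothetical counts of "willing" ratings out of n per position (not the study's data).
n = 1947
first = 780
others = [690, 680, 675, 670, 684, 672, 668]
raw_p = [chi2_2x2(first, n, k, n)[1] for k in others]
adjusted = bonferroni(raw_p)
```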

REASONS FOR AND AGAINST PARTICIPATION IN PASSIVE MOBILE DATA COLLECTION

Table 3 summarizes the responses to the open-ended questions on reasons for and against participating in passive mobile data collection, which were asked after the first vignette. When asked why they were not willing to participate, the largest share of respondents mentioned privacy and data security issues (44 percent). Incentives were mentioned by 17 percent of respondents. About 12 percent of respondents commented on a lack of information about or control over what data would be collected and with whom the data would be shared in the described study.

Table 3.

Reasons for and against participation in passive mobile data collection (n = 1,947)

| Reasons for not participating | Share | Reasons for participating | Share |
|---|---|---|---|
| Privacy, data security concerns | 44% | Interest, curiosity | 39% |
| No incentive; incentive too low | 17% | Incentive | 26% |
| Not enough information/control of what happens with data | 12% | Help research, researcher | 18% |
| Do not download apps | 7% | Trust, seems legitimate, safe | 11% |
| Not interested, no benefit | 6% | Will help create better products & services | 7% |
| Not enough time, study too long | 5% | No additional burden | 6% |
| Do not use smartphone enough; not right person for this study | 5% | Like surveys & research | 4% |
| Not enough storage | 1% | Fun | 3% |
| Other reasons | 6% | Other reasons | 4% |
| NA | 3% | NA | 2% |

Note.—Percentages do not add up to 100 because respondents could mention multiple reasons.
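Because one open-ended answer can be coded into several categories, each percentage in table 3 uses the number of respondents, not the number of mentions, as its denominator. A small Python illustration with made-up coded answers (the category codes are hypothetical shorthand for the table rows):

```python
from collections import Counter

# Hypothetical coded answers: each respondent can receive several codes.
coded_answers = [
    {"privacy"},
    {"privacy", "incentive"},
    {"incentive", "no_apps"},
]

mentions = Counter(code for codes in coded_answers for code in codes)
n_respondents = len(coded_answers)
# Share of respondents mentioning each reason (denominator = respondents, not mentions)
shares = {code: count / n_respondents for code, count in mentions.items()}
print(shares, sum(shares.values()))  # the shares sum to more than 1
```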

A total of 39 percent of the respondents named interest in and curiosity about the described study as an answer to the question on reasons for being willing to participate in the passive mobile data collection study presented in the first vignette. About one-quarter of respondents mentioned the incentive offered (26 percent). Altruistic reasons for study participation, that is, helping the researchers (18 percent) or helping create better products and services through study participation (7 percent), were also named. Eleven percent of the respondents commented on trust that participating in the study would not harm the participants.

MECHANISMS FOR WILLINGNESS TO PARTICIPATE IN PASSIVE MOBILE DATA COLLECTION

To assess the influence of the study characteristics and respondent characteristics on willingness to participate, we first fit a base model (M0 in table 4) with random vignette and respondent effects to partition the total variance of willingness ratings into within- and between-components. The model shows that 76 percent of the variance comes from the respondents, indicating that respondents are rather stable in their ratings across the eight vignettes.
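In a random-intercept logistic model, the level-1 residual variance on the latent scale is fixed at π²/3 ≈ 3.29 (the "Residuals" row in table 4), so the share of variance at the respondent level is σ²_u / (σ²_u + π²/3). The Python sketch below applies this latent-variable formula to the rounded M0 estimate from table 4, so its result (about 0.75) may differ slightly from the figure given in the text.

```python
import math


def latent_icc(sigma2_u: float) -> float:
    """Intraclass correlation for a random-intercept logit model (latent-variable method)."""
    level1 = math.pi ** 2 / 3  # variance of the standard logistic distribution
    return sigma2_u / (sigma2_u + level1)


icc_m0 = latent_icc(10.12)  # respondent variance of M0, rounded as in table 4
print(round(icc_m0, 3))
```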

Table 4.

Logit coefficients (coeff.) and average marginal effects (AME) with standard errors (s.e.) from a multilevel model predicting likelihood of willingness to participate in passive mobile data collection

| | M0 (n = 14,746) | | M1 (n = 14,746) | | | |
|---|---|---|---|---|---|---|
| | Coeff. | (s.e.) | Coeff. | (s.e.) | AME | (s.e.) |
| Fixed effects | | | | | | |
| Intercept | –1.64 ** | (0.08) | –6.30 ** | (0.89) | | |
| Vignette (1) | | | | | | |
| 2–8 | – | | –0.63 ** | (0.09) | –0.054 | (0.008) |
| H1.1: Sponsor (Market research company) | | | | | | |
| Statistical agency | – | | –0.26 ** | (0.08) | –0.022 | (0.006) |
| University | – | | 0.29 ** | (0.07) | 0.025 | (0.006) |
| H1.2: Topic (Consumer behavior) | | | | | | |
| Mobility | – | | –0.02 | (0.08) | | |
| Social interaction | – | | –0.05 | (0.08) | | |
| H1.3: Study duration (One month) | | | | | | |
| Six months | – | | –0.75 ** | (0.06) | –0.063 | (0.005) |
| H1.4: Additional survey questions (No) | | | | | | |
| Yes | – | | –0.08 | (0.06) | | |
| H1.5: Incentives (None) | | | | | | |
| 10 Euro for downloading | – | | 2.43 ** | (0.10) | 0.199 | (0.008) |
| 10 Euro at end of study | – | | 2.31 ** | (0.10) | 0.189 | (0.008) |
| Both | – | | 3.12 ** | (0.10) | 0.261 | (0.008) |
| H3: Option to switch off app (No) | | | | | | |
| Yes | – | | 0.47 ** | (0.06) | 0.039 | (0.005) |
| H1.6: Attitudes toward surveys and research | – | | 0.09 | (0.07) | | |
| H2.1: Security concern research app | – | | –0.29 ** | (0.04) | –0.024 | (0.003) |
| H2.1: General privacy concern | – | | –0.07 | (0.04) | | |
| H2.1: No. perceived privacy violations offline | – | | 0.26 ** | (0.08) | 0.022 | (0.007) |
| H2.1: No. perceived privacy violations online | – | | –0.27 ** | (0.10) | –0.022 | (0.008) |
| H2.2: Trust data not shared by research organizations | – | | 0.18 ** | (0.06) | 0.015 | (0.005) |
| H2.2: Trust data not shared by other organizations | – | | 0.16 ** | (0.05) | 0.013 | (0.004) |
| H2.3: Smartphone skills | – | | –0.04 | (0.05) | | |
| H2.4: No. of devices | – | | 0.11 | (0.11) | | |
| H2.4: Frequency of smartphone use | – | | –0.02 | (0.06) | | |
| H2.4: No. of smartphone activities | – | | 0.31 ** | (0.04) | 0.026 | (0.004) |
| H2.4: Experience with research apps (Invited, not downloaded) | | | | | | |
| Never invited | – | | 0.58 * | (0.24) | 0.047 | (0.019) |
| Downloaded | – | | 2.80 ** | (0.31) | 0.250 | (0.029) |
| Age (less than 30 years) | | | | | | |
| 30–49 years | – | | –0.30 | (0.24) | | |
| 50 years and older | – | | –0.56 | (0.30) | | |
| Gender (Female) | | | | | | |
| Male | – | | 0.54 ** | (0.19) | 0.045 | (0.016) |
| Education (w/HS degree) | | | | | | |
| w/o HS degree | – | | 0.14 | (0.20) | | |
| Random effects | | | | | | |
| Respondent variance | 10.12 | | 12.24 | | | |
| Residuals | 3.29 | | 3.29 | | | |
| Model fit statistics | | | | | | |
| –2 Log Likelihood | 13,018.0 | | 10,994.8 | | | |
| AIC | 13,022.1 | | 11,054.8 | | | |
| BIC | 13,018.1 | | 11,282.8 | | | |

Note.— Reference categories in parentheses. Average marginal effects (AME) only provided for significant coefficients. Listwise deletion of missing values. Mean random effect for M0 = 0.377.

*p < 0.05, **p < 0.01
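The information criteria for M1 follow from the deviance (–2 log likelihood), the number of estimated parameters k, and the number of observations n: AIC = –2LL + 2k and BIC = –2LL + k·ln(n). Counting the 29 fixed-effect terms listed for M1 (including the intercept) plus the respondent variance gives k = 30, which reproduces the reported values. The parameter count is our reading of the table, and the likelihood-ratio statistic at the end only illustrates how the two nested models could be compared; it is not a test reported in the text.

```python
import math


def aic(neg2ll: float, k: int) -> float:
    return neg2ll + 2 * k


def bic(neg2ll: float, k: int, n: int) -> float:
    return neg2ll + k * math.log(n)


n_obs = 14_746
k_m1 = 29 + 1  # 29 fixed-effect terms in M1 plus the respondent variance
print(round(aic(10_994.8, k_m1), 1), round(bic(10_994.8, k_m1, n_obs), 1))
# prints: 11054.8 11282.8

# Likelihood-ratio statistic for M0 (intercept + respondent variance, k = 2) vs. M1
lr_stat = 13_018.0 - 10_994.8
lr_df = k_m1 - 2
```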

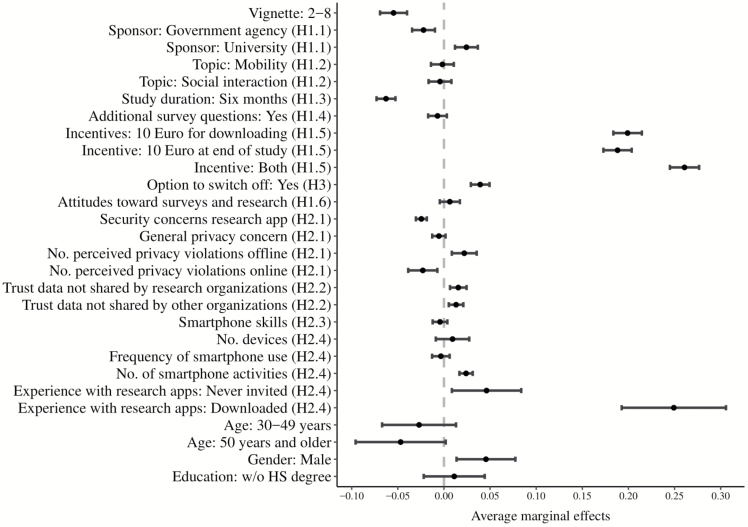

Model 1 in table 4 shows the main effects of the experimentally varied study characteristics and of the respondent characteristics on the likelihood of being willing to participate in passive mobile data collection, controlling for vignette order. Supporting H1.1, respondents are significantly more likely to be willing to participate in a study sponsored by a university than in one sponsored by a market research company (p < 0.001). In terms of average marginal effects (AMEs), the predicted probability of being willing to participate is two percentage points higher for a university sponsor than for a market research company (see also figure 2). In contrast, respondents are significantly less likely to be willing to participate in a study sponsored by a statistical agency than in one sponsored by a market research company (AME: –2 percentage points; p < 0.001). The likelihood of being willing to participate is significantly lower if the study runs for six months rather than one month (AME: –6 percentage points), and it is significantly higher if the app offers an option to temporarily switch off data collection (AME: +4 percentage points; p < 0.001), supporting H1.3 and H3, respectively. Incentives also play a significant role in willingness to participate (p < 0.001), supporting H1.5. Compared with no incentive, the predicted probability of being willing to participate increases by 20 percentage points with a 10-Euro incentive for downloading the app, by 19 percentage points with a 10-Euro incentive at the end of the study, and by 26 percentage points with both incentives combined. Neither the topic of the study nor whether the app would administer additional survey questions influences willingness to participate (p > 0.1), so H1.2 and H1.4 are not supported.

Figure 2.

Average marginal effects (points) with 95 percent confidence intervals (lines) from multilevel logistic regression predicting likelihood of willingness to participate in passive mobile data collection.

Turning to respondent-level characteristics, we find no support for H1.6: attitudes toward surveys and research are not significantly associated with the likelihood of being willing to participate in passive mobile data collection (p > 0.1).

H2.1 is partially supported: security concerns about using a research app, reported in Wave 1, are significantly negatively associated with the likelihood of being willing to participate (p < 0.001). A one-point increase on the 11-point scale of security concerns about using a research app decreases the predicted probability of being willing to participate by two percentage points. Similarly, the more situations respondents perceive as a violation of their privacy online, the lower the likelihood of being willing to participate (p < 0.05); predicted probabilities decrease by two percentage points with each additional situation perceived as a violation of privacy online. In contrast, the number of situations perceived as a violation of privacy offline is positively associated with the likelihood of being willing to participate (p < 0.01), with predicted probabilities increasing by two percentage points for each additional such situation. The degree of general privacy concern is not significantly associated with the likelihood of being willing to participate (p > 0.1).

Supporting H2.2, trust that research organizations and other organizations will not share data with others is positively associated with the likelihood of being willing to participate (p < 0.01). A one-point increase on the 11-point trust scale increases the predicted probability of being willing to participate by two percentage points for research organizations and by one percentage point for other organizations. Self-assessed smartphone skills are not associated with the likelihood of being willing to participate (p > 0.1), showing no support for H2.3.

The data partially support H2.4. While the number of devices owned and the frequency of smartphone use are not related to the likelihood of being willing to participate (p > 0.1), the more different activities respondents report doing on their smartphone, the higher the likelihood of being willing to participate (p < 0.001). With each additional activity reported, the predicted probability of being willing to participate increases by three percentage points. In addition, respondents who had downloaded a smartphone research app in the past (p < 0.001) and respondents who had never been asked to participate in such a study before (p < 0.05) are significantly more likely to be willing to participate than respondents who had been asked in the past but did not download the app. Relative to the latter group, the predicted probability of being willing to participate is 25 percentage points higher for respondents who had downloaded a research app before and five percentage points higher for respondents who had never been asked to participate in such a study.

Regarding our control variables, male respondents are significantly more likely than female respondents to be willing to participate in passive mobile data collection (p < 0.01; AME: +5 percentage points). Although the likelihood of being willing to participate seems to decrease with age, only the difference between respondents aged 50 and older and respondents under 30 years of age is significant, and only at the 10 percent level (AME: –5 percentage points). Educational level is not associated with the likelihood of being willing to participate (p > 0.1).⁵

Discussion

Passive mobile data collection via smartphone apps gives researchers the opportunity to collect data at a frequency and level of detail that goes beyond what is usually feasible with traditional survey questions. However, consistent with previous research (Revilla et al. 2016; Revilla, Couper, and Ochoa 2018; Wenz, Jäckle, and Couper 2017), we found that smartphone users seem reluctant to share these types of data with researchers. On average across the vignettes, only 35 percent of smartphone users in our study indicated that they would be willing to download an app that passively collects technical characteristics of the phone, cell network parameters, geographic location, app usage, and browser history, as well as the number of incoming and outgoing phone calls and text messages. Nearly two in five respondents (39 percent) were not willing to download an app under any of the conditions described in the vignettes. We surveyed members of a nonprobability online panel in Germany who regularly participate in surveys, and only those who participated in our survey were asked about their willingness to participate in passive mobile data collection. The participants in our study thus might be more cooperative than the general population when it comes to requests to share information, as indicated by their relatively positive attitudes toward surveys in general. In addition, while we find little evidence that respondents did not read or did not understand the study descriptions provided in the vignettes, we acknowledge that vignettes elicit self-reports of hypothetical willingness and are thus only an imprecise measure of actually downloading a research app. It is reasonable to assume that the actual willingness to download such a research app in the general population would be lower, and we hope to see more research that experimentally varies the features of passive mobile data collection.

We hypothesized that passive mobile data collection shares some of the mechanisms related to the participation decision in traditional survey modes, while other mechanisms are unique to passive mobile data collection. As expected, willingness to participate in passive mobile data collection is affected by factors known to influence the decision on whether or not to participate in surveys. Willingness to participate is significantly higher (albeit with a small effect size) when the request comes from a university rather than a commercial market research company, supporting the hypothesis that people attribute greater authority and trustworthiness to universities as data collectors. Future studies need to test whether the significantly lower willingness to participate in a study sponsored by a statistical agency, compared with both a university and a market research company, reflects a specific mistrust of the German government collecting such data or generalizes to other federal agencies or other countries. Also, respondents in our study were recruited from an online panel operated by a market research company. Thus, the advantage of the market research company over the statistical agency might be overestimated because of respondents' trust in and positive experience with the panel provider.

Consistent with previous research on survey participation, respondent burden and incentives play a role in passive mobile data collection as well. Willingness to participate was lower when passive mobile data collection lasted six months rather than one month, and when no incentive was offered rather than a promised incentive. In particular, the promise of an incentive strongly influenced the reported willingness to download a research app for passive mobile data collection, increasing the predicted probability of being willing to participate by 19 to 26 percentage points, depending on the incentive condition, compared with no incentive. These large incentive effects might partly reflect the opt-in nature of the online panel, whose members are used to receiving rewards. We therefore encourage further research on incentive effects in passive mobile data collection.

Whether or not the app also asks survey questions has no influence on willingness to participate in our study. This finding is an indication that the decision on whether or not to participate in such a study is more driven by concerns related to the passive data collection compared to the additional task of responding to survey questions. Again, whether this finding holds for populations who participate less regularly in surveys and in conditions where users are asked to participate in passive mobile data collection instead of—rather than in addition to—responding to survey questions needs to be tested.

What distinguishes passive mobile data collection from surveys is that surveys, being based on self-reports, inherently allow respondents to control what information is shared with the researcher, whereas passive mobile data collection does not allow such curation before information is shared. This can be advantageous for avoiding measurement error due to memory problems or social desirability, but participants may see it as a threat to their privacy. We find strong support for this notion: both a feature to temporarily turn off the app during data collection and specific privacy and security concerns (security concerns about using a research app, perceived privacy violations online) are strong predictors of willingness to participate in passive mobile data collection, although general privacy concern is not. In addition, privacy and security concerns are by far the most frequently mentioned reasons for not being willing to participate, confirming previous research (Jäckle et al. 2017; Revilla, Couper, and Ochoa 2018). More research is needed on how control over what data are shared with researchers influences consent. Giving participants the option to choose ahead of time which types of data they are willing to share and which not (i.e., à la carte consent) might increase overall willingness to participate compared with an option to share all or no data. Alternatively, participants could be given the option to review, edit, and delete specific data before transmitting them. Both approaches would allow participants to share some but not all of their data. More research is needed on what types of data are more likely to be shared when such options are provided. In addition, we encourage further research on how to word the description of how data are processed and protected when collected passively from a smartphone, and on how the request can be worded to alleviate respondents' concerns and increase participation.

Consistent with previous research (Pinter 2015; Jäckle et al. 2017; Wenz, Jäckle, and Couper 2017), we find that both familiarity with different features of a smartphone and previous experience with a research app increase willingness to participate in our hypothetical studies. Self-assessed smartphone skills, on the other hand, are not associated with willingness to participate. If users who engage in a wider range of activities on their smartphones are more likely to participate in a passive mobile data collection study that aims at estimating measures related to smartphone use (e.g., frequency of using specific apps), then nonparticipation bias will likely occur. Whether these differences in nonparticipation related to smartphone use are also related to other behaviors (e.g., mobility, social interaction) needs to be studied further.

Our research focuses on the effect of different study design features on willingness to have a broad range of information passively collected through a smartphone app (i.e., technical characteristics of the phone, network strength, current location, app and Internet use, call and text logs). Earlier research (Revilla et al. 2016; Couper, Antoun, and Mavletova 2017; Wenz, Jäckle, and Couper 2017) demonstrates that willingness to engage in different research-related tasks on a smartphone can vary substantially by task. Given the broad range of data included in our study description, our estimates of willingness should therefore be considered conservative. To limit complexity, our vignettes did not experimentally vary the type of data that would be collected through the app; thus, we cannot say whether study design characteristics such as sponsor, duration, or incentive have a uniform effect on willingness to participate in studies that, for example, collect GPS data only, call and text logs only, or both. We encourage further research that combines the findings of our study with research on willingness to engage in different smartphone tasks, to help researchers and practitioners develop specific designs that increase willingness to participate in studies that, for example, are primarily interested in collecting GPS data.

Supplementary Material

Acknowledgment

The authors wish to thank Theresa Ludwig and Ruben Layes for research assistance, Edgar Treischl for help with the factorial design, Christoph Kern for support with the data analysis, and the members of the FK2RG and three anonymous reviewers for feedback on an earlier draft of the manuscript. This work was supported by the German Research Foundation (DFG) through the Collaborative Research Center SFB 884 “Political Economy of Reforms” (Project A9) [139943784 to Markus Frölich, F.K., and F.K.].

Appendix A: General study description and vignette

General study description.

On the following pages we present you 8 different scenarios which vary in a number of features.

All scenarios will mention a research app that, when downloaded to a smartphone, collects the following data:

technical characteristics of the phone (for example, brand, screen size)

the telephone network currently used (for example, signal strength)

the current location (every 5 minutes)

what apps are used and what websites are visited on the phone

number of incoming and outgoing phone calls and text messages on the phone

Please note that the research app mentioned in the scenarios would only collect information on when other apps are opened or when a call is made. The research app cannot see what happens inside other apps or what is said in a phone call or a text message.

All information collected by the research app described above is confidential. It would only be used by the researchers and they would not share your individual data with anyone else. The described study complies with all German federal regulations about data protection and privacy.

Vignette.

Imagine that a #sponsor# invites you to participate in a research study that includes downloading a special research app to your smartphone.

The data the research app collects from your smartphone will help the researchers to learn more about #topic# on their smartphones.

The study will last for #duration# and you should leave the research app installed on your smartphone until the end of the study.

There is #option to switch off app# during the course of the study.

#Incentive#

#additional short questions#
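The placeholders in the vignette template above correspond to the experimental dimensions listed in table 4. A Python sketch of building the full vignette universe (3 × 3 × 2 × 2 × 4 × 2 = 288 combinations) and drawing eight vignettes for one respondent follows. Only the level labels come from table 4; the exact wording inserted for each level is hypothetical, and simple random sampling without replacement is a simplification that does not reproduce the study's actual factorial design or allocation of vignettes to respondents.

```python
import itertools
import random

TEMPLATE = (
    "Imagine that a #sponsor# invites you to participate in a research study that "
    "includes downloading a special research app to your smartphone.\n"
    "The data the research app collects from your smartphone will help the researchers "
    "to learn more about #topic# on their smartphones.\n"
    "The study will last for #duration# and you should leave the research app "
    "installed on your smartphone until the end of the study.\n"
    "There is #option to switch off app# during the course of the study.\n"
    "#Incentive#\n"
    "#additional short questions#"
)

# Level labels from table 4; the wording of each level is hypothetical.
DIMENSIONS = {
    "sponsor": ["market research company", "statistical agency", "university"],
    "topic": ["consumer behavior", "mobility", "social interaction"],
    "duration": ["one month", "six months"],
    "option to switch off app": ["no option to switch off the app",
                                 "an option to temporarily switch off the app"],
    "Incentive": ["You will not receive an incentive.",
                  "You will receive 10 Euro for downloading the app.",
                  "You will receive 10 Euro at the end of the study.",
                  "You will receive 10 Euro for downloading the app and "
                  "10 Euro at the end of the study."],
    "additional short questions": ["",
                                   "The app will also ask you a few short questions."],
}

# Full factorial: one dict of levels per combination (dict order matches DIMENSIONS).
VIGNETTE_UNIVERSE = [dict(zip(DIMENSIONS, combo))
                     for combo in itertools.product(*DIMENSIONS.values())]


def render(levels):
    """Replace each #placeholder# in the template with the chosen level text."""
    text = TEMPLATE
    for key, value in levels.items():
        text = text.replace(f"#{key}#", value)
    return text


def deck_for(respondent_seed, k=8):
    """Draw k distinct vignettes at random for one respondent (simplified design)."""
    rng = random.Random(respondent_seed)
    return [render(v) for v in rng.sample(VIGNETTE_UNIVERSE, k)]


print(deck_for(respondent_seed=7)[0])
```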

Footnotes

According to the panel provider, members are recruited through a variety of nonprobability online and offline methods. Panel members are invited to participate in up to two web surveys per week, and members receive 50 points (an equivalent of €0.50) for a 10-minute web survey. Panel members who do not participate in six consecutive surveys are excluded from the panel. To avoid multiple enrollment of the same person in the panel, and to facilitate payment of the incentives, members must provide a valid bank account number upon enrollment.

Nine thousand email invitations were sent to panel members by the online panel provider. A total of 3,144 people started the survey. Quotas for gender and age were used based on the known smartphone penetration in Germany; only smartphone owners were able to proceed to the main questionnaire: 404 panel members who started the survey were screened out because of the quotas; 32 were screened out because they reported not owning a smartphone. Out of the 2,708 remaining respondents, 61 broke off the survey (2.2 percent). Twenty-four respondents had duplicated IDs and were dropped from the data set.

Out of the 2,333 respondents who started the Wave 2 survey, 354 broke off (15.2 percent). An additional 22 respondents were dropped because of duplicated IDs and eight could not be matched because their IDs were not found in the Wave 1 data. For the remaining 1,949 respondents, we checked for consistency in sociodemographics between the two waves using age, gender, and education, allowing respondents to move up one category in age and education from Wave 1 to Wave 2. Two respondents had more than one inconsistency and were dropped.
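The case counts in the two footnotes above can be checked with a few lines of arithmetic; the Python sketch below only restates those numbers and links the final Wave 2 sample to the number of ratings available for the models.

```python
# Wave 1 (counts from the footnote above)
started_w1 = 3_144
eligible_w1 = started_w1 - 404 - 32   # screened out by quotas / no smartphone
retained_w1 = eligible_w1 - 61 - 24   # breakoffs, duplicated IDs

# Wave 2
started_w2 = 2_333
breakoff_rate_w2 = 354 / started_w2
analytic_n = started_w2 - 354 - 22 - 8 - 2  # breakoffs, duplicates, unmatched, inconsistent

# 8 ratings per respondent before listwise deletion (table 4 uses 14,746 ratings)
max_ratings = analytic_n * 8
print(eligible_w1, f"{breakoff_rate_w2:.1%}", analytic_n, max_ratings)
```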

To assess the stability of our results, we reran all analyses 1) using a different cutoff point (0–4 vs. 5–10) and 2) limiting the analysis to respondents who selected the extreme points (0 vs. 10). Both had little effect on the outcome of the analyses and the conclusions. We report substantive differences between our main analysis strategy and the two alternative analysis strategies in the Results section.

Changing the cutoff point for the dependent variable has only minor effects. When the cutoff is 0–4 versus 5–10, the difference in willingness to participate between a statistical agency and a market research company becomes nonsignificant (p > 0.1), and respondents are significantly less likely to be willing to participate if the app administers additional survey questions (p < 0.05). Comparing respondents on the extreme points (0 vs. 10) reduces the sample size, and several coefficients become nonsignificant (Sponsor: University; No. perceived privacy violations online; Trust data not shared by other organizations; Experience with research apps: Never invited).
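Both robustness checks amount to recoding the 11-point rating (0–10) before refitting the model. A Python sketch of the two alternative codings follows. The cutoff used in the main analysis is not restated in this section, so the function takes the lowest "willing" scale point as a parameter; the ratings in the example are made up.

```python
from typing import Optional


def dichotomize(rating: int, lowest_willing: int) -> int:
    """Return 1 if rating >= lowest_willing, else 0 (rating on a 0-10 scale)."""
    if not 0 <= rating <= 10:
        raise ValueError("rating must be between 0 and 10")
    return int(rating >= lowest_willing)


def extremes_only(rating: int) -> Optional[int]:
    """Keep only the scale endpoints: 10 -> 1, 0 -> 0; other ratings are dropped (None)."""
    return {0: 0, 10: 1}.get(rating)


ratings = [0, 3, 5, 7, 10, 10, 0, 6]  # made-up ratings
alt_cutoff = [dichotomize(r, 5) for r in ratings]  # 0-4 vs. 5-10
extreme = [y for y in (extremes_only(r) for r in ratings) if y is not None]
print(alt_cutoff, extreme)  # the extremes-only coding keeps fewer cases
```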

References

- Auspurg Katrin, and Hinz Thomas. . 2015. Factorial Survey Experiments. Los Angeles: Sage Publications. [Google Scholar]

- Bates Douglas, Mächler Martin, Bolker Ben, and Walker Steve. . 2015. “Fitting Linear Mixed-Effects Models Using lme4.” Journal of Statistical Software 67:1–48. [Google Scholar]

- Bosnjak Michael, Tuten Tracy L., and Wittmann Werner W.. . 2005. “Unit (Non)Response in Web-based Access Panel Surveys: An Extended Planned-Behavior Approach.” Psychology & Marketing 22:489–505. [Google Scholar]

- Bradburn Norman M. 1978. “Respondent Burden.” Proceedings of the American Statistical Association, Survey Research Methods Section, 35–40. [Google Scholar]

- Brüggen Elisabeth, Wetzels Martin, de Ruyter Ko, and Schillewaert Niels. . 2011. “Individual Differences in Motivation to Participate in Online Panels: The Effect on Response Rate and Response Quality Perceptions.” International Journal of Market Research 53:369–90. [Google Scholar]

- Conrad Frederick G., Schober Michael F., Antoun Christopher, Yanna Yan H., Hupp Andrew L., Johnston Michael, Ehlen Patrick, Vickers Lucas, and Zhang Chan. . 2017. “Respondent Mode Choice in a Smartphone Survey.” Public Opinion Quarterly 81:307–37. [Google Scholar]

- Couper Mick P., Antoun Christopher, and Mavletova Aigul. . 2017. “Mobile Web Surveys: A Total Survey Error Perspective.” In Total Survey Error in Practice, edited by Biemer Paul P., de Leeuw Edith, Eckman Stephanie, Edwards Brad, Lyberg Lars E., Clyde Tucker N., and West Brady T., 133–54. Hoboken, NJ: Wiley. [Google Scholar]

- Couper Mick P., and Singer Eleanor. . 2013. “Informed Consent for Web Paradata Use.” Survey Research Methods 7:57–67. [PMC free article] [PubMed] [Google Scholar]

- Couper Mick P., Singer Eleanor, Conrad Frederick G., and Groves Robert M.. . 2008. “Risk of Disclosure, Perceptions of Risk, and Concerns about Privacy and Confidentiality as Factors in Survey Participation.” Journal of Official Statistics 24:255–75. [PMC free article] [PubMed] [Google Scholar]

- ———. 2010. “Experimental Studies of Disclosure Risk, Disclosure Harm, Topic Sensitivity, and Survey Participation.” Journal of Official Statistics 26:287–300. [PMC free article] [PubMed] [Google Scholar]

- Elevelt Anne, Lugtig Peter, and Toepoel Vera. . 2017. “Doing a Time Use Survey on Smartphones Only: What Factors Predict Nonresponse at Different Stages of the Survey Process?” Paper presented at the 7th Conference of the European Survey Research Association (ESRA), July 17–21, Lisbon, Portugal. [Google Scholar]

- Fritz Heather, Tarraf Wassim, Saleh Dan J., and Cutchin Malcolm P.. . 2017. “Using a Smartphone-Based Ecological Momentary Assessment Protocol with Community Dwelling Older African Americans.” Journal of Gerontology: Psychological Sciences and Social Sciences 72: 876–87. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Galesic Mirta. 2006. “Dropouts on the Web: Effects of Interest and Burden Experienced During an Online Survey.” Journal of Official Statistics 22:313–28. [Google Scholar]

- Galesic Mirta, and Bosnjak Michael. . 2009. “Effects of Questionnaire Length on Participation and Indicators of Response Quality in a Web Survey.” Public Opinion Quarterly 73:349–60. [Google Scholar]

- Haunberger Sigrid. 2011. “Explaining Unit Nonresponse in Online Panel Surveys: An Application of the Extended Theory of Planned Behavior.” Journal of Applied Social Psychology 41:2999–3025. [Google Scholar]

- Jäckle Annette, Burton Jonathan, Couper Mick P., and Lessof Carli. . 2017. “Participation in a Mobile App Survey to Collect Expenditure Data as Part of a Large-Scale Probability Household Panel: Response Rates and Response Biases.” Understanding Society Working Paper 2017–09. Available at https://www.understandingsociety.ac.uk/research/publications/working-paper/understanding-society/2017-09.pdf.

- Keusch Florian. 2013. “The Role of Topic Interest and Topic Salience in Online Panel Web Surveys.” International Journal of Market Research 55:59–80. [Google Scholar]

- ———. 2015. “Why Do People Participate in Web Surveys? Applying Survey Participation Theory to Internet Survey Data Collection.” Management Review Quarterly 65:183–216. [Google Scholar]

- Keusch Florian, Batinic Bernard, and Mayerhofer Wolfgang. . 2014. “Motives for Joining Nonprobability Online Panels and their Association with Survey Participation Behavior.” In Online Panel Research: A Data Quality Perspective, edited by Callegaro Mario, Baker Reg, Bethlehem Jekle, Göritz Anja S., Krosnick Jon A., and Lavrakas Paul J., 133–54. Chichester, UK: Wiley. [Google Scholar]

- Keusch Florian, and Yan Ting. . 2016. “Web vs. Mobile Web—An Experimental Study of Device Effects and Self-Selection Effects.” Social Science Computer Review 35:751–69. [Google Scholar]

- Kish Leslie. 1987. Statistical Design for Research. New York: John Wiley. [Google Scholar]

- Lavrakas Paul, Benson Grant, Blumberg Stephen, Buskirk Trent, Cervantes Ismael Flores, Christian Leah, Dutwin David, Fahimi Mansour, Fienber Howard, Guterbock Tom, Keeter Scott, Kelly Jenny, Kennedy Courtney, Peytchev Andy, Piekarski Linda, and Shuttles Chuck. . 2017. “The Future of U.S. General Population Telephone Survey Research.” AAPOR Report.

- Leeper Thomas J. 2018. margins: Marginal Effects for Model Objects. R package version 0.3.23. Available at https://CRAN.R-project.org/package=margins. Retrieved September 12, 2018.

- Link Michael W., Murphy Joe, Schober Michael F., Buskirk Trent D., Childs Jennifer Hunter, and Tesfaye Casey Langer. . 2014. “Mobile Technologies for Conducting, Augmenting and Potentially Replacing Surveys: Executive Summary of the AAPOR Task Force on Emerging Technologies in Public Opinion Research.” Public Opinion Quarterly 78:779–87. [Google Scholar]

- Marcus Bernd, Bosnjak Michael, Lindner Steffen, Pilischenko Stanislav, and Schütz Astrid. . 2007. “Compensating for Low Topic Interest and Long Surveys. A Field Experiment on Nonresponse in Web Surveys.” Social Science Computer Review 25:372–83. [Google Scholar]

- Pinter Robert. 2015. “Willingness of Online Access Panel Members to Participate in Smartphone Application-Based Research.” In Mobile Research Methods, edited by Toninelli Daniele, Pinter Robert, and de Pedraza Pablo, 141–56. London: Ubiquity Press. [Google Scholar]

- R Core Team 2018. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing; Available at https://www.R-project.org/. [Google Scholar]

- Revilla Melanie, Couper Mick P., and Ochoa Carlos. 2018. “Willingness of Online Panelists to Perform Additional Tasks.” Methods, Data, Analyses. Advance Access publication June 2018, doi: 10.12758/mda.2018.01.

- Revilla Melanie, Toninelli Daniele, Ochoa Carlos, and Loewe German. 2016. “Do Online Access Panels Need to Adapt Surveys for Mobile Devices?” Internet Research 26:1209–27.

- Rossi Peter H., and Anderson Andy B. 1982. “An Introduction.” In Measuring Social Judgments: The Factorial Survey Approach, edited by Rossi Peter H. and Nock Steven L., 15–68. Beverly Hills, CA: Sage Publications.

- Schaurer Ines. 2017. Recruitment Strategies for a Probability-Based Online Panel: Effects of Interview Length, Question Sensitivity, Incentives and Interviewers. PhD dissertation, University of Mannheim. Available at https://ub-madoc.bib.uni-mannheim.de/42976/1/Dissertation_Schaurer-1.pdf.

- Scherpenzeel Annette. 2017. “Mixing Online Panel Data Collection with Innovative Methods.” In Methodische Probleme von Mixed-Mode-Ansätzen in der Umfrageforschung, edited by Eifler Stefanie and Faulbaum Frank, 27–49. Wiesbaden: Springer.

- Singer Eleanor. 2003. “Exploring the Meaning of Consent: Participation in Research and Beliefs about Risks and Benefits.” Journal of Official Statistics 19:273–85.

- Singer Eleanor, and Ye Cong. 2013. “The Use and Effects of Incentives in Surveys.” ANNALS of the American Academy of Political and Social Science 645:112–41.

- Sonck Nathalie, and Fernee Henk. 2013. Using Smartphones in Survey Research: A Multifunctional Tool. Implementation of a Time Use App. A Feasibility Study. The Hague, Netherlands: The Netherlands Institute for Social Research. Available at https://www.scp.nl/dsresource?objectid=0c7723e5-9498-4c71-bb98-d802b2affd36. Retrieved December 8, 2017.

- Steiner Peter M., Atzmüller Christiane, and Su Dan. 2016. “Designing Valid and Reliable Vignette Experiments for Survey Research: A Case Study on the Fair Gender Income Gap.” Journal of Methods and Measurement in the Social Sciences 7:52–94.

- Sugie Naomie F. 2018. “Utilizing Smartphones to Study Disadvantaged and Hard-to-Reach Groups.” Sociological Methods & Research 47:458–91.

- Wallander Lisa. 2009. “25 Years of Factorial Surveys in Sociology: A Review.” Social Science Research 38:505–20.

- Wenz Alexander, Jäckle Annette, and Couper Mick P. 2017. “Willingness to Use Mobile Technologies for Data Collection in a Probability Household Panel.” ISER Working Paper 2017-10. Available at https://www.understandingsociety.ac.uk/research/publications/working-paper/understanding-society/2017-10.pdf. Retrieved December 8, 2017.

- Yan Ting, Conrad Frederic G., Tourangeau Roger, and Couper Mick P. 2011. “Should I Stay or Should I Go: The Effect of Progress Feedback, Promised Task Duration, and Length of Questionnaire on Completing Web Surveys.” International Journal of Public Opinion Research 23:131–47.

- York Cornwell Erin, and Cagney Kathleen A. 2017. “Aging in Activity Space: Results from Smartphone-Based GPS-Tracking of Urban Seniors.” Journals of Gerontology: Series B 72:864–75.
