Abstract

It is commonly assumed that a person’s emotional state can be readily inferred from the person’s facial movements, typically called “emotional expressions” or “facial expressions.” This assumption influences legal judgments, policy decisions, national security protocols, and educational practices, guides the diagnosis and treatment of psychiatric illness, as well as the development of commercial applications, and pervades everyday social interactions as well as research in other scientific fields such as artificial intelligence, neuroscience, and computer vision. In this paper, we survey examples of this widespread assumption, which we refer to as the “common view”, and then examine the scientific evidence for this view with a focus on the six most popular emotion categories used by consumers of emotion research: anger, disgust, fear, happiness, sadness and surprise. The available scientific evidence suggests that people do sometimes smile when happy, frown when sad, scowl when angry, and so on, more than what would be expected by chance. Yet there is substantial variation in how people communicate anger, disgust, fear, happiness, sadness and surprise, across cultures, situations, and even within a single situation. Furthermore, similar configurations of facial movements variably express instances of more than one emotion category. In fact, a given configuration of facial movements, such as a scowl, often communicates something other than an emotional state. Scientists agree that facial movements convey a range of social information and are important for social communication, emotional or otherwise. But our review suggests there is an urgent need for research that examines how people actually move their faces to express emotions and other social information in the variety of contexts that make up everyday life, as well as careful study of the mechanisms by which people perceive instances of emotion in one another. 
We make specific research recommendations that will yield a more valid picture of how people move their faces to express emotions, and how they infer emotional meaning from facial movements, in situations of everyday life. This research is crucial to provide consumers of emotion research with the translational information they require.

Executive Summary

It is commonly assumed that a person’s face gives evidence of emotions because there is a reliable mapping between a certain configuration of facial movements, called a “facial expression,” and the specific emotional state that it supposedly signals. This common view of facial expressions remains entrenched in consumers of emotion research, as well as in some scientists, despite an emerging consensus among affective scientists that emotional expressions are considerably more context-dependent and variable. Nonetheless, this common view continues to fuel commercial applications in industry and government (e.g., automated detection of emotions from faces), guide how children are taught (e.g., with posters and books showing stereotyped facial expressions), and impact clinical and legal applications (e.g., diagnoses of psychiatric illnesses and courtroom decisions). In this paper, we evaluate the common view of facial expressions against a review of the evidence and conclude that it rests on a number of flawed assumptions and incorrect interpretations of research findings. Our review is the most comprehensive and systematic to date, encompassing studies of healthy adults across cultures, newborns and young children, as well as people who are congenitally blind. It confirms that specific emotion categories (anger, disgust, fear, happiness, sadness, and surprise) are each expressed with a particular configuration of facial movements more reliably than would be expected by mere chance. Contrary to the common view, however, instances of these emotion categories are NOT expressed with facial movements that are sufficiently reliable and specific across contexts, individuals, and cultures to be considered diagnostic displays of any emotional state. Nor do human perceivers, in fact, infer emotions from particular configurations of muscle movements in a sufficiently reliable and specific way that similarly generalizes.
Studies of expression production and perception both demonstrate multiple sources of variability that contradict the common view that smiles, scowls, frowns, and the like, are reliable and specific “expressions of emotion.” We conclude the paper with specific recommendations for both scientists and consumers of science.

Introduction

Faces are a ubiquitous part of everyday life for humans. We greet each other with smiles or nods. We have face-to-face conversations on a daily basis, whether in person or via computers. We capture faces with smartphones and tablets, exchanging photos of ourselves and of each other on Instagram, Snapchat, and other social media platforms. The ability to perceive faces is one of the first capacities to emerge after birth: an infant begins to perceive faces within the first few days of life, equipped with a preference for face-like arrangements that allows the brain to wire itself, with experience, to become expert at perceiving faces (Arcaro et al., 2017; Cassia et al., 2004; Grossmann, 2015; Ghandi et al., 2017; Smith et al., 2018; Turati, 2004; but see Young & Burton (2018) for a more qualified claim).1 Faces offer a rich, salient source of information for navigating the social world: they play a role in deciding who to love, who to trust, who to help, and who is found guilty of a crime (Todorov, 2017; Zebrowitz, 1997, 2017; Zhang, Chen & Yang, 2018). Dating back to the ancient Greeks (Aristotle, in 4th century BCE) and Romans (Cicero), various cultures have viewed the human face as a window on the mind. But to what extent can a raised eyebrow, a curled lip, or a narrowed eye reveal what someone is thinking or feeling, allowing a perceiver’s brain to guess what that someone will do next?2 The answers to these questions have major consequences for human outcomes as they unfold in the living room, the classroom, the courtroom and even on the battlefield. They also powerfully shape the direction of research in a broad array of scientific fields, from basic neuroscience to psychiatry research.

Understanding what facial movements might reveal about a person’s emotions is made more urgent by the fact that many people believe we already know. Specific configurations of facial muscle movements appear as if they summarily broadcast or display a person’s emotions, which is why they are routinely referred to as “emotional expressions” and “facial expressions.”3 A simple Google search using the phrase “emotional facial expressions” [see Box 1, in supplementary on-line materials (SOM)] reveals the ubiquity with which, at least in certain parts of the world, people believe that certain emotion categories are reliably signaled or revealed by certain facial muscle movement configurations – a set of beliefs we refer to as the common view (also called the classical view; Barrett, 2017a). Similarly, many cultural products testify to the common view. Here are several examples:

Technology companies are investing tremendous resources to figure out how to objectively “read” emotions in people by detecting their presumed facial expressions, such as scowling faces, frowning faces and smiling faces in an automated fashion. Several companies claim to have already done it (e.g., https://www.affectiva.com/what/products/; https://azure.microsoft.com/en-us/services/cognitive-services/emotion/). For example, Microsoft’s Emotion API promises to take video images of a person’s face to detect what that individual is feeling. The application states: “The emotions detected are anger, contempt, disgust, fear, happiness, neutral, sadness, and surprise. These emotions are understood to be cross-culturally and universally communicated with particular facial expressions”(https://azure.microsoft.com/en-us/services/cognitive-services/emotion/).

Countless electronic messages are annotated with emojis or emoticons that are schematized versions of the proposed facial expressions for various emotion categories (https://www.apple.com/newsroom/2018/07/apple-celebrates-world-emoji-day/).

Putative emotional expressions are taught to preschool children by displaying scowling faces, frowning faces, smiling faces and so on, in posters (e.g., use “feeling chart for children” in a Google image search), games (https://www.amazon.com/s/ref=nb_sb_noss_2?url=search-alias%3Daps&field-keywords=miniland+emotion) and books (e.g., Cain, 2000; Parr, 2005), and on episodes of Sesame Street (among many examples, see https://www.youtube.com/watch?v=ZxfJicfyCdg, https://vimeo.com/108524970, or https://www.youtube.com/watch?v=y28GH2GoIvc).4

Television shows (e.g., Lie to Me), movies (e.g., Inside Out) and documentaries (e.g., The Human Face, produced by the British Broadcasting Company) customarily depict certain facial configurations as universal expressions of emotions.

Magazine and newspaper articles routinely feature stories in kind: facial configurations depicting a scowl are referred to as “expressions of anger,” facial configurations depicting a smile are referred to as “expressions of happiness,” facial configurations depicting a frown are referred to as “expressions of sadness,” and so on.

Agents of the U.S. Federal Bureau of Investigation (FBI) and the Transportation Security Administration (TSA) were trained to detect emotions and other intentions using these facial configurations, with the goal of identifying and thwarting terrorists (Rhonda Heilig, special agent with the FBI, personal communication, December 15, 2014, 11:20 am; https://how-emotions-are-made.com/notes/Screening_of_Passengers_by_Observation_Techniques).5

The facial configurations that supposedly diagnose emotional states also figure prominently in the diagnosis and treatment of psychiatric disorders. One of the most widely used tasks in autism research, the “Reading the Mind in the Eyes Test,” asks patients to match photos of the upper (eye) region of a posed facial configuration with specific mental state words, including emotion words (Baron-Cohen et al., 2001). Treatment plans for people living with autism and other brain disorders often include learning to recognize these facial configurations as emotional expressions (Baron-Cohen et al., 2004; Kouo & Egel, 2016). This training does not generalize well to real-world skills, however (Bergren et al., 2018; Kouo & Egel, 2016).

“Reading” the emotions of a defendant (in the words of Supreme Court Justice Anthony Kennedy, to “know the heart and mind of the offender”) is one pillar of a fair trial in the U.S. legal system and in many legal systems in the Western world (see Riggins v. Nevada, 1992). Legal actors like jurors and judges routinely rely on facial movements to determine the guilt and remorse of a defendant (e.g., Bandes, 2014; Zebrowitz, 1997). For example, defendants who are perceived as untrustworthy receive harsher sentences than they otherwise would (Wilson & Rule, 2015, 2016), and such perceptions are more likely when a person appears to be angry (i.e., facial structure is similar to the hypothesized facial expression of anger, which is a scowl; Todorov, 2017). An incorrect inference about a defendant’s emotional state can cost someone her children, her freedom, or even her life (for recent examples, see Barrett, 2017, beginning on page 183).

But can a person’s emotional state be reasonably inferred from that person’s facial movements? In this paper, we offer a systematic review of the evidence, testing the common view that instances of emotion are signaled with a distinctive configuration of facial movements consistently enough that it can serve as a diagnostic marker of those instances. We focus our review on evidence pertaining to six emotion categories that have received the lion’s share of attention in the scientific literature (anger, disgust, fear, happiness, sadness and surprise) and that, correspondingly, are the focus of the common view (as evidenced by our Google search, summarized in Box 1, SOM), but our conclusions apply to all emotion categories that have thus far been scientifically studied. We open the paper with a brief discussion of its scope, approach, and intended audience. We then summarize evidence on how people actually move their faces during episodes of emotion, referred to as studies of expression production, following which we examine evidence for which emotions are actually inferred from looking at facial movements, referred to as studies of emotion perception. We identify three key shortcomings in the scientific research that have contributed to a general misunderstanding about how emotions are expressed and perceived in facial movements, and that limit the translation of this scientific evidence for other uses:

limited reliability (instances of the same emotion category are neither reliably expressed through nor reliably perceived from a common set of facial movements);

lack of specificity (there is no unique mapping between a single configuration of facial movements and instances of the same emotion category); and,

limited generalizability (the effects of context and culture have not been sufficiently documented and accounted for).

We then discuss our conclusions, followed by proposals for consumers on how they might use the existing scientific literature. We also provide recommendations for future research with consumers of emotion research in mind. We have included additional detail on some topics of import or interest in the supplementary on-line materials (SOM).

Scope, Approach and Intended Audience of Paper

The Common View: Reading an Inner Emotional State of Mind From A Set of Unique Facial Movements

In common English parlance, people refer to “emotions” or “an emotion” as if anger, happiness, or any emotion word refers to an object that is highly similar on every occurrence. But an emotion word refers not to a unitary entity, but to a category of instances that vary from one another in their physical features, such as facial expressions and bodily changes, and mental features. Few scientists who study emotion, if any, take the view that every instance of an emotion category, such as anger, is identical to every other instance, sharing a set of necessary and sufficient features across situations, people and cultures. For example, Keltner and Cordaro (2017) recently wrote, “there is no one-to-one correspondence between a specific set of facial muscle actions or vocal cues and any and every experience of emotion” (p. 62). Yet there is considerable scientific debate about the amount of the within-category variation, the specific features that vary, the causes of the within-category variation, and implications of this variation for the nature of emotion (see Figure 1).

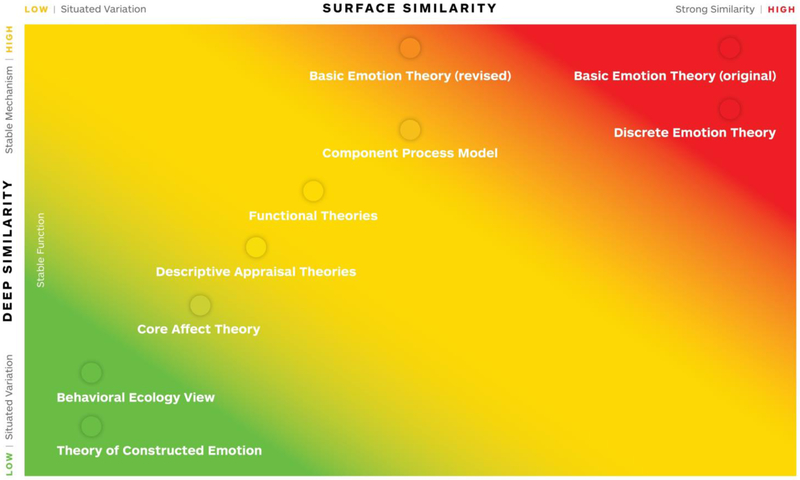

Figure 1. Explanatory frameworks guiding the science of emotion: The nature of emotion categories and their concepts.

Figure is plotted along two dimensions. Horizontal: represents hypotheses about the surface similarities shared by instances of the same emotion category (e.g., the facial movements that express instances of the same emotion category). Vertical: represents hypotheses about the deep similarities in the mechanisms that cause instances of the same emotion category (e.g., to what extent do instances in the same category share deep, causal features?). Colors represent the type of emotion categories that are proposed in each theoretical framework (green = ad hoc, abstract categories; yellow = prototype or theory-based categories; red = natural kind categories).

One popular scientific framework, referred to as the basic emotion approach, hypothesizes that instances of an emotion category are expressed with facial movements that vary, to some degree, around a typical set of movements (called a prototype) (for example, see Table 1). For example, it is hypothesized that in one instance, anger might be expressed with the expressive prototype (e.g., brows furrowed, eyes wide, lips tightened) plus additional facial movements, such as a widened mouth, whereas on other occasions, a facial movement in the prototype might be missing (e.g., anger might be expressed with narrowed eyes or without movement in the eyebrow region; for a discussion, see Box 2, in SOM). Nonetheless, the basic emotion approach still assumes that the core facial configuration – the prototype -- can be used to diagnose a person’s inner emotional state in much the same way that a fingerprint can be used to uniquely recognize a person. More substantial variation in expressions (e.g., smiling in anger, gasping with widened eyes in anger, and scowling not in anger, but in confusion or concentration) is typically explained as the result of some process that is independent of an emotion itself, such as display rules, emotion regulation strategies such as suppressing the expression, or culture-specific dialects (as proposed by various scientists, including Elfenbein, 2013, 2017; Ekman & Cordaro, 2011; Matsumoto, 1990; Matsumoto, Keltner, Shiota, Frank, & O’Sullivan, 2008; Tracy & Randles, 2011).

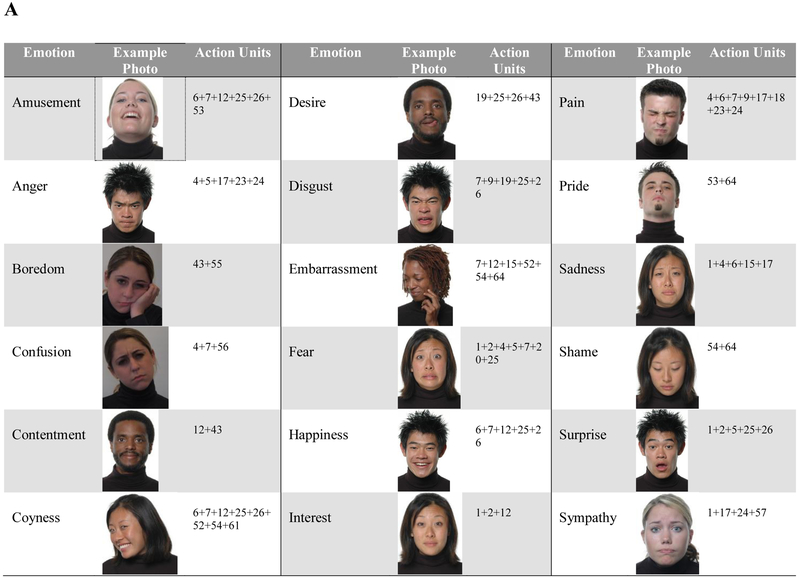

Table 1.

A comparison of the facial configurations listed as the expressions of selected emotion categories

Proposed Expressive Configurations Described as Facial Action Units

| Emotion Category | Matsumoto, Keltner, Shiota, O’Sullivan & Frank (2008): Darwin’s (1872) Description | Matsumoto, Keltner, Shiota, O’Sullivan & Frank (2008): Observed in Research | Cordaro, Sun, Keltner, Kamble, Huddar & McNeil (2017): Reference Configuration Used | Cordaro, Sun, Keltner, Kamble, Huddar & McNeil (2017): International Core Pattern | Keltner et al. (in press) | Physical Description |
|---|---|---|---|---|---|---|
| Amusement | Not listed | Not listed | 6, 12, 26 or 27, 55 or 56, a “head bounce” (Shiota, Campos & Keltner, 2003) | 6, 7, 12, 16, 25, 26 or 27, 53 | 6+7+12+25+26+53 | Head back, Duchenne smile (6, 7, 12), lips separated, jaw dropped |
| Anger | 4+5+24+38 | 4+5 or 7+22+23+24 | 4+5+7+23 (Ekman, Levenson & Friesen, 1983) | 4, 7 | 4+5+17+23+24 | Brows furrowed, eyes wide, lips tightened and pressed together |
| Awe | Not listed | Not listed | 1, 5, 26 or 27, 57 and visible inhalation (Shiota et al., 2003) | 1, 2, 5, 12, 25, 26 or 27, 53 | Not listed | |
| Contempt | 9+10+22+41+61 or 62 | 12 (unilateral) + 14 (unilateral) | 12+14 (Ekman et al., 1983) | 4, 14, 25 | Not listed | |
| Disgust | 10+16+22+25 or 26 | 9 or 10, 25 or 26 | 9+15+16 (Ekman et al., 1983) | 4, 6, 7, 9, 10, 25, 26 or 27 | 7+9+19+25+26 | Eyes narrowed, nose wrinkled, lips parted, jaw dropped, tongue show |
| Embarrassment | Not listed | Not listed | 12, 24, 51, 54, 64 (Keltner & Buswell, 1997) | 6, 7, 12, 25, 54, (participant dampens smile with 23, 24, frown, etc.) | 7+12+15+52+54+64 | Eyelids narrowed, controlled smile, head turned and down, (not scored with FACS: hand touches face) |
| Fear | 1+2+5+20 | 1+2+4+5+20, 25 or 26 | 1+2+4+5+7+20+26 (Ekman et al., 1983) | 1, 2, 5, 7, 25, 26 or 27, participant suddenly shifts entire body backwards in chair | 1+2+4+5+7+20+25 | Eyebrows raised and pulled together, upper eyelid raised, lower eyelid tense, lips parted and stretched |
| Happiness | 6+12 | 6+12 | 6+12 (Ekman et al., 1983) | 6, 7, 12, 16, 25, 26 or 27 | 6+7+12+25+26 | Duchenne smile (6, 7, 12) |
| Pride | Not listed | Not listed | 6, 12, 24, 53, a straightening of the back and pulling back of the shoulders to expose the chest (Shiota et al., 2003) | 7, 12, 53, participant sits up straight | 53+64 | Head up, eyes down |
| Sadness | 1+15 | 1+15, 4, 17 | 1+4+5 (Ekman et al., 1983) | 4, 43, 54 | 1+4+6+15+17 | Brows knitted, eyes slightly tightened, lip corners depressed, lower lip raised |
| Shame | Not listed | Not listed | 54, 64 (Keltner & Buswell, 1997) | 4, 17, 54 | 54+64 | Head down, eyes down |
| Surprise | 1+2+5+25 or 26 | 1+2+5+25 or 26 | 1+2+5+26 (Ekman et al., 1983) | 1, 2, 5, 25, 26 or 27 | 1+2+5+25+26 | Eyebrows raised, upper eyelid raised, lips parted, jaw dropped |

Note. Darwin’s description taken from Matsumoto et al. (2008), Table 13.1. International core patterns (ICPs) refer to expressions of 22 emotion categories that are thought to be conserved across cultures, taken from Cordaro et al. (2017), Tables 4, 5 and 6. A plus sign means “with”; these action units would appear simultaneously. A comma means “sometimes with”; these action units are statistically the most probable to appear, but do not necessarily need to happen simultaneously (David Cordaro, personal communication, 11/11/2018).

By contrast, other scientific frameworks propose that expressions of the same emotion category, such as anger, substantially vary by design, in a way that is tied to the immediate context, which includes the internal context (e.g., the person’s metabolic condition, the past experiences that come to mind, etc.) and the outward context (e.g., whether a person is at work, at school, or at home, who else is present, the broader cultural conditions, etc.), both of which vary in dynamic ways over time (see Box 2, SOM). These debates, while useful to scientists, provide little clear guidance for consumers of emotion research who are focused on the practical issue of whether various emotion categories are expressed with facial configurations of sufficient regularity and distinctiveness so that it is possible to read emotion in a person’s face.

The common view of emotional expressions persists, too, because scientists’ actions often don’t follow their claims in a transparent, straightforward way. Many scientists continue to design experiments, use stimuli and publish review papers that, ironically, leave readers with the impression that certain emotion categories each have a single, unique facial expression, even as those same scientists acknowledge that every emotion category can be expressed with a variable set of facial movements. Published studies typically test the hypothesis that there are unique emotion-expression links (for examples, see the reference lists in Elfenbein & Ambady, 2002; Matsumoto, Keltner, Shiota, O’Sullivan & Frank, 2008; Keltner, Sauter, Tracy & Cowen, in press; also see most of the studies reviewed in this paper, e.g., Cordaro, Sun, Keltner, Kamble, Huddar, & McNeil, 2017). The exact facial configuration tested varies slightly from study to study, but a core facial configuration is still assumed (see Table 1 for examples). This pattern of testing the hypothesis that instances of one emotion category are expressed with a single core facial configuration reinforces (perhaps unintentionally) the common view that each emotion category is consistently and uniquely expressed with its own distinctive configuration of facial movements. Review articles (again, perhaps unintentionally) reinforce the impression of unique face-emotion mappings by including tables and figures that display a single, unique facial configuration for each emotion category, referred to as the expression, signal or display for that emotion (Figure 2 presents two recent examples).6 Consumers of this research then assume that a distinctive configuration can be used to diagnose the presence of the corresponding emotion (e.g., that a scowl indicates the presence of anger).

Figure 2. Example figures from recently published papers that reinforce the common belief in diagnostic facial expressions of emotion.

A. Adapted from Cordaro et al. (in press), Table 1, with permission. Face photos © Dr. Lenny Kristal. B. Shariff & Tracy, 2011, Figure 2, with permission.

The common view of emotional expressions has also been imported into other scientific disciplines with an interest in understanding emotions, such as neuroscience and artificial intelligence (AI). For example, from a published paper on AI:

“American psychologist Ekman noticed that some facial expressions corresponding to certain emotions are common for all the people independently of their gender, race, education, ethnicity, etc. He proposed the discrete emotional model using six universal emotions: happiness, surprise, anger, disgust, sadness and fear.” (Brodny, Kolakowska, Landowska, Szwoch, Szwoch, & Wróbel, 2016, p. 1, italics in the original)

Similar examples come from our own papers. One series of papers focused on the brain structures involved in perceiving emotions from facial configurations (Adolphs, 2002; Adolphs et al., 1994) and the other focused on early life experiences (Pollak et al., 2000; Pollak & Kistler, 2002). These papers were framed in terms of “recognizing facial expressions of emotion” and exclusively presented participants with specific, posed photographs of scowling faces (the presumed facial expression for anger), wide-eyed gasping faces (the presumed facial expression for fear), and so on. Participants were shown faces of different individuals all posing the same facial configuration for each emotion category, ignoring the importance of context. One reason for this flawed approach to investigating the perception of emotion from faces was that then (at the time these studies were conducted), as now, published experiments, review articles, and stimulus sets were dominated by the common view that certain emotion categories were signaled with an invariant set of facial configurations, referred to as “facial expressions of basic emotions.”

In this paper, we review the scientific evidence that directly tests two beliefs that form the common view of emotional expressions: that certain emotion categories are each routinely expressed by a unique facial configuration and, correspondingly, that people can reliably infer someone else’s emotional state from a set of facial movements. Our discussion is written for consumers of emotion research, whether they be scientists in other fields or non-scientists, who need not have deep knowledge of the various theories, debates, and broad range of findings in the science of emotion, with sufficient pointers to those discussions if they are of interest (see Box 2, SOM).

In discussing what this paper is about (the common view that a person’s inner emotional state is revealed in facial movements), it bears mentioning what this paper is not about: this paper is not a referendum on the “basic emotion” view we briefly mentioned earlier in this section, proposed by the psychologist Paul Ekman and his colleagues, or on any other research program or psychologist’s view. Ekman’s theoretical approach has been highly influential in research on emotion for much of the past 50 years. We often cite studies inspired by the basic emotion approach for this reason. In addition, the common view of emotional expressions is most readily associated with a simplified version of the basic emotion approach, as exemplified by the quotes above. Critiques of Ekman’s basic emotion view (and related views) are numerous (e.g., Barrett, 2006a, 2007, 2011; Ortony & Turner, 1990; Russell, 1991, 1994, 1995), as are rejoinders that defend it (e.g., Ekman, 1992, 1994; Izard, 2007). Our paper steps back from this dialogue. We instead take as our focus the existing research on emotional expression and emotion perception and ask whether it is sufficiently strong to justify the way it is increasingly being used by those who consume it.

A Systematic Approach for Evaluating the Scientific Evidence

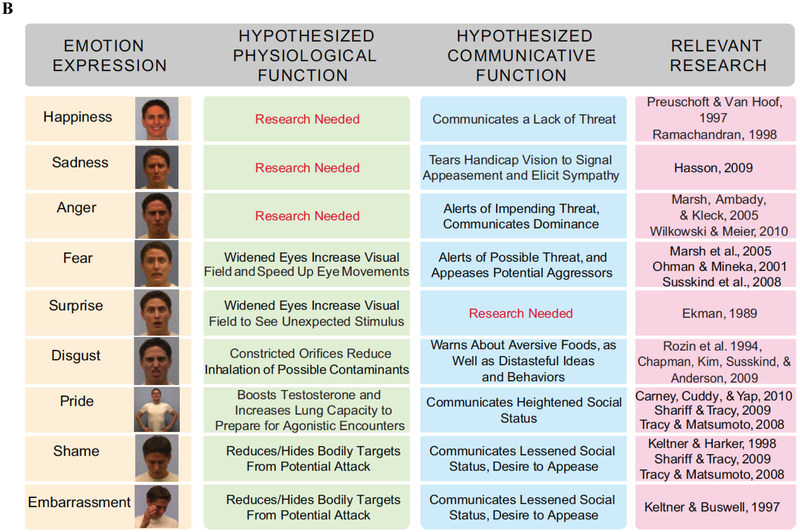

When you see someone smile and infer that the person is happy, you are making what is known as a reverse inference: you are assuming that the smile reveals something about the person’s emotional state that you cannot access directly (see Figure 3). Reverse inference requires calculating a conditional probability: the probability that a person is in a particular emotion episode (such as happiness) given the observation of a unique set of facial muscle movements (such as a smile). The conditional probability is written as:

p[emotion category ∣ set of facial muscle movements]

for example,

p[happiness ∣ smile]

Figure 3. Evaluation criteria: Reliability and specificity in relation to forward and reverse inference.

Anger and fear are used as the example categories.

Reverse inferences about emotion are ubiquitous in everyday life – whenever you experience someone as emotional, your brain has performed a reverse inference, guessing at the cause of a facial movement when only having access to the movement itself. Every time an app on a phone or computer measures someone’s facial muscle movements, identifies a facial configuration such as a frowning facial configuration, and proclaims that the target person is sad, that app has engaged in reverse inference, such as:

p[sadness ∣ frowning facial configuration]

Whenever a security agent infers anger from a scowl, the agent has assumed a strong likelihood for

p[anger ∣ scowling facial configuration]

Four criteria must be met to justify a reverse inference that a particular facial configuration expresses and therefore reveals a specific emotional state: reliability, specificity, generalizability and validity (explained in Table 2 and Figure 3). These criteria are commonly encountered in the field of psychological measurement and over the last several decades there has been an ongoing dialogue about thresholds for these criteria as they apply in production and perception studies, with some consensus emerging for the first three criteria (see Haidt & Keltner, 1999). Only when a pattern of facial muscle movements strongly satisfies these four criteria can we justify calling it an “emotional expression.” If any of these criteria are not met, then we should instead refer to a facial configuration with more neutral, descriptive terms without making unwarranted inferences, simply calling it a smile (rather than an expression of happiness), a frown (rather than an expression of sadness), a scowl (rather than an expression of anger), and so on.7
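The relation between a forward inference, p[set of facial muscle movements ∣ emotion category], and a reverse inference, p[emotion category ∣ set of facial muscle movements], can be illustrated with Bayes' rule. The short Python sketch below is offered only as an illustration and is not an analysis from the studies reviewed here. The function name `reverse_inference` and all numerical values are hypothetical, chosen to show how the base rate of an emotion and the rate of the same facial configuration in other states jointly limit what can be inferred from a face.

```python
def reverse_inference(p_face_given_emotion, p_emotion, p_face_given_other):
    """Bayes' rule: p[emotion | face] from a forward probability and base rates.

    p_face_given_emotion: forward inference, p[face | emotion]
    p_emotion:            base rate of the emotion category, p[emotion]
    p_face_given_other:   rate of the same facial configuration when the
                          person is NOT in that emotion category
    """
    # Total probability of observing the facial configuration at all.
    p_face = (p_face_given_emotion * p_emotion
              + p_face_given_other * (1.0 - p_emotion))
    return p_face_given_emotion * p_emotion / p_face


# Hypothetical values, for illustration only (not estimates from the literature).
p_scowl_given_anger = 0.30      # people scowl in 30% of anger instances
p_anger = 0.10                  # anger is present 10% of the time
p_scowl_given_not_anger = 0.10  # people scowl 10% of the time when not angry

posterior = reverse_inference(p_scowl_given_anger, p_anger, p_scowl_given_not_anger)
print(f"p[anger | scowl] = {posterior:.2f}")
```

With these invented numbers, scowling is three times more likely during anger than otherwise, yet the reverse inference p[anger ∣ scowl] comes out at only about 0.25, because most scowls occur when the person is not angry. Reliability of the forward inference alone does not license a confident reverse inference.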

Table 2:

Criteria used to evaluate the empirical evidence

| Expression Production | Emotion Perception | |

|---|---|---|

| Reliability | When a person is sad, the proposed expression (a frowning facial configuration) should be observed more frequently than would be expected by chance. Likewise, for every other emotion category that is subject to a commonsense belief. Reliability is related to a forward inference: given that someone is happy, what is the likelihood of observing a smile, p[set of facial muscle movements ∣ emotion category]. | When a person makes a scowling facial configuration, perceivers should consistently infer that the person is angry. Likewise, for every facial configuration that has been proposed as the expression of a specific emotion category. That is, perceivers must consistently make a reverse inference: given that someone is scowling, what is the likelihood that he is angry, p[emotion category ∣ set of facial muscle movements]. |

| | Chance means that facial configurations occur randomly with no predictable relationship to a given emotional state. This would mean that the facial configuration in question carries no information about the presence or absence of an emotion category. For example, in an experiment that observes the facial configurations associated with instances of happiness and anger, chance levels of scowling or smiling would be 50%. | Chance means that emotional states occur randomly with no predictable relationship to a given facial configuration. This would mean that the presence or absence of an emotion category cannot be inferred from the presence or absence of the facial configuration. For example, in an experiment that observes how people perceive 51 different facial configurations, chance levels for correctly labeling a scowling face as anger would be 2%. |

| | Reliability also depends on the base rate: how frequently people make a particular facial configuration overall. For example, if a person frequently makes a scowling facial configuration during an experiment examining the expressions of anger, sadness and fear, he will seem to be consistently scowling in anger when in fact he is scowling indiscriminately. | Reliability also depends on the base rate: how frequently people use a particular emotion label or make a particular emotional inference. For example, if a person frequently labels facial configurations as “angry” during an experiment examining scowling, smiling and frowning faces, she will seem to be consistently perceiving anger when in fact she is labeling indiscriminately. |

| | Reliability rates between 70% and 90% provide strong evidence for the commonsense view, between 40% and 69% provide moderate support for the commonsense view, and between 20% and 39% provide weak support (Ekman, 1994; Haidt & Keltner, 1999; Russell, 1994). | Reliability rates between 70% and 90% provide strong evidence for the commonsense view, between 40% and 69% provide moderate support for the commonsense view, and between 20% and 39% provide weak support (Ekman, 1994; Haidt & Keltner, 1999; Russell, 1994). |

| Specificity | If a facial configuration is diagnostic of a specific emotion category, then the facial configuration should express instances of one and only one emotion category better than chance; it should not consistently express instances of any other mental event (emotion or otherwise) at better than chance levels. For example, to be considered the expression of anger, a scowling facial configuration must not express sadness, confusion, indigestion, an attempt to socially influence, etc. at better than chance levels. | If a frowning facial configuration is perceived as the diagnostic expression of sadness, then a frowning facial configuration should only be labeled as sadness (or sadness should only be inferred from a frowning facial configuration) at above chance levels. And it should not be consistently perceived as expressions of any mental states other than sadness at better than chance levels. |

| | Estimates of specificity, like reliability, depend on base rates and on how chance levels are defined. | Estimates of specificity, like reliability, depend on base rates and on how chance levels are defined. |

| Generalizability | Patterns of reliability and specificity should replicate across studies, particularly when different populations are sampled, such as infants, congenitally blind individuals and individuals sampled from diverse cultural contexts, including small-scale, remote cultures. High generalizability across different circumstances ensures that scientific findings are generalizable. | Patterns of reliability and specificity should replicate across studies, particularly when different populations are sampled, such as infants, congenitally blind individuals and individuals sampled from diverse cultural contexts, including small-scale, remote cultures. High generalizability across different circumstances ensures that scientific findings are generalizable. |

| Validity | Even if a facial configuration is consistently and uniquely observed in relation to a specific emotion category across many studies (strong generalizability), it is necessary to demonstrate that the person in question is really in the expected emotional state. This is the only way that a given facial configuration leads to accurate inferences about a person’s emotional state. A facial configuration is valid as a display or a signal for emotion if and only if it is strongly associated with other measures of emotion, preferably those that are objective and do not rely on anyone’s subjective report (i.e., a facial configuration should be strongly and consistently related to perceiver-independent evidence about the emotional state of the expresser). | Even if a facial configuration is consistently and uniquely labeled with a specific emotion word across many studies (strong generalizability), it is necessary to demonstrate that the person making the facial configuration is really in the expected emotional state. This is the only way that a given perception or inference of emotion is accurate. A perceiver can be said to be recognizing an emotional expression if and only if the person being perceived is verifiably in the expected emotional state. |

Note: Reliability is also related to sensitivity, consistency, informational value, and the true positive rate (for further description, see Figure 3). Specificity is related to uniqueness, discreteness, the true negative rate and referential specificity. In principle, we can also ask more parametrically whether there is a link between the intensity of an emotional instance and the intensity of facial muscle contractions, but scientists rarely do.
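The forward and reverse inferences defined in the table can be made concrete with a small worked example. The sketch below is illustrative only: the observation counts are invented, the function names are our own, and the probabilities are simple relative frequencies rather than estimates from any published study.

```python
# Hypothetical observations as (emotion category, facial configuration) pairs.
# The counts are invented for illustration and are not data from any study.
observations = (
    [("anger", "scowl")] * 30 + [("anger", "other")] * 70
    + [("sadness", "scowl")] * 20 + [("sadness", "other")] * 80
    + [("fear", "scowl")] * 25 + [("fear", "other")] * 75
)


def forward_reliability(obs, emotion, face):
    """Estimate p[face | emotion] as a relative frequency."""
    faces = [f for e, f in obs if e == emotion]
    return faces.count(face) / len(faces)


def reverse_inference(obs, emotion, face):
    """Estimate p[emotion | face] as a relative frequency."""
    emotions = [e for e, f in obs if f == face]
    return emotions.count(emotion) / len(emotions)


def base_rate(obs, face):
    """Overall frequency of a facial configuration, ignoring emotion category."""
    return sum(1 for _, f in obs if f == face) / len(obs)


p_scowl_given_anger = forward_reliability(observations, "anger", "scowl")
p_anger_given_scowl = reverse_inference(observations, "anger", "scowl")
p_scowl = base_rate(observations, "scowl")

print(f"p[scowl | anger] = {p_scowl_given_anger:.2f}")
print(f"p[anger | scowl] = {p_anger_given_scowl:.2f}")
print(f"base rate of scowling = {p_scowl:.2f}")
```

With these invented counts, scowling occurs in 30% of anger instances but also in 25% of all observations, so a scowl is only slightly more frequent during anger than its base rate would predict.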

The Null Hypothesis and the Role of Context

Tests of reliability, specificity, generalizability and validity are almost always compared to what would be expected by sheer chance, if facial configurations (in studies of expression production) and inferences about facial configurations (in studies of emotion perception) occurred randomly with no relation to particular emotional states. In most studies, chance levels constitute the null hypothesis. An example of the null hypothesis for reliability is that people do not scowl when angry more frequently than would be expected by chance.8 If people are observed to scowl more frequently when angry than they would by chance, then the null hypothesis can be rejected based on the reliability of the findings. We can also test the null hypothesis for specificity: If people scowl more frequently than they would by chance not only when angry but also when fearful, sad, confused, hungry, etc., then the null hypothesis for specificity is retained.9
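A test against chance of the kind described here can be sketched as a one-sided exact binomial test. This is one possible formalization, not the procedure used in any particular study: the function name is our own, the counts are hypothetical, and the chance level of 1/51 is borrowed from the 51-configuration example in the table above.

```python
from math import comb


def binomial_p_value(successes, trials, chance):
    """One-sided exact binomial test: P(X >= successes) if the true rate equals chance."""
    return sum(
        comb(trials, k) * chance**k * (1 - chance) ** (trials - k)
        for k in range(successes, trials + 1)
    )


# Hypothetical perception study with 51 response options, so chance is 1/51 (about 2%).
chance = 1 / 51

# Hypothetical result: a scowling face is labeled "anger" on 8 of 100 trials.
p_value = binomial_p_value(successes=8, trials=100, chance=chance)
print(f"p-value against chance = {p_value:.4f}")
```

A small p-value here would only reject the null hypothesis. In this hypothetical case, 8 of 100 responses would still fall well below the 20% threshold for weak support given in the table.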

In addition to testing hypotheses about reliability and specificity, tests of generalizability are becoming more common in the research literature, again using the null hypothesis. Questions about generalizability test whether a finding in one experiment is reproduced in other experiments in different contexts, using different experimental methods or sampling people from different populations. There are two crucial questions about generalizability when it comes to the production and perception of emotional expressions: Do the findings from a laboratory experiment generalize to observations in the real world? And, do the findings from studies that sample participants from Westernized, Educated, Industrialized, Rich and Democratic (WEIRD; Henrich, Heine, & Norenzayan, 2010) populations generalize to people who live in small-scale, remote communities?

Questions of validity are almost never addressed in production and perception studies. Even if reliable and specific facial movements are observed across generalizable circumstances, it is a difficult and unresolved question as to whether these facial movements can justify an inference about a person’s emotional state. We have more to say about this later. In this paper, we evaluate the common view by reviewing evidence pertaining to the reliability, specificity, and generalizability of research findings from production and perception studies.

A focus on rejecting the null hypothesis, defined by what would be expected by chance alone, provides necessary but not sufficient support for the common view of emotional expressions. A slightly above-chance co-occurrence of a facial configuration and instances of an emotion category, such as scowling in anger (for example, a correlation coefficient around r = .20 to .39; adapted from Haidt & Keltner, 1999), suggests that a person sometimes scowls in anger, but not most or even much of the time. Weak evidence for reliability suggests that other factors not measured in the experiment are likely causing people to scowl during an instance of anger. It also suggests that people may express anger with facial configurations other than a scowl, possibly in reliable and predictable ways. Following common usage, we refer to these unmeasured factors collectively as context. A similar situation can be described for studies of emotion perception: when participants label a scowling facial configuration as “anger” in a weakly reliable way (between 20 and 39 percent of the time; Haidt & Keltner, 1999), then this suggests the possibility of unmeasured context effects.
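The bands of support quoted above can be written as a simple lookup. This is a minimal sketch: the function name is our own, and the handling of rates above 90%, below 20%, or between the stated bands (for example, 69.5%) is an assumption, because the source gives only the three ranges.

```python
def support_level(rate):
    """Map a reliability rate (a proportion from 0 to 1) to a band of support.

    Bands follow the ranges quoted in the text (20-39%, 40-69%, 70-90%).
    Treating every rate at or above 70% as "strong" and closing the gaps
    between bands are assumptions made for this illustration.
    """
    if rate >= 0.70:
        return "strong"
    if rate >= 0.40:
        return "moderate"
    if rate >= 0.20:
        return "weak"
    return "below the weak band"


for example_rate in (0.75, 0.55, 0.30, 0.08):
    print(f"{example_rate:.0%}: {support_level(example_rate)}")
```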

In principle, context effects make it possible to test the common view by comparing it directly to an alternative hypothesis that a person’s brain will be influenced by other causal factors (as opposed to comparing the findings to random chance). It is possible, for example, that a state of anger is expressed differently depending on various factors that can be studied, including the situational context (such as whether a person is at work, at school, or at home), social factors (such as who else is present in the situation and the relationship between the expresser and the perceiver), the person’s internal physical context (based on how much sleep they had, how hungry they are, etc.), a person’s internal mental context (such as the past experiences that come to mind or the evaluations they make), the temporal context (what just occurred a moment ago), differences between people (such as whether someone is male or female, warm or distant), and the cultural context, such as whether the expression is occurring in a culture that values the rights of individuals (vs. group cohesion), is open and allows for a variety of behaviors in a situation (vs. closed, having more rigid rules of conduct). Other theoretical approaches offer some of these specific alternative hypotheses (see Box 2 in SOM). In practice, however, experiments almost always test the common view against the null hypothesis for reliability and specificity and rarely test specific alternative hypotheses. When context is acknowledged and studied, it is usually examined as a factor that might moderate a common and universal emotional expression, preserving the core assumptions of the common view (e.g., Cordaro et al., 2017; for more discussion, see Box 3, SOM).

A Focus on Six Emotion Categories: Anger, Disgust, Fear, Happiness, Sadness and Surprise

Our critical examination of the research literature in this paper focuses primarily on testing the common view of facial expressions for six emotion categories -- anger, disgust, fear, happiness, sadness and surprise. We do not include a discussion of every emotion category ever studied in the science of emotion. We do not discuss the many emotion categories that exist in non-English speaking cultures, such as gigil, the irresistible urge to pinch or squeeze something cute, or liget, exuberant, collective aggression (for discussion of non-English emotion categories, see Mesquita & Frijda, 1992; Pavlenko, 2014; Russell, 1991). We do not discuss the various emotion categories that have been documented throughout history (e.g., Smith, 2016). Nor do we discuss every English emotion category for which a prototypical facial expression has been suggested. For example, recent studies motivated primarily by the basic emotion approach have suggested that there are “more than six distinct facial expressions …in fact, upwards of 20 multimodal expressions” (Keltner et al., in press, pg. 4), meaning that scientists have proposed a prototypic facial configuration as the facial expression for each of twenty or so emotion categories, including confusion, embarrassment, pride, sympathy, awe, and so on.

The reasons for our focus on six emotion categories are twofold. First, the anger, disgust, fear, happiness, sadness and surprise categories anchor common beliefs about emotions and their expressions (as is evident from Box 4, in SOM) and therefore represent the clearest, strongest test of the common view. Second, these six emotion categories have been the primary focus of systematic research for almost a century and therefore provide the largest corpus of scientific evidence that can be evaluated. Unfortunately, the same cannot be said for any of the other emotion categories in question. This is a particularly important point when considering the twenty plus emotion categories that are now the focus of research attention. A PsycInfo search for the term “facial expression” combined with “anger, disgust, fear, happiness, sadness, surprise” produced over 700 entries, but a similar search including “love, shame, contempt, hate, interest, distress, guilt” returned fewer than 70 entries (Duran & Fernandez-Dols, 2018). Almost all cross-cultural studies of emotion perception have focused on just anger, disgust, fear, happiness, sadness and surprise (plus or minus a few) and experiments that measure how people spontaneously move their faces to express instances of emotion categories other than these six remain rare. In particular, there are too few studies that measure spontaneous facial movements during episodes of other emotion categories (i.e., production studies) to conclude anything about reliability and specificity, and there are too few studies of how these additional emotion categories are perceived in small-scale, remote cultures to conclude anything about generalizability. In an era where the generalizability and robustness of psychological findings are under close scrutiny, it seemed prudent to focus on the emotion categories for which there are, by a factor of ten, the largest number of published experiments.
Nonetheless, our discussion, which is based on a sample of six emotion categories, generalizes to emotion categories that have been studied.10

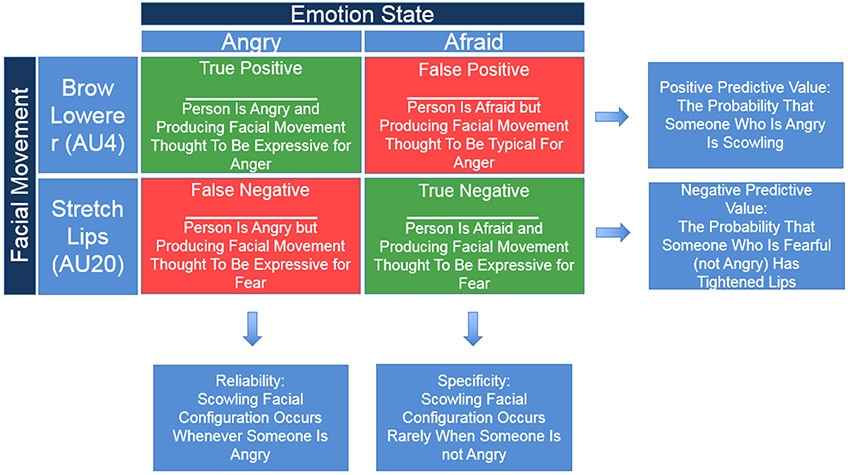

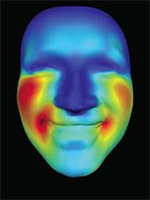

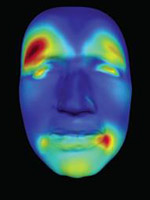

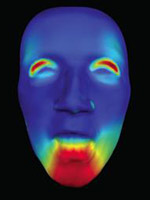

The proposed expressive facial configurations for each emotion category are presented in Figure 4, and the origin of these facial configurations is discussed in Box 4 in SOM. They originated with Charles Darwin, who stipulated (rather than discovered) that certain facial configurations are expressions of certain emotion categories, inspired by photographs taken by Duchenne and drawings made by the Scottish anatomist Charles Bell (Darwin, 1872). These stipulations largely form the basis of the common view of emotional expressions.

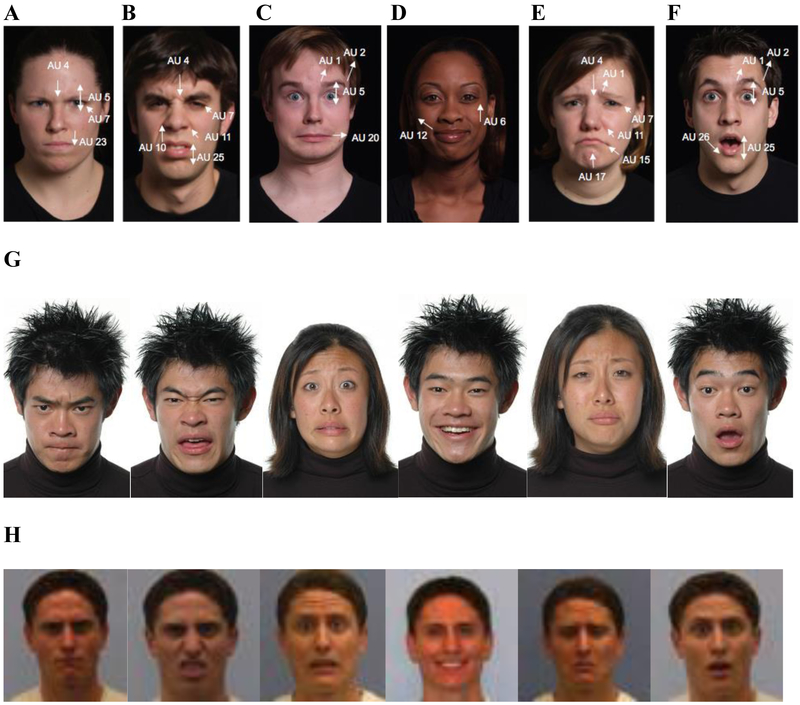

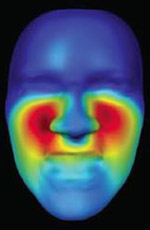

Figure 4. Facial action ensembles for commonsense facial configurations.

Facial action coding system (FACS) codes that correspond to the commonsense expressive configuration in adults. A is proposed expression for anger and corresponds to prescribed EMFACS code for anger (AUs 4, 5, 7, and 23). B is proposed expression for disgust and corresponds to prescribed EMFACS code for disgust (AU 10). C is proposed expression for fear and corresponds to prescribed EMFACS code for fear (AUs 1, 2, and 5 or 5 and 20). D is proposed expression for happiness and corresponds to prescribed EMFACS code for the so-called Duchenne smile (AUs 6 and 12). E is proposed expression for sadness and corresponds to prescribed EMFACS code for sadness (AUs 1, 4, 11 and 15 or 1, 4, 15 and 17). F is proposed expression for surprise and corresponds to prescribed EMFACS code for surprise (AUs 1, 2, 5, and 26). It was originally proposed that infants express emotions with the same facial configurations as adults. Later research revealed morphological differences between the proposed expressive configurations for adults and infants. Only three out of a possible nineteen proposed configurations for negative emotions from the infant coding scheme were the same as the configurations proposed for adults (Oster et al., 1992). G. adapted from Cordaro et al. (in press), Table 1, with permission. Face photos © Dr. Lenny Kristal. H. adapted from Shariff & Tracy, 2011, Figure 2, with permission.
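The AU combinations listed in this caption can be represented as a small lookup structure. The sketch below is an illustration under stated assumptions: the dictionary and function names are our own, the caption's "or" is read as separating two alternative AU sets, and treating a proposed set as matched when all of its AUs are present is a choice made for this example, not a rule taken from EMFACS.

```python
# AU sets transcribed from the Figure 4 caption. Where the caption gives
# alternatives joined by "or", each alternative is stored as its own set
# (our reading of the caption, stated here as an assumption).
PROPOSED_CONFIGURATIONS = {
    "anger": [{4, 5, 7, 23}],
    "disgust": [{10}],
    "fear": [{1, 2, 5}, {5, 20}],
    "happiness": [{6, 12}],
    "sadness": [{1, 4, 11, 15}, {1, 4, 15, 17}],
    "surprise": [{1, 2, 5, 26}],
}


def matching_configurations(observed_aus):
    """Return the categories whose proposed AU set is contained in the observed AUs."""
    observed = set(observed_aus)
    return sorted(
        category
        for category, alternatives in PROPOSED_CONFIGURATIONS.items()
        if any(required <= observed for required in alternatives)
    )


print(matching_configurations({6, 12}))
print(matching_configurations({1, 2, 5, 26}))
```

Under this subset rule, the AUs proposed for surprise (1, 2, 5, and 26) also contain one of the sets proposed for fear (1, 2, and 5), so the second call returns both categories. A match indicates only that a face resembles a proposed configuration; it says nothing about whether the person is in the corresponding emotional state.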

Producing Facial Expressions of Emotion: A Review of the Scientific Evidence

In this section, we first review the design of a typical experiment where emotions are induced and facial movements are measured. This review highlights several observations to keep in mind as we review the reliability, specificity and generalizability for expressions of anger, disgust, fear, happiness, sadness and surprise in a variety of populations, including adults in both urban and small-scale remote cultures, infants and children, and congenitally blind individuals. Our review is the most comprehensive to date and allows us to comment on whether the scientific findings generalize across different populations of individuals. The value of doing so becomes apparent when we observe how similar conclusions emerge from these research domains.

The Anatomy of a Typical Experiment Designed to Observe People’s Facial Movements During Episodes of Emotion

In the typical expression production experiment, scientists expose participants to objects, images or events that they (the scientists) believe will evoke an instance of emotion. It’s possible, in principle, to evoke a wide variety of instances for a given emotion category (e.g., Wilson-Mendenhall et al., 2015), but in practice, published studies evoke the most typical instances of each category, often elicited with a stimulus that is presented without context (e.g., a photograph, a short movie clip separated from the rest of the film, etc.). Scientists usually include some measure to verify that participants are in the expected emotional state (such as asking participants to describe how they feel by rating their experience against a set of emotion adjectives). They then observe participants’ facial movements during the emotional episode and then quantify how well the measure of emotion predicts the observed facial movements. When done properly, this yields estimates of reliability and specificity, and in principle provides data to assess generalizability. There are limitations to assessing the validity of a facial configuration as an expression of emotion, as we explain below.

Measuring facial movements.

Healthy humans have a common set of 17 facial muscle groups on each side of the face that contract and relax in patterns.11 To create facial movements that are visible to the naked eye, facial muscles contract, changing the distance between facial features (Neth & Martinez, 2009) and shaping skin into folds and wrinkles on an underlying skeletal structure. Even when facial movements look the same to the naked eye, there may be differences in their execution under the skin. There are individual differences in the mechanics of making a facial movement, including variation in the anatomical details (e.g., everyone has a slightly different configuration and relative size of the muscles, some people lack certain muscle components, etc.), in the neural control of those muscles (Cattaneo & Pavesi, 2014; Hutto & Vattoth, 2015; Muri, 2015), and in the underlying skeletal structure of the face (discussed in Box 5, in SOM).

There are three common procedures for measuring facial movements in a scientific experiment. The most sensitive, objective measure, called facial electromyography (EMG), detects the electrical activity from actual muscular contractions (again, see Box 5, in SOM). This is a perceiver-independent way of assessing facial movements that detects muscle contractions that are not necessarily visible to the naked eye (Tassinary & Cacioppo, 1992). Facial EMG’s utility is unfortunately offset by its impracticality: facial EMG requires placing electrodes on a participant’s face, which can cause skin abrasions. In addition, a person can typically tolerate only a few electrodes on the face at a time. At the time of writing, there were relatively few published papers using facial EMG (we identified 123 studies), the overwhelming majority of which sparsely sampled the face, measuring the electrical signals for only a small number of muscles (between one and six); none of the studies measured naturalistic facial movements as they occur outside the lab, in everyday life. As a consequence, we focus our discussion on two other measurement methods: a perceiver-dependent method that describes visible facial movements, called facial actions, which uses human coders who indicate the presence or absence of a facial movement while viewing video recordings of participants, and automated methods for detecting facial actions from photographs or videos.

Measuring facial movements with human coders.

The Facial Action Coding System, or FACS (Ekman et al., 2002), is a systematic approach to describe what a face looks like when facial movements have occurred. FACS codes describe the presence and intensity of facial movements. Importantly, FACS is purely descriptive and is therefore agnostic about whether those movements might express emotions or any other mental event.12 Human coders train for many weeks to reliably identify specific movements called “action units” or AUs. Each AU is hypothesized to correspond to the contraction of a distinct facial muscle or a distinct grouping of muscles that is visible as a specific facial movement. For example, the raising of the inner corners of the eyebrows (contracting the frontalis muscle pars medialis) corresponds to AU 1. Lowering of the inner corners of the brows (activation of the corrugator supercilii, depressor glabellae and depressor supercilii) corresponds to AU 4. AUs are scored and analyzed as independent elements, but the underlying anatomy of many facial muscles constrains them so they cannot move independently of one another, generating dependencies between AUs (e.g., see Hao, Wang, Peng, & Ji, 2018). Facial action units (AU) and their corresponding list of facial muscles can be found in Table 3. Expert FACS coders approach inter-rater reliabilities of .80 for individual AUs (Jeni, Cohn, & De la Torre, 2013). The first version of FACS (Ekman & Friesen, 1978) was largely based on the work of Swedish anatomist Carl-Herman Hjortsjö who catalogued the facial configurations described by Duchenne (Hjortsjö, 1969). 
In addition to the updated versions of FACS (Ekman et al., 2002), other facial coding systems have been devised for human infants (Izard et al., 1995; Oster, 2003), chimpanzees (Vick et al., 2007), and macaque monkeys (Parr et al., 2010).13 Figure 4 displays the common FACS codes for the configurations of facial movements that have been proposed as the expression of anger, disgust, fear, happiness, sadness and surprise.
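Agreement between two FACS coders on a single AU can be illustrated with a chance-corrected agreement score. The text reports reliabilities approaching .80 without naming the statistic, so the use of Cohen's kappa here is an assumption, and the codes below are invented for illustration.

```python
def cohens_kappa(coder_a, coder_b):
    """Chance-corrected agreement between two coders' binary codes (1 = AU present).

    Assumes both lists have the same length and that expected agreement is
    below 1 (otherwise the denominator would be zero).
    """
    n = len(coder_a)
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    p_a = sum(coder_a) / n  # proportion of frames coder A marks as present
    p_b = sum(coder_b) / n  # proportion of frames coder B marks as present
    expected = p_a * p_b + (1 - p_a) * (1 - p_b)
    return (observed - expected) / (1 - expected)


# Hypothetical codes for AU 4 across ten video frames.
coder_a = [1, 1, 1, 0, 0, 0, 0, 0, 1, 0]
coder_b = [1, 1, 0, 0, 0, 0, 0, 0, 1, 0]

kappa = cohens_kappa(coder_a, coder_b)
print(f"kappa = {kappa:.2f}")
```

In this invented example the coders agree on nine of ten frames, yet the chance-corrected value is lower than the raw agreement of .90 because some agreement is expected from the base rates alone.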

Table 3:

The Facial Action Coding System (FACS; Ekman & Friesen, 1978) codes for adults

| AU | Description | Facial muscles (type of activation) |
|---|---|---|
| 1 | Inner brow raiser | Frontalis (pars medialis) |
| 2 | Outer brow raiser | Frontalis (pars lateralis) |
| 4 | Brow lowerer | Corrugator supercilii, depressor supercilii |
| 5 | Upper lid raiser | Levator palpebrae superioris |
| 6 | Cheek raiser | Orbicularis oculi (pars orbitalis) |
| 7 | Lid tightener | Orbicularis oculi (pars palpebralis) |
| 9 | Nose wrinkler | Levator labii superioris alaeque nasi |
| 10 | Upper lip raiser | Levator labii superioris |
| 11 | Nasolabial deepener | Zygomaticus minor |
| 12 | Lip corner puller | Zygomaticus major |
| 13 | Cheeks puffer | Levator anguli oris |
| 14 | Dimpler | Buccinator |
| 15 | Lip corner depressor | Depressor anguli oris |
| 16 | Lower lip depressor | Depressor labii inferioris |
| 17 | Chin raiser | Mentalis |
| 18 | Lip puckerer | Incisivii labii superioris and incisivii labii inferioris |
| 20 | Lip stretcher | Risorius w/ platysma |
| 22 | Lip funneler | Orbicularis oris |
| 23 | Lip tightener | Orbicularis oris |
| 24 | Lip pressor | Orbicularis oris |
| 25 | Lips part | Depressor labii inferioris or relaxation of mentalis, or orbicularis oris |
| 26 | Jaw drop | Masseter, relaxed temporalis and internal pterygoid |
| 27 | Mouth stretch | Pterygoids, digastric |
| 28 | Lip suck | Orbicularis oris |
| 41 | Lid droop | |
| 42 | Slit | |
| 43 | Eyes closed | |
| 44 | Squint | |
| 45 | Blink | |
| 46 | Wink | |

Measuring facial movements with automated algorithms.

Human coders require time-consuming, intensive training and practice before they can reliably assign AU codes. After training, it is a slow process to code photographs or videos frame by frame, making human FACS coding impractical to use on facial movements as they occur in everyday life. Large inventories of naturalistic photographs and videos, which have been curated only fairly recently (Benitez-Quiroz et al., 2016), would require decades to manually code. This problem is addressed by automated FACS coding systems using computer vision algorithms (Martinez & Du, 2012; Martinez, 2017; Valstar et al., 2017).14 Recently developed computer vision systems have automated the coding of some (but not all) facial AUs (e.g., Benitez-Quiroz et al., in press; Benitez-Quiroz et al., 2017b; Chu et al., 2017; Corneanu et al., 2016; Essa & Pentland, 1997; Martinez, 2017a; Martinez & Du, 2012; Valstar et al., 2017; see Box 6, SOM), making it more feasible to observe facial movements as they occur in everyday life, at least in principle (see Box 7, SOM). Automated FACS coding is accurate (>90%) when compared to the AU codes from expert human coders, provided that the images were captured under ideal laboratory conditions, where faces are viewed from the front, are well illuminated, are not occluded, and are posed in a controlled way (Benitez-Quiroz et al., 2016). Under ideal conditions, accuracy is highest (~99%) when algorithms are tested and trained on images from the same database (Benitez-Quiroz et al., 2016). The best of these algorithms works quite well when trained and tested on images from different databases (~90%), as long as the images are all taken in ideal conditions (Benitez-Quiroz et al., 2016).
Accuracy (compared to human FACS coding) decreases substantially further when coding facial actions in still images or in video frames taken in everyday life, where conditions are unconstrained and facial configurations are not stereotypical (e.g., Yitzhak et al., 2017).15 For example, 38 automated FACS coding algorithms were recently trained on one million images (the 2017 EmotioNet Challenge; Benitez-Quiroz et al., 2017a) and evaluated against separate test images which were FACS coded by experts.16 In these less constrained conditions, accuracy dropped below 83% and a combined measure of precision and recall (a measure called F1, ranging from zero to one) was below .65 (Benitez-Quiroz et al., 2017a).17 These results indicate that current algorithms are not accurate enough in their detection of facial AUs to fully substitute for expert coders when describing facial movements in everyday life. Nonetheless, these algorithms offer a distinct practical advantage because they can be used in conjunction with human coders to speed up the study of facial configurations in millions of images in the wild. It is likely that automated methods will continue to improve as better and more robust algorithms are developed and as more diverse face images become available.
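The difference between accuracy and F1 can be illustrated by scoring an algorithm's AU codes against expert human codes. This is a minimal sketch with invented codes and a function name of our own; it uses the standard definition of F1 as the harmonic mean of precision and recall and does not reproduce the evaluation protocol of the EmotioNet Challenge.

```python
def detection_metrics(human_codes, algorithm_codes):
    """Score binary AU codes (1 = AU present) from an algorithm against human codes."""
    pairs = list(zip(human_codes, algorithm_codes))
    tp = sum(1 for h, a in pairs if h == 1 and a == 1)  # AU present, detected
    fp = sum(1 for h, a in pairs if h == 0 and a == 1)  # AU absent, detected
    fn = sum(1 for h, a in pairs if h == 1 and a == 0)  # AU present, missed
    tn = sum(1 for h, a in pairs if h == 0 and a == 0)  # AU absent, not detected
    accuracy = (tp + tn) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical codes for one AU across 20 images; the AU is present in only 4.
human = [1, 1, 1, 1] + [0] * 16
algorithm = [1, 1, 0, 0] + [1] + [0] * 15

metrics = detection_metrics(human, algorithm)
for name, value in metrics.items():
    print(f"{name} = {value:.2f}")
```

Because the AU is absent from most of these invented images, the algorithm earns 85% accuracy largely by agreeing that nothing happened, while its F1 is about .57. This is one reason why both measures are reported.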

Measuring an emotional state.

Once an approach has been chosen for measuring facial movements, a clear test of the common view of emotional expressions depends on having valid measures that reliably and specifically characterize the instances of each emotion category in a generalizable way, to which the measurements of facial muscle movements can be compared. The methods that scientists use to assess people’s emotional states vary in their dependence on human inference, however, which raises questions about the validity of the measures.

Relatively objective measures of an emotional instance.

The more objective end of the measurement spectrum includes dynamic changes in the autonomic nervous system (ANS), such as cardiovascular, respiratory or perspiration changes (measured as variations in skin conductance), and dynamic changes in the central nervous system, such as changes in blood flow or electrical activity in the brain. These measures are thought to be more objective because the measurements themselves (the numbers) do not require a human judgment (i.e., the measurements are perceiver-independent). Only the interpretation of the measurements (their psychological meaning) requires human inference. For example, a human observer does not judge whether skin conductance or neural activity increases or decreases; human judgment only comes into play when the measurements are interpreted for the emotional meaning.

Currently, there are no objective measures, either singly or as a pattern, that reliably and uniquely identify one emotion category from another in a replicable way. Statistical summaries of hundreds of experiments, called meta-analyses, show, for example, that there is currently no relationship between an emotion category, such as anger, and a single, specific set of physical changes in the ANS that accompany the instances of that category, even probabilistically (the most comprehensive study published to date is Siegel et al., 2018, but for earlier studies see Cacioppo et al., 2000; Stemmler, 2004; also see Box 8, SOM). In anger, for example, blood pressure can go up, go down, or stay the same (i.e., changes in blood pressure are not consistently associated with anger). And a rise in blood pressure is not unique to instances of anger; it also can occur during a range of other emotional episodes (i.e., changes in blood pressure do not specifically occur in anger and only in anger).18 Individual studies often find patterns of ANS measures that distinguish an instance of one emotion category from another, but those patterns don’t replicate and instead vary across studies, even when studies use the same methods and stimuli, and sample from the same population of participants (e.g., compare findings from Kragel & LaBar, 2013 with Stephens, Christie, & Friedman, 2010). Similar within-category variation is routinely observed for changes in neural activity measured with brain imaging (Lindquist et al., 2012) and single neuron recordings (Guillory & Bujarski, 2014). For example, pattern classification studies discover multivariate patterns of activity across the brain for emotion categories such as anger, sadness, fear, and so on, but these patterns do not replicate from study to study (e.g., Kragel & LaBar, 2015; Saarimäki et al., 2016; Wager et al., 2015; for a discussion, see Clark-Polner et al., 2017).
This observed variation does not imply that biological variability during emotional episodes is random, but rather that it may be context-dependent (e.g., yellow and green zones of Figure 1). It may also be the case that current biological measures are simply insufficiently sensitive or comprehensive to capture situated variation in a precise way. If this is so, then such variation should be considered unexplained, rather than random.

There is a difficult circularity built into these studies that is worth pointing out, and that we encounter again a few paragraphs down: Scientists must use some criterion for identifying when instances of an emotion category are present in the first place (so as to draw conclusions about whether or not emotion categories can be distinguished by different patterns of physical measurements).19 In most studies that attempt to find bodily or neural “signatures” of emotions, the criterion is a subjective one, either reported by the participants or provided by the scientist, which introduces problems of its own, as we discuss in the next section.

Subjective measures of an emotional instance.

Without objective measures to identify the emotional state of a participant, scientists typically rely on the relatively more subjective measures that anchor the other end of the measurement spectrum. The subjective judgments can come from the participants (who complete self-report measures), from other observers (who infer emotion in the participants), or from the scientists themselves (who use a variety of criteria, including commonsense, to infer the presence of an emotional episode). These are all examples of perceiver-dependent measurements because the measurements themselves, as well as their interpretation, directly rely on human inference.

Scientists often rely on their own judgments and intuitions to stipulate when an emotion is present or absent in participants (as Charles Darwin did). For example, snakes and spiders are said to evoke fear. So are situations that involve escaping from a predator. Sometimes scientists stipulate that certain actions indicate the presence of fear, such as freezing or fleeing or even attacking in defense. The conclusions that scientists draw about emotions depend on the validity of their initial assumptions. It is noteworthy that when it comes to emotions, scientists use exactly the same categories as non-scientists, which may give us cause for concern, as forewarned by William James (James, 1890, 1894).20

Inferences about emotional episodes can also come from other people, for example independent samples of study participants, who categorize the situations in which facial movements are observed. Scientists can ask observers to infer when participants are emotional by having them judge participants’ behavior or tone of voice; for example, see our discussion of Camras et al. (2007) in the section on infants and children, below.

A third common strategy to identify the emotional state of participants is to simply ask them what they are experiencing. Their self-reports of emotional experience then become the criteria for deciding whether an emotional episode is present or absent. Self-reports are often considered imperfect measures of emotion because they depend on subjective judgments and beliefs and require translation into words. In addition, a person can be experiencing an emotional event yet be unaware of it and therefore unable to report on it, or may be unable to express how they feel using emotion words, a condition known as alexithymia. Despite questions about their validity, self-reports are the most common measure of emotion that scientists compare to facial AUs.

Human inference and assessing the presence of an emotional state.

At this point, it should be obvious that any measure of an emotional state, to which measurements of facial muscle movements can be compared, itself requires some degree of human inference; what varies is the amount of inference that is required. Herein lies a problem: To properly test the hypothesis that certain facial movements reliably and specifically express emotion, scientists (ironically) must first make a reverse inference that an emotional event is occurring – that is, they infer the emotional instance by observing changes in the body, brain, and behavior (e.g., only if blood pressure consistently and uniquely rises in anger can a rise in blood pressure be used as a marker of anger). Or they infer (a reverse inference) that an event or object evokes an instance of a specific emotion category (e.g., an electric shock elicits fear but not irritation, curiosity, or uncertainty). These reverse inferences are scientifically sound only if measures of emotion reliably, specifically and validly characterize the instances of the emotion category. So, any clear, scientific test of the common view of emotional expressions rests on a set of more basic inferences about whether an emotional episode is present or absent, and any conclusions that come from such a test are only as sound as those basic inferences.

If all measures of emotion (to which measurements of facial muscle movements are compared) rest on human judgment to some degree, then, in principle, this prevents a scientist from being sure that an emotional state is present, which in turn limits the validity of any experiment designed to test whether a facial configuration validly expresses a specific emotion category. All face-emotion associations that are observed in an experiment reflect human consensus, i.e., the degree of agreement between self-judgments (of the participants), expert-judgments (of the scientist), and/or judgments of other observers (of perceivers who are asked to infer emotion in the participants). These types of agreement are often incorrectly referred to as accuracy. We touch on this point again when we discuss studies that test whether certain facial configurations are routinely perceived as expressions of anger, disgust, fear, and so on.

Testing the common view of emotional expressions: Interpreting the scientific observations.

If a specific facial configuration reliably expresses instances of a certain emotion category in any given experiment, then we would expect measurements of the face (e.g., facial AU codes) to co-occur with measurements that indicate that participants are in the target emotional state. In principle, those measures might be more objective, such as ANS changes during an emotional event, or they might be more subjective, deriving from the scientist, from other perceivers who make judgments about the study participants, or from the participants themselves. In practice, however, most experiments compare facial movements to subjective measures of emotion -- a scientist’s judgment about which emotions are evoked by a particular stimulus, perceivers’ judgments about participants’ emotional states, or participants’ self-reports of emotional experience -- because ANS and other more objective measurements do not themselves distinguish one emotion category from another in a reliable and specific way. For example, in an experiment, scientists might ask: Do the AUs that create a scowling facial configuration co-occur with self-reports of feeling angry? Do the AUs that create a pouting facial configuration co-occur with perceivers’ judgments that participants are sad? Do the AUs that create a wide-eyed gasping facial configuration occur when people are exposed to an electric shock? And so on. If such observations suggest that a configuration of muscle movements is reliably observed during episodes of a given emotion category, then those movements are said to express the emotion in question. As we will see, many studies show that some facial configurations occur more often than would be expected by chance, but are not observed with a high degree of reliability (according to the criteria from Haidt & Keltner (1999), outlined in Table 2 and Figure 3).

If a specific facial configuration specifically (i.e., uniquely) expresses instances of a certain emotion category in any given experiment, then we would expect to observe little co-occurrence between measurements of the face and measurements indicating the presence of emotional instances from other categories, except what would be expected by chance (again, see Table 2 and Figure 3). For example, in an experiment, scientists might ask: Do the AUs that create a scowling facial configuration co-occur with self-reports of feeling sad or confused, or with social motives such as dominance? Do the AUs that create a pouting facial configuration co-occur with perceivers’ judgments that participants are angry or afraid? Do the AUs that create a wide-eyed gasping facial configuration occur when people are exposed to a competitor whom they are trying to scare? And so on.
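The two questions above, reliability and specificity, can be made concrete with a small calculation. The following Python sketch is an illustration only: the function name `cooccurrence_rates` and the ten trials of data are hypothetical and are not drawn from any study reviewed here. It treats each trial as a pair of yes/no codes (was the facial configuration observed, and did the criterion measure indicate the target emotion) and computes how often the configuration appears when the emotion is judged present and how often it appears when the emotion is judged absent.

```python
from typing import Dict, Sequence


def cooccurrence_rates(face_present: Sequence[bool],
                       emotion_present: Sequence[bool]) -> Dict[str, float]:
    """Return reliability and false positive rate for one facial configuration.

    reliability         = P(configuration observed | emotion judged present)
    false_positive_rate = P(configuration observed | emotion judged absent)
    """
    if len(face_present) != len(emotion_present):
        raise ValueError("Both sequences must code the same trials.")

    emotion_n = sum(bool(e) for e in emotion_present)
    no_emotion_n = len(emotion_present) - emotion_n
    if emotion_n == 0 or no_emotion_n == 0:
        raise ValueError("Need trials with and without the target emotion.")

    # Trials where the configuration and the emotion criterion co-occur.
    hits = sum(bool(f) and bool(e)
               for f, e in zip(face_present, emotion_present))
    # Trials where the configuration appears without the emotion criterion.
    false_positives = sum(bool(f) and not bool(e)
                          for f, e in zip(face_present, emotion_present))

    return {
        "reliability": hits / emotion_n,
        "false_positive_rate": false_positives / no_emotion_n,
    }


# Hypothetical codes for ten trials (illustrative values, not real data).
scowl = [True, False, False, True, False, True, False, False, True, False]
anger = [True, True, True, False, False, True, True, False, False, False]

rates = cooccurrence_rates(scowl, anger)
print(rates)
```

In this made-up example the scowl appears in the same fraction of trials whether or not anger is reported, so it would carry no diagnostic information about anger, even though it does sometimes co-occur with it. Note that the emotion codes are themselves products of human inference, so both rates describe agreement with a criterion rather than accuracy.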

If a configuration of facial movements is observed in instances of a certain emotion category in a reliable, specific way within an experiment, as hypothesized, then scientists can safely infer that the facial movements in question are an expression of that emotion category’s instances in that situation. One more step is required before we can infer that the facial configuration is the expression of that emotion: we must observe a similar pattern of facial configuration-emotion co-occurrences across different experiments, to some extent generalizing across the specific measures and methods used and the participants and contexts sampled. If the facial configuration-emotion co-occurrences replicate across experiments that sample people from the same culture, then the facial configuration in question can reasonably be referred to as an emotional expression only in that culture; e.g., if a scowling facial configuration co-occurs with measures of anger (and only anger) across most studies conducted on adult participants in the US who are free from illness, then it is reasonable to refer to a scowl as an expression of anger in the US. If facial configuration-emotion co-occurrences generalize across cultures (that is, replicate across experiments that sample a variety of instances of that emotion category in people from different cultures), then the facial configuration in question can be said to universally express the emotion category in question.

Studies of Healthy Adults from the U.S. and Other Developed Nations

We now review the scientific evidence from studies that document how people spontaneously move their facial muscles during instances of anger, disgust, fear, happiness, sadness and surprise, and how they pose their faces when asked to indicate how they express each emotion category. We examine evidence gathered in the lab and in naturalistic settings, sampling healthy adults who live in a variety of cultural contexts. To evaluate the reliability, specificity and generalizability of the scientific findings, we adapted criteria set out by Haidt & Keltner (1999), as discussed in Table 2.

Spontaneous facial movements in laboratory studies.

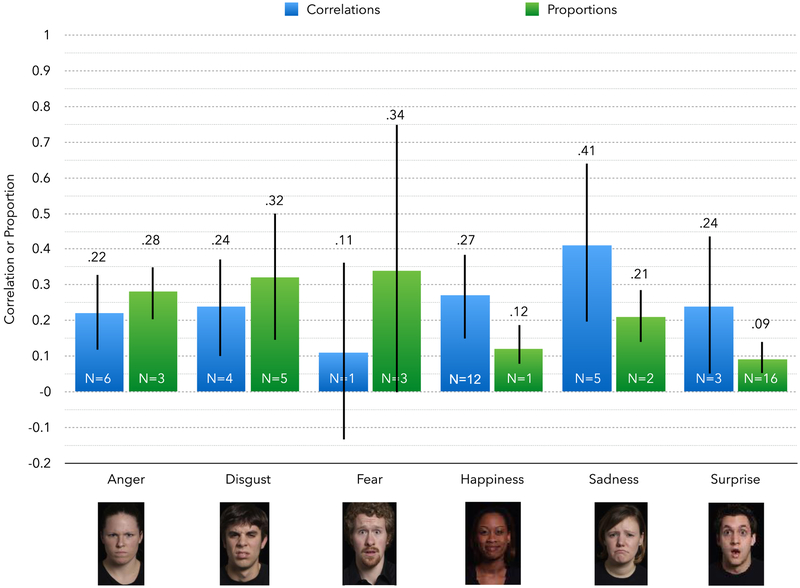

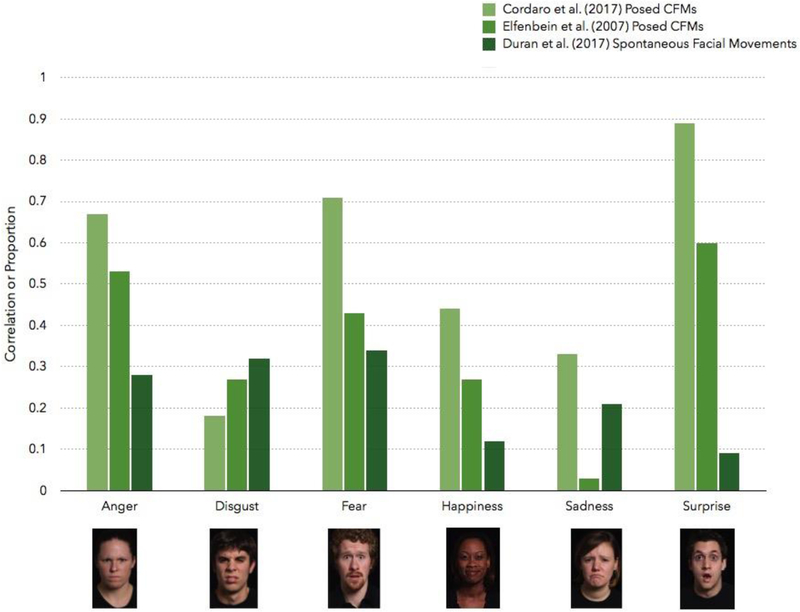

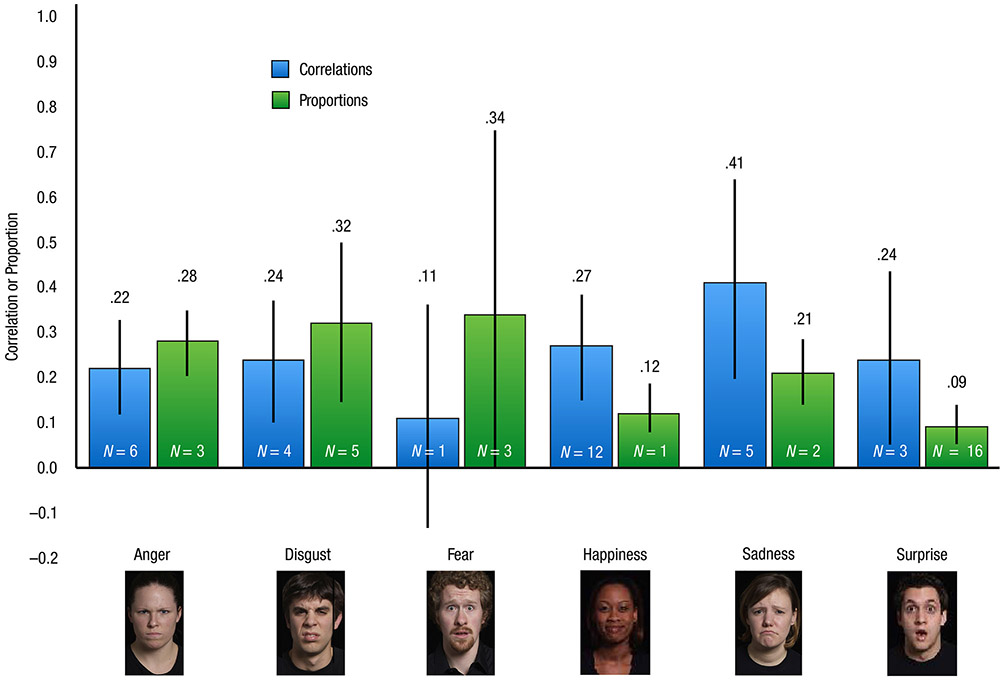

A meta-analysis was recently conducted to test the hypothesis that the facial configurations in Figure 4 co-occur, as hypothesized, with specific emotion categories (Duran et al., 2017). This analysis was published in a book chapter. Thirty-seven published articles reported on how people moved their faces when exposed to objects or events that evoke emotion. Most studies included in the meta-analysis were conducted in the laboratory. The findings from these experiments were statistically summarized to assess the reliability of facial movements as expressions of emotion (see Figure 5). In all emotion categories tested, other than fear, participants moved their facial muscles into the expected configuration more consistently than what we would expect by chance. Consistency levels were weak, however, indicating that the proposed facial configurations in Figure 4 have limited reliability (and to some extent, limited generalizability; i.e., a scowling facial configuration is an expression of anger, but not the expression of anger). More often than not, people moved their faces in ways that were not consistent with the hypotheses of the common view. An expanded version of this meta-analysis (Duran & Fernandez-Dols, 2018) analyzed 89 effect sizes from 47 studies totaling 3599 participants, with similar results: the hypothesized facial configurations were observed, with average effect sizes of r = .32 (for the average correlation between the intensity of a facial configuration and a measure of emotion, with correlations for specific emotion categories ranging from .25 to .38, corresponding to weak evidence of reliability) and proportion = .19 (for the average proportion of the times that a facial configuration was observed during an emotional event, with proportions for specific emotion categories ranging from .15 to .25, interpreted as no evidence to weak evidence of reliability).21

Figure 5. Meta-analysis of facial movements during emotional episodes: A summary of effect sizes across studies (Duran et al., 2017).

Effect sizes are computed as correlations or proportions (as reported in the original experiments). Results include experiments that reported a correspondence between a facial configuration and its hypothesized emotion category and those that reported a correspondence between individual AUs of that facial configuration and the relevant emotion category; meta-analytic summaries for entire ensembles of AUs only (the facial configurations specified in Figure 2) were even lower than those that appear here.
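For readers unfamiliar with how correlations from separate experiments are combined into a single summary effect size, the following Python sketch shows one standard approach: a fixed-effect average of Fisher z-transformed correlations, weighted by n − 3. This is an assumption made for illustration and is not necessarily the procedure used in the meta-analyses cited above; the function name `pooled_correlation` and the three (r, n) pairs are hypothetical.

```python
import math
from typing import Iterable, Tuple


def pooled_correlation(studies: Iterable[Tuple[float, int]]) -> float:
    """Fixed-effect pooled correlation via the Fisher z-transform.

    Each study is an (r, n) pair: a correlation and its sample size.
    Each z = atanh(r) is weighted by n - 3, the inverse of its
    approximate sampling variance.
    """
    weighted_sum = 0.0
    total_weight = 0.0
    for r, n in studies:
        if n <= 3:
            raise ValueError("Each study needs more than 3 participants.")
        if not -1.0 < r < 1.0:
            raise ValueError("Correlations must lie strictly between -1 and 1.")
        weight = n - 3
        weighted_sum += weight * math.atanh(r)
        total_weight += weight
    if total_weight == 0:
        raise ValueError("At least one study is required.")
    # Transform the weighted mean z back to the correlation scale.
    return math.tanh(weighted_sum / total_weight)


# Hypothetical (r, n) pairs, chosen only to illustrate the arithmetic.
hypothetical_studies = [(0.25, 40), (0.38, 60), (0.30, 100)]
pooled = pooled_correlation(hypothetical_studies)
print(f"pooled r = {pooled:.2f}")
```

The pooled value always falls between the smallest and largest input correlations, with larger studies pulling it toward their own estimates.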

No overall assessment of specificity was reported in either the original or the expanded meta-analysis because most published studies do not report the false positive rate (i.e., the frequency with which a facial AU is observed when an instance of the hypothesized emotion category was not present; see Figure 3). Nonetheless, some striking examples of specificity failures have been documented in the scientific literature. For example, a certain smile, called a “Duchenne” smile, is defined in terms of facial muscle contractions (i.e., in terms of facial morphology): it involves movement of the orbicularis oculi, which raises the cheeks and causes wrinkles at the outer corners of the eyes, in addition to movement of the zygomaticus major, which raises the corners of the lips into a smile. A Duchenne smile is thought to be a spontaneous expression of authentic happiness. Research shows, however, that a Duchenne smile can be intentionally produced when people are not happy (Gunnery & Hall, 2014; Gunnery et al., 2013; also see Krumhuber & Manstead, 2009), consistent with evidence that Duchenne smiles often occur when people are signaling submission or affiliation rather than solely reflecting happiness (Rychlowska et al., 2017).

Spontaneous facial movements in naturalistic settings.

Studies of facial configuration-emotion category associations in naturalistic settings tend to yield similar results to studies that were conducted in more controlled laboratory settings (Fernandez-Dols, 2017; Fernandez-Dols & Crivelli, 2013). Some studies observe that people express emotions in real world settings by spontaneously making the facial muscle movements proposed in Figure 4, but such observations do not replicate well across studies (e.g., compare Matsumoto & Willingham, 2006 vs. Crivelli, Carrera, & Fernandez-Dols, 2015; Rosenberg & Ekman, 1994 vs. Fernandez-Dols, Sanchez, Carrera, & Ruiz-Belda, 1997). For example, two field studies of winning judo fighters recently demonstrated that so-called “Duchenne” smiles were better predicted by whether an athlete was interacting with an audience than by the degree of happiness reported after winning their matches (Crivelli, Carrera, & Fernandez-Dols, 2015). Only eight of the 55 winning fighters produced a “Duchenne” smile in Study 1; all occurred during a social interaction. Only 25 out of 119 winning fighters produced a “Duchenne” smile in Study 2, documenting, at best, weak evidence for reliability.
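The counts reported for the two judo studies can be converted to proportions directly: 8/55 is approximately .145 and 25/119 is approximately .210. The Python sketch below performs that arithmetic and, as an added illustration that is not part of the original analyses, attaches an approximate 95% Wilson score interval to each proportion to convey the uncertainty that comes with samples of this size. The function name `wilson_interval` is hypothetical.

```python
import math
from typing import Tuple


def wilson_interval(successes: int, n: int,
                    z: float = 1.96) -> Tuple[float, float, float]:
    """Return (proportion, lower, upper) using the Wilson score interval.

    z = 1.96 corresponds to an approximate 95% interval.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("Require n > 0 and 0 <= successes <= n.")
    p = successes / n
    denom = 1 + z ** 2 / n
    center = (p + z ** 2 / (2 * n)) / denom
    half_width = z * math.sqrt(p * (1 - p) / n + z ** 2 / (4 * n ** 2)) / denom
    return p, center - half_width, center + half_width


# Counts as reported in the text for Crivelli, Carrera, & Fernandez-Dols (2015).
for label, smiles, fighters in [("Study 1", 8, 55), ("Study 2", 25, 119)]:
    p, lower, upper = wilson_interval(smiles, fighters)
    print(f"{label}: {smiles}/{fighters} = {p:.3f} "
          f"(approx. 95% interval {lower:.3f} to {upper:.3f})")
```

Both proportions fall within the .15 to .25 range of category-level proportions reported in the expanded meta-analysis.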

Posed facial movements.