Abstract

Since its earliest days, the field of behavioral medicine has leveraged technology to increase the reach and effectiveness of its interventions. Here, we highlight key areas of opportunity and recommend next steps to further advance intervention development, evaluation, and commercialization, with a focus on three technologies: mobile applications (apps), social media, and wearable devices. Ultimately, we argue that the future of digital health behavioral science research lies in finding ways to advance more robust academic-industry partnerships. Doing so will require academics to consciously prepare and train the 21st-century digital health workforce, to advance methods that balance industry’s need for efficiency with academia’s commitment to rigor and reproducibility, and to establish common practices and procedures that support the ethical promotion of healthy behavior.

Keywords: digital health, mobile applications, social media, wearable technology, behavior change intervention

In 1982, W. Stewart Agras predicted that the field of behavioral medicine would succeed where previous collaborations between behavioral science and medicine had failed. His rationale was behavioral medicine’s emphasis on translational clinical research – applications of basic behavioral science discoveries to clinical interventions that could be delivered in a variety of settings (Agras, 1982). Technology made its mark on behavioral medicine quite early in the field’s development with the emergence of biofeedback. Behavioral medicine was even considered “synonymous” with biofeedback in its earliest years (Blanchard, 1982). Since that time, behavioral medicine has been at the forefront of evidence-based behavioral treatment and prevention for health conditions such as obesity, diabetes, cancer, and cardiovascular disease, to name a few. These interventions have consistently incorporated cutting-edge technology to maximize reach and effectiveness. In this article, we describe uses of technology to deliver behavioral medicine interventions and identify critical methodological and practical challenges to this work going forward, focusing on three technologies: mobile applications (apps), social media, and wearable devices. In particular, we discuss the potential for academic-industry partnerships and identify key points for researchers who would like to bring new digital intervention tools to market or contribute to the improvement of existing tools.

Increasing Reach: Behavioral Interventions in Daily Life

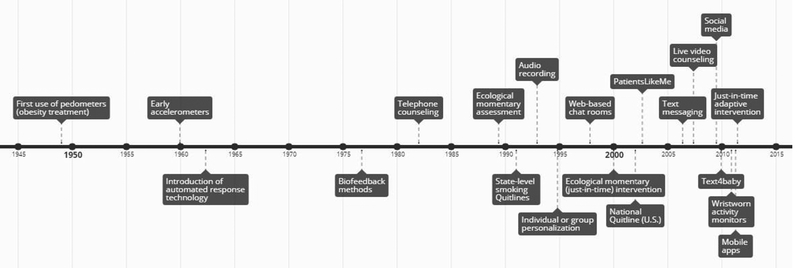

Traditional behavioral interventions were designed to be delivered in face-to-face meetings between a provider and patient(s) or between a public health organization and community member(s). Face-to-face intervention delivery has many advantages, including the opportunity for providers to respond based on physical cues from the patient and the power of social processes such as reinforcement and accountability. However, logistical challenges such as scheduling, travel, childcare, and cost limit the feasibility of face-to-face interventions for many patients (Keefe et al., 2002; Tate & Zabinski, 2004). Below, we briefly describe technologies that have enabled interventions to be delivered remotely (see Figure 1).

Figure 1.

Timeline of key developments in behavioral medicine’s use of technology to support interventions. (Dates approximated from published work.)

Telephone.

Intervention delivery via telephone does not appear to preclude development of a therapeutic alliance (Stiles-Shields et al., 2014), is effective relative to control interventions (e.g., Lichtenstein et al., 1996), and is scalable. Tobacco quitlines represent a large-scale example of effective intervention via telephone (Cummins et al., 2007). Quitlines are funded by government public health agencies and staffed by trained providers. Many vendors offer both reactive call responses (i.e., providers available to take calls from individuals who call in) and proactive call scheduling (i.e., providers initiate calls to individuals referred by healthcare professionals) (Cummins et al., 2007; Lichtenstein, Zhu, & Tedeschi, 2010). State-level quitlines have existed in the U.S. since the early 1990s, with the national quitline (1–800-QUIT-NOW) established in 2004 (Anderson & Zhu, 2007). Available evidence indicates that quitlines are highly effective and reach a diverse population (Lichtenstein, Zhu, & Tedeschi, 2010). Because only 1% of U.S. smokers access quitlines (Keller et al., 2010), there is considerable room to improve the reach of this service.

Cell phones.

Advances in cell phone technology allowed interventions to reach individuals wherever they are, at any time of day. Mobile-delivered interventions may increase access to care, as 95% of U.S. adults own a mobile phone of some kind (Pew Research Center, 2018a). Text messages have been used as stand-alone or adjunctive interventions since the early 2000s and show modest efficacy for improving health behaviors (Fjeldsoe, Marshall, & Miller, 2009; Buckholtz et al., 2013; Head et al., 2013). Because these interventions are also inexpensive, text messaging can be scaled to large segments of the population (Fjeldsoe, Marshall, & Miller, 2009; Cole-Lewis & Kershaw, 2010). As an example, Text4baby is a free text messaging service that provides health information to 1 million pregnant mothers in the U.S. (Whittaker et al., 2012). Text4baby has been shown to improve glycemic control (Grabosch, Gavard, & Mostello, 2014), change attitudes about alcohol intake during pregnancy (Evans, Wallace, & Snider, 2012), and increase positive attitudes about attending prenatal visits and taking prenatal vitamins (Evans et al., 2014).

Smartphones.

More than 75% of U.S. adults own smartphones (Pew Research Center, 2018a). The increasing popularity of the smartphone has shifted a considerable amount of behavioral medicine research to mobile applications (apps) since 2013 (Müller et al., 2018). Although smartphones are most popular among young, college-educated individuals, ownership rates are comparable across racial/ethnic groups, and even the majority of those living in rural areas own smartphones (Pew Research Center, 2018a). Further, 58% of adults use their smartphones for health-related purposes, including tracking health or health behavior via apps (Mack, 2016).

Health apps allow users to engage with health-related content via a variety of media (e.g., videos, text). Many include self-monitoring features (based on active input from the user or passive sensing), gamification strategies (e.g., competition, digital rewards), goal setting and feedback, in-the-moment cues, and/or social features (e.g., social comparison, leaderboards, integrated social networks) (Mendiola, Kalnicki, & Lindenauer, 2015; Payne, Lister, West, & Bernhardt, 2015). Estimates show that more than 24,000 health apps are available in online marketplaces (Dehling, Gao, Schneider, & Sunyaev, 2015). Importantly, however, studies have routinely shown that commercial health apps tend to include very few evidence-based behavior change techniques, and very few have been rigorously tested (Jake-Schoffman et al., 2017). Those that have been tested show mixed effectiveness, and many of the evaluation studies had small sample sizes, lacked optimal comparison groups, and were at risk for biased results (Zhao, Freeman, & Li, 2016). In addition, the expertise necessary to inform the optimal design, development, and testing of health apps spans numerous disciplines (e.g., behavioral science, software engineering, game design) that have not traditionally worked together. Consequently, health apps tend to be weak in whichever areas the development team lacked expertise.

Social media.

About 69% of the U.S. population uses at least one social media site (median = 3 sites per user), and the majority of users visit at least once per day (Pew Research Center, 2018b). The advent of social media platforms has brought with it new opportunities to study and intervene upon health behavior (Pagoto et al., 2016). About 30% of U.S. adults use social media to access health information (Fox, 2017), as do 65% of adults with chronic health conditions (Shaw & Johnson, 2011). Social media platforms provide new opportunities for health organizations and professionals to communicate evidence-based information, counter-message misinformation, and interface with policymakers (Breland et al., 2017; Waring et al., 2018). Social media sites also allow researchers to study knowledge, attitudes, and behavior in new ways, which may lead to new theoretical models of health behavior change (Charles-Smith et al., 2015; Paul et al., 2016).

Online forums in the form of chat rooms first appeared in the 1990s and became widespread in the mid-2000s. These online forums allowed clients to share text-based messages with groups or individuals (Eysenbach et al., 2004; Suls et al., 2006). More recently, large commercial social media platforms such as Facebook and Twitter have allowed users (and providers) to share a variety of types of content, including text, images, GIFs, videos, competitions, and polls (Arigo et al., 2018). General-use sites such as Facebook allow users to create public or private groups and pages, or to send direct messages; sites such as Twitter use hashtags (#) to allow posts to be searchable by topic. Commercial health apps often include social media features, either by integrating with an existing platform (e.g., Facebook) or by connecting app users directly to one another in an app-specific social network. Many weight loss and fitness apps, for example, allow users to follow each other, see each other’s data, create discussion threads, and compete with each other (Rivera et al., 2016; Conroy et al., 2014). Some also include blogs that provide health information to users.

Some social media sites are for specific subgroups of individuals who are similar with respect to diagnoses or health goals. For example, PatientsLikeMe connects over 600,000 users who identify with specific health conditions to one another, and collects patient-reported data for research on patient experiences (Wicks et al., 2018). Users report experiencing myriad benefits from the site, including having a better understanding of their health condition and treatment options as well as improved conversations with healthcare professionals (Wicks et al., 2018), and the site has been useful for tracking behaviors such as off-label use of medication (Frost et al., 2011).

Social media platforms and features also provide new opportunities to recruit participants and deliver interventions. Participant recruitment through sites such as Facebook, Twitter, Craigslist, and specialty networks (e.g., Grindr) shows promise for increasing enrollment in intervention programs among hard-to-reach groups, such as men who have sex with men, transgender people, smokers, and young adults with health conditions (Carter-Harris, Willis, Warrick, & Rawl, 2016; Gorman et al., 2014; Iribarren et al., 2018; Martinez, 2014). Social media recruitment can be accomplished via paid ads, by posting in groups or communities, or, in the case of Twitter, by posting with community-specific hashtags or incentivizing community influencers to post study ads (Arigo et al., 2018). It is not yet clear whether any type of social media recruitment is more cost-effective or results in greater overall yield than traditional recruitment methods; more work is needed to determine the best approaches to recruiting specific populations via social media and to avoid recruiting an unrepresentative sample (Moreno et al., 2017; Topolovec-Vranic & Natarajan, 2016).
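To make the two comparison metrics concrete, the sketch below computes cost per enrolled participant and enrollment yield (the share of screened individuals who enroll) for two recruitment channels. The channel names and every number are hypothetical, chosen only to show that the two metrics can favor different channels; neither metric says anything about how representative the resulting sample is.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Channel:
    """Recruitment results for one channel (all values here are hypothetical)."""
    name: str
    spend: float   # total dollars spent (ads, boosted posts, influencer incentives)
    screened: int  # people who completed eligibility screening
    enrolled: int  # people who enrolled in the study

    def cost_per_enrollee(self) -> float:
        """Dollars spent per enrolled participant."""
        return self.spend / self.enrolled

    def enrollment_yield(self) -> float:
        """Share of screened people who went on to enroll."""
        return self.enrolled / self.screened


channels = [
    Channel("Social media ads", spend=1500.0, screened=200, enrolled=50),
    Channel("Clinic flyers", spend=600.0, screened=40, enrolled=15),
]

for c in channels:
    print(f"{c.name}: ${c.cost_per_enrollee():.2f} per enrollee, "
          f"{c.enrollment_yield():.1%} of screened enrolled")
```

With these invented figures, the ads are cheaper per enrollee ($30 vs. $40) while the flyers convert a larger share of screened people (37.5% vs. 25%), which is one reason a single "cost-effectiveness" number can mislead.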

Delivering behavioral interventions via social media reduces or eliminates barriers such as scheduling, transportation, and childcare conflicts, because individuals can participate anywhere and at any time of day (Arigo et al., 2018). The frequency with which individuals view their social media feeds, and the ease of accessing social media via computer or mobile device, also facilitate real-time intervention (Pagoto et al., 2016). Depending on the intervention design, individuals can access provider-developed intervention content, connect with other intervention participants, create and share their own content, and ask for help whenever they desire or feel in need of support (Maher et al., 2014). Recent systematic reviews reveal promise for social media-delivered interventions (Ashrafian et al., 2014; Han, Lee, & Demiris, 2018).

Increasing Effectiveness: Personalization, Tailoring, and Adaptation

Advances in technology also provide new opportunities to improve intervention effectiveness. In the 1960s, information processing technology afforded by computers introduced two new paths for remote intervention. The first allowed for personalizing behavior change materials to specific groups or to individuals, based on demographic information (e.g., age, race) or personal characteristics (e.g., stage of change). Participant characteristics are fed into a computerized algorithm to determine the optimal content combination(s) for that individual (Kreuter, Strecher, & Glassman, 1999). While this approach allowed content to be individualized based on specific participant characteristics, it did not take into account dynamic changes within an individual over time. Because so many factors vary within a person over time (e.g., marital status, employment, income, stress, weight, behavior), personalizing based on a snapshot of individual characteristics will inevitably be limited in precision.
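The first path can be illustrated with a few simple rules that select content from characteristics captured once at baseline. The stage-of-change labels, message text, and age threshold below are hypothetical placeholders, and real tailoring systems (Kreuter, Strecher, & Glassman, 1999) use richer algorithms. Because the profile is a single snapshot, the selected content cannot respond to later changes in the person, which is the limitation described above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Profile:
    """Hypothetical baseline snapshot of a participant."""
    age: int
    stage_of_change: str  # "precontemplation", "contemplation", "preparation", "action", "maintenance"


# Hypothetical message library keyed by stage of change.
STAGE_MESSAGES = {
    "precontemplation": "Here is how inactivity affects long-term health.",
    "contemplation": "Weighing pros and cons? Here are benefits people often report.",
    "preparation": "Let's set a small, specific goal for this week.",
    "action": "Great progress. Here are tips for handling setbacks.",
    "maintenance": "Keep it up. Plan ahead for busy weeks.",
}
OLDER_ADULT_MESSAGE = "Options for low-impact activity are included below."


def tailor(profile: Profile) -> list[str]:
    """Select message content from static baseline characteristics only."""
    messages = [STAGE_MESSAGES[profile.stage_of_change]]
    if profile.age >= 65:  # hypothetical demographic rule
        messages.append(OLDER_ADULT_MESSAGE)
    return messages
```

For example, `tailor(Profile(age=70, stage_of_change="preparation"))` returns the goal-setting message plus the low-impact message, and it will keep returning the same content until someone re-enters the profile.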

The second path comprised automating and tailoring responses to real-time individual input, allowing technology-based interventions to (1) intervene in real time, and (2) adapt to an individual’s changing characteristics over time. An early albeit rudimentary example of such automation and tailoring was Colby, Wyatt, and Gilbert’s (1966) computerized psychotherapy, for which a client could type sentences and receive automated responses that were determined by key words or phrases. Similar principles apply in automated and tailored responses via modern technology such as websites (Lustria et al., 2009), email (Lustria et al., 2013), and text messages (Patrick et al., 2009) and are increasingly common in commercial settings with the proliferation of chatbots.
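A minimal sketch of keyword-driven automated responses is shown below. It is not a reconstruction of Colby, Wyatt, and Gilbert’s (1966) system; the patterns and replies are hypothetical and serve only to show how the first matching key word or phrase determines the response, with a generic fallback when nothing matches.

```python
import re

# Hypothetical keyword rules: the first pattern that matches the client's
# input determines the automated reply.
RULES = [
    (re.compile(r"\b(craving|urge)s?\b", re.IGNORECASE),
     "Urges usually pass within minutes. What could you do right now instead?"),
    (re.compile(r"\b(stress|stressed|anxious)\b", re.IGNORECASE),
     "It sounds like you're under pressure. What has helped you cope before?"),
    (re.compile(r"\b(slip|slipped|relapse)\b", re.IGNORECASE),
     "A slip is not a failure. What can you learn from what happened?"),
]
DEFAULT_REPLY = "Tell me more about that."


def respond(text: str) -> str:
    """Return the reply for the first matching keyword rule, else a default prompt."""
    for pattern, reply in RULES:
        if pattern.search(text):
            return reply
    return DEFAULT_REPLY
```

Rule order matters in this design: an input mentioning both stress and a craving receives the craving reply because that rule is checked first. Modern chatbots typically replace fixed patterns with statistical language models, but the input-to-response mapping plays the same role.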

Tailoring based on real-time data requires valid and reliable real-time measures of behavior, affect, and other factors. In the 1980s, behavioral medicine researchers began to grapple with the limitations of retrospective self-report (Fahrenberg, 1996) by using wristwatch timers, electronic pagers, and personal digital assistants (PDAs) to prompt patients to complete paper surveys and to record survey responses (Stone & Shiffman, 1994). PDAs afforded the advantage of time stamps, allowing researchers and clinicians to verify that assessments were completed when instructed. Such real-time assessment (e.g., ecological momentary assessment [EMA]) enabled ecological momentary intervention (EMI; Heron & Smyth, 2010), also known as just-in-time (JIT) intervention. JIT interventions can be delivered via text message (e.g., Riordan, Conner, Flett, & Scarf, 2015), smartphone apps (e.g., Caroll et al., 2013), and wearable devices.
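The sketch below illustrates two ideas from this paragraph: prompting at random times across the day, and using time stamps to verify that a response was completed when instructed. The stratified scheme (one random prompt per equal-length window), the six prompts per day, and the 15-minute response window are illustrative assumptions, not parameters drawn from the cited studies.

```python
import random
from datetime import datetime, timedelta
from typing import Optional


def schedule_prompts(day_start: datetime, day_end: datetime, n_prompts: int,
                     rng: random.Random) -> list[datetime]:
    """Stratified random sampling: one prompt at a random time within each of
    n_prompts equal-length windows between day_start and day_end."""
    window = (day_end - day_start) / n_prompts
    prompts = []
    for i in range(n_prompts):
        offset_seconds = rng.random() * window.total_seconds()
        prompts.append(day_start + i * window + timedelta(seconds=offset_seconds))
    return prompts


def is_compliant(prompt_time: datetime, response_time: Optional[datetime],
                 max_delay: timedelta = timedelta(minutes=15)) -> bool:
    """A response counts only if its time stamp falls within max_delay after the prompt."""
    if response_time is None:  # prompt was missed entirely
        return False
    return timedelta(0) <= response_time - prompt_time <= max_delay
```

Seeding the random generator (for example, `random.Random(42)`) makes a participant's schedule reproducible for auditing, while still being unpredictable to the participant.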

More recent advances in mobile technology now allow for just-in-time adaptive interventions (JITAIs), which adjust timing and content to meet an individual’s changing needs in context (Nahum-Shani, Hekler, & Spruijt-Metz, 2015). For example, smartphone apps can use current local weather data to inform physical activity recommendations (Klasnja et al., 2017). Many existing JITAIs rely on user-specific input that is self-reported (e.g., mood, social environment, preferences) or gathered from device features such as geolocation (Nahum-Shani et al., 2018). As the capabilities of additional technologies evolve, objective data on users’ behavior and environment can also inform JITAIs. For example, an app can provide prompts to increase walking by monitoring sedentary time via the phone’s accelerometer, adjust the content of the prompts based on the length of time spent sedentary, and provide reward messages for increasing physical activity (Bond et al., 2014; Thomas & Bond, 2015). Apps that provide such interventions can also be integrated with wearable devices that collect behavioral data. Emerging research is exploring how to use the principles of control systems engineering to further optimize adaptive interventions, personalizing the experience of behavior change and sustainability for the individual user (Hekler et al., 2018; Conroy et al., 2018).
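A JITAI is often described in terms of decision points, tailoring variables, and decision rules. The sketch below expresses a decision rule in the spirit of the sedentary-time example above: a reward message if the user walked since the last prompt, otherwise a prompt whose content depends on how long the user has been sedentary. It is not the implementation used by Bond et al. (2014); the 30- and 60-minute thresholds and all message text are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds (minutes of uninterrupted sedentary time).
PROMPT_AFTER_MIN = 30
STRONG_PROMPT_AFTER_MIN = 60


@dataclass(frozen=True)
class State:
    """Tailoring variables available at a decision point."""
    sedentary_minutes: int          # current sedentary bout, e.g., from the phone's accelerometer
    walked_since_last_prompt: bool  # whether activity followed the previous prompt


def decide(state: State) -> Optional[str]:
    """Decision rule evaluated at each decision point; None means 'do not intervene now'."""
    if state.walked_since_last_prompt:
        return "Nice work getting up and moving!"  # reward message
    if state.sedentary_minutes >= STRONG_PROMPT_AFTER_MIN:
        return "You've been sitting for an hour or more. Try a 5-minute walk now."
    if state.sedentary_minutes >= PROMPT_AFTER_MIN:
        return "Time for a quick stretch or a short walk?"
    return None
```

Returning `None` is a deliberate part of the design: deciding not to intervene at a given moment helps limit prompt fatigue.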

Wearable technology.

Some of the earliest uses of technology to support behavior change interventions occurred in the late 1940s and 1950s, when mechanical counters were used to provide users with immediate, personalized feedback on their behaviors. The pedometer is an early mechanical device that collects ambulatory data, which can be incorporated into research and practice; it was first used in the treatment of obesity in 1949 (Larsen, 1949) and was commonly applied to the quantification of physical activity in behavioral research by the 1960s (Chirico & Stunkard, 1962; Stunkard, 1960; Stunkard & Pestka, 1962). Pedometers provide real-time, individual self-monitoring of physical activity, which is a key behavior change technique (Michie et al., 2009); because they are inexpensive and easy to use, they can be implemented on a large scale (Tudor-Locke & Lutes, 2009). Public health campaigns that include participant access to pedometers show effectiveness for increasing physical activity (Robinson et al., 2014) and cost-effectiveness relative to other types of interventions (Cobiac, Vos, & Barendregt, 2009).

Later activity sensors included wrist- and ankle-worn counters (Schulmann & Reisman, 1959) and devices that measured ultrasonic sound (Goldman, 1961; see Schwitzgebel, 1968), paving the way for modern wearable fitness bands and smartwatches. These devices collect data on the timing and velocity of movement at lower cost than research-grade accelerometers, which have recently been considered the gold standard (Silfee et al., 2018). Such devices help users self-monitor physical activity, but additional behavior change skills are typically needed to effectively increase and sustain higher physical activity in adults (Samdal et al., 2017; Murray et al., 2017). Behavior change skills can be delivered via traditional face-to-face intervention or via integrated web or smartphone apps. Integrating wearable technology with JIT or JITAI methods allows the device to provide intervention content based on clients’ ongoing physical activity; for example, it can provide reminders to walk if the client has spent a certain amount of time sitting.
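As an illustration of how minute-level device data become the timing and intensity summaries that feed feedback and JIT prompts, the sketch below classifies activity counts per minute into intensity categories and finds the longest uninterrupted sedentary bout. The cut-points are placeholders loosely modeled on count-based thresholds used with research accelerometers; in practice, cut-points are specific to the device, wear location, and population, and consumer devices often do not expose raw counts at all.

```python
from collections import Counter

# Placeholder cut-points in counts per minute: (exclusive upper bound, label).
CUT_POINTS = [(100, "sedentary"), (1952, "light"), (5725, "moderate")]


def classify(cpm: int) -> str:
    """Map one minute's activity count to an intensity category."""
    for upper, label in CUT_POINTS:
        if cpm < upper:
            return label
    return "vigorous"


def summarize(counts_per_minute: list[int]) -> dict:
    """Minutes spent in each category plus the longest uninterrupted sedentary bout."""
    labels = [classify(c) for c in counts_per_minute]
    longest = current = 0
    for label in labels:
        current = current + 1 if label == "sedentary" else 0
        longest = max(longest, current)
    return {"minutes": dict(Counter(labels)), "longest_sedentary_bout": longest}
```

A walking reminder could then be triggered when `longest_sedentary_bout` (or the current bout) exceeds a chosen threshold.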

While it may seem that wearable technology has revolutionized fitness efforts, we have yet to document much impact on physical activity at the individual or population level. Although these devices and their associated smartphone apps provide more comprehensive data to users than pedometers (such as the timing and intensity of activity; Arigo, 2015), questions remain about the accuracy of the data they collect (Evenson, Goto, & Furberg, 2015) and whether appropriate validation occurs prior to commercial sale (Peake, Kerr, & Sullivan, 2018). Further, they are considerably more expensive than pedometers (roughly $100 vs. $5), and a recent survey found that most users tend to be young (18–34 years old), affluent, and self-described “early adopters of technology” (Patel, Asch, & Volpp, 2015; The Nielsen Company, 2014). Even among those who show initial interest in these devices, many stop using them within 6–12 months (Ledger & McCaffrey, 2014). The combination of high cost and high attrition has even created an opportunity for a non-profit organization that collects unused wearables and distributes them to underserved communities (RecycleHealth, 2018). Finally, integrating social media features into wearable device platforms (e.g., apps, websites) could also activate beneficial social processes, but further investigation is required to understand how best to combine these processes with the self-monitoring data from devices (see Arigo, 2015; Butryn et al., 2016). Overall, additional research is needed to determine how best to use wearable technology to improve physical activity and other health outcomes. Additional emerging areas for wearables include monitors for diabetes (Heintzman, 2016), ultraviolet radiation (Hussain et al., 2016), alcohol (NIAAA, 2018), and smoking (Sazonov, Lopez-Meyer, & Tiffany, 2013).

Summary

The field of behavioral medicine has a long history of using technology to increase the reach and effectiveness of its interventions. Behavioral science in general has been recognized as a crucial element in the interdisciplinary field of “digital health,” which has emerged in recent years and spans both academia and industry. The heightened interest in leveraging technology to understand behavior and health is also evidenced by the $300 million NIH-funded All of Us initiative, which involves myriad academic and industry partnerships working together to use technology to explore individual differences in behavior, biology, and environment to inform precision medicine approaches to health (National Institutes of Health, 2018a). Behavioral medicine can continue to play an instrumental role in the digital health space by subjecting innovations to rigorous evaluation; new and improved methodologies are emerging to meet this need. Table 1 summarizes the three technologies discussed above. In the next section, we outline current challenges the field of behavioral medicine faces in advancing the science and practice of digital health interventions.

Table 1.

Summary of three key technological developments that have improved the reach and effectiveness of behavioral medicine interventions.

| Technology | Precursors | Current Commercial Examples | Strengths | Opportunities for Further Optimization |
|---|---|---|---|---|
| Smartphone apps | Personal digital assistant (PDA) and text messaging interventions | MyFitnessPal, Headspace | Large user base; reach participants where they are, in real time, with a variety of individually and contextually tailored content (text, video, games) | Improved understanding of participant engagement and contextual tailoring variables; inclusion of more evidence-based intervention components |
| Social media platforms | Websites with chat room or message board features | Facebook, Twitter, PatientsLikeMe | Large user base; reach participants at times that are convenient for them; potential for real-time responsiveness; allows for a variety of content (text, images, video) | Improved understanding of participant engagement and the social processes that underlie behavior change |
| Wearable physical activity sensors | Pedometers, accelerometers | Fitbit, Garmin, ActiGraph | Immediate feedback on progress; potential for real-time intervention (via prompts) and integration with app and social media features | Improved understanding of participant adoption and engagement (with emphasis on reaching individuals who are sedentary), as well as optimal integration with app and social media features |

Key Challenges to Reaching the Potential of Behavioral Medicine in Digital Health

In 1982, Agras predicted that a “health promotion industry” would emerge and produce its own set of interventions. He paired this prediction with a warning:

“Although these developments are in many ways desirable (for example, they will increase access to information), they will also cause problems. Too widespread an application before sufficient research has been done might lead to premature disillusionment with this young field. Moreover, such a development might lead to the wide-spread application of procedures that are not based on research findings.”

Indeed, a “health promotion industry” has flourished and now holds an estimated value of $3.7 trillion (Global Wellness Institute, 2018). The digital health industry specifically is valued at $23 billion (Reuters, 2017), and, as Agras predicted, many innovations are being widely adopted in the absence of research findings. Some of the biggest challenges to realizing the potential of behavioral medicine in digital health arise from the fact that industry and academia are working in parallel, with very little collaboration. As such, problems are arising on both sides, many of which would likely be remedied by collaboration across sectors. Challenges and suggestions to address them are summarized in Table 2.

Table 2.

Current challenges to advancing digital health in behavioral medicine, and proposed solutions.

| Challenge | Key Points | Suggestions for Addressing Challenges |
|---|---|---|
| Commercial apps and devices lack evidence | Limited use of evidence-based behavior change techniques in the design of features; lack of rigorous testing for efficacy or effectiveness on clinical outcomes | Extended efforts to evaluate the usability and efficacy of commercial tools; partnerships between academia and industry to develop and evaluate tools, which will result in more effective, useful, and marketable products |
| Current evaluation methods do not match the needs for determining the effectiveness of digital health tools | RCTs test full intervention packages at the group level, which do not test individual components of interventions or mechanisms of action, and do not allow for iterative improvements; lack of appropriate control conditions; limited opportunities to publish on studies with alternative designs (e.g., N-of-1) | Use of MOST framework and innovative trial designs such as SMART; greater use of N-of-1 designs in lieu of traditional pilot and feasibility trials; increased publishing outlets for N-of-1 and other non-traditional research designs |
| No science of engagement | Engagement is not well-defined or consistently measured across studies; optimal engagement (amount, type, individual or contextual differences) is unknown | Development of definitions and measures; research to identify meaningful engagement and how to nurture it; consistent reporting of engagement in published research; funding for studies with engagement as an endpoint in its own right |
| An unknown landscape of privacy and data security | Protecting privacy of both participants and bystanders; commercial tools request unnecessary data and have been vulnerable to data breaches | Greater researcher understanding of privacy and security agreements; responsibility of researchers to fully inform participants of potential risks of using technologies; reaching out to or partnering with industry to create ethical guidelines for research; use of CORE research resources for study design |
| Principles vs. technologies | Technology evolves much more quickly than research to develop and evaluate it; researchers develop and evaluate individual tools that rarely reach the market | Designing APIs and designing/evaluating tool-agnostic interventions |

Note: RCT = randomized controlled trial; MOST = multiphase optimization strategy; SMART = sequential multiple assignment randomized trials; I-Corps = Innovation Corps (National Institutes of Health); CORE = Connected and Open Research Ethics initiative (https://thecore.ucsd.edu/)

Commercial apps and devices lack evidence.

The most widely used digital health tools have been developed in the private sector, and commercial development has several advantages. Companies raise money from investors that they then leverage toward product design, development, and marketing. They are able to move products to market on a relatively short time scale and then leverage user data to inform product refinements. Many companies develop application programming interfaces (APIs) at very early stages of development so their products can be integrated across devices or platforms. Access to the API is critical for interoperability and allows for streamlined data collection and extraction. App stores contain thousands of commercial health apps, and while most will never reach many users, some achieve wide dissemination, accruing millions of users.
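Table 2 suggests designing APIs and tool-agnostic interventions. One common way to do this in software is an adapter layer: the intervention logic depends on a small interface, and each device’s API is wrapped to satisfy it. In the sketch below, both vendor payload formats and the 7,000-step goal are hypothetical; no real vendor API is being described.

```python
from datetime import date
from typing import Protocol


class StepSource(Protocol):
    """Tool-agnostic interface: intervention logic depends only on this method."""
    def daily_steps(self, day: date) -> int: ...


class VendorAAdapter:
    """Hypothetical API returning one record per day: {"date": "YYYY-MM-DD", "steps": int}."""
    def __init__(self, daily_records: list[dict]):
        self._by_day = {r["date"]: r["steps"] for r in daily_records}

    def daily_steps(self, day: date) -> int:
        # Missing days are treated as 0 steps, a simplifying assumption that
        # cannot distinguish non-wear from inactivity.
        return self._by_day.get(day.isoformat(), 0)


class VendorBAdapter:
    """Hypothetical API returning timestamped counts: ("YYYY-MM-DDTHH:MM", steps)."""
    def __init__(self, timed_records: list[tuple[str, int]]):
        self._records = timed_records

    def daily_steps(self, day: date) -> int:
        prefix = day.isoformat()
        return sum(steps for ts, steps in self._records if ts.startswith(prefix))


def met_step_goal(source: StepSource, day: date, goal: int = 7000) -> bool:
    """Intervention rule written once and reusable with any device wrapped as a StepSource."""
    return source.daily_steps(day) >= goal
```

When a new device appears, only a new adapter is needed; the evaluated intervention rule stays the same, which is the sense in which the intervention is tool-agnostic.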

In spite of how well-disseminated some commercial health apps and devices have become, the degree to which they incorporate evidence-based behavior change strategies or clinical guidelines, are theory-based, or involve health scientists in the development process appears to be low (Conroy et al., 2014; Nikolaou & Lean, 2017; Pagoto et al., 2013; Rivera et al., 2016; Schoffman et al., 2013). This does not necessarily mean that an app or device is not efficacious, but very little rigorous testing has been done on these tools. At present, very few apps or devices could be considered efficacious on any clinical or behavioral outcome.

Current research methods do not match the needs of digital intervention tools.

The traditional randomized controlled trial (RCT), in which two or more treatments are compared, remains the gold standard for evidence in clinical medicine. Evidence from RCTs is necessary for a treatment to be included in clinical guidelines and reimbursed by insurers (Murray et al., 2016; Sackett et al., 1996). Technology-based interventions have unique features that present methodological issues for the traditional RCT design. First is the difficulty of designing an adequate control group. Apps and other digital health tools often include a variety of features that participants use in different orders and at different times; it is not clear which aspects are effective, for whom, or when, or to what these specific aspects should be compared (Lipschitz & Torous, 2018). Also, investigators need to estimate the expected effect relative to the control condition when calculating sample size, which requires preliminary work with the proposed control group. Even if an adequate control group is found, as with all behavioral treatment studies, participants cannot be “blinded” to condition in the same way as in pill placebo-controlled trials, although efforts can be made to conceal the alternative approaches being tested and to blind investigators or statisticians to participants’ group membership.
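To show why the expected effect relative to the control condition matters so much, the sketch below applies the standard normal-approximation formula for comparing two means, n per group = 2(z₁₋α/₂ + z₁₋β)² / d², where d is the standardized difference between intervention and control. This is a simplification: it ignores attrition, clustering, and the small-sample t correction, and real trials typically use dedicated power software.

```python
import math
from statistics import NormalDist


def n_per_group(d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Normal-approximation sample size per arm for a two-sided comparison of
    two means, where d is the standardized mean difference (Cohen's d)."""
    z = NormalDist()                  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)
```

With α = .05 and 80% power, an expected d of 0.5 (for example, against a minimal-contact control) requires 63 participants per group, whereas d = 0.2 (for example, against an active app-based control) requires 393 per group. A poor estimate of the control condition can therefore leave a trial badly underpowered.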

Another challenge of using traditional RCTs in behavioral interventions is that behavioral interventions, digital ones especially, are rarely unidimensional; that is, they rarely compare a single active ingredient to a placebo. Behavioral interventions typically incorporate “packages” of strategies that do not identify when, where, or for whom different elements will work (Mohr et al., 2015). Careful testing of moderators and mechanisms of efficacy is therefore necessary. Behavioral scientists have developed new frameworks such as the Multiphase Optimization Strategy (MOST), a process through which multiple intervention components can be tested simultaneously (Collins et al., 2014; Murray et al., 2016). A variety of RCT designs can be used to assess the contributions of individual components to change in the outcomes and mediators of interest; these include factorial or fractional factorial designs, sequential multiple assignment randomized trials (SMART), micro-randomized trials, and control optimization trials (see Chakraborty, Collins, Strecher, & Murphy, 2009; Collins et al., 2007; Hekler et al., 2018; Liao, Klasnja, Tewari, & Murphy, 2016), each suited to answering different research questions.
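A full factorial design, one of the designs listed above, crosses every component’s on/off levels so that each component’s main effect can be estimated from the whole sample. The sketch below enumerates the 2³ = 8 conditions for three hypothetical app components and estimates a main effect as the mean outcome with the component on minus the mean with it off, averaged over the other components. It assumes a balanced design and omits the regression models and inference used in actual MOST optimization trials.

```python
from itertools import product
from statistics import mean

# Hypothetical intervention components, each switched on (1) or off (0).
COMPONENTS = ("self_monitoring", "goal_setting", "social_feature")


def conditions(components: tuple[str, ...] = COMPONENTS) -> list[dict]:
    """All 2^k on/off combinations of k intervention components."""
    return [dict(zip(components, levels))
            for levels in product((0, 1), repeat=len(components))]


def main_effect(component: str, data: list[tuple[dict, float]]) -> float:
    """Mean outcome with the component on minus mean with it off,
    averaging over all levels of the other components (balanced design assumed)."""
    on = [y for cond, y in data if cond[component] == 1]
    off = [y for cond, y in data if cond[component] == 0]
    return mean(on) - mean(off)
```

For example, if simulated outcomes were `1.0 * self_monitoring + 0.5 * goal_setting` in each condition, the estimated main effects would be 1.0, 0.5, and 0.0, recovering each component’s contribution from a single trial rather than from three separate two-arm trials.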

Likewise, N-of-1 studies can provide a framework for the rapid iteration and testing of technologies, using small sample sizes (cf. Schaffer et al., 2018). This design is an efficient alternative to traditional pilot and feasibility studies that typically recruit 30 or more participants to test a single iteration. For example, an N-of-1 study can help researchers and developers quickly identify the most effective components of a digital health intervention for a single individual, which is critical to designing adaptive interventions that adjust for dynamic, contextual change (Conroy et al., 2018; Hekler et al., 2016). N-of-1 designs can also disentangle factors that are influential for specific individuals, to aid in defining more appropriate intervention strategies and decision rules (Patak et al., 2018). Although these designs are increasingly popular in the human-computer interaction (HCI) literature, few journals in the behavioral medicine sphere offer explicit opportunities to publish N-of-1 studies. Introducing options to publish this type of article in behavioral medicine’s leading journals could help to facilitate advancements in the science of digital health interventions.
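One simple way to structure an N-of-1 study is a randomized alternating design: days are grouped into pairs, one day in each pair receives a component (B) and the other does not (A), with the order randomized. The sketch below generates such a schedule and estimates the within-person effect as the difference in mean outcomes. It is a minimal illustration under assumed conditions (daily outcomes such as step counts, no carryover between days); real N-of-1 analyses also need to handle autocorrelation and carryover.

```python
import random
from statistics import mean


def randomized_schedule(n_blocks, seed=None):
    """Each block of two days gets one 'A' day (component off) and one 'B' day
    (component on), with the order randomized within the block."""
    rng = random.Random(seed)
    schedule = []
    for _ in range(n_blocks):
        pair = ["A", "B"]
        rng.shuffle(pair)
        schedule.extend(pair)
    return schedule


def effect_estimate(schedule, outcomes):
    """Mean outcome on B days minus mean outcome on A days for one person."""
    if len(schedule) != len(outcomes):
        raise ValueError("schedule and outcomes must be the same length")
    b_days = [y for s, y in zip(schedule, outcomes) if s == "B"]
    a_days = [y for s, y in zip(schedule, outcomes) if s == "A"]
    return mean(b_days) - mean(a_days)


# Two weeks of paired days for one participant.
print(randomized_schedule(7, seed=1))
# Hypothetical daily step counts for a four-day schedule.
print(effect_estimate(["A", "B", "B", "A"], [1000, 1500, 1300, 900]))  # 450
```

Randomizing order within each pair protects against time trends that a fixed ABAB sequence could confound with the component.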

Behavioral medicine lacks a science of engagement.

While digital behavioral interventions may seem more practical, easier to use, and more convenient than face-to-face interventions, they are not immune to the engagement and retention problems that have long plagued behavioral interventions. Even among people who seek out digital health tools, many use them for only a few days or weeks at most (Eysenbach, 2005). In one survey of more than 1,600 smartphone users, 58% had voluntarily downloaded a health app, and 46% of these users had stopped using the app before completing the survey (Krebs & Duncan, 2015). Lack of face-to-face contact may weaken the capacity to build a therapeutic relationship or, in group-based interventions, to generate social support, and this may well contribute to the engagement and retention problems observed in digital health interventions. Making matters more complicated, engagement is defined very differently in digital interventions than in traditional interventions, where session attendance was a common metric (Yardley et al., 2016). For example, engagement in an app-delivered intervention might be measured via use of any number of features, whereas engagement in a social media-delivered intervention might be measured by views, “likes,” comments, or posts (Yardley et al., 2016; Waring et al., 2018).

Unlike disciplines such as HCI, in which user engagement has been a prominent topic of investigation (see Smith et al., 2017, for an example), behavioral medicine has lagged in its attention to this critical aspect of digital health interventions, and there is little shared language around the concept (Perski, Blandford, West, & Michie, 2016). Consensus is lacking not only on how to define engagement, but also on how much engagement is needed for adequate intervention receipt or to produce positive clinical outcomes. For example, how long does one need to use an app or wearable device (and which features) to have received the full intervention dose? Or, to what degree does one need to participate in a social media-delivered intervention to be considered “engaged”? An emerging discussion in this literature focuses on whether more engagement really is better, as opposed to some optimal level of “effective engagement” that reflects the types of interactions with digital forums and materials that are critical to producing behavior change (Yardley et al., 2016). These questions are linked to the evaluation methods for digital health interventions, as engagement may differ between individuals as well as across stages of behavior change and other contextual factors (Rus & Cameron, 2016; Pagoto & Waring, 2016; Smith et al., 2017).

In behavioral medicine, we also know little about what type of engagement matters. Is a participant who opens a diet tracking app every day to view other participants’ progress, but never tracks their own intake, considered “engaged”? In a Facebook group for exercise promotion, is a participant who only clicks the “like” button but never comments on discussion threads engaged in the intervention? Digital health interventions allow us to measure how participants engage with an intervention in ways that were not possible with traditional interventions, in which topics were discussed face-to-face and readings may have been assigned; tracking everything a participant said or read would have been very difficult. Now that we have a digital footprint of a huge range of health-relevant behaviors (Insel, 2017), it is possible to assess the many different ways a participant can engage with an intervention. This could lead to new ways of understanding how interventions work and ultimately help us construct new models of behavior change.
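The questions above can be made concrete by computing several alternative engagement metrics from the same usage log. The sketch below is a minimal illustration: the event types, the split between “active” and “passive” events, and the thresholds in `is_engaged` are all hypothetical choices, and real platforms expose different (and changing) event data.

```python
from collections import Counter

# Hypothetical event types; which count as "active" is itself a research decision.
ACTIVE_EVENTS = {"log_intake", "comment", "post"}
PASSIVE_EVENTS = {"open_app", "view_feed", "like"}


def engagement_summary(events):
    """events: iterable of (day, event_type) pairs for one participant.
    Returns several alternative engagement metrics."""
    events = list(events)
    counts = Counter(kind for _, kind in events)
    return {
        "days_active": len({day for day, _ in events}),
        "active_events": sum(counts[k] for k in ACTIVE_EVENTS),
        "passive_events": sum(counts[k] for k in PASSIVE_EVENTS),
    }


def is_engaged(summary, min_days=3, require_active=True):
    """One hypothetical definition of 'engaged'; changing the thresholds
    changes who counts as engaged."""
    if summary["days_active"] < min_days:
        return False
    return summary["active_events"] > 0 if require_active else True


# A participant who opens the app and views the feed daily but never logs intake.
viewer = [(d, "open_app") for d in range(7)] + [(d, "view_feed") for d in range(7)]
summary = engagement_summary(viewer)
print(summary)
print(is_engaged(summary), is_engaged(summary, require_active=False))  # False True
```

The same participant is “engaged” under one definition and not under the other, which is exactly why shared definitions matter when comparing studies.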

An unknown landscape of privacy and data security.

Another relatively new set of challenges centers on the privacy and data security issues presented by digital health tools. First, some commercially available technologies that were originally produced for purposes other than promoting healthy behavior (e.g., social media) are now being used to study health behavior and deliver interventions. Depending on the privacy settings used, this poses a variety of potential privacy issues, including the possibility that data from non-participants may be inadvertently viewed and collected; the rights of these non-participants should also be considered as part of study procedures (Arigo et al., 2018). Privacy may be of particular concern as apps begin to incorporate additional smartphone technologies such as GPS location tracking and cameras (Nebeker, Linares-Orozco, & Crist, 2015). Second, for commercial products that were originally designed for health behavior change (e.g., apps), researchers need to carefully read and understand the associated privacy and security agreements, make sure that participants understand these agreements, and include a summary of this information in their applications to ethics review boards.

A recent study examined app source code to determine if the data access permissions a health app requested from users were necessary to run the app. Results showed that the requested access often exceeded what was necessary, posing unneeded potential threats to the privacy and security of the user (Pustozerov et al., 2016). Recent data breaches and privacy controversies with commercial platforms such as Facebook (Carr, 2018) and MyFitnessPal (Segarra, 2018) highlight the pressing importance of protecting participant data. Further, technologies developed by researchers should also be thoroughly vetted for potential privacy and security issues in order to properly protect participants. As the development of technology has outpaced legislative and ethics initiatives in this area, it is critical for researchers to take a proactive stance in terms of protecting participants, and work together with industry partners to ensure the privacy and security of users’ data.
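The idea behind checking whether requested permissions are necessary is the least-privilege principle: an app should request only the permissions its features need. The sketch below is not the method of Pustozerov et al. (2016); it assumes the hard part, a mapping from features to required permissions, is already known, and that mapping is hypothetical. The permission strings are standard Android permission names used for illustration.

```python
# Hypothetical mapping from app features to the Android permissions they need.
FEATURE_PERMISSIONS = {
    "step_counter": {"android.permission.ACTIVITY_RECOGNITION"},
    "meal_photos": {"android.permission.CAMERA"},
    "reminders": {"android.permission.POST_NOTIFICATIONS"},
}


def required_permissions(features):
    """Union of the permissions needed by the features the app actually ships."""
    needed = set()
    for feature in features:
        needed |= FEATURE_PERMISSIONS[feature]
    return needed


def excess_permissions(requested, features):
    """Permissions requested beyond what the shipped features need."""
    return sorted(set(requested) - required_permissions(features))


requested = [
    "android.permission.ACTIVITY_RECOGNITION",
    "android.permission.CAMERA",
    "android.permission.ACCESS_FINE_LOCATION",
    "android.permission.READ_CONTACTS",
]
print(excess_permissions(requested, ["step_counter", "meal_photos"]))
# ['android.permission.ACCESS_FINE_LOCATION', 'android.permission.READ_CONTACTS']
```

A researcher vetting an app could apply the same comparison by hand: list the features participants will use, list what the app requests, and flag the difference for the ethics review application.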

Privacy and data security are critical to the design and delivery of effective, trustworthy, and safe digital health tools. As the research literature in this area has not kept pace with technological developments, greater attention to and training in these topics is needed, with an emphasis on academic-industry partnerships. Programs such as the Connected and Open Research Ethics (CORE) initiative can play a critical role in keeping researchers in academia and industry informed about the evolving ethics of digital health research, including assistance with crafting effective informed consent documents for participants and working with ethics review boards (Torous & Nebeker, 2017; https://thecore.ucsd.edu/).

Academia-produced products lack funding and are poorly disseminated.

Academics regularly develop apps and devices for use in behavioral interventions, which they then test in controlled studies (e.g., Garnett et al., 2018; Pagoto et al., 2018; Turner-McGrievy et al., 2016). Development and testing are typically funded by research grants. One challenge to this model is that app development carries high monetary and time costs relative to typical developmental research grant budgets, which range from $10K for internal grants to $450K for federal grants over 1–3 years. The average app is estimated to require about $270,000 and 7–12 months to develop (Turner-McGrievy et al., 2016). While some academics have instead used responsive-design websites as a cost-effective and relatively fast alternative to app and web program development (Turner-McGrievy et al., 2016; Jake-Schoffman et al., 2018), this does not overcome the fact that funds are not typically available for graphic design, long-term maintenance, or the technology updates needed beyond the grant period.

Sample sizes for research studies also limit the amount of data available to inform technology updates. For example, an app with hundreds of thousands of users produces rich data on use patterns, whereas a typical research study of 30–100 participants is far less useful for this purpose. In addition, academic research is not conducive to rapid iteration and testing of multiple versions of technologies (Riley et al., 2013). For example, institutional review board (IRB) approval is required every time an intervention is modified, and IRB amendments can take weeks or even months to be approved, making it very difficult to move swiftly. Thus, the cost, pace, and scope of research grants make developing and testing novel technologies with grant funding a cumbersome and inefficient process. Behavioral scientists also do not have a strong history of training in entrepreneurship. Historically, academic “products” have been therapies rather than technologies, so few behavioral scientists have the skills required for commercialization (e.g., fundraising, filing patents). For these reasons, few academically developed tools ever become commercially available or widely disseminated, greatly limiting their potential public health impact. Fortunately, training in these areas is increasingly available. For example, the NIH-funded Innovation Corps (I-Corps) program educates researchers and technologists on how to commercialize technologies built in the lab (National Institutes of Health, 2018b).

Academics who are interested in widely disseminating their tools, but not in commercializing them, could take one of several alternative paths. First, academics can develop and test components or modules of digital health tools that could be easily adapted and incorporated into other interventions. In this way, the advances from each academically developed tool can more easily be used in future academic and/or industry-produced technologies. Second, web developers have a long history of sharing open source code to help move the field forward without unnecessary duplication of effort. Academics could share the code developed for their health tools relatively easily via existing platforms for open source code, or through more controlled systems on university websites that allow downloads and use to be tracked. Third, academics could establish industry partnerships early in the planning phases of technology development, such that the industry partner leads the commercialization and dissemination of the final tool. Behavioral medicine professional organizations should play a role in facilitating these partnerships.

Alternatively, academics could leverage the robust platforms built by industry instead of building their own. For example, many behavioral scientists use existing platforms such as Facebook, Twitter, and MyFitnessPal for assessment and intervention delivery (e.g., Pagoto et al., 2015; Ramo et al., 2018; Teixeira, Voci, Mendes-Netto, & da Silva, 2018; Turner-McGrievy & Tate, 2013; Waring et al., 2018). As noted previously, an important outcome of this work is that it has raised issues of data access and control (which are not always straightforward), abrupt changes to the technology, and ethical considerations. For example, a commercially available platform may restrict access to user data, update its features or data security policies without notice, or fail to adhere to the data privacy practices required by academic ethics review boards (Arigo et al., 2018). In the app and social media spaces, this can lead researchers to “reinvent the wheel” (i.e., design their own platform) to test a hypothesis. Although this may address some problems, it is quite costly, and the resulting tool may not be sustainable, scalable, or attractive to the general population. When using commercial platforms, researchers need to do their due diligence to understand all aspects of the platform, remain alert to changes and updates, and inform participants accordingly.

Principles versus technologies.

Technology changes faster than research can be conducted. For this reason, academic researchers should, as Mohr and colleagues recommend, perform “trials of intervention principles” that may apply to multiple digital health interventions, rather than trials of specific technologies (Mohr et al., 2015). For example, designing interventions to be device- or platform-agnostic could increase the likelihood that they will be used in the future. Similarly, if intervention feedback strategies are tested without focusing on a single fitness wearable brand or social media platform, the findings may remain applicable as the market changes. This approach assumes some similarity across digital health tools, so researchers must attend to important differences between tools if they intend to draw broad conclusions.

Similarly, many academics focus on developing a single digital tool rather than on the underlying computer programming processes that may be translatable across tools (e.g., algorithms with key decision points). This may be most noticeable in the area of apps, where a variety of researcher-developed tools exist that have reached only the formal feasibility or pilot testing stage (Payne, Lister, West, & Bernhardt, 2015). By delivering interventions through application programming interfaces (APIs), rather than treating specific apps as the outcomes of research projects, academics create opportunities for their content to be used in multiple settings (e.g., electronic health records, web-based disease management portals, other stand-alone apps).
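A minimal sketch of what “an algorithm with key decision points” exposed through an API might look like: the decision rule is a pure function with no user-interface code, wrapped in a JSON-in, JSON-out handler that any front end (an app, an electronic health record, a web portal) could call. The rule itself (prompt during an assumed 8:00 to 20:00 window when the user is more than 20% behind a linear pace toward a daily step goal), the field names, and the message identifier are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class Context:
    steps_today: int
    daily_goal: int
    hour: int  # local hour, 0-23


@dataclass
class Decision:
    send_prompt: bool
    message_id: Optional[str]


def decide(ctx: Context, start_hour: int = 8, end_hour: int = 20) -> Decision:
    """Hypothetical decision rule: prompt during waking hours when the user is
    more than 20% behind a linear pace toward the daily step goal."""
    if not (start_hour <= ctx.hour < end_hour):
        return Decision(False, None)  # outside the prompting window
    fraction_of_day = (ctx.hour - start_hour) / (end_hour - start_hour)
    expected = ctx.daily_goal * fraction_of_day
    if ctx.steps_today < 0.8 * expected:
        return Decision(True, "walk_break")
    return Decision(False, None)


def handle_request(body: str) -> str:
    """JSON in, JSON out, so any front end can call the same logic."""
    return json.dumps(asdict(decide(Context(**json.loads(body)))))


print(handle_request('{"steps_today": 2000, "daily_goal": 10000, "hour": 14}'))
# {"send_prompt": true, "message_id": "walk_break"}
```

Keeping the rule separate from any particular app means the tested logic can outlive the app it was first deployed in, which is the point of treating principles, not technologies, as the research product.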

Academic-Industry Partnerships as the Future of Digital Health

Despite what might be a natural synergy between academia and industry (see Figure 2), we see few examples of academic-industry collaboration, and it is worth exploring why. Historically in the behavioral sciences, the most relevant “industry” was the pharmaceutical industry, as this was the only industry that shared our goal of developing effective treatments for behavioral problems. However, a disconnect always existed with the pharmaceutical industry because behavioral scientists develop non-pharmacological treatments. For this reason, behavioral scientists do not have a long tradition of working in industry. Eventually, the digital health industry emerged with the shared goal of creating behavioral solutions for health, which presented an enormous and unprecedented opportunity for collaboration. However, behavioral medicine training programs have not been prepared to produce a workforce for industry, which hinders not only collaboration but also knowledge transfer. For example, graduate programs in the behavioral sciences do not facilitate industry internships (unlike the computer sciences and engineering where this is standard), and they rarely provide any sort of training and mentorship toward industry careers (Goldstein et al., 2017). In fact, one could argue that academic behavioral science has passively (if not actively) discouraged the industry career path by exclusively preparing trainees for the academic career path. Training a workforce for industry as well as learning effective ways to communicate science to industry will forge connections that will improve the work produced in both sectors.

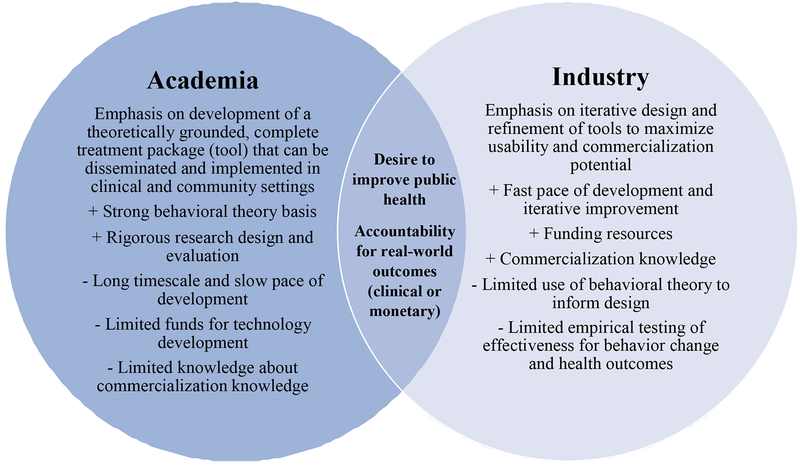

Figure 2.

Strengths, weaknesses, and intersecting priorities for development of digital health tools in academia and industry.

Behavioral scientists’ lack of experience working in industry, coupled with a history of public battles with certain industries (e.g., tobacco companies), may have led to misconceptions about industry that create reluctance to collaborate. These misconceptions include claims that academia and industry have different value systems, that industry is more vulnerable to bias, and that industry is less interested in protecting privacy (Wolin & Pagoto, 2018). Fears of conflicts of interest may also prevent academics from working with industry. Other barriers include differences in language and pace of work across sectors. Such perceived differences are more imagined than real, as both sides share the goal of producing and disseminating effective behavioral solutions to health problems. Partnerships may be most likely to succeed when the goals and metrics of success of the two sectors are closely aligned. Such opportunities may be infrequent at present, but they offer a chance to demonstrate what synergy between the sectors can achieve.

Health coaching as an example of successful academic-industry partnerships.

Health coaching (or behavioral counseling) is the classic modality for delivering evidence-based interventions. Coaching and counseling models have been developed and tested within academic research and are now moving toward digital systems. Several studies have demonstrated the value of digital tools for health coaching in terms of both reach and impact. The digital health coach model has also gained the interest of industry stakeholders looking to scale behavioral health interventions for large user bases (e.g., implementation within a health system). This overlap in goals has created opportunities for cross-sector collaboration.

One key way for academia and industry to work together in this space is for industry to scale the reach of academia-developed health coaching programs by building digital versions of those programs in a platform-agnostic way. Digital health coaching programs may be implemented in clinical settings (including health care provider and payer settings), employer settings, or other settings, within any number of informatics platforms. Further, two or more settings with different platforms (e.g., a health care provider with an electronic medical record platform and an insurance company with an internal care management informatics platform) may both need to consume data from a digital health coaching program and to communicate about those data in service of the client they share. By creating digital health coaching programs that can be embedded in any informatics platform, industry partners remove a number of significant barriers that the academic developers of those programs may otherwise face in disseminating and implementing their evidence-based interventions.
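The data-sharing scenario above can be sketched as one neutral record produced by the coaching program and translated by small adapters into the schemas two different platforms expect. Everything here is hypothetical (the record fields and both target schemas); in practice, health data exchange would typically follow an established interoperability standard such as HL7 FHIR, which this sketch does not implement.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CoachingSummary:
    client_id: str
    session_date: str  # ISO 8601 date
    goal: str
    minutes_active_last_week: int


def to_neutral_json(summary: CoachingSummary) -> str:
    """Platform-independent payload emitted by the coaching program."""
    return json.dumps(asdict(summary), sort_keys=True)


def to_provider_record(payload: str) -> dict:
    """Adapter for a hypothetical electronic medical record schema."""
    d = json.loads(payload)
    return {
        "patient_ref": d["client_id"],
        "encounter_date": d["session_date"],
        "note": f"Coaching goal: {d['goal']}",
    }


def to_payer_record(payload: str) -> dict:
    """Adapter for a hypothetical payer care management schema."""
    d = json.loads(payload)
    return {"member_id": d["client_id"], "activity_minutes": d["minutes_active_last_week"]}


payload = to_neutral_json(CoachingSummary("c-001", "2018-06-30", "walk 30 min/day", 95))
print(to_provider_record(payload))
print(to_payer_record(payload))
```

The coaching program emits one format; each new setting only needs a thin adapter, rather than a new version of the program.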

Considerations for the Future of Digital Health in Behavioral Medicine

As the field of behavioral medicine continues to leverage digital tools to improve the reach and effectiveness of interventions, academic-industry partnerships will be essential for success. Creating and nurturing high functioning academic-industry partnerships requires an understanding of how incentives for each side might align (see Figure 2). Industry enjoys a fast pace of development, emphasis on iterative design, and knowledge about the commercialization process. Although academia may have much to offer industry with respect to health behavior change intervention techniques and rigorous methods of evaluation, it must be acknowledged that many commercial efforts have been financially successful without much input from academics, and the benefits of academic involvement may not be obvious to industry professionals. As a result, behavioral medicine researchers and providers who are interested in taking digital tools to market or helping to improve existing tools should consider the following points.

Bringing academia-developed tools to the commercial market.

Researchers interested in bringing a new digital intervention tool to market should consider what makes a tool ready for dissemination. Has the research team considered how best to differentiate their tool from the thousands already available? Have they thought through the monetary, computing, and personnel resources needed to scale up use of the tool? Companies are often uncomfortable rolling out to clients an intervention that has “only” been tested with a few hundred people, because serious issues that are not apparent in a platform used by 300 people may quickly become visible when it is offered to thousands or more. Thus, industry must often do a great deal of development before it can implement an academic intervention, and the incentives for the industry partner (e.g., cost-effectiveness) must be clear from the outset.

Even among tools that have shown efficacy in clinical trials, the costs of scaling up may be prohibitive. As noted previously, some clinical trials of full intervention packages generate important lessons about the behavioral principles that underlie the use of a digital tool (Mohr et al., 2015). In a similar vein, dismantling studies may reveal that some intervention components are less effective than others (e.g., Muench & Baumel, 2017). In many cases, implementing a principle or a reduced version of the intervention on a large scale will be preferable to bringing a new, full intervention tool to market. Although researchers may worry about losing some of the integrity of the intervention as designed and tested, this approach could maximize the strengths of academia and industry and facilitate commercialization. Further, principles of implementation science should guide this work, including attention to the context in which an intervention will be implemented, which elements must remain in their original form, which can be adapted, and how to optimize uptake of the innovation (Bauer et al., 2015).

Continued work to optimize existing digital intervention tools.

In addition to creating new intervention tools for an already crowded digital marketplace, there are clear opportunities to improve the effectiveness of existing tools. Apps and wearables rarely reach the individuals at greatest health risk (Patel, Asch, & Volpp, 2015). We need to know how we can modify these tools to make them more appealing to, or more effective for, at-risk groups. How can we match people to the tools that may work best for their health needs and goals, as well as their budgets? As use of these tools increases, how can we best integrate the resulting data into clinical care? And as technology advances, how can we adapt our research methods to its unique demands and effectively communicate the results to industry partners?

Academic researchers and providers are uniquely positioned to answer these questions, particularly with respect to evolving research designs. Use of the MOST framework, SMART and micro-randomized trials, machine learning, and control systems engineering models is increasing, as these approaches are particularly well suited to testing JIT and JITAI intervention tools. Important considerations in this domain are the complexity of these approaches and the expertise needed to develop and evaluate intervention tools that involve them.
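To make the micro-randomized trial concrete, the sketch below shows its core mechanic: at each decision point, if the participant is available for intervention, a prompt is delivered with a known probability; if not, no randomization occurs. The number of decision points, the availability pattern, and the probability are hypothetical. Recording the randomization probability alongside each assignment is what later allows proximal effects to be estimated.

```python
import random


def micro_randomize(availability, p_treat=0.5, seed=None):
    """For each decision point, randomize delivery of a prompt with
    probability p_treat if the participant is available; otherwise skip
    randomization. Returns (assignment, probability) pairs."""
    if not 0.0 <= p_treat <= 1.0:
        raise ValueError("p_treat must be between 0 and 1")
    rng = random.Random(seed)
    records = []
    for available in availability:
        if not available:
            records.append((None, None))  # e.g., participant is driving
        else:
            assignment = 1 if rng.random() < p_treat else 0
            records.append((assignment, p_treat))
    return records


# Five decision points in one day (a hypothetical schedule); the third is unavailable.
print(micro_randomize([True, True, False, True, True], p_treat=0.4, seed=7))
```

Because each participant is randomized many times, the design estimates whether a prompt changes the near-term outcome at the moment it is delivered, which is the question a JITAI's decision rules depend on.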

Finally, it is critical for both academia and industry to consider whether increasing an intervention’s computational complexity is optimal (or necessary) to produce clinically meaningful health behavior change. At present, many digital tools still rely on users to self-report important information, such as mood or current environmental factors, to inform intervention decisions (e.g., what type of prompt the user will receive). The self-report burden may lead users to disengage before they receive the minimally effective intervention dose (Nahum-Shani et al., 2018), which limits the tool’s impact on health outcomes. On the other hand, passive sensing technology may not yet be accurate enough for certain behaviors, such as dietary intake. In either case, at least 24% of U.S. adults do not have smartphones (Pew Research Center, 2018a), which limits their access to many of the intervention tools described here. For some patients or users, a simpler mode of delivery such as text messaging may be preferable, more accessible, and/or more effective than an app- or wearable-based JITAI. Additional work is needed to determine for whom and under what circumstances a particular digital intervention tool is best.

Summary and Conclusions

Although it may seem that the field of behavioral medicine is new to technology, we have a long history of embracing new technologies in the pursuit of better health outcomes through behavior change. The newest permutation of digital health is creating new opportunities for developing scalable, effective interventions, but myriad challenges remain in aligning incentives, methods, and ethical standards between the field of behavioral medicine and the industry partners who can facilitate scaling. However, a growing number of academics are producing and evaluating tools and resources that are used in the real world, just as a growing number of industry partners are interested in using data and evidence to create tools that produce the results they are designed to produce. The profound risk to the behavioral science community lies in failing to act and to find ways to support the emerging industry that shares our values and our goal of better health through scientifically grounded work.

The future of digital health behavioral science research lies in finding ways to advance more robust academic-industry partnerships. These include academics consciously working toward preparing and training the workforce of the 21st century for digital health, actively working toward advancing methods that can balance the need for efficiency in industry with the desire for rigor and reproducibility in academia, and advancing common practices and procedures that support more ethical approaches to helping individuals. This work should have a clear focus on respecting privacy, choice, and ownership of personal data. It is imperative to recognize both the opportunities and the key considerations for forging robust academic-industry partnerships so that we can move forward on achieving our broader goals of public and population health.

Funding

Additional support for the authors’ time during the preparation of this manuscript was provided by the National Institutes of Health; grant numbers K23HL136657 (Danielle Arigo), R25CA172009 (Danielle E. Jake-Schoffman), and K24HL124366 (Sherry L. Pagoto).

Footnotes

Conflict of Interest: Danielle Arigo, Danielle E. Jake-Schoffman, Kate Wolin, and Ellen Beckjord declare that they have no conflicts of interest. Eric B. Hekler serves as scientific advisor to Omada Health, Proof Pilot, and eEcoSphere. Sherry L. Pagoto serves as scientific adviser to Fitbit.

Ethical approval: This article does not contain any studies with human participants or animals performed by any of the authors.

Contributor Information

Danielle Arigo, Department of Psychology, Rowan University; Department of Family Medicine, Rowan University School of Osteopathic Medicine.

Danielle E. Jake-Schoffman, Department of Health Education & Behavior, University of Florida.

Kathleen Wolin, Interactive Health

Ellen Beckjord, Population Health and Clinical Affairs, University of Pittsburgh Medical Center Health Plan.

Eric B. Hekler, Department of Family Medicine and Public Health; Center for Wireless and Population Health Systems, University of California, San Diego.

Sherry L. Pagoto, Department of Allied Health Sciences; Institute for Collaboration in Health, Interventions, and Policy; Center for mHealth and Social Media, University of Connecticut.

References

- Agras WS (1982). Behavioral medicine in the 1980s: Nonrandom connections. Journal of Consulting and Clinical Psychology, 50, 797–803. [DOI] [PubMed] [Google Scholar]

- Anderson CM, & Zhu SH (2007). Tobacco quitlines: Looking back and looking ahead. Tobacco Control, 16 (Suppl 1), i81–i86. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Arigo D (2015). Promoting physical activity among women using wearable technology and online social connectivity: A feasibility study. Health Psychology and Behavioral Medicine, 3, 391–409. [Google Scholar]

- Arigo D, Pagoto S, Carter-Harris L, Lillie SE, & Nebeker C (2018). Using social media for health research: Methodological and ethical considerations for recruitment and intervention delivery. Digital Health, 4, 1–15. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Ashrafian H, Toma T, Harling L, Kerr K, Athanasiou T, & Darzi A (2014). Social networking strategies that aim to reduce obesity have achieved significant although modest results. Health Affairs, 33, 1641–1647. [DOI] [PubMed] [Google Scholar]

- Blanchard EB (1982). Behavioral medicine: Past, present, and future. Journal of Consulting and Clinical Psychology, 50, 795–796. [DOI] [PubMed] [Google Scholar]

- Bond DS, Thomas JG, Raynor HA, Moon J, Sieling J, Trautvetter J,… & Wing RR (2014). B-MOBILE-A smartphone-based intervention to reduce sedentary time in overweight/obese individuals: A within-subjects experimental trial. PloS One, 9, e100821. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bauer MS, Damschroder L, Hagedorn H, Smith J, & Kilbourne AM (2015). An introduction to implementation science for the non-specialist. BMC Psychology, 3, 32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Butryn ML, Arigo D, Raggio GA, Colasanti M, & Forman EM (2016). Enhancing physical activity promotion in midlife women with technology-based self-monitoring and social connectivity: A pilot study. Journal of Health Psychology, 21, 1548–1555. [DOI] [PubMed] [Google Scholar]

- Carr F (2018). Facebook is telling people their data was misused by Cambridge Analytica and they’re furious. http://time.com/5234740/facebook-data-misused-cambridge-analytica/. Access verified June 30, 2018. [Google Scholar]

- Carter MC, Burley VJ, Nykjaer C, & Cade JE (2013). Adherence to a smartphone application for weight loss compared to website and paper diary: Pilot randomized controlled trial. Journal of Medical Internet Research, 15, e32. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Carter-Harris L, Ellis RB, Warrick A, & Rawl S (2016). Beyond traditional newspaper advertisement: leveraging Facebook-targeted advertisement to recruit long-term smokers for research. Journal of Medical Internet Research, 18, e117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chakraborty B, Collins LM, Strecher VJ, & Murphy SA (2009). Developing multicomponent interventions using fractional factorial designs. Statistics in Medicine, 28, 2687–2708. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Charles-Smith LE, Reynolds TL, Cameron MA, Conway M, Lau EH, Olsen JM… & Corley CD (2015). Using social media for actionable disease surveillance and outbreak management: A systematic literature review. PloS one, 10, e0139701. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cobiac LJ, Vos T, & Barendregt JJ (2009). Cost-effectiveness of interventions to promote physical activity: a modelling study. PLoS Medicine, 6, e1000110.

- Cole-Lewis H, & Kershaw T (2010). Text messaging as a tool for behavior change in disease prevention and management. Epidemiologic Reviews, 32, 56–69.

- Collins LM, Murphy SA, & Strecher V (2007). The Multiphase Optimization Strategy (MOST) and the Sequential Multiple Assignment Randomized Trial (SMART): New methods for more potent eHealth interventions. American Journal of Preventive Medicine, 32, S112–S118.

- Collins LM, Nahum-Shani I, & Almirall D (2014). Optimization of behavioral dynamic treatment regimens based on the sequential, multiple assignment, randomized trial (SMART). Clinical Trials, 11, 426–434.

- Conroy DE, Hojjatinia S, Lagoa CM, Yang CH, Lanza ST, & Smyth JM (2018). Personalized models of physical activity responses to text message micro-interventions: A proof-of-concept application of control systems engineering methods. Psychology of Sport and Exercise (advance online publication).

- Conroy DE, Yang CH, & Maher JP (2014). Behavior change techniques in top-ranked mobile apps for physical activity. American Journal of Preventive Medicine, 46, 649–652.

- Cummins SE, Bailey L, Campbell S, Koon-Kirby C, & Zhu SH (2007). Tobacco cessation quitlines in North America: A descriptive study. Tobacco Control, 16 (Suppl 1), i9–i15.

- Dehling T, Gao F, Schneider S, & Sunyaev A (2015). Exploring the far side of mobile health: Information security and privacy of mobile health apps on iOS and Android. JMIR mHealth and uHealth, 3, e8.

- Evans WD, Bihm JW, Szekely D, Nielsen P, Murray E, Abroms L, & Snider J (2014). Initial outcomes from a 4-week follow-up study of the Text4baby program in the military women’s population: Randomized controlled trial. Journal of Medical Internet Research, 16, e131.

- Evans WD, Wallace JL, & Snider J (2012). Pilot evaluation of the text4baby mobile health program. BMC Public Health, 12, 1031.

- Evenson KR, Goto MM, & Furberg RD (2015). Systematic review of the validity and reliability of consumer-wearable activity trackers. International Journal of Behavioral Nutrition and Physical Activity, 12, 159.

- Eysenbach G (2005). The law of attrition. Journal of Medical Internet Research, 7, e11.

- Eysenbach G, Powell J, Englesakis M, Rizo C, & Stern A (2004). Health related virtual communities and electronic support groups: systematic review of the effects of online peer to peer interactions. British Medical Journal, 328, 1166.

- Fahrenberg J (1996). Ambulatory assessment: Issues and perspectives. In Fahrenberg J & Myrtek M (Eds.), Ambulatory assessment: Computer-assisted psychological and psychophysiological methods in monitoring and field studies (pp. 3–20). Seattle, WA: Hogrefe and Huber.

- Fjeldsoe BS, Marshall AL, & Miller YD (2009). Behavior change interventions delivered by mobile telephone short-message service. American Journal of Preventive Medicine, 36, 165–173.

- Fox S (2017). The Social Life of Health Information. Washington, DC: Pew Internet & American Life Project; 2011.

- Frost J, Okun S, Vaughan T, Heywood J, & Wicks P (2011). Patient-reported outcomes as a source of evidence in off-label prescribing: analysis of data from PatientsLikeMe. Journal of Medical Internet Research, 13, e6.

- Garnett C, Crane D, West R, Brown J, & Michie S (2018). The development of Drink Less: an alcohol reduction smartphone app for excessive drinkers. Translational Behavioral Medicine (advance online publication). doi:10.1093/tbm/iby043

- Global Wellness Institute (2018). Global wellness: Statistics and facts. https://globalwellnessinstitute.org/press-room/statistics-and-facts/. Access verified June 11, 2018.

- Goldstein CM, Minges KE, Schoffman DE, & Cases MG (2017). Preparing tomorrow’s behavioral medicine scientists and practitioners: a survey of future directions for education and training. Journal of Behavioral Medicine, 40, 214–226.

- Gorman JR, Roberts SC, Dominick SA, Malcarne VL, Dietz AC, & Su HI (2014). A diversified recruitment approach incorporating social media leads to research participation among young adult-aged female cancer survivors. Journal of Adolescent and Young Adult Oncology, 3, 59–65.

- Grabosch S, Gavard JA, & Mostello D (2014). 151: Text4baby improves glycemic control in pregnant women with diabetes. American Journal of Obstetrics & Gynecology, 210, S88.

- Han CJ, Lee YJ, & Demiris G (2018). Interventions using social media for cancer prevention and management: A systematic review. Cancer Nursing (advance online publication).

- Head KJ, Noar SM, Iannarino NT, & Harrington NG (2013). Efficacy of text messaging-based interventions for health promotion: A meta-analysis. Social Science & Medicine, 97, 41–48.

- Heintzman ND (2016). A digital ecosystem of diabetes data and technology: services, systems, and tools enabled by wearables, sensors, and apps. Journal of Diabetes Science and Technology, 10, 35–41.

- Hekler EB, Klasnja P, Riley WT, Buman MP, Huberty J, Rivera DE, & Martin CA (2016). Agile science: Creating useful products for behavior change in the real world. Translational Behavioral Medicine, 6, 317–328.

- Hekler EB, Rivera DE, Martin CA, Phatak SS, Freigoun MT, Korinek E, … Buman MP (2018). Tutorial for using control systems engineering to optimize adaptive mobile health interventions. Journal of Medical Internet Research, 20, e214.

- Heron KE, & Smyth JM (2010). Ecological momentary interventions: Incorporating mobile technology into psychosocial and health behavior treatments. British Journal of Health Psychology, 15, 1–39.

- Hussain MS, Cripwell L, Berkovsky S, & Freyne J (2016). Promoting UV exposure awareness with persuasive, wearable technologies. Proceedings of Digital Health Innovation for Consumers, Clinicians, Connectivity and Community: Selected Papers from the 24th Australian National Health Informatics Conference (HIC 2016), 48–54.

- Insel TR (2017). Digital phenotyping: Technology for a new science of behavior. JAMA, 318, 1215–1216.

- Iribarren SJ, Ghazzawi A, Sheinfil AZ, Frasca T, Brown W, Lopez-Rios J, … Giguere R (2018). Mixed-method evaluation of social media-based tools and traditional strategies to recruit high-risk and hard-to-reach populations into an HIV prevention intervention study. AIDS and Behavior, 22, 347–357.

- Jake-Schoffman DE, Silfee VJ, Waring ME, Boudreaux ED, Sadasivam RS, Mullen SP, … Pagoto SL (2017). Methods for evaluating the content, usability, and efficacy of commercial mobile health apps. JMIR mHealth and uHealth, 5, e190.

- Jake-Schoffman DE, Turner-McGrievy G, Wilcox S, Moore JB, Hussey JR, & Kaczynski AT (2018). The mFIT (Motivating Families with Interactive Technology) Study: A randomized pilot to promote physical activity and healthy eating through mobile technology. Journal of Technology in Behavioral Science (advance online publication).

- Keller PA, Feltracco A, Bailey LA, Li Z, Niederdeppe J, Baker TB, & Fiore MC (2010). Changes in tobacco quitlines in the United States, 2005–2006. Preventing Chronic Disease, 7, 1–6.

- Klasnja P, Smith SN, Seewald NJ, Lee AJ, Hall K, & Murphy SA (2017, March). Effects of contextually-tailored suggestions for physical activity: The HeartSteps micro-randomized trial. Annals of Behavioral Medicine, 51, S902–S903.

- Krebs P, & Duncan DT (2015). Health app use among US mobile phone owners: A national survey. JMIR mHealth and uHealth, 3, e101.

- Larsen KR, Michie S, Hekler EB, Gibson B, Spruijt-Metz D, Ahern D, … Yi J (2017). Behavior change interventions: the potential of ontologies for advancing science and practice. Journal of Behavioral Medicine, 40, 6–22.

- Ledger D, & McCaffrey D (2014). Inside wearables: How the science of human behavior change offers the secret to long-term engagement. https://blog.endeavour.partners/inside-wearable-how-the-science-of-human-behavior-change-offers-the-secret-to-long-term-engagement-a15b3c7d4cf3. Access verified June 20, 2018.

- Liao P, Klasnja P, Tewari A, & Murphy SA (2016). Sample size calculations for micro‐randomized trials in mHealth. Statistics in Medicine, 35, 1944–1971.

- Lichtenstein E, Glasgow RE, Lando HA, Ossip-Klein DJ, & Boles SM (1996). Telephone counseling for smoking cessation: Rationales and meta-analytic review of evidence. Health Education Research, 11, 243–257.

- Lichtenstein E, Zhu SH, & Tedeschi GJ (2010). Smoking cessation quitlines: An underrecognized intervention success story. American Psychologist, 65, 252–261.

- Lipschitz J, & Torous J (2018). Why it’s so hard to figure out whether health apps work. https://slate.com/technology/2018/05/health-apps-like-headspace-are-hard-to-study-because-we-cant-make-good-placebo-apps.html. Access verified June 20, 2018.

- Lustria MLA, Cortese J, Noar SM, & Glueckauf RL (2009). Computer-tailored health interventions delivered over the Web: Review and analysis of key components. Patient Education and Counseling, 74, 156–173.

- Lustria MLA, Noar SM, Cortese J, Van Stee SK, Glueckauf RL, & Lee J (2013). A meta-analysis of web-delivered tailored health behavior change interventions. Journal of Health Communication, 18, 1039–1069.

- Mack H (2016). Nearly 60 percent of US smartphone owners use phones to manage health. http://www.mobihealthnews.com/content/nearly-60-percent-us-smartphone-owners-use-phones-manage-health. Access verified June 13, 2018.

- Maher CA, Lewis LK, Ferrar K, Marshall S, De Bourdeaudhuij I, & Vandelanotte C (2014). Are health behavior change interventions that use online social networks effective? A systematic review. Journal of Medical Internet Research, 16, e40.

- Martinez O, Wu E, Shultz AZ, Capote J, Rios JL, Sandfort T, … Moya E (2014). Still a hard-to-reach population? Using social media to recruit Latino gay couples for an HIV intervention adaptation study. Journal of Medical Internet Research, 16, e113.

- Mendiola MF, Kalnicki M, & Lindenauer S (2015). Valuable features in mobile health apps for patients and consumers: content analysis of apps and user ratings. JMIR mHealth and uHealth, 3, e40.

- Michie S, Abraham C, Whittington C, McAteer J, & Gupta S (2009). Effective techniques in healthy eating and physical activity interventions: a meta-regression. Health Psychology, 28, 690–701.

- Mohr DC, Schueller SM, Riley WT, Brown CH, Cuijpers P, Duan N, … Cheung K (2015). Trials of intervention principles: evaluation methods for evolving behavioral intervention technologies. Journal of Medical Internet Research, 17, e166.

- Moreno MA, Waite A, Pumper M, Colburn T, Holm M, & Mendoza J (2017). Recruiting adolescent research participants: in-person compared to social media approaches. Cyberpsychology, Behavior, and Social Networking, 20, 64–67.

- Murray E, Hekler EB, Andersson G, Collins LM, Doherty A, Hollis C, … Wyatt JC (2016). Evaluating digital health interventions. American Journal of Preventive Medicine, 51, 843–851.

- Murray JM, Brennan SF, French DP, Patterson CC, Kee F, & Hunter RF (2017). Effectiveness of physical activity interventions in achieving behaviour change maintenance in young and middle aged adults: A systematic review and meta-analysis. Social Science & Medicine, 192, 125–133.

- Nahum-Shani I, Hekler EB, & Spruijt-Metz D (2015). Building health behavior models to guide the development of just-in-time adaptive interventions: A pragmatic framework. Health Psychology, 34, 1209–1219.

- Nahum-Shani I, Smith SN, Spring BJ, Collins LM, Witkiewitz K, Tewari A, & Murphy SA (2018). Just-in-time adaptive interventions (JITAIs) in mobile health: key components and design principles for ongoing health behavior support. Annals of Behavioral Medicine, 52, 446–462.

- National Institute on Alcohol Abuse and Alcoholism (NIAAA) (2018). A second challenge competition for the wearable alcohol biosensor. https://www.niaaa.nih.gov/research/niaaa-research-highlights/second-challenge-competition-wearable-alcohol-biosensor. Access verified June 30, 2018.

- National Institutes of Health (2018a). All of Us Research Program. https://allofus.nih.gov/. Access verified June 30, 2018.

- National Institutes of Health (2018b). Innovation Corps (I-Corps™) at NIH Program for NIH and CDC Translational Research (Admin Supp). https://grants.nih.gov/grants/guide/pa-files/PA-18-314.html. Access verified June 20, 2018.

- Nebeker C, Linares-Orozco R, & Crist K (2015). A multi-case study of research using mobile imaging, sensing and tracking technologies to objectively measure behavior: Ethical issues and insights to guide responsible research practice. Journal of Research Administration, 46, 118–137.