Abstract

Background

Although language deficits after awake brain surgery are usually milder than those after stroke, postoperative language assessments are needed to identify them. Follow-up of brain tumor patients can be difficult in regions where most patients are not local and travel from afar. We developed a short telephone-based test for pre- and postoperative language assessments.

Methods

The development of the TeleLanguage Test was based on the Dutch Linguistic Intraoperative Protocol and existing standardized English batteries. Two parallel versions were composed and tested in healthy native English speakers. Subsequently, the TeleLanguage Test was administered in a group of 14 tumor patients before surgery and at 1 week, 1 month, and 3 months after surgery. The test includes auditory comprehension, repetition, semantic selection, sentence or story completion, verbal naming, and fluency tests. It takes less than 20 minutes to administer.

Results

Healthy participants had no difficulty performing any of the language tests via the phone, attesting to the feasibility of a phone assessment. In the patient group, all TeleLanguage test scores significantly declined shortly after surgery with a recovery to preoperative levels at 3 months postsurgery for naming and fluency tasks and a recovery to normal levels for the other language tasks. Analysis of the in-person language assessments (until 1 month) revealed a similar profile.

Conclusion

Using the TeleLanguage battery to conduct language assessments from afar can provide convenience, might optimize patient care, and can enable longitudinal clinical research. The TeleLanguage Test appears to be a valid tool for various clinical and scientific purposes.

Keywords: aphasia, awake brain surgery, language test, telephone assessment, tumor surgery

Awake brain surgery with intraoperative mapping is currently the gold-standard treatment for brain tumors (gliomas) located in functional regions1 that typically involve language or motor cortices or pathways of the dominant hemisphere.2 According to the meta-analysis of De Witt Hamer et al,3 intraoperative mapping with direct electrical stimulation (DES) is associated with fewer late severe neurological deficits than glioma surgery without DES. Still, a number of neuropsychological studies have shown that clinically relevant language impairments may be found in both the pre- and postoperative phases of awake surgery4–9 and that patients may suffer from aphasia, an impairment in understanding or formulating language that results from brain injury. However, because the typically slow growth of low-grade gliomas allows neural reorganization, obvious linguistic deficits may not be identified when standardized language tests designed for stroke patients are used.1,10 Therefore, more-sensitive language tests are needed to identify the subtler language impairments that tumor patients report. Consequently, the Dutch Linguistic Intraoperative Protocol (DuLIP)11 was developed to measure language functions in the pre-, intra-, and postoperative phases of awake surgery. This protocol consists of tasks and items at all linguistic levels, including phonology, semantics, and (morpho)syntax, at different difficulty levels for language production and perception.

Wilson et al12 evaluated the language of 110 patients from the University of California San Francisco (UCSF) Medical Center. The patients were assessed prior to surgery and at 2-3 days and 1 month postsurgery using the Western Aphasia Battery (WAB)13 and the Boston Naming Test.14 Their findings showed that transient aphasia is very common (in 71% of patients) after left hemisphere surgery and that the nature of the aphasia depends on the specific location of the surgical site. At 1 month postsurgery, only naming differed significantly from its presurgical level. More recently, a newly composed test battery, the quick aphasia battery (QAB),15 has been applied at UCSF for pre- and postoperative assessments of brain tumor patients. The QAB was developed to gather relevant information on the different linguistic levels and major language functions in a short period of time. In addition, as the QAB uses graded systems to quantify deficits, it might be more sensitive than the WAB in detecting mild language deficits.

Unfortunately, postoperative language follow-up of tumor patients in the San Francisco Bay Area can be difficult as many are referred from outside the area and find it difficult to return for follow-up testing. Many other US and European sites experience the same problem, particularly with extended follow-ups. Consequently, few long-term data are collected even though it has been shown that language recovery can take longer than several months.4–6,16–19 Administering language tests such as the WAB13,14 or QAB via video calling (eg, Skype) proved inefficient because patients were not always able to use video calling or their internet connection could not support it. Since most tumor patients are contacted by phone postsurgery for clinical follow-up, it seems more feasible to conduct follow-up language testing via the telephone than via video calling. In fact, numerous studies have shown that various cognitive functions can be measured reliably and precisely over the telephone in patients with stroke, cancer, or Alzheimer disease and in elderly individuals.20–24 However, no language-focused telephone assessments are available, nor have existing standardized language batteries been tested via the telephone. To fill this void, we developed a short telephone-based language test battery for pre- and postoperative language assessments to follow patients over longer periods of time. In this pilot study, a group of 14 brain tumor patients undergoing awake surgery for resection of language-dominant hemisphere tumors and 14 matched controls were evaluated with a telephone-based test battery. The objectives of this study were (1) to compose a feasible telephone-based language test battery for brain tumor patients, (2) to collect longer follow-up data (currently at 3 months postsurgery), and (3) to validate the telephone-based language battery by comparing the collected telephone data with traditional face-to-face assessments.

Materials and Methods

Development of the TeleLanguage Test

Procedure

Task selection for the TeleLanguage Test was based on the Dutch Linguistic Intraoperative Protocol11 and English aphasia batteries such as the WAB,13 Boston Diagnostic Aphasia Examination25 and the QAB.15 We composed a language battery consisting of a naming task, phonological tasks (repetition, letter fluency), semantic tasks (semantic selection, semantic fluency), and syntactic language tests (story completion, sentence completion) using different difficulty levels to identify both mild and more severe language deficits. A comprehension screening was added to exclude patients with severe comprehension disorders who would not be able to perform the tests via the telephone. The test items were selected on the basis of linguistic variables, such as word frequency, word length, and morphological and phonological form, so each task had both easy and more complex items with increasing levels of difficulty. We developed 2 parallel versions of the TeleLanguage Test (version A and B, except for the fluency tasks) that were matched to avoid learning and practice effects. These 2 versions were tested in a group of 10 healthy native English speakers to evaluate the selection of items. The administration of the whole TeleLanguage Test did not last longer than 20 minutes. Based on the assessment in the healthy test group, some items from the semantic selection task were adapted (eg, when the target answer was not found by more than 2 participants) and some repetition items (including many high-frequency sounds) were deleted to avoid misunderstanding via the telephone before administration in the tumor group.

Description of tests

All tests used are illustrated in Table 1, including their provenance and number of items.

Table 1.

Description of TeleLanguage Tasks With Item Examples

| Task | Example (Item) |
|---|---|
| Comprehension screening (n = 5; adapted from WAB) | I am going to ask you some questions. Answer yes or no: 1. Is your name X? 2. Do you eat a banana before you peel it? |
| Verbal naming test (n = 25) (adapted from verbal naming test26) | I am going to describe an object or a verb and I want you to tell me the name of what I am describing: 1. A large animal in Africa with a trunk. 2. What ice does when it gets hot. |
| Word and sentence repetition (n = 10; adapted from WAB, BDAE) | Repeat after me: 1. Bed 2. Screwdriver 3. Methodist episcopal 4. He unlocks the heavy oak door. |
| Letter fluency (adapted from WAB) | Name as many words that start with the letter F as you can in 1 minute. |
| Semantic noun and verb selection (n = 10; adapted from DuLIP) | Two words will go together best because of their meaning and one word will not. Tell me the word that does not fit. 1. Banana, apple, carrot 2. Talk, tell, sing |
| Semantic fluency (adapted from WAB) | Name as many animals as you can in 1 minute. |
| Story completion (n = 5; adapted from Goodglass story completion27) | Complete the story: 1. My dog is hungry and I have a bone in my hand. What’s next? 2. The mouse was running around. A cat came along. The mouse did not see the cat running after him. What happened to the mouse? The mouse ... |
| Sentence completion (n = 10; adapted from DuLIP) | Complete the sentence in a meaningful way 1. The man knows that ... 2. I’ll do it when ... |

Abbreviations: BDAE, Boston Diagnostic Aphasia Examination; DuLIP, Dutch Linguistic Intraoperative Protocol; WAB, Western Aphasia Battery.

Test administration, registration and scoring

In each version (A or B), the tests were administered in identical order: 1. comprehension screening, 2. verbal naming, 3. repetition, 4. semantic selection, 5. story completion, 6. letter fluency, 7. semantic fluency, 8. sentence completion. Practice items were included for each subtest to ensure that participants understood the task. The answers were recorded verbatim and transcribed. One point was given if the target answer was produced correctly and, for the fluency and naming tasks, within the given time frame (within 10 seconds for naming). For the repetition task, minor dysarthric errors were allowed and articulation errors were marked in the response column. A single repetition by the examiner could be given if requested (eg, if the patient asked or did not seem to hear). The accuracy of the scoring was checked by the first author and was based on qualitative terms agreed on a priori between the first and second authors. The administrators were speech language pathologists and neuropsychologists trained in language assessments. Uncertainties regarding the correctness of test items were discussed with the other authors until a consensus was reached.
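The scoring rule above can be sketched in Python as follows. This is a minimal illustration and not the authors' scoring procedure: the `Response` record, its `latency_s` field, and all function names are hypothetical. It assumes that "within 10 seconds" means a latency of at most 10 seconds for naming items, and that fluency responses count when produced within the 1-minute window given in Table 1.

```python
from dataclasses import dataclass

NAMING_LIMIT_S = 10.0    # naming answers had to come within 10 seconds
FLUENCY_WINDOW_S = 60.0  # fluency tasks ran for 1 minute (Table 1)


@dataclass
class Response:
    """One transcribed answer (hypothetical record layout)."""
    correct: bool           # examiner judged the target answer as produced
    latency_s: float = 0.0  # seconds until the answer; assumed to be timed


def score_item(response, time_limit_s=None):
    """Return 1 for a correct answer given in time, otherwise 0.

    Pass time_limit_s=None for untimed tasks such as repetition.
    """
    if not response.correct:
        return 0
    if time_limit_s is not None and response.latency_s > time_limit_s:
        return 0
    return 1


def score_naming(responses):
    """Total naming score: one point per correct answer within the limit."""
    return sum(score_item(r, NAMING_LIMIT_S) for r in responses)


def score_fluency(word_onsets_s):
    """Count accepted words whose onset falls inside the 1-minute window."""
    return sum(1 for t in word_onsets_s if t <= FLUENCY_WINDOW_S)


# Hypothetical example data, not taken from the study.
naming = [Response(True, 2.1), Response(True, 12.4), Response(False, 3.0)]
print(score_naming(naming))              # 1
print(score_fluency([1.5, 20.0, 61.2]))  # 2
```

Keeping the time limit as a parameter lets the same function serve both timed and untimed subtests.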

Clinical Use of TeleLanguage in Tumor Patients and Matched Healthy Controls

Participants

A consecutive series of 18 patients with left hemisphere tumors, who all underwent intraoperative language mapping during resection, were screened for this study. Exclusion criteria were nonfluency in English, a history of a medical or psychiatric condition known to affect language functions, persistent language deficits as a result of prior treatment, mild to severe preoperative language deficits (less than 4 out of 5 for the comprehension screening), auditory or severe visual disorder, and developmental delay. Four patients were excluded because of nonfluent English; consequently, 14 patients were administered the TeleLanguage protocol (see Table 2 for demographics and clinical characteristics). Handedness was formally assessed with the short form of the Edinburgh Handedness Inventory,28 which showed that all 14 patients were right-handed. Intraoperative and postoperative reports revealed detection of cortical language sites in all but 3 patients (Patients 2, 3, and 11), detection of subcortical language sites in Patients 3, 4, 7, and 14, and concomitant apraxia of speech in Patients 3, 7, and 14.

Table 2.

Demographics and Clinical Characteristics of Patients

| Patient | Gender | Age (years) | Education (years) | Handedness | Tumor Location | Tumor Type and Grade |
|---|---|---|---|---|---|---|
| Patients with Highest Mean Language Scores | | | | | | |
| 2 | M | 63 | 12 | Right | L frontal | Glioblastoma IV |
| 5 | M | 63 | 15 | Right | L temporal | Glioblastoma IV |
| 10 | M | 52 | 18 | Right | L temporal | Glioblastoma IV |
| 11 | M | 52 | 16 | Right | L frontal | Oligodendroglioma III |
| Patients with Lowest Mean Language Scores | | | | | | |
| 3 | M | 50 | 15 | Right | L temporal or insular | Glioblastoma IV |
| 4 | F | 67 | 12 | Right | L parietal | Glioblastoma IV |
| 7 | M | 58 | 14 | Right | L temporal or insular | Oligodendroglioma II |
| 14 | M | 39 | 17 | Right | L temporal | Glioblastoma IV |
| Patients with Middle Mean Language Scores | | | | | | |
| 1 | F | 50 | 14 | Right | L temporal | Oligodendroglioma II |
| 6 | F | 68 | 13 | Right | L parietal | Glioblastoma IV |
| 8 | M | 52 | 19 | Right | L insular | Glioblastoma IV |
| 9 | M | 42 | 17 | Right | L frontal | Oligodendroglioma II |
| 12 | M | 66 | 19 | Right | L frontal | Oligodendroglioma II |
| 13 | F | 50 | 16 | Right | L temporal | Oligodendroglioma II |

Abbreviations: F, female; L, left; M, male.

A mean language score was calculated per patient by pooling all postoperative scores per subtest together and taking the mean of these TeleLanguage test scores (using percentages). See Results section for clarification.
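One possible reading of this calculation is sketched below in Python. It is an illustration under stated assumptions, not the authors' procedure: the function and dictionary names are hypothetical, the maximum scores are those listed in Table 1, and the fluency tasks are left out because they have no maximum score and the text does not say how they entered the percentage.

```python
# Maximum raw scores per subtest, taken from Table 1.
MAX_SCORES = {
    "verbal_naming": 25,
    "repetition": 10,
    "semantic_selection": 10,
    "story_completion": 5,
    "sentence_completion": 10,
}


def mean_language_score(postop_sessions):
    """Mean percentage score pooled over all postoperative sessions.

    postop_sessions maps a time point label (eg, "T2") to a dict of
    raw scores per subtest. Every raw score is converted to a
    percentage of its maximum, and all percentages are averaged.
    """
    percentages = [
        100 * raw / MAX_SCORES[task]
        for session in postop_sessions.values()
        for task, raw in session.items()
    ]
    return sum(percentages) / len(percentages)


# Hypothetical patient data, not taken from the study.
patient = {
    "T2": {"verbal_naming": 20, "repetition": 8},
    "T3": {"verbal_naming": 25, "repetition": 10},
}
print(mean_language_score(patient))  # 90.0
```

Converting to percentages first keeps subtests with different item counts on the same scale before they are averaged.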

The 14 healthy participants were matched for gender (4 females, 10 males), age (mean: 55.29 years, SD: 10.33, range 39-71) and education levels (mean: 15.64 years, SD: 2.24, range 12-19). Exclusion criteria included nonfluent English speakers, history of psychiatric diseases, alcohol and/or drug addiction, sleep medication, impaired hearing or vision. Informed consent for the study was obtained from all participants. This study was performed under a protocol approved by the UCSF Committee on Human Research.

Procedure

The TeleLanguage Test was administered to 14 tumor patients prior to surgery and again within 1 week, 1 month, and 3 months after surgery. Seven patients received the protocol in A-B-A-B order (Patients 1, 3, 5, 7, 9, 11, and 13) and 7 patients (2, 4, 6, 8, 10, 12, and 14) in the reverse order, B-A-B-A.
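The alternating version order described above can be expressed compactly. The Python sketch below is illustrative only; the function names are hypothetical, and it assumes that the listed patient numbers follow a simple parity rule (odd numbers start with version A, even numbers with version B), which matches the two groups given in the text.

```python
def version_for(participant_id, session_index):
    """Return "A" or "B" for a participant at a 0-based session index.

    Odd-numbered participants start with version A and even-numbered
    participants with version B; versions then alternate per session.
    """
    first, second = ("A", "B") if participant_id % 2 == 1 else ("B", "A")
    return first if session_index % 2 == 0 else second


def schedule(participant_id, n_sessions=4):
    """Version order across sessions (4 sessions: preop plus 3 follow-ups)."""
    return [version_for(participant_id, s) for s in range(n_sessions)]


print(schedule(1))  # ['A', 'B', 'A', 'B']
print(schedule(2))  # ['B', 'A', 'B', 'A']
```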

When possible, in-person assessments were also performed in this patient group to compare TeleLanguage data with data collected face to face. The in-person assessments consisted of the QAB (3 parallel versions) prior to surgery, within 1 week after surgery, and at 1 month after surgery. A selection of subtests from the QAB was used to compare with TeleLanguage (Table 3). The TeleLanguage and QAB do not necessarily have a one-to-one mapping, but the best possible analogs were used.

Table 3.

Selection and Description of Subtests from the QAB that Are Used to Compare with Subtests from the TeleLanguage Test

| Phone Assessments (TeleLanguage) | In-Person Assessments (QAB) |
|---|---|
| Verbal naming | QAB naming: visual naming of 6 colored pictures. (n = 6) |
| Repetition | QAB repetition: repetition of words (n = 4) and sentences (n = 2) increasing in length. |
| Semantic selection | QAB semantic nonverbal cognition: Participants were asked to find the picture (1 out of 6) that did not fit on the basis of semantic relationships (only the first 2 series were selected because the other 4 series of odd picture out were based on visual categorization instead of semantic categorization as in the phone test). (n = 2) |
| Story completion with full sentence | QAB sentence production: The participant needs to describe 2 stimulus cards. “What is happening here?” For example, The boy is pushing the girl. (n = 2) |
| Sentence completion | QAB connected speech: A conversation with the participant for at least 3 minutes, around 1 or more topics. The following scales were rated from 0 (mute) to 6 (normal): paragrammatism, agrammatism, semantic paraphasia, phonemic paraphasia, other lexical access difficulties. These scales were selected to compare with sentence completion from TeleLanguage. |

Abbreviation: QAB, quick aphasia battery.

To investigate how the patients scored in comparison to their healthy peers, 14 matched controls (according to gender, age, and education) were selected to participate in this study and also underwent the TeleLanguage protocol. Both versions A and B were administered 2 weeks apart: 7 controls (odd numbers) in A-B order and 7 controls (even numbers) in B-A order.

Statistics

First, the nonparametric paired Wilcoxon signed-rank test was used to compare the healthy control data from version A with those from version B of the TeleLanguage Test to determine whether versions A and B were indeed similar. Second, we investigated whether the mean pre- (T1) and postoperative raw TeleLanguage test scores (T2, T3, and T4) of the patients differed significantly from those of the healthy controls using the Mann-Whitney U test. Third, the patients’ pre- and postoperative raw scores were compared both for the TeleLanguage assessments and the in-person assessments using nonparametric paired Wilcoxon signed-rank tests. For the TeleLanguage assessments, z scores were also calculated (using the mean and SD from the healthy control data) to identify whether a score was clinically (≤–1.5 SD) or pathologically (≤–2 SD) impaired.29 Finally, given that normative data for the QAB subtests are not yet available, the in-person data and TeleLanguage data were transformed into percentages (the raw score divided by the maximum score and multiplied by 100) and compared with a paired Wilcoxon signed-rank test.
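The z score cutoffs and the percentage transformation can be illustrated with a short Python sketch. The function names and example numbers are hypothetical, and the use of the sample SD (n − 1 denominator) is an assumption, since the text does not specify which SD was used. The sketch returns no z score when the controls show no variance, as happens for tasks on which every control scores at ceiling; how such tasks were handled in the study is not stated.

```python
from statistics import mean, stdev

CLINICAL_CUTOFF = -1.5      # clinically impaired at z <= -1.5
PATHOLOGICAL_CUTOFF = -2.0  # pathologically impaired at z <= -2


def z_score(raw, control_scores):
    """z score of a raw score relative to the healthy control sample.

    Returns None when the controls show no variance, because the
    z score is undefined in that case.
    """
    sd = stdev(control_scores)  # sample SD; an assumption
    if sd == 0:
        return None
    return (raw - mean(control_scores)) / sd


def classify(z):
    """Label a z score using the two cutoffs described in the text."""
    if z is None:
        return "undefined"
    if z <= PATHOLOGICAL_CUTOFF:
        return "pathological"
    if z <= CLINICAL_CUTOFF:
        return "clinical"
    return "unimpaired"


def to_percentage(raw, max_score):
    """Raw score divided by the maximum score, multiplied by 100."""
    return 100 * raw / max_score


# Hypothetical control data for a fluency-like task.
controls = [20, 22, 24, 26, 28]
for raw in (23, 18, 17):
    print(raw, classify(z_score(raw, controls)))
print(to_percentage(24, 25))
```

With these hypothetical controls (mean 24, sample SD about 3.16), a raw score of 18 falls between the two cutoffs and a raw score of 17 falls below both.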

Analyses were performed using IBM SPSS Statistics 23 and results were considered significant at P < .05. As this is a small exploratory study, we did not correct for multiple comparisons.
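For readers without access to SPSS, the paired comparison can be sketched in plain Python. The code below is a minimal illustration of an exact, two-sided Wilcoxon signed-rank test for small samples, not a reproduction of the SPSS analysis: the function names and example scores are hypothetical, zero differences are dropped, tied absolute differences receive average ranks, and the P value is obtained by enumerating all sign assignments. Statistical packages often report a normal approximation instead, so their P values may differ from the exact ones.

```python
from itertools import product


def average_ranks(values):
    """Rank values from 1 to n, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j are 0-based
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def wilcoxon_signed_rank_exact(before, after):
    """Return (W+, two-sided exact P) for paired samples.

    W+ is the sum of the ranks of the positive differences (after - before).
    Enumeration costs 2**n steps, so this suits small samples only.
    """
    diffs = [a - b for b, a in zip(before, after) if a != b]
    if not diffs:
        return 0.0, 1.0
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    expected = sum(ranks) / 2
    observed_dev = abs(w_plus - expected)
    extreme = 0
    for signs in product((0, 1), repeat=len(ranks)):
        w = sum(r for r, s in zip(ranks, signs) if s)
        if abs(w - expected) >= observed_dev - 1e-12:
            extreme += 1
    return w_plus, extreme / 2 ** len(ranks)


# Hypothetical pre- and postoperative naming scores for 5 patients.
preop = [25, 24, 25, 23, 25]
postop = [20, 21, 22, 19, 24]
w, p = wilcoxon_signed_rank_exact(preop, postop)
print(w, p)  # 0 0.0625
```

In this example every patient declines, yet the exact two-sided P value is .0625: with only 5 pairs, the test cannot reach P < .05, which is one reason small pilot samples limit the conclusions that can be drawn.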

Results

Healthy Controls

All healthy controls obtained maximum scores for the comprehension screening (max 5), repetition task (max 10), story completion task (max 5), and sentence completion task (max 10). Mean scores were 24.71 for naming (version A: 24.74, version B: 24.67, max 25) and 9.63 for semantic selection (version A: 9.5, version B: 9.75, max 10). Only a small number of healthy controls made errors on naming and semantic selection, and they made no more than 1 or 2 each.

In the healthy control group there was no significant difference in performance between the language tasks from version A and B (for all language tasks: P ≥ .257).

Patients vs Healthy Controls

Patients’ preoperative scores (T1) were worse compared to healthy controls’ on the verbal naming task (P = .001), letter fluency (P = .008) and semantic fluency task (P = .001).

At 1 week postsurgery (T2), patients obtained significantly worse scores for all language tasks: verbal naming (P < .001), repetition (P = .039), semantic selection (P < .001), story completion (P = .014), letter fluency (P < .001), semantic fluency task (P < .001) and sentence completion (P < .001).

At 1 month postsurgery (T3), almost the same psychometric profile was found as at T2. Only semantic selection (P = .578) had recovered to the normal range of healthy controls; the other tasks remained significantly impaired (verbal naming: P = .001, repetition: P = .016, story completion: P = .016, letter fluency: P = .001, semantic fluency: P < .001, and sentence completion: P = .004).

At 3 months postsurgery (T4), performance on verbal naming (P = .045), letter fluency (P = .05) and semantic fluency (P = .01) was still significantly lower than for healthy controls.

Within Patients, Between Test Moments

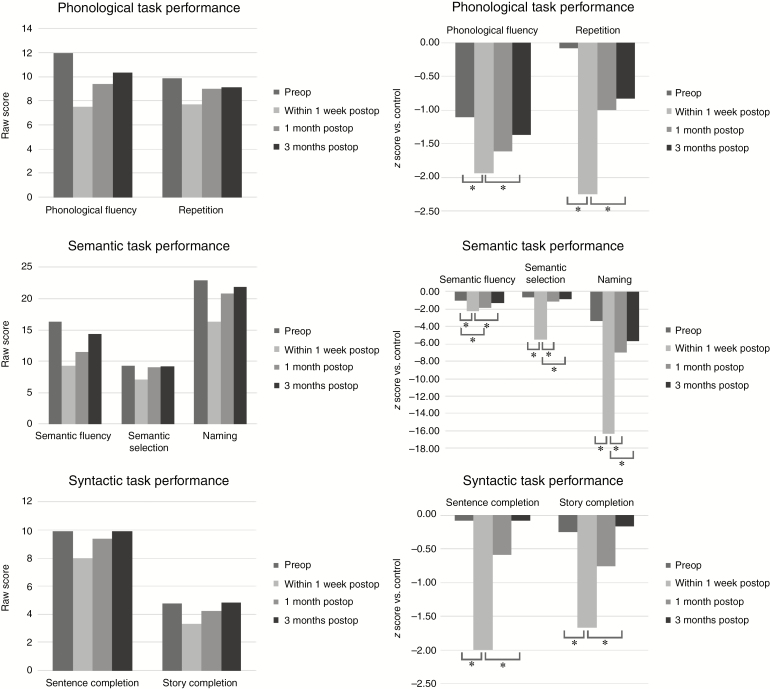

Using raw scores (N = 14), the TeleLanguage assessments revealed a significant decline at 1 week postsurgery for all language tasks: verbal naming (P = .003), repetition (P = .026), semantic selection (P = .003), story completion (P = .010), letter fluency (P = .002), semantic fluency (P = .002), and sentence completion (P = .002). During short-term follow-up (between 1 week and 1 month postsurgery), a significant improvement was observed for verbal naming (P = .002) and semantic selection (P = .007). During long-term follow-up (between 1 week and 3 months postsurgery), a significant improvement was observed for all language tasks: verbal naming (P = .003), repetition (P = .027), semantic selection (P = .005), story completion (P = .013), letter fluency (P = .002), semantic fluency (P = .002), and sentence completion (P = .002). Semantic fluency (P = .005) was the only task that still showed impairment at 1 month postsurgery relative to the preoperative assessments. No significant differences were found between the preoperative assessments and the 3-month postoperative follow-up. We repeated the same analyses with z scores, with similar results. In Fig. 1 the raw scores as well as the z scores illustrate whether the mean scores were clinically (≤–1.5) or pathologically (≤–2) impaired.29

Fig. 1.

Patients’ language performance (in raw scores and z scores) on the TeleLanguage subtests. Asterisk indicates significant results at an alpha level of <.05. Abbreviations: preop, preoperative; postop, postoperative.

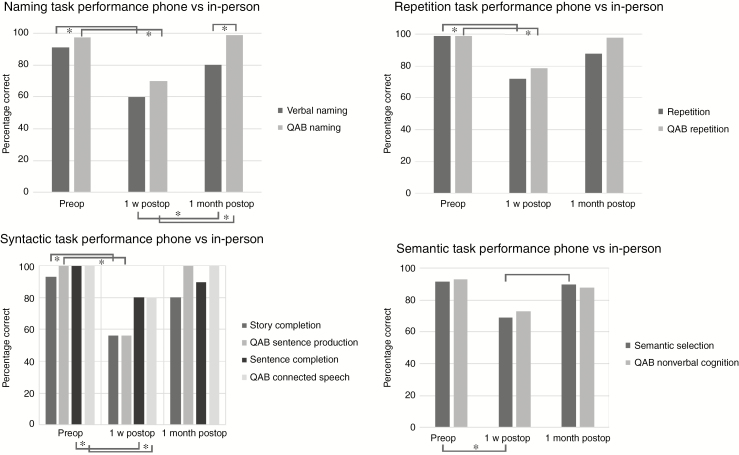

In-person data were missing for 3 patients and no 3-month follow-up in-person data were available. Consequently, the in-person data were analyzed for the remaining 11 patients prior to surgery and at 1 week and 1 month postsurgery. The QAB tests from Table 3 were selected and percentage scores were used to compare the performances at different time points (Fig. 2). At 1 week postsurgery, there was a significant decline for all selected QAB tasks except for semantic nonverbal cognition (P = .48): QAB naming (P = .018), QAB repetition (P = .011), QAB sentence production (P = .028), and QAB connected speech (P = .043). During short-term follow-up (between 1 week and 1 month postsurgery), a significant improvement was observed for QAB naming (P = .018). No significant differences were found between the preoperative assessments and the 1-month postoperative follow-up.

Fig. 2.

Comparison of in-person language performance and TeleLanguage performance in percentage correct. Asterisk indicates significant results at an alpha level of <.05. Abbreviation: QAB, quick aphasia battery.

For the TeleLanguage assessments, the nonparametric paired tests were repeated using percentage scores for a selection of the tests described in Table 3 and for 11 patients for whom in-person data were available. The pattern of results was similar for this set of tasks in this group of patients as it was for the in-person data except for the semantic selection task (Fig. 2). There was a significant decline at 1 week postsurgery for verbal naming (P = .011), repetition (P = .041), semantic selection (P = .011), story completion (P = .027), and sentence completion (P = .011). During short-term follow-up (between 1 week and 1 month postsurgery), a significant improvement was observed for verbal naming (P = .007) and semantic selection (P = .026). No significant differences were found between the preoperative assessments and the 1-month postoperative follow-up.

In-Person Data vs Telephone Data

The in-person data (using percentages) were compared with the TeleLanguage data for 11 patients prior to surgery, at 1 week, and at 1 month postsurgery. The Wilcoxon signed rank test showed significant differences between telephone data and in-person data only for the following task at one test moment: verbal naming vs QAB naming at 1 month postsurgery (P = .041) (Fig. 2).

Discussion

In this study, a telephone-based English language battery (the TeleLanguage Test) was developed for the first time to enable follow-up of more brain tumor patients undergoing awake surgery. The TeleLanguage Test consists of a naming task, 2 phonological, 2 semantic, and 2 syntactic tasks and assesses various linguistic functions in a short period of time. In this exploratory study, TeleLanguage was administered to a group of 14 brain tumor patients who also had in-person assessments, and to a group of 14 carefully matched healthy controls. The high feasibility and preliminary validity of TeleLanguage as well as some limitations and future directions are discussed in the sections below.

Feasibility of Telephone-Based Language Battery (TeleLanguage)

Analysis of the data revealed that assessing language functions via the telephone with the TeleLanguage instrument is feasible both in healthy controls and in brain tumor patients. As no significant differences were found between the 2 parallel versions A and B, the versions appear comparable and therefore equally reliable for detecting language deficits.

In the healthy control group, ceiling effects were found for all tasks except for the fluency tasks. The target answers in the fluency tasks were numerous and there was no maximum score, which induced more variance between participants than in the other linguistic tasks. The few errors made by the control group in the naming and semantic selection task could not be systematically related to specific items.

In the patient group, the ceiling effects were no longer visible and, as expected, errors were made in multiple tasks (see next section), especially in the postoperative phase.

Clinical Application and Validity of TeleLanguage

Both pre- and postoperatively, the mean performance of the patients was worse than the performance of the normal population, especially for verbal naming and the fluency tasks as these task scores were still impaired at 3 months postsurgery. This is in accordance with other studies showing long-term deficits for naming and fluency.4–6,18,19

In the patient group, all TeleLanguage test scores significantly declined shortly (1 week) after surgery with a recovery to preoperative levels at 3 months postsurgery for naming and fluency tasks and a recovery to normal levels for the other language tasks (repetition, semantic selection, story completion, and sentence completion). In the study by Wilson et al,12 the WAB13 language tasks revealed language recovery to the presurgical level at 1 month postsurgery except for the naming task. Although their study included a comparable group of brain tumor patients (44% low-grade gliomas vs 43% in our study) in the same setting (UCSF Medical Center), the following differences might explain the earlier recovery they found. First, the number of patients in Wilson’s study was higher (n = 110) and the group studied included not only brain tumor patients but also patients with epilepsy (n = 13), vascular malformations (n = 5), and other etiologies (n = 4) who might have had higher language scores, influencing the total scores. Second, the WAB tests were developed to identify aphasia in stroke patients, who typically have more severe language deficits, whereas TeleLanguage was composed to detect mild language problems. Therefore, subtler language deficits might have been overlooked by the WAB tests. Third, other factors such as tumor location, extent of resection, and adjuvant therapy might have differed between the studies and might have differentially affected the results.4,5,16,30,31

In agreement with many studies, our study shows that naming and semantic fluency seem to have the worst recovery trajectory. Anomia is indeed one of the most pervasive deficits and has been linked to several (posterior) brain regions and pathways.32,33 The multidimensional nature of semantic fluency (lexical retrieval, semantic memory, attention, executive functions), which requires a larger functional network,34 might explain why compensatory mechanisms affect semantic fluency only after restoration of the linguistic and attentional networks. The posterior tumor location in almost two-thirds of our cases (9 out of 14) might also explain the low scores for semantic tasks and fluency tasks, as naming or semantics and attentional and executive functions have been related to temporal and parietal locations. When we compare the 4 cases with the worst language performance (Patients 3, 4, 7, and 14, Table 2) and the 4 cases with the best language performance (Patients 2, 5, 10, and 11, Table 2), intraoperative detection of subcortical language sites and concomitant apraxia of speech are linked to the first group and not to the latter. Although the sample size is small, subcortical injuries35 and concomitant speech problems36 have been shown to negatively influence language scores.

Analysis of the in-person data (until 1 month) revealed a profile similar to that described above except for the semantic nonverbal cognition performance, which did not decline after surgery. Only the first 2 items of the nonverbal cognition task were selected as only these items require semantic categorization (as in TeleLanguage) instead of visual categorization. The small number of items might explain why no significant differences were found between test moments and why the semantic nonverbal cognition task might not be sensitive enough. As the semantic selection task seems to be a sensitive task (significant decline, significant improvement), a longer in-person assessment version (more items) should be developed in the future. Although the psychometric profile of the naming performance between test moments (pre-, post-1 week, post-1 month) was similar for QAB naming and TeleLanguage verbal naming, a significant difference was found between the in-person and telephone data at 1 month postsurgery, with significantly higher scores for QAB naming than for TeleLanguage verbal naming. As the QAB naming task included only 6 items, fewer low-frequency items, and no verbs, this naming task might be easier than verbal naming.37 Consequently, the difficulty level and not the administration mode (telephone vs in-person) probably explains the significant difference found between the 2 naming tasks.

To conclude, TeleLanguage seems to be a feasible and very practical measure for language assessments in brain tumor patients undergoing awake surgery. TeleLanguage also seems to be a valid alternative to in-person assessments. In addition, the verbal naming task and especially the semantic selection task of TeleLanguage seem to be even more sensitive than the in-person measures and alternatives.

Limitations and Future Directions

First, this exploratory study was performed with only a small number of patients to pilot the use of TeleLanguage for follow-up of brain tumor patients undergoing awake surgery. No correlational analyses were executed as the in-person tasks used visual stimuli and were therefore very different in nature from the telephone tasks using auditory stimuli. Because the psychometric profile of the telephone and in-person language performance between test moments was similar, however, the preliminary validity data are promising, and larger studies are needed to confirm our findings. In future studies a control group of patients with a brain tumor in the nondominant hemisphere could be included to take into account generalized surgical effects. Currently, nondominant cases are not referred for pre- and postoperative language assessments.

Second, because most of our patients had mild language deficits, we did not test the application of TeleLanguage in patients with more-severe language impairments, which might be more difficult. In our consecutive series of patients, we have not yet encountered patients with severe language impairments. In the future development of TeleLanguage, the current “general comprehension screening” will be included as a formal test within the battery so that patients with severe comprehension deficits are not excluded. For the present manuscript, however, this change would not have affected our results because all patients in our consecutive series were able to complete the comprehension test. Moreover, this battery is especially designed for patients with mild language deficits such as tumor patients38 and might be further clinically tested in other patient populations with mild language problems such as mild head trauma and cerebellar patients.

Third, all TeleLanguage tasks had 2 parallel versions except for the fluency tasks, which might have positively affected the fluency scores because of practice effects. Nevertheless, because fluency deficits were still found at 3 months postsurgery, a learning effect was probably negligible.

Although no significant differences were found in our study for repetition by telephone vs in person (unlike in Pendlebury et al22), detailed analysis of the telephone repetition data is more difficult than for the in-person repetition data. A qualitative classification of repetition errors into categories such as deletion or substitution is hard when the task is administered via the telephone and the speaker’s face is not visible. Therefore, the repetition task can be part of a telephone-based language test battery to evaluate language in general, but if the focus of the study is to analyze repetition errors quantitatively and qualitatively, video or in-person assessments are more useful. Although nonword repetition has proven to be sensitive,39,40 we decided not to include it in the telephone battery for the same reason: video or in-person assessments are required to reliably test and score a nonword repetition task.

For future data collection, the whole assessment will be recorded so that we can calculate reaction times, which have been shown to add predictive value beyond correctness.41

Finally, the impact of external factors (eg, television, radio, other interruptions) and internal factors (eg, tiredness, medical illness) on a patient’s performance during telephone assessment cannot be completely excluded. We attempted to reduce any type of interference by requesting at the start of the session that each participant sit alone in a quiet room and turn off all media, in accordance with best practices for remote assessments.42

Conclusion

The use of the TeleLanguage battery to conduct language assessments from afar can provide convenience, reduce travel costs, and optimize patient care, as assessment services might be unavailable locally. In addition, it may help decrease the number of drop-outs in longitudinal clinical research studies because it reduces the burden associated with frequent in-person follow-up testing. The feasibility and preliminary validity of this brief test battery appear to be high. Therefore, TeleLanguage seems to be a valid and reliable tool for various clinical and scientific purposes.

Funding

This work was supported by a postdoctoral fellowship of the Research Foundation—Flanders (FWO) [to E.D.W.]; and the Netherlands Organization for Scientific Research [446-13-009 to V.P.].

Conflict of interest statement

None declared.

References

- 1. De Witte E, Mariën P. The neurolinguistic approach to awake surgery reviewed. Clin Neurol Neurosurg. 2013;115(2):127–145.

- 2. Duffau H. Contribution of cortical and subcortical electrostimulation in brain glioma surgery: methodological and functional considerations. Neurophysiol Clin. 2007;37(6):373–382.

- 3. De Witt Hamer PC, Robles SG, Zwinderman AH et al. Impact of intraoperative stimulation brain mapping on glioma surgery outcome: a meta-analysis. J Clin Oncol. 2012;30(20):2559–2565.

- 4. Satoer D, Vork J, Visch-Brink E, Smits M, Dirven C, Vincent A. Cognitive functioning early after surgery of gliomas in eloquent areas. J Neurosurg. 2012;117(5):831–838.

- 5. Satoer D, Visch-Brink E, Smits M et al. Long-term evaluation of cognition after glioma surgery in eloquent areas. J Neurooncol. 2014;116(1):153–160.

- 6. Talacchi A, Santini B, Savazzi S, Gerosa M. Cognitive effects of tumour and surgical treatment in glioma patients. J Neurooncol. 2011;103(3):541–549.

- 7. Teixidor P, Gatignol P, Leroy M, Masuet-Aumatell C, Capelle L, Duffau H. Assessment of verbal working memory before and after surgery for low-grade glioma. J Neurooncol. 2007;81(3):305–313.

- 8. Tucha O, Smely C, Preier M, Becker G, Paul GM, Lange KW. Preoperative and postoperative cognitive functioning in patients with frontal meningiomas. J Neurosurg. 2003;98(1):21–31.

- 9. Wu AS, Witgert ME, Lang FF et al. Neurocognitive function before and after surgery for insular gliomas. J Neurosurg. 2011;115(6):1115–1125.

- 10. Duffau H. Brain plasticity and tumors. Adv Tech Stand Neurosurg. 2008;33:3–33.

- 11. De Witte E, Satoer D, Robert E et al. The Dutch linguistic intraoperative protocol: a valid linguistic approach to awake brain surgery. Brain Lang. 2015;140:35–48.

- 12. Wilson SM, Lam D, Babiak MC et al. Transient aphasias after left hemisphere resective surgery. J Neurosurg. 2015;123(3):581–593.

- 13. Kertesz A. Western Aphasia Battery Revised. Examiner’s Manual. San Antonio, Texas: Harcourt Assessment Inc; 2007.

- 14. Kaplan EF, Goodglass H, Weintraub S. The Boston Naming Test. 2nd ed. Philadelphia, PA: Lea and Febiger; 1983.

- 15. Wilson SM, Eriksson DK, Schneck SM, Lucanie JM. A quick aphasia battery for efficient, reliable, and multidimensional assessment of language function. PLoS One. 2018;13(2):e0192773.

- 16. Ilmberger J, Ruge M, Kreth FW, Briegel J, Reulen HJ, Tonn JC. Intraoperative mapping of language functions: a longitudinal neurolinguistic analysis. J Neurosurg. 2008;109(4):583–592.

- 17. Mandonnet E, De Witt Hamer P, Poisson I et al. Initial experience using awake surgery for glioma: oncological, functional, and employment outcomes in a consecutive series of 25 cases. Neurosurgery. 2015;76(4):382–389; discussion 389.

- 18. Santini B, Talacchi A, Squintani G, Casagrande F, Capasso R, Miceli G. Cognitive outcome after awake surgery for tumors in language areas. J Neurooncol. 2012;108(2):319–326.

- 19. Talacchi A, d’Avella D, Denaro L et al. Cognitive outcome as part and parcel of clinical outcome in brain tumor surgery. J Neurooncol. 2012;108(2):327–332.

- 20. Kwan RY, Lai CK. Can smartphones enhance telephone-based cognitive assessment (TBCA)? Int J Environ Res Public Health. 2013;10(12):7110–7125.

- 21. Mitsis EM, Jacobs D, Luo X, Andrews H, Andrews K, Sano M. Evaluating cognition in an elderly cohort via telephone assessment. Int J Geriatr Psychiatry. 2010;25(5):531–539.

- 22. Pendlebury ST, Welch SJ, Cuthbertson FC, Mariz J, Mehta Z, Rothwell PM. Telephone assessment of cognition after transient ischemic attack and stroke: modified telephone interview of cognitive status and telephone Montreal Cognitive Assessment versus face-to-face Montreal Cognitive Assessment and neuropsychological battery. Stroke. 2013;44(1):227–229.

- 23. Unverzagt FW, Monahan PO, Moser LR et al. The Indiana University telephone-based assessment of neuropsychological status: a new method for large scale neuropsychological assessment. J Int Neuropsychol Soc. 2007;13(5):799–806.

- 24. Wilson RS, Leurgans SE, Foroud TM et al; National Institute on Aging Late-Onset Alzheimer’s Disease Family Study Group. Telephone assessment of cognitive function in the late-onset Alzheimer’s disease family study. Arch Neurol. 2010;67(7):855–861.

- 25. Goodglass H, Kaplan E, Barresi B. Boston Diagnostic Aphasia Examination (BDAE-3). 3rd ed. Philadelphia, PA: Lea and Febiger; 2000.

- 26. Yochim BP, Beaudreau SA, Kaci Fairchild J et al. Verbal naming test for use with older adults: development and initial validation. J Int Neuropsychol Soc. 2015;21(3):239–248.

- 27. Goodglass H, Kaplan E. The Assessment of Aphasia and Related Disorders. Philadelphia, PA: Lea and Febiger; 1972.

- 28. Veale JF. Edinburgh Handedness Inventory—short form: a revised version based on confirmatory factor analysis. Laterality. 2014;19(2):164–177.

- 29. Palmer BW, Boone KB, Lesser IM, Wohl MA. Base rates of “impaired” neuropsychological test performance among healthy older adults. Arch Clin Neuropsychol. 1998;13(6):503–511.

- 30. Ius T, Isola M, Budai R et al. Low-grade glioma surgery in eloquent areas: volumetric analysis of extent of resection and its impact on overall survival. A single-institution experience in 190 patients: clinical article. J Neurosurg. 2012;117(6):1039–1052.

- 31. Prabhu RS, Won M, Shaw EG et al. Effect of the addition of chemotherapy to radiotherapy on cognitive function in patients with low-grade glioma: secondary analysis of RTOG 98-02. J Clin Oncol. 2014;32(6):535–541.

- 32. Fernández Coello A, Moritz-Gasser S, Martino J, Martinoni M, Matsuda R, Duffau H. Selection of intraoperative tasks for awake mapping based on relationships between tumor location and functional networks. J Neurosurg. 2013;119(6):1380–1394.

- 33. Hickok G. The cortical organization of speech processing: feedback control and predictive coding the context of a dual-stream model. J Commun Disord. 2012;45(6):393–402.

- 34. Goldstein B, Obrzut JE, John C, Hunter JV, Armstrong CL. The impact of low-grade brain tumors on verbal fluency performance. J Clin Exp Neuropsychol. 2004;26(6):750–758.

- 35. Trinh VT, Fahim DK, Shah K et al. Subcortical injury is an independent predictor of worsening neurological deficits following awake craniotomy procedures. Neurosurgery. 2013;72(2):160–169.

- 36. Papathanasiou I, Coppens P, Potagas C. Aphasia and Related Neurogenic Communication Disorders. Burlington, MA: Jones & Bartlett Publishers; 2012.

- 37. Bastiaanse R, van Zonneveld R. Broca’s aphasia, verbs and the mental lexicon. Brain Lang. 2004;90(1–3):198–202.

- 38. Duffau H. The huge plastic potential of adult brain and the role of connectomics: new insights provided by serial mappings in glioma surgery. Cortex. 2014;58:325–337.

- 39. Sierpowska J, Gabarrós A, Fernandez-Coello A et al. Words are not enough: nonword repetition as an indicator of arcuate fasciculus integrity during brain tumor resection. J Neurosurg. 2017;126(2):435–445.

- 40. Leonard MK, Cai R, Babiak MC, Ren A, Chang EF. The peri-Sylvian cortical network underlying single word repetition revealed by electrocortical stimulation and direct neural recordings [published online ahead of print July 19, 2016]. Brain Lang. doi: 10.1016/j.bandl.2016.06.001.

- 41. Moritz-Gasser S, Herbet G, Maldonado IL, Duffau H. Lexical access speed is significantly correlated with the return to professional activities after awake surgery for low-grade gliomas. J Neurooncol. 2012;107(3):633–641.

- 42. Luxton DD, Pruitt LD, Osenbach JE. Best practices for remote psychological assessment via telehealth technologies. Prof Psychol Res Pr. 2014;45(1):27–35.