Abstract

We study a class of non-parametric density estimators under Bayesian settings. The estimators are obtained by adaptively partitioning the sample space. Under a suitable prior, we analyze the concentration rate of the posterior distribution, and demonstrate that the rate does not directly depend on the dimension of the problem in several special cases. Another advantage of this class of Bayesian density estimators is that it can adapt to the unknown smoothness of the true density function, thus achieving the optimal convergence rate without artificial conditions on the density. We also validate the theoretical results on a variety of simulated data sets.

1. Introduction

In this paper, we study the asymptotic behavior of posterior distributions of a class of Bayesian density estimators based on adaptive partitioning. Density estimation is a building block for many other statistical methods, such as classification, nonparametric testing, clustering, and data compression.

With univariate (or bivariate) data, the most basic non-parametric method for density estimation is the histogram method. In this method, the sample space is partitioned into regular intervals (or rectangles), and the density is estimated by the relative frequency of data points falling into each interval (rectangle). However, this method is of limited utility in higher dimensional spaces because the number of cells in a regular partition of a p-dimensional space grows exponentially with p, which makes the relative frequency highly variable unless the sample size is extremely large. In this situation the histogram may be improved by adapting the partition to the data so that larger rectangles are used in the parts of the sample space where data are sparse. Motivated by this consideration, researchers have recently developed several multivariate density estimation methods based on adaptive partitioning [13, 12]. For example, by generalizing the classical Pólya Tree construction [7], the authors of [22] developed the Optional Pólya Tree (OPT) prior on the space of simple functions. Computational issues related to OPT density estimates were discussed in [13], where efficient algorithms were developed to compute the OPT estimate. The method performs quite well when the dimension is moderately large (from 10 to 50).

The purpose of the current paper is to address the following questions on such Bayesian density estimates based on partition-learning. Question 1: what is the class of density functions that can be “well estimated” by the partition-learning based methods? Question 2: what is the rate at which the posterior distribution is concentrated around the true density as the sample size increases? Our main contributions lie in the following aspects:

We impose a suitable prior on the space of density functions defined on binary partitions, and calculate the posterior concentration rate under the Hellinger distance with mild assumptions. The rate is adaptive to the unknown smoothness of the true density.

For two dimensional density functions of bounded variation, the posterior contraction rate of our method is $n^{-1/4}(\log n)^{3}$.

For Hölder continuous (one-dimensional case) or mixture Hölder continuous (multi-dimensional case) density functions with regularity parameter β in (0, 1], the posterior concentration rate is , whereas the minimax rate for one-dimensional Hölder continuous functions is .

When the true density function is sparse in the sense that the Haar wavelet coefficients satisfy a weak−lq (q > 1/2) constraint, the posterior concentration rate is .

We can use a computationally efficient algorithm to sample from the posterior distribution. We demonstrate the theoretical results on several simulated data sets.

1.1. Related work

An important feature of our method is that it can adapt to the unknown smoothness of the true density function. The adaptivity of Bayesian approaches has drawn great attention in recent years. In terms of density estimation, there are mainly two categories of adaptive Bayesian nonparametric approaches. The first category of work relies on basis expansion of the density function and typically imposes a random series prior [15, 17]. When the prior on the coefficients of the expansion is set to be normal [4], it is also a Gaussian process prior. In the multivariate case, most existing work [4, 17] uses tensor-product basis. Our improvement over these methods mainly lies in the adaptive structure. In fact, as the dimension increases the number of tensor-product basis functions can be prohibitively large, which imposes a great challenge on computation. By introducing adaptive partition, we are able to handle the multivariate case even when the dimension is 30 (Example 2 in Section 4).

Another line of work considers mixture priors [16, 11, 18]. Although the mixture distributions have good approximation properties and naturally lead to adaptivity to very high smoothness levels, they may fail to detect or characterize the local features. On the other hand, by learning a partition of the sample space, the partition based approaches can provide an informative summary of the structure, and allow us to examine the density at different resolutions [14, 21].

The paper is organized as follows. In Section 2 we provide more details of the density functions on binary partitions and define the prior distribution. Section 3 summarizes the theoretical results on posterior concentration rates. The results are further validated in Section 4 by several experiments.

2. Bayesian multivariate density estimation

We focus on density estimation problems in p-dimensional Euclidean space. Let $(\Omega, \mathcal{B})$ be a measurable space and $f_0$ be a compactly supported density function with respect to the Lebesgue measure μ. Let $Y_1, Y_2, \cdots, Y_n$ be a sequence of independent random variables distributed according to $f_0$. After translation and scaling, we can always assume that the support of $f_0$ is contained in the unit cube in $\mathbb{R}^p$. In notation, we assume that $\Omega = \{(y_1, y_2, \cdots, y_p): y_l \in [0,1]\}$. Let $\mathcal{F}$ denote the collection of all the density functions on Ω. Then $\mathcal{F}$ constitutes the parameter space in this problem. Note that $\mathcal{F}$ is an infinite dimensional parameter space.

2.1. Densities on binary partitions

To address the infinite dimensionality of the parameter space $\mathcal{F}$, we construct a sequence of finite dimensional approximating spaces $\Theta_1, \Theta_2, \cdots, \Theta_I, \cdots$ based on binary partitions. With growing complexity, these spaces provide more and more accurate approximations to the initial parameter space $\mathcal{F}$. Here, we use a recursive procedure to define a binary partition with I subregions of the unit cube in $\mathbb{R}^p$. Let $\Omega = \{(y_1, y_2, \cdots, y_p): y_l \in [0,1]\}$ be the unit cube in $\mathbb{R}^p$. In the first step, we choose one of the coordinates $y_l$ and cut Ω into two subregions along the midpoint of the range of $y_l$. That is, $\Omega = \Omega_1 \cup \Omega_2$, where $\Omega_1 = \{y \in \Omega: y_l \le 1/2\}$ and $\Omega_2 = \Omega \setminus \Omega_1$. In this way, we get a partition with two subregions. Note that the total number of possible partitions after the first step is equal to the dimension p. Suppose after I − 1 steps of the recursion, we have obtained a partition $\{\Omega_i\}_{i=1}^{I}$ with I subregions. In the I-th step, further partitioning of the region is defined as follows:

Choose a region from Ω1, ⋯, ΩI. Denote it as Ωi0.

Choose one coordinate yl and divide Ωi0 into two subregions along the midpoint of the range of yl.

Such a partition obtained by I − 1 recursive steps is called a binary partition of size I. Figure 1 displays all possible two dimensional binary partitions when I is 1, 2 and 3.
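The recursive construction above can be illustrated with a short enumeration. This is a minimal sketch, not the authors' implementation: the function names (`unit_cube`, `split`, `binary_partitions`, `volume`) are hypothetical, rectangles are stored as tuples of (low, high) intervals, and exact fractions are used so that identical partitions reached along different cutting paths are recognized as one partition.

```python
from fractions import Fraction

def unit_cube(p):
    """The unit cube [0,1]^p as a tuple of (low, high) intervals."""
    return tuple((Fraction(0), Fraction(1)) for _ in range(p))

def split(region, axis):
    """Cut a rectangle at the midpoint of its range along one coordinate."""
    lo, hi = region[axis]
    mid = (lo + hi) / 2
    left = region[:axis] + ((lo, mid),) + region[axis + 1:]
    right = region[:axis] + ((mid, hi),) + region[axis + 1:]
    return left, right

def volume(region):
    """Lebesgue measure of a rectangle."""
    v = Fraction(1)
    for lo, hi in region:
        v *= hi - lo
    return v

def binary_partitions(p, size):
    """All distinct binary partitions of [0,1]^p with `size` subregions."""
    current = {frozenset([unit_cube(p)])}
    for _ in range(size - 1):
        nxt = set()
        for partition in current:
            for region in partition:          # choose a region
                for axis in range(p):         # choose a coordinate
                    left, right = split(region, axis)
                    nxt.add((partition - {region}) | {left, right})
        current = nxt
    return current

if __name__ == "__main__":
    for size in (1, 2, 3):
        print(size, len(binary_partitions(2, size)))
```

For p = 2 the sketch finds 1, 2 and 8 distinct partitions of sizes 1, 2 and 3. Storing each partition as a set of rectangles, rather than as a cutting path, is a design choice made here so that duplicates are merged automatically.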

Figure 1:

Binary partitions

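Now, let

$$\Theta_I = \Big\{ f : f = \sum_{i=1}^{I} \frac{\theta_i}{|\Omega_i|} \mathbb{1}_{\Omega_i},\ \theta_i \ge 0,\ \sum_{i=1}^{I} \theta_i = 1,\ \{\Omega_i\}_{i=1}^{I} \text{ is a binary partition of } \Omega \Big\},$$

where $|\Omega_i|$ is the volume of $\Omega_i$. Then, $\Theta_I$ is the collection of the density functions supported by the binary partitions of size I. They constitute a sequence of approximating spaces (i.e. a sieve, see [10, 20] for background on sieve theory). Let $\Theta = \bigcup_{I=1}^{\infty} \Theta_I$ be the space containing all the density functions supported by the binary partitions. Then Θ is an approximation of the initial parameter space $\mathcal{F}$, up to an approximation error which will be characterized later.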

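We take the metric on $\mathcal{F}$, Θ and $\Theta_I$ to be the Hellinger distance, which is defined as

$$\rho(f, g) = \left( \int_{\Omega} \left( \sqrt{f(y)} - \sqrt{g(y)} \right)^2 dy \right)^{1/2}. \qquad (1)$$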
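For two densities that are piecewise constant on the same cells, the Hellinger distance reduces to a finite sum. The sketch below assumes the unnormalized convention $\rho(f,g) = (\int (\sqrt{f} - \sqrt{g})^2)^{1/2}$ (some texts include a factor 1/2) and a common partition for both densities; the function name `hellinger` and its arguments are hypothetical.

```python
import math

def hellinger(f, g, volumes):
    """Hellinger distance between two densities that are constant on the same cells.

    f[i] and g[i] are the density values on cell i; volumes[i] is the
    Lebesgue measure of cell i. Assumes the convention without the 1/2 factor.
    """
    squared = sum(v * (math.sqrt(a) - math.sqrt(b)) ** 2
                  for a, b, v in zip(f, g, volumes))
    return math.sqrt(squared)

if __name__ == "__main__":
    # Uniform density on [0,1] versus a density concentrated on [0, 1/2].
    print(hellinger([1.0, 1.0], [2.0, 0.0], [0.5, 0.5]))
```

In the usage example the squared distance is $0.5(1-\sqrt{2})^2 + 0.5 = 2 - \sqrt{2}$, so the printed value is $\sqrt{2-\sqrt{2}}$.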

2.2. Prior distribution

An ideal prior Π on Θ is supposed to be capable of balancing the approximation error and the complexity of Θ. The prior in this paper penalizes the size of the partition in the sense that the probability mass on each $\Theta_I$ is proportional to $\exp(-\lambda I \log I)$. Given a sample of size n, we restrict our attention to $\bigcup_{I \le n/\log n} \Theta_I$, because in practice we need enough samples within each subregion to get a meaningful estimate of the density. That is, when $I \le n/\log n$, $\Pi(\Theta_I) \propto \exp(-\lambda I \log I)$; otherwise $\Pi(\Theta_I) = 0$.
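The size penalty can be made concrete by normalizing $\exp(-\lambda I \log I)$ over the allowed sizes. This is a small sketch under two assumptions that are not stated in the text: sizes start at I = 1, and the cutoff is the integer part of n / log n. The function name `size_prior` is hypothetical, and n ≥ 2 is required so that log n is positive.

```python
import math

def size_prior(n, lam):
    """Prior mass on each partition size I = 1, ..., floor(n / log n).

    Returns a list whose entry I-1 is the normalized mass of size I;
    sizes beyond the cutoff implicitly receive mass zero.
    """
    max_size = int(n / math.log(n))
    log_mass = [-lam * i * math.log(i) for i in range(1, max_size + 1)]
    top = max(log_mass)                       # subtract the max for numerical stability
    unnorm = [math.exp(v - top) for v in log_mass]
    total = sum(unnorm)
    return [u / total for u in unnorm]

if __name__ == "__main__":
    prior = size_prior(n=100, lam=1.0)
    print(len(prior), prior[:3])
```

With n = 100 the cutoff is 21 sizes, and the mass decreases strictly with I, which is the sense in which the prior penalizes large partitions.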

If we use TI to denote the total number of possible partitions of size I, then it is not hard to see that log TI ≤ c*I log I, where c* is a constant. Within each ΘI, the prior is uniform across all binary partitions. In other words, let be a binary partition of Ω of size I, and is the collection of piecewise constant density functions on this partition (i.e. ), then

| (2) |

Given a partition $\{\Omega_i\}_{i=1}^{I}$, the weights $\theta_i$ on the subregions follow a Dirichlet distribution with parameters all equal to α (α < 1). That is, for $x_1, \cdots, x_I \ge 0$ and $\sum_{i=1}^{I} x_i = 1$,

| (3) |

where .

Let $\Pi_n(\cdot \mid Y_1, \cdots, Y_n)$ denote the posterior distribution. After integrating out the weights $\theta_i$, we can compute the marginal posterior probability of a given binary partition:

| (4) |

where ni is the number of observations in Ωi. Under the prior introduced in [13], the marginal posterior distribution is:

| (5) |

while the maximum log-likelihood achieved by histograms on the partition is:

| (6) |

From a model selection perspective, we may treat the histograms on each binary partition as a model of the data. When I ≪ n, asymptotically,

| (7) |

This is to say, in [13], selecting the partition which maximizes the marginal posterior distribution is equivalent to applying the Bayesian information criterion (BIC) to perform model selection. However, if we allow I to increase with n, (7) will not hold any more. But if we use the prior introduced in this section, in the case when , as n → ∞, we still have

| (8) |

From a model selection perspective, this is closer to the risk inflation criterion (RIC, [8]).
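The two quantities compared in this section, the likelihood with the Dirichlet weights integrated out and the maximum log-likelihood of a histogram, can be computed in a few lines. The sketch below relies on the standard Dirichlet-multinomial identity $\int \prod_i (\theta_i/|\Omega_i|)^{n_i}\, \mathrm{Dir}(\theta; \alpha)\, d\theta = \frac{D(\alpha+n_1,\cdots,\alpha+n_I)}{D(\alpha,\cdots,\alpha)} \prod_i |\Omega_i|^{-n_i}$, where $D(\delta_1,\cdots,\delta_I) = \prod_i \Gamma(\delta_i)/\Gamma(\sum_i \delta_i)$, and on the fact that the histogram MLE puts weight $n_i/n$ on cell i. It is an assumption that this matches the displayed equations term by term; the prior factor on the partition itself is deliberately left out, and the function names are hypothetical.

```python
import math

def log_marginal_likelihood(counts, volumes, alpha):
    """Log-likelihood of the data with Dirichlet(alpha, ..., alpha) weights integrated out.

    counts[i] is the number of observations in cell i, volumes[i] its volume.
    The prior on the partition is NOT included here.
    """
    size, n = len(counts), sum(counts)
    log_ratio = (sum(math.lgamma(alpha + c) for c in counts)
                 - math.lgamma(size * alpha + n)
                 - size * math.lgamma(alpha)
                 + math.lgamma(size * alpha))
    return log_ratio - sum(c * math.log(v) for c, v in zip(counts, volumes))

def max_log_likelihood(counts, volumes):
    """Maximum log-likelihood over histograms on a fixed partition (weights n_i / n)."""
    n = sum(counts)
    return sum(c * math.log(c / (n * v))
               for c, v in zip(counts, volumes) if c > 0)

if __name__ == "__main__":
    counts, volumes = [30, 5, 5], [0.5, 0.25, 0.25]
    print(log_marginal_likelihood(counts, volumes, alpha=0.5))
    print(max_log_likelihood(counts, volumes))
```

Because the marginal likelihood averages the likelihood over the prior on the weights, it can never exceed the maximized likelihood; the gap between the two is what acts as the complexity penalty in the model selection reading.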

3. Posterior concentration rates

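We are interested in how fast the posterior probability measure concentrates around the true density $f_0$. Under the prior specified above, the posterior probability is the random measure given by

$$\Pi_n(B \mid Y_1, \cdots, Y_n) = \frac{\int_B \prod_{j=1}^{n} f(Y_j)\, d\Pi(f)}{\int_{\Theta} \prod_{j=1}^{n} f(Y_j)\, d\Pi(f)}.$$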

A Bayesian estimator is said to be consistent if the posterior distribution concentrates on arbitrarily small neighborhoods of $f_0$, with probability tending to 1 under $P_0^n$ ($P_0$ is the probability measure corresponding to the density function $f_0$). The posterior concentration rate refers to the rate at which these neighborhoods shrink to zero while still possessing most of the posterior mass. More explicitly, we want to find a sequence $\epsilon_n \to 0$, such that for sufficiently large M,

$$\Pi_n\left(\{f : \rho(f, f_0) \ge M \epsilon_n\} \mid Y_1, \cdots, Y_n\right) \to 0 \quad \text{in } P_0^n\text{-probability}.$$

In [6] and [2], the authors demonstrated that it is impossible to find an estimator which works uniformly well for every f in the full parameter space $\mathcal{F}$. This is the case because for any estimator $\hat{f}$, there always exists $f \in \mathcal{F}$ for which $\hat{f}$ is inconsistent. Given the minimaxity of the Bayes estimator, we have to restrict our attention to a subset of the original parameter space $\mathcal{F}$. Here, we focus on the class of density functions that can be well approximated by the $\Theta_I$'s. To be more rigorous, a density function f is said to be well approximated by elements in Θ if there exists a sequence $f_I \in \Theta_I$ satisfying $\rho(f_I, f) = O(I^{-r})$ for some $r > 0$. Let $\mathcal{F}_0$ be the collection of these density functions. We will first derive the posterior concentration rate for the elements in $\mathcal{F}_0$ as a function of r. For different function classes, this approximation rate r can be calculated explicitly. In addition to this, we also assume that $f_0$ has finite second moment.

The following theorem gives the posterior concentration rate under the prior introduced in Section 2.2.

Theorem 3.1. Y1, ⋯, Yn is a sequence of independent random variables distributed according to f0. P0 is the probability measure corresponding to f0. Θ is the collection of p-dimensional density functions supported by the binary partitions as defined in Section 2.1. With the modified prior distribution, if , then the posterior concentration rate is .

The strategy to show this theorem is to write the posterior probability of the shrinking ball as

| (9) |

The proof employs the mechanism developed in the landmark works [9] and [19]. We first obtain the upper bounds for the items in the numerator by dividing them into three blocks, each of which accounts for bias, variance, and rapidly decaying prior respectively, and calculate the upper bound for each block separately. Then we provide the prior thickness result, i.e., we bound the prior mass of a ball around the true density from below. Due to space constraints, the details of the proof will be provided in the appendix.

This theorem suggests the following two take-away messages: 1. The rate is adaptive to the unknown smoothness of the true density. 2. The posterior contraction rate is , which does not directly depend on the dimension p. For some density functions, r may depend on p. But in several special cases, like the density function is spatially sparse or the density function lies in a low dimensional subspace, we will show that the rate will not be affected by the full dimension of the problem.

In the following three subsections, we will calculate the explicit rates for three density classes. Again, all proofs are given in the appendix.

3.1. Spatial adaptation

First, we assume that the density concentrates spatially. Mathematically, this implies the density function satisfies a type of sparsity. In the past two decades, sparsity has become one of the most discussed types of structure under which we are able to overcome the curse of dimensionality. A remarkable example is that it allows us to solve high-dimensional linear models, especially when the system is underdetermined.

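Let f be a p-dimensional density function and Ψ the p-dimensional Haar basis. We will work with $g = \sqrt{f}$ first. Note that $g \in L^2([0,1]^p)$. Thus we can expand g with respect to Ψ as $g = \sum_{\psi \in \Psi} \langle g, \psi \rangle \psi$. We rearrange this summation by the size of wavelet coefficients. In other words, we order the coefficients as follows:

$$|\langle g, \psi_{(1)} \rangle| \ge |\langle g, \psi_{(2)} \rangle| \ge \cdots \ge |\langle g, \psi_{(k)} \rangle| \ge \cdots,$$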

then the sparsity condition imposed on the density functions is that the decay of the wavelet coefficients follows a power law,

$$|\langle g, \psi_{(k)} \rangle| \le C k^{-q} \quad \text{for all } k \in \mathbb{N}, \qquad (10)$$

where C is a constant.

We call such a constraint a weak-$\ell^q$ constraint. The condition has been widely used to characterize the sparsity of signals and images [1, 3]. In particular, in [5], it was shown that for two-dimensional cases, when q > 1/2, this condition reasonably captures the sparsity of real world images.

Corollary 3.2. (Application to spatial adaptation) Suppose f0 is a p-dimensional density function and satisfies the condition (10). If we apply our approaches to this type of density functions, the posterior concentration rate is .
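One informal way to check a power-law decay of this kind on a finite set of coefficients is to sort them by magnitude and fit a line on the log-log scale. The sketch below assumes the condition has the form $|\theta_{(k)}| \le C k^{-q}$ for the k-th largest coefficient, and it uses ordinary least squares as a diagnostic; it is not part of the theory, and the function name `decay_exponent` is hypothetical.

```python
import math
import random

def decay_exponent(coefficients):
    """Estimate q in |theta_(k)| ~ C * k**(-q) from the sorted nonzero coefficients.

    Fits log|theta_(k)| against log k by least squares and returns minus the slope.
    Requires at least two nonzero coefficients.
    """
    sorted_abs = sorted((abs(c) for c in coefficients if c != 0), reverse=True)
    xs = [math.log(k) for k in range(1, len(sorted_abs) + 1)]
    ys = [math.log(a) for a in sorted_abs]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    return -slope

if __name__ == "__main__":
    # Synthetic coefficients with exact power-law decay, in random order and with mixed signs.
    coeffs = [2.0 * k ** -0.8 * (-1) ** k for k in range(1, 201)]
    random.Random(0).shuffle(coeffs)
    print(decay_exponent(coeffs))
```

On the synthetic input the estimate recovers the exponent 0.8 up to floating-point error. On real coefficients the fit is only a rough guide, since the condition is an upper bound rather than an exact law.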

3.2. Density functions of bounded variation

Let $\Omega = [0, 1)^2$ be a domain in $\mathbb{R}^2$. We first characterize the space BV(Ω) of functions of bounded variation on Ω.

For a vector $v \in \mathbb{R}^2$, the difference operator $\Delta_v$ along the direction v is defined by

$$\Delta_v(f, y) := f(y + v) - f(y).$$

For functions f defined on Ω, $\Delta_v(f, y)$ is defined whenever $y \in \Omega(v)$, where $\Omega(v) := \{y : [y, y + v] \subset \Omega\}$ and $[y, y + v]$ is the line segment connecting y and y + v. Denote by $e_l$, l = 1, 2, the two coordinate vectors in $\mathbb{R}^2$. We say that a function $f \in L^1(\Omega)$ is in BV(Ω) if and only if

is finite. The quantity VΩ(f) is the variation of f over Ω.

Corollary 3.3. Assume that $f_0 \in BV(\Omega)$. If we apply the Bayesian multivariate density estimator based on adaptive partitioning here to estimate $f_0$, the posterior concentration rate is $n^{-1/4}(\log n)^{3}$.

3.3. Hölder space

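In the one-dimensional case, the class of Hölder functions with regularity parameter β is defined as follows: let κ be the largest integer smaller than β, and denote by $f^{(\kappa)}$ the κth derivative of f. Then f is Hölder continuous with regularity parameter β if there exists a constant $L > 0$ such that $|f^{(\kappa)}(x) - f^{(\kappa)}(y)| \le L |x - y|^{\beta - \kappa}$ for all x and y.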

In multi-dimensional cases, we introduce the Mixed-Hölder continuity. In order to simplify the notation, we give the definition when the dimension is two. It can be easily generalized to high-dimensional cases. A real-valued function f on is called Mixed-Hölder continuous for some nonnegative constant C and β ϵ (0, 1], if for any ,

Corollary 3.4. Let f0 be the p-dimensional density function. If is Hölder continuous (when p = 1) or mixed-Hölder continuous (when p ≥ 2) with regularity parameter β ϵ (0, 1], then the posterior concentration rate of the Bayes estimator is .

This result also implies that if f0 only depends on variable where , but we do not know in advance which variables, then the rate of this method is determined by the effective dimension of the problem, since the smoothness parameter r is only a function of . In next section, we will use a simulated data set to illustrate this point.

4. Simulation

4.1. Sequential importance sampling

Each partition is obtained by recursively partitioning the sample space. We can use a sequence of partitions to keep track of the path leading to . Let Πn(·) denote the posterior distribution Πn(·|Y1, ⋯, Yn) for simplicity, and be the posterior be distribution conditioning on ΘI. Then can be decomposed as

The conditional distribution can be calculated by . However, the computation of the marginal distribution is sometimes infeasible, especially when both I and I − i are large, because we need to sum the marginal posterior probability over all binary partitions of size I for which the first i steps in the partition generating path are the same as those of . Therefore, we adopt the sequential importance algorithm proposed in [13]. In order to build a sequence of binary partitions, at each step, the conditional distribution is approximated by . The obtained partition is assigned a weight to compensate the approximation, where the weight is

In order to make the data points as uniform as possible, we apply a copula transformation to each variable in advance whenever the dimension exceeds 3. More specifically, we estimate the marginal distribution of each variable $X_j$ by our approach, denoted as $\hat{f}_j$ (we use $\hat{F}_j$ to denote the cdf of $X_j$), and transform each point $(y_1, \cdots, y_p)$ to $(\hat{F}_1(y_1), \cdots, \hat{F}_p(y_p))$. Another advantage of this transformation is that after the transformation the sample space naturally becomes $[0, 1]^p$.
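The sequential sampling idea of this subsection can be sketched as follows. This is an illustrative toy, not the algorithm of [13]: at each step every possible cut of the current partition is scored by an unnormalized marginal posterior (Dirichlet weights integrated out, with the factors that are constant for a fixed partition size dropped), one cut is drawn with probability proportional to its score, and the final partition receives a log-weight equal to its log-score minus the log-probability of the sampled path. All function names are hypothetical, and the data are assumed to lie in $[0,1)^p$.

```python
import math
import random

def split(region, axis):
    """Cut a rectangle at the midpoint of its range along one coordinate."""
    lo, hi = region[axis]
    mid = (lo + hi) / 2.0
    return (region[:axis] + ((lo, mid),) + region[axis + 1:],
            region[:axis] + ((mid, hi),) + region[axis + 1:])

def contains(region, point):
    """True if the point lies in the half-open rectangle."""
    return all(lo <= y < hi for (lo, hi), y in zip(region, point))

def log_score(partition, data, alpha):
    """Unnormalized log marginal posterior of a partition of fixed size."""
    size, n = len(partition), len(data)
    total = (math.lgamma(size * alpha) - size * math.lgamma(alpha)
             - math.lgamma(size * alpha + n))
    for region in partition:
        count = sum(contains(region, pt) for pt in data)
        vol = math.prod(hi - lo for lo, hi in region)
        total += math.lgamma(alpha + count) - count * math.log(vol)
    return total

def sample_partition(data, p, size, alpha, rng):
    """Draw one partition of the given size and return it with its log importance weight."""
    partition = [tuple((0.0, 1.0) for _ in range(p))]
    log_proposal = 0.0
    for _ in range(size - 1):
        # Enumerate every (region, coordinate) cut of the current partition.
        candidates = [partition[:i] + list(split(region, axis)) + partition[i + 1:]
                      for i, region in enumerate(partition) for axis in range(p)]
        scores = [log_score(c, data, alpha) for c in candidates]
        top = max(scores)
        weights = [math.exp(s - top) for s in scores]
        k = rng.choices(range(len(candidates)), weights=weights)[0]
        log_proposal += math.log(weights[k] / sum(weights))
        partition = candidates[k]
    return partition, log_score(partition, data, alpha) - log_proposal

if __name__ == "__main__":
    rng = random.Random(0)
    data = [(rng.random() * 0.5, rng.random()) for _ in range(200)]
    partition, log_w = sample_partition(data, p=2, size=4, alpha=0.5, rng=rng)
    print(partition, log_w)
```

Rescoring every candidate from scratch keeps the sketch short but costs a full pass over the data per candidate; a practical implementation would update the counts of only the region being cut.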

Example 1 Assume that the two-dimensional density function is

This density function both satisfies the spatial sparsity condition and belongs to the space of functions of bounded variation. Figure 2 shows the heatmap of the density function and its Haar coefficients. The last panel in the second plot displays the sorted coefficients with the abscissa in log-scale. From this we can clearly see that the power-law decay defined in Section 3.1 is satisfied.

Figure 2:

Heatmap of the density and plots of the 2-dimensional Haar coefficients. For the plot on the right, the left panel is the plot of the Haar coefficients from low resolution to high resolution up to level 6. The middle one is the plot of the sorted coefficients according to their absolute values. And the right one is the same as the middle plot but with the abscissa in log scale.

We apply the adaptive partitioning approach to estimate the density, and allow the sample size to increase from $10^2$ to $10^5$. In Figure 3, the left plot is the density estimation result based on a sample with 10000 data points. The right one is the plot of Kullback-Leibler (KL) divergence from the estimated density to $f_0$ vs. sample size in log-scale. The sample sizes are set to be 100, 500, 1000, 5000, $10^4$, and $10^5$. The linear trend in the plot validates the posterior concentration rates calculated in Section 3. The reason why we use KL divergence instead of the Hellinger distance is that for any and , we can show that the KL divergence and the Hellinger distance are of the same order. But KL divergence is relatively easier to compute in our setting, since we can show that it is linear in the logarithm of the posterior marginal probability of a partition. The proof will be provided in the appendix. For each fixed sample size, we run the experiment 10 times and estimate the standard error, which is shown by the lighter blue part in the plot.

Figure 3:

Plot of the estimated density and KL divergence against sample size. We use the posterior mean as the estimate. The right plot is on log-log scale, while the labels of x and y axes still represent the sample size and the KL divergence before we take the logarithm.

Example 2 In the second example we work with a density function of moderately high dimension. Assume that the first five random variables Y1, ⋯ Y5 are generated from the following location mixture of the Gaussian distribution:

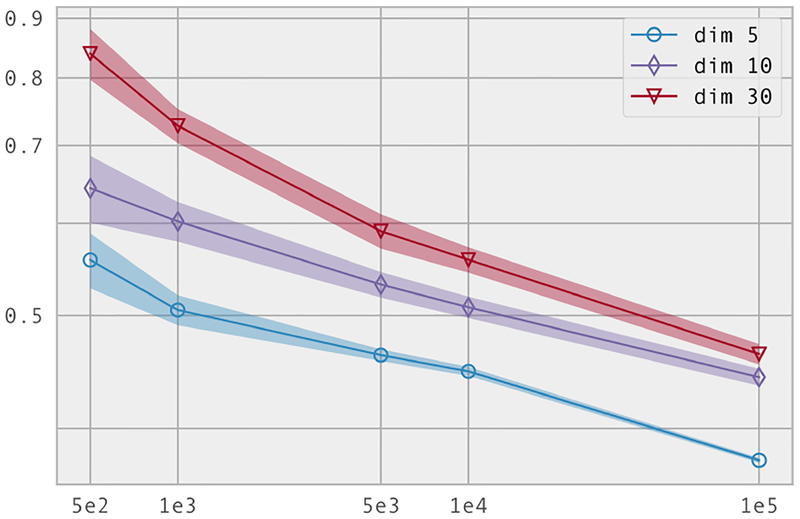

the other components $Y_6, \cdots, Y_p$ are independently uniformly distributed. We run experiments for p = 5, 10, and 30. For a fixed p, we generate $n \in \{500, 1000, 5000, 10^4, 10^5\}$ data points. For each pair of p and n, we repeat the experiment 10 times and calculate the standard error. Figure 4 displays the plot of the KL divergence vs. the sample size on log-log scale. The density function is continuously differentiable. Therefore, it satisfies the mixed-Hölder continuity condition. The effective dimension of this example is 5, and this is reflected in the plot: the slopes of the three lines, which correspond to the concentration rates under different dimensions, almost remain the same as we increase the full dimension of the problem.

Figure 4:

KL divergence vs. sample size. The blue, purple and red curves correspond to the cases when p = 5, p = 10 and p = 30 respectively. The slopes of the three lines are almost the same, implying that the concentration rate only depends on the effective dimension of the problem (which is 5 in this example).

5. Conclusion

In this paper, we study the posterior concentration rate of a class of Bayesian density estimators based on adaptive partitioning. We obtain explicit rates when the density function is spatially sparse, belongs to the space of bounded variation, or is Hölder continuous. For the last case, the rate is minimax up to a logarithmic term. When the density function is sparse or lies in a low-dimensional subspace, the rate will not be affected by the dimension of the problem. Another advantage of this method is that it can adapt to the unknown smoothness of the underlying density function.

Contributor Information

Linxi Liu, Department of Statistics, Columbia.

Dangna Li, ICME, Stanford University.

Wing Hung Wong, Department of Statistics, Stanford University.

Bibliography

- [1] Abramovich Felix, Benjamini Yoav, Donoho David L., and Johnstone Iain M. Adapting to unknown sparsity by controlling the false discovery rate. The Annals of Statistics, 34(2):584–653, April 2006.

- [2] Birgé Lucien and Massart Pascal. Minimum contrast estimators on sieves: exponential bounds and rates of convergence. Bernoulli, 4(3):329–375, September 1998.

- [3] Candès EJ and Tao T. Near-optimal signal recovery from random projections: Universal encoding strategies? Information Theory, IEEE Transactions on, 52(12):5406–5425, December 2006.

- [4] de Jonge R and van Zanten JH. Adaptive estimation of multivariate functions using conditionally Gaussian tensor-product spline priors. Electron. J. Statist., 6:1984–2001, 2012.

- [5] DeVore RA, Jawerth B, and Lucier BJ. Image compression through wavelet transform coding. Information Theory, IEEE Transactions on, 38(2):719–746, March 1992.

- [6] Farrell RH. On the lack of a uniformly consistent sequence of estimators of a density function in certain cases. The Annals of Mathematical Statistics, 38(2):471–474, April 1967.

- [7] Ferguson Thomas S. Prior distributions on spaces of probability measures. Ann. Statist., 2:615–629, 1974.

- [8] Foster Dean P. and George Edward I. The risk inflation criterion for multiple regression. Ann. Statist., 22(4):1947–1975, December 1994.

- [9] Ghosal Subhashis, Ghosh Jayanta K., and van der Vaart Aad W. Convergence rates of posterior distributions. The Annals of Statistics, 28(2):500–531, April 2000.

- [10] Grenander U. Abstract Inference. Probability and Statistics Series. John Wiley & Sons, 1981.

- [11] Kruijer Willem, Rousseau Judith, and van der Vaart Aad. Adaptive bayesian density estimation with location-scale mixtures. Electron. J. Statist., 4:1225–1257, 2010.

- [12] Li Dangna, Yang Kun, and Wong Wing Hung. Density estimation via discrepancy based adaptive sequential partition. 30th Conference on Neural Information Processing Systems (NIPS 2016), 2016.

- [13] Lu Luo, Jiang Hui, and Wong Wing H. Multivariate density estimation by bayesian sequential partitioning. Journal of the American Statistical Association, 108(504):1402–1410, 2013.

- [14] Ma Li and Wong Wing Hung. Coupling optional pólya trees and the two sample problem. Journal of the American Statistical Association, 106(496):1553–1565, 2011.

- [15] Rivoirard Vincent and Rousseau Judith. Posterior concentration rates for infinite dimensional exponential families. Bayesian Anal., 7(2):311–334, June 2012.

- [16] Rousseau Judith. Rates of convergence for the posterior distributions of mixtures of betas and adaptive nonparametric estimation of the density. The Annals of Statistics, 38(1):146–180, February 2010.

- [17] Shen Weining and Ghosal Subhashis. Adaptive bayesian procedures using random series priors. Scandinavian Journal of Statistics, 42(4):1194–1213, 2015. doi:10.1111/sjos.12159.

- [18] Shen Weining, Tokdar Surya T., and Ghosal Subhashis. Adaptive bayesian multivariate density estimation with dirichlet mixtures. Biometrika, 100(3):623–640, 2013.

- [19] Shen Xiaotong and Wasserman Larry. Rates of convergence of posterior distributions. The Annals of Statistics, 29(3):687–714, June 2001.

- [20] Shen Xiaotong and Wong Wing Hung. Convergence rate of sieve estimates. The Annals of Statistics, 22(2):580–615, 1994.

- [21] Soriano Jacopo and Ma Li. Probabilistic multi-resolution scanning for two-sample differences. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 79(2):547–572, 2017.

- [22] Wong Wing H. and Ma Li. Optional pólya tree and bayesian inference. The Annals of Statistics, 38(3):1433–1459, June 2010.
