Abstract

Objective

Clinician information overload is prevalent in critical care settings. Improved visualization of patient information may help clinicians cope with information overload, increase efficiency, and improve quality. We compared the effect of information display interventions with usual care on patient care outcomes.

Materials and Methods

We conducted a systematic review including experimental and quasi-experimental studies of information display interventions conducted in critical care and anesthesiology settings. Citations from January 1990 to June 2018 were searched in PubMed and IEEE Xplore. Reviewers worked independently to screen articles, evaluate quality, and abstract primary outcomes and display features.

Results

Of 6742 studies identified, 22 studies evaluating 17 information displays met the study inclusion criteria. Information display categories included comprehensive integrated displays (3 displays), multipatient dashboards (7 displays), physiologic and laboratory monitoring (5 displays), and expert systems (2 displays). Significant improvement on primary outcomes over usual care was reported in 12 studies for 9 unique displays. Improvement was found mostly with comprehensive integrated displays (4 of 6 studies) and multipatient dashboards (5 of 7 studies). Only 1 of 5 randomized controlled trials showed a positive effect on the primary outcome.

Conclusion

We found weak evidence suggesting comprehensive integrated displays improve provider efficiency and process outcomes, and multipatient dashboards improve compliance with care protocols and patient outcomes. Randomized controlled trials of physiologic and laboratory monitoring displays did not show improvement in primary outcomes, despite positive results in simulated settings. Important research translation gaps from laboratory to actual critical care settings exist.

Keywords: data display, information display, clinical decision support systems, electronic medical record, health information systems, user-computer interface, critical care, review

INTRODUCTION

Critical care settings are complex environments with demanding care requirements.1 On average, each intensive care unit (ICU) patient receives 178 care interventions daily.2 This challenging care environment fosters human error, which affects 16% of ICU patients and can lead to longer stays or death.3–5 Human error in critical care settings may be due in part to the lack of information displays that effectively help clinicians cope with information overload by improving situation awareness and supporting clinical decision making.6

Current displays of complex data in critical care are suboptimal and have been designed with little attention to human factors.7 The majority of current information systems in critical care require clinicians to manually access and integrate data from multiple sources and devices, which requires substantial cognitive effort.6 For example, providers aggregate patient data from disparate modules in the electronic health record (EHR) and bedside monitoring devices. These data are then manually integrated into information that is used to understand the patient’s situation and make care decisions.8 Critical care providers report frustration with locating, customizing, and prioritizing data.8 Current EHR systems have not been designed to support clinicians’ high-level cognitive processes9 and work environment.7

Prior literature reviews outside critical care10,11 and ad hoc reviews in critical care12–14 show promising evidence that improved information display can decrease human error. However, none of the prior reviews systematically evaluated the effect of critical care information displays on patient care. To address this gap, we conducted a systematic review of critical care information displays to (1) identify the types of critical care information displays evaluated in clinical settings and (2) synthesize the evidence on the effect of these displays on process and patient outcomes.

MATERIALS AND METHODS

The study methodology followed the Institute of Medicine Standards for Systematic Reviews15 and the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) guidelines.16 Study procedures were based on formal processes and instruments defined a priori by the authors and refined based on input from an expert review panel.

Data sources

We searched PubMed and the IEEE Xplore Digital Library from January 1990 to June 2018 to identify graphical user interfaces developed for critical care or anesthesiology. The search strategy for each database was developed iteratively with calibration against a set of known references (see Supplementary Material for search strategies). The final PubMed search was conducted on June 11, 2018 and the final IEEE Xplore search was conducted on June 15, 2018.

Study selection

We included quasi-experimental and experimental studies that compared the effect of information displays vs usual care on efficiency, healthcare quality, and cost outcomes in critical care or anesthesiology settings. We excluded studies of displays that presented a single variable, displays of standalone monitoring devices, and studies not published in English. Title and abstract screening was done independently and in duplicate. Disagreements were resolved through consensus among all study authors. If the abstract had insufficient information to make a confident decision, the article was selected for full-text review. A similar process was followed for articles selected for full-text screening. Because the screened set was unbalanced (far more articles were excluded than included), we used a prevalence-adjusted and bias-adjusted kappa to calculate inter-rater reliability.17
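For readers unfamiliar with the adjusted statistic, the following minimal Python sketch contrasts Cohen's kappa with the prevalence-adjusted bias-adjusted kappa (PABAK), whose general form for k rating categories is (k·p_o − 1)/(k − 1), where p_o is observed agreement; for binary decisions this reduces to 2·p_o − 1. It assumes two reviewers making binary include/exclude decisions, and the ratings are hypothetical, not the review's screening data.

```python
from collections import Counter


def observed_agreement(rater_a, rater_b):
    """Proportion of items on which the two reviewers made the same decision."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    return sum(a == b for a, b in zip(rater_a, rater_b)) / len(rater_a)


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement corrected for chance, using each rater's marginals."""
    n = len(rater_a)
    p_o = observed_agreement(rater_a, rater_b)
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    categories = set(freq_a) | set(freq_b)
    p_e = sum((freq_a[c] / n) * (freq_b[c] / n) for c in categories)
    return (p_o - p_e) / (1 - p_e)  # undefined if p_e == 1 (both raters constant)


def pabak(rater_a, rater_b, n_categories=2):
    """Prevalence- and bias-adjusted kappa: (k * p_o - 1) / (k - 1)."""
    p_o = observed_agreement(rater_a, rater_b)
    k = n_categories
    return (k * p_o - 1) / (k - 1)


# Hypothetical screening of 20 abstracts (1 = include, 0 = exclude):
# each reviewer includes one abstract, but not the same one.
reviewer_1 = [1, 0] + [0] * 18
reviewer_2 = [0, 1] + [0] * 18

print(f"observed agreement = {observed_agreement(reviewer_1, reviewer_2):.2f}")  # 0.90
print(f"Cohen's kappa      = {cohens_kappa(reviewer_1, reviewer_2):.2f}")        # -0.05
print(f"PABAK              = {pabak(reviewer_1, reviewer_2):.2f}")               # 0.80
```

With only 1 inclusion per reviewer, raw agreement is 90%, yet Cohen's kappa falls below zero because chance agreement on the dominant "exclude" category is so high. PABAK depends only on observed agreement, so it is not pulled down by that imbalance, which is the property that suits a screening set where few articles are ultimately included.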

Data extraction

We extracted study design, population, setting, participants, intervention (display characteristics), and outcomes. A primary reviewer extracted the information and a second reviewer checked it for accuracy. Disagreements were reconciled through consensus. Quality was appraised using a modified Newcastle-Ottawa Scale18 for cohort studies. Scale criteria included representativeness, selection of the comparison group, randomization, comparability, outcome follow-up, and outcome assessment.18 Data extracted about the display intervention included target users, purpose of the display, and types of data displayed; display features included the amount of information displayed, types of plots used, use of color or animation, communication of urgency or importance, and organization of the information.

Data synthesis

Information displays were iteratively grouped into categories of similar displays. Findings were narratively summarized for each display category. Because of heterogeneity in study design and endpoints, we were unable to perform a meta-analysis. We categorized each study's clinical effect as positive (ie, significant improvement in at least 1 primary outcome), mixed (ie, significant improvement in any secondary outcome, but not in any primary outcome), or neutral (ie, no significant improvement in primary or secondary outcomes). We found no studies with significant worsening in primary or secondary outcomes. Key characteristics of displays with positive outcomes were compared, contrasted, and summarized.
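The categorization rule can be stated precisely as code. In this minimal Python sketch, each study is reduced to a list of outcomes flagged as primary or secondary and as significantly improved or not; the `Outcome` record and `classify_study` function are hypothetical names, and worsening is not modeled because no included study reported it. The example encodes the Kirkness et al (2006) results reported in Table 3.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class Outcome:
    name: str
    primary: bool                  # True for a primary endpoint, False for secondary
    significant_improvement: bool  # statistically significant improvement vs usual care


def classify_study(outcomes: Sequence[Outcome]) -> str:
    """Apply the positive / mixed / neutral rule to one study."""
    if any(o.primary and o.significant_improvement for o in outcomes):
        return "positive"  # at least 1 primary outcome improved
    if any(not o.primary and o.significant_improvement for o in outcomes):
        return "mixed"     # only secondary outcome(s) improved
    return "neutral"       # no significant improvement in any outcome


# Kirkness et al (2006), as summarized in Table 3.
kirkness_2006 = [
    Outcome("GOSE score at 6 months", primary=True, significant_improvement=False),
    Outcome("FSE score at 6 months", primary=True, significant_improvement=False),
    Outcome("Survival at discharge", primary=False, significant_improvement=True),
]
print(classify_study(kirkness_2006))  # mixed
```

Checking primary outcomes first matters: a study such as Olchanski et al, with a nonsignificant mortality result but a significant length-of-stay result (both primary), is positive regardless of its secondary outcomes.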

RESULTS

Trial flow

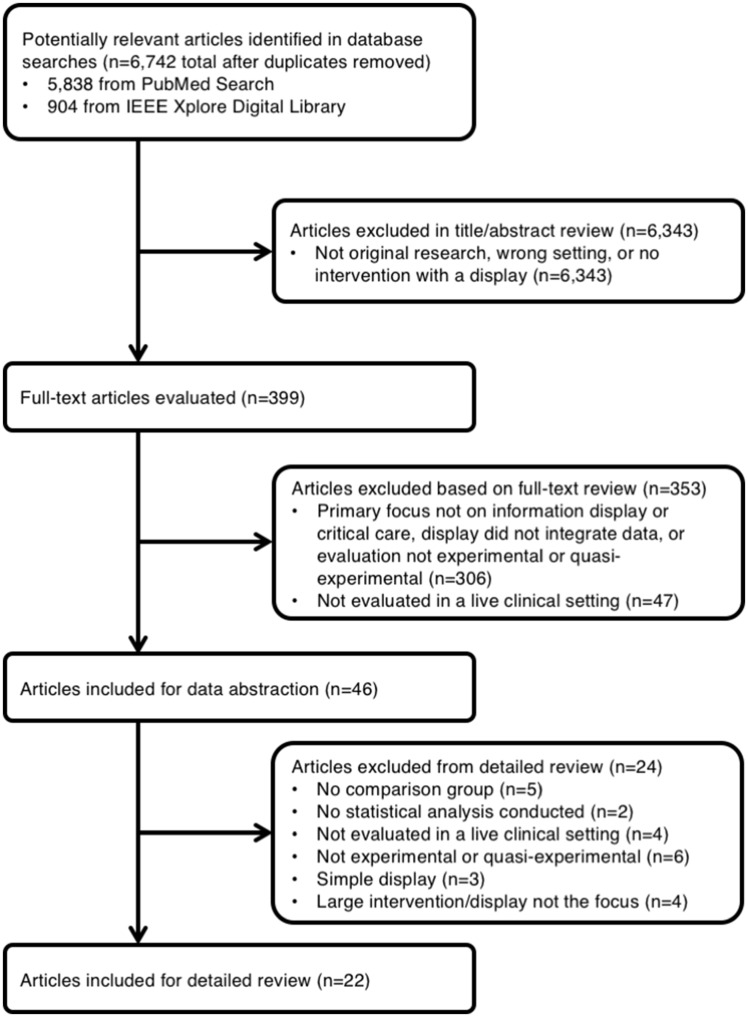

We identified 6742 potentially eligible studies from the literature search. Of these studies, 22 met the inclusion criteria for review (Figure 1). Inter-rater agreement was 0.86 for title and abstract screening and 0.78 for full-text review.

Figure 1.

Trial flow.

Study characteristics

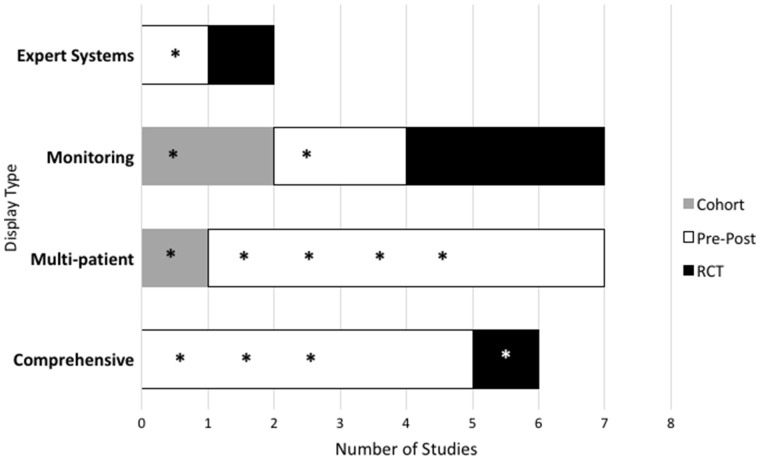

Table 1 summarizes the included manuscripts and study designs. Most articles were published in 2014 or later. Seventeen studies were conducted in ICUs, 4 studies were carried out in operating rooms, and 1 study investigated response to critical care events in general hospital wards. Study designs included 14 pre-post studies,19–32 5 randomized controlled trials (RCTs),33–37 and 3 cohort studies.38–40 All studies compared an information display intervention with usual care. Study duration ranged from 2 to 48 months. Primary outcomes included user satisfaction,26 provider efficiency (eg, time to complete tasks),20,36–39 process outcomes,20,21,25,27,30,32,36,40 patient outcomes,23–25,28,29,31,33–35,38,39 and cost (Figure 2 and Table 3).19,22

Table 1.

Characteristics of included studies

| Type | Citation | Intervention | Participants | Location | Setting | Design | Duration (mo) |
|---|---|---|---|---|---|---|---|
| Comprehensive | Dziadzko et al, 201626 | AWARE: comprehensive viewer of ICU data organized by systems to support information management in the ICU | 361 MD, NP, PA, RN, RT | AZ and FL, USA | ICU | Pre-post | 14 |
| | Pickering et al, 201537 | AWARE (see above) | 174 rounds | MN, USA | ICU | RCT | 2 |
| | Hoskote et al, 201727 | AWARE (see above) | 27 handoffs | MN, USA | ICU | Pre-post | Unknown |
| | Olchanski et al, 201728 | AWARE (see above) | 1839 patients | MN, USA | ICU | Pre-post | 48 |
| | Kheterpal et al, 201829 | AlertWatch OR: real-time data extraction from physiologic monitors and EHR displayed in a schematic "live" view of organ systems | 16 769 patients | MI, USA | OR | Pre-post | 36 |
| | Jiang et al, 201730 | Electronic handoff tool with organized free-text boxes | 50 handoffs | NY, USA | ICU | Pre-post | 5 |
| Multipatient | Shaw et al, 201520 | Unit-wide dashboard of admission compliance to improve timeliness of compliance with quality and safety measures | 450 patients | DC, USA | ICU | Pre-post | 8 |
| | Pageler et al, 201425 | Multipatient dashboard of CLABSI prevention measures to increase compliance with the catheter care bundle and decrease CLABSIs | 860 patients | CA, USA | ICU | Pre-post | 39 |
| | Lipton et al, 201121 | CDS for glucose control: current levels, trends, and protocols | 667 patients | Netherlands | ICU | Pre-post | 11 |
| | Zaydfudim et al, 200923 | Multipatient dashboard of ventilator bundle compliance to increase compliance with the ventilator bundle and reduce VAP rates | 11 216 ventilator-days | TN, USA | ICU | Pre-post | 42 |
| | Bourdeaux et al, 201631 | Multipatient dashboard with visual cues when tidal volumes exceed low tidal volume targets | 553 tidal volume time series | United Kingdom | ICU | Pre-post | 48 |
| | Cox et al, 201832 | Clinician- and family-facing palliative care planner | 78 patients, 67 family members, 10 clinicians | NC, USA | ICU | Pre-post | 5 |
| | Fletcher et al, 201840 | Multipatient dashboard with composite risk scores based on vital signs and laboratory results | 6737 patient admissions | WA, USA | General hospital wards | N-cohort | 5 |
| Physiologic and laboratory monitoring | Giuliano et al, 201224 | Horizon Trends: baseline target and range for any physiological parameter; ST Map: highlights ST changes in ECG | 74 patients | NH, USA | ICU | Pre-post | Unknown |
| | Sondergaard et al, 201233 | Display of patient cardiovascular data and trends in the context of target ranges to support anesthesia monitoring | 27 patients undergoing major abdominal surgery | Sweden | OR | RCT | 3 |
| | Kennedy et al, 201039 | Predictive display of anesthetic gas concentration to support anesthetic delivery decision making | 25 patients | New Zealand | OR | N-cohort | Unknown |
| | Kennedy et al, 200438 | Predictive display of anesthetic gas concentration (see above) | 15 patients, 13 anesthesiologists | New Zealand | OR | N-cohort | 6 |
| | Kirkness et al, 200835 | Highly visible CPP display to help clinicians quickly identify patients with low CPP | 100 patients with cerebral aneurysm | WA, USA | ICU | RCT | 22 |
| | Kirkness et al, 200634 | Highly visible CPP display (see above) | 157 patients with traumatic brain injury | WA, USA | ICU | RCT | 22 |
| | Bansal et al, 200119 | CDS for ABG ordering: ABG display over time | ABG tests ordered (average of 269/wk pre, 387/wk post) | TN, USA | ICU | Pre-post with parallel control | 3 |
| Expert system | Semler et al, 201536 | Integrated sepsis management tool | 407 patients | TN, USA | ICU | RCT | 4 |
| | Evans et al, 199522 | HELP: CDS for antibiotic ordering | 962 patients | UT, USA | ICU | Pre-post | 20 |

ABG: arterial blood gas; AWARE: Ambient Warning And Response Evaluation; CDS: clinical decision support; CLABSI: central line-associated bloodstream infections; CPP: cerebral perfusion pressure; ECG: electrocardiogram; EHR: electronic health record; HELP: Health Evaluation through Logical Processing; ICU: intensive care unit; MD: medical doctor; NP: nurse practitioner; OR: operating room; PA: physician assistant; RCT: randomized controlled trial; RN: registered nurse; RT: respiratory therapist; VAP: ventilator-associated pneumonia.

Figure 2.

Summary of included manuscripts. Positive findings are marked by an asterisk. RCT: randomized controlled trial.

Table 3.

Key display features and study outcomes according to information display category

| Type | Citation | Design | Key features | Primary endpoint(s) | Result(s) | Effect |
|---|---|---|---|---|---|---|
| Comprehensive | Dziadzko et al, 201626 | Pre-post | High-value data, extracted from the EHR, are organized by clinical concept and displayed in patient-centered viewers; additional information including interventions, laboratory data, problem lists, and notes can be accessed; urgency of clinical problems displayed by color. | Satisfaction: User satisfaction | Improved satisfaction in 13 of 15 questions compared with EHR functionality (P < .05) | Positive |
| | Pickering et al, 201537 | RCT | AWARE (see above) | Efficiency: Time spent on pre-round data gathering per patient | Decreased time from 12 to 9 min (P = .03) | Positive |
| | Hoskote et al, 201727 | Pre-post | AWARE (see above) | Process: Percentage agreement in tasks | No significant difference: 24.6% pre vs 31.3% post (P = .1) | Neutral |
| | Olchanski et al, 201728 | Pre-post | AWARE (see above) | Patient outcome: ICU mortality | No significant difference: 4.6% pre vs 3.4% post (P = .33) | Positive |
| | | | | Patient outcome: ICU length of stay | Decreased: 4.1 d pre vs 2.5 d post (P < .0001) | |
| | Kheterpal et al, 201829 | Pre-post | AlertWatch OR: real-time data extraction from physiologic monitors and EHR displayed in a schematic "live" view of organ systems, with color, text, and audible alerts. | Patient outcome: Time MAP <55 mm Hg (hypotension) | Decreased: 2 min AlertWatch vs 1 min parallel control vs 1 min historical control (P < .001) | Positive |
| | | | | Process outcome: Inappropriate ventilation | Decreased: 28% AlertWatch vs 37% parallel control vs 57% historical control (P < .001) | |
| | | | | Process outcome: Median crystalloid infused (fluid resuscitation rate) | Decreased: 5.88 mL·kg⁻¹·h⁻¹ AlertWatch vs 6.17 mL·kg⁻¹·h⁻¹ parallel control vs 7.40 mL·kg⁻¹·h⁻¹ historical control (P < .001) | |
| | Jiang et al, 201730 | Pre-post | Electronic handoff tool with labeled free-text boxes for data entry; the printout version includes the handoff tool and EHR data, such as medication orders and laboratory results. | Process: Mean content overlap index | No difference: 0.06 pre vs 0.06 post (P = .75) | Neutral |
| | | | | Process: Mean discrepancy rate per handoff group | No significant difference: 0.76 pre vs 1.17 post (P = .17) | |
| Multipatient dashboards | Shaw et al, 201520 | Pre-post | Unit-wide dashboard displays noncompliant patients for a set of safety measures. | Process: Median time from ICU admission to treatment consent | No significant difference at preimplementation (393 min), 1 mo postimplementation (304 min), and 4 mo postimplementation (202 min) (P = .13) | Neutral |
| | Pageler et al, 201425 | Pre-post | Patient-specific, EHR-enhanced checklists, educational information on bundle items, and a unit-wide safety and quality dashboard. Color used to indicate noncompliance. | Process: Compliance with CLABSI prevention bundle (5 elements) | Increased compliance with daily documentation of line necessity (from 30% to 73%; P < .001), dressing changes (from 87% to 90%; P = .003), cap changes (from 87% to 93%; P < .001), and port needle changes (from 69% to 95%; P < .001). Decreased compliance with insertion bundle documentation (from 67% to 62%; P = .001). | Positive |
| | | | | Patient outcome: Rate of CLABSI | Decreased from 2.6 to 0.7 per 1000 line-days (P = .03) | |
| | Lipton et al, 201121 | Pre-post | Current glucose levels and trends for multiple patients along with protocol advice for insulin dosage. | Process: Compliance with glucose measurement time | Increased compliance from 40% to 52% (P < .001) | Positive |
| | Zaydfudim et al, 200923 | Pre-post | Multipatient dashboard of ventilator bundle compliance, ventilator status, and deep venous thrombosis and stress ulcer prophylaxis. Color used to indicate noncompliance. | Patient outcome: Rate of VAP | Reduced from 15.2 to 9.3 per 1000 ventilator-days (P = .01) | Positive |
| | Bourdeaux et al, 201631 | Pre-post | Dashboard with visual cues for high TVe values; multipatient display screens (mounted on the wall at either end of the ICU) showed red when TVe >8 and yellow when TVe >6. | Process outcome: Time for TVe values to drop below threshold | Decreased time: 4.2 h pre, 1.4 h post year 1, 0.95 h post year 2, 0.66 h post year 3 | Positive |
| | Cox et al, 201832 | Pre-post | Clinicians can access a dashboard that allows them to view a list of patients meeting automated palliative care triggers, approve a palliative care consult for any patient on the list, and review family-completed palliative care needs assessments adapted from the Needs of Social Nature, Existential Concerns, Symptoms, and Therapeutic Interaction (NEST) scale. | Process: Mean ICU days before palliative care consult | No difference: 3.6 d intervention vs 6.9 d control A (P = .21) | Mixed |
| | | | | Process: Mean ICU days after palliative care consult | No difference: 4.4 d intervention vs 5.1 d control A (P > .05) | |
| | | | | Process: Mechanical ventilation days after palliative care consult | No difference: 7 d intervention vs 9 d control A (P > .05) | |
| | | | | Secondary outcome: NEST total unmet needs score | Improved: decrease of 12.7 units in intervention vs increase of 3.4 units in control B (P = .002) | |
| | Fletcher et al, 201840 | N-cohort | Customizable list of patients showing risk of decompensation and composite calculations based on vital signs and laboratory results, including (1) a rapid response score and (2) a modified early warning score; scores are color coded to show 3 levels of risk severity. | Process: Number of first rapid response team activations | Significant increase: 71.5 while the display was off vs 86.0 while the display was on, per 1000 admissions (IRR, 1.20; P = .04) | Positive |
| | | | | Process: Number of unexpected ICU transfers | No difference: 117 while the display was off vs 145 while the display was on (IRR, 1.15; P = .25) | |
| Physiologic and laboratory monitoring | Giuliano et al, 201224 | Pre-post | Horizon Trends displays baseline target and range for any physiological parameter; ST Map highlights ST changes in ECG. | Patient outcome: Mean arterial pressure | Increased MAP from 63.7 to 68.1 mm Hg (P = .004) | Positive |
| | | | | Patient outcome: % of time MAP within target levels | Increased from 72.8% to 76.3% (P = .031) | |
| | Sondergaard et al, 201233 | RCT | Graphical and numeric display of patient parameters and targets | Patient outcome: Mean percentage of time MAP and CO in target zone | No difference: 36.7% (95% CI, 24.2%-49.2%) vs 36.5% (95% CI, 24.0%-49.0%) | Neutral |
| | | | | Patient outcome: Averaged standardized difference | No difference: 1.5 (range, 1.1–2.3) vs 1.6 (range, 1.2–2.6) | |
| | Kennedy et al, 201039 | N-cohort | Anesthetic uptake model that predicts end-tidal sevoflurane and isoflurane concentrations | Patient outcome: Time to change in Ceff levels of sevoflurane | No difference: 220 vs 227 s (95% CI for the difference, –51 to 32 s) | Neutral |
| | Kennedy et al, 200438 | N-cohort | Anesthetic uptake model (see above) | Patient outcome: Time to change in end-tidal sevoflurane | Changes made on average 1.5–2.3 times faster (P < .05) | Positive |
| | Kirkness et al, 200835 | RCT | Bars of CPP trend in different colors based on a threshold of 70 mm Hg and numeric display of current CPP | Patient outcome: GOSE score 6 months after injury | No difference: 4.16 vs 4.37 (P = .42) | Neutral |
| | | | | Patient outcome: FSE score 6 months after injury | No difference: 19.78 vs 18.88 (P = .45) | |
| | Kirkness et al, 200634 | RCT | CPP display (see above) | Patient outcome: GOSE score 6 months after injury | No difference: 4.13 vs 3.82 (P = .389) | Mixed |
| | | | | Patient outcome: FSE score 6 months after injury | No difference: 18.46 vs 19.02 (P = .749) | |
| | | | | Secondary outcome: Odds of survival at discharge | Improved: odds ratio, 3.82 (95% CI, 1.13–12.92; P = .03) | |
| | Bansal et al, 200119 | Pre-post, parallel control | Patient ABG results graphed over time; color shading indicates abnormally high or low values; ABG order entry redesigned to discourage unnecessary ordering, with a variety of timing/urgency options | Cost: Ratio of number of ABG tests processed in intervention vs control units | Nonsignificant ratio after adjusting for temporal variation in a linear regression model (P = .55) | Neutral |
| Expert system | Semler et al, 201536 | RCT | Integrated sepsis management tool | Process outcome: Time from enrollment to completion of all items on the 6-hour sepsis resuscitation bundle | No difference: hazard ratio, 1.98 (95% CI, 0.75–5.20; P = .159) | Neutral |
| | Evans et al, 199522 | Pre-post | Integrated display of infection parameters and antibiotic use recommendations | Cost: Average antibiotic cost per patient | Decreased from $382.68 to $295.65 (P < .04) | Positive |

Outcomes were rated positive if any primary outcome significantly improved, mixed if any secondary but no primary outcome significantly improved, and neutral if no difference was observed; no studies found an overall negative impact.

ABG: arterial blood gas; Ceff: estimated past and future effect-site concentration; CI: confidence interval; CLABSI: central line–associated bloodstream infection; CO: cardiac output; CPP: cerebral perfusion pressure; ECG: electrocardiogram; EHR: electronic health record; FSE: Functional Status Examination; GOSE: Extended Glasgow Outcome Scale; ICU: intensive care unit; IRR: incidence rate ratio; MAP: mean arterial pressure; RCT: randomized controlled trial; TVe: expired tidal volume; VAP: ventilator-associated pneumonia.

Quality of studies

We appraised the quality of all included manuscripts (Table 2). Nineteen studies selected a comparison group from the same community.20,21,23–25,27–31,33–41 All but 1 study had high outcome follow-up (≥75% of participants).19–21,23–25,27–41 Seventeen studies had truly or somewhat representative samples.20,21,23–25,27–31,33–37,40,41 Fourteen studies assessed outcomes objectively.19–21,23–25,28,29,33,36,38–41 Comparability to control groups and randomization were generally weak: only 7 studies met the comparability criterion33–39 and only 5 met the randomization criterion.33–37

Table 2.

Study quality

| Type | Author, Year | Sampling | Comparison Group | Randomized | Comparability | Outcome Follow-Up | Outcome Assessment |
|---|---|---|---|---|---|---|---|
| Comprehensive | Dziadzko et al, 201626 | 0 | 0 | 0 | 0 | 0 | Subjective |
| | Pickering et al, 201537 | 1 | 1 | 1 | 1 | 1 | Subjective |
| | Hoskote et al, 201727 | 1 | 1 | 0 | 0 | 1 | Subjective |
| | Olchanski et al, 201728 | 1 | 1 | 0 | 0 | 1 | Objective |
| | Kheterpal et al, 201829 | 1 | 1 | 0 | 0 | 1 | Objective |
| | Jiang et al, 201730 | 1 | 1 | 0 | 0 | 1 | Subjective |
| Multipatient | Shaw et al, 201520 | 1 | 1 | 0 | 0 | 1 | Objective |
| | Pageler et al, 201425 | 1 | 1 | 0 | 0 | 1 | Objective |
| | Lipton et al, 201121 | 1 | 1 | 0 | 0 | 1 | Objective |
| | Zaydfudim et al, 200923 | 1 | 1 | 0 | 0 | 1 | Objective |
| | Bourdeaux et al, 201631 | 1 | 1 | 0 | 0 | 1 | Subjective |
| | Cox et al, 201832 | 0 | 0 | 0 | 0 | 1 | Subjective |
| | Fletcher et al, 201840 | 1 | 1 | 0 | 0 | 1 | Objective |
| Physiologic and laboratory monitoring | Giuliano et al, 201224 | 1 | 1 | 0 | 0 | 1 | Objective |
| | Sondergaard et al, 201233 | 1 | 1 | 1 | 1 | 1 | Objective |
| | Kennedy et al, 201039 | 0 | 1 | 0 | 1 | 1 | Objective |
| | Kennedy et al, 200438 | 0 | 1 | 0 | 1 | 1 | Objective |
| | Kirkness et al, 200835 | 1 | 1 | 1 | 1 | 1 | Subjective |
| | Kirkness et al, 200634 | 1 | 1 | 1 | 1 | 1 | Subjective |
| | Bansal et al, 200119 | 0 | 0 | 0 | 0 | 1 | Objective |
| Expert system | Semler et al, 201536 | 1 | 1 | 1 | 1 | 1 | Objective |
| | Evans et al, 199522 | 1 | 1 | 0 | 0 | 1 | Objective |

Note: Studies were ranked 0 (poor) or 1 (high) for sampling (1 = representative), comparison group (1 = same community), randomization (1 = randomized), comparability (1 = matched cohorts, baseline data, or concealed allocation), and follow-up (1 = ¾ or more participants provided data). Studies were also classified as subjective or objective assessments.
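The per-criterion counts can be recomputed directly from Table 2. The following Python sketch transcribes the table's scores (treating an objective outcome assessment as meeting that criterion) and tallies each column; the data layout and the `tally` function are illustrative, and only the scores come from the table.

```python
# Scores transcribed from Table 2: (study, sampling, comparison group, randomized,
# comparability, follow-up, objective outcome assessment).
TABLE_2 = [
    ("Dziadzko 2016",    0, 0, 0, 0, 0, False),
    ("Pickering 2015",   1, 1, 1, 1, 1, False),
    ("Hoskote 2017",     1, 1, 0, 0, 1, False),
    ("Olchanski 2017",   1, 1, 0, 0, 1, True),
    ("Kheterpal 2018",   1, 1, 0, 0, 1, True),
    ("Jiang 2017",       1, 1, 0, 0, 1, False),
    ("Shaw 2015",        1, 1, 0, 0, 1, True),
    ("Pageler 2014",     1, 1, 0, 0, 1, True),
    ("Lipton 2011",      1, 1, 0, 0, 1, True),
    ("Zaydfudim 2009",   1, 1, 0, 0, 1, True),
    ("Bourdeaux 2016",   1, 1, 0, 0, 1, False),
    ("Cox 2018",         0, 0, 0, 0, 1, False),
    ("Fletcher 2018",    1, 1, 0, 0, 1, True),
    ("Giuliano 2012",    1, 1, 0, 0, 1, True),
    ("Sondergaard 2012", 1, 1, 1, 1, 1, True),
    ("Kennedy 2010",     0, 1, 0, 1, 1, True),
    ("Kennedy 2004",     0, 1, 0, 1, 1, True),
    ("Kirkness 2008",    1, 1, 1, 1, 1, False),
    ("Kirkness 2006",    1, 1, 1, 1, 1, False),
    ("Bansal 2001",      0, 0, 0, 0, 1, True),
    ("Semler 2015",      1, 1, 1, 1, 1, True),
    ("Evans 1995",       1, 1, 0, 0, 1, True),
]

CRITERIA = ("sampling", "comparison_group", "randomized",
            "comparability", "follow_up", "objective_assessment")


def tally(rows):
    """Count the studies meeting each criterion (score 1 or objective assessment)."""
    counts = dict.fromkeys(CRITERIA, 0)
    for _study, *scores in rows:
        for criterion, score in zip(CRITERIA, scores):
            counts[criterion] += int(score)
    return counts


for criterion, count in tally(TABLE_2).items():
    print(f"{criterion:>22}: {count} of {len(TABLE_2)}")
```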

Types of interventions

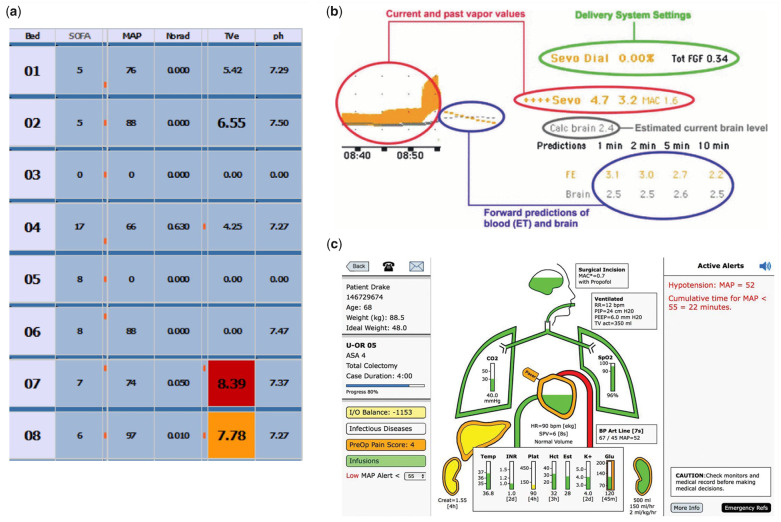

Information displays in the included studies were classified and analyzed according to 4 categories: comprehensive integrated displays,26–30,37 multipatient dashboards,20,21,23,25,31,32,40 physiologic and laboratory monitoring,19,24,33–35,38,39 and expert systems (Figure 3 and Table 3).36,41

Figure 3.

Example user interfaces: (A) multipatient,31 (B) monitoring,39 and (C) comprehensive.29 Figures reprinted with permission.

Comprehensive integrated displays combine information from different sources within EHRs (eg, medications, problems, vital signs, laboratory results) to support clinically meaningful grouping of related information. Rather than focusing on a specific disease or patient state, these displays provide a comprehensive view of a patient to improve situation awareness and communication (eg, information exchange in handoffs). Six studies evaluated 3 displays that organize information into clinically meaningful concept- and systems-based categories.26–30,37

Multipatient dashboards display multiple patients in a unit to improve compliance with standard care protocols, monitor progress toward treatment goals, and monitor critical care events. These displays were typically placed in a highly visible location, such as a wall next to the nursing station. Six studies evaluated multipatient dashboards to improve admission processes,20 catheter care,25 ventilator management,23 glucose control,21 and palliative care.32 One study monitored patients’ acuity scores for rapid response teams.40
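A multipatient dashboard essentially applies a per-patient rule across every bed in the unit and surfaces only the exceptions. The Python sketch below illustrates that pattern with the tidal volume color thresholds described for Bourdeaux et al in Table 3 (red above 8, yellow above 6). The units (assumed mL/kg predicted body weight), the `BedStatus` record, and the red-before-yellow ordering are hypothetical choices, not details of any evaluated display.

```python
from dataclasses import dataclass

# Thresholds as described for the Bourdeaux et al dashboard (Table 3).
# ASSUMPTION: values are in mL/kg predicted body weight; the source gives no units.
RED_ABOVE = 8.0
YELLOW_ABOVE = 6.0


@dataclass
class BedStatus:
    bed: str             # hypothetical bed label
    tidal_volume: float  # most recent tidal volume (assumed mL/kg)


def visual_cue(tidal_volume: float) -> str:
    """Map one patient's tidal volume to the dashboard color cue."""
    if tidal_volume > RED_ABOVE:
        return "red"
    if tidal_volume > YELLOW_ABOVE:
        return "yellow"
    return "none"


def unit_view(beds):
    """Return (bed, cue) for every flagged bed in the unit, red before yellow."""
    severity = {"red": 0, "yellow": 1}
    flagged = [(b.bed, visual_cue(b.tidal_volume)) for b in beds]
    flagged = [(bed, cue) for bed, cue in flagged if cue != "none"]
    return sorted(flagged, key=lambda item: (severity[item[1]], item[0]))


unit = [BedStatus("Bed 1", 5.8), BedStatus("Bed 2", 7.2),
        BedStatus("Bed 3", 9.1), BedStatus("Bed 4", 6.0)]
print(unit_view(unit))  # [('Bed 3', 'red'), ('Bed 2', 'yellow')]
```

Showing only flagged beds, most severe first, is one way to keep a wall-mounted display legible at a distance; an evaluated dashboard may instead show every bed with its status.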

Physiologic and laboratory monitoring displays included interventions that track parameters for a specific patient over time to help providers monitor trends, identify out-of-range values, and verify whether certain parameters are within target goals. Unlike comprehensive integrated displays, physiologic and laboratory monitoring displays focus on specific disease states or body systems, such as “shock” or “cardiovascular.” Examples include (1) a display that allows setting target goals and flags out-of-range values for cardiovascular monitoring24; (2) a graphical display of patient vital signs to achieve goal-directed therapy during anesthesia33; (3) a highly visible, shared display of cerebral perfusion for individual patients34,35; (4) a system that monitored anesthetic gas delivery and predicted drug concentration over time to support changes in anesthetic dosing38,39; and (5) a display of arterial blood gas results over time to reduce unnecessary arterial blood gas orders.19
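Two core functions of such displays, flagging out-of-range values and summarizing time within target, can be sketched in Python as follows; the percentage-of-time calculation mirrors the kind of endpoint Giuliano et al reported (Table 3). The sampling scheme, the carry-forward assumption, and the 65–90 mm Hg target range are hypothetical choices for illustration, not parameters from any included study.

```python
def percent_time_in_range(samples, low, high):
    """Time-weighted percentage of monitoring time with the value in [low, high].

    `samples` is a time-ordered list of (minute, value) pairs. Each value is
    assumed to hold until the next sample (last observation carried forward),
    so the final sample only closes the last interval.
    """
    if len(samples) < 2:
        raise ValueError("need at least 2 samples to define an interval")
    total = in_range = 0.0
    for (t0, value), (t1, _) in zip(samples, samples[1:]):
        if t1 < t0:
            raise ValueError("samples must be sorted by time")
        total += t1 - t0
        if low <= value <= high:
            in_range += t1 - t0
    return 100.0 * in_range / total if total else 0.0


def out_of_range(samples, low, high):
    """Samples a display would flag because they fall outside the target range."""
    return [(t, v) for t, v in samples if not low <= v <= high]


# Hypothetical MAP readings (mm Hg) every 5 minutes with an assumed 65-90 mm Hg target.
map_samples = [(0, 62), (5, 66), (10, 70), (15, 92), (20, 80)]
print(percent_time_in_range(map_samples, 65, 90))  # 50.0
print(out_of_range(map_samples, 65, 90))           # [(0, 62), (15, 92)]
```

Time weighting matters when samples arrive at irregular intervals; with evenly spaced samples it reduces to the fraction of in-range readings, excluding the last one.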

Expert systems included interventions that use automated logic and patient parameters to recommend optimal decisions for specific conditions or care processes. Two studies evaluated expert systems to support care decisions for infectious diseases: an integrated sepsis management tool36 and a decision support tool for antibiotic ordering.22 These systems integrate relevant information from various sources in the EHR and provide patient-specific treatment recommendations, such as optimal antibiotic therapy.

Effect of information displays on clinical care

Twelve of the 22 (55%) studies found significant improvement in at least 1 primary outcome (Table 3).21,23,40,41,24–26,28,29,31,37,38 Information display types associated with significant improvement included comprehensive integrated displays (4 of 6 studies; 2 displays),26,28,29,37 multipatient dashboards (5 of 7 studies; 5 displays),21,23,25,31,40 physiologic and laboratory monitoring (2 of 7 studies; 2 displays),24,38 and expert systems (1 of 2 studies; 1 display).22
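The category-level tallies can be recomputed from the Effect column of Table 3. This Python sketch hard-codes that column (the study labels are shorthand for the citations above) and counts positive studies per category and overall; it is a bookkeeping aid, not part of the review's stated methods.

```python
# Study-level effects transcribed from Table 3, grouped by display category.
EFFECTS = {
    "Comprehensive integrated displays": [
        ("Dziadzko 2016", "positive"), ("Pickering 2015", "positive"),
        ("Hoskote 2017", "neutral"), ("Olchanski 2017", "positive"),
        ("Kheterpal 2018", "positive"), ("Jiang 2017", "neutral"),
    ],
    "Multipatient dashboards": [
        ("Shaw 2015", "neutral"), ("Pageler 2014", "positive"),
        ("Lipton 2011", "positive"), ("Zaydfudim 2009", "positive"),
        ("Bourdeaux 2016", "positive"), ("Cox 2018", "mixed"),
        ("Fletcher 2018", "positive"),
    ],
    "Physiologic and laboratory monitoring": [
        ("Giuliano 2012", "positive"), ("Sondergaard 2012", "neutral"),
        ("Kennedy 2010", "neutral"), ("Kennedy 2004", "positive"),
        ("Kirkness 2008", "neutral"), ("Kirkness 2006", "mixed"),
        ("Bansal 2001", "neutral"),
    ],
    "Expert systems": [
        ("Semler 2015", "neutral"), ("Evans 1995", "positive"),
    ],
}


def positive_counts(effects_by_category):
    """Return {category: (positive studies, total studies)}."""
    return {
        category: (sum(effect == "positive" for _, effect in studies), len(studies))
        for category, studies in effects_by_category.items()
    }


counts = positive_counts(EFFECTS)
for category, (positive, total) in counts.items():
    print(f"{category}: {positive} of {total}")

positive_all = sum(p for p, _ in counts.values())
total_all = sum(t for _, t in counts.values())
print(f"Overall: {positive_all} of {total_all} ({100 * positive_all / total_all:.0f}%)")
# Overall: 12 of 22 (55%)
```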

Overall, the strength of evidence on the effect of information displays on clinical care was low, with only 5 studies being RCTs.33–37 Of the 5 RCTs, only Pickering et al (2015)37 found significant improvement in a primary outcome. In this study, investigators designed a comprehensive integrated display that extracted high-value data from the EHR and organized them by clinical concept; additional information such as interventions, laboratory data, problem lists, and notes could be accessed on demand from the display. Participants who had access to the display decreased pre-round data-gathering time from 12 to 9 minutes per patient (P = .03).37 Most of the evidence supporting the benefits of information displays came from pre-post and N-cohort studies: of the 14 pre-post and 3 N-cohort studies, 11 (65%) found significant improvement.21,23–26,28,29,31,38,40,41

Kirkness et al34 conducted an RCT with mixed results (ie, no difference in primary outcomes, but improvement in a secondary outcome). They investigated the effect of a highly visible display of cerebral perfusion pressure trends on patient recovery 6 months after traumatic brain injury.34 There were no differences in primary outcomes related to patient recovery (Extended Glasgow Outcome Scale: 4.13 vs 3.82, P = .389; Functional Status Examination: 18.46 vs 19.02, P = .749), but the odds of survival at discharge improved significantly (odds ratio, 3.82; P = .03).34 A second RCT by Kirkness et al35 evaluated the same display in patients with cerebral aneurysm and found no differences in recovery at 6 months between the control and intervention groups (Extended Glasgow Outcome Scale: 4.16 vs 4.37, P = .42; Functional Status Examination: 19.78 vs 18.88, P = .45).

The remaining 2 RCTs, by Semler et al36 and Sondergaard et al,33 had neutral findings. Semler et al36 found that an expert system for sepsis management did not change the time from enrollment to completion of all items of the 6-hour sepsis resuscitation bundle (hazard ratio, 1.98; P = .159). Sondergaard et al33 investigated a display of cardiovascular data to support physiologic monitoring during anesthesia for major abdominal surgery and found no effect on the mean percentage of time that mean arterial pressure and cardiac output were in the target zone (36.7% [95% confidence interval, 24.2%-49.2%] vs 36.5% [95% confidence interval, 24.0%-49.0%]) or on the averaged standardized difference (1.5 [range, 1.1–2.3] vs 1.6 [range, 1.2–2.6]).

DISCUSSION

We systematically reviewed the literature on the clinical effect of electronic information display interventions in critical care and anesthesiology settings. Seventeen information displays were evaluated in 22 studies. Six studies evaluated comprehensive integrated displays, 7 studies evaluated multipatient dashboards, 7 studies evaluated physiologic and laboratory monitoring displays of individual patients, and 2 studies evaluated expert systems that provide decision support recommendations for specific conditions. Although over half (12 of 22) of the studies found significant effects on primary outcomes such as patient outcomes, process outcomes, efficiency, and cost,21,23–26,28,29,31,37,38,40,41 inferences about the efficacy of critical care information displays are limited by low to moderate study quality. Of the 5 RCTs, 4 found no difference in primary outcomes between novel information displays and usual care.33–36 In the following sections, we discuss the findings for each type of display and compare and contrast them with those of a systematic review of similar information displays evaluated in simulated settings.

Comprehensive integrated displays

Four of the 6 studies in this category found positive effects on primary outcomes, including improved clinician satisfaction, improved provider efficiency in preparing for patient rounds, and decreased ICU length of stay. Because comprehensive integrated displays gather and present relevant data from multiple EHR sources in clinically meaningful structures, increased clinician efficiency and satisfaction are expected, possibly resulting from better support for high-level cognitive processing.

Multipatient dashboards

Five of the 7 studies in this category found improvements in process outcomes (eg, compliance with care protocols)21,25,40 and patient outcomes (eg, reduced rates of hospital-acquired infections).23,25,31 In light of distributed cognition theory,42 multipatient dashboards may effectively propagate the representational state of all patients in a unit with respect to prespecified activities and goals. Although all 5 studies with positive outcomes used a pre-post or cohort design, the large effect sizes on important patient outcomes are compelling and warrant further investigation with stronger study designs.
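The idea of a multipatient dashboard that represents the state of every patient in a unit against prespecified goals can be illustrated with a small data structure. The sketch below is hypothetical: the class, the function, the bed labels, and the bundle item names are placeholders and do not reproduce any dashboard from the included studies. It lists each bed's unmet bundle items and computes unit-level compliance per item, treating a missing item as unmet (a conservative assumption).

```python
from dataclasses import dataclass, field


@dataclass
class PatientStatus:
    """Current state of one patient; item names are hypothetical placeholders."""
    bed: str
    items: dict = field(default_factory=dict)  # bundle item -> True if currently met


def unit_dashboard(patients, bundle):
    """Summarize protocol compliance across all patients in a unit.

    Returns (rows, compliance): rows pairs each bed with its unmet items,
    and compliance maps each bundle item to the percentage of patients
    meeting it. Items absent from a patient's record count as unmet.
    """
    rows = [(p.bed, [item for item in bundle if not p.items.get(item, False)])
            for p in patients]
    n = len(patients)
    compliance = {
        item: (100.0 * sum(p.items.get(item, False) for p in patients) / n) if n else 0.0
        for item in bundle
    }
    return rows, compliance


bundle = ["item_a", "item_b"]  # hypothetical bundle elements
patients = [
    PatientStatus("1", {"item_a": True, "item_b": True}),
    PatientStatus("2", {"item_a": True, "item_b": False}),
    PatientStatus("3"),  # no documentation yet
]
rows, compliance = unit_dashboard(patients, bundle)
for bed, unmet in rows:
    print(bed, unmet)
```

Showing per-bed gaps alongside unit-level percentages is one way to let every team member see the same shared state; how the included dashboards actually laid out this information is not described here.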

Support for physiologic and laboratory monitoring

Two of the 7 studies in this category found improvements in surrogate quality outcomes, including an increased percentage of time patients’ mean arterial pressure was within target goals (72.8%-76.3%),24 and decreased time to change in end-tidal sevoflurane (1.5–2.3 faster) in anesthesia settings.38 Five studies, which included 4 RCTs and a pre-post study with a parallel control, did not find significant changes in the primary outcome.19,33–35,39

Expert systems

Two studies investigated systems that provide automated, patient-specific treatment recommendations for specific conditions.22,36 One pre-post study published in 1995 found reduced antibiotic costs (from $382.68 to $295.65 per patient) after the introduction of an expert system that displays information relevant to infectious diseases and recommends the most cost-effective antibiotic choices.22 The other study, an RCT, found no difference in time from enrollment to completion of all items of a 6-hour sepsis resuscitation bundle.36 With recent advances in machine learning and artificial intelligence, and the emerging adoption of automated surveillance systems,43–45 expert systems may play a growing role in critical care, perhaps as a component of comprehensive integrated displays and multipatient dashboards.
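The reported drop from $382.68 to $295.65 per patient corresponds to a saving of $87.03 per patient, or roughly 22.7%. The core of a "most cost-effective choice" recommendation can be sketched as a filter followed by a minimum. This is an illustration only, not the logic of the system evaluated in reference 22, which is not described here. The function name, drug names, and costs are hypothetical, and a real system would likely also need to weigh factors such as infection site, dosing, and organ function.

```python
def recommend_antibiotic(susceptible, allergies, cost_per_course):
    """Return the lowest-cost antibiotic that the isolate is susceptible to
    and the patient is not allergic to, or None if no candidate remains.

    susceptible: drugs the cultured organism is susceptible to (hypothetical)
    allergies: set of drugs the patient must not receive
    cost_per_course: drug -> cost; drugs without a known cost are skipped
    """
    candidates = [
        drug for drug in susceptible
        if drug not in allergies and drug in cost_per_course
    ]
    if not candidates:
        return None
    # Rank remaining candidates by cost alone (a deliberate simplification).
    return min(candidates, key=cost_per_course.__getitem__)


costs = {"drug_a": 40.0, "drug_b": 10.0, "drug_c": 25.0}  # hypothetical costs
print(recommend_antibiotic(["drug_a", "drug_b", "drug_c"], {"drug_b"}, costs))  # drug_c
```

Returning None instead of a fallback makes it explicit that no safe, covered option was found, leaving the decision with the clinician.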

Comparison with studies in simulation settings

When comparing the evidence from studies conducted in clinical vs simulated settings, we found consistent findings for comprehensive integrated displays and translation gaps, especially for physiologic and laboratory monitoring displays. Thirteen studies in simulated settings found benefits of comprehensive integrated displays in outcomes such as clinician efficiency, accuracy, and satisfaction (Wright MC, et al, unpublished data, 2018). In contrast, although simulation studies showed benefits of physiologic and laboratory monitoring displays using various trend representations, there was little comparable evidence in clinical settings.

Factors that may have contributed to these translation gaps include lack of clinician adoption and technical implementation challenges. Authors of physiologic and laboratory monitoring display studies suggested that low clinician adoption may have attenuated the observed effects.34 Specific barriers included lag time in information availability,29 poor workflow integration,36 availability of more familiar display options,39,40 and insufficient experience with the new information displays. Conversely, 2 studies attributed positive outcomes in part to workflow integration with established daily rounds.30,32 Therefore, to be effective in clinical settings, displays that support the monitoring of specific systems and disease states may need to be integrated into multipatient dashboards or comprehensive integrated displays. Interestingly, no studies on multipatient dashboards were identified in simulated settings.46 Research in simulated settings is needed to help design optimal displays, especially more comprehensive dashboards that cover a larger set of care protocols.

Technical challenges may have impeded the implementation of certain categories of information displays in real settings. Comprehensive integrated displays, in particular, require access to a variety of data from different sources in a structured, computable format, which is not easily achieved with current closed-architecture EHR systems. The emerging adoption of more open architectures, based on standards such as SMART on FHIR (Fast Healthcare Interoperability Resources), is creating opportunities to overcome these barriers.46
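To make the interoperability point concrete, the following sketch shows how a display might request a patient's vital signs through a FHIR REST search and flatten the returned Bundle into a time series. It is a minimal sketch under stated assumptions. The server base URL and patient ID are hypothetical. LOINC 8478-0 (mean blood pressure) is used only for illustration. The SMART on FHIR launch and OAuth 2.0 authorization steps are omitted entirely, and server support for `_sort` varies.

```python
from urllib.parse import urlencode


def observation_search_url(base_url, patient_id, loinc_code, count=100):
    """Build a FHIR search URL for a patient's Observations with one LOINC code,
    newest first. Uses the standard token syntax system|code for `code`."""
    params = {
        "patient": patient_id,
        "code": f"http://loinc.org|{loinc_code}",
        "_sort": "-date",  # descending by the Observation `date` search parameter
        "_count": count,   # page size hint; servers may cap it
    }
    return f"{base_url.rstrip('/')}/Observation?{urlencode(params)}"


def bundle_to_series(bundle):
    """Extract (effectiveDateTime, value, unit) triples from a FHIR searchset
    Bundle, skipping entries that are not Observations or lack valueQuantity."""
    series = []
    for entry in bundle.get("entry", []):
        resource = entry.get("resource", {})
        qty = resource.get("valueQuantity")
        if resource.get("resourceType") == "Observation" and qty is not None:
            series.append((resource.get("effectiveDateTime"),
                           qty.get("value"), qty.get("unit")))
    return series


# Hypothetical server and patient; no network request is made here.
print(observation_search_url("https://fhir.example.org/", "123", "8478-0"))
```

Because the same search works against any conformant server, a display built this way could in principle be deployed at several sites without site-specific data extraction, which is the property the multicenter studies proposed below would rely on.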

Strengths and limitations

To our knowledge, this is the first systematic review of information display interventions in critical care settings. We followed a standard systematic review process, which included a formal a priori protocol, systematic searching of multiple databases, independent article screening and abstraction by 2 raters, formal quality appraisal using a standard instrument, and integration with the findings of a systematic review focused on similar studies in simulation settings. Limitations include the low to moderate quality of included studies; wide heterogeneity of information displays and study designs, which precluded a meta-analysis; and the lack of a formal assessment of publication bias, which could partially explain the absence of negative results in the included manuscripts.

Implications for practice and future research

Future research should take measures to help close the gap between research done in laboratory and clinical settings. Implementation science frameworks may help ensure that potential barriers are identified and addressed early in the display design phase and throughout implementation. In addition, investigators should take advantage of the commercial EHR systems prevalent in U.S. academic medical centers to evaluate critical care information display innovations.47 With the emerging adoption of interoperability standards that allow external applications to be integrated with EHR systems, information displays shown to be effective in laboratory settings can be integrated into providers’ workflow and tested in multicenter experimental studies. With a much larger number of potential study sites, this EHR ecosystem can also help improve study quality, allowing randomized designs or at least long-term time series with parallel controls. Last, several innovations that have shown promising results in simulated settings still need to be evaluated in clinical settings.
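Long-term time series of this kind are commonly analyzed with segmented regression (interrupted time series analysis), which separates an immediate level change from a change in trend after an intervention. The following is a minimal sketch, assuming equally spaced measurements (eg, monthly protocol compliance) from a single unit. It uses ordinary least squares with no adjustment for autocorrelation or seasonality, and the function name is hypothetical. With a parallel control, one simple extension is to fit the same model to the difference between the intervention and control series. A fuller analysis might instead model both series jointly.

```python
import numpy as np


def segmented_regression(y, intervention_index):
    """Fit y_t = b0 + b1*t + b2*post_t + b3*(t - t0)*post_t by least squares.

    b0: baseline level; b1: pre-intervention slope;
    b2: immediate level change at t0; b3: change in slope after t0.
    Returns the coefficient array [b0, b1, b2, b3].
    """
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)
    post = (t >= intervention_index).astype(float)  # 1 from the intervention onward
    X = np.column_stack([np.ones_like(t), t, post, (t - intervention_index) * post])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


# Synthetic, noise-free series: level drop of 8 and slope increase of 1 at t0 = 10.
t = np.arange(20)
post = t >= 10
y = 50 + 0.5 * t - 8 * post + 1.0 * (t - 10) * post
print(np.round(segmented_regression(y, 10), 3))
```

Real compliance data would be noisy and often autocorrelated, so standard errors from plain least squares would likely be too optimistic. The sketch only shows how the design matrix encodes level and slope changes.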

CONCLUSIONS

We identified 22 studies that investigated information display interventions in critical care and anesthesiology settings. Display interventions included comprehensive integrated displays, multipatient dashboards, physiologic and laboratory monitoring displays, and expert systems. Although over half of the studies observed significant improvement in at least 1 primary outcome, only 1 of 5 RCTs showed significant improvement. Despite promising results in both laboratory and clinical settings, comprehensive integrated displays are relatively understudied. Multipatient dashboards seem to improve compliance with standard care protocols and the achievement of target treatment goals. Most studies of physiologic and laboratory monitoring displays did not show positive effects, with low provider adoption cited as the most common explanatory factor. Limitations include the overall low quality of the included studies and the lack of a meta-analysis due to large heterogeneity in the information display interventions and study designs. Promising results found in a systematic review of information displays in simulated settings have largely not translated to clinical settings, possibly due to technical barriers. Investigators should leverage the evolving EHR landscape in U.S. medical centers to test novel information displays in clinical settings using well-designed study methodology.

FUNDING

This work was supported by National Library of Medicine grant numbers R56LM011925 and T15LM007124 and National Cancer Institute grant number F99CA234943.

AUTHOR CONTRIBUTIONS

Study concept and design were completed by MCW, GDF, and NS. PubMed database queries were made by RGW, GDF, MCW, NS, and DB. IEEE database queries were made by MCW, PN, RGW, GDF, NS, and DB. Title and abstract review was completed by RGW, GDF, DB, NS, MCW, and TR. Full-text review and data extraction were completed by RGW and GDF. Drafting of the manuscript was completed by RGW and GDF. Critical revision of the manuscript was completed by MCW, GDF, RGW, NS, DB, and TR.

SUPPLEMENTARY MATERIAL

Supplementary material is available at Journal of the American Medical Informatics Association online.

ACKNOWLEDGMENTS

We would like to thank our expert panel for their input: Brekk MacPherson, MS (St. Luke’s Health System); Eugene Moretti, MD (Duke University); Atilio Barbeito, MD (Duke University); Bruce Bray, MD (University of Utah); Anthony Faiola, PhD (University of Illinois at Chicago); Kathleen Harder, PhD (University of Minnesota); Jonathan Mark, MD (Duke University and Durham VA); Farrant Sakaguchi, MD, MS (University of Utah); Rebecca Schroeder, MD (Duke University and Durham VA); and Joe Tonna, MD (University of Utah).

Conflict of interest statement: None declared.

REFERENCES

- 1. Donchin Y, Seagull FJ. The hostile environment of the intensive care unit. Curr Opin Crit Care 2002; 8 (4): 316–20.

- 2. Donchin Y, Gopher D, Olin M et al. A look into the nature and causes of human errors in the intensive care unit. Qual Saf Health Care 2003; 12 (2): 143–7.

- 3. Rothschild JM, Landrigan CP, Cronin JW et al. The Critical Care Safety Study: the incidence and nature of adverse events and serious medical errors in intensive care. Crit Care Med 2005; 33 (8): 1694–700.

- 4. Bracco D, Favre JB, Bissonnette B et al. Human errors in a multidisciplinary intensive care unit: a 1-year prospective study. Intensive Care Med 2001; 27 (1): 137–45.

- 5. Forster AJ, Kyeremanteng K, Hooper J et al. The impact of adverse events in the intensive care unit on hospital mortality and length of stay. BMC Health Serv Res 2008; 8: 259.

- 6. Fackler JC, Watts C, Grome A et al. Critical care physician cognitive task analysis: an exploratory study. Crit Care 2009; 13: R33.

- 7. Edworthy J. Medical audible alarms: a review. J Am Med Inform Assoc 2013; 20 (3): 584–9.

- 8. Wright MC, Dunbar S, Macpherson BC et al. Toward designing information display to support critical care. Appl Clin Inform 2016; 7: 912–29.

- 9. Islam R, Weir CR, Jones M et al. Understanding complex clinical reasoning in infectious diseases for improving clinical decision support design. BMC Med Inform Decis Mak 2015; 15: 101.

- 10. Zahabi M, Kaber DB, Swangnetr M. Usability and safety in electronic medical records interface design: a review of recent literature and guideline formulation. Hum Factors 2015; 57 (5): 805–34.

- 11. Drews FA, Westenskow DR. The right picture is worth a thousand numbers: data displays in anesthesia. Hum Factors 2006; 48 (1): 59–71.

- 12. McIntosh N. Intensive care monitoring: past, present and future. Clin Med (London) 2002; 2 (4): 349–55.

- 13. Schmidt JM, De Georgia M. Multimodality monitoring: informatics, integration data display and analysis. Neurocrit Care 2014; 21 Suppl 2: S229–38.

- 14. Pickering BW, Litell JM, Herasevich V et al. Clinical review: the hospital of the future—building intelligent environments to facilitate safe and effective acute care delivery. Crit Care 2012; 16 (2): 220.

- 15. Institute of Medicine. Finding What Works in Health Care: Standards for Systematic Reviews. Washington, DC: The National Academies Press; 2011.

- 16. Liberati A, Altman DG, Tetzlaff J et al. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: explanation and elaboration. Ann Intern Med 2009; 6: e1–34.

- 17. Byrt T, Bishop J, Carlin JB. Bias, prevalence and kappa. J Clin Epidemiol 1993; 46 (5): 423–9.

- 18. Cook DA, Reed DA. Appraising the quality of medical education research methods: the medical education research study quality instrument and the Newcastle-Ottawa Scale-education. Acad Med 2015; 90 (8): 1067–76.

- 19. Bansal P, Aronsky D et al. A computer based intervention on the appropriate use of arterial blood gas. Proc AMIA Symp 2001: 32–6.

- 20. Shaw SJ, Jacobs B, Stockwell DC et al. Effect of a real-time pediatric ICU safety bundle dashboard on quality improvement measures. Jt Comm J Qual Patient Saf 2015; 41 (9): 414–20.

- 21. Lipton JA, Barendse RJ, Schinkel AFL et al. Impact of an alerting clinical decision support system for glucose control on protocol compliance and glycemic control in the intensive cardiac care unit. Diabetes Technol Ther 2011; 13 (3): 343–9.

- 22. Evans RS, Classen DC, Pestotnik SL et al. A decision support tool for antibiotic therapy. Proc Annu Symp Comput Appl Med Care 1995: 651–5.

- 23. Zaydfudim V, Dossett LA, Starmer JM et al. Implementation of a real-time compliance dashboard to help reduce SICU ventilator-associated pneumonia with the ventilator bundle. Arch Surg 2009; 144: 656–62.

- 24. Giuliano KK, Jahrsdoerfer M, Case J et al. The role of clinical decision support tools to reduce blood pressure variability in critically ill patients receiving vasopressor support. Comput Inform Nurs 2012; 30 (4): 204–9.

- 25. Pageler NM, Longhurst CA, Wood M et al. Use of electronic medical record-enhanced checklist and electronic dashboard to decrease CLABSIs. Pediatrics 2014; 133 (3): e738–46.

- 26. Dziadzko MA, Herasevich V, Sen A et al. User perception and experience of the introduction of a novel critical care patient viewer in the ICU setting. Int J Med Inform 2016; 88: 86–91.

- 27. Hoskote SS, Racedo Africano CJ, Braun AB et al. Improving the quality of handoffs in patient care between critical care providers in the intensive care unit. Am J Med Qual 2017; 32 (4): 376–83.

- 28. Olchanski N, Dziadzko MA, Tiong IC et al. Can a novel ICU data display positively affect patient outcomes and save lives? J Med Syst 2017; 41 (11): 171.

- 29. Kheterpal S, Shanks A, Tremper KK. Impact of a novel multiparameter decision support system on intraoperative processes of care and postoperative outcomes. Anesthesiology 2018; 128 (2): 272–82.

- 30. Jiang SY, Murphy A, Heitkemper EM et al. Impact of an electronic handoff documentation tool on team shared mental models in pediatric critical care. J Biomed Inform 2017; 69: 24–32.

- 31. Bourdeaux CP, Thomas MJC, Gould TH et al. Increasing compliance with low tidal volume ventilation in the ICU with two nudge-based interventions: evaluation through intervention time-series analyses. BMJ Open 2016; 6: 6–11.

- 32. Cox CE, Jones DM, Reagan W et al. Palliative care planner: a pilot study to evaluate acceptability and usability of an electronic health records system-integrated, needs-targeted app platform. Ann Am Thorac Soc 2018; 15 (1): 59–68.

- 33. Sondergaard S, Wall P, Cocks K et al. High concordance between expert anaesthetists’ actions and advice of decision support system in achieving oxygen delivery targets in high-risk surgery patients. Br J Anaesth 2012; 108 (6): 966–72.

- 34. Kirkness C, Burr R, Cain K et al. Effect of continuous display of cerebral perfusion pressure on outcomes in patients with traumatic brain injury. Am J Crit Care 2006; 15: 600–9.

- 35. Kirkness C, Burr R, Cain K et al. The impact of a highly visible display of cerebral perfusion pressure on outcome in individuals with cerebral aneurysms. Heart Lung 2008; 37 (3): 227–37.

- 36. Semler MW, Weavind L, Hooper MH et al. An electronic tool for the evaluation and treatment of sepsis in the ICU: a randomized controlled trial. Crit Care Med 2015; 43 (8): 1595–602.

- 37. Pickering BW, Dong Y, Ahmed A et al. The implementation of clinician designed, human-centered electronic medical record viewer in the intensive care unit: a pilot step-wedge cluster randomized trial. Int J Med Inform 2015; 84 (5): 299–307.

- 38. Kennedy RR, French RA, Gilles S. The effect of a model-based predictive display on the control of end-tidal sevoflurane concentrations during low-flow anesthesia. Anesth Analg 2004; 99 (4): 1159–63.

- 39. Kennedy RR, McKellow MA, French RA. The effect of predictive display on the control of step changes in effect site sevoflurane levels. Anaesthesia 2010; 65 (8): 826–30.

- 40. Fletcher GS, Aaronson BA, White AA et al. Effect of a real-time electronic dashboard on a rapid response system. J Med Syst 2018; 42. doi: 10.1007/s10916-017-0858-5.

- 41. Evans RS, Larsen RA, Burke JP et al. Computer surveillance of hospital-acquired infections and antibiotic use. JAMA 1986; 256 (8): 1007–11.

- 42. Hazlehurst B, Gorman PN, McMullen CK. Distributed cognition: an alternative model of cognition for medical informatics. Int J Med Inform 2008; 77 (4): 226–34.

- 43. Johnson AEW, Ghassemi MM, Nemati S et al. Machine learning and decision support in critical care. Proc IEEE 2016; 104 (2): 444–66.

- 44. Raghupathi W, Raghupathi V. Big data analytics in healthcare: promise and potential. Health Inf Sci Syst 2014; 2: 3.

- 45. De Bie AJR, Nan S, Vermeulen LRE et al. Intelligent dynamic clinical checklists improved checklist compliance in the intensive care unit. Br J Anaesth 2017; 119 (2): 231–8.

- 46. Mandel JC, Kreda DA, Mandl KD et al. SMART on FHIR: a standards-based, interoperable apps platform for electronic health records. J Am Med Inform Assoc 2016; 23 (5): 899–908.

- 47. National Coordinator for Health Information Technology. Update on the Adoption of Health Information Technology and Related Efforts to Facilitate the Electronic Use and Exchange of Health Information. 2014: 9. https://www.healthit.gov/sites/default/files/rtc_adoption_and_exchange9302014.pdf. Accessed March 26, 2018.
