Abstract

Objectives:

The Eighth Mount Hood Challenge (held in St. Gallen, Switzerland, in September 2016) evaluated the transparency of model input documentation from two published health economics studies and developed guidelines for improving transparency in the reporting of input data underlying model-based economic analyses in diabetes.

Methods:

Participating modeling groups were asked to reproduce the results of two published studies using the input data described in those articles. Gaps in input data were filled with assumptions reported by the modeling groups. Goodness of fit between the results reported in the target studies and the groups’ replicated outputs was evaluated using the slope of the linear regression line and the coefficient of determination (R2). After a general discussion of the results, a diabetes-specific checklist for the transparency of model input was developed.

Results:

Seven groups participated in the transparency challenge. The reporting of key model input parameters in the two studies, including the baseline characteristics of simulated patients, treatment effect and treatment intensification threshold assumptions, treatment effect evolution, prediction of complications, and cost data, was inadequately transparent (and often missing altogether). Not surprisingly, goodness of fit was better for the study that reported its input data with more transparency. To improve the transparency in diabetes modeling, the Diabetes Modeling Input Checklist listing the minimal input data required for reproducibility in most diabetes modeling applications was developed.

Conclusions:

Transparency of diabetes model inputs is important to the reproducibility and credibility of simulation results. In the Eighth Mount Hood Challenge, the Diabetes Modeling Input Checklist was developed with the goal of improving the transparency of input data reporting and reproducibility of diabetes simulation model results.

Keywords: computer modeling, diabetes, Mount Hood Challenge, transparency

Introduction

The use of economic simulation modeling tools to support decision making in the health care setting is widespread and necessary [1,2]. This is especially true for chronic and progressive diseases such as diabetes mellitus (DM), for which the time horizon of interest for decision making is lifetime and thus beyond the time and resource constraints of clinical trials. Health economic modeling provides a unique opportunity to capture the health and cost consequences of new interventions over the relevant time horizon as well as across all comparators of interest to decision makers.

To inform the allocation of resources, models informing such decisions must be clinically credible and valid for the populations and jurisdictions of interest. This can be achieved by reporting models in a transparent manner and testing their internal and external validity. This was emphasized in the International Society for Pharmacoeconomics and Outcomes Research and the Society for Medical Decision Making (ISPOR-SMDM) Modeling Good Research Practices [2], which advocated for “sufficient information to enable the full spectrum of readers to understand a model’s accuracy, limitations, and potential applications at a level appropriate to their expertise and needs” [3], and in the DM-specific American Diabetes Association (ADA) guidelines for computer modeling [4], which encouraged reporting “in sufficient detail to reproduce the model and its results” [4]. The Second Panel on Cost-Effectiveness in Health and Medicine [5] similarly advocated transparency, although in a more limited manner.

The main focus of these guidelines is on the transparency of model structure, rather than on the assumptions and data used in simulating an individual application (e.g., population characteristics at baseline and the assumed nature and duration of treatment effects). A model with a fully transparent (and internally and externally valid) structure is not sufficient to reproduce the results of any individual simulation. To achieve this, one must also know what assumptions and input data were included. In the spirit of the Turing test [6] of a machine’s ability to exhibit intelligent behavior, we have constructed a thought experiment in which two isolated users have access to the same computer simulation model. A simulation would be regarded as transparent if one of the users was able to produce a set of instructions for the simulation they undertook that was sufficiently detailed and comprehensive to allow the other user to implement them and produce identical results using the same model. The ISPOR Consolidated Health Economic Evaluation Reporting Standards checklist [7] outlines many of the items that should routinely be in an economic evaluation, and the Philips checklist is a best practice guideline in model reporting [8]. Both include a range of items concerning application-specific input data. They may, however, be overly general to satisfy the needs in complicated multifactorial disease areas such as DM, and so we have attempted to address this gap in the literature using the Mount Hood diabetes simulation modeling network.

Initiated in 2000 by Andrew Palmer and Jonathan Brown at Timberline Lodge, Mount Hood, OR [9–11], the Mount Hood Challenge is a biennial congress in which as many as 10 DM modeling groups have met to compare and contrast models, methods, and data in the context of simulating standardized treatment scenarios and discussing the results. In September 2016, DM modeling groups gathered in St. Gallen, Switzerland, for the Eighth Mount Hood Challenge, with the aim of standardizing the recording and documentation of simulation inputs and communication of outputs in DM simulation modeling and thereby promoting transparency.

Specifically, the aims of the 2016 Mount Hood Challenge were twofold:

to evaluate transparency of key model inputs using two published studies as examples; and

to develop a DM-specific checklist for transparency of input data that can be used alongside general health economic modeling guidelines to improve reproducibility of health economic analyses in DM.

The present article summarizes the findings from the first objective and how modelers built on these to develop a series of DM-specific transparency recommendations addressing the second aim. The resulting checklist can serve as a means of improving consistency and transparency in diabetes simulation models and provide a framework for developing similar standards in other disease areas.

Methods

The Eighth Mount Hood Challenge was advertised on the Mount Hood Challenge Web site (https://www.mthooddiabeteschallenge.com/) and all known published diabetes modeling groups were invited to participate. The meeting featured two exercises using instructions provided before the meeting: a transparency challenge on day 1 and a communicating outcomes challenge on day 2. Modeling groups were encouraged to submit results for both challenges. Over the course of 2 days, results were presented and discussed, and paths to improvement were debated. A representative from each of the modeling groups was invited to participate on the third day to choose a topic for a meeting proceedings article. The group chose to focus this article on the transparency challenge only. For details of methods and results of the communicating outcomes challenge, interested readers are referred to the Mount Hood Challenge Web site [12].

The Transparency Challenge

Model transparency, “the extent to which interested parties can review a model’s structure, equations, parameter values, and assumptions” [3], is often poor in published economic evaluations, particularly for complex diseases such as DM [4]. More than 10 years after the ADA guidelines promoted increased transparency, this is the first time diabetes modeling groups have attempted to answer the questions “How reproducible are published simulation modeling studies?” and “What is the best way to describe a simulation so that it can be reproduced?”

The modeling groups were assigned two preselected published economic modeling studies in DM [13,14] (see instructions in Appendix 1 in Supplemental Materials found at https://doi.org/10.1016/j.jval.2018.02.002). The first transparency challenge was to replicate the Baxter et al. [13] study, which used the IQVIA-Core Diabetes Model (IQVIA-CDM) to estimate the impact of modest and achievable improvements in glycemic control on cumulative incidences of microvascular and macrovascular complications and on costs in adults with type 1 (T1DM) or type 2 DM (T2DM) in the UK system [13]. This transparency challenge focused on simulating the T2DM results.

The second transparency challenge was to replicate the UK Prospective Diabetes Study 72 (UKPDS 72), which used the UKPDS Outcomes Model (UKPDS-OM) version 1 to evaluate the cost utility of intensive blood glucose (conventional vs. intensive blood glucose control with insulin or sulphonylureas, and conventional vs. intensive blood glucose control with metformin in overweight patients) and blood pressure control (less tight blood pressure control vs. tight blood pressure control with angiotensin-converting enzyme inhibitors or β-blockers in hypertensive patients) in T2DM [14]. This transparency challenge focused on the comparison of intensive versus conventional blood glucose control in the main randomization.

Modeling groups were asked to use data provided in the study publications including supplementary appendices [13] as inputs into their models and replicate the study analyses. When critical data could not be found in the study publication, they were asked to record assumptions required to fill those data gaps. Simulation results were not blinded. Each group submitted results in advance of the congress.

The data gaps reported by each group were summarized in a tabular format and compared and contrasted during meeting proceedings. Detailed results for costs and cumulative incidences were presented in tables by each model group for each challenge. Agreement between the replicated and original study results was evaluated using scatterplots.
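The agreement measures reported for these scatterplots (regression slope and R2) can be illustrated with a minimal sketch. It assumes an intercept-suppressed least-squares fit of replicated on original values, and it assumes R2 is computed against the mean of the replicated values; the article does not state which R2 convention was used, so this choice and the function name `fit_through_origin` are illustrative only.

```python
from typing import Sequence, Tuple


def fit_through_origin(original: Sequence[float],
                       replicated: Sequence[float]) -> Tuple[float, float]:
    """Return (slope, r_squared) for replicated ~ slope * original (no intercept)."""
    if len(original) != len(replicated) or len(original) < 2:
        raise ValueError("need two equally long series with at least 2 points")
    sxx = sum(x * x for x in original)
    if sxx == 0:
        raise ValueError("original values are all zero")
    sxy = sum(x * y for x, y in zip(original, replicated))
    slope = sxy / sxx  # least-squares slope with the intercept fixed at 0

    # Assumption: R^2 uses the centered total sum of squares of the replicated values.
    ss_res = sum((y - slope * x) ** 2 for x, y in zip(original, replicated))
    mean_y = sum(replicated) / len(replicated)
    ss_tot = sum((y - mean_y) ** 2 for y in replicated)
    r_squared = 1.0 - ss_res / ss_tot if ss_tot > 0 else float("nan")
    return slope, r_squared


if __name__ == "__main__":
    # Cost reductions (GBP) at 5 to 25 y for the lowest HbA1c subgroup in Table 1.
    baxter = [83, 317, 682, 1078, 1280]
    cardiff = [16, 73, 179, 307, 422]
    print(fit_through_origin(baxter, cardiff))
```

A slope below 1 indicates that the replicated values are, on average, smaller than the original ones; a slope of 1 with R2 = 1 corresponds to the line of perfect agreement.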

Results

Short biographies of the 10 modeling groups that participated in the Eighth Mount Hood Challenge can be found in Appendix 2 in Supplemental Materials found at https://doi.org/10.1016/j.jval.2018.02.002. This article reports results of the modeling groups that submitted simulation results for the transparency challenges and who agreed to publication in this journal. It should be noted that other modeling groups were involved in the Eighth Mount Hood Challenge and results for the communicating outcomes challenges can be found on the Mount Hood Challenge Web site [12]. Groups were asked to document all their assumptions made in the transparency challenges. These were detailed in the meeting program, also available from the Mount Hood Challenge Web site [15].

Transparency Challenge 1: Reproducing Baxter et al. [13]

Five modeling groups participated in the Baxter challenge: the Cardiff model, the Economics and Health Outcomes Model of T2DM (ECHO-T2DM), the Medical Decision Modeling Inc.—Treatment Transitions Model (MDM-TTM), the Michigan Model for Diabetes (MMD) model, and the IQVIA-CDM. All groups presented simulation results with the exception of the MMD group, which submitted only a document summarizing identified input data gaps. The necessary input data that were not found in the study publications as reported by modeling groups are summarized in Appendix 3 in Supplemental Materials found at https://doi.org/10.1016/j.jval.2018.02.002, along with assumptions made and alternative data sources used to fill these gaps. All modeling groups documented a lack of transparency in reporting model inputs in the Baxter study, including important deficiencies in the reporting of baseline patient characteristics, treatment effects, and glycated hemoglobin (HbA1c) evolution, thereby forcing modeling groups to make a host of assumptions to fill these gaps. These assumptions differed between groups and contributed to the diverse results. For example, for effect evolution, the Cardiff modeling group assumed that HbA1c was maintained at 7.5% when patients reached this level and the comparator group followed a natural HbA1c progression. The ECHO-T2DM group assumed no evolution in HbA1c or other biomarkers, the IQVIA-CDM modeling group used data from UKPDS 68 for HbA1c evolution [16], and the MDM-TTM modeling group used its default treatment regimen.

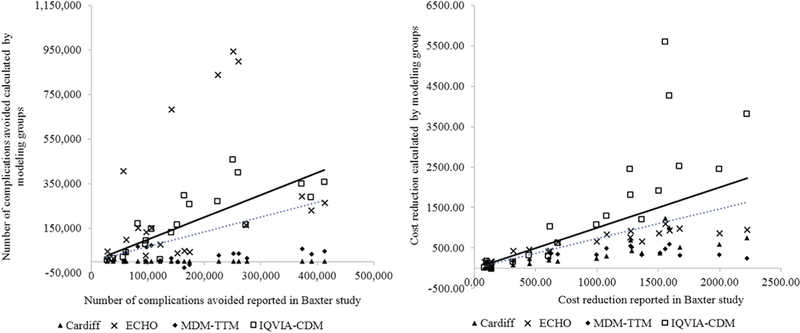

Results of the replication analyses from each modeling group and the original study results are presented in Table 1 for the cost reductions and in Table 2 for the number of complications avoided, over time and by baseline HbA1c subgroup. None of the model results consistently matched the Baxter et al. study results, including the replication using the same IQVIA-CDM underlying the Baxter et al. study results (but without access to unpublished parameters). The scatterplots, shown in Figure 1, confirm discordance between replicated and original results. The IQVIA-CDM modeling group generally overestimated the cost reductions, and other modeling groups generally underestimated the cost reductions, yielding a best-fitting regression line (intercept suppressed) that indicates general underprediction (slope = 0.71), with an R2 of 0.52. The number of complications avoided was similarly underpredicted (slope = 0.66), with an R2 of 0.35.

Table 1 – Average cost reductions per individual in the UK T2DM population estimated from the Baxter et al. [13] study and by participating modeling groups.

The four columns to the right of the Baxter study column are the participating modeling groups.

| Baseline HbA1c and time horizon | Baxter study | Cardiff model | ECHO-T2DM | MDM-TTM | IQVIA-CDM |
|---|---|---|---|---|---|
| < 59 mmol/mol (7.5%) | | | | | |
| 5 y | £83 | £16 | £154 | £7 | £13 |
| 10 y | £317 | £73 | £418 | £174 | £151 |
| 15 y | £682 | £179 | £644 | £353 | £605 |
| 20 y | £1078 | £307 | £838 | £484 | £1283 |
| 25 y | £1280 | £422 | £911 | £521 | £1799 |
| > 59 mmol/mol (7.5%) to 64 mmol/mol (8.0%) | | | | | |
| 5 y | £132 | £26 | £170 | £60 | £9 |
| 10 y | £449 | £104 | £457 | £208 | £317 |
| 15 y | £995 | £235 | £658 | £337 | £1069 |
| 20 y | £1510 | £385 | £860 | £379 | £1906 |
| 25 y | £1678 | £518 | £976 | £324 | £2503 |
| > 64 mmol/mol (8.0%) to 75 mmol/mol (9.0%) | | | | | |
| 5 y | £138 | £68 | £157 | £83 | −£16 |
| 10 y | £607 | £201 | £412 | £218 | £294 |
| 15 y | £1366 | £384 | £651 | £329 | £1198 |
| 20 y | £1999 | £580 | £869 | £331 | £2440 |
| 25 y | £2223 | £748 | £942 | £236 | £3810 |
| > 75 mmol/mol (9.0%) | | | | | |
| 5 y | £105 | £160 | £150 | £146 | £169 |
| 10 y | £622 | £402 | £427 | £372 | £1019 |
| 15 y | £1274 | £697 | £750 | £561 | £2442 |
| 20 y | £1591 | £993 | £923 | £584 | £4255 |
| 25 y | £1559 | £1231 | £1088 | £476 | £5590 |

ECHO-T2DM, Economics and Health Outcomes Model of T2DM; MDM-TTM, Medical Decision Modeling Inc.—Treatment Transitions Model; IQVIA-CDM, IQVIA-CORE Diabetes Model; T2DM, type 2 diabetes mellitus.

Table 2 – Total complications avoided in the UK T2DM population estimated from the Baxter et al. [13] study and by participating modeling groups.

The four columns to the right of the Baxter study column are the participating modeling groups.

| Complications and time horizon | Baxter study | Cardiff model | ECHO-T2DM | MDM-TTM | IQVIA-CDM |
|---|---|---|---|---|---|
| Eye disease | | | | | |
| 5 y | 56,777 | 12,046 | 403,839 | 5,045 | 19,530 |
| 10 y | 141,792 | 19,764 | 684,490 | 15,825 | 129,321 |
| 15 y | 224,992 | 26,477 | 837,948 | 27,536 | 269,037 |
| 20 y | 261,069 | 31,865 | 898,574 | 34,382 | 399,513 |
| 25 y | 250,768 | 34,701 | 942,337 | 36,514 | 456,766 |
| Renal disease | | | | | |
| 5 y | 38,151 | 25 | 14,712 | 1,652 | 13,489 |
| 10 y | 95,975 | 40 | 26,794 | 3,724 | 77,000 |
| 15 y | 152,114 | 47 | 35,785 | −1,982 | 164,851 |
| 20 y | 174,601 | 52 | 41,835 | −14,083 | 255,038 |
| 25 y | 164,187 | 59 | 47,992 | −27,836 | 294,751 |
| Foot ulcers, amputations, and neuropathy | | | | | |
| 5 y | 122,013 | 15,847 | 78,367 | 3,934 | 9,343 |
| 10 y | 275,011 | 27,678 | 163,711 | 15,975 | 162,908 |
| 15 y | 389,723 | 35,548 | 229,138 | 33,422 | 286,712 |
| 20 y | 412,535 | 43,458 | 265,308 | 47,265 | 354,630 |
| 25 y | 373,629 | 49,324 | 292,704 | 54,802 | 347,470 |
| Cardiovascular disease | | | | | |
| 5 y | 27,991 | 23,124 | 46,472 | 13,303 | 5,569 |
| 10 y | 61,893 | 50,804 | 97,207 | 40,358 | 40,431 |
| 15 y | 97,890 | 115,335 | 132,514 | 64,231 | 91,852 |
| 20 y | 106,416 | 92,475 | 147,792 | 73,479 | 146,743 |
| 25 y | 82,387 | 112,709 | 149,673 | 68,224 | 168,866 |

ECHO-T2DM, Economics and Health Outcomes Model of T2DM; MDM-TTM, Medical Decision Modeling Inc.—Treatment Transitions Model; IQVIA-CDM, IQVIA-CORE Diabetes Model; T2DM, type 2 diabetes mellitus.

Fig. 1 – Comparisons of cumulative complications avoided and cost reductions vs. the Baxter et al. [13] study. Each scatterplot denotes a comparison of results from the modeling groups and those from the Baxter study. The dotted line is the fitted regression line of all comparisons, and the solid line denotes hypothetical perfect agreement between values generated from the modeling groups and those from the original study, that is, R2 = 1 and line intersecting the origin (0). Overall, there is a reasonably good agreement between the results from the modeling groups and those from the Baxter study. The slopes of the regression line are 0.66 and 0.71 and the R2 are 0.35 and 0.52 for the comparisons of complications avoided and cost reductions, respectively. ECHO, Economics and Health Outcomes Model of T2DM; MDM-TTM, Medical Decision Modeling Inc.—Treatment Transitions Model; IQVIA-CDM, IQVIA-Core Diabetes Model; T2DM, type 2 diabetes mellitus.

Transparency Challenge 2: Reproducing UKPDS 72 [14]

Seven modeling groups participated in the UKPDS 72 challenge and consented to publication: the Cardiff model, the ECHO-T2DM model, the MDM-TTM, the Modelling Integrated Care for Diabetes based on Observational data model, the MMD model, the IQVIA-CDM, and the UKPDS-OM (versions 1 and 2). All groups presented their challenge results except for the MMD group. Of note, both UKPDS-OM versions 1 and 2 participated in this exercise, providing an interesting contrast. UKPDS-OM version 1 was used in the UKPDS 72 study, and so provided a clean test of how reproducible the results are from the inputs provided in the publication, whereas the results from UKPDS-OM version 2 provided a further examination of how much the newer equations differ in estimates of health and risk in a controlled environment. The necessary input data that were not found in the study publications as reported by modeling groups, along with their assumptions and alternative data sources used to fill these gaps, are documented in Appendix 4 in Supplemental Materials found at https://doi.org/10.1016/j.jval.2018.02.002. All modeling groups identified gaps in the reporting of UKPDS 72, including absence of information on baseline patient characteristics, initial treatment effects, HbA1c evolution, and treatment use over time. Modeling groups generally sourced missing information from other UKPDS publications, however, with presumably little bias. For example, all groups sourced baseline characteristics from UKPDS 33 [17]. Different assumptions regarding treatment effects, risk factor progression, and unit costs were used.

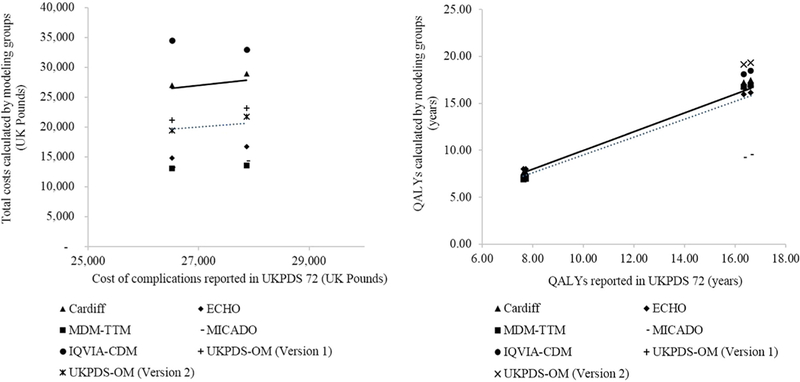

Results of the replication analyses for each modeling group and the original study results are presented in Table 3. The Cardiff model most closely estimated costs; the IQVIA-CDM overestimated costs and the other models underestimated costs. In general, there was a good agreement of quality-adjusted life-years (QALYs) between the replicated and the original study results, although lifetime QALYs estimated by the Modelling Integrated Care for Diabetes based on Observational data model were considerably lower than those reported in UKPDS 72. The scatterplots (Fig. 2) confirm good agreement in results on QALYs, but not on costs, although the R2 values were high in both cases (QALYs 0.97, costs 0.89).

Table 3 – Undiscounted within-trial total costs and total QALYs as well as differences for conventional vs. intensive blood glucose control estimated from the UKPDS 72 [14] and other modeling groups.

The seven columns to the right of the UKPDS 72 column are the participating modeling groups.

| Simulation outcomes | UKPDS 72 | Cardiff model | ECHO-T2DM | MDM-TTM | MICADO | IQVIA-CDM | UKPDS-OM version 1 | UKPDS-OM version 2 |
|---|---|---|---|---|---|---|---|---|
| Total costs* | | | | | | | | |
| Conventional | 26,516 | 26,996 | 14,806 | 13,094 | 13,288 | 34,523 | 21,154 | 19,428 |
| Intensive | 27,865 | 28,936 | 16,733 | 13,529 | 14,366 | 32,986 | 23,121 | 21,689 |
| Differences in total cost | 1,349 | 1,940 | 1,927 | 435 | 1,078 | −1,537 | 1,967 | 2,261 |
| Within-trial QALYs | | | | | | | | |
| Conventional | 7.62 | 7.64 | 7.96 | 6.94 | NR | 7.27 | NR | NR |
| Intensive | 7.72 | 7.67 | 7.99 | 6.96 | NR | 7.32 | NR | NR |
| Differences in within-trial QALYs | 0.10 | 0.03 | 0.03 | 0.02 | NR | 0.05 | NR | NR |
| Total QALYs | | | | | | | | |
| Conventional | 16.35 | 17.23 | 15.94 | 16.75 | 9.22 | 18.13 | 16.59 | 19.14 |
| Intensive | 16.62 | 17.47 | 16.11 | 16.94 | 9.50 | 18.45 | 16.79 | 19.29 |
| Differences in total QALYs | 0.27 | 0.24 | 0.17 | 0.19 | 0.28 | 0.32 | 0.20 | 0.15 |

ECHO-T2DM, Economics and Health Outcomes Model of T2DM; MDM-TTM, Medical Decision Modeling Inc.—Treatment Transitions Model; MICADO, Modelling Integrated Care for Diabetes based on Observational data; NR, not reported; QALY, quality-adjusted life-year; IQVIA-CDM, IQVIA-CORE Diabetes Model; T2DM, type 2 diabetes mellitus; UKPDS, UK Prospective Diabetes Study; UKPDS-OM, UKPDS Outcomes Model.

*Costs are presented in 2004 British pounds.

Fig. 2 – Comparisons of total costs and QALYs vs. the UKPDS 72 study [14]. Each scatterplot denotes a comparison of results from the modeling groups and those from the UKPDS 72 study. The dotted line is the fitted linear regression line, and the solid line denotes hypothetical perfect agreement between values generated from the modeling groups and those from the original study, that is, R2 = 1 and line intersecting the origin (0). Overall, there is a good agreement between the results from the modeling groups and those from the UKPDS 72 study. The slopes of the regression line are 0.75 and 0.98 and the R2 are 0.89 and 0.97 for the comparisons of total costs and QALYs, respectively. ECHO, Economics and Health Outcomes Model of T2DM; MDM-TTM, Medical Decision Modeling Inc.—Treatment Transitions Model; QALY, quality-adjusted life-year; IQVIA-CDM, IQVIA-Core Diabetes Model; T2DM, type 2 diabetes mellitus; UKPDS, UK Prospective Diabetes Study; UKPDS-OM, UKPDS Outcomes Model.

The Diabetes Modeling Input Checklist

The representatives of the modeling groups met on the morning of September 19, 2016, to translate the findings of the transparency challenge into recommendations for improved simulation input data reporting. The Diabetes Modeling Input Checklist was developed with the intention that it should be used for future publications of long-term modeling economic evaluations in diabetes and possibly to be adopted by journals when reviewing submissions. The representatives agreed on the following:

There are glaring omissions in the documentation of inputs in published studies (not just the two examples considered in the challenges) that limit reproducibility. The possible reasons are multiple, including publication word limit, lack of thoroughness, and intentional lack of transparency.

Existing checklists [3,7,18] are general and inadequate for informing fully transparent reporting for complex DM simulation modeling with its extreme input data burden.

There is a need for a DM-specific checklist.

Ideally, a DM-specific checklist should be simple (specifying a minimum required amount of information) and complement (not supersede) existing guidelines.

A general consensus should be formed on the specific input items for DM models that should be included in the checklist (note that this did not include a discussion about what numerical values for input may or may not be best).

The Diabetes Modeling Input Checklist is presented in Table 4 and the main summary is given herein.

Table 4 – Checklist of reporting model input in diabetes health economics studies.

| Model input | Checkbox | Comments (e.g., justification if not reported) |
|---|---|---|
| Simulation cohort | | |
| Baseline age | | |
| Ethnicity/race | | |
| BMI/weight | | |
| Duration of diabetes | | |
| Baseline HbA1c, lipids, and blood pressure | | |
| Smoking status | | |
| Comorbidities | | |
| Physical activity | | |
| Baseline treatment | | |
| Treatment intervention | | |
| Type of treatment | | |
| Treatment algorithm for HbA1c evolution over time | | |
| Treatment algorithm for other conditions (e.g., hypertension, dyslipidemia, and excess weight) | | |
| Treatment initial effects on baseline biomarkers | | |
| Rules for treatment intensification (e.g., the cutoff HbA1c level to switch the treatment, the type of new treatment, and whether the rescue treatment is an addition or substitution to the standard treatment) | | |
| Long-term effects, adverse effects, treatment adherence and persistence, and residual effects after the discontinuation of the treatment | | |
| Trajectory of biomarkers, BMI, smoking, and any other factors that are affected by treatment | | |
| Cost | | |
| Differentiated by acute event in first year and subsequent years | | |
| Cost of intervention and other costs (e.g., managing complications, adverse events, and diagnostics) | | |
| Please report unit prices and resource use separately and give information on discount rates applied | | |
| Health state utilities | | |
| Operational mechanics of the assignment of utility values (i.e., utility- or disutility-oriented) | | |
| Management of multihealth conditions | | |
| General model characteristics | | |
| Choice of mortality table and any specific event-related mortality | | |
| Choice and source of risk equations | | |
| If microsimulation: number of Monte-Carlo simulations conducted and justification | | |
| Components of model uncertainty being simulated (e.g., risk equations, risk factor trajectories, costs, and treatment effect); number of simulations and justification | | |

BMI, body mass index; HbA1c, glycated hemoglobin.
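For authors or reviewers who wish to track completion of the checklist programmatically, the items in Table 4 could be held in a simple data structure. The sketch below is illustrative only: the item labels are shortened from Table 4, and the function name `missing_items` is hypothetical rather than part of any published tool.

```python
from typing import Dict, List, Set

# Items abbreviated from Table 4, grouped by checklist section.
CHECKLIST: Dict[str, List[str]] = {
    "Simulation cohort": [
        "Baseline age", "Ethnicity/race", "BMI/weight", "Duration of diabetes",
        "Baseline HbA1c, lipids, and blood pressure", "Smoking status",
        "Comorbidities", "Physical activity", "Baseline treatment",
    ],
    "Treatment intervention": [
        "Type of treatment",
        "Treatment algorithm for HbA1c evolution over time",
        "Treatment algorithm for other conditions",
        "Treatment initial effects on baseline biomarkers",
        "Rules for treatment intensification",
        "Long-term effects, adverse effects, adherence, and residual effects",
        "Trajectory of biomarkers and other treatment-affected factors",
    ],
    "Cost": [
        "Differentiated by acute event in first year and subsequent years",
        "Cost of intervention and other costs",
        "Unit prices, resource use, and discount rates",
    ],
    "Health state utilities": [
        "Operational mechanics of utility assignment",
        "Management of multihealth conditions",
    ],
    "General model characteristics": [
        "Choice of mortality table and event-related mortality",
        "Choice and source of risk equations",
        "Number of Monte-Carlo simulations and justification",
        "Components of model uncertainty simulated",
    ],
}


def missing_items(reported: Set[str]) -> Dict[str, List[str]]:
    """Return, per section, the checklist items not found in `reported`."""
    gaps: Dict[str, List[str]] = {}
    for section, items in CHECKLIST.items():
        absent = [item for item in items if item not in reported]
        if absent:
            gaps[section] = absent
    return gaps


if __name__ == "__main__":
    # Hypothetical study that reported only two cohort characteristics.
    print(missing_items({"Baseline age", "Duration of diabetes"}))
```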

Simulation Cohort

Baseline patient characteristics of the simulated cohort should be clearly stated, including age, sex, ethnicity/race, body mass index (BMI)/weight, duration of diabetes, baseline HbA1c, lipids and blood pressure levels, smoking status, comorbidities, physical activity, and baseline treatments (aspirin, statins, angiotensin-converting enzyme inhibitors/angiotensin II receptor blockers, and/or glucose-lowering treatments). Baseline characteristics should be presented in a tabular format as mean with SD or as proportion, as appropriate whenever possible. In addition, the type of distribution of the baseline characteristics should be reported in the table.

Treatment Interventions

First, the chosen treatment(s) and treatment algorithm for blood glucose control or treating hypertension, dyslipidemia, excess weight, or any other relevant condition in the comparator and intervention should all be specified. Second, it is helpful to specify the initial impact of treatment(s) on baseline biomarkers. Third, it is important to state the rules for treatment intensification, for example, the threshold HbA1c (or blood pressure, lipid, BMI, or estimated glomerular filtration rate [eGFR]) level that triggers a change in treatment and the new treatment regimen. Moreover, it should be specified whether the change in treatment is an addition or substitution to the previous treatment. Fourth, modelers should also specify in detail the set of long-term effects, adverse effects, treatment adherence and persistence, and any assumptions on legacy effects (i.e., residual treatment effects after the discontinuation of a treatment) that are considered in the model. In addition, it is helpful to describe the direct and indirect linkages between treatment effects and primary outcomes including health outcomes, costs, and effectiveness (e.g., HbA1c affects stroke, myocardial infarction, retinopathy, and nephropathy risks directly, and indirectly affects mortality through its impact on events specific to cardiovascular disease and associated mortality). Finally, it is important to include the trajectory of biomarkers (e.g., HbA1c, lipids, blood pressure, BMI, eGFR, and smoking) and any other factors that are affected by interventions and have an impact on modeled patient health outcomes.
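To illustrate why these inputs matter for reproducibility, the following minimal sketch traces an HbA1c path under an explicitly stated initial effect, annual drift, intensification threshold, and add-on versus substitution rule. It is a deliberately simplified toy, not the logic of any participating model; the class `TreatmentRule`, its fields, and all numerical values are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TreatmentRule:
    initial_effect: float    # HbA1c change (percentage points) when treatment starts
    annual_drift: float      # HbA1c change per year while on treatment
    threshold: float         # HbA1c level that triggers intensification
    rescue_effect: float     # HbA1c change when rescue treatment starts
    rescue_is_add_on: bool   # True: added to current treatment; False: substitution


def hba1c_trajectory(baseline: float, rule: TreatmentRule, years: int) -> List[float]:
    """Return HbA1c at year 0..years with at most one intensification step."""
    level = baseline + rule.initial_effect
    path = [level]
    intensified = False
    for _ in range(years):
        level += rule.annual_drift
        if not intensified and level >= rule.threshold:
            if not rule.rescue_is_add_on:
                # Substitution: the first treatment's effect is withdrawn.
                level -= rule.initial_effect
            level += rule.rescue_effect
            intensified = True
        path.append(level)
    return path


if __name__ == "__main__":
    add_on = TreatmentRule(-1.0, 0.15, 7.5, -1.0, True)
    switch = TreatmentRule(-1.0, 0.15, 7.5, -1.0, False)
    print(hba1c_trajectory(8.0, add_on, 5))
    print(hba1c_trajectory(8.0, switch, 5))
```

With otherwise identical inputs, the add-on and substitution variants diverge from the year of intensification onward, which is the kind of difference that unreported assumptions can introduce between replications.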

Costs

All state-specific and treatment-specific costs should be detailed in a separate section and may be differentiated by acute event costs in the first year and ongoing costs in subsequent years. The costs should include the costs of the interventions themselves as applied in the model and other costs such as adverse events, complication management, and diagnostics if applied. Complication costs should consider the timing of the event. For example, macrovascular complications often have a high cost at the time of the event and lower follow-up management costs thereafter. If the evaluation is from the societal perspective, it should specify assumptions related to indirect costs such as foregone productivity and any other costs (e.g., transportation). Moreover, the type of productivity losses (i.e., absenteeism, presenteeism, or early retirement) and the methods used to evaluate productivity losses should be stated in the costs methods section.
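The distinction between first-year and subsequent-year complication costs, together with the discount rate, can be sketched as follows. The function name, the year-indexing convention (year 0 undiscounted), and the example values are assumptions for illustration; individual models may discount at different points within the year.

```python
def discounted_event_cost(first_year_cost: float,
                          subsequent_year_cost: float,
                          event_year: int,
                          horizon_years: int,
                          discount_rate: float) -> float:
    """Sum the discounted costs of one complication from event_year to the horizon.

    Years are indexed from 0. The acute cost applies in event_year and the
    ongoing cost in every later year before horizon_years.
    """
    if not 0 <= event_year < horizon_years:
        return 0.0  # event falls outside the simulated horizon
    total = 0.0
    for year in range(event_year, horizon_years):
        cost = first_year_cost if year == event_year else subsequent_year_cost
        total += cost / (1.0 + discount_rate) ** year
    return total


if __name__ == "__main__":
    # Hypothetical costs: 5000 in the event year, 500 per year afterwards.
    print(discounted_event_cost(5000.0, 500.0, event_year=3,
                                horizon_years=10, discount_rate=0.035))
```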

Health State Utilities

Methodological approaches to estimating utility in the presence of multiple comorbidities include the “minimum,” “multiplicative,” and other approaches. For example, the minimum approach uses the value of the condition with the lowest individual utility score, whereas the multiplicative approach uses the arithmetic product of utility scores as a proportion of full health. It should be clearly stated which method is used to adjust the health state utilities of multiple comorbidities.
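The two named approaches can be written out in a few lines. This is a minimal sketch that assumes each utility is expressed on a scale where full health equals 1; the function names and example values are hypothetical.

```python
from math import prod
from typing import Iterable


def combine_minimum(utilities: Iterable[float]) -> float:
    """Minimum approach: take the lowest utility among the coexisting conditions."""
    values = list(utilities)
    return min(values) if values else 1.0  # no conditions: full health


def combine_multiplicative(utilities: Iterable[float]) -> float:
    """Multiplicative approach: product of utilities relative to full health (1.0)."""
    return prod(utilities)  # the product of an empty sequence is 1.0


if __name__ == "__main__":
    # Hypothetical utilities for two coexisting complications.
    conditions = [0.80, 0.70]
    print(combine_minimum(conditions))
    print(combine_multiplicative(conditions))
```

For the same pair of conditions, the multiplicative approach yields a lower combined utility than the minimum approach, so results cannot be reproduced unless the chosen method is reported.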

General Model Characteristics

There are other factors that may have a substantive impact on model transparency. First, the choice of the country-specific life table for all-cause mortality should be clearly stated in the Methods section, and when a specific event-related mortality is incorporated, it must be stated. Second, it is important to document the source and details of risk equations used in the model. Finally, if using a microsimulation model, authors should report the number of Monte-Carlo simulations performed per individual simulated and justify that choice. When performing probabilistic sensitivity analysis, it is important to document and justify which components of model uncertainty are being propagated to the model outputs (e.g., risk equations, risk factor trajectories, and treatment effect), the methods and assumptions used to propagate the uncertainty, and the number of Monte-Carlo simulations used to reflect parameter uncertainty.
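The two simulation counts mentioned above (runs that average out stochastic variation and draws that reflect parameter uncertainty) can be made explicit with a nested-loop sketch. The event model here is a toy, and the Beta distribution, its parameters, and the function names are hypothetical choices made only to show which quantities would need to be reported.

```python
import random
from statistics import mean
from typing import List


def simulate_patient(event_risk: float, years: int, rng: random.Random) -> int:
    """Toy microsimulation: count the years in which an event occurs."""
    return sum(1 for _ in range(years) if rng.random() < event_risk)


def run_psa(n_parameter_draws: int, n_inner_runs: int,
            years: int, seed: int) -> List[float]:
    """Return one mean outcome per parameter draw."""
    rng = random.Random(seed)  # a reported seed makes the run repeatable
    results: List[float] = []
    for _ in range(n_parameter_draws):
        # Outer loop: parameter uncertainty (hypothetical Beta with mean 0.10).
        risk = rng.betavariate(20, 180)
        # Inner loop: stochastic variation between simulated individuals.
        events = [simulate_patient(risk, years, rng) for _ in range(n_inner_runs)]
        results.append(mean(events))
    return results


if __name__ == "__main__":
    outcomes = run_psa(n_parameter_draws=100, n_inner_runs=500, years=10, seed=2016)
    print(min(outcomes), max(outcomes))
```

Reporting the number of outer draws, the number of inner runs, the sampled parameters and their distributions, and the random seed allows another user of the same model to repeat the analysis.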

Discussion

ISPOR-SMDM emphasized the importance of transparency for engendering confidence and credibility for health economic decision modeling [3], and the ADA advocates a similar approach for diabetes modeling specifically [4]. The main theme of the Eighth Mount Hood Challenge was transparency and reproducibility of computer simulation models in diabetes. The transparency challenge illustrated substantial difficulties in reproducing study results using the published input data. The modeling groups responded to data gaps with widely varying assumptions, yielding large differences in some outcomes. Differences in the degree of reproducibility of the Baxter et al. [13] and the UKPDS 72 [14] studies were consistent with the comprehensiveness of inputs provided. The results indicate that substantial shortcomings remain, providing impetus for the modeling groups to jointly develop a DM-specific input reporting checklist.

A large number of inputs are required for simulation modeling in DM. Full transparency requires considerable resources from both modelers and the consumers of modeling results, which creates a “cost of transparency.” The Diabetes Modeling Input Checklist represents a pragmatic approach, focusing on parameters and assumptions that are influential in typical applications. It is important to note that models may differ substantially in structure and input variables. Although some inputs may be specific to a particular model, such as the renal risk factor eGFR, it is important that modelers transparently document the assumptions made about the level and time path of that risk factor. Additional items important to a specific application should also be reported, even if they are not explicitly mentioned in the checklist. Optimizing transparency of simulation inputs will assist in understanding the assumptions used in projections and will facilitate a better understanding of why model results may not be realized if future conditions change in an unforeseen way. In addition, as use of this checklist increases in future health economic modeling studies, we plan to test whether it increases transparency of diabetes model inputs at an upcoming Mount Hood Challenge.

This checklist is not free-standing, but should be used to complement more general guidelines, such as the ISPOR-SMDM guidelines for model transparency [3], the Philips guidelines [18], the ISPOR Consolidated Health Economic Evaluation Reporting Standards guidelines for modeling study reporting [7], and the ADA guidelines for modeling of diabetes and its complications [4]. The Mount Hood Challenge Modeling Group recommends routine use of the Diabetes Modeling Input Checklist. Modelers should document simulation inputs in line with the checklist and submit these as supplementary materials with publications. Journal editors and reviewers should permit (even require) the inclusion of the checklist with any DM-related modeling publication.

Conclusions

In previous Mount Hood Challenges, modeling groups worked together to compare outcomes for hypothetical diabetes cohorts and interventions [9] and to compare outcomes simulated from health economic models to those from real-world data [10,11]. In the latest Mount Hood Challenge, the potential shortcomings of poor simulation input transparency were clearly demonstrated, leading to these consensus recommendations intended to promote transparency. Increased transparency can improve both the credibility and the clarity of model-based economic analyses. Although the checklist we propose is specific to DM, we hope it will inspire modelers in similarly complex fields to promote transparency of model inputs to improve the reliability of model outputs.

Acknowledgments

Source of financial support: No funding was received for this article.

Footnotes

Supplemental Materials

Supplemental data associated with this article can be found in the online version at https://doi.org/10.1016/j.jval.2018.02.002.

REFERENCES

- [1] Buxton MJ, Drummond MF, Van Hout BA, et al. Modelling in economic evaluation: an unavoidable fact of life. Health Econ 1997;6:217–27.

- [2] Caro JJ, Briggs AH, Siebert U, Kuntz KM. Modeling good research practices—overview: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force-1. Med Decis Making 2012;32:667–77.

- [3] Eddy DM, Hollingworth W, Caro JJ, et al. Model transparency and validation: a report of the ISPOR-SMDM Modeling Good Research Practices Task Force-7. Med Decis Making 2012;32:733–43.

- [4] American Diabetes Association Consensus Panel. Guidelines for computer modeling of diabetes and its complications. Diabetes Care 2004;27:2262–5.

- [5] Sanders GD, Neumann PJ, Basu A, et al. Recommendations for conduct, methodological practices, and reporting of cost-effectiveness analyses: Second Panel on Cost-Effectiveness in Health and Medicine. JAMA 2016;316:1093–103.

- [6] Pinar Saygin A, Cicekli I, Akman V. Turing test: 50 years later. Minds Mach 2000;10:463–518.

- [7] Husereau D, Drummond M, Petrou S, et al. Consolidated Health Economic Evaluation Reporting Standards (CHEERS)—explanation and elaboration: a report of the ISPOR Health Economic Evaluation Publication Guidelines Good Reporting Practices Task Force. Value Health 2013;16:231–50.

- [8] Philips Z, Ginnelly L, Sculpher M, et al. Review of guidelines for good practice in decision-analytic modelling in health technology assessment. Health Technol Assess 2004;8:1–158, iii-iv, ix-xi.

- [9] Brown JB, Palmer AJ, Bisgaard P, et al. The Mt. Hood challenge: cross-testing two diabetes simulation models. Diabetes Res Clin Pract 2000;50 (Suppl. 3):S57–64.

- [10] Mount Hood 4 Modeling Group. Computer modeling of diabetes and its complications: a report on the Fourth Mount Hood Challenge Meeting. Diabetes Care 2007;30:1638–46.

- [11] Palmer AJ. Computer modeling of diabetes and its complications: a report on the Fifth Mount Hood Challenge Meeting. Value Health 2013;16:670–85.

- [12] The Mount Hood Challenge Modelling Group. Economics, simulation modelling and diabetes: Mt Hood Diabetes Challenge Network. Available from: https://www.mthooddiabeteschallenge.com/. [Accessed January 31, 2018].

- [13] Baxter M, Hudson R, Mahon J, et al. Estimating the impact of better management of glycaemic control in adults with type 1 and type 2 diabetes on the number of clinical complications and the associated financial benefit. Diabet Med 2016;33:1575–81.

- [14] Clarke PM, Gray AM, Briggs A, et al. Cost-utility analyses of intensive blood glucose and tight blood pressure control in type 2 diabetes (UKPDS 72). Diabetologia 2005;48:868–77.

- [15] The Mount Hood Challenge Modelling Group. Economics modelling and diabetes: the Mount Hood 2016 Challenge. 2016. Available from: https://docs.wixstatic.com/ugd/4e5824_0964b3878cab490da965052ac6965145.pdf. [Accessed January 31, 2018].

- [16] Clarke PM, Gray AM, Briggs A, et al. A model to estimate the lifetime health outcomes of patients with type 2 diabetes: the United Kingdom Prospective Diabetes Study (UKPDS) Outcomes Model (UKPDS no. 68). Diabetologia 2004;47:1747–59.

- [17] UK Prospective Diabetes Study (UKPDS) Group. Intensive blood-glucose control with sulphonylureas or insulin compared with conventional treatment and risk of complications in patients with type 2 diabetes (UKPDS 33). Lancet 1998;352:837–53.

- [18] Philips Z, Bojke L, Sculpher M, et al. Good practice guidelines for decision-analytic modelling in health technology assessment. Pharmacoeconomics 2006;24:355–71.
