Significance

Biomolecules, such as proteins or nucleic acids, can adopt a number of molecular configurations that grows exponentially with their size. Since conformational change drives biological function, characterizing structural configurations is critical. However, exhaustively sampling an exponentially large number of states, and the transitions between them, rapidly becomes infeasible by explicit simulation. Here, we make significant strides toward characterizing the conformations of such systems. To achieve this, we break molecular configurations into subsystems and model how the subsystems mutually affect each other’s dynamics. We show that this approach can accurately capture the molecular dynamics and systematically outperforms existing methodology by predicting unobserved molecular configurations.

Keywords: graphical models, molecular kinetics, large molecular systems

Abstract

Most current molecular dynamics simulation and analysis methods rely on the idea that the molecular system can be represented by a single global state (e.g., a Markov state in a Markov state model [MSM]). In this approach, molecules can be extensively sampled and analyzed when they only possess a few metastable states, such as small- to medium-sized proteins. However, this approach breaks down in frustrated systems and in large protein assemblies, where the number of global metastable states may grow exponentially with the system size. To address this problem, we here introduce dynamic graphical models (DGMs) that describe molecules as assemblies of coupled subsystems, akin to how spins interact in the Ising model. The change of each subsystem state is only governed by the states of itself and its neighbors. DGMs require fewer parameters than MSMs or other global state models; in particular, we do not need to observe all global system configurations to characterize them. Therefore, DGMs can predict previously unobserved molecular configurations. As a proof of concept, we demonstrate that DGMs can faithfully describe molecular thermodynamics and kinetics and predict previously unobserved metastable states for Ising models and protein simulations.

How many states does a macromolecule have? When is a molecular dynamics (MD) simulation converged? State-based MD analysis methods, such as Markov state models (MSMs) (1–6) and related MD simulation methods (7–10), take a direct approach at these questions: map each macromolecular configuration to a single global state such that they distinguish between metastable states or macro states—sets of configurations that are separated by rare event transitions. The number of states of a macromolecule is then determined by the timescales that one wants to resolve (2), and an MD simulation can be considered converged when all of these metastable states have been found and the transitions between them have been sampled in both directions. This state-based view has enabled characterization of folding (11–13), conformation changes (14, 15), ligand binding (16–19), and association/dissociation (20) in small- to medium-sized proteins. Although seemingly conceptually different, reaction coordinate (RC)-based methods, such as umbrella sampling (21) and flooding/metadynamics (22, 23), are also state-based methods, where the state is characterized by the values of the chosen RCs. Still, with such methods, all rare events that are not statistically independent of the chosen RCs must be sampled, and the corresponding metastable states must be resolved.

Even with massive simulation power and enhanced sampling methods, a converged analysis in the state-based picture fundamentally relies on the fact that there are relatively few metastable states. This is the case for cooperative macromolecules, such as small- to medium-sized proteins, where the long-ranged interactions create relatively smooth free energy landscapes, explaining the success for these systems. However, almost all available MD simulation and analysis methods will break down for systems with many metastable states. The proliferation of metastable states can already be observed in nontrivial protein systems (24), but a more pathological example is nucleic acids, where the number of ways to pair bases grows exponentially with system size, creating highly rugged energy landscapes with many metastable states (25, 26). Even for protein systems, the ability to characterize the global system state by a single variable will break down at a certain size. Beyond the size of a few nanometers, electrostatic interactions are weak, and thus, large molecular machines, such as the ribosome and neuronal active zones, are expected to consist of dozens to thousands of sparsely coupled switchable units. Even if each of these units only has 2 possible states, the total number of global system states grows exponentially with system size, and any simulation or analysis method that relies on all of these states being sampled is doomed.

In this paper, we propose a change of perspective from a global approach to one based on local subsystems by introducing dynamic graphical models (DGMs). DGMs represent each molecular configuration by a concatenation of subsystem configurations and then model how these subsystems evolve in time by a set of local rules, encoding how each subsystem behaves in the field of all of the other subsystems. Although conceptually more involved than models with a single global state, such as MSMs, the decision to model a system as many coupled subsystems is key in reducing the computational complexity for systems that have astronomically large numbers of states. To learn the rules according to which each subsystem switches its state, not all global system configurations need to be sampled but only the states of those other subsystems that are directly coupled to it.

DGMs have conceptual connections to prior work in time series modeling as well as in MD. One example is a recent Granger causality model, which captures the time evolution of several binary random variables (27). DGMs, however, are mathematically more similar to Ising models, which are graphical models widely used to describe equilibrium behavior of solid states but also have many other applications (28, 29). In MD, DGMs have conceptual connections with ultracoarse graining (UCG) (30), where coarse-grain beads are used to represent large groups of atoms, such as protein domains. Similar to DGM subsystems, each bead can change its interactions with other beads by means of discrete switching events (30, 31). The main difference between these models is that UCG is a particle-based simulation model, where subsystem switches are added to represent a more complex Hamiltonian compared with purely bead-based coarse graining. With DGMs, we here aim to describe the global state space dynamics purely as a set of coupled discrete switches, and they are thus generalizations of MSMs.

The main difficulty in applying graphical models to MD is that it is not clear a priori what the subsystems are and how they are coupled. Estimating the structure of graphical models from data is an extensively studied machine learning problem (32–35). Here, we take a first step toward estimating DGMs from simulation data by using a dynamical version of Ising model estimators (36).

The property that not all global system configurations need to be sampled to parametrize a DGM implies that DGMs are generative models. This means that they should be able to predict previously unobserved molecular configurations and thus, promote the efficient discovery of conformation space. Here, we demonstrate that DGMs can indeed predict previously unobserved protein conformations and that protein thermodynamics and kinetics can be accurately captured as for MSMs.

Dynamic Graphical Models

General Model Structure.

Here, we propose DGMs as a method to model molecular kinetics and thermodynamics inspired by graphical models, a classical machine learning approach to encode dependencies between random variables (34). Instead of encoding the global molecular configuration in a single state variable, DGMs characterize the molecular configuration by a set of subsystems that encode the states of local molecular features (Fig. 1). The coupling parameters of a DGM then encode how each subsystem switches in time as a result of the states of other subsystems at a previous time: that is, $P(s_i(t+\tau) \mid \mathbf{s}(t))$. Like MSMs, DGMs are Markovian models in which the current configuration only depends on the previous time step. In contrast to MSMs that encode transition probabilities of global system configurations, DGMs encode the transition probabilities of single subsystems dependent on the settings of all of the subsystems. In addition, each subsystem does not generally depend on all other subsystems but only on a local neighborhood of subsystems; in other words, the coupling parameters are generally sparse.

Fig. 1.

Illustration of molecular representations in MSMs and DGMs. (A) Cartoon representation of protein conformational states along a trajectory. (B) MSM with Markov states and transition probabilities between pairs of states. The number of different Markov states may grow exponentially with the number of subsystems, and only subsystem combinations that have been observed can be encoded in Markov states. (C) DGMs, here illustrated using a DIM, represent the current state of the system via the states of its subsystems, $s_i$, which are coupled by parameters $J_{ij}$. The DGM can encode exponentially many states, although the number of parameters grows only quadratically with the number of subsystems.

For illustration, consider a DGM of a one-dimensional (1D) Ising model (37) (Fig. 2A) consisting of $N$ subsystems on a ring, each of which adopts 1 of 2 configurations: 1 or −1. Each subsystem (or spin) interacts directly only with its nearest neighbors and possibly, an external field, resulting in 3 coupling parameters for each spin. Given a choice of the dynamics used to flip the spins, such as Metropolis, Glauber (Gibbs), or Kawasaki dynamics (38–40), a DGM that describes the probability of each spin’s state at time $t+\tau$ given the spin configuration at time $t$ will thus require $3N$ parameters. A direct MSM, however, distinguishes all $2^N$ global Markov states and needs to estimate an up to $2^N \times 2^N$ transition matrix from the data. More importantly, an MSM can only retrospectively describe the dynamics between states that have been observed in the simulation data. For example, an MSM is unable to predict that “spins up” and “spins down” are 2 different metastable states unless it has sampled transitions between them. A DGM can in principle be parameterized only using the spin fluctuations in 1 of 2 metastable states, and the global thermodynamics and kinetics can still be predicted. We intend to exploit this property for modeling biomolecular dynamics.
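The scaling argument above can be made concrete with a few lines of arithmetic. The sketch below assumes 3 parameters per spin (2 neighbor couplings and 1 field, as stated in the text) and a dense transition matrix over all global states; the function names are illustrative and not part of any published code.

```python
def dgm_parameter_count(n_spins: int, params_per_spin: int = 3) -> int:
    """Local-rule parameters: 2 neighbor couplings plus 1 field per spin."""
    return params_per_spin * n_spins


def msm_transition_matrix_entries(n_spins: int) -> int:
    """Entries of a dense transition matrix over all 2**n_spins global states."""
    n_states = 2 ** n_spins
    return n_states * n_states


# Compare the two counts for a few ring sizes.
for n in (9, 20, 50):
    print(n, dgm_parameter_count(n), msm_transition_matrix_entries(n))
```

The local-rule count grows linearly in the number of spins, whereas the dense matrix grows as the square of an exponential.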

Fig. 2.

DGMs estimated for a 1D Ising model from equilibrium simulation data (ED) and from simulation data only containing configurations with negative net magnetization (NED). (A) Illustration of the circular Ising model. (B) Analytic stationary distribution of the net magnetization and empirical histogram of the NED dataset. Shown DGM predictions are for models where the local fields are not estimated. (C) Implied timescales of estimated models and the true reference.

When using DGMs to model biomolecular dynamics, we are not given the definition of subsystems and their couplings a priori, but we must rather estimate them from data. A subsystem could be something as complex as a protein domain (Fig. 1A) with multiple internal states or something as simple as a torsion angle rotamer or a contact between 2 chemical groups that each have only 2 settings, similar to spins. After the subsystems are defined, it must be estimated which of them are coupled. Estimating the coupling graph from data is a notoriously difficult problem, often referred to as the inverse Ising problem (32, 33, 41). However, we have the advantage that modeling the dynamics via a DGM creates a directed dependency graph in time: the spin variables at time $t+\tau$ only depend on the spin variables at time $t$ (Fig. 1C). This makes the problem of estimating the coupling between subsystems tractable.

Dynamic Ising Models.

Here, we propose a first implementation of DGMs using a dynamic variant of the Ising models. Ising models consist of a set of $N$ spins with configuration $\mathbf{s} = (s_1, \ldots, s_N)$. Each spin can be in 1 of 2 settings, $s_i \in \{-1, 1\}$. The probability of a spin configuration is given by

$$P(\mathbf{s}) = \frac{1}{Z} \exp\left( \sum_{i} h_i s_i + \sum_{i<j} J_{ij}\, s_i s_j \right) \qquad [1]$$

where the model parameters $J_{ij}$ and $h_i$ describe the coupling strength between subsystems $i$ and $j$ and the local field of subsystem $i$, respectively, and $Z$ is the partition function. The distribution (1) can be easily sampled (38, 39) given the parameters $J_{ij}$ and $h_i$. The most common Ising models have spins arranged in a lattice, and only neighboring spins are coupled.
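For small systems, the equilibrium distribution of an Ising model can be evaluated by brute-force enumeration. The sketch below assumes the standard form in which the unnormalized weight of a configuration is the exponential of the field terms plus the pairwise coupling terms over pairs i < j; the ring topology, the coupling value of 1.0, and all function names are illustrative assumptions.

```python
import itertools
import math


def ising_distribution(J, h):
    """Return {configuration: probability} by enumerating all 2**N configurations.

    J[i][j] couples spins i and j (only entries with i < j are used);
    h[i] is the local field of spin i.
    """
    n = len(h)
    weights = {}
    for s in itertools.product((-1, 1), repeat=n):
        exponent = sum(h[i] * s[i] for i in range(n))
        exponent += sum(J[i][j] * s[i] * s[j]
                        for i in range(n) for j in range(i + 1, n))
        weights[s] = math.exp(exponent)
    Z = sum(weights.values())  # partition function
    return {s: w / Z for s, w in weights.items()}


def ring_couplings(n, coupling):
    """Nearest-neighbor couplings on a periodic ring (upper triangle only)."""
    J = [[0.0] * n for _ in range(n)]
    for i in range(n):
        j = (i + 1) % n
        J[min(i, j)][max(i, j)] = coupling
    return J


def magnetization_distribution(p):
    """Marginalize configuration probabilities onto the net magnetization."""
    dist = {}
    for s, prob in p.items():
        m = sum(s)
        dist[m] = dist.get(m, 0.0) + prob
    return dist


n = 9
p = ising_distribution(ring_couplings(n, 1.0), [0.0] * n)
print(sorted(magnetization_distribution(p).items()))
```

Enumeration is only feasible for a handful of spins, which is exactly the limitation that motivates sampling-based and local-rule approaches for larger systems.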

When modeling molecular kinetics, our data consist of (possibly short) time series rather than samples from the equilibrium distribution. Therefore, we use dynamic Ising models (DIMs) (36) that model the conditional distribution $P(\mathbf{s}(t+\tau) \mid \mathbf{s}(t))$ that governs how the spin configuration, and thus the configuration of our molecular system, changes in time. Note that, given a model $P(\mathbf{s}(t+\tau) \mid \mathbf{s}(t))$, we can simulate long trajectories and thus also predict the equilibrium distribution, $P(\mathbf{s})$. The DIM is given by

$$P(\mathbf{s}(t+\tau) \mid \mathbf{s}(t)) = \frac{1}{Z(\mathbf{s}(t))} \exp\left( \sum_{i=1}^{N} s_i(t+\tau) \left[ \sum_{j=1}^{N} J_{ij}\, s_j(t) + h_i \right] \right) \qquad [2]$$

where $\mathbf{J}$ is a matrix of coupling parameters, $\mathbf{h}$ is a vector of local fields, and $Z(\mathbf{s}(t))$ is the partition function. DIMs can readily be generalized to more than 2 states per subsystem, which we call dynamic Potts models (DPMs) (SI Appendix has details). Unlike in Eq. 1, the self-couplings $J_{ii}$ are nonzero. The coupling matrix is not necessarily symmetric, and it does not have a specified topology. In this work, we use DIMs to implement all of the presented DGMs.

The DIM samples the current state of all subsystems given their configuration at a time $\tau$ prior. This is in contrast to Glauber dynamics, where only a single subsystem is allowed to change its state in some infinitesimal time $dt$. Consequently, if we use a $\tau$ that is larger than the characteristic timescale of 1 or more of the subsystems, the dynamics of these subsystems will not be resolved. This is analogous to how a lag time defines the temporal resolution in an MSM. More detailed analysis of DIM properties is in SI Appendix, section 1.3 and Fig. S1.
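A minimal sketch of how a DIM could be propagated in time is given below. It assumes the kinetic Ising form in which, given the previous configuration, every subsystem is updated independently with probability of the +1 state equal to 1 / (1 + exp(−2θ_i)), where θ_i is the local field plus the coupling-weighted sum over the previous spins. The coupling values and function names are illustrative and not taken from the paper.

```python
import math
import random


def dim_step(s, J, h, rng):
    """Sample all subsystems at t + tau given the configuration s at time t."""
    n = len(s)
    new = []
    for i in range(n):
        theta = h[i] + sum(J[i][j] * s[j] for j in range(n))
        p_up = 1.0 / (1.0 + math.exp(-2.0 * theta))  # P(s_i = +1 | s(t))
        new.append(1 if rng.random() < p_up else -1)
    return new


def simulate_dim(s0, J, h, n_steps, seed=0):
    """Return a trajectory of n_steps + 1 configurations starting from s0."""
    rng = random.Random(seed)
    traj = [list(s0)]
    for _ in range(n_steps):
        traj.append(dim_step(traj[-1], J, h, rng))
    return traj


# Illustrative 3-subsystem model with nonzero self-couplings on the diagonal
# and an asymmetric off-diagonal part.
J_example = [[1.0, 0.3, 0.0],
             [0.0, 1.0, 0.3],
             [0.3, 0.0, 1.0]]
h_example = [0.0, 0.0, 0.0]
trajectory = simulate_dim([-1, -1, -1], J_example, h_example, n_steps=1000)
```

All subsystems are updated synchronously in each step, in contrast to single-spin-flip schemes, so the step length plays the role of the lag time.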

Results

Recovery of an Unobserved Phase and Its Thermodynamics and Kinetics in the Ising Model from Biased Data.

One of the most interesting properties of DGMs is that they make predictions for global system configurations that have not been observed in the training data. Here, we test whether a DGM trained with data from an Ising model confined to negative net magnetizations only can predict the existence of configurations with positive net magnetization, their equilibrium probabilities, and the timescale of remagnetizing the system.

As a first test, we simulate a 1D periodic Ising model with 9 spins using Glauber dynamics, which exhibits 2 metastable phases with negative and positive net magnetization. Nonequilibrium (NED) and equilibrium (ED) datasets are generated by simulating 16 trajectories with all subsystems initialized in state −1. NED simulations are terminated before the net magnetization becomes larger than 0 (i.e., the NED dataset has no configurations with more than 4 subsystems in the +1 configuration). Consequently, the entire metastable state with positive net magnetizations is missing. In the ED data, simulations are run with lengths to match the sampling statistics of the NED data, but trajectories are allowed to reach positive net magnetizations. DGMs are estimated by optimizing SI Appendix, Eq. S4. We find that both the ED and the NED DGMs converge to the correct subsystem couplings (SI Appendix, Fig. S2A) regardless of whether we choose to estimate the external field parameters, $h_i$, or not (SI Appendix, Fig. S3). This means that the unbiased subsystem couplings can be recovered from a biased dataset, in turn suggesting that global system characteristics can be estimated from incomplete data (Fig. 2).
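The construction of a biased dataset of this kind can be sketched as follows. The code assumes single-spin-flip Glauber dynamics with flip probability 1 / (1 + exp(ΔE)) in units of the thermal energy and no external field. The coupling strength, trajectory length, and function name are hypothetical choices, since the simulation parameters are not given in the text.

```python
import math
import random


def glauber_trajectory(n_spins, coupling, n_steps, rng, stop_at_positive=False):
    """Single-spin-flip Glauber dynamics on a periodic ring, started from all -1.

    If stop_at_positive is True, the trajectory is terminated before the first
    configuration with positive net magnetization is recorded.
    """
    s = [-1] * n_spins
    traj = [tuple(s)]
    for _ in range(n_steps):
        i = rng.randrange(n_spins)
        # s[i - 1] wraps around for i = 0, giving periodic boundaries.
        local_field = coupling * (s[i - 1] + s[(i + 1) % n_spins])
        delta_e = 2.0 * s[i] * local_field  # energy change of flipping spin i
        if rng.random() < 1.0 / (1.0 + math.exp(delta_e)):
            s[i] = -s[i]
        if stop_at_positive and sum(s) > 0:
            break
        traj.append(tuple(s))
    return traj


rng = random.Random(1)
# 16 trajectories, as in the text; coupling and length are illustrative.
ned_data = [glauber_trajectory(9, 1.0, 2000, rng, stop_at_positive=True)
            for _ in range(16)]
```

With 9 spins, a positive net magnetization is equivalent to 5 or more spins in the +1 state, so the recorded frames never contain more than 4 such spins.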

To compute the overall thermodynamics and kinetics of the system and the estimated DGMs, we generate MSMs with all $2^9 = 512$ global states and compute their transition probabilities using Eq. 2. Indeed, the distribution of the net magnetizations predicted by the DGMs closely resembles the true distribution (SI Appendix, Fig. S1B). In contrast, an MSM estimated using the NED data only contains configurations contained in the data and therefore, fails to predict the existence of positive net magnetizations (SI Appendix, Fig. S1B).
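Enumerating the global transition matrix implied by a DIM can be sketched as below for a small system. The code assumes that the conditional distribution factorizes over subsystems, so each matrix element is a product of single-subsystem probabilities. The stationary distribution is obtained by simple power iteration. The 4-spin ring, coupling values, and function names are illustrative assumptions.

```python
import itertools
import math


def dim_transition_matrix(J, h):
    """Return (states, T) with T[a][b] = P(states[b] at t + tau | states[a] at t)."""
    n = len(h)
    states = list(itertools.product((-1, 1), repeat=n))
    T = []
    for s in states:
        theta = [h[i] + sum(J[i][j] * s[j] for j in range(n)) for i in range(n)]
        p_up = [1.0 / (1.0 + math.exp(-2.0 * th)) for th in theta]
        row = []
        for s_next in states:
            prob = 1.0
            for i in range(n):
                prob *= p_up[i] if s_next[i] == 1 else 1.0 - p_up[i]
            row.append(prob)
        T.append(row)
    return states, T


def stationary_distribution(T, n_iter=2000):
    """Power iteration on the row-stochastic matrix T."""
    n = len(T)
    pi = [1.0 / n] * n
    for _ in range(n_iter):
        pi = [sum(pi[a] * T[a][b] for a in range(n)) for b in range(n)]
    return pi


def ring_dim_couplings(n, self_coupling, neighbor_coupling):
    """Dense DIM coupling matrix for a periodic ring with self-couplings."""
    J = [[0.0] * n for _ in range(n)]
    for i in range(n):
        J[i][i] = self_coupling
        J[i][(i - 1) % n] = neighbor_coupling
        J[i][(i + 1) % n] = neighbor_coupling
    return J


states, T = dim_transition_matrix(ring_dim_couplings(4, 0.5, 0.3), [0.0] * 4)
pi = stationary_distribution(T)
magnetization = {}
for s, prob in zip(states, pi):
    magnetization[sum(s)] = magnetization.get(sum(s), 0.0) + prob
```

Because every global state receives a nonzero transition probability, the resulting matrix also covers configurations that never appear in the training data. Implied timescales would follow from the eigenvalues of the same matrix, which requires a numerical eigensolver and is omitted here.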

Remarkably, DGMs can also predict the kinetics involving states that have not been observed. The DGM predicts a global transition matrix that correlates well with the reference, albeit with some biases (compare SI Appendix, Fig. S2B with SI Appendix, Fig. S3C). The true global relaxation timescales are recapitulated well by the DGMs (Fig. 2C). However, for the NED DGM, the timescales are systematically overestimated, as some self-couplings are overestimated (SI Appendix, Fig. S2A). As expected, the NED MSM completely fails to capture the slowest relaxation timescale, as it corresponds to the inversion of net magnetization that has not been observed in the training data.

DGMs of MD.

We now turn to MD, where DGMs are built using a discretization of molecular features and hence, introduce a systematic modeling error. To this end, we model MD simulation data of a pentapeptide Trp-Leu-Ala-Leu-Leu (WLALL) and compare DGMs with MSMs that are well established for peptide simulation data (Fig. 3A).

Fig. 3.

Comparison of DGM and MSM describing backbone torsion dynamics of the peptide Trp-Leu-Ala-Leu-Leu (WLALL). (A) Molecular structure rendering of WLALL in stick representation. (B) Global-state distribution computed according to DGM and MSMs. (C) Torsion angles serve as binary subsystems with a value of −1 or 1 that indicates the rotamer. Vertical and horizontal dotted lines indicate the boundaries of the −1 to 1 transition in the $\phi$ and $\psi$ torsions, respectively. Reference density of ($\phi$, $\psi$) torsions in Leu-2 from the WLALL dataset. (D) The 95% confidence intervals of MSM-implied (orange) and DGM-implied (blue) timescales at a fixed lag time. Confidence intervals in B and D are computed using bootstrap with replacement. Markov states shown in B are those observed in all 24 trajectories; DGM state probabilities are renormalized to this set.

We model WLALL by 7 subsystems corresponding to its backbone torsions ($\phi$, $\psi$). Each subsystem can assume 2 “spin” states defined by splitting each $\phi$ and each $\psi$ torsion at 2 boundary angles, thus discretizing the Ramachandran plane into 4 states (Fig. 3C and SI Appendix). Our state vector is thereby a rotameric state vector encoding the current state of the subsystems. We stress that the subsystem representation is a modeling decision and will in general be different for different molecules. We estimate MSMs and DGMs using this representation. For the MSMs, all possible global configurations serve as possible Markov states, and the MSMs are estimated on the subset of observed Markov states. MSMs are computed from DGMs by enumerating all possible global-state transitions. We find that the implied timescales of DGMs match those of the MSMs well (Fig. 3D and SI Appendix, Fig. S4). Additionally, the Markov state probabilities of the DGMs closely match those of the MSM, in particular for high-probability states (Fig. 3B).
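The mapping from torsion angles to a rotameric state vector can be sketched as follows. The boundary angles used here are hypothetical placeholders chosen only to make the example runnable; they are not the boundaries used for WLALL, which are defined in Fig. 3C and SI Appendix. The function and variable names are likewise illustrative.

```python
# HYPOTHETICAL boundary angles in degrees; placeholders, not values from the paper.
HYPOTHETICAL_BOUNDS = {"phi": (-180.0, 0.0), "psi": (-120.0, 60.0)}


def rotamer_state(angle_deg, lower_deg, upper_deg):
    """Return +1 if the angle lies in [lower, upper) on the circle, else -1."""
    offset = (angle_deg - lower_deg) % 360.0
    width = (upper_deg - lower_deg) % 360.0
    return 1 if offset < width else -1


def encode_frame(torsions):
    """Map a list of (kind, angle) pairs to a rotameric state vector of +1/-1."""
    return [rotamer_state(angle, *HYPOTHETICAL_BOUNDS[kind])
            for kind, angle in torsions]


frame = [("psi", 150.0), ("phi", -60.0), ("psi", -40.0), ("phi", 60.0)]
print(encode_frame(frame))  # one spin per torsion
```

Two boundaries per torsion define an interval on the circle, so the modulo arithmetic handles intervals that wrap around ±180°.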

The DGMs only use 56 parameters but predict equilibrium probabilities for all 128 possible states and all possible transitions. Thus, DGMs are statistically much more efficient than MSMs. MSMs directly estimate a transition matrix for all states that have been observed; for WLALL, 45 states (35.16%) and 337 state-to-state transitions (2.06%) have been observed. Enumerating all possible system configurations is not scalable to larger systems. For larger systems, MSMs are built by clustering states, preferentially by grouping states that are quickly exchanging (42–45). Still, this approach requires all long-lived sets of configurations to be sampled in the data.
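The counts quoted above can be checked with a short calculation. One reading consistent with 56 parameters, assumed here rather than stated in the text, is a dense 7 × 7 coupling matrix plus 7 local fields.

```python
n_subsystems = 7

# Assumed breakdown: dense coupling matrix plus one local field per subsystem.
n_parameters = n_subsystems ** 2 + n_subsystems
n_states = 2 ** n_subsystems
n_transitions = n_states ** 2

observed_states = 45
observed_transitions = 337

fraction_states = 100.0 * observed_states / n_states
fraction_transitions = 100.0 * observed_transitions / n_transitions

print(n_parameters, n_states)                       # 56 128
print(round(fraction_states, 2))                    # 35.16
print(round(fraction_transitions, 2))               # 2.06
```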

Prediction of Unobserved Metastable Molecular Configurations.

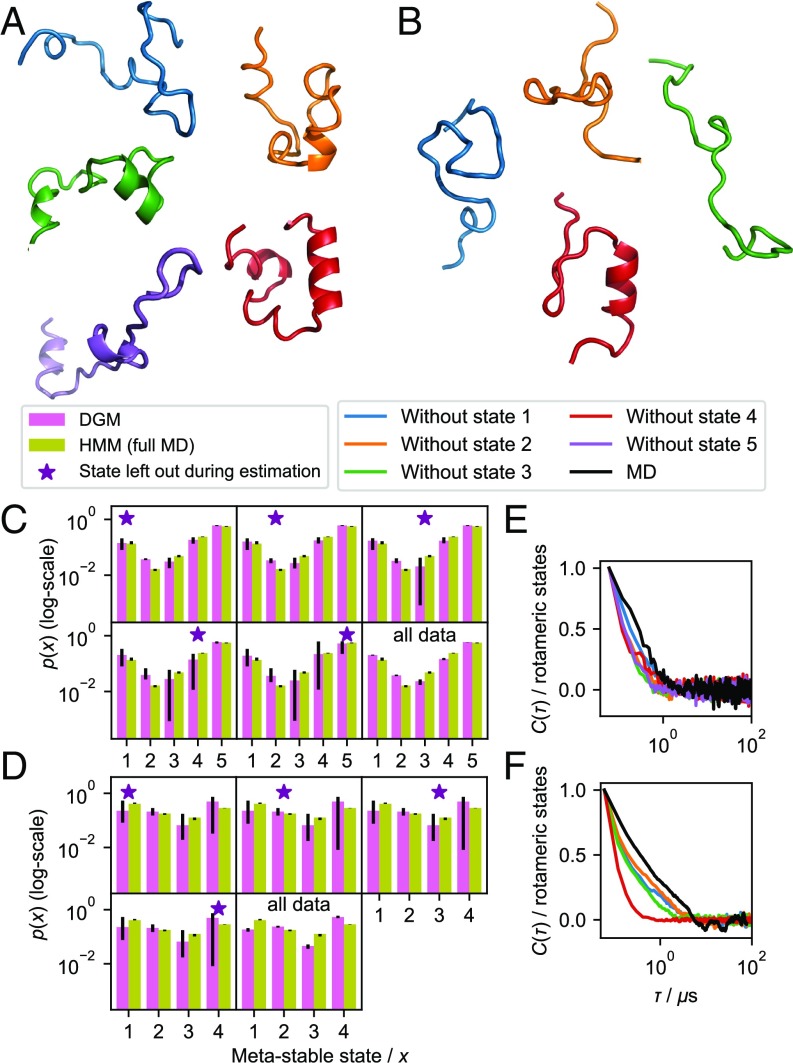

To more systematically test the performance of DGMs in predicting metastable states not observed during training, we estimated several DGMs (Materials and Methods) designed to be selectively blind toward a particular metastable state. We used previously published simulation data of 2 fast-folding proteins, villin ($\alpha$-helical) and BBA ($\beta\beta\alpha$-fold) (46). As for WLALL above, we use the backbone torsion rotameric state vector to represent these proteins.

For villin and BBA, we built hidden Markov models (HMMs) (47) that resolve 5 and 4 metastable states, respectively (structures are in Fig. 4 A and B). We then estimated DGMs using the same data and confirmed that they qualitatively reproduce the equilibrium probabilities of the HMMs (Fig. 4 C, last panel, and D, last panel). Using the HMMs, we assign the MD data to metastable states and generate 5 and 4 sets of training data for the 2 proteins, where each set is missing 1 of the states. These subsampled datasets are used for estimating “one-blind” DGMs that are each “blind” to 1 metastable configuration (SI Appendix has details). From the estimated DGMs, we simulate synthetic trajectories of the subsystems to test whether these one-blind DGMs can recover the unobserved states and their statistics.

Fig. 4.

Prediction of macroscopic stationary and dynamic properties of fast-folding proteins villin and BBA with DGMs. (A and B) Molecular renderings of metastable configurations identified by HMMs of villin (A) and BBA (B) using a ribbon representation. Color/metastable state relationship: blue/1, orange/2, green/3, red/4, and purple/5. (C and D) Metastable state distributions sampled by DGMs estimated with data from 1 metastable state left out and by a DGM estimated using the full dataset (yellow). The reference distribution (magenta) was estimated using an HMM with the full MD dataset. (E and F) Normalized TCF of the rotameric-state vector in villin (E) and BBA (F) as sampled by the DGMs and in the simulation data.

We find that the one-blind DGMs sample the same configurational space as the full MD simulations, although the predicted equilibrium distributions differ (SI Appendix, Fig. S5). This suggests that, although the predicted thermodynamics in the one-blind DGMs are not quantitatively accurate, they are able to predict relevant states that have not been included in the training data. This is mirrored at the microscopic level: configurations sampled by the DGM closely agree with their MD counterparts (SI Appendix, Figs. S6–S8).

Next, we compare the macroscopic stationary and dynamic properties predicted by the one-blind DGMs with the full-data HMMs (Fig. 4). Indeed, all one-blind DGMs predict the existence of the state missing in the training data and in most cases, with a population comparable with the MD simulation (Fig. 4 C and D). For visualization, we have reconstructed molecular trajectories from the subsystem trajectories simulated from a select set of one-blind DGMs (SI Appendix, Fig. S9 and Movies 1 and 2).

Finally, we compare the kinetics predicted by the one-blind DGMs with those of the original simulation. We use the time correlation function (TCF) of the rotameric state vector in the reference simulations of villin and BBA and compare them with corresponding TCFs from the DGM trajectories (Fig. 4 E and F). The TCFs are computed as $C(\tau) = \langle \tilde{\mathbf{s}}(t)^{\top} \tilde{\mathbf{s}}(t+\tau) \rangle_t$ with $\tilde{\mathbf{s}}(t) = \mathbf{s}(t) - \boldsymbol{\mu}$, where $\langle \cdot \rangle_t$ is the time average and $\boldsymbol{\mu}$ is the mean value of $\mathbf{s}(t)$. We find that these generally agree well, although the DGMs tend to somewhat overestimate the relaxation rates, in particular the DGMs that were blind to the folded configurations (fourth [red in Fig. 4] state in both cases).
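A normalized TCF of a state-vector trajectory could be computed along the following lines. The sketch assumes that the correlation at lag k is the time average of the dot product between mean-free state vectors separated by k frames, divided by its value at lag 0. The function name and the toy trajectory are illustrative.

```python
def time_correlation(traj, max_lag):
    """Normalized time correlation function of a state-vector trajectory.

    traj is a list of equal-length vectors; max_lag must be smaller than
    len(traj). The trajectory must not be constant, otherwise the
    normalization by the lag-0 value is undefined.
    """
    n_frames = len(traj)
    n = len(traj[0])
    mu = [sum(frame[i] for frame in traj) / n_frames for i in range(n)]
    centered = [[frame[i] - mu[i] for i in range(n)] for frame in traj]

    def correlation(k):
        n_pairs = n_frames - k
        total = sum(sum(a * b for a, b in zip(centered[t], centered[t + k]))
                    for t in range(n_pairs))
        return total / n_pairs

    c0 = correlation(0)
    return [correlation(k) / c0 for k in range(max_lag + 1)]


# Toy trajectory that alternates between two configurations every frame.
toy = [[1, -1], [-1, 1]] * 50
tcf = time_correlation(toy, max_lag=4)
```

For the alternating toy trajectory, the TCF oscillates between 1 and −1 with a period of 2 frames, which provides a simple sanity check of the implementation.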

Metastability in One-Blind DGMs.

The analysis above shows that the conformational space sampled by the one-blind DGMs is realistic and recapitulates the conformational space sampled in the full MD datasets. However, whether metastable states predicted by the one-blind DGMs indeed are identical to the metastable states identified in the MD is not clear from this analysis alone. In other words, although we sample a conformational space, which is realistic, it is unclear whether information about slow conformational transitions is captured. To more quantitatively assess this, we estimated HMMs trained on datasets generated using the one-blind DGMs (SI Appendix). In half of the cases, the number of metastable states identified from the one-blind DGM datasets matches the corresponding numbers from the full MD dataset (SI Appendix, Table S1). In most of the remaining cases, 1 metastable state is missing, and in a single case, 1 extra metastable state is identified, closely resembling another metastable state (SI Appendix).

To better understand the identity of the metastable states observed in the one-blind DGMs, we assigned them to corresponding metastable states from the full MD dataset to minimize the total assignment error (SI Appendix). We find that the metastable states sampled by the one-blind DGMs can be unambiguously assigned to a corresponding metastable state observed in the MD dataset, and their sampling statistics correlate well with their MD counterparts (SI Appendix, Fig. S10 A and C). The average lifetimes of these metastable states qualitatively correlate with those of the reference MD dataset but generally are too short as already indicated above (SI Appendix, Fig. S10 B and D).
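The assignment step can be illustrated with a brute-force search over one-to-one matchings, which is feasible for the handful of metastable states considered here. The error measure used below, the squared distance between mean rotameric state vectors, is a hypothetical stand-in, since the actual assignment error is defined in SI Appendix. All names and numbers are illustrative.

```python
import itertools


def assign_states(dgm_means, md_means):
    """Assign each DGM state to a distinct MD state, minimizing the total error.

    Requires len(dgm_means) <= len(md_means). Returns a list whose k-th entry
    is the index of the MD state matched to DGM state k.
    """
    def error(a, b):
        # Hypothetical error measure: squared Euclidean distance.
        return sum((x - y) ** 2 for x, y in zip(a, b))

    candidates = itertools.permutations(range(len(md_means)), len(dgm_means))
    best = min(candidates,
               key=lambda perm: sum(error(dgm_means[k], md_means[m])
                                    for k, m in enumerate(perm)))
    return list(best)


md_means = [[1.0, 1.0, 1.0], [-1.0, -1.0, -1.0], [1.0, -1.0, 1.0]]
dgm_means = [[-0.9, -0.8, -1.0], [0.9, -0.7, 0.8]]
assignment = assign_states(dgm_means, md_means)
print(assignment)  # [1, 2]
```

For larger numbers of states, the same objective is a linear assignment problem, for which polynomial-time solvers exist.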

Discussion and Conclusions

The ability to simulate a molecular system to convergence depends critically on how the simulation is analyzed and how the quantities of interest are computed. Most current simulation and analysis methods use the idea that the molecule is in a single global state; MSMs make that assumption most explicitly. However, this picture leads to a sampling problem that does not scale to large molecular systems. A molecule with $N$ independent subunits with $m$ metastable states each will have an exponential number, $m^N$, of states.

In this paper, we introduce the concept of DGMs and their utility to model molecular kinetics. In DGMs, the global configurational state is encoded as a vector of states of its subsystems. As DGMs only model the pairwise interactions between subsystems, an exponential number of global configurations can be represented with a quadratic number of parameters. We illustrate DGMs using the DIM and its generalization, the DPM (SI Appendix).

In general, we find that the DGMs—despite their compact parametric form—can approximate well the dynamic behavior of several molecular systems. When gauged against MSMs, DGMs predict similar characteristic timescales and stationary distributions. Beyond the capabilities of MSMs, DGMs also allow for the prediction of transitions to and from unobserved molecular conformations and their stationary probabilities. Specifically, we found that DGMs can predict the presence of metastable configurations, which have not been observed during model estimation, although their absolute probabilities are not always accurately captured.

The representation of a molecule in the DGM framework gives rise to technical challenges that need to be addressed in future studies. (i) How can the subsystems of a molecule be learned from simulation data? Here, we have defined these subsystems a priori by choosing dihedral rotamers, but general optimization principles are needed (e.g., the variational approach for Markov processes) (48–50). (ii) How do we reconstruct molecular configurations from a subsystem-encoded representation? Going from an encoded representation back to a molecular configuration (decoding) is generally a difficult problem. (iii) How do we optimally choose regularization strategy and strength? While several options are available, choosing the most appropriate combination remains heuristic. (iv) What are the limits of DGMs? The compact parametric form comes with limited expressive power, and it is assumed that the subsystem couplings are not a function of the global configuration; if the latter assumption is violated, we cannot expect quantitative predictions by DGMs. (v) How can we reconcile DGMs with experimental observables? Unlike for MSMs (51), the integration of experimental data into the estimation of DGMs is currently not possible. While in MSMs, experimental observables can be directly expressed in terms of transition matrix properties (52, 53), in DGMs we do not have direct access to these properties, and reconstructing them may be prohibitively expensive.

Machine learning has had a significant impact on molecular modeling in recent years (54–63). In particular, deep learning with its ability to learn complicated nonlinear functions given sufficient available data (64) has seen multiple applications. Problems faced here, including identification of subsystems and their discretization, are highly nonlinear and may benefit from these properties as well. Indeed, the variational approach for Markov processes network (VAMPnets) approach recently illustrated how the featurization, projection, and discretization pipeline of MSM building could be replaced by a deep neural network (54). We envision that a related approach can be taken in the context of identifying subsystems in molecular systems for DGMs and thereby sidestep this currently cumbersome and manual process.

We anticipate DGMs and follow-up methods to be broadly applicable in biophysics. The compact parametric form allows us to model molecular systems that are significantly larger than what would be tractable using, for example, MSMs. Furthermore, a sparse subsystem coupling matrix could enable a spatial decomposition of large molecular systems into conditionally independent fragments, which are more amenable to simulation. The ability of DGMs to predict unobserved global configurations could further be used in an adaptive simulation setting, where previously unobserved states are reconstructed and used to seed new molecular simulations.

Materials and Methods

As we show in SI Appendix, section 1, the estimation of the DGM parameters $\mathbf{J}$ and $\mathbf{h}$ is tractable and factorizes into $N$ independent logistic regression problems. Here, we maximize the posterior distribution (SI Appendix, Eq. S4) using the SAGA optimization algorithm (65) as implemented in the scikit-learn python library (66). Additional details are given in SI Appendix, section 2. An open source library (LGPL-3.0 license) for estimation and analysis of DGMs along with example notebooks is available at http://www.github.com/markovmodel/graphtime/.
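The factorization into independent logistic regressions can be illustrated with a dependency-free sketch. Note that this example deliberately swaps in plain batch gradient ascent with an optional L2 penalty in place of SAGA and scikit-learn, and it is not the graphtime implementation. It assumes the kinetic Ising likelihood, for which the gradient of the log-likelihood with respect to θ_i is s_i(t+τ) − tanh(θ_i). The true couplings, the learning rate, and all function names are illustrative assumptions.

```python
import math
import random


def simulate_pairs(J, h, n_steps, seed=0):
    """Generate (s(t), s(t + tau)) pairs from a DIM with couplings J and fields h."""
    rng = random.Random(seed)
    n = len(h)
    s = [1] * n
    pairs = []
    for _ in range(n_steps):
        nxt = []
        for i in range(n):
            theta = h[i] + sum(J[i][j] * s[j] for j in range(n))
            p_up = 1.0 / (1.0 + math.exp(-2.0 * theta))
            nxt.append(1 if rng.random() < p_up else -1)
        pairs.append((s, nxt))
        s = nxt
    return pairs


def fit_subsystem(pairs, i, n, learning_rate=0.3, n_epochs=200, l2=0.0):
    """Fit row i of J and field h_i by gradient ascent on the mean log-likelihood.

    Stand-in optimizer (not SAGA); the fixed learning rate is tuned for small n.
    """
    J_i = [0.0] * n
    h_i = 0.0
    m = len(pairs)
    for _ in range(n_epochs):
        grad_J = [0.0] * n
        grad_h = 0.0
        for s, s_next in pairs:
            theta = h_i + sum(J_i[j] * s[j] for j in range(n))
            residual = s_next[i] - math.tanh(theta)
            grad_h += residual
            for j in range(n):
                grad_J[j] += residual * s[j]
        J_i = [J_i[j] + learning_rate * (grad_J[j] / m - l2 * J_i[j])
               for j in range(n)]
        h_i += learning_rate * grad_h / m
    return J_i, h_i


J_true = [[0.8, 0.0], [0.5, -0.3]]
h_true = [0.0, 0.0]
pairs = simulate_pairs(J_true, h_true, n_steps=2000)
fits = [fit_subsystem(pairs, i, n=2) for i in range(2)]
J_est = [row for row, _ in fits]
h_est = [field for _, field in fits]
```

Each subsystem is fitted independently from the same set of time-lagged pairs, so the cost grows with the number of subsystems rather than with the number of global states.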

Supplementary Material

Acknowledgments

We thank Fabian Paul, Hao Wu, Christoph Wehmeyer, Illia Horenko, Moritz Hoffmann, Brooke Husic, Tim Hempel, and Attila Szabo for discussions and comments on drafts of this manuscript. We thank Nuria Plattner for providing simulation data for WLALL and DE Shaw Research for providing the simulation data for villin and BBA. We acknowledge funding from an Alexander von Humboldt Foundation postdoctoral fellowship (to S.O.), European Research Council Grant CoG 772230 “ScaleCell,” and Deutsche Forschungsgemeinschaft Grants TRR186/A12 and SFB1114/A04 (to F.N.).

Footnotes

The authors declare no conflict of interest.

This article is a PNAS Direct Submission.

This article contains supporting information online at www.pnas.org/lookup/suppl/doi:10.1073/pnas.1901692116/-/DCSupplemental.

References

- 1.Schütte C., Fischer A., Huisinga W., Deuflhard P., A direct approach to conformational dynamics based on Hybrid Monte Carlo. J. Comput. Phys. 151, 146–168 (1999). [Google Scholar]

- 2.Noé F., Horenko I., Schütte C., Smith J. C., Hierarchical analysis of conformational dynamics in biomolecules: Transition networks of metastable states. J. Chem. Phys. 126, 155102 (2007). [DOI] [PubMed] [Google Scholar]

- 3.Chodera J. D., et al. , Automatic discovery of metastable states for the construction of Markov models of macromolecular conformational dynamics. J. Chem. Phys. 126, 155101 (2007). [DOI] [PubMed] [Google Scholar]

- 4.Buchete N. V., Hummer G., Coarse master equations for peptide folding dynamics. J. Phys. Chem. B 112, 6057–6069 (2008).

- 5.Prinz J. H., et al., Markov models of molecular kinetics: Generation and validation. J. Chem. Phys. 134, 174105 (2011).

- 6.Singhal N., Pande V. S., Error analysis and efficient sampling in Markovian state models for molecular dynamics. J. Chem. Phys. 123, 204909 (2005).

- 7.Du W. N., Marino K. A., Bolhuis P. G., Multiple state transition interface sampling of alanine dipeptide in explicit solvent. J. Chem. Phys. 135, 145102 (2011).

- 8.Noé F., Krachtus D., Smith J. C., Fischer S., Transition networks for the comprehensive characterization of complex conformational change in proteins. J. Chem. Theory Comput. 2, 840–857 (2006).

- 9.Preto J., Clementi C., Fast recovery of free energy landscapes via diffusion-map-directed molecular dynamics. Phys. Chem. Chem. Phys. 16, 19181–19191 (2014).

- 10.Rohrdanz M. A., Zheng W., Maggioni M., Clementi C., Determination of reaction coordinates via locally scaled diffusion map. J. Chem. Phys. 134, 124116 (2011).

- 11.Noé F., Schütte C., Vanden-Eijnden E., Reich L., Weikl T. R., Constructing the full ensemble of folding pathways from short off-equilibrium simulations. Proc. Natl. Acad. Sci. U.S.A. 106, 19011–19016 (2009).

- 12.Bowman G. R., Voelz V. A., Pande V. S., Atomistic folding simulations of the five-helix bundle protein lambda 6-85. J. Am. Chem. Soc. 133, 664–667 (2011).

- 13.Zheng W., et al., Delineation of folding pathways of a β-sheet miniprotein. J. Phys. Chem. B 115, 13065–13074 (2011).

- 14.Kohlhoff K. J., et al., Cloud-based simulations on Google Exacycle reveal ligand modulation of GPCR activation pathways. Nat. Chem. 6, 15–21 (2014).

- 15.Bowman G. R., Bolin E. R., Harta K. M., Maguire B., Marqusee S., Discovery of multiple hidden allosteric sites by combining Markov state models and experiments. Proc. Natl. Acad. Sci. U.S.A. 112, 2734–2739 (2015).

- 16.Buch I., Giorgino T., De Fabritiis G., Complete reconstruction of an enzyme-inhibitor binding process by molecular dynamics simulations. Proc. Natl. Acad. Sci. U.S.A. 108, 10184–10189 (2011).

- 17.Plattner N., Noé F., Protein conformational plasticity and complex ligand binding kinetics explored by atomistic simulations and Markov models. Nat. Commun. 6, 7653 (2015).

- 18.Gu S., Silva D. A., Meng L., Yue A., Huang X., Quantitatively characterizing the ligand binding mechanisms of choline binding protein using Markov state model analysis. PLoS Comput. Biol. 10, e1003767 (2014).

- 19.Huang D., Caflisch A., The free energy landscape of small molecule unbinding. PLoS Comput. Biol. 7, e1002002 (2011).

- 20.Plattner N., Doerr S., Fabritiis G. D., Noé F., Protein-protein association and binding mechanism resolved in atomic detail. Nat. Chem. 9, 1005–1011 (2017).

- 21.Torrie G. M., Valleau J. P., Nonphysical sampling distributions in Monte Carlo free-energy estimation: Umbrella sampling. J. Comput. Phys. 23, 187–199 (1977).

- 22.Grubmüller H., Predicting slow structural transitions in macromolecular systems: Conformational flooding. Phys. Rev. E 52, 2893 (1995).

- 23.Laio A., Parrinello M., Escaping free energy minima. Proc. Natl. Acad. Sci. U.S.A. 99, 12562–12566 (2002).

- 24.Paul F., et al., Protein-ligand kinetics on the seconds timescale from atomistic simulations. Nat. Commun. 8, 1095 (2017).

- 25.Thirumalai D., Woodson S. A., Kinetics of folding of proteins and RNA. Acc. Chem. Res. 29, 433–439 (1996).

- 26.Keller B. G., Kobitski A. Y., Jäschke A., Nienhaus U. G., Noé F., Complex RNA folding kinetics revealed by single molecule FRET and hidden Markov models. J. Am. Chem. Soc. 136, 4534–4543 (2014).

- 27.Gerber S., Horenko I., On inference of causality for discrete state models in a multiscale context. Proc. Natl. Acad. Sci. U.S.A. 111, 14651–14656 (2014).

- 28.Yap W., Saroff H., Application of the Ising model to hemoglobin. J. Theor. Biol. 30, 35–39 (1971).

- 29.Schneidman E., Berry M. J., Segev R., Bialek W., Weak pairwise correlations imply strongly correlated network states in a neural population. Nature 440, 1007–1012 (2006).

- 30.Dama J. F., et al., The theory of ultra-coarse-graining. 1. General principles. J. Chem. Theory Comput. 9, 2466–2480 (2013).

- 31.Davtyan A., Dama J. F., Sinitskiy A. V., Voth G. A., The theory of ultra-coarse-graining. 2. Numerical implementation. J. Chem. Theory Comput. 10, 5265–5275 (2014).

- 32.Ravikumar P., Wainwright M. J., Lafferty J. D., High-dimensional Ising model selection using ℓ1-regularized logistic regression. Ann. Statist. 38, 1287–1319 (2010).

- 33.Parise S., Welling M., Structure Learning in Markov Random Fields, Schölkopf B., Platt J., Hoffman T., Eds. (NIPS, 2006), vol. 19.

- 34.Bishop C. M., Pattern Recognition and Machine Learning (Springer Science, 2006).

- 35.Koller D., Friedman N., Probabilistic Graphical Models: Principles and Techniques (Adaptive Computation and Machine Learning, The MIT Press, 2009).

- 36.Roudi Y., Hertz J., Mean field theory for nonequilibrium network reconstruction. Phys. Rev. Lett. 106, 048702 (2011).

- 37.Lenz W., Beiträge zum verständnis der magnetischen eigenschaften in festen körpern. Phys. Z. 21, 613–615 (1920).

- 38.Glauber R. J., Time-dependent statistics of the Ising model. J. Math. Phys. 4, 294–307 (1963).

- 39.Kawasaki K., Diffusion constants near the critical point for time-dependent Ising models. I. Phys. Rev. 145, 224–230 (1966).

- 40.Metropolis N., Rosenbluth A. W., Rosenbluth M. N., Teller A. H., Teller E., Equation of state calculations by fast computing machines. J. Chem. Phys. 21, 1087–1092 (1953).

- 41.Roudi Y., Tyrcha J., Hertz J., Ising model for neural data: Model quality and approximate methods for extracting functional connectivity. Phys. Rev. E 79, 051915 (2009).

- 42.Molgedey L., Schuster H. G., Separation of a mixture of independent signals using time delayed correlations. Phys. Rev. Lett. 72, 3634–3637 (1994).

- 43.Ziehe A., Müller K. R., TDSEP—An Efficient Algorithm for Blind Separation Using Time Structure in ICANN (Springer, London, 1998), vol. 98, pp. 675–680.

- 44.Perez-Hernandez G., Paul F., Giorgino T., De Fabritiis G., Noé F., Identification of slow molecular order parameters for Markov model construction. J. Chem. Phys. 139, 015102 (2013).

- 45.Schwantes C. R., Pande V. S., Improvements in Markov state model construction reveal many non-native interactions in the folding of NTL9. J. Chem. Theory Comput. 9, 2000–2009 (2013).

- 46.Lindorff-Larsen K., Piana S., Dror R. O., Shaw D. E., How fast-folding proteins fold. Science 334, 517–520 (2011).

- 47.Noé F., Wu H., Prinz J. H., Plattner N., Projected and hidden Markov models for calculating kinetics and metastable states of complex molecules. J. Chem. Phys. 139, 184114 (2013).

- 48.Noé F., Nüske F., A variational approach to modeling slow processes in stochastic dynamical systems. Multiscale Model. Simul. 11, 635–655 (2013).

- 49.Nüske F., Keller B. G., Pérez-Hernández G., Mey A. S. J. S., Noé F., Variational approach to molecular kinetics. J. Chem. Theory Comput. 10, 1739–1752 (2014).

- 50.Wu H., Noé F., Variational approach for learning Markov processes from time series data. arXiv:1707.04659 (11 December 2017).

- 51.Olsson S., Wu H., Paul F., Clementi C., Noé F., Combining experimental and simulation data of molecular processes via augmented Markov models. Proc. Natl. Acad. Sci. U.S.A. 114, 8265–8270 (2017).

- 52.Noé F., et al., Dynamical fingerprints for probing individual relaxation processes in biomolecular dynamics with simulations and kinetic experiments. Proc. Natl. Acad. Sci. U.S.A. 108, 4822–4827 (2011).

- 53.Olsson S., Noé F., Mechanistic models of chemical exchange induced relaxation in protein NMR. J. Am. Chem. Soc. 139, 200–210 (2017).

- 54.Mardt A., Pasquali L., Wu H., Noé F., VAMPnets for deep learning of molecular kinetics. Nat. Commun. 9, 5 (2018).

- 55.Hernández C. X., Wayment-Steele H. K., Sultan M. M., Husic B. E., Pande V. S., Variational encoding of complex dynamics. Phys. Rev. E 97, 062412 (2017).

- 56.Wu H., Mardt A., Pasquali L., Noé F., Deep generative Markov state models. arXiv:1805.07601 (11 January 2019).

- 57.Wehmeyer C., Noé F., Time-lagged autoencoders: Deep learning of slow collective variables for molecular kinetics. J. Chem. Phys. 148, 241703 (2018).

- 58.Jung H., Covino R., Hummer G., Artificial intelligence assists discovery of reaction coordinates and mechanisms from molecular dynamics simulations. arXiv:1901.04595 (14 January 2019).

- 59.Wang W., Gómez-Bombarelli R., Coarse-graining auto-encoders for molecular dynamics. arXiv:1812.02706 (27 March 2019).

- 60.Chen W., Sidky H., Ferguson A. L., Nonlinear discovery of slow molecular modes using hierarchical dynamics encoders. arXiv:1902.03336 (2 June 2019).

- 61.Xie T., France-Lanord A., Wang Y., Shao-Horn Y., Grossman J. C., Graph dynamical networks: Unsupervised learning of atomic scale dynamics in materials. arXiv:1902.06836 (22 May 2019).

- 62.Noé F., Olsson S., Köhler J., Wu H., Boltzmann generators–Sampling equilibrium states of many-body systems with deep learning. arXiv:1812.01729 (4 December 2018).

- 63.Wang J., et al., Machine learning of coarse-grained molecular dynamics force fields. ACS Cent. Sci. 5, 755–767 (2019).

- 64.Goodfellow I., Bengio Y., Courville A., Deep Learning (MIT Press, 2016).

- 65.Defazio A., Bach F., Lacoste-Julien S., SAGA: A Fast Incremental Gradient Method with Support for Non-Strongly Convex Composite Objectives (NIPS, 2014), vol. 27, pp. 1646–1654.

- 66.Pedregosa F., et al., Scikit-learn: Machine learning in Python. JMLR 12, 2825–2830 (2011).
