Abstract

A major goal of neuroscience is understanding how neurons arrange themselves into neural networks that result in behavior. Most theoretical and experimental efforts have focused on a top-down approach which seeks to identify neuronal correlates of behaviors. This has been accomplished by effectively mapping specific behaviors to distinct neural patterns, or by creating computational models that produce a desired behavioral outcome. Nonetheless, these approaches have only implicitly considered the fact that neural tissue, like any other physical system, is subjected to several restrictions and boundaries of operations. Here, we proposed a new, bottom-up conceptual paradigm: The Energy Homeostasis Principle, where the balance between energy income, expenditure, and availability are the key parameters in determining the dynamics of neuronal phenomena found from molecular to behavioral levels. Neurons display high energy consumption relative to other cells, with metabolic consumption of the brain representing 20% of the whole-body oxygen uptake, contrasting with this organ representing only 2% of the body weight. Also, neurons have specialized surrounding tissue providing the necessary energy which, in the case of the brain, is provided by astrocytes. Moreover, and unlike other cell types with high energy demands such as muscle cells, neurons have strict aerobic metabolism. These facts indicate that neurons are highly sensitive to energy limitations, with Gibb's free energy dictating the direction of all cellular metabolic processes. From this activity, the largest energy, by far, is expended by action potentials and post-synaptic potentials; therefore, plasticity can be reinterpreted in terms of their energy context. Consequently, neurons, through their synapses, impose energy demands over post-synaptic neurons in a close loop-manner, modulating the dynamics of local circuits. Subsequently, the energy dynamics end up impacting the homeostatic mechanisms of neuronal networks. Furthermore, local energy management also emerges as a neural population property, where most of the energy expenses are triggered by sensory or other modulatory inputs. Local energy management in neurons may be sufficient to explain the emergence of behavior, enabling the assessment of which properties arise in neural circuits and how. Essentially, the proposal of the Energy Homeostasis Principle is also readily testable for simple neuronal networks.

Keywords: homeostasis, energy, neuronal networks, behavior, emergent properties

Introduction

Throughout evolution, the development of the nervous system has enabled animals with the capacity to manifest ever-growing complex behavior, which has helped them survive in a changing environment. Understanding how neurons arrange themselves into neural networks that work at producing different behaviors has always been a major goal of neuroscience. Various conceptual frameworks have aimed to explain how behavior emerges from neuronal activity. Arguably, the most relevant is the Neuron Doctrine, proposed by Santiago Ramón y Cajal and further developed by Heinrich Waldeyer-Hartz and Horace Barlow (Barlow, 1972; Bock, 2013). Since then, the same logic has spread into coding paradigms (Lettvin et al., 1959; Fairhall, 2014; Yuste, 2015), especially in information processing frameworks (Fodor, 1983; Friston, 2002; Robbins, 2010; Lorenz et al., 2011), and has been scaled from neurons up to neural networks (Yuste, 2015). A common and key element of these conceptual approaches has been to find neuronal correlates of behaviors, effectively associating specific behaviors with distinct neural patterns. This top-down approach (using behavior as a reference to be mapped into neuronal circuits) has been very successful in providing single-unit or network models that can implement the observed behaviors, yet simultaneously, may make difficult the capture of the emergence of behavior, which is by-large a bottom-up phenomenon. This methodological approach also limits our capacity of predicting the boundaries of the capabilities or the spectrum of behaviors of a given system, because we map or associate only those behaviors that have been well-characterized. More importantly, all theoretical approaches, to our knowledge, have only implicitly addressed the fact that neural tissue, like any other physical system, is subjected to several restrictions and boundaries of operations.

Cells use energy to stay alive and at the same time, maintain some reserves to respond and adapt to dynamic situations, maintaining their homeostasis. For neurons, energy availability would be further important, as their energy expenses are high, as compared to other somatic cells (Attwell and Laughlin, 2001; Shulman et al., 2004). Indeed, the metabolic consumption of the brain, which represents 20% of whole-body oxygen consumption, contrasts with the neural tissue representing only 2% of whole body weight (Shulman et al., 2004). Interestingly, the total brain energy consumption increases proportionally with the number of neurons among different species, including humans (Herculano-Houzel, 2011), and the total energy expenditure associated to a neuron during the signaling and resting states is constant in different mammalian species (Hyder et al., 2013). Thus, neurons seem to present a highly specialized system for managing their energy demands.

Several evidences demonstrate that it is reasonable to assume a constant value for energy availability for neurons over the long term (energetic homeostasis). For instance, cultured neurons exhibit a steady value for free adenosine triphosphate (ATP) in basal conditions, which transiently decrease during the induction of glutamatergic synaptic activity through various energy challenges (Marcaida et al., 1995, 1997; Rangaraju et al., 2014; Lange et al., 2015). This tight energy management suggests a relevant role for neuronal energy homeostasis on neuronal and network functional properties.

Here, we propose a new bottom-up conceptual paradigm for neuronal networks: The Energy Homeostasis Principle. Under this principle, the condition of maintaining neuronal homeostasis triggers synaptic changes in the individual but connected neurons, resulting in the local energy balance scaling up to a network property. This conceptual framework supposes that energy management might be critical in determining plasticity, network functional connectivity, and ultimately behavior.

Cellular Homeostasis and Gibbs Free Energy

In this article, we propose that behavior may raise as an emergent property rooted in energy requirement of neurons, thus, we would like to start from the level of biochemistry and metabolism. As such, we will begin with the fact that cells are dynamic molecular machines that require the nutrient intake to stay alive. Many biological processes are thermodynamically unfavorable, and through metabolism, cells draw energy from nutrients, and generate metabolic resources necessary to drive their cellular activities (Hofmeyr and Cornish-Bowden, 2000) (for a schematic, see Figure 1A). Cellular homeostasis can be defined as a state where the production and consumption of metabolic resources balance each-other, and thus their concentration is constant in time. For our specific context, balancing the intake and consumption of metabolic resources will unavoidably have a global impact on the cellular processes. The network of metabolic processes is large and complex, limiting, to some extent, our capacity to predict cellular behavior using basic principles. Nonetheless, biochemical reactions must be consistent with the laws of thermodynamics.

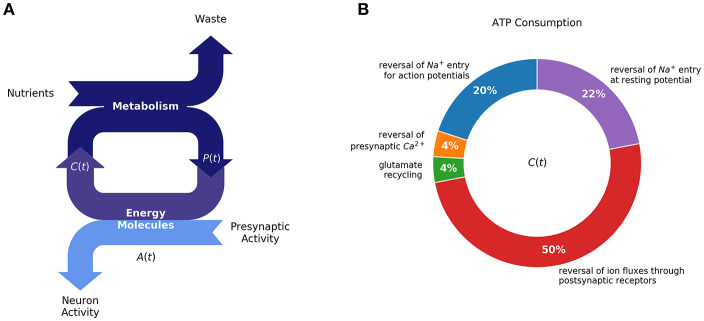

Figure 1.

Homeostasis requires a balance between ATP production (metabolism) and ATP consumption (synaptic activity): (A) Production of energy molecules by metabolism supports neuron activity, in addition to cell maintenance processes, most notably by ATP [A(t)]. Neuronal homeostasis depends on a balance between production and consumption of high-energy molecules. (B) Synaptic activity has been estimated to amount for half of total ATP consumption [diagram redrawn from (Harris et al., 2012)]. For cellular homeostasis to be achieved, neurons must regulate their activity and metabolism in response to changing external perturbations. We propose that regulatory mechanisms, responsible for changes synaptic plasticity, reflect the requirement for maintaining a constant level of energy resources available for neurons to function.

Thermodynamics can help us understand how a system evolves in time through the comparison of the thermodynamic potential between an initial and final state. For processes at a constant temperature and pressure, the thermodynamic potential is given by Gibbs Free Energy (G). This thermodynamic potential will dictate a directional bias of chemical reactions. The Gibbs Free Energy—supporting cellular processes—is provided with a finite amount of metabolic resources. Thus, there is a trade-off between the potential for metabolic work and metabolic expenses, which, we propose, may explain some well-established phenomenology of how cells respond to external perturbations. Additionally, the change in the Gibbs Free Energy (ΔG) and the rate associated with chemical transformations are related (Crooks, 1999). To illustrate the relation between thermodynamics and kinetics, for a reversible reaction X⇔Y, the following relation constrains kinetic rates:

| (1) |

where R is Gas constant, and T the absolute temperature (Cannon and Baker, 2017). This equation describes the relation between the mean rates of any pair of reversible processes (from X to Y, and from Y to X) and the difference in Gibbs Free Energy between the states. Note that by definition the Gibbs Free Energy assumes Temperature to be constant. In simple terms, the difference ΔG{X⇒Y} can be thought of as a “directional bias,” indicating how favorable one direction is over the other. In more detail, the Gibbs Free Energy is divided into two components, Enthalpy (H) and Entropy (S):

| (2) |

Where, T is the absolute temperature (Silbey et al., 2004). In the context of chemical transformations, Enthalpy is a measure of the energy required to form a given substance, disregarding interactions with other molecules; whereas Entropy can be interpreted as a correction accounting for all possible combinations by which molecules can react (Danos and Oury, 2013). Given the combinatorial nature of entropy, it can also be interpreted as a measure of disorder or information, but certain care must be taken for this interpretation to have physical meaning (Jaynes, 1965). We wish to recognize that the direct application of thermodynamics to biology has many challenges, particularly in describing macro-molecular processes (Cannon, 2014; Cannon and Baker, 2017; Ouldridge, 2018), combining large systems of reactions (e.g., kinetic parameters may be required), and accounting for fluctuations from average behavior (Marsland and England, 2018).

A long-standing observation in biology, rooted in thermodynamic laws is that for cells to function, they must couple unfavorable reactions (ΔG > 0) with more favorable ones (ΔG < 0). Common examples of unfavorable processes are the synthesis of macromolecules, and the maintenance of membrane potential; which are coupled with the hydrolysis of ATP, and GTP providing more favorable ΔG (Nicholls, 2013). In turn, ATP, GTP, and monomers for macro-molecules are synthesized from nutrients through metabolism (Nicholls, 2013). For instance, the maximum free energy provided by ATP hydrolysis is related to the concentration of ATP, ADP, and phosphate.

| (3) |

Where, ΔG° is the standard free energy, and log, the natural logarithm. For generality, we will call hereafter “energy resources” the set of reactants that allow cells to maintain unfavorable reactions in the direction conducive to cellular functioning and survival. We wish to emphasize that balancing the internal production and consumption of metabolic resources by different reactions is critical, given that metabolic resources are finite and shared by many cellular processes. Thus, cells must manage their internal production and consumption of metabolic resources to stay alive and remain functional, which may be of special consequence to cellular activities with high energy demands, such as synaptic activity in neurons. Given that neurons are active most of the time, it is reasonable to expect that current and future disposal of energy resources is privileged, which may be reflected in the regulatory mechanisms responsible for synaptic plastic changes. In the following section, we will explain how current evidence regarding neuron plasticity appears to support a relatively simple rule: maintain the levels of energy disposal constant, by reducing the consumption of energy resources (e.g., reducing discharge rate, post-synaptic potential), or by increasing high-energy molecule production (e.g., mitochondria and interactions with glia).

Energy Management of Brain Neurons

Neurons are the paramount example of energy expenditure for their function and survival. This situation is reflected in their large metabolic rates and by the comparatively higher sensibility of brain tissues to oxygen and glucose deprivation (Ames, 2000). Reactions controlling the conversion of nutrients into available cytosolic levels of ATP are important to generate the potential metabolic work that is available to a neuron at any given time. During normal conditions, the primary energy substrate in the brain for neurons is blood-derived glucose; however, when at elevated levels in the blood, ketone bodies and lactate can be used as energy sources as well (Magistretti and Allaman, 2018). The glycolytic pathway is the first step to glucose processing, where two pyruvates and two ATPs are generated from one molecule of glucose. In addition, the pyruvate could either be reduced to lactate or enter the Krebs cycle to produce metabolic reducing intermediates that will generate nearly 29 additional ATP molecules per glucose (through oxidative phosphorylation in the mitochondria). Although neurons and astrocytes are capable of glucose uptake and performing both glycolysis and the Krebs cycle, accumulated evidence supports the hypothesis that neurons may “outsource” glycolytic activity to astrocytes under activity conditions (Weber and Barros, 2015). In addition, the central nervous system is provided with small glycogen reserves, which are predominantly present in astrocytes (Brown and Ransom, 2007), but also found in neurons (Saez et al., 2014). In any case, the lactate derived from glycogen break-down may also provide ATP to the neurons under ischemic or sustained electric activity conditions (Brown and Ransom, 2007).

ATP sources change dynamically with neuronal activity and several mechanisms account for this fine-tuning response. First, neuronal mitochondria are capable of raising ATP synthesis in response to increased synaptic stimuli (Jekabsons and Nicholls, 2004; Connolly et al., 2014; Rangaraju et al., 2014; Toloe et al., 2014; Lange et al., 2015). Although the molecular meditators for this activation are not completely elucidated, the increase of the respiratory rate of an isolated mitochondria correlates well with the ADP concentration (Brown, 1992), and neuronal mitochondrial function has been satisfactorily modeled considering the changes in ATP and ADP levels (Berndt et al., 2015). As an alternative mechanism, it has been reported that operating on milder stimulation conditions, the activity of Na-pump rapidly induces ATP synthesis of the mitochondria, in response to neuronal activity independent from changes in the adenosine nucleotides (Baeza-Lehnert et al., 2018). Second, neuronal activity is known to elicit local increases in blood flow (neurovascular coupling), glucose uptake, and oxygen consumption (Sokoloff, 2008). Coherently, glucose uptake and glycolytic rate of astrocytes are further increased in response to the activity of excitatory neurons, potentially as a consequence of the local rise of glutamate, ammonium (NH4), nitric oxide (NO), and importantly, K+ (Magistretti and Allaman, 2018). As such, an increased glycolytic rate on astrocytes leads to lactate accumulation that is shuttled into neurons which generate ATP through oxidative phosphorylation. Thus, in CNS neurons, different neuronal and non-neuronal ATP sources work “on demand,” depending on the local levels of synaptic activity.

What Is ATP Used for in Neurons?

Neurons are perhaps the largest eukaryotic cell in nature, their surface may be up to 10,000 times larger than an average cell (Horton and Ehlers, 2003). The large size of neurons supposes that structural processes, such as protein and lipid synthesis or the traffic of subcellular organelles, should be sustained by high levels of ATP synthesis. In addition to this fact, energy consumption during signaling is far more important. Indeed, it has been estimated that nearly 75% of the gray-matter energy budget is used during signaling; a number that is coherent with the decrease of energy consumption, observed under anesthesia, and is estimated to be around 20% of the total energy budget (Attwell and Laughlin, 2001; Harris et al., 2012).

Most of the neuron's energy budget during signaling is used to restore ion gradients across the plasma membrane, mediated by the action of different ATP-dependent pumps. For example, assuming an average firing rate of 4 Hz, a presynaptic neuron's ATP is mostly used for restoring the Na+ gradient due to action potentials, and to sustain the resting potential (22% and 20% of energy consumption, respectively). Meanwhile, at the post-synaptic neuron, ATP is primarily used to extrude ions participating in post-synaptic currents—about 50% of the energy consumption (Harris et al., 2012). More detailed descriptions of the neuron energy budget is provided in Figure 1B.

Neuron's ATP Availability Is Tightly Regulated

All cellular organizations require a minimum amount of ATP for survival. It is well-known that when ATP levels decrease below a certain threshold for different eukaryotic cells, apoptosis or necrosis is induced (Eguchi et al., 1997). Nevertheless, determining the maximum and minimum thresholds of a cell's ATP requirement for not only to survive but to realize a specialized function, is less apparent. In any case, this feature must be necessarily shaped by evolutionary adaptations of cells to their specific tissue environment. It is not completely clear how a neuron's ATP levels, during rest and upon activity, may impact its structure and function. Interestingly, by computational and mathematical modeling, it has been proposed that a compromise among energy consumption and information processing capacity has shaped the fundamental features of neuronal structure and physiology, including neuronal body size, ion channel density, and the size and frequency of synaptic inputs (Sengupta et al., 2013). For example, a larger neuronal body has a better capacity to discriminate and respond to different synaptic inputs (coding capacity), but at the cost of higher energy consumption. On the other hand, with a fixed size for the soma, the ion channel density required to obtain maximum energy efficiency is at a lower value than the density needed to maximize the coding capacity. Similarly, although small synaptic inputs at low frequencies are energetically more efficient, better coding capacity arises with larger inputs and rates. These energy constraints may have introduced important consequences during cellular evolution, such that neurons with similar shape and function may harbor similar metabolic features, even across different species.

Remarkably, it has been found that energy consumption of neurons, across the brains of varying species, is constant (Herculano-Houzel, 2011). This result supposes a critical restriction for the function of neuronal networks and their coding properties. For example, sparse coding i.e., brain computations that emerge from the increased firing rate of a few neurons during a task, has been proposed as a mechanistic solution to the limited energy availability for brain neurons (Attwell and Laughlin, 2001; Laughlin, 2001; Lennie, 2003; Weber and Barros, 2015). Thus, it is also possible that variables such as the ATP cytosolic concentration may have been finely tuned during evolution to allow for the emergence of fundamental properties, including some forms of synaptic plasticity.

Accumulating evidence supports that neurons, in time, harbor a narrow window of ATP cytosolic concentration availability [A(t)]. Despite not having dynamic measurements with absolute values of A(t), different experimental approaches on cultured neurons show that this variable tends to remain constant at resting conditions and after momentary synaptic challenges. Accordingly, 60 min of different sorts of glutamatergic stimulation leads to a nearly 5-fold decrease of A(t) (Marcaida et al., 1995, 1997), but when a brief glutamatergic or electric stimulation is applied, only a transient and reversible decrease on ATP levels occurs and the A(t) is subsequently restored to basal levels (Rangaraju et al., 2014; Lange et al., 2015).

Tight management of A(t) also operates on axonal compartments with important functional consequences. For instance, isolated axons from the optical nerve, under low glucose conditions, demonstrate a pronounced decay of ATP levels during high-frequency stimulation (50–100 Hz) (Trevisiol et al., 2017). Interestingly, compound action potentials (CAPs), generated by those stimulated axons, are reduced to the same extent and in high coincidence as the A(t), suggesting that electric activity depends on A(t) (Trevisiol et al., 2017). In addition, isolated axons exhibit a constant value for A(t), which immediately and steeply decays after the inhibition of glycolysis and oxidative phosphorylation, in concomitance with CAPs. However, when inhibitors are washed out, both A(t) and CAPs return to basal levels, further supporting that the system tends to reach a constant value for A(t). The tendency of the system to set a constant value for A(t) is also manifest in conditions where expenditures are highly reduced. For example, A(t) remains constant on pre-synaptic terminals of cultured hippocampal neurons, despite the inhibition of action potential firing due to incubation with the Na+ channel blocker Tetrodotoxin (TTX) (Rangaraju et al., 2014). Conversely, the same study showed that electrical stimulation of 10 Hz by 1 min, concomitantly evokes ATP synthesis on pre-synaptic terminals, restoring A(t) to basal levels (Rangaraju et al., 2014). From now on, we will call the basal value of A(t) as the homeostatic availability of ATP (AH).

Mechanisms accounting for the intrinsic control of AH in neurons are less explored than in other cells. In the short term, there is a direct and fast effect of ATP molecules and their hydrolysis products, such as AMP/ADP, over the activity of different metabolic enzymes and ion channels. Indeed, neurons are largely known for being extremely, even disproportionately, sensitive to decreases in ATP sources, leading to a fast and significant inhibition of electrical activity (Ames, 2000). For example, ATP-sensitive K+ channels open during decreased ATP levels, hyperpolarizing the neuron to reduce endocytosis and the opening of voltage-sensitive Na+ channels, thus preventing the ATP expenditure associated to both processes (Ben-Ari et al., 1990). On the other hand, it has been elegantly shown that action potential firing on pre-synaptic terminals' gate activity-driven ATP production is also required to allow proper synaptic transmission (Rangaraju et al., 2014). This close dependency of ATP levels to synaptic functioning has suggested that the affinity constant for ATP (e.g., Km) of different pre-synaptic enzymes, might be close to certain resting ATP levels (Rangaraju et al., 2014). It is tempting to speculate that the fine-tuning of the affinity constant from key enzymes might be a broader phenomenon in neurons. In addition, it is known that calcium entry, which is transiently modified by electrical activity, is capable of orchestrating changes in ATP production. For example, synaptic stimulation with brief NMDA pulses, not only lead to pronounced increases of cytosolic calcium levels, but also of the mitochondrial matrix, whose ATP producing enzymes are known to be stimulated by calcium increases (Tarasov et al., 2012; Lange et al., 2015). Indeed, transient increases of calcium levels are thought to be a sort of metabolic alarm which prepares cells to confront high energy demands by increasing ATP production by the mitochondria (Bhosale et al., 2015).

As a complementary mechanism, changes in the ATP and AMP ratio gate the activity of other metabolic sensors which, in turn, induce a specific signaling cascade for short and long-term adaptations of neuronal functions. For example, all known eukaryotic cells, including neurons, harbor energy sensors, such as AMP-activated protein kinase (AMPK), which tend to restore ATP concentration by decreasing anabolic and/or energy consuming processes, while increasing energy production through catabolism post-energy challenges (Potter et al., 2010; Hardie, 2011; Hardie et al., 2012). AMPK is a highly evolutionary-conserved serine/threonine kinase enzyme that is activated either by diminished cellular energy (high AMP/ATP ratio) and/or through increased calcium (Hardie et al., 2012). Recent evidence shows that in dorsal root ganglion neurons—which express the transient receptor potential ankyrin 1 (TRPA1) channel for thermal and pain transduction—the AMPK activation results in a fast, down-regulation of membrane-associated TRPA1 and its channel activity within minutes, which is consistent with lowering energy expenditure by diminishing post-synaptic currents (Wang et al., 2018). Furthermore, it has been demonstrated that calcium overload, induced by an excitotoxic NMDA stimulus on cultured cortical neurons, can be reduced by the activation of AMPK, which would save the energy involved in the reversal of a Ca++ potential (Anilkumar et al., 2013). Interestingly, the actions of the catalytic subunit of neuronal AMPK also includes the inhibition of axon outgrowth and dendritic arborization during neuronal development, for adapting to metabolic stress mediated by the suppression of Akt and mTOR signaling pathways (Ramamurthy et al., 2014). This result suggests that AMPK may also operate in mediating structural synaptic changes during the activity of mature neurons, contributing to control energy expenditures in the long-term. Furthermore, it has been shown that the maintenance of long-term potentiation (LTP), which is energetically demanding, is dampened when AMPK activity is pharmacologically activated (mimicking a low ATP/AMP ratio), or conversely, LTP could be rescued when an ATP mimetic, ara-A, was added during an energy challenge. Thus, under low energy conditions, neuronal AMPK tends to inhibit changes on ionic gradients and reduce changes on cytoarchitecture, which can upregulate the value of A(t), impacting plastic capacity as well.

Summarizing, each neuron has a certain amount of ATP available to them, which is constantly consumed by their different functions which can mostly be explained using ion gradient changes on axons and dendrites. At the same time, ATP production will compensate the ATP expenditure reaching an AH that should remain constant until another specific synaptic challenge arrives (Figure 2). In the next section, we will discuss the potential functional consequences of these adaptations in special cases of neuronal plasticity.

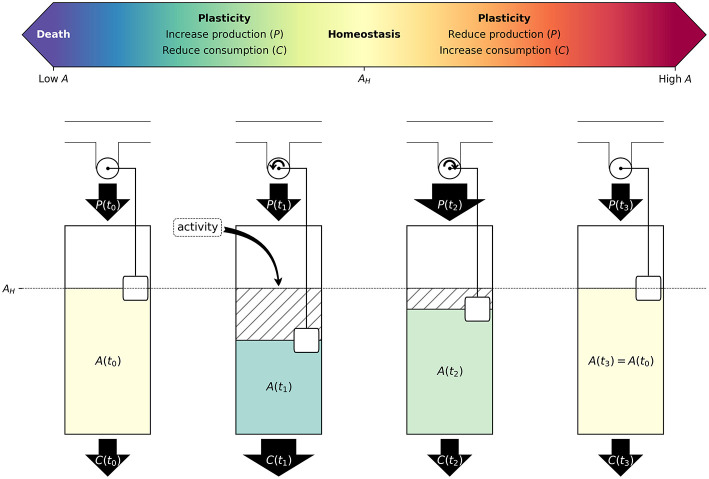

Figure 2.

Neuron activity induces changes in metabolism and synaptic activity to maintain homeostatic energy levels: Schematic illustration of neuronal response to an increase in activity. Above, in scale of colors, ATP concentration indicating from low to high A, with AH at the center. Below, step 1 depicts a resting state with A(t0) = AH. In time step 2, the increase in activity would result in additional ATP consumption, and therefore reduce ATP concentration. We propose that neurons would respond to this perturbation by decreasing ATP consumption [C(t)] and increasing its ATP production [P(t)], as represented in time step 3. Finally, neurons would return to homeostatic levels of ATP (AH), which is illustrated in time step 4. During all these steps, A(t) is colored following the above ATP concentration color scale. Some factors that contribute to ATP consumption are as follows: synaptic post-excitatory currents (excitatory inputs), firing rate (excitatory outputs), ion channel density, size of soma, dendrite arborization and axonal length, neurotransmitter recycling and release, and cytoarchitecture adaptations. Whereas, factors contributing to ATP production are: glycolysis supported by glucose or glycogen breakdown, oxidative phosphorylation supported by neuronal pyruvate, astrocyte-derived lactate, and ketone bodies. Neurons possess regulatory mechanisms that sense current energy levels (here represented by a rotating wheel with a floating balloon), and control production and consumption, to maintain homeostasis. Examples of these control mechanisms would include ATP-Sensitive K+ channels, and AMPK signaling.

Revisiting Neuronal Plasticity Under the Perspective of Energy Constraints

A narrow window of ATP cytosolic concentration across time supports a bottom-up view of neuronal energy constraints, which may explain some well-described plastic adaptations from the literature. Measurements of glucose and oxygen consumption (reflecting energy consumption) have not distinguished between the contribution from glial and neuronal metabolism and the total energy expenditure attributed to one neuron (Hyder et al., 2013). Nonetheless, neurons would keep energy availability during the increment of energy demands, which include action potentials, potential propagation or dendritic depolarization, by dynamically sharing expenses with astrocytes glial cells (Hyder et al., 2006; Barros, 2013). It is worth mentioning that energy management is partly performed by these latter cells (Magistretti, 2006; Magistretti and Allaman, 2015). Indeed, we must consider that ATP neural production is provided by the local pyruvate and glial lactate. Where a theoretical model aimed to explain brain energy availability from rat and human brains, it indirectly suggested that glial and neuron lactate sources may dynamically vary across different species and activity levels, with the condition of maintaining a rather constant energy production (Hyder et al., 2013).

We will follow a very simplified view of ATP metabolism characterized by two collections of processes: Those that produce ATP (e.g., from local pyruvate and glial lactate), and those that consume ATP (e.g., recovery of ion gradients, structural and functional synapse maintenance). We can formalize the effect of these processes on ATP concentration (A) by a simply differential equation:

| (4) |

Where, P(t, A, …) is a function that represents the sum of all reaction rates that produce ATP (e.g., anaerobic and aerobic metabolism), whereas C(t, A, …) is the sum of all reaction rates that consume ATP (e.g., membrane repolarization, structural and functional synapse maintenance). Both production (P) and consumption (C) rates are dynamic (they depend on time), but, more importantly, they depend on the levels of ATP available (A). Homeostasis will be achieved when production and consumption rates are equal, and the concentration of ATP is constant in time. We will represent the homeostatic concentration of ATP by AH.

We can interpret the observations of relatively constant ATP concentrations in neurons, as reflecting the action of feedbacks that adjust ATP production (P) and consumption (C) rates, compensating deviations of ATP (A), such that neurons return to homeostatic ATP levels (AH). We can expect that in case cells have an excess of ATP, they would respond by decreasing production or/and increasing consumption; and analogously, in case ATP levels are reduced, cells would respond by increasing production or/and decreasing consumption. We will call this regulation the neuron “energy management,” and summarize it mathematically using these equations:

| (5) |

Meaning that the differences between A with AH determines whether ATP production (P) and consumption (C) processes increase, decrease, or maintain their rates over time. Note that we also consider the possibility that neurons may respond to energy challenges by adjusting production and consumption, but it must be at least one of those variables.

It is critical to notice that this formalization makes some important simplifications. First, we understand that in addition to ATP, the concentrations of ADP, AMP, and other energy resources do determine homeostasis and influence neuronal changes. ATP is a reasonable departure point, given its prevalence in metabolism and the evidence supporting its role in synaptic plasticity, and therefore, will be the main example of energy resource exploit in our argument. An additional reasonable assumption is that the magnitude of the change in reaction rates should correlate with the magnitude of the distance to homeostasis, which we have omitted from the equations but will become relevant later in our argument for proposing experiments. We expect to expand toward a more detailed formalism in future work. Despite its simplicity, we think that our model can help to understand several previous studies and propose some experiments aimed at empirically evaluating the relation between energy resource availability and neural plasticity. We expect that simple phenomenological models, such as ours, will encourage both theoretical and experimental efforts, provided they can be readily falsified empirically, and be compared to theoretical derivations from biochemical first principles.

The tendency to set ATP at AH might be compatible with homeostatic plastic changes that return a neuronal network to a basal firing rate, after prolonged periods of increased or decreased synaptic activity (homeostatic synaptic plasticity). Accordingly, it has been theoretically proposed that the excitability threshold of neurons might be a direct function of ATP (Huang et al., 2007). For example, the KATP channel-opener diazoxide decreases bursting and regular firing activity of the immature entorhinal cortex neurons (Lemak et al., 2014), which is coherent with a tight association of firing rates with contingent ATP concentration. Also, theoretically, neuronal circuits governed by purely Hebbian-plasticity rules are predicted to converge on instability, or to the opposite—total inactivity (Miller and MacKay, 1994). One possible solution to enable neuronal circuits to remain responsive is to limit the amount of synaptic strength per neuron. At least on excitatory synapses, this problem has shown to be solved by another form of synaptic plasticity termed “homeostatic synaptic plasticity,” and more specifically, “synaptic scaling” (Turrigiano et al., 1998; Turrigiano, 2012). Synaptic scaling emerges to counteract the effects of long periods of increased or decreased synaptic activity in a multiplicative manner, thus allowing neurons to continuously reset the weight of their synaptic inputs to remain responsive to new environmental and cellular contexts. In the long term, the consequence of this regulation is that the firing rate of cortical cells in culture is sustained to an average set point (Turrigiano, 2012). As far as we know, no attempt has been made to relate or prove the influence of neuronal energy load or A(t) on this phenomenon.

Simple experiments on synaptic scaling could be performed to examine whether the tendency to reach AH has a predictive value on the synaptic activity of neuronal networks. As shown in the seminal experiments of Turrigiano's group, when a GABAergic inhibitor bicuculline (Bic) is acutely added to cultured neurons, it produces a significant increase in average firing rate. However, during 48 h of stimulation, firing rates return to control values. On the other hand, neuronal firing rates can be completely abolished soon after adding either tetrodotoxin (TTX) or 6-cyano-7-nitroquinoxaline-2,3-dione (CNQX). Nevertheless, during the 48 h of incubation, activity levels also return to a basal value (Turrigiano et al., 1998). The observed adaptive changes that operate in the long-term makes this experiment ideal for manipulating energy parameters.

Similar to the experiment of synaptic scaling performed by Turrigiano's group, in our theoretical experiment, cultured neurons would be submitted to 48 h of synaptic activity stimulation with Bic. During the stimulus, A(t) will transiently decrease, inducing plastic changes on the network that will return ATP concentration to AH, in a given time period (t1) (Figure 3A). In all conditions, P(t) and C(t) change accordingly with A(t), following Equations 4 and 5. However, if, during the stimulus, the neurons were pharmacologically modified to partially decrease ATP production [e.g., by blocking oxidative phosphorylation with sodium azide], expenditures C(t) are expected to be rapidly lowered and the time window required to return to the AH value will be shortened (Figure 3B). Conversely, one could “enlarge” the theoretical value of AH on cultured neurons by adding an ATP mimetic, such as ara-A. Here we assume that ara-A would cause inhibition of AMPK signaling, and that concentrations employed are low enough not to disturb the ATP synthesis. Thus, we propose that the neurons will take more time to return to AH (Figure 3C). Under these three conditions, the firing rate of neurons should also be adapted to the same level as in the initial state, before stimulation, as well as ATP concentrations should return to the homeostatic value AH.

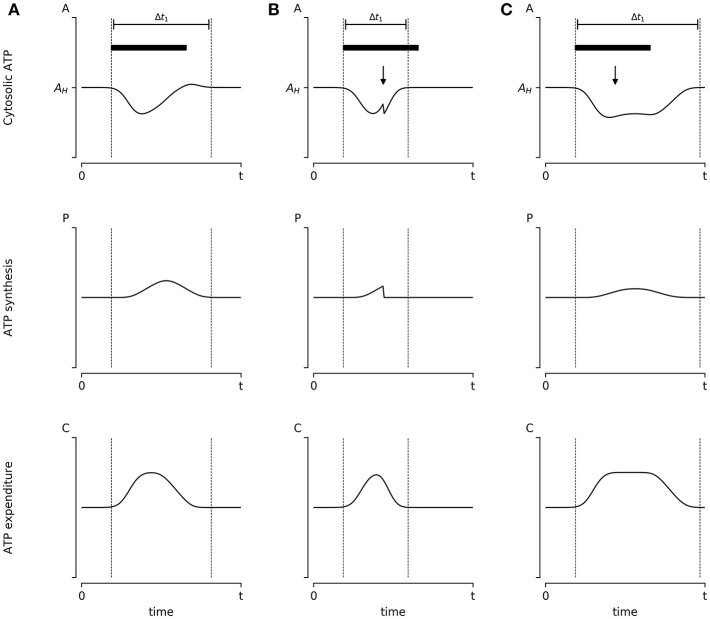

Figure 3.

Hypothetical experiment to evaluate the influence of energy availability on synaptic scaling: The influence of energy availability in synaptic plasticity could be evaluated empirically. Here we propose a simple hypothetical scenario and explain what outcomes we predict under each condition. (A) Cultured neurons are stimulated with bicuculine (Bic) for 48 h (denoted by a black bar), which presumably induces transient changes in ATP concentration [A(t)]. Neurons respond by increasing ATP production [P(t)] and reducing ATP consumption [C(t)] by reducing its firing rate, which leads to the reestablishment of homeostatic ATP concentration within the time window enclosed by dotted lines. (B) During stimulation, cultured neurons can be pharmacologically treated to partially inhibit oxidative phosphorylation (i.e., reducing ATP synthesis) denoted by a black arrow. Following the Energy Homeostasis Principle, we propose this will result in a further reduction of ATP concentration, which will induce an accelerated reduction in ATP consumption through the reduction of synapse firing rates. Thus, we propose that in this scenario the time window required to return to homeostasis is shortened. (C) Almost an identical protocol to (B) is applied to neurons; however, using an ATP mimetic molecule (denoted by the black arrow). We assume that ATP mimetic molecules would delay the reduction of synapsis firing rate by allosterically inhibiting AMPK, resulting in an enlarged period of energy consumption. Thus, we propose a wider time window before reaching AH. All graphics follow Equations 4 and 5, with the additional assumption that the magnitude of the adjustment of P(t) and C(t) are proportional to the distance of ATP levels A(t) to homeostatic levels AH. Results from these kinds of experiments could advance the understanding (and potentially manipulate) of the mechanisms responsible for neural adaptations, uncovering the relevant role of metabolic elements, such as metabolic sensors and/or nutrient availability.

From Molecules to Behavioral Homeostasis

In the previous sections, we have discussed how the energy homeostasis can affect synaptic plasticity in one neuron. Subsequently, this plasticity can impact other neurons that will trigger the same control systems to keep their AH. Since energy demands are transferred through synapses, and synapses appear or disappear according to energy demands, a network homeostasis comes into play. In this section, we argue that energy constraints scale up a level of organization and how homeostasis in one level is affected by homeostasis in the others.

From Neurons to a Neural Network

The first level is single neuron homeostasis, which is the balance between C(t) and P(t) in single neurons. Importantly, as far as an action potential producing a post-synaptic potential goes, it necessarily imposes an increment in C(t) for the post-synaptic neuron. As such, neurons manage their energy needs which also present an external demand from pre-synaptic neurons, and also imposes an energy demand over the post-synaptic neurons. The fact that a local increase in the C(t) can produce a change in post-synaptic neuron's C(t) supports that energy management is also a neural population property, which we will name network homeostasis. The single neuron homeostasis is closely related to the network homeostasis through a two-way directional interaction, where the network structure imposes constraints on the range of possible homeostatic states that a neuron can achieve, which will, in turn, pose stress on the network through interactions with neighboring neurons. In the same way, these neurons will respond by modifying their synaptic weights (also known as network connectivity), the number and the location of their synapses, thus changing the functionality of the neural network structure (maybe even micro-anatomically). In any condition that causes an imbalance between C(t) and P(t), the neurons will tend to change. Since neurons activate each other through synapses, this means that the activity of the pre-synaptic neurons will induce metabolic work in the post-synaptic ones. In turn, a post-synaptic neuron will modulate its synaptic weight to couple the input from the pre-synaptic neuron to its own metabolic needs. This process will continue recursively until the neurons balance their C(t) and P(t), in which case the network would have reached homeostasis. Essentially, network homeostasis is driven by the needs of each neuron, as each of them will change in an attempt to reach their own AH. Note that it is not necessary that every neuron should reach its own AH, as the connectivity of activity within the network may not allow them to improve further. However, every single neuron must have enough P(t) to devote toward maintenance processes required to stay alive. As such, network homeostasis becomes a neural population property.

Network homeostasis is tightly related to single neuron homeostasis; therefore, neural network homeostasis will be only achieved when several of the neurons that compose it individually can maintain themselves within homeostatic ranges (e.g., achieving AH). It is known that synaptic and dendrite pruning are a part of healthy development (Huttenlocher, 1990; Riccomagno and Kolodkin, 2015), which we could interpret as adjustments required to couple with the trade-off between maintaining the structure vs. the energy spent in action and post-synaptic potentials. In worse cases where suboptimal conditions are imposed on a single neuron by the neural network homeostasis, we expect to find neuron death. This phenomenon is documented as a part of normal brain development in some species (Huttenlocher, 1990), and also in pathological conditions (Perry et al., 1991; Kostrzewa and Segura-Aguilar, 2003; Pino et al., 2014).

From Neural Networks to Behavior

Behavior can be broadly described as the set of actions performed by an organism, or anything that an organism does that involves movement and response to stimulation. These actions are adaptive when they increase the survival and reproduction probability. In a top-down interpretation of behavior, these actions are the result of the activation of the neuronal circuit that developed evolutionary to fulfill a need. Nonetheless, according to the Energy Homeostasis Principle, at the neural circuitry level, the actions performed by an organism are out of spatial and temporal context, since all the cells experiences are perturbations of the network activity. For a given neuron, the activity dynamics is dependent on the cumulative synaptic currents, regardless of the type of pre-synaptic cells that evoked them, or in the case of sensory receptors, the type of energy that is transduced. Similarly, it makes no difference for a given neuron to have neuron-neuron or neuro-muscular/endocrine synapses. Conversely, we can reinterpret behavior as the observed consequence of the homeostatic activity of an extended neural network (brain) which interacts with the environment. Sensory input and motor outputs can thus be viewed as “environmental synapsis.” Under this framework, what we call behavior may be not necessarily be different from the range of actions neurons engage in any circuit.

However, the interaction with the environment has an important difference that will impact the energy balance in the neuronal network. We can operationalize behavior in a neural system as a set of inputs and outputs that occur in a closed-loop manner. For instance, when we move our eyes, the brain is generating output activity, which in turn modifies the subsequent input to the retina. These dynamics occur for all sensory systems, where motor acts modify sensory inputs (Ahissar and Assa, 2016). In this process, for each brain action, we should expect changes to occur in some sensory inputs. In other words, behavior can be seen as one of the ways in which the brain stimulates itself.

In principle, this closed-loop scheme would enable the brain to completely predict the sensory consequence of the motor actions. This processes of active inference is in line with previous proposals such as Friston's free energy principle and predictive coding (Friston, 2010; Schroeder et al., 2010). It is crucial to note that Friston's Free Energy Principle used an informational approach where aspects such as temperature do not refer to the absolute temperature measured in an experimental setting. As such, the Energy Homeostasis Principle does not conceptually overlap with Friston's proposal; they can be considered as complementary. From a bottom-up view, Friston's proposal answers the epiphenomena, which can be related to information processing, rather omitting the underlying physiological constraints. However, in any of these proposals, there is an agreement that the brain is capable of predicting sensory input, and that it seems to reduce uncertainty as far as possible. In the case of Friston's proposal, it refers to the reduction of informational uncertainty, while in the Energy Homeostasis Principle, it refers to the reduction of energy sensorial input uncertainty.

Parsimoniously, the brain cannot fully predict the sensory inputs that occur after every motor act, because changes that are independent of the action of the organism also occur in the environment, and these changes may be critical to its survival. According to the Energy Homeostasis Principle, we should expect that neural networks will operate in a way that will favor the behavioral input activities within homeostatic energy ranges. If a given input is energetically too demanding, we should expect a change in behavior. If a given set of motor activity consistently produces an energy stressor input, it will cause synaptic changes in the brain, as the energy balance processes are spread over the neuronal network.

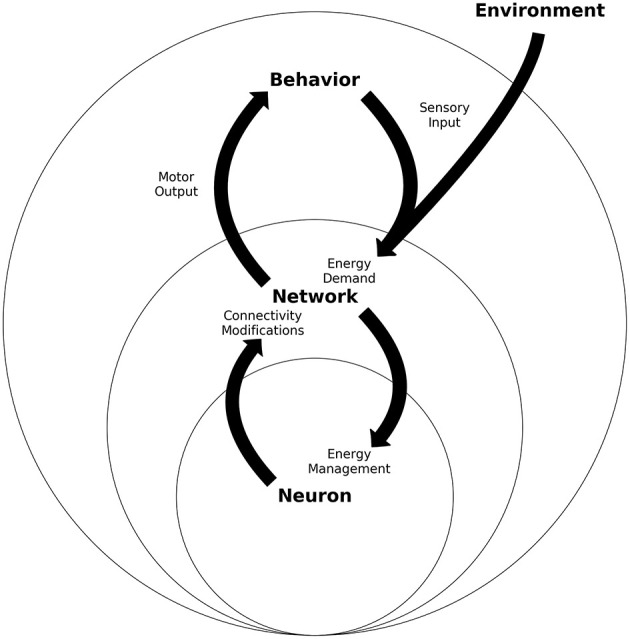

Sensory input represents the major energy challenge in the brain, while the motor output is the only way a neural network can modify this input. This way, the neuron in the network has the chance to regulate its C(t), given the pressure representing sensory input. Neural networks will restrict the palette of behaviors that can be observed, while behavior will impose energy demands that the neural network will couple with by modifying behavior. For these reasons, behavior can also be considered as a phenomenon which affects the energy homeostasis in a two-way direction. Thus, an at least three-level nested system can be depicted, where each level will have a two-way interaction with each other (see Figure 4).

Figure 4.

Energy homeostasis: An integrated view of neurons, networks and behavior schematic of the three nested levels of the energy homeostasis system. Each level represents one unit and its proximal operations. Neuron refers to one neuron which must manage its energy consumption, which will trigger neuron-plastic changes. Many of these neurons will build a network, which has connectivity properties and population energy demands. Many networks working together will deploy behavior through motor actions, while also receive the sensory input. All levels present a two-way energy interaction between behavior, neural networks, and neurons. The figure intends to present how sensory input can be considered an energy demand at network and neuron levels, while motor output through behavior gives room to control part of the sensory input.

According to the Energy Homeostasis Principle, a key aspect to explaining adaptive behavior must reside within the brain's macro-structure and evolutionary mechanisms. Given a certain palette of sensory specializations, set of effectors, and brain structures, it will impose the range of possible energy disposal attractors that can emerge. For instance, the number of sensory cells, and their sensitivity to stimuli will determine the energy input imposed on neural tissue. The effectors given will determine the space in which a neural network must control that input. The macrostructure of the brain will then impose general restrictions on the neural network homeostasis. For instance, the visual cortex mainly receives visual input, and the communication through brain regions is mainly achieved using major tracts. As such, the series of C(t), imposed by one neuron on another, must follow the paths allowed by the macrostructure. This means, that neural network homeostasis will not only be a function of energy input provided by the sensory cells, and the chances to control it using effectors, but also of macro anatomical restrictions produced by genetic mechanisms (Gilbert, 2000a,b).

Evolutive pressures must act over all traits—genetic, physiological, and behavioral—of the organism (Darwin, 2003). As such, evolutive pressures have selected a sensory and effectors pallet, as well as a brain macrostructure. We conjecture that from the set of behaviors that satisfy the energy constrictions, behaviors that statistically improve the chances of surviving will be selected. We propose that the macro-anatomical structures impose a certain system of dynamic energy management among neural networks, which force the emergence of a certain set of energy attractors producing, in turn, a specific set of behaviors. It is important to consider that in animals that display a large set of behaviors, probably what is selected is the ability to learn. This concretely would mean that the selected is not a specific behavior, rather the flexibility with which an organism must adapt behaviorally during its own life.

Given this bottom-up view, we conclude the existence of behaviors strictly required for survival, and others which might present adaptive advantages given the specific context of the organism. In human primates, for example, there is a vast set of behaviors that are not strictly for the survival of the single individual in any context, yet they exist, such as leisure activities, those related to the production of art in its multiple forms, and even pathological behaviors which might directly impact the individual's health or survival. As far as these non-strictly adaptive behaviors do not impact the organism's life, they might be highly adaptive in certain contexts.

In any case, evolutionary mechanisms will shape the nervous system's macrostructure and behavior so that both are aligned in a way where solving the energy constraints of a single neuron and the neural network will lead to survival. If not, that macrostructure is expected to be lost, as those organisms will die. In fact, there is no need that all these levels work in alignment per se, rather they must only be aligned to survive. Evolutive pressures will remove the organisms where the three level systems present goals that don't benefit each other. Since we can only see the animals that present all three level goals aligned, we have historically thought that neurons, neural networks, and organisms share the same goal. We proposed here that evolution shaped organisms, so when a neuron solves their needs, the behavior emerges as an epiphenomenon, which enables the organism to solve its needs, hence surviving.

Perspectives of Reinterpretation

In this section, we aim to contrast our proposal with evidence and highlight the corollary aspects which can open new avenues of research. Concretely, we will evaluate if we can reassess evidence, considering the Energy Homeostasis Principle. We think that this proposal is parsimonious as in spirit the rule is simple, what makes it complex is the wide range of interactions and properties that can emerge from the neural interaction constraint imposed by this rule. We believe that our proposal captures the essence of the concept of Braitenberg's vehicles (Braitenberg, 1986)1 and provides a plausible solution to the dynamics elaborated there. Naturally, Energy Homeostasis Principle still has some limitations. It is unclear how we can scale up this principle in networks as large as the brain. The metabolic mechanisms in neurons are quite complex, and still we need more empirical information to tune the mathematical modeling. We decided to use ATP as an energetic proxy, but many other molecules are used by neurons as energetic resources, and may present a dual signaling-resource condition activating control systems. We have mention abundant literature that shows an association between energy related variables and neural activity. However, we have not presented direct evidence of how energy constraints shape plasticity and neural network properties. Despite these limitations, the Energy Homeostasis Principle can be tested empirically by associating plasticity markers with energy availability, production, and consumption as mentioned in section “Revisiting neuronal plasticity under the perspective of energy constraints.” More importantly, this proposal serves new empiric avenues to study the working of the brain. For instance, plasticity has always been thought to be the changes required to fix a given behavior. However, according to our proposal, plasticity is a process that takes place not only during learning but continuously, as a core component of the constant deployment of behavioral changes. As such, plasticity might not only be a determinant of behavior acquisition but a key aspect of ongoing behavior. In the following subsections, we will briefly discuss different strategies which can be used to extend further from Equation (5), an example of evidence interpreted considering the Energy Homeostasis Principle, and then discuss other theoretical and empirical avenues which can be reinterpreted based on this paradigm.

Modeling Strategies to Implement Energy Homeostasis Principle

We did not extend our mathematical definitions beyond (Equations 4 and 5) as we aim to set a theoretical ground fertile for different modeling strategies. Equations (4) and (5) describe a quite simple idea that neurons take resources to couple with their energetic demands, and that these two must balance each other in order for the neuron to survive. However, the specific strategies used to operationalize the terms within (Equations 4 and 5) was purposefully left open to avoid constrains into specific modeling paradigms. Equations (1–3) were included to better formalize the problem at a metabolic level. These equations are relevant to build the theoretical argument, however, we would not consider them necessary for modeling, at least in a first approach.

In general terms, Energy Homeostasis Principle requires a dynamic modeling, and a topographic or structural component ideally framed from bottom-up. There is already an example that fits with these requirements (Yuan et al., 2018). In this work, they used the ratio between the energy consumed in synaptic transmission and the total metabolic energy consumed in synaptic transmission and dendritic integration over time. This ratio is used as a third component of Hebbian synaptic plasticity, allowing it to change synaptic weighs according to this energetic ratio and pre-synaptic activity. This is a nice example of how to include energetics constraints in neural activity modeling. Under the Energy Homeostasis principle view, the ratio does not make sense in terms of metabolism and neuron needs, because it only address energy consumption, without considering the impacts in productions and availability. Therefore, ignoring the restrictions in energy consumption derived from production and availability. This consumption ratio make sense under a top-down view supported in an information codification logic. Therefore, we suggest to define that ratio according to Equation (4), including consumption, availability, and production following the control mechanisms here presented.

Besides this particular model, graph theory could represent a starting point to define the structure of a dynamic network, in which nodes properties can be updated in a temporal fashion. Graph theory is already used to recall the structural properties of brain networks (Feng et al., 2007; De Vico Fallani et al., 2014), therefore, without a doubt it will be suitable representation which can be extended to consider the energetic management. Moreover, graph theory contains a vast amount of metrics to characterize networks (Costa et al., 2007), and more importantly, could allow to contrast those metrics against real data (Demirtaş and Deco, 2018; Klinger, 2018).

Strategies such as Free Energy Principle (Friston, 2010), or those that profit of predictive coding conceptions (Spratling, 2008; Schroeder et al., 2010; Huang and Rao, 2011), can also serve as a basis for energy homeostatic modeling. However, we suggest to use energy consumption instead of neural activity as predictor. In this case reducing surprise would be analogous to reduce the chances of a neuron to be driven out of energy homeostasis. Energy availability and production ideas are more complex to include. In general terms, and based on the concepts exposed in the previous sections, energy consumption is constrained to energy availability and production. As such, to adapt a predictive coding paradigm requires to include energetic restrictions, which must take into account the rate and amount of energy or activity equivalents that can be managed by the neurons within physiological ranges.

Many other strategies can be used. The above mentioned are often used in neuroscience, however, any modeling strategy that suits the temporal dynamic of energy management, and its topographical bottom-up properties, should be able to capture the essence of the Energy Homeostasis Principle.

Hybrots: An Analysis Using the Energy Homeostasis Principle

Let us discuss the energy principle proposed here in the context of a simple, in vivo network model. Empirically, one critical aspect of relating energy management with behavior is the major challenge of controlling sensory inputs. Most of experimental, in vivo animal models are not only sensible to acoustic, visual, physical, and chemical stimuli of the environment, but also to proprioceptive inputs, such as muscle contraction, tendon tension, blood acidification, hormone levels, among others. Strictly speaking, there is no way to properly control the sensory input of an animal in vivo, and the behavioral in vitro protocol seems to be unreal. Nonetheless, there are some protocols that can be considered as initial efforts of trying to build in vitro behavioral protocols. Specifically, some reports demonstrate that if we connect a neuronal culture of dissociated cortical or hippocampal neurons to an external device, coherent behavior can be obtained (Novellino et al., 2007; Tessadori et al., 2013).

Concretely, a system decodes the firing rate of the neurons in the culture and generates an output which is used to control two wheels of a vehicle. The vehicle has distance sensors. The sensor activity is coded to electrical pulses delivered back to the culture. The stimulation frequency is a function of the distance to an obstacle in front of the sensors. If the vehicle gets closer to an obstacle, then the stimulation frequency increases. If the vehicle crashes into an obstacle, a stimulation (20 Hz for 2 s) is delivered, which is previously known to trigger plasticity (Jimbo et al., 1999; Tateno and Jimbo, 1999; Madhavan et al., 2007; Chiappalone et al., 2008; le Feber et al., 2010). Leaving the vehicle in a circular maze with several obstacles under the operation of this protocol will cause it to “learn” to navigate, while avoiding impacts with obstacles (Tessadori et al., 2012). This model constitutes a protocol that enables studying the molecular, electrophysiological, and behavioral properties of neural processing simultaneously; above all, it allows the full control of the sensory input that this network will have.

Is this learning-like phenomenon compatible with the Energy Homeostasis Principle? When a single neuron is submitted to constant stimulation, we expect to have a 1–1 stimulation-action potential response. However, at a frequency stimulation as low as 10 Hz, the neurons will decay over time until they are unresponsive, or their response is importantly delayed (Gal et al., 2010). If interpreted through the Energy Homeostasis Principle we can hypothesize the following mechanism. First, we can postulate that at a frequency of 10 Hz or higher, stimulations become energetically stressful. As a response, neurons will respond with modifications in their synaptic weights in the short term, and with changes on their cytoarchitecture in the long term. Both processes will result in changes to the network structure. Each time the vehicle crashes, a stressful 20 Hz pulse will be delivered inducing plasticity. Functional restructuration is expected at each impact; leading to a random walk through different neural functional configurations, where each neuron will jump from state to state to minimize energy stress (see Figure 5). It is expected that those network configurations that decrease the effects of the sensory input will reduce energy stress due to impacting obstacles. But the best network configuration to the energy stress is indeed to avoid it. Eventually, a network configuration will arise which will prevent the vehicle from crashing. Since no energy stress will be delivered as a sensory input with this configuration, this structure will seemingly stabilize on a configuration of homeostatic energy expenditure (Figure 5). We are aware that the above interpretation may oversimplify the actual mechanisms followed by the neurons. Neuronal changes are most likely not completely random and more complex regulations may be taking place. However, we want to point out that they can be sufficient to explain the phenomenology of the observations. As such, energy management, as a local rule, will impact the neural network structure as an emergent property, where, in turn, it will impact behavior. Critically, in this example, we have focused on sensorial input as an increment of neural activity. This might not always be the case (such as under sensorial isolation). Despite that, under this specific scenario, we propose that networks will minimize energy consumption; the goal is to arrive to AH, not to the minimum possible energy expenditure. Therefore, if the sensorial input would move A(t) below AH, we would expect network modifications to increase expenses. In any case, the obtained behaviors must be at least compatible with the dynamic constraints imposed by C(t), despite it being too high or low. In this example, behavior emerged to satisfy the energy needs of the neuron by means of C(t). Finally, from all the vehicle movements, only a few, like avoiding the obstacle, might be interpreted as purposeful from an observer's point of view, the remaining ones may be considered a random trajectory. Importantly, this attribute is provided by the observer, as the neurons would only be managing their energetic demands. More research is required to evaluate what is happening with behavior, when the obstacles are out of the sensor's range along with the learning curve of the vehicle. Nonetheless, the Energy Homeostasis Principle allowed us to propose this hypothesis (Figure 5C), and it can be empirically addressed. Naturally, using the same experimental approach, we can evaluate how plasticity is affected by energetic demands induced electrically or by altering neurotransmitter concentrations. We can use the vehicle's behavior, or we can use the Graph Theory index already used to characterize networks (Costa et al., 2007) to associate neural network properties with energetic demands and metabolic activity.

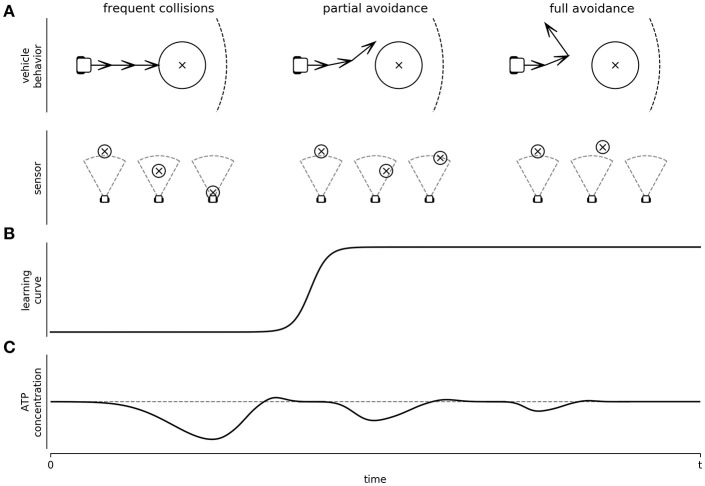

Figure 5.

Depiction of Braitenberg's vehicle behavior as a controlled platform to study learning and network energy adaptation: In this figure we hypothesize, based on the Energy Homeostasis Principle, how a hybrot would learn. (A) Different behaviors observed at different learning levels of the Braitenberg's vehicle (top panel), and the corresponding sensory input (bottom panel) from an obstacle (circle centered to x symbol) is detected by a sensor (dashed area), while the vehicle explores the environment. (B) Learning curve of the vehicle when learning to avoid the obstacles, passing from frequent collisions to full avoidance behavior. (C) Network energy adaptation triggered by the sensory input while minimizing the energy stress.

The Neuron Doctrine and the Energy Homeostasis Principle

Historically, the primary efforts to connect neuron activity with neural network dynamics and behavior was first proposed in 1888 (Barlow, 1972; Bock, 2013), which is referred to as “The Neuron Doctrine,” maintained and developed to this day (Dehaene, 2003; Moser and Moser, 2015; Sheppard, 2016). In general terms, this theoretical proposal tries, in dual form, to solve the information coding and processing problem and has been supported by intracranial recordings, where abundant examples can be found (Lettvin et al., 1959; Fairhall, 2014; Moser and Moser, 2015). Specifically, neurons are expected to code for specific properties of the environment, where its activity is associated with the detection of specific stimuli. For instance, neurons in the primary visual cortex of mammals are selectively sensible to oriented bars (Hubel and Wiesel, 1962; Taylor, 1978), while in the Lateral Geniculate there is evidence supporting the existence of circular receptive fields representing portions of visual space (Reid and Shapley, 1992). In these cases, neurons have receptive fields, which can be interpreted as a specific topologic relation of V1 with a certain retinal region; and therefore, with the image. Receptive fields with the same selectivity feature can also be found in the tactile, and auditory primary cortex, evidence of which is often interpreted as environmental stimuli being coded as a map in the brain (Penfield and Boldrey, 1937; Penfield, 1965; Ruth Clemo and Stein, 1982). This classic evidence is also theoretically line up with the recent hippocampus where neurons (Moser and Moser, 2015).

Critically, most of the evidence supporting the neuron doctrine is associated to the neuron discharge rate. Since this discharge rate is a part of the C(t), it necessarily means that most of the evidence supporting the neuron doctrine supports the Energy Homeostasis Principle as well. For this reason, it is plausible to consider that most of the neuron doctrine evidence is also evidence indicating how energy expenses of one neuron can be directly associated with behavior. Furthermore, high discharge rates, as mentioned above, are expected to trigger plasticity mechanisms. Also, only a low percentage of neurons present high discharge rates (Olshausen and Field, 2005), which should be expected under the Energy Homeostasis Principle scope. Moreover, due to the fact that high discharge rates might trigger changes in functional connectivity (synaptic weights), it should not be surprising that when presenting more complex visual scenes, classic receptive fields are no longer detectable (Fairhall, 2014). We may consider that classic stimulation visual protocols impose an energy input, reflected in the high discharge rate, which needs to be managed. In contrast, visual scenes are regularly experienced, therefore already managed energy, and the firing pattern are considerably lower. As such, we think that the neuron doctrine is not necessarily wrong, rather it has not focused on how the discharge rate is a proxy of energy demands imposed on neurons, which in turn affects their homeostasis. Also, that plasticity might have a functional role in ongoing behavior rather than only stabilizing learned behaviors.

The neural doctrine paradigm has been closely related to information coding paradigms. The coding paradigms follow the same logic as the genetic code; the idea that information is universally coded using the same dictionary or codebook. In the case of genetics, what we call the genetic code, is an arrangement of how the sequence of nucleic acids informs specific amino acid sequences when assembling proteins. In the case of a neural code, the assumption is that environmental stimuli are translated into brain activity, which is then translated into motor output. More specifically, it is possible to map specific neuron activities to specific properties of the environment. For instance, the intensity of the stimulation can be mapped to the discharge rate of the sensory neurons (Gardner and Johnson, 2013). The transduction of the stimuli is usually non-linear and sensitive to differences with previous stimulation rather than the raw value of the stimuli—Weber's law (Gardner and Johnson, 2013). This adaptation law has a direct interpretation in the context of energy expenditure by neurons, as neurons coding raw stimuli would demand a greater energy supply. Weber's law has also been extended to complex cognitive functions, such as quantity estimations (Dehaene, 2003)—where discharge rates are used as the code of quantities for specific neurons—suggesting that energy saving may be a strategy widely used by neurons.

Of course, the discharge rate is far from being the only neural code proposed. Coupled with the complexity of sensory activity, temporal coding was proposed, where the exact temporal relationship between each neuron spike would be the key to understanding how environmental information is translated into brain activity (Connor and Johnson, 1992; Friston, 1997). Temporal coding is implicitly related to energy demands, as the time between action potentials trigger plasticity mechanisms, associated with one of the most expensive items of the neuron physiology—post-synaptic potential and plastic mechanisms (Attwell and Laughlin, 2001). Another strategy was population coding (Georgopoulos et al., 1986; Nicolelis, 2003; Moxon and Foffani, 2015). Population coding uses the activity of a high number of neurons, where the discharge rate, timing, and as many properties can be extracted make it possible for a human or non-human primate to move a robotic arm or similar, with the brain. As more neurons are included, more information is obtained, and we should expect that we will better predict the arm movement. This approximation is good when the aim is to predict behavior but is not useful to understand how behavior emerges from neural activity. If reassessed using Energy Homeostasis Principle, we interpret that population coding works as it is a good assessment of neural network homeostasis, implicitly providing information about plastic changes and neural energy management. Up to some extent, all approaches have to do with when, how much, and which neurons are discharging, which in turn can be interpreted as when and how much energy is expended by individual neurons and the network.

When evaluating evidence related to a whole-brain approach, the neuron doctrine is mostly applied by associating the bold signals of brain regions to specific behaviors. Critically, the fMRI signal is derived, to some extent, by the changes triggered through the glia to couple with the energy demands (Otsu et al., 2015). Therefore, we can interpret that energy management associated to glial function, is already associated directly with behavior. Moreover, it suggests that energy management can be mapped into networks associated to specific behaviors. Naturally, the specifics in which Energy Homeostasis Principle would impact large networks like brains is still elusive, and it probably would require to incorporate formally the functional properties of the glia.

In general, the fMRI approach strongly resembles the serial symbolic programming paradigms, where a module can be homologized to a programming function, and the network would be the general architecture of the software. The loss of a programming function leads to the loss of a specific functionality of the software. This metaphor was addressed in classic literature (Hovland, 1960; Searle, 1980), suggesting that the brain processes information using a symbolic serial paradigm. As such, most of the neural correlates within the neurocognitive domain are interpreted as information processing, ranging from a strictly symbolic to a correlative information approach. However, using a bottom-up approach and the Energy Homeostasis Principle, those attributions are an observer's bias, as the one described in Braitenberg's vehicles (Braitenberg, 1986). Behavioral functions of a neuron or the neural network would be the epiphenomena of neurons regulating their own homeostasis. In fact, as explained in the previous section, we can describe how the vehicle learns to avoid obstacles without using any informational, symbolic, or teleological explanations. Using this bottom-up approach, it is expected that an informational approach will be useful, as far as the neurons' and the neural network's needs are aligned with the organism's. However, it should be interpreted as an epiphenomenon of neural networks solving their own needs.

Reinterpreting Evidence Toward New Research Avenues

As we have discussed above, energy management, though implicitly considered, is a key feature of the nervous system. This necessarily means that most of our current evidence can be reinterpreted in the light of the Energy Homeostasis Principle. We expect that this reinterpretation will trigger new ideas and strategies to understand the neural phenomena. As an example, we may try to explain the neuronal changes associated with learning processes, based on iconic paradigms such as the long-term potentiation (LTP) and depression (LTD) (Nabavi et al., 2014; Jia and Collingridge, 2017). Both phenomena involve a large amount of energy expense where the ATP could be followed to understand the phenomena of plasticity as one of energy management. This is key, considering that even the Hebbian rules (Kempter et al., 1999), operates differently, according to the neuron type (Abbott and Nelson, 2000), highlighting the difficulties in predicting plasticity according to neural activity. At the same time, the calcium ion plays a critical signaling role within neural physiology, where we should ask if it might be a signal of energy expenditure. It is known that metabolic processes sense the ATP-AMP ratio (Ames, 2000), however, they have not been studied in association to the plasticity phenomena.

Consequentially, we can assess energy management and not solely from a molecular or electrophysiological perspective. For instance, can we consider inhibitory neurons as an adaptive feature to control brain energy expenditure? This is most intriguing if we consider that inhibitory neurons are key to increasing the neural circuits' controlling properties (e.g. negative feedback structures).

Simultaneously, the central nervous system is the only structure of the body which is actively isolated from the vascular system. It has its own system to maintain stable the neuron proximal environment. Moreover, astrocytes coordinate themselves through calcium waves, producing local changes in blood flow and hyperemia (increase on blood irrigation) (Otsu et al., 2015). The brain-blood barrier is not only a filter, but it works functionally to support the energy demands of the neural networks. In fact, synapses are currently suggested as tripartite structures (neuron-neuron and astrocyte) (Wang and Bordey, 2008), where the glutamate-release excitatory synapses are proposed to control neurovascular coupling, and thus, brain energy during conditioning and behavior (Robinson and Jackson, 2016). This would be a clear example of a neural activity involving external support for energy management.

Moreover, there is a vast number of shapes for neural cells. It is currently unknown why some neurons display large, dendritic arborizations and short axons, while others present long axons and rather small dendritic arborizations. Similarly, there are varying basal discharge rates of activity. We think it is worth exploring whether the likelihood of particular morphologies and rate of activities are associated with energy constraints. For instance, can a neuron manage to maintain a long axon and at the same time a huge dendritic arborization where it must maintain a large number of dendritic spines? If we explore the evidence we already have, we are confident that new insights into neuron morphology will appear. Even more, if an unlikely neuron shape or size which is energetically more expensive presents itself; we should expect that those neurons would be more sensible to energy demands and may be more susceptible to neural death (Le Masson et al., 2014). In fact, Paul Bolam proposed that the reason behind Parkinson's is due to the dying out of dopaminergic neurons because of their huge size, which is very expensive in energy terms (Bolam and Pissadaki, 2012; Pissadaki and Bolam, 2013). It is most likely that many of these traits are genetically determined, however, energy constraints might limit the possible morphological variety. Furthermore, that genetic determinants of neuron specializations may be triggered in response to the C(t).