Abstract

Under natural conditions, our sound localization capabilities enable us to move constantly while keeping a stable representation of our auditory environment. However, since most auditory studies focus on head-restrained conditions, it is still unclear whether neurophysiological markers of auditory spatial processing reflect representation in a head-centered or an allocentric coordinate system. Therefore, we used human electroencephalography to test whether the spatial mismatch negativity (MMN) as a marker of spatial change processing is elicited by changes of sound source position in terms of a head-related or an allocentric coordinate system. Subjects listened to a series of virtually localized band-passed noise tones and were occasionally cued visually to conduct horizontal head movements. After these head movements, we presented deviants either in terms of a head-centered or an allocentric coordinate system. We observed significant MMN responses to the head-related deviants only but a change-related novelty P3-like component for both head-related and allocentric deviants. These results thus suggest that the spatial MMN is associated with a representation of auditory space in a head-related coordinate system and that the integration of motor output and auditory input possibly occurs at later stages of the auditory “where” processing stream.

Introduction

The perception of sound position is decisive for our ability to evade possible dangers in our environment and to orient toward sudden acoustic events.

Traditionally, studies on sound-source localization are conducted under head-restrained conditions that do not allow self-generated head movements. However, it has been suggested that head movements facilitate accurate sound localization (Wallach, 1940; Blauert, 1996), and psychophysical studies in cats (Tollin et al., 2005), macaques (Populin, 2006), and human subjects (Wightman and Kistler, 1999) have shown improved sound localization performance when head movements are allowed. Human psychophysical studies have revealed that our brain is able to compensate for self-initiated head movements and to produce a stable representation of auditory objects in terms of allocentric coordinates (Goossens and Van Opstal, 1999; Vliegen et al., 2004).

As most noninvasive brain imaging methods, such as functional magnetic resonance imaging (fMRI) or magnetoencephalography, prohibit head movements by the subject, it has remained unclear whether a head-centered or an allocentric frame of reference for auditory localization is also reflected at the level of mass neural signals. However, human electroencephalography (EEG) in principle allows head movements, and it provides a well studied and reliable electrophysiological marker for auditory spatial processing: the mismatch negativity (MMN), which is elicited by infrequent changes of sound location after repetitive stimulation from the same location (Paavilainen et al., 1989; Schröger and Wolff, 1996; Winkler et al., 1998; Kaiser et al., 2000). EEG and fMRI studies have shown that the MMN to spatial changes is modulated by the extent of spatial deviation and that it might be generated in the planum temporale (Deouell et al., 2006, 2007). Possibly, these MMN generators are part of the dorsal “where” pathway, which has been proposed based on neurophysiological studies in nonhuman primates suggesting that cortical processing of auditory sound localization occurs along a dorsal stream including the caudal parts of the auditory belt, posterior parietal, and dorsal prefrontal cortex (Rauschecker, 1998; Romanski et al., 1999).

The aim of the present study was to test whether the representation of spatial auditory information at the level of the auditory cortex is encoded in a head-centered or an allocentric frame of reference. To this end, we recorded EEG responses to sequences of noises, presented from a position along the midsagittal line. During the noise sequences, subjects were occasionally cued to rotate their head horizontally. After head rotation, they were presented with either a stimulus from the same sound source which was now a deviant in terms of a head-related frame of reference or were presented a stimulus from a sound source which was aligned to the new midsagittal line and thus constituted a deviant in terms of an allocentric frame of reference. As subjects were not instructed to conduct body movements in this study, we could not differentiate between a body-centered and an external-world coordinate system. We hypothesized that in case of a head-related representation of the sound source, we should observe a significant MMN for the head-related deviant; whereas for an allocentric representation, we would expect a significant MMN for an allocentric deviant.

Materials and Methods

Subjects.

Twenty healthy volunteers with normal hearing abilities, as determined by self-report, participated in the EEG experiment. Two subjects were excluded from data analysis because of excessive eye blink and muscle artifacts, leaving 18 subjects for the final analysis (age range, 21–53 years; 9 males; 3 left-handed). All subjects gave their informed consent to participate in the study, which was performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki and approved by the local ethics committee of the Goethe University Frankfurt Medical Faculty.

Stimuli and procedure.

Stimuli consisted of band-passed noise (250–4000 Hz) with a duration of 250 ms, a sampling rate of 44.1 kHz, and a sound pressure level of ∼78 dB(A). The sounds were virtually localized by convolving the stimuli with a generic head-related transfer function (HRTF) derived from the Knowles Electronics Manikin for Acoustic Research head model, similar to previous studies (Altmann et al., 2007). As the HRTF set provided discrete sound source positions only, intermediate sound source positions were next-neighbor interpolated in 5° steps. Auditory stimuli were presented binaurally via air-conducting plastic tubes (E-A-Rtone 3A; Aearo Corporation) with a length of 2 m. Subjects could reliably recognize the lateralization of the stimuli, as tested before the experiment. More specifically, before the EEG recording session, subjects were presented with two blocks of sounds localized at −30, 0, and 30° for the first block and −15, 0, and 15° for the second block. The subjects were instructed to name the sound direction (left, middle, right) and correctly localized 96.7% of the presented stimuli for the 30° block and 96.3% for the 15° block. As a visual cue for the alignment of the head orientation, red light-emitting diodes (LEDs) attached on top of silent loudspeakers were arranged in a semicircle with a radius of 126 cm. One LED was positioned at the midsagittal line, and two LEDs were attached 30° to the left and right of this line, respectively (Fig. 1a).
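The virtual localization step can be illustrated with a minimal Python sketch. It assumes one reading of the nearest-neighbor scheme, namely that a requested azimuth is mapped to the closest stored impulse-response pair, and it uses toy impulse responses rather than real manikin measurements. The function names (`nearest_measured_azimuth`, `convolve`, `spatialize`) and the table `toy_hrirs` are hypothetical and not taken from the study's software.

```python
def nearest_measured_azimuth(target_deg, measured_deg):
    """Return the stored azimuth closest to target_deg (nearest neighbor)."""
    return min(measured_deg, key=lambda az: abs(az - target_deg))


def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sequences."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out


def spatialize(mono, hrir_table, target_deg):
    """Render a mono signal at target_deg with the nearest stored HRIR pair.

    hrir_table maps azimuth in degrees -> (left_ir, right_ir).
    Returns the azimuth actually used and the left/right ear signals.
    """
    az = nearest_measured_azimuth(target_deg, list(hrir_table))
    left_ir, right_ir = hrir_table[az]
    return az, convolve(mono, left_ir), convolve(mono, right_ir)


# Toy impulse responses (hypothetical values, NOT real manikin data):
# a source on the right reaches the right ear earlier and louder.
toy_hrirs = {
    -30: ([1.0, 0.0, 0.0], [0.0, 0.0, 0.5]),
    0: ([0.0, 0.8, 0.0], [0.0, 0.8, 0.0]),
    30: ([0.0, 0.0, 0.5], [1.0, 0.0, 0.0]),
}

azimuth, left, right = spatialize([1.0, -1.0], toy_hrirs, target_deg=20)
```

In this toy case the requested 20° is rendered with the 30° pair, so the right-ear signal leads and is larger than the left-ear signal, which mimics the interaural time and level differences that the HRTF convolution imposes on the noise stimuli.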

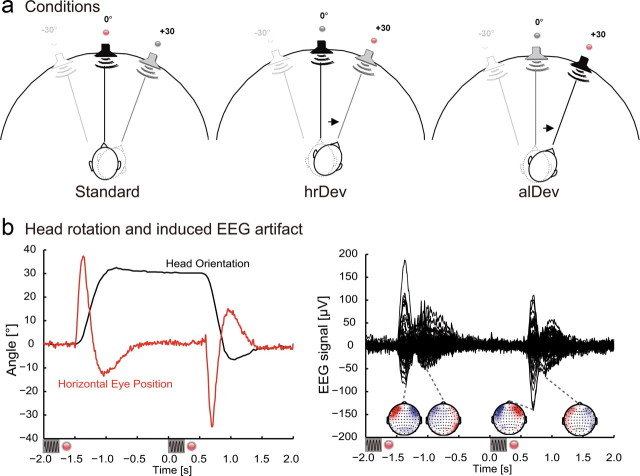

Figure 1.

a, Experimental setup of LEDs and sound source positions for an exemplary run (mid→right). The left figure depicts the standard condition: in this case, standard stimulation entailed a series of noise sounds presented from the central position (in black). The subject's head orientation was cued by the red LED. The central figure depicts the head-related deviant (hrDev): before sound presentation from a central position (in black), subjects were cued to rotate their head to the right (indicated by the arrow). The right figure depicts the allocentric deviant condition (alDev): subjects were cued to rotate their head to the right, but this time the noise sound was presented at the right sound source position (in black). b, Typical artifact (single trial from an exemplary subject) elicited during head rotation from −2 to +2 s after deviant onset. The left graph shows the horizontal head orientation recorded continuously at 30 Hz and eye-in-head position. The small black sine waves below the graphs depict sound stimulation, the red dots symbolize LED cue change. The right graph shows the evoked EEG response during the head turn. Below are the EEG topographies at the time points indicated by the gray dashed lines (extrema of the EEG response).

The EEG experiment was conducted in an electrically shielded and sound-attenuated chamber (Industrial Acoustics Company). Before the EEG experiment, subjects were administered a training run for aligning the head orientation and a calibration run for the eye positions. Specifically, subjects repeatedly performed horizontal saccadic eye movements between the central and the lateralized LEDs (30° left and right). This allowed the estimation of the subjects' horizontal eye position in visual angle by a linear transformation of the EEG signal acquired near the left and right outer canthi. After that, all subjects were administered four runs of stimulus presentation during which EEG was recorded. An experimental run included 364 auditory stimuli that were presented at a rate of 0.5 Hz. We used an oddball design to induce mismatch negativity in which each standard was presented in sequences of three or four repeated presentations. After a standard sequence, the LED at the standard position was turned off 50 ms after sound offset of the last standard stimulus. Synchronously, the red LED at a new position was turned on, and the subjects had to align their head orientation to the new position. At 1700 ms after the LED position change, one of two types of deviants was presented: (1) the sound was presented from the same sound location as the preceding standard sound, which made it a deviant in terms of a head-related coordinate system (hrDev); or (2) the sound was presented at the sound location to which the head was rotated, which made it a deviant in terms of an allocentric coordinate system (alDev). Four different experimental runs were conducted. As depicted in Figure 1a, the first run entailed standard sound presentation from the central position while the red LED in the center was illuminated. For deviant sound presentation, the subjects were cued to rotate their head to the right (mid→right). 
The deviant was presented either from the central position (hrDev) or from the right position (alDev). In a second run, the standard position was cued by the right LED, and for deviant presentation, subjects were cued to the central position (right→mid). In a third run, the standard position was central, and for deviant presentation, subjects were cued to the left position (mid→left). Finally, for the fourth run, the standard position was cued by the left LED, and for deviant presentation, subjects were cued to the central position (left→mid). The sequence of the four experimental runs was randomized across subjects, and each experimental run had a duration of 12 min 8 s and contained 80 deviants. Thus, all four runs provided 320 changes, that is 160 deviants per condition. During the whole experiment, subjects were instructed to keep their eyes open, fixate the red LED, and align the head orientation to the LED position.
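The run structure can be checked with a short Python sketch. The stimulus rate, the 364 stimuli, and the 80 deviants per run come from the text. The split into 36 sequences of three standards and 44 sequences of four standards is an assumption: it is one combination that reproduces the reported totals if every standard sequence is followed by exactly one deviant. The equal 40/40 split between deviant types within a run is likewise assumed, and `build_run` is a hypothetical helper.

```python
import random

STIMULUS_RATE_HZ = 0.5   # from the text
STIMULI_PER_RUN = 364    # from the text
DEVIANTS_PER_RUN = 80    # from the text


def build_run(n_short=36, n_long=44, seed=0):
    """Return a list of 'std' / 'hrDev' / 'alDev' labels for one run.

    n_short sequences of three standards and n_long sequences of four
    standards, each followed by one deviant. The 36/44 split and the
    equal number of both deviant types are assumptions that merely
    match the reported totals.
    """
    rng = random.Random(seed)
    lengths = [3] * n_short + [4] * n_long
    rng.shuffle(lengths)
    deviants = ["hrDev", "alDev"] * ((n_short + n_long) // 2)
    rng.shuffle(deviants)
    run = []
    for n_std, dev in zip(lengths, deviants):
        run.extend(["std"] * n_std)
        run.append(dev)
    return run


run = build_run()
duration_s = len(run) / STIMULUS_RATE_HZ
minutes, seconds = divmod(int(duration_s), 60)
deviants_per_condition = 4 * run.count("hrDev")  # four runs in total
```

Under these assumptions, 364 stimuli at 0.5 Hz last 728 s, that is 12 min 8 s, and four runs yield 160 deviants of each type, in agreement with the figures reported above.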

The horizontal head orientation was recorded by optical tracking at a frame rate of 30 Hz with a Unibrain Fire-I color charge-coupled device camera (Unibrain). To calculate angular differences between rotational positions, we attached a 4 × 6 checkerboard pattern on top of the electrode cap, which was tracked by custom software written in C using the OpenCV library (http://sourceforge.net/projects/opencvlibrary). In the EEG experiment, the head orientation was recorded 100 ms before sound presentation. For the head-related deviant, this position was used to calculate online the virtual position of the deviant sound to elicit the impression of a stable sound source.
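The online compensation for the head-related deviant can be sketched as follows. This is a minimal illustration, assuming that positive angles denote rightward directions, that the tracked head orientation is subtracted from the world-fixed source azimuth, and that the result is snapped to the 5° grid of available virtual positions. The function names are hypothetical and the tracking code itself is not reproduced.

```python
def wrap_deg(angle):
    """Wrap an angle in degrees to the interval [-180, 180)."""
    return (angle + 180.0) % 360.0 - 180.0


def head_relative_azimuth(source_world_deg, head_world_deg, step_deg=5.0):
    """Azimuth of a world-fixed source relative to the current head
    orientation, snapped to the nearest available virtual position."""
    relative = wrap_deg(source_world_deg - head_world_deg)
    return wrap_deg(round(relative / step_deg) * step_deg)


# Head-related deviant: source stays at the center (0 deg) while the
# tracked head orientation is 28.7 deg to the right (example value).
hr_dev = head_relative_azimuth(0.0, 28.7)

# Allocentric deviant, assuming a head perfectly aligned to the 30 deg LED:
# the sound keeps the same interaural cues as the preceding standard.
al_dev = head_relative_azimuth(30.0, 30.0)
```

With these example values the head-related deviant is rendered 30° to the left of the new midsagittal line, whereas the allocentric deviant is rendered straight ahead.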

EEG acquisition and data analysis.

EEG activity was recorded using a 128-channel QuickAmp amplifier (Brain Products) and Braincap electrode caps (Falk Minow Services) with 124 electrodes and two additional electrodes on the infra-orbital ridge of the left and right eye and two further electrodes on the neck. All channels were recorded with an average reference and a sampling rate of 500 Hz with a 135 Hz anti-aliasing low-pass filter during recording. Positions of the electrodes and of three fiducial landmarks (the left and right preauricular points and the nasion) were recorded with a spatial digitizer (Zebris Medical). EEG data were analyzed with the BESA software package (MEGIS Software) and the Fieldtrip toolbox (www.ru.nl/fcdonders/fieldtrip). Before signal averaging, artifactual epochs were discarded based on visual inspection and a thresholding procedure which removed epochs with a slew rate exceeding 75 μV/ms. On average, 80.4% of all epochs were retained after artifact rejection. The event-related potentials (ERPs) were calculated for standards and deviants in a 400 ms time epoch (100 ms before and 300 ms after stimulus onset). Standards were defined as the last stimulus presentations in a standard sequence. After averaging, the individual ERP data (124 electrodes) were interpolated to a standardized average reference 101 electrode configuration using spherical spline interpolation to account for interindividual differences in head shape and electrode placements. ERP data were low-pass filtered with a cutoff frequency of 50 Hz (zero phase third order Butterworth filter). For group-level statistical analysis of the ERP and to address the problem of multiple comparisons (200 time points, 101 electrodes), we used cluster randomization analysis described previously (Maris, 2004; Maris and Oostenveld, 2007). We compared the different conditions using a two-tailed Student's t test in the time window of 0–280 ms after stimulus onset. 
Clusters were restricted to a minimum size of five neighboring electrodes showing significant differences between conditions (p < 0.05). As a test-statistic, the sum of t values across a cluster was compared to the distribution of maximum cluster sums of t values derived from a randomization procedure (1000 randomizations). To display the ERP time courses, we averaged the EEG signal across the fronto-central electrodes Fz, FCz, F1, F2, F3, F4, FC1, FC2, FC3, and FC4.
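The logic of the cluster randomization test can be sketched in plain Python. This is a simplified illustration, not the analysis pipeline used here: it forms clusters along the time axis of a single channel only (the reported analysis clustered across neighboring electrodes as well), and it realizes the randomization for a within-subject design by randomly flipping the sign of each subject's condition difference. The threshold 2.11 used in the usage note is the two-tailed critical t value for p < 0.05 with 17 degrees of freedom (18 subjects). All function names are hypothetical.

```python
import random
from statistics import mean, stdev


def paired_t(diffs):
    """One-sample t value of per-subject differences against zero."""
    sd = stdev(diffs)
    if sd == 0:  # degenerate case, treated as no evidence in this sketch
        return 0.0
    return mean(diffs) / (sd / len(diffs) ** 0.5)


def cluster_sums(t_values, t_crit):
    """Sum t values over runs of adjacent same-sign supra-threshold samples."""
    sums, current, sign = [], 0.0, 0
    for t in t_values:
        s = (t > t_crit) - (t < -t_crit)
        if s != 0 and s == sign:
            current += t
        else:
            if sign != 0:
                sums.append(current)
            current, sign = (t, s) if s != 0 else (0.0, 0)
    if sign != 0:
        sums.append(current)
    return sums


def cluster_permutation_test(cond_a, cond_b, t_crit, n_perm=1000, seed=0):
    """cond_a, cond_b: lists (subjects) of lists (time points).

    Returns the observed cluster sums and one Monte Carlo p value per
    cluster, based on the null distribution of maximum absolute sums.
    """
    rng = random.Random(seed)
    diffs = [[a - b for a, b in zip(sa, sb)] for sa, sb in zip(cond_a, cond_b)]
    n_time = len(diffs[0])

    def t_series(d):
        return [paired_t([subj[t] for subj in d]) for t in range(n_time)]

    observed = cluster_sums(t_series(diffs), t_crit)
    null_max = []
    for _ in range(n_perm):
        flipped = []
        for subj in diffs:
            sign = rng.choice((-1.0, 1.0))  # swap condition labels
            flipped.append([sign * v for v in subj])
        sums = cluster_sums(t_series(flipped), t_crit)
        null_max.append(max((abs(s) for s in sums), default=0.0))
    p_values = [sum(m >= abs(o) for m in null_max) / n_perm for o in observed]
    return observed, p_values
```

A call such as `cluster_permutation_test(deviant_erps, standard_erps, t_crit=2.11)` would return one summed t value and one p value per temporal cluster. Because each observed cluster is compared against the distribution of the maximum cluster sum, the procedure controls the family-wise error rate across all time points without a per-sample correction.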

Results

During the EEG experiment, subjects' head rotations before deviant sound presentation were on average 29.98° (SD, 4.94°) for the head-related deviant and 30.04° (SD, 4.92°) for the allocentric deviant. Figure 1b depicts the time course of a typical head movement, including horizontal eye-in-head motion and the topography of the elicited artifacts for a single subject, showing the influence of eye-in-head motion on the EEG signal.

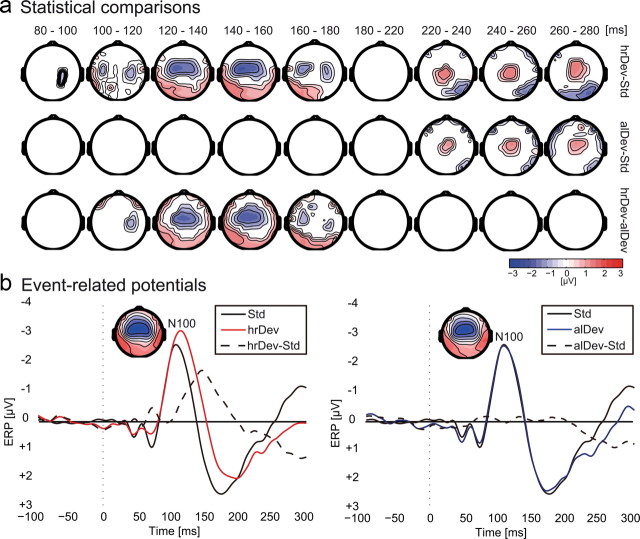

In Figure 2a, we present the statistically significant ERP differences of deviant and standard conditions based on cluster-based randomization statistics. Significant fronto-central negativity (p < 0.05 at a cluster level) was observed for the head-related deviant only, compared to standard stimulation in the time window between 80 and 180 ms after stimulus onset. This negativity corresponded to a spatial MMN and was centered around the Fz and FCz electrodes. For the allocentric deviant, we did not observe a significant MMN, and from 100 to 180 ms after stimulus onset, we found significant differences between the two deviant types. In the time window from 220 ms onwards, our data analysis revealed a significant positivity centered around electrode Cz for both the head-related and the allocentric deviants compared to standards with no significant differences between the two deviants. For illustrative purposes, Figure 2b depicts the time course of the event-related potential averaged across 10 fronto-central electrodes. A clear MMN was only observed for the head-related deviants (average peak negativity, −1.95 ± 1.01 μV SD; average peak latency, 147 ± 20 ms SD) but not for the allocentric deviant (average peak negativity, −0.85 ± 0.99 μV SD; average peak latency, 165 ± 42 ms SD). The supplemental material (available at www.jneurosci.org) additionally provides MMN values for the different experimental runs and ERP data analyzed within an extended time window.
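The peak measures reported above can be illustrated with a minimal Python sketch that computes a deviant-minus-standard difference wave and reads out its most negative sample. The 80–180 ms search window is an assumption borrowed from the significant time range. The exact peak-picking procedure is not specified in the text, and the waveform below is synthetic toy data, not recorded EEG.

```python
def difference_wave(deviant, standard):
    """Sample-wise deviant-minus-standard difference."""
    return [d - s for d, s in zip(deviant, standard)]


def peak_negativity(wave, times_ms, window=(80, 180)):
    """Return (amplitude, latency_ms) of the most negative sample
    whose time point lies inside the search window."""
    candidates = [
        (v, t) for v, t in zip(wave, times_ms) if window[0] <= t <= window[1]
    ]
    return min(candidates)


# Epoch from -100 to 300 ms at 500 Hz: 200 samples in 2 ms steps.
times_ms = list(range(-100, 300, 2))

# Synthetic toy ERPs in microvolts (hypothetical values): a flat standard
# and a deviant with a triangular negativity centered at 146 ms.
standard = [0.0 for _ in times_ms]
deviant = [-2.0 * max(0.0, 1.0 - abs(t - 146) / 40.0) for t in times_ms]

amplitude, latency = peak_negativity(difference_wave(deviant, standard), times_ms)
```

Applied per subject to the averaged fronto-central signal, such a readout would give one peak amplitude and one peak latency per deviant type, from which group means and standard deviations like those quoted above could be derived.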

Figure 2.

EEG data. a, Statistical comparisons across conditions based on a nonparametric cluster–randomization procedure. Only difference data from electrodes are shown that were part of a significant cluster (p < 0.05 cluster level) throughout the indicated period. The top row shows significant differences between the hrDev and the standard (Std). The center row depicts significant differences between the alDev and the standard condition, and the bottom row shows significant differences between the two deviant conditions. b, EEG time courses from fronto-central electrodes to compare the ERPs to the head-related deviant (red line), the allocentric deviant (red line), and the standard (black line, both graphs). The dashed black line depicts the difference wave between conditions. The topographies show the deviant N100 event-related potential in the time window between 90 and 110 ms for the two deviant conditions.

Discussion

The present study aimed at testing whether the auditory spatial MMN response is associated with the representation of auditory sound sources in a head-related or an allocentric coordinate system. To this end, we used an oddball paradigm in which subjects were cued to perform horizontal head rotations before deviant presentation. In the case of head-related deviants, the virtual lateralization of the presented sound compensated for the horizontal head movement, so that the sound appeared at a stable external location. In contrast, the allocentric deviant matched the standard sound preceding a head rotation in terms of interaural cues. We observed a significant spatial MMN only for head-related deviants but not for allocentric deviants, indicating that the MMN response to spatial deviants reflected auditory localization based on interaural cues rather than a representation of the sound source invariant to head orientation changes. In other words, the MMN was generated after acoustic changes in terms of binaural and monaural spatial cues and did not show sensitivity to extra-auditory information. In contrast, we found a significant positive deflection from ∼220 ms after stimulus onset for both the head-related and the allocentric deviants compared to standards. Topography and time course of this positivity were consistent with the novelty P3 often observed in ERP oddball paradigms (Friedman et al., 2001). These findings suggest that at later stages of auditory space processing, head orientation was integrated with sound information.

Previous research has shown that MMN responses can be elicited by both interaural time delay (ITD) and interaural level difference (ILD) localization cues, but the additivity of the responses when both dimensions are changed suggests parallel processing of these cues (Schröger, 1996). Thus, the MMN response preserves the type of stimulus feature (ITD or ILD) that leads to spatial perception. Furthermore, a later ERP study used free-field stimuli with similar ITD/ILD cues that were localized at different positions in the so-called cone of confusion and thus only differed in their pinnae-related spectral features (Röttger et al., 2007). MMN responses were elicited by differences due to pinnae-related spectral filtering, and the results suggested that the underlying mechanisms are separate from the correlates of ITD/ILD computation. This was corroborated by studies that used individual head-related transfer functions and that showed a temporal segregation of binaural cue processing at earlier latencies and monaural cue processing at later stages (Fujiki et al., 2002). While different localization cues elicit separate MMN responses, in the present study, allocentric deviants did not lead to the generation of a spatial MMN.

Nevertheless, in our everyday environment we experience sound sources as stable during head and body movements. Psychophysical experiments revealed that in humans, head movements are accounted for during the computation of sound source coordinates (Goossens and Van Opstal, 1999) and that acoustic information is continuously integrated with head orientation feedback to provide a stable sound source position (Vliegen et al., 2004). In a series of auditory and visual location memory tasks involving active eye, head, and body movements, it has been shown that both auditory and visual localization was affected by head-on-body position (Kopinska and Harris, 2003). This suggests that during a location memory task, head-on-body information is integrated in the processing of location, possibly to establish a body-centered representation of sound source position. Correspondingly, based on the observation that the horizontal head orientation affects auditory localization, Lewald et al. (Lewald and Ehrenstein, 1998; Lewald et al., 2000) proposed that auditory stimuli are represented in a body-centered frame of reference. According to the authors, this representation entails a coordinate transformation from head-centered to body-centered coordinates, possibly with an intermediate step in which eye-in-head and head-related coordinates are integrated. One important source of information about head position is proprioceptive input generated by the neck muscle spindles (Biguer et al., 1988), and accordingly, stimulation of human subjects with neck muscle vibrations has been shown to produce a shift of auditory localization toward the side of stimulation (Lewald et al., 1999).

In our study, ERP responses to allocentric sound source changes arose only at a later stage from ∼220 ms onwards. The time course, topography, and experimental circumstances of the late positivity evoked by both head-related and allocentric deviants resembled the novelty P3 as described in previous auditory oddball paradigms (for review, see Friedman et al., 2001). This component has been interpreted as a neural correlate of an involuntary attention switch or orienting response to novel stimuli and has been described by a combined fMRI and ERP study to be generated within a cortical network comprising bilateral superior temporal areas and right inferior frontal cortex (Opitz et al., 1999). Interestingly, in the case of the allocentric deviants, a novelty P3-like component was evoked without a preceding MMN, consistent with a study that showed a dissociation between MMN and P3a (Horváth et al., 2008).

Considering that subjects had their eyes open during our experiment, an alternative interpretation could be that audio-visual incongruency was involved in the generation of the MMN potential. Specifically, in the case of the head-related deviant, the sound was not presented at the position of the illuminated LED, while this was the case for the standard and the allocentric deviant. Visual influences on auditory-evoked responses have been demonstrated with various methods and paradigms (Besle et al., 2009), and an ERP study using the ventriloquist's illusion has shown that visually induced sound source shifts can result in MMN generation (Stekelenburg et al., 2004). However, given that cross-modal integration heavily relies on temporal synchronicity (Calvert et al., 2001), and that the separation of sound onset and LED changes was considerable (1.7 s), it is unlikely that the observed MMN was solely based on audio-visual incongruency. Another limitation of our study was that we used virtual acoustics rather than free-field stimuli to induce spatial perception. Both similarities and differences for these stimulation methods in terms of evoked MMN properties were described in previous experiments (Paavilainen et al., 1989), and thus, future studies with free-field stimulation under carefully controlled anechoic conditions might help to clarify the validity of our results. A further interesting question is to what extent an allocentric representation of auditory space forms over time. Manipulating the interval between head rotation and sound stimulation may lead to different change-related ERP responses and thus reveal the temporal dynamics of reference frame formation. Moreover, in our experiment, we tested for audio-motor integration in a strong sense, in that we tested whether extra-auditory signals can generate an MMN without acoustic changes. 
Future studies could test whether a weaker form of integration occurs, that is, whether head orientation might modulate an already existing MMN evoked by an actual acoustic change.

In sum, our data suggest that the auditory MMN reflects spatial differences in a head-related coordinate system based on binaural localization cues. Thus, processes that compute invariance to head orientation are possibly not associated with the auditory spatial MMN. In contrast, a novelty P3-like component from ∼220 ms after stimulus onset was sensitive to both changes in terms of a head-related and an allocentric coordinate system. Thus, our results indicate that, in particular, later stages of the auditory processing stream are involved in the generation of invariance to head orientation changes.

Footnotes

C.F.A. and J.K. were supported by the Deutsche Forschungsgemeinschaft (DFG AL 1074/2-1). We thank Monika Geis-Dogruel for assistance with data acquisition, Christoph Bledowski for helpful discussion, Iver Iversen for help on technical issues, and Jörg Lewald for comments on this manuscript.

References

- Altmann CF, Bledowski C, Wibral M, Kaiser J. Processing of location and pattern changes of natural sounds in the human auditory cortex. Neuroimage. 2007;35:1192–1200. doi: 10.1016/j.neuroimage.2007.01.007.

- Besle J, Bertrand O, Giard MH. Electrophysiological (EEG, sEEG, MEG) evidence for multiple audiovisual interactions in the human auditory cortex. Hear Res. 2009. doi: 10.1016/j.heares.2009.06.016.

- Biguer B, Donaldson IM, Hein A, Jeannerod M. Neck muscle vibration modifies the representation of visual motion and direction in man. Brain. 1988;111:1405–1424. doi: 10.1093/brain/111.6.1405.

- Blauert J. Spatial hearing: the psychophysics of human sound localization. Cambridge, MA: MIT; 1996.

- Calvert GA, Hansen PC, Iversen SD, Brammer MJ. Detection of audio-visual integration sites in humans by application of electrophysiological criteria to the BOLD effect. Neuroimage. 2001;14:427–438. doi: 10.1006/nimg.2001.0812.

- Deouell LY, Parnes A, Pickard N, Knight RT. Spatial location is accurately tracked by human auditory sensory memory: evidence from the mismatch negativity. Eur J Neurosci. 2006;24:1488–1494. doi: 10.1111/j.1460-9568.2006.05025.x.

- Deouell LY, Heller AS, Malach R, D'Esposito M, Knight RT. Cerebral responses to change in spatial location of unattended sounds. Neuron. 2007;55:985–996. doi: 10.1016/j.neuron.2007.08.019.

- Friedman D, Cycowicz YM, Gaeta H. The novelty P3: an event-related brain potential (ERP) sign of the brain's evaluation of novelty. Neurosci Biobeh Rev. 2001;25:355–373. doi: 10.1016/s0149-7634(01)00019-7.

- Fujiki N, Riederer KA, Jousmäki V, Mäkelä JP, Hari R. Human cortical representation of virtual auditory space: differences between sound azimuth and elevation. Eur J Neurosci. 2002;16:2207–2213. doi: 10.1046/j.1460-9568.2002.02276.x.

- Goossens HH, van Opstal AJ. Influence of head position on the spatial representation of acoustic targets. J Neurophysiol. 1999;81:2720–2736. doi: 10.1152/jn.1999.81.6.2720.

- Horváth J, Winkler I, Bendixen A. Do N1/MMN, P3a, and RON form a strongly coupled chain reflecting the three stages of auditory distraction? Biol Psychol. 2008;79:139–147. doi: 10.1016/j.biopsycho.2008.04.001.

- Kaiser J, Lutzenberger W, Preissl H, Ackermann H, Birbaumer N. Right-hemisphere dominance for the processing of sound-source lateralization. J Neurosci. 2000;20:6631–6639. doi: 10.1523/JNEUROSCI.20-17-06631.2000.

- Kopinska A, Harris LR. Spatial representation in body coordinates: evidence from errors in remembering positions of visual and auditory targets after active eye, head, and body movements. Can J Exp Psychol. 2003;57:23–37. doi: 10.1037/h0087410.

- Lewald J, Ehrenstein WH. Influence of head-to-trunk position on sound lateralization. Exp Brain Res. 1998;121:230–238. doi: 10.1007/s002210050456.

- Lewald J, Karnath HO, Ehrenstein WH. Neck-proprioceptive influence on auditory lateralization. Exp Brain Res. 1999;125:389–396. doi: 10.1007/s002210050695.

- Lewald J, Dörrscheidt GJ, Ehrenstein WH. Sound localization with eccentric head position. Behav Brain Res. 2000;108:105–125. doi: 10.1016/s0166-4328(99)00141-2.

- Maris E. Randomization tests for ERP topographies and whole spatiotemporal data matrices. Psychophysiology. 2004;41:142–151. doi: 10.1111/j.1469-8986.2003.00139.x.

- Maris E, Oostenveld R. Nonparametric statistical testing of EEG- and MEG-data. J Neurosci Methods. 2007;164:177–190. doi: 10.1016/j.jneumeth.2007.03.024.

- Opitz B, Mecklinger A, Friederici AD, von Cramon DY. The functional neuroanatomy of novelty processing: integrating ERP and fMRI results. Cereb Cortex. 1999;9:379–391. doi: 10.1093/cercor/9.4.379.

- Paavilainen P, Karlsson ML, Reinikainen K, Näätänen R. Mismatch negativity to change in spatial location of an auditory stimulus. Electroencephalogr Clin Neurophysiol. 1989;73:129–141. doi: 10.1016/0013-4694(89)90192-2.

- Populin LC. Monkey sound localization: head-restrained versus head-unrestrained orienting. J Neurosci. 2006;26:9820–9832. doi: 10.1523/JNEUROSCI.3061-06.2006.

- Rauschecker JP. Parallel processing in the auditory cortex of primates. Audiol Neurootol. 1998;3:86–103. doi: 10.1159/000013784.

- Romanski LM, Tian B, Fritz J, Mishkin M, Goldman-Rakic PS, Rauschecker JP. Dual streams of auditory afferents target multiple domains in the primate prefrontal cortex. Nat Neurosci. 1999;2:1131–1136. doi: 10.1038/16056.

- Röttger S, Schröger E, Grube M, Grimm S, Rübsamen R. Mismatch negativity on the cone of confusion. Neurosci Lett. 2007;414:178–182. doi: 10.1016/j.neulet.2006.12.023.

- Schröger E. Interaural time and level differences: integrated or separated processing? Hear Res. 1996;96:191–198. doi: 10.1016/0378-5955(96)00066-4.

- Schröger E, Wolff C. Mismatch response of the human brain to changes in sound location. Neuroreport. 1996;7:3005–3008. doi: 10.1097/00001756-199611250-00041.

- Stekelenburg JJ, Vroomen J, de Gelder B. Illusory sound shifts induced by the ventriloquist illusion evoke the mismatch negativity. Neurosci Lett. 2004;357:163–166. doi: 10.1016/j.neulet.2003.12.085.

- Tollin DJ, Populin LC, Moore JM, Ruhland JL, Yin TC. Sound-localization performance in the cat: the effect of restraining the head. J Neurophysiol. 2005;93:1223–1234. doi: 10.1152/jn.00747.2004.

- Vliegen J, Van Grootel TJ, Van Opstal AJ. Dynamic sound localization during rapid eye-head gaze shifts. J Neurosci. 2004;24:9291–9302. doi: 10.1523/JNEUROSCI.2671-04.2004.

- Wallach H. The role of head movements and vestibular and visual cues in sound localization. J Exp Psychol. 1940;27:339–368.

- Wightman FL, Kistler DJ. Resolution of front-back ambiguity in spatial hearing by listener and source movement. J Acoust Soc Am. 1999;105:2841–2853. doi: 10.1121/1.426899.

- Winkler I, Tervaniemi M, Schröger E, Wolff C, Näätänen R. Preattentive processing of auditory spatial information in humans. Neurosci Lett. 1998;242:49–52. doi: 10.1016/s0304-3940(98)00022-6.