Abstract

The human amygdala can be robustly activated by presenting fearful faces, and it has been speculated that this activation has functional relevance for redirecting the gaze toward the eye region. To clarify this relationship between amygdala activation and gaze-orienting behavior, functional magnetic resonance imaging data and eye movements were simultaneously acquired in the current study during the evaluation of facial expressions. Fearful, angry, happy, and neutral faces were briefly presented to healthy volunteers in an event-related manner. We controlled for the initial fixation by unpredictably shifting the faces downward or upward on each trial, such that the eyes or the mouth were presented at fixation. Across emotional expressions, participants showed a bias to shift their gaze toward the eyes, but the magnitude of this effect followed the distribution of diagnostically relevant regions in the face. Amygdala activity was specifically enhanced for fearful faces with the mouth aligned to fixation, and this differential activation predicted gazing behavior preferentially targeting the eye region. These results reveal a direct role of the amygdala in reflexive gaze initiation toward fearfully widened eyes. They mirror deficits observed in patients with amygdala lesions and open a window for future studies on patients with autism spectrum disorder, in which deficits in emotion recognition, probably related to atypical gaze patterns and abnormal amygdala activation, have been observed.

Introduction

The human amygdala is known to be robustly activated by the presentation of fearful faces (Morris et al., 1996; Hariri et al., 2002; Gläscher et al., 2004; Reinders et al., 2005), which seems to be largely related to the visibility of fearfully widened eyes (Morris et al., 2002). This activation occurs even when these stimuli are unattended (Vuilleumier et al., 2001) and when they are presented very briefly followed by a mask such that participants report being unaware of them (Whalen et al., 1998, 2004; Öhman et al., 2007; for discrepant results, see Pessoa et al., 2006). However, the precise functional role of this activation remains to be elucidated.

One possible explanation of these findings was provided by a case study on S.M., a patient with rare bilateral amygdala damage, who was found to have severe problems in identifying fearful facial expressions with preserved skills in recognizing other emotional faces (Adolphs et al., 1994). Surprisingly, this specific impairment was not related to a lack of emotional discrimination ability, per se, but was found to be related to a lack of spontaneous gaze fixation on the eye region of facial stimuli, because an explicit instruction to look at the eyes was sufficient to restore her ability to identify fearful faces (Adolphs et al., 2005). For the first time, these data suggested that the amygdala might be involved in detecting salient facial features and reflexively triggering fixation changes toward them rather than being involved in emotion discrimination, per se (Adolphs and Spezio, 2006; Spezio et al., 2007b). In the current study, we combined functional magnetic resonance imaging (MRI) with online monitoring of eye movements to directly examine this relationship between amygdala activation and gaze orientation behavior in healthy human adults.

Materials and Methods

Subjects.

Twenty-four participants were initially examined, but two of them had to be excluded because of equipment malfunction. The final sample consisted of 22 healthy, right-handed, male subjects (mean age ± SD, 27.5 ± 4.2 years; see supplemental Methods, available at www.jneurosci.org as supplemental material, for additional information). Eye-tracking data could only be analyzed for 16 participants (75% valid trials on average), and corresponding analyses of the gazing behavior are restricted to this subsample. All participants gave written informed consent and were paid for participation.

Emotion classification paradigm.

The experiment was based on a fully crossed 4 × 2 within-subjects design with the factors emotion and initial fixation. Based on a validation study (Goeleven et al., 2008), 160 pictures of 20 women and 20 men, each depicting neutral, fearful, angry, and happy expressions, were selected from a standardized data set (Lundqvist et al., 1998). These faces were slightly rotated such that both eyes were at exactly the same height in each image. Colored images were converted to grayscale, and an elliptic mask was fitted to reveal only the face itself while hiding hair and ears. Finally, the cumulative brightness was normalized across images. The mean visual angle across all faces was 9.7° in the horizontal and 13.6° in the vertical direction. The average visual angle between eyes and mouth amounted to 5.4°. During the experiment, facial expressions were presented briefly to the participants, such that eye movements only occurred after stimulus offset.

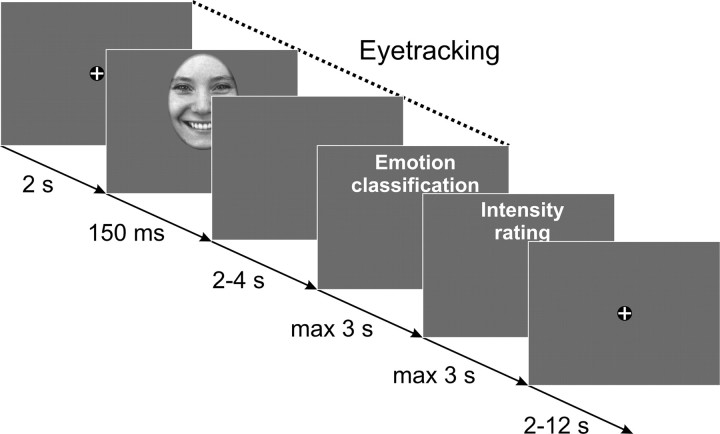

Each trial started with a fixation cross (2 s) followed by the presentation of the face (150 ms) followed by a blank screen (randomly chosen period of 2–4 s). After this time, participants were required to classify the emotional expression by pressing the corresponding key on a button box. Subsequently, volunteers were additionally asked to rate the intensity of the displayed emotion on a four-point scale ranging from 1 (low) to 4 (high). This intensity rating was omitted when volunteers classified the image as “neutral.” After this rating, a fixation cross was displayed for another randomly chosen period of 2–12 s (Fig. 1). To precisely control for the initial fixation, the stimuli within each emotional expression were shifted downward on half of the trials and upward on the other half, in an unpredictable order, such that either the eyes or the mouth appeared at the location of the fixation cross. To avoid continuously presenting the bridge of the nose instead of the eyes at the position of the fixation cross, we additionally shifted half of the stimuli to the left or to the right, such that the left or right eyeball was presented at the position of the fixation cross as often as the center of the mouth. Since no significant differences in amygdala activation as a function of horizontal alignment were found (see supplemental Results, available at www.jneurosci.org as supplemental material), data were collapsed across horizontal displacements.

Figure 1.

Illustration of the trial structure that was used in the experiment. Eye-tracking data were obtained for the face stimuli with a 2 s prestimulus recording period and a 2–4 s measurement period after stimulus offset.

Blood oxygenation level-dependent functional images were acquired throughout the experiment, which was split into two experimental sessions of 80 trials each. Eye movements were recorded with a camera-based, MRI-compatible eye-tracker at 60 Hz (Resonance Technology).
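The design described above (4 emotions × 2 initial fixation positions, 160 trials in two sessions) can be illustrated with a minimal trial-list sketch. It is not the authors' presentation code. The assignment of identities to fixation positions, the uniform sampling of the jittered intervals, the session split, and all names (`build_trials`, `split_sessions`, the dictionary keys) are assumptions made for illustration. Horizontal displacements are omitted.

```python
import random
from collections import Counter

EMOTIONS = ["neutral", "fearful", "angry", "happy"]
N_IDENTITIES = 40   # 20 women + 20 men, as stated in the text
N_SESSIONS = 2      # two sessions of 80 trials each


def build_trials(seed=0):
    """Return a shuffled list of 160 hypothetical trial descriptions."""
    rng = random.Random(seed)
    trials = []
    for emotion in EMOTIONS:
        # Assumption: within each emotion, a random half of the identities
        # is shown with the eyes at fixation, the other half with the mouth.
        identities = list(range(N_IDENTITIES))
        rng.shuffle(identities)
        half = N_IDENTITIES // 2
        for i, identity in enumerate(identities):
            trials.append({
                "identity": identity,
                "emotion": emotion,
                "fixation": "eyes" if i < half else "mouth",
                "fixation_cross_s": 2.0,          # fixed 2 s fixation cross
                "face_s": 0.150,                  # 150 ms face presentation
                # Assumption: jitter drawn from a uniform distribution.
                "blank_s": rng.uniform(2.0, 4.0),
                "iti_s": rng.uniform(2.0, 12.0),
            })
    rng.shuffle(trials)  # unpredictable order of emotions and shifts
    return trials


def split_sessions(trials):
    """Split the trial list into equally sized consecutive sessions."""
    n = len(trials) // N_SESSIONS
    return [trials[i * n:(i + 1) * n] for i in range(N_SESSIONS)]


trials = build_trials()
sessions = split_sessions(trials)
cell_counts = Counter((t["emotion"], t["fixation"]) for t in trials)
```

With these assumptions, each of the eight design cells contains 20 trials, which is the balance that the within-subjects ANOVA relies on.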

Eye movements.

Details on the eye movement analysis can be found in the supplemental material (available at www.jneurosci.org). In short, we determined the proportion of fixation changes (>0.5°) toward the other major facial feature that were triggered by the stimulus but occurred after stimulus offset. That is, when the eyes were presented at the position of the fixation cross, we determined the proportion of downward fixation changes toward the mouth, and when the mouth followed the fixation cross, we calculated the corresponding proportion of upward fixation changes toward the eyes.
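The proportion measure described above can be sketched as follows. This is a simplified illustration and not the analysis pipeline documented in the supplemental material. It assumes that each valid trial has already been reduced to a single vertical gaze displacement in degrees (positive values meaning upward), and it classifies a fixation change only by direction and the 0.5° threshold. The function names and the input format are hypothetical.

```python
def classify_shift(dy_deg, threshold_deg=0.5):
    """Classify a vertical gaze displacement as 'up', 'down', or None."""
    if dy_deg > threshold_deg:
        return "up"
    if dy_deg < -threshold_deg:
        return "down"
    return None  # displacement too small to count as a fixation change


def proportions_toward_other_feature(trials, threshold_deg=0.5):
    """Proportion of trials with a gaze shift toward the other feature.

    trials: iterable of (initial_fixation, dy_deg) pairs, where
    initial_fixation is 'eyes' or 'mouth'.
    """
    # Eyes at fixation: the mouth lies below. Mouth at fixation: eyes above.
    target = {"eyes": "down", "mouth": "up"}
    hits = {"eyes": 0, "mouth": 0}
    counts = {"eyes": 0, "mouth": 0}
    for initial_fixation, dy_deg in trials:
        counts[initial_fixation] += 1
        if classify_shift(dy_deg, threshold_deg) == target[initial_fixation]:
            hits[initial_fixation] += 1
    return {k: hits[k] / counts[k] for k in counts if counts[k] > 0}


# Made-up displacements for one participant and one emotion.
example_trials = [
    ("mouth", 3.1), ("mouth", 0.2), ("mouth", 4.0), ("mouth", -0.1),
    ("eyes", -2.5), ("eyes", 0.3), ("eyes", 0.0), ("eyes", -0.4),
]
result = proportions_toward_other_feature(example_trials)
```

In this made-up example, two of four mouth-at-fixation trials contain an upward shift and one of four eyes-at-fixation trials contains a downward shift.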

Functional imaging.

Functional imaging was performed on a 3-Tesla whole-body MR-scanner (Siemens Trio) equipped with a 12-channel head coil. Forty transverse slices (slice thickness, 2 mm; 1 mm gap) were acquired in each volume using a T2*-sensitive gradient echo-planar imaging sequence (repetition time, 2380 ms; echo time, 25 ms; flip angle, 90°; field of view, 208 × 208 mm; in-plane resolution, 2 × 2 mm). Additionally, isotropic high-resolution (1 × 1 × 1 mm³) structural images were recorded using a T1-weighted coronal-oriented magnetization-prepared rapid gradient echo sequence with 240 slices.
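As a quick plausibility check on the acquisition parameters above, the in-plane matrix size and the slab coverage follow from simple arithmetic. The symbols are introduced here for illustration only, and the coverage is measured from the outer edge of the first slice to the outer edge of the last slice.

```latex
\[
N_{\mathrm{in\text{-}plane}} = \frac{208\ \mathrm{mm}}{2\ \mathrm{mm}} = 104
\quad \text{voxels per dimension}
\]
\[
\mathrm{coverage} = 40 \times 2\ \mathrm{mm} + 39 \times 1\ \mathrm{mm} = 119\ \mathrm{mm}
\]
```

The center-to-center slice spacing is therefore 3 mm in the slice direction.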

After standard preprocessing of the images (for details, see supplemental Methods, available at www.jneurosci.org as supplemental material), two different random-effects analyses were carried out: First, a 4 × 2 repeated-measures ANOVA was implemented using the simple contrast maps that were derived from each participant. This analysis was based on the whole sample of 22 subjects and was performed to determine whether the amygdala responds differentially to emotional faces when controlling for the initial fixation. A second set of analyses was performed for a reduced sample of 16 subjects with valid eye-tracking data to find out whether individual differences in gaze-orienting behavior are correlated with amygdala responses. These analyses were performed for each emotion (neutral, fearful, angry, happy). As we were primarily interested in the impact of eyes on gaze orientation behavior and amygdala activation, we calculated the difference between the proportion of upward fixation changes elicited by having the mouth at the level of fixation and downward fixation changes elicited by the eyes following the fixation cross. This measure was used as a parametric regressor in different random-effects analyses for each emotion to reveal brain regions showing a significant correlation between brain activation and gazing behavior.
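The gaze preference measure used as a parametric regressor can be written compactly. The notation is introduced here for illustration and does not appear in the original: for participant s and emotion e, the first term is the proportion of upward fixation changes with the mouth at fixation, and the second term is the proportion of downward fixation changes with the eyes at fixation.

```latex
\[
\Delta_{s,e} \;=\; p^{\uparrow}_{s,e} \;-\; p^{\downarrow}_{s,e}
\]
% Group-level illustration using the mean proportions reported in the Discussion:
\[
\Delta_{\mathrm{fearful}} = 0.31 - 0.14 = 0.17,
\qquad
\Delta_{\mathrm{happy}} = 0.28 - 0.21 = 0.07
\]
```

Positive values indicate a net preference for shifting gaze toward the eyes. Using the group means given in the Discussion, this preference is more than twice as large for fearful as for happy faces.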

Since we were primarily interested in amygdala activation, we used a small volume correction in predefined anatomical amygdala regions of interest (Tzourio-Mazoyer et al., 2002). For illustration purposes, only voxels within the amygdala are displayed as statistical maps thresholded at p < 0.01, uncorrected, which are overlaid on the mean structural image from the respective group.

Results

Behavioral data

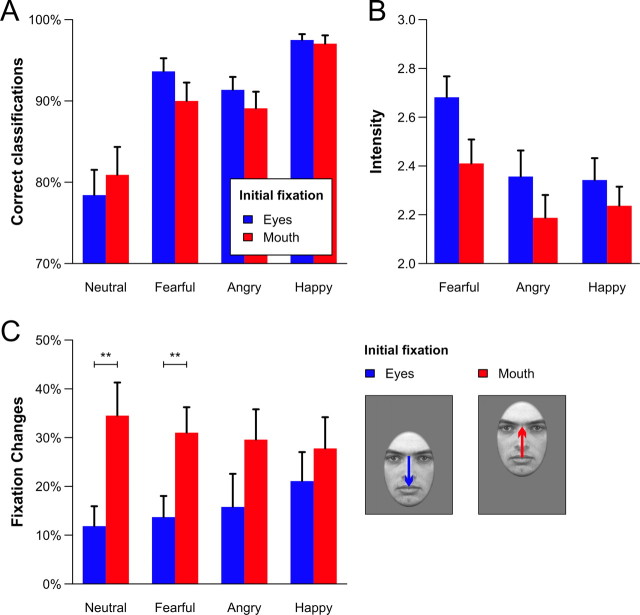

Overall, participants were very accurate in classifying the emotional expressions regardless of the initial fixation position (main effect alignment: F(1,21) < 1). The highest hit rates were observed for happy faces and the lowest for neutral expressions (main effect emotion: F(3,63) = 16.11, Huynh–Feldt ε = 0.61, p < 0.001) (Fig. 2A). The interaction of emotion and alignment did not reach statistical significance (F(3,63) = 1.80, ε = 0.90, p = 0.16). Emotion intensity was rated significantly higher for fearful faces (main effect emotion: F(2,42) = 13.95, ε = 0.84, p < 0.001), but across expressions, higher ratings were given when the eyes were presented at fixation (main effect initial fixation: F(1,21) = 36.93, p < 0.001) (Fig. 2B). No statistically significant interaction was found (F(2,42) = 2.02, ε = 1.00, p = 0.15).

Figure 2.

A–C, Proportion of correct emotion classification (A), average intensity ratings (B), and fixation changes targeting the other major facial feature (C) as a function of the emotional expression and the alignment of the faces. Pairwise post hoc comparisons between the initial fixation positions within each emotion were calculated when a significant interaction effect of both factors was obtained (only for the fixation changes). Error bars represent SEM. **p < 0.01.

Eye movement data

As can be seen from Figure 2C, gaze changes toward the eye region occurred more often than fixation changes leaving the eye region (main effect initial fixation: F(1,15) = 4.70, p < 0.05). However, the size of this effect was found to depend on the emotional expression (interaction of emotion and initial fixation: F(3,45) = 2.95, ε = 0.99, p < 0.05), and it was largest for fearful and neutral faces. The main effect of emotion did not reach statistical significance (F(3,45) < 1).

Imaging data

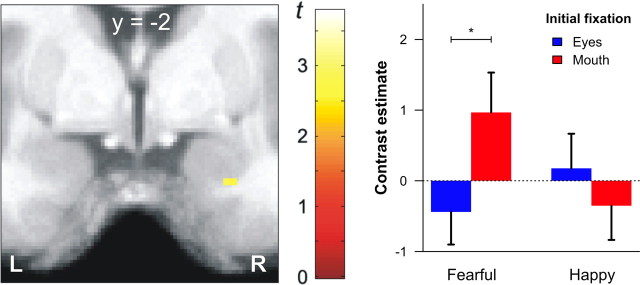

To determine whether the amygdala supports the detection of salient features in the visual periphery and triggers gaze changes toward them (Adolphs and Spezio, 2006), we first calculated the interaction effect of facial expression and initial fixation. In this analysis, we focused on differences between fearful and happy faces, since these expressions differ most clearly in the distribution of diagnostic features across the face (Smith et al., 2005). We observed a significantly elevated amygdala response for fearful faces in trials where the mouth was aligned to the fixation cross (Fig. 3). No such difference was found for happy faces.

Figure 3.

Amygdala regions showing a significant interaction of emotional expression (fearful, happy) and initial fixation (eyes, mouth). The left panel shows the statistical map (coronal plane) of the interaction effect revealing a small cluster in the right amygdala (x = 26, y = −2, z = −28 mm, t(147) = 2.76, p < 0.005, uncorrected). The contrast estimates of this cluster are displayed on the right side. A direct comparison of both initial fixation positions within each emotion revealed only a significant difference for fearful faces (t(21) = 2.58, *p < 0.05). Error bars represent SEM. L, Left; R, right.

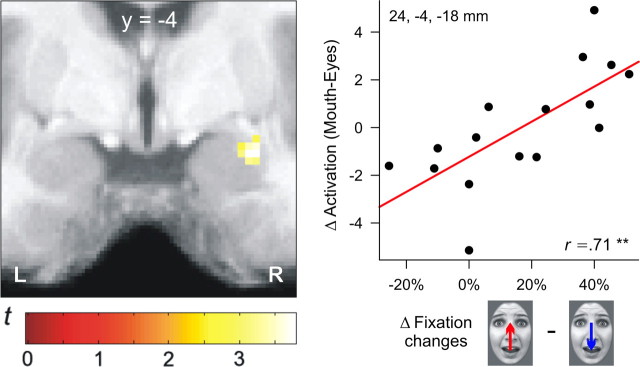

In a second set of analyses, we focused on correlations between individual gazing behavior and brain activations for each emotional expression separately. These parametric analyses revealed a significant correlation between gaze preferences for the eye region and amygdala activation for fearful faces only (r = 0.71, p < 0.01) (Fig. 4). Thus, participants with the largest activity in the right amygdala exhibited the most prominent gaze shifts toward the eye region of fearful faces. No such correlation was found for the other facial expressions (see supplemental Results, available at www.jneurosci.org as supplemental material).

Figure 4.

The left panel shows the significant correlation between lateral amygdala activity and gaze preferences for the eye region of fearful faces (x = 28, y = −4, z = −18; t(14) = 3.77, p < 0.05, familywise error corrected). The scatterplot on the right side depicts the correlation of differential gaze changes and amygdala activation in the peak voxel. L, Left; R, right.
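The reported correlation coefficient and the peak-voxel t statistic can be checked against each other. The sketch below assumes that the t value tests the slope of a simple linear regression across the 16 participants (df = 14) and that it refers to the same voxel as the reported r.

```latex
\[
r \;=\; \frac{t}{\sqrt{t^{2} + \mathrm{df}}}
  \;=\; \frac{3.77}{\sqrt{3.77^{2} + 14}}
  \;=\; \frac{3.77}{\sqrt{28.21}}
  \;\approx\; 0.71
\]
```

Under these assumptions, the t statistic corresponds to the reported r = 0.71.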

Discussion

In this study, we measured brain activity and eye movements in parallel to elucidate the functional role of the amygdala in processing (fearful) facial expressions. By controlling for the initial fixation, we found that facial expressions were experienced as being more intense when the eyes were presented at fixation. Thus, the eyes seem to be a highly salient facial feature that facilitates the processing of social information by disambiguating the depicted expression (Adolphs, 2008). In line with the supposed biological relevance of the eyes, we observed a larger number of reflexive gaze changes toward the eye region than fixation changes leaving the eye region. This effect was modulated by the depicted emotion and seems to follow the distribution of diagnostically relevant facial features (Smith et al., 2005). Fearful faces, for example, can be best identified when fixating the eye region, and accordingly, we observed a significantly higher proportion of gaze changes from the mouth to the eyes (31%) than vice versa (14%). The most relevant feature for recognizing happiness is the mouth, and consequently, we found a reduced proportion of gaze changes toward the eyes (28%) and a larger number of fixation changes targeting the mouth (21%).

In correspondence with its assumed functional role (Adolphs and Spezio, 2006), amygdala activation was specifically enhanced when fearful faces were presented with the mouth aligned to fixation. No such effect was found for happy faces, which triggered far fewer gaze changes toward the eye region. Importantly, this differential activation pattern cannot be explained by altered visual input during fixation changes, because stimulus duration was very brief and, therefore, gaze changes only occurred after stimulus offset. The magnitude of gaze preferences for the eye region of fearful faces was correlated with amygdala activation across participants, thus substantiating the supposed relevance of this brain structure in reflexive gaze orienting toward fearfully widened eyes (Adolphs et al., 2005; Adolphs and Spezio, 2006).

In contrast to former studies that did not take into account the role of eye movements during stimulus evaluation, we did not observe a general increase of amygdala activation when contrasting fearful versus neutral (Breiter et al., 1996; Lange et al., 2003; Gläscher et al., 2004; Reinders et al., 2005, 2006) or fearful versus happy facial expressions (Morris et al., 1996, 1998; Hardee et al., 2008) when collapsing data across initial fixation positions (see supplemental Results, available at www.jneurosci.org as supplemental material). However, our design allowed us to dissociate neuronal activity related to the perception of fearful faces from gaze reorientation-related activity and thus provides the basis for a reinterpretation of previous data by showing that the amygdala does not seem to be activated by fearful faces or eyes, per se, but plays an important role in initiating reflexive attentional shifts toward salient facial features (such as fearfully widened eyes) that appear in the visual periphery (Adolphs and Spezio, 2006). Thus, enhanced amygdala responses that were previously shown for supraliminally (Morris et al., 2002) and subliminally (Whalen et al., 2004) presented fearful eyes in free viewing conditions might in part be related to attentional shifts toward the eyes.

This functional interpretation of amygdala activation, which mirrors specific deficits observed in patients with amygdala lesions (Adolphs et al., 2005; Spezio et al., 2007b), may also explain repeated findings of amygdala hypoactivation (Critchley et al., 2000; Ashwin et al., 2007; Hadjikhani et al., 2007), abnormal fixation patterns (Pelphrey et al., 2002; Spezio et al., 2007a), and behavioral difficulties in identifying facial expressions (Howard et al., 2000; Ashwin et al., 2006) in patients with autism spectrum disorder. Interestingly, the emotion recognition deficits in autism were linked to an inadequate use of low spatial frequencies (Kätsyri et al., 2008). Such coarse image information, however, is sufficient to elicit reliable amygdala activation in healthy humans when containing fearful facial features (Vuilleumier et al., 2003). These findings substantiate the conclusion that amygdala activation elicited by fearful faces is related to attentional shifts toward the eye region (Dalton et al., 2005; Adolphs and Spezio, 2006). Malfunctions of the amygdala might thus result in difficulties in processing specific facial features (such as fearfully widened eyes) that normally trigger these attentional shifts.

Footnotes

This study was supported by a grant from the Bundesministerium für Bildung und Forschung (Network “Social Cognition”). We thank Jan Peters and Bryan A. Strange for helpful comments on an earlier draft of this manuscript and K. Müller and K. Wendt for help with MR scanning.

References

- Adolphs R. Fear, faces, and the human amygdala. Curr Opin Neurobiol. 2008;18:166–172. doi: 10.1016/j.conb.2008.06.006.

- Adolphs R, Spezio M. Role of the amygdala in processing visual social stimuli. Prog Brain Res. 2006;156:363–378. doi: 10.1016/S0079-6123(06)56020-0.

- Adolphs R, Tranel D, Damasio H, Damasio A. Impaired recognition of emotion in facial expressions following bilateral damage to the human amygdala. Nature. 1994;372:669–672. doi: 10.1038/372669a0.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Ashwin C, Chapman E, Colle L, Baron-Cohen S. Impaired recognition of negative basic emotions in autism: a test of the amygdala theory. Soc Neurosci. 2006;1:349–363. doi: 10.1080/17470910601040772.

- Ashwin C, Baron-Cohen S, Wheelwright S, O'Riordan M, Bullmore ET. Differential activation of the amygdala and the ‘social brain’ during fearful face-processing in Asperger syndrome. Neuropsychologia. 2007;45:2–14. doi: 10.1016/j.neuropsychologia.2006.04.014.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17:875–887. doi: 10.1016/s0896-6273(00)80219-6.

- Critchley HD, Daly EM, Bullmore ET, Williams SC, Van Amelsvoort T, Robertson DM, Rowe A, Phillips M, McAlonan G, Howlin P, Murphy DG. The functional neuroanatomy of social behaviour: changes in cerebral blood flow when people with autistic disorder process facial expressions. Brain. 2000;123:2203–2212. doi: 10.1093/brain/123.11.2203.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, Alexander AL, Davidson RJ. Gaze fixation and the neural circuitry of face processing in autism. Nat Neurosci. 2005;8:519–526. doi: 10.1038/nn1421.

- Gläscher J, Tüscher O, Weiller C, Büchel C. Elevated responses to constant facial emotions in different faces in the human amygdala: an fMRI study of facial identity and expression. BMC Neurosci. 2004;5:45. doi: 10.1186/1471-2202-5-45.

- Goeleven E, De Raedt R, Leyman L, Verschuere B. The Karolinska directed emotional faces: a validation study. Cogn Emot. 2008;22:1094–1118.

- Hadjikhani N, Joseph RM, Snyder J, Tager-Flusberg H. Abnormal activation of the social brain during face perception in autism. Hum Brain Mapp. 2007;28:441–449. doi: 10.1002/hbm.20283.

- Hardee JE, Thompson JC, Puce A. The left amygdala knows fear: laterality in the amygdala response to fearful eyes. Soc Cogn Affect Neurosci. 2008;3:47–54. doi: 10.1093/scan/nsn001.

- Hariri AR, Tessitore A, Mattay VS, Fera F, Weinberger DR. The amygdala response to emotional stimuli: a comparison of faces and scenes. Neuroimage. 2002;17:317–323. doi: 10.1006/nimg.2002.1179.

- Howard MA, Cowell PE, Boucher J, Broks P, Mayes A, Farrant A, Roberts N. Convergent neuroanatomical and behavioural evidence of an amygdala hypothesis of autism. Neuroreport. 2000;11:2931–2935. doi: 10.1097/00001756-200009110-00020.

- Kätsyri J, Saalasti S, Tiippana K, von Wendt L, Sams M. Impaired recognition of facial emotions from low-spatial frequencies in Asperger syndrome. Neuropsychologia. 2008;46:1888–1897. doi: 10.1016/j.neuropsychologia.2008.01.005.

- Lange K, Williams LM, Young AW, Bullmore ET, Brammer MJ, Williams SC, Gray JA, Phillips ML. Task instructions modulate neural responses to fearful facial expressions. Biol Psychiatry. 2003;53:226–232. doi: 10.1016/s0006-3223(02)01455-5.

- Lundqvist D, Flykt A, Öhman A. The Karolinska directed emotional faces - KDEF, CD-ROM from Department of Clinical Neuroscience, Psychology section, Karolinska Institutet. 1998.

- Morris JS, Frith CD, Perrett DI, Rowland D, Young AW, Calder AJ, Dolan RJ. A differential neural response in the human amygdala to fearful and happy facial expressions. Nature. 1996;383:812–815. doi: 10.1038/383812a0.

- Morris JS, Friston KJ, Büchel C, Frith CD, Young AW, Calder AJ, Dolan RJ. A neuromodulatory role for the human amygdala in processing emotional facial expressions. Brain. 1998;121:47–57. doi: 10.1093/brain/121.1.47.

- Morris JS, deBonis M, Dolan RJ. Human amygdala responses to fearful eyes. Neuroimage. 2002;17:214–222. doi: 10.1006/nimg.2002.1220.

- Öhman A, Carlsson K, Lundqvist D, Ingvar M. On the unconscious subcortical origin of human fear. Physiol Behav. 2007;92:180–185. doi: 10.1016/j.physbeh.2007.05.057.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. J Autism Dev Disord. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Pessoa L, Japee S, Sturman D, Ungerleider LG. Target visibility and visual awareness modulate amygdala responses to fearful faces. Cereb Cortex. 2006;16:366–375. doi: 10.1093/cercor/bhi115.

- Reinders AA, den Boer JA, Büchel C. The robustness of perception. Eur J Neurosci. 2005;22:524–530. doi: 10.1111/j.1460-9568.2005.04212.x.

- Reinders AA, Gläscher J, de Jong JR, Willemsen AT, den Boer JA, Büchel C. Detecting fearful and neutral faces: BOLD latency differences in amygdala-hippocampal junction. Neuroimage. 2006;33:805–814. doi: 10.1016/j.neuroimage.2006.06.052.

- Smith ML, Cottrell GW, Gosselin F, Schyns PG. Transmitting and decoding facial expressions. Psychol Sci. 2005;16:184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- Spezio ML, Adolphs R, Hurley RS, Piven J. Analysis of face gaze in autism using ‘Bubbles’. Neuropsychologia. 2007a;45:144–151. doi: 10.1016/j.neuropsychologia.2006.04.027.

- Spezio ML, Huang PY, Castelli F, Adolphs R. Amygdala damage impairs eye contact during conversations with real people. J Neurosci. 2007b;27:3994–3997. doi: 10.1523/JNEUROSCI.3789-06.2007.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. Neuroimage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Effects of attention and emotion on face processing in the human brain: an event-related fMRI study. Neuron. 2001;30:829–841. doi: 10.1016/s0896-6273(01)00328-2.

- Vuilleumier P, Armony JL, Driver J, Dolan RJ. Distinct spatial frequency sensitivities for processing faces and emotional expressions. Nat Neurosci. 2003;6:624–631. doi: 10.1038/nn1057.

- Whalen PJ, Rauch SL, Etcoff NL, McInerney SC, Lee MB, Jenike MA. Masked presentations of emotional facial expressions modulate amygdala activity without explicit knowledge. J Neurosci. 1998;18:411–418. doi: 10.1523/JNEUROSCI.18-01-00411.1998.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, Johnstone T. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.