Abstract

Impairments in decision making about risks and rewards have been observed in patients with amygdala damage. Similarly, lesions of the basolateral amygdala (BLA) in rodents disrupt cost/benefit decision making, reducing preference for larger rewards obtainable after a delay or considerable physical effort. We assessed the effects of inactivation of the BLA on risk- and effort-based decision making, using discounting tasks conducted in an operant chamber. Separate groups of rats were trained on either a risk- or effort-discounting task, consisting of four blocks of 10 free-choice trials. Selection of one lever always delivered a smaller reward (one or two pellets), whereas responding on the other delivered a larger, four pellet reward. For risk discounting, the probability of receiving the larger reward decreased across trial blocks (100–12.5%), whereas on the effort task, the larger reward was delivered after a ratio of presses that increased across blocks (2–20). Infusions of the GABA agonists baclofen/muscimol into the BLA disrupted risk discounting, inducing a risk-averse pattern of choice, and increased response latencies and trial omissions, most prominently during trial blocks that provided the greatest uncertainty about the most beneficial course of action. Similar inactivations also increased effort discounting, reducing the preference for larger yet more costly rewards, even when the relative delays to reward delivery were equalized across response options. These findings point to a fundamental role for the BLA in different forms of cost/benefit decision making, facilitating an organism's ability to overcome a variety of costs (work, uncertainty, delays) to promote actions that may yield larger rewards.

Introduction

The amygdala has been implicated in different forms of cost/benefit decision making in both humans and animals. Patients with amygdala damage make more disadvantageous, risky choices on a variety of tasks that simulate real-life decisions associated with uncertainty, reward, and punishment (Bechara et al., 1999; Brand et al., 2007). Similarly, functional imaging studies have reported increased activation of the amygdala when subjects choose options associated with larger reward magnitudes (Smith et al., 2009), less ambiguous options (Hsu et al., 2005), or risky options in the context of a certain loss (De Martino et al., 2006).

The involvement of the amygdala in decision making has been linked to its well established role in reward-related associative learning (Seymour and Dolan, 2008). Lesions of the basolateral amygdala (BLA) impair the ability of conditioned reinforcers to exert control over overt behavior (Robbins and Everitt, 1996; Murray, 2007) and also disrupt guidance of instrumental action in response to changes in reward value (Salinas et al., 1993; Corbit and Balleine, 2005; McLaughlin and Floresco, 2007). Thus, the contribution of the BLA to different forms of decision making may be related to its proposed role in integrating information about potential outcome value with action–outcome associations to guide behavior (O'Doherty, 2004; Balleine and Killcross, 2006; Weller et al., 2007).

A key component of decision making that can be assessed in animals is the evaluation of costs associated with different candidate actions relative to the potential rewards that may be obtained by those actions. In these studies, animals choose between options that yield smaller, easily obtainable rewards or larger, more costly rewards. Imposition of these costs, such as delaying the delivery of the reward or requiring more physical effort to obtain it, leads to a “discounting” of larger rewards, increasing preference for smaller, low-cost rewards (Floresco et al., 2008a). The BLA plays a critical role in these forms of decision making. Lesions or inactivations of the BLA reduce preference for larger, delayed rewards (Winstanley et al., 2004) or larger rewards that require rats to climb a scalable barrier to obtain them (Floresco and Ghods-Sharifi, 2007). Importantly, these manipulations do not impair discrimination between rewards of different magnitudes when the effort or delay costs associated with both rewards are equal. However, it is unclear whether the contributions of the BLA to effort-based decisions are related specifically to judgments involving effort costs or to the delays to reinforcement that are intertwined with these costs. Moreover, the role of this nucleus in risk-related decisions has not been explored in animals. Humans with amygdala damage choose riskier options associated with larger rewards, yet these patterns of choice are typically disadvantageous in the long term. Whether BLA lesions cause a uniform increase in risky choice or lead to a more fundamental disruption in calculating the long-term value of risky versus safe choices has yet to be resolved. Thus, the present study investigated the role of the BLA in effort- and risk-based decision making, using recently developed discounting paradigms (Cardinal and Howes, 2005; Floresco et al., 2008b; St Onge and Floresco, 2009).
We assessed the effects of reversible inactivation of the BLA on a form of risk discounting, where rats chose between smaller, certain rewards and larger, uncertain rewards. In this task, selection of the larger reward option carries with it an inherent “risk” of not obtaining any reward on a given trial. In addition, the role of the BLA in effort discounting was assessed under normal conditions and also when the delay to reward delivery was equalized across response options.

Materials and Methods

Animals.

Male Long–Evans rats (Charles River Laboratories) weighing 275–300 g at the beginning of behavioral training were used. On arrival, rats were group housed and given 1 week to acclimatize to the colony. Subsequently, they were individually housed and food restricted to 85–90% of their free-feeding weight for 1 week before behavioral training and given ad libitum access to water for the duration of the experiment. Feeding occurred in the rats' home cages at the end of the experimental day. All testing was in accordance with the Canadian Council of Animal Care and the Animal Care Committee of the University of British Columbia.

Apparatus.

Behavioral testing was conducted in 12 operant chambers (30.5 × 24 × 21 cm; Med-Associates) enclosed in sound-attenuating boxes. The boxes were equipped with a fan that provided ventilation and masked extraneous noise. Each chamber was fitted with two retractable levers, one located on each side of a central food receptacle where food reinforcement (45 mg; Bioserv) was delivered via a pellet dispenser. The chambers were illuminated by a single 100 mA house light located in the top-center of the wall opposite the levers. Four infrared photobeams were mounted on the sides of each chamber, and another photobeam was located in the food receptacle. Locomotor activity was indexed by the number of photobeam breaks that occurred during a session. All experimental data were recorded by an IBM personal computer connected to the chambers via an interface.

Lever-pressing training.

Our initial training protocols have been described previously (Floresco et al., 2008b; St Onge and Floresco, 2009). On the day before their first exposure to the operant chambers, rats were given ∼25 reward pellets in their home cage. On the first day of training, 2–3 pellets were delivered into the food cup, and crushed pellets were placed on a lever before the rat was placed in the chamber. Rats were first trained under a fixed-ratio 1 schedule to a criterion of 60 presses in 30 min, first for one lever and then repeated for the other lever (counterbalanced left/right between subjects). They were then trained on a simplified version of the full task. These 90-trial sessions began with the levers retracted and the operant chamber in darkness. Every 40 s, a trial was initiated with the illumination of the houselight and the insertion of one of the two levers into the chamber. If the rat failed to respond on the lever within 10 s, the lever was retracted, the chamber darkened, and the trial was scored as an omission. If the rat responded within 10 s, the lever retracted. For rats used in the effort-discounting experiment, a response on either lever always delivered one pellet, whereas for those to be trained on the risk-discounting task, a successful lever response delivered a single pellet with 50% probability. This latter schedule familiarized the rats with the probabilistic nature of the full task. In every pair of trials, the left and right levers were each presented once, and the order within the pair was random. Rats were trained for ∼5 d to a criterion of 80 or more successful trials (i.e., ≤10 omissions), after which they were trained on the full version of either the risk- or effort-discounting task, 6–7 d per week.
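The pairing rule used during lever-press training can be sketched as below. This is a minimal illustration that assumes a session is a flat list of single-lever presentations; the function names `paired_lever_sequence` and `meets_training_criterion` are hypothetical and are not taken from the original chamber-control software.

```python
import random

def paired_lever_sequence(n_trials, rng=None):
    """Return lever presentations in which every consecutive pair of trials
    contains the left and the right lever once, in random order."""
    if n_trials % 2:
        raise ValueError("n_trials must be even so that trials form complete pairs")
    rng = rng or random.Random()
    sequence = []
    for _ in range(n_trials // 2):
        pair = ["left", "right"]
        rng.shuffle(pair)  # randomize order within the pair only
        sequence.extend(pair)
    return sequence

def meets_training_criterion(omissions, n_trials=90, min_successful=80):
    """Simplified-task criterion: at least 80 successful trials out of 90."""
    return n_trials - omissions >= min_successful

sequence = paired_lever_sequence(90, random.Random(1))
print(sequence[:4], meets_training_criterion(omissions=10))
```

Because shuffling acts only within pairs, each lever is presented 45 times per session and neither lever can appear more than twice in a row.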

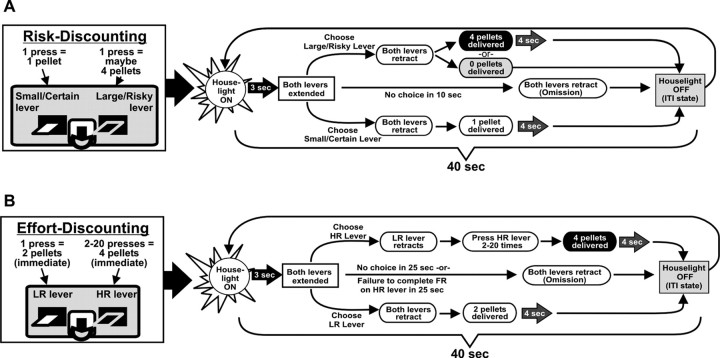

Risk discounting.

This task was modified from the original procedure described by Cardinal and Howes (2005), which we have used previously to assess the role of dopamine in risk-based decision making (St Onge and Floresco, 2009) (Fig. 1A). Each 48 min session consisted of 72 discrete choice trials, separated into four blocks of 18 trials. A session began in darkness with both levers retracted (the intertrial state). A trial began every 40 s with the illumination of the houselight and insertion of one or both levers into the chamber. One lever was designated the large/risky lever and the other the small/certain lever; these designations remained consistent throughout training (counterbalanced left/right). If the rat did not respond within 10 s of lever presentation, the chamber was reset to the intertrial state until the next trial (omission). A response on either lever caused both to be retracted. Choice of the small/certain lever always delivered one pellet with 100% probability; choice of the large/risky lever delivered four pellets in a probabilistic manner that was varied systematically across the session (see below). Each block began with eight forced-choice trials in which only one lever was presented (four trials for each lever, randomized in pairs), permitting animals to learn the amount of food associated with each lever and the respective probability of receiving reinforcement during that block. These were followed by 10 free-choice trials, in which both levers were presented. When food was delivered, the houselight remained on for another 4 s, after which the chamber reverted to the intertrial state until the next trial. Multiple pellets were delivered 0.5 s apart. The probability of obtaining four pellets after pressing the large/risky lever was initially 100%, then 50, 25, and 12.5%, respectively, for each successive block. For each session and trial block, the probability of receiving the large reward was drawn randomly from a set probability distribution.
Using these probabilities, selection of the large/risky lever would be advantageous in the first two blocks and disadvantageous in the last block, whereas rats could obtain an equivalent number of food pellets after responding on either lever during the 25% block. Latencies to make a choice and locomotor activity (photobeam breaks) were also recorded.
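The expected-value reasoning behind this statement can be checked directly. The short Python sketch below multiplies the four-pellet reward by the nominal probability of each block and compares the result with the one certain pellet; it uses only the programmed probabilities, not the probabilities rats actually experienced, and the helper names are illustrative.

```python
# Nominal task parameters taken from the text.
SMALL_CERTAIN_PELLETS = 1
LARGE_RISKY_PELLETS = 4
BLOCK_PROBABILITIES = [1.0, 0.5, 0.25, 0.125]

def expected_pellets(probability, pellets=LARGE_RISKY_PELLETS):
    """Expected pellets per choice of the large/risky lever."""
    return probability * pellets

def classify_block(probability):
    """Compare the risky option's expected value with the certain option."""
    ev = expected_pellets(probability)
    if ev > SMALL_CERTAIN_PELLETS:
        return "risky advantageous"
    if ev < SMALL_CERTAIN_PELLETS:
        return "risky disadvantageous"
    return "equivalent"

verdicts = [classify_block(p) for p in BLOCK_PROBABILITIES]
for p, verdict in zip(BLOCK_PROBABILITIES, verdicts):
    print(f"{p:>6.1%}: EV = {expected_pellets(p):.1f} pellets -> {verdict}")
```

The expected values are 4, 2, 1, and 0.5 pellets per risky choice, so only the 25% block makes the two options equivalent on average.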

Figure 1.

Task design. Cost/benefit contingencies associated with responding on either lever (left) and format of a single free-choice trial (right) of the risk (A) and effort-discounting tasks (B).

Effort discounting.

These procedures have been described previously (Floresco et al., 2008b) (Fig. 1B) and were similar to the risk-discounting task in a number of respects. Each 32 min session consisted of 48 discrete choice trials, separated into four blocks. Each block of trials comprised two forced-choice trials (one lever each), followed by 10 free-choice trials. Trials began at 40 s intervals with the illumination of the houselight, followed by extension of one or both levers 3 s later. One lever was designated as the high-reward (HR) lever and the other as the low-reward (LR) lever. Failure to choose a lever within 25 s of lever presentation (omission) resulted in reversion to the intertrial state (houselight off, levers retracted). Selection of the LR lever caused both levers to be retracted and the immediate delivery of two pellets. However, the first response on the HR lever led to the retraction of the LR lever, whereas the HR lever remained in the chamber. Rats were required to complete a fixed ratio of presses on the HR lever to receive four pellets, delivered immediately after the last lever press, after which the lever retracted. The ratio of presses required on the HR lever was varied systematically across blocks, starting at 2, then 5, 10, and 20. If the rat did not complete the ratio within 25 s, the lever retracted, no food was delivered, and the chamber reverted to the intertrial state. These incomplete trials were very rare (mean <1 across all treatment conditions), even on test days that increased the overall omission rate. Rates of pressing on the HR lever (i.e., presses per second) were recorded, as were the latencies to initiate a choice and overall locomotor activity (photobeam breaks).
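The outcome rules of a single free-choice trial can be summarized as a small function. This is a hypothetical sketch, not the program that ran the chambers; in particular, it assumes that the first HR press counts toward the ratio and that the 25 s completion window is measured from that first press, neither of which is stated explicitly above.

```python
LR_PELLETS = 2
HR_PELLETS = 4
RATIO_BY_BLOCK = [2, 5, 10, 20]  # HR presses required in blocks 1-4
TIME_LIMIT_S = 25.0              # window for completing the HR ratio

def effort_trial_outcome(choice, block, press_times_s=None):
    """Return (trial_type, pellets) for one free-choice trial.

    choice: "LR", "HR", or None (no lever chosen within the response window).
    block: 0-based trial block index.
    press_times_s: times (s) of successive HR presses, measured from the
    first HR press (assumption); the first press counts toward the ratio.
    """
    if choice is None:
        return ("omission", 0)
    if choice == "LR":
        return ("LR", LR_PELLETS)  # single press, immediate delivery
    if choice != "HR":
        raise ValueError(f"unknown choice: {choice!r}")
    ratio = RATIO_BY_BLOCK[block]
    in_time = [t for t in (press_times_s or []) if t <= TIME_LIMIT_S]
    if len(in_time) >= ratio:
        return ("HR completed", HR_PELLETS)
    return ("HR incomplete", 0)  # lever retracts, no food

steady = [0.5 * i for i in range(20)]  # 2 presses/s, last press at 9.5 s
print(effort_trial_outcome("HR", 3, steady))
```

For example, a rat pressing steadily at 2 presses per second completes the 20-press ratio of the last block in 9.5 s, well inside the window.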

Effort discounting with equivalent delays.

After the initial microinfusion test days using the effort-discounting task, rats in this experiment were then trained on a modified procedure (Floresco et al., 2008b). Here, a single press on the LR lever delivered two pellets after a delay equivalent to that required for rats to complete the ratio of presses on the HR lever using the standard effort-discounting procedure (0.5–9 s). Thus, for each block of trials, the delay to food delivery after an initial choice of either lever was equalized. The delay to receive two pellets after a single press on the LR lever increased across trial blocks and was calculated based on the average time it took all rats in the respective groups to press the HR lever 2, 5, 10, and 20 times during the last 3 d of training on the effort-discounting task before the first sequence of infusions. Thus, if rats required 9 s to press the HR lever 20 times during the last trial block, a single press on the LR lever during this block would deliver two pellets after a 9 s delay. During these sessions, the intertrial interval was 40 s, as with the effort-discounting task.
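The delay calculation amounts to averaging completion times for each ratio. The sketch below assumes completion times have already been pooled across rats and the last 3 d of training; the numbers in the example are made up for illustration and are not data from this study.

```python
from statistics import mean

def equivalent_delays(completion_times_s, ndigits=1):
    """Map each HR ratio to an LR delay (s): the mean time taken to complete
    that ratio, pooled across rats and training days (assumed input format)."""
    return {ratio: round(mean(times), ndigits)
            for ratio, times in sorted(completion_times_s.items())}

# Hypothetical completion times (s) for each ratio; illustrative only.
example = {
    2: [0.5, 0.7],
    5: [2.0, 2.4],
    10: [4.4, 4.8],
    20: [8.6, 9.4],
}
delays = equivalent_delays(example)
print(delays)
```

Each LR press in a given block would then be followed by the delay mapped to that block's HR ratio.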

Training procedure, surgery, and microinfusion protocol.

Rats were trained on their respective task until as a group they (1) chose the large/risky (risk) or HR (effort) lever during the first trial block on at least 80% of successful trials and (2) demonstrated stable baseline levels of discounting for 3 consecutive days. Stability was assessed using statistical procedures similar to those described by Winstanley et al. (2004), Floresco et al. (2008b), and St Onge and Floresco (2009). In brief, data from three consecutive sessions were analyzed with a repeated-measures ANOVA with two within-subjects factors (training day and trial block). If the effect of block was significant at the p < 0.05 level, but there was no main effect of day or day × trial block interaction (at the p > 0.1 level), animals were judged to have achieved stable baseline levels of choice behavior. After the stability criterion was achieved, rats were provided food ad libitum and, 1–2 d later, were subjected to surgery.
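The decision rule can be written out explicitly. The sketch below takes the p values as given (computing them would require a repeated-measures ANOVA routine, which is not shown), and the function names are hypothetical.

```python
def is_stable(p_block, p_day, p_day_x_block, alpha=0.05, stability_p=0.1):
    """Stability rule from the text, applied to p values from a two-way
    repeated-measures ANOVA (day x block) on three consecutive sessions."""
    return p_block < alpha and p_day > stability_p and p_day_x_block > stability_p

def ready_for_surgery(first_block_choice, p_block, p_day, p_day_x_block):
    """Group criterion: >= 80% choice of the large/risky or HR lever in the
    first block, plus stable discounting across the three sessions."""
    return first_block_choice >= 0.80 and is_stable(p_block, p_day, p_day_x_block)

print(ready_for_surgery(0.92, p_block=0.001, p_day=0.45, p_day_x_block=0.30))
```

Note that the rule requires a significant block effect (rats must still discount) while treating day effects as absent only when p exceeds the more lenient 0.1 threshold.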

Rats were anesthetized with 100 mg/kg ketamine hydrochloride and 7 mg/kg xylazine and implanted with bilateral 23 gauge stainless steel guide cannulae into the BLA (flat skull: anteroposterior = −3.1 mm; mediolateral = ±5.2 mm from bregma; and dorsoventral = −6.5 mm from dura) using standard stereotaxic techniques. The guide cannulae were held in place with stainless steel screws and dental acrylic. Thirty-gauge obturators flush with the end of the guide cannulae remained in place until the infusions were made. Rats were given at least 7 d to recover from surgery before testing. During this period, they were handled for at least 5 min each day and were food restricted to 85% of their free-feeding weight.

Rats were subsequently retrained on their respective task for at least 5 d until the group displayed stable levels of choice behavior for 3 consecutive days. One to two days before their first microinfusion test day, obturators were removed, and a mock infusion procedure was conducted. Stainless steel injectors were placed in the guide cannulae for 2 min, but no infusion was administered. This procedure habituated rats to the routine of infusions to reduce stress on subsequent test days. The day after displaying stable discounting, the group received its first microinfusion test day.

A within-subjects design was used for all experiments. Inactivation of the BLA was achieved by microinfusion of a solution containing the GABAB agonist baclofen and the GABAA agonist muscimol (Sigma-Aldrich). Both drugs were dissolved in physiological saline, mixed separately at a concentration of 500 ng/μl, and then combined in equal volumes so that the final concentration of each compound in solution was 250 ng/μl. Drugs or saline were infused at a volume of 0.5 μl so that the final dose of both baclofen and muscimol was 125 ng per side. Infusions of GABA agonists or saline were administered bilaterally into the BLA via 30 gauge injection cannulae that protruded 1.0 mm past the end of the guide cannulae, at a rate of 0.5 μl/75 s by a microsyringe pump. Injection cannulae were left in place for an additional 1 min to allow for diffusion. Each rat remained in its home cage for an additional 10 min period before behavioral testing. Previous studies using similar infusions have observed dissociable effects on behavior when GABA agonists have been infused into adjacent brain regions separated by ∼1 mm (Floresco et al., 2006; Marquis et al., 2007; Moreira et al., 2007), suggesting that the effective functional spread of these treatments is unlikely to be much more than 1 mm in radius.
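The dose arithmetic above can be verified in a few lines. The constants are the values given in the text; the script reproduces the 250 ng/μl and 125 ng per side figures and converts the pump rate to μl/min.

```python
STOCK_NG_PER_UL = 500.0   # each drug dissolved separately at 500 ng/ul
INFUSION_VOLUME_UL = 0.5  # volume infused per side
INFUSION_TIME_S = 75.0    # 0.5 ul delivered over 75 s

# Mixing equal volumes of the two stocks halves the concentration of each drug.
final_conc_ng_per_ul = STOCK_NG_PER_UL / 2
dose_per_side_ng = final_conc_ng_per_ul * INFUSION_VOLUME_UL
rate_ul_per_min = INFUSION_VOLUME_UL * 60 / INFUSION_TIME_S

print(final_conc_ng_per_ul, dose_per_side_ng, rate_ul_per_min)
```

The same 125 ng per side applies to baclofen and to muscimol separately, because each is present at 250 ng/μl in the combined solution.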

On the first infusion test day, half of the rats in each group received saline infusions, and the other half received baclofen/muscimol. The next day, they received a baseline training day (no infusion). If, for any individual rat, choice of the large/risky or HR lever deviated by >15% from its preinfusion baseline, it received an additional day of training before the second infusion test. On the following day, rats received a second counterbalanced infusion of either saline or baclofen/muscimol.
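The counterbalanced test sequence, including the optional extra training day, is sketched below. Assigning the first treatment by alternating through the rat list and interpreting ">15%" as a difference of more than 15 percentage points in overall choice are both assumptions made for illustration; the text says only that half of each group received each treatment first.

```python
def infusion_schedule(rat_ids, baseline_pct, drug_free_day_pct, threshold=15.0):
    """Build each rat's day-by-day sequence from the first infusion test on.

    baseline_pct: preinfusion choice (%) of the large/risky or HR lever.
    drug_free_day_pct: choice (%) on the no-infusion day after test 1.
    """
    schedule = {}
    for index, rat in enumerate(rat_ids):
        # Alternate which treatment comes first (assumed assignment scheme).
        if index % 2 == 0:
            first, second = "saline", "baclofen/muscimol"
        else:
            first, second = "baclofen/muscimol", "saline"
        days = [first, "baseline training"]
        if abs(drug_free_day_pct[rat] - baseline_pct[rat]) > threshold:
            days.append("extra training")  # choice had not returned to baseline
        days.append(second)
        schedule[rat] = days
    return schedule

plan = infusion_schedule(["r1", "r2"], {"r1": 60.0, "r2": 55.0}, {"r1": 62.0, "r2": 35.0})
print(plan)
```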

Reward magnitude discrimination.

After testing on either the risk- or effort-discounting task was complete, a subset of rats from each group was trained on a reward magnitude discrimination task. This procedure was similar to the effort-discounting procedure, as the task consisted of four blocks of 12 trials (2 forced-choice trials followed by 10 free-choice trials). Here, a single response on one lever immediately delivered four pellets with 100% probability, whereas one press on the other lever always delivered one pellet immediately. For each rat, the lever associated with the four pellet reward was the same as it had been for that animal during training on the effort- or risk-discounting task. After 7 d of training, rats received another sequence of counterbalanced infusions of saline and baclofen/muscimol.

Histology.

After completion of behavioral testing, rats were killed in a carbon dioxide chamber. Brains were removed and fixed in a 4% formalin solution. The brains were frozen and sliced in 50 μm sections before being mounted and stained with Cresyl Violet. Placements were verified with reference to the neuroanatomical atlas of Paxinos and Watson (1998).

Data analysis.

The primary dependent measure of interest was the proportion of choices directed toward the large/risky (risk) or HR (effort) lever for each block of free-choice trials, corrected for trial omissions. For each block, this was calculated by dividing the number of choices of the large/risky or HR lever by the total number of successful (nonomitted) trials. Choice and response latency data were analyzed using two-way, within-subjects ANOVAs, with treatment and trial block as the within-subjects factors. In each of these analyses, the effect of trial block was always significant (all p < 0.001) and will not be reported further. Locomotor activity (i.e., photobeam breaks) and the number of trial omissions were analyzed with one-way repeated-measures ANOVAs. Pairwise comparison tests (Dunnett's or Tukey's) were used when appropriate.
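A minimal sketch of this calculation follows, assuming trial records tagged by block and outcome (the record format is hypothetical). It shows how a block with many omissions still yields a well-defined choice proportion, and it returns `None` for a block with no successful trials.

```python
def block_choice_proportions(trials, n_blocks=4):
    """Proportion of large/risky (or HR) choices per block of free-choice trials.

    trials: iterable of (block_index, outcome), outcome in
    {"large", "small", "omission"}. Omissions are excluded from the
    denominator, so each proportion is large choices / successful trials.
    """
    n_large = [0] * n_blocks
    n_successful = [0] * n_blocks
    for block, outcome in trials:
        if outcome == "omission":
            continue
        n_successful[block] += 1
        if outcome == "large":
            n_large[block] += 1
    return [large / ok if ok else None for large, ok in zip(n_large, n_successful)]

# One hypothetical session: 10 free-choice trials per block.
session = (
    [(0, "large")] * 9 + [(0, "small")]
    + [(1, "large")] * 3 + [(1, "small")] * 3 + [(1, "omission")] * 4
    + [(2, "small")] * 10
    + [(3, "omission")] * 10
)
print(block_choice_proportions(session))
```

In the second block, 3 large choices out of 6 successful trials give 0.5, even though 4 of the 10 trials were omitted.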

Results

Risk discounting

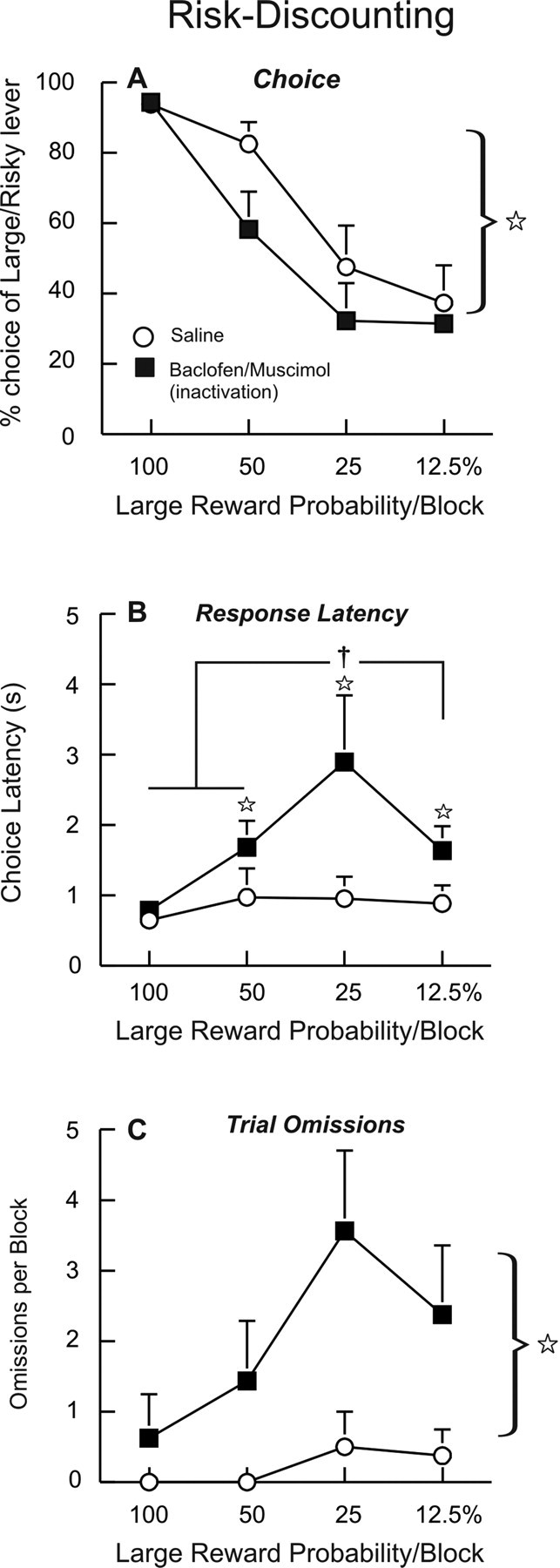

Initially, 12 rats were trained and tested on the risk-discounting task. Three of these animals were excluded from the data analysis because of erroneous placements. One rat displayed a strong side bias, showing no discernible discounting during training or on infusion test days; this animal's data were also excluded. The remaining rats (n = 8) required 28 d of training before displaying stable patterns of choice behavior and receiving surgical implantation of guide cannulae in the BLA. After recovery and 5–6 d of retraining on this task, they received two counterbalanced infusions of saline and baclofen/muscimol on separate days. The actual experienced probabilities for obtaining the large reward during retraining and on saline and inactivation test days for all rats are displayed in Table 1. Analysis of choice behavior revealed a significant main effect of treatment (F(1,7) = 6.53, p < 0.05). As displayed in Figure 2A, inactivation of the BLA induced a risk-averse pattern of choice, significantly decreasing the proportion of responses on the large/risky lever relative to saline treatment. During the first trial block, where the odds of obtaining the larger reward were 100%, choice of the large/risky lever was nearly identical after either treatment. In contrast, BLA inactivation caused the greatest reduction in choice of the large/risky lever during the middle two blocks (i.e., 50 and 25%). A targeted analysis of these data confirmed that BLA inactivation significantly reduced choice of the large/risky lever relative to saline during these two middle blocks (F(1,7) = 7.07, p < 0.05) but not during the first (100%) or last (12.5%) probability blocks [both F's <1, nonsignificant (n.s.)].
It is notable that, relative to either the 100 or 12.5% blocks, the middle two trial blocks provide the most uncertainty in terms of the probability of receiving the larger reward (50% block) or the overall amount of reward that could be obtained after selection of either lever (25% block). This effect did not appear to be attributable to an increased likelihood of switching to the small/certain lever after trials where rats chose the large/risky lever but did not receive reward. Of all the trials where rats chose the small/certain lever after a preceding choice of the large/risky lever, 70 ± 10% of these switches occurred after the preceding risky choice went unrewarded following saline infusions, compared with 68 ± 8% after BLA inactivation (F(1,7) = 0.33, n.s.). The relatively small number of trials on which rats actually chose the large/risky lever after BLA inactivation did not allow us to conduct a more detailed analysis of the patterns of choice after nonrewarded trials. Thus, inactivation of the BLA reduces preference for larger, probabilistic rewards, an effect that is most pronounced under conditions that provide greater uncertainty about the most beneficial course of action.
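The switch analysis can be expressed as a count over consecutive free-choice trials. This sketch assumes omitted trials have already been removed and that trial order is preserved; the data format and function name are hypothetical.

```python
def switch_after_loss_fraction(trials):
    """Fraction of risky-to-safe switches that followed an unrewarded risky choice.

    trials: ordered list of (choice, rewarded), choice in {"risky", "safe"}.
    Returns None when no risky-to-safe switches occurred.
    """
    switches = 0
    after_loss = 0
    for (prev_choice, prev_rewarded), (choice, _) in zip(trials, trials[1:]):
        if prev_choice == "risky" and choice == "safe":
            switches += 1
            if not prev_rewarded:
                after_loss += 1
    return after_loss / switches if switches else None

example = [("risky", False), ("safe", True), ("risky", True),
           ("safe", True), ("risky", False), ("safe", True)]
print(switch_after_loss_fraction(example))
```

Here two of the three switches follow an unrewarded risky choice, giving a fraction of about 0.67; the text reports the analogous percentage averaged across rats.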

Table 1.

Mean (SEM) actual experienced probabilities of obtaining the large reward on the risk-discounting task

| | First block (100%) | Second block (50%) | Third block (25%) | Fourth block (12.5%) |
|---|---|---|---|---|
| Retraining (5 d average) | 1.00 (0) | 0.49 (0.03) | 0.21 (0.02) | 0.135 (0.04) |
| Saline test | 1.00 (0) | 0.55 (0.06) | 0.23 (0.05) | 0.17 (0.08) |
| Inactivation test | 1.00 (0) | 0.56 (0.09) | 0.31 (0.11) | 0.125 (0.07) |
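Table 1 reports experienced rather than programmed probabilities. One plausible way to compute them, and to see why they drift from the nominal 100, 50, 25, and 12.5% values, is sketched below. It assumes each large/risky choice is rewarded by an independent Bernoulli draw, which is an illustrative assumption about how the probability distribution was sampled, and the number of risky choices per block is arbitrary.

```python
import random

def experienced_probability(outcomes):
    """Fraction of large/risky choices that actually delivered four pellets."""
    if not outcomes:
        return None  # no large/risky choices in this block
    return sum(outcomes) / len(outcomes)

def simulate_block(nominal_p, n_choices, rng):
    """Simulate reward outcomes for n_choices risky presses (assumed Bernoulli)."""
    return [rng.random() < nominal_p for _ in range(n_choices)]

rng = random.Random(2009)
for p in (1.0, 0.5, 0.25, 0.125):
    outcomes = simulate_block(p, n_choices=8, rng=rng)
    print(p, experienced_probability(outcomes))
```

With only a handful of risky choices per block, the experienced probability can differ noticeably from the nominal value on any single day, except in the 100% block, where every draw is rewarded.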

Figure 2.

Inactivation of the BLA induces risk aversion. Data are plotted as a function of the large/risky lever probability by block (x-axis). Symbols represent mean + SEM. A, Percentage choice for the large/risky lever during free-choice trials after infusions of saline or baclofen/muscimol into the BLA. Star denotes a significant (p < 0.05) main effect of treatment. B, BLA inactivations increased response latencies in the latter trial blocks. Stars denote significant (p < 0.05) differences versus saline at a specific block, and dagger denotes p < 0.05 versus all other trial blocks after BLA inactivation. C, BLA inactivations also increased trial omissions, most prominently during the latter trial blocks. Star denotes a significant (p < 0.05) main effect of treatment.

Inactivation of the BLA also caused a significant increase in response latencies that varied as a function of trial block, as analysis of these data revealed a significant treatment × block interaction (F(3,21) = 3.15, p < 0.05) (Fig. 2B). Simple main effects analysis confirmed that during the 100% trial block, rats responded with similar latencies after saline and baclofen/muscimol infusions. However, in the latter three blocks, BLA inactivation significantly increased response latencies relative to saline treatment (Dunnett's, p < 0.05). Again, the largest increase in response latencies occurred in the 25% probability block. Indeed, after BLA inactivation, latencies to make a choice during this third block were significantly higher than those displayed over the other three blocks (Tukey's, p < 0.05), which did not differ from each other. Furthermore, response latencies after BLA inactivation were comparable on trials where rats chose the large/risky (1.8 ± 0.4 s) or the small/certain lever (1.9 ± 0.4 s), although both values were greater than those observed after saline infusions (risky = 1.1 ± 0.4 s; safe = 0.9 ± 0.3 s; treatment × lever interaction, F(1,7) = 1.09, n.s.).

A similar pattern was observed following analysis of the trial omissions data (Fig. 2C). This analysis produced a significant main effect of treatment (F(1,7) = 5.82, p < 0.05), although the treatment × block interaction did not achieve statistical significance (F(3,21) = 2.61, p = 0.08). Again, during the 100% probability block, rats made very few omissions after both saline and baclofen/muscimol infusions. However, during the latter trial blocks, when the delivery of the larger reward became probabilistic, rats made substantially more trial omissions after BLA inactivation, with this effect being maximal during the 25% probability block. Notably, the effect of BLA inactivation on omissions and response latencies was not accompanied by any alteration in locomotor activity (F(1,7) = 0.65, n.s.). Thus, in addition to inducing a risk-averse pattern of choice, inactivation of the BLA increased both deliberation times and the likelihood of not making a choice, but only when rats were required to choose between small, certain rewards and larger, uncertain rewards.

Effort discounting

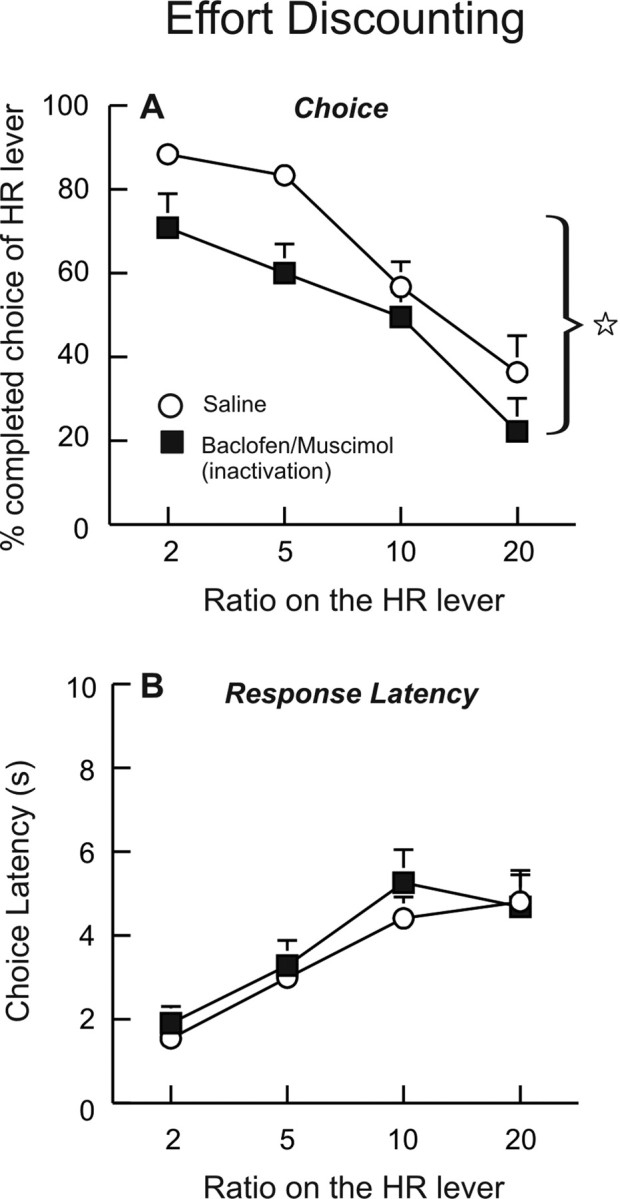

Of an original 15 rats trained on the effort-discounting task, a total of 12 rats had acceptable placements within the BLA and were included in the data analysis. These rats required 18 d of training on the discounting task before displaying stable patterns of choice behavior and subsequently receiving bilateral implantation of guide cannulae into the BLA. After recovery and 5–6 d of retraining on this task, they received two counterbalanced infusions of saline and baclofen/muscimol on separate days. Analysis of the choice data revealed a significant main effect of treatment (F(1,11) = 6.96, p < 0.05), although the treatment × block interaction did not achieve statistical significance (F(3,33) = 0.77, n.s.). As shown in Figure 3A, inactivation of the BLA caused a pronounced decrease in choice of the HR lever that was prominent across all trial blocks. However, these effects were not accompanied by differences in response latencies (Fig. 3B) or rates of pressing on the HR lever (saline = 2.2 ± 0.2 presses/s; inactivation = 2.3 ± 0.2 presses/s) between treatment conditions (all F's <1.0, n.s.). Thus, inactivation of the BLA caused a marked reduction in the preference for larger rewards associated with a greater effort cost. However, when rats did choose the HR lever, they responded as quickly and robustly as observed after saline infusions. BLA inactivation caused a slight increase in trial omissions (2.7 ± 1.3) compared with saline (0.4 ± 0.2), but this difference was not statistically significant (F(1,11) = 2.69, n.s.). Locomotor activity was not altered by BLA inactivations relative to saline treatments (F(1,11) = 0.47, n.s.). These findings indicate that inactivation of the BLA reduces animals' preference to work harder to obtain larger rewards.

Figure 3.

Inactivation of the BLA increases effort discounting. Data are plotted as a function of the ratio on the HR lever by block (x-axis). A, Percentage of completed choices of the HR lever during free-choice trials after infusions of saline or baclofen/muscimol into the BLA. Star denotes a significant (p < 0.05) main effect of treatment. B, Response latencies were not affected by BLA inactivation.

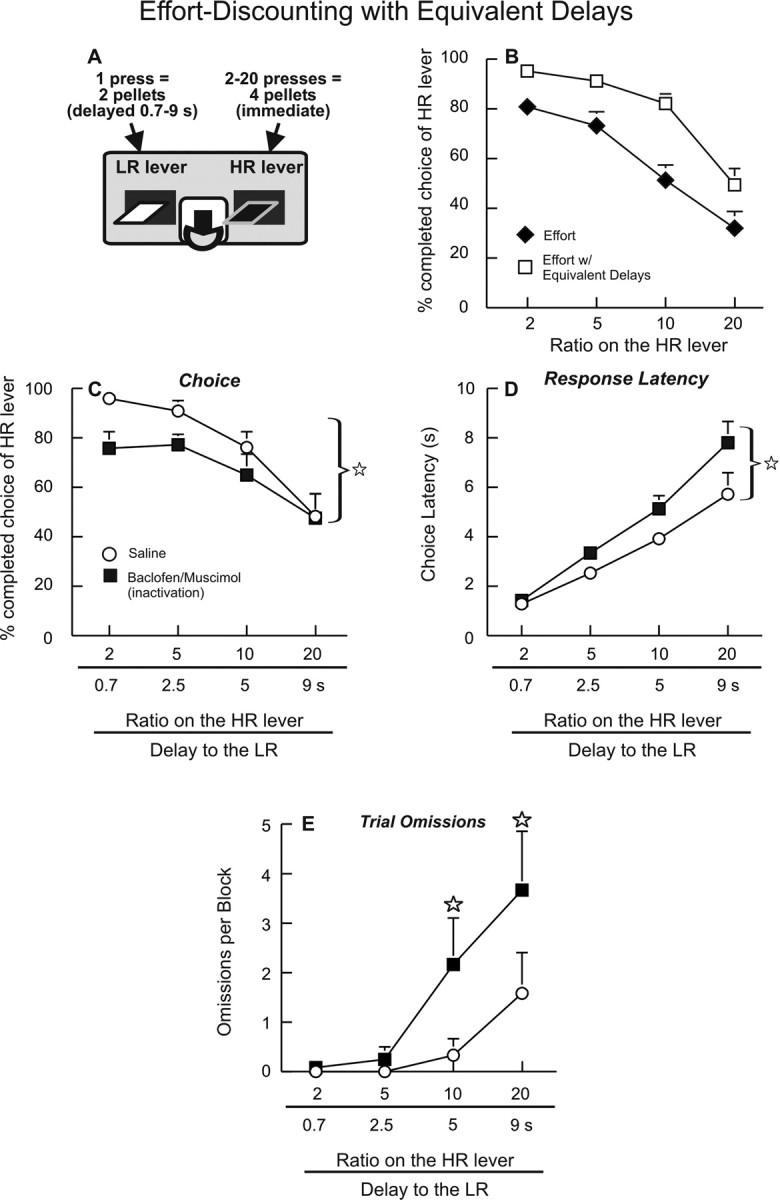

Effort discounting with equivalent delays

With the effort-discounting task used here, an important consideration is that selection of the high-effort option imposes a delay to reward delivery from the time of the initial choice to the completion of presses on the HR lever. Given that lesions of the BLA also reduce preference for larger, delayed rewards (Winstanley et al., 2004), we were particularly interested in determining the effect of parsing out the delay component of this task on choice behavior. Thus, after the first sequence of intracranial infusions, rats in this experiment were trained for 5 d on an effort discounting with equivalent delays task, developed previously in our laboratory (Floresco et al., 2008b). Here, one press on the LR lever delivered two pellets after a delay equivalent to the average amount of time rats took to complete the ratio of presses on the HR lever, effectively equalizing the delay to reward across both response options (Fig. 4A). In essence, this manipulation reduces some of the relative “cost” associated with the high-effort option. In this experiment, the delays to reward delivery after a response on the LR lever were set to 0.7, 2.5, 5, and 9 s across the four trial blocks. In keeping with our previous findings (Floresco et al., 2008b), rats displayed an increased preference for the HR lever across all trial blocks during the final 3 d of testing using the equivalent delays procedure, when compared with their performance on the standard effort-discounting task (F(1,11) = 18.09, p < 0.01) (Fig. 4B). Under these conditions, inactivation of the BLA was again effective at reducing preference for the HR lever, as evidenced by the main effect of treatment (F(1,11) = 6.37, p < 0.05). Inspection of Figure 4C reveals that this effect was more prominent during the earlier trial blocks.
By the last trial block, when rats were required to press the HR lever 20 times to obtain the larger reward, the proportion of choices directed toward the HR lever was numerically comparable after either BLA inactivation or saline treatments. Notably, rats displayed no clear preference for either lever during this last block after either saline or inactivation treatments (i.e., 50% choice of the HR and the LR levers). Despite this pattern, we did not observe a significant treatment × trial block interaction (F(3,33) = 0.79, n.s.). Analysis of the response latencies showed that inactivation of the BLA caused a slight increase in response latencies relative to saline treatment, with this effect being more prominent in the latter trial blocks (Fig. 4D) (F(1,11) = 4.69, p = 0.05). Interestingly, in this experiment, inactivation of the BLA did increase trial omissions (Fig. 4E), but only in the last two trial blocks, when the response requirements on the HR lever and the delay to reward after selection of the LR lever were both relatively high (treatment × block interaction, F(3,33) = 3.35, p < 0.05). However, BLA inactivation did not affect rates of responding on the HR lever (saline = 2.7 ± 0.2 presses/s; inactivation = 2.9 ± 0.3 presses/s; F(1,11) = 1.11, n.s.) or locomotor activity (F(1,11) = 0.28, n.s.). Thus, under conditions where the relative delay to reward delivery for both response options was comparable, inactivation of the BLA was still effective at reducing the preference for larger rewards associated with a greater effort cost. This suggests that the contribution of the BLA to effort-related decisions is relatively independent of its role in delay discounting (Winstanley et al., 2004). Furthermore, when the relative cost of either option became comparatively high (i.e., requiring rats to either wait or work a considerable amount to obtain any reward), inactivation of the BLA increased the likelihood of rats not choosing either option within the allotted time.
Last, the fact that infusions of baclofen/muscimol again reduced choice of the HR lever indicates that the efficacy of these inactivations was not diminished by repeated infusions.
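The logic of the equivalent delays manipulation can be sketched as a small contingency calculation. This is a hypothetical illustration, not the laboratory's task-control code: the LR delays (0.7, 2.5, 5, and 9 s) and the 2- and 20-press ratios for the first and last blocks come from the text, the 10-press ratio for block 3 is inferred from the 10–20 presses described for the latter blocks, and the 5-press ratio for block 2 is an assumption. Because each LR delay was set to the mean time rats took to complete the corresponding HR ratio, dividing the ratio by that delay gives the press rate those delays imply, and the extra wait for the HR reward drops to zero under the equivalent delays procedure while the effort difference remains.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EffortBlock:
    """One trial block of the effort-discounting task (hypothetical representation)."""
    hr_ratio: int       # presses required on the high-reward (HR) lever for 4 pellets
    lr_delay_s: float   # delay (s) to 2 pellets after one LR press, equivalent delays task


# LR delays are from the text; the block-2 ratio of 5 presses is an assumption.
BLOCKS = [
    EffortBlock(hr_ratio=2, lr_delay_s=0.7),
    EffortBlock(hr_ratio=5, lr_delay_s=2.5),
    EffortBlock(hr_ratio=10, lr_delay_s=5.0),
    EffortBlock(hr_ratio=20, lr_delay_s=9.0),
]


def implied_press_rate(block: EffortBlock) -> float:
    """Presses per second implied if the LR delay equals the mean HR completion time."""
    return block.hr_ratio / block.lr_delay_s


def relative_delay_s(block: EffortBlock, equivalent_delays: bool) -> float:
    """Extra wait (s) for the HR reward relative to the LR reward.

    The HR completion time is approximated by the matched LR delay. In the
    standard task, the LR reward is assumed to follow its single press at once.
    """
    hr_delay = block.lr_delay_s
    lr_delay = block.lr_delay_s if equivalent_delays else 0.0
    return hr_delay - lr_delay


for i, b in enumerate(BLOCKS, start=1):
    print(f"block {i}: ratio {b.hr_ratio:>2}, ~{implied_press_rate(b):.2f} presses/s, "
          f"extra HR wait: standard {relative_delay_s(b, False):.1f} s, "
          f"equivalent delays {relative_delay_s(b, True):.1f} s")
```

Under these assumptions the implied rates fall between about 2.0 and 2.9 presses per second, and once delays are equalized only the effort requirement still distinguishes the two levers.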

Figure 4.

Inactivation of the BLA increases effort discounting using an equivalent delays procedure. A, Cost/benefit contingencies associated with responding on either lever on the modified effort-discounting task. B, Percentage choice of the HR lever during each trial block, averaged over the last 3 d of training on the standard effort-discounting task (diamonds) and after 5 d of training using the equivalent delays procedure (open squares). C, BLA inactivation reduced preference for the HR lever. Numbers on the abscissa denote the effort requirement on the HR lever (top) and the delay to delivery of the two pellets after a single press on the LR lever (bottom). Star denotes significant (p < 0.05) main effect of treatment. D, E, BLA inactivation increased response latencies (D) and trial omissions (E), with these effects being most prominent during the latter trial blocks. Stars denote significant (p < 0.05) differences versus saline at a specific block.

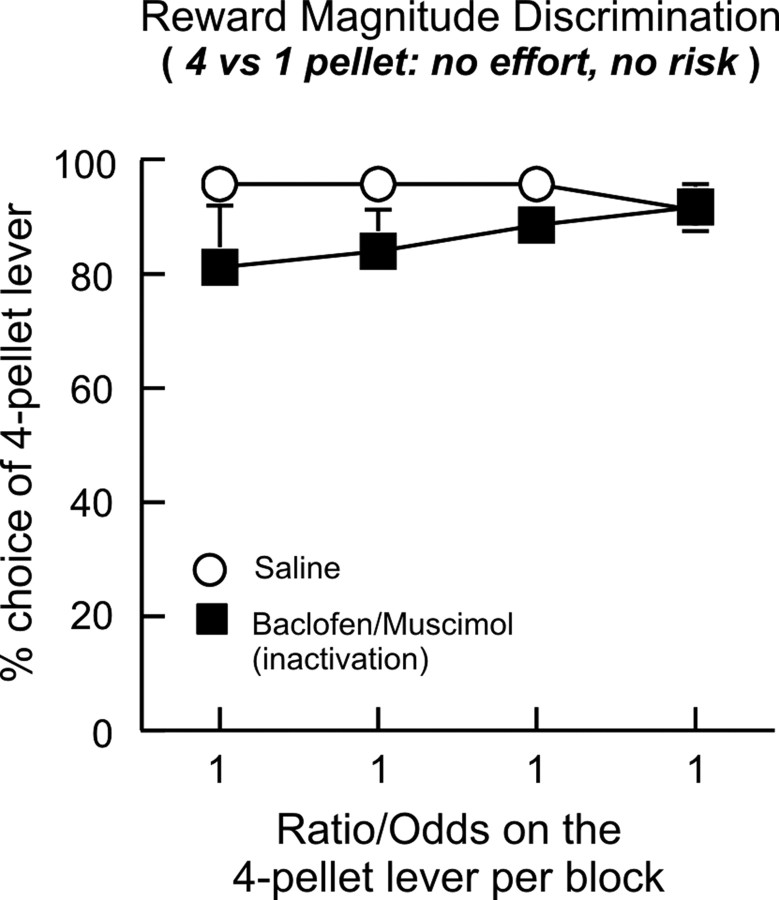

Reward magnitude discrimination

After completion of testing on the discounting tasks, three rats from the risk experiment and four from the effort experiment were trained on a reward magnitude discrimination task, in which rats chose between one lever that delivered one pellet and another that delivered four pellets. Both the small and large rewards were delivered immediately after a single response with 100% probability. After 5–7 d of training, rats displayed a strong preference for the four-pellet lever. They then received counterbalanced infusions of either saline or baclofen/muscimol into the BLA on separate test days. For this analysis, we incorporated previous task experience (effort or risk) as a between-subjects factor. Inactivation of the BLA caused a slight decrease in preference for the four-pellet lever during the first two trial blocks, although as a group, rats still displayed a strong preference for this lever after baclofen/muscimol infusions (Fig. 5). This effect was attributable primarily to two rats trained previously on the effort task. These rats displayed a considerable decrease in choice of the four-pellet lever during the first or second block after BLA inactivation relative to saline. Importantly, by the end of the session, these rats chose the four-pellet lever equally after both treatments. The remaining five rats displayed comparable performance during these blocks after either saline or baclofen/muscimol infusions. Despite this slight decrease, analysis of these data revealed no significant difference between treatment conditions on choice (main effect of treatment: F(1,5) = 4.62, p = 0.084; treatment × block interaction: F(3,15) = 0.94, p = 0.44). There was no significant main effect of task history, nor any interactions with the within-subjects variables (all Fs < 1.7, all p > 0.26).
Similarly, inactivation of the BLA did not affect response latencies (saline = 1.2 ± 0.3 s; baclofen/muscimol = 1.8 ± 0.6 s, F(1,5) = 0.59, n.s.), trial omissions (saline = 0.1 ± 0.1; baclofen/muscimol = 2.7 ± 2.6, F(1,5) = 1.31, n.s.), or locomotor activity (F(1,5) = 2.47, n.s.). Thus, in keeping with previous findings (Winstanley et al., 2004; Floresco and Ghods-Sharifi, 2007; Helms and Mitchell, 2008), disruption of BLA function does not reliably impair the ability to discriminate between larger and smaller rewards when the relative costs of both options are equal.

Figure 5.

BLA inactivation does not affect reward magnitude discrimination. Rats were trained to choose between two levers that delivered either a four- or one-pellet reward immediately after a single press with 100% probability. BLA inactivation did not significantly disrupt the preference for the larger four-pellet reward during free-choice trials relative to saline treatment.

Histology

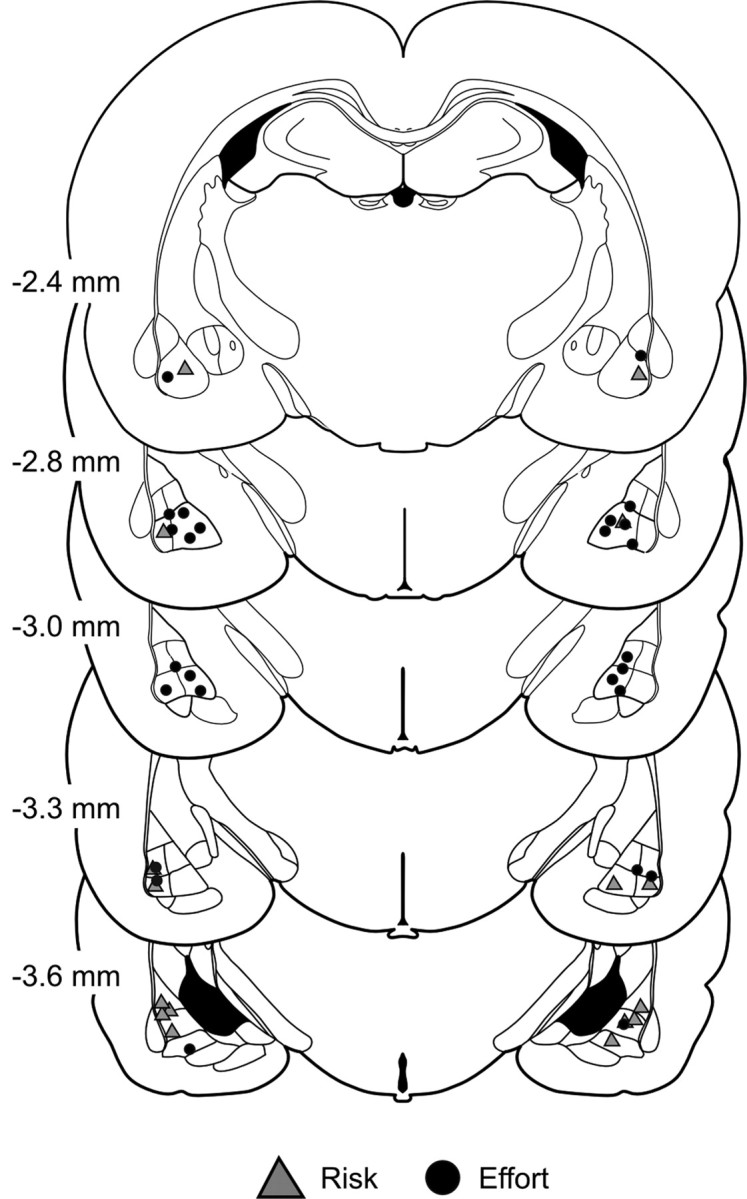

The locations of all acceptable infusions are displayed in Figure 6. As noted above, the data from three rats in the effort experiment and another three in the risk experiment were excluded from the analyses because of inaccurate placements. Rats excluded from the effort experiment had placements that were either asymmetrical in the medial/lateral plane or located dorsal or posterior to the BLA. In these rats, the proportion of choice of the HR lever after infusions of baclofen/muscimol (58 ± 16%) was comparable with that after saline infusions (51 ± 15%). Of the three rats eliminated from the risk experiment, two had placements in the central nucleus and the third had a placement ventral to the BLA. Infusions of baclofen/muscimol had a discernible effect on choice of the large/risky lever in these animals (baclofen/muscimol = 61 ± 6%; saline = 82 ± 13%). However, this effect was accompanied by a pronounced increase in trial omissions and impaired feeding behavior, in that these rats did not consume a large proportion of the reward pellets delivered during the test session. Similar disruptions in feeding behavior after inactivation of the central amygdala have been reported by others (Ahn and Phillips, 2003). Importantly, this disruption in feeding did not occur in animals with accurate BLA placements. In light of these findings, it is likely that the impairments in decision making induced by bilateral infusions of baclofen/muscimol were due primarily to inactivation of the BLA and not adjacent structures.

Figure 6.

Histology. Schematic of coronal sections of the rat brain showing the range of acceptable infusion locations through the rostral–caudal extent of the BLA for all rats trained on the risk (triangles) or effort (circles) discounting tasks. Numbers beside each plate correspond to millimeters from bregma.

Discussion

The present findings indicate that the BLA plays a fundamental role in different forms of cost/benefit decision making. Inactivation of this nucleus induced risk aversion on a risk-discounting task and also reduced preference for larger rewards associated with a greater effort cost. However, BLA inactivation did not disrupt the preference for larger rewards when the relative costs associated with both options were equal. These data suggest that neural activity in the BLA makes a critical contribution to judgments about the relative value associated with different courses of action, biasing choice toward larger, yet more costly rewards.

Risk discounting

To our knowledge, the present findings are the first to demonstrate in experimental animals that inactivation of the BLA disrupts risk-based decision making. BLA inactivation reduced choice of the large/risky lever most prominently during the 50 and 25% probability blocks. These blocks provided maximal uncertainty about the most beneficial course of action in terms of obtaining the larger reward (50%) or the overall amount of food that could be obtained over the 10 free-choice trials (four pellets at 25% vs one pellet at 100%). In comparison, the 100 and 12.5% blocks present a relatively “easier” choice, as the probability of obtaining the larger reward is either certain or highly unlikely. In these blocks, BLA inactivation had minimal effect on choice. These treatments affected response latencies and omissions in a similar manner. During the 100% block, the likelihood of making a choice, as well as the latency to initiate one, were comparable after saline and baclofen/muscimol infusions. However, when delivery of the four-pellet reward became probabilistic, response latencies increased, peaking during the 25% block. Likewise, during these blocks, BLA inactivation increased the number of trials on which rats did not make any choice (omissions), which, of course, is the least beneficial course of action. These findings are in keeping with the notion that the BLA facilitates judgments about the relative value of different responses and the specific incentive properties of the outcomes of those actions (Cardinal et al., 2002; Balleine et al., 2003; Corbit and Balleine, 2005). Impairments in these processes may reduce the tendency of rats to bias choice toward larger, probabilistic rewards, even when this pattern of choice would provide more reinforcement in the long term. Furthermore, as the uncertainty associated with these cost/benefit evaluations increases, inactivation of the BLA severely interferes with the ability of rats to make any choice.
The finding that BLA inactivation induced its most pronounced effects on choice, latencies, and omissions during the blocks that provided the most uncertainty complements neurophysiological studies in humans and animals showing greater activation of the amygdala in response to unpredictable versus predictable motivationally relevant stimuli (Belova et al., 2007; Herry et al., 2007) or with increasing ambiguity about decision options (Hsu et al., 2005).
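The expected-value reasoning behind this interpretation can be made explicit with simple arithmetic. The sketch below is illustrative only: it takes the block probabilities (100, 50, 25, and 12.5%), the four-pellet and one-pellet rewards, and the 10 free-choice trials per block from the text, assumes that an omission yields no food, and uses the hypothetical function names expected_pellets and better_option. Exact fractions avoid rounding in the 12.5% block.

```python
from fractions import Fraction

LARGE_PELLETS = 4   # large/risky lever, delivered with probability p
SMALL_PELLETS = 1   # small/certain lever, delivered with probability 1
TRIALS = 10         # free-choice trials per block

# Probability of the large reward in each successive block.
BLOCK_PROBS = [Fraction(1), Fraction(1, 2), Fraction(1, 4), Fraction(1, 8)]


def expected_pellets(p_large: Fraction, n_risky: int, n_certain: int,
                     trials: int = TRIALS) -> Fraction:
    """Expected pellets in one block; remaining trials are omissions (0 pellets)."""
    if n_risky < 0 or n_certain < 0 or n_risky + n_certain > trials:
        raise ValueError("choice counts must be non-negative and fit within the block")
    return n_risky * LARGE_PELLETS * p_large + n_certain * SMALL_PELLETS


def better_option(p_large: Fraction) -> str:
    """Lever that maximizes expected pellets per trial in a block."""
    risky_ev = LARGE_PELLETS * p_large
    if risky_ev > SMALL_PELLETS:
        return "large/risky"
    if risky_ev < SMALL_PELLETS:
        return "small/certain"
    return "equal"


for p in BLOCK_PROBS:
    print(f"{float(p):>6.1%}: all risky = {expected_pellets(p, TRIALS, 0)}, "
          f"all certain = {expected_pellets(p, 0, TRIALS)}, better = {better_option(p)}")
```

Under these assumptions, the large/risky lever yields more food in the 100 and 50% blocks, the two levers are equivalent at 25%, and the certain lever is better at 12.5%, while every omission lowers the block total regardless of probability. Fewer risky choices in the 50% block and more omissions in any block are therefore disadvantageous in terms of total food earned.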

The finding that BLA inactivation induces risk aversion may appear to contradict findings that patients with amygdala damage make more “risky” choices on laboratory tests of decision making, both when the probabilities of rewards and losses are learned within a single session (Bechara et al., 1999) and when they are made explicit to the subject (Brand et al., 2007; Weller et al., 2007). One explanation for this discrepancy may be that tests of decision making in humans typically involve choices about rewards and punishments (e.g., loss of accumulated monetary reward). Lesions of the amygdala in animals have been shown to alter the ability of primary or conditioned punishers to influence instrumental behavior in other paradigms (Shibata et al., 1986; Killcross et al., 1997). The present study did not employ any explicit punishments per se; the risk was a lost opportunity to obtain a reward. However, a recent study by Weller et al. (2007) revealed that amygdala patients show impaired judgments about potential gains but not potential losses, indicating that damage to this region does not induce a general increase in risky choice. Another important consideration is that in the above-mentioned tasks, selecting the high-risk option is typically a disadvantageous strategy, yielding smaller gains or net losses in the long term. In the present study, rats also made more disadvantageous choices after BLA inactivation, selecting the large/risky lever less often when it was beneficial to do so or not making any choice within the allotted time. Viewed from this perspective, the present data, together with findings from human patients, indicate that the BLA may be particularly important in guiding choice to maximize long-term gains. Thus, impairments in risk-based decision making induced by disruptions in amygdala function may manifest as increases or decreases in risky choice, depending on which pattern of choice is less profitable in the long term.

Effort discounting

Inactivation of the BLA increased effort discounting, in keeping with previous results from our laboratory (Floresco and Ghods-Sharifi, 2007). In that study, inactivation of the BLA reduced rats' preference to climb a barrier placed in one arm of a T-maze to obtain a larger, four-pellet reward, using a protocol modified from Salamone et al. (1994). In the present study, BLA inactivations also induced greater sensitivity to effort costs, rendering rats less likely to select the lever that required multiple presses to obtain a larger reward. These effects are not easily attributable to motivational impairments, because when rats did choose the HR lever, their rates of responding were comparable after saline and baclofen/muscimol infusions. It is also unlikely that these effects are attributable to alterations in satiety, given that animals display similar patterns of choice on this task regardless of their level of food deprivation (Floresco et al., 2008b), and BLA inactivations did not significantly alter choice of the four-pellet option on a reward magnitude discrimination task.

When we tested rats on a modified procedure in which the relative delays to receipt of the smaller and larger rewards were comparable, BLA inactivations again reduced choice of the HR lever. Thus, the contribution of the BLA to effort-based decision making cannot be attributed solely to its role in delay discounting (Winstanley et al., 2004). Note that this reduction was not apparent during the last trial block, so we cannot rule out the possibility that the delays to reward linked to higher effort requirements contribute to the effects of BLA inactivation on choice. Yet, during this block, rats were relatively indifferent to the cost/benefit contingencies associated with each lever under control conditions. Thus, an alternative explanation may be that the BLA plays a greater role in effort-related decisions under conditions that evoke a strong preference for one option over another.

BLA inactivations also increased omissions and deliberation times during the latter trial blocks, when rats chose between emitting 10–20 presses on one lever and waiting 5–9 s after a response on the other to obtain any reward. Under these conditions, discerning the relative value of different action–outcome associations would be expected to be more difficult, requiring integration of information about differential reward magnitudes with the considerable effort- and delay-related costs linked with each lever. Thus, as was observed with risk discounting, BLA inactivations impaired the ability to select either option when rats were faced with more complex evaluations of the relative outcome value associated with selection of the HR or LR lever.

Fundamental role for the BLA in cost/benefit decision making

Viewed in the context of the broader literature on the role of the amygdala in decision making, the present findings point to a fundamental contribution of the BLA to overcoming different response costs to maximize future benefits. All things being equal, animals typically choose more food over less. Yet, imposition of certain costs leads to a discounting of larger rewards, shifting preference toward smaller, easily obtainable rewards. Lesions or inactivation of the BLA make rats hypersensitive to these costs, which may include delays to reward delivery (Winstanley et al., 2004), effort requirements (Floresco and Ghods-Sharifi, 2007; present study), or uncertainty (present study). The ability of the BLA to facilitate these forms of decision making may be related to its role in calculating the potential value of different actions and outcomes (Baxter and Murray, 2002; Balleine and Killcross, 2006; Belova et al., 2007, 2008). Differences in reward magnitude are one aspect of reward value that is encoded by neural activity in the BLA (Pratt and Mizumori, 1998; Ernst et al., 2005; Belova et al., 2008; Smith et al., 2009). However, the contribution of this nucleus to choice behavior does not appear to be limited to discriminations between larger and smaller rewards (Winstanley et al., 2004; Floresco and Ghods-Sharifi, 2007; Helms and Mitchell, 2008; present study). Rather, the BLA appears crucial for guiding choice in situations requiring integration of multiple types of information (e.g., response costs, reward magnitude and valence, current motivational state, previous experience) related to the potential reward that may be obtained from different courses of action. Thus, lesions of the amygdala interfere with processes that bias choice toward options that lead to greater long-term payoffs, which, depending on the task at hand, can induce impulsive, lazy, risky, or risk-averse patterns of choice.
The ability of the BLA to generate value representations and in turn guide choice likely occurs via parallel pathways linking it to different regions of the frontal lobes (Mobini et al., 2002; Schoenbaum et al., 2003; Walton et al., 2003; Rudebeck et al., 2006; Floresco and Ghods-Sharifi, 2007; Floresco and Tse, 2007), the ventral striatum (Salamone et al., 1994; Cardinal et al., 2001; Cardinal and Howes, 2005; Hauber and Sommer, 2009), and the mesolimbic dopamine system (Floresco and Magyar, 2006; Salamone et al., 2007; Floresco et al., 2008b; St Onge and Floresco, 2009).

Footnotes

This work was supported by a grant from the Canadian Institutes of Health Research to S.B.F. S.B.F. is a Michael Smith Foundation for Health Research Senior Scholar, and J.R.S.O. is the recipient of scholarships from the Natural Sciences and Engineering Research Council of Canada and the Michael Smith Foundation for Health Research. We are grateful to Zachary Cornfield for his assistance with behavioral testing.

References

- Ahn S, Phillips AG. Independent modulation of basal and feeding-evoked dopamine efflux in the nucleus accumbens and medial prefrontal cortex by the central and basolateral amygdalar nuclei in the rat. Neuroscience. 2003;116:295–305. doi: 10.1016/s0306-4522(02)00551-1.

- Balleine BW, Killcross S. Parallel incentive processing: an integrated view of amygdala function. Trends Neurosci. 2006;29:272–279. doi: 10.1016/j.tins.2006.03.002.

- Balleine BW, Killcross AS, Dickinson A. The effect of lesions of the basolateral amygdala on instrumental conditioning. J Neurosci. 2003;23:666–675. doi: 10.1523/JNEUROSCI.23-02-00666.2003.

- Baxter MG, Murray EA. The amygdala and reward. Nat Rev Neurosci. 2002;3:563–573. doi: 10.1038/nrn875.

- Bechara A, Damasio H, Damasio AR, Lee GP. Different contributions of the human amygdala and ventromedial prefrontal cortex to decision-making. J Neurosci. 1999;19:5473–5481. doi: 10.1523/JNEUROSCI.19-13-05473.1999.

- Belova MA, Paton JJ, Morrison SE, Salzman CD. Expectation modulates neural responses to pleasant and aversive stimuli in primate amygdala. Neuron. 2007;55:970–984. doi: 10.1016/j.neuron.2007.08.004.

- Belova MA, Paton JJ, Salzman CD. Moment-to-moment tracking of state value in the amygdala. J Neurosci. 2008;28:10023–10030. doi: 10.1523/JNEUROSCI.1400-08.2008.

- Brand M, Grabenhorst F, Starcke K, Vandekerckhove MM, Markowitsch HJ. Role of the amygdala in decisions under ambiguity and decisions under risk: evidence from patients with Urbach-Wiethe disease. Neuropsychologia. 2007;45:1305–1317. doi: 10.1016/j.neuropsychologia.2006.09.021.

- Cardinal RN, Howes NJ. Effects of lesions of the nucleus accumbens core on choice between small certain rewards and large uncertain rewards in rats. BMC Neurosci. 2005;6:37. doi: 10.1186/1471-2202-6-37.

- Cardinal RN, Pennicott DR, Sugathapala CL, Robbins TW, Everitt BJ. Impulsive choice induced in rats by lesions of the nucleus accumbens core. Science. 2001;292:2499–2501. doi: 10.1126/science.1060818.

- Cardinal RN, Parkinson JA, Hall J, Everitt BJ. Emotion and motivation: the role of the amygdala, ventral striatum, and prefrontal cortex. Neurosci Biobehav Rev. 2002;26:321–352. doi: 10.1016/s0149-7634(02)00007-6.

- Corbit LH, Balleine BW. Double dissociation of basolateral and central amygdala lesions on the general and outcome-specific forms of Pavlovian-instrumental transfer. J Neurosci. 2005;25:962–970. doi: 10.1523/JNEUROSCI.4507-04.2005.

- De Martino B, Kumaran D, Seymour B, Dolan RJ. Frames, biases, and rational decision-making in the human brain. Science. 2006;313:684–687. doi: 10.1126/science.1128356.

- Ernst M, Nelson EE, Jazbec S, McClure EB, Monk CS, Leibenluft E, Blair J, Pine DS. Amygdala and nucleus accumbens in responses to receipt and omission of gains in adults and adolescents. Neuroimage. 2005;25:1279–1291. doi: 10.1016/j.neuroimage.2004.12.038.

- Floresco SB, Ghods-Sharifi S. Amygdala-prefrontal cortical circuitry regulates effort-based decision making. Cereb Cortex. 2007;17:251–260. doi: 10.1093/cercor/bhj143.

- Floresco SB, Magyar O. Mesocortical dopamine modulation of executive functions: beyond working memory. Psychopharmacology (Berl). 2006;188:567–585. doi: 10.1007/s00213-006-0404-5.

- Floresco SB, Tse MTL. Dopaminergic regulation of inhibitory and excitatory transmission in the basolateral amygdala-prefrontal cortical pathway. J Neurosci. 2007;27:2045–2057. doi: 10.1523/JNEUROSCI.5474-06.2007.

- Floresco SB, Ghods-Sharifi S, Vexelman C, Magyar O. Dissociable roles for the nucleus accumbens core and shell in regulating set shifting. J Neurosci. 2006;26:2449–2457. doi: 10.1523/JNEUROSCI.4431-05.2006.

- Floresco SB, St Onge JR, Ghods-Sharifi S, Winstanley CA. Cortico-limbic-striatal circuits subserving different forms of cost-benefit decision making. Cogn Affect Behav Neurosci. 2008a;8:375–389. doi: 10.3758/CABN.8.4.375.

- Floresco SB, Tse MT, Ghods-Sharifi S. Dopaminergic and glutamatergic regulation of effort- and delay-based decision making. Neuropsychopharmacology. 2008b;33:1966–1979. doi: 10.1038/sj.npp.1301565.

- Hauber W, Sommer S. Prefrontostriatal circuitry regulates effort-related decision making. Cereb Cortex. 2009. Advance online publication. doi: 10.1093/cercor/bhn241.

- Helms CM, Mitchell SH. Basolateral amygdala lesions and sensitivity to reinforcer magnitude in concurrent chain schedules. Behav Brain Res. 2008;191:210–218. doi: 10.1016/j.bbr.2008.03.028.

- Herry C, Bach DR, Esposito F, Di Salle F, Perrig WJ, Scheffler K, Lüthi A, Seifritz E. Processing of temporal unpredictability in human and animal amygdala. J Neurosci. 2007;27:5958–5966. doi: 10.1523/JNEUROSCI.5218-06.2007.

- Hsu M, Bhatt M, Adolphs R, Tranel D, Camerer CF. Neural systems responding to degrees of uncertainty in human decision-making. Science. 2005;310:1680–1683. doi: 10.1126/science.1115327.

- Killcross S, Robbins TW, Everitt BJ. Different types of fear-conditioned behaviour mediated by separate nuclei within amygdala. Nature. 1997;388:377–380. doi: 10.1038/41097.

- Marquis JP, Killcross S, Haddon JE. Inactivation of the prelimbic, but not infralimbic, prefrontal cortex impairs the contextual control of response conflict in rats. Eur J Neurosci. 2007;25:559–566. doi: 10.1111/j.1460-9568.2006.05295.x.

- McLaughlin RJ, Floresco SB. The role of different subregions of the basolateral amygdala in cue-induced reinstatement and extinction of food-seeking behavior. Neuroscience. 2007;146:1484–1494. doi: 10.1016/j.neuroscience.2007.03.025.

- Mobini S, Body S, Ho MY, Bradshaw CM, Szabadi E, Deakin JF, Anderson IM. Effects of lesions of the orbitofrontal cortex on sensitivity to delayed and probabilistic reinforcement. Psychopharmacology (Berl). 2002;160:290–298. doi: 10.1007/s00213-001-0983-0.

- Moreira CM, Masson S, Carvalho MC, Brandão ML. Exploratory behaviour of rats in the elevated plus-maze is differentially sensitive to inactivation of the basolateral and central amygdaloid nuclei. Brain Res Bull. 2007;71:466–474. doi: 10.1016/j.brainresbull.2006.10.004.

- Murray EA. The amygdala, reward and emotion. Trends Cogn Sci. 2007;11:489–497. doi: 10.1016/j.tics.2007.08.013.

- O'Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr Opin Neurobiol. 2004;14:769–776. doi: 10.1016/j.conb.2004.10.016.

- Paxinos G, Watson C. The rat brain in stereotaxic coordinates. Ed 4. San Diego: Academic; 1998.

- Pratt WE, Mizumori SJY. Characteristics of basolateral amygdala neuronal firing on a spatial memory task involving differential reward. Behav Neurosci. 1998;112:554–570. doi: 10.1037//0735-7044.112.3.554.

- Robbins TW, Everitt BJ. Neurobehavioural mechanisms of reward and motivation. Curr Opin Neurobiol. 1996;6:228–236. doi: 10.1016/s0959-4388(96)80077-8.

- Rudebeck PH, Walton ME, Smyth AN, Bannerman DM, Rushworth MF. Separate neural pathways process different decision costs. Nat Neurosci. 2006;9:1161–1168. doi: 10.1038/nn1756.

- Salamone JD, Cousins MS, Bucher S. Anhedonia or anergia? Effects of haloperidol and nucleus accumbens dopamine depletion on instrumental response selection in a T-maze cost/benefit procedure. Behav Brain Res. 1994;65:221–229. doi: 10.1016/0166-4328(94)90108-2.

- Salamone JD, Correa M, Farrar A, Mingote SM. Effort-related functions of nucleus accumbens dopamine and associated forebrain circuits. Psychopharmacology (Berl). 2007;191:461–482. doi: 10.1007/s00213-006-0668-9.

- Salinas JA, Packard MG, McGaugh JL. Amygdala modulates memory for changes in reward magnitude: reversible post-training inactivation with lidocaine attenuates the response to a reduction in reward. Behav Brain Res. 1993;59:153–159. doi: 10.1016/0166-4328(93)90162-j.

- Schoenbaum G, Setlow B, Saddoris MP, Gallagher M. Encoding predicted outcome and acquired value in orbitofrontal cortex during cue sampling depends upon input from basolateral amygdala. Neuron. 2003;39:855–867. doi: 10.1016/s0896-6273(03)00474-4.

- Seymour B, Dolan R. Emotion, decision making, and the amygdala. Neuron. 2008;58:662–671. doi: 10.1016/j.neuron.2008.05.020.

- Shibata K, Kataoka Y, Yamashita K, Ueki S. An important role of the central amygdaloid nucleus and mammillary body in the mediation of conflict behavior in rats. Brain Res. 1986;372:159–162. doi: 10.1016/0006-8993(86)91470-8.

- Smith BW, Mitchell DG, Hardin MG, Jazbec S, Fridberg D, Blair RJ, Ernst M. Neural substrates of reward magnitude, probability, and risk during a wheel of fortune decision-making task. Neuroimage. 2009;44:600–609. doi: 10.1016/j.neuroimage.2008.08.016.

- St Onge JR, Floresco SB. Dopaminergic modulation of risk-based decision making. Neuropsychopharmacology. 2009;34:681–697. doi: 10.1038/npp.2008.121.

- Walton ME, Bannerman DM, Alterescu K, Rushworth MF. Functional specialization within medial frontal cortex of the anterior cingulate for evaluating effort-related decisions. J Neurosci. 2003;23:6475–6479. doi: 10.1523/JNEUROSCI.23-16-06475.2003.

- Weller JA, Levin IP, Shiv B, Bechara A. Neural correlates of adaptive decision making for risky gains and losses. Psychol Sci. 2007;18:958–964. doi: 10.1111/j.1467-9280.2007.02009.x.

- Winstanley CA, Theobald DE, Cardinal RN, Robbins TW. Contrasting roles of basolateral amygdala and orbitofrontal cortex in impulsive choice. J Neurosci. 2004;24:4718–4722. doi: 10.1523/JNEUROSCI.5606-03.2004.