Abstract

Though other species of primates also use tools, humans appear unique in their capacity to understand the causal relationship between tools and the result of their use. In a comparative fMRI study, we scanned a large cohort of human volunteers and untrained monkeys, as well as two monkeys trained to use tools, while they observed hand actions and actions performed using simple tools. In both species, the observation of an action, regardless of how performed, activated occipitotemporal, intraparietal, and ventral premotor cortex, bilaterally. In humans, the observation of actions done with simple tools yielded an additional, specific activation of a rostral sector of the left inferior parietal lobule (IPL). This latter site was considered human-specific, as it was not observed in monkey IPL for any of the tool videos presented, even after monkeys had become proficient in using a rake or pliers through extensive training. In conclusion, while the observation of a grasping hand activated similar regions in humans and monkeys, an additional specific sector of IPL devoted to tool use has evolved in Homo sapiens, although tool-specific neurons might reside in the monkey grasping regions. These results shed new light on the changes of the hominid brain during evolution.

Introduction

Tools are mechanical implements that allow individuals to achieve goals that would otherwise be difficult or impossible to reach. Tool use has long been considered a uniquely human characteristic (Oakley, 1956), dating back 2.5 million years (Ambrose, 2001). However, there is now general agreement that chimpanzees also use tools, both in captivity (Kohler, 1927) and in the wild (Beck, 1980; Whiten et al., 1999). Except for Cebus monkeys (Visalberghi and Trinca, 1989; Moura and Lee, 2004), most monkeys, including macaques, vervets, tamarins, marmosets, and lemurs, use tools only after training (Natale et al., 1988; Hauser, 1997; Santos et al., 2005, 2006; Spaulding and Hauser, 2005). While it is clear that human tool use reflects an understanding of the causal relationship between the tool and the action goal (Johnson-Frey, 2003), this is far less clear for apes. The available evidence (Visalberghi and Limongelli, 1994; Povinelli, 2000; Mulcahy and Call, 2006; Martin-Ordas et al., 2008) indicates that chimpanzees may have some causal understanding of a task such as the trap tube [introduced by Visalberghi and Limongelli (1994)], but lack the ability to establish analogical relationships between perceptually disparate but functionally equivalent tasks (Martin-Ordas et al., 2008; Penn et al., 2008).

Is this capacity to understand tools mediated by an evolutionarily new neural substrate peculiar to humans? Since nonhuman primates in general exhibit the same level of understanding of tool use, whether they use tools spontaneously or only after training, it has been suggested that they rely on domain-general rather than domain-specific knowledge (Santos et al., 2006). On this view, only humans would possess specialized neuronal mechanisms allowing them to understand the functional properties of tools, a species difference that should apply to all tools, both simple and complex. On the other hand, the finding that tool-use training induces bimodal visuotactile properties in parietal neurons (Iriki et al., 1996) has led to the suggestion that the use of simple tools might rely on similar mechanisms in humans and monkeys (Maravita and Iriki, 2004). According to this hypothesis, only the use of more complex tools requires special neuronal mechanisms typical of humans (Johnson-Frey, 2004; Frey, 2008). Thus it is unclear whether the neuronal mechanisms involved in the use of simple tools, such as a rake, are similar in humans and monkeys. The present series of experiments was designed to decide between these two alternatives.

Unlike previous studies that used static images of tools as stimuli (for review, see Lewis, 2006), we used video clips presenting actions performed with different implements, since dynamic stimuli seemed better suited than static images to studying the mechanisms involved in comprehending the functional properties of tools. The stimuli were presented to both humans and monkeys during scanning, and their respective functional activation patterns were compared. Because naive monkeys differ from humans not only as a species but also in their expertise with tools, in the final experiment we scanned monkeys that had learned to use two tools proficiently, a rake and pliers.

Materials and Methods

Subjects.

Forty-seven right-handed human volunteers (27 females) with a mean age of 23.7 ± 4.0 years (range 18–32 years) participated in the study. Experiment 1 was performed on 20 volunteers (mean age, 22.4 ± 2.8 years; 12 females). Twenty-one volunteers (mean age, 24.6 ± 3.9 years; 13 females), 8 of whom had also participated in experiment 1, took part in experiment 2. Experiment 3 was performed on 8 volunteers (mean age, 21.6 ± 4.1 years; 5 females), of whom 6 were naive, while 2 had also participated in experiment 2. Eight volunteers (mean age, 24.4 ± 5 years; 3 females), all of whom had also taken part in experiment 2, participated in experiment 4. Six volunteers (mean age, 23.3 ± 3.4 years; 4 females) participated in control experiment 1; 3 of them were naive, while 3 had also participated in experiment 1 and/or 2. Finally, 7 volunteers (mean age, 24.7 ± 4.7 years; 4 females) were scanned in control experiment 2; 5 of them were naive, while 2 had participated in experiments 1 and/or 2.
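The subgroup counts above can be cross-checked against the stated total of 47 volunteers. The minimal sketch below assumes, as the text implies, that only the naive volunteers of each group were new recruits; the table layout and function names are illustrative only.

```python
# Subgroups from the Subjects paragraph:
# (group, volunteers scanned, volunteers not scanned in any earlier group)
GROUPS = [
    ("experiment 1", 20, 20),
    ("experiment 2", 21, 21 - 8),    # 8 had also done experiment 1
    ("experiment 3", 8, 6),          # 6 naive, 2 from experiment 2
    ("experiment 4", 8, 0),          # all had done experiment 2
    ("control experiment 1", 6, 3),  # 3 naive
    ("control experiment 2", 7, 5),  # 5 naive
]


def unique_volunteers(groups):
    """Count each volunteer once by summing only the newly recruited ones."""
    return sum(new for _, _, new in groups)


def total_scans(groups):
    """Count group memberships, so repeat participants are counted repeatedly."""
    return sum(scanned for _, scanned, _ in groups)


print(unique_volunteers(GROUPS), total_scans(GROUPS))  # 47 70
```

The difference between the two numbers (70 group memberships versus 47 individuals) reflects the volunteers who took part in more than one experiment.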

No participant reported a history of neurological disease or was taking psychoactive or vasoactive medication. Participants were informed about the experimental procedures and provided written informed consent. The study design was approved by the local Ethics Committee of Biomedical Research at K. U. Leuven, and the study was performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki. Subjects lay in a supine position and viewed the screen through a mirror tilted at 45°. They were instructed to maintain fixation during scanning. Eye position was monitored at 60 Hz during all fMRI scanning sessions with an ASL 5000/LRO eye tracker system (Applied Science Laboratories) positioned at the back of the magnet, which tracked pupil position and corneal reflection. These eye traces were analyzed to determine the number of saccades per minute. Across all experiments, participants averaged 8–12 saccades per minute in the various conditions. In no experiment did this number, averaged over subjects, differ significantly among conditions (p > 0.05).
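The text does not specify how saccades were detected in the 60 Hz eye traces. As a rough illustration only, the sketch below uses a simple velocity-threshold rule: a saccade onset is counted whenever gaze speed between consecutive samples first exceeds a threshold. The 30°/s threshold is a hypothetical value, not one reported in the paper, and positions are assumed to be in degrees of visual angle.

```python
import math

FS_HZ = 60.0             # eye-tracker sampling rate given in the text
SPEED_THRESHOLD = 30.0   # deg/s; hypothetical value, not reported in the paper


def count_saccades(x_deg, y_deg, fs=FS_HZ, threshold=SPEED_THRESHOLD):
    """Count saccade onsets: samples where gaze speed rises above threshold."""
    count = 0
    in_saccade = False
    for i in range(1, len(x_deg)):
        step = math.hypot(x_deg[i] - x_deg[i - 1], y_deg[i] - y_deg[i - 1])
        speed = step * fs  # deg/s between consecutive samples
        if speed > threshold and not in_saccade:
            count += 1
            in_saccade = True
        elif speed <= threshold:
            in_saccade = False
    return count


def saccades_per_minute(x_deg, y_deg, fs=FS_HZ):
    """Saccade rate over the whole trace, with duration taken as samples / fs."""
    minutes = len(x_deg) / fs / 60.0
    return count_saccades(x_deg, y_deg, fs) / minutes


# One minute of synthetic fixation with two 2-degree gaze jumps.
x = [0.0] * 1200 + [2.0] * 1200 + [0.0] * 1200
y = [0.0] * 3600
print(saccades_per_minute(x, y))  # 2.0
```

Real eye traces are noisy, so a practical detector would typically also smooth the signal and require a minimum saccade duration; those refinements are omitted here.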

Five rhesus monkeys (M1, M5, M6, M13, M14; one female; 3–6 kg; 4–7 years of age) were also scanned. Two monkeys participated in experiment 5 (M1, M5), three in experiments 6–8 (M6, M13, M14), and two in experiment 9 (M13, M14). These animals had previously participated only in experiments in which they were exposed passively to visual stimuli (M1, M5, M6) or to visual and auditory stimuli (M13, M14). Hence they had been trained only on the fixation task and on the high-acuity task (Vanduffel et al., 2001) used to calibrate the eye movement recordings. All animal care and experimental procedures met the national and European guidelines and were approved by the ethical committee of the K. U. Leuven Medical School. The details of the surgical procedures, training of monkeys, image acquisition, eye monitoring, and statistical analysis of monkey scans have been described previously (Vanduffel et al., 2001; Fize et al., 2003; Nelissen et al., 2005) and are described only briefly here. Monkeys sat in a sphinx position in a plastic monkey chair directly facing the screen. A plastic headpost was attached to the skull using C&B Metabond adhesive cement (Parkell) together with Palacos R+G bone cement and ∼15 ceramic screws (Thomas Recording). Throughout the training and testing sessions, the monkey's head was restrained by attaching the implanted headpost to the magnet-compatible monkey chair (for details, see Vanduffel et al., 2001). Thus, during the tests, the monkeys were able to move all body parts except their head. Note, however, that body movements are usually infrequent when monkeys perform a task during scanning, even one as simple as fixation. A receive-only surface coil was positioned just above the head. During training, the monkeys were required to maintain fixation within a 2 × 2° window centered on a red dot (0.35 × 0.35°) in the center of the screen. Eye position was monitored at 60 Hz through pupil position and corneal reflection.
During scanning, the fixation window was elongated slightly in the vertical direction, to 3°, to accommodate an occasional artifact on the vertical eye trace induced by the scanning sequence. The monkeys were rewarded with fruit juice for maintaining their gaze within the fixation window for long periods (up to 6 s) while stimuli were projected in the background. With this strategy, the monkeys made 7–20 saccades per minute, each monkey exhibiting a relatively stable number of saccades across sessions and runs: 7/min for M13, 9/min for M6, 11/min for M14, 13/min for M1, and 20/min for M5. Thus most monkeys were close to the human average in this regard, making about one saccade every 6 s. In no experiment was the number of saccades made by individual subjects significantly different between experimental conditions.
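The fixation requirement can be expressed as a simple geometric test on gaze position relative to the fixation dot: a 2 × 2° window during training and a 2° wide by 3° tall window during scanning. The sketch below also applies the >85% in-window criterion used to select monkey runs in the analysis, and converts a saccade rate into a mean inter-saccade interval. The function names are hypothetical, and gaze is assumed to be given in degrees.

```python
TRAINING_WINDOW = (2.0, 2.0)   # width, height in degrees
SCANNING_WINDOW = (2.0, 3.0)   # vertically elongated during scanning
MIN_FRACTION = 0.85            # run-inclusion criterion from the monkey analysis


def in_window(x_deg, y_deg, window):
    """True if gaze, relative to the fixation dot, lies inside the window."""
    width, height = window
    return abs(x_deg) <= width / 2 and abs(y_deg) <= height / 2


def fraction_in_window(xs, ys, window=SCANNING_WINDOW):
    """Fraction of eye samples that fall inside the fixation window."""
    inside = sum(in_window(x, y, window) for x, y in zip(xs, ys))
    return inside / len(xs)


def run_is_usable(xs, ys, window=SCANNING_WINDOW):
    """Keep a run only if gaze stayed in the window for more than 85% of samples."""
    return fraction_in_window(xs, ys, window) > MIN_FRACTION


def mean_interval_s(saccades_per_min):
    """Average time between saccades, e.g. 10 per minute -> 6 s."""
    return 60.0 / saccades_per_min
```

A sample 1.4° above the dot, for instance, is inside the scanning window but outside the training window, which is exactly the kind of vertical excursion the elongated window was meant to tolerate.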

Before each scanning session, a contrast agent, monocrystalline iron oxide nanoparticle (MION), was injected into the monkey's femoral/saphenous vein (4–11 mg/kg). In later experiments, the same contrast agent, produced under a different name (Sinerem), was used. Use of the contrast agent improved both the contrast-to-noise ratio (by approximately fivefold) and the spatial selectivity of the magnetic resonance (MR) signal changes, compared with blood oxygenation level-dependent (BOLD) measurements (Vanduffel et al., 2001; Leite et al., 2002). Whereas BOLD measurements depend on blood volume, blood flow, and oxygen extraction, MION measurements depend only on blood volume (Mandeville and Marota, 1999). For the sake of clarity, the polarity of the MION MR signal changes, which are negative for increased blood volumes, was inverted.
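The polarity inversion amounts to a sign flip applied to the usual percentage signal change. A minimal sketch, assuming signal changes are expressed relative to a baseline such as the fixation condition (function names are illustrative):

```python
def percent_signal_change(signal, baseline):
    """Conventional percentage change relative to a baseline (e.g. fixation)."""
    return 100.0 * (signal - baseline) / baseline


def mion_display_change(signal, baseline):
    """MION signal drops when blood volume rises; flip the sign so that an
    increase in blood volume is plotted as a positive change, as with BOLD."""
    return -percent_signal_change(signal, baseline)


print(mion_display_change(990.0, 1000.0))  # 1.0
```

A 1% drop in raw MION signal is therefore reported as a +1% change, which matches the direction of the corresponding human BOLD response.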

Visual stimuli.

Visual stimuli were projected onto a transparent screen in front of the subject using a Barco 6400i (for humans) or 6300 (for monkeys) liquid crystal display projector (1024 × 768 pixels; 60 Hz). Optical path length between the eyes and the stimulus measured 36 cm (54 cm for monkeys). All tests included a simple fixation condition as a baseline condition in which only the fixation target (red dot) was shown on an empty screen.
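Stimulus sizes are given in degrees of visual angle. Together with the viewing distances above, these angles translate into physical sizes on the screen through size = 2·d·tan(θ/2). The sketch below assumes a flat screen centered on, and perpendicular to, the line of sight, and treats the stated optical path length as the viewing distance d. The resulting centimeter values are derived here and are not reported in the paper.

```python
import math


def extent_cm(angle_deg, distance_cm):
    """Physical size that subtends angle_deg at distance_cm (flat screen,
    centered on and perpendicular to the line of sight)."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


# Video widths from experiment 1: 13 deg at 36 cm (humans), 16 deg at 54 cm (monkeys).
print(round(extent_cm(13, 36), 1))  # 8.2
print(round(extent_cm(16, 54), 1))  # 15.2
```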

In experiment 1, we displayed videos (13 by 11.5° in humans, 16 by 13° in monkeys) showing either a human hand grasping objects (“hand action”, supplemental Movie S1, available at www.jneurosci.org as supplemental material) or a mechanical hand grasping the same objects (“mechanical hand action”, supplemental Movie S2, available at www.jneurosci.org as supplemental material). A male hand, a female hand, or a mechanical hand grasped and picked up a candy (precision grip) or a ball (whole-hand grasp). The mechanical hand (three fingers, Fig. 1B) was moved toward the object by an invisible human operator, while the grasping was computer controlled. Our mechanical hand action video thus clearly differs from that of Tai et al. (2004). This description supplements that of Nelissen et al. (2005), where the same videos were described as robot hand actions. One action cycle (grasping and picking up) lasted 3.3 s, and 7 (11 in monkeys) randomly selected cycles were presented in a block of 24 s (36 s in monkeys). Static single frames (24 s duration, 36 s in monkeys) and scrambled video sequences (supplemental Movie S3, available at www.jneurosci.org as supplemental material), obtained by phase scrambling each frame of the sequence, were used as controls. Human hand and mechanical hand runs were tested separately and typically included the action, static, scrambled, and fixation conditions, repeated three times (twice in monkeys), with 3 different runs/orders of conditions.
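Phase scrambling keeps each frame's Fourier amplitude spectrum, and hence its spatial-frequency content and mean luminance, while destroying its spatial structure. The sketch below shows one common way to do this for grayscale frames stored as 2-D NumPy arrays. It adds the phase of a real white-noise image's Fourier transform, so that the result stays real. It assumes a fresh random phase field for every frame; the paper does not state whether the same field was shared across frames, and the function names are hypothetical.

```python
import numpy as np


def phase_scramble(frame, rng):
    """Return a frame with the same amplitude spectrum but randomized phase."""
    spectrum = np.fft.fft2(frame)
    amplitude = np.abs(spectrum)
    phase = np.angle(spectrum)
    # The phase of a real noise image's FFT is conjugate-symmetric, so adding
    # it keeps the inverse transform real up to floating-point error.
    noise_phase = np.angle(np.fft.fft2(rng.random(frame.shape)))
    scrambled = np.fft.ifft2(amplitude * np.exp(1j * (phase + noise_phase)))
    return scrambled.real


def scramble_video(frames, seed=0):
    """Phase-scramble each frame of a (n_frames, height, width) array."""
    rng = np.random.default_rng(seed)
    return np.stack([phase_scramble(f, rng) for f in frames])
```

Because the zero-frequency term of the noise spectrum is a positive real number, its phase is zero. The mean luminance of each scrambled frame therefore matches the original frame.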

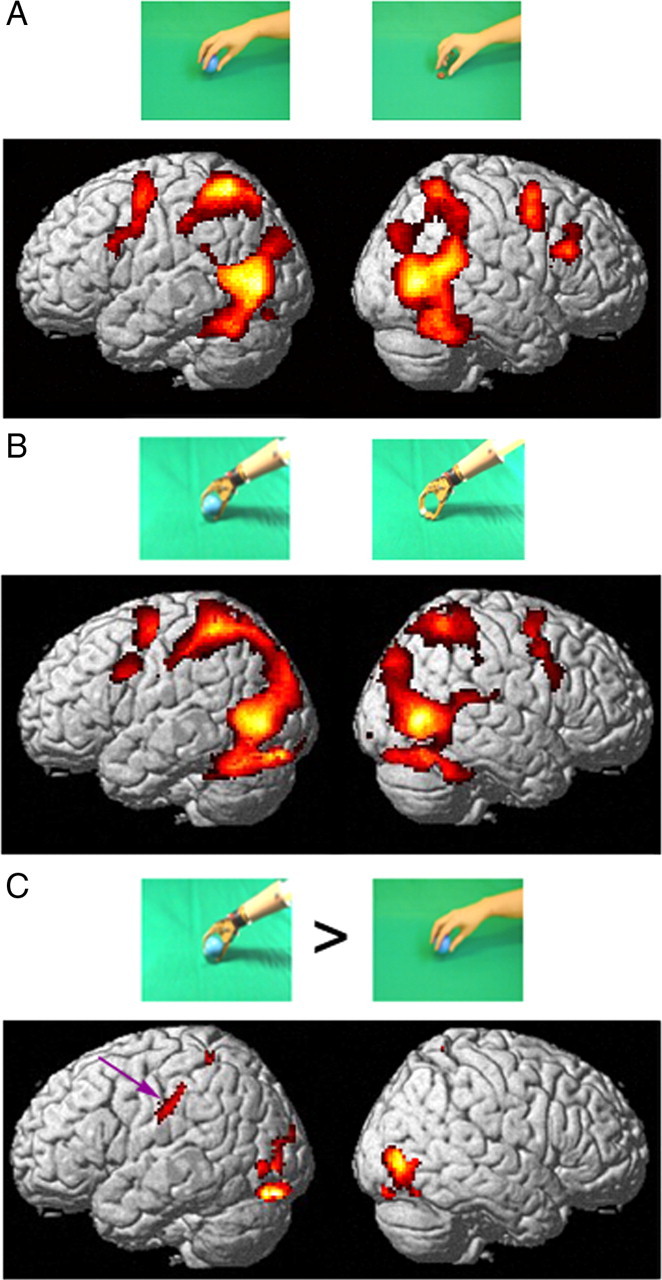

Figure 1.

Results of experiment 1. A, Cortical regions activated (colored red to yellow) by the observation of hand grasping actions relative to their static controls, rendered on lateral views of the standard (MNI) human brain. B, Cortical regions activated in the contrast observation of mechanical hand actions compared with their static control. C, Cortical regions more active during the observation of grasping performed with a mechanical implement than during the observation of the same action by a hand, each relative to its static control. All three contrasts: random effects analysis, n = 20, p < 0.001 uncorrected. Inclusive masking of the contrasts in A and B with the contrast action observation minus fixation, as used in the monkey (Fig. 7), did not alter the activation pattern. The purple arrow indicates the activation in aSMG. Insets show frames from hand actions and mechanical arm actions.

In experiment 2, we presented within the same run videos (same size as in experiment 1) showing either a human hand grasping objects (same as in experiment 1, goal-directed action) or a screwdriver held by a human hand and used to pick up objects (supplemental Movie S4, available at www.jneurosci.org as supplemental material). As a control, a static single frame of the action videos (refreshed every 3.3 s) was used. A typical hand and tool action run included actions performed with a screwdriver, goal-directed hand actions, other actions irrelevant to the present experiment, their respective static controls, and fixation (Nelissen et al., 2005). The same sequence was repeated twice (once in monkeys), with 5 different runs/orders of conditions.

In experiments 3 and 4, we used the same 2 × 2 factorial design (tool action, hand action, and their static controls) as in experiment 2, but replaced the screwdriver with a rake (experiment 3, supplemental Movie S5, available at www.jneurosci.org as supplemental material) or pliers (experiment 4, supplemental Movie S6, available at www.jneurosci.org as supplemental material); the corresponding hand actions consisted of dragging (experiment 3) or grasping (experiment 4).

The first control experiment included 2 factorial designs: one with the screwdriver videos presented in the standard manner, i.e., with the action performed mainly in the right visual field, and a second with the videos mirrored so that the actions were presented mainly in the left visual field. In the second control experiment, the four conditions of the factorial design using the screwdriver were extended with two conditions in which the hand, or the hand holding the screwdriver, translated within the display (supplemental Movies S7, S8, available at www.jneurosci.org as supplemental material) rather than remaining stationary, plus two conditions in which the hand and screwdriver actions were presented without the object that was the goal of the action.

In addition, for 40 subjects, 23 of whom participated in one of the four experiments or one of the two control experiments, we presented a time series with moving and static random-texture patterns (7° diameter) to localize hMT/V5+ and other motion-sensitive regions (Sunaert et al., 1999). We also presented two time series containing intact and scrambled grayscale images and drawings of objects, together with fixation, to localize shape-sensitive regions (Kourtzi and Kanwisher, 2000; Denys et al., 2004a).

In experiments 5–8, exactly the stimuli presented to humans in experiments 1–4 were presented to monkeys, which were scanned in several daily sessions. These stimuli showed grasping performed by a mechanical hand, a screwdriver used to seize objects, a rake used to drag objects, pliers used to seize objects, and the corresponding hand actions. Two monkeys (M1, M5) participated in experiment 5, in which, as for humans, hand action and mechanical hand action videos were tested in separate runs. Three monkeys (M6, M13, M14) participated in experiments 6–8. In these experiments, hand and tool actions were presented in the same runs, and runs with different tools were interleaved within daily sessions. Finally, two monkeys (M13, M14) that had participated in experiments 6–8 were trained (see below) to use the rake and were scanned. After this series of scanning sessions, they were trained to use the pliers and scanned a third time. In these scanning sessions, runs with different tools were again interleaved.

Training of monkeys to use tools (experiment 9).

Monkeys were trained to use two tools: a rake and pliers. Both tools were custom built and 25 cm long; the rake head was 10 cm wide and the pliers opened to 5 cm. For the training sessions, monkeys sat in an MR-compatible primate chair positioned at a table on which the tool and the pieces of food were presented. The food was placed 34–40 cm from the monkey's shoulders, out of reach of its hands. The monkeys were trained to use the tools with their right hand. After a familiarization phase, training proceeded in steps: touching the tool, lifting the tool, directing the tool toward the food, and retrieving the food by retracting the arm. While training the monkeys to use the rake was relatively easy, the use of pliers required more training, largely because the pressure needed to close the pliers had to be applied at the right moment and maintained until the food was retrieved.

Tool use can be considered a behavioral chain, i.e., a series of related motor acts, each of which provides the cue for the next one, with the last motor act producing reinforcement. Training was therefore performed with a procedure that reinforced the successive elements of the chosen behavior. The experimenter started with the first step of the chain: training the monkey to touch the tool. Once the monkey could perform that step satisfactorily, the experimenter trained it to perform the next motor acts (handling and lifting the tool) and reinforced its efforts. When the monkey had mastered this succession of elements, the experimenter added the final elements of the chain.

To assess the level of skill the monkeys reached with the rake and the pliers, a series of behavioral tests and an analysis of movement kinematics were performed at the end of each of the two training phases. The monkeys were tested in three consecutive sessions of 75 trials. Each trial started with the food pellet placed on the table out of the animal's reach, while the tool was placed within reach. The food pellets were placed in five different positions: one directly in front of the monkey and the other four at angles of 22.5° or 45° from the central position (Ishibashi et al., 2000). The tool was placed in one of two positions: in front of the monkey when the food was presented laterally, and on its right side when the food was presented in front of it. Trials with the five food positions were presented in random order, with 15 trials of each type. For every food position, the monkey had to move its elbow and forearm laterally to obtain the food reward. A trial was considered to begin when the monkey made contact with the tool and to end when the food was retrieved, when the monkey stopped trying to retrieve the food, or when the monkey had displaced the food beyond the reach of the tool. After food and tool placement, the monkey had 30 s to use the tool; otherwise, the trial was scored as incorrect. Although after training the monkeys completed these standard trials in much less time, this constant time criterion was maintained during training, testing in standard trials, and testing in special trials (see below). Training lasted 3–4 weeks for the rake and 4 (M13) to 6 (M14) weeks for the pliers. The interval between the two training epochs was 1 month. The monkeys reached ∼95% correct in the standard trials, except M14 with the pliers (76%).
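The structure of a standard test session can be sketched in a few lines. The design has five food positions with 15 trials each, giving 75 trials in random order. The tool is placed on the right for central food and in front for lateral food, and a trial counts as correct only if the food is retrieved within 30 s. The sketch assumes a single full shuffle of all 75 trials and a left-negative/right-positive angle convention; the paper does not describe how the random order was generated, and all names are hypothetical.

```python
import random
from collections import Counter

# Five food positions: straight ahead and 22.5 deg / 45 deg to either side.
# Negative = left, positive = right (sign convention is an assumption).
FOOD_ANGLES_DEG = (-45.0, -22.5, 0.0, 22.5, 45.0)
TRIALS_PER_POSITION = 15
TIME_LIMIT_S = 30.0


def tool_position(food_angle_deg):
    """Tool on the monkey's right for central food, in front for lateral food."""
    return "right" if food_angle_deg == 0.0 else "front"


def make_session(seed=None):
    """Return a shuffled list of (food angle, tool position) for 75 trials."""
    rng = random.Random(seed)
    angles = [a for a in FOOD_ANGLES_DEG for _ in range(TRIALS_PER_POSITION)]
    rng.shuffle(angles)
    return [(a, tool_position(a)) for a in angles]


def is_correct(food_retrieved, elapsed_s):
    """A trial counts as correct only if the food is retrieved within 30 s."""
    return food_retrieved and elapsed_s <= TIME_LIMIT_S


session = make_session(seed=1)
print(len(session), Counter(a for a, _ in session)[0.0])  # 75 15
```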

In addition to these standard trials, two other types of trials were administered during testing. First, irrelevant ring-shaped or spherical objects were presented at random 10 times in the course of the session. Since the monkeys immediately stopped trying to use these objects, this test was performed only in the first session. Second, in 30 trials interspersed among the standard trials, the tool was presented not in its standard upright position but rotated by 90° in either direction or by 180°. In the first session, the monkeys failed to grasp the tool in these trials, but from the second session on they understood that they had to turn the tool to retrieve the food pellet and were able to do so within the 30 s allowed. We therefore report the percentage correct for the later sessions. For the rake, the percentage correct was 95% and 85% for M13 and M14, respectively; for the pliers, only M13 reached 95% correct, whereas M14 never learned to use the rotated pliers.

To analyze the kinematics of the monkeys' actions when using tools, all trials of the three testing sessions were recorded with a digital video camera. Markers were attached to the tools: one at the middle of the rake head and two on the arms of the pliers, 3 cm from the tip. The recorded images were transferred to a personal computer, where a two-dimensional motion analysis of the trajectories and of the speed along these trajectories was performed.
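A two-dimensional motion analysis of this kind reduces to finite differences on the tracked marker positions. The sketch below computes trajectory length and frame-to-frame speed from a list of (x, y) marker positions. As a hypothetical extra, it uses the distance between the two pliers markers as an index of how far the pliers are open. The camera frame rate is not reported, so `fps` is a placeholder. The original analysis software is not described, so this illustrates only the general approach.

```python
import math


def path_length(points):
    """Total length of a 2-D trajectory given as [(x, y), ...]."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))


def speeds(points, fps):
    """Speed between consecutive video frames, in position units per second."""
    return [math.dist(p, q) * fps for p, q in zip(points, points[1:])]


def pliers_aperture(marker_a, marker_b):
    """Hypothetical index of pliers opening: distance between the two markers."""
    return math.dist(marker_a, marker_b)


trajectory = [(0.0, 0.0), (3.0, 4.0), (6.0, 8.0)]  # cm, made-up example
print(path_length(trajectory), speeds(trajectory, fps=25))  # 10.0 [125.0, 125.0]
```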

fMRI scanning.

The human MRI images were acquired in a 3-T Intera MR scanner (Philips, Best) using a 6-element SENSE head coil (MRI Devices). A functional time series consisted of whole-brain BOLD-weighted field-echo echoplanar images (FE-EPI) with the following parameters:

- repetition time (TR)/echo time (TE) = 3000/30 ms
- field of view = 200 mm
- acquisition matrix = 80 × 80 (reconstructed to 128 × 128)
- SENSE reduction factor = 2
- slice thickness = 2.5 mm, interslice gap = 0.3 mm
- 50 horizontal slices covering the entire brain

In each experiment, 5–9 time series (runs) were acquired per subject in a single session. This yielded 1920 volumes/condition in experiment 1, 2688 in experiment 2, 576 in experiment 3, 1152 in experiment 4, and 384 and 504 in the two control experiments; 4320 and 4260 volumes/condition were acquired in the shape localizer and the motion localizer tests, respectively. At the end of each scanning session, a three-dimensional high-resolution T1-weighted anatomical image was acquired (TR/TE = 9.68/4.6 ms, inversion time = 1100 ms, field of view = 250 mm, matrix = 256 × 256, slice thickness = 1.2 mm, 182 slices, SENSE factor = 2).
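The nominal voxel dimensions follow directly from the listed acquisition parameters. The in-plane size is the field of view divided by the matrix size, and the slab extent is the slice thickness plus interslice gap accumulated over the slices (2.8 mm center-to-center). The sketch below simply performs this arithmetic; the values are derived, not reported, and the function names are illustrative.

```python
def in_plane_mm(fov_mm, matrix):
    """Nominal in-plane voxel size: field of view divided by matrix size."""
    return fov_mm / matrix


def slab_coverage_mm(n_slices, thickness_mm, gap_mm):
    """Extent from the outer edge of the first slice to that of the last."""
    return n_slices * thickness_mm + (n_slices - 1) * gap_mm


print(in_plane_mm(200, 80))                       # 2.5 (acquired EPI)
print(in_plane_mm(200, 128))                      # 1.5625 (reconstructed EPI)
print(round(slab_coverage_mm(50, 2.5, 0.3), 1))   # 139.7
print(in_plane_mm(250, 256))                      # 0.9765625 (T1 anatomy)
```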

The monkey MRI images were acquired in a 1.5-T Sonata MR scanner (Siemens, Erlangen, Germany) using a surface coil. Each functional time series consisted of gradient-echo echoplanar whole-brain images (1.5 T; TR, 2.4 s; TE, 27 ms; 32 sagittal slices, 2 × 2 × 2 mm voxels). In total, 2160 volumes/condition were analyzed in experiment 5 and 1440 volumes/condition in experiments 6–8. For each subject, a T1-weighted anatomical (three-dimensional magnetization prepared rapid acquisition gradient echo, MPRAGE) volume (1 × 1 × 1 mm voxels) was acquired under anesthesia in a separate session.

Analysis of human data.

Data were analyzed with the Statistical Parametric Mapping package SPM2 (Wellcome Department of Cognitive Neurology, London, UK), implemented in Matlab 6.5 (The MathWorks). Preprocessing was identical in all human experiments. For each subject, motion correction was performed by realigning all functional EPI volumes to the first volume of the first time series, and a mean image of the realigned volumes was created. This mean image was coregistered to the anatomical T1-weighted image. Both the anatomical image and the mean EPI were warped to a standard reference system (Talairach and Tournoux, 1988) by normalizing each to its respective template image [Montreal Neurological Institute (MNI)]. The derived normalization parameters were then applied to all EPI volumes, which were resampled to a voxel size of 2 × 2 × 2 mm and smoothed with a Gaussian kernel of 10 mm FWHM. Statistical analyses were performed using the General Linear Model (Friston et al., 1995a,b), modeling each condition with a delayed boxcar function convolved with the hemodynamic response function. Global signal intensity was normalized, and a high-pass temporal filter (cutoff of two times the stimulus period) was applied to remove low-frequency drifts unrelated to the stimulus-induced signal changes.
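To illustrate how a block regressor is built for the General Linear Model, the sketch below convolves a boxcar with a double-gamma hemodynamic response function. The HRF uses shape parameters 6 and 16 with an undershoot ratio of 1/6, an assumption modeled on the usual SPM-style canonical shape rather than SPM2's exact implementation. The 3 s TR and 24 s block length come from the text; the onset and run length are made up. The convolution itself introduces the delay of the response relative to block onset.

```python
import math

import numpy as np


def gamma_pdf(t, shape, scale=1.0):
    """Gamma probability density evaluated at times t (zero for t <= 0)."""
    t = np.asarray(t, dtype=float)
    out = np.zeros_like(t)
    pos = t > 0
    out[pos] = (t[pos] ** (shape - 1) * np.exp(-t[pos] / scale)
                / (scale ** shape * math.gamma(shape)))
    return out


def double_gamma_hrf(tr, duration_s=32.0):
    """Assumed SPM-like HRF: response peak minus 1/6 of a later undershoot."""
    t = np.arange(0.0, duration_s, tr)
    h = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return h / h.sum()


def block_regressor(onsets_s, block_s, n_scans, tr):
    """Boxcar (1 during blocks, 0 elsewhere) convolved with the HRF."""
    boxcar = np.zeros(n_scans)
    for onset in onsets_s:
        start = int(round(onset / tr))
        stop = int(round((onset + block_s) / tr))
        boxcar[start:stop] = 1.0
    return np.convolve(boxcar, double_gamma_hrf(tr))[:n_scans]


# One 24 s action block starting at 30 s, sampled with TR = 3 s.
regressor = block_regressor([30.0], 24.0, n_scans=40, tr=3.0)
```

In a full analysis, one such regressor per condition would form the columns of the design matrix, alongside the nuisance terms and high-pass filtering described above.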

In the first two experiments, the first-level contrasts for the different action types (human hand, mechanical hand, and tool) versus their respective controls were calculated for every individual subject. These contrast images were subjected to a second-level, random-effects ANOVA. Interactions were investigated by subtracting hand action minus its control from mechanical hand action minus its control, or from screwdriver action minus its control. These interactions were conjoined with the main effect of actions compared with their static controls. In the other experiments (experiments 3 and 4 and the two control experiments), which were based on fewer subjects, a fixed-effects group analysis was performed. For each contrast, significant MR signal changes were assessed using t-score maps. Thresholds were set at p < 0.001 uncorrected for the interaction effects. As a final analysis, we performed a conjunction of the interaction effects in the four different experiments (each at the p < 0.001 or p < 0.005 level). This conjunction was itself conjoined with the single effects of mechanical hand or tool action in the same experiments. A threshold of p < 0.05 familywise-error corrected for multiple comparisons was used for the single effects of hand actions compared with their static or scrambled controls, and for the shape and motion localizers. For descriptive purposes, tables and figures use a more lenient level: p < 0.001 uncorrected. This choice is conservative in that it avoids underestimating the extent of cortex activated by the observation of biological hand actions.
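In a 2 × 2 design, the interaction tested here corresponds to a contrast vector with weights +1, -1, -1, +1 over the four condition estimates. The conjunction can be illustrated as a voxelwise intersection: a voxel survives only if it exceeds threshold in every map. The sketch below shows both on toy NumPy arrays. The condition ordering and the t threshold of 3.1 are hypothetical; 3.1 is only roughly the one-tailed p < 0.001 cutoff for large degrees of freedom, and the exact value depends on the analysis. This is one common way to form a conjunction, not necessarily SPM's exact procedure.

```python
import numpy as np

# Condition order assumed for the weight vectors below.
CONDITIONS = ("tool_action", "tool_static", "hand_action", "hand_static")
INTERACTION = np.array([1, -1, -1, 1])         # (tool - static) - (hand - static)
MAIN_EFFECT_ACTION = np.array([1, -1, 1, -1])  # actions minus static controls


def contrast_value(betas, weights):
    """Weighted sum of condition estimates given in CONDITIONS order."""
    return float(np.dot(weights, betas))


def conjunction(t_maps, thresholds):
    """Voxels exceeding their threshold in every map (simple intersection)."""
    mask = np.ones_like(t_maps[0], dtype=bool)
    for t_map, thr in zip(t_maps, thresholds):
        mask &= t_map > thr
    return mask


# Toy example: three voxels, two experiments, hypothetical threshold t > 3.1.
maps = [np.array([4.0, 2.0, 5.0]), np.array([3.5, 4.0, 1.0])]
print(conjunction(maps, [3.1, 3.1]))  # [ True False False]
```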

Activity profiles plotting the MR percentage signal changes relative to fixation were obtained for the various experiments. These profiles were calculated for the different regions of interest (ROIs) defined by conjunctions of contrasts, from the single-subject data, by averaging all voxels in an ROI. The first two data points of a run were discarded to compensate for the hemodynamic delay of the BOLD response. In all profiles, the SE of the mean was computed across subjects.
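The profile computation described above has three steps: averaging over ROI voxels, expressing a segment of the time course as percentage change relative to fixation after discarding its first two points, and taking the mean and SE across subjects. The sketch below implements these steps with the standard library. How segments and the fixation mean were defined in the original analysis is not specified, so `segment_psc` and its arguments are assumptions.

```python
import statistics


def roi_mean(voxel_timecourses):
    """Average across voxels: one value per volume."""
    return [statistics.fmean(values) for values in zip(*voxel_timecourses)]


def segment_psc(timecourse, start, stop, fixation_mean, skip=2):
    """Mean % signal change vs fixation over volumes [start, stop),
    discarding the first `skip` volumes for the hemodynamic delay."""
    kept = timecourse[start + skip:stop]
    return 100.0 * (statistics.fmean(kept) - fixation_mean) / fixation_mean


def mean_and_se(per_subject_values):
    """Group mean and standard error of the mean across subjects."""
    n = len(per_subject_values)
    mean = statistics.fmean(per_subject_values)
    return mean, statistics.stdev(per_subject_values) / n ** 0.5
```

For example, a segment reading 100, 101, 102, 102, 102 against a fixation mean of 100 gives 2.0%, since the two rising samples are excluded.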

Analysis of monkey data.

Data were analyzed using Statistical Parametric Mapping (SPM5) and Match software. Only runs in which the monkeys maintained fixation within the window for >85% of the time, and in which the numbers of saccades did not differ significantly between conditions, were analyzed. In these analyses, realignment parameters and eye movement traces were included as covariates of no interest to remove eye movement and brain motion artifacts. The fMRI data of the monkeys were realigned and nonrigidly coregistered with the anatomical volume of the template brain [M12, same as subject MM1 in the study by Ekstrom et al. (2008)] using the Match software (Chef d'Hotel et al., 2002). The algorithm computes a dense deformation field by composition of small displacements minimizing a local correlation criterion; the deformation field is regularized by low-pass filtering. The quality of the registration can be appreciated in Nelissen et al. (2005), their supplemental Fig. S6. The functional volumes were then resampled to 1 mm³ voxels and smoothed with an isotropic Gaussian kernel (full width at half maximum, 1.5 mm).
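Smoothing kernels are specified by their full width at half maximum (FWHM), whereas many filtering routines expect the Gaussian standard deviation σ in voxels. The two are related by FWHM = 2√(2 ln 2)·σ ≈ 2.355σ. The sketch below applies this identity to the 10 mm kernel on 2 mm human voxels and the 1.5 mm kernel on 1 mm monkey voxels; the function name is illustrative.

```python
import math

FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))  # ~0.4247


def fwhm_to_sigma_voxels(fwhm_mm, voxel_mm):
    """Gaussian standard deviation, in voxels, for a kernel of given FWHM."""
    return fwhm_mm * FWHM_TO_SIGMA / voxel_mm


print(round(fwhm_to_sigma_voxels(10.0, 2.0), 3))  # 2.123 (human data)
print(round(fwhm_to_sigma_voxels(1.5, 1.0), 3))   # 0.637 (monkey data)
```

A σ expressed in voxels like this is the form expected by, for example, `scipy.ndimage.gaussian_filter`, although the study itself used the smoothing built into its analysis packages.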

Group analyses were performed with an equal number of volumes per monkey, supplemented with single subject analysis. The level of significance was set for the interactions at p < 0.001 uncorrected for multiple comparisons, as for humans. For all experiments a fixed effect analysis was performed, except for experiment 5, in which we emulated a random effect analysis by calculating first level contrast images for each set of two runs for a single monkey. The single effects of hand action observation were thresholded at p < 0.05 corrected.

In addition, four ROIs were defined directly on the MR anatomical images of M12, corresponding to the four architectonic subdivisions of IPL (PF, PFG, PG, and Opt) as defined by Gregoriou et al. (2006). Of these four IPL regions, mirror neurons are most prevalent in area PFG (Fogassi et al., 2005; Rozzi et al., 2008). The ROIs included 89 voxels in PF, 70 in PFG, 137 in PG, and 84 in Opt of the left hemisphere; numbers were very similar for the right hemisphere. Percentage MR signal changes relative to the fixation baseline were calculated in these ROIs from the group or single-subject data. The SE was calculated across runs in both single-subject and group profiles. The data of the first two scans of each block were omitted to take the hemodynamic delay into account.

From the activity profiles, we derived the magnitude of the interaction: percentage signal change (SC) in tool action minus percentage SC in static tool minus percentage SC in hand action plus percentage SC in static hand. The SEs of the individual conditions were relatively similar. Since the SEs are indicated only for illustrative purposes, we assumed equal SEs in the four conditions and set the SE of the interaction magnitude to twice the average of the SEs in the four conditions. For four independent terms with equal SE s, the SE of their signed sum is √(4s²) = 2s. To test the selectivity of an activity profile for tool actions, two paired t tests were performed. The first compared the difference between tool actions and their static control with the difference between hand actions and their static control. The second directly compared tool actions with hand actions. Comparisons were made across subjects for the human ROIs and across runs for the individual monkey subjects. The first test ensured that a significant interaction was present in the ROI. The second ensured that this interaction was due to a difference between the action conditions rather than between the static control conditions. For both tests, and in both species, the level of significance was set at p < 0.05 corrected for multiple comparisons.
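The interaction magnitude, its approximate SE, and the two paired t tests can be written out directly. The sketch below uses only the standard library. Each list argument to `selectivity_tests` holds one value per subject (humans) or per run (monkeys). Converting t to p, for instance with `scipy.stats.t.sf`, and correcting for multiple comparisons are left out. Function names are illustrative.

```python
import math
import statistics


def interaction_magnitude(tool, tool_static, hand, hand_static):
    """(tool - static tool) - (hand - static hand), in % signal change."""
    return tool - tool_static - hand + hand_static


def interaction_se(condition_ses):
    """Twice the mean SE: for four independent terms with equal SE s,
    the SE of their signed sum is sqrt(4 * s**2) = 2 * s."""
    return 2.0 * statistics.fmean(condition_ses)


def paired_t(a, b):
    """Paired t statistic and degrees of freedom for matched samples a, b."""
    diffs = [x - y for x, y in zip(a, b)]
    n = len(diffs)
    t = statistics.fmean(diffs) / (statistics.stdev(diffs) / math.sqrt(n))
    return t, n - 1


def selectivity_tests(tool, tool_static, hand, hand_static):
    """Test 1: interaction (tool effect vs hand effect).
    Test 2: tool actions vs hand actions directly."""
    tool_effect = [x - s for x, s in zip(tool, tool_static)]
    hand_effect = [x - s for x, s in zip(hand, hand_static)]
    return paired_t(tool_effect, hand_effect), paired_t(tool, hand)
```

Running both tests guards against a spurious interaction driven purely by a difference between the two static control conditions.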

Surface-based maps.

The human fMRI data were mapped onto the human PALS atlas surface (Van Essen, 2005) in SPM–Talairach space, for both the right and left hemispheres, using a volume-to-surface tool in Caret (Van Essen et al., 2001). Anatomical landmarks were taken from the Caret database, and local maxima were inserted as nodes on these flattened cortical maps in Caret. The monkey fMRI data, registered onto the anatomy of M12, were mapped onto the macaque M12 atlas (Durand et al., 2007) using the same tool in Caret. The locations of visual regions (V1–V3) were taken from Caret for humans and from Fize et al. (2003) for monkeys. Area hMT/V5+ was localized in 40 human subjects tested with the motion localizer (Sunaert et al., 1999), and monkey MT/V5 was taken from Nelissen et al. (2006). The ellipses in the human flatmaps for phAIP, DIPSA, and DIPSM (see Fig. 3) were taken from Georgieva et al. (2009).

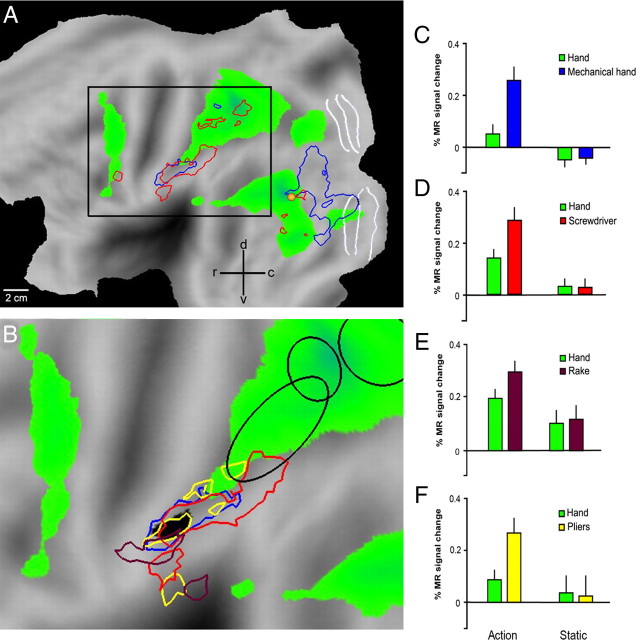

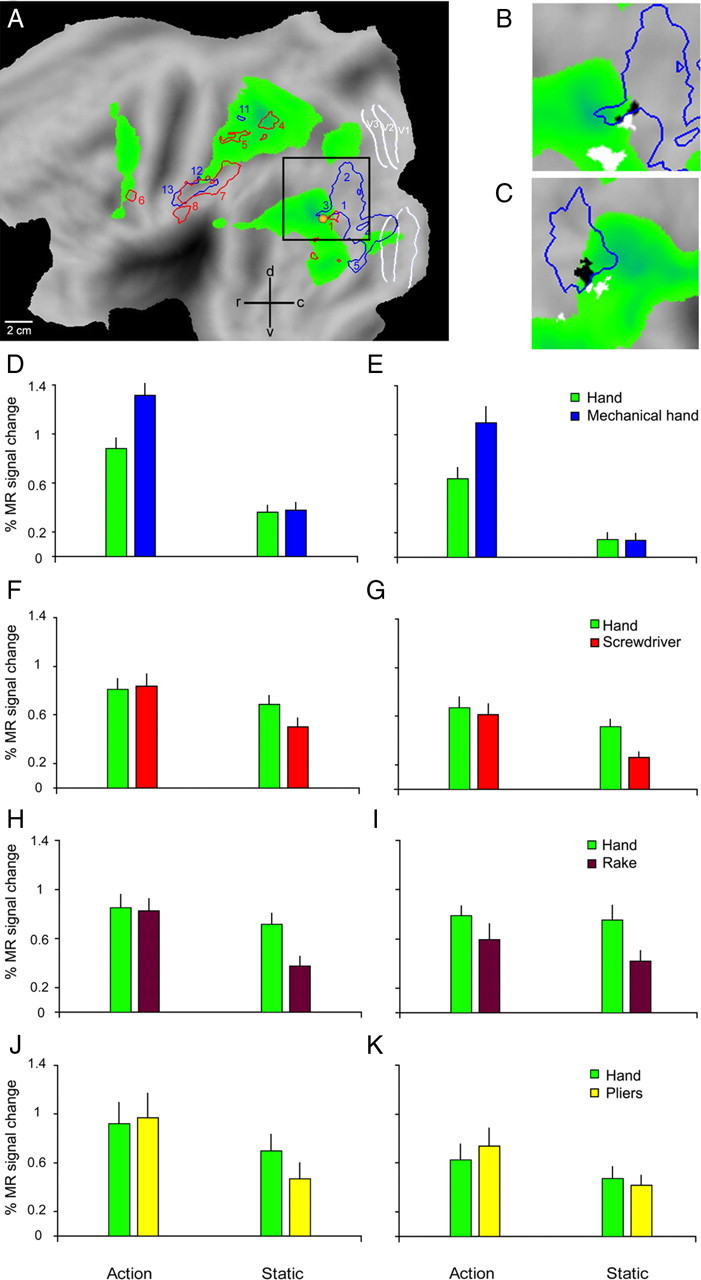

Figure 3.

The anterior supramarginal gyrus interaction site. A, Flattened view of the left hemisphere (human PALS atlas). Green areas show activations in the contrast hand action minus static control (p < 0.001, experiment 1, same data as Fig. 1A). Blue and red outlines show the regions where the interactions tool versus hand, relative to their controls, were significant (p < 0.001) in experiments 1 and 2, respectively (same data as Fig. 2A,B). White lines indicate areas V1–V3; the yellow dot indicates hMT/V5+. B, Enlarged view of the data shown inside the black square in A. Only interaction sites in the vicinity of anterior SMG are shown. Brown and yellow outlines show the regions where the interactions tool actions versus hand actions, relative to their controls, were significant (p < 0.001) in experiments 3 and 4, respectively. Blue and red outlines as in A. Black voxels indicate the conjunction of the four interactions (each p < 0.005). Blue ellipses indicate, from left to right, putative human AIP (phAIP), the dorsal IPS anterior (DIPSA) region, and the dorsal IPS medial (DIPSM) region. C–F, Activity profiles of the core of the left aSMG region (black voxels in B) in experiments 1–4. All eight paired t tests were significant (see Materials and Methods): p < 0.0001 for both tests in C (interaction and direct comparison of actions), p < 0.001 for both tests in D, p < 0.01 for both tests in E, and p < 0.05 for both tests in F.

Caret and the PALS atlas (both hemispheres) are available at http://www.nitrc.org/projects/caret/, http://sumsdb.wustl.edu/sums/directory.do?id=636032&dir_name=ATLAS_DATA_SETS, and http://sumsdb.wustl.edu/sums/humanpalsmore.do.

Results

Human fMRI experiments

In the first experiment, 20 volunteers were presented with video clips showing either human or mechanical hands grasping objects. Dynamic scrambled videos and static pictures were used as controls. During the observation of human hand actions, compared with their static controls, the fMRI signal increased in occipitotemporal visual areas, in the anterior part of the intraparietal sulcus (IPS) and adjacent inferior parietal lobule (IPL), and in premotor cortex plus the adjacent inferior frontal gyrus, bilaterally, as well as in the cerebellum (Fig. 1A, Table 1). Observation of actions performed by a mechanical hand, compared with their static control, activated a circuit similar to that activated during hand action observation (Fig. 1B), although the parietal activation in the left hemisphere appeared more extensive than that for the biological hand. Any difference between the two contrasts is revealed directly by the interaction between the factor type of action (biological versus artificial) and the factor type of condition (action versus control). These interaction sites, which show a significant signal increase in the contrast observation of mechanical versus human hand actions, relative to their static controls, were located (Fig. 1C) in the occipitotemporal region bilaterally and, dorsally, in the parietal lobe, predominantly in the left hemisphere (Table 2). A substantial activation site was located in the rostroventral part of the left IPL convexity, extending into the posterior bank of the postcentral sulcus [left anterior supramarginal gyrus (aSMG)] (Fig. 1C, arrow).
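The interaction described above can be written as a weighted contrast over the four condition estimates of the 2 × 2 design (type of action × action versus static control). The sketch below is illustrative, not the authors' analysis code; the condition labels and the ordering of the weight vector are assumptions. Because the weights (1, −1, −1, 1) sum to zero, any effect shared equally by both action types cancels, leaving only the difference between the two action-minus-static contrasts.

```python
# Illustrative condition labels (assumed ordering, not taken from the study's design files).
CONDITIONS = ("mech_action", "mech_static", "hand_action", "hand_static")

# Interaction weights: (mechanical action - its static control) - (hand action - its static control).
INTERACTION_WEIGHTS = (1, -1, -1, 1)


def contrast_value(estimates, weights=INTERACTION_WEIGHTS):
    """Return the weighted sum of per-condition estimates (e.g., GLM betas for one voxel)."""
    if len(estimates) != len(weights):
        raise ValueError("expected one estimate per condition")
    return sum(w * e for w, e in zip(weights, estimates))


# The weights sum to zero, so an offset shared by all four conditions cancels out.
assert sum(INTERACTION_WEIGHTS) == 0

if __name__ == "__main__":
    print(contrast_value((2.0, 1.0, 1.5, 1.0)))  # 0.5: mechanical-hand effect exceeds hand effect
    print(contrast_value((2.0, 1.0, 2.0, 1.0)))  # 0.0: equal action effects, no interaction
```

A voxel in which both action types exceed their static controls by the same amount therefore contributes no interaction, which is why the interaction isolates sites preferring the mechanical implement.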

Table 1.

Activation sites for observation hand action (experiment 1)

| Region | MNI x | MNI y | MNI z | t: hand action minus static hand | t: hand action minus scrambled | t: shape localizer | t: motion localizer |
|---|---|---|---|---|---|---|---|
| L MT/V5 | −44 | −74 | 2 | 9.00 | 10.51 | 27.06 | 20.24 |
| L MTG | −62 | −60 | 6 | 6.04 | 6.94 | n.s. | (4.44) |
| L ITG | −48 | −52 | −20 | 6.31 | 11.09 | 16.96 | n.s. |
| R MT/V5 | 50 | −68 | 2 | 7.59 | 6.87 | 18.52 | 23.17 |
| R STS | 56 | −52 | 12 | 6.10 | 6.95 | n.s. | (5.07) |
| R STG | 64 | −42 | 24 | 5.10 | 5.17 | n.s. | n.s. |
| R OTS | 60 | −52 | −8 | 5.65 | 6.06 | 7.24 | (4.03) |
| R ITG | 48 | −52 | −24 | 7.67 | 8.53 | 15.78 | n.s. |
| R Ant ITG | 40 | −36 | −28 | 5.47 | 5.48 | 14.56 | n.s. |
| L VIPS | −32 | −80 | 24 | 5.65 | 7.41 | 10.37 | 8.28 |
| L SPL | −18 | −68 | 58 | 5.39 | 7.02 | 9.42 | (4.89) |
| L DIPSM | −32 | −56 | 62 | 8.50 | 9.73 | 13.28 | 11.59 |
| L DIPSA | −42 | −48 | 62 | 8.24 | 10.59 | 10.44 | 8.30 |
| L phAIP | −38 | −32 | 42 | 5.37 | 5.84 | 5.81 | n.s. |
| R DIPSM | 28 | −54 | 58 | 6.21 | 7.25 | 10.40 | 7.18 |
| L Pre CG | −28 | −10 | 56 | 5.68 | 5.13 | n.s. | 6.41 |
| L Pre CG | −36 | −4 | 54 | 5.53 | 6.11 | n.s. | (4.77) |
| R Pre CG | 38 | 2 | 50 | 6.24 | 5.46 | n.s. | n.s. |
| L Cer | −6 | −80 | −36 | 7.37 | 6.91 | 6.11 | (4.81) |
| R Cer | 6 | −80 | −38 | 6.52 | 6.93 | (4.56) | (3.97) |

Numbers indicate significant (p < 0.05, corrected) t values; numbers between parentheses indicate t values reaching p < 0.001, uncorrected, but not p < 0.05, corrected. n.s., Nonsignificant; L, left; R, right.

Table 2.

Interaction sites for observation of mechanical hand action (experiment 1)

| Region | No. of voxels (conj. 0.001) | MNI x | MNI y | MNI z | t: mech. hand action minus human hand action (relat. static control) | t: mech. hand action minus static mech. hand | t: shape localizer | t: motion localizer |
|---|---|---|---|---|---|---|---|---|
| L IOG | 151 | −42 | −88 | −4 | 4.37 | 5.81 | 30.91 | (3.89) |
| L V3A* | 151 | −30 | −96 | 18 | 3.69 | 6.96 | 15.38 | 12.80 |
| L IOG | 151 | −50 | −78 | −2 | 4.20 | 14.28 | 36.19 | 17.68 |
| L Fus post | 383 | −26 | −84 | −16 | 4.96 | 10.24 | (4.27) | 9.93 |
| L Fus Mid | 34 | −26 | −56 | −14 | 4.20 | 6.51 | 10.38 | n.s. |
| R MT/V5 | 404 | 50 | −70 | −2 | 3.80 | 12.27 | 29.24 | 23.17 |
| R IOG | 404 | 46 | −86 | 0 | 6.42 | 10.30 | 36.39 | 7.07 |
| R Fus post | 110 | 24 | −86 | −18 | 4.07 | 7.52 | n.s. | 8.90 |
| R Fus post | 110 | 30 | −70 | −20 | 3.85 | 7.37 | 10.26 | (4.82) |
| R DIPSM | 4 | 30 | −52 | 70 | 3.43 | 8.34 | 6.67 | 9.25 |
| L SPL | 25 | −42 | −46 | 64 | 4.08 | 13.25 | 8.56 | 7.30 |
| L IPL BA40 | 117 | −58 | −30 | 46 | 3.80 | 7.92 | n.s. | n.s. |
| L postcent S | 117 | −60 | −18 | 28 | 3.89 | 7.21 | n.s. | n.s. |

Numbers indicate significant t values for interaction (p < 0.001, uncorrected); for other numbers, see Table 1. n.s., Nonsignificant; L, left; R, right; post, posterior; postcent S, postcentral sulcus; vox, voxels; relat, relative to.

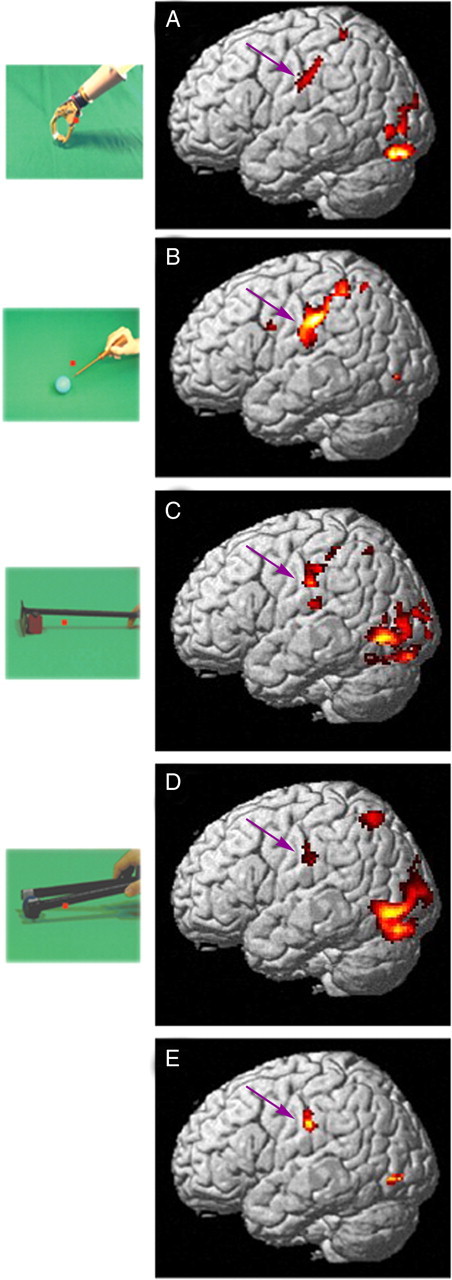

In experiment 2, following the design of experiment 1, we presented 21 volunteers with video clips showing hand actions and actions in which a tool (a screwdriver) was used to pick up an object. Because of its atypical use, the screwdriver was most likely seen by the monkeys tested with the same videos (experiments 5–8) as a stick used to pick up an object. Tools used in a more standard way were tested in experiments 3 and 4. Confirming experiment 1, the contrast tool use versus hand grasping, both relative to their static controls, revealed a signal increase in the left parietal lobe (Fig. 2B). While some of these interaction sites were within the hand grasping circuit, three-quarters of the voxels were located outside it, in the left aSMG (Table 3). Figure 3A uses the projection of the activation pattern onto a flattened hemisphere to show the overlap of the interactions for mechanical hand (blue outlines) and tool actions (red outlines) within the aSMG.

Figure 2.

The left hemisphere interaction sites in the four experiments. A, Cortical regions more active (colored red to yellow) during the observation of grasping performed with a mechanical implement than during the observation of the same action done by hand, each relative to its static control, rendered on lateral views of the left hemisphere of the standard (MNI) human brain (experiment 1, n = 20, random effects analysis). B, Cortical regions active in the contrast observation of screwdriver versus hand actions, relative to their static controls (experiment 2, n = 21, random effects analysis). C, SPMs showing significant voxels in the interaction observation of rake versus hand actions, relative to their static controls (experiment 3, n = 8, fixed effects analysis). D, Same for experiment 4 (observation of actions with pliers, n = 8, fixed effects). In all four interactions, the threshold is p < 0.001, uncorrected. E, Conjunction of the interactions in the four experiments (threshold for each interaction p < 0.005, uncorrected), yielding the core of aSMG. Purple arrows indicate left aSMG.

Table 3.

Interaction sites for observation of screwdriver action (experiment 2)

| Region | No. of voxels (conj. 0.0001) | No. of voxels (conj. 0.001) | MNI x | MNI y | MNI z | t: screwdriver action minus hand action (relat. static control) | t: screwdriver action minus static screwdriver | t: shape localizer | t: motion localizer |
|---|---|---|---|---|---|---|---|---|---|
| L IOG | 1 | 13 | −44 | −82 | −4 | 4.31 | 7.71 | 13.65 | 8.40 |
| R IOG | | 4 | 48 | −74 | −8 | 3.62 | 6.60 | 14.08 | 9.12 |
| R ITG post | 4 | 52 | 42 | −62 | −6 | 4.28 | 7.59 | 9.08 | 7.14 |
| L DIPSM | | 21 | −32 | −58 | 58 | 3.73 | 6.77 | 9.95 | 6.44 |
| L DIPSA | 18 | 539 | −42 | −42 | 56 | 4.96 | 9.21 | 7.30 | 5.21 |
| L IFG | | 15 | −54 | 4 | 30 | 3.64 | 7.2 | 5.16 | (3.56) |
| L IPL BA40 | 76 | 539 | −52 | −26 | 34 | 5.04 | 9.08 | (4.09) | (3.37) |
| L postcent S | 76 | 539 | −60 | −20 | 26 | 4.65 | 8.94 | n.s. | n.s. |

Numbers of voxels in the activation site are indicated for two thresholds of the interaction (p < 0.0001 and p < 0.001, uncorrected); t values as in Table 2. n.s., Nonsignificant; L, left; R, right; post, posterior; postcent S, postcentral sulcus; conj, conjunction; relat, relative to; vox, voxels.

In experiments 3 and 4 we assessed the generality of these findings using the same experimental design as in experiment 2, but replacing the screwdriver with two other tools (rake and pliers). The results confirmed those of experiment 2: interaction sites included the left aSMG (Fig. 2C,D). Since a significant tool versus hand action interaction was observed in aSMG in all four experiments, we performed a conjunction analysis of these interactions. This analysis yielded (Fig. 2E, Table 4) the aSMG site and two inferior occipital gyrus (IOG) sites. Figure 3B illustrates the location on a flatmap of the 75 (black) voxels yielded by the conjunction analysis, which define the core of the aSMG region. This core activation is located (59% overlap) in cytoarchitectonically defined area PFt of Caspers et al. (2006). Its activity profiles (Fig. 3C–F) clearly indicate that in each of the four experiments the interaction was significant and was due to a significant difference between the action conditions, rather than between the control conditions. Furthermore, the magnitude of the interaction for the observation of screwdriver actions was intermediate between those for the observation of rake and pliers actions. Hence, it is unlikely that the aSMG is a region devoted to seeing unusual uses of tools.
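A conjunction of this kind keeps only the voxels that pass the threshold in every interaction (p < 0.005 each, per the Fig. 2E legend). The following is a minimal sketch assuming voxel-wise p-value maps stored as aligned lists; the function names, the toy p-values, and the use of a boolean atlas mask to express an overlap such as the 59% with area PFt (as a fraction of conjunction voxels) are illustrative assumptions, not the procedure actually used.

```python
from typing import List, Sequence


def conjunction_mask(p_maps: Sequence[Sequence[float]], alpha: float = 0.005) -> List[bool]:
    """Keep a voxel only if its p-value is below alpha in every map (logical AND)."""
    n_vox = len(p_maps[0])
    if any(len(m) != n_vox for m in p_maps):
        raise ValueError("all maps must cover the same voxels")
    return [all(m[v] < alpha for m in p_maps) for v in range(n_vox)]


def overlap_fraction(mask: Sequence[bool], region: Sequence[bool]) -> float:
    """Fraction of the masked voxels that also lie inside a region (e.g., an atlas area)."""
    n_mask = sum(mask)
    if n_mask == 0:
        return 0.0
    return sum(1 for m, r in zip(mask, region) if m and r) / n_mask


# Toy example: four interaction maps over five voxels (made-up p-values).
maps = [
    [0.001, 0.010, 0.004, 0.200, 0.0001],
    [0.002, 0.001, 0.003, 0.001, 0.004],
    [0.0049, 0.001, 0.001, 0.001, 0.001],
    [0.001, 0.001, 0.004, 0.001, 0.001],
]
core = conjunction_mask(maps)  # [True, False, True, False, True]
frac = overlap_fraction(core, [True, True, False, False, True])  # 2 of the 3 core voxels
```

Requiring every map to pass its threshold makes the conjunction conservative: a single experiment with a weak interaction at a voxel removes that voxel from the core.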

Table 4.

Conjunction of interactions for mechanical hand and the three tool actions

| Region | No. of voxels (conj. 0.001) | No. of voxels (conj. 0.005) | MNI x | MNI y | MNI z | t: conjunction (experiments 1–4) | t: hand action minus static hand (experiment 1) | t: shape localizer | t: motion localizer |
|---|---|---|---|---|---|---|---|---|---|
| L IOG | 3 | 39 | −49 | −80 | −4 | 3.80 | 7.81 | 9.72 | 9.27 |
| R IOG | 7 | 65 | 47 | −75 | −7 | 4.13 | 5.73 | 12.57 | 9.89 |
| L IPL BA40 | 8 | 75 | −60 | −21 | 31 | 3.63 | n.s. | n.s. | n.s. |

Numbers of voxels in the activation site are indicated for two thresholds of the interaction (p < 0.001 and p < 0.005, uncorrected); t values as in Table 2. n.s., Nonsignificant; L, left; R, right; conj, conjunction; vox, voxels.

In contrast to left aSMG, a symmetrical ROI in the right hemisphere was not activated during observation of either hand or tool actions, nor were any of the interactions significant there (Fig. 4). Further control experiments revealed that the interaction in the left aSMG was not related to the lateralization of the visual stimulus presentation: presentation of videos with the action predominantly in the left rather than the right visual field yielded a similar interaction (Fig. 5A). Nor was the interaction in left aSMG due to mere stimulus translation. Rather, it resulted from the observation of goal-directed action performed with a mechanical device, and it vanished when the goal of the action was omitted from the videos (Fig. 5B).
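One way to obtain a right-hemisphere counterpart of a left-hemisphere ROI is to reflect its coordinates across the midsagittal plane. The sketch assumes MNI coordinates with x = 0 at the midline, so mirroring reduces to negating x; the text does not state how the symmetrical ROI was actually constructed, so this is an illustrative assumption. Because the two hemispheres are not perfectly symmetric, a mirrored ROI only approximates the homotopic region.

```python
def mirror_roi(coords):
    """Reflect (x, y, z) MNI coordinates across the midsagittal plane (x -> -x)."""
    return [(-x, y, z) for (x, y, z) in coords]


left_asmg_peak = [(-60, -21, 31)]           # left IPL BA40 conjunction coordinate (Table 4)
right_homolog = mirror_roi(left_asmg_peak)  # [(60, -21, 31)]
```

Applied to every voxel of the left aSMG core rather than to a single coordinate, the same reflection yields a right-hemisphere ROI of identical size and shape.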

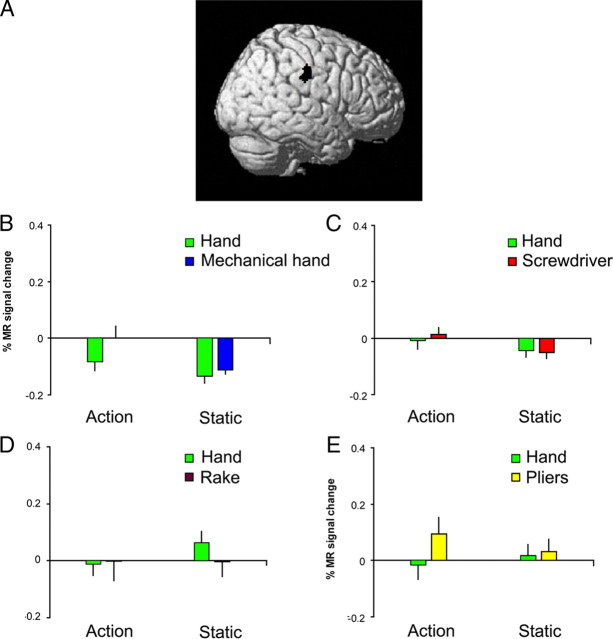

Figure 4.

Right SMG. A, Symmetrical ROI of aSMG core in right hemisphere. B–E, Activity profiles of this ROI in experiments 1–4. In none of the experiments did either paired t test reach significance in this ROI.

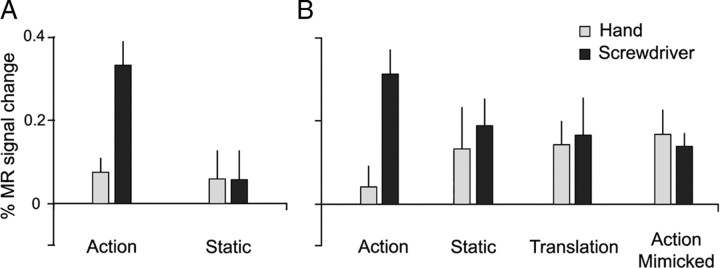

Figure 5.

Control experiments. A, Activity profiles of left aSMG in the first control experiment: actions presented in left visual field instead of right visual field (6 subjects); both paired t tests for the interaction and direct comparison of actions (see Materials and Methods) reached p < 0.05. B, Activity profiles of left aSMG in the second control experiment: actions and static controls in right visual field with gliding (translation of static frame) controls and tool action with no object added (7 subjects). In both cases the profiles are those of the 5 voxels of the aSMG ROI (used as an a priori prediction) in which the interaction with the screwdriver reached p < 0.01 in those particular subjects. In B, the interaction of the tool action with the gliding (translation) and static conditions was significant (paired t test, p < 0.01), as was the direct comparison of actions (p < 0.001).

While the mechanical arm interaction site overlapped the interaction sites for the three tools (screwdriver, rake, and pliers) in left aSMG (Fig. 3B), this was not the case in the two IOG conjunction regions (Fig. 6). In IOG, the interaction sites common to the three tools (Fig. 6B,C, white voxels) were located chiefly anterior to the mechanical arm interaction sites (blue outlines), with a few intervening voxels in common, corresponding to the conjunction regions (black voxels). This explains the functional heterogeneity of these conjunction regions indicated by their activity profiles (Fig. 6, compare D,E with F–K). A further difference between the aSMG and IOG conjunction sites is that the latter are clearly visual in nature, being both shape- and motion-sensitive, whereas the aSMG lacks these simple sensitivities (Table 4). Thus, the IOG conjunction sites differ considerably from the left aSMG core.

Figure 6.

Human IOG interaction sites. A, Flatmap of the left hemisphere (human PALS atlas). Green areas show activations in the contrast observation of hand action minus static control (p < 0.001, experiment 1). Blue and red outlines show the regions where the interactions tool versus hand, relative to their controls, were significant in experiments 1 and 2, respectively (p < 0.001). White lines indicate areas V1-3; yellow dot, hMT/V5+; numbers indicate regions as listed in supplemental Tables S2 and S3, available at www.jneurosci.org as supplemental material; black square, part shown in B. B, C, Part of flatmaps of left and right hemispheres of the human PALS atlas showing the regions corresponding to the conjunction of the interactions for the three tools (white voxels), to the interaction for the mechanical hand (blue outlines), and to the conjunction of the four interactions (black voxels). These latter voxels, located in IOG, reflect the overlap between two largely distinct regions, probably induced by the smoothing and the averaging across many subjects; green voxels, same as in A. D–K, Activity profiles of left (D, F, H, J) and right (E, G, I, K) IOG regions yielded by the conjunction analysis of experiments 1–4 (black voxels in B, C). The larger percentage signal changes in these IOG regions compared with those in IPL (Figs. 3, 5) underscore their visual nature.

Naive monkey fMRI experiments

Experiments 1–4, performed in humans, were replicated in five monkeys (M1, M5, M6, M13, M14; experiments 5–8), using the fMRI technique previously described (Vanduffel et al., 2001; Leite et al., 2002). Cortical regions activated in the contrast observation of hand action compared with its static control are shown in Figure 7, A and B, and those activated in the contrast observation of mechanical hand action compared with its static control are shown in Figure 7C. The activation pattern included occipitotemporal regions, premotor and inferior frontal regions, and parietal regions, as in humans (Fig. 1A,B). For both contrasts, the activation in parietal cortex was located mainly in the lateral bank of the IPS (supplemental Fig. S1, available at www.jneurosci.org as supplemental material). The strongest activation was located in the anterior, shape-sensitive part of the lateral intraparietal area (LIP) (Durand et al., 2007), extending into posterior AIP and posterior LIP. Notably, the parietal activation was asymmetric, being stronger in the left than in the right hemisphere, an observation that also applied to the human parietal cortex (see Fig. 1B). This asymmetric activation likely reflected an asymmetry in the action stimuli, in which most motion occurred in the right visual field. Alternatively, it may reflect the use of the right hand in the videos (Shmuelof and Zohary, 2006). No activation was observed in any of the four areas of monkey IPL: PF, PFG, PG, or Opt (Gregoriou et al., 2006).

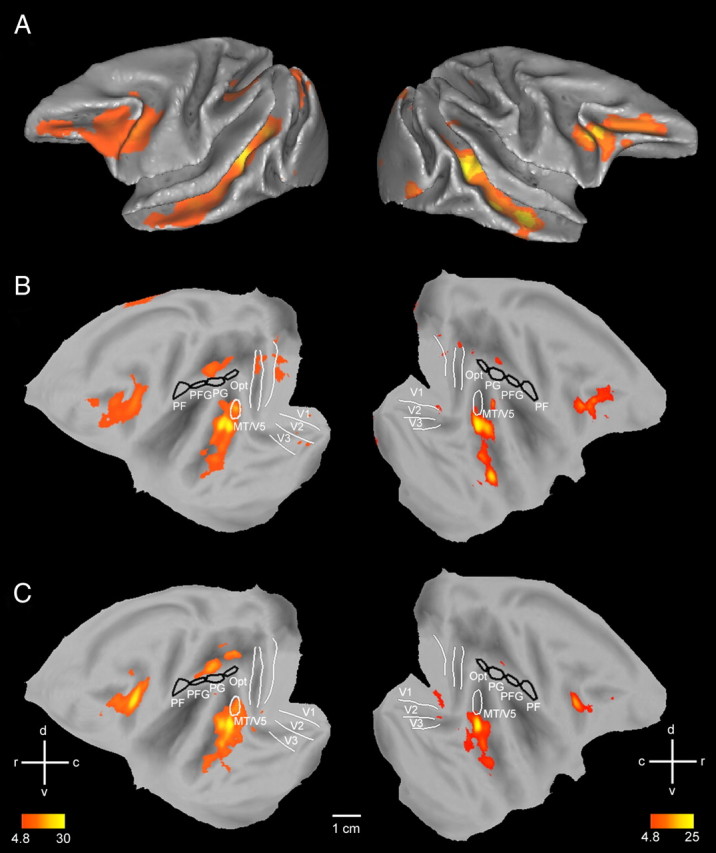

Figure 7.

Results of experiment 5. A–C, Folded left and right hemispheres (A) and flatmaps (B, C) of left and right hemisphere of monkey template (M12) brain (Caret software) showing cortical regions activated (n = 2, fixed effects, p < 0.05 corrected, experiment 5) in the subtraction observation of hand action minus static hand (A, B) and observation of mechanical hand action minus static mechanical hand (C). Both subtractions were masked inclusively with the contrast action observation minus fixation. Black outlines, PF, PFG, PG and Opt; outlines of visual regions (V1-3, MT/V5) are also indicated. Color bars indicate T-scores. See also supplemental Figure S1, available at www.jneurosci.org as supplemental material.

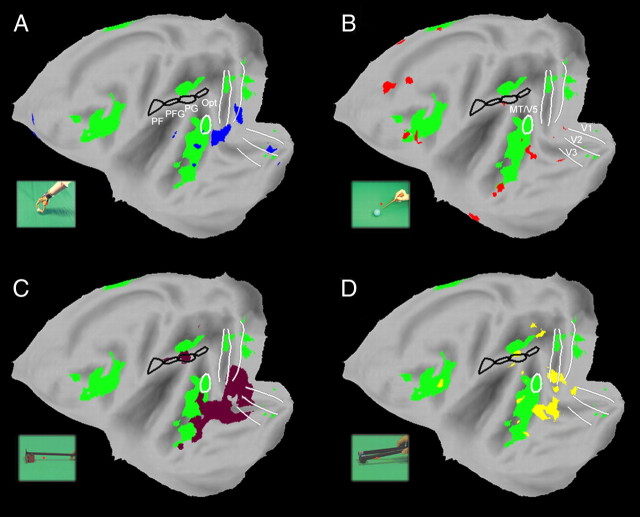

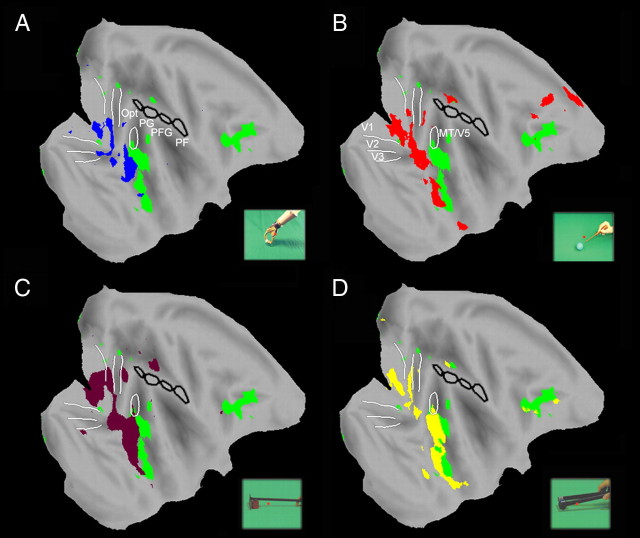

As expected from Figure 7, no significant interaction between the use of mechanical implements and hand actions, relative to their static controls, was observed in monkey IPL in experiment 5 (Figs. 8A, 9A) or in any of the three other experiments (Figs. 8B–D, 9B–D). Figures 8 and 9 illustrate the tool action versus hand action interaction sites observed in experiments 5–8 on the flattened left and right hemispheres, respectively (A–D: blue, red, brown, and yellow areas). Significant interactions were, however, observed in visual areas of the monkey, predominantly in TEO, sometimes extending into the superior temporal sulcus (STS) or V4, and to a lesser degree in early visual areas, probably reflecting low-level visual or shape differences between the videos.

Figure 8.

Interaction sites in the monkey. A–D, Monkey cortical regions activated during the observation of hand grasping action relative to its static control (green voxels, fixed effect, p < 0.05 corrected, experiment 5, same data as in Fig. 7B). Data are plotted on flatmaps of the M12 left hemisphere. The voxels more active (fixed effect, p < 0.001) in the contrast tool versus hand actions relative to their static controls (experiments 5–8) are shown in blue (A, mechanical hand), red (B, screwdriver), brown (C, rake), and yellow (D, pliers). ROIs as in Figure 7.

Figure 9.

Interaction sites in the monkey. A–D, Flatmaps of the right hemisphere of monkey template (M12) brain (Caret software) showing regions significant (fixed effects, p < 0.05 corrected) in the interaction in experiments 5–8 (same color code as in Fig. 8; n = 2 in experiment 5 and n = 3 in experiments 6–8). ROIs as in Figure 7.

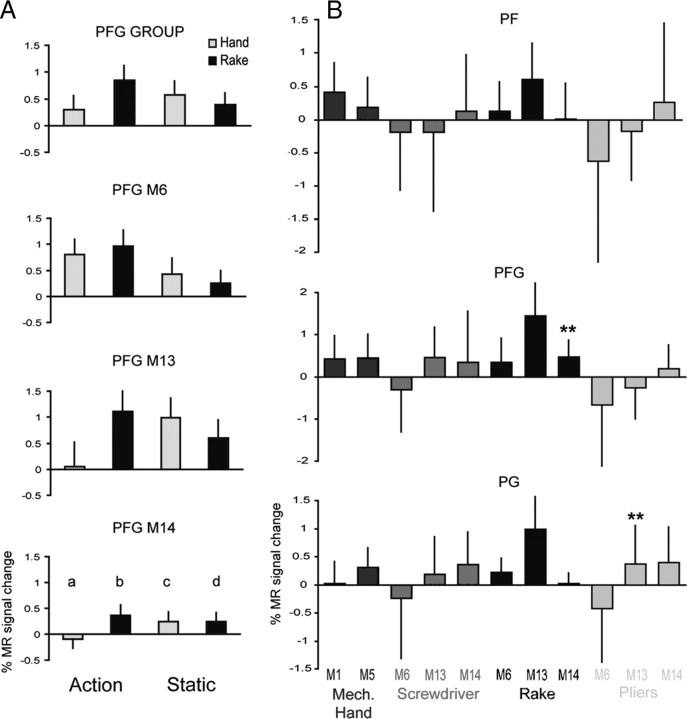

No significant interaction was observed in monkey IPL using the voxel-based analysis, with the sole exception of left area PG in experiment 7 (Fig. 8C, rake action). To ascertain whether a monkey parietal sector specific to tool action observation might nonetheless exist, we performed an additional ROI analysis of anatomically defined areas PF and PFG [where hand mirror neurons have been reported in single-neuron studies (Fogassi et al., 2005; Rozzi et al., 2008)] and PG of the left hemisphere. The results are shown in Figure 10, A and B. Since the interaction differs from subject to subject (Fig. 10A), we calculated the magnitude of the interaction for each tool-subject combination as (b − a) − (d − c), in which b and a are the MR signals in the tool and hand action conditions, respectively, and d and c are the signals in the corresponding static control conditions. The statistical analysis of tool-hand interactions, relative to their static controls, showed that of the 33 parietal interactions calculated, only two (PFG for rake in M14 and PG for pliers in M13) reached significance with the two paired t tests used in the analysis of human aSMG (Fig. 10B). This proportion (2/33) is close to chance, which predicts 1/20 false positives.
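The interaction magnitude and the chance argument can be made explicit. The sketch below computes (b − a) − (d − c) as defined above and, taking the 1/20 chance rate stated in the text and treating the 33 ROI tests as independent (a simplification, since tests within a monkey share data), the binomial probability of obtaining at least two false positives. The function names are hypothetical.

```python
from math import comb


def interaction_magnitude(b: float, a: float, d: float, c: float) -> float:
    """(b - a) - (d - c).

    b, a: MR signal for tool and hand action observation;
    d, c: signal for the corresponding static tool and static hand controls.
    """
    return (b - a) - (d - c)


def prob_at_least(k: int, n: int, p: float = 0.05) -> float:
    """Binomial tail P(X >= k) for n independent tests, each a false positive with probability p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


expected = 33 * 0.05                  # about 1.65 false positives expected among 33 tests
p_two_or_more = prob_at_least(2, 33)  # about 0.50, so two hits are unremarkable under chance
```

Under these assumptions, observing two significant interactions out of 33 is roughly as likely as not by chance alone, which is the sense in which the result is "close to chance".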

Figure 10.

ROI analysis of monkey IPL. A, Activity profiles in PFG (ROI analysis, experiment 7). B, Magnitude of the interaction (b − d − a + c) in PF, PFG, and PG for different tools and monkeys. Stars indicate significant interactions. Notice that the larger percentage signal change in the monkey reflects the use of contrast-agent-enhanced fMRI (see Materials and Methods).

To investigate the similarity between the occipitotemporal interaction sites of the monkey and the human IOG interaction sites, we also investigated the interactions for the tools separately from that for the mechanical arm. In monkeys, the interaction site common to the three tools (Fig. 11A, white voxels) was located in TEO, in front of the mechanical arm interaction site (blue outlines), which involves predominantly V4, extending into posterior TEO. This arrangement is similar to that observed in humans if one takes into account that the human data are averaged over many more subjects and are smoothed more than the monkey data. In the TEO regions, the interaction for the three tools arose mainly from a reduced response to static tools (Fig. 11B), as was the case in humans. Thus, the monkey occipitotemporal interaction sites bear close similarity to the interaction sites observed in human IOG.

Figure 11.

Occipitotemporal interaction sites in the monkey. A, Flatmap of the left hemisphere of monkey M12 (template brain) showing the regions activated (fixed effect, p < 0.001) in the contrast viewing hand action minus viewing static hand (green voxels, experiment 5) and in the interaction for the mechanical hand actions (blue outlines, experiment 5), as well as the voxels common to the interactions for the three tools (white voxels, experiments 6–8); ROIs as in Figure 7. B, Activity profiles of the local maximum and 6 surrounding voxels in TEO (white area in A) in experiments 6–8. Again, the larger percentage signal changes in the monkey reflect the use of contrast-enhanced fMRI: using the V1 activation as a common reference, Denys et al. (2004b) estimated the percentage signal changes with MION to average ∼2.5-fold those obtained with BOLD at 3T. A similar factor applies here when comparing with the data of Figure 6, taking into account the larger number of human subjects from whom data are averaged.

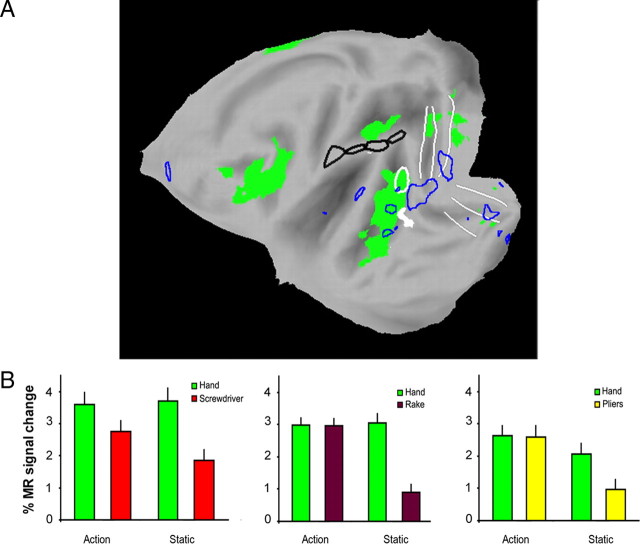

Trained monkey fMRI experiments

The negative results obtained in the five monkeys that we tested might reflect the fact that macaque monkeys do not normally use tools, or do so only rarely. Thus, to assess whether the activation observed in humans in the rostral part of IPL might be the neural substrate of the capacity to understand tool use, in experiment 9 we trained two monkeys (M13 and M14) to use a rake, and later pliers, to retrieve food. Both animals learned to use these tools proficiently (supplemental Movies S9–10, available at www.jneurosci.org as supplemental material) and were able to retrieve food positioned at 5 different locations in front of them. M13 and M14 reached 95 and 94% successful trials, respectively, when using the rake, and 97 and 76% when using the pliers. They were able to use the tools even when these implements were presented rotated by 90° or 180° (supplemental Movies S11–13, available at www.jneurosci.org as supplemental material), except for M14 when tested with turned pliers (see Materials and Methods). Finally, the analysis of the action kinematics (Fig. 12) revealed stable motion trajectories with both tools for all food positions.

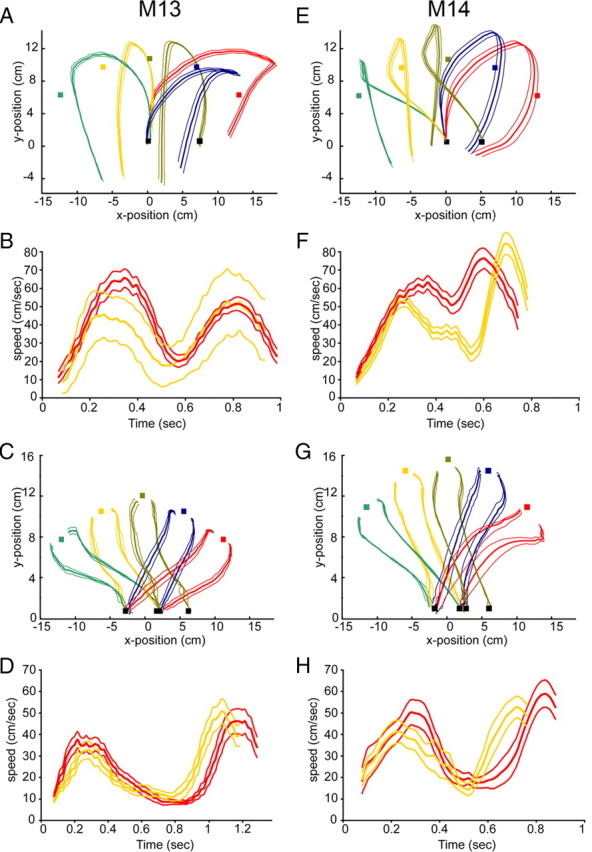

Figure 12.

Effects of training in M13 and M14: kinematic analysis. A, C, E, G, Tool trajectories (mean ± 1 SD) of trained monkeys reaching for and retrieving food. Black squares: starting points. Colored squares: food positions (5 directions: −45° to +45°). A, E, Trajectories using the rake (M13 and M14). A marker was attached at the middle of the rake head. The left starting point was used for all directions except straight ahead. C, G, Trajectories using the pliers (M13 and M14). Markers were attached to the arms of the pliers, 3 cm from the tip. B, D, F, H, Mean (±1 SD) speed-time diagrams for two trajectories (−2.5° and +45°). Rake, M13 and M14 (B, F); pliers, M13 and M14 (D, H).

Figure 12, A and E, illustrate the trajectories of the rake head when the monkey placed the rake beyond the piece of food and then pulled the rake back to retrieve the piece of food. For the central food position (olive curves) a different starting position was used, somewhat more to the right than that used for all other food positions. Even though there were differences between monkeys, with M13 always using the left side of the rake to pull the food pellet back and M14 switching sides, the trajectories for both monkeys were extremely stable. The variance was quite small, especially in the direction directly in front of the monkey. The speed diagrams (Fig. 12B,F) also show a very consistent pattern across trials: both monkeys moved more quickly in the first phase and then more slowly in the later, pulling phase for the more peripheral food position (red curves).
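Mean trajectories and speed profiles of the kind shown in Figure 12 can be computed from sampled marker positions. This sketch assumes 2D marker positions sampled at a fixed interval dt, trials already resampled to a common number of samples, forward differences for speed, and the sample standard deviation across trials; the recording system, sampling rate, and any smoothing actually used are not specified in this section, so all of these are assumptions.

```python
from math import hypot
from statistics import mean, stdev


def speed_profile(xs, ys, dt):
    """Speed between successive samples (forward differences), in position units per second."""
    return [hypot(x1 - x0, y1 - y0) / dt
            for x0, y0, x1, y1 in zip(xs, ys, xs[1:], ys[1:])]


def mean_sd_across_trials(trials):
    """Per-sample mean and sample SD across trials of equal length (e.g., time-normalized)."""
    if len(trials) < 2:
        raise ValueError("need at least two trials to compute an SD")
    n = len(trials[0])
    if any(len(t) != n for t in trials):
        raise ValueError("trials must have the same number of samples")
    columns = list(zip(*trials))  # one tuple per time sample
    return [mean(col) for col in columns], [stdev(col) for col in columns]


speeds = speed_profile([0.0, 3.0, 3.0], [0.0, 4.0, 4.0], dt=0.5)  # [10.0, 0.0]
means, sds = mean_sd_across_trials([[1.0, 2.0], [3.0, 4.0]])      # means [2.0, 3.0]
```

Applied separately to the x and y coordinates (or to the speed profiles) of repeated trials toward one food position, the per-sample mean and SD give the kind of mean ± 1 SD bands plotted in the figure; small SDs correspond to the stable trajectories described above.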

Figure 12, C and G, illustrate the trajectories of the two tips of the pliers for the initial phase of movement toward the food. Trajectories are similar in the two animals and again show remarkably little variance. The corresponding speed diagrams are also very consistent, with the movements for the more peripheral position being systematically slower. Notice that M13, who mastered the use of tools slightly better than M14, consistently moved the tools more slowly than the other monkey: maximum speeds were lower and the total duration of the action longer.

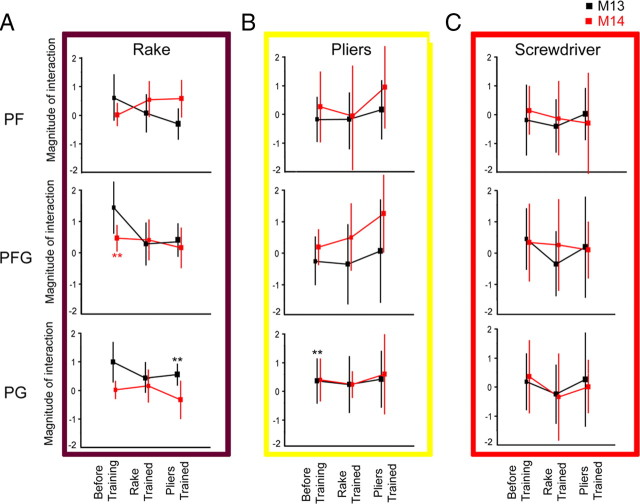

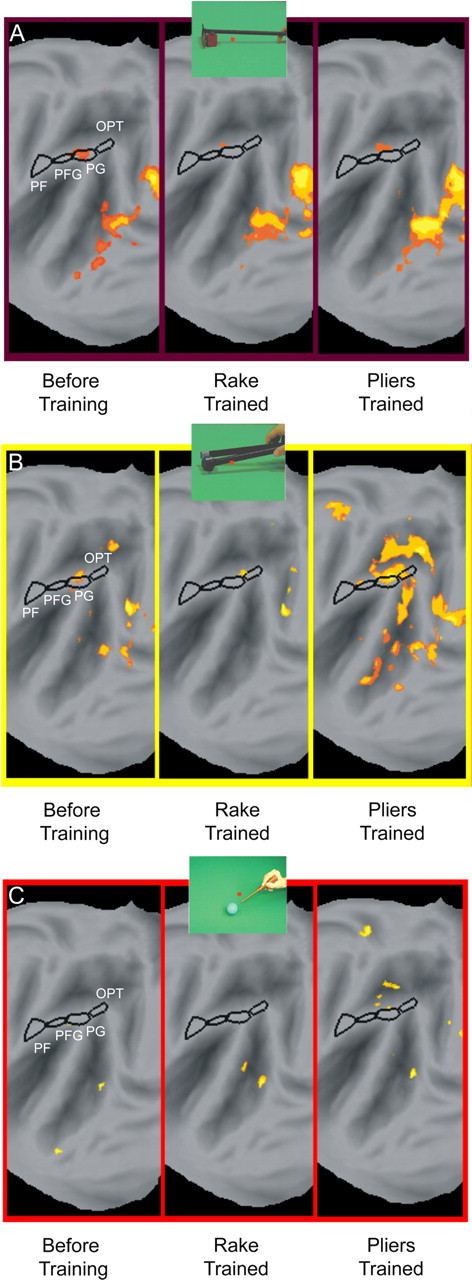

Monkeys 13 and 14 were scanned before tool use training, after learning to use the rake, and a third time after learning to use the pliers, with interleaved runs for the different tools. The interaction patterns were remarkably similar before and after training (Fig. 13), except for a clearly enhanced interaction for the observation of pliers actions versus hand actions after pliers training (Fig. 13B). Yet no voxels with significant interactions were observed in either PF or PFG, the anterior sectors of monkey IPL, for any of the tools after training. This voxel-based analysis was complemented by an ROI analysis of left PF, PFG, and PG similar to that performed in the naive animals. This analysis (Fig. 14) failed to reveal any significant post-training interaction: in none of the ROIs did the interaction reach significance for the observation of actions with the tool that the monkey had learned to use in the training just preceding the scanning. Overall, only three of the 54 (2 × 3 × 3 × 3) tests performed in the two trained animals yielded a statistically significant interaction: the two tests before training already mentioned above, and an interaction for the rake in M13 after training with the pliers. This small proportion (3/54) is again close to the predicted chance occurrence of 1/20 false positives.
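The count of 54 tests follows from crossing the factors of the post-training ROI analysis. The enumeration below assumes the factor levels given in the Figure 14 legend (two monkeys, three ROIs, three tools, three scanning epochs); the labels are illustrative. At the 1/20 chance rate used in the text, about 2.7 false positives would be expected, against the three observed.

```python
from itertools import product

MONKEYS = ("M13", "M14")
ROIS = ("PF", "PFG", "PG")
TOOLS = ("rake", "pliers", "screwdriver")
EPOCHS = ("before training", "after rake training", "after pliers training")

# One ROI test per monkey x ROI x tool x epoch combination.
tests = list(product(MONKEYS, ROIS, TOOLS, EPOCHS))
n_tests = len(tests)                      # 2 x 3 x 3 x 3 = 54
expected_false_positives = n_tests / 20   # 2.7 at the 1/20 chance rate
observed_rate = 3 / n_tests               # about 0.056, versus 0.05
```

The observed rate of significant interactions is thus barely above the nominal false-positive rate.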

Figure 13.

Effects of training: voxel-based analysis. A–C, SPMs showing significant interaction (n = 2, fixed effects, p < 0.001) tool action versus hand action observation, relative to their static control, for rake (A), pliers (B) and screwdriver (C) observation before training, after rake training, and after pliers training. The significant voxels (orange-yellow) are overlaid on flatmaps of the left hemisphere of monkey M12.

Figure 14.

ROI analysis of the magnitude of the interaction (see Fig. 10 for definition) after training. A–C, Interaction magnitude is plotted as a function of epoch for the two subjects (M13, M14) in each of the three ROIs (PF, PFG, and PG) for rake (A), pliers (B), and screwdriver (C) action observation. Significant values (both paired t tests p < 0.05, corrected for multiple comparisons) are indicated by stars. Runs for A–C were tested interleaved at each epoch.

This analysis clearly indicates that even after prolonged training there is no evidence for a tool-related region in the monkey comparable to human aSMG. Yet Figure 13 indicates that the tool versus hand interaction in the IPS increases with training, especially pliers training, and might include some parts of the hand action observation circuit. Supplemental Figure S2, available at www.jneurosci.org as supplemental material, shows that these interactions are weak in the anterior part of LIP, the most responsive part of the hand action observation circuit. In fact, the interaction is weaker than that observed in the human DIPSM, which is a plausible homolog of anterior LIP (Durand et al., 2009). Furthermore, factors other than tool-action-specific mechanisms might account for whatever weak interaction is present in the parietal regions of the monkey active during hand action observation. Given the similarity between grasping with the fingers and grasping with the tips of the pliers, the latter type of grasping might have been more clearly understood after training and therefore have become a more effective stimulus. Alternatively, because of its difficulty, observing the use of pliers might have elicited a stronger attentional modulation of the MR activation than observing hand grasping. However, it is also fair to state that even if no interaction is observed in the fMRI, it remains possible that some parts of the lateral bank of monkey IPS house small proportions of neurons similar to those present in human aSMG. Such neurons would escape detection if their proportions are small, because of the coarseness of fMRI (Joly et al., 2009). The proportion has to be small: parietal neurons responsive to viewing hand actions would respond to both hand and tool action observation, so a substantial population of additional tool-action-specific neurons would create an interaction signal in the fMRI, and this signal was weak (supplemental Fig. S2, available at www.jneurosci.org as supplemental material).
Finally, we cannot exclude that the training has induced neuronal changes (Hihara et al., 2006) resulting in the recruitment of additional parietal areas by the observation of tool action. This possibility of an expansion of the hand action observation circuit after training will be the topic of a later publication.
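The argument about small proportions of tool-action-specific neurons can be illustrated with a toy voxel model. Assuming, purely for illustration, that the fMRI signal pools linearly over neurons, that neurons responsive to hand actions respond equally to hand and tool actions, that a hypothetical fraction f of the action-responsive neurons respond only to tool actions, and that static controls evoke the same response for hand and tool, the interaction equals f times the response of the tool-specific cells. All parameter names and values are hypothetical.

```python
def voxel_interaction(frac_tool_specific: float, r_shared: float = 1.0,
                      r_tool: float = 1.0, r_static: float = 0.0) -> float:
    """Toy linear-pooling model of one voxel.

    (tool action - hand action) - (static tool - static hand), where a fraction f of
    action-responsive neurons are hypothetical tool-action-specific cells and the
    remaining (1 - f) respond equally to hand and tool actions.
    """
    f = frac_tool_specific
    hand_action = (1 - f) * r_shared
    tool_action = (1 - f) * r_shared + f * r_tool
    static_hand = static_tool = r_static
    return (tool_action - hand_action) - (static_tool - static_hand)


if __name__ == "__main__":
    for f in (0.01, 0.05, 0.20):
        # The interaction grows in proportion to f (here it equals f * r_tool).
        print(f, voxel_interaction(f))
```

In this model the interaction scales linearly with f, so a weak measured interaction caps how large f can be, while a small f could still go undetected against measurement noise.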

Discussion

The present results show that in both humans and monkeys, the observation of grasping actions performed with simple tools activates a parieto-frontal circuit also active during the observation and execution of hand grasping movements (Binkofski et al., 1999; Ehrsson et al., 2000; Buccino et al., 2001; Grèzes et al., 2003; Manthey et al., 2003; Gazzola et al., 2007) (see also Rizzolatti and Craighero, 2004). More importantly, however, in humans the observation of actions performed with these simple mechanical devices also activates a specific sector of the IPL, the aSMG. No equivalent tool-action specific activation was observed in monkey IPL, even after extensive training.

The human aSMG activation found in the present study is distinct from the parietal activation sites observed during the static presentation of tool images (Chao and Martin, 2000; Kellenbach et al., 2003; Creem-Regehr and Lee, 2005), which are located in posterior IPL, i.e., in the regions active during the observation of hand grasping. A possible explanation is that static pictures of tools, or even their translational motion (Beauchamp et al., 2002), activate the representations of how those tools are grasped as objects rather than the cognitive aspects related to their actual use. This view is supported by the study of Valyear et al. (2007), which describes an area just posterior to left AIP that is selectively activated during tool naming. In agreement with our findings, these authors explicitly posit that two left parietal regions are specialized for tool use: a region behind AIP, involved in the planning of skillful grasping of tools, and a more anterior region in the SMG related to the association of hand actions with the functional use of the tool. More generally, the left aSMG site is located rostral to the SMG regions involved in audiovisual phonological processing (Calvert and Campbell, 2003; Miller and D'Esposito, 2005; Hasson et al., 2007; Skipper et al., 2007). Similarly, action verbs compared with abstract verbs activate left area SII in the parietal operculum, rostral and ventral to the present aSMG site (Rüschemeyer et al., 2007). The left aSMG region is therefore outside the linguistic network (see Hickok and Poeppel, 2007), and its activation cannot be attributed to verbalization. Finally, there is little evidence that left aSMG is involved in understanding causal relationships in general. Split-brain studies have indicated that the left and right hemispheres are involved in causal inference and causal dynamic perception, respectively (Roser et al., 2005).
Yet the parietal regions recruited by viewing causal versus noncausal displays are all located more caudally than the aSMG (Blakemore et al., 2001; Fugelsang et al., 2005).

There is no direct evidence concerning the motor properties of aSMG. However, SMG has been reported to be active during the preparation (Johnson-Frey et al., 2005) and pantomime (Moll et al., 2000; Rumiati et al., 2004; Johnson-Frey et al., 2005) of tool use (for review, see Lewis, 2006; Króliczak and Frey, 2009), as well as during the manipulation of virtual tools (Lewis et al., 2005). It is, therefore, plausible that aSMG constitutes a node where observed tool actions are matched to the observer's own representations of tool use. Thus, just as the observation of hand actions triggers the parieto-frontal hand grasping circuit, the particular type of movements required to operate tools appears to recruit, in addition, aSMG, a region that would code specific motor programs for tool use. This view is supported by the activation of aSMG by the sounds of tool use (Lewis et al., 2005).

Negative findings, such as those obtained here for monkey IPL, are difficult to interpret in functional imaging studies. In the monkey experiments, however, we used a contrast agent that increases the contrast-to-noise ratio fivefold (Vanduffel et al., 2001). Furthermore, we used an ROI approach, which is more sensitive than a whole-brain analysis (Nelissen et al., 2005), and we had precise indications of the ROI locations (Fogassi et al., 2005; Rozzi et al., 2008). The similarity between the interaction in human IOG and that in monkey TEO/V4 indicates that our fMRI technique in the monkey is sufficiently sensitive. Observations were made in five monkeys, using actions performed with several tools, and were repeated after training to use tools. The performance levels and the kinematic analyses clearly indicate that the two trained monkeys were proficient with the rake and pliers. Hence, the evidence for a species difference in IPL activation by the observation of tool actions appears to be conclusive, although it is fair to state that the training of our monkeys was relatively short compared with the extensive human experience with tools. It is unclear how longer training, with more tools, would alter the results. It is also fair to note that, since monkey intraparietal regions responded to the observation of both hand and tool actions, we cannot exclude that these regions house small numbers of tool-action-specific neurons that escape detection by fMRI (see Results and supplemental Fig. S2, available at www.jneurosci.org as supplemental material).
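Part of the sensitivity argument for the ROI approach can be made concrete with a back-of-the-envelope calculation. The sketch below assumes a simple Bonferroni correction over independent one-tailed tests; the test counts (4 ROIs, 50,000 voxels) are hypothetical and chosen only for illustration, and nothing here describes the correction actually applied in this study. It shows how much lower the corrected z threshold is when inference is restricted to a few a priori ROIs rather than spread over every voxel of a whole-brain search. Averaging the signal across the voxels of an ROI, which reduces noise, is a second source of sensitivity that this sketch does not model.

```python
from statistics import NormalDist


def bonferroni_z_threshold(n_tests: int, alpha: float = 0.05) -> float:
    """One-tailed z threshold keeping the family-wise error rate at `alpha`
    across `n_tests` tests, using a Bonferroni correction (an assumption
    made for illustration only)."""
    if n_tests < 1:
        raise ValueError("n_tests must be >= 1")
    # Each test is evaluated at alpha / n_tests; invert the standard normal CDF.
    return NormalDist().inv_cdf(1.0 - alpha / n_tests)


# Hypothetical test counts, not taken from the study.
roi_tests = 4                # a handful of a priori ROIs
whole_brain_tests = 50_000   # voxels in a whole-brain search

print(f"ROI threshold:         z > {bonferroni_z_threshold(roi_tests):.2f}")
print(f"Whole-brain threshold: z > {bonferroni_z_threshold(whole_brain_tests):.2f}")
```

With these assumed counts, the ROI threshold comes out near z = 2.2 and the whole-brain threshold near z = 4.75, so an effect of moderate size can pass the first but not the second. Bonferroni is conservative for spatially correlated fMRI data, and random-field or permutation methods yield less stringent whole-brain thresholds, but the direction of the comparison is unchanged.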

The homology between the human and monkey parietal lobes is under dispute. Brodmann (1909) suggested that the homologues of the two main cytoarchitectonic subdivisions of the monkey posterior parietal lobe (areas 5 and 7) are both located in the human superior parietal lobule and that the human IPL (areas 40 and 39) is an evolutionarily new region. However, this view is difficult to accept completely, given that the intraparietal sulcus is an ancient sulcus, already present in prosimians (see Foerster, 1936). Hence, the recent literature has also adopted for the monkey the nomenclature of Von Economo (1929), naming the monkey IPL areas with the same terms as in humans: PF and PG (Von Bonin and Bailey, 1947; Pandya and Seltzer, 1982).

The present findings suggest that while the IPL sector around the intraparietal sulcus is functionally similar in monkeys and humans (as far as hand manipulation is concerned), the rostral part of IPL is a new human brain area that does not exist in monkeys. We propose that this human-specific region underlies a specific way of understanding tool actions. While the grasping circuit treats actions done with a tool as equivalent to hands grasping objects, aSMG codes tool actions in terms of the causal relationships between the intended use of the tool and the results obtained by using it. This capacity, whether specific to tools or more general, represents a fundamental evolutionary cognitive leap that greatly enlarged the motor repertoire of humans and, therefore, their capacity to interact with the environment.

The fact that monkeys can learn to use simple tools such as a rake or pliers (Iriki et al., 1996; Ishibashi et al., 2000; Umiltà et al., 2008) does not necessarily imply an understanding of the abstract relationship between tools and the goals that can be achieved by using them. With training, the rake or the pliers might simply become a prolongation of the arm, as shown by the response properties of neurons recorded from the medial wall of the IPS in such trained monkeys (Iriki et al., 1996; Hihara et al., 2006). Hence, monkeys can rely on the hand grasping circuit to handle the tool (Obayashi et al., 2001), although this circuit may include some neuronal elements providing a primitive representation of causal relationships.

Macaques separated from the ancestors of humans more than 30 million years ago. Recent findings concerning the development of tool use during hominid evolution allow us to speculate about the moment at which the parietal region related to tool use emerged. The earliest evidence for hominid tool technology is the sharp-edged flakes of the Oldowan industrial complex [2.5 million years ago (Susman, 1994; Roche et al., 1999)]. During the Acheulian industrial complex, dating back ∼1.5 million years (Asfaw et al., 1992), large cutting tools were manufactured by Homo erectus and possibly Homo ergaster. Their diversity (handaxes, cleavers, picks) suggests that these early humans had the capacity to represent the causal relationship between tool use and the results obtained with it. Thus, the emergence of a new functional area in rostral IPL may have occurred at least 1.5 million years ago (Ambrose, 2001). It may have emerged even earlier, during the Oldowan industrial complex or when apes diverged from monkeys. Apes use tools readily (Whiten et al., 1999) and modify herb stems to make them more efficient tools, which implies that they have a template of the tool form (Sanz et al., 2009). Causal understanding of tool use, however, may require more than a template, as it implies the integration of visual information into specialized motor schemata (Povinelli, 2000; Martin-Ordas et al., 2008).

In conclusion, the description of a region in the human brain, the aSMG, specifically related to tool use sheds new light on the neural basis of an evolutionarily new function typical of Homo sapiens. Neurons specifically related to tool use might already be present in monkeys, dispersed within the hand action circuit and hence not detectable by fMRI. However, the appearance in humans of a new functional area, in which neurons with similar properties may interact, could have enabled new cognitive functions that a less structured organization could hardly mediate.

Footnotes

This work was supported by grants Neurobotics (European Union), Fonds Wetenschappelijk Onderzoek G151.04, GOA 2005/18, EF/05/014, IUAP 6/29, and ASI, and by a grant from Ministero dell'Istruzione, dell'Università e della Ricerca. The Laboratoire Guerbet (Roissy, France) provided the contrast agent (Sinerem). We are indebted to C. Fransen, A. Coeman, P. Kayenberg, G. Meulemans, W. Depuydt, and M. Depaep for help with the experiments and to S. Raiguel, R. Vogels, R. Vandenberghe, D. Van Essen, G. Humphreys, B. Blin, and J. Call for comments on an earlier version of the manuscript.

References

- Ambrose SH. Paleolithic technology and human evolution. Science. 2001;291:1748–1753. doi: 10.1126/science.1059487.

- Asfaw B, Beyene Y, Suwa G, Walter RC, White TD, WoldeGabriel G, Yemane T. The earliest Acheulean from Konso-Gardula. Nature. 1992;360:732–735. doi: 10.1038/360732a0.

- Beauchamp MS, Lee KE, Haxby JV, Martin A. Parallel visual motion processing streams for manipulable objects and human movements. Neuron. 2002;34:149–159. doi: 10.1016/s0896-6273(02)00642-6.

- Beck BB. Animal tool behaviour. New York: Garland STPM; 1980.

- Binkofski F, Buccino G, Posse S, Seitz RJ, Rizzolatti G, Freund H. A fronto-parietal circuit for object manipulation in man: evidence from an fMRI-study. Eur J Neurosci. 1999;11:3276–3286. doi: 10.1046/j.1460-9568.1999.00753.x.

- Blakemore SJ, Fonlupt P, Pachot-Clouard M, Darmon C, Boyer P, Meltzoff AN, Segebarth C, Decety J. How the brain perceives causality: an event-related fMRI study. Neuroreport. 2001;12:3741–3746. doi: 10.1097/00001756-200112040-00027.

- Brodmann K. Vergleichende Lokalisationslehre der Grosshirnrinde. Leipzig, Germany: Johann Ambrosius Barth; 1909.

- Buccino G, Binkofski F, Fink GR, Fadiga L, Fogassi L, Gallese V, Seitz RJ, Zilles K, Rizzolatti G, Freund HJ. Action observation activates premotor and parietal areas in a somatotopic manner: an fMRI study. Eur J Neurosci. 2001;13:400–404.

- Calvert GA, Campbell R. Reading speech from still and moving faces: the neural substrates of visible speech. J Cogn Neurosci. 2003;15:57–70. doi: 10.1162/089892903321107828.

- Caspers S, Geyer S, Schleicher A, Mohlberg H, Amunts K, Zilles K. The human inferior parietal cortex: cytoarchitectonic parcellation and interindividual variability. Neuroimage. 2006;33:430–448. doi: 10.1016/j.neuroimage.2006.06.054.

- Chao LL, Martin A. Representation of manipulable man-made objects in the dorsal stream. Neuroimage. 2000;12:478–484. doi: 10.1006/nimg.2000.0635.

- Chef d'Hotel C, Hermosillo G, Faugeras O. Flows of diffeomorphisms for multimodal image registration. Proc IEEE Int Symp Biomed Imag. 2002;7–8:753–756.

- Creem-Regehr SH, Lee JN. Neural representations of graspable objects: are tools special? Brain Res Cogn Brain Res. 2005;22:457–469. doi: 10.1016/j.cogbrainres.2004.10.006.

- Denys K, Vanduffel W, Fize D, Nelissen K, Peuskens H, Van Essen D, Orban GA. The processing of visual shape in the cerebral cortex of human and nonhuman primates: a functional magnetic resonance imaging study. J Neurosci. 2004a;24:2551–2565. doi: 10.1523/JNEUROSCI.3569-03.2004.

- Denys K, Vanduffel W, Fize D, Nelissen K, Sawamura H, Georgieva S, Vogels R, Van Essen D, Orban GA. Visual activation in prefrontal cortex is stronger in monkeys than in humans. J Cogn Neurosci. 2004b;16:1505–1516. doi: 10.1162/0898929042568505.

- Durand JB, Nelissen K, Joly O, Wardak C, Todd JT, Norman JF, Janssen P, Vanduffel W, Orban GA. Anterior regions of monkey parietal cortex process visual 3D shape. Neuron. 2007;55:493–505. doi: 10.1016/j.neuron.2007.06.040.

- Durand JB, Peeters R, Norman JF, Todd JT, Orban GA. Parietal regions processing visual 3D shape extracted from disparity. Neuroimage. 2009;46:1114–1126. doi: 10.1016/j.neuroimage.2009.03.023.

- Ehrsson HH, Fagergren A, Jonsson T, Westling G, Johansson RS, Forssberg H. Cortical activity in precision- versus power-grip tasks: an fMRI study. J Neurophysiol. 2000;83:528–536. doi: 10.1152/jn.2000.83.1.528.

- Ekstrom LB, Roelfsema PR, Arsenault JT, Bonmassar G, Vanduffel W. Bottom-up dependent gating of frontal signals in early visual cortex. Science. 2008;321:414–417. doi: 10.1126/science.1153276.

- Fize D, Vanduffel W, Nelissen K, Denys K, Chef d'Hotel C, Faugeras O, Orban GA. The retinotopic organization of primate dorsal V4 and surrounding areas: a functional magnetic resonance imaging study in awake monkeys. J Neurosci. 2003;23:7395–7406. doi: 10.1523/JNEUROSCI.23-19-07395.2003.

- Foerster O. Motorische Felder und Bahnen. In: Bumke H, Foerster O, editors. Handbuch der Neurologie IV. Berlin: Springer; 1936. pp. 49–56.

- Fogassi L, Ferrari PF, Gesierich B, Rozzi S, Chersi F, Rizzolatti G. Parietal lobe: from action organization to intention understanding. Science. 2005;308:662–667. doi: 10.1126/science.1106138.

- Frey SH. Tool use, communicative gesture and cerebral asymmetries in the modern human brain. Philos Trans R Soc Lond B Biol Sci. 2008;363:1951–1957. doi: 10.1098/rstb.2008.0008.

- Friston KJ, Holmes AP, Poline JB, Grasby PJ, Williams SC, Frackowiak RS, Turner R. Analysis of fMRI time-series revisited. Neuroimage. 1995a;2:45–53. doi: 10.1006/nimg.1995.1007.

- Friston K, Holmes A, Worsley K, Poline J, Frith C, Frackowiak R. Statistical parametric maps in functional imaging: a general linear approach. Hum Brain Mapp. 1995b;2:189–210.

- Fugelsang JA, Roser ME, Corballis PM, Gazzaniga MS, Dunbar KN. Brain mechanisms underlying perceptual causality. Brain Res Cogn Brain Res. 2005;24:41–47. doi: 10.1016/j.cogbrainres.2004.12.001.