Abstract

Visual imagery is mediated via top-down activation of visual cortex. Similar to stimulus-driven perception, the neural configurations associated with visual imagery are differentiated according to content. For example, imagining faces or places differentially activates visual areas associated with perception of actual face or place stimuli. However, while top-down activation of topographically specific visual areas during visual imagery is well established, the extent to which internally generated visual activity resembles the fine-scale population coding responsible for stimulus-driven perception remains unknown. Here, we sought to determine whether top-down mechanisms can selectively activate perceptual representations coded across spatially overlapping neural populations. We explored the precision of top-down activation of perceptual representations using neural pattern classification to identify activation patterns associated with imagery of distinct letter stimuli. Pattern analysis of the neural population observed within high-level visual cortex, including lateral occipital complex, revealed that imagery activates the same neural representations that are activated by corresponding visual stimulation. We conclude that visual imagery is mediated via top-down activation of functionally distinct, yet spatially overlapping population codes for high-level visual representations.

Keywords: visual imagery, pattern analysis, fMRI, top-down control, visual selectivity, object recognition

Introduction

High-level cognitive functions depend on interactions between multiple levels of processing within distinct, yet highly interconnected brain areas. In particular, interactions between perceptual representations coded within posterior cortical regions and anterior control systems in prefrontal cortex are thought to underlie goal-directed attention (Desimone and Duncan, 1995), working memory (Ranganath and D'Esposito, 2005), and, more generally, executive control (Duncan, 2001; Miller and Cohen, 2001) and conscious awareness (Dehaene et al., 1998; Baars and Franklin, 2003). Here, we explore the precision of top-down access to perceptual representations during mental imagery. Using neural classification procedures to characterize patterns of brain activity associated with visual imagery, we demonstrate that top-down mechanisms can access highly specific visual representations in the complete absence of corresponding visual stimulation.

During normal visual perception, input from the retina selectively activates specific population codes representing distinct perceptual experiences. Each of the seemingly infinite number of perceptual states that we can experience is differentiated by the unique pattern of neural activity distributed across a specific subpopulation of cortical neurons. Importantly, the neural machinery engaged by direct visual stimulation is also accessed in the absence of sensory input. For example, imagining a visual scene activates the visual cortex, even when the eyes are closed (Kosslyn et al., 1995), and functional deactivation of visual cortex degrades the quality of such internally generated images (Kosslyn et al., 1999). Similar to normal perception, the neural responses to mental imagery are differentiated according to the content of the experience. Imagining faces or places differentially activates visual areas associated with perception of actual face or place stimuli (Ishai et al., 2000; O'Craven and Kanwisher, 2000; Mechelli et al., 2004). In this respect, mental imagery has been considered as a special case of perceptual access (Kosslyn et al., 2001): whereas normal perception is driven by sensory input, imagery is triggered by internal signals from higher cognitive centers, such as the prefrontal cortex (Mechelli et al., 2004). Although top-down activation of visual cortex is well established (Miller and D'Esposito, 2005), the extent to which internally generated activity in visual cortex resembles the fine-scale population coding responsible for stimulus-driven perception is unknown. Here we show that top-down mechanisms for visual imagery access the same distributed representations within the visual cortex that also underlie visual perception.

Using functional magnetic resonance imaging (fMRI), we measured changes in brain activity while participants imagined or viewed the letter “X” or “O” (see Fig. 1), and then applied multivoxel pattern analysis (MVPA) (for review, see Haynes and Rees, 2006; Norman et al., 2006) to determine whether observed activation patterns could reliably discriminate between different states of visual imagery, and/or stimulus-driven perception. Furthermore, by extending the logic of the machine-learning approach, we were also able to formally compare activity patterns associated with visual imagery and veridical perception. In our experiment, accurate cross-generalization between the activation patterns in visual cortex underlying perception and imagery confirmed that top-down visual coding shares significant similarity to the corresponding visual codes activated during stimulus-driven perception.

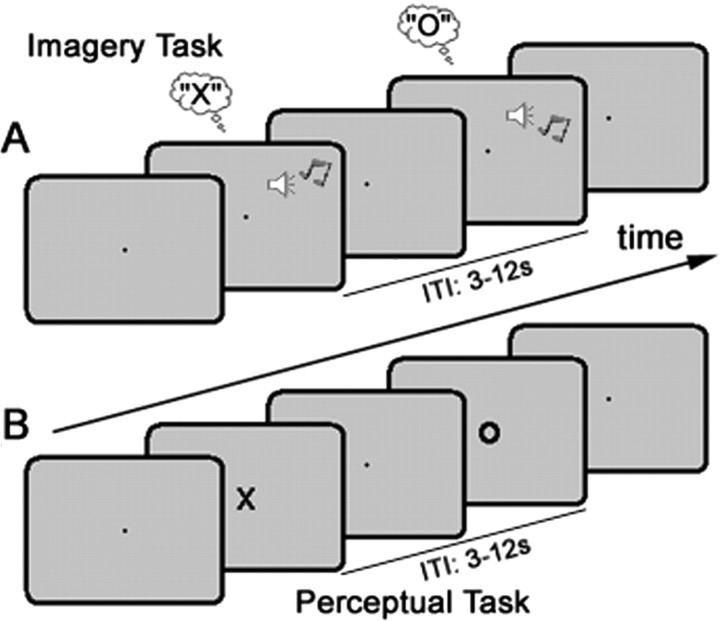

Figure 1.

The experimental design. A, During the imagery task, participants were cued (via either a high or low pitch auditory tone) on a trial-by-trial basis to imagine either X or O. The intertrial interval (ITI) was randomized between 3 and 12 s. B, During the perceptual task, participants were instructed to watch a series of visually presented letter stimuli (X or O; duration 250 ms, ITI 3–12 s) and press the same button following the presentation of each stimulus.

Materials and Methods

Participants and behavioral task.

Participants (15; 6 female; aged 20–38; right-handed) were screened for MR contraindications and provided written informed consent before scanning. The Cambridge Local Research Ethics Committee approved all experimental protocols.

The experiment was conducted over six scanning runs. Each run contained one block (24 randomized trials; ∼4 min) of each task: imagery task and perceptual task (Fig. 1A,B). During the imagery task, participants were instructed via an auditory cue every 3–12 s to imagine either the letter X or O (Fig. 1A). During the perceptual task, a letter (X or O, randomly selected) was presented for 250 ms every 3–12 s (Fig. 1B). Participants responded as quickly as possible to each letter stimulus with a single button press (right index finger) regardless of letter identity. The perceptual task was presented first during the first scanning run, and in each subsequent run task order was randomized. Each block commenced with an instruction indicating the relevant task (e.g., “Imagery”), and blocks were separated by a 16 s rest period.

Visual stimuli were viewed via a back-projection display (1024 × 768 resolution, 60 Hz refresh rate) with a uniform gray background. Visual Basic (Microsoft Windows XP; Dell Latitude 100L Pentium 4 Intel 1.6 GHz) was used for all aspects of experimental control, including stimulus presentation, recording behavioral responses (via an MR-compatible button-box) and synchronizing experimental timing with scanner pulse timing. Central fixation was marked via a black point (∼0.2°) at the center of the visual display. Visual stimuli used during the perceptual task were black uppercase letters X or O in Arial font (∼3 × 4°). The cue stimulus used to direct visual imagery was either a high (600 Hz) or low (200 Hz) pitched tone presented for 100 ms via MR-compatible earphones. Participants were instructed before scanning to associate the high-pitch tone with the letter X, and the low-pitch tone with the letter O. Participants were also instructed to generate mental images as similar as possible in actual detail to the visual stimuli.

fMRI data acquisition.

Functional data (T2*-weighted echo planar images) were collected using a Siemens 3T Tim Trio scanner with a 12-channel head coil. Volumes consisted of 16 slices (3 mm) acquired in descending order [repetition time = 1 s; echo time = 30 ms; flip angle = 78°]. The near-axial acquisition matrix (64 × 64 voxels per slice; 3 × 3 × 3 mm voxel resolution with a 25% gap between slices) was angled to capture occipital, posterior temporal and ventral prefrontal cortex. The first eight volumes were discarded to avoid T1 equilibrium effects. High-resolution (1 × 1 × 1 mm) structural images were acquired for each participant to enable coregistration and spatial normalization.

Univariate data analysis.

Data were analyzed initially using a conventional univariate approach in SPM 5 (Wellcome Department of Cognitive Neurology, London, UK). Preprocessing steps were: realignment, unwarping, slice-time correction, spatial normalization [Montreal Neurological Institute (MNI) template], smoothing (Gaussian kernel: 8 mm full-width half-maximum) and high-pass filtering (cutoff = 64 s). In the first-level single-participant analysis, events from each of the six scanning runs were modeled using four explanatory variables: Xperception, Operception, Ximagery, Oimagery. These were derived by convolving the onsets for imagery and perceptual events with the canonical hemodynamic response function. Constant terms were also included to account for session effects, and serial autocorrelations were estimated using an AR(1) model with prewhitening. Images containing the β parameter estimates for each condition were then averaged across scanning runs, and entered into a second-level random-effects analysis. The results from the second-level group analysis were corrected for multiple comparisons using a false discovery rate (FDR) threshold of 5%.
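The FDR correction is stated only as a threshold. As a minimal sketch of how such a correction can operate, the following assumes the Benjamini-Hochberg step-up procedure, which is our assumption and not something the text specifies; the function name `fdr_bh` and the p-values are hypothetical and serve only as illustration.

```python
import numpy as np

def fdr_bh(p_values, q=0.05):
    """Boolean mask of p-values surviving Benjamini-Hochberg FDR at level q."""
    p = np.asarray(p_values, dtype=float)
    m = p.size
    order = np.argsort(p)
    ranked = p[order]
    # Step-up thresholds: the k-th smallest p-value is compared with (k / m) * q.
    thresholds = q * np.arange(1, m + 1) / m
    below = ranked <= thresholds
    survive = np.zeros(m, dtype=bool)
    if below.any():
        k_max = np.nonzero(below)[0].max()  # largest rank that meets its threshold
        survive[order[:k_max + 1]] = True   # everything up to that rank survives
    return survive

# Hypothetical voxelwise p-values, for illustration only.
p_demo = [0.001, 0.008, 0.039, 0.041, 0.60]
mask = fdr_bh(p_demo, q=0.05)
```

Because the procedure is step-up, a p-value that misses its own rank threshold can still survive when a larger p-value meets a later threshold.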

Multivoxel pattern analysis.

The principal aim of MVPA is to incorporate information distributed across a set of voxels that might discriminate between two or more experimental conditions (Haynes and Rees, 2006; Norman et al., 2006). Here, we adapted a correlation approach to MVPA (Haxby et al., 2001; Williams et al., 2007). Because this approach to neural classification is typically based on effect-size estimates of continuous regression functions (i.e., β parameters, see Williams et al., 2007), correlation-based methods readily handle event-related fMRI data. Furthermore, unlike other approaches to neural classification including linear discriminant analysis and support vector machines, correlation is scale- and mean-level-invariant, and therefore, not directly influenced by homogeneous differences in condition-specific activation levels. This is important because it is the pattern of differential activity, not magnitude differences, that constitutes the neural signature for differential population coding.
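The invariance property can be illustrated numerically. The sketch below uses synthetic patterns (hypothetical data, not taken from the study) to show that applying a homogeneous gain and offset to every voxel of one pattern leaves the across-voxel Pearson correlation unchanged.

```python
import numpy as np

rng = np.random.default_rng(0)
train = rng.normal(size=90)           # hypothetical training pattern (~90 voxels)
test = train + rng.normal(size=90)    # noisy test pattern sharing the same structure

def pattern_correlation(a, b):
    """Pearson correlation across voxels between two activation patterns."""
    return float(np.corrcoef(a, b)[0, 1])

r_original = pattern_correlation(train, test)
# Homogeneous positive gain (x3) and offset (+5) applied to every voxel of the test pattern.
r_rescaled = pattern_correlation(train, 3.0 * test + 5.0)
```

The two coefficients agree up to floating-point error, so a classifier that depends only on the sign of this correlation is unaffected by overall differences in activation level between conditions or data sets.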

To train our linear classifier, we first estimated the β parameters for a subset of training data (five of the six scanning runs) using the same design matrix described for the univariate analyses. For each voxel, the relative activation bias associated with imagining (or viewing) X relative to imagining (or viewing) O was then calculated by subtracting the mean β estimates for the two conditions. The resultant set of voxel biases was then scaled by the multivariate structure of the noise: each set of voxel biases was divided by a squared estimate of the voxelwise error covariance matrix. This was derived by first calculating the residuals (i.e., observed minus fitted data) of the training data at each voxel and then estimating the covariance of the residuals using a shrinkage procedure (Kriegeskorte et al., 2006). The generalizability of the estimated training vector of weighted voxel biases was then tested against the pattern of voxel biases observed within the test data set. The β parameters were estimated for the test data using the corresponding portion of the design matrix, and the condition-specific contrast [i.e., imagining (or viewing) X minus imagining (or viewing) O] was calculated for each voxel. The test contrast vector was then divided by a training-independent estimate of the noise, the squared error covariance of the test data. The accuracy of classification was indexed by the correlation across space between the training values for each voxel and those calculated for the test data. Correlation coefficients above zero were interpreted as correct classifications of the (X–O) bias pattern, whereas coefficients of zero or below were recorded as incorrect classifications. The overall percent correct classification score was then calculated from across the six possible train-test permutations. Second-level group analyses were then performed on mean accuracy scores.
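The train-test logic described above can be sketched as follows. This is a simplified illustration under stated assumptions, not the analysis code used in the study: it takes per-run β estimates as given, approximates the training pattern by averaging the X-minus-O bias over the five training runs, and omits the noise-covariance scaling step. The function name and the synthetic data are hypothetical.

```python
import numpy as np

def leave_one_run_out_accuracy(betas_x, betas_o):
    """Percent-correct score over leave-one-run-out folds.

    betas_x, betas_o: arrays of shape (n_runs, n_voxels) holding per-run
    beta estimates for the X and O conditions within one region.
    Assumption: noise normalization is omitted in this sketch.
    """
    bias = np.asarray(betas_x, float) - np.asarray(betas_o, float)  # X minus O, per run
    n_runs = bias.shape[0]
    correct = 0
    for test_run in range(n_runs):
        train_runs = [r for r in range(n_runs) if r != test_run]
        train_pattern = bias[train_runs].mean(axis=0)  # bias pattern from training runs
        test_pattern = bias[test_run]                  # bias pattern from held-out run
        r = np.corrcoef(train_pattern, test_pattern)[0, 1]
        correct += int(r > 0)  # coefficients of zero or below count as incorrect
    return 100.0 * correct / n_runs

# Synthetic example: six runs, 90 voxels, with a strong shared X-minus-O pattern.
rng = np.random.default_rng(1)
signal = rng.normal(size=90)
betas_x = 0.5 * signal + 0.1 * rng.normal(size=(6, 90))
betas_o = -0.5 * signal + 0.1 * rng.normal(size=(6, 90))
accuracy = leave_one_run_out_accuracy(betas_x, betas_o)
```

With six runs, each participant's score can take only seven values (0/6 through 6/6 correct), which is why group-level inference is performed on mean accuracy across participants.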

All pattern analyses (implemented in Matlab using customized procedures and SPM 5 for image handling) were performed on minimally preprocessed data to preserve the participant-specific high spatial frequency information used to index differential population codes (Haxby et al., 2001; Haynes and Rees, 2005; Kamitani and Tong, 2005). Functional images were spatially aligned/unwarped and slice-time corrected, but not spatially normalized or smoothed. The time-series data from each voxel were high-pass filtered (cutoff = 64 s) and data from each session were scaled to have a grand mean value of 100 across all voxels and volumes. Each classifier was based on the pattern of activity observed within a sphere, radius = 10 mm, corresponding to ∼90 voxels (median of 91 voxels across all regions, and all participants). Initially, we performed ROI analyses on predefined regions-of-interest (ROIs); however, to explore the data more generally, we also performed a searchlight procedure (Kriegeskorte et al., 2006) (see also Haynes et al., 2007; Soon et al., 2008). During this multiregion MVPA, the ROI was centered on each cortical voxel in turn, and classification accuracy was recorded at the central voxel to construct a discrimination accuracy map. Accuracy maps for each discriminative analysis were then spatially normalized to the MNI template, and assessed via a random-effects group analysis. As with the standard univariate analyses, the group results were corrected for multiple comparisons (FDR <5%).
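The spherical region and the searchlight loop can be sketched as below. This is an illustrative outline only: the function names are hypothetical, `score_fn` stands in for whatever classifier is applied to each neighbourhood, and the 3 × 3 × 3.75 mm voxel spacing is our reading of 3 mm slices with a 25% gap, not a value stated in the text.

```python
import numpy as np

def sphere_offsets(radius_mm, voxel_size_mm):
    """Integer voxel offsets whose centers lie within radius_mm of the central voxel."""
    voxel_size = np.asarray(voxel_size_mm, dtype=float)
    reach = np.floor(radius_mm / voxel_size).astype(int)  # max offset along each axis
    axes = [np.arange(-n, n + 1) for n in reach]
    grid = np.stack(np.meshgrid(*axes, indexing="ij"), axis=-1).reshape(-1, 3)
    distance = np.linalg.norm(grid * voxel_size, axis=1)  # distance in millimetres
    return grid[distance <= radius_mm]

def searchlight(mask, offsets, score_fn):
    """Apply score_fn to the in-mask neighbourhood of every in-mask voxel."""
    mask = np.asarray(mask, dtype=bool)
    out = np.full(mask.shape, np.nan)
    shape = np.array(mask.shape)
    for center in np.argwhere(mask):
        coords = center + offsets
        inside = np.all((coords >= 0) & (coords < shape), axis=1)  # drop out-of-volume voxels
        coords = coords[inside]
        coords = coords[mask[tuple(coords.T)]]                     # drop out-of-mask voxels
        out[tuple(center)] = score_fn(coords)                      # record at the central voxel
    return out

# Offsets for a 10 mm sphere on the assumed acquisition grid.
offsets_10mm = sphere_offsets(10.0, (3.0, 3.0, 3.75))

# Toy example: count neighbourhood size on a small, fully masked volume.
toy_offsets = sphere_offsets(3.0, (3.0, 3.0, 3.0))
counts = searchlight(np.ones((5, 5, 5), dtype=bool), toy_offsets, len)
```

In the toy example the neighbourhood shrinks near the volume boundary, which illustrates why the number of voxels contributing to a classifier can vary from one searchlight position to the next.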

Results

Activation analysis of visual imagery and stimulus-driven perception

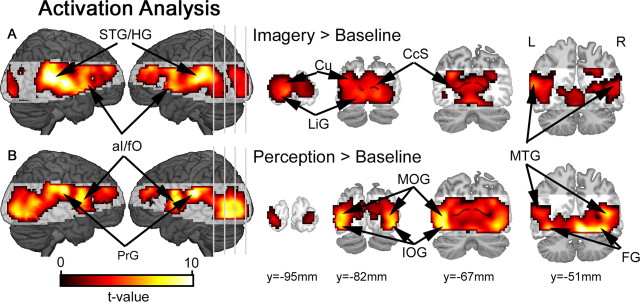

Before more detailed pattern analyses, we first examined the data for overall changes in regional activation levels within each task (imagery vs baseline; perception vs baseline), and also between the stimulus conditions within each task (Ximagery vs Oimagery; Xperception vs Operception). These conventional univariate analyses revealed significant activation of visual cortex during both imagery and perception. Activation peaks were observed within the calcarine sulcus, cuneus and lingual gyrus, extending anteriorly along the inferior/middle occipital gyri; above-baseline activity was also detected in fusiform and middle temporal gyri (Fig. 2). The cue-related visual response during the imagery task is consistent with previous evidence for selective activation of visual cortical areas during visual imagery (Kosslyn et al., 1995, 1999). Anterior insula/frontal operculum (aI/fO) was also activated during both tasks, and superior temporal sulcus/Heschl's gyrus were activated during the imagery task (Fig. 2A), presumably owing to the presence of the auditory cue stimuli. Above-baseline activity was also observed within the precentral gyrus during the perceptual task, in line with the motor demands associated with the response to visual stimuli. Contrasting imagery and perception-related activity revealed greater activation of early visual cortex, including calcarine sulcus, during imagery relative to perception (supplemental Fig. 1A, available at www.jneurosci.org as supplemental material); and conversely, inferior/middle occipital gyri were activated more robustly during the perceptual task relative to imagery (supplemental Fig. 1B, available at www.jneurosci.org as supplemental material). Importantly, no differences were observed between the magnitude of activity associated with either stimulus type during the imagery task (Ximagery vs Oimagery) or the perceptual task (Xperception vs Operception).

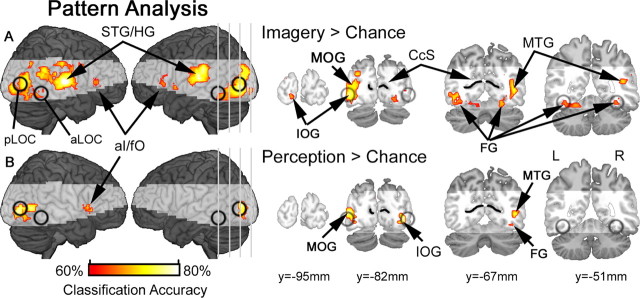

Figure 2.

Activation results for the conventional univariate analyses. A, During the imagery task, comparisons against baseline confirmed relative increases in activity distributed throughout visual cortex, including cuneus (Cu), lingual gyrus (LiG) and middle temporal gyrus (MTG). Event-related increases were also observed within superior temporal sulcus/Heschl's gyrus (STG/HG). B, During the perceptual task, a similar network of brain areas was also activated, with peaks in inferior occipital gyrus (IOG), middle occipital gyrus (MOG), middle temporal gyrus (MTG) and fusiform gyrus (FG). Beyond visual cortex, precentral gyrus (PrG) was also active during the perceptual task. All activation maps are corrected for multiple comparisons (pFDR < 0.05). Shaded brain areas were beyond the functional data acquisition field of view within at least one subject, and therefore not included in the group analyses. The calcarine sulcus (CcS) is indicated by the black line superimposed over coronal slices.

Imagery and perceptual coding in area lateral occipital complex

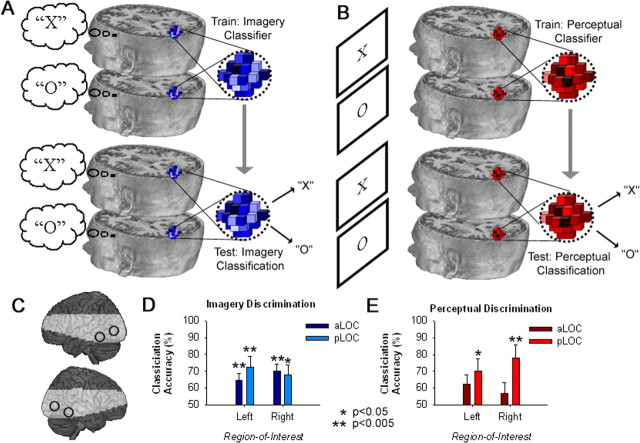

For the principal analyses, we examined patterns of neural activity using a train-test regime (schematized in Fig. 3A,B) to identify imagery (and perceptual) coding within anterior and posterior subregions of the lateral occipital complex (aLOC, pLOC) (Fig. 3C). These regions were derived from a previously published detailed analysis of category-specific processing areas within the ventral visual cortex (Spiridon et al., 2006). Coordinates from this study were averaged across left and right hemispheres for symmetry about the mid-sagittal plane, resulting in the following xyz coordinates in MNI space: ±37, −51, −14 (aLOC); ±45, −82, −2 (pLOC).

Figure 3.

A, B, Train-test neural classification was used to index population coding during visual imagery (A) and perception (B). C, Pattern analyses were performed in aLOC and pLOC of both hemispheres, shown here on a rendered brain surface. Shading indicates the regions beyond the functional data acquisition field of view. D, Differential population coding of the two alternative states of imagery was observed within all subregions of area LOC. E, During the perceptual task, there was a trend toward above-chance discrimination across all subregions of LOC; however, perceptual classification was only significantly above chance within the left and right pLOC. Error bars represent ± 1 SEM, calculated across participants.

Classification accuracy data for imagery and perception in area LOC are shown in Figure 3, D and E, respectively. During the imagery task, classification of imagery states was significantly above chance (50% classification accuracy) within all LOC subregions [aLOC left: t14 = 3.4, p = 0.004; aLOC right: t14 = 4.9, p < 0.001; pLOC left: t14 = 3.3, p = 0.005; pLOC right: t14 = 2.9, p = 0.012]. In the perception task, discrimination was only significantly above chance within the pLOC [left: t14 = 2.6, p = 0.021; right: t14 = 3.4, p = 0.004; for all comparisons against chance in aLOC, p > 0.052]. Classification accuracy data for both tasks within each of the ROIs were also analyzed using a three-way repeated-measures ANOVA, with factors for task (imagery, perception), ROI (aLOC, pLOC), and laterality (left, right). Although there were trends toward a main effect of laterality (p = 0.053), and an interaction between ROI and laterality (p = 0.066), no other terms approached significance (p > 0.126).
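The comparisons against chance reported above are one-sample t tests on per-participant accuracy scores, with 14 degrees of freedom for 15 participants. A minimal sketch of the statistic follows; the function name and the accuracy values are hypothetical and are not data from the study.

```python
import math
import numpy as np

def t_against_chance(accuracies, chance=50.0):
    """One-sample t statistic and degrees of freedom for accuracies versus chance."""
    acc = np.asarray(accuracies, dtype=float)
    n = acc.size
    sem = acc.std(ddof=1) / math.sqrt(n)  # standard error of the mean
    return (acc.mean() - chance) / sem, n - 1

# Hypothetical per-participant accuracies (percent correct), for illustration only.
demo = [50, 67, 67, 83, 50, 67, 83, 50, 67, 67, 33, 83, 67, 50, 67]
t_value, dof = t_against_chance(demo)
```

The p value would then be obtained from the t distribution with `dof` degrees of freedom, a step left out here to keep the sketch free of additional dependencies.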

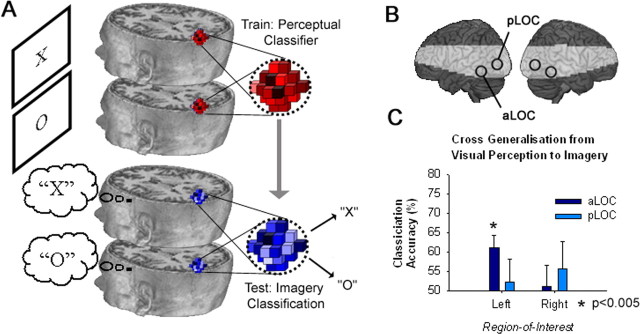

Cross-generalization: predicting imagery from a perceptual classifier in LOC

Accurate discrimination between patterns of visual activity associated with imagining the letters X and O provides evidence for differential population coding of imagery states within higher-level visual cortex. Moreover, the spatial overlap between coding for imagery and stimulus-driven perception in LOC is consistent with the hypothesis that imagery is mediated via top-down activation of the corresponding perceptual codes. However, the spatial coincidence of imagery and perceptual discrimination alone does not strictly require access to a common population code. It remains possible that imagery activates population codes in visual cortex that are functionally distinct from the visual codes activated in the same cortical areas during stimulus-driven perception. To test the relationship between population coding of imagery and stimulus-driven perception more directly, we next examined whether a perceptual classifier trained only on patterns of visual activity elicited by visual stimulation could predict the contents of visual imagery during the imagery task. Cross-generalization from visual perception to imagery should be possible only if both mechanisms access a common representation.

First, we trained a perceptual classifier to discriminate perceptual states from visual activity observed during the perceptual task, and then using the trained perceptual classifier, we sought to classify imagery states during the imagery task (Fig. 4A). Focusing this cross-generalization analysis on the predefined ROIs described above (Fig. 4B), we found that the neural classifier trained on perceptual data from the left aLOC could accurately discriminate between imagery states [t14 = 3.4, p = 0.004; for all other ROIs, p > 0.354 (Fig. 4C)]. Successful cross-generalization from perception to mental imagery confirms that the neural code underlying visual imagery shares significant similarity to the corresponding code for veridical perception.
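The cross-generalization logic can be sketched in the same correlation framework. The example below is a simplified illustration under assumptions of our own: a single X-minus-O pattern is taken from the perceptual task as the training vector, each imagery run supplies one test pattern, and noise normalization is omitted. The partitioning of training and test data, the function name, and the synthetic data are all hypothetical.

```python
import numpy as np

def cross_generalization_accuracy(perception_bias, imagery_bias):
    """Percent of imagery patterns that correlate positively with the perceptual pattern.

    perception_bias: (n_voxels,) X-minus-O training pattern from the perceptual task.
    imagery_bias: (n_runs, n_voxels) X-minus-O test patterns from the imagery task.
    """
    train = np.asarray(perception_bias, dtype=float)
    tests = np.atleast_2d(np.asarray(imagery_bias, dtype=float))
    # A positive across-voxel correlation counts as a correct classification.
    hits = [np.corrcoef(train, test)[0, 1] > 0 for test in tests]
    return 100.0 * float(np.mean(hits))

# Synthetic example in which both tasks express a common X-minus-O code.
rng = np.random.default_rng(2)
shared = rng.normal(size=90)                            # hypothetical shared code
perception_bias = shared + 0.2 * rng.normal(size=90)
imagery_bias = 0.5 * shared + 0.2 * rng.normal(size=(6, 90))
accuracy = cross_generalization_accuracy(perception_bias, imagery_bias)
```

If imagery instead produced a discriminable but unrelated pattern in the same voxels, within-task classification could succeed while this cross-task score stayed near 50%, which is the dissociation the analysis is designed to detect.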

Figure 4.

Cross-generalization MVPA reveals the coding similarity between visual imagery and perception. A, First, a perceptual classifier was trained to discriminate between patterns of visual activity associated with alternative perceptual conditions during the perceptual task. Second, the perceptual classifier was used to predict the imagery state associated with visual activity observed during the imagery task. B, Cross-generalization pattern analyses were performed in aLOC and pLOC of both hemispheres, shown here on a rendered brain surface with shading to indicate the regions beyond the functional data acquisition field of view. C, Accurate cross-generalization between perception and imagery confirms significant similarity between respective population coding in the left aLOC. Error bars represent ± 1 SEM, calculated across participants.

Imagery and perceptual coding in other cortical areas

So far, we have examined population coding only within predefined ROIs for high-level visual cortex. Next, we further explored our data using a searchlight MVPA procedure (Kriegeskorte et al., 2006, 2007; Haynes et al., 2007; Soon et al., 2008) to identify other cortical regions coding for alternative states of visual imagery and perception. Classification accuracy maps for each discrimination condition (imagining X vs imagining O; and seeing X vs seeing O) were assessed at the group level via a one-sample t test comparison against chance [50% classification accuracy; for other examples of random-effects second-level evaluation of MVPA searchlight data, see Haynes et al. (2007); Soon et al. (2008)]. Above-chance discrimination between imagined letters X and O was observed in activation patterns from visual cortical areas during the imagery task (Fig. 5A), with discriminative peaks observed within lingual gyrus, and extending anteriorly along inferior occipital gyrus, in and around pLOC, to the fusiform regions including aLOC. As expected from previous evidence of stimulus-driven activation of visual population codes (Haynes and Rees, 2005; Kamitani and Tong, 2005; Williams et al., 2007; Kay et al., 2008), training a perceptual classifier on visual activity during the perceptual task also resulted in above-chance prediction as to whether participants were viewing the letter X or O, with distinct discrimination peaks within inferior and middle occipital gyri (Fig. 5B).

Figure 5.

Population coding during visual imagery and perception. A, Searchlight MVPA revealed differential population coding of the two alternative states of imagery within the high-level visual areas, including inferior occipital gyrus (IOG), middle occipital gyrus (MOG), fusiform gyrus (FG) and middle temporal gyrus (MTG). Searchlight analyses also identified discriminative clusters in superior temporal gyrus/Heschl's gyrus (STG/HG), and anterior insula/frontal operculum (aI/fO). B, Searchlight MVPA of visual perception revealed a similar network of discriminative clusters, with the exception of STG/HG. Classification accuracies are shown for voxels that survived correction for multiple comparisons (FDR <5%), and shading indicates brain areas that were beyond the functional data acquisition field of view within at least one subject, and therefore not included in the group analyses. For reference, anterior lateral occipital complex (aLOC), posterior lateral occipital complex (pLOC) and calcarine sulcus (CcS) are superimposed in black.

Overall, imagery discrimination was more distributed throughout visual cortex relative to perceptual discrimination. In particular, above-chance imagery classifications were observed throughout higher levels of the visual processing hierarchy, including anterior portions of inferior occipital gyrus and fusiform cortex corresponding to aLOC. Nevertheless, despite these trends, there were no significant differences between imagery and perceptual classification results, and even at a more liberal statistical threshold (p < 0.01, uncorrected) there was only a trend toward greater discrimination between imagery states relative to perception within left superior occipital gyrus and middle occipital gyrus (supplemental Fig. 2, available at www.jneurosci.org as supplemental material). For completeness, we also explored our principal searchlight results at a less stringent statistical threshold (p < 0.01, uncorrected). These exploratory tests recapitulated the general pattern of above-chance classifications presented in Figure 5, with additional hints toward accurate imagery classification in earlier visual cortex, including calcarine sulcus (supplemental Fig. 3A, available at www.jneurosci.org as supplemental material), and trends toward perceptual classification in higher visual areas, including more anterior occipital cortex corresponding to aLOC (supplemental Fig. 3B, available at www.jneurosci.org as supplemental material). Finally, searchlight analysis of cross-generalization classifications did not reveal any discriminative clusters that survived correction for multiple comparisons; however, trends toward accurate imagery discrimination from a perceptual classifier were observed in inferior/middle occipital gyrus (supplemental Fig. 3C, available at www.jneurosci.org as supplemental material).

Beyond visual cortex, above-chance classification accuracies were also observed within the aI/fO during both the imagery and perceptual tasks. Exclusive to the imagery task, and in line with our use of two distinct auditory tones to cue the generation of visual images, MVPA of superior aspects of the temporal lobe, corresponding to primary auditory cortex (Rademacher et al., 2001), also discriminated between the two imagery conditions. To examine imagery and perceptual coding more explicitly beyond the visual cortex, but within the field of view of our relatively limited functional acquisition, we also performed ROI analyses centered over previously defined aI/fO [±36, 18, 1, in Duncan and Owen (2000)]. Classification performance was above chance for both imagery and perception within the right aI/fO (imagery: t14 = 3.0, p = 0.010; perception: t14 = 3.2, p = 0.006), but not in the corresponding regions of the left hemisphere (p > 0.240). However, despite the trend toward more accurate classification within the right, relative to left aI/fO, an ANOVA (factors for task: imagery, perception; and laterality: left, right) did not reveal any significant terms (p > 0.142).

Discussion

These data demonstrate that visual imagery activates content-specific neural representations in the same regions of visual cortex that code the corresponding states of stimulus-driven perception, including LOC. Moreover, using a cross-generalization approach to formally compare differential neural patterns associated with perception and imagery, we further demonstrate that top-down and stimulus-driven mechanisms activate shared neural representations within high-level visual cortex.

The visual coding properties observed in this study are consistent with previous evidence implicating mental imagery as a special case of perception in the absence of the corresponding sensory input (for review, see Kosslyn et al., 2001). Previous neuroimaging evidence reveals how mental imagery activates topographically appropriate regions of perceptual cortex. At a global level, visual, auditory and somatosensory imagery selectively activate respective visual (Kosslyn et al., 1995), auditory (Zatorre et al., 1996) and somatosensory (Yoo et al., 2003) cortical areas. Within the visual modality, imagery at distinct spatial locations has been shown to activate the corresponding areas of retinotopically specific cortex (Kosslyn et al., 1995; Slotnick et al., 2005; Thirion et al., 2006). Finally, beyond retinotopically organized earlier visual cortex, content-specificity has also been observed for category-selective areas of higher-level extrastriate cortex (Ishai et al., 2000; O'Craven and Kanwisher, 2000; Mechelli et al., 2004). However, to date, no studies have examined imagery-driven activation of overlapping neural populations. Consequently, the pattern-analytic methods used here extend previous imagery studies to show that top-down mechanisms engaged during mental imagery are capable of accessing highly specific population codes distributed across functionally distinct, yet spatially overlapping, neural populations in higher-level visual cortex.

The degree of neural coordination required for detailed mental imagery presents a major computational challenge, and exemplifies the wider issue of how top-down control mechanisms can access precise representations within distant cortical areas. Indeed, if top-down control signals only required selectivity between anatomically distinct neural populations, functional connectivity could be mediated via relatively coarse-grained anatomical pathways linking distinct neural structures. However, the problem of selective access to overlapping representations presents a far greater connective challenge. This problem is further compounded if coding units within control areas are flexible, adaptively representing different parameters depending on task-demands (Duncan, 2001). The results of the current study provide evidence that the brain can indeed meet this challenge, accessing specific population codes in perceptual cortex during visual imagery. The goal for future research will be to reveal the precise mechanisms underlying top-down control over spatially overlapping visual representations.

The critical role of extra-striate cortex, including area LOC, in visual shape perception is uncontroversial (Reddy and Kanwisher, 2006). However, increasing evidence for interactions between the various sensory modalities challenges the notion of purely unimodal perceptual cortex (Driver and Noesselt, 2008). For example, area LOC has been shown to integrate visual and tactile information during shape perception (Amedi et al., 2001). In the current study, auditory tones were used to manipulate the contents of visual imagery. As a likely consequence of our choice of cue stimuli, pattern analysis of the event-related activity within auditory cortex discriminated between the two imagery conditions. However, if auditory stimuli could also drive tone-specific activation of visual cortex, then above-chance classification of imagery states from a neural classifier trained on the cue-related response in visual cortex could, in principle, be driven by stimulus-driven cross-modal effects. Although we are unaware of any evidence for tone-specific coding in area LOC, the possibility that a small subset of auditory neurons exists within this highly visually responsive brain area cannot be ruled out. Nevertheless, even if auditory coding were robustly manifest within area LOC, our cross-generalization results argue against a simple stimulus-driven cross-modal account. Above-chance discrimination of imagery states from a classifier trained only on perceptual data from LOC implies that there were significant similarities in the response patterns observed during imagery and visual perception. This degree of similarity would not be expected from a cross-modal response driven purely by the auditory properties of the imagery cues. In this respect, the cross-generalization results extend beyond simple neural decoding, which could be based on any difference between the two imagery conditions, to provide a direct link between the differential patterns observed during imagery and visual perception.
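The logic of this argument can be illustrated with a minimal simulation. The sketch below is not the analysis pipeline used in this study: it substitutes a simple correlation-based nearest-centroid classifier, and all data are synthetic. The voxel count, trial count, gain, and noise level are arbitrary assumptions, as are the function names. "Imagery" patterns are simulated as weaker copies of the perceptual letter patterns, whereas hypothetical "cue-only" patterns differ reliably between conditions but are constructed to be orthogonal to the letter patterns.

```python
import numpy as np

def fit_centroids(patterns, labels):
    """Training step: one mean voxel pattern (centroid) per class."""
    classes = np.unique(labels)
    centroids = np.stack([patterns[labels == c].mean(axis=0) for c in classes])
    return classes, centroids

def predict(patterns, classes, centroids):
    """Assign each trial to the class whose centroid it correlates with best."""
    # Center and scale across voxels so the dot product equals Pearson r.
    p = patterns - patterns.mean(axis=1, keepdims=True)
    c = centroids - centroids.mean(axis=1, keepdims=True)
    p /= np.linalg.norm(p, axis=1, keepdims=True)
    c /= np.linalg.norm(c, axis=1, keepdims=True)
    return classes[np.argmax(p @ c.T, axis=1)]

def simulate(templates, gain, n_trials, noise_sd, rng):
    """Synthetic trials: scaled class template plus independent voxel noise."""
    labels = np.repeat(np.arange(len(templates)), n_trials)
    noise = rng.normal(0.0, noise_sd, size=(labels.size, templates.shape[1]))
    return gain * templates[labels] + noise, labels

rng = np.random.default_rng(0)
n_voxels, n_trials = 200, 100          # arbitrary, assumed values

# Hypothetical letter-specific codes within one overlapping voxel population.
letter_templates = rng.normal(0.0, 1.0, size=(2, n_voxels))

# Hypothetical cue-specific codes, made orthogonal to the letter codes.
cue_templates = rng.normal(0.0, 1.0, size=(2, n_voxels))
q, _ = np.linalg.qr(letter_templates.T)
cue_templates -= (cue_templates @ q) @ q.T

perc_X, perc_y = simulate(letter_templates, 1.0, n_trials, 1.0, rng)
imag_X, imag_y = simulate(letter_templates, 0.5, n_trials, 1.0, rng)
cue_X, cue_y = simulate(cue_templates, 0.5, n_trials, 1.0, rng)

# Cross-generalization: train on perception only, test on the other data sets.
classes, centroids = fit_centroids(perc_X, perc_y)
cross_acc = np.mean(predict(imag_X, classes, centroids) == imag_y)
cue_cross_acc = np.mean(predict(cue_X, classes, centroids) == cue_y)

# Simple decoding: train and test within the cue-only data (even/odd split).
cue_classes, cue_centroids = fit_centroids(cue_X[::2], cue_y[::2])
within_cue_acc = np.mean(predict(cue_X[1::2], cue_classes, cue_centroids) == cue_y[1::2])

print(f"perception -> imagery:  {cross_acc:.2f}")
print(f"perception -> cue-only: {cue_cross_acc:.2f}")
print(f"cue-only within-set:    {within_cue_acc:.2f}")
```

Under these assumptions, the cue-only patterns are readily discriminated when the classifier is trained and tested within that data set, yet a classifier trained on perceptual data should perform near chance on them. Only patterns that share structure with the perceptual codes should support generalization from perception.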

Our results also provide a more general insight into the precision of top-down mechanisms in the cerebral cortex. Although the precision of top-down control is a fundamental neurophysiological parameter in models of interregional interaction and integration, the evidence for top-down modulation of posterior brain areas is generally limited to topographical specificity. For example, while fMRI studies reveal top-down activation of functionally distinct, and content-specific, cortical regions in the absence of corresponding visual input during attentional control (Chawla et al., 1999; Ress et al., 2000; Giesbrecht et al., 2006; Slagter et al., 2007), evidence for top-down access to overlapping perceptual codes is less clear. In recent years, MVPA has been used to demonstrate that selective attention can modulate functionally specific neural codes elicited during on-going visual stimulation (Kamitani and Tong, 2005, 2006). Even more recently, Serences and Boynton (2007) examined activation patterns associated with attending to either one of two directions of motion within dual-surface random dot displays presented either bilaterally or unilaterally. They found that the direction of attended motion could be decoded from activation patterns within both left and right visual cortex, even when the perceptual input was only presented to one visual field (Serences and Boynton, 2007). Finally, recent evidence also demonstrates that emotion-specific information contained within human body stimuli modulates specific subpopulations within body-selective visual cortex (Peelen et al., 2007), which may also arise from top-down control mechanisms. Using visual imagery, we provide evidence for template-specific population coding in visual cortex that cannot be explained by any differential input to the visual system. These results indicate that the activation patterns observed in high-level visual cortex during visual imagery resemble those elicited during veridical perception; however, further research is required to investigate whether the same level of specificity can be extended to other top-down mechanisms, such as goal-directed attention.

Although representations in the visual cortex were the primary focus of this experiment, we also identified differential activity patterns in prefrontal/insula cortex associated with the alternative imagery conditions (bilateral aI/fO) and the alternative perceptual conditions (right aI/fO). Differential coding of the two imagery conditions within this anterior brain area is consistent with previous evidence implicating the prefrontal cortex as a source of top-down imagery control (Ishai et al., 2000; Mechelli et al., 2004). More generally, convergent evidence also suggests that this region is involved in a broad range of higher-level cognitive functions (Duncan and Owen, 2000), and that prefrontal neurons adapt their response profiles to represent behaviorally relevant information for goal-directed action (Duncan, 2001). Although no previous studies have explicitly analyzed prefrontal activation patterns during imagery, the population response from related prefrontal areas codes different sorts of task-relevant information, including learned stimulus categories (Li et al., 2007) and behavioral intentions (Haynes et al., 2007). In our experiment, accurate discrimination between the alternative perceptual states within prefrontal cortex probably reflects differential coding of the visually presented letter stimuli, whereas prefrontal discrimination during the imagery task could reflect a range of possible task-relevant coding parameters, from representation of the auditory cue to differential states of conscious recognition following imagery generation within visual cortex. One intriguing possibility is that these differential codes might be involved in generating top-down control over visual cortex. Nevertheless, future research is needed to determine the precise role of differential imagery coding within aI/fO, and whether other prefrontal areas generate the content-specific control signals required for the precise activation of selective perceptual codes in visual cortex observed in this study.

In summary, the results of this investigation demonstrate that top-down control mechanisms engaged during visual imagery are able to activate precise neural populations within high-level visual cortex. Our cross-generalization approach further verifies that these top-down codes share significant similarity with the visual codes accessed during stimulus-driven perception.

Footnotes

This work was supported by the Medical Research Council (UK) intramural program U.1055.01.001.00001.01. We thank C. Chambers, A. Nobre, and our two anonymous reviewers for valuable comments and suggestions.

References

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci. 2001;4:324–330. doi: 10.1038/85201.

- Baars BJ, Franklin S. How conscious experience and working memory interact. Trends Cogn Sci. 2003;7:166–172. doi: 10.1016/s1364-6613(03)00056-1.

- Chawla D, Rees G, Friston KJ. The physiological basis of attentional modulation in extrastriate visual areas. Nat Neurosci. 1999;2:671–676. doi: 10.1038/10230.

- Dehaene S, Kerszberg M, Changeux JP. A neuronal model of a global workspace in effortful cognitive tasks. Proc Natl Acad Sci U S A. 1998;95:14529–14534. doi: 10.1073/pnas.95.24.14529.

- Desimone R, Duncan J. Neural mechanisms of selective visual attention. Annu Rev Neurosci. 1995;18:193–222. doi: 10.1146/annurev.ne.18.030195.001205.

- Driver J, Noesselt T. Multisensory interplay reveals crossmodal influences on ‘sensory-specific’ brain regions, neural responses, and judgments. Neuron. 2008;57:11–23. doi: 10.1016/j.neuron.2007.12.013.

- Duncan J. An adaptive coding model of neural function in prefrontal cortex. Nat Rev Neurosci. 2001;2:820–829. doi: 10.1038/35097575.

- Duncan J, Owen AM. Common regions of the human frontal lobe recruited by diverse cognitive demands. Trends Neurosci. 2000;23:475–483. doi: 10.1016/s0166-2236(00)01633-7.

- Giesbrecht B, Weissman DH, Woldorff MG, Mangun GR. Pre-target activity in visual cortex predicts behavioral performance on spatial and feature attention tasks. Brain Res. 2006;1080:63–72. doi: 10.1016/j.brainres.2005.09.068.

- Haxby JV, Gobbini MI, Furey ML, Ishai A, Schouten JL, Pietrini P. Distributed and overlapping representations of faces and objects in ventral temporal cortex. Science. 2001;293:2425–2430. doi: 10.1126/science.1063736.

- Haynes JD, Rees G. Predicting the orientation of invisible stimuli from activity in human primary visual cortex. Nat Neurosci. 2005;8:686–691. doi: 10.1038/nn1445.

- Haynes JD, Rees G. Decoding mental states from brain activity in humans. Nat Rev Neurosci. 2006;7:523–534. doi: 10.1038/nrn1931.

- Haynes JD, Sakai K, Rees G, Gilbert S, Frith C, Passingham RE. Reading hidden intentions in the human brain. Curr Biol. 2007;17:323–328. doi: 10.1016/j.cub.2006.11.072.

- Ishai A, Ungerleider LG, Haxby JV. Distributed neural systems for the generation of visual images. Neuron. 2000;28:979–990. doi: 10.1016/s0896-6273(00)00168-9.

- Kamitani Y, Tong F. Decoding the visual and subjective contents of the human brain. Nat Neurosci. 2005;8:679–685. doi: 10.1038/nn1444.

- Kamitani Y, Tong F. Decoding seen and attended motion directions from activity in the human visual cortex. Curr Biol. 2006;16:1096–1102. doi: 10.1016/j.cub.2006.04.003.

- Kay KN, Naselaris T, Prenger RJ, Gallant JL. Identifying natural images from human brain activity. Nature. 2008;452:352–355. doi: 10.1038/nature06713.

- Kosslyn SM, Thompson WL, Kim IJ, Alpert NM. Topographical representations of mental images in primary visual cortex. Nature. 1995;378:496–498. doi: 10.1038/378496a0.

- Kosslyn SM, Pascual-Leone A, Felician O, Camposano S, Keenan JP, Thompson WL, Ganis G, Sukel KE, Alpert NM. The role of area 17 in visual imagery: convergent evidence from PET and rTMS. Science. 1999;284:167–170. doi: 10.1126/science.284.5411.167.

- Kosslyn SM, Ganis G, Thompson WL. Neural foundations of imagery. Nat Rev Neurosci. 2001;2:635–642. doi: 10.1038/35090055.

- Kriegeskorte N, Goebel R, Bandettini P. Information-based functional brain mapping. Proc Natl Acad Sci U S A. 2006;103:3863–3868. doi: 10.1073/pnas.0600244103.

- Kriegeskorte N, Formisano E, Sorger B, Goebel R. Individual faces elicit distinct response patterns in human anterior temporal cortex. Proc Natl Acad Sci U S A. 2007;104:20600–20605. doi: 10.1073/pnas.0705654104.

- Li S, Ostwald D, Giese M, Kourtzi Z. Flexible coding for categorical decisions in the human brain. J Neurosci. 2007;27:12321–12330. doi: 10.1523/JNEUROSCI.3795-07.2007.

- Mechelli A, Price CJ, Friston KJ, Ishai A. Where bottom-up meets top-down: neuronal interactions during perception and imagery. Cereb Cortex. 2004;14:1256–1265. doi: 10.1093/cercor/bhh087.

- Miller BT, D'Esposito M. Searching for “the top” in top-down control. Neuron. 2005;48:535–538. doi: 10.1016/j.neuron.2005.11.002.

- Miller EK, Cohen JD. An integrative theory of prefrontal cortex function. Annu Rev Neurosci. 2001;24:167–202. doi: 10.1146/annurev.neuro.24.1.167.

- Norman KA, Polyn SM, Detre GJ, Haxby JV. Beyond mind-reading: multi-voxel pattern analysis of fMRI data. Trends Cogn Sci. 2006;10:424–430. doi: 10.1016/j.tics.2006.07.005.

- O'Craven KM, Kanwisher N. Mental imagery of faces and places activates corresponding stimulus-specific brain regions. J Cogn Neurosci. 2000;12:1013–1023. doi: 10.1162/08989290051137549.

- Peelen MV, Atkinson AP, Andersson F, Vuilleumier P. Emotional modulation of body-selective visual areas. Soc Cogn Affect Neurosci. 2007;2:274–283. doi: 10.1093/scan/nsm023.

- Rademacher J, Morosan P, Schormann T, Schleicher A, Werner C, Freund HJ, Zilles K. Probabilistic mapping and volume measurement of human primary auditory cortex. Neuroimage. 2001;13:669–683. doi: 10.1006/nimg.2000.0714.

- Ranganath C, D'Esposito M. Directing the mind's eye: prefrontal, inferior and medial temporal mechanisms for visual working memory. Curr Opin Neurobiol. 2005;15:175–182. doi: 10.1016/j.conb.2005.03.017.

- Reddy L, Kanwisher N. Coding of visual objects in the ventral stream. Curr Opin Neurobiol. 2006;16:408–414. doi: 10.1016/j.conb.2006.06.004.

- Ress D, Backus BT, Heeger DJ. Activity in primary visual cortex predicts performance in a visual detection task. Nat Neurosci. 2000;3:940–945. doi: 10.1038/78856.

- Serences JT, Boynton GM. Feature-based attentional modulations in the absence of direct visual stimulation. Neuron. 2007;55:301–312. doi: 10.1016/j.neuron.2007.06.015.

- Slagter HA, Giesbrecht B, Kok A, Weissman DH, Kenemans JL, Woldorff MG, Mangun GR. fMRI evidence for both generalized and specialized components of attentional control. Brain Res. 2007;1177:90–102. doi: 10.1016/j.brainres.2007.07.097.

- Slotnick SD, Thompson WL, Kosslyn SM. Visual mental imagery induces retinotopically organized activation of early visual areas. Cereb Cortex. 2005;15:1570–1583. doi: 10.1093/cercor/bhi035.

- Soon CS, Brass M, Heinze HJ, Haynes JD. Unconscious determinants of free decisions in the human brain. Nat Neurosci. 2008;11:543–545. doi: 10.1038/nn.2112.

- Spiridon M, Fischl B, Kanwisher N. Location and spatial profile of category-specific regions in human extrastriate cortex. Hum Brain Mapp. 2006;27:77–89. doi: 10.1002/hbm.20169.

- Thirion B, Duchesnay E, Hubbard E, Dubois J, Poline JB, Lebihan D, Dehaene S. Inverse retinotopy: inferring the visual content of images from brain activation patterns. Neuroimage. 2006;33:1104–1116. doi: 10.1016/j.neuroimage.2006.06.062.

- Williams MA, Dang S, Kanwisher NG. Only some spatial patterns of fMRI response are read out in task performance. Nat Neurosci. 2007;10:685–686. doi: 10.1038/nn1900.

- Yoo SS, Freeman DK, McCarthy JJ, 3rd, Jolesz FA. Neural substrates of tactile imagery: a functional MRI study. Neuroreport. 2003;14:581–585. doi: 10.1097/00001756-200303240-00011.

- Zatorre RJ, Halpern AR, Perry DW, Meyer E, Evans AC. Hearing in the mind's ear: a PET investigation of musical imagery and perception. J Cogn Neurosci. 1996;8:29–46. doi: 10.1162/jocn.1996.8.1.29.