Abstract

Observing and learning actions and behaviors from others, a mechanism crucial for survival and social interaction, engages the mirror neuron system. To determine whether vision is a necessary prerequisite for the human mirror system to develop and function, we used functional magnetic resonance imaging to compare brain activity in congenitally blind individuals during the auditory presentation of hand-executed actions or environmental sounds, and the motor pantomime of manipulation tasks, with that in sighted volunteers, who additionally performed a visual action recognition task. Congenitally blind individuals activated a premotor–temporoparietal cortical network in response to aurally presented actions that overlapped both with mirror system areas found in sighted subjects in response to visually and aurally presented stimuli, and with the brain response elicited by motor pantomime of the same actions. Furthermore, the mirror system cortex showed a significantly greater response to motor familiar than to unfamiliar action sounds in both sighted and blind individuals. Thus, the mirror system in humans can develop in the absence of sight. The results in blind individuals demonstrate that the sound of an action engages the mirror system for action schemas that have not been learned through the visual modality and that this activity is not mediated by visual imagery. These findings indicate that the mirror system is based on supramodal sensory representations of actions and, furthermore, that these abstract representations allow individuals with no visual experience to interact effectively with others.

Introduction

In our daily life, we learn novel behaviors from others by observing their actions and understanding their intentions. A particular class of neurons, discovered in the monkey premotor and parietal cortex, discharges both when the monkey performs a goal-directed action and when the animal observes another individual performing the same action. These neurons have been named “mirror neurons” (Gallese et al., 1996; Rizzolatti et al., 1996; Rizzolatti and Fadiga, 1998; Rizzolatti and Craighero, 2004).

A similar “mirror” system has been identified in humans, and is thought to play a major role not only in action and intention understanding, but also in learning by imitation, empathy, and language development (Iacoboni et al., 1999; Carr et al., 2003; Buccino et al., 2004a,b; Rizzolatti and Craighero, 2004; Rizzolatti, 2005; Fabbri-Destro and Rizzolatti, 2008; Rizzolatti and Fabbri-Destro, 2008; Rizzolatti and Sinigaglia, 2008).

Functional brain studies showed that the human mirror system responds similarly to the primate mirror neuron system, and relies on an inferior frontal, premotor, and inferior parietal cortical network (Buccino et al., 2004a,b; Gallese et al., 2004; Dapretto et al., 2006; Chong et al., 2008; Gazzola and Keysers, 2009). Furthermore, this mirror system is more activated when subjects observe movements for which they have developed a specific competence (Calvo-Merino et al., 2005, 2006; Cross et al., 2006) or when they listen to rehearsed musical pieces compared with music they had never played before (Lahav et al., 2007).

Though humans rely greatly on vision, individuals who have lacked sight since birth still retain the ability to learn actions and behaviors from others. To what extent is this ability dependent on visual experience? Is the human mirror system capable of interpreting nonvisual information to acquire knowledge about others?

The mirror system is also recruited when individuals receive sufficient clues to understand the meaning of the occurring action with no access to visual features, such as when they only listen to the sound of actions (Kohler et al., 2002; Keysers et al., 2003; Lewis et al., 2005; Gazzola et al., 2006; Lahav et al., 2007) or to action-related sentences (Baumgaertner et al., 2007; Galati et al., 2008). In addition, neural activity in the mirror system while listening to action sounds is sufficient to discriminate which of two actions another individual has performed (Keysers et al., 2003; Etzel et al., 2008). Thus, while these findings suggest that the mirror system may also be activated by hearing, they do not rule out that its recruitment may be the consequence of a sound-elicited mental representation of actions through visually based motor imagery (Decety and Grèzes, 1999; Grèzes and Decety, 2001).

Here we used functional magnetic resonance imaging (fMRI) to address the role of visual experience in the functional development of the human mirror system. Specifically, we determined whether an efficient mirror system also develops in individuals who have never had any visual experience. We hypothesized that the mirror areas that process visually perceived information about others' actions and intentions are also capable of processing the same information acquired through nonvisual sensory modalities, such as hearing. Additionally, we hypothesized that individuals would show a stronger response to those action sounds that are part of their motor repertoire.

Materials and Methods

We used an fMRI sparse sampling six-run block design to examine neural activity in blind and sighted healthy volunteers while they alternated between auditory presentation of hand-executed actions (e.g., cutting paper with scissors) or environmental sounds (e.g., rainstorm), and execution of a “virtual” tool or object manipulation task (motor pantomime). In the sighted subject group, three additional time series were acquired during a visual version of an identical task of motor pantomime alternating with the presentation of action or environmental movies.

Subjects.

Eight blind [six female (F), mean age ± SD: 44 ± 16 years]—seven with congenital blindness and one who became blind at age 2 years due to congenital glaucoma and had no recollection of any visual experience—and 14 sighted (five F, 32 ± 13 years; n.s.) right-handed healthy subjects were recruited for the study. Causes of blindness were as follows: congenital glaucoma (n = 5), retinopathy of prematurity (n = 1), and congenital optic nerve atrophy (n = 2). All sighted and blind subjects received a medical examination, including routine blood tests and a brain structural MRI scan to exclude any disorder that could affect brain function and metabolism other than blindness in the blind group. All subjects gave their written informed consent after the study procedures and risks involved had been explained. The study was conducted under a protocol approved by the University of Pisa Ethical Committee.

Auditory stimuli.

Twenty action and 10 environmental sound samples [44.1 kHz, 16 bit quantization, stereo, Free Sound Project, average mean square power and duration normalized (supplemental Table S1, available at www.jneurosci.org as supplemental material)] were presented by an MR-compatible pneumatic headphone system (PureSound Audio System Wardray Premise). Speech commands for the motor pantomime task were digitally recorded names of objects/tools to be virtually handled, and a beep sound after 10 s signaled the subject to stop executing the action.

Upon completion of the scanning session, individuals were asked to identify the sounds they had heard, and then to score each item according to how competent they were in performing that action (1 = poor competence; 4 = high competence) and how often they would perform it (1 = rarely; 4 = very often). Competence and frequency scores were scaled for each subject by dividing each item score by the averaged response, and then multiplying by the individual SD. These scaled scores were summed across subjects separately for each of the two groups to provide a motor familiarity score for each action sound (supplemental Table S1, available at www.jneurosci.org as supplemental material). The 20 action sounds were ranked according to their motor familiarity score: the top 10 were classified as familiar, and the remaining 10 as unfamiliar action sounds (supplemental Table S1, available at www.jneurosci.org as supplemental material).
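The scoring and ranking procedure described above can be sketched as follows. This is only an illustration that follows the wording of the text literally (divide by the subject's mean, multiply by the subject's SD, sum across subjects, rank). The function names, the use of the sample SD, the addition of competence and frequency into a single total, and the toy ratings are all assumptions, not details given in the paper.

```python
from statistics import mean, stdev


def scale_scores(scores):
    """Scale one subject's item scores as described in the text:
    divide each score by the subject's mean, then multiply by the subject's SD.
    Sample SD is an assumption; the text does not say which SD was used."""
    subject_mean = mean(scores)
    subject_sd = stdev(scores)
    return [s / subject_mean * subject_sd for s in scores]


def familiarity_split(ratings, n_familiar):
    """ratings: {subject_id: {"competence": [...], "frequency": [...]}},
    with every list aligned to the same item order.
    Returns (familiar_item_indices, unfamiliar_item_indices)."""
    n_items = len(next(iter(ratings.values()))["competence"])
    totals = [0.0] * n_items
    for subject in ratings.values():
        # Assumption: competence and frequency contribute to one summed score.
        for key in ("competence", "frequency"):
            for item, value in enumerate(scale_scores(subject[key])):
                totals[item] += value
    ranked = sorted(range(n_items), key=lambda i: totals[i], reverse=True)
    return ranked[:n_familiar], ranked[n_familiar:]


# Toy data: two hypothetical subjects rating four hypothetical action sounds.
toy_ratings = {
    "s01": {"competence": [4, 3, 2, 1], "frequency": [4, 3, 1, 2]},
    "s02": {"competence": [4, 4, 1, 1], "frequency": [3, 4, 2, 1]},
}
familiar, unfamiliar = familiarity_split(toy_ratings, n_familiar=2)
```

In the study the split would be computed separately for each group over the 20 action sounds, with `n_familiar=10`.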

Visual stimuli.

Movies were presented on a rear projection screen viewed through a mirror (visual field: 25° wide and 20° high). Motor commands were triggered by words.

Image acquisition and experimental task.

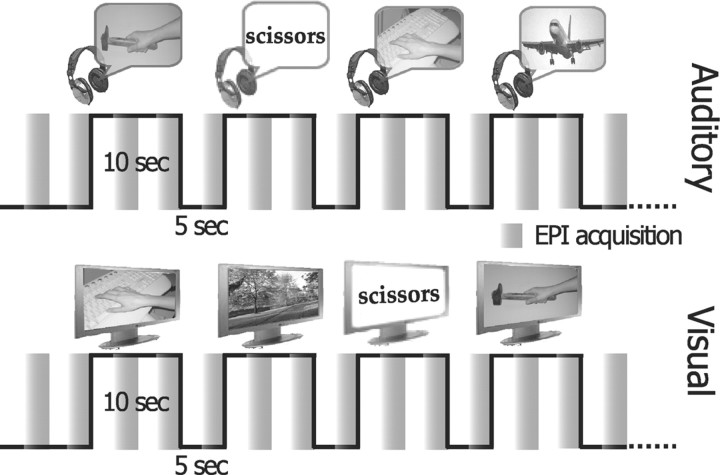

Gradient echo echoplanar (GRE-EPI) images were acquired with a 1.5 tesla scanner (Signa General Electric). A scan cycle (repetition time = 5000 ms) was composed of 21 axial slices (5 mm thickness, field of view = 24 cm, echo time = 40 ms, flip angle = 90°, image in-plane resolution = 128 × 128 pixels) collected in 2500 ms followed by a silent gap of 2500 ms (sparse sampling). We obtained six time series of 65 brain volumes (325 s) while each subject listened to sounds, and three time series while the sighted volunteers only looked at movies (Fig. 1). Stimuli were randomly presented with an interstimulus interval of 5 s. The period of silence between successive scans allowed the stimulus perception to be in part uncontaminated by GRE-EPI noise. Each time series began and ended with 15 s of no stimuli. Before the fMRI scanning, subjects underwent a training session to become familiar with the motor pantomimes and the task procedure.
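The timing of the sparse sampling scheme follows from the figures above: each volume takes 2500 ms to acquire and is followed by a 2500 ms silent gap, so one cycle lasts 5 s and 65 volumes span 325 s. A small sketch of that arithmetic is given below; the function and variable names are illustrative only.

```python
# Timing values taken from the acquisition description; names are illustrative.
ACQUISITION_S = 2.5  # time to collect the 21 slices of one volume
SILENT_GAP_S = 2.5   # scanner-silent interval after each volume
N_VOLUMES = 65       # volumes per time series


def sparse_schedule(n_volumes, acquisition_s, silent_gap_s):
    """Return (scan_start, scan_end, cycle_end) in seconds for every volume."""
    cycle_s = acquisition_s + silent_gap_s
    schedule = []
    for i in range(n_volumes):
        start = i * cycle_s
        schedule.append((start, start + acquisition_s, start + cycle_s))
    return schedule


schedule = sparse_schedule(N_VOLUMES, ACQUISITION_S, SILENT_GAP_S)
run_duration_s = schedule[-1][2]  # end of the last silent gap
```

Each tuple marks when the scanner is acquiring (first two values) and when the following silent gap ends (third value).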

Figure 1.

fMRI experimental paradigm—an fMRI sparse sampling six-run block design was used to examine neural activity in congenitally blind and sighted right-handed healthy volunteers, while they alternated between the random presentation of hand-executed action or environmental sounds/movies, and the motor pantomime of a “virtual” tool or object manipulation task (supplemental Methods, available at www.jneurosci.org as supplemental material).

During the auditory scanning sessions, volunteers were asked to keep their eyes closed, to listen to and recognize sounds (frequent stimuli), and occasionally to execute the motor pantomimes when prompted by a human voice command naming a specific tool (target). During the visual sessions, volunteers were asked to look at movies, and to execute the motor pantomimes when prompted by words. Sensory modality (auditory or visual) was constant for each time series, but auditory and visual runs were presented in randomized order across sighted subjects. An operator inside the MR scanner room checked for the accurate motor performance of the task. Fifteen stimuli were presented in each time series (equally distributed across stimulus classes) and randomly intermixed with five target pantomime commands. Stimulus presentation was handled by using the software package Presentation.

High-resolution T1-weighted spoiled gradient recall images were obtained for each subject to provide detailed brain anatomy.

Image analysis.

We used the AFNI and SUMA package and related software plugins to analyze and view functional imaging data—http://afni.nimh.nih.gov/afni (Cox, 1996). All volumes from the different runs were concatenated and coregistered (3dvolreg program), temporally aligned (3dTshift), and spatially smoothed (isotropic Gaussian filter, σ = 2.5 mm). Individual run data were normalized by calculating the mean intensity value for each voxel, and by dividing the value within each voxel by its mean to estimate the percentage signal change at each time point.
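The normalization step can be illustrated for a single voxel as below. This is a minimal sketch, not the AFNI procedure itself: the function name is hypothetical, and expressing the result as a deviation from the mean (so that the mean maps to 0%) is an assumption about the convention used.

```python
from statistics import mean


def percent_signal_change(timeseries):
    """Convert one voxel's raw intensities to percent change from its run mean."""
    baseline = mean(timeseries)
    if baseline == 0:
        raise ValueError("voxel mean is zero; percent change is undefined")
    # Dividing by the voxel mean expresses every time point relative to baseline.
    return [100.0 * (value - baseline) / baseline for value in timeseries]


# Toy voxel time series (arbitrary scanner units).
example_psc = percent_signal_change([100.0, 102.0, 98.0])
```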

Statistical analysis was performed using deconvolution to identify regions significantly involved in the perception of auditory and visual stimuli, and in the motor execution of virtual gestures. Due to the sparse sampling block design, the mean response to each stimulus type was modeled with a separate regressor to shape the response in each of the 10 s periods of stimulus presentation followed by the 5 s period of silence. We used four types of auditory stimuli (familiar and unfamiliar actions, environmental sounds, and spoken words for the motor pantomime) and three types of visual stimuli (action and environmental movies, and written words for motor pantomime). Modeling each type of stimulus presentation resulted in seven regressors of interest for the sighted subjects (auditory and visual) and in four regressors of interest for the blind subjects (auditory only). Individual time points relative to those sounds that had been mislabeled or not recognized during the postscanning identification session were censored, and thus not included in the analysis. In the deconvolution analysis (3dDeconvolve program), each regressor of interest consists of a series of delta functions, resulting in an estimate of the response to a single stimulus with no assumptions about the shape of the hemodynamic response for each of the five volumes (20 s window with a TR of 5000 ms) after stimulus onset. The six movement parameters derived from the volume registration and the polynomial regressors to account for baseline shifts and linear/quadratic drifts in each scan series were included in the deconvolution analysis as regressors of no interest. The response magnitude to each stimulus type was calculated by averaging the β weights of the regressors for the second and third volumes of the response (capturing the positive blood-oxygenation level-dependent response).

Individual unthresholded responses for each of the stimuli of interest were transformed into the Talairach and Tournoux Atlas (Talairach and Tournoux, 1988) coordinate system, and resampled into 1 mm³ voxels for group analyses. Activations were anatomically localized on the sighted and blind group-averaged Talairach-transformed T1-weighted images, and visualized using normalized SUMA surface templates.
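The delta-function regressors used in the deconvolution step can be sketched as follows. For one stimulus type, the design has one column per volume after stimulus onset, so no hemodynamic shape is assumed. The code only builds those columns and averages the second and third estimates. It does not reproduce 3dDeconvolve, it omits the least-squares fit and the nuisance regressors, and all names and toy values are hypothetical.

```python
def fir_design(onsets, n_volumes, n_lags):
    """Build delta-function regressors for one stimulus type.

    onsets: volume indices at which a stimulus of this type starts.
    Returns a list of n_volumes rows with n_lags columns; column k is 1
    at every volume that lies k volumes after an onset, and 0 elsewhere."""
    design = [[0] * n_lags for _ in range(n_volumes)]
    for onset in onsets:
        for lag in range(n_lags):
            volume = onset + lag
            if volume < n_volumes:  # ignore lags that run past the end of the run
                design[volume][lag] = 1
    return design


def response_magnitude(betas):
    """Average the estimates for the second and third volumes after onset."""
    return (betas[1] + betas[2]) / 2


# Toy run: 12 volumes, two stimuli of the same type, five lags each.
toy_design = fir_design(onsets=[1, 8], n_volumes=12, n_lags=5)
toy_magnitude = response_magnitude([0.1, 0.8, 1.2, 0.5, 0.0])
```

Fitting these columns, together with the motion and drift regressors, to a voxel's time series would yield one β weight per lag, from which the response magnitude is taken.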

We also used a two-way mixed-model (stimulus type × subjects) group ANOVA, using the unthresholded weights of each regressor of interest for stimulus type to construct t contrasts (equivalent to paired t tests) and identify significant differences between conditions. The t contrasts were corrected for multiple comparisons across the whole brain using Monte Carlo simulations run via AlphaSim in AFNI, with a voxelwise threshold of 0.05 that resulted in a minimum cluster volume of 4713 μl (cluster connection radius 1.73 mm) for a corrected p value <0.05.
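The cluster-size criterion can be illustrated with a small sketch. It assumes that the correction was applied on the 1 mm³ group grid, so that 1 voxel equals 1 μl, and that a connection radius of 1.73 mm (about √3 mm) means voxels touching by a face, edge, or corner belong to the same cluster. Both points are interpretations, not statements from the paper. The code is not AlphaSim: it only removes clusters smaller than a given size, and the names are hypothetical.

```python
from itertools import product


def clusters_26(mask):
    """Group suprathreshold voxels into clusters.

    mask: set of integer (x, y, z) coordinates passing the voxelwise threshold.
    Voxels sharing a face, edge, or corner are treated as connected."""
    offsets = [d for d in product((-1, 0, 1), repeat=3) if d != (0, 0, 0)]
    unvisited = set(mask)
    clusters = []
    while unvisited:
        seed = unvisited.pop()
        cluster = {seed}
        stack = [seed]
        while stack:
            x, y, z = stack.pop()
            for dx, dy, dz in offsets:
                neighbor = (x + dx, y + dy, z + dz)
                if neighbor in unvisited:
                    unvisited.remove(neighbor)
                    cluster.add(neighbor)
                    stack.append(neighbor)
        clusters.append(cluster)
    return clusters


def keep_large_clusters(mask, min_voxels):
    """Return only the voxels that belong to clusters of at least min_voxels."""
    kept = [c for c in clusters_26(mask) if len(c) >= min_voxels]
    return set().union(*kept)


# Toy mask: three diagonally connected voxels plus one isolated voxel.
toy_mask = {(0, 0, 0), (1, 1, 1), (2, 2, 2), (5, 5, 5)}
toy_surviving = keep_large_clusters(toy_mask, min_voxels=2)
```

Under the 1 mm³ assumption, the whole-brain criterion reported above would correspond to `min_voxels=4713`.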

In line with previous functional studies exploring the human mirror network (Gazzola et al., 2006), we defined auditory mirror voxels in sighted and blind individuals as those for which the t contrasts (familiar action sounds vs environmental sounds) and (motor pantomime vs rest) were both significant (logical AND) at a cluster-corrected p < 0.05. To perform a small-volume correction of the logical AND, we created a binary mask of the t contrast (familiar action sounds vs environmental sounds) and calculated via AlphaSim the minimum cluster size for the sighted and blind groups for a corrected p value <0.05 (voxelwise threshold of 0.05, minimum cluster size of 997 μl for sighted, and of 711 μl for congenitally blind subjects).
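The logical AND that defines auditory mirror voxels amounts to intersecting two sets of significant voxels. The sketch below assumes that each contrast has already been thresholded and cluster-corrected elsewhere, and that voxels are 1 mm³ so that the voxel count equals the volume in μl. The names and coordinates are hypothetical.

```python
def conjunction_mask(significant_listening, significant_pantomime):
    """Logical AND: voxels significant in both contrasts."""
    return significant_listening & significant_pantomime


def volume_ul(mask, voxel_volume_mm3=1.0):
    """Volume of a voxel set in microliters (1 mm^3 equals 1 microliter)."""
    return len(mask) * voxel_volume_mm3


# Toy sets of significant voxel coordinates for the two contrasts.
listening = {(10, 20, 30), (11, 20, 30), (12, 20, 30)}
pantomime = {(11, 20, 30), (12, 20, 30), (40, 0, 5)}
mirror_voxels = conjunction_mask(listening, pantomime)
mirror_volume_ul = volume_ul(mirror_voxels)
```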

To address the effect of motor familiarity on the activity of brain regions of the human mirror network, a one-tailed paired t test between familiar and unfamiliar action sounds was performed in sighted and blind individuals. Similarly, we created a common binary mask including both t contrasts (familiar action sounds vs environmental sounds) and (unfamiliar action sounds vs environmental sounds), and calculated via AlphaSim the minimum cluster size for the sighted and blind groups at a corrected p level <0.05 (minimum cluster size of 754 μl for sighted, and of 540 μl for congenitally blind subjects).

To further characterize the effect of familiarity within the activated clusters of interest, we created a t map containing the maximum t values of the (familiar action sounds vs environmental sounds) and the (unfamiliar action sounds vs environmental sounds) contrasts, and explored the peak values. Plots of parameter estimates of all voxels falling within 5-mm-radius spheres centered at local peak values for selected regions of interest (local maxima distance 10 mm) are reported in Figure 4 to show average BOLD signal intensities (percentage change) in the 14 sighted and 8 congenitally blind participants across the auditory experimental conditions (familiar action, unfamiliar action, and environmental sounds) and the motor pantomime for mirror system cortical regions of interest.
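The region-of-interest extraction described above can be sketched in two steps: take the voxelwise maximum of the two t maps, then average a signal measure over all voxels within 5 mm of a peak. This is an illustration under simplifying assumptions (maps stored as dictionaries keyed by millimeter coordinates, a single global peak instead of several local maxima). All names and numbers are hypothetical.

```python
from math import dist


def max_t_map(t_familiar, t_unfamiliar):
    """Voxelwise maximum of two t maps given as {(x, y, z): t} dictionaries
    defined over the same voxels."""
    return {v: max(t_familiar[v], t_unfamiliar[v]) for v in t_familiar}


def sphere_mean(values, center, radius_mm):
    """Mean of `values` over all voxels within radius_mm of `center`."""
    inside = [val for v, val in values.items() if dist(v, center) <= radius_mm]
    return sum(inside) / len(inside)


# Toy t maps for the two contrasts against environmental sounds.
t_fam = {(0, 0, 0): 1.0, (3, 0, 0): 4.0, (9, 0, 0): 2.0}
t_unf = {(0, 0, 0): 2.5, (3, 0, 0): 3.0, (9, 0, 0): 0.5}
t_max = max_t_map(t_fam, t_unf)
peak = max(t_max, key=t_max.get)

# Toy percent-signal-change values for one condition.
psc = {(0, 0, 0): 0.2, (3, 0, 0): 0.6, (9, 0, 0): 1.0}
roi_mean = sphere_mean(psc, peak, radius_mm=5.0)
```

Repeating `sphere_mean` for each condition and each participant would give the per-region values that are summarized as bar graphs.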

Figure 4.

Bar graphs illustrate the mean ± SE of the relative BOLD signal intensity (percentage change) in the 14 sighted and 8 congenitally blind participants across the auditory experimental conditions (familiar action, unfamiliar action, and environmental sounds) and the motor pantomime for mirror system cortical regions of interest [maximum t value map of both (familiar action sounds vs environmental sounds) and (unfamiliar action sounds vs environmental sounds) contrasts], which are traced with yellow circles on the motor familiar versus unfamiliar action sound maps for sighted and blind individuals. Significance for motor familiarity effect between the auditory experimental conditions was reported for t test p value <0.05; trends, reported as dotted lines, are provided only for t test p value <0.1. aIPS, Anterior intraparietal sulcus.

Results

Behavioral report and sound classification

All participants were able to perform the motor pantomime correctly following the relevant verbal prompts. Furthermore, in the debriefing session, upon completion of the fMRI study, all subjects achieved >75% accuracy in sound identification [familiar action sounds (average percentage ± SD, sighted vs blind): 98 ± 4 vs 100; unfamiliar action sounds: 86 ± 10 vs 84 ± 16; environmental sounds: 88 ± 8 vs 78 ± 12]. In an experimental group × sound category ANOVA, no difference in sound identification between the sighted and blind groups was found (F(1,60) = 2.4, n.s.). A significant effect for sound category was reported for both groups (F(2,60) = 12.7, p < 0.0001); that is, identification accuracy was higher for familiar than unfamiliar sounds (Bonferroni-corrected post hoc t test p < 0.01), with no differences between unfamiliar action and environmental sounds (n.s.). Further, such an effect was comparable for blind and for sighted individuals (experimental group × sound category F(2,60) = 2.4, n.s.). Motor familiarity scores did not reveal any significant difference between sighted and blind individuals, either for competence (F(1,36) = 1.8, n.s.) or for frequency (F(1,36) = 0.3, n.s.), for either familiar or unfamiliar action sounds.

Mirror system response to sounds of familiar actions in blind and sighted individuals

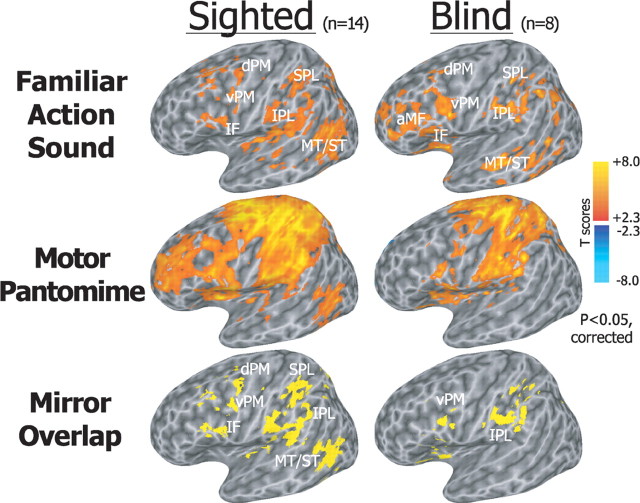

In congenitally blind individuals, aural presentation of familiar actions compared with the environmental sounds elicited patterns of neural activation involving premotor, temporal, and parietal cortex, mostly in the left hemisphere, similar to those observed in sighted subjects during both aural and visual presentation (Figs. 2, 3; supplemental Fig. S2, available at www.jneurosci.org as supplemental material). Specifically, listening to familiar action sounds (familiar action vs environmental sounds, two-tailed paired t test, cluster-size correction for whole brain p < 0.05) recruited bilateral ventral and dorsal areas of the premotor cortex (vPM and dPM), inferior frontal (IF) and an anterior portion of middle frontal (aMF) cortex, insula, supplementary motor area (SMA), middle and posterior aspects of the superior and middle temporal gyri (ST/MT), auditory temporal regions, and superior (SPL) and inferior parietal lobule (IPL), both in congenitally blind and sighted individuals (Figs. 2, 3). In the sighted subjects, aural and visual presentation of actions elicited neural responses that overlapped significantly (supplemental Fig. S2A, available at www.jneurosci.org as supplemental material).

Figure 2.

Statistical maps showing brain regions activated during listening to familiar action compared with environmental sounds, and during the motor pantomime of action compared with rest (corrected p < 0.05). In both sighted and congenitally blind individuals, aural presentation of familiar actions compared with the environmental sounds elicited similar patterns of activation involving a left-lateralized premotor, temporal, and parietal cortical network. Hand motor pantomimes evoked bilateral activations in premotor and sensorimotor areas. Auditory mirror voxels are shown in yellow as overlap between the two task conditions in the bottom row. Spatially normalized activations are projected onto a single-subject left hemisphere template in Talairach space.

Figure 3.

Statistical maps showing brain regions activated during listening to motor familiar or unfamiliar action sounds compared with environmental sounds (corrected p < 0.05). Bottom, Brain areas that showed a significantly higher activation during motor familiar than during unfamiliar action sounds (1-tailed paired t test, SVC p < 0.05). Spatially normalized activations are projected onto a single-subject left hemisphere template in the Talairach space.

Hand-mediated motor pantomimes (two-tailed paired t test, cluster-size correction for whole brain p < 0.05) evoked bilateral activations in the motor, somatosensory, and premotor cortex, IF, aMF, SMA, posterior ST/MT, SPL, and IPL in the two groups.

The overlap of brain activations during listening to actions and performing motor pantomime identified a left-lateralized mirror system cortical network, including premotor, temporal, and parietal regions, both in sighted and congenitally blind subjects (Fig. 2; supplemental Fig. S1, Table S2, available at www.jneurosci.org as supplemental material) [small volume-corrected (SVC) p < 0.05]. Bilateral posterior MT/ST and IPL, right IF, aMF, and vPM/dPM cortex also were significantly recruited as auditory mirror system in both groups at a more liberal threshold (supplemental Fig. S1, available at www.jneurosci.org as supplemental material).

Mirror system response is greater for motor familiar than for unfamiliar action sounds

In contrast to the pattern of activations found during aural presentation of familiar actions, listening to unfamiliar actions recruited only the left IPL and SPL in both groups, and the left posterior MT/ST and aMF in the sighted and blind group, respectively (unfamiliar action vs environmental sounds, two-tailed paired t test, cluster-size correction for whole brain p < 0.05). Right posterior MT/ST and IPL, and bilateral IF, aMF, and vPM/dPM cortex also were recruited in both groups during unfamiliar action sound listening at a more liberal threshold (uncorrected p < 0.05) (supplemental Fig. S1, available at www.jneurosci.org as supplemental material).

Furthermore, both in the sighted and blind individuals, this mirror system network showed significantly higher responses to motor familiar than to motor unfamiliar action sounds (one-tailed paired t test, SVC p < 0.05) (Fig. 3). Plots of parameter estimates of significant voxels in selected mirror system cortical regions across the three conditions (familiar and unfamiliar action sounds, and environmental sounds) revealed a significant motor familiarity effect within the auditory mirror system networks of both sighted and congenitally blind individuals (Fig. 4).

Discussion

These findings demonstrate that a left premotor–temporoparietal network subserves action perception through hearing in blind individuals who have never had any visual experience, and that this network overlaps with the left-lateralized mirror system network that was activated by visual and auditory stimuli in the sighted group. Thus, the mirror system can develop in the absence of sight and can process information about actions that is not visual. Further, the results in congenitally blind individuals unequivocally demonstrate that the sound of an action engages human mirror system brain areas for action schemas that have not been learned through the visual modality.

While previous studies have shown that the mirror system is recruited not only during direct action observation, but also when individuals only hear the sound of actions or listen to action-related sentences (Kohler et al., 2002; Keysers et al., 2003; Lewis et al., 2005; Gazzola et al., 2006; Baumgaertner et al., 2007; Lahav et al., 2007; Galati et al., 2008), they have not resolved whether such a response is a mere consequence of a sound-elicited mental representation of visually learned actions through visually based motor imagery. Our findings in congenitally blind individuals who, by definition, have no visually based imagery, clearly demonstrate that visual experience is not a necessary prerequisite for mirror system development, and that blind people “see” the actions of others by recruiting the same network of cortical areas activated by action observation in sighted subjects. This suggests that the mirror system stores a motor representation of others' actions that can be evoked through supramodal sensory mechanisms.

The stronger response to familiar compared with unfamiliar action sounds extends to the auditory modality the finding that the mirror system activation in response to visual stimuli is larger when subjects observe their own movements or movements for which they have acquired competence compared with when they observe unknown or poorly practiced actions. For instance, expert dancers showed larger premotor and parietal activations when they viewed moves from their own motor repertoire, compared with a different dance style (Calvo-Merino et al., 2005) or opposite gender moves (Calvo-Merino et al., 2006). Furthermore, listening to musical pieces that had been practiced by nonprofessional musicians for several days activated a frontoparietal motor-related network more than musical pieces that had never been played before, thus confirming that the human mirror system is modulated by motor familiarity even for more complex sequences of newly acquired actions (Lahav et al., 2007).

Recent functional studies have revealed that individuals with no visual experience rely on supramodal brain areas within the ventral and dorsal extrastriate cortex, which are often referred to as extrastriate visual cortex, to acquire knowledge about shape, movement, and localization of objects, through nonvisual sensory modalities, including touch and hearing (Pietrini et al., 2004; Amedi et al., 2005; Ricciardi et al., 2007; Bonino et al., 2008; Cattaneo et al., 2008). The recruitment of these extrastriate regions in congenitally blind individuals during nonvisual recognition indicates that visual experience or visually based imagery is not necessary for an abstract representation of object and spatial features in these regions. Similarly, the mirror system in congenitally blind individuals is responsible for processing information about others' actions that is acquired through nonvisual sensory modalities.

In summary, congenitally blind individuals showed activation in a premotor–temporoparietal cortical network in response to aurally presented actions, and this network overlapped with the mirror system brain areas found in sighted subjects.

Mirror system recruitment by aural stimuli in congenitally blind individuals indicates that neither visual experience nor visually based imagery is a necessary precondition to form a representation of others' actions in this system. The mirror system, often referred to as visuomotor, is, therefore, not strictly dependent on sight. These findings help to explain how individuals with no visual experience may acquire knowledge of and interact effectively with the external world, and their ability to learn by imitation of others.

Footnotes

This work was supported by European Union Grants Immersence to P.P. and Robotcub, Contact, and Poeticon to L.F., and by Italian Ministero dell'Istruzione, dell'Università e della Ricerca grants to P.P. and L.F. We thank all our volunteers and the Unione Italiana Ciechi. We thank Paolo Nichelli, Laila Craighero, and Maura L. Furey for comments on an earlier version of this manuscript, and the MRI Laboratory at the Consiglio Nazionale delle Ricerche Research Area “S. Cataldo” (Pisa, Italy), coordinated by Luigi Landini and Massimo Lombardi.

References

- Amedi A, von Kriegstein K, van Atteveldt NM, Beauchamp MS, Naumer MJ. Functional imaging of human crossmodal identification and object recognition. Exp Brain Res. 2005;166:559–571. doi: 10.1007/s00221-005-2396-5. [DOI] [PubMed] [Google Scholar]

- Baumgaertner A, Buccino G, Lange R, McNamara A, Binkofski F. Polymodal conceptual processing of human biological actions in the left inferior frontal lobe. Eur J Neurosci. 2007;25:881–889. doi: 10.1111/j.1460-9568.2007.05346.x. [DOI] [PubMed] [Google Scholar]

- Bonino D, Ricciardi E, Sani L, Gentili C, Vanello N, Guazzelli M, Vecchi T, Pietrini P. Tactile spatial working memory activates the dorsal extrastriate cortical pathway in congenitally blind individuals. Arch Ital Biol. 2008;146:133–146. [PubMed] [Google Scholar]

- Buccino G, Binkofski F, Riggio L. The mirror neuron system and action recognition. Brain Lang. 2004a;89:370–376. doi: 10.1016/S0093-934X(03)00356-0. [DOI] [PubMed] [Google Scholar]

- Buccino G, Vogt S, Ritzl A, Fink GR, Zilles K, Freund HJ, Rizzolatti G. Neural circuits underlying imitation learning of hand actions: an event-related fMRI study. Neuron. 2004b;42:323–334. doi: 10.1016/s0896-6273(04)00181-3. [DOI] [PubMed] [Google Scholar]

- Calvo-Merino B, Glaser DE, Grèzes J, Passingham RE, Haggard P. Action observation and acquired motor skills: an FMRI study with expert dancers. Cereb Cortex. 2005;15:1243–1249. doi: 10.1093/cercor/bhi007. [DOI] [PubMed] [Google Scholar]

- Calvo-Merino B, Grèzes J, Glaser DE, Passingham RE, Haggard P. Seeing or doing? Influence of visual and motor familiarity in action observation. Curr Biol. 2006;16:1905–1910. doi: 10.1016/j.cub.2006.07.065. [DOI] [PubMed] [Google Scholar]

- Carr L, Iacoboni M, Dubeau MC, Mazziotta JC, Lenzi GL. Neural mechanisms of empathy in humans: a relay from neural systems for imitation to limbic areas. Proc Natl Acad Sci U S A. 2003;100:5497–5502. doi: 10.1073/pnas.0935845100. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cattaneo Z, Vecchi T, Cornoldi C, Mammarella I, Bonino D, Ricciardi E, Pietrini P. Imagery and spatial processes in blindness and visual impairment. Neurosci Biobehav Rev. 2008;32:1346–1360. doi: 10.1016/j.neubiorev.2008.05.002. [DOI] [PubMed] [Google Scholar]

- Chong TT, Cunnington R, Williams MA, Kanwisher N, Mattingley JB. fMRI adaptation reveals mirror neurons in human inferior parietal cortex. Curr Biol. 2008;18:1576–1580. doi: 10.1016/j.cub.2008.08.068. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cox RW. AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res. 1996;29:162–173. doi: 10.1006/cbmr.1996.0014. [DOI] [PubMed] [Google Scholar]

- Cross ES, Hamilton AF, Grafton ST. Building a motor simulation de novo: observation of dance by dancers. Neuroimage. 2006;31:1257–1267. doi: 10.1016/j.neuroimage.2006.01.033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dapretto M, Davies MS, Pfeifer JH, Scott AA, Sigman M, Bookheimer SY, Iacoboni M. Understanding emotions in others: mirror neuron dysfunction in children with autism spectrum disorders. Nat Neurosci. 2006;9:28–30. doi: 10.1038/nn1611. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Decety J, Grèzes J. Neural mechanisms subserving the perception of human actions. Trends Cogn Sci. 1999;3:172–178. doi: 10.1016/s1364-6613(99)01312-1. [DOI] [PubMed] [Google Scholar]

- Etzel JA, Gazzola V, Keysers C. Testing simulation theory with cross-modal multivariate classification of fMRI data. PLoS ONE. 2008;3:e3690. doi: 10.1371/journal.pone.0003690. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fabbri-Destro M, Rizzolatti G. Mirror neurons and mirror systems in monkeys and humans. Physiology (Bethesda) 2008;23:171–179. doi: 10.1152/physiol.00004.2008. [DOI] [PubMed] [Google Scholar]

- Galati G, Committeri G, Spitoni G, Aprile T, Di Russo F, Pitzalis S, Pizzamiglio L. A selective representation of the meaning of actions in the auditory mirror system. Neuroimage. 2008;40:1274–1286. doi: 10.1016/j.neuroimage.2007.12.044.

- Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119:593–609. doi: 10.1093/brain/119.2.593.

- Gallese V, Keysers C, Rizzolatti G. A unifying view of the basis of social cognition. Trends Cogn Sci. 2004;8:396–403. doi: 10.1016/j.tics.2004.07.002.

- Gazzola V, Keysers C. The observation and execution of actions share motor and somatosensory voxels in all tested subjects: single-subject analyses of unsmoothed fMRI data. Cereb Cortex. 2009;19:1239–1255. doi: 10.1093/cercor/bhn181.

- Gazzola V, Aziz-Zadeh L, Keysers C. Empathy and the somatotopic auditory mirror system in humans. Curr Biol. 2006;16:1824–1829. doi: 10.1016/j.cub.2006.07.072.

- Grèzes J, Decety J. Functional anatomy of execution, mental simulation, observation, and verb generation of actions: a meta-analysis. Hum Brain Mapp. 2001;12:1–19. doi: 10.1002/1097-0193(200101)12:1<1::AID-HBM10>3.0.CO;2-V.

- Iacoboni M, Woods RP, Brass M, Bekkering H, Mazziotta JC, Rizzolatti G. Cortical mechanisms of human imitation. Science. 1999;286:2526–2528. doi: 10.1126/science.286.5449.2526.

- Keysers C, Kohler E, Umiltà MA, Nanetti L, Fogassi L, Gallese V. Audiovisual mirror neurons and action recognition. Exp Brain Res. 2003;153:628–636. doi: 10.1007/s00221-003-1603-5.

- Kohler E, Keysers C, Umiltà MA, Fogassi L, Gallese V, Rizzolatti G. Hearing sounds, understanding actions: action representation in mirror neurons. Science. 2002;297:846–848. doi: 10.1126/science.1070311.

- Lahav A, Saltzman E, Schlaug G. Action representation of sound: audiomotor recognition network while listening to newly acquired actions. J Neurosci. 2007;27:308–314. doi: 10.1523/JNEUROSCI.4822-06.2007.

- Lewis JW, Brefczynski JA, Phinney RE, Janik JJ, DeYoe EA. Distinct cortical pathways for processing tool versus animal sounds. J Neurosci. 2005;25:5148–5158. doi: 10.1523/JNEUROSCI.0419-05.2005.

- Pietrini P, Furey ML, Ricciardi E, Gobbini MI, Wu WH, Cohen L, Guazzelli M, Haxby JV. Beyond sensory images: object-based representation in the human ventral pathway. Proc Natl Acad Sci U S A. 2004;101:5658–5663. doi: 10.1073/pnas.0400707101.

- Ricciardi E, Vanello N, Sani L, Gentili C, Scilingo EP, Landini L, Guazzelli M, Bicchi A, Haxby JV, Pietrini P. The effect of visual experience on the development of functional architecture in hMT+. Cereb Cortex. 2007;17:2933–2939. doi: 10.1093/cercor/bhm018.

- Rizzolatti G. The mirror neuron system and its function in humans. Anat Embryol (Berl). 2005;210:419–421. doi: 10.1007/s00429-005-0039-z.

- Rizzolatti G, Craighero L. The mirror-neuron system. Annu Rev Neurosci. 2004;27:169–192. doi: 10.1146/annurev.neuro.27.070203.144230.

- Rizzolatti G, Fabbri-Destro M. The mirror system and its role in social cognition. Curr Opin Neurobiol. 2008;18:179–184. doi: 10.1016/j.conb.2008.08.001.

- Rizzolatti G, Fadiga L. Grasping objects and grasping action meanings: the dual role of monkey rostroventral premotor cortex (area F5). Novartis Found Symp. 1998;218:81–95; discussion 103. doi: 10.1002/9780470515563.ch6.

- Rizzolatti G, Sinigaglia C. Further reflections on how we interpret the actions of others. Nature. 2008;455:589. doi: 10.1038/455589b.

- Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Brain Res Cogn Brain Res. 1996;3:131–141. doi: 10.1016/0926-6410(95)00038-0.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. New York: Thieme Medical; 1988.