Abstract

Our ability to recognize others by their facial features is at the core of human social interaction, yet this ability varies widely within the general population, ranging from developmental prosopagnosia to “super-recognizers”. Previous work has focused mainly on how neural activity within the well-described face network contributes to this variance. However, given the nature of face memory in everyday life and the social context in which it takes place, we explored how interactions between the face network and networks outside it (measured through resting state connectivity) affect face memory performance in humans. Fifty participants (men and women) were scanned with fMRI. Our data revealed that although the nodes of the face-processing network were tightly coupled at rest, the strength of these connections did not predict face memory performance. Instead, face recognition memory was dependent on multiple connections between these face patches and regions of the medial temporal lobe memory system (including the hippocampus) and the social processing system. Moreover, this network was selective for memory for faces and did not predict memory for other visual objects (cars). These findings suggest that in the general population, variability in face memory depends on how well the face processing system interacts with other processing networks, with interactions among the face patches themselves accounting for little of the variance in memory ability.

SIGNIFICANCE STATEMENT Our ability to recognize and remember faces is one of the pillars of human social interaction. Face recognition, however, is a complex skill, requiring specialized neural resources in visual cortex as well as memory, identity, and social processing, all of which are inherent in our real-world experience of faces. Yet in the general population, people vary greatly in their face memory abilities. Here we show that, at the neural level, this variability is underpinned by the integration of visual, memory, and social circuits, with the strength of the connections between these circuits directly linked to face recognition ability.

Keywords: face, fMRI, human, memory, social

Introduction

Memory for faces is one of the core capacities of the human mind. The ability to recognize people, and to know whether the person in front of us is familiar or not, is fundamental to our social functioning, a cornerstone of humanity. And yet, within the general, healthy population there is a great degree of variance in terms of ability to remember and recognize both familiar and novel faces (Carbon, 2008; Herzmann et al., 2008; Elbich and Scherf, 2017).

The neural underpinnings of visual face processing have been studied extensively (Bentin et al., 1996; Haxby et al., 1996; Grill-Spector and Malach, 2004; Grill-Spector et al., 2004; Kanwisher and Yovel, 2006; Pitcher et al., 2007), resulting in the identification of several patches in the ventral visual stream along the fusiform gyrus such as the occipital face area (OFA), the fusiform face area (FFA), and more recently, a more anterior patch in the ventral anterior temporal lobe (ATL), which are highly selective for face stimuli, responding more to faces than to other object categories (Rossion et al., 2012; Avidan et al., 2014). These regions, along with the amygdala, parts of the lateral occipital sulcus (LOS) and the posterior superior temporal sulcus (pSTS) are largely regarded as the elements comprising the face network (Collins and Olson, 2014; Jonas et al., 2015).

Lesion and stimulation studies have shown that face perception and recognition are impaired when face-selective regions are compromised (Damasio et al., 1982; Jonas et al., 2015). The degree of neural activity and selectivity within this face network has been linked to better performance on face memory tasks in numerous studies (Golarai et al., 2007; Furl et al., 2011; Elbich and Scherf, 2017), and individuals with congenital prosopagnosia show significantly reduced selectivity within these regions (Duchaine and Nakayama, 2006b; Jiahui et al., 2018). Neural activity in these regions can also be used to decode individual faces (Davidesco et al., 2014). However, studies investigating the link between resting state functional connectivity and face recognition memory abilities have been scarce and limited in scope, focusing almost exclusively on correlations within the face network itself and between the face network and early visual cortex. These studies have shown decreased connectivity between these nodes in individuals with congenital prosopagnosia (Avidan et al., 2014; Lohse et al., 2016), and that the correlation between OFA and FFA predicts performance on face perception tasks, as well as on a long-term recognition task for famous faces (Zhu et al., 2011). Using diffusion tensor imaging, studies have reported reduced structural connectivity in white matter tracts projecting along the ventral occipitotemporal cortex from the occipital face regions to anterior temporal regions (Thomas et al., 2009), as well as white matter abnormalities in the vicinity of the FFA (Gomez et al., 2015; Song et al., 2015).

Although face processing has been one of the most thoroughly researched topics in vision science, face recognition memory across the full range of abilities (from cases where it is severely disrupted without any apparent brain insult, as in congenital prosopagnosia, through typical individuals, to those with exceptional abilities) has so far been examined primarily through the face network. A possible exception is one study that examined correlations between face regions and other brain areas but reported conflicting results (Wang et al., 2016). Surely, though, given the nature of the processes involved in face recognition memory and the context in which it is constantly performed in everyday life, memory and social networks must also be profoundly involved. We therefore set out to characterize the relationship between the face patches and other brain networks relevant for face recognition memory by performing a whole-brain search for regions whose connectivity with the ventral face patches predicts face memory ability.

Materials and Methods

Participants.

Fifty-three participants (24 female) aged 16–30 (mean age = 23.1) were recruited for this experiment. All participants were screened for any history of neurological and psychiatric disorders. In addition, all participants had normal or corrected-to-normal vision. One participant was excluded from analysis because of abnormal brain structure, and two were excluded because of inattention on behavioral testing (failure on the practice questions). The experiment was approved by the NIMH Institutional Review Board (protocol 10-M-0027). Written informed consent was obtained from all participants.

Behavioral testing.

Before the scan, all participants completed two memory tasks: the Cambridge Face Memory Test (CFMT; Duchaine and Nakayama, 2006a) and the Cambridge Car Memory Test (CCMT; Dennett et al., 2012). All but 4 subjects completed the memory tasks directly before the scan. The CFMT comprises three parts. In the first part, participants were shown three views of a target face and then given a forced-choice test with the target face and two distractor faces; they had to select the face that matched the original target face. There were six target faces, each of which was presented three times, for a total of 18 trials. In the second part, participants were presented with frontal views of the six target faces for 20 s, followed by 30 forced-choice tests with one target face and two distractor faces. In the third part, participants were again presented with the frontal views of the six target faces for 20 s, followed by 24 more forced-choice test displays presented with a Gaussian noise overlay. The CCMT has the same structure as the CFMT but uses cars instead of faces. For both the CFMT and the CCMT, recognition scores were the sum of correct responses across the three sections. Subjects who made two or more errors in the first, introductory phase of the memory tests were excluded because of concerns about attention to the tasks.

Imaging data collection and MRI parameters.

All scans were performed at the Functional Magnetic Resonance Imaging Core Facility on a GE 3T scanner (GE MR 750 3.0T) with a 32-channel receive-only head coil, with online slice time correction. The scans included a 6 min T1-weighted magnetization prepared rapid gradient echo sequence for anatomical coregistration, with the following parameters: TE = 2.7 ms, flip angle = 12°, bandwidth = 244.141, FOV = 30 cm (256 × 256 matrix), slice thickness = 1.2 mm, axial slices. Functional images were collected with a multiecho sequence using the following parameters: TR = 2 s, voxel size = 3 × 3 × 3 mm, flip angle = 60°, 3 echoes with TE = 17.5, 35.3, and 53.1 ms, matrix = 72 × 72, 28 slices. Two hundred and seventy TRs were collected for each rest scan, and 250 TRs for each face/scene localizer scan. All scans used accelerated acquisition (GE's ASSET) with a factor of 2 to prevent gradient overheating.

Scan stimuli and experimental design.

Each scanning session started with two 9 min rest scans, during which participants were presented with a uniformly gray screen with a fixation cross. Participants were instructed to lie still, not fall asleep, and look at the screen. After the rest scans, participants completed two runs of an 8 min 20 s face/scene localizer scan. Four subjects completed a different, 9 min 20 s localizer scan; they were excluded from the face selectivity analysis relying on β weights, as those were not comparable between localizer types. Each localizer began with a 20 s blank gray screen, followed by sixteen 20 s presentation blocks and a 10 s blank gray screen with a fixation cross. During presentation blocks, 20 grayscale pictures of faces (face blocks) or scenes (scene blocks) were presented (stimulus duration = 200 ms, interstimulus interval = 700 ms), with one or two images repeating in succession in each block. Subjects were instructed to look for these repetitions (1-back task) and respond using a button box. There were eight face blocks and eight scene blocks in each localizer run, with 320 exemplars from each category. Each exemplar was repeated no more than twice in each run. Exemplars were taken from the stimulus set used by Stevens et al. (2015) and did not overlap with the stimuli used in the CFMT or the CCMT.

MRI off-line data preprocessing.

Post hoc signal preprocessing for the functional images was performed in AFNI (Cox, 1996). The first four EPI volumes from each run were removed to ensure all volumes were at magnetization steady state. Any large transients that remained were removed using a squashing function (AFNI's 3dDespike). Volumes were slice-time corrected and motion parameters were estimated with rigid body transformations (using AFNI's 3dVolreg function). Volumes were coregistered to the anatomical scan. The data were then processed using AFNI's meica.py to perform a multiecho ICA analysis. This process removes nuisance signals such as hardware-induced artifacts, physiological artifacts, and residual head motion (Kundu et al., 2013). The functional and anatomical datasets were coregistered using AFNI, then transformed to Talairach space.

ROI selection.

The localizer data were used to define individual face regions of interest (ROIs) for each subject. A standard general linear model was used with a 20-s-long boxcar regressor, convolved with a canonical hemodynamic response function and fit using the AFNI function 3dDeconvolve. Face-selective ROIs were identified with the faces > scenes contrast. All ROIs for each individual participant were defined in Talairach space. In the faces > scenes contrast, we identified the center of mass of the bilateral FFA, OFA, and amygdala, as well as of the right ATL face patch and right pSTS. We then defined a spherical ROI with a 6 mm radius around each of these centers of mass to obtain eight individually localized visual ROIs. ROI coordinates reported in the tables were converted to MNI coordinates using AFNI's lookup table.
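The ROI construction step can be sketched as follows. This is a minimal Python/NumPy illustration, not the authors' AFNI/MATLAB pipeline: it assumes a 3 mm isotropic grid (the functional voxel size) and an unweighted center of mass of a binary cluster mask, and the helper names `cluster_center` and `sphere_mask` are hypothetical.

```python
import numpy as np


def cluster_center(mask):
    """Unweighted center of mass (in voxel indices) of a boolean cluster mask.

    Assumption: the paper does not say whether the center of mass was weighted
    by the statistic; here every suprathreshold voxel counts equally.
    """
    return np.argwhere(mask).mean(axis=0)


def sphere_mask(shape, center_ijk, radius_mm=6.0, voxel_mm=(3.0, 3.0, 3.0)):
    """Boolean mask of voxels whose centers lie within radius_mm of center_ijk.

    center_ijk may be fractional (e.g., a center of mass); voxel_mm converts
    index distances into millimetres along each axis (assumed 3 mm isotropic).
    """
    grid = np.indices(shape, dtype=float)                  # (3, X, Y, Z) voxel indices
    center = np.asarray(center_ijk, dtype=float).reshape(3, 1, 1, 1)
    scale = np.asarray(voxel_mm, dtype=float).reshape(3, 1, 1, 1)
    dist_mm = np.sqrt((((grid - center) * scale) ** 2).sum(axis=0))
    return dist_mm <= radius_mm


# Example: a toy 3 x 3 x 3 cluster in a 20^3 grid and a 6 mm sphere around its center.
cluster = np.zeros((20, 20, 20), dtype=bool)
cluster[9:12, 9:12, 9:12] = True
roi = sphere_mask(cluster.shape, cluster_center(cluster))
```

With 3 mm voxels, a 6 mm radius covers every voxel within two grid steps (squared index distance of at most 4), which is 33 voxels around an on-grid center.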

Data analysis and statistical tests.

All data were analyzed with in-house software written in MATLAB, as well as the AFNI software package. Data on the cortical surface were visualized with SUMA (SUrface MApping; Saad et al., 2004). Two-tailed t tests were used for all p values on correlations, unless otherwise stated. For the permutation test used to determine the significance threshold in the analysis of specificity for face memory, we compared, for each ROI pair, the correlation between its connectivity and CFMT scores with the partial correlation between its connectivity and CFMT scores using CCMT scores as a regressor. The correlation and partial correlation (calculated using MATLAB's partialcorr function) for each ROI pair were first converted to z-scores using Fisher's transform, and the difference between them was then calculated. For each ROI pair, over 10,000 iterations, we permuted the subject labels of the connectivity values for that pair and calculated, for each rest scan, the permuted correlation with the CFMT, the permuted partial correlation with the CFMT with CCMT scores regressed out, and the difference between them. We then averaged each iteration across the two rest scans and set the threshold at the 95th percentile of the resulting values across all iterations and all ROI pairs.
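A minimal NumPy sketch of this permutation scheme follows. It is an illustration under stated assumptions rather than the authors' MATLAB code: the partial correlation is computed by regressing the covariate (plus an intercept) out of both variables, which gives the standard first-order partial correlation; a fresh permutation is drawn for each ROI pair and iteration and is shared by the two rest scans (the text does not specify this); and all function names are hypothetical.

```python
import numpy as np


def pearson(x, y):
    """Pearson correlation of two 1-D arrays."""
    x = x - x.mean()
    y = y - y.mean()
    return float(x @ y / np.sqrt((x @ x) * (y @ y)))


def partial_corr(x, y, z):
    """Correlation of x and y after regressing z (plus an intercept) out of both."""
    design = np.column_stack([np.ones_like(z), z])
    res_x = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    res_y = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    return pearson(res_x, res_y)


def corr_minus_partial(conn, cfmt, ccmt):
    """Fisher z of r(conn, CFMT) minus Fisher z of r(conn, CFMT | CCMT)."""
    return np.arctanh(pearson(conn, cfmt)) - np.arctanh(partial_corr(conn, cfmt, ccmt))


def permutation_threshold(conn, cfmt, ccmt, n_iter=10_000, pct=95.0, seed=0):
    """conn: (n_scans, n_pairs, n_subjects) ROI-pair connectivity values.

    For every pair and iteration, subject labels of the connectivity values are
    shuffled (same shuffle in every scan, an assumption), the difference is
    computed per scan and averaged across scans; the threshold is the pct-th
    percentile over all iterations and all pairs.
    """
    rng = np.random.default_rng(seed)
    n_scans, n_pairs, n_subj = conn.shape
    null = np.empty((n_iter, n_pairs))
    for it in range(n_iter):
        for p in range(n_pairs):
            perm = rng.permutation(n_subj)
            null[it, p] = np.mean([corr_minus_partial(conn[s, p, perm], cfmt, ccmt)
                                   for s in range(n_scans)])
    return float(np.percentile(null, pct))
```

An observed, scan-averaged difference for a given ROI pair would then be compared against the returned threshold.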

Whole-brain analysis cluster size correction.

In addition to these individually localized ROIs, we obtained 23 group-defined ROIs by using each of the eight individually defined ROIs as a seed. For each participant, we first calculated the correlation of each voxel with the seed and transformed it to a z-score using Fisher's transform, yielding for each voxel a vector of correlation scores across participants. We next correlated this vector with the CFMT or CCMT scores, as appropriate, yielding a single predictiveness value per voxel. We did this for each rest scan separately and then combined the resulting maps for each seed across both rest scans by requiring that voxels be significantly correlated with behavior in both rest scans, at either p < 0.05 or p < 0.01, to be counted, resulting in eight maps, one per seed. We then ran a permutation-based cluster size correction for multiple comparisons for all eight maps together: we permuted the CFMT scores 10,000 times, recomputed the correlations for each voxel and each seed, and again required that voxels be significantly correlated in both rest scans at either p < 0.05 or p < 0.01 to be counted (these two tests are not identical and have different cluster size thresholds; a cluster can be smaller but more strongly correlated and still be significant, or larger and less strongly correlated). We then took the 95th percentile of the largest cluster size across all maps as our minimum cluster size for p < 0.05 and p < 0.01, resulting in eight fully corrected individual seed-based predictiveness maps. We next calculated a conjunction map, in which the value of each voxel was the number of predictiveness maps in which it was significant (ranging from 0 to 8). To be considered significant, a voxel had to be predictive in at least three separate seed-based predictiveness maps, that is, to have a value of 3 or higher in the conjunction map.
We therefore thresholded the conjunction map at 3 and identified the centers of mass of the surviving clusters. ROIs were defined as 6 mm spheres around these centers of mass, resulting in 23 ROIs for the CFMT and none for the CCMT. The eight individually localized visual ROIs, in addition to the 23 group-defined ROIs, were used as targets in subsequent analyses. Minimum cluster size for the global connectivity analysis was determined in the same way. There were no significant clusters for the CCMT in any of the individual seed-based predictiveness maps, not only in the conjunction map.
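The per-seed predictiveness map and the both-scans requirement can be sketched in NumPy as below. This is a simplified illustration, not the authors' code: voxel data are assumed to be already preprocessed and flattened to (subjects, voxels, time points); significance is tested against a user-supplied critical |r| (for N = 50 and two-tailed p < 0.05 this is approximately 0.279, and for p < 0.01 approximately 0.361); using |r| rather than only positive r is an assumption; cluster-size correction is omitted; and the function names are hypothetical.

```python
import numpy as np


def zscore_time(ts):
    """z-score each time series along the last (time) axis (population SD)."""
    ts = ts - ts.mean(axis=-1, keepdims=True)
    return ts / ts.std(axis=-1, keepdims=True)


def seed_connectivity(voxel_ts, seed_ts):
    """Fisher-z correlation of each voxel (V, T) with one seed time course (T,)."""
    r = zscore_time(voxel_ts) @ zscore_time(seed_ts) / voxel_ts.shape[-1]
    return np.arctanh(np.clip(r, -0.999999, 0.999999))   # clip avoids infinities


def predictiveness(conn, behavior):
    """Across-subject Pearson r between connectivity (S, V) and behavior (S,)."""
    c = conn - conn.mean(axis=0)
    b = behavior - behavior.mean()
    return (b @ c) / np.sqrt((b @ b) * (c ** 2).sum(axis=0))


def seed_predictive_mask(scans, behavior, r_crit):
    """scans: one (voxel_ts (S, V, T), seed_ts (S, T)) tuple per rest scan.

    Returns a boolean (V,) mask of voxels whose predictiveness exceeds r_crit
    in absolute value in every scan (here: both rest scans).
    """
    keep = None
    for voxel_ts, seed_ts in scans:
        conn = np.stack([seed_connectivity(v, s) for v, s in zip(voxel_ts, seed_ts)])
        sig = np.abs(predictiveness(conn, behavior)) > r_crit
        keep = sig if keep is None else keep & sig
    return keep
```

Requiring significance in both scans acts as an internal replication: a voxel passes only if the same connectivity-behavior relationship appears in two independent rest runs.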

Results

Behavioral tests and ROI localization

Before the fMRI scan, participants came in for a behavioral testing session, which included administration of the CFMT (Duchaine and Nakayama, 2006a), a widely used measure of face memory ability, and the CCMT (Dennett et al., 2012), in counterbalanced order, outside the MRI scanner. Performance on the two tests was significantly correlated across our 50 participants (r = 0.45, p < 0.0005, two-tailed t test), and the tests were well matched for difficulty, with no significant difference between their mean scores (mean score = 78.2 for the CFMT and 75.1 for the CCMT; two-tailed paired t test, p = 0.19). Scores on the CFMT ranged from 45% to 100% correct, with an SD of 11. CCMT scores ranged between 50 and 98, with an SD of 11.6. These tests were chosen because they have been widely used in the literature and are well normed and validated (Wilmer et al., 2010). Following Wilmer et al. (2010), we calculated the internal reliability of the CFMT and the CCMT in our sample using Cronbach's α and found very high reliability for both tests (Cronbach's α = 0.86 and 0.85, respectively).
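For reference, Cronbach's α for k items is α = k/(k − 1) · (1 − Σ item variances / variance of the total score). A minimal NumPy sketch is below. It assumes item-level data (one column per trial, scored 0/1) are available; that unit of analysis is an assumption, not something stated here, and `cronbach_alpha` is a hypothetical helper name.

```python
import numpy as np


def cronbach_alpha(items):
    """Cronbach's alpha for an (n_subjects, k_items) score matrix.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score),
    using sample variances (ddof=1) throughout.
    """
    items = np.asarray(items, dtype=float)
    k = items.shape[1]
    item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1.0 - item_var / total_var)
```

Identical items give α = 1, while items whose covariances cancel give α = 0, which makes the two quick checks below easy to reason about.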

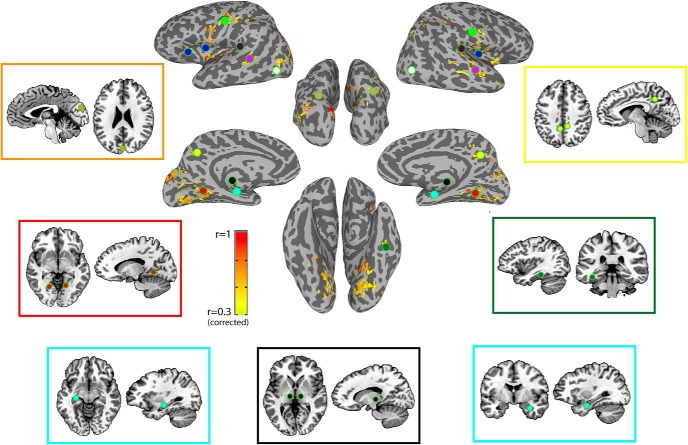

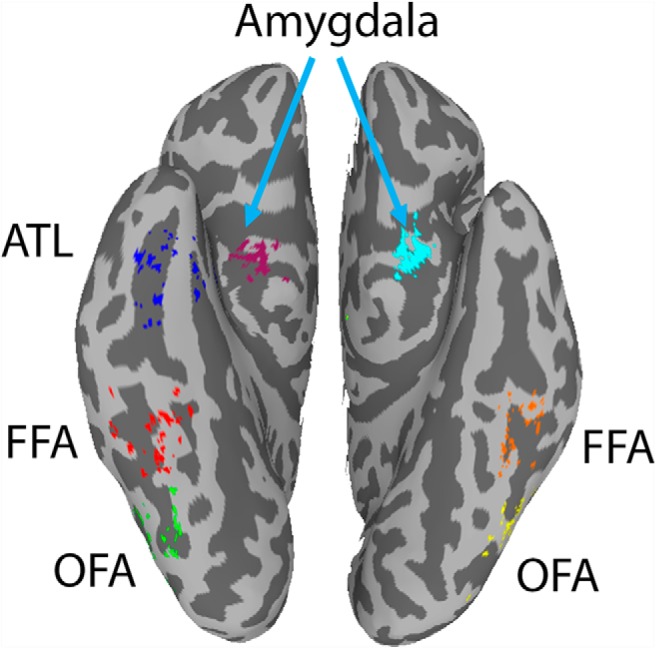

Participants then underwent an fMRI scan, which included two resting state runs and two face/scene localizer runs, each ∼9 min long (see Materials and Methods for details). We began by using the face/scene localizer runs to identify the ventral face patches (bilateral OFA, bilateral FFA, and right ATL), as well as the bilateral amygdala and right pSTS, in each individual participant (N = 50). The left ATL and pSTS were difficult to localize in some participants (consistent with the known right-hemisphere bias of the face network, and specifically of the ATL; Jonas et al., 2015) and were therefore excluded. These ROIs were defined in each participant as 6-mm-radius spheres around the center of mass of each cluster. Figure 1 shows the location of these centers of mass for the seven ventral regions in each of the 50 participants, and mean MNI coordinates for all face regions across participants are listed in Table 1.

Figure 1.

Individual centers of the seven ventral face ROIs. Colored vertices represent the location of the center of mass of each of the face patches, defined through the localizer for each of the 50 participants. All eight face patches were individually defined for each participant in the volume, and are projected here on the surface. ATL, blue; FFA, right: red, left: orange; OFA, right: green, left: yellow. Right amygdala shown in purple, left amygdala in cyan. pSTS on the lateral surface not shown. For all ROI analyses described later, ROIs were individually defined for each participant by drawing 6 mm spheres in the volume around these individually localized centers (for group localizer data, see Fig. 6).

Table 1.

Mean MNI coordinates for face patches

| Region | x | y | z |
|---|---|---|---|
| Right ATL | 38 ± 4 | −11 ± 6 | −31 ± 5 |
| Right FFA | 41 ± 4 | −50 ± 5 | −19 ± 3 |
| Left FFA | −42 ± 3 | −50 ± 5 | −19 ± 2 |
| Right OFA | 41 ± 4 | −75 ± 5 | −12 ± 4 |
| Left OFA | −41 ± 4 | −77 ± 5 | −11 ± 3 |
| Right amygdala | 19 ± 2 | −3 ± 2 | −16 ± 2 |
| Left amygdala | −18 ± 2 | −3 ± 2 | −16 ± 2 |
| Right pSTS | 53 ± 5 | −49 ± 6 | 9 ± 4 |

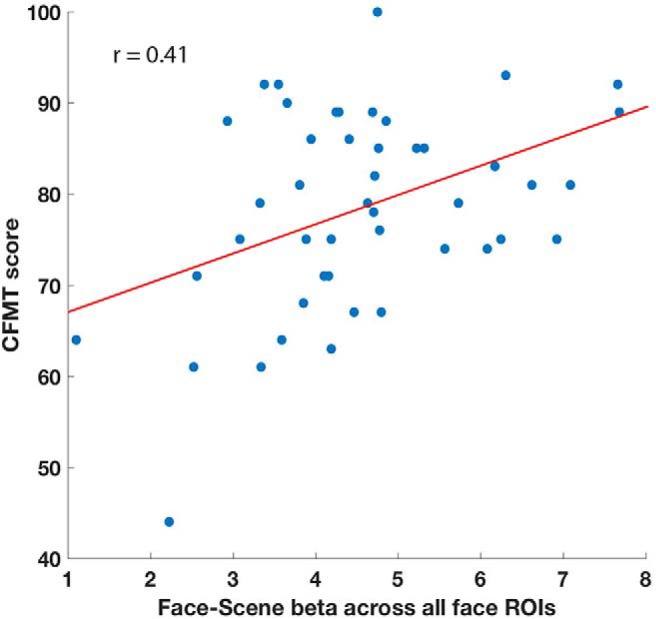

Face selectivity

Next, we sought to reproduce previous findings linking face selectivity within the face regions to performance on face memory tasks (Golarai et al., 2007; Furl et al., 2011; Elbich and Scherf, 2017; Jiahui et al., 2018). We first defined selectivity as the β value of the faces > scenes contrast in the localizer runs for each participant, averaged across the voxels in each of our individually defined face patches. We then correlated this selectivity value, across participants, with performance on the CFMT (faces) and the CCMT (cars). Selectivity of the right FFA and right OFA was significantly correlated with performance on the CFMT (FFA: r = 0.34, p = 0.024; OFA: r = 0.32, p = 0.03), but not with performance on the CCMT (FFA: r = 0.15, p = 0.3; OFA: r = −0.02, p = 0.88). The other face ROIs were not significantly correlated with either face memory (CFMT, right ATL: r = 0.18, p = 0.24; right amygdala: r = 0.12, p = 0.41; right pSTS: r = 0.17, p = 0.27; left FFA: r = 0.02, p = 0.87; left OFA: r = 0.11, p = 0.46; left amygdala: r = 0.11, p = 0.48) or car memory (CCMT, right ATL: r = 0.14, p = 0.36; right amygdala: r = 0.05, p = 0.74; right pSTS: r = 0.2, p = 0.21; left FFA: r = 0.12, p = 0.44; left OFA: r = 0.16, p = 0.3; left amygdala: r = 0.14, p = 0.35). Although, when tested individually, only selectivity in the right FFA and right OFA significantly predicted face memory performance, selectivity averaged across all eight face patches was also significantly predictive of face memory ability (CFMT: r = 0.41, p = 0.004; Fig. 2). The average selectivity across all face patches did not predict car memory performance (CCMT: r = 0.19, p = 0.19). To directly compare the correlations of selectivity with the CFMT and the CCMT, and to determine how much of the correlation between selectivity and CFMT performance is explained by CCMT performance, we also calculated the partial correlation of selectivity with CFMT score, controlling for CCMT score.
There was no significant change in any of the correlations. The partial correlations of right FFA and right OFA selectivity with the CFMT remained significant (r = 0.31, p = 0.036 and r = 0.35, p = 0.017, respectively; no other regions were significant). The partial correlation of the average selectivity with the CFMT likewise did not significantly change (r = 0.37, p = 0.013).
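As a rough arithmetic check on the partial correlation reported for the average selectivity, the standard first-order partial correlation formula can be applied to the published coefficients, with x = average selectivity, y = CFMT score, and z = CCMT score. This is only approximate: the CFMT–CCMT correlation (r = 0.45) was computed across all 50 participants, whereas the selectivity analysis used N = 46, and all inputs are rounded.

$$
r_{xy\cdot z} = \frac{r_{xy} - r_{xz}\,r_{yz}}{\sqrt{\left(1 - r_{xz}^{2}\right)\left(1 - r_{yz}^{2}\right)}}
\approx \frac{0.41 - 0.19 \times 0.45}{\sqrt{\left(1 - 0.19^{2}\right)\left(1 - 0.45^{2}\right)}}
= \frac{0.3245}{\sqrt{0.9639 \times 0.7975}}
\approx \frac{0.3245}{0.8768}
\approx 0.37
$$

This agrees with the reported partial correlation (r = 0.37): because average selectivity is only weakly related to car memory, removing the shared CFMT–CCMT variance barely changes the face memory correlation.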

Figure 2.

Face selectivity predicts CFMT score. Face–scene β during the two localizer runs, averaged across all voxels in the eight individually defined face patches (bilateral OFA, bilateral FFA, bilateral amygdala, right pSTS, and right ATL), is shown on the x-axis for each participant, with CFMT scores on the y-axis. r = 0.41, p = 0.004, N = 46; four participants were excluded because they had been given a different version of the localizer task (see Materials and Methods).

Resting state correlations within the face regions fail to predict face memory

Having reproduced the findings relating to the correlation between selectivity and face memory abilities within the face network in the localizer data, we turned to the resting state data. We began by examining the correlations among the face patches themselves during rest. As expected, these correlations were strong, with the strongest correlations (averaged across participants and across both rest scans) occurring between right and left FFA (r = 0.75), right and left OFA (r = 0.74), and right FFA to right OFA (r = 0.73). The average pairwise correlation across all the eight face patches (averaged across participants and rest scans) was 0.49.

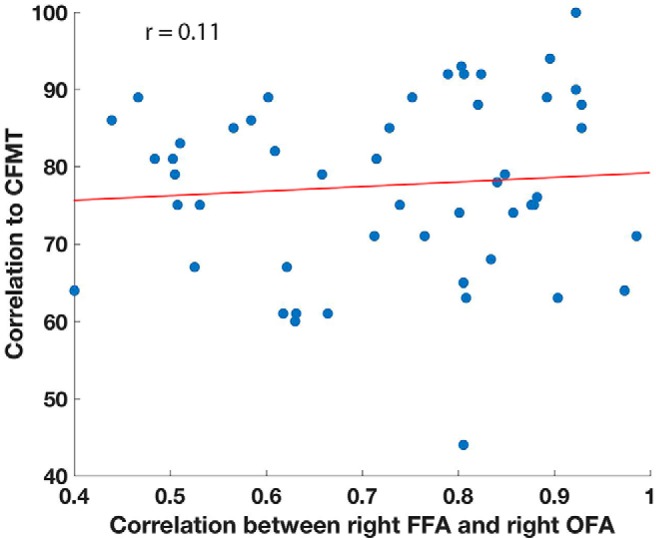

We then sought to determine whether any of the resting state correlations between the ROIs would predict performance on the CFMT, as the β weights had done. To this end, for each of the 28 pairs of face regions, as well as for the average of all pairwise correlations, we correlated resting state connectivity across participants with memory test performance. None of these correlations significantly predicted face memory ability; values ranged between r = 0.05 and r = 0.23 (for instance, the correlation between right FFA–right OFA connectivity and CFMT score was r = 0.11; Fig. 3).
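The pairwise analysis can be sketched as follows: compute each participant's ROI-by-ROI correlation matrix, extract the 28 unique pairs among eight ROIs, and correlate each pair's connectivity with CFMT scores across participants. This NumPy sketch is illustrative only; it assumes ROI time series have already been averaged within each sphere, handles a single rest scan, and uses raw r values (whether connectivity was Fisher-transformed for this particular analysis is not stated). Function names are hypothetical.

```python
from itertools import combinations

import numpy as np


def pair_connectivity(roi_ts):
    """roi_ts: (n_subjects, n_rois, T). Returns (n_subjects, n_pairs) Pearson r
    for every unordered ROI pair, and the list of (i, j) pairs."""
    n_subj, n_rois, _ = roi_ts.shape
    pairs = list(combinations(range(n_rois), 2))
    rows, cols = zip(*pairs)
    conn = np.stack([np.corrcoef(roi_ts[s])[rows, cols] for s in range(n_subj)])
    return conn, pairs


def pair_predictiveness(conn, score):
    """Across-subject Pearson r between each column of conn and score."""
    c = conn - conn.mean(axis=0)
    b = score - score.mean()
    return (b @ c) / np.sqrt((b @ b) * (c ** 2).sum(axis=0))
```

The average-connectivity variant corresponds to `pair_predictiveness(conn.mean(axis=1, keepdims=True), score)`.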

Figure 3.

FFA–OFA correlation to memory. Correlation between right FFA and right OFA for each participant, plotted against their score on the CFMT. Note the wide range of correlations between right FFA and right OFA, despite the high mean correlation of these two regions (r = 0.72).

This discrepancy between the predictive value of the selectivity of the face patches during the localizer and the predictive value of the resting state correlations between them suggests that these measures do not capture the same intersubject variance. To verify this, we tested whether the resting state correlation between each pair of face patches was correlated to the selectivity of these same face patches during the localizer (measured by the face-scene betas of the pair being tested) across participants. We found no significant correlation between the two measures for any of the pairs (correlations ranged from r = −0.1 to r = 0.16). As the selectivity of the face patches became more predictive of CFMT score when averaging across all face patches, we also examined the correlation between the average resting state connectivity among the face patches (measured by the average pairwise correlation between them), and the average selectivity of the face patches (measured by the average face-scene β across all the face patches) and found those to be uncorrelated as well (r = −0.05, p = 0.85). Thus, although the time-series measured during rest in the individually localized face patches were highly intercorrelated, connectivity between these regions was uncorrelated to the selectivity measured by the localizer scans, indicating that the selectivity of the face patches is not driven by their interconnectedness.

Extending the network

The discrepancy between the selectivity of the face patches and the correlations between them, both in terms of their ability to predict face memory performance and the lack of correlation between the two measures, reinforced the need to expand the search for meaningful interactions in relation to face memory abilities outside the face network. To this end, we took each one of our face ROIs as a seed and calculated the correlation of the time course of that seed during rest with every other voxel in the brain, for each participant. We then calculated for each voxel, across participants, the correlation of that connectivity measure with the CFMT score. This gave us, for each voxel, a measure of how well its correlation with the face seed region predicted behavior, as measured by the CFMT score. This measure is hereafter referred to as the predictiveness measure. We repeated this analysis for each of the eight face ROIs, and separately for each rest scan. To validate the findings, we restricted the results for each seed to voxels for which the predictiveness measure was significant in both rest scans, corrected for multiple comparisons using a strict cluster size permutation test (see Materials and Methods), yielding a final set of eight seed-based predictiveness maps containing the significant clusters. A similar analysis was conducted with car memory scores, as measured by the CCMT, but no significant clusters were found.
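The cluster-size correction can be sketched with `scipy.ndimage.label`. Several details are assumptions, not statements from the paper: clusters are defined by face connectivity (6 neighbours, SciPy's default structure in 3-D); "the largest cluster at the 95th percentile" is read as the 95th percentile of the per-permutation maximum cluster size across all seed maps; and `make_maps` is a hypothetical callable that recomputes the eight both-scans-significant seed maps for a permuted score vector.

```python
import numpy as np
from scipy import ndimage


def max_cluster_size(mask):
    """Size (in voxels) of the largest 6-connected cluster in a 3-D boolean mask."""
    labels, n = ndimage.label(mask)          # default structure: face connectivity
    return int(np.bincount(labels.ravel())[1:].max()) if n else 0


def cluster_size_threshold(make_maps, behavior, n_iter=10_000, pct=95.0, seed=0):
    """Null distribution of the largest cluster across all seed maps.

    make_maps(permuted_behavior) must return an iterable of 3-D boolean maps.
    """
    rng = np.random.default_rng(seed)
    maxima = np.empty(n_iter)
    for it in range(n_iter):
        maps = make_maps(rng.permutation(behavior))
        maxima[it] = max((max_cluster_size(m) for m in maps), default=0)
    return float(np.percentile(maxima, pct))


def keep_large_clusters(mask, min_size):
    """Zero out clusters smaller than min_size voxels."""
    labels, _ = ndimage.label(mask)
    big = np.bincount(labels.ravel()) >= min_size
    big[0] = False                           # label 0 is background
    return big[labels]
```

Taking the maximum over all eight maps in each permutation is what makes the threshold control the family-wise error across seeds jointly, rather than map by map; separate thresholds would be computed for the p < 0.05 and p < 0.01 voxelwise criteria.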

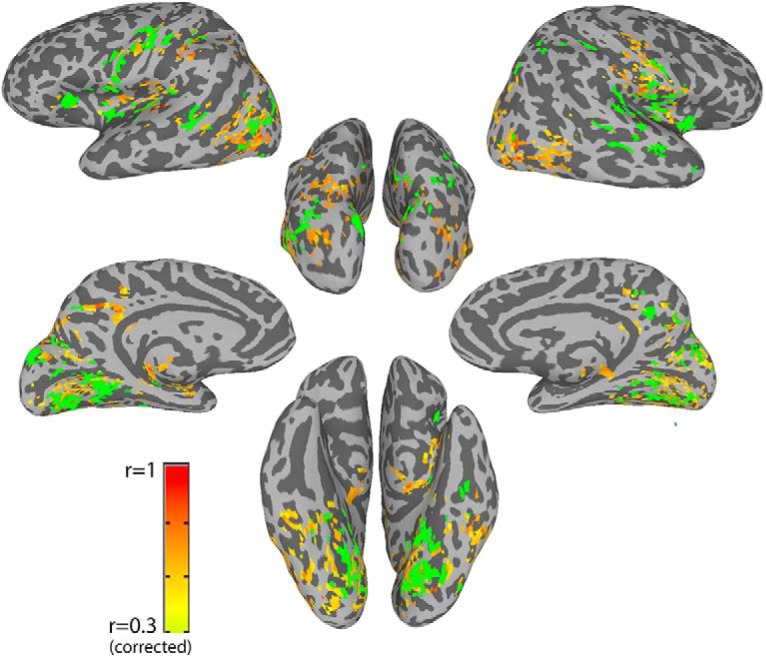

To search for consistency across the eight seed-based predictiveness maps, we constructed a conjunction map in which the value of each voxel is the number of maps in which that voxel was significant (after all corrections), so that each voxel can take a value between 0 and 8. To identify the regions whose connectivity to the face patches was significantly and consistently correlated with face memory performance, we required that voxels be significantly predictive of CFMT scores in at least three of the seed-based predictiveness maps (by thresholding the conjunction map at 3 seeds). These results are displayed in Figure 4. Significant clusters were found in medial parietal and medial temporal regions, in somatosensory regions, along the insula, in auditory cortex in the superior temporal gyrus (STG), and in the posterior superior parietal lobule (pSPL). This analysis also picked out a region in the lateral occipital cortex (LOC) and another in the mid-superior temporal sulcus (mid-STS). For later statistical analyses, we next identified the peaks of these significantly predictive clusters and defined them as new ROIs, with 6-mm-radius spheres (see Materials and Methods for details). Twenty-three such ROIs were identified. The locations of these ROIs are also shown in Figure 4, overlaid on the conjunction map for the eight individual seeds, and their MNI coordinates are listed in Table 2.
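The conjunction step and the extraction of cluster centers can be sketched in the same way. This is an illustration under assumptions: the eight inputs are the already corrected seed maps as boolean arrays, clusters are 6-connected, and centers are unweighted centers of mass in voxel indices (which would then be converted to coordinates and used as 6 mm sphere centers); `conjunction_centers` is a hypothetical name.

```python
import numpy as np
from scipy import ndimage


def conjunction_centers(seed_maps, min_count=3):
    """seed_maps: (n_seeds, X, Y, Z) boolean maps, one per face seed.

    Returns the conjunction map (number of seeds in which each voxel is
    significant, 0..n_seeds) and the center of mass, in voxel indices, of each
    cluster with count >= min_count.
    """
    counts = np.asarray(seed_maps, dtype=int).sum(axis=0)
    surviving = counts >= min_count
    labels, n = ndimage.label(surviving)
    if n == 0:
        return counts, []
    centers = ndimage.center_of_mass(surviving.astype(float), labels, list(range(1, n + 1)))
    return counts, centers
```

Thresholding at 3 rather than 1 trades sensitivity for consistency: a voxel must be linked to face memory through its connectivity with several independent face seeds, not just one.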

Figure 4.

Significantly predictive voxels and group-defined ROI locations. Conjunction map of the eight predictiveness measure seed maps, which shows the voxels that were significantly predictive of CFMT scores in at least three seed maps. Colors indicate number of seed maps for which this voxel was significantly predictive, across both rest scans. Overlaid are the locations of all 23 group-defined ROIs represented schematically by circles. Light green, somatosensory; blue, insula and anterior insula; dark purple, STG/auditory cortex; magenta, mid-STS; brown, pSPL; yellow, medial parietal; orange, cuneus; white, LOC; black, thalamus; cyan, hippocampus; red, parahippocampus; dark green, parahippocampus2. Insets, Locations of the ROIs in the volume, including separately for left and right hippocampus.

Table 2.

MNI coordinates for the 23 group-defined ROIs

| Region | x | y | z |
|---|---|---|---|
| Right LOC | 40 | −76 | 8 |
| Left LOC | −44 | −67 | 0 |
| Right pSPL | 32 | −68 | 40 |
| Left pSPL | −22 | −76 | 38 |
| Cuneus | 0 | −84 | 32 |
| Right parahippocampus | 20 | −56 | −2 |
| Left parahippocampus | −16 | −56 | −4 |
| Left parahippocampus2 | −40 | −36 | −16 |
| Left hippocampus | −32 | −18 | −10 |
| Right anterior hippocampus | 32 | −4 | −26 |
| Right medial parietal | 12 | −44 | 40 |
| Left medial parietal | −6 | −50 | 42 |
| Right insula | 42 | −8 | 8 |
| Left anterior insula | −30 | 28 | 0 |
| Left insula | −36 | 4 | 12 |
| Right mid-STS | 56 | −16 | −4 |
| Left mid-STS | −52 | −36 | 4 |
| Right STG | 44 | −32 | 16 |
| Left STG | −42 | −32 | 14 |
| Right somatosensory | 56 | −8 | 28 |
| Left somatosensory | −52 | −8 | 36 |
| Right thalamus | 16 | −22 | 0 |
| Left thalamus | −8 | −20 | 0 |

This analysis revealed that it is the connectivity between the face network and other visual, memory, and social networks that predicts face memory. To further explore the whole-brain underpinnings of face memory, we also took a completely data-driven approach, independent of the face ROIs defined by the localizer scans. For each participant and each voxel, we calculated the global connectivity of that voxel (i.e., its average correlation with all other voxels) and then correlated global connectivity with CFMT scores across participants, yielding a measure of global predictiveness. Figure 5 shows the corrected map of voxels in which global predictiveness was significant in both rest scans, each tested separately, with the conjunction map from the face patch predictiveness analysis overlaid. Importantly, as illustrated, the peaks of the two analyses overlap almost entirely. The global predictiveness analysis also picks out the face ROIs. This is because this map is driven by the average connectivity of each voxel with all other voxels in the brain, not by the pairwise strength of connectivity between specific nodes, such as between the different face patches. We performed a similar analysis of the correlation between global connectivity and CCMT scores but found no significant clusters.
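Global connectivity can be computed without forming the full voxel-by-voxel correlation matrix. With each time series z-scored (population SD), the correlation matrix is Z Zᵀ/T, so its row sums equal Z (Zᵀ1)/T; subtracting the self-correlation of 1 and dividing by V − 1 gives the mean correlation with all other voxels. The NumPy sketch below illustrates this identity; it averages raw r values, as the text describes, and `global_connectivity` is a hypothetical name. Global predictiveness would then be the across-participant correlation of these values with CFMT scores.

```python
import numpy as np


def global_connectivity(ts):
    """ts: (V, T) time series for one participant and scan.

    Returns, for each voxel, its mean Pearson r with all other voxels,
    using O(V*T) memory instead of a V x V correlation matrix.
    """
    n_vox, n_time = ts.shape
    z = ts - ts.mean(axis=1, keepdims=True)
    z = z / z.std(axis=1, keepdims=True)          # population SD, so r_ij = mean(z_i * z_j)
    row_sums = z @ z.sum(axis=0) / n_time         # sum over j of r_ij, including r_ii = 1
    return (row_sums - 1.0) / (n_vox - 1)
```

For a whole-brain grid with tens of thousands of voxels, this avoids storing a matrix with billions of entries while giving the same result as averaging rows of the full correlation matrix.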

Figure 5.

Global connectivity. Map shows voxels whose global connectivity, i.e., average connectivity with all the other voxels, during rest is significantly correlated to performance on the CFMT, after corrections for multiple comparisons (orange/red voxels). Overlaid in green is the conjunction map from the previous analysis which was displayed in Figure 4, indicating voxels whose connectivity to at least three of the face ROIs was significantly predictive of performance on the CFMT. Note the high degree of overlap between the two analyses.

Overlap with the face network

To further characterize the regions identified in the above analysis, we tested the degree of overlap between face-selective cortex and the regions whose correlation with the face ROIs predicted performance on the CFMT. We overlaid the conjunction map from the seed-based analysis on the group-level GLM of the Faces > Scenes contrast (Fig. 6). Remarkably, the overlap with face-selective regions was minimal, regardless of the threshold used in the GLM analysis. Specifically, only 31% of the predictive voxels had Face–Scene β values greater than zero, and only 6.3% were significantly more selective for faces than scenes, even at the very liberal threshold of q = 0.1. At a threshold of q = 0.05 (Fig. 6), only 5% of the predictive voxels were more selective for faces. Thus, fully 95% of the face-memory-predictive voxels fall outside the face-selective regions defined by the face localizer data.
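The overlap percentages above reduce to counts over a voxel mask. The sketch below assumes, hypothetically, that the predictive conjunction map is available as a boolean voxel mask and that the group Faces > Scenes contrast provides a β value and a two-sided p-value per voxel. It uses the standard Benjamini-Hochberg step-up procedure for the FDR threshold q, which may differ in detail from the correction applied in the paper; all names are illustrative.

```python
import numpy as np


def bh_fdr_mask(pvals, q):
    """Benjamini-Hochberg step-up procedure: True where a test survives FDR q."""
    p = np.asarray(pvals, dtype=float)
    m = p.size
    order = np.argsort(p)
    # Compare the i-th smallest p-value with q * i / m
    below = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if below.any():
        # Reject every hypothesis up to the largest rank that passes
        k = np.nonzero(below)[0].max()
        mask[order[: k + 1]] = True
    return mask


def overlap_fractions(predictive, beta, pvals, q):
    """Share of predictive voxels with Face-Scene beta > 0, and share that are
    significantly face-selective (FDR q across all voxels, beta > 0).

    predictive: boolean mask of face-memory-predictive voxels (hypothetical input).
    beta, pvals: per-voxel group Faces > Scenes beta and two-sided p-value.
    """
    predictive = np.asarray(predictive, dtype=bool)
    beta = np.asarray(beta, dtype=float)
    face_selective = bh_fdr_mask(pvals, q) & (beta > 0)
    n = predictive.sum()
    return (beta[predictive] > 0).sum() / n, face_selective[predictive].sum() / n
```

Calling `overlap_fractions` once with q = 0.1 and once with q = 0.05 corresponds to the two thresholds discussed in the text; the first returned value does not depend on q.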

Figure 6.

Overlap with the face network. Map shows the group-level Faces > Scenes contrast, with face-selective voxels shown in red and scene-selective voxels in blue. Map is thresholded at an FDR-corrected value of q < 0.05. Overlaid in green is the conjunction map from the previous analysis, shown in Figure 4, using the face ROIs as seeds. Note the lack of overlap between these face-memory-predictive voxels and the face network.

Examining the relationship among all nodes

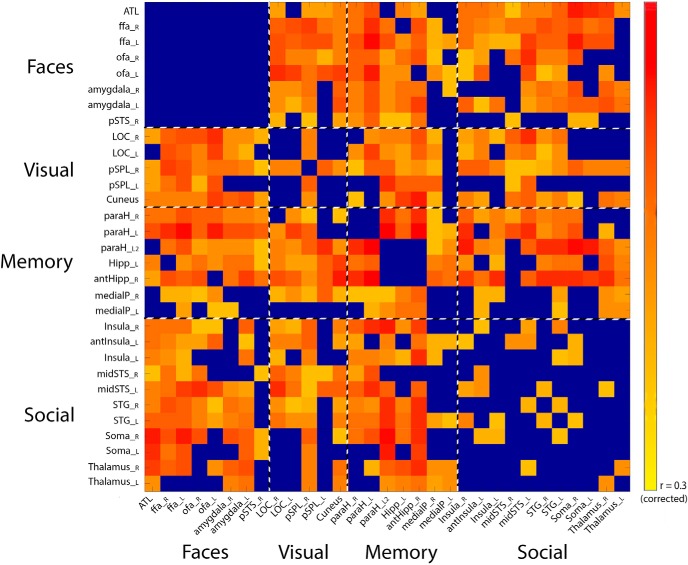

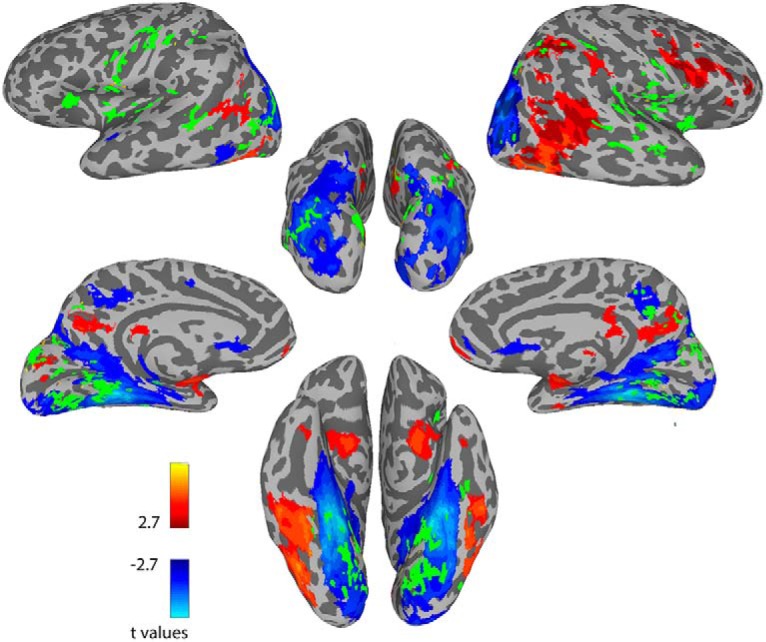

To better understand the network structure of these newly identified regions predictive of face memory, we took the peaks of the 23 clusters identified in the face connectivity analysis described above and defined 23 ROIs as 6 mm spheres around those peaks. We then calculated the full correlation matrix among all 31 ROIs (the 8 face ROIs defined from the localizer plus these 23 additional ROIs), averaged across all participants and both rest scans. Predictably, correlations between homologous regions were the highest, as were correlations between FFA and OFA, as noted above, and between insula and somatosensory cortex. To see which connections were most predictive of face memory ability, as opposed to simply which areas were most strongly intercorrelated, we again performed the predictiveness analysis, calculating across participants the correlation between the connectivity of each ROI pair and CFMT scores. This was performed for each rest scan separately, and we once again constrained the results by requiring that this predictiveness be significant in both rest scans.
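The ROI-pair predictiveness analysis and the two-scan constraint can be sketched as follows. This is a hypothetical illustration, not the authors' code: it assumes per-participant ROI-by-ROI connectivity matrices stacked into an array of shape (subjects, ROIs, ROIs) for each rest scan, and a critical |r| value supplied from elsewhere (for example, a permutation test). Requiring the same sign of r in both scans is an added assumption.

```python
import numpy as np


def roi_connectivity(ts):
    """ts: (n_timepoints, n_rois) mean ROI time series -> (n_rois, n_rois) Pearson r."""
    return np.corrcoef(ts, rowvar=False)


def pair_predictiveness(conn, scores):
    """Across-participant Pearson r between each ROI pair's connectivity and behavior.

    conn: (n_subjects, n_rois, n_rois) stack of per-subject connectivity matrices.
    scores: (n_subjects,) behavioral scores (e.g. CFMT).
    The diagonal is undefined (self-correlation is always 1) and set to NaN.
    """
    n_s = conn.shape[0]
    with np.errstate(invalid="ignore", divide="ignore"):
        c = (conn - conn.mean(axis=0)) / conn.std(axis=0)
    s = (scores - scores.mean()) / scores.std()
    # Sum over subjects of z-scored connectivity times z-scored score, per (i, j)
    r = np.einsum("sij,s->ij", c, s) / n_s
    np.fill_diagonal(r, np.nan)
    return r


def conjunction(r_scan1, r_scan2, r_crit):
    """True where |r| >= r_crit in both rest scans with the same sign (assumption)."""
    with np.errstate(invalid="ignore"):
        both = (np.abs(r_scan1) >= r_crit) & (np.abs(r_scan2) >= r_crit)
    return both & (np.sign(r_scan1) == np.sign(r_scan2))
```

Running `pair_predictiveness` separately on each rest scan and combining the two matrices with `conjunction` mirrors the constraint that predictiveness be significant in both scans.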

The resulting predictiveness matrix, shown in Figure 7, shows all ROI pairs whose correlation was significantly predictive of performance on the CFMT in each rest scan. Note that this matrix includes both the face ROIs, which were individually and independently defined from the localizer, and the group-defined ROIs from the rest scans, which were chosen for the correlation between their connectivity to the face ROIs and CFMT performance. The purpose of this analysis is not to directly compare the localizer-defined and rest-defined ROIs, but rather (1) to show the direct ROI analysis of the connectivity of each of the individual face ROIs with the 23 new ROIs in relation to CFMT performance (top rows, first columns), and (2) to explore all the other possible relationships within the group rest-defined ROIs (bottom right), because these had previously been considered only in relation to the face ROIs, and not to each other.

Figure 7.

ROI pair correlations with behavior. Correlation between the connectivity of each ROI pair (consisting of the 8 individually localized ROIs from the localizer and the 23 group-defined ROIs from the seed analysis) and CFMT scores. Values indicate how predictive the correlation between each ROI pair is of performance on the CFMT. Blue denotes a nonsignificant correlation (determined through a permutation test, which was used to correct for multiple comparisons). Note the lack of significant predictive value of the correlation between the different face ROIs (top left).
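The caption states that significance was determined through a permutation test correcting for multiple comparisons. One common way to do this, shown here only as a hypothetical sketch, is the max-statistic approach: shuffle behavioral scores across participants, recompute predictiveness for every unique ROI pair, record the largest absolute value, and use the (1 − α) quantile of those maxima as a family-wise threshold. The exact scheme used in the paper is described in Materials and Methods and may differ; `pair_r` and `max_stat_threshold` are illustrative names.

```python
import numpy as np


def pair_r(conn_pairs, scores):
    """Pearson r across subjects for each column of conn_pairs (n_subjects, n_pairs)."""
    c = (conn_pairs - conn_pairs.mean(axis=0)) / conn_pairs.std(axis=0)
    s = (scores - scores.mean()) / scores.std()
    return c.T @ s / len(scores)


def max_stat_threshold(conn, scores, n_perm=5000, alpha=0.05, seed=0):
    """Family-wise |r| threshold from a max-statistic permutation test.

    conn: (n_subjects, n_rois, n_rois) connectivity; scores: (n_subjects,).
    """
    iu = np.triu_indices(conn.shape[1], k=1)
    flat = conn[:, iu[0], iu[1]]  # unique off-diagonal pairs: (n_subjects, n_pairs)
    rng = np.random.default_rng(seed)
    max_abs = np.empty(n_perm)
    for k in range(n_perm):
        # Shuffling scores breaks the subject-to-behavior pairing under the null
        max_abs[k] = np.abs(pair_r(flat, rng.permutation(scores))).max()
    # Pairs whose observed |r| exceeds this value are significant at FWE alpha
    return np.quantile(max_abs, 1 - alpha)
```

Because each permutation keeps the full set of ROI pairs together, the threshold automatically accounts for correlations among pairs, which a Bonferroni correction would ignore.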

As this figure shows, and as discussed above, none of the correlations between face ROI pairs were significantly predictive of face memory ability. Apart from the predictive connections between the face patches and the group rest-defined ROIs, which are to be expected given how these ROIs were defined, the most predictive connections were within the medial temporal lobe, and between STG/somatosensory cortex and the medial temporal lobe. To ensure that the predictive value of an ROI pair (or lack thereof) was not driven by the between-participant variance in the correlation between the two nodes, we calculated, for each pair of ROIs (across all 31 ROIs), the variance of their correlation across participants, and tested whether this variance was correlated with the predictive value of the ROI pairs. We found no such relationship (r = 0.0015, p = 0.97, permutation test).
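The variance control described above can be sketched as a correlation, across ROI pairs, between each pair's between-subject variance in connectivity and its predictiveness. The permutation scheme here (shuffling behavioral scores across participants and recomputing predictiveness) is an assumption for illustration; the text does not specify how the permutation test was constructed, and the function name is hypothetical.

```python
import numpy as np


def variance_vs_predictiveness(conn, scores, n_perm=2000, seed=0):
    """Correlate each ROI pair's between-subject variance with its predictiveness.

    conn: (n_subjects, n_rois, n_rois); scores: (n_subjects,).
    Returns (observed r, two-sided permutation p). The permutation scheme
    (shuffling scores across subjects) is an assumption for illustration.
    """
    iu = np.triu_indices(conn.shape[1], k=1)
    flat = conn[:, iu[0], iu[1]]            # (n_subjects, n_pairs)
    variance = flat.var(axis=0)              # between-subject variance per pair
    c = (flat - flat.mean(axis=0)) / flat.std(axis=0)

    def predictiveness(s):
        z = (s - s.mean()) / s.std()
        return c.T @ z / len(s)              # Pearson r per pair

    observed = np.corrcoef(variance, predictiveness(scores))[0, 1]
    rng = np.random.default_rng(seed)
    null = np.array([
        np.corrcoef(variance, predictiveness(rng.permutation(scores)))[0, 1]
        for _ in range(n_perm)
    ])
    # Add-one correction keeps the p-value strictly positive
    p = (np.sum(np.abs(null) >= abs(observed)) + 1) / (n_perm + 1)
    return observed, p
```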

Specificity for face memory

To test whether the new ROIs, defined through their predictive value for the CFMT, are involved specifically in face memory or instead underpin more domain-general memory processes, we repeated the correlation analysis of between-ROI-pair connectivity, this time with the CCMT. The only ROI pair whose correlation significantly predicted CCMT scores was right medial parietal with right LOC (r = 0.44, p = 0.0012, corrected for multiple comparisons through a permutation test). Right LOC as defined here (Talairach coordinates: 37, −72, 7; MNI: 40, −76, 8) is within 5 mm of previously described object-selective regions (Grill-Spector et al., 1998, 1999). To test more directly how much of the predictive power of the connections within our network reflects domain-general rather than face-specific processes, we calculated the partial correlation between the connectivity of each ROI pair and CFMT scores, controlling for CCMT scores, and then examined, for each ROI pair, the difference between the correlation with the CFMT and the partial correlation. We next ran a permutation test to determine the threshold at which this difference can be considered significant (see Materials and Methods). The only significant differences were found in the correlations of the right and left medial parietal regions with right LOC (correlation differences = 0.1 and 0.085; p < 0.021 and p < 0.045, respectively).
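The specificity check above compares, for each ROI pair, its correlation with the CFMT against its partial correlation with the CFMT controlling for the CCMT. A minimal sketch using the standard first-order partial correlation formula follows; the (subjects × pairs) input layout and the function names are hypothetical, and the permutation threshold for the difference, described in Materials and Methods, is not reproduced here.

```python
import numpy as np


def partial_r(x, y, z):
    """Pearson partial correlation of x and y controlling for z."""
    r_xy = np.corrcoef(x, y)[0, 1]
    r_xz = np.corrcoef(x, z)[0, 1]
    r_yz = np.corrcoef(y, z)[0, 1]
    return (r_xy - r_xz * r_yz) / np.sqrt((1 - r_xz**2) * (1 - r_yz**2))


def cfmt_specificity(conn_pairs, cfmt, ccmt):
    """Per ROI pair: r with CFMT, partial r with CFMT controlling for CCMT, and their difference.

    conn_pairs: (n_subjects, n_pairs) connectivity values (hypothetical layout).
    """
    n_pairs = conn_pairs.shape[1]
    r = np.array([np.corrcoef(conn_pairs[:, k], cfmt)[0, 1] for k in range(n_pairs)])
    pr = np.array([partial_r(conn_pairs[:, k], cfmt, ccmt) for k in range(n_pairs)])
    # For a positive r, a positive difference means part of the association
    # with CFMT scores is shared with CCMT (car memory) performance
    return r, pr, r - pr
```

The closed-form partial correlation is equivalent to correlating the residuals of x and y after each is linearly regressed on z, which is a convenient way to check it.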

Discussion

The focus of the current work was to expand the study of face recognition memory beyond the traditional face network, and to identify additional networks involved in the many processes that make up face recognition memory. Using correlations to search for links between whole-brain connectivity and behavior, we uncovered a number of regions whose connectivity, either with the face patches or with each other, strongly and significantly predicted face memory ability, as measured by the CFMT (Figs. 3, 4). Surprisingly, it was not correlations within the face regions that were most predictive; in fact, correlations between FFA/OFA/ATL/pSTS/amygdala did not significantly predict face memory ability (Fig. 7). Note also the prominent absence of the other face patches in the seed-based CFMT predictiveness map (Fig. 4), and the mismatch between the ROIs defined from the face seed predictiveness analysis and the face-selective regions observed in the localizer (Fig. 6). However, the degree of selective activation for faces within the face patches was predictive (Fig. 2).

Taken together, these findings suggest that while the face-selective regions are clearly crucial for face memory, as evidenced by the correlation between selective activation for faces and CFMT scores, and are strongly linked to each other, as seen in the strong correlations between them at rest, the degree of connectivity between them is not what explains normal variation in face memory (although connectivity between FFA and OFA has previously been found to be lower in prosopagnosia; Avidan et al., 2014; Lohse et al., 2016). Previous research linking correlations between FFA and OFA to behavior has focused mostly on face perception tasks rather than memory tasks (Zhu et al., 2011). Zhu et al. (2011) did also find a correlation between the connectivity of FFA and OFA and performance on a famous faces recognition task, although the correlation was stronger with performance on the perceptual face inversion task. This discrepancy between Zhu et al. (2011) and our results might be explained by the very different nature of the memory tasks: whereas the CFMT is a learning task, the famous faces recognition task tests long-term memory. It should also be noted that only 18 participants in that study completed the famous faces task, and the correlation was only barely significant (p = 0.04), so it requires replication. Moreover, while the selectivity of the face patches is predictive (Fig. 2), we find that selectivity, as measured by the β weights during the localizer, is not at all correlated with connectivity during rest. Instead, the whole-brain analysis provides evidence that memory for faces, even unknown faces such as those presented in the CFMT, extends well beyond the visual face patches and is supported by widespread networks involved in visual processing, memory, social cognition, and even auditory processing. Thus, in our sample, it is not a perceptual difficulty that drives poor face memory performance (correlations between the face patches are strong in all participants), but rather suboptimal communication between the perceptual face patches and higher-level memory/social regions.

Correlations between face regions and other networks in relation to face memory have scarcely been studied. A recent study did investigate connectivity within broadly defined face-selective regions versus the average correlation of the face-selective regions with the rest of the brain (Wang et al., 2016). However, although the authors reported that within-face-network connectivity, relative to connectivity between the face network and non-face networks, significantly (albeit weakly) predicted face recognition ability, these non-face networks were not described. Moreover, the effects were in opposite directions in FFA and OFA, making the results difficult to interpret (Wang et al., 2016).

The predictive peaks that emerged from our analysis can be roughly divided into visual, memory-related, social, and auditory regions. Medial temporal lobe regions, such as the hippocampus and parahippocampus, have long been associated with memory processes (Squire and Zola-Morgan, 1991; Yonelinas, 2002; van Strien et al., 2009), as have medial parietal regions (Wagner et al., 2005; Gilmore et al., 2015). Somatosensory cortex, on the other hand, has been implicated in social processing in multiple studies (Damasio et al., 2000; Adolphs, 2009; Frith and Frith, 2010), and the same region of somatosensory cortex identified here was previously found to be under-connected, both globally and specifically to other social brain regions such as mid-STS, in patients with autism spectrum disorder compared with typically developing individuals (Gotts et al., 2012; Cheng et al., 2015; Ramot et al., 2017). Insular cortex has been shown to receive input from somatosensory cortex, among other regions (Schneider et al., 1993), and is also involved in social/emotional processing (Gallese et al., 2004; Caruana et al., 2011). One of the most unexpected findings was that face memory performance was strongly predicted by the correlation between the peaks found in STG, along Heschl's gyrus (Zarate and Zatorre, 2008; Yarkoni et al., 2011; Maudoux et al., 2012), and regions of the face processing network (in particular right FFA; r = 0.45). This is congruent with previous findings linking listening to voices to FFA activation, even in the absence of face stimuli (von Kriegstein and Giraud, 2006; von Kriegstein et al., 2006). The same key predictive regions were also found using the data-driven global connectivity approach.

Figure 7 shows that it is not only the correlation of these regions with the face patches that matters for face memory ability, but also their correlation with each other. In particular, the connectivity of non-face visual regions to memory- and social-related regions was predictive of CFMT scores, as was the connectivity of the memory regions to each other and to visual and social regions. This indicates the existence of a network outside face-selective visual cortex that is involved in face memory.

Another intriguing finding relates to the specificity of these connections for face memory. The CCMT is identical to the CFMT in format and is matched for difficulty (Dennett et al., 2012); it therefore involves the same general cognitive and memory processes, the only difference being the object of memory: cars in one, faces in the other. The absence of significant correlations between CCMT scores and the connectivity of the face patches with the rest of the brain is expected, because the face patches were defined specifically by their selectivity for faces, and it is therefore unsurprising that they are not involved in memory for other visual objects such as cars. However, the 23 new ROIs could equally underlie domain-general rather than face-specific memory processes, and yet the only ROI pair that significantly predicted performance on the CCMT was the right medial parietal ROI with right LOC. Similarly, when the variance explained by the CCMT was regressed out, the predictive value of most ROI pairs for the CFMT did not significantly change, the only exception again being the medial parietal ROIs with right LOC. Without an individual car/object localizer it is difficult to directly compare the predictive value of each of these ROIs for face memory versus car memory, but the partial correlation analysis above suggests that the network described here, with connections not only between the face patches and memory/social regions but also within memory/social regions, is largely specialized for face memory rather than for memory of other objects such as cars (with the possible exception of the medial parietal regions). This is also in line with previous findings showing the specialization of the anterior hippocampus for processing emotion and affect (Fanselow and Dong, 2010), and category selectivity in the medial temporal lobe (Robin et al., 2019).

Together, these findings indicate that the networks underlying face memory integrate visual information with inferences about the social and auditory properties associated with faces, even when these faces were previously unknown, as in the CFMT. Some of the memory regions identified in this study, such as the anterior hippocampus and specific regions of the medial temporal lobe, appear to be strongly biased toward face processing, if not specific to faces, and warrant further study to determine the degree of their selectivity.

Footnotes

This work was supported by the Intramural Research Program, National Institute of Mental Health (ZIAMH002920), clinical trials number NCT01031407. We thank Adrian Gilmore, Cibu Thomas, and Stephen Gotts for helpful conversations and insights.

The authors declare no competing financial interests.

References

- Adolphs R (2009) The social brain: neural basis of social knowledge. Annu Rev Psychol 60:693–716. 10.1146/annurev.psych.60.110707.163514

- Avidan G, Tanzer M, Hadj-Bouziane F, Liu N, Ungerleider LG, Behrmann M (2014) Selective dissociation between core and extended regions of the face processing network in congenital prosopagnosia. Cereb Cortex 24:1565–1578. 10.1093/cercor/bht007

- Bentin S, Allison T, Puce A, Perez E, McCarthy G (1996) Electrophysiological studies of face perception in humans. J Cogn Neurosci 8:551–565. 10.1162/jocn.1996.8.6.551

- Carbon CC (2008) Famous faces as icons: the illusion of being an expert in the recognition of famous faces. Perception 37:801–806. 10.1068/p5789

- Caruana F, Jezzini A, Sbriscia-Fioretti B, Rizzolatti G, Gallese V (2011) Emotional and social behaviors elicited by electrical stimulation of the insula in the macaque monkey. Curr Biol 21:195–199. 10.1016/j.cub.2010.12.042

- Cheng W, Rolls ET, Gu H, Zhang J, Feng J (2015) Autism: reduced connectivity between cortical areas involved in face expression, theory of mind, and the sense of self. Brain 138:1382–1393. 10.1093/brain/awv051

- Collins JA, Olson IR (2014) Beyond the FFA: the role of the ventral anterior temporal lobes in face processing. Neuropsychologia 61:65–79. 10.1016/j.neuropsychologia.2014.06.005

- Cox RW (1996) AFNI: software for analysis and visualization of functional magnetic resonance neuroimages. Comput Biomed Res 29:162–173. 10.1006/cbmr.1996.0014

- Damasio AR, Damasio H, Van Hoesen GW (1982) Prosopagnosia: anatomic basis and behavioral mechanisms. Neurology 32:331–341. 10.1212/WNL.32.4.331

- Damasio AR, Grabowski TJ, Bechara A, Damasio H, Ponto LL, Parvizi J, Hichwa RD (2000) Subcortical and cortical brain activity during the feeling of self-generated emotions. Nat Neurosci 3:1049–1056. 10.1038/79871

- Davidesco I, Zion-Golumbic E, Bickel S, Harel M, Groppe DM, Keller CJ, Schevon CA, McKhann GM, Goodman RR, Goelman G, Schroeder CE, Mehta AD, Malach R (2014) Exemplar selectivity reflects perceptual similarities in the human fusiform cortex. Cereb Cortex 24:1879–1893. 10.1093/cercor/bht038

- Dennett HW, McKone E, Tavashmi R, Hall A, Pidcock M, Edwards M, Duchaine B (2012) The Cambridge car memory test: a task matched in format to the Cambridge face memory test, with norms, reliability, sex differences, dissociations from face memory, and expertise effects. Behav Res Methods 44:587–605. 10.3758/s13428-011-0160-2

- Duchaine B, Nakayama K (2006a) The Cambridge face memory test: results for neurologically intact individuals and an investigation of its validity using inverted face stimuli and prosopagnosic participants. Neuropsychologia 44:576–585. 10.1016/j.neuropsychologia.2005.07.001

- Duchaine BC, Nakayama K (2006b) Developmental prosopagnosia: a window to content-specific face processing. Curr Opin Neurobiol 16:166–173. 10.1016/j.conb.2006.03.003

- Elbich DB, Scherf S (2017) Beyond the FFA: brain–behavior correspondences in face recognition abilities. Neuroimage 147:409–422. 10.1016/j.neuroimage.2016.12.042

- Fanselow MS, Dong HW (2010) Are the dorsal and ventral hippocampus functionally distinct structures? Neuron 65:7–19. 10.1016/j.neuron.2009.11.031

- Frith U, Frith C (2010) The social brain: allowing humans to boldly go where no other species has been. Philos Trans R Soc Lond B Biol Sci 365:165–176. 10.1098/rstb.2009.0160

- Furl N, Garrido L, Dolan RJ, Driver J, Duchaine B (2011) Fusiform gyrus face selectivity relates to individual differences in facial recognition ability. J Cogn Neurosci 23:1723–1740. 10.1162/jocn.2010.21545

- Gallese V, Keysers C, Rizzolatti G (2004) A unifying view of the basis of social cognition. Trends Cogn Sci 8:396–403. 10.1016/j.tics.2004.07.002

- Gilmore AW, Nelson SM, McDermott KB (2015) A parietal memory network revealed by multiple MRI methods. Trends Cogn Sci 19:534–543. 10.1016/j.tics.2015.07.004

- Golarai G, Ghahremani DG, Whitfield-Gabrieli S, Reiss A, Eberhardt JL, Gabrieli JD, Grill-Spector K (2007) Differential development of high-level visual cortex correlates with category-specific recognition memory. Nat Neurosci 10:512–522. 10.1038/nn1865

- Gomez J, Pestilli F, Witthoft N, Golarai G, Liberman A, Poltoratski S, Yoon J, Grill-Spector K (2015) Functionally defined white matter reveals segregated pathways in human ventral temporal cortex associated with category-specific processing. Neuron 85:216–227. 10.1016/j.neuron.2014.12.027

- Gotts SJ, Simmons WK, Milbury LA, Wallace GL, Cox RW, Martin A (2012) Fractionation of social brain circuits in autism spectrum disorders. Brain 135:2711–2725. 10.1093/brain/aws160

- Grill-Spector K, Malach R (2004) The human visual cortex. Ann Rev Neurosci 27:649–677. 10.1146/annurev.neuro.27.070203.144220

- Grill-Spector K, Kushnir T, Edelman S, Itzchak Y, Malach R (1998) Cue-invariant activation in object-related areas of the human occipital lobe. Neuron 21:191–202. 10.1016/S0896-6273(00)80526-7

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R (1999) Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron 24:187–203. 10.1016/S0896-6273(00)80832-6

- Grill-Spector K, Knouf N, Kanwisher N (2004) The fusiform face area subserves face perception, not generic within-category identification. Nat Neurosci 7:555–562. 10.1038/nn1224

- Haxby JV, Ungerleider LG, Horwitz B, Maisog JM, Rapoport SI, Grady CL (1996) Face encoding and recognition in the human brain. Proc Natl Acad Sci U S A 93:922–927. 10.1073/pnas.93.2.922

- Herzmann G, Danthiir V, Schacht A, Sommer W, Wilhelm O (2008) Toward a comprehensive test battery for face cognition: assessment of the tasks. Behav Res Methods 40:840–857. 10.3758/BRM.40.3.840

- Jiahui G, Yang H, Duchaine B (2018) Developmental prosopagnosics have widespread selectivity reductions across category-selective visual cortex. Proc Natl Acad Sci U S A 115:E6418–E6427. 10.1073/pnas.1802246115

- Jonas J, Rossion B, Brissart H, Frismand S, Jacques C, Hossu G, Colnat-Coulbois S, Vespignani H, Vignal JP, Maillard L (2015) Beyond the core face-processing network: intracerebral stimulation of a face-selective area in the right anterior fusiform gyrus elicits transient prosopagnosia. Cortex 72:140–155. 10.1016/j.cortex.2015.05.026

- Kanwisher N, Yovel G (2006) The fusiform face area: a cortical region specialized for the perception of faces. Philos Trans R Soc Lond B Biol Sci 361:2109–2128. 10.1098/rstb.2006.1934

- Kundu P, Brenowitz ND, Voon V, Worbe Y, Vértes PE, Inati SJ, Saad ZS, Bandettini PA, Bullmore ET (2013) Integrated strategy for improving functional connectivity mapping using multiecho fMRI. Proc Natl Acad Sci U S A 110:16187–16192. 10.1073/pnas.1301725110

- Lohse M, Garrido L, Driver J, Dolan RJ, Duchaine BC, Furl N (2016) Effective connectivity from early visual cortex to posterior occipitotemporal face areas supports face selectivity and predicts developmental prosopagnosia. J Neurosci 36:3821–3828. 10.1523/JNEUROSCI.3621-15.2016

- Maudoux A, Lefebvre P, Cabay JE, Demertzi A, Vanhaudenhuyse A, Laureys S, Soddu A (2012) Connectivity graph analysis of the auditory resting state network in tinnitus. Brain Res 1485:10–21. 10.1016/j.brainres.2012.05.006

- Pitcher D, Walsh V, Yovel G, Duchaine B (2007) TMS evidence for the involvement of the right occipital face area in early face processing. Curr Biol 17:1568–1573. 10.1016/j.cub.2007.07.063

- Ramot M, Kimmich S, Gonzalez-Castillo J, Roopchansingh V, Popal H, White E, Gotts SJ, Martin A (2017) Direct modulation of aberrant brain network connectivity through real-time NeuroFeedback. eLife 6:e28974. 10.7554/eLife.28974

- Robin J, Rai Y, Valli M, Olsen RK (2019) Category specificity in the medial temporal lobe: a systematic review. Hippocampus 29:313–339. 10.1002/hipo.23024

- Rossion B, Hanseeuw B, Dricot L (2012) Defining face perception areas in the human brain: a large-scale factorial fMRI face localizer analysis. Brain Cogn 79:138–157. 10.1016/j.bandc.2012.01.001

- Saad ZS, Reynolds RC, Argall B, Japee S, Cox RW (2004) SUMA: an interface for surface-based intra- and inter-subject analysis with AFNI. 2004 2nd IEEE International Symposium on Biomedical Imaging: Nano to Macro, Vol. 2, pp 1510–1513. Arlington, VA: IEEE. 10.1109/ISBI.2004.1398837

- Schneider RJ, Friedman DP, Mishkin M (1993) A modality-specific somatosensory area within the insula of the rhesus-monkey. Brain Res 621:116–120. 10.1016/0006-8993(93)90305-7

- Song S, Garrido L, Nagy Z, Mohammadi S, Steel A, Driver J, Dolan RJ, Duchaine B, Furl N (2015) Local but not long-range microstructural differences of the ventral temporal cortex in developmental prosopagnosia. Neuropsychologia 78:195–206. 10.1016/j.neuropsychologia.2015.10.010

- Squire LR, Zola-Morgan S (1991) The medial temporal-lobe memory system. Science 253:1380–1386. 10.1126/science.1896849

- Stevens WD, Tessler MH, Peng CS, Martin A (2015) Functional connectivity constrains the category-related organization of human ventral occipitotemporal cortex. Hum Brain Mapp 36:2187–2206. 10.1002/hbm.22764

- Thomas C, Avidan G, Humphreys K, Jung KJ, Gao F, Behrmann M (2009) Reduced structural connectivity in ventral visual cortex in congenital prosopagnosia. Nat Neurosci 12:29–31. 10.1038/nn.2224

- van Strien NM, Cappaert NL, Witter MP (2009) The anatomy of memory: an interactive overview of the parahippocampal-hippocampal network. Nat Rev Neurosci 10:272–282. 10.1038/nrn2614

- von Kriegstein K, Giraud AL (2006) Implicit multisensory associations influence voice recognition. PLoS Biol 4:e326. 10.1371/journal.pbio.0040326

- von Kriegstein K, Kleinschmidt A, Giraud AL (2006) Voice recognition and cross-modal responses to familiar speakers' voices in prosopagnosia. Cereb Cortex 16:1314–1322. 10.1093/cercor/bhj073

- Wagner AD, Shannon BJ, Kahn I, Buckner RL (2005) Parietal lobe contributions to episodic memory retrieval. Trends Cogn Sci 9:445–453. 10.1016/j.tics.2005.07.001

- Wang X, Zhen Z, Song Y, Huang L, Kong X, Liu J (2016) The hierarchical structure of the face network revealed by its functional connectivity pattern. J Neurosci 36:890–900. 10.1523/JNEUROSCI.2789-15.2016

- Wilmer JB, Germine L, Chabris CF, Chatterjee G, Williams M, Loken E, Nakayama K, Duchaine B (2010) Human face recognition ability is specific and highly heritable. Proc Natl Acad Sci U S A 107:5238–5241. 10.1073/pnas.0913053107

- Yarkoni T, Poldrack RA, Nichols TE, Van Essen DC, Wager TD (2011) Large-scale automated synthesis of human functional neuroimaging data. Nat Methods 8:665–670. 10.1038/nmeth.1635

- Yonelinas AP (2002) The nature of recollection and familiarity: a review of 30 years of research. J Mem Lang 46:441–517. 10.1006/jmla.2002.2864

- Zarate JM, Zatorre RJ (2008) Experience-dependent neural substrates involved in vocal pitch regulation during singing. Neuroimage 40:1871–1887. 10.1016/j.neuroimage.2008.01.026

- Zhu Q, Zhang J, Luo YL, Dilks DD, Liu J (2011) Resting-state neural activity across face-selective cortical regions is behaviorally relevant. J Neurosci 31:10323–10330. 10.1523/JNEUROSCI.0873-11.2011