Abstract

Adaptive behavior relies on the integration of perceptual and motor processes. In this study, we aimed at characterizing the cerebral processes underlying perceptuo-motor interactions evoked during prehension movements in healthy humans, as measured by means of functional magnetic resonance imaging. We manipulated the viewing conditions (binocular or monocular) during planning of a prehension movement, while parametrically varying the slant of the grasped object. This design manipulates the relative relevance and availability of different depth cues necessary for accurate planning of the prehension movement, biasing visual information processing toward either the dorsal visual stream (binocular vision) or the ventral visual stream (monocular vision). Two critical nodes of the dorsomedial visuomotor stream [V6A (anterior visual area 6) and PMd (dorsal premotor cortex)] increased their activity with increasing object slant, regardless of viewing conditions. In contrast, areas in both the dorsolateral visuomotor stream [anterior intraparietal area (AIP) and ventral premotor cortex (PMv)] and in the ventral visual stream [lateral-occipital tactile-visual area (LOtv)] showed differential slant-related responses, with activity increasing when monocular viewing conditions and increasing slant required the processing of pictorial depth cues. These conditions also increased the functional coupling of AIP with both LOtv and PMv. These findings support the view that the dorsomedial stream is automatically involved in processing visuospatial parameters for grasping, regardless of viewing conditions or object characteristics. In contrast, the dorsolateral stream appears to adapt motor behavior to the current conditions by integrating perceptual information processed in the ventral stream into the prehension plan.

Keywords: grasping, ventral visual stream, dorsomedial visuomotor stream, dorsolateral visuomotor stream, lateral occipital complex, anterior intraparietal area

Introduction

Visual cortical processing is organized in functionally and anatomically separated circuits (Goodale and Milner, 1992; Jeannerod and Rossetti, 1993), with object recognition and action guidance relying on processes and representations distributed along two distinct pathways, the ventral and the dorsal visual stream, respectively. Both streams originate in the striate cortex, but whereas the ventral stream terminates in the anterior part of the temporal lobe, the dorsal stream reaches into the superior part of the posterior parietal lobe (Felleman and Van Essen, 1991). Subsequent anatomical and functional studies have documented an additional distinction between dorsomedial and dorsolateral circuits within the parieto-frontal network (Tanne-Gariepy et al., 2002; Rizzolatti and Matelli, 2003). We recently suggested that the relative contribution of these two circuits is a function of the degree of on-line control required by a prehension movement (Grol et al., 2007). However, adaptive behavior ultimately requires the integration of perceptual and motor abilities, for instance when we adjust our grasping movement to the ripeness of a tomato as judged by its color. Here, we extend the scope of our previous study (Grol et al., 2007) by investigating the cerebral mechanisms supporting the integration of perceptual and motor abilities during the planning of goal-oriented behavior.

Recent findings on the role of binocular vision in visuomotor behavior provide an empirical possibility for experimental manipulations of this integration. Processing depth information along the dorsal stream is particularly dependent on binocular inputs, like stereopsis (Sakata et al., 1995; Shikata et al., 1996) and vergence (Mon-Williams et al., 2001; Quinlan and Culham, 2007). For instance, in visual agnosic patients, visuomotor performance is disturbed during monocular viewing of a target (Dijkerman et al., 1996, 2004; Marotta et al., 1997). This suggests that, when binocular vision is unavailable, the extraction of depth information (that is crucial for visuomotor control) relies on pictorial cues like texture, illumination gradients, and perspective. These cues are processed along the ventral visual stream (Huxlin et al., 2000; Mon-Williams et al., 2001; Kovacs et al., 2003).

In the current study, we biased visual processing toward either the dorsal or the ventral stream by manipulating viewing conditions (monocular or binocular vision) during the planning of a prehension movement. Both viewing conditions are likely to engage both streams, but the ventral stream should increase its functional integration with the dorsal stream as the relevance of pictorial cues of depth information increases. Accordingly, we independently manipulated the relevance of these cues by varying the slant of the object to be grasped. Increasing the object slant increases the importance of depth information provided by pictorial cues like texture (Knill, 1998a,b), whereas the relevance of binocular cues decreases (Knill and Saunders, 2003). We used this design while measuring cerebral activity with functional magnetic resonance imaging (fMRI) to test the hypothesis that prehension of a horizontal prism under monocular vision increases the functional couplings between the two visual streams as pictorial cues become more relevant for motor planning.

Materials and Methods

Participants.

Nineteen healthy right-handed [Edinburgh Handedness Inventory (Oldfield, 1971), mean ± SD, 97 ± 8%] adult (mean ± SD, 22 ± 2 years) male volunteers were recruited as participants and received a small financial reward. They all had normal or corrected-to-normal visual acuity, stereoscopic depth discrimination thresholds at 60 arcsec or less (TNO stereographs; Laméris Ootech BV, Nieuwegein, The Netherlands; 1972), and they gave informed consent according to the institutional guidelines of the local ethics committee (CMO region Arnhem-Nijmegen, The Netherlands).

Experimental setup.

Subjects lay supine in the magnetic resonance (MR) scanner. The standard mattress of the scanner bed was removed to position the subject's body lower within the bore of the scanner and to aid in comfortably tilting the subject's head forward by 30°. In this way, the subject had a direct line of sight of the object to grasp (Fig. 1A). An eight-channel phased-array receiver head coil was tightly fitted to the subject's head with foam wedges. The subject's right upper arm was immobilized with foam wedges and a Velcro strap band across the lower chest, such that the forearm could comfortably lie horizontally on the subject's abdomen and allow for the reaching-grasping movements performed in this study (see below). An optical response button box (MRI Devices, Waukesha, WI) was positioned on the left side of the abdomen and served as a “home key” in between trials. Using this button box, reaction times (RTs) (i.e., the time interval from stimulus onset until the release of the home key) and movement times (MTs) (i.e., the time interval from the home key release until the subsequent home key press) were measured on each trial during scanning.

Figure 1.

Experimental setup and trial time course. A, The subject was asked to perform visually guided prehension movements while lying supine in the MR scanner. His head was fitted inside a phased-array receiver head-coil (5), tilted forward by ∼30° such that the target object was in direct line of sight. The subject could comfortably move from the home key placed on the left side of the abdomen (not shown) to the object (3; detail, C) by rotating his forearm around the elbow and his hand around the wrist. The experimenter could vary the orientation of the object (from 0 to 90° deviation from the vertical plane, in steps of 30°) by turning a wheel (1; detail, E). Vision was controlled by MR-compatible liquid crystal shutter glasses, which could be made opaque or transparent individually (4; D shows the glasses in the monocular-right condition). During scanning, the target object was located inside the MR-bore, and the turning wheel was outside the bore (E). A white curtain (not shown) provided a stable and homogeneous visual background for the subject and blocked sight of the experimenter. B, Each trial started with a rest period of variable duration (3–8 s). During this period, the subject's hand was resting on the home key and the experimenter could rotate the object in the instructed orientation for the upcoming trial. Vision was blocked by the opaque LCD glasses, but two LEDs (2; detail, C) provided an anchor for fixation. At the end of this rest period, the LEDs turned off and the left, right or both shutters (4) became transparent, providing either monocular or binocular vision of the target object. The subject was instructed to grasp the object using a precision grip (index finger and thumb) as quickly and accurately as possible after the target object became visible, holding it briefly before returning to the home key on the abdomen. When the subject's hand released the home key, the shutters closed.
A change in capacitance in a wire surrounding the target object (detail, C) indicated the moment of first contact between the subject's fingers and the target object. In our event-related analysis of the fMRI time series, we used the response and prehension phases of the trial (indicated by the first gray block) for the main effect of GRASP, and the portion of the trials during which the subject held the object and during which his hand moved back from the object to the home key for main effect of HOLD-RETURN (as indicated by the second gray block).

The subjects were asked to grasp a black right rectangular prism (6 × 4 × 2 cm; “target object”) along its longest dimension with their thumb and index finger. The target object was positioned at one end of the scanner bore, along the subject's midsagittal plane, above the subject's pelvis, by means of an adjustable polymer and wooden frame (Fig. 1C). Behind the target object, a white cloth served as a homogeneous background for the object and to block the subject's view of the scanner room. The target object could be rotated along the subject's sagittal plane, in steps of 30° between 0 and 90° from the vertical plane, by means of a pulley system manually operated by an experimenter standing inside the scanner room (Fig. 1E). Conductive wires on the object surface (“touch sensor”) detected changes in capacitance induced by the contact between the subject's finger and the target object. This measurement enabled us to subdivide the movement period of each trial (MT) into two relevantly distinct phases: the prehension phase (PT) (i.e., the time interval from release of the home key until contact of at least one finger on the object) and the hold-return phase (HRT) (i.e., the time interval from finger–object contact until return on the home key).
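The interval definitions above can be made concrete with a short sketch. The field names and the example timestamps below are hypothetical (they do not come from the study); only the way the four intervals are derived from the home-key and touch-sensor events follows the text.

```python
from dataclasses import dataclass


@dataclass
class TrialEvents:
    """Event timestamps for one trial, in ms (hypothetical field names)."""
    stimulus_onset: float     # shutter(s) open, object becomes visible
    home_key_release: float   # hand leaves the home key
    object_contact: float     # touch sensor detects first finger contact
    home_key_press: float     # hand is back on the home key


def trial_intervals(ev: TrialEvents) -> dict:
    """Derive the intervals defined in the text from the raw events."""
    return {
        "RT": ev.home_key_release - ev.stimulus_onset,    # reaction time
        "PT": ev.object_contact - ev.home_key_release,    # prehension phase
        "HRT": ev.home_key_press - ev.object_contact,     # hold-return phase
        "MT": ev.home_key_press - ev.home_key_release,    # movement time
    }


# Illustrative values only, not measured data.
example = TrialEvents(stimulus_onset=0.0, home_key_release=450.0,
                      object_contact=1150.0, home_key_press=2600.0)
intervals = trial_intervals(example)
```

By construction, MT equals the sum of PT and HRT, which is the subdivision that the touch sensor makes possible.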

The subject's vision was controlled by means of MR-compatible liquid crystal shutter goggles (“shutters”; Translucent Technologies, Toronto, Ontario, Canada). The shutters could assume either a transparent (“open”) or an opaque (“closed”) configuration [transition times (10–90%): open = 23 ms; close < 0.5 ms], and they were positioned in front of the left and the right eye of the subject by means of an adjustable polymer frame, ensuring a consistent visual field of view across subjects (Fig. 1D). The shutters covering the left and the right eye were independently controlled and they allowed us to manipulate the type (i.e., monocular or binocular vision) and the timing of visual information available to the subject. When the shutters were closed, a pair of light-emitting diodes (LEDs) positioned on the left and on the right of the object generated two bright spots on the shutter glasses, providing an anchor point for the subject's gaze (Fig. 1C). The LEDs were off when the liquid crystal display (LCD) shutter glasses were open. During this period, the subjects planned the prehension movement. This procedure ensured that the subjects fixated the target object (Johansson et al., 2001). In addition, during the training session they were instructed to focus on the target object. The LEDs remained off during the execution of the movement when the shutter glasses were closed. No explicit instructions were given to the subjects concerning eye movements during this phase. After the return of the hand to the home key, the shutter glasses remained closed, and the LEDs were turned on. Subjects were told that the LEDs served as an anchor for their fixation and that at the beginning of the next trial the object would appear at the same location. A previous study revealed that this procedure was important for minimizing head motion artifacts (Verhagen et al., 2006).

The shutters, the LEDs, the touch sensor, and the home key were controlled by a computer running Presentation software (version 10.1; Neurobehavioral Systems, San Francisco, CA) located outside the scanner room. Custom filters and fiber optic cables were used for the connections.

Each trial started with the shutters closed, the LEDs on, and the subject's right hand on the home key (Fig. 1B). During a pseudorandomized interval (3000–8000 ms; uniform distribution; steps of 1 ms), the target object was rotated by the experimenter according to a prespecified orientation, communicated to the experimenter via MR-compatible headphones. At the end of this time interval, the left, the right, or both shutters opened, thus allowing left monocular, right monocular, or binocular vision of the target object, respectively. Concurrently, the LEDs were turned off. Subjects were instructed to grasp the object as quickly and accurately as possible as soon as the target object became visible, briefly holding it between their thumb and index finger before returning to the home key. When the subjects left the home key, the shutters closed. When the subjects returned to the home key, the LEDs were turned on and a new trial started. A session had a total duration of ∼30 min and consisted of 240 trials organized in a 3 × 4 design (factor VISION, with three levels: monocular left, monocular right, binocular; and factor ORIENTATION, with four levels: 0, 30, 60, or 90°). Subjects were instructed and trained inside the scanner room until stable error-free performance was achieved.
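A trial list with this structure could be generated along the following lines. This is a minimal sketch, not the actual Presentation script: it assumes a balanced design (240 trials over 12 cells, i.e., 20 repetitions per cell, which the text does not state explicitly) and a plain shuffle, because any ordering constraints used in the experiment are not reported.

```python
import random
from itertools import product

VISION = ("monocular left", "monocular right", "binocular")
ORIENTATION_DEG = (0, 30, 60, 90)
N_TRIALS = 240


def build_session(seed: int = 0) -> list:
    """Return a shuffled trial list with one rest interval per trial."""
    rng = random.Random(seed)
    cells = list(product(VISION, ORIENTATION_DEG))   # 3 x 4 = 12 cells
    reps = N_TRIALS // len(cells)                    # 20, ASSUMING a balanced design
    trials = [cell for cell in cells for _ in range(reps)]
    rng.shuffle(trials)                              # ASSUMPTION: unconstrained order
    return [
        {
            "vision": vision,
            "orientation_deg": orientation,
            # Uniform over 3000-8000 ms in 1 ms steps (both ends inclusive).
            "rest_ms": rng.randint(3000, 8000),
        }
        for vision, orientation in trials
    ]


session = build_session(seed=1)
```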

Behavioral analysis.

The fMRI model collapsed RT and PT periods into a single regressor (GRASP) (see below, Image analysis); therefore, we performed an ANOVA on the same temporal interval (i.e., the trial-by-trial sum of RT and PT) that considered the factors VISION (three levels: monocular left, monocular right, binocular) and ORIENTATION (four levels: 0, 30, 60, or 90° deviation from the vertical plane) and their interaction (p < 0.05).

To validate the reliability of the behavioral measurements collected in the MR scanner and to illustrate the effect of object orientation on the variability of the prehension movement, we also performed additional kinematic measurements on one subject. The experimental settings were identical to those used in the fMRI experiment, but the measurements were performed outside the scanner to be able to sample the position and orientation of the index finger and thumb during task performance (miniBIRD tracking system; Ascension Technology, Burlington, VT). Movements were sampled at 103 Hz, with markers on the wrist, thumb, and index finger. Data were low-pass filtered (fourth-order Butterworth filter at 10 Hz), and the tangential speed of the three markers was calculated. Similarly to the experiment in the MR scanner, the release of the home key at the starting position marked the onset of the movement, whereas the touch sensor on the object marked the offset of the prehension movement. End-point variance of the marker positions 30 ms before the movement offset was expressed by the volume of 95% confidence ellipsoids (McIntyre et al., 1998), calculated separately for each marker and each object orientation. No statistical significance was inferred on these kinematic data because only one subject was measured for validation purposes.
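For illustration, the tangential speed of a marker can be approximated from successive position samples as sketched below. The sketch assumes already filtered 3-D positions and uses a simple first difference; the low-pass filtering step is omitted, and the position units are an assumption because the text does not report them.

```python
import math

SAMPLE_RATE_HZ = 103.0  # sampling rate reported in the text


def tangential_speed(positions, rate_hz=SAMPLE_RATE_HZ):
    """Speed along the path between successive 3-D samples.

    `positions` is a sequence of (x, y, z) tuples; the result has one
    value fewer than the input and is in position units per second.
    """
    dt = 1.0 / rate_hz
    return [math.dist(p0, p1) / dt for p0, p1 in zip(positions, positions[1:])]


# Illustrative marker moving one unit per sample along x.
track = [(float(i), 0.0, 0.0) for i in range(5)]
speeds = tangential_speed(track)
```

A central difference would reduce the half-sample delay of this estimate; the first difference is used here only to keep the example short.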

Image acquisition.

Images were acquired on a 3 tesla Trio MRI system (Siemens, Erlangen, Germany), using the body coil for radio frequency transmission, and an eight-channel phased array surface head coil for signal reception. Blood oxygenation level-dependent (BOLD)-sensitive functional images were acquired using a single shot gradient echo-planar imaging (EPI) sequence [repetition time (TR)/echo time (TE), 2030/30 ms; 32 transversal slices; distance factor, 17%; effective voxel size, 3.5 × 3.5 × 3.5 mm]. After the functional scan, high-resolution anatomical images were acquired using an MP-RAGE GRAPPA sequence with an acceleration factor of 2 [TR/TE/inversion time, 2300/2.92/1100 ms; voxel size, 1 × 1 × 1 mm; 192 sagittal slices; field of view, 256 mm].

Image analysis.

Imaging data were preprocessed and analyzed using SPM5 (Statistical Parametric Mapping; http://www.fil.ion.ucl.ac.uk/spm). The first five volumes of each participant's data set were discarded to allow for T1 equilibration. Before analysis, the image time series were spatially realigned using a least-squares approach that estimates six rigid body transformations (translations, rotations) by minimizing head movements between each image and the reference image. The time series for each voxel were temporally realigned to the first slice in time to correct for differences in slice time acquisition. Subsequently, images were normalized onto a Montreal Neurological Institute (MNI)-aligned EPI template using both linear and nonlinear transformations and resampled at an isotropic voxel size of 2 mm in a probabilistic generative model that combines image registration, tissue classification, and bias correction (Ashburner and Friston, 2005). This procedure also provided mean images of segmented gray matter, white matter, and CSF. Finally, the normalized images were spatially smoothed using an isotropic 10 mm full-width at half-maximum (FWHM) Gaussian kernel. Each participant's structural image was spatially coregistered to the mean of the functional images and spatially normalized by using the same transformation matrix applied to the functional images.

The fMRI time series were analyzed using an event-related approach in the context of the general linear model, using standard multiple regression procedures. For each trial, we considered two effects, modeled as square-wave functions: GRASP and HOLD-RETURN. The GRASP phase was taken as the time interval from the opening of the shutters until the contact between the target object and the subject's hand; this effect relates to the preparation and the execution of the reaching-grasping movement and it corresponds to the GRASP interval (Fig. 1B, first gray block). We combined planning and execution phases in a single explanatory variable because the current experimental setting does not allow us to disambiguate the relative contributions of these movement phases. In this experiment, movement planning was quickly followed by movement execution on each and every trial, and the hemodynamic (BOLD) consequences of these two movement phases would be indistinguishable (collinear). Previous studies have distinguished these movement phases by introducing variable delays between instruction cues and movement execution (Toni et al., 1999, 2001), or by using a proportion of no-go trials (Shulman et al., 2002; Thoenissen et al., 2002). In this experiment, we opted not to do so to avoid transforming the task into a delayed grasping protocol (Hu et al., 1999), or introducing motor inhibition effects (Mars et al., 2007).

The HOLD-RETURN phase was taken as the interval from the contact between the target object and the subject's hand, until the return of the subject's hand on the home key (Fig. 1B, second gray block). The effect of GRASP was partitioned in three conditions according to the factor VISION (i.e., monocular left, monocular right, and binocular trials). For each of these conditions, we also considered the linear parametric effect of the factor ORIENTATION (i.e., the deviation of the target object from the vertical: 0, 30, 60, or 90°). On a subject-by-subject basis, trials in which the sum of RT and PT was more than three times the SD from the mean (3 ± 2%; mean percentage of misses ± SD over all subjects) were labeled as MISSES and included in a separate regressor of no interest. Each of the resulting eight regressors [monocular left (main and parametric), monocular right (main and parametric), binocular (main and parametric), HOLD-RETURN (main), MISSES (main)] was convolved with a canonical hemodynamic response function, and its temporal and dispersion derivatives (Friston et al., 1998a). The potential confounding effects of residual head movement-related effects were modeled using the original, the squared, and the first-order derivatives of the time series of the estimated head movements during scanning (Lund et al., 2005). The intensity changes attributable to the movements of the arm and hand through the magnetic field were accounted for by using the time series of the mean signal from the white matter and CSF (Verhagen et al., 2006). Finally, the fMRI time series were high-pass filtered (cutoff 128 s) to remove low-frequency confounds, such as scanner drifts. Temporal autocorrelation was modeled as a first-order autoregressive process.
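The labeling of MISSES can be sketched as follows for one subject. Two details are assumptions, not reported in the text: the criterion is applied to deviations in either direction from the mean, and the SD is the sample SD (n − 1 denominator). The function name and the example times are hypothetical.

```python
from statistics import mean, stdev


def label_misses(grasp_times, n_sd=3.0):
    """Flag trials whose RT + PT lies more than n_sd SDs from the mean.

    Returns one boolean per trial (True = MISS). Computed per subject.
    """
    m = mean(grasp_times)
    sd = stdev(grasp_times)   # ASSUMPTION: sample SD (n - 1)
    return [abs(t - m) > n_sd * sd for t in grasp_times]


# Illustrative times in ms: 19 ordinary trials and one very slow trial.
times = [1000 + 10 * (i % 5) for i in range(19)] + [5000]
flags = label_misses(times)
```

Because the mean and SD are computed on data that include the outliers themselves, a single extreme trial inflates the SD; with very few trials this criterion can therefore fail to flag anything.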

For each subject, linear contrasts pertaining to the six regressors of interest [monocular left (main and parametric), monocular right (main and parametric), binocular (main and parametric)] were calculated. Consistent effects across subjects were tested using a random-effects analysis on these contrast images by means of a within-subject ANOVA.

Statistical inference.

Statistical inference (p < 0.05) was performed at the voxel level, correcting for multiple comparisons [false-discovery rate (Benjamini and Hochberg, 1995)] over the search volume. When assessing the effects of movement (i.e., the null hypothesis that there was no effect evoked by planning and executing the reaching-grasping movements), the effects of VISION (i.e., monocular vs binocular and vice versa), and the effects of ORIENTATION (i.e., common effects of increasing deviation of the target object from the vertical plane across both monocular and binocular viewing conditions), the search volume covered the whole brain. When assessing the effects of ORIENTATION across VISION (i.e., the differential effects of increasing object slant between monocular and binocular conditions), the search volume was limited to the combined volume of two representative sets of cortical areas, crucially involved in goal-directed action and object recognition, respectively. In the dorsal visual stream, the anterior intraparietal area (AIP) (Culham et al., 2006) and the rostroventral premotor cortex (area PMv or F5) (Davare et al., 2006) are necessary for preshaping the hand during grasping (Gallese et al., 1994; Fogassi et al., 2001; Tunik et al., 2005). In the ventral visual stream, the lateral occipital complex (LOC) (Grill-Spector et al., 1999; Amedi et al., 2001; Kourtzi and Kanwisher, 2001) plays a crucial role in object recognition, including the perception of geometrical shape and volumetric features of objects (Moore and Engel, 2001) and the integration of multimodal object features [lateral-occipital tactile-visual region (LOtv)] (Amedi et al., 2001, 2002). Using the WFU_PickAtlas SPM5 toolbox (Maldjian et al., 2003), we drew three spherical volumes of interest (VOIs) with a radius of 8 mm and centered at the following coordinates (MNI space): [−48 −64 −16] [LOtv (Amedi et al., 2002)], [−42 −42 +48] [AIP (Culham et al., 2006)], [−60 +16 +24] [PMv (Davare et al., 2006)].

Latency and duration estimation.

To explore differential orientation-related changes in the latency and duration of the BOLD responses during monocular and binocular trials, we calculated temporal shift and dispersion effects following the method of Henson et al. (2002). In this framework, changes in the latency and duration of the BOLD response can be indexed by relating the sign and magnitude of the partial derivative with respect to time (temporal derivative, β2) and the partial derivative with respect to duration (dispersion derivative, β3) of the hemodynamic response model (Friston et al., 1998a) to the sign and magnitude of the canonical component (β1). Positive values of β2 indicate an earlier hemodynamic response, and negative values of β2 indicate a later hemodynamic response. Positive values of β3 indicate a shorter hemodynamic response, and negative values of β3 a longer hemodynamic response. However, the relationship between the derivative/canonical ratios (β2/β1, β3/β1) and the actual latency of the BOLD response is nonlinear because of the fact that the parameterization ignores high-order terms (Henson et al., 2002). Therefore, we transformed the derivative/canonical ratio using the (approximately) sigmoidal logistic function: 2C/(1 + exp(D(βd/β1))) − C, where C = 1.78, D = 3.10, β1 is the parameter estimate for the canonical component, and βd is the parameter estimate for either the temporal (β2) or dispersion (β3) partial derivative (Henson et al., 2002). This approach normalizes condition-specific changes in BOLD latency and duration to the overall amplitude of the corresponding BOLD response. Latency and dispersion maps, with respect to the moment of stimulus presentation, were calculated for each subject, separately for both monocular and binocular trials, as a function of the target object orientation, generating six SPMs for each subject (analogously to the effects described for the canonical response) (see above, Image analysis).
After smoothing these SPMs (10 mm FWHM isotropic Gaussian kernel), we entered them into a random-effects analysis of a within-subject ANOVA. We considered this analysis as a post hoc test of BOLD latency and duration on the effects revealed by the main analysis of BOLD amplitudes. Therefore, we only assessed the significance (p < 0.05) of latency and dispersion effects on the peak voxels revealed in the main analysis (Tables 1–3).
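The logistic transformation of the derivative/canonical ratio can be written out as a small function. This is a sketch of the formula as given in the text, with C = 1.78 and D = 3.10; the function name, the guard against a zero canonical estimate, and the clamping of the exponent are additions made here for numerical safety and are not part of the reported method.

```python
import math

C = 1.78   # constants as reported in the text (Henson et al., 2002)
D = 3.10


def ratio_to_shift(beta_d: float, beta_1: float) -> float:
    """Evaluate 2C / (1 + exp(D * beta_d / beta_1)) - C.

    beta_d is the temporal (beta_2) or dispersion (beta_3) estimate and
    beta_1 the canonical estimate. The output is bounded by -C and +C.
    """
    if beta_1 == 0:
        raise ValueError("canonical estimate is zero; the ratio is undefined")
    x = D * beta_d / beta_1
    x = max(min(x, 700.0), -700.0)   # keep math.exp within floating-point range
    return 2.0 * C / (1.0 + math.exp(x)) - C


no_shift = ratio_to_shift(0.0, 1.0)    # ratio of zero maps to zero
positive = ratio_to_shift(0.5, 1.0)    # positive ratio maps to a negative value
negative = ratio_to_shift(-0.5, 1.0)   # negative ratio maps to a positive value
```

With β1 > 0, a positive ratio yields a negative output, which matches the convention stated above that positive β2 values indicate an earlier response. Voxels with a canonical estimate close to zero give unstable ratios, which is one reason to restrict the analysis to voxels with a reliable canonical response.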

Table 1.

Common and differential cerebral activity evoked during monocular and binocular trials

| Anatomical region | Functional region | Hemisphere | t value | p value | Cluster size | Local maximum |
|---|---|---|---|---|---|---|
| Monocular and binocular viewing | | | | | | |
| Superior precentral gyrus (BA 4) | Motor cortex | Left | 12.96 | <0.001 | 46,668 | −34, −24, 62 |
| Superior postcentral gyrus (BA 1–3) | Somatosensory | Left | 12.86 | | | −38, −36, 60 |
| Cerebellum (posterior and anterior lobes) | IV–VI | Right | 12.16 | | | 28, −54, −20 |
| | | Left | 8.72 | | | −34, −56, −24 |
| Lingual gyrus | V1–V3 | Right | 11.77 | | | 10, −58, −8 |
| | | Left | 6.43 | | | −14, −68, 2 |
| Cuneus (BA 17–18) | Visual cortex | Left, right | 9.57 | | | 6, −90, 22 |
| Middle occipital gyrus | V5 | Left | 7.18 | | | −48, −70, 4 |
| | | Right | 5.04 | | | 44, −74, 14 |
| Superior parietal lobule (BA 2) | | Right | 5.94 | | | 36, −46, 62 |
| Binocular > monocular viewing | | | | | | |
| Superior occipital gyrus (BA 17–18) | V1–V2 | Left, right | 4.70 | 0.015 | 4111 | −12, −96, 14 |
| | | | 4.42 | | | 12, −92, 22 |

MNI stereotactic coordinates of the local maxima of regions showing an effect of reaching and grasping with the right hand (both the common and differential effects of monocular and binocular viewing conditions are listed). For large clusters spanning several anatomical regions, more than one local maximum is given. Cluster size is given in number of voxels. Statistical inference (p < 0.05) was performed at the voxel level, correcting for multiple comparisons using the false-discovery rate approach over the search volume. IV–VI, Cerebellar hemisphere IV to VI; V1, visual area 1; V2, visual area 2; V3, visual area 3; V5, visual area 5.

Table 2.

Cerebral activity increasing as a function of object slant, independent of viewing conditions

| Anatomical region | Functional region | Hemisphere | t value | p value | Cluster size | Local maximum |
|---|---|---|---|---|---|---|
| Occipitoparietal fissure | V6A | Left | 6.05 | 0.001 | 6057 | −18, −72, 50 |
| Middle occipital gyrus | V5 | Left | 4.62 | | | −44, −72, 8 |
| Posterior inferior occipital gyrus | V4 | Left | 4.35 | | | −50, −68, −16 |
| Middle occipital gyrus | V2 | Right | 4.04 | 0.007 | 1085 | 20, −102, 4 |
| Anterior superior precentral gyrus (BA 6) | PMd | Left | 6.01 | 0.001 | 639 | −22, −4, 56 |

MNI stereotactic coordinates of the local maxima of regions showing cerebral activity that increased as a function of object slant. Other conventions are as in Table 1.

Table 3.

Differential cerebral activity between monocular and binocular viewing conditions as a function of object slant

| Anatomical region | Functional region | Hemisphere | t value | p value | Cluster size | Local maximum |
|---|---|---|---|---|---|---|
| Inferior occipital gyrus | LOtv | Left | 3.07 | 0.049 | 105 | −54, −62, −14 |
| Inferior precentral gyrus (BA 44) | PMv | Left | 3.02 | 0.049 | 80 | −58, 10, 28 |
| Anterior intraparietal sulcus | AIP | Left | 2.71 | 0.049 | 208 | −42, −44, 42 |

MNI stereotactic coordinates of the local maxima of regions showing a positive interaction between viewing conditions (monocular, binocular) and increasing object slant.
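As a consistency check, the local maxima in Table 3 can be compared with the 8 mm spherical VOIs defined under Statistical inference. The sketch below uses only the coordinates reported in the text; the variable and function names are hypothetical, and a real analysis would apply the sphere to voxel grids and not to single points.

```python
import math

VOI_RADIUS_MM = 8.0

# VOI centers (Statistical inference) and Table 3 peaks, MNI space, in mm.
VOI_CENTERS = {"LOtv": (-48, -64, -16), "AIP": (-42, -42, 48), "PMv": (-60, 16, 24)}
TABLE3_PEAKS = {"LOtv": (-54, -62, -14), "AIP": (-42, -44, 42), "PMv": (-58, 10, 28)}


def in_sphere(point, center, radius=VOI_RADIUS_MM):
    """True if `point` lies within `radius` mm (Euclidean) of `center`."""
    return math.dist(point, center) <= radius


distances = {name: math.dist(TABLE3_PEAKS[name], VOI_CENTERS[name])
             for name in VOI_CENTERS}
inside = {name: in_sphere(TABLE3_PEAKS[name], VOI_CENTERS[name])
          for name in VOI_CENTERS}
```

All three peaks fall inside their respective spheres, at roughly 6.6 mm (LOtv), 6.3 mm (AIP), and 7.5 mm (PMv) from the VOI centers.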

Effective connectivity analysis.

We used the psychophysiological interaction (PPI) method to test for changes in effective connectivity between the three VOIs described above (Friston et al., 1997). This method makes inferences about regionally specific responses caused by the interaction between an experimentally manipulated psychological factor and the physiological activity measured in a given index area. The analysis was constructed to test for differences in the regression slope of the activity in a set of target areas on the activity in the index area, depending on the viewing condition (monocular left, monocular right, or binocular) and on the object orientation. For each subject and for each VOI, the physiological activity of the index area was defined by the first eigenvariate of the time series of all voxels within the volumes of interest that showed activation in response to our task (p < 0.05 uncorrected). For each VOI, statistical inference was performed at the voxel level over a search volume defined by the combined volumes of the two remaining VOIs [p < 0.05, corrected with false-discovery rate (Benjamini and Hochberg, 1995)].
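The core of a PPI model is an interaction regressor formed from the index-area time series and the psychological factor. The sketch below shows that construction in its simplest form, assuming the index-area signal (here standing in for the first eigenvariate) is already extracted and that the psychological factor is coded as one value per scan; both inputs and the coding are hypothetical. The product is formed directly on the measured signals, so this is a simplification and not necessarily what the SPM5 implementation computes.

```python
def center(values):
    """Subtract the mean so the interaction is not confounded with main effects."""
    m = sum(values) / len(values)
    return [v - m for v in values]


def ppi_regressors(seed_ts, psych):
    """Build the physiological, psychological, and interaction regressors.

    seed_ts: index-area time series, one value per scan.
    psych:   psychological factor per scan (hypothetical coding, e.g.
             +1 for monocular and -1 for binocular scans).
    """
    if len(seed_ts) != len(psych):
        raise ValueError("time series must have equal length")
    phys = center(seed_ts)
    psy = center(psych)
    interaction = [a * b for a, b in zip(phys, psy)]
    return {"physiological": phys, "psychological": psy, "interaction": interaction}


# Illustrative four-scan example, not measured data.
regs = ppi_regressors([1.0, 2.0, 3.0, 4.0], [1.0, 1.0, -1.0, -1.0])
```

In the regression for a target area, the coefficient of the interaction term captures the change in the slope of target activity on index-area activity between conditions, over and above the two main effects.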

Anatomical inference.

Anatomical details of significant signal changes were obtained by superimposing the relevant SPMs on the structural images of the subjects. The atlas of Duvernoy and colleagues (Duvernoy et al., 1991) was used to identify relevant anatomical landmarks. When applicable, Brodmann areas (BAs) were assigned on the basis of the SPM Anatomy Toolbox (Eickhoff et al., 2005). When the literature used for VOI selection reported the stereotactic coordinates in Talairach space, these coordinates were converted to coordinates in MNI space by a nonlinear transform of Talairach to MNI (http://imaging.mrc-cbu.cam.ac.uk/imaging/MniTalairach).

Results

Behavioral results

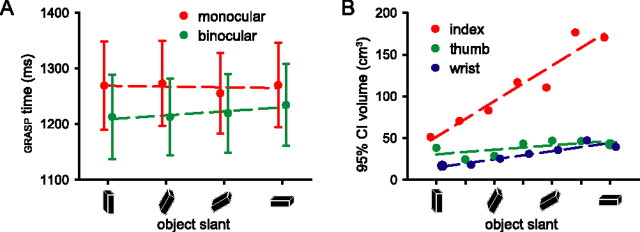

Subjects' GRASP time (sum of RT and PT) was influenced by the viewing conditions (F(2,36) = 10.88; p < 0.001), being longer in the monocular trials than in the binocular trials (post hoc comparison of means: F(1,18) = 10.67; p = 0.004, Bonferroni corrected) (Fig. 2A), but there was neither an effect of object ORIENTATION nor an interaction between VISION and ORIENTATION (F(3,54) = 0.18, p = 0.911 and F(6,108) = 0.38, p = 0.889, respectively) (Fig. 2A). Figure 2B shows the volume of the 95% confidence ellipsoid describing the end-point positions of the index finger, thumb, and wrist 30 ms before the end of the movement in one subject. The end-point variance of the index finger increases with increasing object slant regardless of viewing condition, but this relationship does not hold for the wrist and thumb.

Figure 2.

Behavioral effects. A, Collapsed reaction and prehension times (mean ± SE over subjects) were longer in trials with monocular (red) than in trials with binocular viewing conditions (green), but did not change as a function of object deviation from the vertical plane (0, 30, 60, or 90°). B, Volumes of the 95% confidence ellipsoids describing the end-point positions (taken 30 ms before the first touch with the object) of the index finger, thumb, and wrist as a function of object slant. The end-point variance was not significantly different for monocular and binocular trials.

Cerebral effects: BOLD amplitude

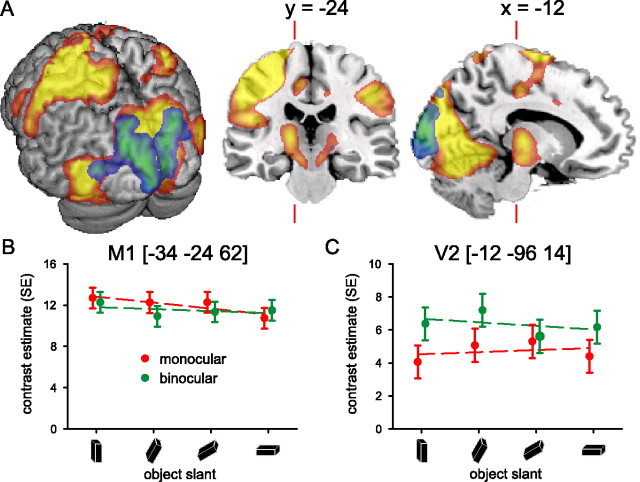

Planning and executing visually guided prehension movements with the right hand evoked activity in visual cortex (bilaterally) and in the dorsolateral portions of left parietal and frontal cortex (Table 1). Figure 3A illustrates the anatomical distribution of cerebral activity evoked during both monocular and binocular viewing conditions (in red-yellow). There were also significant effects in the cerebellum (bilaterally), in the occipito-temporal region (bilaterally), and in the right superior parietal lobule (Table 1).

Figure 3.

Cerebral effects: viewing condition. A, Spatial distribution of cerebral activity related to planning and executing visually guided prehension movements with the right hand. Colors from red to yellow show the common activity across monocular and binocular viewing conditions, whereas colors from blue to green show the distribution of differential activity of binocular versus monocular viewing conditions. There were no significant differential effects when contrasting monocular with binocular conditions. The left side of the image represents the left side of the brain (neurological convention). The image shows the relevant SPM{t}s (p < 0.05 false-discovery rate corrected over the whole brain; random-effect analysis; only voxels within 16 mm from the cortical surface are displayed) superimposed on a rendered brain surface, a coronal section, and a sagittal section of a structural T1 image. The prehension task evoked activity in visual cortex (bilaterally) and in the dorsolateral portions of left parietal and frontal cortex, as well as in the occipito-temporal region bilaterally, and in the right anterior parietal cortex, in the inferior parietal lobule (Table 1). Binocular viewing induced more activity compared with monocular viewing in striate and peristriate cortex. B, C, Cerebral responses over precentral (M1) and peristriate cortex (V2), respectively (numbers in square brackets indicate MNI stereotactic coordinates for the local maxima) (Table 1). The graphs show parameter estimates (in SE units) of the cerebral responses evoked by the prehension task over object orientations for the monocular (red) and binocular (green) viewing conditions separately.

There were also robust differential effects between binocular and monocular viewing conditions, namely in striate and peristriate regions (Fig. 3A, in blue-green; Table 1). Large parts of this cluster overlap with V1 (30%) and V2 (23%). The local maxima of these responses fall within the 80% probability range of area V1 and V2 (Amunts et al., 2000). Given the anatomical location of this effect, it appears more likely to be related to the difference in viewing conditions than differences in planning and/or execution of the movements. More precisely, the effect fits with the relative predominance of neurons specifically sensitive to stereoscopic visual input in the striate cortex (Anzai et al., 1999a,b). There were no significant differential effects when contrasting monocular with binocular conditions.

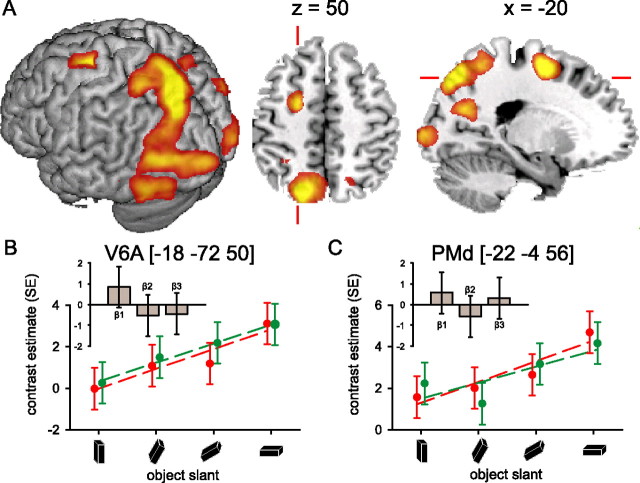

Reaching-grasping movements toward a target object that deviates progressively from the vertical plane increased cerebral activity along the occipito-parietal fissure [anterior visual area 6 (V6A)] and in the anterior part of the dorsal precentral gyrus [dorsal premotor cortex (PMd)] (Fig. 4, Table 2). It might be argued that these effects could be driven by residual between-subjects behavioral differences in planning and/or execution of the movements. To address this issue, we assessed the subject-by-subject relationship between the magnitude of cerebral and behavioral effects. For each subject, we calculated the parameter estimates of the BOLD signal evoked in V6A and PMd (and also in LOtv, AIP, PMv), separately for monocular and binocular viewing conditions, and regardless of object slant. The behavioral effects we considered were the mean RT, PT, and GRASP time. Regression analysis showed that the cerebral (BOLD) variance in those regions could not be explained by the behavioral effects. The same outcome emerged when considering the relationship between cerebral and behavioral changes as a function of object slant. These results indicate that neither the main nor the parametric effect of viewing condition on brain activity could be explained by timing differences in planning and executing the movement.

Figure 4.

Cerebral effects: object slant. A, Cerebral activity related to the prehension task increased as a function of increasing slant of the target object in occipital cortex (bilaterally), along the occipito-parietal fissure and in the anterior part of the dorsal precentral gyrus (Table 2). The image shows the relevant SPM{t} (p < 0.05 false-discovery rate corrected) superimposed on a rendered brain surface, an axial and a sagittal section. B, C, Cerebral responses over the occipito-parietal fissure (V6A) and the anterior part of the superior precentral gyrus (PMd), respectively. The graphs show parameter estimates (in SE units) for the effect evoked by the prehension task over object orientations for both viewing conditions separately. In these regions, the cerebral effects of the monocular (red) and binocular (green) viewing condition were comparable, but a strong effect of increasing object slant on cerebral activity can be seen in V6A and PMd, independent of viewing conditions. The histograms (insets) show the parameter estimates of the three basis functions used in the fMRI analysis [i.e., a canonical hemodynamic response function (β1), the weighted temporal derivative (β2), and the weighted dispersion derivative (β3)] at the corresponding local maxima. These histograms illustrate the differential BOLD amplitude (β1), latency (β2), and duration (β3) relative to the VISION by ORIENTATION interaction (i.e., the differential cerebral activity between monocular and binocular viewing conditions, increasing with object slant). The histograms show that, in V6A and PMd, the effect of increasing object slant does not differ significantly between monocular and binocular viewing conditions in BOLD amplitude (β1), latency (β2), or duration (β3). Other conventions are as in Figure 3.

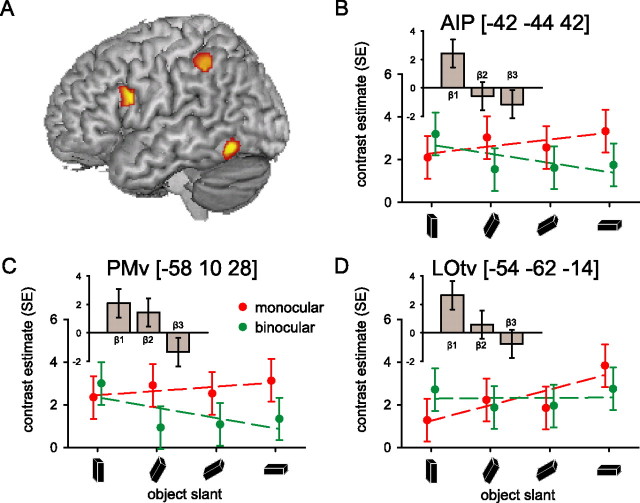

This experiment used different viewing conditions (monocular, binocular) to probe differences in the cerebral responses evoked during the prehension of an object at different slants (VISION by ORIENTATION interaction). We expected that cortical regions involved in processing object slant on the basis of pictorial cues in relation to motor planning would increase their activity more strongly across object orientations in the monocular than in the binocular condition. The left LOtv, a cortical region known to be important for object perception, showed this response pattern (Fig. 5, Table 3). Similar and significant response patterns were also found in two regions of the dorsolateral visuomotor stream, AIP and PMv (Fig. 5, Table 3). The absence of behavioral differences in the VISION by ORIENTATION interaction makes it unlikely that these cerebral effects are a consequence of behavioral differences.

Figure 5.

Cerebral effects: perceptuo-motor interactions. A, Spatial distribution of the differential cerebral activity increasing as a function of object slant between monocular and binocular viewing conditions. The image shows the relevant SPM{t} (p < 0.05 uncorrected for display purposes) superimposed on a rendered brain surface (Table 3). There were no significant differential effects when contrasting the parametric effects of increasing object slant under binocular viewing conditions with the parametric effects under monocular viewing conditions. B–D, Cerebral responses over the anterior inferior intraparietal sulcus (AIP), the inferior precentral gyrus (PMv), and the inferior occipital gyrus (LOtv). The graphs show parameter estimates of the cerebral responses evoked by the prehension task over different object orientations for the monocular (red) and binocular (green) viewing conditions separately. The histograms (insets) illustrate the differential BOLD amplitude (β1), latency (β2), and duration (β3) relative to the VISION by ORIENTATION interaction [i.e., the differential cerebral activity between monocular and binocular viewing conditions, increasing with object slant (as in Fig. 4)]. It can be seen that, in AIP, PMv, and LOtv, the parametric effect of object slant is stronger in the monocular than the binocular condition (positive β1). In addition, in PMv, the BOLD response starts earlier in time (positive β2) and, in AIP and PMv, the BOLD response is extended in time (negative β3) under monocular compared with binocular viewing conditions with increasing object slant.

Cerebral effects: BOLD latency and duration

We performed a post hoc test on the BOLD latency and duration of the local maxima revealed in the main analysis. The results are shown in the histograms (insets) of Figures 4 and 5. These histograms illustrate the differential BOLD amplitude (β1), latency (β2), and duration (β3) relative to the VISION by ORIENTATION interaction. Accordingly, the significantly positive β1 values observed in LOtv, AIP, and PMv (Fig. 5B–D) index the effects detailed in the associated scatterplots, namely that the parametric effect of object slant is stronger in the monocular than in the binocular condition. Analogously, the β1 values observed in V6A and PMd are not significantly different from zero, indicating the absence of a significant VISION by ORIENTATION interaction (Fig. 4B,C). Crucially, the BOLD latency and duration analyses indicate that the VISION by ORIENTATION interaction evokes earlier BOLD responses in PMv (positive β2), and longer BOLD responses in AIP and PMv (negative β3). Temporal modulation of the BOLD response as a function of viewing conditions was absent in V6A and PMd (Fig. 4B,C).
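The link between the sign of β2 and response latency can be made concrete with a small simulation. The sketch assumes a double-gamma response function (peak near 5 s, undershoot near 15 s) and a temporal derivative formed as the difference between that function and a copy delayed by 1 s. Both choices, and all names, are assumptions for illustration rather than the exact basis set used in the analysis. Under these assumptions, a response that starts earlier than the canonical one loads positively on the derivative regressor, and a later response loads negatively.

```python
import math

def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for t <= 0."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

def hrf(t):
    """Assumed double-gamma hemodynamic response function."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0

def temporal_derivative(t, step=1.0):
    """Finite difference between the response and a copy delayed by `step` s."""
    return (hrf(t) - hrf(t - step)) / step

def fit_two_regressors(y, r1, r2):
    """Least-squares weights (b1, b2) for y ~ b1*r1 + b2*r2."""
    s11 = sum(a * a for a in r1)
    s22 = sum(b * b for b in r2)
    s12 = sum(a * b for a, b in zip(r1, r2))
    t1 = sum(a * v for a, v in zip(r1, y))
    t2 = sum(b * v for b, v in zip(r2, y))
    det = s11 * s22 - s12 * s12
    return ((t1 * s22 - t2 * s12) / det, (s11 * t2 - s12 * t1) / det)

times = [i * 0.1 for i in range(321)]           # 0 to 32 s in 0.1 s steps
canonical = [hrf(t) for t in times]
derivative = [temporal_derivative(t) for t in times]

earlier = [hrf(t + 0.5) for t in times]         # response shifted 0.5 s earlier
later = [hrf(t - 0.5) for t in times]           # response shifted 0.5 s later

amp_early, lat_early = fit_two_regressors(earlier, canonical, derivative)
amp_late, lat_late = fit_two_regressors(later, canonical, derivative)
print(lat_early, lat_late)
```

The same reasoning applies to the dispersion derivative and response duration, with the sign convention depending on how that derivative is defined.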

Cerebral effects: effective connectivity

We assessed the functional relevance of the changes in BOLD signal by testing whether LOtv, AIP, and PMv increased their interregional couplings as processing object slant on the basis of pictorial cues became increasingly relevant for accurate motor planning. We found increased couplings between AIP and both LOtv (p = 0.017) and PMv (p = 0.020) as a function of object slant during the monocular trials (compared with the binocular trials).

Discussion

The current study investigated cerebral processes supporting the integration of perceptual and motor processes by asking subjects to reach and grasp an object under binocular or monocular viewing conditions. In the latter condition, pictorial cues of depth information became more relevant for planning an appropriate prehension movement as the object's slant increased. Under these circumstances, the anterior intraparietal region (AIP) increased its functional coupling with both frontal premotor regions (PMv) and occipito-temporal perceptual areas (LOtv). In the following sections, we elaborate on the implications of these findings for models of the neural control of prehension movements.

Behavioral effects

During MR scanning, subjects performed an ecologically relevant prehension task (i.e., with a direct line of sight of the target object, without mismatches between visual target information and proprioceptive input related to the moving arm). The grasping movement was performed without on-line visual feedback (i.e., differences between viewing conditions were confined to the presentation of the target object before the start of the movement). The difference in the initial viewing conditions (monocular or binocular) influenced subjects' performance, with longer planning and execution times when only monocular object cues were available (Fig. 2A). This result confirms previous observations (Jackson et al., 1997; Bradshaw and Elliott, 2003; Loftus et al., 2004) and corroborates the sensitivity of the behavioral measures acquired during MR scanning. Crucially, GRASP time was not influenced by the object slant, excluding the possibility that orientation-related cerebral effects could be a by-product of behavioral differences. This result might appear at odds with previous studies reporting increasing prehension times with increasing slant when subjects viewed the object during prehension, with prehension time ending when both fingers stopped moving (Mamassian, 1997; van Bergen et al., 2007). However, experimental differences may account for this: the current setting prevented on-line visual feedback, and the first contact of either thumb or index finger with the object defined the end of the movement. Accordingly, the effect of increasing object slant becomes evident in the end-point variance of the prehension movement (regardless of viewing conditions) (Fig. 2B), suggesting that computational demands for specifying the appropriate movement parameters toward slanted objects were increased.

Cerebral effects

Reaching and grasping a rectangular prism with the right hand increased metabolic activity in large portions of occipito-temporal (bilaterally) and parieto-frontal regions (mainly left hemisphere) (Fig. 3). Within these spatially distributed responses, activity in the medial occipito-parietal fissure [putative area V6A (Pitzalis et al., 2006)] and in the superior precentral gyrus [putative area PMd (Davare et al., 2006)] increased proportionally to the slant of the object, regardless of viewing conditions (Fig. 4). The effect is unlikely to be a by-product of longer periods of motor output, somatosensory feedback, or visual inspection of the object evoked by grasping more horizontal objects, because the orientation-related changes in V6A and PMd activity were not associated with changes in GRASP time or with lengthening of the BOLD response (Fig. 4B,C). Rather, given that the end-point variance of the index finger increased with increasing object slant regardless of viewing conditions (Fig. 2B), we suggest that the orientation-related increases in V6A and PMd activity could result from the increased computational demands of specifying appropriate prehension parameters with greater predicted variability of the movement. This hypothesis fits with the general notion that V6A and PMd, two crucial nodes of the dorsomedial visuomotor stream (Tanne-Gariepy et al., 2002; Galletti et al., 2003), are involved in processing visuospatial information for visual control of reaching-grasping movements (Fattori et al., 2001, 2005; Grol et al., 2007). These two regions are known to be critically involved in encoding the spatial location of a movement target (Pisella et al., 2000; Medendorp et al., 2003) and to integrate target- and effector-related information (Pesaran et al., 2006; Beurze et al., 2007). The present findings provide additional support for the hypothesis that the dorsomedial visuomotor stream is involved in the specification of spatial parameters for prehension movements on the basis of visual information acquired before response onset (Pisella et al., 2000; Grol et al., 2007), regardless of viewing conditions or target characteristics.

The activity pattern of V6A and PMd can be contrasted with the responses found in AIP and PMv [parts of the dorsolateral visuomotor stream (Jeannerod et al., 1995)] and in LOtv (a part of the ventral visual stream) (Fig. 5). Four effects were evident. First, these three regions increased their activity when a combination of monocular viewing conditions and object slant increased the relevance of pictorial cues to determine the orientation of the object to be grasped. This VISION by ORIENTATION interaction did not evoke corresponding behavioral differences (Fig. 2), and the subject-by-subject variability in planning and execution time did not account for variations in the activity of these three regions. Second, during binocular vision, whereas AIP and PMv decreased their activity with increasing object slant (p < 0.05) (Fig. 5B,C), LOtv showed a stable level of activity across object orientations (Fig. 5D). Third, AIP increased its effective connectivity with both PMv and LOtv during monocular trials as the slant of the target object increased. Fourth, both PMv and AIP BOLD responses extended over longer portions of monocular trials as the object slant increased (Fig. 5B,C). These temporal modulations were regionally specific (being absent in V6A and PMd) (Fig. 4B,C), differential in nature (being relative to the interaction between viewing conditions and object slant), and occurred over and above changes in BOLD magnitude (see Materials and Methods). Therefore, they cannot be accounted for by generic differences in neurovascular coupling between cerebral regions, or by by-products of increased BOLD responses (Friston et al., 1998b).

These findings suggest a specific scenario of perceptuo-motor interactions involving the dorsomedial, the dorsolateral, and the ventral visual streams. Namely, when prehension movements are organized on the basis of depth information obtained by processing pictorial cues (i.e., monocular trials with slanted objects), the dorsolateral stream enhances its coupling with the ventral stream and increases and lengthens its contribution to the visuomotor process, presumably by strengthening its intrinsic interregional effective connectivity. In other words, when a high degree of visuomotor precision is required, but on-line visual feedback is absent and perceptual information is necessary for planning a correct movement, then the dorsolateral stream appears to support the visuomotor process on the basis of perceptual information provided by the ventral stream. In contrast, when depth information is less relevant for organizing prehension movements (i.e., trials with near-vertical objects), or when it can be obtained by binocular cues (i.e., stereopsis or vergence), then the contributions of the dorsolateral stream to the sensorimotor transformation are reduced, and the perceptual processes in the ventral stream are not involved in organizing the prehension movement. Under these circumstances, the visuomotor process could be driven by the dorsomedial stream relying on information accumulated immediately before motor execution.

Interpretational issues

In this study, we prevented on-line visual control during action performance and separated the effects driven by the prehension movements from those evoked by the stereotypical return movement to the home key. The lack of on-line visual feedback might have increased LOtv activity, given that this region is particularly responsive during delayed actions (Culham et al., 2006). However, the lack of on-line visual feedback cannot explain the differential responses found in LOtv, given that on-line visual feedback was absent during both monocular and binocular trials.

The current study provides specific information for understanding the influence of perceptual processes on the motor system during the initial stages of sensorimotor transformations. However, this focus might also limit the relevance of this study for models of on-line action control. It remains to be seen whether the reported interactions between perceptual and visuomotor processes are specifically evoked by the on-line control component of visually guided movements (Glover, 2003).

In addition to task-related changes in BOLD signals, we also explored changes in interregional couplings among a priori defined regions that are important for reaching-grasping behavior (Amedi et al., 2002; Culham et al., 2006; Davare et al., 2006). Accordingly, the scope of the results is limited by the exploratory nature of the present connectivity analysis (Friston et al., 1997). For instance, it is conceivable that LOtv could influence the dorsolateral visuomotor stream not only through AIP, but also via the ventral prefrontal cortex (Ungerleider et al., 1989; Petrides and Pandya, 2002; Croxson et al., 2005; Tomassini et al., 2007). This issue can be addressed by using more sophisticated and hypothesis-driven models of effective connectivity (Friston et al., 2003).

Conclusion

We have assessed the cerebral processes underlying perceptuo-motor interactions during prehension movements directed toward objects at different slants and viewed under either monocular or binocular conditions. We found that dorsomedial parieto-frontal regions (V6A, PMd) were involved in the prehension movements regardless of viewing conditions. In contrast, perceptual information processed in the ventral stream (LOtv) influenced the visuomotor process through dorsolateral parieto-frontal regions (AIP, PMv). These results point to different functional roles of dorsomedial and dorsolateral parieto-frontal circuits. The latter circuit might provide a privileged computational ground for incorporating perceptual information into a sensorimotor transformation, whereas the dorsomedial circuit might support motor planning on the basis of advance visuospatial information.

Footnotes

This work was supported by The Netherlands Organisation for Scientific Research Project Grant 400-04-379 (H.C.D., I.T.). We thank Paul Gaalman, Erik van den Boogert, and Bram Daams for their excellent technical support.

References

- Amedi A, Malach R, Hendler T, Peled S, Zohary E. Visuo-haptic object-related activation in the ventral visual pathway. Nat Neurosci. 2001;4:324–330. doi: 10.1038/85201. [DOI] [PubMed] [Google Scholar]

- Amedi A, Jacobson G, Hendler T, Malach R, Zohary E. Convergence of visual and tactile shape processing in the human lateral occipital complex. Cereb Cortex. 2002;12:1202–1212. doi: 10.1093/cercor/12.11.1202. [DOI] [PubMed] [Google Scholar]

- Amunts K, Malikovic A, Mohlberg H, Schormann T, Zilles K. Brodmann's areas 17 and 18 brought into stereotaxic space—where and how variable? NeuroImage. 2000;11:66–84. doi: 10.1006/nimg.1999.0516. [DOI] [PubMed] [Google Scholar]

- Anzai A, Ohzawa I, Freeman RD. Neural mechanisms for processing binocular information. I. Simple cells. J Neurophysiol. 1999a;82:891–908. doi: 10.1152/jn.1999.82.2.891. [DOI] [PubMed] [Google Scholar]

- Anzai A, Ohzawa I, Freeman RD. Neural mechanisms for processing binocular information. II. Complex cells. J Neurophysiol. 1999b;82:909–924. doi: 10.1152/jn.1999.82.2.909. [DOI] [PubMed] [Google Scholar]

- Ashburner J, Friston KJ. Unified segmentation. NeuroImage. 2005;26:839–851. doi: 10.1016/j.neuroimage.2005.02.018. [DOI] [PubMed] [Google Scholar]

- Benjamini Y, Hochberg Y. Controlling the false discovery rate—a practical and powerful approach to multiple testing. J R Stat Soc B. 1995;57:289–300. [Google Scholar]

- Beurze SM, de Lange FP, Toni I, Medendorp WP. Integration of target and effector information in the human brain during reach planning. J Neurophysiol. 2007;97:188–199. doi: 10.1152/jn.00456.2006. [DOI] [PubMed] [Google Scholar]

- Bradshaw MF, Elliott KM. The role of binocular information in the “on-line” control of prehension. Spat Vis. 2003;16:295–309. doi: 10.1163/156856803322467545. [DOI] [PubMed] [Google Scholar]

- Croxson PL, Johansen-Berg H, Behrens TE, Robson MD, Pinsk MA, Gross CG, Richter W, Richter MC, Kastner S, Rushworth MF. Quantitative investigation of connections of the prefrontal cortex in the human and macaque using probabilistic diffusion tractography. J Neurosci. 2005;25:8854–8866. doi: 10.1523/JNEUROSCI.1311-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Culham JC, Cavina-Pratesi C, Singhal A. The role of parietal cortex in visuomotor control: what have we learned from neuroimaging? Neuropsychologia. 2006;44:2668–2684. doi: 10.1016/j.neuropsychologia.2005.11.003. [DOI] [PubMed] [Google Scholar]

- Davare M, Andres M, Cosnard G, Thonnard JL, Olivier E. Dissociating the role of ventral and dorsal premotor cortex in precision grasping. J Neurosci. 2006;26:2260–2268. doi: 10.1523/JNEUROSCI.3386-05.2006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Dijkerman HC, Milner AD, Carey DP. The perception and prehension of objects oriented in the depth plane. I. Effects of visual form agnosia. Exp Brain Res. 1996;112:442–451. doi: 10.1007/BF00227950. [DOI] [PubMed] [Google Scholar]

- Dijkerman HC, Le S, Demonet JF, Milner AD. Visuomotor performance in a patient with visual agnosia due to an early lesion. Brain Res Cogn Brain Res. 2004;20:12–25. doi: 10.1016/j.cogbrainres.2003.12.007. [DOI] [PubMed] [Google Scholar]

- Duvernoy HM, Cabanis EA, Vannson JL. The human brain: surface, three-dimensional sectional anatomy and MRI. Vienna: Springer; 1991. [Google Scholar]

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. NeuroImage. 2005;25:1325–1335. doi: 10.1016/j.neuroimage.2004.12.034. [DOI] [PubMed] [Google Scholar]

- Fattori P, Gamberini M, Kutz DF, Galletti C. “Arm-reaching” neurons in the parietal area V6A of the macaque monkey. Eur J Neurosci. 2001;13:2309–2313. doi: 10.1046/j.0953-816x.2001.01618.x. [DOI] [PubMed] [Google Scholar]

- Fattori P, Kutz DF, Breveglieri R, Marzocchi N, Galletti C. Spatial tuning of reaching activity in the medial parieto-occipital cortex (area V6A) of macaque monkey. Eur J Neurosci. 2005;22:956–972. doi: 10.1111/j.1460-9568.2005.04288.x. [DOI] [PubMed] [Google Scholar]

- Felleman DJ, Van Essen DC. Distributed hierarchical processing in the primate cerebral cortex. Cereb Cortex. 1991;1:1–47. doi: 10.1093/cercor/1.1.1-a. [DOI] [PubMed] [Google Scholar]

- Fogassi L, Gallese V, Buccino G, Craighero L, Fadiga L, Rizzolatti G. Cortical mechanism for the visual guidance of hand grasping movements in the monkey: a reversible inactivation study. Brain. 2001;124:571–586. doi: 10.1093/brain/124.3.571. [DOI] [PubMed] [Google Scholar]

- Friston KJ, Buechel C, Fink GR, Morris J, Rolls E, Dolan RJ. Psychophysiological and modulatory interactions in neuroimaging. NeuroImage. 1997;6:218–229. doi: 10.1006/nimg.1997.0291. [DOI] [PubMed] [Google Scholar]

- Friston KJ, Fletcher P, Josephs O, Holmes A, Rugg MD, Turner R. Event-related fMRI: characterizing differential responses. NeuroImage. 1998a;7:30–40. doi: 10.1006/nimg.1997.0306. [DOI] [PubMed] [Google Scholar]

- Friston KJ, Josephs O, Rees G, Turner R. Nonlinear event-related responses in fMRI. Magn Reson Med. 1998b;39:41–52. doi: 10.1002/mrm.1910390109. [DOI] [PubMed] [Google Scholar]

- Friston KJ, Harrison L, Penny W. Dynamic causal modelling. NeuroImage. 2003;19:1273–1302. doi: 10.1016/s1053-8119(03)00202-7. [DOI] [PubMed] [Google Scholar]

- Gallese V, Murata A, Kaseda M, Niki N, Sakata H. Deficit of hand preshaping after muscimol injection in monkey parietal cortex. NeuroReport. 1994;5:1525–1529. doi: 10.1097/00001756-199407000-00029. [DOI] [PubMed] [Google Scholar]

- Galletti C, Kutz D, Gamberini M, Breveglieri R, Fattori P. Role of the medial parieto-occipital cortex in the control of reaching and grasping movements. Exp Brain Res. 2003;153:158–170. doi: 10.1007/s00221-003-1589-z. [DOI] [PubMed] [Google Scholar]

- Glover S. Optic ataxia as a deficit specific to the on-line control of actions. Neurosci Biobehav Rev. 2003;27:447–456. doi: 10.1016/s0149-7634(03)00072-1. [DOI] [PubMed] [Google Scholar]

- Goodale MA, Milner AD. Separate visual pathways for perception and action. Trends Neurosci. 1992;15:20–25. doi: 10.1016/0166-2236(92)90344-8. [DOI] [PubMed] [Google Scholar]

- Grill-Spector K, Kushnir T, Edelman S, Avidan G, Itzchak Y, Malach R. Differential processing of objects under various viewing conditions in the human lateral occipital complex. Neuron. 1999;24:187–203. doi: 10.1016/s0896-6273(00)80832-6. [DOI] [PubMed] [Google Scholar]

- Grol MJ, Majdandzic J, Stephan KE, Verhagen L, Dijkerman HC, Bekkering H, Verstraten FA, Toni I. Parieto-frontal connectivity during visually guided grasping. J Neurosci. 2007;27:11877–11887. doi: 10.1523/JNEUROSCI.3923-07.2007. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Henson RN, Price CJ, Rugg MD, Turner R, Friston KJ. Detecting latency differences in event-related BOLD responses: application to words versus nonwords and initial versus repeated face presentations. NeuroImage. 2002;15:83–97. doi: 10.1006/nimg.2001.0940. [DOI] [PubMed] [Google Scholar]

- Hu Y, Eagleson R, Goodale MA. The effects of delay on the kinematics of grasping. Exp Brain Res. 1999;126:109–116. doi: 10.1007/s002210050720. [DOI] [PubMed] [Google Scholar]

- Huxlin KR, Saunders RC, Marchionini D, Pham HA, Merigan WH. Perceptual deficits after lesions of inferotemporal cortex in macaques. Cereb Cortex. 2000;10:671–683. doi: 10.1093/cercor/10.7.671. [DOI] [PubMed] [Google Scholar]

- Jackson SR, Jones CA, Newport R, Pritchard C. A kinematic analysis of goal-directed prehension movements executed under binocular, monocular, and memory-guided viewing conditions. Vis Cogn. 1997;4:113–142. [Google Scholar]

- Jeannerod M, Rossetti Y. Visuomotor coordination as a dissociable visual function: experimental and clinical evidence. In: Kennard C, editor. Visual perceptual defects. London: Bailliere Tindall; 1993. pp. 439–460. [PubMed] [Google Scholar]

- Jeannerod M, Arbib MA, Rizzolatti G, Sakata H. Grasping objects: the cortical mechanisms of visuomotor transformation. Trends Neurosci. 1995;18:314–320. [PubMed] [Google Scholar]

- Johansson RS, Westling G, Backstrom A, Flanagan JR. Eye-hand coordination in object manipulation. J Neurosci. 2001;21:6917–6932. doi: 10.1523/JNEUROSCI.21-17-06917.2001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Knill DC. Surface orientation from texture: ideal observers, generic observers and the information content of texture cues. Vision Res. 1998a;38:1655–1682. doi: 10.1016/s0042-6989(97)00324-6. [DOI] [PubMed] [Google Scholar]

- Knill DC. Discrimination of planar surface slant from texture: human and ideal observers compared. Vision Res. 1998b;38:1683–1711. doi: 10.1016/s0042-6989(97)00325-8. [DOI] [PubMed] [Google Scholar]

- Knill DC, Saunders JA. Do humans optimally integrate stereo and texture information for judgments of surface slant? Vision Res. 2003;43:2539–2558. doi: 10.1016/s0042-6989(03)00458-9. [DOI] [PubMed] [Google Scholar]

- Kourtzi Z, Kanwisher N. Representation of perceived object shape by the human lateral occipital complex. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133. [DOI] [PubMed] [Google Scholar]

- Kovacs G, Sary G, Koteles K, Chadaide Z, Tompa T, Vogels R, Benedek G. Effects of surface cues on macaque inferior temporal cortical responses. Cereb Cortex. 2003;13:178–188. doi: 10.1093/cercor/13.2.178. [DOI] [PubMed] [Google Scholar]

- Loftus A, Servos P, Goodale MA, Mendarozqueta N, Mon-Williams M. When two eyes are better than one in prehension: monocular viewing and end-point variance. Exp Brain Res. 2004;158:317–327. doi: 10.1007/s00221-004-1905-2. [DOI] [PubMed] [Google Scholar]

- Lund TE, Norgaard MD, Rostrup E, Rowe JB, Paulson OB. Motion or activity: their role in intra- and inter-subject variation in fMRI. NeuroImage. 2005;26:960–964. doi: 10.1016/j.neuroimage.2005.02.021. [DOI] [PubMed] [Google Scholar]

- Maldjian JA, Laurienti PJ, Kraft RA, Burdette JH. An automated method for neuroanatomic and cytoarchitectonic atlas-based interrogation of fMRI data sets. NeuroImage. 2003;19:1233–1239. doi: 10.1016/s1053-8119(03)00169-1. [DOI] [PubMed] [Google Scholar]

- Mamassian P. Prehension of objects oriented in three-dimensional space. Exp Brain Res. 1997;114:235–245. doi: 10.1007/pl00005632.

- Marotta JJ, Behrmann M, Goodale MA. The removal of binocular cues disrupts the calibration of grasping in patients with visual form agnosia. Exp Brain Res. 1997;116:113–121. doi: 10.1007/pl00005731.

- Mars RB, Piekema C, Coles MG, Hulstijn W, Toni I. On the programming and reprogramming of actions. Cereb Cortex. 2007;17:2972–2979. doi: 10.1093/cercor/bhm022.

- McIntyre J, Stratta F, Lacquaniti F. Short-term memory for reaching to visual targets: psychophysical evidence for body-centered reference frames. J Neurosci. 1998;18:8423–8435. doi: 10.1523/JNEUROSCI.18-20-08423.1998.

- Medendorp WP, Goltz HC, Vilis T, Crawford JD. Gaze-centered updating of visual space in human parietal cortex. J Neurosci. 2003;23:6209. doi: 10.1523/JNEUROSCI.23-15-06209.2003.

- Mon-Williams M, Tresilian JR, McIntosh RD, Milner AD. Monocular and binocular distance cues: insights from visual form agnosia I (of III). Exp Brain Res. 2001;139:127–136. doi: 10.1007/s002210000657.

- Moore C, Engel SA. Neural response to perception of volume in the lateral occipital complex. Neuron. 2001;29:277–286. doi: 10.1016/s0896-6273(01)00197-0.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Pesaran B, Nelson MJ, Andersen RA. Dorsal premotor neurons encode the relative position of the hand, eye, and goal during reach planning. Neuron. 2006;51:125–134. doi: 10.1016/j.neuron.2006.05.025.

- Petrides M, Pandya DN. Comparative cytoarchitectonic analysis of the human and the macaque ventrolateral prefrontal cortex and corticocortical connection patterns in the monkey. Eur J Neurosci. 2002;16:291–310. doi: 10.1046/j.1460-9568.2001.02090.x.

- Pisella L, Grea H, Tilikete C, Vighetto A, Desmurget M, Rode G, Boisson D, Rossetti Y. An “automatic pilot” for the hand in human posterior parietal cortex: toward reinterpreting optic ataxia. Nat Neurosci. 2000;3:729–736. doi: 10.1038/76694.

- Pitzalis S, Galletti C, Huang RS, Patria F, Committeri G, Galati G, Fattori P, Sereno MI. Wide-field retinotopy defines human cortical visual area V6. J Neurosci. 2006;26:7962–7973. doi: 10.1523/JNEUROSCI.0178-06.2006.

- Quinlan DJ, Culham JC. fMRI reveals a preference for near viewing in the human parieto-occipital cortex. NeuroImage. 2007;36:167–187. doi: 10.1016/j.neuroimage.2007.02.029.

- Rizzolatti G, Matelli M. Two different streams form the dorsal visual system: anatomy and functions. Exp Brain Res. 2003;153:146–157. doi: 10.1007/s00221-003-1588-0.

- Sakata H, Taira M, Murata A, Mine S. Neural mechanisms of visual guidance of hand action in the parietal cortex of the monkey. Cereb Cortex. 1995;5:429–438. doi: 10.1093/cercor/5.5.429.

- Shikata E, Tanaka Y, Nakamura H, Taira M, Sakata H. Selectivity of the parietal visual neurones in 3D orientation of surface of stereoscopic stimuli. NeuroReport. 1996;7:2389–2394. doi: 10.1097/00001756-199610020-00022.

- Shulman GL, Tansy AP, Kincade M, Petersen SE, McAvoy MP, Corbetta M. Reactivation of networks involved in preparatory states. Cereb Cortex. 2002;12:590–600. doi: 10.1093/cercor/12.6.590.

- Tanne-Gariepy J, Rouiller EM, Boussaoud D. Parietal inputs to dorsal versus ventral premotor areas in the macaque monkey: evidence for largely segregated visuomotor pathways. Exp Brain Res. 2002;145:91–103. doi: 10.1007/s00221-002-1078-9.

- Thoenissen D, Zilles K, Toni I. Differential involvement of parietal and precentral regions in movement preparation and motor intention. J Neurosci. 2002;22:9024–9034. doi: 10.1523/JNEUROSCI.22-20-09024.2002.

- Tomassini V, Jbabdi S, Klein JC, Behrens TE, Pozzilli C, Matthews PM, Rushworth MF, Johansen-Berg H. Diffusion-weighted imaging tractography-based parcellation of the human lateral premotor cortex identifies dorsal and ventral subregions with anatomical and functional specializations. J Neurosci. 2007;27:10259–10269. doi: 10.1523/JNEUROSCI.2144-07.2007.

- Toni I, Schluter ND, Josephs O, Friston K, Passingham RE. Signal-, set- and movement-related activity in the human brain: an event-related fMRI study. Cereb Cortex. 1999;9:35–49. doi: 10.1093/cercor/9.1.35. [Erratum (1999) 9:196]

- Toni I, Thoenissen D, Zilles K. Movement preparation and motor intention. NeuroImage. 2001;14:S110–S117. doi: 10.1006/nimg.2001.0841.

- Tunik E, Frey SH, Grafton ST. Virtual lesions of the anterior intraparietal area disrupt goal-dependent on-line adjustments of grasp. Nat Neurosci. 2005;8:505–511. doi: 10.1038/nn1430.

- Ungerleider LG, Gaffan D, Pelak VS. Projections from inferior temporal cortex to prefrontal cortex via the uncinate fascicle in rhesus monkeys. Exp Brain Res. 1989;76:473–484. doi: 10.1007/BF00248903.

- van Bergen E, van Swieten LM, Williams JH, Mon-Williams M. The effect of orientation on prehension movement time. Exp Brain Res. 2007;178:180–193. doi: 10.1007/s00221-006-0722-1.

- Verhagen L, Grol MJ, Dijkerman HC, Toni I. Studying visually-guided reach-to-grasp movements in an MR-environment. NeuroImage. 2006;31:S45.