Abstract

Traditionally, concepts are conceived as abstract mental entities distinct from perceptual or motor brain systems. However, recent results suggest modality-specific representations of concepts. The ultimate test for grounding concepts in perception requires the fulfillment of the following four markers: conceptual processing during (1) an implicit task should activate (2) a perceptual region (3) rapidly and (4) selectively. Here, we show, using functional magnetic resonance imaging and recordings of event-related potentials, that acoustic conceptual features recruit auditory brain areas even when implicitly presented through visual words. Fulfilling the four markers, the findings of our study unequivocally link the auditory and conceptual brain systems: recognition of words denoting objects for which acoustic features are highly relevant (e.g., “telephone”) ignited cell assemblies in posterior superior and middle temporal gyri (pSTG/MTG) within 150 ms that were also activated by sound perception. Importantly, activity within a cluster of pSTG/MTG increased selectively as a function of acoustic, but not of visual and action-related feature relevance. The implicitness of the conceptual task, the selective modulation of left pSTG/MTG activity by acoustic feature relevance, the early onset of this activity at 150 ms and its anatomical overlap with perceptual sound processing are four markers for a modality-specific representation of auditory conceptual features in left pSTG/MTG. Our results therefore provide the first direct evidence for a link between perceptual and conceptual acoustic processing. They demonstrate that access to concepts involves a partial reinstatement of brain activity during the perception of objects.

Keywords: conceptual brain systems, auditory cortex, language, embodied cognition, fMRI, EEG

Introduction

Concepts in long-term memory are important building blocks of human cognition and form the basis for object recognition, language and thought (Humphreys et al., 1988; Levelt et al., 1999). Traditionally, concepts are specified as abstract mental entities different from perceptual or motor brain systems (Anderson, 1983; Tyler and Moss, 2001): sensory or motor features of objects and events are transformed into a common amodal representation format, in which original modality-specific information is lost.

Challenging this classical view, recent modality-specific approaches propose close links between the perceptual and motor brain systems and the conceptual system. They assume that concepts are embodied (Gallese and Lakoff, 2005) in the sense that they are essentially grounded in perception and action (Warrington and McCarthy, 1987; Kiefer and Spitzer, 2001; Martin and Chao, 2001; Barsalou et al., 2003; Pulvermüller, 2005): conceptual features (e.g., visual, acoustic, action-related) are represented by cortical cell assemblies in sensory and motor areas established during concept acquisition (Pulvermüller, 2005; Kiefer et al., 2007b). Activation of these modality-specific cell assemblies either bottom-up by words and objects, or top-down by thought constitutes the concept. Hence, access to concepts involves a partial reinstatement of brain activity during perception and action.

Support for modality-specific approaches comes from behavioral (Boulenger et al., 2006; Helbig et al., 2006), neuropsychological (Warrington and McCarthy, 1987), electrophysiological (Kiefer, 2005) and neuroimaging studies (Martin and Chao, 2001). Although conceptual tasks activated sensory brain areas, convincing evidence for a link between perceptual and conceptual systems is still missing: conceptual categories with a strong emphasis on visual features (i.e., natural kinds) elicited activity in visual brain areas (Martin et al., 1996). However, these findings were not consistently replicated (Gerlach, 2007). Furthermore, they could be alternatively explained by perceptual processing of the visual stimuli themselves (Gerlach et al., 1999; Kiefer, 2001) or by postconceptual strategic processes such as visual imagery (Machery, 2007) that occur after the concept has been fully accessed (Kosslyn, 1994; Hauk et al., 2008a). Hence, it is not sufficient to simply demonstrate a functional-anatomical overlap between conceptual and perceptual processing.

Bridging the gap between the perceptual and conceptual systems, we investigated the neural representation of acoustic conceptual features during the recognition of visually presented object names. In our study, we used functional magnetic resonance imaging (fMRI) and event-related potentials (ERPs) to obtain four converging markers for a link between auditory and conceptual brain systems: (1) We assess whether implicit retrieval of acoustic conceptual features during a lexical decision task is sufficient to drive activity in auditory brain areas. (2) We determine whether this activity emerges rapidly within the first 200 ms of visual word processing. (3) We identify the functional-anatomical overlap between conceptual and perceptual processing of acoustic information. (4) We test the selectivity of the brain response to acoustic conceptual features. To this end, we assess whether the relevance of acoustic features specifically correlates with the MR signal in regions representing acoustic conceptual information. Only if these four markers are met simultaneously can a grounding of acoustic conceptual representations in the auditory perceptual system be convincingly demonstrated.

Materials and Methods

General.

All subjects were right-handed (Oldfield, 1971) native German-speaking volunteers with normal or corrected-to-normal visual acuity and without any history of neurological or psychiatric disorders. They participated after giving written informed consent; the procedures of the study were approved by the local Ethical Committee. In the fMRI experiments (experiments 1 and 2), 16 subjects (7 female; mean age = 25.5) participated. In the ERP experiment (experiment 3), a new sample of 20 subjects (10 female; mean age = 24.0) was involved. Experimental control and data acquisition were performed by the ERTS software package (Berisoft). In the fMRI experiments, visual stimuli were delivered through MR-compatible video goggles (Resonance Technology), and acoustic stimuli through MR-compatible pneumatic headphones (Siemens). In the ERP experiment, visual stimuli were presented on a computer screen.

Stimuli and procedure for the conceptual acoustic task (experiments 1 and 3).

One hundred words and 100 pseudowords were presented visually for 500 ms, preceded by a fixation cross of 750 ms duration. Pronounceable letter strings (pseudowords) served as distracters and were not further analyzed. Two word sets that differed only with regard to the relevance of acoustic conceptual features were formed according to the results of a preceding norming study: a first subject group (n = 20), who did not participate in the main experiments, had to rate a sample of 261 object names for relevance of acoustic features on a scale from 1 to 6. They were asked how strongly they associate acoustic features with the named object. A second independent subject group (n = 20) had to rate the relevance of visual and motor object features as well as emotional valence (pleasant vs unpleasant) of each named object (n = 238) in the same way. The two word sets differed significantly only with regard to the relevance of acoustic features (with vs without acoustic features: 5.0, SD 0.54 vs 1.7, SD 0.55, p < 0.0001), but were comparable for visual (3.76, SD 0.58 vs 3.96, SD 0.49, p = 0.35) and motor features (3.69, SD 0.61 vs 3.54, SD 0.61, p = 0.15). Sets were also matched for emotional valence (0.1, SD 0.70 vs −0.1, SD 0.76, p = 0.12), word length (6.0, SD 1.6 vs 6.0, SD 1.4, p = 1.0) and word frequency (29.0, SD 30 vs 29.0, SD 24, p = 1.0; according to the CELEX lexical database). Half of the words in each set referred to objects from natural categories (animals, plants, fruit), the other half referred to objects from artifact categories (tools, musical instruments, transportation). A pilot study with a lexical decision task (n = 9) showed that word sets with high and low relevance of acoustic features yielded similar reaction times (607 ms vs 609 ms, p = 0.66) and error rates (2.4 vs 2.4, p = 1.0). Hence, word sets exhibited a comparable word recognition difficulty.
In fMRI experiment 1, words and pseudowords were presented in a randomized manner (event-related design) intermixed with trials in which just a black screen was shown (null events). For each stimulus, participants had to respond within a time window of 2000 ms before the next trial started. The mean intertrial interval was 6.1 s varying randomly between 2.4 and 9.8 s. Stimuli were presented within five blocks of 40 trials each (plus 10 null events). In the ERP experiment, words and pseudowords were also presented in a randomized order, but participants initiated each trial with a button press to reduce ocular artifacts in the EEG recordings during the trials.
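
The trial structure of fMRI experiment 1 can be illustrated with a minimal sketch. It is not the ERTS script used in the study. The function name `build_trial_sequence` and all parameter names are hypothetical. The text gives only the mean (6.1 s) and range (2.4 to 9.8 s) of the intertrial interval, so the uniform jitter used here is an assumption (its expected value, (2.4 + 9.8)/2 = 6.1 s, happens to match the reported mean). Whether null events received their own interval, and how words and pseudowords were distributed across blocks, is likewise assumed.

```python
import random

def build_trial_sequence(n_words=100, n_pseudowords=100, n_blocks=5,
                         nulls_per_block=10, iti_range=(2.4, 9.8), seed=0):
    """Return a list of blocks; each block is a list of (trial_type, iti_seconds)."""
    rng = random.Random(seed)
    # Pool all lexical stimuli and randomize their order across the session.
    trials = ["word"] * n_words + ["pseudoword"] * n_pseudowords
    rng.shuffle(trials)
    per_block = len(trials) // n_blocks  # 200 / 5 = 40 trials per block
    blocks = []
    for b in range(n_blocks):
        # Add the null events (blank screen) and shuffle them in.
        block = trials[b * per_block:(b + 1) * per_block] + ["null"] * nulls_per_block
        rng.shuffle(block)
        # Assumption: intertrial intervals are drawn uniformly from the stated range.
        blocks.append([(trial, rng.uniform(*iti_range)) for trial in block])
    return blocks

blocks = build_trial_sequence()
```

Each of the five blocks then holds 40 stimulus trials plus 10 null events, as described above.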

Stimuli and procedure for the perceptual acoustic task (experiment 2).

Ten real sounds from animal and artifactual objects, respectively, and 10 amplitude-modulated colored noise sounds were used as stimuli. All acoustic stimuli had a duration of 500 ms (including rise and fall time) and were presented binaurally at ∼90 dB nHL via closed headphones. All sounds were presented in blocks with a duration of 24 s each (10 stimuli per block with a mean interstimulus interval of 850 ms randomly varying between 400 and 1300 ms). Throughout the experiment, a fixation cross was displayed to minimize eye movements. Participants' task was to attentively listen to the acoustic stimuli while maintaining fixation. Each acoustic stimulation block was preceded and followed by a resting block in which only the fixation cross was shown. The acoustic stimulation blocks (real sounds, acoustic noise) were presented four times in a randomized order. Postexperimental debriefing showed that participants followed the instruction of the sound listening task. Brain activity in response to real sounds and acoustic noise was determined by using functional magnetic resonance imaging (see below).

fMRI scanning and data analysis.

Functional and structural MR images were recorded with a 3 Tesla Allegra MRI system (Siemens). For the functional scans, a T2*-weighted single-shot gradient-echo EPI sequence [TE = 38 ms, TR = 2000 ms, flip angle = 90°, matrix 64 × 64 pixels, field of view (FOV) 210 × 210 mm², voxel size 3.3 × 3.3 × 4.9 mm³] was used. Starting from the bottom of the brain, 30 transversal slices were acquired in interleaved order. Slice orientation was parallel to a line connecting the bases of the frontal lobe and the cerebellum. There were five imaging runs for the entire fMRI experiment, resulting in a total of 1225 functional volumes. Run duration was ∼8 min. Structural images were acquired with a T1-weighted MPRAGE sequence (TR = 2300 ms; TE = 3.9 ms; flip angle = 12°; matrix 256 × 256 pixels, FOV = 256 × 256 mm², voxel size 1 × 1 × 1 mm³). Functional data preprocessing and statistical analyses were performed with SPM2 (http://www.fil.ion.ucl.ac.uk/spm/spm2.html). Functional images were corrected for differences in slice timing, spatially realigned to the first volume of the first run and smoothed with an isotropic Gaussian kernel of 6 mm FWHM. Before smoothing, the realigned images were spatially normalized to the MNI reference brain (resampled voxel size: 2 × 2 × 2 mm³). A temporal high-pass filter with cutoff frequency 1/128 Hz was applied to the data, and temporal autocorrelation in the fMRI time series was estimated (and corrected for) using a first-order autoregressive model. Statistical analysis used a hierarchical random-effects model with two levels. At the first level, single-subject fMRI responses were modeled by a design matrix comprising the stimuli (experiment 1: words with and without acoustic features and pseudowords; experiment 2: blocks of acoustic stimuli) convolved with the canonical hemodynamic response function.
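
How a stimulus regressor is obtained by convolution with a hemodynamic response function can be sketched as follows. This is an illustration, not SPM2 code. The double-gamma shape parameters (6 and 16, undershoot ratio 1/6) are commonly used defaults and are assumed here. The kernel is sampled directly at the TR of 2 s, which is coarser than typical software implementations, and the function names and the single example event are hypothetical.

```python
import math

TR = 2.0  # seconds, as stated in the acquisition parameters

def canonical_hrf(t, peak_shape=6.0, under_shape=16.0, ratio=1.0 / 6.0):
    """Double-gamma response at time t (s); shape values are assumed defaults."""
    if t <= 0:
        return 0.0
    peak = t ** (peak_shape - 1) * math.exp(-t) / math.gamma(peak_shape)
    under = t ** (under_shape - 1) * math.exp(-t) / math.gamma(under_shape)
    return peak - ratio * under

def convolve_with_hrf(stimulus, tr=TR, hrf_length=32.0):
    """Convolve a per-scan stimulus vector with the HRF sampled at the TR."""
    kernel = [canonical_hrf(i * tr) for i in range(int(hrf_length / tr) + 1)]
    out = [0.0] * len(stimulus)
    for i, amplitude in enumerate(stimulus):
        if amplitude == 0:
            continue
        # Add a scaled, shifted copy of the kernel for every event.
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += amplitude * k
    return out

# One hypothetical event at scan 2 of a 20-scan run.
stimulus = [0.0] * 20
stimulus[2] = 1.0
regressor = convolve_with_hrf(stimulus)
```

The resulting regressor rises after the event, peaks a few scans later and then dips below baseline. This predicted time course is what the first-level model fits to each voxel's signal.
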
In experiment 1, we additionally determined whether voxels that are responsive to words with acoustic features specifically increase their signal as a function of the relevance of acoustic conceptual features in a parametric manner. Therefore, a second model was set up which included a linear parametric modulation of the canonical hemodynamic response by the semantic relevance ratings of acoustic, visual and action-related features for each individual object name (for a similar approach, see Hauk et al., 2008c; Hauk et al., 2008b). We restricted the analysis to conceptual features as modulators and did not extend this analysis to linguistic variables such as word frequency or word length, because only conceptual factors were of primary interest. For pseudowords, no parametric modulators were defined. In this analysis, only words and pseudowords were distinguished as conditions. To allow for inferences at the population level, a second-level analysis (one-sample t test) considered the contrast images of all subjects and treated subjects as a random effect. In the event-related analysis of experiment 1 (conceptual task), all comparisons were thresholded at a significance level of p < 0.05 and corrected for multiple comparisons across the entire brain [false discovery rate (FDR)]. In the parametric modulation analysis of experiment 1, only voxels exceeding the threshold of p < 0.05 (FDR-corrected) for the contrast between words with and without acoustic conceptual features were considered. For the analysis of the block design of experiment 2 (perceptual task), significance level was set to p < 0.01 (FDR-corrected). The spatial extent threshold of clusters was 10 voxels in all comparisons. All functional group activation maps are overlaid on the MNI reference brain.
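
The idea behind the parametric modulation model can be sketched in a few lines. This is a simplified illustration, not the SPM2 implementation. The helper names, onsets and ratings are hypothetical, and details such as orthogonalization between several modulators are omitted. Each word event contributes to a main regressor with unit height and to a modulated regressor whose height is the mean-centered relevance rating of that word.

```python
def mean_center(values):
    """Subtract the mean so the modulator is orthogonal to the main effect."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]

def build_modulated_sticks(onset_scans, ratings, n_scans):
    """Return (main, modulated) stick functions defined on the scan grid."""
    centered = mean_center(ratings)
    main = [0.0] * n_scans
    modulated = [0.0] * n_scans
    for scan, weight in zip(onset_scans, centered):
        main[scan] += 1.0          # every word event has unit height
        modulated[scan] += weight  # height scales with feature relevance
    return main, modulated

# Hypothetical onsets (in scans) and acoustic relevance ratings (1-6 scale).
onsets = [1, 5, 9, 13]
acoustic_ratings = [5.5, 1.5, 4.0, 2.0]
main, acoustic_mod = build_modulated_sticks(onsets, acoustic_ratings, n_scans=16)
```

Both stick functions would then be convolved with the hemodynamic response function. A positive weight for the modulated regressor indicates that the signal increases with feature relevance.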

ERP recordings and data analysis.

ERP recordings were performed in a dimly lit, sound-attenuated, electrically shielded booth. Scalp potentials were collected using an equidistant montage of 64 sintered Ag/AgCl electrodes mounted in an elastic cap (Easy Cap). An electrode between Fpz and Fz was connected to the ground, and an electrode between Cz and FCz was used as recording reference. Eye movements were monitored with supra- and infraorbital electrodes and with electrodes on the external canthi. Electrode impedance was kept <5 kΩ. Electrical signals were amplified with Synamps amplifiers (low-pass filter: 70 Hz, 24 dB/octave attenuation; 50 Hz notch filter) and continuously recorded (digitization rate: 250 Hz), digitally bandpass filtered (high cutoff: 16 Hz, 24 dB/octave attenuation; low cutoff: 0.1 Hz, 12 dB/octave attenuation) and segmented (152 ms before to 1000 ms after the onset of the stimulus). EEG data were corrected to a 152 ms baseline before the onset of the stimulus. Separately for each experimental condition, artifact-free EEG segments to trials with correct responses were averaged synchronous to the onset of the stimulus (BrainVision Analyzer, BrainProducts). To obtain a reference independent estimation of scalp voltage, the average-reference transformation was applied to the ERP data.
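
Two of the preprocessing steps described above, baseline correction and the average-reference transformation, can be sketched as follows. This is a minimal illustration on a toy epoch, not the BrainVision Analyzer procedure. The function names and data values are hypothetical, and the sketch re-references only the channels it is given.

```python
SAMPLING_RATE_HZ = 250  # digitization rate given in the text
BASELINE_MS = 152       # prestimulus interval given in the text

def baseline_correct(epoch, n_baseline):
    """Subtract each channel's mean over the first n_baseline samples."""
    corrected = []
    for channel in epoch:
        base = sum(channel[:n_baseline]) / n_baseline
        corrected.append([v - base for v in channel])
    return corrected

def average_reference(epoch):
    """Subtract the across-channel mean at every time point."""
    n_channels = len(epoch)
    n_samples = len(epoch[0])
    means = [sum(ch[t] for ch in epoch) / n_channels for t in range(n_samples)]
    return [[ch[t] - means[t] for t in range(n_samples)] for ch in epoch]

# 152 ms at 250 Hz corresponds to 38 samples.
n_baseline = round(BASELINE_MS * SAMPLING_RATE_HZ / 1000)

# Toy epoch: 3 channels x 4 samples, with the first 2 samples as "baseline".
toy_epoch = [[1.0, 3.0, 5.0, 7.0], [2.0, 2.0, 2.0, 2.0], [0.0, 4.0, 8.0, 0.0]]
toy_result = average_reference(baseline_correct(toy_epoch, 2))
```

After the average-reference step, the potentials sum to zero across channels at every time point, which makes the estimate independent of the recording reference.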

Statistical analysis of the ERP data focused on a time window of interest around the offset of the word recognition process (150–250 ms after stimulus onset) (Pulvermüller et al., 2005). Analysis was performed for central electrodes, at which auditory evoked potentials are typically recorded (electrodes F1/F2, FC1/FC2, CP1/CP2, Cz, FCz, Fz) (Näätänen, 1992). When appropriate, degrees of freedom were adjusted according to the method of Huynh-Feldt (Huynh and Feldt, 1970). Only the corrected significance levels were reported. Significance level was p < 0.05. We determined the neural sources for significant effects of word type (words with and without acoustic features) using distributed source modeling (minimum norm source estimates) (Hauk, 2004) implemented in BESA 5.1 (MEGIS). Sources were computed for the grand-averaged ERP difference waves between words with and without acoustic conceptual features to eliminate unspecific brain activity associated with word reading. Minimum norm source estimates (minimum L2 norm) were calculated using a standardized realistic head model [finite element model (FEM)]. For estimating the noise regularization parameters, the prestimulus baseline was used. Minimum norm was computed with depth weighting, spatio-temporal weighting and noise weighting for each individual channel. Cortical currents were determined within the time window during which significant ERP differences were obtained at the time point of maximal global field power (GFP) in the ERP difference waves to ensure optimal signal-to-noise-ratio (Kiefer et al., 2007a). Talairach coordinates for the activation peaks were determined on the 2D surface covering the cortex on which the source solution was computed. We report the nearest Brodmann areas (BA) to the peak activations located by the Talairach Daemon (Lancaster et al., 2000).
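
Selecting the time point of maximal global field power can be illustrated with a short sketch. It assumes the common definition of GFP as the spatial standard deviation of the average-referenced potentials across electrodes. The function names and the toy difference wave are hypothetical, and the source estimation itself (performed in BESA) is not reproduced.

```python
import math

def global_field_power(erp):
    """GFP per time point: standard deviation across channels."""
    n_channels = len(erp)
    n_samples = len(erp[0])
    gfp = []
    for t in range(n_samples):
        column = [erp[c][t] for c in range(n_channels)]
        mean = sum(column) / n_channels
        gfp.append(math.sqrt(sum((v - mean) ** 2 for v in column) / n_channels))
    return gfp

def gfp_peak_index(erp, start, stop):
    """Sample index of the GFP maximum within the range [start, stop)."""
    gfp = global_field_power(erp)
    return max(range(start, stop), key=lambda t: gfp[t])

# Hypothetical 3-channel difference wave with 5 samples (microvolts).
difference_wave = [
    [0.0, 1.0, 3.0, 1.0, 0.0],
    [0.0, -1.0, -3.0, -1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
peak = gfp_peak_index(difference_wave, 0, 5)
```

Restricting `start` and `stop` to the samples of the significant time window yields the latency at which the scalp topography is strongest and at which sources would be estimated.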

Results

fMRI experiment 1: conceptual task

Analysis of the behavioral data of the lexical decision task revealed similar reaction times (RT) and error rates (ER) for words with acoustic conceptual features (RT: 749 ms, SD 140; ER: 3.3%, SD 3.5) and without acoustic conceptual features (RT: 753 ms, SD 147; ER: 2.1%, SD 2.8; all p > 0.07). This shows that visual recognition of both word types was comparably difficult.

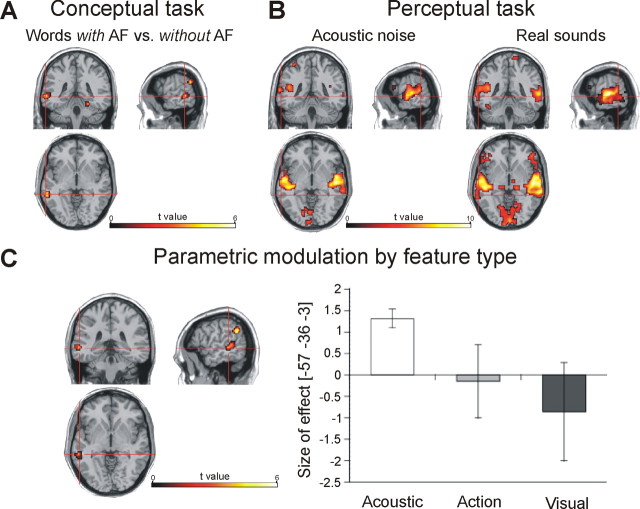

In a first step of the analysis of the MR data, we identified brain areas involved in conceptual processing of acoustic features by comparing the MR signal to words with acoustic conceptual features and to those without such features. As shown in Figure 1A, we found greater activity to words with acoustic conceptual features in a large cluster of voxels encompassing left posterior superior temporal gyrus (pSTG) and middle temporal gyrus (pMTG) corresponding to BA 22 and 21, respectively (p < 0.05, corrected for multiple comparisons across the entire brain). This region belongs to the auditory association cortex (see Fig. 4). Smaller clusters were obtained in left supramarginal and right fusiform gyri. Words without acoustic conceptual features, in contrast, did not elicit a significantly higher MR signal. In a next step, we related relevance ratings of acoustic, visual and action-related conceptual features to the MR signal in a parametric modulation analysis for the entire brain. Most importantly, we observed a specific brain–behavior relationship between activity in left pSTG/MTG and acoustic conceptual features: the MR signal in this area was parametrically modulated and increased linearly as a function of the relevance of acoustic features for a concept as measured by the word ratings (Fig. 1C). In contrast, the relevance of visual and action features did not significantly modulate the MR signal in left pSTG/pMTG. Extent and location of the cluster obtained in the parametric modulation analysis were identical to those of the cluster found in the conventional contrast analysis. Activity in left supramarginal gyrus was modulated by the relevance of both acoustic and action features, thereby lacking the specificity for acoustic conceptual features found in pSTG/pMTG. Relevance of visual conceptual features as parameter did not significantly modulate the MR signal.
These findings show that left pSTG/pMTG is essentially and specifically involved in representing acoustic conceptual features.

Figure 1.

Functional brain activation during the conceptual and the perceptual tasks. A, Conceptual task: activation to words with versus words without acoustic features (AF), p < 0.05, corrected. B, Perceptual task: activation during listening to acoustic noise and real sounds, respectively, p < 0.01, corrected. C, Parametric modulation: linear increase of the MR signal by acoustic feature relevance (left), p < 0.05 corrected. The bar chart (right) depicts the effect size for the contributions of acoustic, action-related, and visual conceptual features at the peak voxel within the pSTG/MTG cluster. Small vertical bars indicate the SEM.

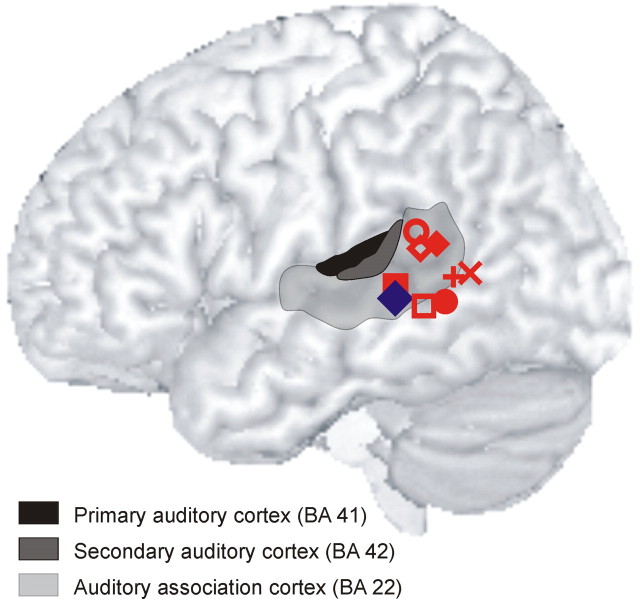

Figure 4.

Summary of findings from previous imaging studies on the role of pSTG/MTG in sound processing. Peak activations (red symbols) are overlaid on the MNI reference brain. Posterior STG/MTG has been found activated during voice listening (Belin et al., 2000) (◇), sound recognition [Lewis et al., 2004 (×); Specht and Reul, 2003 (■)], word recognition (Specht and Reul, 2003) (●), sound retrieval (Wheeler et al., 2000) (○), sound imagery (Zatorre et al., 1996) (♦, □), and sound verification (Kellenbach et al., 2001) (+). Peak activation of acoustic conceptual processing from the present study (blue diamond) is located within this cluster of previously reported activations in pSTG/MTG. The gray-shaded areas schematically delineate the respective anatomical location of primary (BA 41) and secondary auditory cortex (BA 42) as well as auditory association cortex (BA 22).

fMRI experiment 2: perceptual task

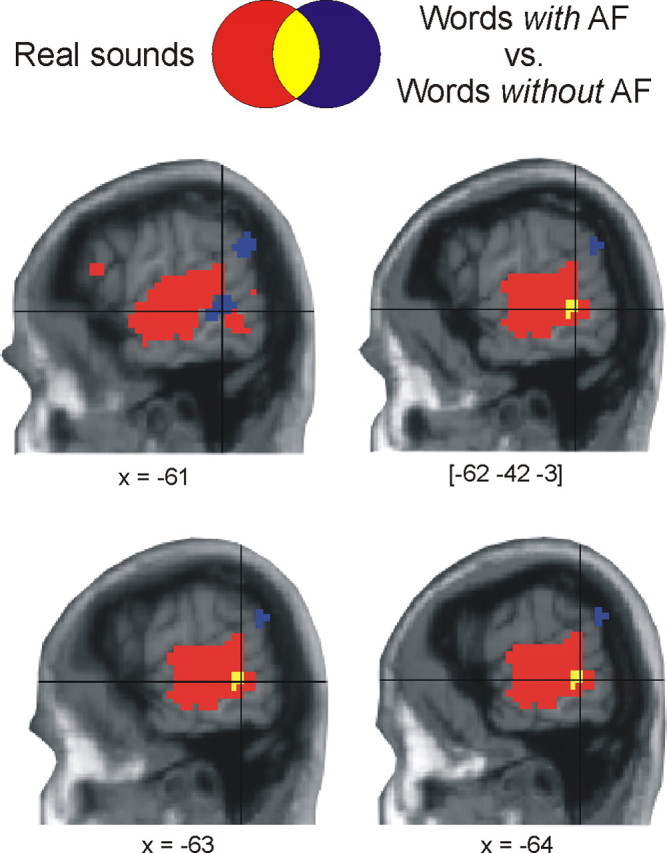

In the second experiment conducted within the participant group of experiment 1, we determined brain areas involved in listening to real sounds (p < 0.01, corrected for multiple comparisons across the entire brain). When contrasted to the resting condition, both active conditions (real sounds, acoustic noise) recruited the superior temporal cortex including the temporal plane bilaterally (Fig. 1B). Based on the known neuroanatomy of the auditory system (Howard et al., 2000), the temporal plane comprises primary and secondary auditory cortex whereas the neighboring areas in superior and middle temporal gyri encompass auditory association areas. The MR signal was generally higher to real sounds than to acoustic noise in the temporal plane and in superior temporal cortex. Most importantly, the large cluster that responded to real sounds encompassed a region of the auditory association cortex in left pSTG/MTG (1.4 cm3 = 7 voxels) which overlapped with the cluster activated by words with acoustic conceptual features (Fig. 2). Because the latter cluster was identical with that obtained in the parametric modulation analysis, this overlapping cluster for perceptual and conceptual sound processing had the same size regardless of the form of analysis in determining the neural substrate of acoustic conceptual features. Hence, perceiving real sounds and processing of acoustic conceptual features share a common neural substrate in temporal cortex.
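
The overlap analysis amounts to intersecting two thresholded activation maps. A minimal sketch follows, with hypothetical voxel indices and a hypothetical helper name. It does not reproduce the actual cluster coordinates or sizes reported here.

```python
def overlapping_voxels(map_a, map_b):
    """Voxels (x, y, z index tuples) that survive the threshold in both maps."""
    return sorted(set(map_a) & set(map_b))

# Hypothetical suprathreshold voxels from the conceptual and perceptual contrasts.
conceptual_cluster = [(10, 20, 30), (10, 21, 30), (11, 21, 30)]
perceptual_cluster = [(10, 21, 30), (11, 21, 30), (12, 21, 30)]
shared = overlapping_voxels(conceptual_cluster, perceptual_cluster)
```

Because each map is thresholded separately before the intersection, a voxel counts as shared only if it is significant in both contrasts.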

Figure 2.

Functional-anatomical overlap between conceptual and perceptual processing of acoustic features. Increased functional activation to words with acoustic features (p < 0.05, corrected) overlaps with brain activation during listening to real sounds (p < 0.01, corrected) in pSTG/MTG. Shown are contiguous slices centered on the peak coordinates.

ERP experiment 3: conceptual task

In the ERP experiment, lexical decision latencies and ER were similar for words with acoustic conceptual features (RT: 591 ms, SD 79; ER: 3.9%, SD 1.7) and without acoustic conceptual features (RT: 599 ms, SD 80; ER: 3.9%, SD 2.6; all p > 0.08), demonstrating a comparable level of task difficulty for both word types, as in experiment 1.

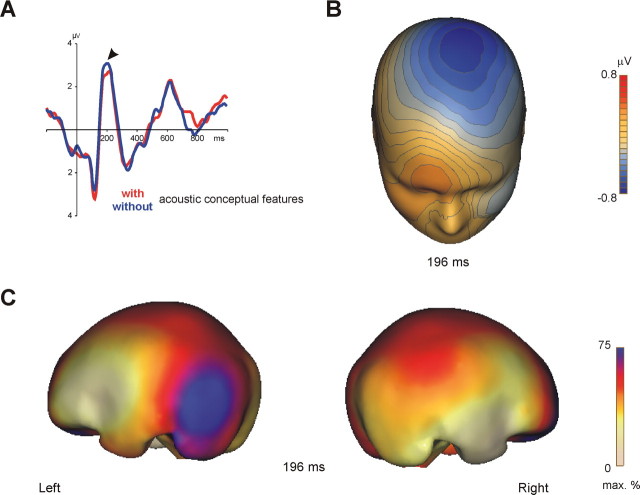

Analysis of ERP recordings revealed that auditory areas are rapidly ignited at ∼150 ms after word onset (Fig. 3): ERPs to words with and without acoustic conceptual features diverged significantly (p = 0.03) from each other within a time window of 150–200 ms at all electrodes of the central electrode cluster. At these electrode sites, acoustically evoked potentials are typically recorded (Näätänen, 1992). Hence, scalp ERP effects had the topography of auditory evoked potentials with an amplitude maximum at central electrodes (Scherg and von Cramon, 1984). The amplitude of these ERP components is increased if attention is directed toward auditory stimuli (Rugg and Coles, 1995). Source analysis of scalp ERPs with minimum norm estimates (Hauk, 2004) identified generators in and close to pSTG/MTG (BA 21, 22).

Figure 3.

Time course of conceptual processing of acoustic features. A, Event-related scalp potentials to words with vs without acoustic features at central electrodes. Potentials are collapsed across electrode sites. The arrow indicates the onset of the effect. B, Topography of the ERP effect as recorded at the scalp at its maximum global field power. Shown is the interpolated potential difference between conditions (ERPs to words with acoustic features subtracted from ERPs to words without acoustic features). Note the relative negativity to words with acoustic features at the central scalp (visualized in blue color). In this scalp region, auditory evoked potentials are typically recorded (Näätänen, 1992). C, Brain electrical sources of scalp ERPs: maps of cortical currents calculated according to the minimum norm algorithm from the ERP difference waves. Maps are shown for the respective maxima in global field power. Strongest cortical currents (visualized in blue color) were observed in and close to left pSTG/MTG.

Discussion

Theories of embodied conceptual representations propose that concepts are grounded in the sensory and motor systems of the brain (Warrington and McCarthy, 1987; Kiefer and Spitzer, 2001; Martin and Chao, 2001; Barsalou et al., 2003; Gallese and Lakoff, 2005; Pulvermüller, 2005). It is assumed that access to a concept depends on a partial reinstatement of brain activity that initially occurred during perception of objects and events. The ultimate test for grounding concepts in perception requires the fulfillment of the following four criteria: conceptual processing during (1) an implicit task should activate (2) a perceptual region (3) rapidly and (4) selectively. We therefore investigated the neural correlates of acoustic conceptual features (conceptual task) and sound perception (perceptual task) in three experiments with fMRI and ERP measurements. In the conceptual task, participants performed lexical decisions on visually presented object names. This task induces an implicit access to conceptual features thereby minimizing the possibility of postconceptual strategic processes such as imagery. In the perceptual task, participants listened to real sounds.

In line with these criteria, we provide four converging markers for a link between auditory and conceptual brain systems. First and second, with the implicit lexical decision task, we found overlapping activation within temporal cortex for conceptual and perceptual processing of acoustic features: words with acoustic conceptual features elicited higher activity in left pSTG/MTG compared with words without acoustic conceptual features. This posterior temporal area was also activated when participants listened to real sounds. Thirdly, ERP recordings revealed an early onset of left pSTG/MTG activity starting at ∼150 ms after word onset. Taking into account the limited spatial resolution of ERPs, the results from source analysis are in good agreement with our fMRI findings.

The time course of brain activity as derived from ERPs makes it possible to determine whether the present activation in temporal cortex is due to conceptual processing of acoustic features or to auditory imagery. Visual word recognition is completed at ∼150 ms (Pulvermüller et al., 2005), and full access to a concept is a prerequisite for postconceptual imagery processes to subsequently occur (Kosslyn, 1994). Therefore, the early onset of the ERP effects immediately thereafter suggests that the observed activity in left pSTG/MTG reflects access to acoustic conceptual features rather than temporally later imagery processes (for a distinction between conceptual processing and imagery, see also below). Although an early access to modality-specific conceptual features is an important criterion to differentiate between conceptual processing and imagery, this does not preclude the possibility of conceptual feature retrieval at later time points as proposed by semantic memory models (e.g., Smith et al., 1974). Fourthly and most importantly, activity in left pSTG/MTG increased linearly as a function of the relevance of acoustic conceptual features as determined in a behavioral rating study. In contrast, relevance ratings of visual and action-related features did not modulate activity in this region. Hence, we found a specific brain–behavior relation between activity in left pSTG/MTG and relevance ratings of acoustic conceptual features.

The MR signal in the supramarginal gyrus (SMG) was modulated by both action-related and acoustic features, thus lacking the specificity of the pSTG/MTG response. Given its role in action representation (Vingerhoets, 2008), SMG activation could reflect simulation of the actions that cause a sound to occur. Alternatively, SMG could support semantic feature integration regardless of feature type (Snyder et al., 1995; Corina et al., 1999). Unlike in earlier studies (Davis et al., 2004; Hauk et al., 2008c; Hoenig et al., 2008), we did not observe a modulation of brain activity by visual and action-related features in sensory and motor cortex. This is because the stimulus material was optimized for investigating acoustic conceptual features, the main purpose of this study.

The involvement of left pSTG/MTG in higher level sound processing has been shown by previous imaging studies as summarized in Figure 4. This region contributes to the processing of complex sounds including human voices (Belin et al., 2000; Specht and Reul, 2003) and is activated during sound recognition (Lewis et al., 2004), episodic recall of sounds (Wheeler et al., 2000), and music imagery (Zatorre et al., 1996; Kraemer et al., 2005). Activity in pSTG/MTG was also observed for decisions on acoustic object attributes (Kellenbach et al., 2001; Goldberg et al., 2006) and during recognition of novel objects for which acoustic attributes were learned in a preceding training phase (James and Gauthier, 2003). Patients with a lesion in this area exhibit sound recognition deficits (Clarke et al., 2000). This evidence clearly demonstrates that pSTG/MTG is part of auditory association cortex, with respect to both its functional and anatomical properties (Howard et al., 2000).

The present study shows for the first time that left pSTG/MTG is the neural substrate of acoustic conceptual processing: this region responded to acoustic conceptual features even when probed implicitly in a lexical decision task. Furthermore, activity was gradually modulated by the relevance of acoustic conceptual features, but not by the relevance of visual or action-related features. Hence, this region in auditory association cortex selectively codes acoustic conceptual object features.

Our results strongly suggest that conceptual features are localizable in the brain and not arbitrarily distributed across the cortex as previously proposed (Anderson, 1983; Tyler and Moss, 2001). Rather, they are coded in the corresponding sensory association areas that are also activated during perception. The selective brain–behavior relation for acoustic conceptual features in an auditory brain region (left pSTG/MTG), its early onset of 150 ms and the implicitness of the conceptual task unequivocally point to a conceptual origin of this effect thereby ruling out postconceptual strategies such as imagery. The selective modulation of left pSTG/MTG activity by auditory, but not by visual or action-related conceptual features demonstrates that this region represents auditory conceptual knowledge rather than playing a general, unspecific role in conceptual processing. The present findings provide compelling evidence for a functional and anatomical link between the perceptual and conceptual brain systems, thereby considerably extending findings from earlier studies (Chao et al., 1999; Simmons et al., 2005; Hoenig et al., 2008).

Unlike perceptual sound processing and sound imagery (Zatorre et al., 1996; Kraemer et al., 2005), conceptual processing of acoustic features is confined to higher-level auditory association cortex and does not encompass primary and secondary auditory cortex within the temporal plane. These differences in functional neuroanatomy might reflect differences in experiential quality (for a distinction between imagery and conceptual processing, see Hauk et al., 2008c; Kosslyn, 1994; Kiefer et al., 2007b; Machery, 2007). Conceptual processing of acoustic features lacks the vivid sensory experience typically present in perception and imagery: obviously, we do not experience a “ringing” sound when reading the word “telephone.” In fact, the lack of experiential quality in conceptual processing is appropriate and instrumental because the modality-specific experience of sensory conceptual features during thought and verbal communication would considerably interfere with action planning processes that must rely on perceptual information from the current situation (Milner and Goodale, 1995). Left pSTG/MTG may therefore serve as an auditory convergence zone (Damasio, 1989) that codes higher-level acoustic object information contributing to a concept. Although perceptual and conceptual processing of acoustic features overlap considerably in functional neuroanatomy, as demonstrated here, the two levels of representation are not identical with regard to subjective phenomenal experience or their neural substrate.

In conclusion, our results provide unequivocal evidence for a link between perceptual and conceptual acoustic processing, with the left pSTG/MTG as the shared neural substrate. Hence, conceptual processing of acoustic features involves a partial reinstatement of brain activity during perceptual experience. The four converging markers presented here fulfill the requirements for demonstrating this link at a functional and anatomical level: the implicitness of the conceptual task, the selective modulation of left pSTG/MTG activity by acoustic feature relevance, the early onset of this activity at 150 ms, and its anatomical overlap with perceptual sound processing show that left pSTG/MTG represents auditory conceptual features in a modality-specific manner. Because we used stimuli from a variety of object categories, our findings strongly suggest a modality-specific representation for a broad range of object concepts that are central to everyday life. Possibly, a modality-specific representation could generalize to other object concepts or even to abstract concepts such as “freedom” or “justice.” These fascinating issues have to be addressed in future studies. Furthermore, our findings stress the necessity of sensory experiences in the relevant modalities to acquire rich, fully developed concepts of our physical and social world. Conversely, a lack of multimodal sensory experience would result in an impoverished development of conceptual representations. Finally, our results draw a strong parallel with the functional neuroanatomy of episodic or working memory systems. For these other memory systems, retrieval of sensory properties of an object or event recruits corresponding modality-specific sensory areas that were initially activated during encoding (Wheeler et al., 2000; Ranganath et al., 2004; Kessler and Kiefer, 2005; Khader et al., 2005). Thus, modality-specificity might be a general organizational principle in cortical memory representation.

Footnotes

This work was supported by grants from the German Research Foundation (DFG Ki 804/1-3) and from the European Social Foundation to M.K. We thank Cornelia Müller, Florian Diehl, and Gerwin Müller for their help with data acquisition. We are grateful to Michael Posner, Stanislas Dehaene, Friedemann Pulvermüller, Lawrence Barsalou, and one anonymous reviewer for providing helpful comments on this manuscript.

References

- Anderson JR. The architecture of cognition. Hillsdale, NJ: Erlbaum; 1983.

- Barsalou LW, Kyle Simmons W, Barbey AK, Wilson CD. Grounding conceptual knowledge in modality-specific systems. Trends Cogn Sci. 2003;7:84–91. doi: 10.1016/s1364-6613(02)00029-3.

- Belin P, Zatorre RJ, Lafaille P, Ahad P, Pike B. Voice-selective areas in human auditory cortex. Nature. 2000;403:309–312. doi: 10.1038/35002078.

- Boulenger V, Roy AC, Paulignan Y, Deprez V, Jeannerod M, Nazir TA. Cross-talk between language processes and overt motor behavior in the first 200 msec of processing. J Cogn Neurosci. 2006;18:1607–1615. doi: 10.1162/jocn.2006.18.10.1607.

- Chao LL, Haxby JV, Martin A. Attribute-based neural substrates in temporal cortex for perceiving and knowing about objects. Nat Neurosci. 1999;2:913–919. doi: 10.1038/13217.

- Clarke S, Bellmann A, Meuli RA, Assal G, Steck AJ. Auditory agnosia and auditory spatial deficits following left hemispheric lesions: evidence for distinct processing pathways. Neuropsychologia. 2000;38:797–807. doi: 10.1016/s0028-3932(99)00141-4.

- Corina DP, McBurney SL, Dodrill C, Hinshaw K, Brinkley J, Ojemann G. Functional roles of Broca's area and SMG: evidence from cortical stimulation mapping in a deaf signer. Neuroimage. 1999;10:570–581. doi: 10.1006/nimg.1999.0499.

- Damasio AR. Time-locked multiregional retroactivation: a systems-level proposal for the neural substrates of recall and recognition. Cognition. 1989;33:25–62. doi: 10.1016/0010-0277(89)90005-x.

- Davis MH, Meunier F, Marslen-Wilson WD. Neural responses to morphological, syntactic, and semantic properties of single words: an fMRI study. Brain Lang. 2004;89:439–449. doi: 10.1016/S0093-934X(03)00471-1.

- Gallese V, Lakoff G. The brain's concepts: the role of the sensory-motor system in conceptual knowledge. Cogn Neuropsychol. 2005;22:455–479. doi: 10.1080/02643290442000310.

- Gerlach C. A review of functional imaging studies on category specificity. J Cogn Neurosci. 2007;19:296–314. doi: 10.1162/jocn.2007.19.2.296.

- Gerlach C, Law I, Gade A, Paulson OB. Perceptual differentiation and category effects in normal object recognition: a PET study. Brain. 1999;122:2159–2170. doi: 10.1093/brain/122.11.2159.

- Goldberg RF, Perfetti CA, Schneider W. Perceptual knowledge retrieval activates sensory brain regions. J Neurosci. 2006;26:4917–4921. doi: 10.1523/JNEUROSCI.5389-05.2006.

- Hauk O. Keep it simple: a case for using classical minimum norm estimation in the analysis of EEG and MEG data. Neuroimage. 2004;21:1612–1621. doi: 10.1016/j.neuroimage.2003.12.018.

- Hauk O, Shtyrov Y, Pulvermüller F. The time course of action and action-word comprehension in the human brain as revealed by neurophysiology. J Physiol Paris. 2008a;102:50–58. doi: 10.1016/j.jphysparis.2008.03.013.

- Hauk O, Davis MH, Pulvermüller F. Modulation of brain activity by multiple lexical and word form variables in visual word recognition: a parametric fMRI study. Neuroimage. 2008b;42:1185–1195. doi: 10.1016/j.neuroimage.2008.05.054.

- Hauk O, Davis MH, Kherif F, Pulvermüller F. Imagery or meaning? Evidence for a semantic origin of category-specific brain activity in metabolic imaging. Eur J Neurosci. 2008c;27:1856–1866. doi: 10.1111/j.1460-9568.2008.06143.x.

- Helbig HB, Graf M, Kiefer M. The role of action representations in visual object recognition. Exp Brain Res. 2006;174:221–228. doi: 10.1007/s00221-006-0443-5.

- Hoenig K, Sim EJ, Bochev V, Herrnberger B, Kiefer M. Conceptual flexibility in the human brain: dynamic recruitment of semantic maps from visual, motion and motor-related areas. J Cogn Neurosci. 2008;20:1799–1814. doi: 10.1162/jocn.2008.20123.

- Howard MA, Volkov IO, Mirsky R, Garell PC, Noh MD, Granner M, Damasio H, Steinschneider M, Reale RA, Hind JE, Brugge JF. Auditory cortex on the human posterior superior temporal gyrus. J Comp Neurol. 2000;416:79–92. doi: 10.1002/(sici)1096-9861(20000103)416:1<79::aid-cne6>3.0.co;2-2.

- Humphreys GW, Riddoch MJ, Quinlan PT. Cascade processes in picture identification. Cogn Neuropsychol. 1988;5:67–103.

- Huynh H, Feldt LS. Conditions under which mean square ratios in repeated measures designs have exact F-distributions. J Am Stat Assoc. 1970;65:1582–1589.

- James TW, Gauthier I. Auditory and action semantic features activate sensory-specific perceptual brain regions. Curr Biol. 2003;13:1792–1796. doi: 10.1016/j.cub.2003.09.039.

- Kellenbach ML, Brett M, Patterson K. Large, colorful, or noisy? Attribute- and modality-specific activations during retrieval of perceptual attribute knowledge. Cogn Affect Behav Neurosci. 2001;1:207–221. doi: 10.3758/cabn.1.3.207.

- Kessler K, Kiefer M. Disturbing visual working memory: electrophysiological evidence for a role of prefrontal cortex in recovery from interference. Cereb Cortex. 2005;15:1075–1087. doi: 10.1093/cercor/bhh208.

- Khader P, Burke M, Bien S, Ranganath C, Rösler F. Content-specific activation during associative long-term memory retrieval. Neuroimage. 2005;27:805–816. doi: 10.1016/j.neuroimage.2005.05.006.

- Kiefer M. Perceptual and semantic sources of category-specific effects in object categorization: event-related potentials during picture and word categorization. Mem Cogn. 2001;29:100–116. doi: 10.3758/bf03195745.

- Kiefer M. Repetition priming modulates category-related effects on event-related potentials: further evidence for multiple cortical semantic systems. J Cogn Neurosci. 2005;17:199–211. doi: 10.1162/0898929053124938.

- Kiefer M, Spitzer M. The limits of a distributed account of conceptual knowledge. Trends Cogn Sci. 2001;5:469–471. doi: 10.1016/s1364-6613(00)01798-8.

- Kiefer M, Schuch S, Schenck W, Fiedler K. Mood states modulate activity in semantic brain areas during emotional word encoding. Cereb Cortex. 2007a;17:1516–1530. doi: 10.1093/cercor/bhl062.

- Kiefer M, Sim EJ, Liebich S, Hauk O, Tanaka J. Experience-dependent plasticity of conceptual representations in human sensory-motor areas. J Cogn Neurosci. 2007b;19:525–542. doi: 10.1162/jocn.2007.19.3.525.

- Kosslyn SM. Image and brain: the resolution of the imagery debate. Cambridge, MA: MIT; 1994.

- Kraemer DJ, Macrae CN, Green AE, Kelley WM. Musical imagery: sound of silence activates auditory cortex. Nature. 2005;434:158. doi: 10.1038/434158a.

- Lancaster JL, Woldorff MG, Parsons LM, Liotti M, Freitas CS, Rainey L, Kochunov PV, Nickerson D, Mikiten SA, Fox PT. Automated Talairach atlas labels for functional brain mapping. Hum Brain Mapp. 2000;10:120–131. doi: 10.1002/1097-0193(200007)10:3<120::AID-HBM30>3.0.CO;2-8.

- Levelt WJ, Roelofs A, Meyer AS. A theory of lexical access in speech production. Behav Brain Sci. 1999;22:1–38. doi: 10.1017/s0140525x99001776.

- Lewis JW, Wightman FL, Brefczynski JA, Phinney RE, Binder JR, DeYoe EA. Human brain regions involved in recognizing environmental sounds. Cereb Cortex. 2004;14:1008–1021. doi: 10.1093/cercor/bhh061.

- Machery E. Concept empiricism: a methodological critique. Cognition. 2007;104:19–46. doi: 10.1016/j.cognition.2006.05.002.

- Martin A, Chao LL. Semantic memory and the brain: structure and processes. Curr Opin Neurobiol. 2001;11:194–201. doi: 10.1016/s0959-4388(00)00196-3.

- Martin A, Wiggs CL, Ungerleider LG, Haxby JV. Neural correlates of category-specific knowledge. Nature. 1996;379:649–652. doi: 10.1038/379649a0.

- Milner AD, Goodale MA. The visual brain in action. Oxford: Oxford UP; 1995.

- Näätänen R. Attention and brain function. Hillsdale, NJ: Erlbaum; 1992.

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh Inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4.

- Pulvermüller F. Brain mechanisms linking language and action. Nat Rev Neurosci. 2005;6:576–582. doi: 10.1038/nrn1706.

- Pulvermüller F, Shtyrov Y, Ilmoniemi R. Brain signatures of meaning access in action word recognition. J Cogn Neurosci. 2005;17:884–892. doi: 10.1162/0898929054021111.

- Ranganath C, Cohen MX, Dam C, D'Esposito M. Inferior temporal, prefrontal, and hippocampal contributions to visual working memory maintenance and associative memory retrieval. J Neurosci. 2004;24:3917–3925. doi: 10.1523/JNEUROSCI.5053-03.2004.

- Rugg MD, Coles MGH. The ERP and cognitive psychology: conceptual issues. In: Rugg MD, Coles MGH, editors. Electrophysiology of mind. Oxford: Oxford UP; 1995. pp. 27–39.

- Scherg M, von Cramon D. Topographical analysis of auditory evoked potentials: derivation of components. In: Nodar RH, Barber C, editors. Evoked potentials. Boston: Butterworth; 1984. pp. 73–81.

- Simmons WK, Martin A, Barsalou LW. Pictures of appetizing foods activate gustatory cortices for taste and reward. Cereb Cortex. 2005;15:1602–1608. doi: 10.1093/cercor/bhi038.

- Smith EE, Shoben EJ, Rips LJ. Structure and process in semantic memory: a featural model for semantic decisions. Psychol Rev. 1974;81:214–241.

- Snyder AZ, Abdullaev YG, Posner MI, Raichle ME. Scalp electrical potentials reflect regional blood flow responses during processing of written words. Proc Natl Acad Sci U S A. 1995;92:1689–1693. doi: 10.1073/pnas.92.5.1689.

- Specht K, Reul J. Functional segregation of the temporal lobes into highly differentiated subsystems for auditory perception: an auditory rapid event-related fMRI-task. Neuroimage. 2003;20:1944–1954. doi: 10.1016/j.neuroimage.2003.07.034.

- Tyler LK, Moss HE. Towards a distributed account of conceptual knowledge. Trends Cogn Sci. 2001;5:244–252. doi: 10.1016/s1364-6613(00)01651-x.

- Vingerhoets G. Knowing about tools: neural correlates of tool familiarity and experience. Neuroimage. 2008;40:1380–1391. doi: 10.1016/j.neuroimage.2007.12.058.

- Warrington EK, McCarthy RA. Categories of knowledge. Brain. 1987;110:1273–1296. doi: 10.1093/brain/110.5.1273.

- Wheeler ME, Petersen SE, Buckner RL. Memory's echo: vivid remembering reactivates sensory-specific cortex. Proc Natl Acad Sci U S A. 2000;97:11125–11129. doi: 10.1073/pnas.97.20.11125.

- Zatorre RJ, Halpern AR, Perry DW, Meyer E, Evans AC. Hearing in the mind's ear: a PET investigation of musical imagery and perception. J Cogn Neurosci. 1996;8:29–46. doi: 10.1162/jocn.1996.8.1.29.