Abstract

The role of the human amygdala in real social interactions remains essentially unknown, although studies in nonhuman primates and studies using photographs and video in humans have shown it to be critical for emotional processing and suggest its importance for social cognition. We show here that complete amygdala lesions result in a severe reduction in direct eye contact during conversations with real people, together with an abnormal increase in gaze to the mouth. These novel findings from real social interactions are consistent with a hypothesized role for the amygdala in autism, and the approach taken here opens up new directions for quantifying social behavior in humans.

Keywords: social cognition, face gaze, lesion, autism, amygdala, eye position, facial

Introduction

Ever since the classic studies of Kluver and Bucy (1937), the amygdala has been implicated in social behavior in primates. Although effects of amygdala lesions on real social behavior have been investigated in monkeys (Meunier et al., 1999; Emery et al., 2001; Prather et al., 2001; Bauman et al., 2004; Bachevalier and Loveland, 2006), all quantitative studies in humans have used artificial social stimuli in the laboratory (Adolphs et al., 1998; Adolphs and Tranel, 2000; Phelps, 2006). Artificial stimuli have also been important in demonstrating the response of the amygdala in healthy participants using neuroimaging (Breiter et al., 1996; Irwin et al., 1996; Whalen, 1999; Kim et al., 2004; Whalen et al., 2004; Johnstone et al., 2005).

Perhaps the most striking findings to emerge from studies of the effects of human amygdala lesions on social cognition come from patient S.M., a 42-year-old woman with extremely rare focal bilateral amygdala lesions (Tranel and Hyman, 1990; Adolphs and Tranel, 2000). Like other subjects with amygdala damage (Adolphs et al., 1999), S.M. is impaired in judging the emotions shown in photographs of faces, an impairment caused by a failure to fixate informative features of the faces (Adolphs et al., 2005). Intriguingly, S.M. and other patients with bilateral amygdala damage are also impaired in more complex social judgments, such as trustworthiness, but again, these findings pertain solely to judgments about photographs of faces (Adolphs et al., 1998). Whether and how any of these findings apply to situations in which real people are the social stimuli has remained an open question.

The present study tested the hypothesis that the amygdala would play a critical role in the control of face gaze during real conversations with another person. We found that focal damage to the bilateral amygdala did not in fact reduce overall gaze to the face during conversations, but instead changed the way gaze was deployed to the face. Lacking an amygdala eliminated almost all direct eye contact as measured by fixations to the eyes, and resulted in nearly exclusive gaze to the mouth. We discuss the implications of these findings for hypotheses regarding the role of the amygdala in the top-down attentional control of face gaze, and for its putative role in autism.

Method

Participants.

All research methods were conducted with the approval of the Institutional Review Board at the California Institute of Technology. We tested participant S.M., a 42-year-old woman with bilateral amygdala damage whose detailed neuropsychological profile has been published previously (Tranel and Hyman, 1990; Adolphs and Tranel, 2000), five healthy women similar in age (49.8 ± 8.1 years; mean ± SD), and 21 healthy younger participants (12 women; age 27.9 ± 2.5 years). S.M. participated in all experiments, and controls participated as specified below. All participants had normal or corrected-to-normal visual acuity, normal basic visuoperception (e.g., normal performance on the Benton facial recognition task), and IQ in the normal range.

Face-to-face conversation.

Our experiment consisted of conversations with a professional, trained actor as part of a larger study of the effect of social context (i.e., telling the truth vs lying) on face gaze. Before speaking with the actor, participants wrote out sets of true and false responses, including reasons for their answers, to a set of interview questions regarding controversial social issues (for a list of questions, see Table 1). Participants needed only to memorize their initial yes/no response. They knew that the interviewer would not know whether they were telling the truth or lying, but that he would judge the truth of each response. Participants were instructed always to respond as convincingly as possible.

Table 1.

Interview questions

| Questions |
|---|
| In your opinion… |
| Q1a: Is it moral to lie to achieve success?* |
| Q1b: Is it moral to lie to make others feel better about themselves?* |
| Q2a: Is euthanasia morally just?* ‡ |
| Q2b: Is capital punishment morally just?* |
| Q3a: Are women, in general, less intelligent than men? |
| Q3b: Do women, in general, think less logically than men? |
| Q4a: Are men, in general, less socially active than women? |
| Q4b: Are men, in general, less sensitive than women? |
| Q5a: Should prayer be banned in public schools?* |
| Q5b: Should corporal punishment be used in public schools?* |
| Q6a: Are organic foods better than regular foods?* ‡ |
| Q6b: Are psychological experiments important?* |
| Q7a: Is it ethical to use animals in research?* |
| Q7b: Is it ethical to clone humans?* |
| Q8a: Should social security be abolished?* ‡ |
| Q8b: Should immigration be more tightly controlled?* |

*Used with S.M.

‡true responses, used in the analysis; Q, question.

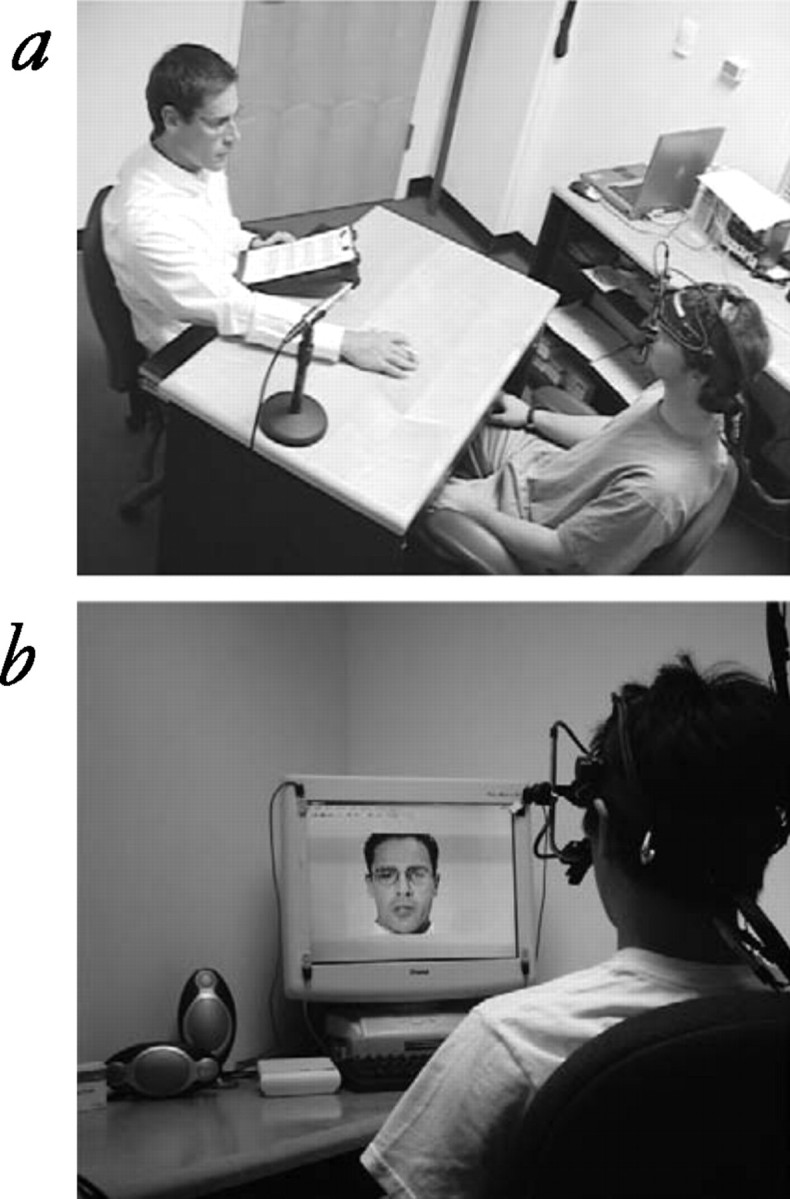

Immediately before the beginning of a face-to-face session, participants were fitted with a head-mounted eye tracker (Eyelink II; SR Research, Osgoode, Ontario, Canada). A scene camera mounted near the forehead recorded video of the actor, with concurrent eye tracking in three dimensions at a temporal resolution of 250 Hz and a spatial resolution of ∼1° of visual angle. Calibration in three dimensions used a set of points at several depths, allowing the Eyelink II to use binocular disparity to correct for parallax. Participants were free to move their heads and to gesture during the session. Each face-to-face session consisted of either six (S.M. and five matched controls) or eight (21 younger controls) questions. For the duration of the session, the actor was seated ∼1.2 m from the participant across a small desk, so that his face subtended ∼10° of visual angle (Fig. 1 a).
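The visual angles quoted in this section follow from the standard relation θ = 2·arctan(s / 2D) for an object of extent s viewed at distance D. The Python sketch below assumes that the ∼10° figure refers to the actor's face and that the face is centred on the line of sight; under those assumptions the stated geometry implies a face extent of roughly 21 cm at 1.2 m. The function names are illustrative only.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Full visual angle (degrees) subtended by an object of extent size_cm,
    centred on the line of sight at distance_cm."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse relation: the extent (cm) that subtends angle_deg at distance_cm."""
    return 2.0 * distance_cm * math.tan(math.radians(angle_deg) / 2.0)


if __name__ == "__main__":
    # Face-to-face condition: actor seated ~1.2 m (120 cm) away, face ~10 deg (assumed).
    face_cm = size_for_angle_cm(10.0, 120.0)
    print(f"implied face extent: {face_cm:.1f} cm")
    # Round trip back to the angle as a sanity check.
    print(f"angle: {visual_angle_deg(face_cm, 120.0):.1f} deg")
```

The same two functions can be reused for the live video condition below, where the eye-to-screen distance is ∼76 cm.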

Figure 1.

Experimental setup. a, In the face-to-face paradigm, the actor (left) is shown seated across from the participant (right), who is wearing the eye tracker. b, In the live video setup, the actor (on screen) is shown over one-way live video so that the participant can see and hear the actor, although the actor can only hear, but not see, the participant.

Participants were signaled by an experimenter via an earphone either to give their true opinion or to lie. All data presented here were taken only from trials on which participants told the truth (a preliminary analysis found no effect of truth vs lying on the amygdala-specific differences in the measures reported here). The professional actor (Nick Annunziata) was instructed to maintain eye contact, keep a neutral facial expression and tone of voice, avoid gestures, and keep his head position fairly stable. The actor was unaware of S.M.'s neurological status and naive to our hypotheses. No participant reported recognizing the actor.

A trial began when the actor issued question A, a yes/no question regarding a participant's opinion (e.g., “In your opinion, should social security be abolished?”), and then proceeded in the following order: (1) participant's response A; (2) follow-up question B (“Why?” or “Why not?”); (3) participant's response B; (4) follow-up question C (“How strongly do you feel about that?”); (5) participant's response C; (6) follow-up question D (“Why?” or “Why not?”); and (7) participant's response D, after which the trial ended. At the end of each trial, the actor looked down at a notepad for a few seconds to read the next question, and then proceeded to make eye contact again and the next trial started.
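Because each trial alternates strictly between actor and participant, the periods when only the participant is speaking can be recovered from the onset of each event. The Python sketch below encodes the trial sequence described above and, given onset times for each event, returns the participant-speaking windows from response A through the end of response D. The onset values are hypothetical (the text does not say how event onsets were marked), and `Segment`, `segments_from_onsets`, and `participant_windows` are illustrative names, not part of any tool used in the study.

```python
from dataclasses import dataclass

# Speaker order within one trial, following the text: question A, then
# alternating participant responses and actor follow-up questions.
TRIAL_SEQUENCE = [
    ("actor", "question A"),
    ("participant", "response A"),
    ("actor", "follow-up question B"),
    ("participant", "response B"),
    ("actor", "follow-up question C"),
    ("participant", "response C"),
    ("actor", "follow-up question D"),
    ("participant", "response D"),
]


@dataclass
class Segment:
    speaker: str
    label: str
    start_s: float
    end_s: float


def segments_from_onsets(onsets_s, trial_end_s):
    """Build one Segment per event; each event ends where the next begins."""
    if len(onsets_s) != len(TRIAL_SEQUENCE):
        raise ValueError("need exactly one onset per event in TRIAL_SEQUENCE")
    bounds = list(onsets_s) + [trial_end_s]
    if any(b <= a for a, b in zip(bounds, bounds[1:])):
        raise ValueError("onsets must be strictly increasing and precede trial end")
    return [
        Segment(speaker, label, bounds[i], bounds[i + 1])
        for i, (speaker, label) in enumerate(TRIAL_SEQUENCE)
    ]


def participant_windows(segments):
    """Intervals (start, end) in which the participant, not the actor, was speaking."""
    return [(s.start_s, s.end_s) for s in segments if s.speaker == "participant"]


if __name__ == "__main__":
    # Hypothetical onset times (s) for the eight events of one trial.
    onsets = [0.0, 3.0, 8.0, 9.0, 14.0, 16.0, 20.0, 21.0]
    windows = participant_windows(segments_from_onsets(onsets, trial_end_s=27.0))
    print(windows)
    print(sum(end - start for start, end in windows))
```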

To determine whether familiarity with a conversational partner would change any putative effects of amygdala lesion on fixations to the face, we tested S.M. with one of the experimenters (R.A.) whom S.M. has known for over 12 years. There was a short break between S.M.'s session with the actor and this session, during which two experimenters (M.L.S. and R.A.) spoke with S.M. casually. For the interview session, R.A. asked the same questions the actor had just asked, in the same order. Effects of familiarity were tested only for S.M., and only in the face-to-face condition.

Conversation over live video.

The procedure over live color video was a modified form of the face-to-face condition. The actor could only hear, but not see, the participants, although the participants could both see and hear the actor via live video and knew he was present in an adjacent room (Fig. 1 b). Live video was presented at 25 frames per second and recorded simultaneously at 15 frames per second using a webcam (640 pixel width × 480 pixel height; Logitech, Fremont, CA) and the Image Acquisition Toolbox in Matlab (R14Sp2; Mathworks, Natick, MA), and eye tracking was done by interfacing with the Eyelink II within Matlab using the Eyelink Toolbox (Cornelissen et al., 2002). Video was presented on a cathode ray tube monitor (40 cm width × 30 cm height; MA203DT D, Vision Master 513 Pro; Iiyama, Warminster, PA) at a resolution of 720 × 578 pixels with a vertical refresh rate of 100 Hz. Only the center of the webcam image (320 × 240 pixels), framing the actor's head and face, was presented against a gray background. Eye-to-screen distance was ∼76 cm, and the actor's face on the screen subtended ∼11° of visual angle.
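For the live video condition, the display geometry above determines roughly how many screen pixels correspond to one degree of visual angle, which helps relate the tracker's ∼1° spatial resolution to on-screen ROIs. The sketch below assumes that the 720-pixel horizontal resolution spans the full 40 cm screen width and that the viewer sits centred in front of the screen; it averages over the whole width and so ignores the slight compression of degrees toward the screen edges. The function name is illustrative.

```python
import math


def horizontal_pixels_per_degree(screen_width_cm: float,
                                 screen_width_px: int,
                                 viewing_distance_cm: float) -> float:
    """Average pixels per degree across the screen width for a viewer
    centred in front of the screen."""
    half_angle_rad = math.atan(screen_width_cm / (2.0 * viewing_distance_cm))
    screen_deg = math.degrees(2.0 * half_angle_rad)
    return screen_width_px / screen_deg


if __name__ == "__main__":
    # 40 cm wide CRT at 720 px horizontal resolution, viewed from ~76 cm.
    ppd = horizontal_pixels_per_degree(40.0, 720, 76.0)
    print(f"{ppd:.1f} px/deg; ~1 deg of tracker error spans about {ppd:.0f} px")
```

With these values the screen spans about 29.5° horizontally, or roughly 24 pixels per degree.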

S.M. and the five matched controls all participated in the video condition first and the face-to-face condition second; of the 21 younger controls, seven first participated in the video condition and 13 first participated in the face-to-face condition (condition order had no effect on the results reported here in the younger controls, so these subgroups were pooled).

Automated face detection.

The scene camera mounted on the participant's forehead and the recorded webcam video provided frame-by-frame records of the stimulus (i.e., the actor) onto which each participant's gaze data were superimposed. To perform a region of interest (ROI)-based analysis of fixation patterns, we needed the ROI coordinates in every frame corresponding to fixations made during a given trial. Face detection was automated using software libraries developed at the Fraunhofer Institute for Integrated Circuits (Fröba and Ernst, 2004; Fröba and Küblbeck, 2004) and generously provided to our laboratory by Dr. Christian Küblbeck. The method has a hit rate of 90% and a false alarm rate of 0.4% (Fröba and Küblbeck, 2004). We reduced the likelihood of false alarms by selecting only those frames on a given trial whose face and eye coordinates were within 2 SDs of the mean coordinate values. This range allowed for changes in coordinates caused by head movements of the participants (in the face-to-face condition) and of the actor (in both conditions).
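The 2-SD criterion above can be sketched in Python as follows. This is an illustrative reimplementation, not the Fraunhofer software or the authors' code: it assumes one detected (x, y) face-centre coordinate per frame and applies the criterion separately to each axis, since the text does not specify whether x and y were screened separately or jointly, or how face and eye coordinates were combined.

```python
from statistics import mean, stdev


def frames_within_sd(coords, n_sd=2.0):
    """Return indices of frames whose (x, y) detection lies within n_sd sample
    SDs of the trial mean on both axes. coords: list of (x, y) tuples."""
    if len(coords) < 2:
        return list(range(len(coords)))  # SD undefined; keep what we have
    xs = [x for x, _ in coords]
    ys = [y for _, y in coords]
    mx, my = mean(xs), mean(ys)
    sx, sy = stdev(xs), stdev(ys)
    return [
        i for i, (x, y) in enumerate(coords)
        if abs(x - mx) <= n_sd * sx and abs(y - my) <= n_sd * sy
    ]


if __name__ == "__main__":
    # Ten plausible detections around the actor's face plus one spurious hit
    # (hypothetical pixel coordinates).
    frames = [(318, 239), (319, 240), (320, 241), (321, 240), (322, 239),
              (318, 241), (319, 240), (320, 239), (321, 241), (322, 240),
              (600, 50)]  # false alarm far from the face
    print(frames_within_sd(frames))
```

Note that a single outlier inflates the SD it is judged against, so this kind of filter works best when false alarms are rare, as the reported 0.4% rate suggests.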

Data analysis.

All data analyses, performed with the Eyelink Data Viewer, focused on fixations that occurred between a participant's first response (response A) and last response (response D). The analyses included only those video frames during which the participant, not the actor, was speaking, to avoid frames in which attention might be captured by a speaking mouth facing the participant. We could not reliably analyze fixations made during the very brief periods when the actor, and not the participant, was speaking, because too few fixations were obtained during these periods (typically, N < 25 across all trials). To discriminate fixations, we set a threshold of 0.1° of drift within 50 ms. ROIs were defined for the face, eyes, and mouth based on the output of the automated face detection software. Each fixation was assigned to an ROI using the mean ROI coordinates across all frames spanning the fixation; SDs for these means were typically <5 pixels in both directions. Fixations falling within an ROI, but not on its edges, were included in the ROI-specific analyses. The proportion of time spent fixating a given ROI (fractional dwell time) was calculated by summing the durations of fixations within that ROI and dividing by the total trial time. Fractional dwell times for the eye and mouth regions were further normalized by the fractional dwell time for the face (Fig. 2 e).
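The ROI assignment and dwell-time normalization described above can be summarized in a short Python sketch. It assumes rectangular ROIs, one bounding box per ROI for each video frame spanned by a fixation, and fixation centres and durations that have already been extracted (e.g., by the tracker's own parser). The class and function names are hypothetical, and the rectangles are a simplification of whatever ROI shapes the face detector actually returned.

```python
from dataclasses import dataclass
from statistics import mean

ROIS = ("face", "eyes", "mouth")


@dataclass
class Box:
    left: float
    top: float
    right: float
    bottom: float

    def strictly_contains(self, x: float, y: float) -> bool:
        # Points on an edge are excluded ("within the ROIs, but not on ROI edges").
        return self.left < x < self.right and self.top < y < self.bottom


def mean_box(boxes):
    """Average an ROI's coordinates over all frames spanned by one fixation."""
    return Box(mean(b.left for b in boxes), mean(b.top for b in boxes),
               mean(b.right for b in boxes), mean(b.bottom for b in boxes))


@dataclass
class Fixation:
    x: float
    y: float
    duration_ms: float
    frame_rois: dict  # ROI name -> list of per-frame Box objects


def dwell_fractions(fixations, trial_ms):
    """Fractional dwell time per ROI, plus eye and mouth values normalized
    by the face value."""
    totals = {roi: 0.0 for roi in ROIS}
    for fx in fixations:
        for roi in ROIS:
            boxes = fx.frame_rois.get(roi)
            if boxes and mean_box(boxes).strictly_contains(fx.x, fx.y):
                totals[roi] += fx.duration_ms
    fractions = {roi: t / trial_ms for roi, t in totals.items()}
    face = fractions["face"]
    normalized = {roi: (fractions[roi] / face if face > 0 else float("nan"))
                  for roi in ("eyes", "mouth")}
    return fractions, normalized


if __name__ == "__main__":
    # Two frames per fixation with slightly shifted detections (hypothetical pixels).
    rois = {
        "face": [Box(98, 100, 298, 350), Box(102, 100, 302, 350)],
        "eyes": [Box(130, 150, 270, 200)] * 2,
        "mouth": [Box(160, 260, 240, 310)] * 2,
    }
    fixations = [
        Fixation(200, 175, 400, rois),  # on the eyes (and face)
        Fixation(200, 285, 600, rois),  # on the mouth (and face)
        Fixation(50, 50, 200, rois),    # off the face
    ]
    print(dwell_fractions(fixations, trial_ms=2000))
```

Normalizing by the face value expresses eye and mouth gaze as shares of face gaze, so a participant who looks at the face less overall is not automatically scored as looking less at the eyes.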

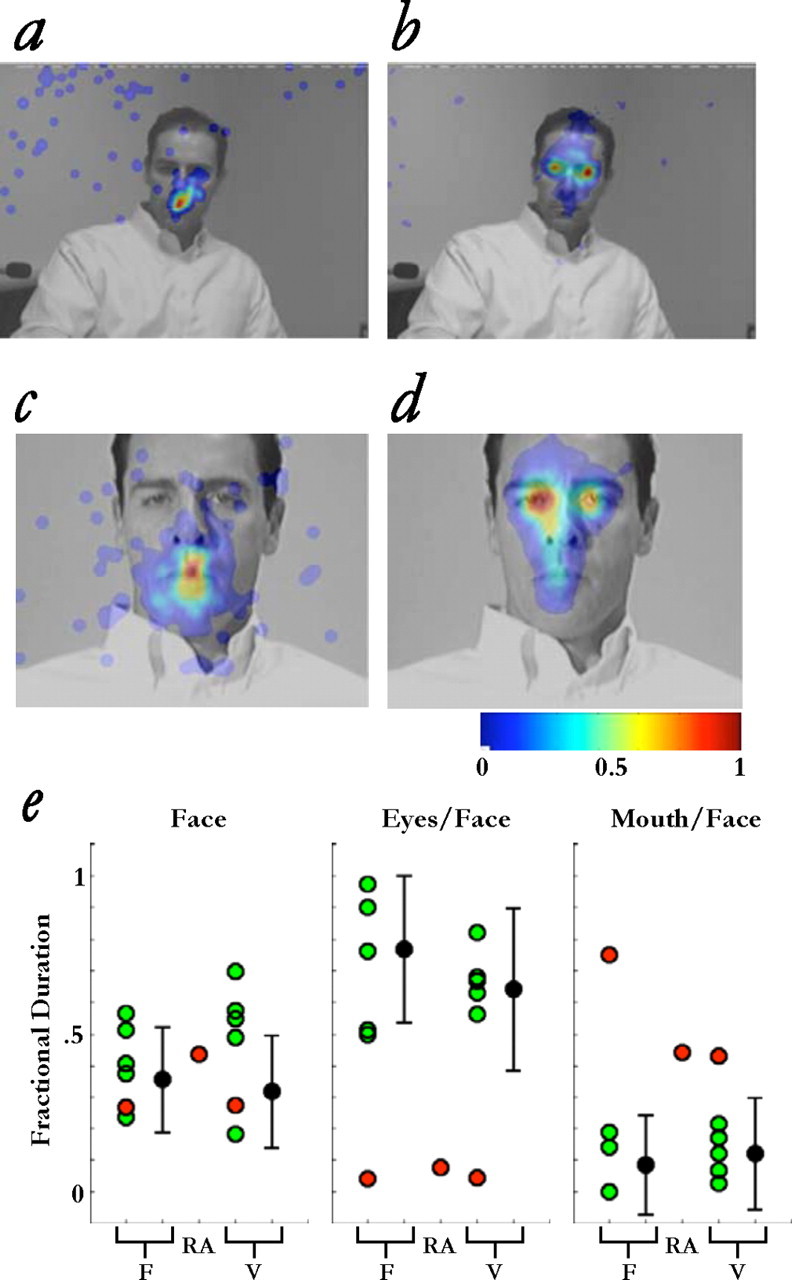

Figure 2.

Fixations made during naturalistic social interaction. a–d, Color encodes the normalized number of fixations (see bar at bottom right). Shown are the numbers of fixations that S.M. (a, c) and five matched controls (b, d) made to regions of the face during conversations with the actor (N.A.), either face-to-face (a, b) or over live video (c, d). e, Proportion of time spent fixating facial features in the face-to-face (F) and live video (V) conditions, as well as during face-to-face interaction with a familiar person (one of the experimenters, R.A.). Values for the eyes (middle) and mouth (right) were normalized with respect to overall gaze to the face (left). Red circles, S.M.; green circles, five matched controls; filled black circles, comparison group of 21 younger participants (means and SDs). Across all conditions and compared with all subject groups, S.M. made an abnormally large number of fixations on the mouth and an abnormally small number of fixations on the eyes.

Results

We found no effect of amygdala lesion on the overall time taken to respond to questions or on the length of time spent fixating the face during a trial (Fig. 2 e, left). S.M.'s face-to-face and video condition trials had a mean duration of 26.39 ± 9.86 s (mean ± SD), which fell within the range of the mean trial durations of both the matched (17.92 ± 7.56 s; n = 5) and younger (25.21 ± 11.05 s; n = 21) control groups. S.M. completed the initial questionnaires and answered the actor's questions normally.

We compared S.M.'s pattern of fixations with those of five healthy women of similar age and of a larger group of 21 healthy younger participants, first analyzing fixations made while the participant, not the actor, was speaking (see Method). Relative to these two comparison groups, which did not differ from each other on the gaze measures reported here (t (24) < 0.75; p values > 0.4), S.M. spent significantly less time fixating the eyes (t (4) = −2.87, p = 0.023, d′ = 3.13 and t (21) = −3.06, p = 0.003, d′ = 3.15 for the matched and younger comparison groups, respectively) and significantly more time fixating the mouth (t (4) = 6.74, p = 0.001, d′ = 7.38 and t (21) = 4.13, p < 0.001, d′ = 4.23, respectively) (Fig. 2 a,b).
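The text does not name the statistical test. However, the degrees of freedom reported for the five matched controls (t (4)) and the pooled 26 controls (t (25)), together with the ratio between each d′ and its t value, appear approximately consistent with the modified t-test of Crawford and Howell (1998) for comparing a single case with a small control sample, with d′ taken as (case − control mean) / control SD. Under that assumption, t = d′ / √((n + 1)/n) with n − 1 degrees of freedom. The sketch below implements this test; it is not the authors' analysis code, and p values (which need a t-distribution CDF, e.g., from SciPy) are omitted to keep it dependency-free.

```python
import math
from statistics import mean, stdev


def single_case_t(case_score, control_scores):
    """Crawford-Howell modified t-test for one case versus n controls.

    Returns (t, df, d), where d = (case - control mean) / control SD and
    t = d / sqrt((n + 1) / n) with df = n - 1.
    """
    n = len(control_scores)
    if n < 2:
        raise ValueError("need at least two control scores")
    d = (case_score - mean(control_scores)) / stdev(control_scores)
    t = d / math.sqrt((n + 1) / n)
    return t, n - 1, d


def d_from_t(t, n_controls):
    """Recover the effect size d from a reported t and the control-group size."""
    return t * math.sqrt((n_controls + 1) / n_controls)


if __name__ == "__main__":
    # Check against the reported mouth comparison with the five matched controls.
    print(round(d_from_t(6.74, 5), 2))
    # Hypothetical dwell-time fractions: one case against five controls.
    print(single_case_t(0.9, [0.30, 0.35, 0.25, 0.40, 0.30]))
```

For example, t (4) = 6.74 with n = 5 gives d ≈ 7.38, matching the reported d′ for the mouth comparison with the matched controls.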

In a second experiment with the same actor in an adjacent room, conversation proceeded as in the face-to-face experiment but via live video. The findings here were essentially the same: S.M. barely fixated the eyes (t (25) = −2.53; p = 0.009; d′ = 2.58), instead fixating the mouth (t (25) = 1.90; p = 0.035; d′ = 1.93; pooled data from comparison groups) (Fig. 2 c,d). A final experiment done only with S.M. consisted of a face-to-face conversation using the same structured interview with someone S.M. has known for over 12 years (one of the experimenters, R.A.). The findings here paralleled those with the actor. Once again, S.M. hardly fixated the eyes (Fig. 2 e, middle) and fixated the mouth almost exclusively (Fig. 2 e, right), suggesting that familiarity was not a factor in S.M.'s deficit.

Discussion

S.M.'s face gaze during social interactions resembled her gaze to photographs of faces (Adolphs et al., 2005) in that she made almost no fixations on the eyes during a social interaction with another person. Yet there was an important difference between the findings with photographs and our results in real conversations. Whereas S.M. focused more on the center of the face when looking at photographs, in real conversations she spent most of the time looking at the mouth. This difference may be related to the putative role of the amygdala in top-down visual attention to faces and other social stimuli (Anderson and Phelps, 2001; Vuilleumier et al., 2004; Adolphs et al., 2005; Adolphs and Spezio, 2006). Lacking an amygdala could interfere with normal face gaze through a loss of top-down control, such that the guidance of overt visuospatial attention is dominated by low-level visual cues (Peters et al., 2005). In static photographs, the unmoving mouth is often a less salient low-level visual cue than the eyes. This changes dramatically in real social interactions, however, where the motion of the mouth and the sound of speech provide highly salient visual and auditory cues. Testing this hypothesis directly for S.M. would require measuring her face gaze while she listens closely to another person during a social interaction.

It is intriguing in this light to consider similarities between our findings and those in autism. People with autism do not fixate photographs and videos of faces normally (Klin et al., 2002; Pelphrey et al., 2002), and are anecdotally reported to gaze at the mouth in social interactions (Grandin, 1996). It has been suggested that amygdala dysfunction is partly responsible for these abnormalities in autism (Baron-Cohen et al., 2000), and recent neuroimaging findings with photographs of faces support this hypothesis (Dalton et al., 2005). Our finding that S.M. almost exclusively fixated the mouth during conversations is also consistent with this hypothesis. However, autism likely involves an active aversion to fixating the eyes (Hutt and Ounsted, 1966; Richer and Coss, 1976; Spezio et al., 2007), whereas we have no evidence of direct gaze aversion in S.M. Instead, our observations to date strongly suggest that S.M. is simply not drawn to fixate the eyes rather than averse to them (e.g., she will fixate them on instruction with no psychophysiological or self-report evidence of aversion). These considerations suggest a modified two-component hypothesis for the increased mouth gaze in autism: it may result from low-level attentional capture by the moving mouth, together with an aversion to fixating the eyes. The first component could resemble the effect of amygdala lesions discussed above. The second component would not appear to arise from a decrease in amygdala function (as would result from lesions), but could instead arise from exaggerated amygdala activity linked to social stress (Dalton et al., 2005). Both decreased and increased amygdala function could conceivably contribute to autism, provided that they arise from separate cell populations, or separate nuclei, within the amygdala.

Social gaze is a critical component of nonverbal communication and has been studied through observational coding of live situations or video recordings (Argyle and Cook, 1976). The combination of head-mounted eye tracking and automated face detection used here promises new links between the study of social gaze and other methodologies in social neuroscience. As the first high-resolution quantification of social gaze in real conversations, our study opens up possibilities for probing the function of specific brain regions in relation to social gaze, as we have shown here for the amygdala. Future work could aim to better understand pathology and rehabilitation in a range of psychiatric disorders, and to investigate more thoroughly how social contexts (e.g., deception) and cognitive frames influence gaze in healthy individuals (Granhag and Strömwall, 2002).

Footnotes

This work was supported by grants from the National Institute of Mental Health, the Cure Autism Now Foundation, Autism Speaks/National Alliance for Autism Research, and the Caltech Summer Undergraduate Research Fellow Program. We thank Nick Annunziata for his expert participation as the actor in our experiments, Lisa Lyons for help with programming the automated face detector, and Sol Simpson for advice and guidance in the analysis of fixation data.

References

- Adolphs R, Spezio ML. Role of the amygdala in processing visual social stimuli. Prog Brain Res. 2006;156:363–378. doi: 10.1016/S0079-6123(06)56020-0.

- Adolphs R, Tranel D. Emotion recognition and the human amygdala. In: Aggleton JP, editor. The amygdala. A functional analysis. New York: Oxford UP; 2000. pp. 587–630.

- Adolphs R, Tranel D, Damasio AR. The human amygdala in social judgment. Nature. 1998;393:470–474. doi: 10.1038/30982.

- Adolphs R, Tranel D, Hamann S, Young A, Calder A, Anderson A, Phelps E, Lee GP, Damasio AR. Recognition of facial emotion in nine subjects with bilateral amygdala damage. Neuropsychologia. 1999;37:1111–1117. doi: 10.1016/s0028-3932(99)00039-1.

- Adolphs R, Gosselin F, Buchanan TW, Tranel D, Schyns P, Damasio AR. A mechanism for impaired fear recognition after amygdala damage. Nature. 2005;433:68–72. doi: 10.1038/nature03086.

- Anderson AK, Phelps EA. Lesions of the human amygdala impair enhanced perception of emotionally salient events. Nature. 2001;411:305–309. doi: 10.1038/35077083.

- Argyle M, Cook M. Gaze and mutual gaze. Cambridge, UK: Cambridge UP; 1976.

- Bachevalier J, Loveland KA. The orbitofrontal-amygdala circuit and self-regulation of social-emotional behavior in autism. Neurosci Biobehav Rev. 2006;30:97–117. doi: 10.1016/j.neubiorev.2005.07.002.

- Baron-Cohen S, Ring HA, Bullmore ET, Wheelwright S, Ashwin C, Williams SC. The amygdala theory of autism. Neurosci Biobehav Rev. 2000;24:355–364. doi: 10.1016/s0149-7634(00)00011-7.

- Bauman MD, Lavenex P, Mason WA, Capitanio JP, Amaral DG. The development of social behavior following neonatal amygdala lesions in rhesus monkeys. J Cogn Neurosci. 2004;16:1388–1411. doi: 10.1162/0898929042304741.

- Breiter HC, Etcoff NL, Whalen PJ, Kennedy WA, Rauch SL, Buckner RL, Strauss MM, Hyman SE, Rosen BR. Response and habituation of the human amygdala during visual processing of facial expression. Neuron. 1996;17:875–887. doi: 10.1016/s0896-6273(00)80219-6.

- Cornelissen FW, Peters EM, Palmer J. The Eyelink Toolbox: eye tracking within MATLAB and the Psychophysics Toolbox. Behav Res Methods Instrum Comput. 2002;34:613–617. doi: 10.3758/bf03195489.

- Dalton KM, Nacewicz BM, Johnstone T, Schaefer HS, Gernsbacher MA, Goldsmith HH, Alexander AL, Davidson RJ. Gaze fixation and the neural circuitry of face processing in autism. Nat Neurosci. 2005;8:519–526. doi: 10.1038/nn1421.

- Emery NJ, Capitanio JP, Mason WA, Machado CJ, Mendoza SP, Amaral DG. The effects of bilateral lesions of the amygdala on dyadic social interactions in rhesus monkeys. Behav Neurosci. 2001;115:515–544.

- Fröba B, Ernst A. Face detection with the modified census transform. In: Sixth IEEE international conference on automatic face and gesture recognition. Seoul, Korea: IEEE; 2004. p. 91.

- Fröba B, Küblbeck C. Face tracking by means of continuous detection. In: Conference on computer vision and pattern recognition workshop; 2004. p. 65.

- Grandin T. Thinking in pictures: and other reports from my life with autism. New York: Vintage; 1996.

- Granhag PA, Strömwall LA. Repeated interrogations: verbal and non-verbal cues to deception. Appl Cogn Psychol. 2002;16:243–257.

- Hutt C, Ounsted C. The biological significance of gaze aversion with particular reference to the syndrome of infantile autism. Behav Sci. 1966;11:346–356. doi: 10.1002/bs.3830110504.

- Irwin W, Davidson RJ, Lowe MJ, Mock BJ, Sorenson JA, Turski PA. Human amygdala activation detected with echo-planar functional magnetic resonance imaging. NeuroReport. 1996;7:1765–1769. doi: 10.1097/00001756-199607290-00014.

- Johnstone T, Somerville LH, Alexander AL, Oakes TR, Davidson RJ, Kalin NH, Whalen PJ. Stability of amygdala BOLD response to fearful faces over multiple scan sessions. NeuroImage. 2005;25:1112–1123. doi: 10.1016/j.neuroimage.2004.12.016.

- Kim H, Somerville LH, Johnstone T, Polis S, Alexander AL, Shin LM, Whalen PJ. Contextual modulation of amygdala responsivity to surprised faces. J Cogn Neurosci. 2004;16:1730–1745. doi: 10.1162/0898929042947865.

- Klin A, Jones W, Schultz R, Volkmar F, Cohen D. Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. Arch Gen Psychiatry. 2002;59:809–816. doi: 10.1001/archpsyc.59.9.809.

- Kluver H, Bucy PC. “Psychic blindness” and other symptoms following bilateral temporal lobectomy in rhesus monkeys. Am J Physiol. 1937;119:352–353.

- Meunier M, Bachevalier J, Murray EA, Malkova L, Mishkin M. Effects of aspiration versus neurotoxic lesions of the amygdala on emotional responses in monkeys. Eur J Neurosci. 1999;11:4403–4418. doi: 10.1046/j.1460-9568.1999.00854.x.

- Pelphrey KA, Sasson NJ, Reznick JS, Paul G, Goldman BD, Piven J. Visual scanning of faces in autism. J Autism Dev Disord. 2002;32:249–261. doi: 10.1023/a:1016374617369.

- Peters RJ, Iyer A, Itti L, Koch C. Components of bottom-up gaze allocation in natural images. Vision Res. 2005;45:2397–2416. doi: 10.1016/j.visres.2005.03.019.

- Phelps EA. Emotion and cognition: insights from studies of the human amygdala. Annu Rev Psychol. 2006;57:27–53. doi: 10.1146/annurev.psych.56.091103.070234.

- Prather MD, Lavenex P, Mauldin-Jourdain ML, Mason WA, Capitanio JP, Mendoza SP, Amaral DG. Increased social fear and decreased fear of objects in monkeys with neonatal amygdala lesions. Neuroscience. 2001;106:653–658. doi: 10.1016/s0306-4522(01)00445-6.

- Richer JM, Coss RG. Gaze aversion in autistic and normal children. Acta Psychiatr Scand. 1976;53:193–210. doi: 10.1111/j.1600-0447.1976.tb00074.x.

- Spezio ML, Adolphs R, Hurley RS, Piven J. Analysis of face gaze in autism using “Bubbles”. Neuropsychologia. 2007;45:144–151. doi: 10.1016/j.neuropsychologia.2006.04.027.

- Tranel D, Hyman BT. Neuropsychological correlates of bilateral amygdala damage. Arch Neurol. 1990;47:349–355. doi: 10.1001/archneur.1990.00530030131029.

- Vuilleumier P, Richardson MP, Armony JL, Driver J, Dolan RJ. Distant influences of amygdala lesion on visual cortical activation during emotional face processing. Nat Neurosci. 2004;7:1271–1278. doi: 10.1038/nn1341.

- Whalen PJ. Fear, vigilance, and ambiguity: initial neuroimaging studies of the human amygdala. Curr Dir Psychol Sci. 1999;7:177–187.

- Whalen PJ, Kagan J, Cook RG, Davis FC, Kim H, Polis S, McLaren DG, Somerville LH, McLean AA, Maxwell JS, Johnstone T. Human amygdala responsivity to masked fearful eye whites. Science. 2004;306:2061. doi: 10.1126/science.1103617.