Abstract

Audition and vision both form spatial maps of the environment in the brain, and their congruency requires alignment and calibration. Because audition is referenced to the head and vision is referenced to movable eyes, the brain must accurately account for eye position to maintain alignment between the two modalities as well as perceptual space constancy. Changes in eye position are known to shift sound localization, but the reported effects are variable and inconsistent, suggesting subtle shortcomings in the accuracy or use of eye position signals. We systematically and directly quantified sound localization across a broad spatial range and over time after changes in eye position. A sustained fixation task addressed the spatial (steady-state) attributes of eye position-dependent effects on sound localization. Subjects continuously fixated visual reference spots straight ahead (center), to the left (20°), or to the right (20°) of the midline in separate sessions while localizing auditory targets using a laser pointer guided by peripheral vision. An alternating fixation task focused on the temporal (dynamic) aspects of auditory spatial shifts after changes in eye position. Localization proceeded as in sustained fixation, except that eye position alternated between the three fixation references over multiple epochs, each lasting minutes. Auditory space shifted toward the new eye position by ∼40% of eye position, developing gradually over several minutes. We propose that this spatial shift reflects an adaptation mechanism for aligning the “straight-ahead” of perceived sensory–motor maps, particularly during early childhood when normal ocular alignment is achieved, but also for resolving challenges to normal spatial perception throughout life.

Keywords: auditory localization, adaptation, eye movement, multisensory, spatial perception, gaze

Introduction

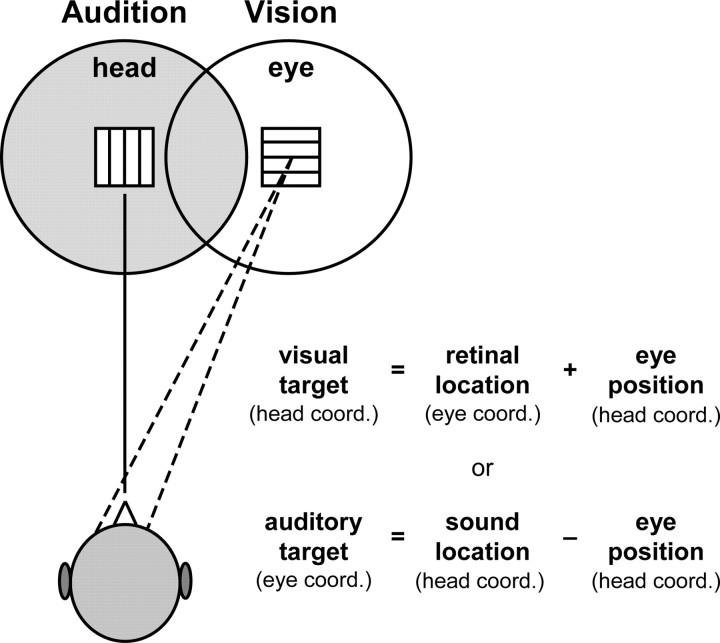

Audition and vision are used by the brain to construct spatial maps of the external world in support of daily activities. However, auditory and visual maps are generated through separate sensory pathways using different mechanisms and coordinate schemes (Knudsen and Brainard, 1995). “Visual space” is eye centered and projects directly onto the retina. In contrast, “auditory space” is head centered and not directly mapped in the cochlea but instead computed centrally based on interaural time and intensity differences and spectral cues from the two ears. Moreover, the auditory and visual frames of reference shift relative to one another during eye movements (Fig. 1). Presumably, the brain takes into account eye position in the head to maintain auditory–visual congruence and space constancy (Groh and Sparks, 1992). Shortcomings in this process would lead to cross-sensory spatial mismatch and potentially erroneous localization of objects that emit both light and sound.

Figure 1.

Eye movements misalign auditory space (left circle) and visual space (right circle). The two sensory maps can only be registered into a common reference frame (eye or head) by taking into account eye position in the head (equations). Otherwise, an object that is both audible (square with horizontal lines) and visible (square with vertical lines) would deliver perceptually conflicting spatial cues. head coord., head coordinates; eye coord., eye coordinates.

Behavioral studies in humans and non-human primates show that eccentric eye position actually shifts sound localization anywhere from 3 to 37% of eye position (Weerts and Thurlow, 1971; Bohlander, 1984; Lewald and Ehrenstein, 1996, 1998; Lewald, 1997, 1998; Yao and Peck, 1997; Getzmann, 2002; Metzger et al., 2004; Lewald and Getzmann, 2006), suggesting limitations in aligning auditory and visual space. However, the reported direction, magnitude, and spatial uniformity of this shift vary greatly among studies, which may reflect differences in fixation duration, auditory stimulus, pointing method, localization strategy, and target environment, plane, and distribution. The reported shifts are conventionally attributed to a partial, but static, mismatch between auditory and visual space, possibly caused by an inaccurate eye position signal (Yao and Peck, 1997; Metzger et al., 2004). Nevertheless, varied findings under such diverse experimental conditions preclude any clear and unifying explanation of why and how eye position influences spatial hearing.

In this study, we used novel paradigms to quantify the influence of eye position on sound localization across a broad spatial field and over time. Our efforts were motivated by a peculiar observation made in a preliminary experiment: spatial gain (i.e., the slope of the response vs target relationship) is greater when the eyes are free and foveal vision guides sound localization using a laser pointer than when the eyes are centrally fixed and peripheral vision guides the pointer. We hypothesized that a dynamic shift in auditory space, linked to changes in eye position during sound localization, accounts for the difference.

Several features of our approach addressed methodological limitations in the literature. First, a large number of targets were distributed widely across the frontal field to avoid spatial quantization (memorization of locations) and assess the spatial uniformity of potential eye position-related effects. The target array included a subset of multisampled locations to better quantify response accuracy. Second, acoustic stimuli were presented continuously to preclude the effects of spatial memory (Lewald and Ehrenstein, 2001; Dobreva et al., 2005). In addition, no feedback was given regarding response outcome, thereby preventing task-related training effects. Third, equalized wideband stimuli ensured that all auditory spatial cues (interaural time, intensity, and spectral) were available and presented at the same sound pressure level (SPL) (Middlebrooks and Green, 1991). Fourth, a laser pointer was used to localize auditory targets. The consistently superior accuracy and precision of vision for guiding the pointer minimized task-related errors. Last, fixation duration and eye position were carefully monitored.

Materials and Methods

Subjects

Twelve human subjects (seven females and five males; age, 21–47 years old) participated in this study. All subjects were recruited from the University of Rochester community and were experienced in other sound localization studies in our laboratory. Subjects were screened with routine clinical examinations to ensure that they were free of neurological or sensory abnormalities and had normal clinical audiograms (0.25–8 kHz). The study was performed with approval from the University of Rochester Research Subjects Review Board in accordance with the 1964 Declaration of Helsinki. All subjects gave informed consent and were compensated for their participation.

Target apparatus and positioning

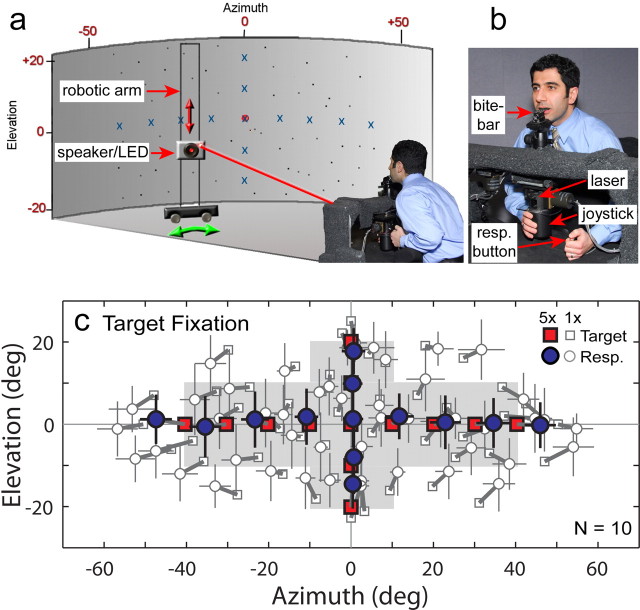

Subjects sat in a dark room lined with acoustic foam (Sonex; Illbruck, Minneapolis, MN) backed by high-density vinyl to attenuate echoes and extrinsic sounds, respectively. They faced the center of a cylindrical screen of acoustically transparent black speaker cloth at 2 m distance (Fig. 2a). The head was fixed using a personalized bite-bar (dental impression compound over a steel bite plate) and positioned at the origin of the cylindrical screen (Fig. 2b). Reid's baseline, an imaginary line extending from the inferior margin of the orbit to the superior aspect of the auditory meatus, was aligned with the horizontal plane. This baseline served as a reliable anatomical landmark for consistent positioning of subjects over multiple experimental sessions. Acoustic targets were presented using an 8-cm-diameter (2.2° subtended angle) two-way coaxial speaker (model PCx 352; Blaupunkt, Hildesheim, Germany). A 2-mm-diameter red light-emitting diode (LED) was mounted at the center of the speaker. The LED projected a spot onto the back of the cylindrical screen to serve as a visual target (3 mm diameter, ≈ 0.1° subtended angle) for calibration of the speaker positioning system and laser pointer (see below). The speaker/LED assembly was installed on a two-axis servo-controlled robotic arm hidden behind the cylindrical screen. The setup enabled rapid, accurate, and precise positioning of the speaker in cylindrical coordinates and provided an unlimited, continuous array of targets over the range of ±65° azimuth and ±25° elevation (Perrett and Noble, 1995).
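The subtended angles quoted above follow from the standard visual-angle formula, 2·atan(size / (2·distance)). The short sketch below evaluates it at the 2 m screen radius; treating each object as viewed face-on at exactly 2 m is a simplifying assumption (the speaker actually sits behind the screen), so the values are approximate. The function name `subtended_angle_deg` is hypothetical.

```python
import math

def subtended_angle_deg(size_m, distance_m):
    """Full visual angle (degrees) of an object of a given size viewed
    face-on at a given distance: 2 * atan(size / (2 * distance))."""
    return math.degrees(2.0 * math.atan(size_m / (2.0 * distance_m)))

SCREEN_RADIUS_M = 2.0  # viewing distance to the cylindrical screen

# Sizes taken from the text; the 1 mm laser-pointer spot is described under Response measures.
for label, size_m in [("speaker, 8 cm", 0.08),
                      ("LED spot, 3 mm", 0.003),
                      ("laser-pointer spot, 1 mm", 0.001)]:
    print(f"{label}: {subtended_angle_deg(size_m, SCREEN_RADIUS_M):.3f} deg")
```

Under these assumptions the speaker subtends roughly 2.3°, the LED spot roughly 0.09°, and the pointer spot roughly 0.03°, close to the approximate values given in the text.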

Figure 2.

Experimental setup: all experiments were conducted inside a darkened, echo-attenuated room. a, Subjects faced a black cylindrical screen (shaded) behind which nonvisible auditory targets were presented across the frontal field from a small loudspeaker under robotic control. Three laser LEDs (0° azimuth, red spot; left and right 20°, not shown) projected directly onto the screen and served as reference spots for fixation. b, The head was fixed using a custom bite-bar, and sound location was reported using a visually guided laser pointer mounted on a cylindrical joystick. resp. button, Response button. c, Plot of auditory target locations and mean subject responses during the target fixation task. The eyes were unconstrained and foveally guided the laser pointer. Targets (squares) and associated responses (circles) are connected with thick lines. The targets in the shaded area and the accompanying responses were used to calculate spatial gain, offset, and accuracy (see Figs. 3, 5). “Multisampled” targets (filled squares) were presented five times (5×) in each subject; all others (open squares) were sampled only once (1×). Horizontal and vertical error bars [thin lines through each mean response (Resp.)] are SDs in this and all subsequent figures.

Spatially diffuse, Gaussian white noise (65 dB SPL) presented between trials during speaker movements masked potential predictive positional cues emanating from the motors and mechanics of the device. The masking noise was delivered through two stationary loudspeakers placed in the corners of the room (±75° azimuth, 20° elevation). Furthermore, the robotic arm positioned the target speaker in two steps, first to a random azimuth and then to its final location. This approach decoupled target travel time from the actual change in target location across trials, another possible predictive cue. Three red laser LEDs rigidly mounted to the room ceiling above the subject projected fixation spots (3 mm projected diameter, ≈0.1° subtended angle) directly onto the cylindrical screen at the center (Ctr; 0°), 20° left (L20°), or 20° right (R20°), all at 0° elevation.
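The benefit of the two-step positioning can be illustrated with a toy model. The sketch below assumes, for illustration only, that travel time scales with total azimuth travel and that the intermediate azimuth is drawn uniformly within the ±65° range of the arm; `two_step_path` is a hypothetical helper, not the control software used in the study. Because the detour is random, the same target displacement produces a different travel distance on every trial.

```python
import random

AZ_LIMIT_DEG = 65.0  # azimuth range of the robotic arm stated in the text

def two_step_path(start_az, final_az, rng):
    """Return (intermediate azimuth, total azimuth travel) for a move that
    first goes to a random azimuth and then to the final target azimuth."""
    via = rng.uniform(-AZ_LIMIT_DEG, AZ_LIMIT_DEG)
    travel = abs(via - start_az) + abs(final_az - via)
    return via, travel

rng = random.Random(1)
for _ in range(3):
    via, travel = two_step_path(0.0, 10.0, rng)
    # The direct move would always be 10 deg; the two-step path varies.
    print(f"via {via:+6.1f} deg -> total travel {travel:5.1f} deg")
```

By the triangle inequality the total travel is never shorter than the direct displacement, but it varies widely around it, so travel duration carries little information about where the target ends up.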

Stimulus characteristics

The auditory stimulus consisted of 150 ms bursts of fresh wideband (0.1–20 kHz) Gaussian noise (equalized, 75 dB SPL), repeating at 5 Hz. The noise bursts were synthesized digitally using SigGenRP software and presented through TDT System II hardware (Tucker-Davis Technologies, Alachua, FL). Because target positions were presented in a cylindrical coordinate plane, targets at extreme elevations (±25°) were ∼21 cm farther from the subject and possibly of slightly lower intensity than targets at 0° elevation. To mask such potential elevation-dependent loudness cues, as well as any idiosyncratic amplitude cues related to target location, stimulus levels were randomly varied from trial to trial between 70 and 75 dB SPL in 1 dB steps.
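The ∼21 cm figure follows from the cylindrical geometry: with the head at the origin of a 2 m radius screen, a target at elevation e lies at a slant distance of 2 m / cos e. The sketch below checks this and, under an added assumption of free-field inverse-square spreading (not stated in the text), estimates the corresponding level difference. It also draws a per-trial level from the 70 to 75 dB SPL rove. All helper names are hypothetical.

```python
import math
import random

R_M = 2.0  # radius of the cylindrical screen, with the head at its origin

def slant_distance_m(elev_deg, radius_m=R_M):
    """Distance from the head to a target on the cylinder at a given elevation."""
    return radius_m / math.cos(math.radians(elev_deg))

def inverse_square_drop_db(elev_deg, radius_m=R_M):
    """Level drop relative to 0 deg elevation, assuming free-field spherical spreading."""
    return 20.0 * math.log10(slant_distance_m(elev_deg, radius_m) / radius_m)

def roved_level_db(rng):
    """Per-trial stimulus level: 70-75 dB SPL in 1 dB steps."""
    return rng.choice(range(70, 76))

extra_cm = 100.0 * (slant_distance_m(25.0) - R_M)
print(f"extra path at +/-25 deg elevation: {extra_cm:.1f} cm")   # about 20.7 cm
print(f"inverse-square drop: {inverse_square_drop_db(25.0):.2f} dB")  # about 0.85 dB
print("example roved level:", roved_level_db(random.Random(0)), "dB SPL")
```

Under these assumptions the elevation-dependent level difference is under 1 dB, well inside the 5 dB range spanned by the random level rove.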

Response measures

The pointer consisted of a red laser LED (1 mm projected diameter, <0.03° subtended angle) mounted on a freely rotating two-axis cylindrical joystick providing no tactile cues related to its position (Fig. 2b). The joystick was coupled to two orthogonal optical encoders (resolution, <0.1°) that directly measured its angle in azimuth and elevation. The utility of visually guided (laser) pointing for sound localization has been demonstrated both in our own laboratory (Paige et al., 1998) and in others (Lewald and Ehrenstein, 1998; Seeber, 2002; Choisel and Zimmer, 2003). The laser pointer offers two advantages for localizing auditory targets. First, the pointer provides an unlimited, continuous array of response choices with a range extending beyond that of the targets. Thus, localization is not biased by spatial quantization because of limited response choices, windowing, or expectation effects (Perrett and Noble, 1995). Second, the minute projected beam of the laser pointer provides superior spatial resolution in the pointing device, thereby ensuring that responses reflect the subject's localization performance, not the pointing technique itself. We confirmed this by comparing localization of auditory and visual targets across the frontal field. Subjects guided the laser pointer using either the fovea or peripheral vision (eyes fixed and centered). Accuracy and precision for visual targets (<1°) far surpassed those for auditory targets (<6°). Moreover, laser pointing constitutes a form of direct pointing that has been shown to yield superior sound localization performance compared with indirect techniques, which require more training and higher cognitive participation (Brungart et al., 2000).

For each target presentation, response end point was registered with a key press by the subject, and the target and pointer positions were then recorded and transformed into azimuth and elevation following a cylindrical coordinate scheme (Razavi et al., 2005a). Response times were typically ∼4 s. Subjects used their preferred hand to move the joystick and the other to press the response button. No feedback was ever given on localization performance.

At the end of each experiment, localization of visual targets (projected from the speaker-mounted LED) in a range comparable to the auditory targets served as a calibration of the robotic arm and the subjects' ability to perform the task.

Experimental paradigms

Three localization paradigms assessed the influence of eye position on sound localization in space and over time. Target locations were always chosen randomly to preclude prediction. Localization trials followed the same sequence in all paradigms. A trial began with the onset of the masking noise and positioning of the speaker. The masking noise was switched off once the speaker reached its final target location. The auditory stimulus was then presented, and localization began immediately. The stimulus continued to play until the subject signaled the end of localization by pressing the response button. At this time, the stimulus was extinguished and the next trial started without delay.

Target fixation.

Subjects used eye movements and foveal vision to guide the laser pointer and align the projected spot with the perceived sound location. Thus, eye movements were unconstrained and were indeed an integral part of the localization process. Targets consisted of 113 random locations selected across ±50° azimuth and ±25° elevation (Fig. 2c). The target array included a subset of multisampled locations (five samples each) at 10° intervals along the primary meridians (±40° in azimuth and ±20° in elevation). Each experiment was divided into two sets of 55–60 trials. Each set of trials lasted 13–20 min depending on the number of trials and subjects' response times. Subjects rested up to 20 min between the trial sets.
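The multisampled subset can be enumerated directly from its description. The sketch below assumes that both meridians include 0° and that the shared straight-ahead location is counted once; the function name is hypothetical.

```python
def multisampled_locations(az_max=40, el_max=20, step=10):
    """(azimuth, elevation) pairs at `step`-degree intervals along the horizontal
    (0 deg elevation) and vertical (0 deg azimuth) meridians; the shared
    straight-ahead location appears only once."""
    horizontal = {(az, 0) for az in range(-az_max, az_max + 1, step)}
    vertical = {(0, el) for el in range(-el_max, el_max + 1, step)}
    return sorted(horizontal | vertical)

REPEATS = 5  # each multisampled location was presented five times
locs = multisampled_locations()
print(len(locs), "locations ->", len(locs) * REPEATS, "presentations")  # 13 -> 65
```

Under these assumptions the subset comprises 9 horizontal and 5 vertical positions sharing one center point, for 13 locations and 65 presentations.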

Sustained fixation.

Subjects fixated one of the three visual spots (in separate sessions) continuously during an entire set of trials while localizing the same set of auditory targets as in target fixation, but now guiding the laser pointer with peripheral vision. Thus, pointer guidance relied strictly on peripheral vision because eye position was constrained to the fixation spot.

Alternating fixation.

Subjects maintained fixation on one of the three visual spots in a sequence of five separate but contiguous epochs comprising a single session. Targets consisted of 35 random locations: 21 of these targets were within the central ±10° azimuth and elevation (see Fig. 6c), and another 14 locations extended azimuth to ±25°. These extended targets were introduced in the second half of each fixation epoch and randomly interleaved with the central ones to determine whether an initial effect of eye position on sound localization in one part of the field transfers to another. The spatial distribution and order of presentation of the targets were identical for all the epochs, with the exception of the last one (Ctr fixation), which was limited to the central ±10° azimuth. A session always started with Ctr fixation and then alternated between L20°, R20°, and L20° before returning to Ctr. The order of L20° and R20° fixation was reversed for a second session. Because fixation started from center and alternated between the two frontal hemifields, the paradigm measured the dynamics of the shift in response to a ±20° (e.g., epoch 1 → 2) as well as ±40° (e.g., epoch 2 → 3) change in eye position. Epochs typically lasted 7–14 min, depending on subjects' response times, whereas an entire session of five epochs lasted 30–50 min.
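The epoch schedule and the resulting eye-position steps can be written out explicitly. The sketch assumes leftward positions are negative, consistent with the negative shift reported for L20° fixation in Results; the helper names are hypothetical.

```python
FIXATION_DEG = {"Ctr": 0, "L20": -20, "R20": 20}  # leftward negative (assumed convention)

def session_sequence(first_eccentric="L20"):
    """Five-epoch order: Ctr, then alternate eccentric sides, then back to Ctr."""
    other = "R20" if first_eccentric == "L20" else "L20"
    return ["Ctr", first_eccentric, other, first_eccentric, "Ctr"]

def eye_position_changes(sequence):
    """Change in eye position (deg) at the start of each epoch after the first."""
    return [FIXATION_DEG[b] - FIXATION_DEG[a] for a, b in zip(sequence, sequence[1:])]

for first in ("L20", "R20"):  # first and second sessions
    seq = session_sequence(first)
    print(seq, eye_position_changes(seq))
```

Each session therefore contains two 20° steps (epochs 2 and 5) and two 40° steps (epochs 3 and 4), with the signs reversed between the two sessions.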

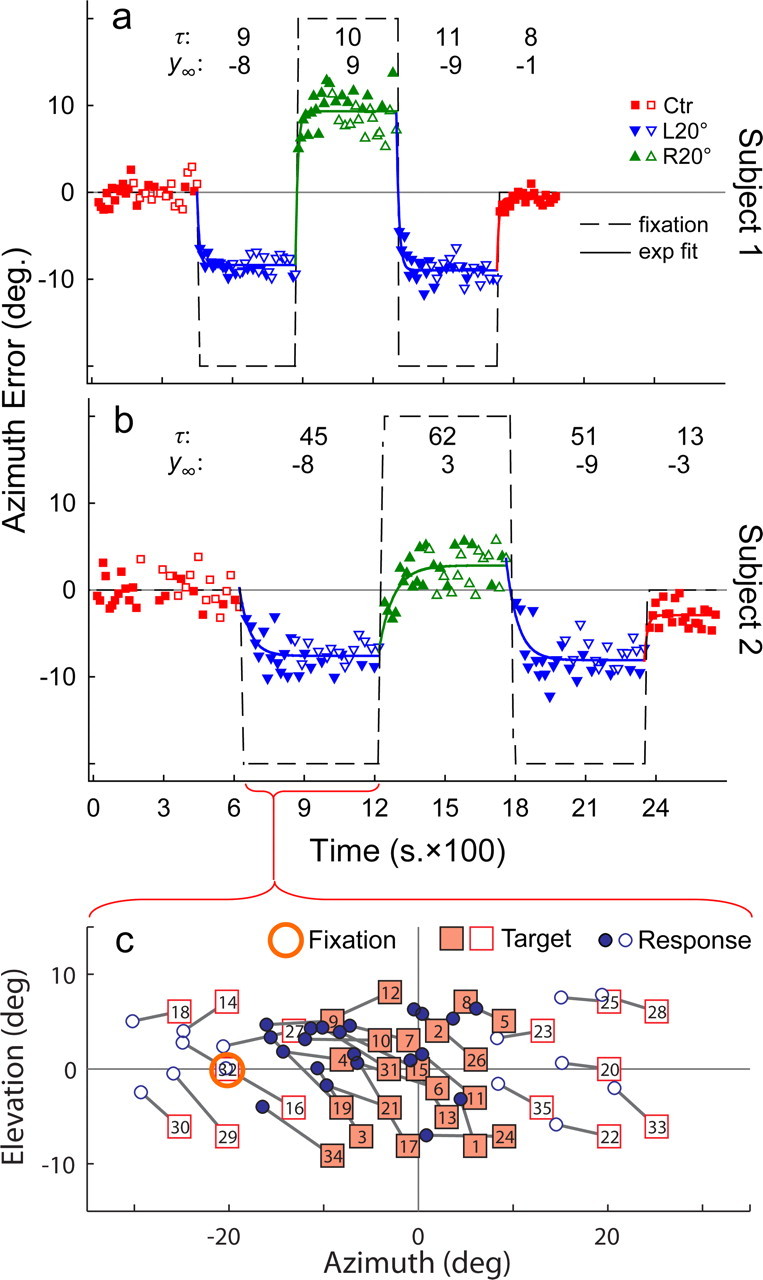

Figure 6.

a, b, Localization error over time during one session of the alternating fixation task in two subjects. Eye position alternated between L20° (upward triangles) and R20° (downward triangles) in 7–14 min epochs. All responses were offset by average error during the initial period of Ctr fixation (squares). The numbers displayed at the top of each panel are the time constant (τ, in seconds) and steady-state magnitude (y∞, in degrees) of the shift for each epoch based on a first-order exponential (exp) model (see Materials and Methods). Subject 1 (a) had a larger steady-state shift magnitude (8.90° ± 0.48 SD) but smaller time constant (9.66 s ± 1.30 SD) than subject 2 (b). Errors for central targets (filled symbols; inside ±10° azimuth) and peripheral targets (open symbols; outside ±10° azimuth) are comparable (see Fig. 7a), indicating that the shift transfers from central to peripheral space in the absence of previous exposure to the peripheral targets. Data from a second session (separate day; data not shown), in which the order of L20° and R20° fixation was switched, are similar. c, Two-dimensional “space” plot corresponding to an epoch of L20° fixation for subject 2 (b). The value inside each target symbol denotes the trial number. Filled and open symbols indicate targets (and associated responses) within and outside of ±10° azimuth, respectively. Consistent with the time plot (b), the azimuth component of the response shows a small leftward shift beginning in trial 1 that grows progressively larger with subsequent trials. Furthermore, the effect is so robust that it overcomes visual capture, and even the target spatially aligned with the fixation reference (trial 32) is perceived to be shifted.

Ten subjects participated in the target and sustained fixation tasks, and 11 subjects participated in the alternating fixation task. Nine subjects participated in all three tasks. Accurate and persistent fixation was assured during all sustained and alternating fixation experiments. Subjects were encouraged to report via the room intercom instances when fixation was interrupted. Voluntary reporting actively engaged subjects in the task, promoted vigilance, and emphasized the importance of maintaining steady fixation. The operator also monitored fixation continuously using electro-oculography (EOG), noting whenever inadvertent eye movements occurred and reinstructing subjects to maintain fixation on the projected reference spot. In addition to the EOG, real-time monitoring of the laser-pointing position also ensured that subjects were performing the paradigms correctly. Despite the challenging nature of the task, all subjects had little trouble maintaining fixation for long periods and only rarely made eye movements. These interruptions in fixation typically comprised a saccade away from, and then immediately back to, the fixation spot. Off-line inspection of EOG records (∼3000 trials) collected during the sustained (two subjects) and alternating (eight subjects) fixation tasks confirmed that such saccadic intrusions were infrequent and occurred in only 0–8% (median, 2%) of trials in a given experimental session. These easily identifiable breaks from fixation lasted 60–1000 ms (median, 350 ms) and were 1–30° (median, 3°) in magnitude, almost always toward the center. Trials during which fixation was broken were excluded from analysis.

Model for the dynamics of the auditory spatial adaptation

The time course of the shift (or adaptation) in sound localization in response to a change in eye position was parameterized using the first-order exponential equation:

$$y(t) = y_0 + a\left(1 - e^{-t/\tau}\right) \qquad (1)$$

where τ is the time constant of the adaptation and captures its pace, and 1/τ is its bandwidth or rate. Smaller values of τ indicate faster adaptation or a larger bandwidth. y0 estimates the initial value of the shift (starting point) at the onset of eccentric fixation (i.e., at t = 0), which may reflect a small (static) error in the signal conveying immediate changes in eye position (before any further adaptation). a is the amplitude (from starting point to asymptote) of the adaptation for a given change in eye position. Accordingly, the quantity (y0 + a) predicts the steady-state magnitude of the adaptation (y∞; asymptote) that results from prolonged fixation (i.e., as t → ∞). Nonlinear regression was used to estimate the parameters (y0, a, τ) by minimizing the sum-squared error between the exponential equation and the data (localization error). The parameters were calculated separately for each epoch (2–5) comprising a change in fixation.
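The text specifies only that nonlinear regression minimized the sum-squared error. One stdlib-only way to carry out such a fit, not necessarily the authors' procedure, exploits the fact that for a fixed τ the model is linear in y0 and a: those two parameters are solved exactly from the 2×2 normal equations, and τ is chosen from a grid. The function name and the `tau_grid` argument are hypothetical; t is time since the change in fixation (s) and y is localization error (deg).

```python
import math

def fit_first_order_exponential(t, y, tau_grid):
    """Least-squares fit of y(t) = y0 + a * (1 - exp(-t / tau)).

    For each candidate tau, (y0, a) come from the closed-form normal equations;
    the tau with the smallest sum-squared error is returned with them."""
    n = len(t)
    best = None
    for tau in tau_grid:
        b = [1.0 - math.exp(-ti / tau) for ti in t]
        sb = sum(b)
        sbb = sum(bi * bi for bi in b)
        sy = sum(y)
        sby = sum(bi * yi for bi, yi in zip(b, y))
        det = n * sbb - sb * sb
        if det <= 0:  # basis constant over t: y0 and a are not separable
            continue
        y0 = (sy * sbb - sb * sby) / det
        a = (n * sby - sb * sy) / det
        sse = sum((yi - (y0 + a * bi)) ** 2 for bi, yi in zip(b, y))
        if best is None or sse < best[0]:
            best = (sse, y0, a, tau)
    if best is None:
        raise ValueError("no identifiable fit on this tau grid")
    _, y0, a, tau = best
    return y0, a, tau

# Synthetic, noise-free epoch (illustrative values, not data): y0 = 1 deg, a = 7 deg, tau = 60 s.
t = [15.0 * k for k in range(41)]  # 0 to 600 s
y = [1.0 + 7.0 * (1.0 - math.exp(-tk / 60.0)) for tk in t]
y0, a, tau = fit_first_order_exponential(t, y, range(1, 601))
print(f"y0 = {y0:.3f} deg, a = {a:.3f} deg, tau = {tau} s, y_inf = {y0 + a:.3f} deg")
```

A finer grid (or a local refinement around the best grid point) trades run time for resolution in τ; y0 and a are always exact for the chosen τ.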

Equation 1 was also used to predict the change in spatial gain when the eyes directly guide localization (target fixation task) in contrast to when they remain centrally fixed (Ctr fixation task). Predictions were made separately for every subject based on their shift parameters (y0, a, τ) obtained from the alternating fixation task using the exponential model and response durations from the target fixation task. Predictions were made only for peripheral auditory space, because performance in central space is influenced by the effect of visual capture during the Ctr fixation task (see Results).

The goal was to predict the shift in sound localization attributable to eye movements during the target fixation task. Because the eye movement on each trial is proportional in magnitude to target eccentricity, the prediction of the model translates to a change in spatial gain. To implement the exponential model, initial offset y0 was assigned the difference between the initial value of the shift at the onset of a given fixation epoch (at t = 0) and its final value from the previous one. The estimated amplitude of the shift was used for a. Both y0 and a were then normalized to their corresponding change in eye position to reference their values to an ideal gain of 1.0 and facilitate prediction for changes in spatial gain. For example, a was normalized to 0.5 when the exponential fit yielded 10° for the amplitude of the shift (see above) in response to a 20° change in eye position during the alternating fixation task. If y0 = 0, a shift time constant of 1 min and sustained fixation beyond 3 min (3× time constant) would increase spatial gain by nearly 0.5 (at least 95% of y∞ = y0 + a). τ was simply set to the time constant estimated from the exponential model. In each subject, the resulting three parameters (y0, a, τ) were averaged across L20° and R20° fixation and both sessions.

The change in gain during target fixation also depends on a subject's localization response time (t), which determines how long the eyes remain at their new position on a given trial (typically ∼4 s). This parameter was estimated as the average localization response time for peripheral targets (±10° azimuth or more; left and right fields pooled) during the target fixation task. With the four parameters (y0, a, τ, t) plugged into Equation 1, y(t) predicts the extent to which eye movements alter spatial gain during sound localization. The predictions were made for the nine subjects who participated in all experiments. Note that because Equation 1 depends on t and τ only through their ratio t/τ, different combinations of τ and t with the same ratio produce the same predicted change in spatial gain for the target fixation trials.
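The prediction procedure can be sketched as Equation 1 evaluated at the response time, with y0 and a normalized by the change in eye position. The function name and the parameter values in the example are hypothetical illustrations, not fitted values from any subject; normalizing by the signed eye-position change makes leftward shifts during leftward gaze count as positive gain changes.

```python
import math

def predicted_gain_change(y0_deg, a_deg, tau_s, response_time_s, eye_change_deg):
    """Equation 1 evaluated at the response time, with y0 and a normalized by
    the (signed) change in eye position that produced them."""
    y0_n = y0_deg / eye_change_deg
    a_n = a_deg / eye_change_deg
    return y0_n + a_n * (1.0 - math.exp(-response_time_s / tau_s))

# Worked example from the text: a = 10 deg after a 20 deg change (a_n = 0.5), y0 = 0,
# tau = 1 min, fixation held for 3 min (3 time constants).
print(predicted_gain_change(0.0, 10.0, 60.0, 180.0, 20.0))  # about 0.475
# Same hypothetical parameters with a single ~4 s target-fixation response:
print(predicted_gain_change(0.0, 10.0, 60.0, 4.0, 20.0))    # about 0.032
```

Because only t/τ enters the exponential, doubling both τ and t leaves the prediction unchanged.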

Statistical analysis

The arithmetic mean and SD were used as the measures of central tendency and variability, respectively, for all summary statistics. We applied Student's t test to evaluate statistical significance related to different experimental conditions. Using this test was justifiable because data sets typically contained ∼10 or more samples (i.e., subjects). p values were also calculated to assign statistical significance to regression parameters (e.g., slope and intercept) whenever regression analysis was used to parameterize the relationship between variables. Statistical tests were one-tailed and performed at a 5% (α = 0.05) significance level.
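As a worked example of a one-sample comparison against the ideal gain of 1.0, the t statistic can be computed directly from summary statistics reported in Results. The sketch assumes n = 10 (the number of subjects in the target fixation task); a paired-sample test is the same computation applied to within-subject differences with a null value of 0. The one-tailed p value is then the upper-tail probability of Student's t distribution with n − 1 degrees of freedom, available for example as `scipy.stats.t.sf(t, df)`.

```python
import math

def one_sample_t(mean, sd, n, mu0):
    """Student's t statistic for H0: population mean == mu0, from summary statistics."""
    return (mean - mu0) / (sd / math.sqrt(n))

# Target-fixation spatial gain from Results: 1.16 +/- 0.08 (mean +/- SD), n = 10 assumed.
t = one_sample_t(1.16, 0.08, 10, 1.0)
print(f"t({10 - 1}) = {t:.2f}")  # about 6.32
```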

Results

Target fixation

Sound localization in azimuth consistently overshot target position when the eyes were unconstrained and foveal vision guided localization (Fig. 2c; see Fig. 5a). However, responses remained closely centered on the median plane of the head. We quantified the relationship between response and target position using linear regression to yield spatial gain (slope) and offset (intercept) based on a straight-line equation (Fig. 3a,b; see Fig. 5b). Gain was significantly greater than the ideal of 1.0 (1.16 ± 0.08, mean ± SD, or an overshoot of 16%; p ≪ 0.0001), whereas offset was negligible (−0.12 ± 1.59°; p = 0.41).
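The gain and offset measures amount to an ordinary least-squares line through response versus target azimuth. The sketch below uses hypothetical, noise-free responses built from the reported mean gain and offset, and also checks the equivalence used in Figure 3: the slope of error (response minus target) versus target equals the gain minus 1.0. The helper name `fit_line` is hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares slope and intercept of y on x."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, my - slope * mx

targets = [-40, -30, -20, -10, 0, 10, 20, 30, 40]      # azimuth, deg
responses = [1.16 * tgt - 0.12 for tgt in targets]     # hypothetical, noise-free
gain, offset = fit_line(targets, responses)
errors = [r - tgt for r, tgt in zip(responses, targets)]
err_slope, _ = fit_line(targets, errors)
print(f"gain = {gain:.2f}, offset = {offset:.2f} deg, error slope + 1 = {err_slope + 1.0:.2f}")
```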

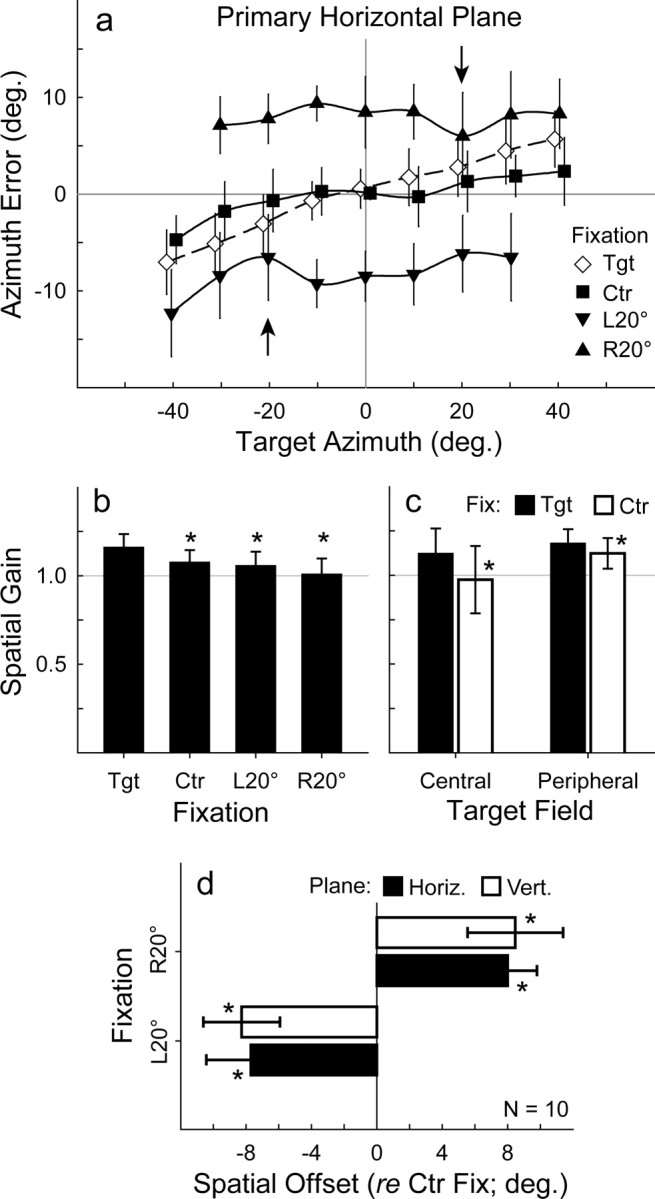

Figure 5.

a, Sound localization accuracy in azimuth during target (Tgt; ◇), Ctr (■), L20° (▴), and R20° (▾) fixation along the primary horizontal plane (0° elevation). For Ctr, L20°, and R20° fixation, errors were adjusted for each subject by subtracting the error for the straight-ahead targets during Ctr fixation and then averaged across the population to yield means and SD. Data points for target and Ctr fixation are offset slightly for clarity. Arrows mark the locations of the two eccentric fixation spots. The shifts during L20° and R20° are robust and span the entire target range. b, Overall spatial gain for target versus sustained fixation tasks (Ctr, L20°, or R20°). c, d, Central versus peripheral spatial gain in the target versus Ctr fixation tasks (c) and spatial offset (d). The asterisks denote statistical significance (p < 0.05, paired-sample Student's t test) for the difference between spatial gains during target and sustained (Ctr, L20°, or R20°) fixation (b, c) or spatial offsets during Ctr and eccentric (L20° or R20°) fixation (d). Fix, Fixation; Horiz., horizontal; Vert., vertical.

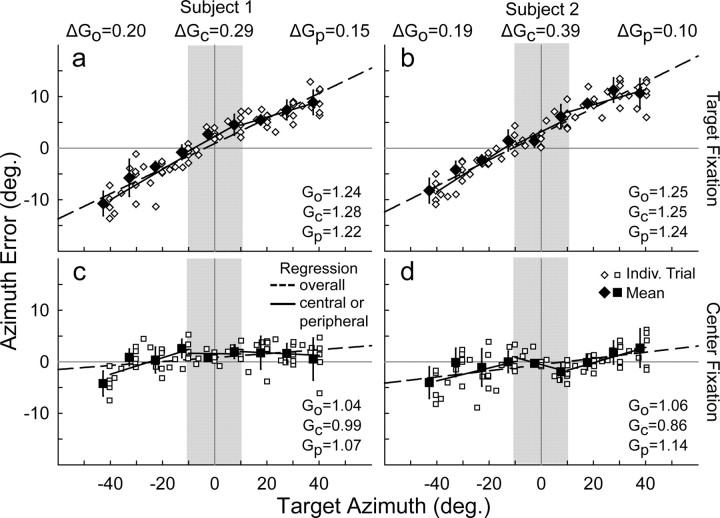

Figure 3.

Sound localization accuracy (error between response and target location) in azimuth for target (a, b) and Ctr (c, d) fixation tasks from two representative subjects (a and c vs b and d). Each point (open symbols) is the error for a single trial. Mean error values for the multisampled targets (solid symbols) are offset slightly to the left for clarity. Spatial gain (G) was calculated as the slope of the response versus target relationship. G is equivalent to the slope of the error versus target relationship (as displayed here), plus 1.0. Positive and negative slopes (i.e., G > 1.0 or G < 1.0) indicate response overshoot and undershoot, respectively. Go is overall gain (dashed lines) across the entire frontal field (see Fig. 2c, shaded area). Gc and Gp are central (limited to ±10° azimuth; shaded area) and peripheral (≤−10° and ≥10° azimuth) gains, respectively (solid lines). ΔG values (shown above a and b) indicate the difference in spatial gain between target and Ctr fixation. The two subjects showed comparable changes in overall spatial gain but contrasting changes in central and peripheral gains between the two tasks. Indiv. Trial, Individual trial.

Center fixation

Compared with target fixation, in which the eyes were free to move, spatial gain decreased in all subjects except one, to an average of 1.08 (±0.07), when the eyes were fixed centrally and the laser pointer was guided by peripheral vision (Figs. 4a, 5a,b). This 8% reduction in spatial gain (p = 0.003) brought localization closer to, but not equal to, ideal performance (p = 0.004, relative to 1.0).

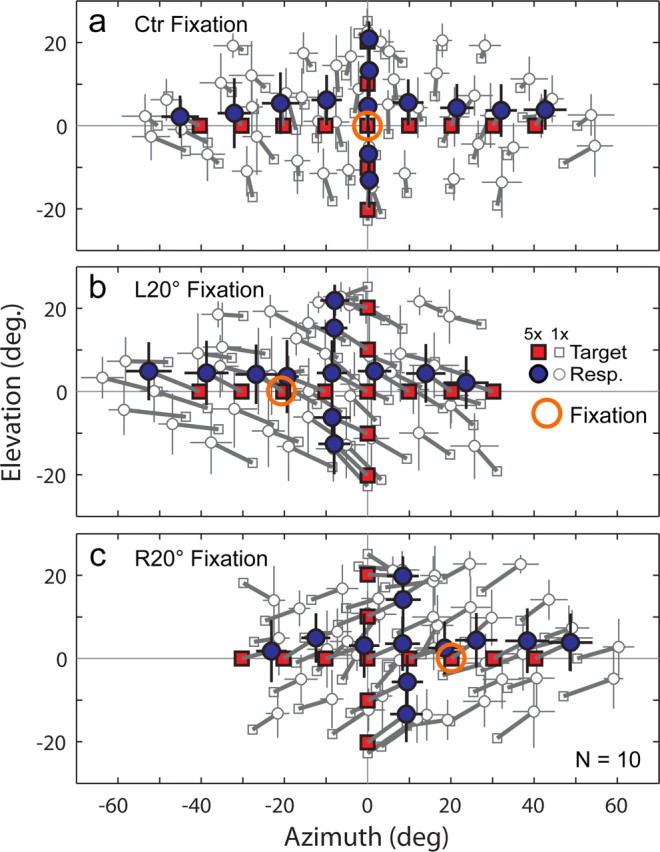

Figure 4.

Sound localization during Ctr (a), L20° (b), and R20° (c) sustained fixation (uninterrupted for 13–20 min). The format is the same as Figure 2c. Responses (Resp.) are shifted in the direction of eye position for the entire target range (frontal field) during sustained L20° and R20° fixation but are centered during Ctr fixation. The effect is so robust that even targets spatially aligned with the fixation spot (open circle) are perceived to be shifted. Targets beyond +30° and −30° azimuth were eliminated for L20° and R20° fixation, respectively, to avoid localization in the extreme visual periphery.

Visual capture, related to the well-known ventriloquism effect (Recanzone, 1998; Battaglia et al., 2003; Alais and Burr, 2004), likely biased the perceived location of auditory targets toward the central fixation spot and reduced spatial gain in its vicinity. This visual bias in central space reduced the overall gain calculated across the entire frontal field (Fig. 3, compare a, b with c, d). We surmised that changes in peripheral gain during Ctr fixation would more accurately reflect the impact of constraining the eyes, independent of visual capture. Consequently, we calculated spatial gain separately for central (azimuth, ±10° or less) and peripheral (azimuth, ±10° or more; averaging left and right fields) auditory space (Figs. 3, 5c). Piece-wise regression revealed that central gain decreased from 1.12 (±0.14) for target fixation to 0.98 (±0.19) for Ctr fixation (14% drop; p = 0.02). In contrast, peripheral gain decreased notably less, but still significantly, from 1.18 (±0.08) for target fixation to 1.12 (±0.09) for Ctr fixation. This 6% drop (p = 0.01) in peripheral gain resulting from restraining the eyes is considerably less than the 14% decrement in central gain, presumably because the Ctr fixation reference visually captured sound localization in its vicinity.
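The central/peripheral split can be sketched as separate regressions on subsets of trials. The sketch assumes that targets at exactly ±10° enter both subsets (matching "±10° or less" and "±10° or more") and that peripheral gain is the mean of separate left-field and right-field slopes, one reading of "averaging left and right fields". The response function below is hypothetical, with values chosen only to echo the reported Ctr-fixation means; it is not data.

```python
import math

def ols_slope(points):
    """Least-squares slope of response on target for (target, response) pairs."""
    n = len(points)
    mx = sum(p[0] for p in points) / n
    my = sum(p[1] for p in points) / n
    sxx = sum((p[0] - mx) ** 2 for p in points)
    sxy = sum((p[0] - mx) * (p[1] - my) for p in points)
    return sxy / sxx

def central_and_peripheral_gain(targets, responses, border_deg=10.0):
    pairs = list(zip(targets, responses))
    central = [p for p in pairs if abs(p[0]) <= border_deg]
    left = [p for p in pairs if p[0] <= -border_deg]
    right = [p for p in pairs if p[0] >= border_deg]
    g_c = ols_slope(central)
    g_p = 0.5 * (ols_slope(left) + ols_slope(right))  # mean of left and right fields
    return g_c, g_p

def hypothetical_response(tgt):
    # Compressed near the central fixation spot, expanded in the periphery,
    # and continuous at +/-10 deg. Illustrative values only.
    if abs(tgt) <= 10:
        return 0.98 * tgt
    return 1.12 * tgt - math.copysign(1.4, tgt)

targets = list(range(-40, 41, 5))
responses = [hypothetical_response(tgt) for tgt in targets]
g_c, g_p = central_and_peripheral_gain(targets, responses)
print(f"Gc = {g_c:.2f}, Gp = {g_p:.2f}")  # Gc = 0.98, Gp = 1.12
```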

Sustained eccentric fixation

Results from the Ctr fixation task show that restraining the eyes reduces peripheral spatial gain by 6% compared with when the eyes are allowed to move freely and guide localization. The brief eye movements used to guide the laser pointer in the target fixation task constitute changes in eye position (lasting 4 s, on average) that are proportional to target eccentricity. The 6% difference suggests that even brief changes in eye position proportionally alter perceived auditory space during sound localization. To further explore this 6% contribution of eye movements to peripheral gain, we systematically quantified the influence of prolonged eccentric eye position on sound localization using a sustained fixation paradigm. Subjects localized auditory targets presented widely across the frontal field while continuously fixating an eccentric visual reference (L20° or R20°, as opposed to Ctr) for long periods (13–20 min).

Sound localization shifted systematically in the same direction as eye position during both L20° (Fig. 4b) and R20° (Fig. 4c) fixation. Several observations are noteworthy. First, the direction of the shift was consistent across subjects. Second, the shift reached ∼40% of eye position for both L20° (−7.70 ± 2.73°; p ≪ 0.0001) and R20° (7.99 ± 1.80°; p ≪ 0.0001) fixation (Fig. 5d). Third, the effect was so robust that even targets spatially aligned with the eccentric fixation reference (i.e., when subjects looked directly at the speaker hidden behind the screen) (Fig. 4b,c, large open circle) were perceived to be shifted farther in the direction of gaze, overcoming visual capture. Fourth, responses to targets along the midline of the head and elsewhere show that the shift extended across the frontal field. Last, the magnitude of the shift was slightly reduced in the vicinity of the fixation reference (Fig. 5a, arrows), presumably reflecting a modest effect of visual capture.

Time course of the auditory spatial shift

Sustained eccentric eye position for an extended period produced a large shift in sound localization across the frontal field. In contrast, past studies have typically reported markedly smaller shifts while using only transient eccentric fixation (Weerts and Thurlow, 1971; Bohlander, 1984; Lewald and Ehrenstein, 1996, 1998; Lewald, 1997, 1998; Yao and Peck, 1997; Getzmann, 2002; Metzger et al., 2004; Lewald and Getzmann, 2006). We surmised that the eye position-driven shift in sound localization is time dependent and represents a form of physiological adaptation. We quantified its dynamics using an alternating fixation paradigm. Starting and ending with Ctr fixation, eye position alternated between L20° and R20° over multiple epochs, each lasting 7–14 min (Fig. 6a,b). Thus, the paradigm invoked ±20° (e.g., Ctr to L20°) as well as ±40° (e.g., L20° to R20°) changes in eye position. The majority of the auditory targets tested were restricted to the central ±10°, augmented by a number of locations outside of this region (Fig. 6c). The target distribution allowed us to focus on the temporal nature of the shift by sampling a region near the physiological center of auditory space where sound localization is most precise (less scattered) and least influenced by visual capture during eccentric fixation.

Predictably, no systematic bias in sound localization error was present during the initial period of Ctr fixation (Fig. 6a,b, first epoch). In subsequent epochs, localization shifted increasingly with time in the direction of eye position and typically reached a steady state within the allotted fixation time (epochs 2–4). Finally, during the last (fifth) epoch of Ctr fixation, average localization error returned to zero, usually more quickly than the shift had developed after the transitions to eccentric fixation.

Although the eye position-dependent shift in sound localization was robust and consistent, its dynamics (rate) and magnitude varied greatly from subject to subject. For example, subjects with faster adaptation rates showed an appreciable shift even in the first trial immediately after the change in fixation (Fig. 6a). The earliest we could sample sound localization after a change in eye position depended on the subject's response time (typically 2–6 s), making it particularly challenging to capture the earliest portion of the auditory shift in subjects with rapid dynamics. Regardless, in rapidly adapting subjects, the shift increased with continued fixation and then slowed to approach a steady state. In contrast, subjects with slower dynamics showed a more gradual shift starting from near zero to reach a steady state (Fig. 6b).

Interestingly, the shift in central (azimuth less than ±10°) auditory space transferred to more peripheral (azimuth more than ±10°) regions without previous simultaneous exposure to eccentric eye position and the peripheral targets (Fig. 7a). The peripheral targets were introduced in the second half of each fixation epoch when the spatial adaptation had neared or reached completion for the central targets (see Materials and Methods). In general, the localization accuracy for these peripheral targets (Fig. 6, open symbols) seamlessly mingled with that for targets limited to the central ±10° (Fig. 6, filled symbols).

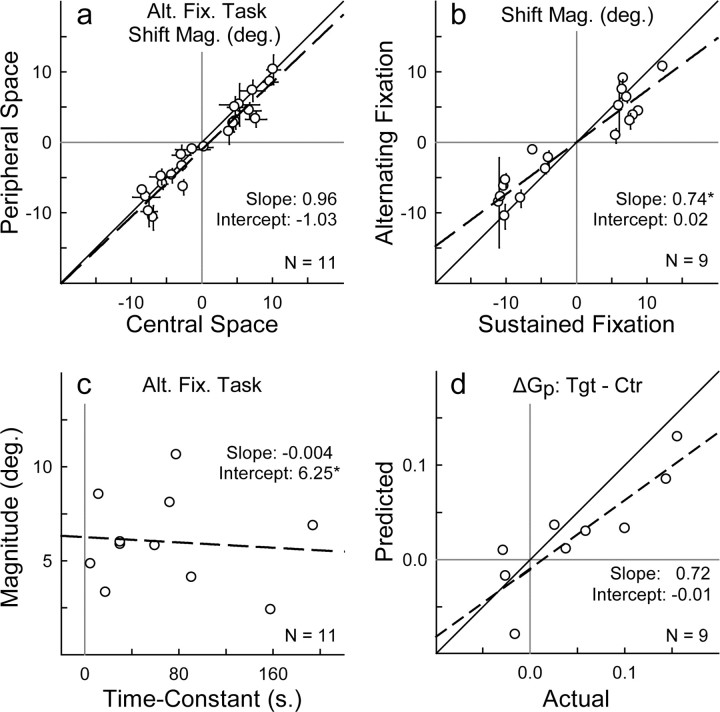

Figure 7.

a, Shift magnitude (localization error) for peripheral targets (from Fig. 6, open symbols) plotted against values for central targets (from Fig. 6, filled symbols) during alternating fixation. Each data point is mean localization error for a single subject during trials 13–26, when central and peripheral targets (seven each) were interleaved. In a, b, and d, asterisks indicate that the slope or intercept (based on a straight-line equation) is statistically different (p < 0.05) from the ideal of 1.0 (diagonal solid line) or 0.0, respectively. A shift in central auditory space is accompanied by a simultaneous shift in peripheral auditory space, suggesting that changes in eye position alone invoke the effect across the entire frontal field without previous exposure to specific target locations. b, Shift magnitude during the alternating (see Fig. 6) as opposed to sustained (see Fig. 5d) fixation task for each subject. The magnitude of the shift was proportionally smaller during alternating fixation, suggesting that the long-term component of the shift is more accurately captured during sustained fixation. c, The steady-state magnitude (y∞) plotted against the time constant (τ) of the shift during alternating fixation for each subject. The magnitude of the shift averages to 6.25° (intercept) independent of the time constant (slope, −0.004; p = 0.79 relative to 0.0). d, Predicted change in peripheral spatial gain (target − Ctr fixation) plotted against the actual values. Predictions are based on a first-order exponential model (see Materials and Methods) and data from the alternating and target fixation tasks and account for 72% (slope, 0.72) of the change in peripheral gain. Alt. Fix., Alternating fixation; Shift Mag., shift magnitude.

Regardless of its pace or steady-state magnitude, the shift in sound localization appeared to develop exponentially over time. We modeled this relationship as a first-order exponential (Eq. 1 in Materials and Methods) with time constant τ (inversely proportional to rate), initial offset y0 (starting point), amplitude a (starting point to asymptote), and steady-state magnitude (y∞ = y0 + a, asymptote). As illustrated by the two subjects in Figure 6, we found a wide range of time constants (τ) in our population (9–151 s), averaging 59 ± 49 s. We also noted several features of the time course of the shift based on the exponential model and exemplified in Figure 6b. These data appear to show a larger time constant when the eyes moved away from center (epoch 2) than when they returned to center (epoch 5); a smaller time constant in one direction of fixation (epoch 3) than the other (epoch 4); and a slightly larger time constant for 40° (epochs 3 and 4) than for 20° (epoch 2) changes in eye position. When tested across the population, only the first observation was statistically significant, although marginally (p = 0.05, one-tailed Wilcoxon signed-rank test), and the remaining two were not statistically significant (p > 0.05) (Fig. 6, compare a, b). Recall also that fixation alternated multiple times during a session. Thus, epoch 4 constituted a repeat of the same eye position as epoch 2, interrupted by opposite fixation during epoch 3. The time constant was comparable in the two epochs with the same eye position, suggesting that the time course of adaptation is independent of the previous locus of fixation.
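Equation 1 is not reproduced in this section. The sketch below therefore assumes the standard form implied by the parameter definitions, y(t) = y0 + a·(1 − e^(−t/τ)), so that y(0) = y0 and y∞ = y0 + a. The fitting procedure is a swapped-in technique, not necessarily the one used here. For a fixed τ the model is linear in y0 and a, so both are solved in closed form, and τ is chosen from a grid. Function names and the synthetic data are illustrative.

```python
import math


def exp_model(t, y0, a, tau):
    """y(t) = y0 + a * (1 - exp(-t / tau)); the asymptote is y0 + a."""
    return y0 + a * (1.0 - math.exp(-t / tau))


def fit_exponential(times, errors, taus):
    """Least-squares fit of (y0, a, tau) by grid search over tau.

    For each candidate tau, y0 and a come from the 2x2 normal equations;
    the tau with the smallest sum of squared residuals is kept.
    """
    n = len(times)
    best = None
    for tau in taus:
        g = [1.0 - math.exp(-t / tau) for t in times]  # regressor multiplying a
        sg, sy = sum(g), sum(errors)
        sgg = sum(gi * gi for gi in g)
        sgy = sum(gi * yi for gi, yi in zip(g, errors))
        det = n * sgg - sg * sg
        if abs(det) < 1e-12:
            continue  # regressor nearly constant: y0 and a not separable
        a = (n * sgy - sg * sy) / det
        y0 = (sy - a * sg) / n
        sse = sum((yi - exp_model(t, y0, a, tau)) ** 2
                  for t, yi in zip(times, errors))
        if best is None or sse < best[0]:
            best = (sse, y0, a, tau)
    if best is None:
        raise ValueError("no candidate tau could be fitted")
    _, y0, a, tau = best
    return {"y0": y0, "a": a, "tau": tau, "y_inf": y0 + a}


# Synthetic, noise-free epoch sampled every 20 s (illustrative values only).
times = list(range(0, 600, 20))
errors = [exp_model(t, 0.5, -6.9, 60.0) for t in times]
fit = fit_exponential(times, errors, [float(k) for k in range(5, 301)])
```

The grid step limits the resolution of τ (1 s here). A nonlinear optimizer could refine the estimate starting from the best grid value.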

The amplitude of the shift (a), estimated by the exponential model, was comparable for both directions of eye position. Furthermore, the shift was proportionally similar for 20° (∼33%; 6.51 ± 2.09°) and 40° (∼28%; 11.02 ± 3.64°) changes in eye position (L20° and R20° fixation epochs pooled; p = 0.02). The steady-state magnitude (y∞) for the shift reached −6.41° (±3.22) and 5.72° (±2.67) during L20° and R20° fixation, respectively, and was proportionally smaller than in the sustained fixation experiments (Fig. 7b). In addition, the steady-state magnitude of the shift showed no systematic relationship with the time constant across the population (Fig. 7c).

To facilitate comparison of intersubject variability across the three exponential parameters, we calculated their coefficients of variation (CV = SD/mean; L20° and R20° pooled). Both the amplitude (CV = 0.31) and steady-state magnitude (CV = 0.39) of the shift displayed less than half the variability of the time constant (CV = 0.83) across the population.
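A minimal sketch of the CV calculation follows. It makes two assumptions not stated in this section: the SD is the sample SD (n − 1 denominator), and L20° and R20° estimates are pooled by magnitude, so that opposite signs do not cancel in the mean. The helper names and the usage values are hypothetical.

```python
from statistics import mean, stdev


def coefficient_of_variation(values):
    """CV = SD / mean, using the sample SD (n - 1 denominator)."""
    m = mean(values)
    if m == 0:
        raise ValueError("mean is zero; CV undefined")
    return stdev(values) / m


def pooled_cv(left_values, right_values):
    """Pool L20 and R20 estimates by magnitude, then compute the CV.

    Leftward shifts are negative, so absolute values are used (assumption).
    """
    return coefficient_of_variation([abs(v) for v in left_values + right_values])


# Illustrative per-subject time constants (s), not study data.
cv_tau = pooled_cv([30.0, 90.0], [45.0, 150.0])
```

Because the CV is dimensionless, it allows the spread of τ (in seconds) to be compared directly with the spread of a and y∞ (in degrees).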

Recall that in both the alternating and sustained fixation tasks, subjects were required to fixate the same visual reference spot for long periods (>7 min). Subjects did prove vigilant and rarely broke fixation (see Materials and Methods). For cases in which fixation was broken, we determined whether these inadvertent interruptions in fixation (<1 s) altered the temporal profile of the auditory spatial shift. Using EOG records from 10 subjects, we identified 40 such rare trials during eccentric fixation (L20° or R20°). The shifts in sound localization (errors) in these trials were within 1 SD of similar trials within 5° of the same target location. Most importantly, the average shifts for the three trials before and after the break in fixation were also within 1 SD of each other. Thus, the overall shift remained effectively unaltered by brief eye movements intruding on steady fixation.
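The two checks described above can be sketched as follows. The exact criteria are assumptions. The first check asks whether a single break-trial error lies within 1 SD of the mean of matched trials. The second compares the mean error of the three trials before and after the break, and here the two means are required to differ by no more than 1 SD of the six pooled trials, which is a hypothetical reading of "within 1 SD of each other". Function names and data are illustrative.

```python
from statistics import mean, stdev


def within_one_sd(value, reference):
    """True if value lies within 1 SD of the mean of the reference trials."""
    return abs(value - mean(reference)) <= stdev(reference)


def break_check(errors, break_index, window=3):
    """Compare the mean error of `window` trials before and after a break.

    Hypothetical criterion: the two means differ by at most 1 SD of the
    pooled surrounding trials.
    """
    if break_index < window or break_index + window >= len(errors):
        raise ValueError("not enough trials around the break")
    before = errors[break_index - window:break_index]
    after = errors[break_index + 1:break_index + 1 + window]
    return abs(mean(before) - mean(after)) <= stdev(before + after)


# Illustrative localization errors (deg); trial index 3 contains the break.
errors = [6.1, 6.5, 6.3, 6.4, 6.2, 6.6, 6.4]
unaltered = break_check(errors, 3)
```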

Predicting changes in auditory spatial gain

Our experiments thus far have shown that eye position has a robust, spatially broad, and time-dependent effect on sound localization. Recall that peripheral spatial gain was greater during the target fixation task, when eye movements helped guide sound localization, than when the eyes were fixed centrally. We postulated that the eye movements used to guide localization during target fixation constitute transient, continuously changing fixation, simultaneously invoking a dynamic shift in auditory space in the same direction during the response time. Because the shift would be proportional to the magnitude of eye movements, it would manifest as a small increase in spatial gain during target fixation.

The data from the two representative subjects of Figure 6 and response times of ∼4 s support this notion. For example, the subject who shifted more quickly (smaller time constant) to reach a larger magnitude (Fig. 6a) also had a larger increase in peripheral gain when the eyes guided localization (target fixation) (Fig. 3a) than when fixed at center (Ctr fixation) (Fig. 3c). Motivated by this relationship, we predicted the difference in peripheral spatial gain between the two tasks by using our exponential model (Eq. 1 in Materials and Methods) and the shift parameters estimated from the alternating fixation experiment (y0, a, and τ), along with the response times from the target fixation task.
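Equation 1 and the exact prediction procedure appear in Materials and Methods. The following is therefore only a hypothetical sketch of the reasoning. It assumes that the shift accrued after an eye movement of eye_step_deg follows y(t) = y0 + a·(1 − e^(−t/τ)) and scales linearly with eye eccentricity. Under target fixation the eyes land on the target, so the added error is proportional to target azimuth. The gain therefore increases by the shift per degree of eye position reached at the response time. The function name and parameter values are illustrative.

```python
import math


def predicted_gain_change(y0, a, tau, response_time, eye_step_deg):
    """Hypothetical increase in spatial gain during target fixation.

    Assumes the shift after an eye movement of eye_step_deg follows
    y0 + a * (1 - exp(-t / tau)) and scales linearly with eccentricity, so
    the gain change is the shift per degree of eye position at response time.
    """
    shift = y0 + a * (1.0 - math.exp(-response_time / tau))
    return shift / eye_step_deg


# Illustrative parameters (not subject data): fast vs slow adapter, 4 s responses.
fast = predicted_gain_change(0.0, 6.0, 15.0, 4.0, 20.0)
slow = predicted_gain_change(0.0, 6.0, 120.0, 4.0, 20.0)
```

With equal amplitudes, the smaller time constant yields the larger gain increase. This matches the qualitative pattern described for the two subjects of Figure 6.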

The predicted values correlated well with their actual counterparts (Fig. 7d). The exponential model accounted for 72% of the actual change in peripheral spatial gain between the target and Ctr fixation tasks across the population. Furthermore, the model correctly predicted the direction of the change in eight of the nine subjects who participated in all of the experiments.
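Both summary figures, the slope of predicted versus actual gain change and the number of subjects whose direction of change was predicted correctly, can be computed as sketched below. The sketch assumes an ordinary least-squares straight line with predicted values on the ordinate and actual values on the abscissa, as in Figure 7d. The function names and toy data are hypothetical.

```python
from statistics import mean


def line_fit(x, y):
    """Ordinary least-squares straight line y = slope * x + intercept."""
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    slope = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y)) / sxx
    return slope, my - slope * mx


def evaluate_predictions(actual, predicted):
    """Slope/intercept of predicted vs actual, plus sign agreement count."""
    slope, intercept = line_fit(actual, predicted)
    same_sign = sum(1 for a, p in zip(actual, predicted) if a * p > 0)
    return {"slope": slope, "intercept": intercept, "direction_matches": same_sign}


# Toy data: predictions that recover 72% of each actual gain change.
actual = [0.02, 0.05, 0.08, -0.01]
predicted = [0.72 * a for a in actual]
summary = evaluate_predictions(actual, predicted)
```

A slope below 1.0 with a near-zero intercept indicates that the model captures a consistent fraction of the measured change rather than a fixed offset.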

Discussion

We used novel paradigms to quantify the influence of eye movements on sound localization and found that the perception of auditory space dynamically and systematically shifted in the direction of the new eye position. The shift proved robust and spatially broad, transferred from central to peripheral auditory space, and its magnitude approached ∼40% of eye angle after ∼3 min (mean time constant of ∼1 min based on a simple exponential model). The eye-position effect was superimposed on visual capture (ventriloquism), such that the spatial shift was slightly smaller near the ocular fixation spot. Furthermore, we showed that the larger auditory spatial gain observed when eye movements directly guide sound localization is likely caused by the same adaptation of perceived auditory space, albeit for far shorter periods of steady eye position. The phenomenon poses an interesting conundrum arising from the “observer effect”: the eye movements that are used to orient toward auditory targets also modify their perceived location and, ironically, lead to sound localization errors. We speculate that other forms of spatial orienting (e.g., reaching, perceived “straight-ahead”) follow similar characteristics, potentially with different magnitudes and dynamics of adaptation.

The time dependence of the auditory spatial shift reported in this study is a novel finding. Our results help explain the wide range of shift magnitudes (3–37% of eye angle) reported in the literature, where fixation times were brief but varied greatly across studies (1–20 s) (Weerts and Thurlow, 1971; Bohlander, 1984; Lewald and Ehrenstein, 1996, 1998; Lewald, 1997, 1998; Yao and Peck, 1997; Getzmann, 2002; Metzger et al., 2004; Lewald and Getzmann, 2006). We found that the shift increases exponentially over time at a highly variable rate among subjects but eventually reaches a steady-state magnitude with considerably less intersubject variability. Because exponential behaviors display the largest change close to their onset, the first few seconds after eye movements are most crucial for governing the magnitude of the auditory shift. Thus, small differences in fixation time can lead to relatively large differences in spatial shift. With fixation times well under 20 s, past studies have collected data early in this eye movement-dependent adaptation of auditory space when the shift is small and variable. The fact that we deliberately included long fixation periods explains why our observed shifts greatly exceed those typically reported in the literature. Interestingly, results from several previous studies support the observation that fixation time affects the magnitude of the shift, although this relationship was never quantified (Weerts and Thurlow, 1971; Lewald and Ehrenstein, 1996; Getzmann, 2002).
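The sensitivity to fixation time follows directly from the exponential form. The fraction of the total change completed after t seconds is 1 − e^(−t/τ), and the rate of change is greatest at onset. For the mean τ of 59 s, 20 s of fixation gives only about 29% of the steady-state shift, whereas 180 s (about 3τ) gives about 95%. The sketch below tabulates this fraction for the shortest, mean, and longest time constants reported in Results. It assumes no immediate offset (y0 = 0).

```python
import math


def fraction_of_steady_state(t, tau):
    """Fraction of the total exponential change completed t seconds after
    onset (assumes no immediate offset, i.e. y0 = 0)."""
    return 1.0 - math.exp(-t / tau)


# Time constants: shortest, mean and longest reported in Results (s).
for tau in (9.0, 59.0, 151.0):
    row = ", ".join(f"{t:>3d} s: {fraction_of_steady_state(t, tau):.2f}"
                    for t in (1, 5, 20, 180))
    print(f"tau = {tau:5.1f} s -> {row}")
```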

Transient eccentric eye position (<5 s) has also been shown to modulate the spatial responses of auditory neurons in the inferior colliculus (Groh et al., 2001; Zwiers et al., 2004), superior colliculus (Jay and Sparks, 1987; Hartline et al., 1995; Peck et al., 1995; Zella et al., 2001; Populin et al., 2004), auditory cortex (Werner-Reiss et al., 2003; Fu et al., 2004), and the intraparietal sulcus (Stricanne et al., 1996; Mullette-Gillman et al., 2005). These short-term effects may reflect a step in the eye-to-head-centered transformation of sound source coordinates, their associated errors, or an early component of the auditory spatial adaptation reported here. To our knowledge, the effects of sustained eccentric fixation on neural responses remain unknown. Characterizing a long-term adaptation neurophysiologically during voluntary eccentric fixation would be challenging in awake behaving animals.

The dynamics of the eye position-dependent auditory adaptation during alternating fixation account for the majority (∼72%), but not all, of the change in spatial gain observed when the eyes are unconstrained (target fixation task) and directly guide sound localization. Although predictions from Equation 1 (see Materials and Methods) correlate well with their measured counterparts, the spatial shift likely contains dynamics that are too rapid to be resolved by our task. A small auditory shift that immediately follows an eye movement would reflect a calibration error in the eye position signal required to maintain spatial congruence between auditory and visual space. This error has traditionally been the interpretation of small and variable effects reported in previous studies (Yao and Peck, 1997; Metzger et al., 2004; Lewald and Getzmann, 2006). In the current experiments, such errors are buried within the first trial of each epoch in our alternating fixation task and in no way explain the large time-dependent phenomenon observed subsequently in each epoch, or in the sustained fixation experiment. Note that our subjects showed a wide range of time constants and magnitudes for the auditory shift, as well as changes in spatial gain during target fixation. Thus, results should be interpreted cautiously when a subject exhibits no measurable difference in gain between target and Ctr fixation, because the influence of eye movements on sound localization may be masked by a very long time constant (slow adaptation rate), particularly in combination with a small magnitude of spatial shift. These factors may explain an idiosyncrasy in a previous report from our laboratory, in which spatial gain was comparable during target and Ctr fixation (Zwiers et al., 2003).

To our knowledge, besides our previous study (Zwiers et al., 2003), only one other study compared target and Ctr fixation for localizing ongoing auditory stimuli combined with laser pointing (Lewald and Ehrenstein, 1998). However, the latter study used a limited target range (±22°) and a narrow-band noise stimulus (1–3 kHz), thus preventing a direct comparison with our results. Despite a large spatial overshoot during both target and Ctr fixation, which may reflect the limited stimulus bandwidth (Middlebrooks, 1992; Lewald and Ehrenstein, 1998), subtle differences in performance between the two tasks corroborate our data. Although not quantified by the authors, spatial gain appears slightly smaller for Ctr versus target fixation [Lewald and Ehrenstein (1998), their Fig. 7].

Implications of auditory spatial adaptation to eye movements

The dynamic shift in sound localization reported here reflects a true physiological adaptation of auditory space to changes in eye position. Perhaps the brain interprets a prolonged new eye position as a new oculomotor straight-ahead and in turn adjusts auditory space (head centered) to match this new eye position along with visual space (eye centered). In line with this notion, our subjects typically reported that their sense of straight-ahead partly shifted toward sustained eye position during and after the experiment, as also observed previously (Weerts and Thurlow, 1971; Bohlander, 1984; Lewald and Ehrenstein, 2000). Interestingly, the adaptation transferred from central to peripheral auditory space, suggesting that changes in eye position alone are sufficient to invoke the effect without simultaneous exposure to specific regions of auditory space. Arguably, the shift may reflect an adaptation of the pointing method instead of auditory space. However, proprioceptive or visual feedback is an unlikely locus for the adaptation. The manual joystick used to position the laser spot has no hand or finger grip, thus providing no tactile or proprioceptive cues about its orientation. This feature ensured that only vision provided information about the position of the laser spot. Moreover, the adaptation also occurs when using the same joystick pointer but without the visual spot (Razavi et al., 2005b). Below we address several relevant considerations that might underlie the adaptation of auditory space related to eye position.

The time dependence of the auditory spatial adaptation suggests an increasing mismatch between auditory and visual space after eye movements. Perhaps the intersensory slip is attributable to a decaying eye position signal needed to maintain alignment between the two maps (Groh and Sparks, 1992). The oculomotor integrator (OMI) (Leigh and Zee, 1999) provides an obvious candidate, because it maintains eccentric fixation after changes in eye position, but its output decays with a reported time constant of ∼1 min (Becker and Klein, 1973; Hess et al., 1985; Eizenman et al., 1990). This time constant is comparable to that of the shift in sound localization reported here. However, the OMI as a possible substrate for the adaptation is unlikely for two reasons. First, the auditory shift was effectively unaltered by interruptions in fixation. These interruptions were quite brief (<1 s) compared with the overall duration of eccentric fixation (>7 min) and the time constant of the auditory spatial shift (mean, ∼1 min). The lack of saccadic resetting of the entire shift suggests the presence of an alternative adaptive process. Second, control studies revealed that even rapidly adapting subjects were able to maintain eccentric eye position in the absence of a fixation spot (which depends on OMI function) far longer than the time required for the auditory spatial adaptation (Cui et al., 2006).

The adaptive characteristic of the auditory spatial shift and its apparent link to a shift in the egocentric direction suggest the presence of a physiological “set-point” controller that defines and adjusts the straight-ahead, or “zero” of perceived space with respect to the head (Fig. 8). The notion of such a set point adapting to changes in eye position may be exemplified in the clinical phenomenon of rebound nystagmus (Hood et al., 1973; Hood, 1981; Leigh and Zee, 1999). This phenomenon consists of nystagmus induced by an eccentric saccade, beating in the direction of eye position, which declines gradually and stabilizes over time. However, the entire process reverses when the eyes return to center moments later, as if any new location of the eyes becomes the new straight-ahead with prolonged fixation. Interestingly, a more subtle form of rebound nystagmus manifests in normal subjects after prolonged eccentric fixation (Shallo-Hoffmann et al., 1990). We suspect that the decline and reversal (but not the drift or slow phase) in rebound nystagmus and the shift in sound localization with eye movements reflect similar adaptation mechanisms. In light of our results, rebound nystagmus can then be described as a condition of compromised OMI, poor smooth pursuit (both underlying the ocular drift during eccentric fixation), and a rapidly adapting physiological set point (gradual decline in nystagmus while attaining stable ocular gaze).

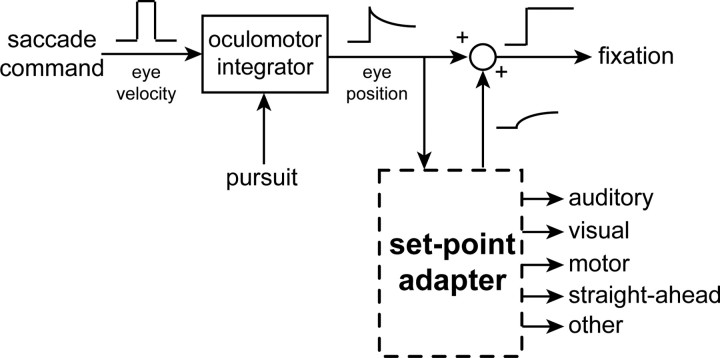

Figure 8.

A conceptual model for the adaptation of perceived auditory space to changes in eye position. Our findings are consistent with a physiological set-point adapter that defines the straight-ahead based on the position of the eyes in the orbit over time. The saccade and pursuit systems synergistically form a motor command to maintain eccentric eye position. The OMI converts a velocity signal into an imperfect control signal for eye position in the head. This position signal serves as an input to the set-point adapter (with a long time constant), which over time shifts auditory space, and possibly other sensory or motor maps at different rates, to approach the new perceived straight-ahead defined by eye position. In the clinical condition of rebound nystagmus, the output of the set-point adapter would reduce ocular drift and stabilize gaze over time.
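Figure 8 is conceptual. The sketch below is one hypothetical way to simulate its set-point adapter, not the authors' implementation. It treats the adapter as a first-order low-pass filter whose state relaxes toward a fraction of eye-in-head position, and it reads that state out as the shift of perceived auditory space. The gain of 0.4 (from the ∼40% sustained-fixation shift), τ = 59 s (the mean time constant), the 1-s Euler step, and the Ctr, L20°, R20°, L20°, Ctr epoch order are all assumptions. A single time constant cannot reproduce the faster return to center or an immediate offset (y0).

```python
def simulate_set_point(eye_positions, dt, tau, gain):
    """Euler simulation of a hypothetical first-order set-point adapter.

    eye_positions: eye-in-head angle (deg) at each time step, left negative.
    The state relaxes toward gain * eye position with time constant tau and
    is read out as the shift of perceived auditory space (deg).
    Requires dt < tau for a stable Euler update.
    """
    if not 0 < dt < tau:
        raise ValueError("need 0 < dt < tau")
    shift = 0.0
    trace = []
    for eye in eye_positions:
        shift += (dt / tau) * (gain * eye - shift)
        trace.append(shift)
    return trace


# Assumed schedule: 60 s Ctr, then 600 s each of L20, R20, L20 and Ctr.
dt, tau, gain = 1.0, 59.0, 0.4
schedule = [0.0] * 60 + [-20.0] * 600 + [20.0] * 600 + [-20.0] * 600 + [0.0] * 600
trace = simulate_set_point(schedule, dt, tau, gain)
```

A brief fixation break barely moves the state in this model. One second at center changes the shift by only dt/τ of the remaining gap (about 0.14° here), which is consistent with the observation that interruptions of <1 s left the shift effectively unaltered.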

Adaptation of the physiological set point to eye position could also guide the development of a common straight-ahead (or perceptual zero) for audition, vision, proprioception, body, head, and other sensory or motor reference frames. Such adaptation would be beneficial especially during early childhood when normal ocular alignment develops after a critical period of binocular visual experience (Simons, 1993). Adaptation of the set point may also resolve conflicts between a head-referenced zero and acquired changes in ocular alignment throughout life caused by disease (e.g., oculomotor palsies) and aging. Although directing the eyes toward eccentric objects leads to adaptation of auditory space, and in turn large localization errors, it is unlikely to compromise navigation and other natural behaviors. Normal gaze orienting consists of eye, head, and sometimes trunk movements, with the eyes resting near the center of the orbits after completion (Tomlinson and Bahra, 1986; Goossens and van Opstal, 1997; Freedman and Sparks, 1997; Populin et al., 2002; Razavi et al., 2006). Sustained eccentric eye position is rare in natural behavior and, if present, likely represents pathology. Under such abnormal conditions, the head may be turned to counteract the deflected eye position, thereby aligning gaze with the body's straight-ahead in space. A realignment of auditory space to match the body-centered straight-ahead would then be useful and corrective.

Footnotes

This work was supported by National Institutes of Health (NIH)–National Institute on Aging Grant RO1-AG16319, NIH–National Institute on Deafness and Other Communication Disorders Grant P30-DC05409 (Center for Navigation and Communication Sciences), and NIH–National Eye Institute (NEI) Grant P30-EY01319 (Center for Visual Science). B.R. was supported by training grants from the NIH–NEI (T32-EY07125) and the NIH–National Institute of General Medical Sciences (T32-GM07356). We thank Martin Gira, Anand Joshi, John Housel, and Mike Wieckowski for technical assistance; Ashley R. Glade for implementing pilot versions of the alternating fixation task; and Scott H. Seidman and Qi N. Cui for valuable insights on data analysis.

References

- Alais D, Burr D. The ventriloquist effect results from near-optimal bimodal integration. Curr Biol. 2004;14:257–262. doi: 10.1016/j.cub.2004.01.029.

- Battaglia PW, Jacobs RA, Aslin RN. Bayesian integration of visual and auditory signals for spatial localization. J Opt Soc Am A. 2003;20:1391–1397. doi: 10.1364/josaa.20.001391.

- Becker W, Klein HM. Accuracy of saccadic eye movements and maintenance of eccentric eye positions in the dark. Vision Res. 1973;13:1021–1034. doi: 10.1016/0042-6989(73)90141-7.

- Bohlander RW. Eye position and visual attention influence perceived auditory direction. Percept Mot Skills. 1984;59:483–510. doi: 10.2466/pms.1984.59.2.483.

- Brungart DS, Rabinowitz WM, Durlach NI. Evaluation of response methods for the localization of nearby objects. Percept Psychophys. 2000;62:48–65. doi: 10.3758/bf03212060.

- Choisel S, Zimmer K. A pointing technique with visual feedback for sound source localization experiments. Paper presented at the 115th Audio Engineering Society Convention; October 2003; New York, NY.

- Cui QN, Razavi B, O'Neill WE, Paige GD. Influence of eye position on human sound localization: characterization and age-related changes. Soc Neurosci Abstr. 2006;36:544–1.

- Dobreva MS, O'Neill WE, Paige GD. Effects of memory and aging on auditory and visual spatial localization. Soc Neurosci Abstr. 2005;35:283–2.

- Eizenman M, Cheng P, Sharpe JA, Frecker RC. End-point nystagmus and ocular drift: an experimental and theoretical study. Vision Res. 1990;30:863–877. doi: 10.1016/0042-6989(90)90055-p.

- Freedman EG, Sparks DL. Eye-head coordination during head-unrestrained gaze shifts in rhesus monkeys. J Neurophysiol. 1997;77:2328–2348. doi: 10.1152/jn.1997.77.5.2328.

- Fu KM, Shah AS, O'Connell MN, McGinnis T, Eckholdt H, Lakatos P, Smiley J, Schroeder CE. Timing and laminar profile of eye-position effects on auditory responses in primate auditory cortex. J Neurophysiol. 2004;92:3522–3531. doi: 10.1152/jn.01228.2003.

- Getzmann S. The effect of eye position and background noise on vertical sound localization. Hear Res. 2002;169:130–139. doi: 10.1016/s0378-5955(02)00387-8.

- Goossens HH, van Opstal AJ. Human eye-head coordination in two dimensions under different sensorimotor conditions. Exp Brain Res. 1997;114:542–560. doi: 10.1007/pl00005663.

- Groh JM, Sparks DL. Two models for transforming auditory signals from head-centered to eye-centered coordinates. Biol Cybern. 1992;67:291–302. doi: 10.1007/BF02414885.

- Groh JM, Trause AS, Underhill AM, Clark KR, Inati S. Eye position influences auditory responses in primate inferior colliculus. Neuron. 2001;29:509–518. doi: 10.1016/s0896-6273(01)00222-7.

- Hartline PH, Vimal RL, King AJ, Kurylo DD, Northmore DP. Effects of eye position on auditory localization and neural representation of space in superior colliculus of cats. Exp Brain Res. 1995;104:402–408. doi: 10.1007/BF00231975.

- Hess K, Reisine H, Dürsteler MR. Normal eye drift and saccadic drift correction in darkness. Neuro-Ophthalmology. 1985;5:247–252.

- Hood JD. Further observations on the phenomenon of rebound nystagmus. Ann NY Acad Sci. 1981;374:532–539. doi: 10.1111/j.1749-6632.1981.tb30898.x.

- Hood JD, Kayan A, Leech J. Rebound nystagmus. Brain. 1973;96:507–526. doi: 10.1093/brain/96.3.507.

- Jay MF, Sparks DL. Sensorimotor integration in the primate superior colliculus. II. Coordinates of auditory signals. J Neurophysiol. 1987;57:35–55. doi: 10.1152/jn.1987.57.1.35.

- Knudsen EI, Brainard MS. Creating a unified representation of visual and auditory space in the brain. Annu Rev Neurosci. 1995;18:19–43. doi: 10.1146/annurev.ne.18.030195.000315.

- Leigh RJ, Zee DS. The neurology of eye movements. Oxford: Oxford UP; 1999.

- Lewald J. Eye-position effects in directional hearing. Behav Brain Res. 1997;87:35–48. doi: 10.1016/s0166-4328(96)02254-1.

- Lewald J. The effect of gaze eccentricity on perceived sound direction and its relation to visual localization. Hear Res. 1998;115:206–216. doi: 10.1016/s0378-5955(97)00190-1.

- Lewald J, Ehrenstein WH. The effect of eye position on auditory lateralization. Exp Brain Res. 1996;108:473–485. doi: 10.1007/BF00227270.

- Lewald J, Ehrenstein WH. Auditory-visual spatial integration: a new psychophysical approach using laser pointing to acoustic targets. J Acoust Soc Am. 1998;104:1586–1597. doi: 10.1121/1.424371.

- Lewald J, Ehrenstein WH. Visual and proprioceptive shifts in perceived egocentric direction induced by eye-position. Vision Res. 2000;40:539–547. doi: 10.1016/s0042-6989(99)00197-2.

- Lewald J, Ehrenstein WH. Spatial coordinates of human auditory working memory. Brain Res Cogn Brain Res. 2001;12:153–159. doi: 10.1016/s0926-6410(01)00042-8.

- Lewald J, Getzmann S. Horizontal and vertical effects of eye-position on sound localization. Hear Res. 2006;213:99–106. doi: 10.1016/j.heares.2006.01.001.

- Metzger RR, Mullette-Gillman OA, Underhill AM, Cohen YE, Groh JM. Auditory saccades from different eye positions in the monkey: implications for coordinate transformations. J Neurophysiol. 2004;92:2622–2627. doi: 10.1152/jn.00326.2004.

- Middlebrooks JC. Narrow-band sound localization related to external ear acoustics. J Acoust Soc Am. 1992;92:2607–2624. doi: 10.1121/1.404400.

- Middlebrooks JC, Green DM. Sound localization by human listeners. Annu Rev Psychol. 1991;42:135–159. doi: 10.1146/annurev.ps.42.020191.001031.

- Mullette-Gillman OA, Cohen YE, Groh JM. Eye-centered, head-centered, and complex coding of visual and auditory targets in the intraparietal sulcus. J Neurophysiol. 2005;94:2331–2352. doi: 10.1152/jn.00021.2005.

- Paige GD, O'Neill WE, Chen C. Quantification of human sound localization by visually guided pointing. Soc Neurosci Abstr. 1998;28:24–1401.

- Peck CK, Baro JA, Warder SM. Effects of eye position on saccadic eye movements and on the neuronal responses to auditory and visual stimuli in cat superior colliculus. Exp Brain Res. 1995;103:227–242. doi: 10.1007/BF00231709.

- Perrett S, Noble W. Available response choices affect localization of sound. Percept Psychophys. 1995;57:150–158. doi: 10.3758/bf03206501.

- Populin LC, Tollin DJ, Weinstein JM. Human gaze shifts to acoustic and visual targets. Ann NY Acad Sci. 2002;956:468–473. doi: 10.1111/j.1749-6632.2002.tb02857.x.

- Populin LC, Tollin DJ, Yin TCT. Effect of eye position on saccades and neuronal responses to acoustic stimuli in the superior colliculus of the behaving cat. J Neurophysiol. 2004;92:2151–2167. doi: 10.1152/jn.00453.2004.

- Razavi B, O'Neill WE, Paige GD. Both interaural and spectral cues impact sound localization in azimuth. Proc 2nd Int IEEE EMBS Conf Neural Eng. 2005a;2:587–590.

- Razavi B, O'Neill WE, Paige GD. Sound localization adapts to eccentric gaze across multiple sensorimotor reference frames. Soc Neurosci Abstr. 2005b;35:852–2.

- Razavi B, Rozanski MT, O'Neill WE, Paige GD. Sound localization using gaze pointing under different sensori-motor contexts. Assoc Res Otolaryngol Abstr. 2006;29:149.

- Recanzone GH. Rapidly induced auditory plasticity: the ventriloquism aftereffect. Proc Natl Acad Sci USA. 1998;95:869–875. doi: 10.1073/pnas.95.3.869.

- Seeber B. A new method for localization studies. Acta Acustica united with Acustica. 2002;88:446–450.

- Shallo-Hoffmann J, Schwarze H, Simonsz HJ, Muhlendyck H. A reexamination of end-point and rebound nystagmus in normals. Invest Ophthalmol Vis Sci. 1990;31:388–392.

- Simons K. Early visual development, normal and abnormal. Oxford: Oxford UP; 1993.

- Stricanne B, Andersen RA, Mazzoni P. Eye-centered, head-centered, and intermediate coding of remembered sound locations in area LIP. J Neurophysiol. 1996;76:2071–2076. doi: 10.1152/jn.1996.76.3.2071.

- Tomlinson RD, Bahra PS. Combined eye-head gaze shifts in the primate. I. Metrics. J Neurophysiol. 1986;56:1542–1557. doi: 10.1152/jn.1986.56.6.1542.

- Weerts TC, Thurlow WR. The effects of eye position and expectation on sound localization. Percept Psychophys. 1971;9:35–39.

- Werner-Reiss U, Kelly KA, Trause AS, Underhill AM, Groh JM. Eye position affects activity in primary auditory cortex of primates. Curr Biol. 2003;13:554–562. doi: 10.1016/s0960-9822(03)00168-4.

- Yao L, Peck CK. Saccadic eye movements to visual and auditory targets. Exp Brain Res. 1997;115:25–34. doi: 10.1007/pl00005682.

- Zella JC, Brugge JF, Schnupp JW. Passive eye displacement alters auditory spatial receptive fields of cat superior colliculus neurons. Nat Neurosci. 2001;4:1167–1169. doi: 10.1038/nn773. [DOI] [PubMed] [Google Scholar]

- Zwiers MP, van Opstal AJ, Paige GD. Plasticity in human sound localization induced by compressed spatial vision. Nat Neurosci. 2003;6:175–181. doi: 10.1038/nn999. [DOI] [PubMed] [Google Scholar]

- Zwiers MP, Versnel H, Van Opstal AJ. Involvement of monkey inferior colliculus in spatial hearing. J Neurosci. 2004;24:4145–4156. doi: 10.1523/JNEUROSCI.0199-04.2004. [DOI] [PMC free article] [PubMed] [Google Scholar]