Abstract

Although the involvement of the striatum in the refinement and control of motor movement has long been recognized, the recent description of discrete frontal corticobasal ganglia networks in a range of species has focused attention on the role of the dorsal striatum, in particular, in executive functions. Current evidence suggests that the dorsal striatum contributes directly to decision-making, especially to action selection and initiation, through the integration of sensorimotor, cognitive, and motivational/emotional information within specific corticostriatal circuits involving discrete regions of striatum. We review key evidence from recent studies in rodent, nonhuman primate, and human subjects.

Keywords: choice, utility, frontal cortex, executive, reward, striatum

To choose appropriately between distinct courses of action requires the ability to integrate an estimate of the causal relationship between an action and its consequences, or outcome, with the value, or utility, of the outcome. Any attempt to base decision-making solely on cognition fails to determine action selection fully, because any information, such as “action A leads to outcome O,” can be used both to perform A and to avoid performing A. It is interesting to note in this context that, although there is an extensive literature linking the cognitive control of executive functions specifically to the prefrontal cortex (Goldman-Rakic, 1995; Fuster, 2000), more recent studies suggest that these functions depend on reward-related circuitry linking prefrontal, premotor, and sensorimotor cortices with the striatum (Chang et al., 2002; Lauwereyns et al., 2002; Tanaka et al., 2006).

Importantly, evidence from a range of species suggests that this corticostriatal network controls functionally heterogeneous decision processes involving (1) actions that are more flexible or goal directed, sensitive to rewarding feedback, and mediated by discrete regions of association cortices, particularly medial, orbitomedial, premotor, and anterior cingulate cortices, together with their targets in caudate/dorsomedial striatum (Haruno and Kawato, 2006; Levy and Dubois, 2006); and (2) actions that are stimulus bound, relatively automatic or habitual, and mediated by sensorimotor cortices and dorsolateral striatum/putamen (Jog et al., 1999; Poldrack et al., 2001). These processes have been argued to depend on distinct learning rules (Dickinson, 1994) and, correspondingly, distinct forms of plasticity (Partridge et al., 2000; Smith et al., 2001). Furthermore, degeneration in these corticostriatal circuits has been linked to distinct forms of psychopathology, e.g., in Huntington's disease, obsessive-compulsive disorder, and Tourette's syndrome on the one hand (Robinson et al., 1995; Bloch et al., 2005; Hodges et al., 2006) and in Parkinson's disease and multiple system atrophy on the other (Antonini et al., 2001; Seppi et al., 2006).

Here, we review recent evidence implicating the dorsal striatum in decision-making and point to the considerable commonalities in the functionality of this region in rodent, nonhuman primate, and human subjects.

Instrumental conditioning in rats

Behavioral research over the last two decades has identified forms of learning in rodents homologous to goal-directed and habitual learning in humans. This suggestion is based on extensive evidence that choice between different actions, e.g., pressing a lever or pulling a chain when these actions earn different food rewards, is determined by the animals' encoding the association between a specific action and outcome and the current value of the outcome; choice is sensitive both to degradation of the action–outcome contingency and to outcome revaluation treatments (Dickinson and Balleine, 1994; Balleine and Dickinson, 1998). In contrast, when actions are overtrained, decision processes become more rigid or habitual; performance is no longer sensitive to degradation and devaluation treatments but rather is controlled by a process of sensorimotor association (Dickinson, 1994; Dayan and Balleine, 2002). As such, whereas action–outcome encoding appears to be mediated by a form of error-correction learning rule, the development of habits is not (Dickinson, 1994); indeed, traditionally this form of learning has been argued to be sensitive to contiguity rather than contingency (Dickinson et al., 1995).
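The behavioral dissociation described above can be made concrete with a toy model. The following is a minimal sketch rather than a model taken from the cited studies: it assumes that goal-directed choice multiplies an estimate of the action–outcome contingency by the current value of the outcome, whereas habitual choice reads out a cached response strength that carries no information about the outcome. The function names, the dictionary layout, and all numerical values are hypothetical and chosen only for illustration.

```python
def goal_directed_choice(contingency, outcome_value):
    """Choose the action maximizing contingency x current outcome value."""
    def value(action):
        outcome, delta_p = contingency[action]
        return delta_p * outcome_value[outcome]
    return max(contingency, key=value)


def habitual_choice(habit_strength):
    """Choose the action with the strongest cached response strength."""
    return max(habit_strength, key=habit_strength.get)


# Hypothetical values: each action earns a different food reward.
contingency = {"lever": ("pellets", 0.6), "chain": ("sucrose", 0.5)}
outcome_value = {"pellets": 1.0, "sucrose": 1.0}
habit_strength = {"lever": 0.9, "chain": 0.4}  # assume the lever press was overtrained

before = (goal_directed_choice(contingency, outcome_value),
          habitual_choice(habit_strength))

# Outcome devaluation: pellets lose their value.
devalued = dict(outcome_value, pellets=0.0)
after_devaluation = (goal_directed_choice(contingency, devalued),
                     habitual_choice(habit_strength))

# Contingency degradation: lever pressing no longer changes pellet probability.
degraded = dict(contingency, lever=("pellets", 0.0))
after_degradation = (goal_directed_choice(degraded, outcome_value),
                     habitual_choice(habit_strength))

print(before, after_devaluation, after_degradation)
```

In this sketch the goal-directed controller switches from the lever to the chain after either treatment, whereas the habitual controller keeps selecting the lever, which is the pattern of insensitivity used behaviorally to identify habits.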

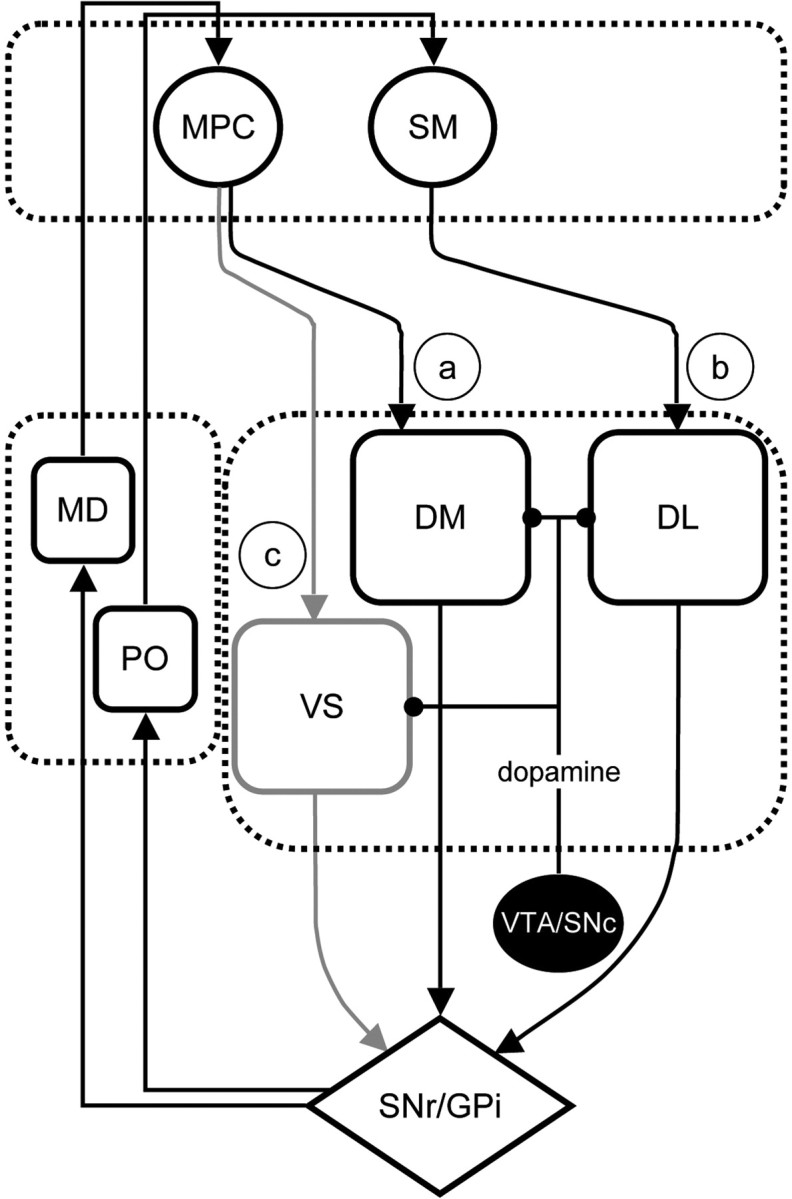

Recent experiments have started to reveal differences in the circuitry associated with these distinct forms of decision process in rodents (see Fig. 1). Cell body lesions of prefrontal cortex, particularly of the dorsal prelimbic (Balleine and Dickinson, 1998; Corbit and Balleine, 2003) but not the infralimbic (Killcross and Coutureau, 2003) region, abolish the acquisition of goal-directed actions, and performance is acquired by sensorimotor association alone. Prelimbic involvement in goal-directed learning is, however, limited to acquisition (Ostlund and Balleine, 2005; Hernandez et al., 2006), suggesting that this cortical region is involved in learning-related plasticity localized to an efferent structure. Prelimbic cortex projects densely to both dorsomedial striatum and accumbens core (Gabbott et al., 2005) and, although well-controlled studies assessing specifically goal-directed learning suggest that the latter region is not involved in this learning process (Corbit et al., 2001), recent evidence has implicated dorsomedial striatum (Balleine, 2005). Thus, pretraining and posttraining lesions (Yin et al., 2005c), muscimol-induced inactivation (Yin et al., 2005c), and the infusion of the NMDA antagonist AP5 (Yin et al., 2005b) within a posterior region of dorsomedial striatum all abolish goal-directed learning and render choice performance insensitive to both contingency degradation and outcome devaluation treatments, i.e., choice becomes rigid and habitual (Yin et al., 2005a).

Figure 1.

Corticostriatal circuits involved in decision-making. a, b, The learning processes controlling the acquisition of reward-related actions are mediated by converging projections from regions of anteromedial prefrontal cortex (MPC) to the rodent dorsomedial striatum or primate dorsoanterior striatum (DM), whereas the processes mediating the acquisition of stimulus-bound actions, or habits, are thought to be mediated by projections from sensorimotor cortex (SM) to the rodent dorsolateral–primate dorsoposterior striatum (DL) (b). These corticostriatal connections are parts of distinct feedback loops that project back to their cortical origins via substantia nigra pars reticulata (SNr)/globus pallidus internal segment (GPi) and the mediodorsal (MD)/posterior (PO) nuclei of the thalamus. c, Reward and predictors of reward are the major motivational influences on the performance of goal-directed and habitual actions that are thought to be mediated by corticostriatal circuits involving, particularly, ventral striatum (VS) and regions of the amygdala. Dopamine is an important modulator of plasticity in the dorsal striatum, whereas its tonic release has long been associated with the motivational processes mediated by the ventral circuit. VTA, Ventral tegmental area; SNc, substantia nigra pars compacta.

Interestingly, evidence suggests that a parallel corticostriatal circuit involving infralimbic and sensorimotor cortices together with the dorsolateral striatum in rodents may mediate the transition to habitual decision processes associated with sensorimotor learning (Jog et al., 1999; Killcross and Coutureau, 2003; Barnes et al., 2005). Whereas the infralimbic region has been argued to mediate aspects of the reinforcement signal controlling sensorimotor association (Balleine and Killcross, 2006), changes in motor cortex and dorsolateral striatum appear to be training related (Costa et al., 2004; Hernandez et al., 2006; Tang et al., 2007) and to be coupled to changes in plasticity as behavioral processes become less flexible (Costa et al., 2006; Tang et al., 2007). Correspondingly, whereas overtraining causes performance to become insensitive to outcome devaluation, lesions of dorsolateral striatum reverse this effect, rendering performance goal directed and once again sensitive to outcome devaluation treatments (Yin et al., 2004). Likewise, muscimol inactivation of dorsolateral striatum renders otherwise habitual performance sensitive to changes in the action–outcome contingency (Yin et al., 2005a). Current evidence suggests, therefore, that, whereas stimulus-mediated action selection is mediated by this lateral corticostriatal circuit, the more flexible, outcome-mediated action selection subserving goal-directed action is mediated by a more medial corticostriatal circuit, consistent with the general claim that distinct corticostriatal networks control different forms of decision process (Daw et al., 2005).

Striatal-based learning processes in nonhuman primates

Similarly, recent studies using nonhuman primates have suggested that the striatum may be an important brain area in decision-making. A clue to this hypothesis came from single-unit recording studies using trained animals. Neurons responding to task-related sensory events that become active before task-related motor behaviors and that are tonically active until expected rewards are delivered have been described in a circumscribed region of dorsal striatum (Hikosaka et al., 1989; Hollerman et al., 1998). Importantly, the activity of these neurons has been found to be modulated by the expected presence, amount, or probability of reward or by the magnitude of attention or memory required to execute the task (Kawagoe et al., 1998; Shidara et al., 1998; Cromwell and Schultz, 2003). The coexpression of sensorimotor, cognitive, and motivational/emotional signals in single neurons provides conditions favorable for learning. Several recent studies addressed this possibility more directly by examining neuronal activity while the animals were learning new sensorimotor associations, learning new motor sequences, or adapting to new reward outcomes. They found that, indeed, many striatal neurons decreased or increased their activity as learning progressed (Tremblay et al., 1998; Blazquez et al., 2002; Miyachi et al., 2002; Hadj-Bouziane and Boussaoud, 2003; Brasted and Wise, 2004). Notably, visuomotor (saccadic) activity appropriate for the correct saccadic response appeared earlier in the associative striatum (caudate nucleus) than in the dorsolateral prefrontal cortex during the course of learning (Pasupathy and Miller, 2005).

The results of single-unit recordings were further supported by experimentally manipulating learning-related neuronal activity. One standard method was to suppress the activity of neurons in a small functional area of the striatum by injecting GABA agonists such as muscimol. Consistent with dissociations observed in rodents, the suppression of the anterior, associative striatum disrupted learning of new sequential motor procedures, whereas the suppression of the putamen disrupted execution of well-learned motor sequences (Miyachi et al., 1997). A second method has sought to promote learning by electrically stimulating the striatum (Nakamura and Hikosaka, 2006b; Williams and Eskandar, 2006). Importantly, this was effective only when the stimulation was applied just after the animal executed a motor response correctly. The timing specificity raises the possibility that sensorimotor, cognitive, and motivational/emotional signals reach single striatal neurons concurrently around the time of motor execution to cause plastic changes in synaptic mechanisms.

These experiments on learning have been conducted mostly in the dorsal striatum in which motivational/emotional signals from cortex are thought to be sparse. Rather, it appears more likely that motivational signals are supplied by dopaminergic inputs originating mainly from the substantia nigra pars compacta. It has been hypothesized that dopaminergic neurons encode a mismatch between the expected and the actual reward value (Schultz, 1998). This so-called reward prediction error signal is ideal for guiding learning until the gain of reward is maximized (Houk et al., 1995).
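The logic of a reward prediction error can be illustrated with a simple delta-rule update. This is a minimal sketch under stated assumptions, not the specific model of Schultz (1998) or Houk et al. (1995): the expectation is a single scalar, the error is the delivered reward minus that expectation, and a fixed learning rate scales each update. The function names and parameter values are hypothetical.

```python
def update_value(expected, reward, learning_rate=0.1):
    """Return (new_expectation, prediction_error) after one trial."""
    prediction_error = reward - expected  # actual minus expected reward
    return expected + learning_rate * prediction_error, prediction_error


def simulate(rewards, learning_rate=0.1, initial=0.0):
    """Run the delta rule over a sequence of rewards; return final expectation and errors."""
    expected = initial
    errors = []
    for reward in rewards:
        expected, delta = update_value(expected, reward, learning_rate)
        errors.append(delta)
    return expected, errors


# Fifty trials in which a reward of 1.0 is always delivered.
final_expectation, errors = simulate([1.0] * 50)

# Omitting the reward after learning produces a negative prediction error.
_, omission_error = update_value(final_expectation, 0.0)

print(round(final_expectation, 3), round(errors[0], 3), round(errors[-1], 3), round(omission_error, 3))
```

Under these assumptions the error is large on the first rewarded trial, shrinks toward zero as the expectation approaches the delivered reward, and becomes negative when an expected reward is withheld.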

Indeed, studies on the synaptic mechanisms in the striatum have shown that long-term potentiation (LTP) or long-term depression (LTD) can occur in the corticostriatal synapses depending on the combination of cortical inputs, striatal outputs, and D1 and D2 dopaminergic inputs (Reynolds and Wickens, 2002). A study using behaving animals showed that these mechanisms are necessary for behavioral adaptation of motor behavior to changing reward-position contingencies (Nakamura and Hikosaka, 2006a). Local injections of a D1 antagonist into the caudate nucleus lengthened the motor (saccadic) reaction times when large rewards were expected, whereas injections of a D2 antagonist lengthened the reaction times when small rewards were expected.
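One common way to summarize this kind of dopamine-dependent plasticity is a three-factor rule, in which the sign of the synaptic change depends on a dopamine signal that gates the coincidence of presynaptic (cortical) and postsynaptic (striatal) activity. The sketch below is a deliberate simplification and an assumption on our part: it collapses D1 and D2 influences into a single dopamine term and uses a hypothetical baseline, learning rate, and function name.

```python
def weight_change(pre, post, dopamine, baseline=0.5, rate=0.1):
    """Hypothetical three-factor rule: pre/post coincidence gated by dopamine.

    Dopamine above `baseline` yields potentiation (positive change);
    dopamine below it yields depression (negative change).
    """
    return rate * pre * post * (dopamine - baseline)


# Coincident cortical input and striatal firing with a dopamine pulse.
ltp = weight_change(pre=1.0, post=1.0, dopamine=1.0)
# The same coincident activity without dopamine.
ltd = weight_change(pre=1.0, post=1.0, dopamine=0.0)
# No cortical input: the rule predicts no change regardless of dopamine.
unchanged = weight_change(pre=0.0, post=1.0, dopamine=1.0)

print(ltp, ltd, unchanged)
```

The design choice here is only to show how three signals can jointly determine the direction of plasticity; it makes no claim about the receptor-specific mechanisms examined in the studies cited above.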

Action–contingency learning in the human dorsal striatum

Studies in humans corroborate the research in animals suggesting that the dorsal striatum is an integral part of a circuit involved in decision-making. Accumulating evidence, primarily from neuroimaging but also neuropsychological investigations, has implicated the dorsal striatum in different aspects of motivational and learning processes that support goal-directed action. For instance, positron emission tomography (PET) studies report increases in dopamine release in the dorsal striatum (as measured by displacement of endogenous dopamine by radioligands) when participants are presented with potential rewards, such as the opportunity to gain money (Koepp et al., 1998; Zald et al., 2004) or even when presented with food stimuli while in a state of hunger (Volkow et al., 2002). Similarly, fMRI studies typically report increases in blood oxygenation level dependent (BOLD) responses in the dorsal striatum during anticipation of either primary (O'Doherty et al., 2002) or secondary (Knutson et al., 2001) rewards, much like the ventral striatum.

What distinguishes the human dorsal striatum from the rest of the basal ganglia is its involvement in action-contingent learning (Delgado et al., 2000, 2005a; Knutson et al., 2001; Haruno et al., 2004; O'Doherty, 2004; Tricomi et al., 2004). Similar to the animal literature (Ito et al., 2002; Yin et al., 2005c), learning about actions and their reward consequences involves the dorsal striatum (O'Doherty et al., 2004; Tricomi et al., 2004), as opposed to more passive forms of appetitive learning found to depend on the ventral striatum (O'Doherty, 2004). These results mirror neuropsychological studies conducted on patients afflicted with Parkinson's disease, who are impaired in their learning of probabilistic stimuli when action contingencies are present (Poldrack et al., 2001) but are unimpaired when no contingency between action and outcome exists (Shohamy et al., 2004). Within the human dorsal striatum, learning of action–reward associations has been found in both putamen and caudate nucleus with potentially different roles based on their sensorimotor or associative connectivity, respectively (Alexander and Crutcher, 1990). Some studies argue, for example, that the putamen is important for stimulus–action coding (Haruno and Kawato, 2006). In contrast, a number of studies suggest that the head of the caudate nucleus is involved in coding reward-prediction errors during goal-directed behavior (Davidson et al., 2004; O'Doherty et al., 2004; Delgado et al., 2005a; Haruno and Kawato, 2006).

More recently, neuroimaging studies have extended these general ideas on the function of the human dorsal striatum to more complex social issues. Increases in BOLD responses in the dorsal striatum, for example, have been reported when an interactive social component exists, such as the occurrence of cooperation (Rilling et al., 2002) or revenge (de Quervain et al., 2004). The caudate nucleus has also been implicated in the acquisition of social reputations (via reciprocity in an economic exchange game, the “trust game”) through trial and error (King-Casas et al., 2005). However, existing social biases (e.g., knowledge about moral characteristics) can also hinder corticostriatal learning mechanisms and influence subsequent decisions (Delgado et al., 2005b). A future challenge for researchers is, therefore, to understand the role of the dorsal striatum in goal-directed behaviors with respect to the vast array of existing social complexities.

Summary and future directions

Together, this recent evidence suggests that the dorsal striatum mediates important aspects of decision-making, particularly those related to encoding specific action–outcome associations in goal-directed action and the selection of actions on the basis of their currently expected reward value. We have summarized these findings and the major trends in research that they imply in Figure 1. These findings are consistent with computational theories of adaptive behavior, notably forms of reinforcement learning, and when considered in the context of current views of the broader corticobasal ganglia system, appear likely to provide the basis for an integrated approach to striatal function.

Important questions still remain, such as the anatomical and functional similarities across species, particularly with respect to the cognitive control of actions; whether the striatum is the only site at which goal-directed learning occurs (Hikosaka et al., 2006); and whether dopamine is the sole teacher of this learning (Aosaki et al., 1994; Seymour et al., 2005). Is the striatum involved only in reward-based learning, or does it contribute to the inhibition of responses associated with aversive consequences (Hikosaka et al., 2006)? Finally, is the product of learning (e.g., the motor memory) stored in the striatum? The differential effects of muscimol injections suggest one of two things: either the associative striatum guides acquisition and the motor memory is stored in the putamen (perhaps in addition to other motor areas), or these regions are involved in quite distinct motor processes, with the associative striatum encoding a more abstract relationship between actions and their consequences and the putamen, which is connected with motor cortical areas, encoding actions in the muscle–joint domain that, after extensive practice, become resistant to changes in outcome: the hallmark of habitual behavior.

Footnotes

This work was supported by National Institute of Mental Health Grant 56446 (B.W.B.) and by the National Eye Institute Intramural Research Program (O.H.).

References

- Alexander GE, Crutcher MD. Functional architecture of basal ganglia circuits: neural substrates of parallel processing. Trends Neurosci. 1990;13:266–271. doi: 10.1016/0166-2236(90)90107-l.

- Antonini A, Moresco RM, Gobbo C, De Notaris R, Panzacchi A, Barone P, Calzetti S, Negrotti A, Pezzoli G, Fazio F. The status of dopamine nerve terminals in Parkinson's disease and essential tremor: a PET study with the tracer [11-C]FE-CIT. Neurol Sci. 2001;22:47–48. doi: 10.1007/s100720170040.

- Aosaki T, Tsubokawa H, Ishida A, Watanabe K, Graybiel AM, Kimura M. Responses of tonically active neurons in the primate's striatum undergo systematic changes during behavioral sensorimotor conditioning. J Neurosci. 1994;14:3969–3984. doi: 10.1523/JNEUROSCI.14-06-03969.1994.

- Balleine BW. Neural bases of food seeking: affect, arousal and reward in corticostriatolimbic circuits. Physiol Behav. 2005;86:717–730. doi: 10.1016/j.physbeh.2005.08.061.

- Balleine BW, Dickinson A. Goal-directed instrumental action: contingency and incentive learning and their cortical substrates. Neuropharmacology. 1998;37:407–419. doi: 10.1016/s0028-3908(98)00033-1.

- Balleine BW, Killcross S. Parallel incentive processing: an integrated view of amygdala function. Trends Neurosci. 2006;29:272–279. doi: 10.1016/j.tins.2006.03.002.

- Barnes TD, Kubota Y, Hu D, Jin DZ, Graybiel AM. Activity of striatal neurons reflects dynamic encoding and recoding of procedural memories. Nature. 2005;437:1158–1161. doi: 10.1038/nature04053.

- Blazquez PM, Fujii N, Kojima J, Graybiel AM. A network representation of response probability in the striatum. Neuron. 2002;33:973–982. doi: 10.1016/s0896-6273(02)00627-x.

- Bloch MH, Leckman JF, Zhu H, Peterson BS. Caudate volumes in childhood predict symptom severity in adults with Tourette syndrome. Neurology. 2005;65:1253–1258. doi: 10.1212/01.wnl.0000180957.98702.69.

- Brasted PJ, Wise SP. Comparison of learning-related neuronal activity in the dorsal premotor cortex and striatum. Eur J Neurosci. 2004;19:721–740. doi: 10.1111/j.0953-816x.2003.03181.x.

- Chang JY, Chen L, Luo F, Shi LH, Woodward DJ. Neuronal responses in the frontal cortico-basal ganglia system during delayed matching-to-sample task: ensemble recording in freely moving rats. Exp Brain Res. 2002;142:67–80. doi: 10.1007/s00221-001-0918-3.

- Corbit LH, Balleine BW. The role of prelimbic cortex in instrumental conditioning. Behav Brain Res. 2003;146:145–157. doi: 10.1016/j.bbr.2003.09.023.

- Corbit LH, Muir JL, Balleine BW. The role of the nucleus accumbens in instrumental conditioning: evidence of a functional dissociation between accumbens core and shell. J Neurosci. 2001;21:3251–3260. doi: 10.1523/JNEUROSCI.21-09-03251.2001.

- Costa RM, Cohen D, Nicolelis MA. Differential corticostriatal plasticity during fast and slow motor skill learning in mice. Curr Biol. 2004;14:1124–1134. doi: 10.1016/j.cub.2004.06.053.

- Costa RM, Lin SC, Sotnikova TD, Cyr M, Gainetdinov RR, Caron MG, Nicolelis MA. Rapid alterations in corticostriatal ensemble coordination during acute dopamine-dependent motor dysfunction. Neuron. 2006;52:359–369. doi: 10.1016/j.neuron.2006.07.030.

- Cromwell HC, Schultz W. Effects of expectations for different reward magnitudes on neuronal activity in primate striatum. J Neurophysiol. 2003;89:2823–2838. doi: 10.1152/jn.01014.2002.

- Davidson MC, Horvitz JC, Tottenham N, Fossella JA, Watts R, Ulug AM, Casey BJ. Differential cingulate and caudate activation following unexpected nonrewarding stimuli. NeuroImage. 2004;23:1039–1045. doi: 10.1016/j.neuroimage.2004.07.049.

- Daw ND, Niv Y, Dayan P. Uncertainty-based competition between prefrontal and dorsolateral striatal systems for behavioral control. Nat Neurosci. 2005;8:1704–1711. doi: 10.1038/nn1560.

- Dayan P, Balleine BW. Reward, motivation, and reinforcement learning. Neuron. 2002;36:285–298. doi: 10.1016/s0896-6273(02)00963-7.

- Delgado MR, Nystrom LE, Fissell C, Noll DC, Fiez JA. Tracking the hemodynamic responses to reward and punishment in the striatum. J Neurophysiol. 2000;84:3072–3077. doi: 10.1152/jn.2000.84.6.3072.

- Delgado MR, Miller MM, Inati S, Phelps EA. An fMRI study of reward-related probability learning. NeuroImage. 2005a;24:862–873. doi: 10.1016/j.neuroimage.2004.10.002.

- Delgado MR, Frank RH, Phelps EA. Perceptions of moral character modulate the neural systems of reward during the trust game. Nat Neurosci. 2005b;8:1611–1618. doi: 10.1038/nn1575.

- de Quervain DJ, Fischbacher U, Treyer V, Schellhammer M, Schnyder U, Buck A, Fehr E. The neural basis of altruistic punishment. Science. 2004;305:1254–1258. doi: 10.1126/science.1100735.

- Dickinson A. Instrumental conditioning. In: Mackintosh NJ, editor. Animal cognition and learning. London: Academic; 1994. pp. 4–79.

- Dickinson A, Balleine BW. Motivational control of goal-directed action. Anim Learn Behav. 1994;22:1–18.

- Dickinson A, Balleine BW, Watt A, Gonzales F, Boakes RA. Overtraining and the motivational control of instrumental action. Anim Learn Behav. 1995;22:197–206.

- Fuster JM. Executive frontal functions. Exp Brain Res. 2000;133:66–70. doi: 10.1007/s002210000401.

- Gabbott PL, Warner TA, Jays PR, Salway P, Busby SJ. Prefrontal cortex in the rat: projections to subcortical autonomic, motor, and limbic centers. J Comp Neurol. 2005;492:145–177. doi: 10.1002/cne.20738.

- Goldman-Rakic PS. Architecture of the prefrontal cortex and the central executive. Ann NY Acad Sci. 1995;769:71–83. doi: 10.1111/j.1749-6632.1995.tb38132.x.

- Hadj-Bouziane F, Boussaoud D. Neuronal activity in the monkey striatum during conditional visuomotor learning. Exp Brain Res. 2003;153:190–196. doi: 10.1007/s00221-003-1592-4.

- Haruno M, Kawato M. Different neural correlates of reward expectation and reward expectation error in the putamen and caudate nucleus during stimulus-action-reward association learning. J Neurophysiol. 2006;95:948–959. doi: 10.1152/jn.00382.2005.

- Haruno M, Kuroda T, Doya K, Toyama K, Kimura M, Samejima K, Imamizu H, Kawato M. A neural correlate of reward-based behavioral learning in caudate nucleus: a functional magnetic resonance imaging study of a stochastic decision task. J Neurosci. 2004;24:1660–1665. doi: 10.1523/JNEUROSCI.3417-03.2004.

- Hernandez PJ, Schiltz CA, Kelley AE. Dynamic shifts in corticostriatal expression patterns of the immediate early genes Homer 1a and Zif268 during early and late phases of instrumental training. Learn Mem. 2006;13:599–608. doi: 10.1101/lm.335006.

- Hikosaka O, Sakamoto M, Usui S. Functional properties of monkey caudate neurons. III. Activities related to expectation of target and reward. J Neurophysiol. 1989;61:814–832. doi: 10.1152/jn.1989.61.4.814.

- Hikosaka O, Nakamura K, Nakahara H. Basal ganglia orient eyes to reward. J Neurophysiol. 2006;95:567–584. doi: 10.1152/jn.00458.2005.

- Hodges A, Strand AD, Aragaki AK, Kuhn A, Sengstag T, Hughes G, Elliston LA, Hartog C, Goldstein DR, Thu D, Hollingsworth ZR, Collin F, Synek B, Holmans PA, Young AB, Wexler NS, Delorenzi M, Kooperberg C, Augood SJ, Faull RL, et al. Regional and cellular gene expression changes in human Huntington's disease brain. Hum Mol Genet. 2006;15:965–977. doi: 10.1093/hmg/ddl013.

- Hollerman J, Tremblay L, Schultz W. Influence of reward expectation on behavior-related neuronal activity in primate striatum. J Neurophysiol. 1998;80:947–963. doi: 10.1152/jn.1998.80.2.947.

- Houk JC, Adams JL, Barto A. A model of how the basal ganglia generate and use neural signals that predict reinforcement. In: Houk JC, Davis JL, Beiser DG, editors. Models of information processing in the basal ganglia. Cambridge, MA: MIT; 1995. pp. 249–270.

- Ito R, Dalley JW, Robbins TW, Everitt BJ. Dopamine release in the dorsal striatum during cocaine-seeking behavior under the control of a drug-associated cue. J Neurosci. 2002;22:6247–6253. doi: 10.1523/JNEUROSCI.22-14-06247.2002.

- Jog MS, Kubota Y, Connolly CI, Hillegaart V, Graybiel AM. Building neural representations of habits. Science. 1999;286:1745–1749. doi: 10.1126/science.286.5445.1745.

- Kawagoe R, Takikawa Y, Hikosaka O. Expectation of reward modulates cognitive signals in the basal ganglia. Nat Neurosci. 1998;1:411–416. doi: 10.1038/1625.

- Killcross S, Coutureau E. Coordination of actions and habits in the medial prefrontal cortex of rats. Cereb Cortex. 2003;13:400–408. doi: 10.1093/cercor/13.4.400.

- King-Casas B, Tomlin D, Anen C, Camerer CF, Quartz SR, Montague PR. Getting to know you: reputation and trust in a two-person economic exchange. Science. 2005;308:78–83. doi: 10.1126/science.1108062.

- Knutson B, Adams CM, Fong GW, Hommer D. Anticipation of increasing monetary reward selectively recruits nucleus accumbens. J Neurosci. 2001;21(RC159):1–5. doi: 10.1523/JNEUROSCI.21-16-j0002.2001.

- Koepp MJ, Gunn RN, Lawrence AD, Cunningham VJ, Dagher A, Jones T, Brooks DJ, Bench CJ, Grasby PM. Evidence for striatal dopamine release during a video game. Nature. 1998;393:266–268. doi: 10.1038/30498.

- Lauwereyns J, Watanabe K, Coe B, Hikosaka O. A neural correlate of response bias in monkey caudate nucleus. Nature. 2002;418:413–417. doi: 10.1038/nature00892.

- Levy R, Dubois B. Apathy and the functional anatomy of the prefrontal cortex-basal ganglia circuits. Cereb Cortex. 2006;16:916–928. doi: 10.1093/cercor/bhj043.

- Miyachi S, Hikosaka O, Miyashita K, Karádi Z, Rand MK. Differential roles of monkey striatum in learning of sequential hand movement. Exp Brain Res. 1997;115:1–5. doi: 10.1007/pl00005669.

- Miyachi S, Hikosaka O, Lu X. Differential activation of monkey striatal neurons in the early and late stages of procedural learning. Exp Brain Res. 2002;146:122–126. doi: 10.1007/s00221-002-1213-7.

- Nakamura K, Hikosaka O. Role of dopamine in the primate caudate nucleus in reward modulation of saccades. J Neurosci. 2006a;26:5360–5369. doi: 10.1523/JNEUROSCI.4853-05.2006.

- Nakamura K, Hikosaka O. Facilitation of saccadic eye movements by postsaccadic electrical stimulation in the primate caudate. J Neurosci. 2006b;26:12885–12895. doi: 10.1523/JNEUROSCI.3688-06.2006.

- O'Doherty J, Dayan P, Schultz J, Deichmann R, Friston K, Dolan RJ. Dissociable roles of ventral and dorsal striatum in instrumental conditioning. Science. 2004;304:452–454. doi: 10.1126/science.1094285.

- O'Doherty JP. Reward representations and reward-related learning in the human brain: insights from neuroimaging. Curr Opin Neurobiol. 2004;14:769–776. doi: 10.1016/j.conb.2004.10.016.

- O'Doherty JP, Deichmann R, Critchley HD, Dolan RJ. Neural responses during anticipation of a primary taste reward. Neuron. 2002;33:815–826. doi: 10.1016/s0896-6273(02)00603-7.

- Ostlund SB, Balleine BW. Lesions of medial prefrontal cortex disrupt the acquisition but not the expression of goal-directed learning. J Neurosci. 2005;25:7763–7770. doi: 10.1523/JNEUROSCI.1921-05.2005. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Partridge JG, Tang KC, Lovinger DM. Regional and postnatal heterogeneity of activity-dependent long-term changes in synaptic efficacy in the dorsal striatum. J Neurophysiol. 2000;84:1422–1429. doi: 10.1152/jn.2000.84.3.1422. [DOI] [PubMed] [Google Scholar]

- Pasupathy A, Miller EK. Different time courses of learning-related activity in the prefrontal cortex and striatum. Nature. 2005;433:873–876. doi: 10.1038/nature03287. [DOI] [PubMed] [Google Scholar]

- Poldrack RA, Clark J, Pare-Blagoev EJ, Shohamy D, Creso Moyano J, Myers C, Gluck MA. Interactive memory systems in the human brain. Nature. 2001;414:546–550. doi: 10.1038/35107080. [DOI] [PubMed] [Google Scholar]

- Reynolds JN, Wickens JR. Dopamine-dependent plasticity of corticostriatal synapses. Neural Netw. 2002;15:507–521. doi: 10.1016/s0893-6080(02)00045-x.

- Rilling J, Gutman D, Zeh T, Pagnoni G, Berns G, Kilts C. A neural basis for social cooperation. Neuron. 2002;35:395–405. doi: 10.1016/s0896-6273(02)00755-9.

- Robinson D, Wu H, Munne RA, Ashtari M, Alvir JM, Lerner G, Koreen A, Cole K, Bogerts B. Reduced caudate nucleus volume in obsessive-compulsive disorder. Arch Gen Psychiatry. 1995;52:393–398. doi: 10.1001/archpsyc.1995.03950170067009.

- Schultz W. Predictive reward signal of dopamine neurons. J Neurophysiol. 1998;80:1–27. doi: 10.1152/jn.1998.80.1.1.

- Seppi K, Schocke MF, Prennschuetz-Schuetzenau K, Mair KJ, Esterhammer R, Kremser C, Muigg A, Scherfler C, Jaschke W, Wenning GK, Poewe W. Topography of putaminal degeneration in multiple system atrophy: a diffusion magnetic resonance study. Mov Disord. 2006;21:847–852. doi: 10.1002/mds.20843.

- Seymour B, O'Doherty JP, Koltzenburg M, Wiech K, Frackowiak R, Friston K, Dolan R. Opponent appetitive-aversive neural processes underlie predictive learning of pain relief. Nat Neurosci. 2005;8:1234–1240. doi: 10.1038/nn1527.

- Shidara M, Aigner TG, Richmond BJ. Neuronal signals in the monkey ventral striatum related to progress through a predictable series of trials. J Neurosci. 1998;18:2613–2625. doi: 10.1523/JNEUROSCI.18-07-02613.1998.

- Shohamy D, Myers CE, Grossman S, Sage J, Gluck MA, Poldrack RA. Cortico-striatal contributions to feedback-based learning: converging data from neuroimaging and neuropsychology. Brain. 2004;127:851–859. doi: 10.1093/brain/awh100.

- Smith R, Musleh W, Akopian G, Buckwalter G, Walsh JP. Regional differences in the expression of corticostriatal synaptic plasticity. Neuroscience. 2001;106:95–101. doi: 10.1016/s0306-4522(01)00260-3.

- Tanaka SC, Samejima K, Okada G, Ueda K, Okamoto Y, Yamawaki S, Doya K. Brain mechanism of reward prediction under predictable and unpredictable environmental dynamics. Neural Netw. 2006;19:1233–1241. doi: 10.1016/j.neunet.2006.05.039.

- Tang C, Pawlak AP, Prokopenko V, West MO. Changes in activity of the striatum during formation of a motor habit. Eur J Neurosci. 2007;25:1212–1227. doi: 10.1111/j.1460-9568.2007.05353.x.

- Tremblay L, Hollerman JR, Schultz W. Modifications of reward expectation-related neuronal activity during learning in primate striatum. J Neurophysiol. 1998;80:964–977. doi: 10.1152/jn.1998.80.2.964.

- Tricomi EM, Delgado MR, Fiez JA. Modulation of caudate activity by action contingency. Neuron. 2004;41:281–292. doi: 10.1016/s0896-6273(03)00848-1.

- Volkow ND, Wang GJ, Fowler JS, Logan J, Jayne M, Franceschi D, Wong C, Gatley SJ, Gifford AN, Ding YS, Pappas N. “Nonhedonic” food motivation in humans involves dopamine in the dorsal striatum and methylphenidate amplifies this effect. Synapse. 2002;44:175–180. doi: 10.1002/syn.10075.

- Williams ZM, Eskandar EN. Selective enhancement of associative learning by microstimulation of the anterior caudate. Nat Neurosci. 2006;9:562–568. doi: 10.1038/nn1662.

- Yin HH, Knowlton BJ, Balleine BW. Lesions of dorsolateral striatum preserve outcome expectancy but disrupt habit formation in instrumental learning. Eur J Neurosci. 2004;19:181–189. doi: 10.1111/j.1460-9568.2004.03095.x.

- Yin HH, Knowlton BJ, Balleine BW. Inactivation of dorsolateral striatum enhances sensitivity to changes in the action-outcome contingency in instrumental conditioning. Behav Brain Res. 2005a;166:189–196. doi: 10.1016/j.bbr.2005.07.012.

- Yin HH, Knowlton BJ, Balleine BW. Blockade of NMDA receptors in the dorsomedial striatum prevents action-outcome learning in instrumental conditioning. Eur J Neurosci. 2005b;22:505–512. doi: 10.1111/j.1460-9568.2005.04219.x.

- Yin HH, Ostlund SB, Knowlton BJ, Balleine BW. The role of the dorsomedial striatum in instrumental conditioning. Eur J Neurosci. 2005c;22:513–523. doi: 10.1111/j.1460-9568.2005.04218.x.

- Zald DH, Boileau I, El-Dearedy W, Gunn R, McGlone F, Dichter GS, Dagher A. Dopamine transmission in the human striatum during monetary reward tasks. J Neurosci. 2004;24:4105–4112. doi: 10.1523/JNEUROSCI.4643-03.2004.