Abstract

Everyday objects can vary in a number of feature dimensions, such as color and shape. To identify and recognize a particular object, we often need to encode and store multiple features of the object simultaneously. Previous studies have highlighted the role of the superior intraparietal sulcus (IPS) in storing single object features in visual short-term memory (VSTM), such as color, orientation, shape outline, and shape topology. The role of this brain area in storing multiple features of an object together in VSTM, however, remains largely unknown. Using an event-related functional magnetic resonance imaging design and an independent region-of-interest-based approach, this study investigated how an object's color and shape may be retained together in the superior IPS during VSTM. Results from four experiments indicate that the superior IPS holds neither integrated whole objects nor the total number of objects (whole and partial) stored in VSTM. Rather, it represents the total amount of feature information retained in VSTM. The ability to accumulate information acquired from different visual feature dimensions suggests that the superior IPS may be a flexible information storage device, consistent with the involvement of the parietal cortex in a variety of other cognitive tasks. These results also shed new light on the object benefit reported in behavioral VSTM studies and provide new insights into solving the binding problem in the brain.

Keywords: visual working memory, feature binding, feature conjunction, visual perception, vision, brain imaging

Introduction

In everyday life, to sustain attended visual objects across saccades and other visual interruptions and to use this information to guide behavior and thought, it is often necessary to store object information in a temporary buffer known as visual short-term memory (VSTM) (Phillips, 1974; Phillips and Christie, 1977). Although the role of the frontal/prefrontal cortices in the control and maintenance of VSTM (also known as visual working memory) has been well described by previous studies (Goldman-Rakic, 1987; Desimone, 1996; Miller et al., 1996; Cohen et al., 1997; Courtney et al., 1997; Smith and Jonides, 1998; Pessoa et al., 2002; Curtis and D'Esposito, 2003), Todd and Marois (2004, 2005) and Xu and Chun (2006) showed that the superior intraparietal sulcus (IPS) may play the most important role in storing object information in VSTM: functional magnetic resonance imaging (fMRI) activations in this brain area correlated strongly with the number of objects held in VSTM (see also Vogel and Machizawa, 2004; Song and Jiang, 2006).

In the studies by Todd and Marois (2004, 2005) and Xu and Chun (2006), each object in the display contained a single feature. Thus, although superior IPS responses are believed to reflect the number of objects retained in VSTM, they could also reflect the number of features, or the total amount of visual information, retained in VSTM, a possibility that has not been examined previously. Moreover, everyday objects can vary in a number of feature dimensions, such as color and shape, and we often need to encode multiple features of an object simultaneously to identify and recognize it. Although the superior IPS has been shown to store various kinds of single object features (e.g., color, orientation, shape outline, and shape topology), how this brain area may store multiple features of an object together in VSTM remains largely unknown.

In behavioral studies, researchers have reported the well-known object benefit in VSTM: when attention is directed to an object, all of its features may be automatically encoded together into VSTM (Irwin and Andrews, 1996; Luck and Vogel, 1997; Xu, 2002a, 2006). To account for this benefit, Luck and Vogel (1997) argued that visual objects rather than individual features are the units of information processing in VSTM, and that features are first integrated to form whole objects that are then stored in VSTM (Irwin and Andrews, 1996; Vogel et al., 2001).

These behavioral results suggest that, when an object's color and shape are stored together in VSTM, the superior IPS may store integrated whole objects, requiring all attended object features to be represented together in this brain area (integration account). Alternatively, this brain area may store the total number of objects encoded in VSTM, regardless of whether they are whole or partial objects (object account). A third possibility is that the superior IPS does not store discrete objects at all, but simply the total amount of feature information retained in VSTM (feature account). This study examined which of these accounts best describes the representation of multifeature objects in the superior IPS.

Materials and Methods

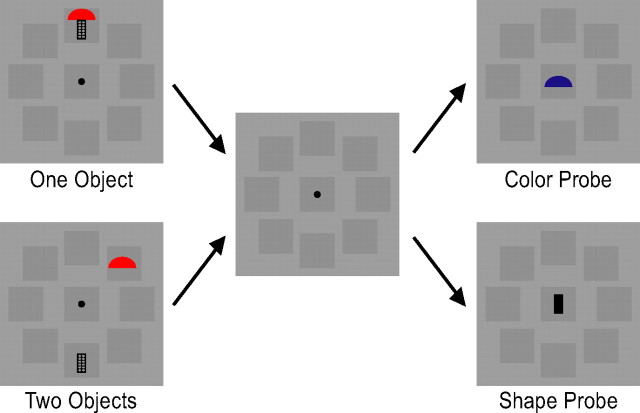

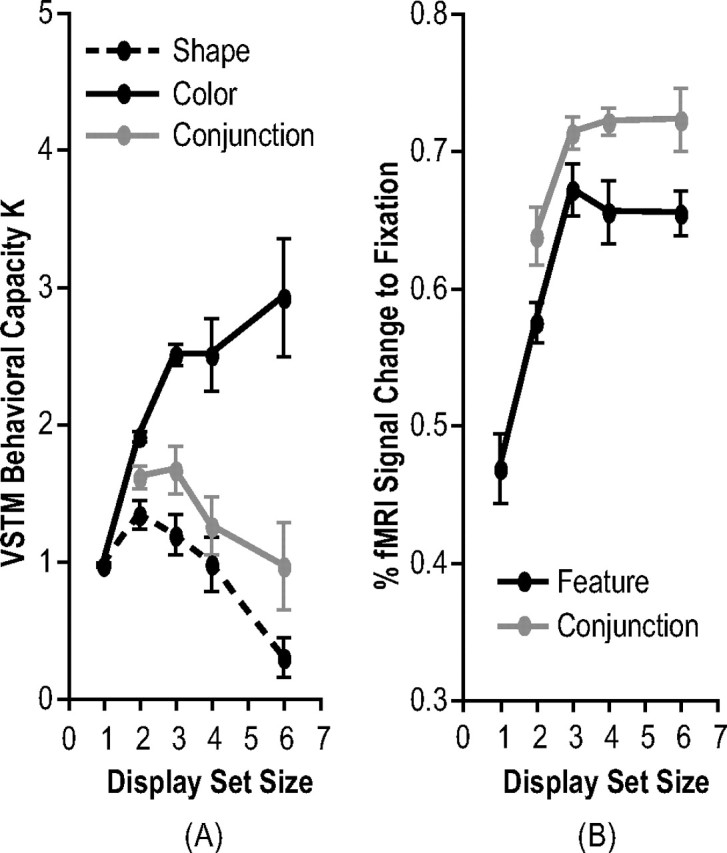

To delineate how multiple features of an object are retained together in the superior IPS, imagine the following manipulation: a set of similar shape features is conjoined with a set of dissimilar color features such that, when both types of features are retained together, the maximum VSTM capacities (Ks) are ∼3 for colors (easy to retain) and ∼1 for shapes (hard to retain). That is, as display set size increases, K plateaus at ∼3 for colors and at ∼1 for shapes (Fig. 1A).

Figure 1.

A, Behavioral performance from a hypothetical VSTM experiment in which a set of dissimilar colors is conjoined with a set of similar shapes, resulting in a maximum of three colors and one shape retained together in VSTM. As display set size increases, Ks (the number of features retained in VSTM) increase and plateau at three for colors and at one for shapes. B–D, Possible superior IPS response patterns. The integration account argues that the superior IPS stores integrated whole objects, requiring all attended object features to be represented together in this brain area; the object account argues that the superior IPS stores the total number of objects encoded in VSTM, regardless of whether they are whole or partial; and the feature account argues that the superior IPS simply stores the total amount of feature information retained in VSTM. Note that, under this experimental manipulation, the last two accounts predict the same superior IPS response pattern.

If the superior IPS represents whole, integrated objects, both features of each object would have to be stored. Under this scenario, responses in this brain area would be limited by the maximal number of the harder feature (shape) that can be encoded. Thus, an integration account predicts that only one integrated object would be represented in the superior IPS as display set size increases (Fig. 1B). However, if the superior IPS represents the total number of objects stored in VSTM, regardless of whether they are whole or partial objects, responses in this brain area would be limited by the maximal number of the easier feature (color) that can be encoded. An object account thus predicts that maximally one whole object (with both features stored) plus two partial objects (with only the color feature stored), totaling three objects, can be stored in the superior IPS. Responses in this brain area would therefore increase as display set size increases and plateau at set size 3 (Fig. 1C). Alternatively, if the superior IPS represents the total amount of feature information retained, responses in this brain area would be limited by the maximal numbers of both types of features retained, which would be four features (one shape plus three colors). Because this maximum is also reached at set size 3, the feature account likewise predicts that responses in the superior IPS would increase as display set size increases and plateau at set size 3 (Fig. 1D). Note that, under this experimental manipulation, the last two accounts predict the same superior IPS response pattern. Experiments 1 and 2 used this design to test the predictions of the integration account against those of both the object account and the feature account.
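The predictions sketched in Figure 1B–D follow from simple counting. The sketch below is illustrative only: it assumes the hypothetical capacities of the thought experiment (three colors, one shape) and assumes that the retained shape belongs to an object whose color is also retained. The function name `predicted_load` is hypothetical.

```python
from typing import Dict

# Hypothetical capacities from the thought experiment (Fig. 1A), not measured values.
K_COLOR = 3  # dissimilar colors: easy to retain
K_SHAPE = 1  # similar shapes: hard to retain
SET_SIZES = [1, 2, 3, 4, 6]


def predicted_load(set_size: int, k_color: int = K_COLOR, k_shape: int = K_SHAPE) -> Dict[str, int]:
    """Predicted superior IPS 'load' under each account for one display set size.

    Assumes retained shapes sit on objects whose colors are also retained,
    which is possible whenever k_shape <= k_color.
    """
    colors = min(set_size, k_color)  # colors retained
    shapes = min(set_size, k_shape)  # shapes retained
    return {
        "integration": min(colors, shapes),  # whole objects: both features retained
        "object": max(colors, shapes),       # whole plus partial objects
        "feature": colors + shapes,          # total features retained
    }


if __name__ == "__main__":
    for n in SET_SIZES:
        print(n, predicted_load(n))
```

Under these assumptions the integration count stays at one, whereas the object count (1, 2, 3, 3, 3) and the feature count (2, 3, 4, 4, 4) both rise to a plateau at set size 3 and differ only by a constant offset of one, which is why the two accounts predict the same response pattern here.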

To further distinguish between the object account and the feature account, experiment 3 varied the amount of feature information retained in VSTM while keeping the total number of objects constant, and experiment 4 varied the number of objects retained in VSTM while keeping the total amount of feature information constant. The object account predicts that the superior IPS would track the number of objects present, independent of the amount of feature information retained. In contrast, the feature account predicts that this brain area would track the total amount of feature information retained independent of the number of objects that this information comes from.
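The logic of experiments 3 and 4 can be summarized by tabulating, for each experimental (non-filler) condition, how many objects carry task-relevant features and how many task-relevant features must be retained. In this minimal sketch the class and function names are hypothetical, and "features" counts only task-relevant colors and shapes (the constant black of the black shapes is treated as carrying no task-relevant color).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Condition:
    """A display condition summarized by what observers must retain (hypothetical helper)."""
    label: str
    n_objects: int   # objects carrying task-relevant features
    n_features: int  # task-relevant features (colors + shapes)


# Experimental conditions of experiments 3 and 4, as described in the Methods.
EXP3 = (Condition("two colored shapes", 2, 4), Condition("two black shapes", 2, 2))
EXP4 = (Condition("cap attached to stem", 1, 2), Condition("cap and stem detached", 2, 2))


def predicts_difference(pair, account: str) -> bool:
    """True if the account predicts different superior IPS responses for the two conditions."""
    attr = {"object": "n_objects", "feature": "n_features"}[account]
    a, b = pair
    return getattr(a, attr) != getattr(b, attr)


if __name__ == "__main__":
    for name, pair in (("Experiment 3", EXP3), ("Experiment 4", EXP4)):
        for account in ("object", "feature"):
            print(name, account, predicts_difference(pair, account))
```

The two experiments are complementary: each one holds constant the quantity that one account tracks while varying the quantity that the other account tracks.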

Participants

Fourteen observers (eight females) participated in both experiments 1 and 2 in the same scanning session; eight observers (four females) participated in experiment 3, one of whom (male) had also participated in experiments 1 and 2 about 6 months earlier; and seven observers (two females) participated in experiment 4, two of whom (males) had also participated in experiment 3 about 13 months earlier. All observers were recruited from the Yale University campus, were right-handed, had normal or corrected-to-normal vision and normal color vision, and were paid for their participation. Informed consent was obtained from all observers, and the study was approved by the Yale committee on the use of humans as experimental subjects. Data from two observers (both females) were excluded from experiments 1 and 2 because of excessive head movements, leaving 12 observers in those analyses.

Design and procedure

Main experiments.

All displays subtended 13.7° × 13.7° and were presented on a light gray background. A given object subtended at most 3.1° × 3.1°. In all four experiments, each trial lasted 6 s and consisted of fixation (1000 ms), a sample display (200 ms), a blank delay (1000 ms), a test display/response period (2500 ms), and response feedback (1300 ms), in which a happy face (correct response) or a sad face (incorrect response) was presented at fixation.
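The trial timeline can be checked with a few lines of arithmetic. This sketch (variable and function names are illustrative) lists each event's onset within the trial and confirms that the events sum to 6 s, which corresponds to four volumes at the 1.5 s TR reported for the event-related VSTM runs.

```python
from typing import List, Tuple

# Event durations within one trial, as listed in the Methods.
TRIAL_EVENTS_MS: List[Tuple[str, int]] = [
    ("fixation", 1000),
    ("sample display", 200),
    ("blank delay", 1000),
    ("test display / response", 2500),
    ("feedback", 1300),
]
TR_MS = 1500  # TR of the event-related VSTM runs (fMRI methods)


def event_onsets(events: List[Tuple[str, int]]) -> Tuple[List[Tuple[str, int]], int]:
    """Return (event, onset in ms) pairs and the total trial duration in ms."""
    onsets, t = [], 0
    for name, duration in events:
        onsets.append((name, t))
        t += duration
    return onsets, t


if __name__ == "__main__":
    onsets, total = event_onsets(TRIAL_EVENTS_MS)
    for name, onset in onsets:
        print(f"{onset:>5} ms  {name}")
    print("volumes per trial:", total / TR_MS)
```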

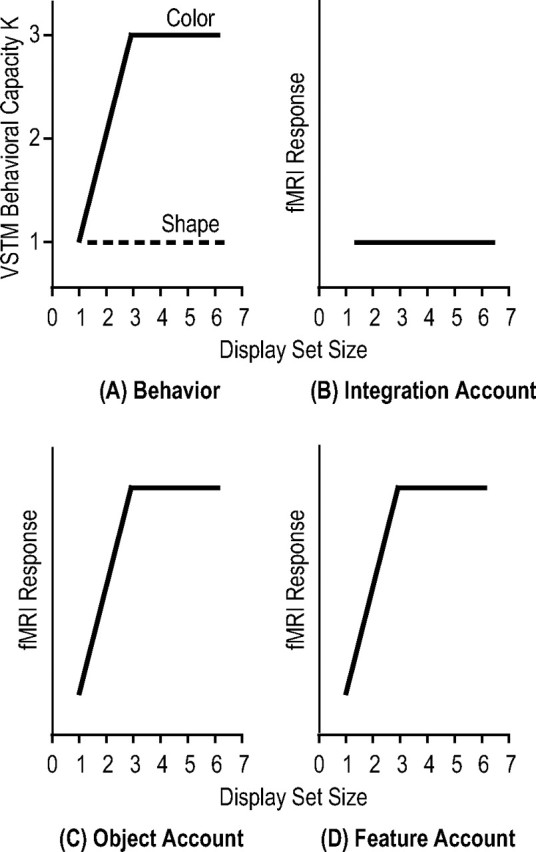

In experiment 1, to test the predictions of the integration account (that the superior IPS stores integrated whole objects), a set of similar shape features was conjoined with a set of dissimilar color features. Observers were asked to retain these objects in VSTM and, after a brief delay, to judge whether a particular color or shape feature was present in the initial display. The sample display contained 1, 2, 3, 4, or 6 mushroom-like colored objects presented at different spatial locations around the central fixation (Fig. 2A). A given object could be in one of seven dissimilar colors (red, green, blue, orange, yellow, white, or magenta) and in one of nine similar shapes (Fig. 2). Each shape was constructed by attaching one of three mushroom-cap-like top parts to one of three mushroom-stem-like bottom parts, making the shapes highly similar to each other and difficult to retain in VSTM (Xu and Chun, 2006). A given shape or color was never repeated within a sample display. The test display contained either a colored square (color probe) or a black shape (shape probe) at the center of the display (Fig. 2A). Observers judged whether the color of the color probe or the shape of the shape probe was present or absent in the sample display (50% probability for each). Color and shape were probed equally often and in random order, encouraging observers to encode both features of each object in the sample display. This also allowed separate behavioral VSTM capacity estimates to be obtained for the two features when they were retained together. In addition to the experimental trials, there were blank fixation trials in which only a fixation dot was present throughout the 6 s trial duration. The presentation order of the different trial types was pseudorandom and balanced within a run (Kourtzi and Kanwisher, 2000, 2001; Todd and Marois, 2004; Xu and Chun, 2006). Each run contained 12 trials for each display set size, half with color probes and half with shape probes.
Each observer was tested with four runs, each lasting 7 min and 57 s.

Figure 2.

A, An example trial from experiment 1. Observers encoded one, two, three, four, or six mushroom-like objects presented around the center fixation in a sample display and, after a brief blank delay, judged whether the probe color or shape at the center of the test display was present or absent in the sample display. B, An example trial from experiment 2. The trial was identical to that illustrated in A, except that the probe in the test display was a colored shape. Observers judged whether the color and shape conjunction of the probe was present or absent in the sample display.

In experiment 2, to further test the predictions of the integration account, VSTM for feature binding was explicitly required. Observers retained in VSTM the same set of objects used in experiment 1; however, instead of having their memory probed separately for color and shape as in experiment 1, their memory for feature conjunctions was probed. Only set size 2, 3, 4, and 6 displays were used, and the test display contained either one of the sample objects or an object combining the color of one sample object with the shape of another (Fig. 2B). Observers judged whether the probe object was present or absent in the sample display (50% probability for each). Other aspects of the experiment were identical to those of experiment 1. Each observer was tested with two runs, each containing 15 trials for each display set size and lasting 8 min and 15 s. The same observers participated in experiments 1 and 2 in the same scanning session, with the testing order of the two experiments counterbalanced across observers.

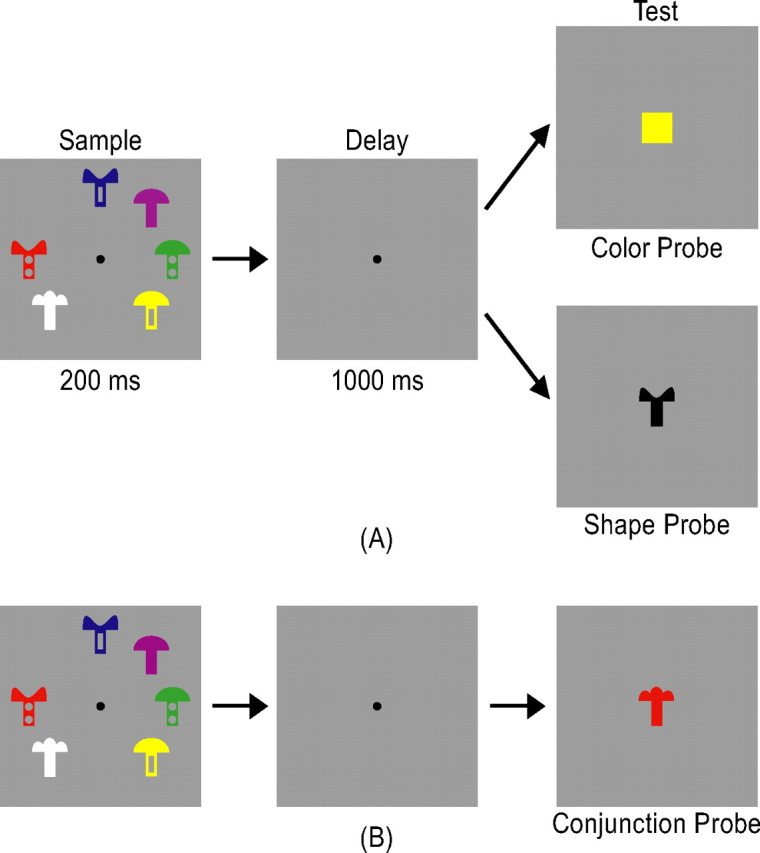

In experiment 3, to distinguish between the object account and the feature account, the amount of feature information encoded in VSTM varied between displays while the number of objects was kept constant. The sample display contained either two colored shapes or two black shapes. The same seven distinctive colors used in experiment 1 were used here. However, unlike in experiments 1 and 2, seven distinctive shapes, which were easier to retain in VSTM [as in experiment 4 of Xu and Chun (2006)], were used in this experiment (Fig. 4, supplemental Fig. 1B, available at www.jneurosci.org as supplemental material). When the sample display contained colored shapes, the test display contained either a shape probe or a color probe that matched a color or shape in the sample display with a 50% probability, as in experiment 1 (Fig. 4A). When the sample display contained black shapes, the test display always contained a shape probe that matched a shape in the sample display with a 50% probability (Fig. 4B). Given the purpose of this experiment, only set size 2 colored-shape displays with shape probes and set size 2 black-shape displays were analyzed. Set size 2 colored-shape displays with color probes served as filler trials to ensure that both features of each object in the sample display were encoded. To increase task difficulty and ensure that observers attended to the displays, displays containing four black shapes were also included as filler trials. Filler trials were not analyzed. This experiment thus contained five conditions: (1) a set size 2 colored-shape display with shapes probed (experimental condition), (2) the same display with colors probed (filler condition), (3) a set size 2 black-shape display (experimental condition), (4) a set size 4 black-shape display (filler condition), and (5) a fixation display. As in experiments 1 and 2, the presentation order of the different display types was pseudorandom and balanced within a run.
Each observer was tested with two runs, each containing 15 trials per condition and lasting 8 min and 15 s.

Figure 4.

Example trials from experiment 3. There were two types of experimental trials. In A, observers viewed two colored shapes in the sample display and, after a brief delay, judged in the test display whether a particular color or shape was present in the sample display. In B, observers viewed two black shapes in the sample display and judged whether a particular shape was present in the sample display. A and B thus contained the same number of objects, but differed in the amount of feature information observers encoded into VSTM.

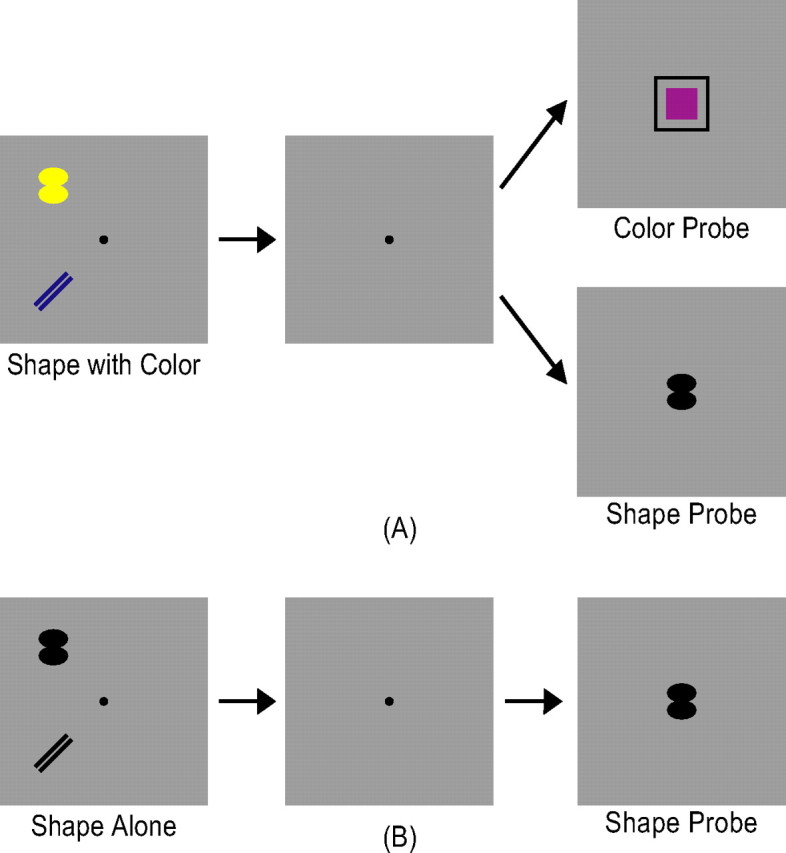

In experiment 4, to further distinguish between the object account and the feature account, the reverse of the experiment 3 manipulation was used: the number of object features was kept constant while the number of objects varied between displays. The mushroom-like objects used in experiments 1 and 2 were used here. Observers were asked to remember the color of a mushroom cap and the shape of a mushroom stem. The cap and the stem could either be attached, forming parts of one object (Fig. 6, top), or detached and presented at different spatial locations, forming two different objects (Fig. 6, bottom) [see Xu and Chun (2006), their experiment 2, for a similar manipulation with two shape features]. To facilitate object individuation and prevent grouping when objects appeared next to each other, eight dark squares marking all possible object locations were always present in the display (Xu and Chun, 2006) (Y. Xu, unpublished observation). The shape of the mushroom cap (always half-moon shaped) and the color of the mushroom stem (always black) never changed between the sample and test displays and were task irrelevant. Thus, the relevant color and shape features could appear either on one object or on two different objects. The cap color was one of the seven distinctive colors used in experiments 1 and 2. The stem shape was one of four distinctive shapes [three of which were used in experiments 1 and 2 (Fig. 2); the fourth is shown in Fig. 6]. The test display contained either a colored mushroom cap (color probe) or a black mushroom stem (shape probe) that matched the relevant color or shape in the sample display with a 50% probability (Fig. 6). To increase task difficulty and ensure that observers attended to the displays, displays containing three attached mushrooms (totaling three objects) or three detached mushrooms (totaling six objects) were also included as filler trials. Filler trials were not analyzed.
This experiment thus contained four conditions: (1) a one-object condition, containing one mushroom cap attached to one mushroom stem, (2) a two-object condition, containing one mushroom cap and one mushroom stem detached, (3) a filler condition, containing either three pairs of attached mushroom caps and stems or three pairs of detached mushroom caps and stems, and (4) a fixation condition. As in the preceding experiments, the presentation order of the different trial types was pseudorandom and balanced in a run. Each observer was tested with two runs, each containing 15 trials per condition and lasting 8 min and 15 s.

Figure 6.

Example trials from experiment 4. There were two types of experimental trials. In A, observers viewed in the sample display one object containing a colored cap attached to a black stem. In B, observers viewed in the sample display two objects, one containing a colored cap and the other containing a black stem. In both conditions, after a brief delay, observers judged in the test display whether a particular cap color or stem shape was present in the sample display. A and B thus contained the same number of features, but different numbers of objects. To facilitate object individuation and prevent grouping when objects appeared next to each other, eight dark squares, marking all possible object locations, were always present in the display.

Localizer scans.

As in a previous study (Xu and Chun, 2006), to define the superior IPS region of interest (ROI) in each experiment, a VSTM color experiment with a design identical to that of the main experiments was conducted. A given sample display contained 2, 3, 4, or 6 colored squares around the central fixation (supplemental Fig. 1A, available at www.jneurosci.org as supplemental material). The probe color in the test display either matched the color at the same location in the sample display (no-change trials; supplemental Fig. 1A, top, available at www.jneurosci.org as supplemental material) or was a color present elsewhere in the sample display (change trials; supplemental Fig. 1A, bottom, available at www.jneurosci.org as supplemental material). Seven colors (red, green, blue, cyan, yellow, white, and magenta) were used. As in the main experiments, all displays subtended 13.7° × 13.7° and were presented on a light gray background. Each colored square subtended 2.0° × 2.0°. Each observer was tested with two runs, each containing 12 trials for each display set size and lasting 5 min and 12 s.
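The change/no-change logic of the localizer probe can be sketched as follows. The function name, and the assumption that colors do not repeat within a localizer sample display, are hypothetical (the Methods state the no-repeat rule only for the main experiments). Because a change probe reuses a color from another location, its color is always familiar, so detecting a change requires remembering which color appeared where.

```python
import random
from typing import List, Tuple

# Localizer colors listed in the Methods.
LOCALIZER_COLORS = ["red", "green", "blue", "cyan", "yellow", "white", "magenta"]


def make_localizer_trial(set_size: int, change: bool, rng: random.Random) -> Tuple[List[str], int, str]:
    """Return (sample colors by location, probed location, probe color).

    No-change: the probe repeats the color at the probed location.
    Change: the probe shows a color taken from a different sample location.
    Assumes (hypothetically) that colors are not repeated within a sample display.
    """
    if change and set_size < 2:
        raise ValueError("change trials need at least two sample colors")
    sample = rng.sample(LOCALIZER_COLORS, set_size)
    probe_loc = rng.randrange(set_size)
    if change:
        elsewhere = [c for i, c in enumerate(sample) if i != probe_loc]
        probe_color = rng.choice(elsewhere)
    else:
        probe_color = sample[probe_loc]
    return sample, probe_loc, probe_color
```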

In experiment 3, to evaluate attention-related brain activations, the lateral occipital complex (LOC) and inferior IPS ROIs were also localized in each observer, in addition to the superior IPS ROI. To define these ROIs, as in the study by Xu and Chun (2006), observers viewed blocks of black object images and blocks of noise images (supplemental Fig. 1B, available at www.jneurosci.org as supplemental material). Each object image contained six black shapes, created by the same algorithm used to generate the displays in experiment 3 (supplemental Fig. 1B, top, available at www.jneurosci.org as supplemental material). This procedure ensured that only brain regions involved in processing the types of visual objects used in the VSTM experiment were localized (Xu and Chun, 2006). Each image was presented for 750 ms, followed by a 50 ms blank interval before the next image appeared. To ensure attention to the displays, observers fixated at the center and detected a slight spatial jitter, which occurred randomly in one out of every 10 images. Each observer was tested with two runs, each containing 160 black object images and 160 noise images and lasting 4 min and 40 s. Displays used in this localizer scan had the same spatial extent as those in the main experiments.

In experiment 4, because only the superior and the inferior IPS ROIs were examined and because the inferior IPS does not encode object identity (Xu and Chun, 2006) (Y. Xu, unpublished observation), the same inferior IPS localizer as used in experiment 3 was used to localize the inferior IPS ROI in this experiment.

Because the fMRI data analysis software could accurately align brain images acquired from the same observer in different scan sessions, localizer scans were not always acquired in the same scanning session as the main experimental scans. This usually happened when the same localizer scans had already been acquired from the observer in a different study.

fMRI methods

Observers lay on their backs inside a Siemens (Erlangen, Germany) Trio 3T scanner and viewed, through a mirror, displays projected by an LCD projector onto a screen at the head of the scanner bore. Stimulus presentation and behavioral response collection were controlled by an Apple (Cupertino, CA) PowerBook G4 running MATLAB with Psychtoolbox extensions (Brainard, 1997; Pelli, 1997). Standard protocols were followed to acquire the anatomical images. A gradient echo pulse sequence (echo time, 25 ms; flip angle, 90°; matrix, 64 × 64) was used, with a repetition time (TR) of 2.0 s in the blocked object and noise image runs and a TR of 1.5 s in the event-related VSTM runs. Twenty-four 5-mm-thick (3.75 mm × 3.75 mm in-plane, 0 mm skip) axial slices parallel to the AC–PC line were collected.

Data analysis

fMRI data were analyzed using BrainVoyager QX (Brain Innovation, Maastricht, Netherlands). Data preprocessing included slice acquisition time correction, 3D motion correction, linear trend removal, and Talairach space transformation (Talairach and Tournoux, 1988).

A multiple regression analysis was performed separately for each observer on the data acquired in the color VSTM task. The regression coefficient for each set size was weighted by that observer's behavioral K estimate for that set size (Todd and Marois, 2004). The superior IPS ROI was defined as the voxels that showed significant activation in the regression analysis (false discovery rate, q < 0.05) and whose Talairach coordinates matched those reported previously (Todd and Marois, 2004). When activation in the superior IPS was extensive, only 20 (1 × 1 × 1 mm³) voxels around the reported Talairach coordinates were selected. As in the previous study by Xu and Chun (2006), the LOC ROI in experiment 3 and the inferior IPS ROI in experiments 3 and 4 were defined as regions in the ventral and lateral occipital cortex and in the inferior IPS, respectively, whose activations were higher for objects than for noise images (false discovery rate, q < 0.05). Example ROIs are shown in supplemental Figure 1C, available at www.jneurosci.org as supplemental material.
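The section does not specify which false-discovery-rate procedure was applied. Assuming the standard Benjamini–Hochberg step-up procedure, thresholding voxelwise p-values at q < 0.05 works as in the sketch below; it is an illustration of the technique, not BrainVoyager's implementation, and the function name is hypothetical.

```python
from typing import List, Sequence


def bh_reject(p_values: Sequence[float], q: float = 0.05) -> List[int]:
    """Indices of tests (e.g., voxels) declared significant by the Benjamini-Hochberg step-up procedure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices sorted by ascending p
    k = 0  # largest rank whose p-value lies at or below its BH line q * rank / m
    for rank, idx in enumerate(order, start=1):
        if p_values[idx] <= q * rank / m:
            k = rank
    return sorted(order[:k])  # every test ranked at or below k is rejected
```

Because the procedure steps up from the largest qualifying rank, a voxel can survive even if its own p-value exceeds its rank-specific line, provided a higher-ranked p-value falls under its line.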

These ROIs were overlaid onto the data from the main VSTM experiments, and time courses were extracted for each observer. As in previous studies (Kourtzi and Kanwisher, 2000; Todd and Marois, 2004; Xu and Chun, 2006), these time courses were converted to percent signal change for each stimulus condition by subtracting the corresponding value for the fixation trials and then dividing by the fixation value. Following previous convention (Todd and Marois, 2004; Xu and Chun, 2006), peak responses were derived by collapsing the time courses across all conditions and determining the time point of greatest signal amplitude in the averaged response. This was done separately for each observer in each ROI. The resulting peak responses were then averaged across observers.
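A minimal sketch of these two steps, assuming each time course is a list of mean ROI signal values sampled at the same TRs and that every condition shares the fixation-trial baseline; the result is multiplied by 100 to express it in percent. Function names are hypothetical.

```python
from typing import Dict, List


def percent_signal_change(condition: List[float], fixation: List[float]) -> List[float]:
    """(condition - fixation) / fixation at each time point, expressed in percent."""
    return [100.0 * (c - f) / f for c, f in zip(condition, fixation)]


def peak_responses(psc: Dict[str, List[float]]) -> Dict[str, float]:
    """Value of each condition at the peak of the condition-averaged time course."""
    courses = list(psc.values())
    n_points = len(courses[0])
    average = [sum(tc[t] for tc in courses) / len(courses) for t in range(n_points)]
    peak_t = max(range(n_points), key=lambda t: average[t])  # one shared peak time point
    return {name: tc[peak_t] for name, tc in psc.items()}
```

Choosing a single time point from the average of all conditions, rather than each condition's own maximum, means the selection does not favor any particular condition.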

Results

Experiment 1: superior IPS representation of colored shapes in VSTM

In experiment 1, to test the prediction of the integration account that the superior IPS stores integrated whole objects, a set of similar shapes was conjoined with a set of dissimilar colors. Observers encoded 1, 2, 3, 4, or 6 mushroom-like objects presented around the central fixation in a sample display. After a brief blank delay, they judged whether the probe color or shape at the center of the test display was present or absent in the sample display (Fig. 2A). Color and shape were probed separately in random order. To evaluate behavioral performance, change detection accuracies were transformed into K estimates as a function of display set size for each feature by applying Cowan's K formula (Cowan, 2001). To evaluate fMRI responses, an ROI approach was used, and averaged fMRI responses were extracted from the superior IPS ROI. This ROI was defined as voxels whose activations correlated with VSTM capacity in a separate color VSTM task (supplemental Fig. 1A, available at www.jneurosci.org as supplemental material) and whose Talairach coordinates matched those reported previously (Todd and Marois, 2004).
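Cowan's formula estimates the number of items retained as K = N × (H − FA), where N is the display set size, H is the hit rate ("present" responses when the probe was present), and FA is the false-alarm rate ("present" responses when the probe was absent). A minimal sketch, with hypothetical function names, of applying it separately to each feature and set size:

```python
from typing import Iterable, Tuple


def hit_and_false_alarm_rates(trials: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Hit and false-alarm rates from (probe_present, responded_present) pairs.

    A hit is a 'present' response to a present probe; a false alarm is a
    'present' response to an absent probe.
    """
    hits = n_present = false_alarms = n_absent = 0
    for probe_present, said_present in trials:
        if probe_present:
            n_present += 1
            hits += int(said_present)
        else:
            n_absent += 1
            false_alarms += int(said_present)
    return hits / n_present, false_alarms / n_absent


def cowan_k(set_size: int, hit_rate: float, false_alarm_rate: float) -> float:
    """Cowan's K = N * (H - FA): estimated number of items retained."""
    return set_size * (hit_rate - false_alarm_rate)
```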

Overall, among set size 1–4 displays, Ks were greater for colors than for shapes (F(1,11) = 100.92; p < 0.001), with a significant interaction between feature and display set size (F(3,33) = 13.46; p < 0.001) (Fig. 3, left). For shapes, Ks did not vary significantly from set size 1 to set size 4 (F(3,33) = 1.89; p > 0.15). For colors, however, Ks varied significantly from set size 1 to set size 4 (F(3,33) = 32.40; p < 0.001), increasing from set size 1 to set size 3 and plateauing at set size 3 (F(1,11) = 636.29, p < 0.001; F(1,11) = 60.73, p < 0.001; and F < 1, respectively, for the differences between set sizes 1 and 2, 2 and 3, and 3 and 4). These results indicate that VSTM capacity was ∼1 for shapes and ∼3 for colors.

Figure 3.

Results of experiments 1 and 2 (with within-subject SEs). A, Behavioral results. B, Superior IPS fMRI results. Behavioral results from experiment 1 indicated that VSTM capacity was approximately one for shapes and three for colors. Peak superior IPS responses were correlated with Ks for colors in both experiments 1 and 2, consistent with both the object account and the feature account as depicted in Figure 1.

Ks for shapes dropped significantly between set sizes 4 and 6 (F(1,11) = 10.64; p < 0.01), whereas those for colors did not vary (F(1,11) = 1.04; p > 0.32). Because only approximately one shape could be retained in VSTM, the probability of having retained the probed shape, and thus of correctly detecting its presence in the test display, was only ∼17% (one in six) for set size 6 displays. Meanwhile, to decide that a particular shape was absent from the display, observers would always have to guess. The probability of responding correctly to shape probes in set size 6 displays was thus extremely low. To increase overall task performance, observers might have been motivated to abandon shapes altogether and encode only colors from set size 6 displays. This possible switch of strategy could account for the observed behavioral response pattern, although it did not seem to affect fMRI responses in the superior IPS, as reported below.
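The argument above can be made concrete with a deliberately simple guessing model. This sketch is hypothetical and not fitted to the data: it assumes half of the probes are present and half absent, that a present probe is detected whenever the probed shape was retained (otherwise the observer guesses), and that an absent probe can be rejected with certainty only when every sample shape was retained (otherwise the observer guesses). The function names and the 50% guessing rate are assumptions.

```python
from fractions import Fraction


def p_probed_shape_stored(set_size: int, k: int = 1) -> Fraction:
    """Chance that the probed sample shape is among the k shapes retained."""
    return Fraction(min(k, set_size), set_size)


def expected_shape_accuracy(set_size: int, k: int = 1,
                            guess_present: Fraction = Fraction(1, 2)) -> Fraction:
    """Expected accuracy on shape probes under the simplified guessing model."""
    p = p_probed_shape_stored(set_size, k)
    acc_present = p + (1 - p) * guess_present          # remembered, or a lucky guess
    acc_absent = Fraction(1) if k >= set_size else 1 - guess_present
    return (acc_present + acc_absent) / 2              # present and absent equally likely


if __name__ == "__main__":
    print(float(p_probed_shape_stored(6)), float(expected_shape_accuracy(6)))
```

With one shape retained out of six, this model gives a detection probability of 1/6 and an overall accuracy of 13/24 (about 54%), barely above the 50% chance level.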

In the superior IPS, peak fMRI activation was significantly modulated by display set size (F(4,40) = 13.22; p < 0.001) (Fig. 3, right): it increased from set size 1 to set size 3 and plateaued at set size 3 (F(1,11) = 22.97, p < 0.01; F(1,11) = 11.94, p < 0.01; F < 1; and F < 1, respectively, for the differences between set sizes 1 and 2, 2 and 3, 3 and 4, and 4 and 6). In addition, fMRI activation in this brain area was significantly correlated with Ks for colors (F(1,3) = 36.86; p < 0.01). Compared with the predictions illustrated in Figure 1, these results are inconsistent with the integration account, according to which the superior IPS represents only whole, integrated objects whose attended features are both successfully retained. Rather, the response pattern is consistent with both the object account and the feature account.
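The reported F(1,3) has the degrees of freedom expected for a correlation computed across the five set-size means (n − 2 = 3). Assuming the test was run that way, the sketch below shows the relation between Pearson's r and F(1, n − 2) = r²(n − 2)/(1 − r²). The function name is hypothetical, and the values in the usage line are arbitrary illustrations, not data from this study.

```python
import math
from typing import Sequence, Tuple


def correlation_f(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """Pearson r across paired values and the equivalent F(1, n - 2) statistic."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    r = sxy / math.sqrt(sxx * syy)
    f = r * r * (n - 2) / (1 - r * r)
    return r, f


if __name__ == "__main__":
    # Arbitrary illustrative values for five set sizes (not the study's data).
    print(correlation_f([1, 2, 3, 4, 5], [2, 4, 5, 4, 5]))
```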

One may argue that, in addition to the three accounts outlined earlier, the superior IPS may store only object colors when colors and shapes are retained together (the color-only account). Although this account is also consistent with the fMRI results from this experiment, it is unlikely. First, Xu and Chun (2006) showed that the superior IPS can store both simple and complex object shapes. It is unclear what would prevent this brain area from storing object shapes in the presence of object colors. If anything, one would expect more, rather than fewer, neural resources to be recruited to support the harder feature (object shapes) in the present VSTM task. Second, in a post hoc analysis, trials in which shapes were probed were selected and separated into two groups according to whether or not the test shape matched one of the sample shapes. Repeated presentation of the same visual stimulus has been shown to result in decreased fMRI responses in brain areas representing that stimulus (Wiggs and Martin, 1998; Henson, 2003; Grill-Spector et al., 2006). If shapes were encoded together with colors in the superior IPS, then repeating a sample shape in the test display should result in a lower fMRI response than presenting a shape that was not in the sample display. This repetition effect was present and significant for set size 1 displays (F(1,11) = 6.48; p < 0.05), showed a trend for set size 2 displays (F(1,11) = 2.01; p = 0.18), and diminished as display set size increased from 3 to 6 (F values < 1). The interaction between this repetition effect and display set size was marginally significant (F(4,44) = 2.13; p = 0.093). Because of VSTM capacity limitations, the likelihood that observers did not retain a particular sample shape in VSTM, making it look “new” when it reappeared in the test display, increased with display set size. This could explain why the repetition effect decreased as display set size increased.
Moreover, for repeat trials, the amount of stimulus overlap between the sample and the test display decreased as display set size increased, from 100% for set size 1 to 17% for set size 6, which could also modulate the magnitude of this repetition effect. Nevertheless, the significant repetition effect for set size 1 displays and a trend for set size 2 displays indicated that shapes were encoded together with colors in the superior IPS, and argued against the notion that the superior IPS only stores colors when colors and shapes are retained together in VSTM.
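Two quantities in this argument are simple ratios. Assuming a single shape is shown at test, a repeated shape re-presents 1/N of an N-item sample display, which reproduces the 100% to 17% range given above. Under the further simplifying assumption (ours, not the study's) that about one shape is held in VSTM and that it is equally likely to be any of the N sample shapes, the chance that the repeated test shape was actually in memory is min(1, K/N).

```python
SET_SIZES = (1, 2, 3, 4, 6)
SHAPE_K = 1.0  # assumed: roughly one similar shape retained, per the behavioral Ks


def repeat_overlap(set_size: int) -> float:
    """Fraction of the sample display re-presented by one repeated test shape."""
    if set_size < 1:
        raise ValueError("set size must be at least 1")
    return 1.0 / set_size


def p_shape_retained(set_size: int, shape_k: float = SHAPE_K) -> float:
    """Chance the repeated shape was in VSTM if shape_k shapes are held, chosen uniformly."""
    if set_size < 1:
        raise ValueError("set size must be at least 1")
    return min(1.0, shape_k / set_size)


for n in SET_SIZES:
    print(f"set size {n}: overlap {repeat_overlap(n):.0%}, "
          f"P(shape retained) {p_shape_retained(n):.0%}")
```

With K close to 1, both factors fall as 1/N, so under these assumptions the two explanations for the shrinking repetition effect cannot be separated in this design.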

Experiment 2: the effect of explicit feature binding on superior IPS representation of colored shapes in VSTM

Results from experiment 1 indicated that the superior IPS does not represent integrated objects. Because color and shape were probed separately, however, observers might have been encouraged to encode colors and shapes independently, rather than form integrated object representations in the superior IPS. That is, the superior IPS may be capable of representing integrated whole objects when such integration is explicitly required.

To test this possibility, this experiment repeated the design of experiment 1, but instead of probing colors and shapes separately in the test display, it probed conjunctions of color and shape. The probe was either a colored shape present in the sample display or one consisting of the color of one object and the shape of another object in the sample display (Fig. 2B). Observers were asked to judge whether the conjunction probe was present or absent in the sample display. Here, only VSTM for explicit color and shape conjunctions, rather than independent VSTM for color and shape features, would lead to successful task performance. Because of the nature of the task, only set sizes 2, 3, 4, and 6 were used. This experiment was performed in the same scanning session as experiment 1. The order of the two experiments was counterbalanced across observers, and experiment order did not affect the results.

Overall, Ks for color and shape conjunctions showed a marginally significant effect of display set size (F(3,33) = 2.63; p < 0.07) (Fig. 3, left), with a trend for Ks to decrease as display set size increased from 2 to 6 (F < 1; F(1,11) = 2.72, p > 0.12; and F < 1, respectively, for the differences between set sizes 2 and 3, 3 and 4, and 4 and 6). Comparing this experiment with experiment 1, Ks for conjunctions fell between the Ks for color and shape features probed alone. Namely, Ks were lower for conjunctions than for colors (F(1,11) = 40.57; p < 0.001), with a significant interaction between conjunction versus color and display set size (F(3,33) = 6.75; p < 0.01), and Ks were higher for conjunctions than for shapes (F(1,11) = 6.74; p < 0.05), with no interaction between conjunction versus shape and display set size (F < 1). Thus, when color and shape were retained together, although Ks for explicit color and shape conjunctions were higher than Ks for the shape feature, benefiting from the higher Ks for the color feature, the overall K pattern across display set sizes mirrored that for the shape feature.

Although behavioral results from this experiment and experiment 1 indicated that only about one whole object could be retained in VSTM, peak fMRI activation in the superior IPS was still significantly modulated by display set size (F(3,33) = 4.15; p < 0.05) (Fig. 3, right), increasing from set size 2 to 3 and plateauing at set size 3 (F(1,11) = 12.27, p < 0.01; F < 1; and F < 1, respectively, for the differences between set sizes 2 and 3, 3 and 4, and 4 and 6). Comparing superior IPS responses between experiments 1 and 2, there was neither a significant difference between the two experiments (F(1,11) = 2.17; p > 0.16) nor an interaction between experiment and display set size in peak fMRI activation (F < 1).

These results thus replicated those of experiment 1 and showed that, contrary to the integration account, the superior IPS does not store integrated whole objects, even when feature binding is explicitly required. Rather, the response in this brain area reflects either the number of object features retained (two, three, four, four, and four features for set sizes 1, 2, 3, 4, and 6, respectively) or the total number of (whole plus partial) objects retained (one, two, three, three, and three objects for set sizes 1, 2, 3, 4, and 6, respectively).
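The feature and object counts in parentheses follow from simple arithmetic on the behavioral capacities of roughly three colors and one shape reported for these stimuli. The sketch below makes that arithmetic explicit under one simplifying assumption of ours: the object whose shape is retained is also among the objects whose colors are retained, so whole objects equal min(colors, shapes) and whole-plus-partial objects equal max(colors, shapes).

```python
COLOR_CAPACITY = 3  # approximate number of dissimilar colors retained
SHAPE_CAPACITY = 1  # approximate number of similar shapes retained


def account_predictions(set_size: int) -> dict[str, int]:
    """Quantity each account says the superior IPS should track at a given set size."""
    colors = min(set_size, COLOR_CAPACITY)
    shapes = min(set_size, SHAPE_CAPACITY)
    return {
        # integration account: objects with both features retained
        "integration": min(colors, shapes),
        # object account: whole plus partial objects
        "object": max(colors, shapes),
        # feature account: total features retained
        "feature": colors + shapes,
    }


for n in (1, 2, 3, 4, 6):
    print(n, account_predictions(n))
```

Only the integration account predicts a flat profile across set sizes; the object and feature accounts both rise to set size 3 and then plateau, which is why experiments 1 and 2 alone cannot separate them.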

In previous studies (Xu and Chun, 2006; Todd and Marois, 2004, 2005), because each object in the display contained a single feature and the task required judgments only about that feature, superior IPS response was strongly correlated with behavioral K measures. The conjunction probe in this experiment, however, required observers to retain both the color and the shape of an object to perform the task successfully. Because only about one shape and three colors could be retained, behavioral performance was limited by the number of shapes retained. As display set size increased, the number of colors retained could still increase and contribute to the increase in superior IPS response, but these additional colors could not improve behavioral performance. Thus, whereas behavioral K measures asymptoted at around set sizes 1 and 2, superior IPS response asymptoted at set size 3, producing a dissociation between behavioral and neural measures that was not observed in previous studies. These results do not contradict previous findings; rather, they provide a better understanding of how multiple object features may be retained together in the superior IPS.

Experiment 3: retaining the same number of objects but different numbers of features in superior IPS

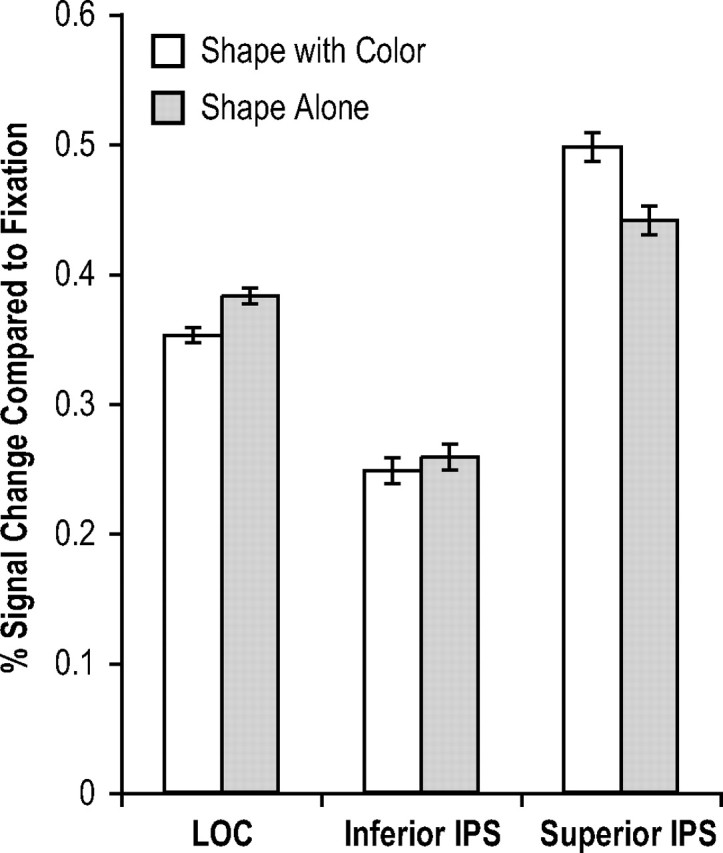

Although results from experiments 1 and 2 ruled out the integration account, it remained unclear whether the object account or the feature account better describes how object features are stored in the superior IPS during VSTM. To differentiate between these two accounts, this experiment varied the number of features that might be encoded while holding the number of objects constant between displays. Observers retained either two colored shapes in VSTM, with memory for colors and shapes probed separately as in experiment 1, or just two black shapes, with memory for shapes probed (Fig. 4). The experiment used the same set of dissimilar colors as the previous two experiments; however, instead of similar shapes, it used a set of dissimilar shapes that had a maximal VSTM capacity of approximately three (Xu and Chun, 2006). If the superior IPS represents the total number of objects encoded, as predicted by the object account, then responses in this brain area should be comparable whether observers retained two colored shapes or two black shapes. However, if the superior IPS represents the total amount of object feature information encoded, as predicted by the feature account, then the response in this brain area should be higher for the colored shapes (a total of four features retained) than for the black shapes (a total of only two features retained).
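The logic of this experiment reduces to two counts per display. The sketch below represents each display as a list of objects, each carrying its task-relevant features; both displays stay within the capacity of about three reported for these colors and shapes, so nothing is lost to capacity limits. The display encodings are our illustration of the design described above, not materials from the study.

```python
Display = list[set[str]]

# Two colored shapes: color and shape are both task relevant.
COLORED_SHAPES: Display = [{"color", "shape"}, {"color", "shape"}]
# Two black shapes: black is constant, so only shape is task relevant.
BLACK_SHAPES: Display = [{"shape"}, {"shape"}]


def object_count(display: Display) -> int:
    """Object account: number of objects carrying any task-relevant feature."""
    return sum(1 for obj in display if obj)


def feature_count(display: Display) -> int:
    """Feature account: total task-relevant features across objects."""
    return sum(len(obj) for obj in display)


for name, display in (("colored", COLORED_SHAPES), ("black", BLACK_SHAPES)):
    print(name, "objects:", object_count(display), "features:", feature_count(display))
```

Only the feature account predicts a difference (four versus two features), so a higher superior IPS response for the colored shapes would favor it.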

Because differences in task difficulty and attention allocation between conditions could potentially modulate brain responses, especially before superior IPS response reaches plateau, two measures were taken to guard against such effects. First, observers had to remember at most two colored shapes or two black shapes, a load below the maximal VSTM capacity of three for these objects. Task performance was thus at ceiling in both conditions, eliminating possible differences in task difficulty between the two. Second, to further assess differences in attention allocation between the two conditions, fMRI responses from two other brain areas were examined. If response differences in the superior IPS between the two conditions were caused by differences in overall attention allocation, then the same response differences should be observed in other brain areas involved in the VSTM task. For this purpose, in addition to the superior IPS, the LOC and the inferior IPS were also localized in each observer. The LOC participates in visual object shape processing and conscious object perception (Malach et al., 1995; Grill-Spector et al., 1998, 2000; Kourtzi and Kanwisher, 2000, 2001), whereas the inferior IPS has been linked to attention-related processing (Wojciulik and Kanwisher, 1999; Kourtzi and Kanwisher, 2000). In a VSTM task, Xu and Chun (2006) showed that, whereas the inferior IPS selects a fixed number of approximately four objects via their spatial locations, the LOC, together with the superior IPS, encodes the shape features of a subset of the selected objects in great detail. Thus, both the LOC and the inferior IPS participate in VSTM and are good candidate areas in which to examine attention-related responses for the purpose of the present experiment. These two brain areas were localized following the procedures described by Xu and Chun (2006).

The fMRI response for the black shapes was compared with that for the colored shapes when shape was probed. Thus, the critical comparison involved two displays that had the same shape probe but differed in the amount of feature information initially encoded. Trials in which colors were probed were treated as fillers, and their fMRI responses were not analyzed. Behavioral Ks for the number of object shapes retained in VSTM were at ceiling: 1.85 (SE, 0.04) and 1.91 (SE, 0.06) for the colored shapes and the black shapes, respectively, with no difference between the two (F < 1). K for the number of colors retained in the colored-shape condition was 1.80 (SE, 0.05), with no difference between this value and either shape K (F < 1 between the two features of the colored-shape condition; F(1,7) = 1.39, p > 0.27 between color in the colored-shape condition and shape in the black-shape condition). In the LOC, peak fMRI response was lower for the colored than for the black shapes (F(1,7) = 6.62; p < 0.05) (Fig. 5). This difference was not significant in the inferior IPS (F < 1) (Fig. 5). In contrast, in the superior IPS, peak fMRI response was higher for the colored than for the black shapes (F(1,7) = 6.61; p < 0.05) (Fig. 5). The interactions between display type and superior IPS versus LOC and between display type and superior IPS versus inferior IPS were both significant (F(1,7) = 14.50, p < 0.01; and F(1,7) = 9.44, p < 0.05, respectively) (time courses of the fMRI responses from all three ROIs are plotted in supplemental Fig. 2, available at www.jneurosci.org as supplemental material).
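The display-type × ROI interactions reported here compare differences between differences. In a fully within-subject 2 × 2 design, the interaction F with (1, n − 1) degrees of freedom equals the square of a one-sample t test on each observer's difference of differences, so with eight observers it carries df = (1, 7), as above. The function below is a standard-library sketch of that identity; the argument names are illustrative, and the analysis software used in the study is not described in this excerpt.

```python
import math
from statistics import mean, stdev


def interaction_f(roi_a_cond1, roi_a_cond2, roi_b_cond1, roi_b_cond2):
    """2 x 2 within-subject interaction F(1, n - 1) via per-observer contrasts.

    Each argument holds one value per observer (e.g., peak responses),
    in the same observer order across all four lists.
    """
    n = len(roi_a_cond1)
    if n < 2 or not (len(roi_a_cond2) == len(roi_b_cond1) == len(roi_b_cond2) == n):
        raise ValueError("need four equal-length lists with at least two observers")
    # Difference of differences for each observer: (A1 - A2) - (B1 - B2).
    contrasts = [(a1 - a2) - (b1 - b2) for a1, a2, b1, b2 in
                 zip(roi_a_cond1, roi_a_cond2, roi_b_cond1, roi_b_cond2)]
    sd = stdev(contrasts)
    if sd == 0:
        raise ValueError("contrasts have zero variance; F is undefined")
    # One-sample t against zero; its square is the interaction F.
    t = mean(contrasts) / (sd / math.sqrt(n))
    return t * t, (1, n - 1)
```

Framing the interaction as a single per-observer contrast makes explicit why its error term has only n − 1 degrees of freedom regardless of how many ROIs are measured.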

Figure 5.

fMRI results of experiment 3 (with within-subject SEs). Peak fMRI responses in the superior IPS were greater for shape with color than for shape alone. However, they did not differ in the inferior IPS and reversed direction in the LOC. These results indicate that the superior IPS represents the total amount of feature information stored in VSTM as predicted by the feature account, rather than the total number of objects retained as predicted by the object account.
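Within-subject SEs remove between-observer differences in overall response level before error bars are computed. The exact procedure used for this figure is not specified in this excerpt; the sketch below uses one common method, Cousineau's normalization with Morey's correction, as an assumed stand-in (Loftus and Masson's ANOVA-based method is a classic alternative).

```python
import math
from statistics import mean, stdev


def within_subject_se(data: list[list[float]]) -> list[float]:
    """Per-condition within-subject SEs (Cousineau normalization, Morey correction).

    data[i][j] is observer i's value in condition j.
    """
    n_subj = len(data)
    n_cond = len(data[0]) if data else 0
    if n_subj < 2 or n_cond < 2 or any(len(row) != n_cond for row in data):
        raise ValueError("need a rectangular table with >= 2 observers and >= 2 conditions")
    grand_mean = mean([v for row in data for v in row])
    # Remove each observer's overall level, then restore the grand mean.
    normalized = []
    for row in data:
        offset = grand_mean - mean(row)
        normalized.append([v + offset for v in row])
    # Morey's correction inflates the normalized variance by M / (M - 1).
    correction = math.sqrt(n_cond / (n_cond - 1))
    return [stdev([row[j] for row in normalized]) / math.sqrt(n_subj) * correction
            for j in range(n_cond)]
```

If observers differ only by a constant offset, every within-subject SE is zero, which is the point of the normalization.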

Thus, neither the behavioral results nor the responses from the inferior IPS or the LOC indicated that remembering the colored shapes was more difficult or more attentionally demanding than remembering the black shapes. In fact, the LOC response was higher for the black than for the colored shapes. The higher superior IPS response for the colored shapes (four features retained) than for the black shapes (two features retained) therefore indicates that the superior IPS represents the total amount of feature information retained in VSTM, rather than the total number of objects. The feature account therefore seems to best describe how colors and shapes are retained together in this brain area.

Xu and Chun (2006) showed that the inferior IPS tracks the number of objects in a display up to approximately four via their spatial locations. Consistent with this finding, because both the colored-shape display and the black-shape display contained two objects, inferior IPS responses did not differ between these two conditions. LOC responses were greater for the black shapes than for the colored shapes. This could be because shape outlines were sharper for the black shapes than for the colored shapes, so LOC responses might reflect this difference in contour contrast, even though it did not affect behavioral performance.

Experiment 4: retaining different numbers of objects but the same number of features in superior IPS

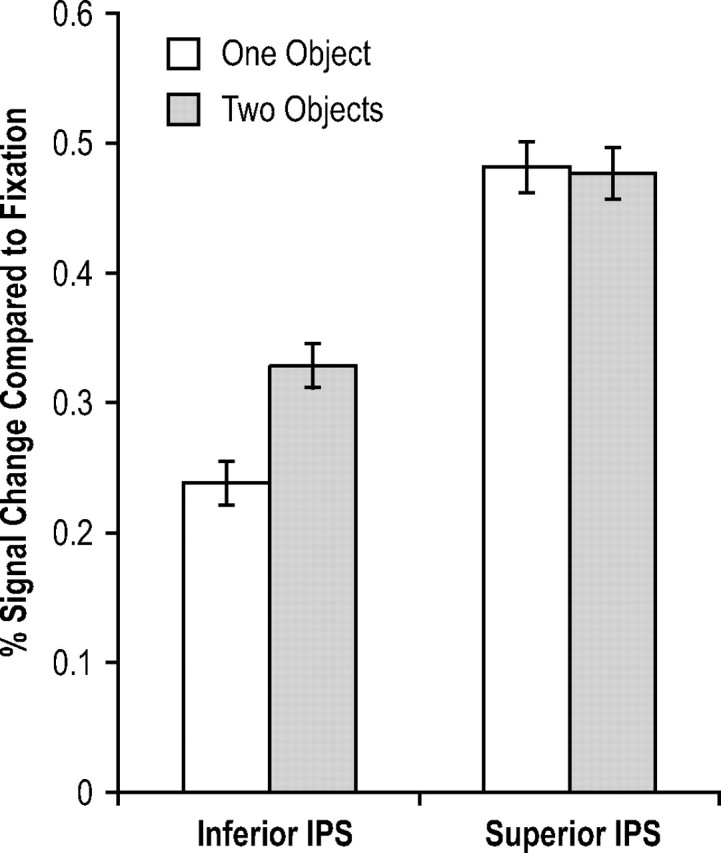

To further distinguish between the object account and the feature account of information storage in the superior IPS, this experiment varied the number of objects encoded while holding the number of object features constant between displays. Observers were asked to remember the color of a mushroom cap and the shape of a mushroom stem. The shape of the mushroom cap (always half-moon shaped) and the color of the mushroom stem (always black) never changed between the sample and test displays and were task irrelevant. The cap and the stem could either be attached, forming parts of one object, or detached and presented at different spatial locations, forming two separate objects (Fig. 6) [see Xu and Chun (2006), their experiment 2, for a similar manipulation with two shape features]. Thus, the same set of object features was distributed across different numbers of objects in the two display conditions. VSTM for color and shape was probed separately as in experiment 1. The seven dissimilar colors and the three dissimilar stem shapes used in experiments 1 and 2 were used here, with the addition of a new stem shape (Fig. 6). If the superior IPS represents the total number of objects encoded, as predicted by the object account, then the response in this brain area should be lower for one object than for two objects in the display. However, if the superior IPS represents the total amount of object feature information encoded, as predicted by the feature account, then the response in this brain area should be comparable between the two display conditions. Because the inferior IPS tracks the number of objects in a display up to approximately four via their spatial locations (Xu and Chun, 2006), this brain area should show a lower response for the one-object than for the two-object display. Inferior IPS response was therefore also examined in this experiment to verify that the experimental manipulation worked as intended.
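The predictions for this experiment can again be written as counts, now including the inferior IPS check. In the sketch below, an attached mushroom is one object carrying the cap color and the stem shape, and a detached mushroom is two objects, each carrying one of those features; the inferior IPS is modeled, following the description above, as tracking objects up to about four. The encodings and the use of four as a hard cutoff are illustrative assumptions.

```python
Display = list[set[str]]

ATTACHED: Display = [{"cap color", "stem shape"}]    # one object
DETACHED: Display = [{"cap color"}, {"stem shape"}]  # two objects
INFERIOR_IPS_LIMIT = 4  # approximate number of objects indexed by location


def predicted_counts(display: Display) -> dict[str, int]:
    """Quantity each region/account is assumed to track for one display."""
    objects = sum(1 for obj in display if obj)
    features = sum(len(obj) for obj in display)
    return {
        "superior IPS, object account": objects,
        "superior IPS, feature account": features,
        "inferior IPS": min(objects, INFERIOR_IPS_LIMIT),
    }


for name, display in (("attached", ATTACHED), ("detached", DETACHED)):
    print(name, predicted_counts(display))
```

This design is the mirror image of experiment 3: features are held at two while objects go from one to two, so the feature account predicts no superior IPS difference while the inferior IPS should still register the extra object.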

Behavioral Ks for the number of features retained in VSTM were at ceiling and were 1.92 (SE, 0.04) and 1.89 (SE, 0.05) for displays containing one and two objects, respectively, with no difference between the two (F < 1). In the inferior IPS, peak fMRI response was lower for one than for two objects (F(1,6) = 7.04; p < 0.05) (Fig. 7). This difference, however, was not significant in the superior IPS (F < 1) (Fig. 7). The interaction between display type and inferior versus superior IPS was significant (F(1,6) = 11.31; p < 0.05) (time courses of the fMRI responses from both ROIs are plotted in supplemental Fig. 3, available at www.jneurosci.org as supplemental material).

Figure 7.

fMRI results of experiment 4 (with within-subject SEs). Peak fMRI responses in the inferior IPS were greater for two objects than for one, but they did not differ in the superior IPS. These results indicate that whereas the inferior IPS tracks the number of objects, the superior IPS tracks the total amount of feature information retained in VSTM, consistent with the prediction of the feature account and not the object account.

Thus, whereas inferior IPS response reflected the number of objects encoded, as in the study by Xu and Chun (2006), superior IPS response reflected the total amount of feature information retained, independent of the number of objects these features came from. These results further support the feature account of information storage in the superior IPS.

Discussion

Previous fMRI studies reported that response in the superior IPS correlates strongly with the number of objects held in VSTM (Todd and Marois, 2004, 2005; Xu and Chun, 2006). Because each object contained a single feature in these studies, superior IPS response could reflect either the number of objects or the number of features successfully retained in VSTM. In addition to this ambiguity, it is also unknown how an object's color and shape may be retained together in VSTM in this brain area. Using an event-related fMRI design and an independent ROI-based approach, this study showed that the superior IPS holds neither integrated whole objects nor the total number of objects stored in VSTM. Rather, it represents the total amount of feature information retained in VSTM.

The ability to accumulate information acquired from different visual feature dimensions suggests that the superior IPS may be a flexible information storage device. Consistent with this idea, tasks involving numerical representations, which usually require the accumulation of input visual information, also activate the parietal cortex, especially areas around the IPS (Dehaene et al., 1999; Eger et al., 2003; Fias et al., 2003; Nieder and Miller, 2004; Piazza et al., 2004; Sawamura et al., 2002; Nieder, 2005). Likewise, decision processes relying on the accumulation of sensory information also elicit parietal activations (Eskandar and Assad, 1999; Platt and Glimcher, 1999; Shadlen and Newsome, 2001; Toth and Assad, 2002; Sugrue et al., 2004; Huk and Shadlen, 2005). Finally, multisensory integration, which combines information from different sensory modalities (e.g., vision and audition), activates parietal cortex as well (Bremmer et al., 2001; Kitada et al., 2006) (for review, see Macaluso and Driver, 2005). Whether the same or different parietal areas participate in VSTM and these other cognitive tasks, however, awaits additional research.

Todd and Marois (2004) and Xu and Chun (2006) reported that the superior IPS participates in both VSTM encoding and maintenance. Because VSTM maintenance sustains an already formed representation rather than creating a new one, the same object representation should be present during both VSTM encoding and maintenance. Thus, although this study used only short VSTM retention intervals and, given the sluggishness of the fMRI response, could not separate encoding-related from maintenance-related responses, the superior IPS response pattern obtained here should generalize to both VSTM encoding and maintenance if an extended delay period were used.

Task difficulty and superior IPS response

Experiment 3 attempted to match task difficulty between the two conditions by making the task easy enough to produce ceiling performance, and neither of two other brain areas involved in the VSTM task showed a higher response for the colored shapes. Nevertheless, one could argue that retaining two colored shapes might still have been more effortful than retaining two black shapes, with different brain areas showing different sensitivities to differences in task difficulty. If so, response differences in the superior IPS could reflect differences in task difficulty rather than information storage in this brain area. In experiment 4, task difficulty was also matched by making the task easy enough to produce ceiling performance. However, one could similarly argue that retaining two objects might still have been more effortful than retaining one because of object-based encoding (Luck and Vogel, 1997; Xu, 2002a, 2006). In fact, inferior IPS response was higher for two objects than for one in this experiment. Yet there was no superior IPS response difference between these two conditions. Together, task difficulty is unlikely to account for the superior IPS results from experiments 3 and 4.

In both experiments 1 and 2, superior IPS response did not increase linearly with task difficulty as display set size increased. Rather, it plateaued at set size 3, the set size at which the number of features retained in VSTM reached its maximum. Similar results were obtained by Todd and Marois (2004, 2005) and by Xu and Chun (2006). One could argue that superior IPS response simply saturates at some display set size as task difficulty increases. However, because its response consistently saturates at the set size corresponding to the number of features retained in VSTM, what drives the response in this brain area is unlikely to be general task difficulty, but rather the amount of feature information retained in VSTM, as shown in the present study.

The object benefit in VSTM

To explain the behavioral VSTM object benefit, the finding that all of an attended object's features may be automatically encoded together into VSTM, Luck and Vogel (1997) argued that only integrated objects are stored in VSTM (see also Irwin and Andrews, 1996; Vogel et al., 2001). Later studies, however, argued that different object features are retained in independent VSTM stores and that the VSTM object benefit results from features not competing for the same store (Wheeler and Treisman, 2002; Xu, 2002b). The present fMRI results challenge these behavioral accounts in three ways and bring new understanding to the object benefit in VSTM.

First, although the superior IPS response correlates most strongly with VSTM capacity, it does not seem to hold integrated object representations. Second, because the superior IPS stores the total amount of feature information retained in VSTM, different object features are not always stored independently. This could explain the weak object benefit reported by Olson and Jiang (2002), who found in their behavioral study that both the number of objects and the number of features affect VSTM capacity. Third, because observers could retain only approximately three colors and one shape together in VSTM when similar shapes and dissimilar colors were conjoined in experiments 1 and 2, not all features of an attended object could be automatically stored together in VSTM. Because previous studies used only salient and highly discriminable features, and similar VSTM capacities were obtained for single object features (Luck and Vogel, 1997), the different object features in those studies were likely matched in similarity/complexity, resulting in the VSTM object benefit reported in the behavioral literature. The features of real-world objects are less likely to be so matched. Consequently, the object benefit may not apply to the encoding of object features in VSTM in general.

The binding problem

Understanding how multifeature objects are stored in the superior IPS not only broadens our knowledge of the neural mechanisms supporting VSTM, but also provides new insights into the well-known “binding problem” in the brain. Because different object features are initially processed by different visual areas, understanding how they are properly bound to form the percept of a coherent multifeature object has been the pursuit of decades of brain research (for review, see Robertson, 2003; Humphreys and Riddoch, 2006). The influential work by Treisman et al. (Treisman and Gelade, 1980; Treisman and Schmidt, 1982; Treisman, 1998) showed that focused visual attention to objects is essential for feature binding, which links different object features at the same spatial location to each other. The parietal cortex has been shown to play an important role in achieving successful feature binding. In patient studies, bilateral or unilateral damage to the parietal cortex can lead to severe impairment in feature binding (Cohen and Rafal, 1991; Friedman-Hill et al., 1995; Humphreys et al., 2000). In brain-imaging studies, activations in parietal areas have been correlated with feature binding (Corbetta et al., 1995; Wojciulik and Kanwisher, 1999; Shafritz et al., 2002). For example, after controlling for task difficulty and eye movements, Shafritz et al. (2002) observed higher activation in the right superior parietal cortex and the right IPS when feature binding demand was high than when it was low. Synchronized neural firing between features of the same object (Singer and Gray, 1995) has been proposed as a plausible mechanism for feature binding. Damage to the spatial attention mechanisms in the parietal cortex is believed to disrupt feedback signals to early visual cortex that normally help produce synchronized neuronal firing between relevant visual features (Humphreys and Riddoch, 2006).

Although the role of the parietal cortex in feature binding has been highlighted in previous research, object features are generally assumed to be represented not in the parietal cortex itself but in the ventral visual cortex, where they are first encoded and analyzed. The present results, however, suggest a different plausible neural solution to the binding problem. Instead of using synchronized neuronal firing to link visual features in different visual areas, different object features may simply be encoded and stored together by neurons in the superior IPS, achieving the binding between two arbitrary visual features. At present, however, it is unclear how object features are represented together in the superior IPS, whether as bound entities or as randomly scattered features. Moreover, parietal areas outside the superior IPS may be critical for feature binding and would need to be included in this framework. Nevertheless, the present results provide new insights into the binding problem and should enrich our understanding of how multifeature objects are represented in the brain.

Footnotes

Although the IPS region described by Todd and Marois (2004, 2005) encompassed both the inferior and the superior IPS, the mean Talairach coordinates for this brain region reported by Todd and Marois (2004) were located in the superior IPS. In fact, the superior IPS region studied in Xu and Chun (2006) was localized by applying the Talairach coordinates reported in Todd and Marois (2004). Thus, although the inferior and the superior IPS regions were both activated in the studies by Todd and Marois (2004, 2005), the center of this activation, or the region that most strongly correlated with behavioral performance, is located in the superior IPS, consistent with findings from the study by Xu and Chun (2006).

If the superior IPS represents the total amount of feature information retained in VSTM, its response should also be similar for four features distributed over two objects (the two-colored-shape condition) and four features distributed over four objects (the four-black-shape filler condition). This experiment, however, was not optimally designed to test this prediction. First, because maximal VSTM capacity for the shape features used was about three [Xu and Chun (2006), their experiment 4], only about three shapes would be retained in VSTM when four shapes were present in the display. Thus, different numbers of features were retained in the two conditions (four for the two-colored-shape displays and three for the four-shape displays). Second, in pilot testing, VSTM performance for colors was found to be slightly higher than that for shapes, suggesting that a single shape feature carried a higher information load than a single color feature. The total amount of feature information extracted from the two displays thus might not be matched exactly, in addition to the different numbers of features extracted from each display. Third, selecting four objects for VSTM encoding could be more attentionally demanding than selecting just two. Overall task difficulty therefore was not matched between these two conditions, which could affect superior IPS response before it reaches plateau. Because of these complications, a direct comparison between the two-colored-shape and the four-shape displays would be hard to interpret. A comparison of this nature was performed in experiment 4 instead, after all of the factors listed above were accounted for.
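The first complication is capacity arithmetic. Assuming about three colors and three shapes can be held for these dissimilar stimuli, and treating each dimension's capacity separately as the footnote's own arithmetic does, the two-colored-shape display yields four retained features while the four-black-shape display yields only three. The sketch deliberately ignores the second complication, that a shape may carry more information than a color; the capacities and display encodings are illustrative assumptions.

```python
CAPACITY = {"color": 3, "shape": 3}  # approximate per-dimension VSTM capacities (assumed)


def retained_features(display: list[set[str]]) -> int:
    """Features retained when each feature dimension is capped at its capacity."""
    per_dimension: dict[str, int] = {}
    for obj in display:
        for dim in obj:
            per_dimension[dim] = per_dimension.get(dim, 0) + 1
    return sum(min(count, CAPACITY[dim]) for dim, count in per_dimension.items())


two_colored = [{"color", "shape"} for _ in range(2)]
four_black = [{"shape"} for _ in range(4)]
print(retained_features(two_colored), retained_features(four_black))  # 4 3
```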

The purpose of this experiment was to show that the superior IPS responds similarly to the same amount of feature information distributed across different numbers of objects. Although, in theory, the same hypothesis could be tested by comparing brain responses to the three attached and the three detached mushroom objects (the filler trials), in practice, because of object-based encoding in VSTM (Xu, 2002a, 2006), more feature information would be encoded for the attached than for the detached mushroom objects. Thus, a proper test of the central hypothesis cannot be made by comparing these two conditions. The effects of grouping and object-based encoding on brain responses are addressed in detail in a separate study (Xu and Chun, in press). For these reasons, this experiment compared brain responses only for one pair of attached and one pair of detached mushroom objects.

The feature-binding parietal areas reported by Shafritz et al. (2002) showed higher activation for color and shape binding than for shape alone. Although the superior IPS response in experiment 3 was consistent with this result, if this were the same area reported by Shafritz et al. (2002), its response in experiments 1 and 2 should have reflected the number of successful bindings (i.e., integrated objects) retained in VSTM, rather than the total amount of object features retained. In addition, the superior IPS Talairach coordinates did not match those reported by Shafritz et al. (2002). Finally, the response of the binding area reported by Shafritz et al. (2002) did not correlate with behavioral VSTM capacity, unlike the superior IPS response profiles reported in this study, in the study by Xu and Chun (2006), and in the studies by Todd and Marois (2004, 2005). Together, the areas identified by Shafritz et al. (2002) appear to be distinct from the superior IPS area examined in this study.

This work was supported by National Science Foundation Grants 0518138 and 0719975 (Y.X.) and National Institutes of Health Grant EY014193 (Marvin M. Chun). I am greatly indebted to Marvin M. Chun for his valuable comments on previous drafts of this manuscript and to Jenika Beck for assistance with MRI scanning.

References

- Brainard DH. The psychophysics toolbox. Spatial Vision. 1997;10:433–436. [PubMed] [Google Scholar]

- Bremmer F, Schlack A, Shah NJ, Zafiris O, Kubischik M, Hoffmann K, Zilles K, Fink GR. Polymodal motion processing in posterior parietal and premotor cortex. Neuron. 2001;21:287–296. doi: 10.1016/s0896-6273(01)00198-2. [DOI] [PubMed] [Google Scholar]

- Cohen A, Rafal RD. Attention and feature integration: illusory conjunctions in a patient with a parietal lobe lesion. Psychol Sci. 1991;2:106–110. [Google Scholar]

- Cohen JD, Perlstein WM, Braver TS, Nystrom LE, Noll DC, Jonides J, Smith EE. Temporal dynamics of brain activation during a working memory task. Nature. 1997;386:604–608. doi: 10.1038/386604a0. [DOI] [PubMed] [Google Scholar]

- Corbetta M, Schulman G, Muezzin F, Petersen S. Superior parietal cortex activation during spatial attention shifts and visual feature conjunctions. Science. 1995;270:802–805. doi: 10.1126/science.270.5237.802. [DOI] [PubMed] [Google Scholar]

- Courtney SM, Ungerleider LG, Kiel K, Haxby JV. Transient and sustained activity in a distributed neural system for human working memory. Nature. 1997;386:608–611. doi: 10.1038/386608a0. [DOI] [PubMed] [Google Scholar]

- Cowan N. The magical number 4 in short-term memory: a reconsideration of mental storage capacity. Behav Brain Sci. 2001;24:87–114. doi: 10.1017/s0140525x01003922. [DOI] [PubMed] [Google Scholar]

- Curtis CE, D'Esposito M. Persistent activity in the prefrontal cortex during working memory. Trends Cogn Sci. 2003;9:415–423. doi: 10.1016/s1364-6613(03)00197-9. [DOI] [PubMed] [Google Scholar]

- Dehaene S, Spelke E, Pinel P, Stanescu R, Tsivkin S. Sources of mathematical thinking: behavioral and brain imaging evidence. Science. 1999;284:970–974. doi: 10.1126/science.284.5416.970.

- Desimone R. Neural mechanisms for visual memory and their role in attention. Proc Natl Acad Sci USA. 1996;93:13494–13499. doi: 10.1073/pnas.93.24.13494.

- Eger E, Sterzer P, Russ MO, Giraud AL, Kleinschmidt A. A supramodal number representation in human intraparietal cortex. Neuron. 2003;37:719–725. doi: 10.1016/s0896-6273(03)00036-9.

- Eskandar EN, Assad JA. Dissociation of visual, motor and predictive signals in parietal cortex during visual guidance. Nat Neurosci. 1999;2:88–93. doi: 10.1038/4594.

- Fias W, Lammertyn J, Reynvoet B, Dupont P, Orban GA. Parietal representation of symbolic and nonsymbolic magnitude. J Cogn Neurosci. 2003;15:47–56. doi: 10.1162/089892903321107819.

- Friedman-Hill SR, Robertson LC, Treisman A. Parietal contributions to visual feature binding: evidence from a patient with bilateral lesions. Science. 1995;269:853–855. doi: 10.1126/science.7638604.

- Goldman-Rakic PS. Circuitry of the prefrontal cortex and the regulation of behavior by representational memory. In: Mountcastle VB, Plum F, Geiger SR, editors. Handbook of physiology: the nervous system, higher functions of the brain. Bethesda, Maryland: American Physiological Society; 1987. pp. 373–417.

- Grill-Spector K, Kushnir T, Edelman S, Itzchak Y, Malach R. Cue-invariant activation in object-related areas of the human occipital lobe. Neuron. 1998;21:191–202. doi: 10.1016/s0896-6273(00)80526-7.

- Grill-Spector K, Kushnir T, Hendler T, Malach R. The dynamics of object-selective activation correlate with recognition performance in humans. Nat Neurosci. 2000;3:837–843. doi: 10.1038/77754.

- Grill-Spector K, Henson R, Martin A. Repetition and the brain: neural models of stimulus-specific effects. Trends Cogn Sci. 2006;10:14–23. doi: 10.1016/j.tics.2005.11.006.

- Henson RN. Neuroimaging studies of priming. Prog Neurobiol. 2003;70:53–81. doi: 10.1016/s0301-0082(03)00086-8.

- Huk AC, Shadlen MN. Neural activity in macaque parietal cortex reflects temporal integration of visual motion signals during perceptual decision making. J Neurosci. 2005;25:10420–10436. doi: 10.1523/JNEUROSCI.4684-04.2005.

- Humphreys GW, Cinel C, Wolfe J, Olson A, Klempen N. Fractionating the binding process: neuropsychological evidence distinguishing binding of form from binding of surface features. Vision Res. 2000;40:1569–1596. doi: 10.1016/s0042-6989(00)00042-0.

- Humphreys GW, Riddoch MJ. Features, objects, action: the cognitive neuropsychology of visual object processing, 1984–2004. Cogn Neuropsychol. 2006;23:156–183. doi: 10.1080/02643290542000030.

- Irwin DE, Andrews RV. Integration and accumulation of information across saccadic eye movements. In: Inui T, McClelland JL, editors. Attention and performance XVI. Cambridge, MA: MIT Press; 1996.

- Kitada R, Kito T, Saito DN, Kochiyama T, Matsumura M, Sadato N, Lederman SJ. Multisensory activation of the intraparietal area when classifying grating orientation: a functional magnetic resonance imaging study. J Neurosci. 2006;26:7491–7501. doi: 10.1523/JNEUROSCI.0822-06.2006.

- Kourtzi Z, Kanwisher N. Cortical regions involved in perceiving object shape. J Neurosci. 2000;20:3310–3318. doi: 10.1523/JNEUROSCI.20-09-03310.2000.

- Kourtzi Z, Kanwisher N. Representation of perceived object shape by the human lateral occipital complex. Science. 2001;293:1506–1509. doi: 10.1126/science.1061133.

- Luck SJ, Vogel EK. The capacity of visual working memory for features and conjunctions. Nature. 1997;390:279–281. doi: 10.1038/36846.

- Macaluso E, Driver J. Multisensory spatial interactions: a window onto functional integration in the human brain. Trends Neurosci. 2005;28:264–271. doi: 10.1016/j.tins.2005.03.008.

- Malach R, Reppas JB, Benson R, Kwong KK, Jiang H, Kennedy WA, Ledden PJ, Brady TJ, Rosen BR, Tootell RB. Object-related activity revealed by functional magnetic resonance imaging in human occipital cortex. Proc Natl Acad Sci USA. 1995;92:8135–8139. doi: 10.1073/pnas.92.18.8135.

- Miller EK, Erickson CA, Desimone R. Neural mechanisms of visual working memory in prefrontal cortex of the macaque. J Neurosci. 1996;16:5154–5167. doi: 10.1523/JNEUROSCI.16-16-05154.1996.

- Nieder A. Counting on neurons: the neurobiology of numerical competence. Nat Rev Neurosci. 2005;6:177–190. doi: 10.1038/nrn1626.

- Nieder A, Miller EK. A parieto-frontal network for visual numerical information in the monkey. Proc Natl Acad Sci USA. 2004;101:7457–7462. doi: 10.1073/pnas.0402239101.

- Olson IR, Jiang Y. Is visual short-term memory object based? Rejection of the “strong-object” hypothesis. Percept Psychophys. 2002;64:1055–1067. doi: 10.3758/bf03194756.

- Pelli DG. The VideoToolbox software for visual psychophysics: transforming numbers into movies. Spatial Vision. 1997;10:437–442.

- Pessoa L, Gutierrez E, Bandettini PA, Ungerleider LG. Neural correlates of visual working memory: fMRI amplitude predicts task performance. Neuron. 2002;35:975–987. doi: 10.1016/s0896-6273(02)00817-6.

- Phillips WA. On the distinction between sensory storage and short-term visual memory. Percept Psychophys. 1974;16:283–290.

- Phillips WA, Christie DF. Components of visual memory. Q J Exp Psychol. 1977;29:117–133. doi: 10.1080/14640747708400638.

- Piazza M, Izard V, Pinel P, Le Bihan D, Dehaene S. Tuning curves for approximate numerosity in the human intraparietal sulcus. Neuron. 2004;44:547–555. doi: 10.1016/j.neuron.2004.10.014.

- Platt ML, Glimcher PW. Neural correlates of decision variables in parietal cortex. Nature. 1999;400:233–238. doi: 10.1038/22268.

- Robertson LC. Binding, spatial attention and perceptual awareness. Nat Rev Neurosci. 2003;4:93–102. doi: 10.1038/nrn1030.

- Sawamura H, Shima K, Tanji J. Numerical representation for action in the parietal cortex of the monkey. Nature. 2002;415:918–922. doi: 10.1038/415918a.

- Shadlen MN, Newsome WT. Neural basis of a perceptual decision in the parietal cortex (area LIP) of the Rhesus monkey. J Neurophysiol. 2001;86:1916–1936. doi: 10.1152/jn.2001.86.4.1916.

- Shafritz KM, Gore JC, Marois R. The role of the parietal cortex in visual feature binding. Proc Natl Acad Sci USA. 2002;99:10917–10922. doi: 10.1073/pnas.152694799.

- Singer W, Gray CM. Visual feature integration and the temporal correlation hypothesis. Annu Rev Neurosci. 1995;18:555–586. doi: 10.1146/annurev.ne.18.030195.003011.

- Smith EE, Jonides J. Neuroimaging analyses of human working memory. Proc Natl Acad Sci USA. 1998;95:12061–12068. doi: 10.1073/pnas.95.20.12061.

- Song JH, Jiang Y. Visual working memory for simple and complex features: an fMRI study. NeuroImage. 2006;30:963–972. doi: 10.1016/j.neuroimage.2005.10.006.

- Sugrue LP, Corrado GS, Newsome WT. Matching behavior and the representation of value in the parietal cortex. Science. 2004;304:1782–1787. doi: 10.1126/science.1094765.

- Talairach J, Tournoux P. Co-planar stereotaxic atlas of the human brain. Rayport M, translator. New York: Thieme Medical; 1988.

- Todd JJ, Marois R. Capacity limit of visual short-term memory in human posterior parietal cortex. Nature. 2004;428:751–754. doi: 10.1038/nature02466.

- Todd JJ, Marois R. Posterior parietal cortex activity predicts individual differences in visual short-term memory capacity. Cogn Affect Behav Neurosci. 2005;5:144–155. doi: 10.3758/cabn.5.2.144.

- Toth LJ, Assad JA. Dynamic coding of behaviorally relevant stimuli in parietal cortex. Nature. 2002;415:165–168. doi: 10.1038/415165a.

- Treisman A. Feature binding, attention and object perception. Philos Trans R Soc Lond B Biol Sci. 1998;353:1295–1306. doi: 10.1098/rstb.1998.0284.

- Treisman A, Gelade G. A feature-integration theory of attention. Cogn Psychol. 1980;12:97–136. doi: 10.1016/0010-0285(80)90005-5.

- Treisman A, Schmidt H. Illusory conjunctions in the perception of objects. Cogn Psychol. 1982;14:107–141. doi: 10.1016/0010-0285(82)90006-8.

- Vogel EK, Machizawa MG. Neural activity predicts individual differences in visual working memory capacity. Nature. 2004;428:748–751. doi: 10.1038/nature02447.

- Vogel EK, Woodman GF, Luck SJ. Storage of features, conjunctions, and objects in visual working memory. J Exp Psychol Hum Percept Perform. 2001;27:92–114. doi: 10.1037//0096-1523.27.1.92.

- Wheeler ME, Treisman AM. Binding in short-term visual memory. J Exp Psychol Gen. 2002;131:48–64. doi: 10.1037//0096-3445.131.1.48.

- Wiggs CL, Martin A. Properties and mechanisms of perceptual priming. Curr Opin Neurobiol. 1998;8:227–233. doi: 10.1016/s0959-4388(98)80144-x.

- Wojciulik E, Kanwisher N. The generality of parietal involvement in visual attention. Neuron. 1999;23:747–764. doi: 10.1016/s0896-6273(01)80033-7.

- Xu Y. Encoding color and shape from different parts of an object in visual short-term memory. Percept Psychophys. 2002a;64:1260–1280. doi: 10.3758/bf03194770.

- Xu Y. Limitations in object-based feature encoding in visual short-term memory. J Exp Psychol Hum Percept Perform. 2002b;28:458–468. doi: 10.1037//0096-1523.28.2.458.

- Xu Y. Encoding objects in visual short-term memory: the roles of location and connectedness. Percept Psychophys. 2006;68:815–828. doi: 10.3758/bf03193704.

- Xu Y, Chun MM. Dissociable neural mechanisms supporting visual short-term memory for objects. Nature. 2006;440:91–95. doi: 10.1038/nature04262.

- Xu Y, Chun MM. Visual grouping in human parietal cortex. Proc Natl Acad Sci USA. 2007. In press. doi: 10.1073/pnas.0705618104.